#YouTube data scraper


#Scrape Amazon Products data#Amazon Products data scraper#Amazon Products data scraping#Amazon Products data collection#Amazon Products data extraction#Youtube


ShadowDragon sells a tool called SocialNet that streamlines the process of pulling public data from various sites, apps, and services. Marketing material available online says SocialNet can “follow the breadcrumbs of your target’s digital life and find hidden correlations in your research.” In one promotional video, ShadowDragon says users can enter “an email, an alias, a name, a phone number, a variety of different things, and immediately have information on your target. We can see interests, we can see who friends are, pictures, videos.”

The leaked list of targeted sites includes ones from major tech companies, communication tools, sites focused on certain hobbies and interests, payment services, social networks, and more. The 30 companies the Mozilla Foundation is asking to block ShadowDragon scrapers are Amazon, Apple, BabyCentre, Bluesky, Discord, Duolingo, Etsy, Meta’s Facebook and Instagram, FlightAware, GitHub, Glassdoor, GoFundMe, Google, LinkedIn, Nextdoor, OnlyFans, Pinterest, Reddit, Snapchat, Strava, Substack, TikTok, Tinder, TripAdvisor, Twitch, Twitter, WhatsApp, Xbox, Yelp, and YouTube.


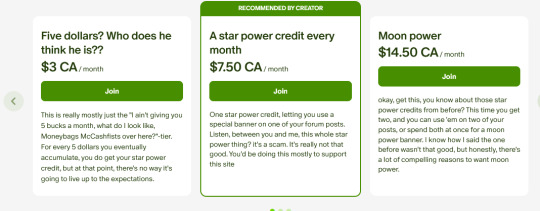

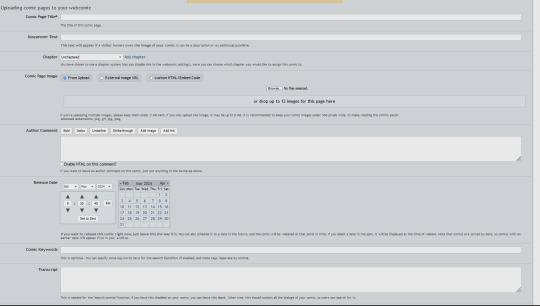

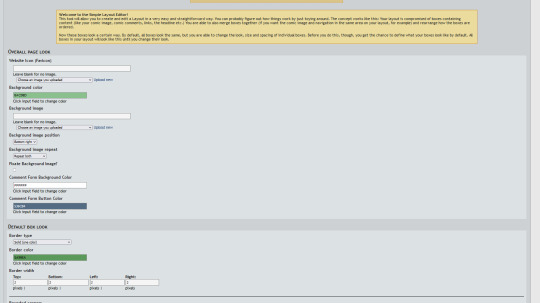

All the cool kids use ComicFury 😘

Hey y'all! If you love independent comic sites and have a few extra dollars in your pocket, please consider supporting ComicFury! The owner, Kyo, has been running it for nearly twenty years, and it's one of the only comic hosting platforms left that's entirely independent and reminiscent of the 'old school' days that I know y'all feel nostalgic for.

(kyo's sense of humor is truly unmatched lmao)

Here are some of the other great features it offers:

Message board forums! It's a gift from the mid-2000s era gods!

Entirely free-to-use HTML and CSS editing! You can use the provided templates, or go wild and customize the site entirely to your liking! There's also a built-in site editor for people like me who want more control over their site design but don't have the patience to learn HTML/CSS ;0

In-depth site analytics that let you track and moderate comments, monitor your comic's weekly performance, and see how many visitors you get. You can also set up Google Analytics on your site if you want that extra touch of data, without any bullshit from the platform. Shit, the site doesn't come with ads, but you can run your own ads on your site. The site owners don't ask questions, and they don't take a cut. Pair your site with ComicAd and you'll be as cool as a crocodile alligator!

RSS feeds! They're like YouTube subscriptions for millennials and Gen X'ers!

NSFW comics are allowed, let the "female presenting nipples" run free! (just tag and content rate them properly!)

Tagging. Tagging. Remember that? The basic feature that every comic site has except for the alleged "#1 webcomic site"? The independent comic site that still looks the same as it did 10 years ago has that. Which you'd assume isn't that big a deal, but isn't it weird that Webtoons doesn't?

Blog posts. 'Nuff said.

AI-made comics are strictly prohibited. This also means you don't have to worry about the site owners sneaking in AI comics or installing AI scrapers (cough cough)

Did I mention that the hosting includes actual hosting? Meaning for only the cost of the domain you can change your URL to whatever site name you want. No extra cost for hosting because it's just a URL redirect. No stupid "pro plan" or "gold tier" subscription necessary, every feature of the site is free to use for all. If this were a sponsored Pornhub ad, this is the part where I'd say "no credit card, no bullshit".

Don't believe me? Alright, look at my creator backend (feat stats on my old ass 2014 comic, I ain't got anything to hide LOL)

TRANSCRIPTS! CHAPTER ORGANIZATION! MASS PAGE UPLOADING! MULTIPLE CREATOR SUPPORT! FULL HTML AND CSS SUPPORT! SIMPLIFIED EDITORS! ACTUAL STATISTICS THAT GIVE YOU WEEKLY BREAKDOWNS! THE POWER OF CHOICE!!

So yeah! You have zero reasons to not use and support ComicFury! It being "smaller" than Webtoons shouldn't stop you! Regain your independence, support smaller platforms, and maybe you'll even find that 'tight-knit community' that we all miss from the days of old! They're out there, you just gotta be willing to use them! ( ´ ∀ `)ノ~ ♡

#comicfury#support small platforms#webcomic platforms#webcomic advice#please reblog#also i'm posting my original work over there so if you want pure unhinged weeb puff that's where you can find it LOL#and no this isn't a 'sponsored post'#but i have been paid in the currency known as good faith to promote the shit out of it#because i don't wanna see sites like this die out#we already lost smackjeeves#comicfury is one of the only survivors left


Poisoning the fic scraper well

Okay, hear me out. I remember well when pretty much every fic came with an author's disclaimer like "I don't own this property or the characters, please don't sue me," etc. What if the new thing to do is to insert some nonsense lines into fic to poison the well for the AI data scrapers, AI-generated audiobooks, YouTube slop creators, etc.?

Like, instead of a line or ***s to indicate a scene change, we go:

Swordfishing the butterfly on the monster. Commodity mules suckering tits. Heeby deeby affecting lovers in the searchlight. //Lorum ipsum no AI no masters.//

Or just a random selection of profanity

Cuntsnatcher bastard fucking assholes son of a syphilitic goat scrape THIS into your pipe and shove it up your ass.

Hell, if we put it under a spoiler tag or turned the font white or something, our actual human readers wouldn't even have to SEE it, just the soulless machines that are trying to turn a profit on other people's work.

IDK, just some food for thought.

#fanfic discourse#fanworks#ai bullshit#fanfic scraping#people who feed fic to ai can kiss my ass#ao3#ao3 fanfic scrapers


🔥🔥🔥AiDADY Review: Your All-in-One Tool for Digital Success

Comes with powerful features: AI Dady Review

✅ Brand New AI Tech “AIdady” Replaces Dozens Of Expensive Overpriced Recurring Paid Platforms

✅ Say Goodbye to Paying Monthly To ClickFunnels, Convertri, Wix, Shopify, Shutterstock, Canva, HubSpot, etc.

✅ Built-in Websites & Funnel Builder: Create Unlimited High Converting Funnels For Any Offer & Stunning Websites For Any Niche Using AI In Just Seconds

✅ Built-in Salescopy & Email Copy Writer: Create Unlimited Sales Copies & Email copies At Ease With AI

✅ Built-in Store Builder: Create Unlimited AI Optimized Affiliate Stores With Winning Products At Lightning Fast Speed

✅ Built-in Blog Builder: Create Unlimited Stunning SEO Optimized Blogs with ease

✅ Built-in AI Content Creator: Create SEO Optimized, Plagiarism-free Content for any niche using AI

✅ Built-in Stock Platform: Millions of Stock Images, Graphics, Animations, Videos, Vectors, GIFs, and Audio Clips

✅ Built-in Travel Affiliate Sites Creator: Create Unlimited AI driven travel affiliate sites in seconds to grab more attention, traffic & sales

✅ All the AI Apps you’ll ever need for online AI business & digital marketing under one single dashboard

✅ Built-in Ebook & Course Affiliate Sites Creator: Create ebook or course affiliate sites with AI in seconds

✅ 250+ Webtool Website Creator Included

✅ Unlimited AI Chatbots, Database, AI Code, iOS & Android App Code Creator Included

✅ Built-in Unlimited Internet Data Scraper in any niche, Background Removal & App Monetization

✅ Get 100% Free hosting, No Domain Or Hosting Required

✅ Unlimited Funnels, Unlimited Websites, Unlimited Blogs, Unlimited Stores, Unlimited Affiliate sites & Unlimited Youtube-Like Video Websites

✅ Replace Expensive Monthly Overpriced Platforms With One-Time Payment & Save $27,497.41 Each Year

✅ Start Your Own Digital Marketing Agency & AI Business Without Any Tech Skills or Experience

✅ 100% Cloud Based. Nothing To Download Or Install

✅ Lifetime Access With No Recurring Monthly Payments...

✅ Commercial licence included: create & sell as many funnels, websites, blogs, stores, affiliate sites etc as you want.

✅ Newbie Friendly, Easy-To-Use Dashboard

AI Dady Review: Funnels

FE ($17)

OTO1: Pro Version ($47)

OTO2: Unlimited Access ($37)

OTO3: DFY Version ($147)

OTO4: Agency Edition ($147)

OTO5: Automation ($37)

OTO6: Reseller ($67)

>>>>Get Instant Access Now


today was grocery day, so i tried making this roasted vegetable pasta i saw on youtube (the actual measurements are in the comments). i made it once before and it wasn’t great: it was extremely under-seasoned, salty, and kinda soupy when i followed the recipe, so i edited the recipe for my own purposes, and now i’m offering it to you (and the tumblr ai data scraper, i suppose)

roasted vegetable pasta recipe

to a baking/casserole dish, add:

2 cups of cherry tomatoes, whole

1/2 of a zucchini, skinned and chopped

3 mini bell peppers, chopped

6 cloves of garlic, skinned

5 artichoke heart quarters (in oil, unless you have fresh)

1/2 of a yellow onion, chopped

1/2 cup of sliced mushrooms

1 handful of curly parsley, finely chopped and squeezed with a paper towel

1 handful of basil, finely chopped and squeezed with a paper towel

3 sprigs of fresh thyme

drizzle olive oil, balsamic vinegar and honey

season with salt, pepper and italian seasoning mix (mine is dried parsley, basil, thyme, marjoram, and oregano, but you could throw in garlic powder, onion powder and red pepper flakes too. that’s a little too much garlic for me but everyone’s lust for garlic is different)

mix everything together by hand, so the vegetables are well oiled and seasoned

cover with tinfoil and bake @ 400F for one hour

make al dente pasta—i like cavatappi but this is up to you, smaller noodles work better

at the end, mix up your vegetables, smushing the tomatoes, garlic, zucchini, etc., and slowly incorporate pasta water and cream/vegan cream until it reaches a sauce consistency (this can vary depending on the vegetables and pan size). it’s probably about half a cup to a cup of pasta water and 5-ish tablespoons of cream, but you’ll know when it becomes a thick sauce. this was the biggest hurdle in the original, because you have to alternate between the two a few teaspoons at a time, or it’ll get soupy and weird. i think maybe she wanted it to be soupy? unclear, but i didn’t care for it.

finish by incorporating grated parmigiano and romano cheese/vegan cheese into the sauce

serve with more grated cheese and basil leaves on top

if you wanna make more, measure with your heart and how much stuff the pan will hold. the most important part of this is honestly not following the recipe too closely and seasoning the fuck out of the vegetables. i omitted sun-dried tomatoes in oil because i think they’re kinda salty and gross, and spinach because there was just way too much water, but you decide your own destiny. if you do decide to include spinach, i would throw it in with the pasta at the last minute and then add it to the sauce. this would also probably be awesome with eggplant and mozzarella or burrata.

#what if i become a recipe guy. will you still love me. if i’m the goth recipe guy#anyway i really like pasta so i’m gonna make this all the time now. it makes an insane amount of food and it’s delicious#recipes


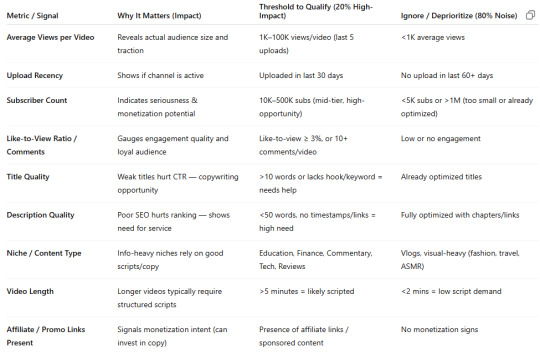

Metrics for analyzing

Providing copywriting services to YouTube channels is a smart niche—many creators need help with titles, descriptions, hooks, scripts, and even SEO optimization. To assess whether a channel qualifies for your service (and what kind of service they might need), your scraper should collect both quantitative and qualitative data points.

Here’s a detailed breakdown of key metrics and inferences you can build:

1. Basic Channel Information

Channel Name

Channel URL

Channel Description: Helps identify niche/genre (e.g., tech, beauty, education).

Country (if available)

2. Engagement & Reach

These indicate whether the channel is active, growing, and potentially monetized (i.e., worth investing in copywriting).

Subscriber Count

Total Video Count

Total Views

Average Views per Video

View-to-subscriber ratio (helps assess engagement)

Upload Frequency (e.g., X videos/week or month)

Recent Upload Dates (to confirm activity)

Video Age Analysis: Are they consistently posting for 6+ months?
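Once the raw counts are scraped, the reach metrics above reduce to simple arithmetic. The following is a minimal sketch that assumes a hypothetical `ChannelStats` record (the field names are illustrative and do not come from any YouTube API). It defines view-to-subscriber ratio as average views per video divided by subscribers, which is one common choice rather than a fixed standard. The 30-day "active" threshold and the 182-day "6+ months" cutoff are also assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ChannelStats:
    # Hypothetical scraped record; field names are illustrative only.
    subscribers: int
    total_views: int
    video_count: int
    upload_dates: list[date]  # publish dates of recent uploads


def average_views_per_video(s: ChannelStats) -> float:
    return s.total_views / s.video_count if s.video_count else 0.0


def view_to_subscriber_ratio(s: ChannelStats) -> float:
    # Assumed definition: average views per video relative to subscriber count.
    return average_views_per_video(s) / s.subscribers if s.subscribers else 0.0


def uploads_per_week(s: ChannelStats) -> float:
    # Number of gaps between uploads divided by the weeks they span.
    if len(s.upload_dates) < 2:
        return 0.0
    span_days = (max(s.upload_dates) - min(s.upload_dates)).days
    return (len(s.upload_dates) - 1) / (max(span_days, 1) / 7)


def is_active(s: ChannelStats, today: date, max_gap_days: int = 30) -> bool:
    # Active if the newest upload is within max_gap_days (assumed threshold).
    return bool(s.upload_dates) and (today - max(s.upload_dates)).days <= max_gap_days


def has_six_month_history(s: ChannelStats, today: date) -> bool:
    # True if the oldest scraped upload is at least ~6 months (182 days) old.
    return bool(s.upload_dates) and (today - min(s.upload_dates)).days >= 182


stats = ChannelStats(
    subscribers=10_000,
    total_views=2_000_000,
    video_count=100,
    upload_dates=[date(2024, 1, 1), date(2024, 1, 8), date(2024, 1, 15)],
)
print(average_views_per_video(stats))             # 20000.0
print(view_to_subscriber_ratio(stats))            # 2.0
print(uploads_per_week(stats))                    # 1.0
print(is_active(stats, today=date(2024, 1, 20)))  # True
```

The history check only looks at the oldest upload. A stricter consistency test could also cap the largest gap between consecutive uploads.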

3. Content Performance (Recent 5–10 Videos)

Scrape video-level metadata to infer how content performs and how optimized it is:

Title Length and Structure

Presence of Keywords in Title/Description

Clickbait vs. Informational Titles

Average Views per Recent Video

Like-to-View Ratio

Comment Count

Video Duration Trends

Scripted Content? (Inferred from style/genre; e.g., educational content likely is)

Thumbnail Quality (optional, using image recognition or tagging)
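The per-video checks can be summarized over the most recent uploads. The sketch below assumes each scraped video is a dict with hypothetical `title`, `views`, `likes`, `comments`, and `duration_s` keys. The clickbait test is a crude heuristic of my own (a phrase list plus ALL-CAPS words), not an established classifier.

```python
from statistics import mean

# Hypothetical phrase list; tune it for the niches you target.
CLICKBAIT_MARKERS = ("you won't believe", "shocking", "insane", "gone wrong", "!!!")


def looks_clickbait(title: str) -> bool:
    # Flags known hype phrases, or two or more ALL-CAPS words longer than 3 letters.
    lowered = title.lower()
    caps_words = [w for w in title.split() if len(w) > 3 and w.isupper()]
    return any(m in lowered for m in CLICKBAIT_MARKERS) or len(caps_words) >= 2


def summarize_recent(videos: list[dict], n: int = 10) -> dict:
    # Uses the first n videos (assumed newest first); the list must be non-empty.
    recent = videos[:n]
    return {
        "avg_views": mean(v["views"] for v in recent),
        "avg_title_chars": mean(len(v["title"]) for v in recent),
        "avg_like_to_view": mean(v["likes"] / v["views"] if v["views"] else 0.0 for v in recent),
        "avg_comments": mean(v["comments"] for v in recent),
        "avg_duration_s": mean(v["duration_s"] for v in recent),
        "clickbait_share": mean(1.0 if looks_clickbait(v["title"]) else 0.0 for v in recent),
    }


videos = [
    {"title": "How to set up Python", "views": 1000, "likes": 50, "comments": 10, "duration_s": 600},
    {"title": "SHOCKING results you won't believe", "views": 3000, "likes": 60, "comments": 30, "duration_s": 300},
]
print(summarize_recent(videos))
```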

4. SEO and Copy Optimization Check

Scrape and analyze:

Video Descriptions: Are they SEO-friendly, engaging, or just minimal?

Use of Tags: How many and which ones? Relevant or generic?

Chapters/Timestamps in Description

Hashtags Used

External Links (e.g., affiliate, social, Patreon)
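Most of these description checks come down to pattern matching. The following is a minimal sketch built on regular expressions. The "at least three timestamps, the first at 0:00" rule for chapters is a heuristic assumption, and the function name and returned keys are hypothetical.

```python
import re
from urllib.parse import urlparse

# Timestamp at the start of a line, e.g. "0:00", "12:34" or "1:02:03".
TIMESTAMP_RE = re.compile(r"^\s*((?:\d{1,2}:)?\d{1,2}:\d{2})\b", re.MULTILINE)
# "#tag" not preceded by a word character, so e.g. "C#" is not counted.
HASHTAG_RE = re.compile(r"(?<!\w)#(\w+)")
URL_RE = re.compile(r"https?://[^\s)]+")


def to_seconds(ts: str) -> int:
    # "1:02:03" -> 3723
    seconds = 0
    for part in ts.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def description_checks(description: str) -> dict:
    stamps = TIMESTAMP_RE.findall(description)
    urls = URL_RE.findall(description)
    # URLs are removed before hashtag matching so their fragments are not counted.
    without_urls = URL_RE.sub(" ", description)
    return {
        "word_count": len(description.split()),
        "has_chapters": len(stamps) >= 3 and to_seconds(stamps[0]) == 0,
        "hashtags": HASHTAG_RE.findall(without_urls),
        "link_domains": sorted({urlparse(u).netloc.lower() for u in urls}),
    }


desc = (
    "Learn Python fast!\n0:00 Intro\n1:30 Setup\n10:05 Wrap-up\n"
    "Support me: https://www.patreon.com/example #python #coding"
)
print(description_checks(desc))
```

Domains such as a Patreon or affiliate shortener in `link_domains` double as monetization signals for the next steps.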

5. Channel Niche / Target Market

Use the scraped channel and video descriptions, titles, and tags to cluster:

Topic Categories: Tech, Gaming, Beauty, Education, Vlogging, Finance, etc.

Content Type: Entertainment, tutorials, storytelling, reviews, commentary

Target Audience: Kids, general, professionals, niche communities
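As a starting point before any real clustering, the topic label can be approximated with keyword counts over titles, descriptions, and tags. This sketch swaps in a simple rule-based classifier in place of clustering. The keyword lists are hypothetical and far smaller than a practical system would need.

```python
import re
from collections import Counter
from typing import Optional

# Hypothetical keyword lists; not a clustering model.
TOPIC_KEYWORDS = {
    "Tech": {"python", "coding", "laptop", "software", "programming", "gadget"},
    "Gaming": {"gameplay", "minecraft", "fortnite", "gaming", "walkthrough"},
    "Beauty": {"makeup", "skincare", "lipstick", "foundation"},
    "Education": {"lesson", "explained", "tutorial", "course", "lecture"},
    "Finance": {"investing", "stocks", "budget", "crypto", "money"},
}


def classify_topic(texts: list[str]) -> Optional[str]:
    # Count every lowercase word across all supplied titles, descriptions and tags.
    words = Counter(w for t in texts for w in re.findall(r"[a-z0-9']+", t.lower()))
    scores = {topic: sum(words[k] for k in kws) for topic, kws in TOPIC_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # No keyword hits at all means the niche is unknown.
    return best if scores[best] > 0 else None


print(classify_topic(["Best budget laptop for coding in Python", "My software setup"]))  # Tech
```

The same pattern extends to content type (tutorial, review, commentary) and target audience with separate keyword tables.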

6. Monetization Signals

Sponsorship Mentions

Affiliate Links

Merch Store / Patreon / Memberships

Professional Thumbnails, Intro, Branding (suggests budget + seriousness)

7. Pain Point Signals for Copywriting

These can help your system prioritize channels that likely need help:

Poor Titles (vague, long, unstructured)

Empty or weak Descriptions

Low engagement despite high subs

Inconsistent uploads despite decent views

No timestamps or SEO optimization

Channels with good visuals but bad copy

Bonus: Score & Segment Channels

You can use the above to compute a lead score for each channel:

Activity Score: Upload frequency + recent activity

Engagement Score: View-to-sub ratio + like/comment ratios

Optimization Score: Title/desc quality + tag usage + SEO elements

Copywriting Need Score: Signs of poor copy vs. high potential

Segment them into:

High-need, high-potential: Poor copy, decent reach

High-performing but scalable: Good copy, could scale with help

Low engagement or inactive: Not qualified now
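One way to turn the four scores and three segments into code is sketched below. It assumes the earlier metrics have already been computed into a hypothetical `ChannelSignals` record. All benchmarks, weights, and cutoffs are illustrative guesses, not values from the original post.

```python
from dataclasses import dataclass


@dataclass
class ChannelSignals:
    # Inputs assumed to come from the earlier metrics; names are hypothetical.
    uploads_per_week: float
    days_since_last_upload: int
    view_to_sub_ratio: float
    like_to_view_ratio: float
    comment_to_view_ratio: float
    optimization: float  # 0..1, e.g. share of title/description/tag checks passed


def clamp(x: float) -> float:
    return max(0.0, min(1.0, x))


def activity_score(s: ChannelSignals) -> float:
    # Assumed caps: 2 uploads/week scores 1.0; 60+ days since last upload scores 0.
    frequency = clamp(s.uploads_per_week / 2)
    recency = clamp(1 - s.days_since_last_upload / 60)
    return (frequency + recency) / 2


def engagement_score(s: ChannelSignals) -> float:
    # Each ratio is scaled against an assumed "good" benchmark, then averaged.
    return (
        clamp(s.view_to_sub_ratio / 0.5)
        + clamp(s.like_to_view_ratio / 0.04)
        + clamp(s.comment_to_view_ratio / 0.005)
    ) / 3


def copy_need_score(s: ChannelSignals) -> float:
    # Poorly optimized copy means a higher need for copywriting help.
    return 1 - clamp(s.optimization)


def lead_score(s: ChannelSignals) -> float:
    # Hypothetical weights; tune them against channels that actually converted.
    return 0.3 * activity_score(s) + 0.3 * engagement_score(s) + 0.4 * copy_need_score(s)


def segment(s: ChannelSignals) -> str:
    if activity_score(s) < 0.3 or engagement_score(s) < 0.2:
        return "Low engagement or inactive"
    if s.optimization < 0.5:
        return "High-need, high-potential"
    return "High-performing but scalable"


lead = ChannelSignals(2, 3, 0.6, 0.05, 0.01, optimization=0.2)
print(segment(lead), round(lead_score(lead), 3))
```

Segmenting first and then sorting each segment by `lead_score` keeps inactive channels out of the outreach list regardless of how poor their copy is.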


Denas Grybauskas, Chief Governance and Strategy Officer at Oxylabs – Interview Series

New Post has been published on https://thedigitalinsider.com/denas-grybauskas-chief-governance-and-strategy-officer-at-oxylabs-interview-series/

Denas Grybauskas is the Chief Governance and Strategy Officer at Oxylabs, a global leader in web intelligence collection and premium proxy solutions.

Founded in 2015, Oxylabs provides one of the largest ethically sourced proxy networks in the world—spanning over 177 million IPs across 195 countries—along with advanced tools like Web Unblocker, Web Scraper API, and OxyCopilot, an AI-powered scraping assistant that converts natural language into structured data queries.

You’ve had an impressive legal and governance journey across Lithuania’s legal tech space. What personally motivated you to tackle one of AI’s most polarising challenges—ethics and copyright—in your role at Oxylabs?

Oxylabs have always been the flagbearer for responsible innovation in the industry. We were the first to advocate for ethical proxy sourcing and web scraping industry standards. Now, with AI moving so fast, we must make sure that innovation is balanced with responsibility.

We saw this as a huge problem facing the AI industry, and we could also see the solution. By providing these datasets, we’re enabling AI companies and creators to be on the same page regarding fair AI development, which is beneficial for everyone involved. We knew how important it was to keep creators’ rights at the forefront but also provide content for the development of future AI systems, so we created these datasets as something that can meet the demands of today’s market.

The UK is in the midst of a heated copyright battle, with strong voices on both sides. How do you interpret the current state of the debate between AI innovation and creator rights?

While it’s important that the UK government favours productive technological innovation as a priority, it’s vital that creators should feel enhanced and protected by AI, not stolen from. The legal framework currently under debate must find a sweet spot between fostering innovation and, at the same time, protecting the creators, and I hope in the coming weeks we see them find a way to strike a balance.

Oxylabs has just launched the world’s first ethical YouTube datasets, which require creator consent for AI training. How exactly does this consent process work, and how scalable is it for other industries like music or publishing?

All of the millions of original videos in the datasets have the explicit consent of the creators to be used for AI training, connecting creators and innovators ethically. All datasets offered by Oxylabs include videos, transcripts, and rich metadata. While such data has many potential use cases, Oxylabs refined and prepared it specifically for AI training, which is the use that the content creators have knowingly agreed to.

Many tech leaders argue that requiring explicit opt-in from all creators could “kill” the AI industry. What’s your response to that claim, and how does Oxylabs’ approach prove otherwise?

Requiring that, for every usage of material for AI training, there be a previous explicit opt-in presents significant operational challenges and would come at a significant cost to AI innovation. Instead of protecting creators’ rights, it could unintentionally incentivize companies to shift development activities to jurisdictions with less rigorous enforcement or differing copyright regimes. However, this does not mean that there can be no middle ground where AI development is encouraged while copyright is respected. On the contrary, what we need are workable mechanisms that simplify the relationship between AI companies and creators.

These datasets offer one approach to moving forward. The opt-out model, according to which content can be used unless the copyright owner explicitly opts out, is another. The third way would be facilitating deal-making between publishers, creators, and AI companies through technological solutions, such as online platforms.

Ultimately, any solution must operate within the bounds of applicable copyright and data protection laws. At Oxylabs, we believe AI innovation must be pursued responsibly, and our goal is to contribute to lawful, practical frameworks that respect creators while enabling progress.

What were the biggest hurdles your team had to overcome to make consent-based datasets viable?

The path for us was opened by YouTube, enabling content creators to easily and conveniently license their work for AI training. After that, our work was mostly technical, involving gathering data, cleaning and structuring it to prepare the datasets, and building the entire technical setup for companies to access the data they needed. But this is something that we’ve been doing for years, in one way or another. Of course, each case presents its own set of challenges, especially when you’re dealing with something as huge and complex as multimodal data. But we had both the knowledge and the technical capacity to do this. Given this, once YouTube authors got the chance to give consent, the rest was only a matter of putting our time and resources into it.

Beyond YouTube content, do you envision a future where other major content types—such as music, writing, or digital art—can also be systematically licensed for use as training data?

For a while now, we have been pointing out the need for a systematic approach to consent-giving and content-licensing in order to enable AI innovation while balancing it with creator rights. Only when there is a convenient and cooperative way for both sides to achieve their goals will there be mutual benefit.

This is just the beginning. We believe that providing datasets like ours across a range of industries can provide a solution that finally brings the copyright debate to an amicable close.

Does the importance of offerings like Oxylabs’ ethical datasets vary depending on different AI governance approaches in the EU, the UK, and other jurisdictions?

On the one hand, the availability of explicit-consent-based datasets levels the field for AI companies based in jurisdictions where governments lean toward stricter regulation. The primary concern of these companies is that, rather than supporting creators, strict rules for obtaining consent will only give an unfair advantage to AI developers in other jurisdictions. The problem is not that these companies don’t care about consent but rather that without a convenient way to obtain it, they are doomed to lag behind.

On the other hand, we believe that if granting consent and accessing data licensed for AI training is simplified, there is no reason why this approach should not become the preferred way globally. Our datasets built on licensed YouTube content are a step toward this simplification.

With growing public distrust toward how AI is trained, how do you think transparency and consent can become competitive advantages for tech companies?

Although transparency is often seen as a hindrance to competitive edge, it’s also our greatest weapon to fight mistrust. The more transparency AI companies can provide, the more evidence there is for ethical and beneficial AI training, thereby rebuilding trust in the AI industry. And in turn, creators seeing that they and the society can get value from AI innovation will have more reason to give consent in the future.

Oxylabs is often associated with data scraping and web intelligence. How does this new ethical initiative fit into the broader vision of the company?

The release of ethically sourced YouTube datasets continues our mission at Oxylabs to establish and promote ethical industry practices. As part of this, we co-founded the Ethical Web Data Collection Initiative (EWDCI) and introduced an industry-first transparent tier framework for proxy sourcing. We also launched Project 4β as part of our mission to enable researchers and academics to maximise their research impact and enhance the understanding of critical public web data.

Looking ahead, do you think governments should mandate consent-by-default for training data, or should it remain a voluntary industry-led initiative?

In a free market economy, it is generally best to let the market correct itself. By allowing innovation to develop in response to market needs, we continually reinvent and renew our prosperity. Heavy-handed legislation is never a good first choice and should only be resorted to when all other avenues to ensure justice while allowing innovation have been exhausted.

It doesn’t look like we have already reached that point in AI training. YouTube’s licensing options for creators and our datasets demonstrate that this ecosystem is actively seeking ways to adapt to new realities. Thus, while clear regulation is, of course, needed to ensure that everyone acts within their rights, governments might want to tread lightly. Rather than requiring expressed consent in every case, they might want to examine the ways industries can develop mechanisms for resolving the current tensions and take their cues from that when legislating to encourage innovation rather than hinder it.

What advice would you offer to startups and AI developers who want to prioritise ethical data use without stalling innovation?

One way startups can help facilitate ethical data use is by developing technological solutions that simplify the process of obtaining consent and deriving value for creators. As options to acquire transparently sourced data emerge, AI companies need not compromise on speed; therefore, I advise them to keep their eyes open for such offerings.

Thank you for the great interview; readers who wish to learn more should visit Oxylabs.

#Advice#ai#AI development#ai governance#AI industry#AI innovation#AI systems#ai training#AI-powered#API#approach#Art#Building#Companies#compromise#content#content creators#copyright#course#creators#data#data collection#data protection#data scraping#data use#datasets#deal#developers#development#Digital Art


BIGDATASCRAPING

Powerful web scraping platform for regular and professional use, offering high-performance data extraction from any website. Supports collection and analysis of data from diverse sources with flexible export formats, seamless integrations, and custom solutions. Features specialized scrapers for Google Maps, Instagram, Twitter (X), YouTube, Facebook, LinkedIn, TikTok, Yelp, TripAdvisor, and Google News, designed for enterprise-level needs with prioritized support.


Automated Web Scraping Services for Smarter Insights

Transforming Business Intelligence with Automated Web Scraping Services

In today’s data-driven economy, staying ahead means accessing the right information—fast and at scale. At Actowiz Solutions, we specialize in delivering automated web scraping solutions that help businesses across ecommerce, real estate, social platforms, and B2B directories gain a competitive edge through real-time insights.

Let’s explore how automation, AI, and platform-specific scraping are revolutionizing industries.

Why Automate Web Scraping?

Manually collecting data from websites is time-consuming and inefficient. With our automated web scraping services, powered by Microsoft Power Automate, you can streamline large-scale data collection processes—perfect for businesses needing continuous access to product listings, customer reviews, or market trends.

ChatGPT for Web Scraping: AI Meets Automation

Leveraging the capabilities of AI, our solution for ChatGPT web scraping simplifies complex scraping workflows. From writing extraction scripts to generating data patterns dynamically, ChatGPT helps reduce development time while improving efficiency and accuracy.

eBay Web Scraper for E-commerce Sellers

Whether you're monitoring competitor pricing or extracting product data, our dedicated eBay web scraper provides access to structured data from one of the world’s largest marketplaces. It’s ideal for sellers, analysts, and aggregators who rely on updated eBay information.

Extract Trends and Consumer Preferences with Precision

Tracking what’s hot across categories is critical for strategic planning. Our services allow businesses to extract marketplace trends, helping you make smarter stocking, marketing, and pricing decisions.

Use a Review Scraper to Analyze Customer Sentiment

Understanding customer feedback has never been easier. Our review scraper pulls reviews and ratings from platforms like Google, giving you valuable insight into brand perception and service performance.

Scrape YouTube Comments for Audience Insights

If you're running video marketing campaigns, you need feedback at scale. With our YouTube comments scraper, built using Selenium and Python, you can monitor user engagement, sentiment, and trending topics in real time.

TikTok Scraping with Python for Viral Content Discovery

TikTok trends move fast. Our Python-based TikTok scraping service helps brands and analysts extract video metadata, hashtags, and engagement stats to stay ahead of viral trends.

Extract Business Leads with TradeIndia Data

For B2B marketers, sourcing accurate leads is key. Use our TradeIndia data extractor to pull business contact details, categories, and product listings, which is ideal for targeting suppliers or buyers on India’s top B2B portal.

Zillow Web Scraping for Real Estate Intelligence

Need real estate pricing, listings, or rental trends? Our Zillow web scraping solutions give you access to up-to-date property data, helping you analyze market shifts and investment opportunities.

Final Thoughts

Automated web scraping is no longer a luxury—it’s a necessity. Whether you're in ecommerce, social media, real estate, or B2B, Actowiz Solutions offers the tools and expertise to extract high-quality data that fuels business growth.

Get in touch today to discover how our automation-powered scraping services can transform your decision-making with real-time intelligence.

#AutomatedWebScrapingServices#ChatGPTWebScraping#EBayWebScraper#ExtractMarketplaceTrends#ReviewScraper#YouTubeCommentsScraper#ZillowWebScraping#TradeIndiaDataExtractor


#web scraping#businessgrowth#leadgeneration#marketingtools#web development#dataautomation#python#data scraping#technology#linkedintips


Tired of guessing what works on YouTube? Let the data guide you!

If you’re a content creator, marketer, or just someone curious about YouTube trends, you know how valuable channel insights can be. But who has time to manually collect all that data?

That’s where the YouTube Channel Scraper comes in.

With this tool, you can:

• Quickly gather channel stats like subscribers, views, and upload frequency.

• Dive into video details: titles, descriptions, upload dates, and even performance metrics.

• Use these insights to plan your content, analyze competitors, or find what audiences love.

And the best part? You don’t need any coding skills. Just set your parameters and let it work its magic.

Stop wasting time on manual research and start focusing on what matters—growing your YouTube presence.

Try it now and take your YouTube strategy to the next level: YouTube Channel Scraper

#YouTubeStrategy #ContentPlanning #YouTubeGrowth #DataTools #DigitalMarketing


Howard Hackathon - "2am Turnup": At some point during every 24-hour hackathon, energy gets looowww. You have to do something to pick everyone up! Sometimes this is a run around the building, or group jumping jacks. Howard University and Hampton University set a new bar with the "2am Turn-Up!" The main hackathon room had a full AV and sound system, which the kids found and put to good use. One of the Howard students DJ'd from his MacBook. Here are some Howard University hackers declaring once and for all that Howard University is "The Real HU(tm)." Of course, Hampton University had an equal response.

1st prize went to an iOS app and REST API for aggregating NCAA sailing results and making them available. They quoted Google's mission to "Organize the world's information and make it universally accessible." They then noticed that Google will provide soccer or World Series scores, but not college sailing. So they made a scraper/parser/data persistence layer. Then they made a REST API. Then they made an app. The app used some very cool parallax and blur effects.

http://mekka-tech.com via YouTube https://www.youtube.com/watch?v=lMQVxrft8iw


How to start learning to code?

Starting to learn coding can be a rewarding journey. Here’s a step-by-step guide to help you begin:

Choose a Programming Language

Beginner-Friendly Languages: Python, JavaScript, Ruby.

Consider Your Goals: What do you want to build (websites, apps, data analysis, etc.)?

Set Up Your Development Environment

Text Editors/IDEs: Visual Studio Code, PyCharm, Sublime Text.

Install Necessary Software: Python interpreter, Node.js for JavaScript, etc.

Learn the Basics

Syntax and Semantics: Get familiar with the basic syntax of the language.

Core Concepts: Variables, data types, control structures (if/else, loops), functions.
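As a rough illustration, here is a minimal Python sketch that touches each of those core concepts in a few lines. The function name `describe_number` and the sample values are hypothetical, chosen only for the example.

```python
def describe_number(n: int) -> str:
    """Classify an integer with an if/elif/else control structure."""
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    else:
        return "positive"


# Variables holding a few basic data types
count = 3                # int
price = 4.5              # float
name = "Ada"             # str
scores = [90, 75, 88]    # list

# A for loop that calls a function on each value
labels = []
for x in (-2, 0, 5):
    labels.append(describe_number(x))

# A while loop that accumulates a running total
total = 0
i = 0
while i < len(scores):
    total += scores[i]
    i += 1

print(name, count * price, labels, total)
# prints: Ada 13.5 ['negative', 'zero', 'positive'] 253
```

Once this reads naturally, try rewriting the `while` loop as a `for` loop, or replacing it with the built-in `sum(scores)`.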

Utilize Online Resources

Interactive Tutorials: Codecademy, freeCodeCamp, Sololearn.

Video Tutorials: YouTube channels like CS50, Traversy Media, and Programming with Mosh.

Practice Regularly

Coding Challenges: LeetCode, HackerRank, Codewars.

Projects: Start with simple projects like a calculator, to-do list, or personal website.
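For the calculator project, one possible starting point is sketched below, assuming a command-style calculator that takes two numbers and an operator symbol. The names `OPERATIONS` and `calculate` are hypothetical; `operator.add`, `operator.sub`, `operator.mul`, and `operator.truediv` are from Python's standard `operator` module.

```python
import operator

# Map each supported symbol to a function from the standard-library operator module
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def calculate(a: float, symbol: str, b: float) -> float:
    """Apply the arithmetic operation named by `symbol` to a and b."""
    if symbol not in OPERATIONS:
        raise ValueError(f"unsupported operator: {symbol!r}")
    if symbol == "/" and b == 0:
        raise ZeroDivisionError("cannot divide by zero")
    return OPERATIONS[symbol](a, b)


print(calculate(6, "*", 7))   # 42
print(calculate(10, "/", 4))  # 2.5
```

A natural next step is to read an expression such as `6 * 7` with `input()`, split it into its three parts, and pass them to `calculate`.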

Join Coding Communities

Online Forums: Stack Overflow, Reddit (r/learnprogramming).

Local Meetups: Search for coding meetups or hackathons in your area.

Learn Version Control

Git: Learn to use Git and GitHub for version control and collaboration.

Study Best Practices

Clean Code: Learn about writing clean, readable code.

Design Patterns: Understand common design patterns and their use cases.
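To make "design patterns" concrete, here is a small sketch of one common pattern, Strategy, in which interchangeable behaviours share one interface and a context object delegates to whichever one it was given. The checkout and discount names are hypothetical; in Python, plain functions often serve as the strategies.

```python
from typing import Callable

# Each strategy is a function with the same signature: total -> discounted total
def no_discount(total: float) -> float:
    return total


def ten_percent_off(total: float) -> float:
    return total * 0.9


def flat_five_off(total: float) -> float:
    # Never let the price drop below zero
    return max(total - 5, 0)


class Checkout:
    """Context object that delegates pricing to an interchangeable strategy."""

    def __init__(self, strategy: Callable[[float], float]) -> None:
        self.strategy = strategy

    def final_price(self, total: float) -> float:
        return round(self.strategy(total), 2)


print(Checkout(ten_percent_off).final_price(50))  # 45.0
print(Checkout(flat_five_off).final_price(3))     # 0
```

Adding a new discount means writing one new function; `Checkout` itself never changes, which is the main point of the pattern.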

Build Real Projects

Portfolio: Create a portfolio of projects to showcase your skills.

Collaborate: Contribute to open-source projects or work on group projects.

Keep Learning

Books: “Automate the Boring Stuff with Python” by Al Sweigart, “Eloquent JavaScript” by Marijn Haverbeke.

Advanced Topics: Data structures, algorithms, databases, web development frameworks.

Sample Learning Plan for Python:

Week 1-2: Basics (Syntax, Variables, Data Types).

Week 3-4: Control Structures (Loops, Conditionals).

Week 5-6: Functions, Modules.

Week 7-8: Basic Projects (Calculator, Simple Games).

Week 9-10: Advanced Topics (OOP, Data Structures).

Week 11-12: Build a Portfolio Project (Web Scraper, Simple Web App).
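For the week 11-12 web scraper, a minimal sketch using only the standard library's `html.parser` module might look like this. It parses an HTML string rather than fetching a live page, so the sample markup and the `LinkCollector`/`extract_links` names are illustrative assumptions; a real scraper would first download the page (for example with `urllib.request.urlopen`).

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html: str) -> list[str]:
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


sample = '<p><a href="/docs">Docs</a> and <a href="https://example.com">Example</a></p>'
print(extract_links(sample))  # ['/docs', 'https://example.com']
```

Saving the extracted links to a file or a small database would turn this into a complete portfolio project.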

Tips for Success:

Stay Consistent: Practice coding daily, even if it’s just for 15-30 minutes.

Break Down Problems: Divide problems into smaller, manageable parts.

Ask for Help: Don’t hesitate to seek help from the community or peers.

By following this structured approach and leveraging the vast array of resources available online, you'll be on your way to becoming proficient in coding. Good luck!

TCCI Computer Classes provides the best training in online computer courses through different learning methods and media, at its centres in Bopal, Ahmedabad and on ISCON Ambli Road, Ahmedabad.

For More Information:

Call us @ +91 98256 18292

Visit us @ http://tccicomputercoaching.com/

#computer technology course#computer coding classes near me#IT computer course#computer software courses#computer coding classes


Text

Mastering Python: Advanced Strategies for Intermediate Learners

Delving Deeper into Core Concepts

As an intermediate learner aiming to level up your Python skills, it’s crucial to solidify your understanding of fundamental concepts. Focus on mastering essential data structures like lists, dictionaries, sets, and tuples.
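A quick way to internalize the differences between the four built-in containers is to use each for the job it suits. The sketch below is illustrative; the task names and values are made up.

```python
# list: ordered, mutable, allows duplicates
tasks = ["write", "test", "write"]
tasks.append("deploy")

# tuple: ordered and immutable, handy for fixed records
point = (3, 4)
x, y = point  # tuple unpacking

# set: unique items with fast membership tests
unique_tasks = set(tasks)

# dict: key -> value mapping, here counting occurrences
task_counts = {}
for task in tasks:
    task_counts[task] = task_counts.get(task, 0) + 1

print(tasks)                 # ['write', 'test', 'write', 'deploy']
print(x + y)                 # 7
print(sorted(unique_tasks))  # ['deploy', 'test', 'write']
print(task_counts)           # {'write': 2, 'test': 1, 'deploy': 1}
```

The counting loop is also what `collections.Counter(tasks)` does for you, which is a good example of the Standard Library saving hand-written code.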

With the support of a Learn Python Course in Hyderabad, learning Python becomes much more fun, whatever your level of experience or your reason for switching from another programming language.

Dive into the intricacies of Object-Oriented Programming (OOP), exploring topics such as classes, inheritance, and polymorphism. Additionally, familiarize yourself with modules, packages, and the Python Standard Library to harness their power in your projects.
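Classes, inheritance, and polymorphism fit together in a few lines. This sketch uses an abstract base class from the standard `abc` module; the `Shape`, `Rectangle`, and `Circle` classes are a conventional teaching example, not taken from any particular course.

```python
import math
from abc import ABC, abstractmethod


class Shape(ABC):
    """Abstract base class: every concrete shape must implement area()."""

    @abstractmethod
    def area(self) -> float:
        """Return the area of the shape."""


class Rectangle(Shape):
    def __init__(self, width: float, height: float) -> None:
        self.width = width
        self.height = height

    def area(self) -> float:
        return self.width * self.height


class Circle(Shape):
    def __init__(self, radius: float) -> None:
        self.radius = radius

    def area(self) -> float:
        return math.pi * self.radius ** 2


# Polymorphism: the same area() call works on any Shape subclass
for shape in [Rectangle(2, 3), Circle(1)]:
    print(type(shape).__name__, round(shape.area(), 2))
# Rectangle 6
# Circle 3.14
```

Because `Shape` is abstract, `Shape()` raises a `TypeError`, which catches a subclass that forgets to implement `area()` as soon as it is instantiated.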

Exploring Advanced Python Techniques

Once you’ve strengthened your core knowledge, it’s time to explore more advanced Python topics. Learn about decorators, context managers, and other advanced techniques to enhance the structure and efficiency of your code. Dive into generators, iterators, and concurrency concepts like threading, multiprocessing, and asyncio to tackle complex tasks and optimize performance in your projects.
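Three of the techniques named above (decorators, context managers, and generators) can be combined in one short sketch; the concurrency tools are left out to keep it small. The helper names `count_calls`, `timer`, `squares`, and `total_of_squares` are hypothetical; `functools.wraps`, `contextlib.contextmanager`, and `time.perf_counter` are standard-library APIs.

```python
import time
from contextlib import contextmanager
from functools import wraps


def count_calls(func):
    """Decorator that records how many times func has been called."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@contextmanager
def timer(label: str):
    """Context manager that prints how long its block took to run."""
    start = time.perf_counter()
    try:
        yield
    finally:
        print(f"{label}: {time.perf_counter() - start:.4f}s")


def squares(limit: int):
    """Generator that yields square numbers lazily, one at a time."""
    for n in range(limit):
        yield n * n


@count_calls
def total_of_squares(limit: int) -> int:
    # sum() consumes the generator without building a full list
    return sum(squares(limit))


with timer("two calls"):
    print(total_of_squares(10))  # 285
    print(total_of_squares(4))   # 14
print(total_of_squares.calls)    # 2
```

The timing line printed by `timer` will vary from run to run; the `try`/`finally` ensures it is printed even if the block raises an exception.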

Applying Skills Through Practical Projects

Practical application is key to mastering Python effectively. Start by working on hands-on projects that challenge and expand your skills. Begin with smaller scripts and gradually progress to larger, more complex applications. Whether it’s building web scrapers, automation tools, or contributing to open-source projects, applying your knowledge in real-world scenarios will solidify your understanding and boost your confidence as a Python developer.

Leveraging Online Resources and Courses

Take advantage of the plethora of online resources available to advance your Python skills further. Enroll in advanced Python courses offered by platforms like Coursera, edX, and Udemy to gain in-depth knowledge of specific topics. Additionally, explore tutorials on YouTube channels such as Corey Schafer and Tech with Tim for valuable insights and practical guidance.

Engaging with the Python Community

Active participation in the Python community can accelerate your learning journey. Join discussions on forums like Stack Overflow and Reddit’s r/learnpython and r/Python to seek help, share knowledge, and network with fellow developers. Attend local meetups, workshops, and Python conferences to connect with industry professionals and stay updated on the latest trends and best practices.

Enrolling in the Best Python Certification Online can help people realise Python's full potential and gain a deeper understanding of its complexities.

Enriching Learning with Python Books

Python books offer a wealth of knowledge and insights into advanced topics. Dive into titles like “Fluent Python” by Luciano Ramalho, “Effective Python” by Brett Slatkin, and “Python Cookbook” by David Beazley and Brian K. Jones to deepen your understanding and refine your skills.

Enhancing Problem-Solving Abilities

Strengthen your problem-solving skills by tackling coding challenges on platforms like LeetCode, HackerRank, and Project Euler. These platforms offer a diverse range of problems that will challenge your algorithmic thinking and hone your coding proficiency.
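As an example of the algorithmic thinking these platforms reward, Project Euler's first problem asks for the sum of all multiples of 3 or 5 below 1000. The sketch below compares a brute-force loop with a closed-form approach based on the arithmetic-series formula and inclusion-exclusion; the function names are illustrative.

```python
def brute_force(limit: int) -> int:
    """Sum every number below limit that is divisible by 3 or 5."""
    return sum(n for n in range(limit) if n % 3 == 0 or n % 5 == 0)


def sum_of_multiples(k: int, limit: int) -> int:
    """Sum of k, 2k, 3k, ... below limit, via k * m * (m + 1) / 2."""
    m = (limit - 1) // k  # number of multiples of k below limit
    return k * m * (m + 1) // 2


def closed_form(limit: int) -> int:
    # Inclusion-exclusion: multiples of 15 are counted in both sums, so subtract them once
    return (sum_of_multiples(3, limit) + sum_of_multiples(5, limit)
            - sum_of_multiples(15, limit))


print(brute_force(10), closed_form(10))      # 23 23
print(brute_force(1000), closed_form(1000))  # 233168 233168
```

The brute-force version does work proportional to `limit`, while the closed form does a constant amount of arithmetic, so only the latter stays fast for very large limits.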

Exploring Related Technologies

Expand your horizons by exploring technologies that complement Python. Delve into web development with frameworks like Django and Flask, explore data science with libraries like pandas, NumPy, and scikit-learn, and familiarize yourself with DevOps tools such as Docker and Kubernetes.

Keeping Abreast of Updates

Python is a dynamic language that evolves continuously. Stay updated with the latest developments by following Python Enhancement Proposals (PEPs), subscribing to Python-related blogs and newsletters, and actively participating in online communities. Keeping abreast of updates ensures that your skills remain relevant and up-to-date in the ever-evolving landscape of Python development.

#python course#python training#python#technology#tech#python online training#python programming#python online course#python online classes
