#aiframeworks

Text

AI Frameworks Help Data Scientists For GenAI Survival

AI Frameworks: Crucial to the Success of GenAI

Develop Your AI Capabilities Now

You play a crucial part in the quickly growing field of generative artificial intelligence (GenAI) as a data scientist. Your proficiency in data analysis, modeling, and interpretation is still essential, even though platforms like Hugging Face and LangChain are at the forefront of AI research.

Although GenAI systems are capable of producing remarkable outcomes, they still depend largely on clean, organized data and perceptive interpretation, areas in which data scientists are highly skilled. You can direct GenAI models to produce more precise, useful predictions by applying your in-depth knowledge of data and statistical techniques. Your job as a data scientist is crucial to ensuring that GenAI systems rest on strong, data-driven foundations and can realize their full potential. Here’s how to take the lead:

Data Quality Is Crucial

The effectiveness of even the most sophisticated GenAI models depends on the quality of the data they use. Data tools like pandas and Modin let you clean, preprocess, and manipulate large datasets so that the data fed to a model is relevant.
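To make the cleaning step concrete, here is a minimal, dependency-free sketch of two common operations: removing duplicate records and imputing missing numeric values. The record layout, the field names, and the `clean_records` helper are assumptions made for illustration. In pandas, the same ideas roughly correspond to `drop_duplicates` and `fillna`.

```python
from statistics import median

def clean_records(records, numeric_fields=("age", "income")):
    """Deduplicate records, normalize text, and impute missing numbers."""
    seen, unique = set(), []
    for rec in records:
        # Normalize string fields so " Alice " and "alice" compare equal.
        norm = {k: v.strip().lower() if isinstance(v, str) else v
                for k, v in rec.items()}
        key = tuple(sorted(norm.items(), key=lambda kv: kv[0]))
        if key not in seen:
            seen.add(key)
            unique.append(norm)
    # Fill each missing numeric field with the median of the observed values.
    for field in numeric_fields:
        observed = [r[field] for r in unique if r.get(field) is not None]
        fill = median(observed) if observed else None
        for r in unique:
            if r.get(field) is None:
                r[field] = fill
    return unique

# Hypothetical sample data, not taken from any real dataset.
raw = [
    {"name": " Alice ", "age": 34, "income": 72000},
    {"name": "alice", "age": 34, "income": 72000},   # duplicate once normalized
    {"name": "Bob", "age": None, "income": 58000},   # missing age
    {"name": "Cara", "age": 41, "income": None},     # missing income
]
cleaned = clean_records(raw)
```

Median imputation is only one possible choice here. Depending on the data, dropping incomplete rows or using a model-based fill may be more appropriate.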

Exploratory Data Analysis and Interpretation

It is essential to understand the features and trends in the data before building models. Visualization libraries such as Matplotlib and Seaborn plot both data and model outputs, which helps developers understand the data, select features, and interpret models.

Model Optimization and Evaluation

A variety of algorithms for model construction are offered by AI frameworks like scikit-learn, PyTorch, and TensorFlow. To improve models and their performance, they provide a range of techniques for cross-validation, hyperparameter optimization, and performance evaluation.
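As a rough illustration of what cross-validation and hyperparameter search do under the hood, the sketch below scores a one-parameter ridge regression over a small grid of `alpha` values using k-fold splits. Everything here (the toy data, the helper names, the grid) is an assumption for illustration. In scikit-learn the equivalent building blocks are `KFold` and `GridSearchCV`.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size

def fit_ridge_1d(xs, ys, alpha):
    """Closed-form ridge slope for y ~ w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)

def cv_mse(xs, ys, alpha, k=5):
    """Mean squared error averaged over k held-out folds."""
    fold_errors = []
    for train, test in k_fold_indices(len(xs), k):
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], alpha)
        fold_errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in test) / len(test))
    return sum(fold_errors) / k

# Toy data: y is roughly 2x with a small alternating offset.
xs = [float(i) for i in range(1, 21)]
ys = [2.0 * x + (1.0 if i % 2 == 0 else -1.0) for i, x in enumerate(xs)]

# Grid search: evaluate every candidate and keep the lowest held-out error.
grid = [0.0, 1.0, 10.0, 100.0]
scores = {alpha: cv_mse(xs, ys, alpha) for alpha in grid}
best_alpha = min(scores, key=scores.get)
```

The key point is that each candidate is judged only on data it was not fitted on, which is what makes the comparison between hyperparameter values meaningful.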

Model Deployment and Integration

Tools such as ONNX Runtime and MLflow help with cross-platform deployment and experimentation tracking. By guaranteeing that the models continue to function successfully in production, this helps the developers oversee their projects from start to finish.

Intel’s Optimized AI Frameworks and Tools

You can keep using the tools you already know in data analytics, machine learning, and deep learning (such as Modin, NumPy, scikit-learn, and PyTorch). Intel has optimized these existing AI tools and frameworks for the many phases of the AI workflow, such as data preparation, model training, inference, and deployment. They are built on oneAPI, a single, open, multiarchitecture, multivendor programming model.

Data Engineering and Model Development:

To speed up end-to-end data science pipelines on Intel architecture, use Intel’s AI Tools, which include Python tools and frameworks like Modin, Intel Optimization for TensorFlow, Intel Optimization for PyTorch, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost.

Optimization and Deployment

For CPU or GPU deployment, Intel Neural Compressor speeds up deep learning inference and reduces model size. The OpenVINO toolkit optimizes and deploys models across several hardware platforms, including Intel CPUs.

You may improve the performance of your Intel hardware platforms with the aid of these AI tools.

Library of Resources

Discover a collection of professionally created, carefully selected resources centered on the core data science competencies that developers need, covering machine learning and deep learning AI frameworks.

What you will discover:

Use Modin to expedite the extract, transform, and load (ETL) process for enormous DataFrames and analyze massive datasets.

To improve speed on Intel hardware, use Intel’s optimized AI frameworks (such as Intel Optimization for XGBoost, Intel Extension for Scikit-learn, Intel Optimization for PyTorch, and Intel Optimization for TensorFlow).

Use Intel-optimized software on the most recent Intel platforms to implement and deploy AI workloads on Intel Tiber AI Cloud.

How to Begin

Frameworks for Data Engineering and Machine Learning

Step 1: Watch the Modin, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost videos and read the introductory articles.

Modin: The video explains when to use Modin and how to combine Modin and pandas judiciously to achieve a quicker overall turnaround time. A quick start guide for Modin is also available for more in-depth information.

Intel Extension for Scikit-learn: This tutorial gives you an overview of the extension, walks you through the code step by step, and explains how using it can improve performance. A video on accelerating K-means clustering, PCA, and silhouette machine learning algorithms is also available.

Intel Optimization for XGBoost: This straightforward tutorial explains Intel Optimization for XGBoost and how to use Intel optimizations to enhance training and inference performance.

Step 2: Use Intel Tiber AI Cloud to create and develop machine learning workloads.

On Intel Tiber AI Cloud, this tutorial runs machine learning workloads with Modin, scikit-learn, and XGBoost.

Step 3: Use Modin and scikit-learn to create an end-to-end machine learning process using census data.

Run an end-to-end machine learning task using 1970–2010 US census data with this code sample. The code sample uses the Intel Distribution of Modin for exploratory data analysis and the Intel Extension for Scikit-learn for ridge regression.

Deep Learning Frameworks

Step 4: Begin by watching the videos and reading the introductory articles for Intel’s PyTorch and TensorFlow optimizations.

Intel PyTorch Optimizations: Read the article to learn how to use the Intel Extension for PyTorch to accelerate your workloads for inference and training. Additionally, a brief video demonstrates how to use the extension to run PyTorch inference on an Intel Data Center GPU Flex Series.

Intel’s TensorFlow Optimizations: The article and video provide an overview of the Intel Extension for TensorFlow and demonstrate how to use it to accelerate your AI tasks.

Step 5: Use TensorFlow and PyTorch for AI on the Intel Tiber AI Cloud.

This article shows how to use PyTorch and TensorFlow on Intel Tiber AI Cloud to create and execute complex AI workloads.

Step 6: Speed up LSTM text generation with Intel Extension for TensorFlow.

The Intel Extension for TensorFlow can speed up LSTM model training for text generation.

Step 7: Use PyTorch and DialoGPT to create an interactive chat-generation model.

Discover how to use Hugging Face’s pretrained DialoGPT model to create an interactive chat model and how to use the Intel Extension for PyTorch to dynamically quantize the model.
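For readers new to quantization, the arithmetic behind it can be sketched in a few lines of plain Python. This is not the Intel Extension for PyTorch API and not how the tutorial implements it. It is only an illustrative, assumed example of symmetric int8 quantization: floats are mapped to small integers with a single scale factor and mapped back with a bounded rounding error. The weight values are made up.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one symmetric scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the integer representation."""
    return [v * scale for v in quantized]

# Hypothetical weights from a single layer.
weights = [0.82, -1.27, 0.05, 0.40, -0.63]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest integer bounds the error by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Storing 8-bit integers instead of 32-bit floats is where the size reduction comes from. In dynamic quantization, weights are typically converted ahead of time while activations are quantized on the fly during inference.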

Read more on Govindhtech.com

#AI#AIFrameworks#DataScientists#GenAI#PyTorch#GenAISurvival#TensorFlow#CPU#GPU#IntelTiberAICloud#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

Text

youtube

Four Frameworks to Help You Prioritize Generative AI Solutions

Explore four powerful frameworks designed to help you effectively prioritize generative AI solutions. Learn how to evaluate and implement AI strategies that align with your business goals and maximize impact.

#animation#art#branding#accounting#artwork#artists on tumblr#machine learning#artificial intelligence#architecture#youtube#GenerativeAI#AISolutions#AIFrameworks#Prioritization#BusinessStrategy#Youtube

Text

🎯 Building Smarter AI Agents: What’s Under the Hood?

At CIZO, we’re often asked — “What frameworks do you use to build intelligent AI agents?” Here’s a quick breakdown from our recent team discussion:

Core Frameworks We Use:

✅ TensorFlow & PyTorch – for deep learning capabilities

✅ OpenAI Gym – for reinforcement learning

✅ LangChain – to develop conversational agents

✅ Google Cloud AI & Azure AI – for scalable, cloud-based solutions

Real-World Application: In our RECOVAPRO app, we used TensorFlow to train personalized wellness models — offering users AI-driven routines tailored to their lifestyle and recovery goals.

📈 The right tools aren’t just about performance. They make your AI agents smarter, scalable, and more responsive to real-world needs.

Let’s build AI that works for people — not just data.

💬 Curious about how we apply these frameworks in different industries? Let’s connect! - https://cizotech.com/

#innovation#cizotechnology#techinnovation#ios#mobileappdevelopment#appdevelopment#iosapp#app developers#mobileapps#ai#aiframeworks#deeplearning#tensorflow#pytorch#openai#cloudai#aiapplications

Text

What if your game starts thinking? Contact GamesDApp: https://www.gamesd.app/ai-game-development

#AIGameDevelopment#AIGameDevelopmentCompany#AIGameDevelopmentServices#CustomGameDevelopment#GameDevelopment#AIinGaming#SmartGameDesign#AIgamedevelopers#GameDevTools#AIframeworks

Text

Why stick to one AI tool when today’s innovations demand synergy?

Modern AI development doesn’t rely on a single model or library; it thrives on integrated ecosystems.

Here are 8 powerful tools every aspiring AI Engineer should know:

GPT-4 (OpenAI) – Powerful LLM for reasoning, generation, and comprehension

LangChain – Framework to connect LLMs with external data and APIs

DeepSpeed (by Microsoft) – Speeds up training of large models efficiently

AutoGen (Microsoft) – Enables multi-agent conversations and collaborative AI workflows

Hugging Face Transformers – Open-source library of state-of-the-art pretrained models for NLP and beyond

Runway ML – AI for media creators: image, video, and animation generation

LLM Guard – Scan and sanitize LLM inputs and outputs to reduce risks (e.g., prompt injection, PII leaks)

Gradio – Instantly demo AI models with shareable web interfaces

These tools work best together, not in silos.

At School of Core AI we don’t teach tools in isolation. We teach you how to orchestrate them together to build scalable, real-world GenAI apps.

#GenerativeAI#GPT4#LangChain#DeepSpeed#AutoGen#HuggingFace#RunwayML#Gradio#AItools#MachineLearning#OpenSourceAI#AIFrameworks#MultiAgentAI#LLMengineer#AgenticAI#AIEducation#SchoolOfCoreAI#LearnAI

Text

How to Build AI from Scratch

Artificial Intelligence (AI) is revolutionizing industries worldwide. From chatbots to autonomous cars, AI is driving innovation and growth. If you’re wondering how to build AI from scratch, this guide will help you understand the step-by-step process and tools required to develop your own AI system.

What is Artificial Intelligence?

Artificial Intelligence is the simulation of human intelligence processes by machines, especially computer systems. AI systems are designed to learn, reason, and solve problems, making them essential in modern technologies like healthcare, finance, marketing, robotics, and eCommerce.

Steps to Build AI from Scratch

1. Define the AI Project Objective

Start by identifying the problem your AI system will solve. Determine if your AI will handle tasks like:

Image recognition

Natural language processing (NLP)

Predictive analytics

Chatbot development

Recommendation engines

2. Learn Programming Languages for AI

Python is the most recommended programming language for AI development due to its simplicity and robust libraries. Other useful languages include:

R

Java

C++

Julia

3. Gather and Prepare Data

Data is the core of any AI system. Collect relevant and clean datasets that your AI model will use to learn and improve accuracy.

Popular data sources:

Kaggle datasets

Government open data portals

Custom data collection tools

4. Choose the Right AI Algorithms

Select algorithms based on your project requirements:

Machine Learning (ML): Decision Trees, Random Forest, SVM

Deep Learning (DL): Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN)

NLP: Transformer models like BERT, GPT

5. Use AI Development Frameworks

Leverage powerful AI libraries and frameworks for faster development:

TensorFlow

PyTorch

Keras

Scikit-learn

OpenCV (for computer vision tasks)

6. Train Your AI Model

Feed your AI model with training datasets. Monitor its performance using accuracy metrics, precision, recall, and loss functions. Optimize the model for better results.
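The metrics mentioned above are simple to compute by hand, which is a good way to understand what they measure. The sketch below is a minimal example for a binary classifier. The labels and predictions are made-up values for illustration, and `classification_metrics` is a hypothetical helper name.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    tn = sum(t != positive and p != positive for t, p in pairs)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Of everything predicted positive, how much was really positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything really positive, how much did the model find?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical ground truth and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Accuracy alone can be misleading on imbalanced data, which is why precision and recall are usually tracked alongside it.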

7. Test and Deploy Your AI Model

Test the AI system in real-time environments. Once it meets the accuracy benchmark, deploy it using:

Google Cloud AI

Amazon Web Services (AWS)

Microsoft Azure AI

On-premises servers

8. Continuous Monitoring and Improvement

AI is an evolving system. Regular updates and retraining are essential for maintaining its efficiency and relevance.

Applications of AI

Healthcare: Disease diagnosis, drug discovery

eCommerce: Personalized recommendations

Finance: Fraud detection, trading algorithms

Marketing: Chatbots, customer segmentation

Automotive: Self-driving vehicles

Final Thoughts

Building AI from scratch is a rewarding journey that combines technical skills, creativity, and problem-solving. Whether you’re creating a simple chatbot or a complex AI model, the future belongs to AI-powered businesses and professionals.

Start learning today, experiment with projects, and stay updated with the latest AI advancements. AI will continue to shape industries—being a part of this revolution will open countless opportunities.

Follow For More Insights: Reflextick Creative Agency

#ArtificialIntelligence#AI#MachineLearning#DeepLearning#AIDevelopment#TechTrends#PythonAI#AIApplications#DataScience#TechInnovation#AIProjects#FutureTechnology#AIFrameworks#AIInBusiness#AITutorials

Text

Build Your Own AI Agent: A Step-by-Step Guide

Want to create an AI agent for automation and efficiency? This guide walks you through the process, from choosing the right technology stack to deploying your AI assistant. Learn how to integrate AI into your business and improve productivity. With Truefirms, you can find top AI development agencies to help bring your project to life. Whether you're a startup or an enterprise, AI agents can streamline operations and enhance customer experiences. Get started today with our detailed step-by-step guide!

Read more: Build Your Own AI Agent: A Simple Guide

Text

AI News Brief 🧞♀️: The Ultimate Guide to LangChain and LangGraph: Which One is Right for You?

#AgenticAI#LangChain#LangGraph#AIFrameworks#MachineLearning#DeepLearning#NaturalLanguageProcessing#NLP#AIApplications#ArtificialIntelligence#AIDevelopment#AIResearch#AIEngineering#AIInnovation#AIIndustry#AICommunity#AIExperts#AIEnthusiasts#AIStartups#AIBusiness#AIFuture#AIRevolution#dozers#FraggleRock#Spideysense#Spiderman#MarvelComics#JimHenson#SpiderSense#FieldsOfTheNephilim

Text

🚀 Exploring Generative AI architecture for enterprises? 🌐 Dive into cutting-edge development frameworks, tools, and implementation strategies that are reshaping industries! From neural network innovations to advanced data pipelines, the future is brimming with potential. 🌟 Discover how companies are leveraging these technologies to drive growth, enhance efficiency, and unlock new opportunities. Stay ahead with insights on emerging trends and best practices in AI deployment. Ready to revolutionize your enterprise?

🔗 Read more:

#GenerativeAI#AIArchitecture#TechTrends#EnterpriseTech#AIFrameworks#FutureOfAI#Innovation#DataScience#BusinessGrowth

Text

Demystifying Chatbot AI: A Simple Guide to Choosing the Right AI Frameworks

Discover the essential factors in selecting the perfect AI framework for your chatbot project. This comprehensive guide simplifies the complex landscape of chatbot AI, helping you make informed decisions. Learn how to navigate through options efficiently. Looking for expert assistance? Contact our AI chatbot development company for tailored solutions to elevate your project.

#AIChatbotDevelopmentCompany#AIChatbotDevelopment#AI#AIChatbotComparison#AIFrameworks#NLP#ML#RoboticProcessAutomation

Text

How Does Open Source AI Work? Its Advantages And Drawbacks

What Is Open-source AI?

Open source AI refers to publicly available AI frameworks, methodologies, and technology. Everyone may view, modify, and share the source code, encouraging innovation and cooperation. Openness has sped AI progress by enabling academics, developers, and companies to build on each other’s work and create powerful AI tools and applications for everyone.

Open Source AI projects include:

PyTorch and TensorFlow: Deep learning and neural network frameworks.

Hugging Face Transformers: NLP library for tasks such as language translation and chatbots.

OpenCV: A computer vision toolbox for processing images and videos.

Through openness and community-driven standards, open-source AI increases accessibility to technology while promoting ethical development.

How Open Source AI Works

The way open-source AI operates is by giving anybody unrestricted access to the underlying code of AI tools and frameworks.

Community Contributions

Communities of engineers, academics, and enthusiasts create open-source AI projects like TensorFlow or PyTorch. They add functionality, find and fix bugs, and contribute code. Many contributors work independently to improve the software, while others come from major IT corporations, academic institutions, and research centers.

Access to Source Code

Open Source AI technologies’ source code is made available on websites such as GitHub. This code includes all the instructions others need to replicate, alter, and understand the AI’s operation. The code’s usage is governed by open-source licenses (such as MIT, Apache, or GPL), which set out rights and restrictions to guarantee fair and open distribution.

Building and Customizing AI Models

The code may be downloaded and used “as-is,” or users can alter it to suit their own requirements. Because developers may create bespoke AI models on top of pre-existing frameworks, this flexibility permits experimentation. For example, a researcher may tweak a computer vision model to increase accuracy for medical imaging, or a business could alter an open-source chatbot model to better suit its customer service requirements.

Auditing and Transparency

Because anybody may examine the code for open source AI, possible biases, flaws, and mistakes in AI algorithms can be found and fixed more rapidly. Because it enables peer review and community-driven changes, this openness is particularly crucial for guaranteeing ethical AI practices.

Deployment and Integration

Applications ranging from major business systems to mobile apps may be linked with open-source AI technologies. Many tools are accessible to a broad range of skill levels because they provide documentation and tutorials. Open-source AI frameworks are often supported by cloud services, allowing users to easily expand their models or incorporate them into intricate systems.

Continuous Improvement

Open-source AI technologies allow users to test, improve, update, and fix errors before sharing the findings with the community. Open Source AI democratizes cutting-edge AI technology via cross-sector research and collaboration.

Advantages Of Open-Source AI

Research and Cooperation: Open-source AI promotes international cooperation between organizations, developers, and academics. They lessen effort duplication and speed up AI development by sharing their work.

Transparency and Trust: Open source AI promotes better trust by enabling people to examine and comprehend how algorithms operate. Transparency ensures AI solutions are morally and fairly sound by assisting in the detection of biases or defects.

Affordability: Startups, smaller firms, and educational institutions that cannot afford proprietary solutions may employ open-source AI, since it is typically free or cheap.

Customization: Developers may customize open-source AI models to meet specific needs, improving flexibility in fields such as healthcare and finance. Open Source AI also allows students, developers, and data scientists to explore, improve, and participate in projects.

Drawbacks Of Open-Source AI

Security and Privacy Issues: Unvetted open source projects may introduce security issues. Attackers may take advantage of flaws in popular codebases, particularly if fixes or updates are slow.

Quality and Upkeep: Some open-source AI programs have out-of-date models or compatibility problems since they don’t get regular maintenance or upgrades. Projects often depend on unpaid volunteers, which may have an impact on the code’s upkeep and quality.

Complexity: Implementing Open Source AI may be challenging and may call for a high level of experience. Users could have trouble with initial setup or model tweaking in the absence of clear documentation or user assistance.

Ethics and Bias Issues: Training data may introduce biases into even open-source AI, which may have unforeseen repercussions. Users must follow ethical standards and do thorough testing since transparent code does not always translate into equitable results.

Commercial Competition: Open-source initiatives often lack the funds and resources that commercial AI tools possess, which might impede scaling or innovation.

Conclusion

Open source AI is essential to democratizing technology.

Nevertheless, in order to realize its full potential and overcome its drawbacks, it needs constant maintenance, ethical supervision, and active community support.

Read more on Govindhtech.com

#OpensourceAI#Deeplearning#PyTorch#TensorFlow#AImodels#AIprograms#AIdevelopment#AItechnologies#AIframeworks#AItools#News#Technews#Technology#technologynews#Technologytrends#govindhtech

Text

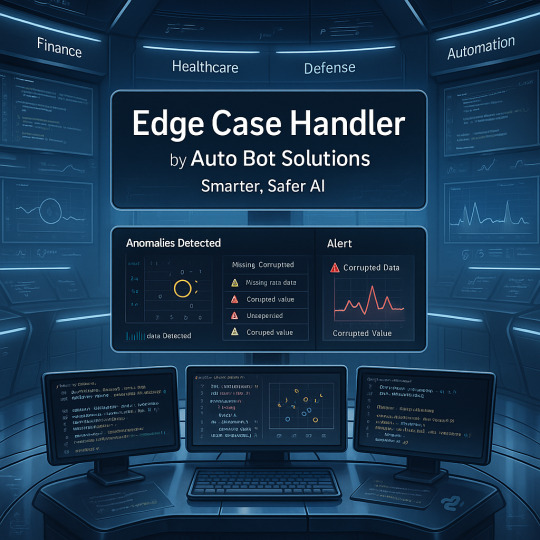

We’ve all seen it: your model’s performing great, then one weird data point sneaks in and everything goes sideways. That single outlier, that unexpected format, that missing field: it’s enough to send even a robust AI pipeline off course.

That’s exactly why we built the Edge Case Handler at Auto Bot Solutions.

It’s a core part of the G.O.D. Framework (Generalized Omni-dimensional Development) https://github.com/AutoBotSolutions/Aurora and is designed to think ahead, automatically flagging, handling, and documenting anomalies before they escalate.

Whether it’s:

Corrupted inputs,

Extreme values,

Missing data,

Inconsistent types, or

Pattern anomalies you didn’t even anticipate

The module detects and responds gracefully, keeping systems running even in unpredictable environments. It’s about building AI you can trust, especially when real-time, high-stakes decisions are on the line: think finance, autonomous systems, defense, or healthcare.

Some things it does right out of the box:

Statistical anomaly detection

Missing data strategies (imputation, fallback, or rejection)

Live & log-based debugging

Input validation & consistency enforcement

Custom thresholds and behavior control

Built in Python. Fully open-source. Actively maintained.

GitHub: https://github.com/AutoBotSolutions/Aurora/blob/Aurora/ai_edge_case_handling.py

Full docs & templates:

Overview: https://autobotsolutions.com/artificial-intelligence/edge-case-handler-reliable-detection-and-handling-of-data-edge-cases/

Technical details: https://autobotsolutions.com/god/stats/doku.php?id=ai_edge_case_handling

Template: https://autobotsolutions.com/god/templates/ai_edge_case_handling.html
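To give a feel for the kind of logic involved, here is a small standalone sketch of outlier flagging plus missing-data handling. It is not the Edge Case Handler’s actual code or API. The function name, parameters, and sample readings are all assumptions for illustration. It uses the modified z-score (median and median absolute deviation), which stays reliable even when one extreme value would distort the mean and standard deviation.

```python
from statistics import median

def handle_edge_cases(values, threshold=3.5, missing="impute"):
    """Return (cleaned_values, report) for a list of numeric readings."""
    observed = [v for v in values if v is not None]
    med = median(observed)
    mad = median(abs(v - med) for v in observed)  # median absolute deviation
    cleaned, report = [], []
    for i, v in enumerate(values):
        if v is None:
            if missing == "reject":
                raise ValueError(f"missing value at index {i}")
            report.append((i, "missing", "imputed with median"))
            cleaned.append(med)
            continue
        # Modified z-score; 3.5 is a commonly used cutoff.
        score = 0.6745 * abs(v - med) / mad if mad else 0.0
        if score > threshold:
            report.append((i, "outlier", f"modified z-score {score:.1f}"))
            cleaned.append(med)  # fall back to the median
        else:
            cleaned.append(v)
    return cleaned, report

# Hypothetical sensor readings with one gap and one extreme value.
readings = [10.1, 9.8, 10.3, None, 10.0, 250.0, 9.9, 10.2]
cleaned, report = handle_edge_cases(readings)
```

Returning a report alongside the cleaned values mirrors the idea of documenting anomalies rather than silently fixing them.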

We’re not just catching errors; we’re making AI more resilient, transparent, and real-world ready.

#AIFramework#AnomalyDetection#DataIntegrity#AI#AIModule#DataScience#OpenSourceAI#ResilientAI#PythonAI#GODFramework#MachineLearning#AutoBotSolutions#EdgeCaseHandler

Text

Code Stars & Smart Planets: AI Game Development in the Digital Solar System

Imagine a galaxy where game worlds orbit around intelligent cores, each planet powered by something more than just code—something smart. Welcome to the Digital Solar System, a realm where AI game development has taken the role of the blazing sun, and every innovative game mechanic or immersive world is a “smart planet” shaped by artificial intelligence.

In this universe, traditional development models are being eclipsed by intelligent systems that learn, adapt, and evolve, just like the games they help create. And as more studios explore this expanding space, one thing is clear: the future of gaming is orbiting around AI.

Code Stars: The Core of AI Innovation

In our cosmic analogy, AI is the star—the energy source that fuels every orbiting planet in a game’s ecosystem. AI game development has revolutionized how we design, build, and interact with digital experiences. From character behavior to storytelling, artificial intelligence is no longer a back-end add-on; it's a central force.

AI-driven engines enable:

Procedural content generation (think landscapes that build themselves),

NPCs that adapt to your playstyle, and

Narratives that shift based on your decisions.

These “code stars” reshape how we define gameplay, engagement, and storytelling.

Smart Planets: The Features Orbiting AI

Orbiting around these AI cores are the planets, each representing a major feature or improvement powered by AI game development services.

Planet Dialogue

Using large language models, AI now creates realistic conversations with NPCs. Instead of scripted lines, you get dynamic, evolving dialogue that feels human. Your choices matter. Your words shape the world.

Planet Personalization

AI learns from your playstyle, adapting difficulty, story paths, and even item drops. Every player's experience becomes unique, turning games into highly personalized journeys.

Planet Procedura

Need a world? Let AI build it. From terrain generation to weather systems, AI algorithms like GANs (Generative Adversarial Networks) craft stunning, complex environments on the fly.
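Procedural generation does not have to start with a neural network. As a simple taste of the idea, the sketch below builds a 1-D terrain profile with midpoint displacement, a classical technique swapped in here instead of a GAN. The function name and parameters are assumptions chosen for illustration.

```python
import random

def midpoint_displacement(levels, roughness=0.5, seed=42):
    """Generate a 1-D height profile with 2**levels + 1 points."""
    rng = random.Random(seed)  # seeded so the same world can be rebuilt
    heights = [0.0, 0.0]       # flat endpoints to start from
    spread = 1.0
    for _ in range(levels):
        refined = []
        for left, right in zip(heights, heights[1:]):
            # Insert a midpoint nudged up or down by a random amount.
            mid = (left + right) / 2 + rng.uniform(-spread, spread)
            refined.extend([left, mid])
        refined.append(heights[-1])
        heights = refined
        spread *= roughness    # smaller bumps at finer scales
    return heights

terrain = midpoint_displacement(levels=5)
```

Changing the seed yields a different landscape, while lowering `roughness` makes the terrain smoother. The same seed always reproduces the same terrain, which is useful for sharing worlds between players.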

Planet MetaCore

As the metaverse grows, AI helps power interconnected worlds with seamless transitions, intelligent avatars, and cross-game logic. AI makes the metaverse not just bigger, but smarter.

And don't forget the moonlets—smaller, yet crucial features like:

Automated bug detection

Predictive analytics for balancing

AI-powered voiceovers

Real-time facial animation

Together, these smart planets orbit AI, creating game systems that are dynamic, responsive, and alive.

The Digital Gravity: Why AI Holds Everything Together

In the cosmos of game design, AI is the gravity. It keeps the ecosystem coherent, balanced, and ever-expanding. It doesn’t just power individual systems—it connects them.

AI enables:

Coherent storylines that evolve organically

Multiplayer balancing based on real-time data

Faster and more efficient testing and QA cycles

For AI game developers, this means faster iterations, smarter design choices, and games that grow and learn with their players. For gamers, it means experiences that feel less like programs and more like living worlds.

Tools of the Trade: AI Frameworks Powering the Digital Solar System

To build these code stars and smart planets, AI game developers rely on an arsenal of cutting-edge frameworks and technologies:

Unity ML-Agents Toolkit: Train intelligent agents that can navigate, fight, or interact within your game world.

Unreal Engine’s Behavior Trees + MetaHuman: Add deeply realistic AI behavior and visual fidelity to your characters.

OpenAI’s GPT models: Power rich, unscripted dialogue systems that adapt to player input.

GANs (Generative Adversarial Networks): Automatically generate landscapes, textures, and even music.

NVIDIA Omniverse: Collaborate in real-time while simulating photorealistic game physics and environments.

These tools aren’t science fiction—they’re here, now, and changing the game at warp speed.

Building Your Game System: Why It’s Time to Orbit AI

The tools are here. The tech is ready. Whether you're an indie studio or a AAA publisher, now is the moment to integrate AI into your development process. Ask yourself:

Can your game benefit from adaptive storytelling?

Are your NPCs believable?

Can you cut dev time with procedural systems?

If the answer is “yes” (and it likely is), then it’s time to work with an AI game development company that understands how to unlock this potential.

Why Choose GamesDApp?

At GamesDApp, we don’t just use AI—we harness it to craft experiences that players remember. As a leading AI game development company, we offer a complete suite of AI game development services that push boundaries and redefine gameplay.

Why clients choose us:

Custom AI Solutions tailored to your game type and genre

A team of expert AI game developers with real-world project success

End-to-end support from ideation to post-launch updates

Proven track record in AI-integrated metaverse and GameFi platforms

Cutting-edge experimentation with AI NPCs, procedural storytelling, and more

When you work with GamesDApp, you’re not just building a game—you’re building a smart, scalable, and unforgettable experience.

Final Thoughts: The Universe is Expanding—Don’t Miss the Rocket

AI game development isn’t the future—it’s the now. The gaming universe is evolving into something more intelligent, immersive, and interactive than ever before. Those who embrace this shift will build not just games, but galaxies.

So here’s the final question: Will your next game be just another planet… or the star of its own digital solar system?

Let GamesDApp help you launch.

#AIGameDevelopment#AIGameDevelopmentCompany#AIGameDevelopmentServices#CustomGameDevelopment#GameDevelopment#AIinGaming#SmartGameDesign#AIgamedevelopers#GameDevTools#AIframeworks

Text

Ethics of AI in Decision Making: Balancing Business Impact & Technical Innovation

Discover the Ethics of AI in Decision Making—balancing business impact & innovation. Learn AI governance, compliance & responsible AI practices today!

Artificial Intelligence (AI) has transformed industries, driving innovation and efficiency. However, as AI systems increasingly influence critical decisions, the ethical implications of their deployment have come under scrutiny. Balancing the business benefits of AI with ethical considerations is essential to ensure responsible and sustainable integration into decision-making processes.

The Importance of AI Ethics in Business

AI ethics refers to the principles and guidelines that govern the development and use of AI technologies to ensure they operate fairly, transparently, and without bias. In the business context, ethical AI practices are crucial for maintaining trust with stakeholders, complying with regulations, and mitigating risks associated with AI deployment. Businesses can balance innovation and responsibility by proactively managing bias, enhancing AI transparency, protecting consumer data, and maintaining legal compliance. Ethical AI is not just about risk management; it is a strategic benefit that improves business credibility and long-term success.

Ethical Challenges in AI Decision Making

Implementing AI in decision-making processes presents several ethical challenges:

Bias and Discrimination: AI systems can unintentionally perpetuate biases present in their training data, leading to unfair outcomes. For instance, biased hiring algorithms may favor certain demographics over others.

Transparency and Explainability: Many AI models operate as "black boxes," making it difficult to understand how decisions are made. This opacity can undermine accountability and trust.

Privacy and Surveillance: AI's ability to process vast amounts of data raises concerns about individual privacy and the potential for intrusive surveillance.

Job Displacement: Automation driven by AI can lead to significant workforce changes, potentially displacing jobs and necessitating reskilling initiatives.

Accountability: Determining responsibility when AI systems cause harm or make erroneous decisions is complex, especially when multiple stakeholders are involved.
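One simple way to check for the kind of bias described above is to compare how often each group receives a favorable outcome. The sketch below is a minimal illustration, not a complete fairness audit: the `decisions` records, the group labels, and the 0.8 cut-off (the "four-fifths" rule of thumb from US employment guidance) are assumptions chosen for the example.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the system made a favorable decision.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest

# Hypothetical hiring decisions: (group label, was the candidate shortlisted?)
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold; choose one suited to your context
print(rates, round(ratio, 2), "review needed" if flagged else "within threshold")
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a system deserves a closer look.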

Developing an Ethical AI Framework

To navigate these challenges, organizations should establish a comprehensive AI ethics framework. Key components include:

Leadership Commitment: Secure commitment from organizational leadership to prioritize ethical AI development and deployment.

Ethical Guidelines: Develop clear guidelines that address issues like bias mitigation, transparency, and data privacy.

Stakeholder Engagement: Involve diverse stakeholders, including ethicists, legal experts, and affected communities, in the AI development process.

Continuous Monitoring: Implement mechanisms to regularly assess AI systems for ethical compliance and address any emerging issues.

For example, IBM has established an AI Ethics Board to oversee and guide the ethical development of AI technologies, ensuring alignment with the company's values and societal expectations.

Case Studies: Ethical AI in Action

Healthcare: AI in Diagnostics

In healthcare, AI-powered diagnostic tools have the potential to improve patient outcomes significantly. However, ethical deployment requires ensuring that these tools are trained on diverse datasets to avoid biases that could lead to misdiagnosis in underrepresented populations. Additionally, maintaining patient data privacy is paramount.

Finance: Algorithmic Trading

Financial institutions utilize AI for algorithmic trading to optimize investment strategies. Ethical considerations involve ensuring that these algorithms do not manipulate markets or engage in unfair practices. Transparency in decision-making processes is also critical to maintain investor trust.

The Role of AI Ethics Specialists

As organizations strive to implement ethical AI practices, the role of AI Ethics Specialists has become increasingly important. These professionals are responsible for developing and overseeing ethical guidelines, conducting risk assessments, and ensuring compliance with relevant regulations. Their expertise helps organizations navigate the complex ethical landscape of AI deployment.

Regulatory Landscape and Compliance

Governments and regulatory bodies are establishing frameworks to govern AI use. For instance, the European Union's AI Act aims to ensure that AI systems are safe and respect existing laws and fundamental rights. Organizations must stay informed about such regulations to ensure compliance and avoid legal repercussions.

Building Trust through Transparency and Accountability

Transparency and accountability are foundational to ethical AI. Organizations can build trust by:

Documenting Decision Processes: Clearly document how AI systems make decisions to facilitate understanding and accountability.

Implementing Oversight Mechanisms: Establish oversight committees to monitor AI deployment and address ethical concerns promptly.

Engaging with the Public: Communicate openly with the public about AI use, benefits, and potential risks to foster trust and understanding.
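Documenting decision processes can start with something as small as a structured record for every automated decision. The following is a sketch under stated assumptions: the `DecisionRecord` fields, the model name, and the sample values are hypothetical, and a real system would add whatever its regulators and reviewers require.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class DecisionRecord:
    """One auditable entry describing a single automated decision."""
    model_version: str
    inputs: dict
    outcome: str
    top_factors: list                  # human-readable reasons for the outcome
    reviewed_by: Optional[str] = None  # filled in when a person reviews the case
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)

# Hypothetical example entry
record = DecisionRecord(
    model_version="credit-scoring-v3",
    inputs={"income": 52000, "loan_amount": 15000},
    outcome="declined",
    top_factors=["debt-to-income ratio above policy limit"],
)
line = to_log_line(record)
print(line)
```

Keeping the reasons and the model version next to the outcome lets an oversight committee reconstruct later why a particular decision was made.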

Conclusion

Using AI ethically in decision-making requires a multidimensional approach that integrates ethical principles into both business strategy and technical development. By proactively addressing ethical challenges, developing robust frameworks, and fostering a culture of transparency and accountability, organizations can harness the benefits of AI while mitigating its risks. As AI continues to evolve, ongoing dialogue and collaboration among stakeholders will be essential to navigate the ethical complexities and ensure that AI serves as a force for good in society.

Frequently Asked Questions (FAQs)

Q1: What is AI ethics, and why is it important in business?

A1: AI ethics refers to the principles guiding the development and use of AI to ensure fairness, transparency, and accountability. In business, ethical AI practices are vital for maintaining stakeholder trust, complying with regulations, and mitigating risks associated with AI deployment.

Q2: How can businesses address bias in AI decision-making?

A2: Businesses can address bias by using diverse and representative datasets, regularly auditing AI systems for biased outcomes, and involving ethicists in the development process to identify and mitigate potential biases.

Q3: What role do AI Ethics Specialists play in organizations?

A3: AI Ethics Specialists develop and oversee ethical guidelines, conduct risk assessments, and ensure that AI systems comply with ethical standards and regulations, helping organizations navigate the complex ethical landscape of AI deployment.

Q4: How can organizations ensure transparency in AI systems?

A4: Organizations can ensure transparency by documenting decision-making processes, implementing explainable AI models, and communicating openly with stakeholders about how AI systems operate and make decisions.

#AI#EthicalAI#AIethics#ArtificialIntelligence#BusinessEthics#TechInnovation#ResponsibleAI#AIinBusiness#AIRegulations#Transparency#AIAccountability#BiasInAI#AIForGood#AITrust#MachineLearning#AIImpact#AIandSociety#DataPrivacy#AICompliance#EthicalTech#AlgorithmicBias#AIFramework#AIethicsSpecialist#AIethicsGovernance#AIandDecisionMaking#AITransparency#AIinFinance#AIinHealthcare

Text

Introduction to the LangChain Framework

LangChain is an open-source framework designed to simplify and enhance the development of applications powered by large language models (LLMs). By combining prompt engineering, chaining processes, and integrations with external systems, LangChain enables developers to build applications with powerful reasoning and contextual capabilities. This tutorial introduces the core components of LangChain, highlights its strengths, and provides practical steps to build your first LangChain-powered application.

What is LangChain?

LangChain is a framework that lets you connect LLMs like OpenAI's GPT models with external tools, data sources, and complex workflows. It focuses on enabling three key capabilities:

- Chaining: Create sequences of operations or prompts for more complex interactions.
- Memory: Maintain contextual memory for multi-turn conversations or iterative tasks.
- Tool Integration: Connect LLMs with APIs, databases, or custom functions.

LangChain is modular, meaning you can use specific components as needed or combine them into a cohesive application.
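To make the first two ideas concrete, here is a framework-free sketch in plain Python. It does not use LangChain's API: `fake_llm`, `make_step`, `run_chain`, and `ChatMemory` are hypothetical names, and `fake_llm` merely echoes its prompt so the example runs without an API key.

```python
def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it just echoes the prompt it received."""
    return f"<answer to: {prompt}>"

def make_step(template: str):
    """Build one chain step: fill the template, then call the model."""
    def step(text: str) -> str:
        return fake_llm(template.format(input=text))
    return step

def run_chain(steps, user_input: str) -> str:
    """Chaining: feed each step's output into the next step."""
    result = user_input
    for step in steps:
        result = step(result)
    return result

class ChatMemory:
    """Memory: keep earlier turns and prepend them to every new prompt."""
    def __init__(self):
        self.turns = []

    def ask(self, question: str) -> str:
        history = "\n".join(self.turns)
        prompt = f"{history}\nUser: {question}" if history else f"User: {question}"
        answer = fake_llm(prompt)
        self.turns += [f"User: {question}", f"AI: {answer}"]
        return answer

# Two-step chain: summarize first, then translate the summary.
chain = [make_step("Summarize: {input}"), make_step("Translate to French: {input}")]
chained = run_chain(chain, "LangChain basics")
print(chained)

# The second question is answered with the first exchange included in the prompt.
memory = ChatMemory()
memory.ask("What is LangChain?")
second = memory.ask("Can you repeat my first question?")
print(second)
```

The point of the sketch is only the data flow: a chain passes outputs forward, while memory passes earlier turns back in.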

Getting Started

Installation

First, install the LangChain package using pip:

    pip install langchain

Additionally, you'll need to install an LLM provider (e.g., OpenAI or Hugging Face) and any tools you plan to integrate:

    pip install openai

Core Concepts in LangChain

1. Chains

Chains are sequences of steps that process inputs and outputs through the LLM or other components. Examples include:

- Sequential chains: A linear series of tasks.
- Conditional chains: Tasks that branch based on conditions.

2. Memory

LangChain offers memory modules for maintaining context across multiple interactions. This is particularly useful for chatbots and conversational agents.

3. Tools and Plugins

LangChain supports integrations with APIs, databases, and custom Python functions, enabling LLMs to interact with external systems.

4. Agents

Agents dynamically decide which tool or chain to use based on the user’s input. They are ideal for multi-tool workflows or flexible decision-making.
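The agent idea in point 4 can be illustrated without any framework. In the sketch below the "decision" is a simple keyword match standing in for the LLM's reasoning, so it only shows the control flow; `TOOLS`, `choose_tool`, and `run_agent` are hypothetical names, not LangChain APIs.

```python
def calculator(expression: str) -> str:
    """Toy tool: add whitespace-separated numbers, e.g. '2 3 4' gives '9.0'."""
    return str(sum(float(part) for part in expression.split()))

def glossary(term: str) -> str:
    """Toy tool: look up a term in a tiny hard-coded glossary."""
    entries = {"llm": "A large language model trained on text."}
    return entries.get(term.strip().lower(), "No entry found.")

# Each tool has a trigger keyword and a function.
TOOLS = {
    "calculator": {"keyword": "sum", "func": calculator},
    "glossary": {"keyword": "define", "func": glossary},
}

def choose_tool(query: str):
    """Pick the tool whose keyword starts the query (stand-in for LLM reasoning)."""
    for name, tool in TOOLS.items():
        prefix = tool["keyword"] + " "
        if query.lower().startswith(prefix):
            return name, query[len(prefix):]
    return None, query

def run_agent(query: str) -> str:
    """Route the query to a tool, or fall back when no tool applies."""
    name, argument = choose_tool(query)
    if name is None:
        return "No suitable tool; answer directly with the LLM."
    return f"[{name}] {TOOLS[name]['func'](argument)}"

print(run_agent("sum 2 3 4"))
print(run_agent("define LLM"))
print(run_agent("hello"))
```

In a real agent the model reads each tool's description and chooses among them itself, which is what makes the routing flexible.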

Building Your First LangChain Application

In this section, we’ll build a LangChain app that integrates OpenAI’s GPT API, processes user queries, and retrieves data from an external source. The imports below follow the classic LangChain API; newer releases move provider classes into separate packages such as langchain-openai, so check the current documentation if an import fails.

Step 1: Setup and Configuration

Before diving in, configure your OpenAI API key:

    import os
    from langchain.llms import OpenAI

    # Set the API key in the environment variable the OpenAI client reads
    os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

    # Initialize the LLM. text-davinci-003 has been retired by OpenAI;
    # gpt-3.5-turbo-instruct is its completion-style replacement.
    llm = OpenAI(model_name="gpt-3.5-turbo-instruct")

Step 2: Simple Chain

Create a simple chain that takes user input, processes it through the LLM, and returns a result.

    from langchain.prompts import PromptTemplate
    from langchain.chains import LLMChain

    # Define a prompt with a single placeholder, {topic}
    template = PromptTemplate(
        input_variables=["topic"],
        template="Explain {topic} in simple terms."
    )

    # Create a chain
    simple_chain = LLMChain(llm=llm, prompt=template)

    # Run the chain
    response = simple_chain.run("Quantum computing")
    print(response)

Step 3: Adding Memory

To make the application context-aware, we add memory. LangChain supports several memory types; the example below uses buffer memory, which keeps the full conversation history.

    from langchain.chains import ConversationChain
    from langchain.memory import ConversationBufferMemory

    # Add memory to the chain
    memory = ConversationBufferMemory()
    conversation = ConversationChain(llm=llm, memory=memory)

    # Simulate a conversation
    print(conversation.run("What is LangChain?"))
    print(conversation.run("Can it remember what we talked about?"))

Step 4: Integrating Tools

LangChain can integrate with APIs or custom tools. Here’s an example of creating a tool for retrieving Wikipedia summaries.

    # Requires the third-party package: pip install wikipedia
    import wikipedia
    from langchain.tools import Tool

    # Define a custom tool
    def wikipedia_summary(query: str) -> str:
        return wikipedia.summary(query, sentences=2)

    # Register the tool
    wiki_tool = Tool(
        name="Wikipedia",
        func=wikipedia_summary,
        description="Retrieve summaries from Wikipedia."
    )

    # Test the tool
    print(wiki_tool.run("LangChain"))

Step 5: Using Agents

Agents allow dynamic decision-making in workflows. Let’s create an agent that decides whether to fetch information from Wikipedia or answer directly.

    from langchain.agents import AgentType, initialize_agent

    # Define the tools the agent may use
    tools = [wiki_tool]

    # Initialize agent
    agent = initialize_agent(
        tools,
        llm,
        agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
        verbose=True
    )

    # Query the agent
    response = agent.run("Tell me about LangChain using Wikipedia.")
    print(response)

Advanced Topics

1. Connecting with Databases

LangChain can integrate with databases like PostgreSQL or MongoDB to fetch data dynamically during interactions.

2. Extending Functionality

Use LangChain to create custom logic, such as summarizing large documents, generating reports, or automating tasks.

3. Deployment

LangChain applications can be deployed as web apps using frameworks like Flask or FastAPI.

Use Cases

- Conversational Agents: Develop context-aware chatbots for customer support or virtual assistance.
- Knowledge Retrieval: Combine LLMs with external data sources for research and learning tools.
- Process Automation: Automate repetitive tasks by chaining workflows.

Conclusion

LangChain provides a robust and modular framework for building applications with large language models. Its focus on chaining, memory, and integrations makes it well suited to sophisticated, interactive applications. This tutorial covered the basics; the official LangChain documentation goes deeper into each component and into more advanced capabilities. Happy coding!
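The database idea listed under Advanced Topics could look roughly like the sketch below. It swaps in Python's built-in `sqlite3` with an in-memory database instead of PostgreSQL or MongoDB so that it runs without a server; the `products` table, its rows, and `lookup_price` are made up for illustration. A function like this could then be wrapped as a tool in the same way as `wikipedia_summary` in Step 4.

```python
import sqlite3

def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, made-up products table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (name TEXT PRIMARY KEY, price REAL)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?)",
        [("notebook", 4.5), ("pen", 1.2)],
    )
    conn.commit()
    return conn

def lookup_price(conn: sqlite3.Connection, product_name: str) -> str:
    """Return a short text answer that an LLM could quote back to the user."""
    name = product_name.strip().lower()
    # Parameterized query: the user-supplied name is never spliced into the SQL.
    row = conn.execute(
        "SELECT price FROM products WHERE name = ?", (name,)
    ).fetchone()
    if row is None:
        return f"No product named '{name}' was found."
    return f"{name} costs {row[0]:.2f}"

conn = make_demo_db()
print(lookup_price(conn, "pen"))
print(lookup_price(conn, "stapler"))
```

Returning plain text rather than raw rows keeps the tool's output easy for the model to fold into its reply.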

#AIFramework#AI-poweredapplications#automation#context-aware#dataintegration#dynamicapplications#LangChain#largelanguagemodels#LLMs#MachineLearning#ML#NaturalLanguageProcessing#NLP#workflowautomation
