#apify


Text

🌟 Unlock Business Insights with the Contact Info Scraper 🌟

Looking for a powerful, efficient tool to extract accurate business contact information? Meet the Contact Info Scraper by Dainty Screw on Apify. 🚀

💡 What It Does:

• Extract business emails, phone numbers, addresses, and more from websites.

• Perfect for building targeted outreach lists, lead generation, or enhancing your marketing campaigns.

• Works seamlessly with dynamic and static websites.
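To give a rough idea of what contact extraction involves under the hood, here is a minimal Python sketch that pulls email addresses and phone numbers out of a page's HTML with regular expressions. It is not how the Contact Info Scraper itself works (its internals aren't described here). The patterns are deliberately simple, and the phone pattern only covers North-American-style numbers.

```python
import re
from html import unescape

# Deliberately simple patterns for illustration; real email and phone formats vary widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# North-American-style numbers only, e.g. "(555) 123-4567" or "555.123.4567"
PHONE_RE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b")


def extract_contacts(html: str) -> dict[str, list[str]]:
    """Return unique emails and phone numbers found in a page's text, in order of appearance."""
    # Crude tag stripping: replace every tag with a space, then decode entities like &amp;
    text = unescape(re.sub(r"<[^>]+>", " ", html))
    return {
        "emails": list(dict.fromkeys(EMAIL_RE.findall(text))),  # dict.fromkeys dedupes, keeps order
        "phones": list(dict.fromkeys(PHONE_RE.findall(text))),
    }


page = '<p>Email <a href="mailto:info@example.com">info@example.com</a> or call (555) 123-4567.</p>'
print(extract_contacts(page))
# {'emails': ['info@example.com'], 'phones': ['(555) 123-4567']}
```

Because tags are stripped before matching, the `mailto:` attribute is discarded and the address is counted once from the link text.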

📈 Why Choose This Scraper?

• Fast & Accurate: Saves you hours of manual data collection.

• User-Friendly: Easy-to-use interface, even for non-techies.

• Customizable: Tailor the scraper to meet your unique business needs.

🔧 Who Can Benefit?

• Marketers: Boost your outreach campaigns.

• Entrepreneurs: Build B2B connections with ease.

• Freelancers: Gather leads for your clients in no time.

🌐 Start scraping smarter today! Try it now and take your business to the next level.

👉 Check it out here: Contact Info Scraper on Apify

💻 Need Custom Automations? Contact us to build your dream scraper.

#DataScraping #BusinessGrowth #AutomationTools #LeadGeneration #Apify


Text

Using Indeed Jobs Data for Business

The Indeed scraper is a powerful tool that allows you to extract job listings and associated details from the indeed.com job search website. Follow these steps to use the scraper effectively:

1. Understanding the Purpose:

The Indeed scraper is used to gather job data for analysis, research, lead generation, or other purposes.

It uses web scraping techniques to navigate through search result pages, extract job listings, and retrieve relevant information like job titles, companies, locations, salaries, and more.

2. Why Scrape Indeed.com:

There are various use cases for an Indeed jobs scraper, including:

Job Market Research

Competitor Analysis

Company Research

Salary Benchmarking

Location-Based Insights

Lead Generation

CRM Enrichment

Marketplace Insights

Career Planning

Content Creation

Consulting Services

3. Accessing the Indeed Scraper:

Go to the indeed.com website.

Search for jobs using filters like job title, company name, and location to narrow down your target job listings.

Copy the URL from the address bar after performing your search. This URL contains your search criteria and results.
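If you prefer to build the search URL in code rather than copying it from the address bar, here is a minimal Python sketch. It assumes Indeed's search pages use a `q` parameter for the job title or keywords and an `l` parameter for the location, as they appear in copied search URLs; verify this against a URL you copy yourself before relying on it. The function name `build_indeed_search_url` is our own.

```python
from urllib.parse import urlencode


def build_indeed_search_url(query: str, location: str = "",
                            base: str = "https://www.indeed.com/jobs") -> str:
    """Build an Indeed search URL.

    Assumption: "q" holds the keywords and "l" the location, as seen in
    search URLs copied from the address bar; verify against your own.
    """
    params = {"q": query}
    if location:
        params["l"] = location
    # urlencode escapes spaces as "+" and commas as "%2C"
    return f"{base}?{urlencode(params)}"


print(build_indeed_search_url("data analyst", "Austin, TX"))
# https://www.indeed.com/jobs?q=data+analyst&l=Austin%2C+TX
```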

4. Using the Apify Platform:

Visit the Indeed job scraper page on Apify.

Click on the “Try for free” button to access the scraper.

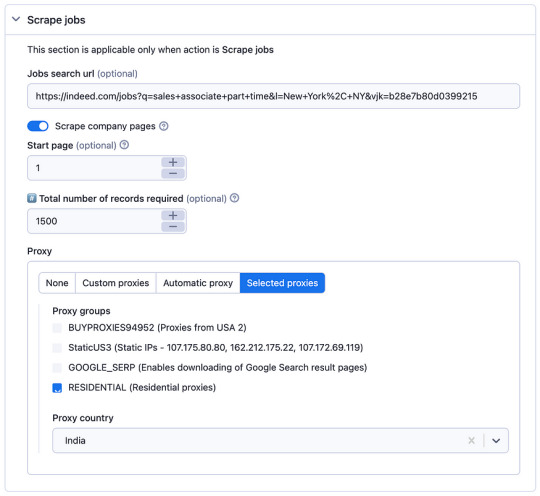

5. Setting up the Scraper:

In the Apify platform, you’ll be prompted to configure the scraper:

Insert the search URL you copied from indeed.com in step 3.

Enter the number of job listings you want to scrape.

Select a residential proxy from your country. This reduces the chance of being blocked by the website for sending too many requests.

Click the “Start” button to begin the scraping process.

6. Running the Scraper:

The scraper will start extracting job data based on your search criteria.

It will navigate through search result pages, gather job listings, and retrieve details such as job titles, companies, locations, salaries, and more.

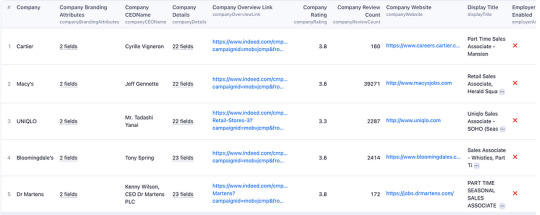

7. Exporting the Data:

When the scraping process is complete, click the “Export” button in the Apify platform.

You can choose to download the dataset in various formats, such as JSON, HTML, CSV, or Excel, depending on your preferences.

8. Review and Utilize Data:

Open the downloaded data file to view and analyze the extracted job listings and associated details.

You can use this data for your intended purposes, such as market research, competitor analysis, or lead generation.
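As a starting point for analysis, the Python sketch below counts listings per company in a JSON export. An Apify JSON export is typically an array of objects, one per scraped item; the `company` key is an assumption, so check the field names in your own file and adjust. The function `top_companies` and the file name in the usage comment are hypothetical.

```python
import json
from collections import Counter


def top_companies(dataset_json: str, n: int = 3) -> list[tuple[str, int]]:
    """Count job listings per company in an exported JSON array of job objects.

    The "company" key is an assumed field name; items without it count as "Unknown".
    """
    items = json.loads(dataset_json)
    counts = Counter(item.get("company") or "Unknown" for item in items)
    # most_common breaks ties by first appearance in the data
    return counts.most_common(n)


# Usage (hypothetical file name):
# with open("indeed_dataset.json", encoding="utf-8") as f:
#     print(top_companies(f.read(), n=10))
```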

9. Scraper Options:

The scraper offers options for specifying the job search URL and choosing a residential proxy. Make sure to configure these settings according to your requirements.

10. Sample Output:

The output data typically includes job details, company information, and other relevant fields, depending on your chosen settings.

By following these steps, you can effectively use the Indeed scraper to gather job data from indeed.com for your specific needs, whether it’s for research, business insights, or personal career planning.


Text

Monitor Competitor Pricing with Food Delivery Data Scraping

In the highly competitive food delivery industry, pricing can be the deciding factor between winning and losing a customer. With the rise of aggregators like DoorDash, Uber Eats, Zomato, Swiggy, and Grubhub, users can compare restaurant options, menus, and—most importantly—prices in just a few taps. To stay ahead, food delivery businesses must continually monitor how competitors are pricing similar items. And that’s where food delivery data scraping comes in.

Data scraping enables restaurants, cloud kitchens, and food delivery platforms to gather real-time competitor data, analyze market trends, and adjust strategies proactively. In this blog, we’ll explore how to use web scraping to monitor competitor pricing effectively, the benefits it offers, and how to do it legally and efficiently.

What Is Food Delivery Data Scraping?

Data scraping is the automated process of extracting information from websites. In the food delivery sector, this means using tools or scripts to collect data from food delivery platforms, restaurant listings, and menu pages.

What Can Be Scraped?

Menu items and categories

Product pricing

Delivery fees and taxes

Discounts and special offers

Restaurant ratings and reviews

Delivery times and availability

This data is invaluable for competitive benchmarking and dynamic pricing strategies.

Why Monitoring Competitor Pricing Matters

1. Stay Competitive in Real Time

Consumers often choose based on pricing. If your competitor offers a similar dish for less, you may lose the order. Monitoring competitor prices lets you react quickly to price changes and stay attractive to customers.

2. Optimize Your Menu Strategy

Scraped data helps identify:

Popular food items in your category

Price points that perform best

How competitors bundle or upsell meals

This allows for smarter decisions around menu engineering and profit margin optimization.

3. Understand Regional Pricing Trends

If you operate across multiple locations or cities, scraping competitor data gives insights into:

Area-specific pricing

Demand-based variation

Local promotions and discounts

This enables geo-targeted pricing strategies.

4. Identify Gaps in the Market

Maybe no competitor offers free delivery during weekdays or a combo meal under $10. Real-time data helps spot such gaps and create offers that attract value-driven users.

How Food Delivery Data Scraping Works

Step 1: Choose Your Target Platforms

Most scraping projects start with identifying where your competitors are listed. Common targets include:

Aggregators: Uber Eats, Zomato, DoorDash, Grubhub

Direct restaurant websites

POS platforms (where available)

Step 2: Define What You Want to Track

Set scraping goals. For pricing, track:

Base prices of dishes

Add-ons and customization costs

Time-sensitive deals

Delivery fees by location or vendor
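One way to keep these tracked fields consistent across platforms is to define a single record type up front. The Python sketch below is illustrative: the class name `PriceObservation`, its field names, and the sample values are our own, not any platform's schema, and taxes are deliberately left out of the total.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class PriceObservation:
    """One scraped price point for a menu item (illustrative fields, not a platform schema)."""
    platform: str                         # e.g. an aggregator or a restaurant's own site
    restaurant: str
    item: str
    base_price: float                     # base price of the dish
    addon_prices: list[float] = field(default_factory=list)  # add-ons and customizations
    delivery_fee: float = 0.0             # fee for this vendor and location
    deal_ends: Optional[datetime] = None  # set only for time-sensitive deals
    scraped_at: datetime = field(default_factory=datetime.now)

    def total_price(self) -> float:
        """Base price plus add-ons plus delivery fee, rounded to cents (taxes ignored)."""
        return round(self.base_price + sum(self.addon_prices) + self.delivery_fee, 2)


obs = PriceObservation("Uber Eats", "Cafe Roma", "Veggie Wrap", 8.50, [1.00, 0.75], 2.99)
print(obs.total_price())  # 13.24
```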

Step 3: Use Web Scraping Tools or Custom Scripts

You can either:

Use scraping tools like Octoparse, ParseHub, Apify, or

Build custom scripts in Python using libraries like BeautifulSoup, Selenium, or Scrapy

These tools automate the extraction of relevant data and organize it in a structured format (CSV, Excel, or database).
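To make the custom-script route concrete, here is a small Python sketch that parses menu markup and writes it to CSV. It uses the standard library's `html.parser` in place of BeautifulSoup so it runs without extra installs, and the `name` and `price` class names are hypothetical: every platform uses its own markup, and that markup changes over time.

```python
import csv
import io
from html.parser import HTMLParser


class MenuParser(HTMLParser):
    """Collects (name, price) pairs from hypothetical markup such as
    <div class="menu-item"><span class="name">..</span><span class="price">..</span></div>."""

    def __init__(self):
        super().__init__()
        self.rows = []        # completed {"name": ..., "price": ...} dicts
        self._field = None    # field the next text node belongs to, if any
        self._current = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()
            # Emit a row once both fields of an item have been seen
            if "name" in self._current and "price" in self._current:
                self.rows.append(self._current)
                self._current = {}


def menu_to_csv(html: str) -> str:
    """Parse menu HTML and return CSV text with a numeric price column."""
    parser = MenuParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["name", "price"])
    writer.writeheader()
    for row in parser.rows:
        # Only a leading "$" is stripped; other currencies need their own handling
        writer.writerow({"name": row["name"], "price": float(row["price"].lstrip("$"))})
    return out.getvalue()


html = ('<div class="menu-item"><span class="name">Margherita</span><span class="price">$9.50</span></div>'
        '<div class="menu-item"><span class="name">Veggie Wrap</span><span class="price">$7.25</span></div>')
print(menu_to_csv(html))
```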

Step 4: Automate Scheduling and Alerts

Set scraping intervals (daily, hourly, weekly) and create alerts for major pricing changes. This ensures your team is always equipped with the latest data.
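A minimal Python sketch of the alerting side, assuming each scraping run produces a snapshot that maps item names to prices. The function name `price_alerts` and the 10% threshold are arbitrary examples; how the alerts are delivered (email, chat, dashboard) is left out.

```python
def price_alerts(previous: dict[str, float], current: dict[str, float],
                 threshold: float = 0.10) -> list[str]:
    """Compare two {item: price} snapshots and report new items, removed items,
    and price moves of at least `threshold` (0.10 means 10%)."""
    alerts = []
    for item, new in sorted(current.items()):
        old = previous.get(item)
        if old is None:
            alerts.append(f"NEW {item}: {new:.2f}")
        elif old > 0 and abs(new - old) / old >= threshold:
            alerts.append(f"CHANGED {item}: {old:.2f} -> {new:.2f} ({(new - old) / old:+.0%})")
    # Items that disappeared from the latest snapshot
    for item in sorted(previous.keys() - current.keys()):
        alerts.append(f"REMOVED {item}")
    return alerts


yesterday = {"Biryani": 10.0, "Wrap": 6.0, "Soda": 2.0}
today = {"Biryani": 12.0, "Wrap": 6.2, "Combo": 9.99}
for line in price_alerts(yesterday, today):
    print(line)
```

Small moves (the wrap's roughly 3% change here) stay below the threshold, which keeps the alert channel focused on major changes.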

Step 5: Analyze the Data

Feed the scraped data into BI tools like Power BI, Google Data Studio (now Looker Studio), or Tableau to identify patterns and inform strategic decisions.

Tools and Technologies for Effective Scraping

Popular Tools:

Scrapy: Python-based framework perfect for complex projects

BeautifulSoup: Great for parsing HTML and small-scale tasks

Selenium: Ideal for scraping dynamic pages with JavaScript

Octoparse: No-code solution with scheduling and cloud support

Apify: Advanced, scalable platform with ready-to-use APIs

Hosting and Automation:

Use cron jobs or task schedulers for automation

Store data on cloud databases like AWS RDS, MongoDB Atlas, or Google BigQuery

Legal Considerations: Is It Ethical to Scrape Food Delivery Platforms?

This is a critical aspect of scraping.

Understand Platform Terms

Many websites explicitly state in their Terms of Service that scraping is not allowed. Scraping such platforms can violate those terms, even if it’s not technically illegal.

Avoid Harming Website Performance

Always scrape responsibly:

Use rate limiting to avoid overloading servers

Respect robots.txt files

Avoid scraping login-protected or personal user data
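Two of the habits above, honoring robots.txt and rate limiting, can be combined in a small helper. This Python sketch uses the standard library's `urllib.robotparser`. The class name `PoliteFetcher` is our own, the `fetch` callable is a placeholder you would supply (for example, a wrapper around `urllib.request.urlopen`), and the one-second default delay is an arbitrary starting point, not a figure from any platform.

```python
import time
from urllib.robotparser import RobotFileParser


class PoliteFetcher:
    """Skips URLs disallowed by robots.txt and enforces a minimum delay between requests."""

    def __init__(self, robots_txt: str, user_agent: str, fetch, min_delay: float = 1.0):
        self.rp = RobotFileParser()
        self.rp.parse(robots_txt.splitlines())
        self.user_agent = user_agent
        self.fetch = fetch  # injected so this sketch stays network-free
        # Honor the site's Crawl-delay if it asks for more than our own minimum
        declared = self.rp.crawl_delay(user_agent)
        self.min_delay = max(min_delay, float(declared or 0))
        self._last = None

    def get(self, url: str):
        if not self.rp.can_fetch(self.user_agent, url):
            return None  # disallowed by robots.txt: skip instead of fetching
        if self._last is not None:
            wait = self.min_delay - (time.monotonic() - self._last)
            if wait > 0:
                time.sleep(wait)
        self._last = time.monotonic()
        return self.fetch(url)


robots = "User-agent: *\nDisallow: /checkout\n"
fetcher = PoliteFetcher(robots, "price-bot", fetch=lambda url: f"fetched {url}")
print(fetcher.get("https://example.com/menu"))         # allowed
print(fetcher.get("https://example.com/checkout/pay"))  # None: disallowed
```

In practice you would download the site's real robots.txt once per domain and reuse the fetcher for every request to that domain.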

Use Publicly Available Data

Stick to scraping data that’s:

Publicly accessible

Not behind paywalls or logins

Not personally identifiable or sensitive

If possible, work with third-party data providers who have pre-approved partnerships or APIs.

Real-World Use Cases of Price Monitoring via Scraping

A. Cloud Kitchens

A cloud kitchen operating in three cities uses scraping to monitor average pricing for biryani and wraps. Based on competitor pricing, they adjust their bundle offers and introduce combo meals—boosting order value by 22%.

B. Local Restaurants

A family-owned restaurant tracks rival pricing and delivery fees during weekends. By offering a free dessert on orders above $25 (when competitors don’t), they see a 15% increase in weekend orders.

C. Food Delivery Startups

A new delivery aggregator monitors established players’ pricing to craft a price-beating strategy, helping them enter the market with aggressive discounts and gain traction.

Key Metrics to Track Through Price Scraping

When setting up your monitoring dashboard, focus on:

Average price per cuisine category

Price differences across cities or neighborhoods

Top 10 lowest/highest priced items in your segment

Frequency of discounts and offers

Delivery fee trends by time and distance

Most used upsell combinations (e.g., sides, drinks)
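Most of these metrics are simple group-by aggregations once the data is in a table. A standard-library Python sketch, assuming each scraped row is a dict with hypothetical `cuisine`, `city`, `item`, and `price` keys; the function names are our own.

```python
from collections import defaultdict
from statistics import mean


def average_price_by(rows: list[dict], key: str) -> dict[str, float]:
    """Average price grouped by any field, e.g. "cuisine" or "city"."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["price"])
    return {k: round(mean(v), 2) for k, v in sorted(groups.items())}


def price_spread_by_item(rows: list[dict]) -> dict[str, float]:
    """Max minus min price for each item across all rows (cities, neighborhoods, platforms)."""
    prices = defaultdict(list)
    for row in rows:
        prices[row["item"]].append(row["price"])
    return {item: round(max(p) - min(p), 2) for item, p in sorted(prices.items())}


def cheapest(rows: list[dict], n: int = 10) -> list[dict]:
    """The n lowest-priced rows (reverse the sort for the highest-priced)."""
    return sorted(rows, key=lambda r: r["price"])[:n]


rows = [
    {"cuisine": "Indian", "city": "Pune", "item": "Biryani", "price": 9.0},
    {"cuisine": "Indian", "city": "Mumbai", "item": "Biryani", "price": 11.0},
    {"cuisine": "Wraps", "city": "Pune", "item": "Wrap", "price": 6.0},
]
print(average_price_by(rows, "cuisine"))  # {'Indian': 10.0, 'Wraps': 6.0}
print(price_spread_by_item(rows))         # {'Biryani': 2.0, 'Wrap': 0.0}
```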

Challenges in Food Delivery Data Scraping (And Solutions)

Challenge 1: Dynamic Content and JavaScript-Heavy Pages

Solution: Use browser automation tools such as Selenium, Puppeteer, or Playwright, running headless browsers, to scrape the rendered content.

Challenge 2: IP Blocking or Captchas

Solution: Rotate IPs with proxies, use CAPTCHA-solving tools, or throttle request rates.
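Throttling after a block is usually done with exponential backoff: wait longer after each failed attempt, with random jitter so that many workers don't retry in lockstep. A minimal Python sketch, assuming your own fetch function raises a hypothetical `RetryableError` for responses such as HTTP 429 (Too Many Requests) or 503; the delay values are arbitrary defaults.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical error your fetch function raises for retry-worthy responses (e.g. 429, 503)."""


def fetch_with_backoff(fetch, url, max_attempts=5, base_delay=1.0, max_delay=30.0,
                       sleep=time.sleep):
    """Call fetch(url), retrying on RetryableError with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            # Full jitter: wait a random time in [0, min(max_delay, base_delay * 2**attempt)]
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
```

The `sleep` parameter is only there so the waiting can be swapped out in tests; normal callers leave it as `time.sleep`.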

Challenge 3: Frequent Site Layout Changes

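Solution: Prefer stable selectors (IDs, data attributes, meaningful class names) over brittle positional XPaths or CSS paths, keep fallback selectors for key fields, and monitor scraper output regularly so layout changes are caught quickly.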

Challenge 4: Keeping Data Fresh

Solution: Schedule automated scraping and build change detection algorithms to prioritize meaningful updates.
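A simple form of change detection is to fingerprint each page and only re-parse pages whose fingerprint changed. The Python sketch below hashes the HTML with SHA-256 after stripping `<script>` blocks and collapsing whitespace. Which parts of a page count as noise is site-specific, so treat that cleaning step as an assumption to adapt; the function names are our own.

```python
import hashlib
import re


def content_fingerprint(html: str) -> str:
    """SHA-256 of the page after removing <script> blocks and collapsing whitespace."""
    cleaned = re.sub(r"<script\b.*?</script>", "", html, flags=re.S | re.I)
    cleaned = re.sub(r"\s+", " ", cleaned).strip()
    return hashlib.sha256(cleaned.encode("utf-8")).hexdigest()


def changed_pages(pages: dict[str, str], seen: dict[str, str]) -> list[str]:
    """Return URLs whose fingerprint differs from the stored one, updating `seen` in place."""
    changed = []
    for url, html in pages.items():
        fp = content_fingerprint(html)
        if seen.get(url) != fp:
            changed.append(url)
            seen[url] = fp
    return changed


seen = {}
print(changed_pages({"menu": "<p>Wrap $6</p>"}, seen))                                 # ['menu']
print(changed_pages({"menu": "<p>Wrap   $6</p><script>var t = 1;</script>"}, seen))  # []
print(changed_pages({"menu": "<p>Wrap $7</p>"}, seen))                                 # ['menu']
```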

Final Thoughts

In today’s digital-first food delivery market, being reactive is no longer enough. Real-time competitor pricing insights are essential to survive and thrive. Data scraping gives you the tools to make informed, timely decisions about your pricing, promotions, and product offerings.

Whether you're a single-location restaurant, an expanding cloud kitchen, or a new delivery platform, food delivery data scraping can help you gain a critical competitive edge. But it must be done ethically, securely, and with the right technologies.


Text

The Automation Myth: Why "Learn APIs" Is Bad Advice in the AI Era

You've heard it everywhere: "Master APIs to succeed in automation." It's the standard advice parroted by every AI expert and tech influencer. But after years in the trenches, I'm calling BS on this oversimplified approach.

Here's the uncomfortable truth: you can't "learn APIs" in any meaningful, universal way. Each platform implements them differently—sometimes radically so. Some companies build APIs with clear documentation and developer experience in mind (Instantly AI and Apify deserve recognition here), creating intuitive interfaces that feel natural to work with.

Then there are the others. The YouTube API, for example, forces you through labyrinthine documentation just to accomplish what should be basic tasks. What should take minutes stretches into hours or even days of troubleshooting and deciphering poorly explained parameters.

An ancient wisdom applies perfectly to AI automation: "There is no book, or teacher, to give you the answer." This isn't just philosophical—it's the practical reality of working with modern APIs and automation tools.

The theoretical knowledge you're stockpiling? Largely worthless until applied. Reading about RESTful principles or OAuth authentication doesn't translate to real-world implementation skills. Each platform has its quirks, limitations, and undocumented features that only reveal themselves when you're knee-deep in actual projects.

The real path forward isn't endless studying or tutorial hell. It's hands-on implementation:

Test the actual API directly

Act on what you discover through testing

Automate based on real results, not theoretical frameworks

While others are still completing courses on "API fundamentals," the true automation specialists are building, failing, learning, and succeeding in the real world.

Test. Act. Automate. Everything else is just noise.


Text

Data/Web Scraping

What Is Data Scraping?

Data scraping is the process of extracting information from websites or other digital sources. It is also known as web scraping.

Benefits of Data Scraping

1. Competitive Intelligence

Stay ahead of competitors by tracking their prices, product launches, reviews, and marketing strategies.

2. Dynamic Pricing

Automatically update your prices based on market demand, competitor moves, or stock levels.

3. Market Research & Trend Discovery

Understand what’s trending across industries, platforms, and regions.

4. Lead Generation

Collect emails, names, and company data from directories, LinkedIn, and job boards.

5. Automation & Time Savings

Why hire a team to collect data manually when a scraper can do it 24/7?

Who Uses Data Scraping?

Businesses, marketers, e-commerce and travel companies, startups, analysts, sales teams, recruiters, researchers, investors, agents, etc.

Top Data Scraping Browser Extensions

Web Scraper.io

Scraper

Instant Data Scraper

Data Miner

Table Capture

Top Data Scraping Tools

BeautifulSoup

Scrapy

Selenium

Playwright

Octoparse

Apify

ParseHub

Diffbot

Custom Scripts

Legal and Ethical Notes

Not all websites allow scraping. Some have terms of service that forbid it, and scraping too aggressively can get your IP blocked or lead to legal trouble.

Order Data/Web Scraping Services: https://www.fiverr.com/s/99AR68a


Text

Top 15 Data Collection Tools in 2025: Features and Benefits

In the data-driven world of 2025, the ability to collect high-quality data efficiently is paramount. Whether you're a seasoned data scientist, a marketing guru, or a business analyst, having the right data collection tools in your arsenal is crucial for extracting meaningful insights and making informed decisions. This blog will explore 15 of the best data collection tools you should be paying attention to this year, highlighting their key features and benefits.

Why the Right Data Collection Tool Matters in 2025:

The landscape of data collection has evolved significantly. We're no longer just talking about surveys. Today's tools need to handle diverse data types, integrate seamlessly with various platforms, automate processes, and ensure data quality and compliance. The right tool can save you time, improve accuracy, and unlock richer insights from your data.

Top 15 Data Collection Tools to Watch in 2025:

Apify: A web scraping and automation platform that allows you to extract data from any website. Features: Scalable scraping, API access, workflow automation. Benefits: Access to vast amounts of web data, streamlined data extraction.

ParseHub: A user-friendly web scraping tool with a visual interface. Features: Easy point-and-click interface, IP rotation, cloud-based scraping. Benefits: No coding required, efficient for non-technical users.

SurveyMonkey Enterprise: A robust survey platform for large organizations. Features: Advanced survey logic, branding options, data analysis tools, integrations. Benefits: Scalable for complex surveys, professional branding.

Qualtrics: A comprehensive survey and experience management platform. Features: Advanced survey design, real-time reporting, AI-powered insights. Benefits: Powerful analytics, holistic view of customer experience.

Typeform: Known for its engaging and conversational survey format. Features: Beautiful interface, interactive questions, integrations. Benefits: Higher response rates, improved user experience.

Jotform: An online form builder with a wide range of templates and integrations. Features: Customizable forms, payment integrations, conditional logic. Benefits: Versatile for various data collection needs.

Google Forms: A free and easy-to-use survey tool. Features: Simple interface, real-time responses, integrations with Google Sheets. Benefits: Accessible, collaborative, and cost-effective.

Alchemer (formerly SurveyGizmo): A flexible survey platform for complex research projects. Features: Advanced question types, branching logic, custom reporting. Benefits: Ideal for in-depth research and analysis.

Formstack: A secure online form builder with a focus on compliance. Features: HIPAA compliance, secure data storage, integrations. Benefits: Suitable for regulated industries.

MongoDB Atlas Charts: A data visualization tool for data stored in MongoDB Atlas. Features: Real-time data updates, interactive charts, MongoDB integration. Benefits: Seamless for MongoDB users, visual data exploration.

Amazon Kinesis Data Streams: A scalable and durable real-time data streaming service. Features: High throughput, real-time processing, integration with AWS services. Benefits: Ideal for collecting and processing streaming data.

Apache Kafka: A distributed streaming platform for building real-time data pipelines. Features: High scalability, fault tolerance, real-time data processing. Benefits: Robust for large-scale streaming data.

Segment: A customer data platform that collects and unifies data from various sources. Features: Data integration, identity resolution, data governance. Benefits: Holistic view of customer data, improved data quality.

Mixpanel: A product analytics platform that tracks user interactions within applications. Features: Event tracking, user segmentation, funnel analysis. Benefits: Deep insights into user behavior within digital products.

Amplitude: A product intelligence platform focused on understanding user engagement and retention. Features: Behavioral analytics, cohort analysis, journey mapping. Benefits: Actionable insights for product optimization.

Choosing the Right Tool for Your Needs:

The best data collection tool for you will depend on the type of data you need to collect, the scale of your operations, your technical expertise, and your budget. Consider factors like:

Data Type: Surveys, web data, streaming data, product usage data, etc.

Scalability: Can the tool handle your data volume?

Ease of Use: Is the tool user-friendly for your team?

Integrations: Does it integrate with your existing systems?

Automation: Can it automate data collection processes?

Data Quality Features: Does it offer features for data cleaning and validation?

Compliance: Does it meet relevant data privacy regulations?

Elevate Your Data Skills with Xaltius Academy's Data Science and AI Program:

Mastering data collection is a crucial first step in any data science project. Xaltius Academy's Data Science and AI Program equips you with the fundamental knowledge and practical skills to effectively utilize these tools and extract valuable insights from your data.

Key benefits of the program:

Comprehensive Data Handling: Learn to collect, clean, and prepare data from various sources.

Hands-on Experience: Gain practical experience using industry-leading data collection tools.

Expert Instructors: Learn from experienced data scientists who understand the nuances of data acquisition.

Industry-Relevant Curriculum: Stay up-to-date with the latest trends and technologies in data collection.

By exploring these top data collection tools and investing in your data science skills, you can unlock the power of data and drive meaningful results in 2025 and beyond.


Text

What is web scraping and what tools to use for web scraping?

Web scraping is the process of extracting data from websites automatically using software scripts. This technique is widely used for data mining, price monitoring, sentiment analysis, market research and more.

BeautifulSoup

Scrapy

Selenium

Requests & LXML

ScraperAPI

Octoparse

Apify

Data Miner

Web Scraper

Puppeteer

Playwright


Text


Website Scraping Tools

Website scraping tools are essential for extracting data from websites. They automate the process of gathering information, making it easier and faster to collect large amounts of data. Here are some popular website scraping tools that you might find useful:

1. Beautiful Soup: A Python library that makes it easy to scrape information from web pages. It provides Pythonic idioms for iterating over, searching, and modifying parse trees built by HTML or XML parsers.

2. Scrapy: An open-source, Python-based framework for crawling websites and extracting the data you need. It is fast, highly extensible, and can handle large-scale web scraping projects.

3. Octoparse: A powerful web scraping tool that lets users extract data from websites without writing any code. It supports both visual and code-based scraping.

4. ParseHub: A cloud-based web scraping tool with a user-friendly interface. It handles dynamic, JavaScript-rendered sites and offers features such as form filling, AJAX-driven content, and deep web scraping, which makes it suitable for large-scale projects.

5. SuperScraper: A no-code web scraping tool that lets users scrape data by pointing and clicking on the elements they want. It is a good fit for those without extensive programming knowledge.

6. Apify: A platform that simplifies scraping data from websites. It supports automatic data extraction and can handle complex websites with JavaScript rendering.

7. Diffbot: A web scraping API that automatically extracts structured data from websites. It is particularly good at handling dynamic websites and works with most sites out of the box.

8. Data Miner: A web scraping tool, available as a browser extension, for extracting data from websites, including dynamic pages.

9. Import.io: A web scraping tool that turns websites into custom APIs. It is particularly useful for extracting data from sites that require login credentials or have complex structures.

10. Bright Data (formerly Luminati): A proxy network that helps in bypassing IP blocks and CAPTCHAs.

11. ScrapeStorm: A web scraping tool that offers automatic data extraction and can handle JavaScript-heavy sites.

12. Scrapinghub (now Zyte): A cloud-based web scraping platform that offers automatic data extraction and can handle JavaScript-heavy sites.

Each of these tools has its own strengths and weaknesses, so it's important to choose the one that best fits your specific requirements.


Video

youtube

Apify vs. Leads Sniper: Which is the best Google Maps Scraper? 👌


Text

📒 Unlock Business Data Effortlessly with the Advanced YellowPages Scraper!

Need reliable business information in bulk? Meet the Advanced YellowPages Scraper by Dainty Screw—the ultimate tool to extract data from YellowPages quickly and efficiently.

✨ What It Can Do:

• 📇 Extract business names, phone numbers, and addresses.

• 🌐 Collect website links, emails, and ratings.

• 📍 Scrape data for specific industries, categories, or locations.

• 🚀 Automate large-scale data collection with ease.

💡 Perfect For:

• Marketers generating leads.

• Businesses building directories.

• Researchers analyzing industry trends.

• Developers creating business data-driven applications.

🚀 Why This Scraper Stands Out:

• Accurate Results: Extracts the latest business data without errors.

• Customizable Options: Target your specific needs by location or category.

• Time-Saving Automation: Get thousands of results in minutes.

• Scalable & Reliable: Handles even the largest datasets with ease.

🔗 Start Scraping Today:

Get started with the Advanced YellowPages Scraper now: YellowPages Scraper

https://apify.com/dainty_screw/advanced-yellowpages-scraper

🙌 Say goodbye to manual searches and hello to smarter business data extraction. Boost your projects, leads, and insights today!
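For developers who want to call the scraper from code rather than the web console, the sketch below builds a request to Apify's `run-sync-get-dataset-items` API endpoint using only Python's standard library. The actor ID comes from the link above (API paths write `username/name` as `username~name`). The input fields (`search`, `location`, `maxItems`) are hypothetical placeholders, so check the actor's input schema on its Apify page for the real names, and the token is a placeholder too. The request is only built here, not sent.

```python
import json
import urllib.request

API_BASE = "https://api.apify.com/v2"


def build_run_request(actor_id: str, run_input: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST that runs an actor and returns its dataset items."""
    path_id = actor_id.replace("/", "~")  # "username/name" -> "username~name" in API paths
    return urllib.request.Request(
        f"{API_BASE}/acts/{path_id}/run-sync-get-dataset-items",
        data=json.dumps(run_input).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


# Hypothetical input fields: replace them with the ones from the actor's input schema.
req = build_run_request(
    "dainty_screw/advanced-yellowpages-scraper",
    {"search": "plumbers", "location": "Austin, TX", "maxItems": 50},
    token="YOUR_APIFY_TOKEN",
)
print(req.get_method(), req.full_url)

# To actually run it (requires a valid token and consumes platform credits):
# with urllib.request.urlopen(req) as resp:
#     items = json.load(resp)
```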

Tags: #YellowPagesScraper #BusinessData #WebScraping #LeadGeneration #DataAutomation #ApifyTools #BusinessDirectory #DataExtraction


Text

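What Tools Make Up a "Superior Spider Pool"?

In internet technology, a "superior spider pool" (超凡蜘蛛池) usually refers to a collection of tools for automatically crawling web pages. These tools help users collect and process web data efficiently and are widely used in data analysis, market research, and other scenarios. Below, we introduce some common tools of this kind.

1. Scrapy

Scrapy is an open-source Python framework designed for large-scale crawling projects. It offers a powerful feature set, including support for handling logins, output in multiple data formats (such as JSON and CSV), and a highly customizable middleware system. For projects that need frequent data updates or must process large numbers of pages, Scrapy is a very good choice.

2. Octoparse

Octoparse is a visual, no-code web crawler suited to people without a programming background who still want to scrape data. With simple drag-and-drop operations, users can quickly build complex crawling tasks, and the software also supports multi-threaded acceleration and cloud deployment.

3. ParseHub

ParseHub provides a cloud-based platform for building and running crawling projects. It lets users define custom rules to scrape specific types of data and can handle dynamically loaded content. ParseHub also offers an API for easy integration with other applications.

4. Apify

Apify is a fully managed crawling platform on which users can write the entire crawler in JavaScript (Python is also supported). The platform provides a rich set of built-in libraries that make development simpler and faster. Apify also supports distributed execution and scheduling, making it well suited to large enterprise applications.

5. Bright Data (formerly Luminati)

Bright Data is a company that provides proxy services; its products help crawlers get past websites' anti-scraping mechanisms. Using its proxy pool, developers can access target sites with less chance of being detected, which improves crawler success rates.

Conclusion

Those are the tools we introduced today. Each has its own strengths and suitable scenarios, and which one you choose depends on your specific needs and technical skills. If one of them interests you, try it out yourself!

Feel free to share other tools you have used, or ask any questions, in the comments!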


Text

Top 5 Amazon Scraping Services

An Amazon scraping service must:

Have enough industry experience to handle the technicalities of web scraping.

Provide a high-quality data set that has been through proper checks.

Deliver a dataset tailored to your preferences.

Know the legal landscape.

Be capable of scaling their service as your company grows.

Respond to your grievances quickly.

Here are the top 5:

1. ScrapeHero

2. Apify

3. Zyte

4. Octoparse

5. Import.io
