#automata and language theory


Text

I need to rant for a moment, and maybe some of you nerds on Tumblr will listen when I warn you about the dangers of invoking the empty language in a proof, because clearly none of the students I TA for did.

In automata theory, a language is the set of input sequences that a machine accepts. Loosely, think of it as the set of user inputs that cause a program to exit without error.

The empty language is the language that contains no strings at all (not even the empty string). In other words, if a machine's language is empty, then every input you could try giving it will either generate an error or fail to shut the machine down.
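To make "a machine whose language is empty" concrete, here is a minimal sketch for a deterministic finite automaton. The dictionary encoding and the name `language_is_empty` are illustrative assumptions, not from the post. The idea is that a DFA accepts nothing exactly when no accepting state can be reached from the start state.

```python
from collections import deque

def language_is_empty(start, accepting, transitions):
    """Return True if no accepting state is reachable from `start`.

    `transitions` maps state -> {symbol: next_state} (an assumed encoding).
    """
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state in accepting:
            return False  # some input string leads here, so the language is non-empty
        for nxt in transitions.get(state, {}).values():
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return True

# Hypothetical DFA: the only accepting state q2 is unreachable from q0.
transitions = {
    "q0": {"a": "q1", "b": "q0"},
    "q1": {"a": "q1", "b": "q0"},
    "q2": {"a": "q2", "b": "q2"},
}

print(language_is_empty("q0", {"q2"}, transitions))  # True
print(language_is_empty("q0", {"q1"}, transitions))  # False
```

Note that making the start state accepting would put the empty string in the language, which is not the same thing as the empty language.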

And because the empty language is empty, many statements about it are vacuously true, so statements that look contradictory can hold for it at the same time.

For example, the empty language is part of the set of languages that contain only palindromes and odd-length inputs (because there are no non-palindromes or even-length inputs in it). And the empty language is also part of the set of languages that contain only non-palindromes and even-length inputs (because there are no palindromes or odd-length inputs in it).
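The palindrome example can be checked directly. This is only a sketch: it models a language as a finite Python set of strings (real languages are often infinite), and the function names are made up for illustration. Python's `all` returns `True` on an empty iterable, which is exactly the vacuous truth at work.

```python
def is_palindrome(word: str) -> bool:
    return word == word[::-1]

def only_odd_palindromes(language: set) -> bool:
    """Every string in the language is a palindrome of odd length."""
    return all(is_palindrome(w) and len(w) % 2 == 1 for w in language)

def only_even_non_palindromes(language: set) -> bool:
    """Every string in the language is a non-palindrome of even length."""
    return all(not is_palindrome(w) and len(w) % 2 == 0 for w in language)

empty_language = set()
sample = {"aba", "racecar"}

# The empty language satisfies both "contradictory" properties at once.
print(only_odd_palindromes(empty_language))       # True
print(only_even_non_palindromes(empty_language))  # True

# A non-empty language cannot satisfy both.
print(only_odd_palindromes(sample))               # True
print(only_even_non_palindromes(sample))          # False
```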

So whenever you do a proof about determining the characteristics of a language, you need to be very cautious about using the empty language in your proof because it may unexpectedly pass the vibe check.

Unless you 100% understand what will happen with the empty set, avoid using it in your proofs.

Anyway, this is just a long-winded way of saying I've had to take points off one particular exam question for most of the students I grade for something I've warned them constantly to avoid or be wary of.


Text

interested in alien contact stories that present alien psychology as a way out of binaries (currently re foreigner) bc that then creates a larger overarching binary of “how they think” and “how we think.” possible to escape the nested binaries? i’m being promised [spoilers] a third potentially hostile alien race at some point, so maybe that could get us out

#foreigner blogging#taking the explorations of the language seriously in this continues to be a struggle bc chomsky and sapir-whorf r fighting in my head and i’m#not sure these books want them to be. like bren is very much changed by speaking the atevi language#especially since these people r linguists it’s odd that no one is at all interested in thinking outside the behaviorist box even if just for#funsies in a random conversation. idk this is as far as my 1 semester of getting really invested in my automata theory class gets me#also while i’m here what is GOING ON with bren/jase. ‘the one human being on whom he’d focused all his remnant of humanity’ OK GAYASS


Note

what's it like studying CS?? im pretty confused if i should choose CS as my major xx

hi there!

first, two "misconceptions" or maybe somewhat surprising things that I think are worth mentioning:

there really isn't that much "math" in the calculus/arithmetic sense*. I mostly remember doing lots of proofs. don't let not being a math wiz stop you from majoring in CS if you like CS

you can get by with surprisingly little programming - yeah you'll have programming assignments, but a degree program will teach you the theory and concepts for the most part (this is where universities will differ on the scale of theory vs. practice, but you'll always get a mix of both and it's important to learn both!)

*: there are some sub-fields where you actually do a Lot of math - machine learning and graphics programming will have you doing a lot of linear algebra, and I'm sure that there are plenty more that I don't remember at the moment. the point is that 1) if you're a bit afraid of math that's fine, you can still thrive in a CS degree but 2) if you love math or are willing to be brave there are a lot of cool things you can do!

I think the best way to get a good sense of what a major is like is to check out a sample degree plan from a university you're considering! here are some of the basic kinds of classes you'd be taking:

basic programming courses: you'll knock these out in your first year - once you know how to code and you have an in-depth understanding of the concepts, you now have a mental framework for the rest of your degree. and also once you learn one programming language, it's pretty easy to pick up another one, and you'll probably work in a handful of different languages throughout your degree.

discrete math/math for computer science courses: more courses that you'll take early on - this is mostly logic and learning to write proofs, and towards the end it just kind of becomes a bunch of semi-related math concepts that are useful in computing & problem solving. oh also I had to take a stats for CS course & a linear algebra course. oh and also calculus but that was mostly a university core requirement thing, I literally never really used it in my CS classes lol

data structures & algorithms: these are the big boys. stacks, queues, linked lists, trees, graphs, sorting algorithms, more complicated algorithms… if you're interviewing for a programming job, they will ask you data structures & algorithms questions. also this is where you learn to write smart, efficient code and solve problems. also this is where you learn which problems are proven to be unsolvable (or at least unsolvable in a reasonable amount of time) so you don't waste your time lol

courses on specific topics: operating systems, Linux/UNIX, circuits, databases, compilers, software engineering/design patterns, automata theory… some of these will be required, and then you'll get to pick some depending on what your interests are! I took cybersecurity-related courses but there really are so many different options!

In general I think CS is a really cool major that you can do a lot with. I realize this was pretty vague, so if you have any more questions feel free to send them my way! also I'm happy to talk more about specific classes/topics or if you just want an answer to "wtf is automata theory" lol

#asks#computer science#thank you for the ask!!! I love talking abt CS and this made me remember which courses I took lol#also side note I went to college at a public college in the US - things could be wildly different elsewhere idk#but these are the basics so I can't imagine other programs varying too widely??


Text

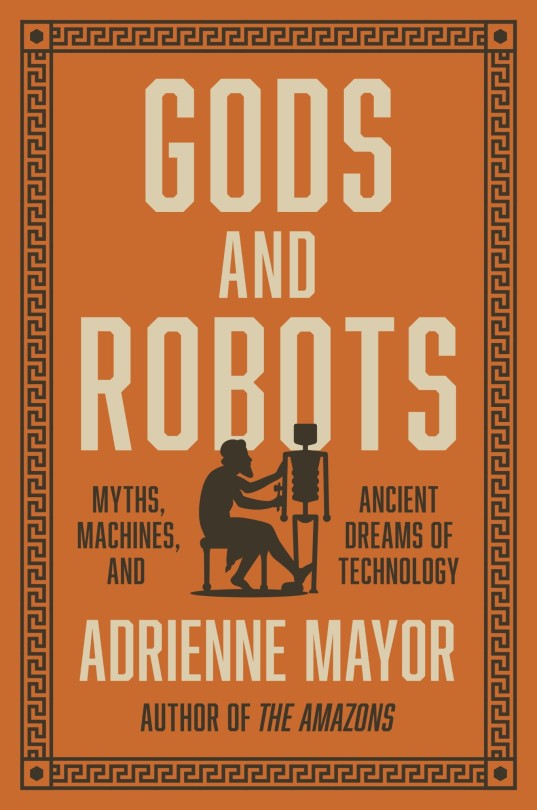

Gods and Robots: Myths, Machines, and Ancient Dreams of Technology. By Adrienne Mayor. Princeton University Press, 2018.

Rating: 4/5 stars

Genre: classics, mythology

Series: N/A

Summary: The fascinating untold story of how the ancients imagined robots and other forms of artificial life, and even invented real automated machines. The first robot to walk the earth was a bronze giant called Talos. This wondrous machine was created not by MIT Robotics Lab, but by Hephaestus, the Greek god of invention. More than 2,500 years ago, long before medieval automata, and centuries before technology made self-moving devices possible, Greek mythology was exploring ideas about creating artificial life, and grappling with still-unresolved ethical concerns about biotechne, “life through craft.” In this compelling, richly illustrated book, Adrienne Mayor tells the fascinating story of how ancient Greek, Roman, Indian, and Chinese myths envisioned artificial life, automata, self-moving devices, and human enhancements, and how these visions relate to and reflect the ancient invention of real animated machines. As early as Homer, Greeks were imagining robotic servants, animated statues, and even ancient versions of Artificial Intelligence, while in Indian legend, Buddha’s precious relics were defended by robot warriors copied from Greco-Roman designs for real automata. Mythic automata appear in tales about Jason and the Argonauts, Medea, Daedalus, Prometheus, and Pandora, and many of these machines are described as being built with the same materials and methods that human artisans used to make tools and statues. And, indeed, many sophisticated animated devices were actually built in antiquity, reaching a climax with the creation of a host of automata in the ancient city of learning, Alexandria, the original Silicon Valley. A groundbreaking account of the earliest expressions of the timeless impulse to create artificial life, Gods and Robots reveals how some of today’s most advanced innovations in robotics and AI were foreshadowed in ancient myth, and how science has always been driven by imagination. This is mythology for the age of AI.

***Full review below.***

CONTENT WARNINGS: descriptions of myths that include violence and bestiality, mention of slavery

I first heard of this book when Adrienne Mayor was a guest on the Mindscape podcast. I found her ideas and arguments to be interesting, so I figured her book would expand on the concepts she explored on the podcast.

Overall, I think this book is an accessible intro for readers interested in classical studies and the history of science. Mayor writes clearly and without a lot of specialized terminology, so even if you're new to classics, you won't find this book difficult. I think the chapter about Talos was the most convincing and the most well-done, bringing together textual analysis and art history in a way I found intellectually pleasing.

As a scholar, however, I think this book could have gone a bit further, especially with its analysis of myths and texts. Mayor summarizes most of the myths she discusses, which is all well and good for situating the reader in the texts. But what I really wanted was more specific evidence and close reading: were there any interesting language choices used to describe this automaton? Did the Greeks have a certain vocabulary for artificial beings? As it stands, it felt like Mayor's textual analysis relied on general ideas, and while fine as a starting point, I really think it could have been pushed.

I also think there's some room to apply critical frameworks such as posthumanism and transhumanism theory. Applying such theories would enhance the meanings Mayor is trying to get at, but in all fairness, this may be beyond the scope of the book. Mayor seems to be relaying a history of ideas rather than trying to get at their interpretation, so maybe her book will function well as a springboard for other scholars and students to do this work.

Lastly, I do think this book could have been framed a little better to help me as a reader see some of the through-lines. There were moments when I wasn't sure how each of Mayor's examples fit in with the overall theme of "robots" or "artificial life," so maybe a better framing device would be the theme of biotechne rather than the sci fi approach that the book currently uses. Similarly, some further delineation between what constitutes "technology" versus magic (and even just "art") would have been helpful for seeing how each individual chapter builds upon the previous.

TL;DR: Gods and Robots is a good overview of the history of artificial life and technology in the ancient Greek world. This book is perhaps most useful as a springboard for further analysis, and historians, art historians, and literary scholars will find plenty to build on.


Note

how do you feel about the thing in TCS where Theory A (algorithms, complexity, etc) is America and Theory B (formal languages, automata, logic, whatever) is Europe; how well does that mesh w your schema

I don't know what TCS is. The Computer Science? well anyway. I think this is a little bit true. I think this is true. although my favorite logician is haskell curry and he was american, and his outlook on logic, like philosophically and in terms of what he was interested in, is very refreshing and straightforward to me in contrast to like, russell, whose deal I Don't Get. so I think that logic could do with being a little more american than it is. but by virtue of historical circumstance, possibly mediated by inherent Essence, it is indeed the more european of the two.


Text

THEORY OF COMPUTER SCIENCE: AUTOMATA, LANGUAGES AND COMPUTATION, Third Edition by Mishra and Chandrasekaran

Meets the needs of undergraduate and postgraduate students of computer science and engineering, as well as students taking courses in computer applications.

The book is available for purchase on our website. Click http://social.phindia.com/2doLtmpv.

Also available on Amazon, Kindle, Google Books and Flipkart.

#phibooks#philearning#phibookclub#ebook#education#undergraduate#textbooks#books#postgraduate#automata#theory of computer science#amazon#flipkart#kindle#google books#university books#student#college life#studying#exams#university#student life


Text

Integration of AFL in Logistics Software: Enhancing Efficiency and Automation

In today’s rapidly evolving logistics industry, automation and digitization are key to streamlining operations. One such technological advancement is the integration of AFL (Automata and Formal Languages) into logistics software. By incorporating AFL, logistics companies can optimize their workflows, improve accuracy, and enhance operational efficiency.

What is AFL (Automata and Formal Languages)?

AFL is the area of theoretical computer science that studies abstract machines (automata) and the formal languages they recognize. In logistics software, its concepts, such as finite-state machines and grammars, can be applied to model workflows and validate structured inputs, and the same modeling can support algorithms for routing, scheduling, and process automation within the supply chain.

Key Benefits of Integrating AFL in Logistics Software

1. Optimized Routing and Scheduling

AFL enables the development of automated routing algorithms that optimize delivery schedules, reduce transit times, and minimize fuel costs. These models ensure efficient logistics operations.

2. Automation of Workflows

Using formal language principles, logistics software can automate data processing, inventory management, and shipment tracking, reducing human intervention and improving efficiency.

3. Error Reduction and Data Validation

AFL provides a structured approach to error handling and validation, ensuring that incorrect inputs are filtered out before processing. This minimizes disruptions in freight management.

4. Enhanced Decision-Making with AI Integration

AFL-based automata help build predictive models that assist in demand forecasting, intelligent dispatching, and optimizing logistics networks.

5. Streamlined Documentation and Compliance

By applying AFL concepts, logistics software can automate regulatory compliance, document generation, and verification processes, ensuring smoother international trade operations.
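As a rough illustration of the data validation point above, the sketch below uses a small deterministic finite automaton to reject malformed identifiers before they are processed. The shipment ID format (two uppercase letters followed by six digits) and all names are hypothetical, chosen only for the example; nothing here reflects a real product's format.

```python
import string

def char_class(ch: str) -> str:
    """Classify one character into the DFA's input alphabet."""
    if ch in string.ascii_uppercase:
        return "letter"
    if ch in string.digits:
        return "digit"
    return "other"

def is_valid_shipment_id(text: str) -> bool:
    """Accept exactly: 2 uppercase letters, then 6 digits (hypothetical format).

    States 0-1 expect a letter, states 2-7 expect a digit, state 8 accepts.
    Any other transition rejects immediately.
    """
    state = 0
    for ch in text:
        kind = char_class(ch)
        if state < 2 and kind == "letter":
            state += 1
        elif 2 <= state < 8 and kind == "digit":
            state += 1
        else:
            return False
    return state == 8

print(is_valid_shipment_id("AB123456"))   # True
print(is_valid_shipment_id("A1234567"))   # False
print(is_valid_shipment_id("AB1234567"))  # False
```

The same check could be written as a regular expression; the explicit state counter just makes the automaton visible.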

How QuickMove Technologies Enhances AFL Integration

QuickMove Technologies specializes in intelligent logistics software solutions, leveraging AFL-based algorithms for automated scheduling, smart inventory management, and optimized freight forwarding.

For more details on how QuickMove Technologies integrates AFL in logistics software, visit: QuickMove Technologies.

Conclusion

AFL integration in logistics software is a game-changer, providing structured automation, intelligent decision-making, and operational efficiency. As logistics operations grow in complexity, leveraging AFL-based solutions ensures businesses remain competitive in an ever-evolving market.

Explore how QuickMove Technologies can transform your logistics operations: QuickMove Technologies.


Text

Btech Computer Science

BTech in Computer Science: An Overview

Bachelor of Technology (BTech) in Computer Science is an undergraduate degree program that equips students with the knowledge and skills required to succeed in the dynamic field of computer science. This program typically spans four years and is designed to provide a deep understanding of computer systems, software development, algorithms, data structures, networking, and emerging technologies.

In today’s digital age, computer science plays a central role in driving innovation, shaping industries, and influencing almost every aspect of our lives. From artificial intelligence (AI) and machine learning (ML) to cloud computing and cybersecurity, a BTech in Computer Science opens doors to a wide range of career opportunities.

This article will explore the key aspects of a BTech in Computer Science, including its curriculum, career prospects, skills developed, and its significance in the ever-evolving tech landscape.

1. Curriculum of BTech Computer Science

The BTech in Computer Science curriculum is designed to provide a balanced blend of theoretical concepts and practical applications. The program typically includes courses in mathematics, programming, computer architecture, data structures, algorithms, databases, and software engineering. Here's an overview of the core subjects that students encounter during their studies:

Year 1: Foundation Courses

Mathematics: Topics such as calculus, linear algebra, discrete mathematics, and probability theory are covered in the first year. These are fundamental to understanding algorithms and data structures in computer science.

Programming Languages: Students learn languages such as C, C++, or Python. This is an essential part of the curriculum, as programming forms the backbone of software development.

Introduction to Computer Science: This includes an introduction to basic computer operations, hardware, and software fundamentals.

Year 2: Core Computer Science Courses

Data Structures and Algorithms: This is one of the most important subjects in computer science. Students learn how to organize and manage data efficiently, and how to design and analyze algorithms.

Computer Organization and Architecture: Students learn the structure of computer hardware, including processors, memory, input/output systems, and how they interact with software.

Database Management Systems (DBMS): This course focuses on the design, development, and management of databases, an essential skill for handling data in real-world applications.

Year 3: Advanced Topics

Operating Systems: This subject teaches how operating systems manage hardware and software resources, and how to work with system-level programming.

Software Engineering: Students learn about software development methodologies, project management, and quality assurance practices.

Computer Networks: This course covers the principles and protocols of networking, including the OSI model, TCP/IP, and routing algorithms.

Theory of Computation: This is an advanced topic focusing on automata theory, formal languages, and computational complexity.

Year 4: Specializations and Electives

In the final year, students have the opportunity to choose electives based on their areas of interest. Some of the popular electives include:

Artificial Intelligence (AI) and Machine Learning (ML): Students learn about intelligent systems, algorithms, and techniques used in AI and ML.

Cybersecurity: This course focuses on protecting computer systems, networks, and data from cyber threats.

Cloud Computing: Students learn about cloud architectures, services, and how to develop applications for cloud platforms.

Big Data Analytics: Students gain knowledge of analyzing and processing large datasets using various tools and techniques.

Mobile App Development: This focuses on developing applications for mobile devices using platforms like Android and iOS.

Additionally, students also undertake a final year project that allows them to apply the concepts they have learned throughout the course in a real-world scenario.

2. Skills Developed During the BTech in Computer Science

Throughout the course, students develop a wide range of technical and soft skills, preparing them for a successful career in technology. These skills include:

Technical Skills

Programming and Coding: Students become proficient in multiple programming languages like C, C++, Python, Java, and JavaScript.

Problem Solving: A BTech in Computer Science emphasizes logical thinking and problem-solving, which are essential for developing algorithms and software solutions.

System Design: Students learn how to design scalable and efficient software systems, ensuring they meet real-world requirements.

Data Management: The course provides deep knowledge of databases, including relational databases, NoSQL databases, and big data technologies.

Networking and Security: Knowledge of network protocols, data communication, and cybersecurity practices is a vital part of the curriculum.

Software Development: Students gain experience in the entire software development lifecycle, from design to coding to testing and maintenance.

Soft Skills

Teamwork and Collaboration: Most of the coursework, especially project work, involves collaboration, teaching students to work effectively in teams.

Communication: Students learn to communicate complex technical concepts to non-technical stakeholders, a skill critical for interacting with clients, managers, and team members.

Time Management: Managing deadlines for assignments, projects, and exams develops strong time management skills.

3. Career Opportunities for BTech Computer Science Graduates

A BTech in Computer Science offers a wide range of career opportunities across various industries. As technology continues to evolve, the demand for skilled computer science professionals has been steadily increasing. Here are some of the top career paths for BTech graduates:

Software Developer/Engineer

Software development is one of the most popular career options for computer science graduates. As a software developer, you will design, develop, and maintain software applications across various domains such as web development, mobile applications, gaming, and enterprise software solutions.

Data Scientist/Analyst

With the growing importance of big data, data scientists and analysts are in high demand. These professionals analyze large datasets to extract meaningful insights, helping businesses make data-driven decisions.

Cybersecurity Analyst

Cybersecurity is a rapidly growing field, with organizations looking for experts to protect their systems from cyber threats. Cybersecurity analysts monitor, analyze, and respond to security incidents to safeguard data and infrastructure.

Artificial Intelligence/Machine Learning Engineer

AI and ML engineers build intelligent systems and algorithms that enable machines to learn from data. These professionals work on creating autonomous systems, recommendation algorithms, and predictive models for various industries.

Cloud Architect

Cloud computing has become an essential part of modern infrastructure. Cloud architects design and implement cloud solutions for businesses, ensuring scalability, security, and reliability of applications hosted in the cloud.

Mobile App Developer

With the increasing use of smartphones, mobile app development has become a crucial area in computer science. Mobile app developers create applications for iOS and Android platforms, working on user interface design and functionality.

Systems Analyst

A systems analyst evaluates and designs IT systems for organizations, ensuring that technology solutions meet business requirements and improve overall efficiency.

Researcher/Academic

Graduates interested in academic pursuits can opt for a career in research, either in industry or academia. Computer science research is constantly evolving, with new innovations and technologies being developed regularly.

4. Future of BTech in Computer Science

The future of BTech in Computer Science is extremely promising. The rapid pace of technological advancements means that there will always be a need for skilled computer science professionals. Some of the key areas of growth include:

Artificial Intelligence and Machine Learning: AI and ML continue to evolve, with applications across industries ranging from healthcare to finance to autonomous vehicles.

Quantum Computing: As quantum computing becomes more advanced, computer scientists with specialized knowledge in this field will be in high demand.

Blockchain Technology: Blockchain has applications beyond cryptocurrencies, including supply chain management, voting systems, and secure transactions.

Internet of Things (IoT): The IoT revolution will create new opportunities for computer science professionals to develop connected devices and systems.

Robotics: Robotics is expected to grow significantly, particularly in automation and manufacturing industries.

Conclusion

A BTech in Computer Science provides students with a strong foundation in both theoretical and practical aspects of computing, preparing them for a wide range of career opportunities in the tech industry. With the world increasingly relying on technology, the demand for computer science professionals is expected to continue to rise.

In addition to technical expertise, graduates develop critical thinking, problem-solving, and communication skills, which are essential for success in the modern workforce. Whether pursuing software development, data science, AI, or cybersecurity, a BTech in Computer Science can lead to a fulfilling and rewarding career in one of the most dynamic and rapidly evolving fields of our time.

#BTech#ComputerScience#TechEducation#SoftwareEngineering#Coding#Programming#ArtificialIntelligence#MachineLearning#DataScience#Cybersecurity#TechCareers#CloudComputing#MobileAppDevelopment#BigData#IoT#Blockchain#TechInnovation#TechStudents#STEM#FutureOfTech#ComputerScienceDegree#TechSkills#TechTrends#InnovationInTech#AI#SoftwareDevelopment#EngineeringDegree#Technology#DigitalTransformation#Computing


Text

Bill Gosper

Bill Gosper is a noted American computer scientist and mathematician celebrated for his contributions to computer science, especially in algorithms and symbolic computation. He was a prominent member of the early hacker community at MIT's AI Lab, where he worked extensively in Lisp and contributed to the Macsyma computer algebra system. Gosper is particularly famous for his work on Conway's "Game of Life," a cellular automaton in which he discovered the glider gun, and for which he later devised the Hashlife algorithm to simulate patterns quickly.

His mathematical interests extend to combinatorial game theory and number theory. Beyond academia, he has also influenced the creation of mathematical software tools and tackled various mathematical problems, often using computational methods. Gosper's innovative blend of mathematical rigor and computing has left a lasting mark on both fields.

Born: 26 April 1943 (age 81 years), New Jersey, United States

Education: Massachusetts Institute of Technology

Why did Bill Gosper invent Gosper island?

Bill Gosper created Gosper Island as part of his work on recursive structures and fractals. Gosper Island is a self-similar shape with a fractal boundary that arises as the region filled by a space-filling curve, the Gosper curve (also called the flowsnake).

The importance of Gosper Island lies in its properties as a mathematical object. It serves as an example of how simple rules can lead to complex and interesting patterns. The island is often associated with the study of tiling, geometry, and the behavior of iterative processes. It is also notable for its representation of fractal principles, which have applications in various fields, including computer science, physics, and biology.
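The "simple rules, complex patterns" idea can be sketched with an L-system, a rewriting system closely related to formal grammars. The two rules below are the commonly cited ones for the Gosper curve, the space-filling curve associated with Gosper Island, with 60-degree turns; the function names are illustrative, and which sign means "left" is only a convention (flipping it mirrors the curve).

```python
import math

# Rewriting rules commonly given for the Gosper curve (flowsnake).
RULES = {
    "A": "A-B--B+A++AA+B-",
    "B": "+A-BB--B-A++A+B",
}

def expand(axiom: str = "A", iterations: int = 1) -> str:
    """Apply the rewriting rules to every symbol, `iterations` times."""
    current = axiom
    for _ in range(iterations):
        current = "".join(RULES.get(symbol, symbol) for symbol in current)
    return current

def trace(commands: str, angle_deg: float = 60.0):
    """Interpret the string as turtle moves and return the visited points."""
    x = y = 0.0
    heading = 0.0
    points = [(x, y)]
    for symbol in commands:
        if symbol in "AB":      # both letters mean "draw one unit forward"
            x += math.cos(math.radians(heading))
            y += math.sin(math.radians(heading))
            points.append((x, y))
        elif symbol == "+":     # turn one way by 60 degrees
            heading += angle_deg
        elif symbol == "-":     # turn the other way by 60 degrees
            heading -= angle_deg
    return points

points = trace(expand("A", 2))
print(len(points) - 1)  # number of segments after two iterations: 49
```

Each rule replaces one segment with seven, so the segment count grows as a power of seven with the number of iterations; plotting `points` with any plotting library shows the curve filling out the island shape.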


Text

Comprehensive AKTU B.Tech IT Syllabus for All Years

The AKTU B.Tech 1st year syllabus lays the foundational framework for engineering students, covering essential concepts across core subjects, practical labs, and professional skills. The first year is divided into two semesters, focusing on mathematics, science fundamentals, engineering basics, and programming skills, vital for higher technical studies.

First Year B.Tech IT Syllabus

Semester 1

Core Subjects

Mathematics-I: Introduces calculus, linear algebra, and differential equations for engineering problem-solving and applications.

Physics-I: Covers mechanics, wave motion, and thermodynamics, tailored for engineering contexts.

Introduction to Programming (C Language): Focuses on programming fundamentals, data structures, algorithms, and hands-on coding practice.

Electrical Engineering Basics: Provides a foundation in circuit theory, electrical machines, and power systems.

Professional Communication: Enhances communication, writing, and presentation skills essential for professional growth.

Practical Labs

Physics Lab

Electrical Engineering Lab

Programming Lab (C Language)

Semester 2

Core Subjects

Mathematics-II: Delves into advanced calculus, vector calculus, and linear transformations.

Chemistry: Covers physical, inorganic, and organic chemistry, with emphasis on engineering materials.

Engineering Mechanics: Introduces statics, dynamics, and mechanics of rigid bodies.

Computer System & Programming: Explores computer architecture, assembly language, and structured programming.

Basic Electronics Engineering: Focuses on electronic devices, circuits, and fundamental applications.

Practical Labs

Chemistry Lab

Basic Electronics Lab

Computer Programming Lab

Second Year B.Tech IT Syllabus

Semester 3

Core Subjects

Data Structures Using C: Covers arrays, stacks, queues, linked lists, and trees for efficient data manipulation.

Discrete Mathematics: Explores set theory, combinatorics, graph theory, and logic, forming a mathematical backbone for computing.

Digital Logic Design: Introduces binary arithmetic, logic gates, and combinational and sequential circuits.

Database Management Systems (DBMS): Focuses on relational databases, SQL, and the fundamentals of database design.

Computer Organization and Architecture: Delves into CPU structure, memory hierarchy, and I/O systems.

Practical Labs

Data Structures Lab

Digital Logic Design Lab

DBMS Lab

Semester 4

Core Subjects

Operating Systems: Covers process scheduling, memory management, file systems, and more.

Software Engineering: Introduces the software development life cycle, methodologies, and quality management practices.

Object-Oriented Programming (OOP) Using Java: Covers OOP principles using Java, focusing on classes, inheritance, and polymorphism.

Theory of Automata & Formal Languages: Studies automata theory, regular expressions, and context-free grammars.

Design and Analysis of Algorithms: Focuses on algorithmic strategies, complexity analysis, and optimization techniques.

Practical Labs

Operating Systems Lab

Java Programming Lab

Algorithms Lab

Third Year B.Tech IT Syllabus

Semester 5

Core Subjects

Computer Networks: Covers networking layers, TCP/IP, routing algorithms, and data communication.

Compiler Design: Explores lexical analysis, syntax analysis, semantic analysis, and optimization techniques.

Web Technologies: Introduces front-end and back-end web development using HTML, CSS, JavaScript, and server-side scripting.

Microprocessors and Interfacing: Covers 8085/8086 microprocessors, interfacing, and assembly language programming.

Elective I: Allows students to specialize in a subject area based on their interest.

Practical Labs

Computer Networks Lab

Microprocessor Lab

Web Technologies Lab

Semester 6

Core Subjects

Artificial Intelligence: Covers foundational AI techniques, knowledge representation, and learning algorithms.

Distributed Systems: Focuses on distributed computing models, coordination, and replication.

Mobile Computing: Emphasizes mobile app development, wireless communication, and mobility management.

Advanced Database Systems: Covers NoSQL databases, data warehousing, and database security measures.

Elective II: Provides an additional specialization option.

Practical Labs

AI Lab

Mobile Application Lab

Distributed Systems Lab

Final Year B.Tech IT Syllabus

Semester 7

Core Subjects

Machine Learning: Focuses on supervised and unsupervised learning algorithms and on model evaluation.

Cloud Computing: Introduces cloud service models, deployment, and cloud security.

Information Security: Covers cryptographic methods, network security, and security threats.

Elective III: Tailored to specific industry-oriented needs and interests.

Practical Labs

Machine Learning Lab

Cloud Computing Lab

Major Project Phase I

Semester 8

Core Subjects

Big Data Analytics: Explores data mining, the Hadoop ecosystem, and advanced analytics.

Entrepreneurship Development: Prepares students with business planning, innovation, and management skills.

Major Project: The culmination of academic knowledge in a comprehensive project.

This structured curriculum equips students with in-depth IT skills and knowledge, preparing them for a thriving career in technology and innovation.

1 note


Text

Emergence of a Super-Hybrid Technical Language

"Libretto Lunaversitol: Notes Towards a Glottogenetic Process" by Andrew C. Wenaus, accompanied by biomorphic illustrations by Kenji Siratori, represents an innovative fusion of patamathematical formulae, the International Phonetic Alphabet (IPA), and abstract artistic expression. This work functions not just as a literary piece, but as a speculative, glottogenetic process—a birth of a new language, both visual and auditory.

The concept of patamathematics—a term that playfully combines "pataphysics" (the science of imaginary solutions) and mathematics—infuses this work with a unique structural integrity. Patamathematics serves as the foundation for generating new linguistic forms, akin to how mathematical functions generate graphs. Just as a function f(x) maps inputs to outputs, "Libretto Lunaversitol" maps the abstract and the tangible to create a multifaceted language.

The process of glottogenesis in Wenaus’s work can be understood through the lens of algebraic structures. If we consider the components of the book—text, IPA symbols, and visual elements—as sets, the interactions between them can be modeled using relations and functions. For example, let:

T be the set of textual elements,

P be the set of IPA symbols,

V be the set of visual elements.

We can define a relation R⊆T×P×V where each tuple (t,p,v)∈R represents the interaction between a textual element, its phonetic representation, and a corresponding visual element. The richness of the work lies in the non-linear, non-sequential mappings that challenge conventional linguistic structures.
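To make the relation R ⊆ T×P×V concrete, here is a minimal Python sketch. The element names are hypothetical placeholders chosen for illustration and are not taken from the book; the helper names `is_relation` and `visuals_for` are likewise assumptions.

```python
# Hypothetical toy elements; none of these come from the book itself.
T = {"moon", "verse"}          # textual elements
P = {"muːn", "vɜːs"}           # IPA symbols / transcriptions
V = {"spiral", "lattice"}      # visual elements

# A relation is any subset of the Cartesian product T x P x V.
R = {
    ("moon", "muːn", "spiral"),
    ("moon", "muːn", "lattice"),   # one text element paired with two visuals
    ("verse", "vɜːs", "lattice"),
}

def is_relation(r, t, p, v):
    """Check that every tuple in r is drawn from t x p x v."""
    return all(a in t and b in p and c in v for a, b, c in r)

def visuals_for(r, text):
    """Collect every visual element related to a given textual element."""
    return {visual for t_elem, _, visual in r if t_elem == text}
```

Because "moon" is related to two different visuals, R here is a relation rather than a function, which is one way to read the "non-linear, non-sequential mappings" described above.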

"Libretto Lunaversitol" speculates on the emergence of a super-hybrid technical language that self-organizes outside human comprehension. This can be likened to emergent phenomena in complex systems, where local interactions give rise to global patterns. In mathematical terms, this is often described using cellular automata or neural networks.

Consider a cellular automaton where each cell represents a linguistic unit (a word, symbol, or sound). The state of each cell evolves according to a set of rules based on the states of its neighbors. This mirrors how the book’s elements interact to create new meanings and forms. The emergent language is a higher-order structure, an attractor in the state space of all possible configurations.
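As a rough illustration of the cellular automaton idea, the sketch below implements a one-dimensional binary automaton in Python. The choice of Rule 90, the wrap-around edges, and the function names `step` and `run` are assumptions made for the example, not something specified by the book.

```python
def step(cells, rule=90):
    """Advance a 1-D binary cellular automaton by one generation.

    Each cell's next state depends only on itself and its two neighbours
    (with wrap-around at the edges), looked up in the 8-bit rule number.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as 0..7
        nxt.append((rule >> index) & 1)
    return nxt

def run(cells, generations, rule=90):
    """Return the list of configurations, starting with the initial one."""
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history

if __name__ == "__main__":
    start = [0] * 15
    start[7] = 1  # a single active cell in the middle
    for row in run(start, 7):
        print("".join("#" if c else "." for c in row))
```

Each cell follows only a local rule, yet the printed rows form a global triangular pattern, which is the sense of "emergence" the paragraph relies on.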

The analogy to big data and autonomous informational processes is particularly pertinent. In machine learning, optimization algorithms such as gradient descent iteratively adjust parameters to minimize a loss function. Similarly, "Libretto Lunaversitol" can be viewed as an optimization process where the "loss function" is the ineffability of human language and experience. The iterative refinement through textual, phonetic, and visual interplay seeks to minimize this ineffability, approaching a new mode of expression.
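For readers unfamiliar with gradient descent, a minimal sketch follows. It minimises a simple one-variable quadratic loss; the loss function, learning rate, and step count are illustrative assumptions and say nothing about how the book itself was made.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly move x a small step against the gradient of the loss."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x

# Illustrative loss: loss(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
# The minimum is at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
```

Each iteration shrinks the distance to the minimum by a constant factor, so the estimate approaches 3 without the procedure ever being told where the minimum is.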

Wenaus’s work stands against purity and essentialism, embracing hybridity and multiplicity. Mathematically, this opposition can be framed in terms of fuzzy logic and set theory. Traditional set theory is binary—an element either belongs to a set or it does not. Fuzzy set theory, however, allows for degrees of membership. In "Libretto Lunaversitol," elements are not confined to a single identity but exist in a continuum of states.

For instance, if μ_T(x) denotes the membership function of element x in the set of textual elements T, then 0 ≤ μ_T(x) ≤ 1. This fluidity allows for a richer, more inclusive linguistic structure that can better capture the complexities of human experience and thought.
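A degree of membership between 0 and 1 can be sketched with a triangular membership function, one common choice in fuzzy logic. The shape, the parameters, and the idea of scoring an element's "textness" on a numeric scale are assumptions made purely for illustration.

```python
def triangular(x, a, b, c):
    """Triangular membership function, assuming a < b < c.

    Returns 0 outside (a, c), 1 at the peak b, and a linear ramp in between.
    """
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

def fuzzy_union(mu_1, mu_2):
    """Standard fuzzy union: the larger of the two membership degrees."""
    return max(mu_1, mu_2)

def fuzzy_intersection(mu_1, mu_2):
    """Standard fuzzy intersection: the smaller of the two degrees."""
    return min(mu_1, mu_2)
```

With these definitions an element can belong to the textual set with degree 0.5 and to the visual set with degree 0.8 at the same time, instead of being forced into exactly one of them.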

The biomorphic figures by Kenji Siratori and the musical score by Wenaus and Christina Marie Willatt add further layers of complexity. These elements can be modeled as transformations in a multi-dimensional space. Let ℝⁿ represent the space of all possible sensory inputs. The visual and auditory components can be seen as vectors in this space, transforming the perception of the textual and phonetic elements.

"Libretto Lunaversitol: Notes Towards a Glottogenetic Process" is a pioneering work that transcends traditional boundaries between language, art, and mathematics. By leveraging the principles of patamathematics, emergent systems, and fuzzy logic, Wenaus and Siratori create a super-hybrid technical language that challenges our understanding of communication and expression.

0 notes

Text

Exploring the Future: Pattern Recognition in AI

Summary: Pattern recognition in AI enables machines to interpret data patterns autonomously through statistical, syntactic, and neural network methods. It enhances accuracy, automates complex tasks, and improves decision-making across diverse sectors despite challenges in data quality and ethical concerns.

Introduction

Artificial Intelligence (AI) transforms our world by automating complex tasks, enhancing decision-making, and enabling more innovative technologies. In today's data-driven landscape, AI's significance cannot be overstated. A crucial aspect of AI is pattern recognition, which allows systems to identify patterns and make predictions based on data.

This blog aims to simplify pattern recognition in AI, exploring its mechanisms, applications, and emerging trends. By understanding pattern recognition in AI, readers will gain insights into its profound impact on various industries and its potential for future innovations.

What is Pattern Recognition in AI?

Pattern recognition in AI involves identifying regularities or patterns in data through algorithms and models. It enables machines to discern meaningful information from complex datasets, mimicking human cognitive abilities.

AI systems categorise and interpret patterns by analysing input data to make informed decisions or predictions. This process spans various domains, from image and speech recognition to medical diagnostics and financial forecasting.

Pattern recognition equips AI to recognise similarities and anomalies, facilitating tasks that require understanding and responding efficiently to recurring data patterns. Thus, it forms a foundational aspect of AI's capability to learn and adapt from data autonomously.

How Does Pattern Recognition Work?

Pattern recognition in artificial intelligence (AI) involves systematically identifying and interpreting patterns within data, enabling machines to learn and make decisions autonomously. This process integrates fundamental principles and advanced techniques to achieve accurate results across domains.

Statistical Methods

Statistical methods form the bedrock of pattern recognition, leveraging probability theory and statistical inference to analyse patterns in data. Techniques such as Bayesian classifiers, clustering algorithms, and regression analysis enable machines to discern patterns based on probabilistic models derived from training data.

By quantifying uncertainties and modelling relationships between variables, statistical methods enhance the accuracy and reliability of pattern recognition systems.
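As a concrete instance of a Bayesian classifier, here is a minimal Gaussian naive Bayes sketch in plain Python. It assumes numeric features that are independent and roughly normally distributed within each class; the function names and the toy data are hypothetical.

```python
import math
from collections import defaultdict

def fit(samples, labels):
    """Estimate a prior, per-feature means, and variances for each class."""
    grouped = defaultdict(list)
    for x, y in zip(samples, labels):
        grouped[y].append(x)
    model = {}
    for y, rows in grouped.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9  # small floor avoids division by zero
            for col, m in zip(columns, means)
        ]
        model[y] = (n / len(samples), means, variances)
    return model

def predict(model, x):
    """Return the class with the highest log posterior for feature vector x."""
    def log_posterior(prior, means, variances):
        total = math.log(prior)
        for v, m, var in zip(x, means, variances):
            total += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        return total
    return max(model, key=lambda y: log_posterior(*model[y]))

# Toy training data: two well-separated clusters.
samples = [[1.0, 1.1], [0.9, 1.0], [1.2, 0.8], [5.0, 5.2], [4.8, 5.1], [5.3, 4.9]]
labels = ["a", "a", "a", "b", "b", "b"]
model = fit(samples, labels)
```

Calling `predict(model, [1.0, 1.0])` picks whichever class makes the observation most probable under the fitted Gaussians, weighted by how common that class was in training.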

Syntactic Methods

Syntactic methods focus on patterns' structural aspects, emphasising grammatical or syntactic rules to parse and recognise patterns. This approach is beneficial in tasks like natural language processing (NLP) and handwriting recognition, where the sequence and arrangement of elements play a crucial role.

Techniques like formal grammars, finite automata, and parsing algorithms enable machines to understand and generate structured patterns based on predefined rules and constraints.
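To show what recognising a pattern with a finite automaton looks like, the sketch below simulates a deterministic finite automaton (DFA) in Python. The example machine, which accepts binary strings containing an even number of 1s, and the names `accepts` and `EVEN_ONES` are illustrative assumptions.

```python
def accepts(transitions, start, accepting, text):
    """Run a DFA over text and report whether it ends in an accepting state."""
    state = start
    for symbol in text:
        key = (state, symbol)
        if key not in transitions:
            return False  # no transition defined for this symbol: reject
        state = transitions[key]
    return state in accepting

# Example DFA: accepts binary strings with an even number of 1s.
EVEN_ONES = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}
```

For example, `accepts(EVEN_ONES, "even", {"even"}, "1010")` follows the predefined rules symbol by symbol and accepts, because the string contains two 1s.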

Neural Networks and Deep Learning

Neural networks and Deep Learning have revolutionised pattern recognition by mimicking the human brain's interconnected network of neurons. These models learn hierarchical representations of data through multiple layers of abstraction, extracting intricate features and patterns from raw input.

Convolutional Neural Networks (CNNs) excel in image and video recognition tasks. In contrast, Recurrent Neural Networks (RNNs) are adept at sequential data analysis, such as speech and text recognition. The advent of Deep Learning has significantly enhanced the scalability and performance of pattern recognition systems across diverse applications.

Steps Involved in Pattern Recognition

Understanding the steps involved in pattern recognition is crucial for solving complex problems in AI and Machine Learning. Mastering these steps fosters innovation and efficiency in developing advanced technological solutions. Pattern recognition involves several sequential steps to transform raw data into actionable insights:

Step 1: Data Collection

The process begins with acquiring relevant data from various sources, ensuring a comprehensive dataset that adequately represents the problem domain.

Step 2: Preprocessing

Raw data undergoes preprocessing to clean, normalise, and transform it into a suitable format for analysis. This step includes handling missing values, scaling features, and removing noise to enhance data quality.
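Two of the preprocessing operations mentioned here, handling missing values and scaling features, can be sketched in a few lines of Python. Mean imputation and min-max scaling are only one possible choice for each, and the function names are hypothetical.

```python
def impute_mean(column):
    """Replace missing values (None) with the mean of the present values.

    Assumes the column contains at least one non-missing value.
    """
    present = [v for v in column if v is not None]
    mean = sum(present) / len(present)
    return [mean if v is None else v for v in column]

def min_max_scale(column):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in column]
```

Applied in sequence, `min_max_scale(impute_mean([1.0, None, 3.0]))` first fills the gap and then maps the column onto the range 0 to 1.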

Step 3: Feature Extraction

Feature extraction involves identifying and selecting meaningful features or attributes from the preprocessed data that best characterise the underlying patterns. Techniques like principal component analysis (PCA), wavelet transforms, and deep feature learning extract discriminative features essential for accurate classification.

Step 4: Classification

In the classification phase, the extracted features are used to categorise or label data into predefined classes. Machine Learning algorithms such as Support Vector Machines (SVMs), Decision Trees, and K-Nearest Neighbours (K-NN) classifiers assign new instances to the most appropriate class based on learned patterns.
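Of the classifiers named above, K-Nearest Neighbours is the simplest to sketch. The version below uses Euclidean distance and a plain majority vote; the function name, the default k, and the toy data are assumptions for illustration.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify query by majority vote among its k nearest training points.

    train is a list of (feature_vector, label) pairs.
    """
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy training set with two clusters.
train = [
    ((1, 1), "a"), ((1, 2), "a"), ((2, 1), "a"),
    ((8, 8), "b"), ((9, 8), "b"), ((8, 9), "b"),
]
```

A new point such as `(1.5, 1.5)` is assigned the label shared by most of its three closest neighbours, so no explicit model is trained beyond storing the examples.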

Step 5: Post-processing

Post-processing involves refining and validating the classification results, often through error correction, ensemble learning, or feedback mechanisms. Addressing uncertainties and refining model outputs ensures robustness and reliability in real-world applications.

Benefits of Pattern Recognition

Pattern recognition in AI offers significant advantages across various domains. Firstly, it improves accuracy and efficiency by automating the identification and classification of patterns within vast datasets. This capability reduces human error and enhances the reliability of outcomes in tasks such as image recognition or predictive analytics.

Moreover, pattern recognition enables the automation of complex tasks that traditionally require extensive human intervention. By identifying patterns in data, AI systems can autonomously perform tasks like anomaly detection in cybersecurity or predictive maintenance in manufacturing, leading to increased operational efficiency and cost savings.

Furthermore, the technology enhances decision-making by providing insights based on data patterns that humans might overlook. This capability supports businesses in making data-driven decisions swiftly and accurately, thereby gaining a competitive edge in dynamic markets.

Challenges of Pattern Recognition

Despite its benefits, pattern recognition in AI faces several challenges. Data quality and quantity issues pose significant hurdles as accurate pattern recognition relies on large volumes of high-quality data. Here are a few of the challenges:

Data Quality

Noisy, incomplete, or inconsistent data can significantly impact the performance of pattern recognition algorithms. Techniques like outlier detection and data cleaning are crucial for mitigating this challenge.
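One simple form of outlier detection is a z-score filter, sketched below with Python's standard `statistics` module. The threshold value and the function name are assumptions, and a z-score test is only reasonable when the bulk of the data is roughly bell-shaped.

```python
import statistics

def find_outliers(values, threshold=3.0):
    """Return the values lying more than threshold standard deviations from the mean."""
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / spread > threshold]
```

Note that a single extreme value also inflates the mean and the standard deviation, so on small samples a lower threshold (or a median-based measure) may be needed to flag it.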

Variability in Patterns

Real-world patterns often exhibit variations and inconsistencies. An algorithm trained on a specific type of pattern might struggle to recognize similar patterns with slight variations.

High Dimensionality

Data with a high number of features (dimensions) can make it difficult for algorithms to identify the most relevant features for accurate pattern recognition. Dimensionality reduction techniques can help address this issue.

Overfitting and Underfitting

An overfitted model might perform well on training data but fail to generalize to unseen data. Conversely, an underfitted model might not learn the underlying patterns effectively. Careful selection and tuning of algorithms are essential to avoid these pitfalls.

Computational Complexity

Some pattern recognition algorithms, particularly deep learning models, can be computationally expensive to train and run, especially when dealing with large datasets. This can limit their applicability in resource-constrained environments.

Limited Explainability

While some algorithms excel at recognizing patterns, they might not be able to explain why they classified a particular data point as belonging to a specific pattern. This lack of interpretability can be a challenge in situations where understanding the reasoning behind the recognition is crucial.

Emerging Technologies and Methodologies

This section highlights how ongoing technological advancements are shaping the landscape of pattern recognition in AI, propelling it towards more sophisticated and impactful applications across various sectors.

Advances in Deep Learning and Neural Networks

Recent advancements in Deep Learning and neural networks have revolutionised pattern recognition in AI. These technologies mimic the human brain's ability to learn and adapt from data, enabling machines to recognise complex patterns with unprecedented accuracy.

Deep Learning, a subset of Machine Learning, uses deep neural networks with multiple layers to automatically extract features from raw data. This approach has significantly improved tasks such as image and speech recognition, natural language processing, and autonomous decision-making.

Role of Big Data and Cloud Computing

The proliferation of Big Data and cloud computing has provided the necessary infrastructure for handling vast amounts of data crucial for pattern recognition. Big Data technologies enable the collection, storage, and processing of massive datasets essential for training and validating complex AI models.

Cloud computing platforms offer scalable resources and computational power, facilitating the deployment and management of AI applications across various industries. This combination of Big Data and cloud computing has democratised access to AI capabilities, empowering organisations of all sizes to leverage advanced pattern recognition technologies.

Integration with Other AI Technologies

Pattern recognition in AI is increasingly integrated with other advanced technologies, such as reinforcement learning and generative models. Reinforcement learning enables AI systems to learn through interaction with their environment, making it suitable for tasks requiring decision-making and continuous learning.

Generative models, such as Generative Adversarial Networks (GANs), create new data instances similar to existing ones, expanding the scope of pattern recognition applications in areas like image synthesis and anomaly detection. These integrations enhance the versatility and effectiveness of AI-powered pattern recognition systems across diverse domains.

Future Trends and Potential Developments

Looking ahead, the future of pattern recognition in AI promises exciting developments. One of these is enhanced interpretability and explainability of AI models, which will address concerns related to trust and transparency. Some other noteworthy developments include:

Domain-Specific AI

We will see a rise in AI models specifically designed for particular domains, such as medical diagnosis, financial forecasting, or autonomous vehicles. These specialized models will be able to leverage domain-specific knowledge to achieve superior performance.

Human-in-the-Loop AI

The future lies in a collaborative approach where AI and humans work together. Pattern recognition systems will provide recommendations and insights, while humans retain the ultimate decision-making power.

Bio-inspired AI

Drawing inspiration from the human brain and nervous system, researchers are developing neuromorphic computing approaches that could lead to more efficient and robust pattern recognition algorithms.

Quantum Machine Learning

While still in its early stages, quantum computing has the potential to revolutionize how we approach complex pattern recognition tasks, especially those involving massive datasets.

Frequently Asked Questions

What is Pattern Recognition in AI?

Pattern recognition in AI refers to the process where algorithms analyse data to identify recurring patterns. It enables machines to learn from examples, categorise information, and predict outcomes, mimicking human cognitive abilities crucial for applications like image recognition and predictive modelling.

How Does Pattern Recognition Work in AI?

Pattern recognition in AI involves statistical methods, syntactic analysis, and neural networks. Statistical methods like Bayesian classifiers analyse probabilities, syntactic methods parse structural patterns, and neural networks learn hierarchical representations. These techniques enable machines to understand data complexity and make informed decisions autonomously.

What are the Benefits of Pattern Recognition in AI?

Pattern recognition enhances accuracy by automating the identification of patterns in large datasets, reducing human error. It automates complex tasks like anomaly detection and predictive maintenance, improving operational efficiency across industries. Moreover, it facilitates data-driven decision-making, providing insights from data patterns that human analysis might overlook.

Conclusion

Pattern recognition in AI empowers machines to interpret and act upon data patterns autonomously, revolutionising industries from healthcare to finance. By leveraging statistical methods, neural networks, and emerging technologies like Deep Learning, AI systems can identify complex relationships and make predictions with unprecedented accuracy.

Despite challenges such as data quality and ethical considerations, ongoing advancements in big data, cloud computing, and integrative AI technologies promise a future where pattern

0 notes

Text

The importance of composition and why it's always overlooked

A 700-word essay ranting about and tackling the importance of composition in any produced multimedia output

The importance of composition in any form of visual media cannot be overstated or overlooked. Whether it's in film, photography, graphic design, art, or even the arrangement of elements on a webpage, composition plays a crucial role in conveying meaning, creating impact, and eliciting specific emotional responses from the audience.

When we say “composition” it can mean many things, but the most basic understanding of it is the pre-planned placement of visual elements for viewers. In video, effective composition gives the viewer an appealing and aesthetically pleasing visual presentation, and smart composition guides the viewer’s eyes to the intended message.

In film, composition is a fundamental aspect of storytelling. Some of the most iconic examples of masterful composition can be found in the works of legendary filmmakers, such as Stanley Kubrick’s “The Shining,” Christopher Nolan’s “Inception,” Robert Eggers’s “The Lighthouse,” or any Satoshi Kon film.

In “The Shining,” the meticulous composition of each frame adds layers of meaning and psychological tension to the narrative. In “Inception,” the genius use of effects and scene hierarchy evokes a sense of urgency. In “The Lighthouse,” the absence of color and the careful use of black and white really sell the psychological impact that the characters are experiencing. And Satoshi Kon’s “Millennium Actress” uses unusual colors that defy the rules of color theory, to the point that they make the film feel grandiose and important.

Composition can be used to disorient and unsettle the audience, reinforcing the sense of isolation that permeates a film and inviting the viewer not only to engage with the story but also to experience every little detail that happens in it.

From a technical standpoint, understanding the rules of composition is essential for creating visually compelling content.

In photography, layout rules such as the rule of thirds, the golden ratio, center composition, diagonals, or any of the commonly used types of cropping are foundational principles that guide the arrangement of elements within the frame, leading to balanced and aesthetically pleasing images.

In graphic design, the use of grids and the concept of visual hierarchy are critical for organizing and prioritizing information in a way that is easily digestible for the viewer. Similarly, in web design, the careful placement of elements, such as text, images, and interactive components, can greatly impact user experience and overall engagement with that web page.

In the world of music videos, composition takes on a whole new level of significance. The collaboration between a musician and a visual artist to create a compelling visual narrative is often what elevates a song to an enduring cultural phenomenon.

I remember a really good album from the hit video games Nier and Nier Automata, where the composer didn't want the music in the game to feel generic or like something we would recognize from other media. Music nowadays is interchangeable, especially when it comes to composing, which is why there is such extensive copyright law for it. So what the composer did for the Nier games was create a whole new language dedicated to them, just to make 12 songs sung in that unknown language to be played in the game. Because she did what she did, it ultimately became one of my favorite albums ever, setting new standards for the medium. It's something that we don't get to hear or experience every day (Vague Hope and City Ruins are the best titles in that album).

Moving on: we don't notice the impact of composition in our daily lives, but we encounter it every time we interact with technology. The layout of a smartphone interface, the design of a website, and the arrangement of elements in a digital advertisement are all influenced by principles of composition. So is architecture: the way the layout of a house is designed, and how the flow of people between rooms and floors is planned. The careful consideration of visual balance, contrast, and focal points can greatly enhance the usability and overall appeal of these digital and non-digital experiences.

In conclusion, the importance of composition cannot be overstated. Whether in the world of film, pop culture, digital design, or any kind of design career, the way in which visual elements are arranged and presented has a profound impact on our perception and emotional response as well as our way of living. Understanding the technical rules of composition, combined with a flair for creative innovation and appropriate cultural references, can truly elevate any visual creation to a masterpiece.

0 notes

Text

Know About M Tech CSE Syllabus

The MIT Department of Computer Science offers a postgraduate course, M Tech, which covers the fundamentals of computer science. This course has been designed to prepare students for future careers in the field of computer science. The syllabus covers subjects such as computational complexity, discrete mathematics, programming languages, automata theory, algorithms, data structures, and software engineering.

0 notes

Text

usually formal language theory is used in making programming languages & it's usually taught in the last years of undergrad cs alongside automata theory... there's also computational linguistics which is kinda what AI is today

Found two guys who run a linguistics podcast and I thought huh nice I guess that's their field and then I found one about books and reading so I was like ok nice I guess they're linked interests and then I found one by the same guys about computer science????????? That's a lot of fields

36 notes
