#aws lambda api gateway


AWS Lambda Compute Service Tutorial for Amazon Cloud Developers

Full Video Link - https://youtube.com/shorts/QmQOWR_aiNI

Hi, a new #video #tutorial on #aws #lambda #awslambda has been published on the #codeonedigest #youtube channel. @java @awscloud @AWSCloudIndia @YouTube #youtube @codeonedigest #codeonedigest #aws #amaz

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. These events may include changes in state such as a user placing an item in a shopping cart on an ecommerce website. AWS Lambda automatically runs code in response to multiple events, such as HTTP requests via Amazon API Gateway, modifications…
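To make the event-driven model concrete, here is a minimal Python sketch of a Lambda function behind Amazon API Gateway with Lambda proxy integration. It is not the code from the video: the shopping-cart `item` field is a hypothetical payload that echoes the example above.

```python
import json


def _response(status, body):
    # Lambda proxy integrations expect statusCode, headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(event, context):
    """Handle a REST API request routed by API Gateway (Lambda proxy integration).

    The {"item": ...} payload shape is a hypothetical shopping-cart example.
    """
    # With proxy integration the request body arrives as a string (or None).
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    if not isinstance(payload, dict) or not payload.get("item"):
        return _response(400, {"error": "missing 'item'"})

    # A real function would persist the cart change here (for example, in DynamoDB).
    return _response(200, {"added": payload["item"], "method": event.get("httpMethod")})
```

Calling `lambda_handler({"httpMethod": "POST", "body": '{"item": "book"}'}, None)` returns a 200 response whose body echoes the item; a missing or malformed body yields a 400.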


#amazon lambda java example#aws#aws cloud#aws lambda#aws lambda api gateway#aws lambda api gateway trigger#aws lambda basic#aws lambda code#aws lambda configuration#aws lambda developer#aws lambda event trigger#aws lambda eventbridge#aws lambda example#aws lambda function#aws lambda function example#aws lambda function s3 trigger#aws lambda java#aws lambda server#aws lambda service#aws lambda tutorial#aws training#aws tutorial#lambda service


Implementing API Gateway with Lambda Authorizer Using Terraform

This post implements a secure and scalable API Gateway with a Lambda authorizer, using Terraform to manage the resources efficiently.

Contents: Background: API Gateway with Lambda Authorizer; Benefits of Using API Gateway with Lambda Authorizer; Overview of the Terraform Implementation; Detailed Explanation of the Terraform Code; Provider; Variable; Locals; Data Sources; IAM Roles and Policies; IAM Role for Core Function; IAM Role for Lambda Authorizer Function; Lambda Core Function; Lambda Authorizer Function; Benefits of Using Environment Variables; API Gateway…
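The Terraform in that post wires up a Lambda authorizer; the function itself could look roughly like the following Python sketch of a TOKEN-type authorizer. This is an illustration under assumptions, not the post's code: `EXPECTED_TOKEN` is a hypothetical environment variable holding a shared secret, whereas a production authorizer would usually validate a JWT or call an identity provider.

```python
import hmac
import os


def build_policy(principal_id, effect, resource):
    # API Gateway expects a principalId plus an IAM policy document that
    # allows or denies execute-api:Invoke on the requested method ARN.
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {"Action": "execute-api:Invoke", "Effect": effect, "Resource": resource}
            ],
        },
    }


def lambda_handler(event, context):
    """TOKEN authorizer: compare the bearer token with a hypothetical shared secret."""
    token = event.get("authorizationToken", "")
    expected = os.environ.get("EXPECTED_TOKEN", "")  # hypothetical variable name
    # Constant-time comparison avoids leaking information through timing.
    allowed = bool(expected) and hmac.compare_digest(token.encode(), expected.encode())
    effect = "Allow" if allowed else "Deny"
    return build_policy("user" if allowed else "anonymous", effect, event["methodArn"])
```

Returning a Deny policy makes API Gateway answer 403; raising `Exception("Unauthorized")` instead produces a 401. Because API Gateway can cache the returned policy per token, a policy scoped to a single `methodArn` may need widening if cached results are reused across methods.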


Securing and Monitoring Your Data Pipeline: Best Practices for Kafka, AWS RDS, Lambda, and API Gateway Integration

http://securitytc.com/T3Rgt9


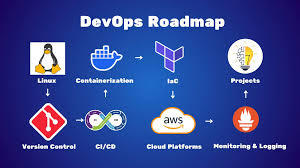

Mastering AWS DevOps in 2025: Best Practices, Tools, and Real-World Use Cases

In 2025, the cloud ecosystem continues to grow rapidly. Organizations of every size are embracing AWS DevOps to automate software delivery, improve security, and scale their businesses efficiently. Mastering AWS DevOps means knowing the optimal combination of tools, best practices, and real-world use cases that deliver success in production.

This guide covers the most important elements of AWS DevOps, the best practices of 2025, and real-world examples of how top companies are leveraging AWS DevOps to compete.

What is AWS DevOps

AWS DevOps is the union of cultural principles, practices, and tools on Amazon Web Services that enhances an organization's capacity to deliver applications and services at a higher speed. It facilitates continuous integration, continuous delivery, infrastructure as code, monitoring, and collaboration between development and operations teams.

Why AWS DevOps is Important in 2025

As organizations require quicker innovation and zero downtime, DevOps on AWS offers the flexibility and reliability to compete. Trends such as AI integration, serverless architecture, and automated compliance are changing how teams adopt DevOps in 2025.

Advantages of adopting AWS DevOps:

1. Faster deployment cycles

2. Enhanced system reliability

3. Flexible and scalable cloud infrastructure

4. Automation from code to production

5. Integrated security and compliance

Best AWS DevOps Tools to Learn in 2025

These are the most critical tools fueling current AWS DevOps pipelines:

AWS CodePipeline

A fully managed CI/CD service that automates your release process.

AWS CodeBuild

A fully managed, scalable build service that compiles source code, runs tests, and produces ready-to-deploy packages.

AWS CodeDeploy

Automates code deployments to EC2, Lambda, ECS, or on-premises servers, with strategies such as blue/green and rolling deployments that minimize downtime.

AWS CloudFormation and CDK

Manage infrastructure as code (IaC), enabling repeatable, versioned cloud environments.

Amazon CloudWatch

Provides logging, metrics, and alerting to track application and infrastructure performance.

AWS Lambda

Serverless compute that runs code in response to triggers, well-suited for event-driven DevOps automation.

AWS DevOps Best Practices in 2025

1. Adopt Infrastructure as Code (IaC)

Use AWS CloudFormation or Terraform to declare infrastructure. Defining infrastructure in code makes it repeatable, reviewable, and version-controlled.

2. Use Full CI/CD Pipelines

Use tools such as CodePipeline, GitHub Actions, or Jenkins on AWS to automate building, testing, and deployment.

3. Shift Left on Security

Bake security in early with Amazon Inspector, CodeGuru, and Secrets Manager, and automate vulnerability scans as part of CI/CD.

4. Monitor Everything

Use CloudWatch, X-Ray, and CloudTrail to achieve complete observability into your system, and set up alerts to detect and respond to problems promptly.
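As one concrete instance of setting up alerts, the following Python sketch uses the CloudWatch `put_metric_alarm` API (as exposed by boto3) to alarm on a Lambda function's `Errors` metric. The function name, SNS topic ARN, period, and threshold are placeholders, and the client is passed in so the function can be tried without AWS credentials.

```python
def create_lambda_error_alarm(cloudwatch, function_name, sns_topic_arn, threshold=1.0):
    """Create (or update) a CloudWatch alarm on a Lambda function's Errors metric.

    `cloudwatch` is a boto3 CloudWatch client, e.g. boto3.client("cloudwatch").
    The naming scheme, period, and threshold below are illustrative choices.
    """
    params = {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 300,                       # evaluate 5-minute windows
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no invocations is not an error
        "AlarmActions": [sns_topic_arn],     # notify on-call through SNS
    }
    cloudwatch.put_metric_alarm(**params)
    return params
```

In a real account this would be called as `create_lambda_error_alarm(boto3.client("cloudwatch"), "checkout-api", topic_arn)`, though declaring the same alarm in CloudFormation or CDK keeps it under IaC.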

5. Use Containers and Serverless for Scalability

Use Amazon ECS, EKS, or Lambda to scale automatically. These services reduce infrastructure management overhead and improve efficiency.

Real-World AWS DevOps Use Cases

Use Case 1: Scalable CI/CD for a Fintech Startup

A fintech startup used AWS CodePipeline and CodeDeploy to automate deployments to both staging and production environments. By containerizing with ECS and using CloudWatch monitoring, they reduced deployment errors by 80 percent and achieved near-zero downtime.

Use Case 2: Legacy Modernization for an Enterprise

A legacy enterprise migrated its on-premises applications to AWS using CloudFormation and EC2 Auto Scaling. By adopting full-stack DevOps pipelines and moving to microservices on EKS, they improved time-to-market by 60 percent.

Use Case 3: Serverless DevOps for a SaaS Product

A SaaS company used AWS Lambda and API Gateway for its backend functions. With CodeBuild and CloudWatch in place, it shipped features quickly and scaled automatically during peak usage without provisioning infrastructure.

Top Trends in AWS DevOps in 2025

AI-driven DevOps: Integration with Amazon CodeWhisperer (now part of Amazon Q Developer), CodeGuru, and machine learning models for intelligent automation

Compliance-as-Code: Governance policies automated using services such as AWS Config and Service Control Policies

Multi-account strategies: Employing AWS Organizations for scalable, secure account management

Zero Trust Architecture: Implementing strict identity-based access with IAM, SSO, and MFA

Hybrid Cloud DevOps: Connecting on-premises systems to AWS for effortless deployments
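To make Compliance-as-Code concrete, here is a Python sketch of an AWS Config custom rule backed by Lambda that flags resources missing required tags. The `REQUIRED_TAGS` policy is hypothetical, and the sketch ignores the oversized-configuration-item notification that AWS Config sends for very large resources.

```python
import json

REQUIRED_TAGS = {"owner", "cost-center"}  # hypothetical tagging policy


def evaluate_tags(configuration_item, required=REQUIRED_TAGS):
    """Return an AWS Config compliance type for one configuration item."""
    if configuration_item.get("configurationItemStatus") == "ResourceDeleted":
        return "NOT_APPLICABLE"
    tags = configuration_item.get("tags") or {}
    missing = required - set(tags)
    return "NON_COMPLIANT" if missing else "COMPLIANT"


def lambda_handler(event, context, config_client=None):
    """Evaluate the changed resource and report the result back to AWS Config."""
    if config_client is None:
        import boto3  # bundled with the Lambda Python runtime
        config_client = boto3.client("config")

    # AWS Config delivers the changed resource inside a JSON string.
    item = json.loads(event["invokingEvent"])["configurationItem"]
    evaluation = {
        "ComplianceResourceType": item["resourceType"],
        "ComplianceResourceId": item["resourceId"],
        "ComplianceType": evaluate_tags(item),
        "OrderingTimestamp": item["configurationItemCaptureTime"],
    }
    config_client.put_evaluations(Evaluations=[evaluation], ResultToken=event["resultToken"])
    return evaluation
```

Keeping the policy check (`evaluate_tags`) separate from the AWS plumbing lets the rule itself be unit-tested in CI like any other code.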

Conclusion

In 2025, mastering AWS DevOps means aligning your development workflows with cloud-native architecture, modern tools, and current best practices. With AWS, teams can build secure, scalable, and automated systems that deliver value faster.

Start by automating your pipelines, securing your deployments, and scaling with confidence. DevOps is the way of the future, and AWS is leading the way.

Frequently Asked Questions

What distinguishes AWS DevOps from DevOps?

DevOps itself is a set of practices; AWS DevOps applies those practices using AWS services and tools.

Can small teams benefit from AWS DevOps?

Yes. AWS provides fully managed services that enable small teams to scale and automate without having to handle complicated infrastructure.

Which programming languages does AWS DevOps support?

AWS supports all the major ones, including Python, Node.js, Java, Go, .NET, Ruby, and many more.

Is AWS DevOps suitable for enterprise-scale applications?

Yes. Large enterprises use AWS DevOps to run large-scale, multi-region applications serving millions of users.


Musings of an LLM Using Man

I know, the internet doesn’t need more words about AI, but not addressing my own usage here feels like an omission.

A good deal of the DC Tech Events code was written with Amazon Q. A few things led to this:

Being on the job market, I felt like I needed to get a handle on this stuff, to at least have opinions formed by experience and not just by stuff I read on the internet.

I managed to get my hands on $50 of AWS credit that could only be spent on Q.

So, I decided that DC Tech Events would be an experiment in working with an LLM coding assistant. I naturally tend to be a bit of an architecture astronaut. You could say Q exacerbated that, or at least didn’t temper that tendency at all. From another angle, it took me to the logical conclusion of my sketchiest ideas faster than I would have otherwise. To abuse the “astronaut” metaphor: Q got me to the moon (and the realization that life on the moon isn’t that pleasant) much sooner than I would have without it.

I had a CDK project deploying a defensible cloud architecture for the site, using S3, CloudFront, Lambda, API Gateway, and DynamoDB. The first “maybe this sucks” moment came when I started tweaking the HTML and CSS: I didn’t have a good way to preview changes locally without a cdk deploy, which could take a couple of minutes.

That led to a container-centric refactor that could run locally using docker compose. This is when I decided to share an early screenshot. It worked, but the complexity started making me feel nauseous.

This prompt was my hail mary:

Reimagine this whole project as a static site generator. There is a directory called _groups, with a yaml file describing each group. There is a directory called _single_events for events that don’t come from groups(also yaml). All “suggestions” and the review process will all happen via Github pull requests, so there is no need to provide UI or API’s enabling that. There is no longer a need for API’s or login or databases. Restructure the project to accomplish this as simply as possible.

The aggregator should work in two phases: one fetches ical files, and updates a local copy of the file only if it has updated (and supports conditional HTTP get via etag or last modified date). The other converts downloaded iCals and single event YAML into new YAML files:

upcoming.yaml : the remainder of the current month, and all events for the following month

per-month files (like july.yaml)

The flask app should be reconfigured to pull from these YAML files instead of dynamoDB.

Remove the current GithHub actions. Instead, when a change is made to main, the aggregator should run, freeze.py should run, and the built site should be deployed via github page
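The aggregator's first phase, as the prompt describes it, boils down to a conditional HTTP GET. The following is a minimal Python sketch (the site is Flask-based, so Python fits), not the project's actual code: storing the ETag and Last-Modified validators in a `.meta.json` sidecar file next to each download is a hypothetical layout.

```python
import json
import urllib.error
import urllib.request
from pathlib import Path


def conditional_headers(meta):
    """Build HTTP validators from the ETag/Last-Modified saved on the last fetch."""
    headers = {}
    if meta.get("etag"):
        headers["If-None-Match"] = meta["etag"]
    if meta.get("last_modified"):
        headers["If-Modified-Since"] = meta["last_modified"]
    return headers


def fetch_if_changed(url, dest):
    """Download `url` to `dest` only if the server reports a change.

    Returns True if the local copy was updated, False on 304 Not Modified.
    Validators live in a hypothetical sidecar file next to `dest`.
    """
    dest = Path(dest)
    meta_path = dest.with_name(dest.name + ".meta.json")
    meta = json.loads(meta_path.read_text()) if meta_path.exists() else {}
    request = urllib.request.Request(url, headers=conditional_headers(meta))
    try:
        with urllib.request.urlopen(request, timeout=30) as resp:
            body = resp.read()
            new_meta = {
                "etag": resp.headers.get("ETag"),
                "last_modified": resp.headers.get("Last-Modified"),
            }
    except urllib.error.HTTPError as err:
        if err.code == 304:  # urllib surfaces 304 Not Modified as an HTTPError
            return False
        raise
    dest.write_bytes(body)
    meta_path.write_text(json.dumps(new_meta))
    return True
```

Servers that ignore the validators simply return 200 every time, so the sketch degrades to an unconditional download rather than failing.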

I don’t recall whether it worked on the first try, and it certainly wasn’t the end of the road (I eventually abandoned the per-month organization, for example), but it did the thing. I was impressed enough to save that prompt because it felt like a noteworthy moment.

I’d liken the whole experience to banging software into shape by criticizing it. I like criticizing stuff! (I came into blogging during the new media douchebag era, after all). In the future, I think I prefer working this way, over not.

If I personally continue using this (and similar tech), am I contributing to making the world worse? The energy and environmental cost might be overstated, but it isn’t nothing. Is it akin to the other compromises I might make in a day, like driving my gasoline-powered car, grilling over charcoal, or zoning out in the shower? Much worse? Much less? I don’t know yet.

That isn’t the only lens where things look bleak, either: it’s the same tools and infrastructure that make the whiz-bang coding assistants work that let search engines spit out fact-shaped, information-like blurbs that are only correct by coincidence. It’s shitty that with the right prompts, you can replicate an artist’s work, or apply their style to new subject matter, especially if that artist is still alive and working. I wonder if content generated by models trained on other model-generated work will be the grey goo fate of the web.

The title of this post was meant to be an X Files reference, but I wonder if cigarettes are in fact an apt metaphor: bad for you and the people around you, enjoyable (for some), and hard to quit.


Serverless Architecture for Scalable, Cost-Effective Web Apps

Serverless architecture helps businesses build web apps faster and more cheaply, without worrying about server maintenance. Cloud providers like AWS and Google Cloud manage the underlying servers, so developers can focus on writing code.

In this setup, apps scale automatically with demand, and companies pay only for actual usage, not for idle time. For many workloads, especially spiky or low-traffic ones, this means lower costs, less infrastructure to secure, and quicker updates.

Popular tools include AWS Lambda, API Gateway, and Google Cloud Functions. These tools let you run code on demand, build APIs, and connect to managed data stores, all without managing servers.

Use cases include mobile and web backends, real-time data processing, and scheduled jobs. Best practices include keeping functions small and focused, monitoring performance, and securing environments.
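Pay-per-use pricing is easiest to reason about with a little arithmetic. This Python sketch estimates a monthly AWS Lambda bill from request count, average duration, and memory size; the prices are inputs rather than constants, and the sketch ignores the free tier, ephemeral storage, and data transfer.

```python
def lambda_monthly_cost(requests, avg_duration_ms, memory_mb,
                        usd_per_million_requests, usd_per_gb_second):
    """Estimate a Lambda bill: a per-request charge plus GB-seconds of compute.

    Ignores the free tier, ephemeral storage, and data transfer.
    """
    # Compute is billed by duration multiplied by allocated memory.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = requests / 1_000_000 * usd_per_million_requests
    compute_cost = gb_seconds * usd_per_gb_second
    return request_cost + compute_cost
```

With illustrative x86 rates of $0.20 per million requests and $0.0000166667 per GB-second (check the current Lambda pricing page for your Region), 3 million requests a month at 120 ms and 512 MB come to 180,000 GB-seconds and roughly $3.60, and a month with no traffic costs nothing.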

Overall, serverless makes building apps easier and more efficient. It’s ideal for growing businesses that want to stay fast, flexible, and budget-friendly.

#Serverless#CloudComputing#AWS#GoogleCloud#WebDevelopment#TechSimplified#ScalableApps#CostEffectiveTech


Integrating ROSA Applications with AWS Services (CS221)

As cloud-native architectures become the backbone of modern application deployments, combining the power of Red Hat OpenShift Service on AWS (ROSA) with native AWS services unlocks immense value for developers and DevOps teams alike. In this blog post, we explore how to integrate ROSA-hosted applications with AWS services to build scalable, secure, and cloud-optimized solutions — a key skill set emphasized in the CS221 course.

🚀 What is ROSA?

Red Hat OpenShift Service on AWS (ROSA) is a managed OpenShift platform that runs natively on AWS. It allows organizations to deploy Kubernetes-based applications while leveraging the scalability and global reach of AWS, without managing the underlying infrastructure.

With ROSA, you get:

Fully managed OpenShift clusters

Integrated with AWS IAM and billing

Access to AWS services like RDS, S3, DynamoDB, Lambda, etc.

Native CI/CD, container orchestration, and operator support

🧩 Why Integrate ROSA with AWS Services?

ROSA applications often need to interact with services like:

Amazon S3 for object storage

Amazon RDS or DynamoDB for database integration

Amazon SNS/SQS for messaging and queuing

AWS Secrets Manager or SSM Parameter Store for secrets management

Amazon CloudWatch for monitoring and logging

Integration enhances your application’s:

Scalability — Offload data, caching, messaging to AWS-native services

Security — Use IAM roles and policies for fine-grained access control

Resilience — Rely on AWS SLAs for critical components

Observability — Monitor and trace hybrid workloads via CloudWatch and X-Ray

🔐 IAM and Permissions: Secure Integration First

A crucial part of ROSA-AWS integration is managing IAM roles and policies securely.

Steps:

Create IAM Roles for Service Accounts (IRSA):

ROSA supports IAM Roles for Service Accounts, allowing pods to securely access AWS services without hardcoding credentials.

Attach IAM Policy to the Role:

Example: An application that uploads files to S3 needs the following permissions:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": ["s3:PutObject", "s3:GetObject"],
          "Resource": "arn:aws:s3:::my-bucket-name/*"
        }
      ]
    }

Annotate OpenShift Service Account:

Use oc annotate to associate your OpenShift service account with the IAM role.

📦 Common Integration Use Cases

1. Storing App Logs in S3

Use a Fluentd or Loki pipeline to export logs from OpenShift to Amazon S3.

2. Connecting ROSA Apps to RDS

Applications can use standard drivers (PostgreSQL, MySQL) to connect to RDS endpoints — make sure to configure VPC and security groups appropriately.

3. Triggering AWS Lambda from ROSA

Set up an API Gateway or SNS topic to allow OpenShift applications to invoke serverless functions in AWS for batch processing or asynchronous tasks.

4. Using AWS Secrets Manager

Mount secrets securely in pods using the Secrets Store CSI Driver with the AWS provider, or inject them using operators.
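For use case 3, here is a Python sketch of both sides of an SNS-based trigger: a ROSA workload publishing a job message, and the subscribed Lambda function reading it. The message schema and `jobType` attribute are hypothetical; the pod's IAM role would also need `sns:Publish` on the topic, and the client is passed in so the code runs without AWS access.

```python
import json


def publish_batch_job(sns, topic_arn, job_id, payload):
    """Publish a job message to an SNS topic that a Lambda function subscribes to.

    `sns` is a boto3 SNS client (boto3.client("sns")); the schema is hypothetical.
    SNS invokes subscribed Lambda functions asynchronously.
    """
    response = sns.publish(
        TopicArn=topic_arn,
        Message=json.dumps({"jobId": job_id, "payload": payload}),
        MessageAttributes={"jobType": {"DataType": "String", "StringValue": "batch"}},
    )
    return response["MessageId"]


def lambda_handler(event, context):
    """Subscriber side: SNS delivers each message under Records[].Sns.Message."""
    jobs = [json.loads(record["Sns"]["Message"]) for record in event["Records"]]
    # A real function would do the batch work here.
    return {"processed": [job["jobId"] for job in jobs]}
```

Going through SNS (rather than calling the Lambda Invoke API directly) decouples the OpenShift app from the function, and lets more subscribers, such as an SQS queue, be added later.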

🛠 Hands-On Example: Accessing S3 from ROSA Pod

Here’s a quick walkthrough:

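Create an IAM role with S3 permissions and a trust policy that lets the cluster's OIDC provider assume it on behalf of the service account.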

Associate the role with a Kubernetes service account.

Deploy your pod using that service account.

Use AWS SDK (e.g., boto3 for Python) inside your app to access S3.

    oc create sa s3-access
    oc annotate sa s3-access eks.amazonaws.com/role-arn=arn:aws:iam::<account-id>:role/S3AccessRole

Then reference s3-access in your pod’s YAML (spec.serviceAccountName: s3-access).
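Inside the pod, the application code needs no credentials of its own. The following Python sketch assumes the cluster's pod identity webhook injects `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE` (as it does for IAM roles for service accounts), which boto3's default credential chain picks up automatically. The bucket and key names are placeholders, and the S3 client is passed in so the logic can be exercised without AWS.

```python
import json


def upload_report(s3, bucket, key, report):
    """Write a JSON report to S3 and read it back.

    `s3` is a boto3 S3 client, e.g. boto3.client("s3"). With the annotated
    service account, boto3 assumes the IAM role via the web-identity token,
    so no access keys appear in code or environment.
    """
    body = json.dumps(report).encode("utf-8")
    # Requires s3:PutObject on the bucket (see the policy above).
    s3.put_object(Bucket=bucket, Key=key, Body=body, ContentType="application/json")
    # Requires s3:GetObject.
    obj = s3.get_object(Bucket=bucket, Key=key)
    return json.loads(obj["Body"].read())
```

A typical call inside the pod would be `upload_report(boto3.client("s3"), "my-bucket-name", "reports/daily.json", {...})`.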

📚 ROSA CS221 Course Highlights

The CS221 course from Red Hat focuses on:

Configuring service accounts and roles

Setting up secure access to AWS services

Using OpenShift tools and operators to manage external integrations

Best practices for hybrid cloud observability and logging

It’s a great choice for developers, cloud engineers, and architects aiming to harness the full potential of ROSA + AWS.

✅ Final Thoughts

Integrating ROSA with AWS services enables teams to build robust, cloud-native applications using best-in-class tools from both Red Hat and AWS. Whether it's persistent storage, messaging, serverless computing, or monitoring — AWS services complement ROSA perfectly.

Mastering these integrations through real-world use cases or formal training (like CS221) can significantly uplift your DevOps capabilities in hybrid cloud environments.

Looking to Learn or Deploy ROSA with AWS?

HawkStack Technologies offers hands-on training, consulting, and ROSA deployment support. For more details, visit www.hawkstack.com.


Serverless Deployment using AWS Lambda and API Gateway

Serverless architecture offers a compelling solution with its pay-per-use model and automatic scaling. This blog post will explore building a scalable serverless architecture using AWS Lambda and API Gateway.

Contents: Introduction; Understanding Serverless Architecture; Core Components: AWS Lambda and API Gateway; Building a Scalable Serverless Architecture; Example Architecture: Processing API Requests and Saving Data; Benefits of Serverless Architecture; Conclusion; Related Posts

Introduction

In today’s fast-paced digital world, applications must handle fluctuating workloads without compromising performance or cost.…
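The example architecture named above (processing API requests and saving data) can be sketched as a Lambda function behind API Gateway that validates a JSON body and writes it to DynamoDB. This is a guess at the shape, not the post's code: the table, its `id` partition key, and the required `name` field are hypothetical, and the table object is injected so the handler can be tested without AWS.

```python
import json
import uuid
from decimal import Decimal


def make_handler(table):
    """Return an API Gateway proxy handler that saves a JSON body to DynamoDB.

    `table` is a boto3 DynamoDB Table resource, e.g.
    boto3.resource("dynamodb").Table("Orders"); names are hypothetical.
    """
    def handler(event, context):
        try:
            # boto3's DynamoDB resource rejects Python floats, so parse as Decimal.
            data = json.loads(event.get("body") or "", parse_float=Decimal)
        except json.JSONDecodeError:
            return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}
        if not isinstance(data, dict) or "name" not in data:
            return {"statusCode": 400, "body": json.dumps({"error": "'name' is required"})}
        item = {**data, "id": str(uuid.uuid4())}  # server-generated partition key
        table.put_item(Item=item)
        return {"statusCode": 201, "body": json.dumps({"id": item["id"]})}

    return handler
```

When deployed, the module would create the handler once at import time, for example `lambda_handler = make_handler(boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"]))`, so the client is reused across warm invocations.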


What is AWS Lambda?

AWS Lambda is a powerful serverless computing service that allows developers to run code without managing servers. It automatically scales applications in response to demand, enabling event-driven architecture and microservices deployment. With Lambda, you pay only for the compute time you consume, making it cost-efficient for cloud-native applications. It integrates seamlessly with other AWS services like API Gateway, S3, DynamoDB, and CloudWatch, empowering developers to build scalable, high-performance, and secure applications. Lambda supports multiple programming languages and simplifies backend development by handling infrastructure management, making it ideal for modern DevOps and continuous integration/continuous deployment (CI/CD) pipelines.
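As an example of the S3 integration mentioned above, here is a minimal Python handler for S3 event notifications. It only collects the bucket, key, and event name of each record; what a real function does with each object is left out. One detail worth knowing: S3 URL-encodes object keys in event payloads (spaces arrive as `+`), so keys are decoded before use.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect the objects referenced in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event, e.g. "my+file%281%29.csv".
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append({"bucket": bucket, "key": key, "event": record["eventName"]})
        # A real function would process each object here (resize, index, ...).
    return objects
```

A notification for `reports/q1+summary%281%29.csv` yields the key `reports/q1 summary(1).csv`, which is the name to pass back to S3 APIs.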


Common Challenges in Mobile App Development Services and How to Overcome Them

In today’s digital-first world, mobile applications are central to how we interact with brands, services, and technology. As businesses race to meet consumer demand, the need for professional Mobile App Development Services has never been greater. However, developing a successful mobile app is a complex process with multiple challenges. From platform fragmentation to security and scalability, this blog outlines the common issues developers face and how to tackle them with modern solutions.

1. Platform Fragmentation: iOS, Android, and Beyond

One of the foremost challenges in Mobile App Development Services is the wide array of platforms and devices. iOS and Android dominate the market, but they have vastly different ecosystems, design standards, and user expectations.

The Problem: Developers must ensure that an app performs consistently across all devices and operating system versions. Maintaining separate codebases for each platform can also increase development time and costs.

The Solution: Embracing cross-platform development frameworks like Flutter, React Native, or Kotlin Multiplatform allows teams to write code once and deploy across multiple platforms with minimal friction. These tools offer native-like performance and reduce time-to-market significantly.

2. Choosing the Right Tech Stack

Selecting the wrong technology stack is a common pitfall that can lead to poor app performance and increased technical debt.

The Problem: A mismatch between the chosen technologies and the app’s requirements can make scaling difficult or result in a sluggish user experience.

The Solution: A professional Mobile App Development Services team conducts a thorough needs analysis before choosing technologies. The right tech stack should include a scalable backend (Node.js, Django, or Firebase), high-performance databases (MongoDB, PostgreSQL), and cloud services (AWS, Azure, GCP) that align with the app’s goals.

3. Security and Data Privacy Concerns

With rising cyber threats and increasing regulations, app security is more critical than ever.

The Problem: Mobile apps often handle sensitive user data, making them targets for data breaches. Non-compliance with regulations like GDPR or HIPAA can result in legal penalties.

The Solution: Implement end-to-end encryption, use token-based authentication and authorization (OAuth 2.0), and perform regular security audits. Secure your APIs and use only trusted SDKs. Partnering with experienced Mobile App Development Services helps ensure your app is compliant and secure from the ground up.

4. Performance Optimization

User expectations are high—if your app lags or crashes, users will uninstall it in seconds.

The Problem: Common issues include slow load times, high memory consumption, and frequent crashes—especially on low-end devices.

The Solution: Optimize images and assets, implement caching mechanisms, and minimize unnecessary background processes. Use tools like Firebase Performance Monitoring, AppDynamics, or New Relic to track real-time performance and fix bottlenecks before users notice them.
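The caching advice above can be illustrated with a tiny time-to-live (TTL) cache: serve a stored value until it expires, then reload it. On a device this would normally be written in Swift or Kotlin; it is sketched in Python here only to keep this page's examples in one language, and the `TTLCache` name and API are hypothetical.

```python
import time


class TTLCache:
    """Minimal in-memory cache whose entries expire after `ttl` seconds.

    A sketch of the idea, not a production cache: no size limit and no
    thread safety. `clock` is injectable so expiry can be tested.
    """

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key, loader):
        """Return the cached value for `key`, calling `loader()` on a miss or expiry."""
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]
        value = loader()  # e.g. a network request for a user profile
        self._entries[key] = (value, now + self.ttl)
        return value
```

Using a monotonic clock avoids surprises when the device's wall-clock time changes; choosing the TTL is the real design decision, trading freshness against network calls and battery.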

5. Scalability for Growth

Your app might perform well with a small user base, but what happens when it grows?

The Problem: Poorly designed apps often struggle to handle increased traffic or new features, leading to performance issues and downtime.

The Solution: Adopt a modular architecture and scalable backend services like AWS Lambda, Kubernetes, or Google Cloud Run. Microservices allow parts of your app to scale independently, ensuring seamless performance as user numbers grow.

6. Third-Party Integrations

Most apps require integration with payment gateways, analytics platforms, social media APIs, and more.

The Problem: These integrations can break due to API changes, SDK incompatibility, or lack of proper documentation, leading to unexpected app behavior.

The Solution: Use well-documented, stable SDKs and pin their versions. Run integration tests separately to isolate potential issues before deployment.

7. User Experience (UX) and UI Design

Great functionality is useless without an intuitive interface.

The Problem: Poor UX/UI can confuse users, resulting in high abandonment rates and negative reviews.

The Solution: Start with user research and create detailed user personas. Design wireframes and clickable prototypes using tools like Figma or Adobe XD. Follow platform-specific design systems such as Material Design (Android) and Apple Human Interface Guidelines (iOS) to ensure consistency and familiarity.

8. Continuous Testing and Maintenance

Launching the app is only the beginning.

The Problem: Many businesses underestimate the need for regular updates, bug fixes, and new feature rollouts. This can lead to outdated, vulnerable apps.

The Solution: Implement CI/CD pipelines using tools like Jenkins, GitHub Actions, or Bitrise for automated testing and deployment. Set up crash reporting tools like Sentry or Crashlytics to monitor post-launch performance and ensure ongoing maintenance through a structured support plan.

Conclusion

Mobile app development is a journey fraught with challenges—from choosing the right tools to maintaining high security and ensuring a flawless user experience. However, these obstacles are manageable with the right strategies and technical expertise. Businesses that invest in reliable Mobile App Development Services position themselves for long-term success in the competitive app marketplace.


Pass AWS SAP-C02 Exam in First Attempt

Crack the AWS Certified Solutions Architect - Professional (SAP-C02) exam on your first try with real exam questions, expert tips, and the best study resources from JobExamPrep and Clearcatnet.

How to Pass AWS SAP-C02 Exam in First Attempt: Real Exam Questions & Tips

Are you aiming to pass the AWS Certified Solutions Architect – Professional (SAP-C02) exam on your first try? You’re not alone. With the right strategy, real exam questions, and trusted study resources like JobExamPrep and Clearcatnet, you can achieve your certification goals faster and more confidently.

Overview of SAP-C02 Exam

The SAP-C02 exam validates your advanced technical skills and experience in designing distributed applications and systems on AWS. Key domains include:

Design Solutions for Organizational Complexity

Design for New Solutions

Continuous Improvement for Existing Solutions

Accelerate Workload Migration and Modernization

Exam Format:

Number of Questions: 75

Type: Multiple choice, multiple response

Duration: 180 minutes

Passing Score: 750 (scaled score from 100 to 1,000)

Cost: $300 USD

AWS SAP-C02 Sample Questions (Exam-Style)

Here are 5 real-exam style questions to give you a feel for the exam difficulty and topics:

Q1: A company is migrating its on-premises Oracle database to Amazon RDS. The solution must minimize downtime and data loss. Which strategy is BEST?

A. AWS Database Migration Service (DMS) with full load only

B. RDS snapshot and restore

C. DMS with CDC (change data capture)

D. Export and import via S3

Answer: C. DMS with CDC

Q2: You are designing a solution that spans multiple AWS accounts and VPCs. Which AWS service allows seamless inter-VPC communication?

A. VPC Peering

B. AWS Direct Connect

C. AWS Transit Gateway

D. NAT Gateway

Answer: C. AWS Transit Gateway

Q3: Which strategy enhances resiliency in a serverless architecture using Lambda and API Gateway?

A. Use a single Availability Zone

B. Enable retries and DLQs (Dead Letter Queues)

C. Store state in Lambda memory

D. Disable logging

Answer: B. Enable retries and DLQs

Q4: A company needs to archive petabytes of data with occasional access within 12 hours. Which storage class should you use?

A. S3 Standard

B. S3 Intelligent-Tiering

C. S3 Glacier

D. S3 Glacier Deep Archive

Answer: D. S3 Glacier Deep Archive

Q5: You are designing a disaster recovery (DR) solution for a high-priority application. The RTO is 15 minutes, and RPO is near zero. What is the most appropriate strategy?

A. Pilot Light

B. Backup & Restore

C. Warm Standby

D. Multi-Site Active-Active

Answer: D. Multi-Site Active-Active
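Q3's answer (retries plus dead-letter queues) is easier to remember once you have seen the pattern. For asynchronous invocations, Lambda itself retries failed events (up to two retries by default) and can route them to an SQS or SNS dead-letter queue, so this Python sketch only mimics that behavior in application code; a plain list stands in for the queue and `process_with_retries` is a hypothetical name.

```python
import time


def process_with_retries(handler, message, dead_letters, max_attempts=3,
                         base_delay=0.5, sleep=time.sleep):
    """Call handler(message), retrying with exponential backoff.

    After max_attempts failures the message is appended to `dead_letters`
    (standing in for an SQS dead-letter queue) instead of being lost.
    Returns True on success, False if the message was dead-lettered.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            handler(message)
            return True
        except Exception as exc:  # a real system would catch narrower errors
            if attempt == max_attempts:
                dead_letters.append({"message": message, "error": str(exc)})
                return False
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5 s, 1 s, 2 s, ...
    return False
```

The point for the exam: retries absorb transient failures, while the dead-letter queue preserves messages that keep failing so they can be inspected and replayed, which is why option B improves resiliency.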

Recommended Resources to Pass SAP-C02 on Your First Attempt

To master these types of questions and scenarios, rely on real-world tested resources. We recommend:

✅ JobExamPrep

A premium platform offering curated practice exams, scenario-based questions, and up-to-date study materials specifically for AWS certifications. Thousands of professionals trust JobExamPrep for structured and realistic exam practice.

✅ Clearcatnet

A specialized site focused on cloud certification content, especially AWS, Azure, and Google Cloud. Their SAP-C02 study guide and video explanations are ideal for deep conceptual clarity.

Expert Tips to Pass the AWS SAP-C02 Exam

Master Whitepapers – Read AWS Well-Architected Framework, Disaster Recovery, and Security best practices.

Practice Scenario-Based Questions – Focus on use cases involving multi-account setups, migration, and DR.

Use Flashcards – Especially for services like AWS Control Tower, Service Catalog, Transit Gateway, and DMS.

Daily Review Sessions – Use JobExamPrep and Clearcatnet quizzes every day.

Mock Exams – Simulate the exam environment at least twice before the real test.

🎓 Final Thoughts

The AWS SAP-C02 exam is tough—but with the right approach, you can absolutely pass it on the first attempt. Study smart, practice real exam questions, and leverage resources like JobExamPrep and Clearcatnet to build both confidence and competence.

#SAPC02#AWSSAPC02#AWSSolutionsArchitect#AWSSolutionsArchitectProfessional#AWSCertifiedSolutionsArchitect#SolutionsArchitectProfessional#AWSArchitect#AWSExam#AWSPrep#AWSStudy#AWSCertified#AWS#AmazonWebServices#CloudCertification#TechCertification#CertificationJourney#CloudComputing#CloudEngineer#ITCertification


Build A Smarter Security Chatbot With Amazon Bedrock Agents

Use Amazon Security Lake and an Amazon Bedrock chatbot for incident investigation. This post shows how to set up a security chatbot that uses an Amazon Bedrock agent to combine existing playbooks with a serverless backend and GUI to investigate or respond to security incidents. The chatbot uses purpose-built Amazon Bedrock agents to help address security issues from natural language input. A single graphical user interface (GUI) communicates directly with the Amazon Bedrock agent, which builds and runs SQL queries or recommends steps from internal incident response playbooks.

1. The user sends a query through the React UI.

Note: This approach does not integrate authentication into the React UI. Add authentication that meets your company's security standards; AWS Amplify UI and Amazon Cognito can provide it.

2. An Amazon API Gateway REST API passes the query to the Invoke Agent AWS Lambda function.

3. The Lambda function invokes the Amazon Bedrock agent with the user query.

4. The Amazon Bedrock agent (using Claude 3 Sonnet from Anthropic) processes the query and decides whether to query Security Lake through Amazon Athena or to gather playbook information.

5. For questions about playbooks: the Amazon Bedrock agent queries the playbooks knowledge base and returns relevant results.

6. For questions about Security Lake data:

a. The Amazon Bedrock agent retrieves Security Lake table schemas from the schema knowledge base to generate SQL queries.

b. The agent sends the generated SQL query by calling the SQL query action in its action group.

c. The action group calls the Execute SQL on Athena Lambda function, which runs the query on Athena and returns the results to the Amazon Bedrock agent.

7. After gathering the action group or knowledge base results, the Amazon Bedrock agent composes the final answer and returns it to the Invoke Agent Lambda function.

8. The Lambda function returns the response to the client through an API Gateway WebSocket API.

9. API Gateway delivers the response to the React UI over the WebSocket connection.

10. The chat interface displays the agent's response.
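The Invoke Agent Lambda function in this flow could look roughly like the following Python sketch, which calls the `bedrock-agent-runtime` `invoke_agent` API, assembles the streamed answer, and pushes it back with the API Gateway Management API's `post_to_connection`. It is not the solution's published code: the request fields (`query`, `sessionId`, `connectionId`) and the `AGENT_ID` / `AGENT_ALIAS_ID` environment variables are hypothetical, and both clients are passed in so the logic can be tested without AWS.

```python
import json
import os


def invoke_agent_handler(event, context, agent_client, ws_client):
    """Forward a chat query to an Amazon Bedrock agent and push the answer
    back over an API Gateway WebSocket connection.

    agent_client: boto3.client("bedrock-agent-runtime")
    ws_client:    boto3.client("apigatewaymanagementapi", endpoint_url=...)
    """
    request = json.loads(event["body"])
    response = agent_client.invoke_agent(
        agentId=os.environ["AGENT_ID"],            # hypothetical variable names
        agentAliasId=os.environ["AGENT_ALIAS_ID"],
        sessionId=request["sessionId"],            # keeps multi-turn context
        inputText=request["query"],
    )
    # The completion is an event stream; answer text arrives in "chunk" events.
    # Join the raw bytes first so a UTF-8 character split across chunks survives.
    answer = b"".join(
        part["chunk"]["bytes"] for part in response["completion"] if "chunk" in part
    ).decode("utf-8")
    ws_client.post_to_connection(
        ConnectionId=request["connectionId"],
        Data=json.dumps({"answer": answer}).encode("utf-8"),
    )
    return {"statusCode": 200}
```

In a deployed function, the two clients would be created once at module level, with the management API endpoint set to `https://{api-id}.execute-api.{region}.amazonaws.com/{stage}`, and a thin `lambda_handler(event, context)` would pass them in.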

Requirements

Prior to executing the example solution, complete the following requirements:

Select an administrator account to manage Security Lake configuration for each member account in AWS Organisations. Configure Security Lake with necessary logs: Amazon Route53, Security Hub, CloudTrail, and VPC Flow Logs.

Connect subscriber AWS account to source Security Lake AWS account for subscriber queries.

Approve the subscriber's AWS account resource sharing request in AWS RAM.

Create a database link in AWS Lake Formation in the subscriber AWS account and grant access to the Security Lake Athena tables.

Provide access to Anthropic's Claude v3 model for Amazon Bedrock in the AWS subscriber account where you'll build the solution. Using a model before activating it in your AWS account will result in an error.

Once the requirements are met, the sample solution deploys the following resources:

An Amazon CloudFront distribution backed by Amazon S3.

A static website for the chatbot UI, hosted on Amazon S3.

An API Gateway API used to invoke the Lambda functions.

A Lambda function that invokes the Amazon Bedrock agent.

An Amazon Bedrock agent equipped with knowledge bases.

An action group that lets the Amazon Bedrock agent run SQL queries on Athena.

An Amazon Bedrock knowledge base with example Athena table schemas for Security Lake. The sample table schemas improve SQL query generation for Security Lake table fields, even though the Amazon Bedrock agent retrieves the data itself from the Athena database.

An Amazon Bedrock knowledge base for reviewing pre-existing incident response playbooks. The Amazon Bedrock agent can propose investigation or response steps based on the playbooks approved by your company.
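The Athena part of the SQL action group (the Execute SQL on Athena step) can be sketched with boto3's Athena client: start the query, poll its state, then read the results. This is a minimal sketch under stated assumptions: the function name, the polling limits, and the `database` and `output_location` parameters are hypothetical; only the first page of results is read (`get_query_results` paginates with `NextToken`); and the Amazon Bedrock action group request/response envelope that the real function must handle is omitted.

```python
import time


def run_athena_query(athena, sql, database, output_location,
                     poll_seconds=1.0, max_polls=60):
    """Run one SQL query on Athena and return the rows as lists of strings.

    athena is assumed to be a boto3 'athena' client.
    """
    start = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},  # e.g. "s3://bucket/prefix/"
    )
    query_id = start["QueryExecutionId"]

    # Poll until the query leaves the QUEUED/RUNNING states.
    for _ in range(max_polls):
        status = athena.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]["Status"]
        state = status["State"]
        if state == "SUCCEEDED":
            break
        if state in ("FAILED", "CANCELLED"):
            raise RuntimeError(f"Athena query {state}: {status.get('StateChangeReason', '')}")
        time.sleep(poll_seconds)
    else:
        raise TimeoutError(f"Athena query {query_id} did not finish in time")

    # Only the first page of results; for a SELECT, row 0 holds the column names.
    results = athena.get_query_results(QueryExecutionId=query_id)
    return [[col.get("VarCharValue") for col in row["Data"]]
            for row in results["ResultSet"]["Rows"]]
```

The rows returned here would then be serialized into whatever response format the action group expects before being handed back to the agent.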

Cost

Before deploying the sample solution and following this tutorial, make sure you understand the costs of the AWS services involved. The cost of using Amazon Bedrock and Athena to query Security Lake depends on the amount of data.

Security Lake cost depends on the volume of AWS log and event data it ingests. The other AWS services that Security Lake uses, such as Amazon S3, AWS Glue, EventBridge, Lambda, SQS, and SNS, are charged separately; see their pricing pages for details.

Amazon Bedrock on-demand pricing depends on the number of input and output tokens and on the large language model (LLM) used. Tokens are short sequences of a few characters that the model uses to process user input and instructions. See Amazon Bedrock pricing for additional details.

Athena runs the SQL queries that Amazon Bedrock generates. Athena's cost depends on how much Security Lake data each query scans. See Athena pricing for details.
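The two usage-based components above combine into a simple back-of-the-envelope estimate. This is a sketch only: the prices are parameters you would take from the current Amazon Bedrock and Athena pricing pages (no real rates are assumed here), token prices are expressed per 1,000 tokens, and any minimum-charge or rounding rules on those pricing pages are ignored.

```python
def bedrock_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """On-demand Bedrock cost: input and output tokens are priced separately."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


def athena_cost(tb_scanned, price_per_tb):
    """Athena cost for a query, proportional to the data it scans (in TB)."""
    return tb_scanned * price_per_tb


def monthly_estimate(chats, tokens_in, tokens_out, tb_per_chat,
                     price_in_per_1k, price_out_per_1k, price_per_tb):
    """Rough monthly cost for `chats` conversations with similar token and scan sizes."""
    per_chat = (bedrock_cost(tokens_in, tokens_out, price_in_per_1k, price_out_per_1k)
                + athena_cost(tb_per_chat, price_per_tb))
    return chats * per_chat
```

For example, `bedrock_cost(2000, 500, p_in, p_out)` prices one exchange with 2,000 input and 500 output tokens at placeholder rates `p_in` and `p_out`. Because Athena bills on data scanned, partition filters in the generated SQL tend to matter more than the number of queries.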

Clean up

If you launched the security chatbot example solution by using the Launch Stack button in the console with the CloudFormation template security_genai_chatbot_cfn, clean up as follows:

Choose the Security GenAI Chatbot stack in CloudFormation in the account and Region where the solution was installed.

Choose “Delete the stack”.

If you deployed the solution using AWS CDK, run cdk destroy --all.
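For scripted clean-up, the console steps correspond roughly to two boto3 CloudFormation operations: delete the stack, then wait for deletion to finish. This is a minimal sketch: the stack name is a placeholder (use the name shown in your CloudFormation console), and the client is passed in so the sequence can be tested without AWS.

```python
def delete_stack_and_wait(cfn, stack_name):
    """Delete a CloudFormation stack and block until deletion completes.

    cfn is assumed to be a boto3 'cloudformation' client.
    """
    cfn.delete_stack(StackName=stack_name)
    # Built-in waiter that polls the stack status until it is deleted (or fails).
    cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name)
```

Usage would look like `delete_stack_and_wait(boto3.client("cloudformation"), "your-stack-name")`. For CDK deployments, `cdk destroy --all` remains the simpler route.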

Conclusion

The sample solution illustrates how task-oriented Amazon Bedrock agents and natural language input can improve security and speed up investigation and analysis. It is a prototype built around an Amazon Bedrock agent-driven user interface. This approach can be expanded to incorporate additional task-oriented agents with their own models, knowledge bases, and instructions. Wider use of AI-powered agents can help your AWS security team perform better across several domains.

The chatbot's backend works with data that Security Lake has normalised into the Open Cybersecurity Schema Framework (OCSF).


Welcome devs to the world of development and automation. Today, we are diving into an exciting project in which we will create a Serverless Image Processing Pipeline with AWS services. The project starts with creating S3 buckets for storing uploaded images and processed thumbnails, and then uses several services: Lambda, API Gateway (to trigger a Lambda function), and DynamoDB (to store image metadata). Finally, we will run the frontend in an ECS cluster by creating a Docker image of the project. This project is packed with cloud services and development tech stacks like Next.js, and practicing it will deepen your understanding of cloud services and how they interact with each other. So without further ado, let's get started!

Note: The code and instructions in this post are for demo use and learning only. A production environment will require a tighter grip on configurations and security.

Prerequisites

Before we get into the project, make sure the following requirements are met:

An AWS Account: Since we use AWS services for the project, we need an AWS account. A configured IAM user with access to the required services is recommended.

Basic Understanding of AWS Services: Since we are dealing with many AWS services, it helps to have a decent understanding of them, such as S3, which is used for storage, API Gateway, which triggers the Lambda function, and so on.

Node Installed: Our frontend is built with Next.js, so Node.js must be installed on your system.

For code reference, here is the GitHub repo.

AWS Services Setup

We will start the project by setting up our AWS services. First, we will create two S3 buckets, namely sample-image-uploads-bucket and sample-thumbnails-bucket. The reason for these long names is that S3 bucket names must be globally unique across all AWS accounts.
To create a bucket, head over to the S3 dashboard, click Create bucket, select General purpose, give it a name (sample-image-uploads-bucket), and leave the rest of the configuration at the defaults. Similarly, create the other bucket named sample-thumbnails-bucket, but for this bucket make sure you uncheck Block Public Access, because we will need it for our ECS cluster. The sample-thumbnails-bucket must have public read access so that the ECS frontend can display the thumbnails. For that, attach the following bucket policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicRead",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::sample-thumbnails-bucket/*"
    }
  ]
}
```

After creating the buckets, let's move on to our database for storing image metadata: a DynamoDB table. Go to your DynamoDB console, click Create table, give it a name (image_metadata), and set the partition key to image_id with type String.

AWS services will communicate with each other, so they need a role with the proper permissions. To create a role, go to the IAM dashboard, select Roles, and click Create role. Under Trusted entity type, select AWS service, and under Use case, choose Lambda. Attach the following policies:

AmazonS3FullAccess

AmazonDynamoDBFullAccess

CloudWatchLogsFullAccess

Give this role a name (Lambda-Image-Processor-Role) and save it.

Creating Lambda Function

We have our Lambda role, buckets, and DynamoDB table ready, so now let's create the Lambda function that processes each image and makes a thumbnail from it. We use the Pillow library to process the images, and Lambda doesn't provide it by default, so we will add a layer to the Lambda function. To do that, follow these steps:

Go to your Lambda dashboard and click Create function.
Select Author from scratch, choose Python 3.9 as the runtime, and give it a name: image-processor. In the Code tab, use the Upload from option, choose .zip file, and upload the zip file of the image-processor. Go to Configuration, and under Permissions, edit the configuration to change the existing role to the role we created, Lambda-Image-Processor-Role.

Now go to your S3 bucket (sample-image-uploads-bucket), open its Properties tab, and scroll down to Event notifications. Click Create event notification, give it a name (trigger-image-processor), select PUT as the event type, and select the Lambda function we created (image-processor).

Since Pillow isn't included in the Lambda Python runtime, we add it as a layer:

Go to your Lambda function (image-processor), scroll down to the Layers section, and click Add a layer.

In the Add layer section, select Specify an ARN and provide this ARN: arn:aws:lambda:us-east-1:770693421928:layer:Klayers-p39-pillow:1. Change the Region accordingly; I am using us-east-1.

Add the layer.
Now, in the Code tab of your Lambda function you will have a lambda_function.py file. Put the following content inside lambda_function.py:

```python
import datetime
import uuid
from io import BytesIO
from urllib.parse import unquote_plus

import boto3
from PIL import Image

s3 = boto3.client('s3')
dynamodb = boto3.client('dynamodb')

UPLOAD_BUCKET = ''     # set to your upload bucket name
THUMBNAIL_BUCKET = ''  # set to your thumbnails bucket name
DDB_TABLE = 'image_metadata'


def lambda_handler(event, context):
    # The S3 event notification carries the bucket and key of the uploaded object.
    record = event['Records'][0]
    bucket = record['s3']['bucket']['name']
    # Object keys in S3 events are URL-encoded (e.g. spaces arrive as '+').
    key = unquote_plus(record['s3']['object']['key'])

    # Download the original image and shrink it in place to fit within 200x200.
    response = s3.get_object(Bucket=bucket, Key=key)
    image = Image.open(BytesIO(response['Body'].read()))
    image.thumbnail((200, 200))

    # Encode the thumbnail as JPEG in memory and upload it.
    thumbnail_buffer = BytesIO()
    image.save(thumbnail_buffer, 'JPEG')
    thumbnail_buffer.seek(0)

    thumbnail_key = f"thumb_{key}"
    s3.put_object(
        Bucket=THUMBNAIL_BUCKET,
        Key=thumbnail_key,
        Body=thumbnail_buffer,
        ContentType='image/jpeg'
    )

    # Record the image metadata in DynamoDB.
    image_id = str(uuid.uuid4())
    original_url = f"https://{UPLOAD_BUCKET}.s3.amazonaws.com/{key}"
    thumbnail_url = f"https://{THUMBNAIL_BUCKET}.s3.amazonaws.com/{thumbnail_key}"
    uploaded_at = datetime.datetime.now().isoformat()

    dynamodb.put_item(
        TableName=DDB_TABLE,
        Item={
            'image_id': {'S': image_id},
            'original_url': {'S': original_url},
            'thumbnail_url': {'S': thumbnail_url},
            'uploaded_at': {'S': uploaded_at}
        }
    )

    return {
        'statusCode': 200,
        'body': f"Thumbnail created: {thumbnail_url}"
    }
```

Now we need another Lambda function for API Gateway, because it will act as the entry point for our frontend ECS app to fetch image data from DynamoDB.
To create this Lambda function, go to your Lambda dashboard, click Create function, select Author from scratch and Python 3.9 as the runtime, and give it a name: get-image-metadata. In the configuration, select the same role that we assigned to the other Lambda function (Lambda-Image-Processor-Role). Now, in the Code section of the function, put the following content:

```python
import json

import boto3

dynamodb = boto3.client('dynamodb')
TABLE_NAME = 'image_metadata'


def lambda_handler(event, context):
    try:
        # A single scan call returns at most 1 MB of data; there is no pagination here.
        response = dynamodb.scan(TableName=TABLE_NAME)
        images = []
        for item in response['Items']:
            # Convert DynamoDB's typed attributes ({'S': ...}) to plain strings.
            images.append({
                'image_id': item['image_id']['S'],
                'original_url': item['original_url']['S'],
                'thumbnail_url': item['thumbnail_url']['S'],
                'uploaded_at': item['uploaded_at']['S']
            })
        return {
            'statusCode': 200,
            'headers': {"Content-Type": "application/json"},
            'body': json.dumps(images)
        }
    except Exception as e:
        return {
            'statusCode': 500,
            'body': f"Error: {str(e)}"
        }
```

Creating the API Gateway

The API Gateway will act as the entry point for your ECS frontend application to fetch image data from DynamoDB. It connects to the Lambda function that queries DynamoDB and returns the image metadata. The Gateway URL is used in our frontend app to display images. To create the API Gateway, follow these steps:

Go to the AWS Management Console → Search for API Gateway → Click Create API.

Select HTTP API and click Build.

API name: image-gallery-api

Add integrations: Select Lambda and select the get-image-metadata function.

Configure the route: Method GET and Path /images.

Endpoint type: Regional

Click Next and create the API to get its invoke URL.

Before creating the frontend, let's test the application manually.
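Once an image has been uploaded, the same check that curl performs against the /images route can also be scripted. This is a minimal sketch using only Python's standard library; `fetch_images` and `incomplete_records` are hypothetical helper names, the invoke URL is yours to supply, and the `opener` parameter exists only so the function can be tested without a network.

```python
import json
import urllib.request

# Fields the get-image-metadata function returns for every image.
REQUIRED_FIELDS = {"image_id", "original_url", "thumbnail_url", "uploaded_at"}


def fetch_images(invoke_url, opener=urllib.request.urlopen):
    """GET <invoke_url>/images and decode the JSON list it returns."""
    url = invoke_url.rstrip("/") + "/images"
    with opener(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def incomplete_records(images):
    """Return the image_ids of records that are missing any expected field."""
    return [img.get("image_id") for img in images
            if not REQUIRED_FIELDS.issubset(img)]
```

For example, `incomplete_records(fetch_images("https://<your-invoke-url>"))` should return an empty list once the pipeline is working.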
First go to your upload S3 bucket (sample-image-uploads-bucket) and upload a .jpg/.jpeg image; other formats may fail, because the function saves every thumbnail as JPEG. For example, I uploaded an image titled “ghibil-art.jpg”. Once it is uploaded, it triggers the Lambda function, which creates a thumbnail named “thumb_ghibil-art.jpg”, stores it in sample-thumbnails-bucket, and writes the image's metadata to the image_metadata table in DynamoDB. You can see the new item in the Explore items section of the image_metadata table.

To test the API Gateway, call the invoke URL of image-gallery-api followed by /images, for example with curl; it should return the stored image metadata as JSON.

Now that our application is working, we can deploy a frontend to visualise the project.

Creating the Frontend App

For the sake of simplicity, we will create a minimal gallery frontend using Next.js, Dockerize it, and deploy it on ECS.
To create the app, follow these steps:

Initialization

```bash
npx create-next-app@latest image-gallery
cd image-gallery
npm install
npm install axios
```

Create the Gallery Component

Create a new file components/Gallery.js:

```javascript
'use client';

import { useState, useEffect } from 'react';
import axios from 'axios';
import styles from './Gallery.module.css';

const Gallery = () => {
  const [images, setImages] = useState([]);
  const [loading, setLoading] = useState(true);

  useEffect(() => {
    const fetchImages = async () => {
      try {
        // Replace with your API Gateway invoke URL.
        const response = await axios.get('https:///images');
        setImages(response.data);
      } catch (error) {
        console.error('Error fetching images:', error);
      } finally {
        setLoading(false);
      }
    };
    fetchImages();
  }, []);

  if (loading) return <div className={styles.loading}>Loading...</div>;

  // One card per image: the thumbnail plus its upload date, styled by the CSS module.
  return (
    <div className={styles.gallery}>
      {images.map((image) => (
        <div key={image.image_id} className={styles.imageCard}>
          <img src={image.thumbnail_url} alt="Thumbnail" className={styles.thumbnail} />
          <p className={styles.date}>
            {new Date(image.uploaded_at).toLocaleDateString()}
          </p>
        </div>
      ))}
    </div>
  );
};

export default Gallery;
```

Make sure to change the Gateway URL to your API_GATEWAY_URL.

Add CSS Module

Create components/Gallery.module.css:

```css
.gallery {
  display: grid;
  grid-template-columns: repeat(auto-fill, minmax(200px, 1fr));
  gap: 20px;
  padding: 20px;
  max-width: 1200px;
  margin: 0 auto;
}

.imageCard {
  background: #fff;
  border-radius: 8px;
  box-shadow: 0 2px 5px rgba(0, 0, 0, 0.1);
  overflow: hidden;
  transition: transform 0.2s;
}

.imageCard:hover {
  transform: scale(1.05);
}

.thumbnail {
  width: 100%;
  height: 150px;
  object-fit: cover;
}

.date {
  text-align: center;
  padding: 10px;
  margin: 0;
  font-size: 0.9em;
  color: #666;
}

.loading {
  text-align: center;
  padding: 50px;
  font-size: 1.2em;
}
```

Update the Home Page

Modify app/page.js:

```javascript
import Gallery from '../components/Gallery';

export default function Home() {
  return (
    <main>
      <h1>Image Gallery</h1>
      <Gallery />
    </main>
  );
}
```

Next.js’s built-in Image component

To use Next.js’s built-in Image component for better optimization, update next.config.mjs:

```javascript
const nextConfig = {
  images: {
    domains: ['sample-thumbnails-bucket.s3.amazonaws.com'],
  },
};

export default nextConfig;
```

Run the Application

Start the app and visit it in your browser; you will see the application running with all the uploaded thumbnails.
For demonstration purposes, I put four images (jpeg/jpg) in my sample-image-uploads-bucket. The function transforms them into thumbnails and stores them in sample-thumbnails-bucket, and the gallery displays them.

Containerising and Creating the ECS Cluster

We are almost done with the project, so let's continue by creating a Dockerfile for the project:

```dockerfile
# Use the official Node.js image as a base
FROM node:18-alpine AS builder

# Set working directory
WORKDIR /app

# Copy package files and install dependencies
COPY package.json package-lock.json ./
RUN npm install

# Copy the rest of the application code
COPY . .

# Build the Next.js app
RUN npm run build

# Use a lightweight Node.js image for production
FROM node:18-alpine

# Set working directory
WORKDIR /app

# Copy built files from the builder stage
COPY --from=builder /app ./

# Expose port
EXPOSE 3000

# Run the application
CMD ["npm", "start"]
```

Now build the Docker image:

```bash
docker build -t sample-nextjs-app .
```

Now that we have our Docker image, we will push it to an AWS ECR repository. To do that, follow these steps:

Step 1: Push the Docker Image to Amazon ECR

Go to the AWS Management Console → Search for ECR (Elastic Container Registry) → Open ECR.

Create a new repository: Click Create repository. Set Repository name (e.g., sample-nextjs-app). Choose Private (or Public if required). Click Create repository.

Push your Docker image to ECR: In the newly created repository, click View push commands and follow the commands to authenticate Docker with ECR and to build, tag, and push your image. You need to have the AWS CLI configured for this step.

Step 2: Create an ECS Cluster

```bash
aws ecs create-cluster --cluster-name sample-ecs-cluster
```

Step 3: Create a Task Definition

In the ECS Console, go to Task Definitions. Click Create new task definition. Choose Fargate → Click Next step. Set the task definition details:

Name: sample-nextjs-task

Task execution role: ecsTaskExecutionRole (create one if missing), with a policy that lets ECS pull the image from ECR:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PullFromRepository",
      "Effect": "Allow",
      "Action": [
        "ecr:GetDownloadUrlForLayer",
        "ecr:BatchGetImage",
        "ecr:BatchCheckLayerAvailability"
      ],
      "Resource": "arn:aws:ecr:us-east-1:624448302051:repository/sample-nextjs-app"
    },
    {
      "Sid": "GetAuthToken",
      "Effect": "Allow",
      "Action": "ecr:GetAuthorizationToken",
      "Resource": "*"
    }
  ]
}
```

ecr:GetAuthorizationToken does not support resource-level permissions, so it needs its own statement with "Resource": "*".

Task memory & CPU: Choose appropriate values (e.g., 256 CPU units and 512 MB of memory).

Define the container: Click Add container. Container name: sample-nextjs-container. Image URI: Paste the ECR image URI from Step 1. Port mappings: Set 3000 for both container and host ports. Click Add.

Click Create.

Step 4: Create an ECS Service

Go to ECS → Click Clusters → Select your cluster (sample-ecs-cluster). Click Create service. Choose Fargate → Click Next step.

Set up the service: Task definition: Select sample-nextjs-task. Cluster: sample-ecs-cluster. Service name: sample-nextjs-service. Number of tasks: 1 (can scale later).

Networking settings: Select an existing VPC. Choose public subnets. Enable Auto-assign public IP, and make sure the service's security group allows inbound TCP traffic on port 3000. Click Next step → Create service.

Step 5: Access the Application

Go to ECS > Clusters > sample-ecs-cluster. Click the Tasks tab. Click the running task. Find the Public IP under Network. Open a browser and go to: http://:3000

Your Next.js app should be live! 🚀

Conclusion

This marks the end of the blog. Today, we dove into many AWS services: S3, IAM, DynamoDB, ECR, Lambda, ECS, Fargate, and API Gateway. We started the project by creating S3 buckets and eventually deployed our application in an ECS cluster. Throughout this guide, we covered containerizing the Next.js app, pushing it to ECR, configuring ECS task definitions, and deploying via the AWS console. This setup allows for scaling, easy updates, and API access through API Gateway, all key benefits of a cloud-native deployment.
Potential production configurations may include changes like the following:

Implementing more restrictive IAM permissions.

Improving control over public access to S3 buckets (using CloudFront, pre-signed URLs, or a backend proxy instead of making sample-thumbnails-bucket public).

Adding error handling and pagination (especially for DynamoDB queries).

Using secure VPC/network configurations for ECS (like an Application Load Balancer and private subnets instead of direct public IPs).

Addressing scaling concerns by replacing the DynamoDB scan operation in the metadata-fetching Lambda with a DynamoDB query.

Using environment variables instead of a hardcoded API Gateway URL in the Next.js code.
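Three of these suggestions can be sketched together in a revised get-image-metadata function: configuration from environment variables (on the Lambda side), a paginated scan that follows LastEvaluatedKey, and pre-signed thumbnail URLs so the thumbnails bucket need not be public. This is a minimal sketch under stated assumptions: the helper names and the TABLE_NAME and THUMBNAIL_BUCKET environment variables are hypothetical, the Lambda role must be allowed s3:GetObject on the thumbnails bucket for the signed URLs to work, and it still scans the whole table, so a query on a suitable key would be needed at larger scale.

```python
import json
import os
from urllib.parse import urlparse


def scan_all_items(dynamodb, table_name):
    """Scan the whole table, following LastEvaluatedKey from page to page."""
    items, kwargs = [], {"TableName": table_name}
    while True:
        page = dynamodb.scan(**kwargs)
        items.extend(page.get("Items", []))
        if "LastEvaluatedKey" not in page:
            return items
        kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]


def build_images(dynamodb, s3, table_name, thumbnail_bucket, expires=3600):
    """Map DynamoDB items to JSON-ready dicts with pre-signed thumbnail URLs."""
    images = []
    for item in scan_all_items(dynamodb, table_name):
        # Recover the object key from the stored URL (its path without the leading '/').
        thumb_key = urlparse(item["thumbnail_url"]["S"]).path.lstrip("/")
        images.append({
            "image_id": item["image_id"]["S"],
            "original_url": item["original_url"]["S"],
            "uploaded_at": item["uploaded_at"]["S"],
            # Time-limited GET URL signed with the Lambda role's credentials.
            "thumbnail_url": s3.generate_presigned_url(
                "get_object",
                Params={"Bucket": thumbnail_bucket, "Key": thumb_key},
                ExpiresIn=expires,
            ),
        })
    return images


def lambda_handler(event, context):
    import boto3  # deferred so the helpers above can be tested without AWS

    images = build_images(
        boto3.client("dynamodb"),
        boto3.client("s3"),
        os.environ["TABLE_NAME"],        # hypothetical environment variable
        os.environ["THUMBNAIL_BUCKET"],  # hypothetical environment variable
    )
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(images),
    }
```

Because the response keeps the same field names, the Gallery component can stay unchanged.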


Developing Your Future with AWS Solutions Architect Associate

Why Should You Get AWS Solutions Architect Associate?

If you're stepping into the world of cloud computing or looking to level up your career in IT, the AWS Certified Solutions Architect – Associate course is one of the smartest moves you can make. Here's why:

1. AWS Is the Cloud Market Leader

Amazon Web Services (AWS) dominates the cloud industry, holding a significant share of the global market. With more businesses shifting to the cloud, AWS skills are in high demand—and that trend isn’t slowing down.

2. Proves Your Cloud Expertise

This certification demonstrates that you can design scalable, reliable, and cost-effective cloud solutions on AWS. It's a solid proof of your ability to work with AWS services, including storage, networking, compute, and security.

3. Boosts Your Career Opportunities

Recruiters actively seek AWS-certified professionals. Whether you're an aspiring cloud engineer, solutions architect, or developer, this credential helps you stand out in a competitive job market.

4. Enhances Your Earning Potential

According to various salary surveys, AWS-certified professionals—especially Solution Architects—tend to earn significantly higher salaries compared to their non-certified peers.

5. Builds a Strong Foundation

The Associate-level certification lays a solid foundation for more advanced AWS certifications like the AWS Solutions Architect – Professional, or specialty certifications in security, networking, and more.

Understanding the AWS Shared Responsibility Model

The AWS Shared Responsibility Model defines the division of security and compliance duties between AWS and the customer. AWS is responsible for “security of the cloud,” while customers are responsible for “security in the cloud.”

AWS handles the underlying infrastructure, including hardware, software, networking, and physical security of its data centers. This includes services like compute, storage, and database management at the infrastructure level.

On the other hand, customers are responsible for configuring their cloud resources securely. This includes managing data encryption, access controls (IAM), firewall settings, OS-level patches, and securing applications and workloads.

For example, while AWS secures the physical servers hosting an EC2 instance, the customer must secure the OS, apps, and data on that instance.

This model enables flexibility and scalability while ensuring that both parties play a role in protecting cloud environments. Understanding these boundaries is essential for compliance, governance, and secure cloud architecture.

Best Practices for AWS Solutions Architects

The role of an AWS Solutions Architect goes far beyond just designing cloud environments—it's about creating secure, scalable, cost-optimized, and high-performing architectures that align with business goals. To succeed in this role, following industry best practices is essential. Here are some of the top ones:

1. Design for Failure

Always assume that components can fail—and design resilient systems that recover gracefully.

Use Auto Scaling Groups, Elastic Load Balancers, and Multi-AZ deployments.

Implement circuit breakers, retries, and fallbacks to keep applications running.
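Retries and fallbacks from the list above can be sketched in a few lines of Python. This is a minimal sketch: the function names and default delays are illustrative assumptions, the backoff uses the "full jitter" variant (a random wait up to a capped exponential limit), and a circuit breaker, which stops calling a failing dependency for a cool-down period, is not shown. `sleep` and `rng` are injectable so the timing can be tested deterministically.

```python
import random
import time


def retry_with_backoff(operation, retryable=(Exception,), max_attempts=5,
                       base_delay=0.1, max_delay=5.0,
                       sleep=time.sleep, rng=random.random):
    """Call operation(), retrying retryable errors with capped exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retryable:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time in [0, min(max_delay, base_delay * 2**(attempt-1))).
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng() * cap)


def call_with_fallback(operation, fallback, **retry_options):
    """Try operation() with retries; if it still fails, return fallback() instead."""
    try:
        return retry_with_backoff(operation, **retry_options)
    except Exception:
        return fallback()
```

Jitter spreads retries from many clients over time, so a recovering dependency is not hit by synchronized bursts. AWS SDKs also ship their own configurable retry behavior, which is usually the first thing to tune.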

2. Embrace the Well-Architected Framework

Leverage AWS’s Well-Architected Framework, which is built around six pillars:

Operational Excellence

Security

Reliability

Performance Efficiency

Cost Optimization

Sustainability

Reviewing your architecture against these pillars helps ensure long-term success.

3. Prioritize Security

Security should be built in—not bolted on.

Use IAM roles and policies with the principle of least privilege.

Encrypt data at rest and in transit using KMS and TLS.

Implement VPC security, including network ACLs, security groups, and private subnets.
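To make least privilege concrete, here is a sketch that builds a narrowly scoped IAM policy for a hypothetical Lambda function that only reads objects from one S3 bucket and writes items to one DynamoDB table, plus a rough check that flags Allow statements with wildcard actions or resources. The bucket name, table ARN, and helper names are placeholders; CloudWatch Logs permissions (for example, via the AWSLambdaBasicExecutionRole managed policy) are left out.

```python
import json


def lambda_policy(source_bucket, table_arn):
    """Least-privilege policy: read one bucket's objects, write one table's items."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadSourceObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{source_bucket}/*",
            },
            {
                "Sid": "WriteItems",
                "Effect": "Allow",
                "Action": "dynamodb:PutItem",
                "Resource": table_arn,
            },
        ],
    }


def _as_list(value):
    return value if isinstance(value, list) else [value]


def broad_statements(policy):
    """Return the Sids of Allow statements that use '*' actions or resources."""
    flagged = []
    for stmt in policy["Statement"]:
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if any(a == "*" or a.endswith(":*") for a in actions) or "*" in resources:
            flagged.append(stmt.get("Sid", "<no Sid>"))
    return flagged


if __name__ == "__main__":
    # Print a policy document ready to paste into the IAM console.
    example = lambda_policy("my-uploads", "arn:aws:dynamodb:us-east-1:111122223333:table/my-table")
    print(json.dumps(example, indent=2))
```

A check like this is only a rough lint; IAM Access Analyzer policy validation is the more thorough option.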

4. Go Serverless When It Makes Sense

Serverless architecture using AWS Lambda, API Gateway, and DynamoDB can improve scalability and reduce operational overhead.

Ideal for event-driven workloads or microservices.

Reduces the need to manage infrastructure.

5. Optimize for Cost

Cost is a key consideration. Avoid over-provisioning.

Use AWS Cost Explorer and Trusted Advisor to monitor spend.

Choose spot instances or reserved instances when appropriate.

Right-size EC2 instances and consider using Savings Plans.

6. Monitor Everything

Build strong observability into your architecture.

Use Amazon CloudWatch, X-Ray, and CloudTrail for metrics, tracing, and auditing.

Set up alerts and dashboards to catch issues early.

Recovery Planning with AWS

Recovery planning in AWS ensures your applications and data can quickly bounce back after failures or disasters. AWS offers built-in tools like Amazon S3 for backups, AWS Backup, Amazon RDS snapshots, and Cross-Region Replication to support data durability. For more robust strategies, services like Elastic Disaster Recovery (AWS DRS) and CloudEndure enable near-zero downtime recovery. Use Auto Scaling, Multi-AZ, and multi-region deployments to enhance resilience. Regularly test recovery procedures using runbooks and chaos engineering. A solid recovery plan on AWS minimizes downtime, protects business continuity, and keeps operations running even during unexpected events.

Learn more: AWS Solutions Architect Associate


The Future of AWS Certified Developers: The Key Trends and Forecasts

Cloud computing has grown dramatically in recent years, and Amazon Web Services (AWS) continues to lead the field. With companies rapidly moving to the cloud, AWS certifications have become an ideal option for developers who want to grow their careers and earn higher pay. But as technology evolves, so do the expectations for AWS certified developers.

This article looks at what's in store for AWS Certified Developers, the key trends affecting the industry, and what developers can expect to see in the next few years.

1. Why Are AWS Certified Developers in Demand?

AWS powers many of the biggest companies, as well as startups and governments, across the world. Companies rely on AWS-certified experts to design and build cloud-based solutions effectively. Here is why AWS-certified developers are more valuable than ever:

Growth of Cloud Adoption - Businesses are shifting to the cloud, creating a greater need for AWS experts.

Security & Compliance - Companies require experts to protect their cloud infrastructures.

Serverless and Microservices - Modern application development relies on AWS services such as Lambda and ECS.

Cost Optimization - AWS developers help businesses optimize cloud expenditure and improve efficiency.

With these considerations in mind, let's look at the most important trends that will define the next generation of AWS Certified Developers.

2. Important Trends that Shape the Future of AWS Developers

2.1. The growth of AI & Machine Learning in AWS

AWS has made significant investments in Artificial Intelligence (AI) and Machine Learning (ML), with services such as Amazon SageMaker, Rekognition, and Lex. AWS-certified developers need to build their AI/ML knowledge to create more intelligent applications.

Prediction: AI and ML integration will become a key capability for AWS developers in 2025.

2.2. More widespread adoption of Serverless Computing

Serverless architecture reduces the need to manage infrastructure, making application development quicker and more efficient. AWS services such as AWS Lambda, API Gateway, and DynamoDB are accelerating the adoption of serverless computing.

Prediction: Serverless computing will become the dominant approach to cloud development, making AWS Lambda expertise a must-have for developers.

2.3. Multi-Cloud & Hybrid Cloud Strategies

While AWS is the top cloud provider, many companies are adopting a multi-cloud strategy that combines AWS with Microsoft Azure and Google Cloud. AWS-certified developers need to understand hybrid cloud environments and tools such as AWS Outposts and Google's Anthos.

Prediction: Developers who have multi-cloud expertise will enjoy an edge in jobs.

2.4. Growing Demand for Cloud Security & Compliance Experts

As cyber-attacks grow, businesses are placing a high priority on cloud security and compliance. AWS services such as AWS Shield, Amazon Macie, and AWS Security Hub are essential for protecting cloud environments.

Prediction: AWS security certifications (such as AWS Certified Security – Specialty) will become highly valuable as security threats to cloud computing increase.

2.5. Edge Computing & IoT Growth

The growth of Edge Computing and the Internet of Things (IoT) is changing industries such as automotive, healthcare, and manufacturing. AWS services such as AWS IoT Core and AWS IoT Greengrass are driving this change.

Prediction: AWS experts with IoT or Edge Computing expertise will be in high demand by 2026.

3. Skills and Certifications for Future AWS Developers

To stay ahead in the competitive AWS community, AWS developers need to constantly improve their skills. Here are the most sought-after skills and certifications:

In response to these trends, many developers are turning to comprehensive training like an AWS Developer Course to sharpen their skills and stay relevant.

Essential AWS Skills:

AI & Machine Learning - Use Amazon SageMaker and Rekognition.

Serverless Architecture - Master AWS Lambda and API Gateway.

Cloud Security and Compliance - Learn about IAM, Security Hub, and AWS Shield.

Multi-Cloud and Hybrid Cloud - Gain experience with Azure, Google Cloud, and AWS hybrid solutions.

DevOps & Automation - Use AWS CodePipeline, CloudFormation, and Terraform.

AWS Certifications to Consider:

AWS Certified Developer – Associate (for software developers who build on AWS)

AWS Certified Solutions Architect – Associate (for cloud solution design)

AWS Certified DevOps Engineer – Professional (for automation and CI/CD)

AWS Certified Security – Specialty (for cloud security experts)

4. Career and Job Market Opportunities for AWS Developers

AWS developers are highly compensated professionals, earning between $100,000 and $150,000 per year depending on experience and location. Some of the most sought-after roles for AWS-certified developers include:

Cloud Developer - Creates cloud-based applications using AWS services.

DevOps Engineer - Manages CI/CD pipelines and cloud automation.

Cloud Security Engineer - Specializes in AWS security and compliance.

IoT Developer - Works with AWS IoT and Edge Computing solutions.

Big Data Engineer - Handles AWS data analytics and Machine Learning solutions.

As cloud adoption continues to grow, AWS-certified professionals will find a wide range of career options across many sectors.

5. How Can You Keep Up as an AWS Developer?

To succeed in the constantly changing AWS environment, developers should:

Stay Up to Date - Follow AWS blogs, take part in webinars, and explore the latest AWS features.

Build Projects - Hands-on experience is essential, so build real-world AWS applications.

Join AWS Communities - Participate in forums such as AWS re:Post and attend AWS events.

Earn Certifications - Keep upgrading your capabilities by earning AWS certifications.

Explore AI and Serverless Technology - Stay up to date with the latest trends in AI/ML and serverless.

The most important factor in succeeding as an AWS Certified Developer is continuous learning and adapting to the latest cloud technology.

Final Thoughts

The future for AWS Certified Developers is bright and full of potential. With developments such as AI, serverless computing, multi-cloud, and cloud security shaping the market, AWS professionals must stay current and keep learning.

If you're an aspiring AWS developer, now is the perfect time to get AWS certified and explore different career options.
