#aws lambda configuration


AWS Lambda Compute Service Tutorial for Amazon Cloud Developers

Full Video Link - https://youtube.com/shorts/QmQOWR_aiNI Hi, a new #video #tutorial on #aws #lambda #awslambda is published on #codeonedigest #youtube channel. @java @awscloud @AWSCloudIndia @YouTube #youtube @codeonedigest #codeonedigest #aws #amaz

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. These events may include changes in state such as a user placing an item in a shopping cart on an ecommerce website. AWS Lambda automatically runs code in response to multiple events, such as HTTP requests via Amazon API Gateway, modifications…
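To make the event-driven model concrete, here is a minimal sketch of a Python Lambda function handling an HTTP request that arrives through an Amazon API Gateway proxy integration. The `item` field and the response message are hypothetical and chosen only to mirror the shopping-cart example; a real function would depend on your own event shape.

```python
import json


def lambda_handler(event, context):
    """Handle an API Gateway proxy event and return an HTTP-style response."""
    # With proxy integration the request body arrives as a JSON string (or None).
    body = json.loads(event.get("body") or "{}")
    item = body.get("item", "unknown")  # "item" is a hypothetical request field

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Added {item} to cart"}),
    }
```

Lambda calls `lambda_handler` once per event, so the function holds no server or loop of its own; scaling and provisioning are left to the service.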




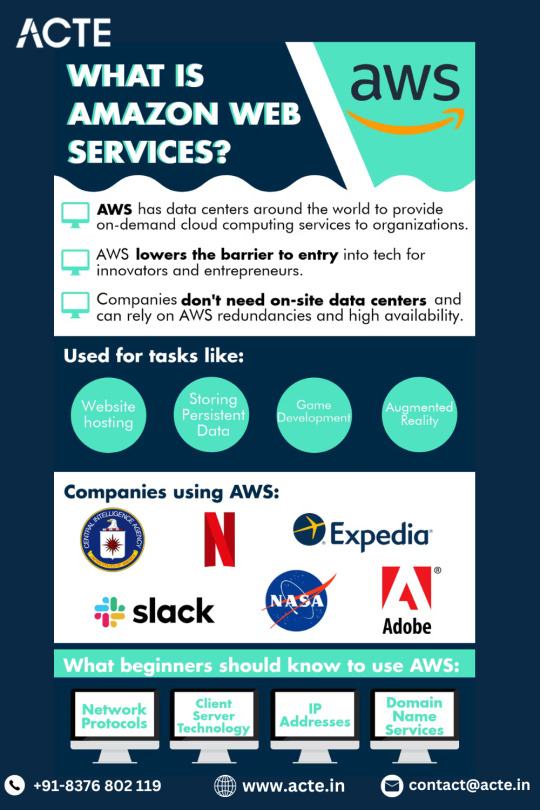

Level up your cloud skills with this AWS course: The ultimate guide to mastering Amazon Web Services!

Are you fascinated by the world of cloud computing? Do you want to enhance your skills and become a proficient Amazon Web Services (AWS) professional? Look no further! This comprehensive guide will walk you through the essential aspects of AWS education and training, providing you with the tools you need to excel in this rapidly evolving field. Whether you are a novice starting from scratch or an experienced IT professional aiming for career advancement, this AWS course will equip you with the knowledge and expertise to navigate the cloud with confidence.

Education: Building Blocks for Success

Understanding the Basics of Cloud Computing

Before diving into the intricacies of Amazon Web Services, it is crucial to comprehend the fundamentals of cloud computing. Explore the concept of virtualization, where physical resources are abstracted into virtual instances, allowing for greater efficiency, scalability, and flexibility. Familiarize yourself with key terms such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). By understanding these building blocks, you will be prepared to harness the full power of AWS.

Navigating the AWS Management Console

The AWS Management Console is your gateway to the vast array of services provided by Amazon Web Services. Acquaint yourself with this user-friendly interface as we walk you through the various components and functionalities it offers. From launching virtual servers to configuring security settings, you will gain a solid foundation in managing AWS resources effectively.

Essential AWS Services to Master

AWS boasts a vast ecosystem of services, each designed to address specific computing needs. We will explore some of the core services that form the backbone of AWS, including Amazon Elastic Compute Cloud (EC2), Amazon Simple Storage Service (S3), Amazon Relational Database Service (RDS), and AWS Lambda. Delve into the intricacies of these services and discover how they can revolutionize your cloud-based projects.

Guide to Start AWS Training

Choosing the Right Training Path

Embarking on your AWS training journey requires careful consideration of your individual goals and learning preferences. Evaluate the various training options available, such as self-paced online courses, instructor-led virtual classrooms, or hands-on workshops. Analyze your resources, time constraints, and preferred learning style to select the training path that aligns best with your needs.

Structured Learning Approach

Mastering AWS necessitates a systematic and well-structured approach. Discover the importance of laying a strong foundation by beginning with the AWS Certified Cloud Practitioner exam. From there, progress to more specialized certifications such as AWS Certified Solutions Architect, AWS Certified Developer, or AWS Certified SysOps Administrator. Each certification builds upon the previous one, creating a comprehensive knowledge base that will set you apart in this competitive industry.

Hands-On Experience and Real-World Projects

Theory alone is insufficient when it comes to mastering AWS. Develop your practical skills by engaging in hands-on labs and real-world projects that simulate the challenges you are likely to encounter in the field. Dive into deploying applications, configuring scalable infrastructure, and troubleshooting common issues. By actively applying your knowledge, you will solidify your understanding and gain invaluable experience.

Placement: Unlocking Opportunities

Leveraging AWS Certification in the Job Market

AWS certifications have emerged as a gold standard in the realm of cloud computing. Employers actively seek professionals who possess the skills and credentials to navigate and optimize AWS environments. Discover how an AWS certification can significantly enhance your employability, propel your career trajectory, and open doors to exciting job opportunities in a wide range of industries.

Showcasing your AWS Expertise

Once you have acquired the necessary skills and certifications, it is essential to effectively showcase your AWS expertise. Craft a captivating resume highlighting your AWS experiences and projects. Create a strong online presence through platforms like LinkedIn and GitHub, demonstrating your proficiency to potential employers. Actively participate in AWS forums and communities, exchanging knowledge and insights with fellow professionals and building a robust network.

Continuous Learning and Growth

AWS is a rapidly evolving platform, constantly introducing new services, features, and updates. Stay ahead of the curve by fostering a mindset of continuous learning and growth. Engage in ongoing professional development, attend AWS conferences and webinars, and subscribe to relevant industry publications. By embracing a culture of continuous improvement, you will remain at the forefront of AWS innovation and unlock limitless possibilities for advancement.

Embarking on the journey to master AWS through the AWS course at ACTE institute can be both exciting and challenging. However, armed with comprehensive education, a well-structured training approach, and a clear placement plan, you can level up your cloud skills and unlock a world of opportunities. Start your AWS training today, and transcend the realms of cloud computing like never before!


AWS DevOps Training: A Pathway to Cloud Mastery and Career Growth

In today's cloud-driven digital landscape, organizations are adopting agile methodologies and cloud infrastructure to stay competitive. As a result, cloud platform specialists and DevOps professionals are in high demand. AWS DevOps Training addresses this gap by teaching how to automate processes, shorten deployment lifecycles, and run scalable applications on Amazon Web Services (AWS).

What is AWS DevOps Training?

AWS DevOps Training is a program that combines the breadth of AWS with DevOps practices. The training equips students with the technical competence to create, deploy, and manage cloud applications in an agile, automated environment. It emphasizes key DevOps practices such as Continuous Integration and Continuous Deployment (CI/CD), Infrastructure as Code (IaC), configuration management, monitoring, and security. Training also covers popular tools like AWS CodePipeline, AWS CloudFormation, Docker, Jenkins, Git, and Terraform.

Whether you're an IT operations expert, system administrator, or developer, AWS DevOps Training equips you with the understanding and skills to automate cloud infrastructure and achieve repeatable, reproducible deployments.

Why is AWS DevOps Training Important?

Adopting DevOps in cloud environments has changed how organizations deploy and deliver software. As a leading cloud provider, AWS offers a wide range of services suited to DevOps. AWS DevOps Training teaches you to use these services to:

Automate repetitive tasks and reduce deployment failures

Increase the speed and frequency of software releases

Improve system reliability and scalability

Maximize cross-functional collaboration between development and operations teams

Automate security and compliance across all phases of deployment

Companies today increasingly rely on DevOps-based processes, and certified, AWS DevOps-trained professionals are in high demand across the finance, healthcare, e-commerce, and IT industries.

Who Should Take AWS DevOps Training?

AWS DevOps Training is best suited for:

Software developers looking to automate deployment pipelines

System administrators who want to transition from on-premises environments to cloud environments

DevOps engineers interested in enhancing their AWS skills

Cloud architects and consultants managing hybrid or multi-cloud systems

Anyone preparing for the AWS Certified DevOps Engineer – Professional exam

The course often includes hands-on labs, case studies, real-time projects, and certification guidance, making it practical and results-driven.

Key Skills You’ll Gain from AWS DevOps Training

At the end of the training, students are expected to be proficient in skills like:

Building and maintaining CI/CD pipelines with AWS tools

Automating infrastructure provisioning with AWS CloudFormation and Terraform

Monitoring cloud deployments with CloudWatch and AWS X-Ray

Managing application deployments using ECS, EKS, and Lambda

Implementing secure access using IAM roles and policies

Combining these in-demand skills not only strengthens your technical resume but also makes you a valuable asset to any cloud-driven business.

Certification and Career Opportunities

Completing AWS DevOps Training is a valuable step toward achieving the AWS Certified DevOps Engineer – Professional certification. This certification validates your expertise in release automation and application management on AWS. It is industry-recognized and often leads to roles like:

DevOps Engineer

Cloud Engineer

Site Reliability Engineer (SRE)

Infrastructure Automation Specialist

Cloud Solutions Architect

As more companies move their operations to AWS, certified professionals are well positioned for competitive salaries, job security, and career growth worldwide.

Conclusion

With the fast pace of digital transformation in a globalised economy, DevOps and cloud expertise are no longer optional; they are a necessity. AWS DevOps Training blends agile techniques with cloud computing, giving professionals the tools and skills required to thrive in the new world of IT. Whether you are starting your cloud journey or extending your DevOps skills, the training opens new avenues to innovation, leadership, and professional achievement.


Driving Innovation with AWS Cloud Development Tools

Amazon Web Services (AWS) has established itself as a leader in cloud computing, providing businesses with a comprehensive suite of services to build, deploy, and manage applications at scale. Among its most impactful offerings are AWS cloud development tools, which enable developers to optimize workflows, automate processes, and accelerate innovation. These tools are indispensable for creating scalable, secure, and reliable cloud-native applications across various industries.

The Importance of AWS Cloud Development Tools

Modern application development demands agility, automation, and seamless collaboration. AWS cloud development tools deliver the infrastructure, services, and integrations required to support the entire software development lifecycle (SDLC)—from coding and testing to deployment and monitoring. Whether catering to startups or large enterprises, these tools reduce manual effort, expedite releases, and uphold best practices in DevOps and cloud-native development.

Key AWS Development Tools

Here is an overview of some widely utilized AWS cloud development tools and their core functionalities:

1. AWS Cloud9

AWS Cloud9 is a cloud-based integrated development environment (IDE) that enables developers to write, run, and debug code directly in their browser. Pre-configured with essential tools, it supports multiple programming languages such as JavaScript, Python, and PHP. By eliminating the need for local development environments, Cloud9 facilitates real-time collaboration and streamlines workflows.

2. AWS CodeCommit

AWS CodeCommit is a fully managed source control service designed to securely host Git-based repositories. It offers features such as version control, fine-grained access management through AWS Identity and Access Management (IAM), and seamless integration with other AWS services, making it a robust option for collaborative development.

3. AWS CodeBuild

AWS CodeBuild automates key development tasks, including compiling source code, running tests, and producing deployment-ready packages. This fully managed service removes the need to maintain build servers, automatically scales resources, and integrates with CodePipeline along with other CI/CD tools, streamlining the build process.

4. AWS CodeDeploy

AWS CodeDeploy automates the deployment of code to Amazon EC2 instances, AWS Lambda, and even on-premises servers. By minimizing downtime, providing deployment tracking, and ensuring safe rollbacks in case of issues, CodeDeploy simplifies and secures the deployment process.

5. AWS CodePipeline

AWS CodePipeline is a fully managed continuous integration and continuous delivery (CI/CD) service that automates the build, test, and deployment stages of the software development lifecycle. It supports integration with third-party tools, such as GitHub and Jenkins, to provide enhanced flexibility and seamless workflows.

6. AWS CDK (Cloud Development Kit)

The AWS Cloud Development Kit allows developers to define cloud infrastructure using familiar programming languages including TypeScript, Python, Java, and C#. By simplifying Infrastructure as Code (IaC), AWS CDK makes provisioning AWS resources more intuitive and easier to maintain.

7. AWS X-Ray

AWS X-Ray assists developers in analyzing and debugging distributed applications by offering comprehensive insights into request behavior, error rates, and system performance bottlenecks. This tool is particularly valuable for applications leveraging microservices-based architectures.

Benefits of Using AWS Development Tools

Scalability: Effortlessly scale development and deployment operations to align with the growth of your applications.

Efficiency: Accelerate the software development lifecycle with automation and integrated workflows.

Security: Utilize built-in security features and IAM controls to safeguard your code and infrastructure.

Cost-Effectiveness: Optimize resources and leverage pay-as-you-go pricing to manage costs effectively.

Innovation: Focus on developing innovative features and solutions without the burden of managing infrastructure.

Conclusion

AWS development tools offer a robust, flexible, and secure foundation for building modern cloud-native applications. Covering every stage of development, from coding to deployment and monitoring, these tools empower organizations to innovate confidently, deliver software faster, and maintain a competitive edge in today’s dynamic digital environment. By leveraging this comprehensive toolset, businesses can streamline operations and enhance their ability to meet evolving challenges with agility.


Cloud Cost Optimization Strategies to Scale Without Wasting Resources

As startups and enterprises increasingly move to the cloud, one issue continues to surface: unexpectedly high cloud bills. While cloud platforms offer incredible scalability and flexibility, without proper optimization, costs can spiral out of control—especially for fast-growing businesses.

This guide breaks down proven cloud cost optimization strategies to help your company scale sustainably while keeping expenses in check. At Salzen Cloud, we specialize in helping teams optimize cloud usage without sacrificing performance or security.

💡 Why Cloud Cost Optimization Is Crucial

When you first migrate to the cloud, costs may seem manageable. But as your application usage grows, so do compute instances, storage, and data transfer costs. Before long, you’re spending thousands on idle resources, over-provisioned servers, or unused services.

Effective cost optimization enables you to:

🚀 Scale operations without financial waste

📈 Improve ROI on cloud investments

🛡️ Maintain agility while staying within budget

🧰 Top Strategies to Optimize Cloud Costs

Here are the key techniques we use at Salzen Cloud to help clients control and reduce cloud spend:

1. 📊 Right-Size Your Resources

Start by analyzing resource usage. Are you running t3.large instances when t3.medium would do? Are dev environments left running after hours?

Use tools like:

AWS Cost Explorer

Azure Advisor

Google Cloud Recommender

These tools analyze usage patterns and recommend right-sized instances, storage classes, and networking configurations.

2. 💤 Turn Off Idle Resources

Development, testing, or staging environments often run 24/7 unnecessarily. Schedule them to shut down after work hours or when not in use.

Implement automation with:

Lambda scripts or Azure Automation

Instance Scheduler on AWS

Scheduled scaling actions defined in Terraform (for example, the AWS provider's aws_autoscaling_schedule resource)
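As a rough illustration of the "Lambda script" option, the sketch below stops running EC2 instances that carry a given tag. It assumes boto3 (which the text does not name) and a hypothetical `Environment=dev` tagging convention. It also reads only the first page of `describe_instances` results, so a large fleet would need pagination.

```python
def stop_tagged_instances(ec2_client, tag_key="Environment", tag_value="dev"):
    """Stop every running EC2 instance carrying the given tag; return their IDs."""
    response = ec2_client.describe_instances(
        Filters=[
            {"Name": f"tag:{tag_key}", "Values": [tag_value]},
            {"Name": "instance-state-name", "Values": ["running"]},
        ]
    )
    instance_ids = [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]
    if instance_ids:  # stop_instances rejects an empty ID list
        ec2_client.stop_instances(InstanceIds=instance_ids)
    return instance_ids


def lambda_handler(event, context):
    """Entry point for a scheduled invocation (for example, an evening schedule)."""
    import boto3  # bundled with the Lambda Python runtime

    return {"stopped": stop_tagged_instances(boto3.client("ec2"))}
```

Passing the client in as a parameter keeps the stopping logic testable without AWS credentials. The schedule itself (for instance, every weekday evening) would be configured outside this code.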

3. 💼 Use Reserved or Spot Instances

Cloud providers offer deep discounts for reserved or spot instances. Use:

Reserved Instances for predictable workloads (up to 72% savings)

Spot Instances for fault-tolerant or batch jobs (up to 90% savings)

At Salzen Cloud, we help businesses forecast and reserve the right resources to save long-term.
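To put the quoted discounts in perspective, here is a small arithmetic sketch. The hourly rate is a made-up placeholder, not a real AWS price, and the 72% and 90% figures are the "up to" best cases from the list above, so actual savings will usually be lower.

```python
HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months


def monthly_cost(hourly_rate, discount=0.0, hours=HOURS_PER_MONTH):
    """Monthly cost of one instance after applying a fractional discount."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return hourly_rate * hours * (1.0 - discount)


on_demand_rate = 0.10  # hypothetical USD per hour

on_demand = monthly_cost(on_demand_rate)
reserved_best_case = monthly_cost(on_demand_rate, discount=0.72)
spot_best_case = monthly_cost(on_demand_rate, discount=0.90)

print(f"On-demand:            ${on_demand:.2f}")
print(f"Reserved (best case): ${reserved_best_case:.2f}")
print(f"Spot (best case):     ${spot_best_case:.2f}")
```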

4. 📦 Leverage Autoscaling and Load Balancers

Autoscaling allows your application to scale up/down based on traffic, avoiding overprovisioning.

Pair this with intelligent load balancing to distribute traffic efficiently and prevent unnecessary compute usage.
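The idea behind scaling on a target metric can be sketched with a simple proportional rule: size the fleet so that the per-instance metric lands near the target. This is a simplified model under assumed parameter names, not how any specific autoscaler is implemented; real services add cooldowns, smoothing, and warm-up handling.

```python
import math


def desired_capacity(current, metric_value, target_value, min_size=1, max_size=10):
    """Proportional scaling: pick a fleet size that brings the metric near the target."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    raw = math.ceil(current * metric_value / target_value)
    # Clamp to the configured bounds so traffic spikes cannot scale without limit.
    return max(min_size, min(max_size, raw))
```

For example, four instances averaging 90% CPU against a 60% target would scale out to six, while the same fleet at 15% CPU would scale in to one.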

5. 🧹 Clean Up Unused Resources

It’s common to forget about:

Unattached storage volumes (EBS, persistent disks)

Idle elastic IPs

Old snapshots or backups

Unused services (e.g., unused databases or functions)

Set monthly audits to remove or archive unused resources.

6. 🔍 Monitor Usage and Set Budgets

Implement detailed billing dashboards using:

AWS Budgets and Cost Anomaly Detection

Azure Cost Management

GCP Billing Reports

Set up alerts when costs approach defined thresholds. Salzen Cloud helps configure proactive cost monitoring dashboards for clients using real-time metrics.

7. 🏷️ Implement Tagging and Resource Management

Tag all resources by:

Environment (prod, dev, staging)

Department (engineering, marketing)

Owner or team

This makes it easier to track, allocate, and reduce costs effectively.
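Once resources are tagged, allocating spend is a simple grouping exercise. The sketch below uses a hypothetical in-memory inventory; in practice the records would come from a billing export or cost API, and the field names here are assumptions.

```python
from collections import defaultdict


def cost_by_tag(resources, tag_key):
    """Sum monthly cost per value of tag_key; untagged resources are grouped together."""
    totals = defaultdict(float)
    for resource in resources:
        tag_value = resource.get("tags", {}).get(tag_key, "untagged")
        totals[tag_value] += resource["monthly_cost"]
    return dict(totals)


resources = [  # hypothetical inventory
    {"id": "i-0a1", "monthly_cost": 120.0, "tags": {"Environment": "prod", "Owner": "platform"}},
    {"id": "i-0b2", "monthly_cost": 50.0, "tags": {"Environment": "prod", "Owner": "data"}},
    {"id": "i-0c3", "monthly_cost": 30.0, "tags": {"Environment": "dev", "Owner": "data"}},
    {"id": "vol-0d4", "monthly_cost": 12.5, "tags": {}},
]

print(cost_by_tag(resources, "Environment"))
print(cost_by_tag(resources, "Owner"))
```

The "untagged" bucket is worth watching: it shows how much spend cannot yet be attributed to an environment, department, or owner.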

8. 🔐 Optimize Storage Tiers

Move rarely accessed data to cheaper storage classes:

Amazon S3 Glacier / S3 Standard-Infrequent Access

Azure Cool / Archive Tier

GCP Nearline / Coldline

Always evaluate storage lifecycle policies to automate this process.
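For S3, a lifecycle policy of this kind might look like the sketch below, which builds a configuration that moves objects to Standard-IA and then to Glacier. The prefix, the day thresholds, and the function name are assumptions for illustration; choose values based on your own access patterns.

```python
def lifecycle_configuration(prefix, ia_after_days=30, glacier_after_days=90):
    """Build an S3 lifecycle configuration that tiers objects under prefix to cheaper storage."""
    if glacier_after_days <= ia_after_days:
        raise ValueError("glacier_after_days must be greater than ia_after_days")
    return {
        "Rules": [
            {
                "ID": f"tier-down-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = lifecycle_configuration("logs/")
# Applying it with boto3 (assumed installed, bucket name is a placeholder):
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-bucket-name", LifecycleConfiguration=config)
```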

⚙️ Salzen Cloud’s Approach to Smart Scaling

At Salzen Cloud, we take a holistic view of cloud cost optimization:

Automated audits and policy enforcement using Terraform, Kubernetes, and cloud-native tools

Cost dashboards integrated into CI/CD pipelines

Real-time alerts for overprovisioning or anomalous usage

Proactive savings plan strategies based on workload trends

Our team works closely with engineering and finance teams to ensure visibility, accountability, and savings at every level.

🚀 Final Thoughts

Cloud spending doesn’t have to be unpredictable. With a strategic approach, your startup or enterprise can scale confidently, innovate quickly, and spend smartly. The key is visibility, automation, and continuous refinement.

Let Salzen Cloud help you cut cloud costs—not performance.


AWS Introduces AWS MCP Servers for Serverless, ECS, & EKS

MCP AWS server

The AWS Labs GitHub repository now includes Model Context Protocol (MCP) servers for AWS Serverless, Amazon Elastic Container Service (Amazon ECS), and Amazon Elastic Kubernetes Service (Amazon EKS). These open-source servers give AI development assistants real-time, contextual responses that go beyond their pre-trained knowledge. While the Large Language Models (LLMs) behind AI assistants rely on public documentation, MCP servers provide current context and service-specific information that helps you avoid deployment issues and improve service interactions.

These open-source solutions can help you design and deploy apps faster by using Amazon Web Services (AWS) features and configurations. The MCP servers give AI code assistants a deep understanding of Amazon ECS, Amazon EKS, and AWS Serverless capabilities, shortening the path from code to production whether you are working in your IDE or debugging production issues. They integrate with popular AI-enabled IDEs and with tools such as Amazon Q Developer on the command line, letting you design and deploy apps using natural language commands.

What each specialised MCP server does:

With the Amazon ECS MCP Server, applications can be containerised and deployed quickly. It helps configure AWS networking, load balancers, auto-scaling, task definitions, monitoring, and services. Using natural language, you can troubleshoot deployment problems in real time, manage cluster operations, and apply auto-scaling.

The Amazon EKS MCP Server gives AI assistants contextual, up-to-date information about Kubernetes environments on EKS. By providing the latest EKS features, a knowledge base, and cluster state data, it enables AI code assistants to give more precise, customised help throughout the application lifecycle.

The AWS Serverless MCP Server enhances serverless development by giving AI coding assistants knowledge of AWS services, serverless patterns, and best practices. It integrates with the AWS Serverless Application Model Command Line Interface (AWS SAM CLI) to manage events and deploy infrastructure using proven architectural patterns, which streamlines function lifecycles, service integrations, and operational requirements. It also advises on event structures, AWS Lambda best practices, and code.

See the AWS Labs GitHub repository for installation instructions, example configurations, and other specialised servers, such as those for Amazon Bedrock Knowledge Bases retrieval and AWS Lambda function transformation.

AWS MCP server operation

Giving Context: The MCP servers give AI assistants current context and knowledge about specific AWS capabilities, configurations, and even your environment (such as the EKS cluster state), so the assistant does not have to rely on broad or outdated knowledge. This is crucial for more accurate service interactions and fewer deployment errors.

They give AI code assistants a deep understanding of AWS Serverless, ECS, and EKS. This allows the AI to make more accurate and tailored recommendations at every stage, from writing code to resolving production issues.

The servers allow developers to build and deploy apps with natural language commands through AI-enabled IDEs and tools like Amazon Q Developer on the command line. After processing the natural language query, the AI assistant can use the relevant MCP server to get context or carry out tasks.

Aiding Troubleshooting and Service Actions: Each server provides tools and functionality for its AWS service. For example:

The Amazon ECS MCP Server helps configure load balancers and auto-scaling. Real-time debugging tools like fetch_task_logs help the AI assistant diagnose issues raised in natural language queries.

The Amazon EKS MCP Server provides cluster status data and utilities like search_eks_troubleshoot_guide to fix EKS issues and generate_app_manifests to generate Kubernetes application manifests.

In addition to providing context on serverless patterns, best practices, infrastructure as code decisions, and event schemas, the AWS Serverless MCP Server communicates with the AWS SAM CLI. It can, for example, help the AI assistant discover best practices and architectural requirements.

An AI assistant like Amazon Q can communicate with the appropriate AWS MCP server when it receives ECS, EKS, or Serverless development or deployment questions. The server can invoke service-specific tools or provide specialised, current, or real-time information to help the AI assistant reply more effectively and accurately. This connection shortens the path from code to production.



Integrating ROSA Applications with AWS Services (CS221)

As cloud-native architectures become the backbone of modern application deployments, combining the power of Red Hat OpenShift Service on AWS (ROSA) with native AWS services unlocks immense value for developers and DevOps teams alike. In this blog post, we explore how to integrate ROSA-hosted applications with AWS services to build scalable, secure, and cloud-optimized solutions — a key skill set emphasized in the CS221 course.

🚀 What is ROSA?

Red Hat OpenShift Service on AWS (ROSA) is a managed OpenShift platform that runs natively on AWS. It allows organizations to deploy Kubernetes-based applications while leveraging the scalability and global reach of AWS, without managing the underlying infrastructure.

With ROSA, you get:

Fully managed OpenShift clusters

Integration with AWS IAM and billing

Access to AWS services like RDS, S3, DynamoDB, Lambda, etc.

Native CI/CD, container orchestration, and operator support

🧩 Why Integrate ROSA with AWS Services?

ROSA applications often need to interact with services like:

Amazon S3 for object storage

Amazon RDS or DynamoDB for database integration

Amazon SNS/SQS for messaging and queuing

AWS Secrets Manager or SSM Parameter Store for secrets management

Amazon CloudWatch for monitoring and logging

Integration enhances your application’s:

Scalability — Offload data, caching, messaging to AWS-native services

Security — Use IAM roles and policies for fine-grained access control

Resilience — Rely on AWS SLAs for critical components

Observability — Monitor and trace hybrid workloads via CloudWatch and X-Ray

🔐 IAM and Permissions: Secure Integration First

A crucial part of ROSA-AWS integration is managing IAM roles and policies securely.

Steps:

Create IAM Roles for Service Accounts (IRSA):

ROSA supports IAM Roles for Service Accounts, allowing pods to securely access AWS services without hardcoding credentials.

Attach IAM Policy to the Role:

Example: An application that uploads files to S3 will need the following permissions:

{ "Effect": "Allow", "Action": ["s3:PutObject", "s3:GetObject"], "Resource": "arn:aws:s3:::my-bucket-name/*" }

Annotate OpenShift Service Account:

Use oc annotate to associate your OpenShift service account with the IAM role.
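The permissions shown in the policy step above are a single statement; a complete IAM policy document wraps statements in a `Version` and `Statement` envelope. A minimal sketch of generating that document follows. The function name is hypothetical, and the bucket name is the placeholder from the example.

```python
import json


def s3_upload_policy(bucket_name):
    """Return a full IAM policy document allowing object reads and writes in one bucket."""
    statement = {
        "Effect": "Allow",
        "Action": ["s3:PutObject", "s3:GetObject"],
        # Object-level actions apply to keys inside the bucket, hence the trailing /*.
        "Resource": f"arn:aws:s3:::{bucket_name}/*",
    }
    return {"Version": "2012-10-17", "Statement": [statement]}


print(json.dumps(s3_upload_policy("my-bucket-name"), indent=2))
```

Scoping `Resource` to one bucket keeps the role's access as narrow as the application needs.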

📦 Common Integration Use Cases

1. Storing App Logs in S3

Use a Fluentd or Loki pipeline to export logs from OpenShift to Amazon S3.

2. Connecting ROSA Apps to RDS

Applications can use standard drivers (PostgreSQL, MySQL) to connect to RDS endpoints — make sure to configure VPC and security groups appropriately.

3. Triggering AWS Lambda from ROSA

Set up an API Gateway or SNS topic to allow OpenShift applications to invoke serverless functions in AWS for batch processing or asynchronous tasks.

4. Using AWS Secrets Manager

Mount secrets securely in pods using CSI drivers or inject them using operators.

🛠 Hands-On Example: Accessing S3 from ROSA Pod

Here’s a quick walkthrough:

Create an IAM Role with S3 permissions.

Associate the role with a Kubernetes service account.

Deploy your pod using that service account.

Use AWS SDK (e.g., boto3 for Python) inside your app to access S3.

oc create sa s3-access
oc annotate sa s3-access eks.amazonaws.com/role-arn=arn:aws:iam::<account-id>:role/S3AccessRole

Then reference s3-access in your pod’s YAML.
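On the application side, the boto3 step of the walkthrough could look roughly like the sketch below. The bucket name, object key, and function names are placeholders. It assumes boto3 is installed in the container image and that the pod runs under the annotated service account, so the SDK's default credential chain can find the role without any keys in the code.

```python
def upload_text(s3_client, bucket, key, text):
    """Upload a UTF-8 string as an S3 object and return its s3:// URI."""
    s3_client.put_object(Bucket=bucket, Key=key, Body=text.encode("utf-8"))
    return f"s3://{bucket}/{key}"


def main():
    """Run inside the pod; not called automatically in this sketch."""
    import boto3  # assumed to be present in the container image

    # No access keys are passed: credentials come from the IAM role
    # associated with the pod's service account.
    s3 = boto3.client("s3")
    print(upload_text(s3, "my-bucket-name", "hello.txt", "hello from ROSA"))
```

Call `main()` from your application's entry point. If the upload fails with an access-denied error, the usual suspects are the service account annotation and the role's policy.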

📚 ROSA CS221 Course Highlights

The CS221 course from Red Hat focuses on:

Configuring service accounts and roles

Setting up secure access to AWS services

Using OpenShift tools and operators to manage external integrations

Best practices for hybrid cloud observability and logging

It’s a great choice for developers, cloud engineers, and architects aiming to harness the full potential of ROSA + AWS.

✅ Final Thoughts

Integrating ROSA with AWS services enables teams to build robust, cloud-native applications using best-in-class tools from both Red Hat and AWS. Whether it's persistent storage, messaging, serverless computing, or monitoring — AWS services complement ROSA perfectly.

Mastering these integrations through real-world use cases or formal training (like CS221) can significantly uplift your DevOps capabilities in hybrid cloud environments.

Looking to Learn or Deploy ROSA with AWS?

HawkStack Technologies offers hands-on training, consulting, and ROSA deployment support. For more details, visit www.hawkstack.com


Serverless vs. Containers: Which Cloud Computing Model Should You Use?

In today’s cloud-driven world, businesses are building and deploying applications faster than ever before. Two of the most popular technologies empowering this transformation are Serverless computing and Containers. While both offer flexibility, scalability, and efficiency, they serve different purposes and excel in different scenarios.

If you're wondering whether to choose Serverless or Containers for your next project, this blog will break down the pros, cons, and use cases—helping you make an informed, strategic decision.

What Is Serverless Computing?

Serverless computing is a cloud-native execution model where cloud providers manage the infrastructure, provisioning, and scaling automatically. Developers simply upload their code as functions and define triggers, while the cloud handles the rest.

Key Features of Serverless:

No infrastructure management

Event-driven architecture

Automatic scaling

Pay-per-execution pricing model

Popular Platforms:

AWS Lambda

Google Cloud Functions

Azure Functions

What Are Containers?

Containers package an application along with its dependencies and libraries into a single unit. This ensures consistent performance across environments and supports microservices architecture.

Containers are orchestrated using tools like Kubernetes or Docker Swarm to ensure availability, scalability, and automation.

Key Features of Containers:

Full control over runtime and OS

Environment consistency

Portability across platforms

Ideal for complex or long-running applications

Popular Tools:

Docker

Kubernetes

Podman

Serverless vs. Containers: Head-to-Head Comparison

| Feature | Serverless | Containers |
| --- | --- | --- |
| Use Case | Event-driven, short-lived functions | Complex, long-running applications |
| Scalability | Auto-scales instantly | Requires orchestration (e.g., Kubernetes) |
| Startup Time | Cold starts possible | Faster if container is pre-warmed |
| Pricing Model | Pay-per-use (per invocation) | Pay-per-resource (CPU/RAM) |
| Management | Fully managed by provider | Requires DevOps team or automation setup |
| Vendor Lock-In | High (platform-specific) | Low (containers run anywhere) |
| Runtime Flexibility | Limited runtimes supported | Any language, any framework |

When to Use Serverless

Best For:

Lightweight APIs

Scheduled jobs (e.g., cron)

Real-time processing (e.g., image uploads, IoT)

Backend logic in JAMstack websites

Advantages:

Faster time-to-market

Minimal ops overhead

Highly cost-effective for sporadic workloads

Simplifies event-driven architecture

Limitations:

Cold start latency

Limited execution time (e.g., 15 mins on AWS Lambda)

Difficult for complex or stateful workflows
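One common way to live with an execution time limit is to stop shortly before the deadline and hand back whatever work remains. The sketch below relies on `get_remaining_time_in_millis()`, which the AWS Lambda context object provides for Python functions. `FakeContext`, `process_item`, the `items` event field, and the safety margin are all hypothetical stand-ins so the idea can be tried locally.

```python
import time


class FakeContext:
    """Local stand-in for the platform's context object (illustrative only)."""

    def __init__(self, budget_ms):
        self._deadline = time.monotonic() + budget_ms / 1000

    def get_remaining_time_in_millis(self):
        return max(0, int((self._deadline - time.monotonic()) * 1000))


def process_item(item):
    # Placeholder for real work.
    return item * 2


def handler(event, context, safety_margin_ms=50):
    """Process items until the time budget is nearly spent, then stop."""
    items = list(event["items"])
    done = []
    while items and context.get_remaining_time_in_millis() > safety_margin_ms:
        done.append(process_item(items.pop(0)))
    # Unprocessed items are returned so a follow-up invocation can resume.
    return {"done": done, "remaining": items}
```

How the remaining items get re-queued (a queue message, a workflow step, a new invocation) is left open here, since that choice depends on the platform and workload.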

When to Use Containers

Best For:

Enterprise-grade microservices

Stateful applications

Applications requiring custom runtimes

Complex deployments and APIs

Advantages:

Full control over runtime and configuration

Seamless portability across environments

Supports any tech stack

Easier integration with CI/CD pipelines

Limitations:

Requires container orchestration

More complex infrastructure setup

Can be costlier if not optimized

Can You Use Both?

Yes—and you probably should.

Many modern cloud-native architectures combine containers and serverless functions for optimal results.

Example Hybrid Architecture:

Use Containers (via Kubernetes) for core services.

Use Serverless for auxiliary tasks like:

Sending emails

Processing webhook events

Triggering CI/CD jobs

Resizing images

This hybrid model allows teams to benefit from the control of containers and the agility of serverless.

Serverless vs. Containers: How to Choose

| Business Need | Recommendation |
| --- | --- |
| Rapid MVP or prototype | Serverless |
| Full-featured app backend | Containers |
| Low-traffic event-driven app | Serverless |
| CPU/GPU-intensive tasks | Containers |
| Scheduled background jobs | Serverless |
| Scalable enterprise service | Containers (w/ Kubernetes) |

Final Thoughts

Choosing between Serverless and Containers is not about which is better—it’s about choosing the right tool for the job.

Go Serverless when you need speed, simplicity, and cost-efficiency for lightweight or event-driven tasks.

Go with Containers when you need flexibility, full control, and consistency across development, staging, and production.

Both technologies are essential pillars of modern cloud computing. The key is understanding their strengths and limitations—and using them together when it makes sense.

The Rise of Serverless Architecture and Its Impact on Full Stack Development

The digital world is in constant flux, driven by the relentless pursuit of efficiency, scalability, and faster time-to-market. Amidst this evolution, serverless architecture has emerged as a transformative force, fundamentally altering how applications are built and deployed. For those seeking comprehensive full stack development services, this paradigm shift presents both exciting opportunities and new challenges. This article delves deep into the rise of serverless, exploring its core concepts, benefits, drawbacks, and, most importantly, its profound impact on full stack development.

Understanding the Serverless Revolution

At its core, serverless computing doesn't mean the absence of servers. Instead, it signifies a shift in responsibility. Developers no longer need to provision, manage, and scale the underlying server infrastructure. Cloud providers like AWS (with Lambda), Google Cloud (with Cloud Functions), and Microsoft Azure (with Azure Functions) handle these operational burdens. This allows full stack developers to focus solely on writing and deploying code, triggered by events such as HTTP requests, database changes, file uploads, and more.

The key characteristics of serverless architecture include:

No Server Management: The cloud provider handles all server-related tasks, including provisioning, patching, and scaling.

Automatic Scaling: Resources scale automatically based on demand, ensuring applications can handle traffic spikes without manual intervention.

Pay-as-you-go Pricing: Users are charged only for the compute time consumed when their code is running, leading to potential cost savings.

Event-Driven Execution: Serverless functions are typically triggered by specific events, making them highly efficient for event-driven architectures.

The Benefits of Embracing Serverless for Full Stack Developers

The adoption of serverless architecture brings a plethora of advantages for full stack developers:

Increased Focus on Code: By abstracting away server management, developers can dedicate more time and energy to writing high-quality code and implementing business logic. This leads to faster development cycles and quicker deployment of features.

Enhanced Scalability and Reliability: Serverless platforms offer built-in scalability and high availability. Applications can effortlessly handle fluctuating user loads without requiring developers to configure complex scaling strategies. The underlying infrastructure is typically highly resilient, ensuring greater application uptime.

Reduced Operational Overhead: The elimination of server maintenance tasks significantly reduces operational overhead. Full stack developers no longer need to spend time on server configuration, security patching, or infrastructure monitoring. This frees up valuable resources that can be reinvested in innovation.

Cost Optimization: The pay-as-you-go model can lead to significant cost savings, especially for applications with variable traffic patterns. You only pay for the compute resources you actually consume, rather than maintaining idle server capacity.

Faster Time to Market: The streamlined development and deployment process associated with serverless allows teams to release new features and applications more rapidly, providing a competitive edge.

Simplified Deployment: Deploying serverless functions is often simpler and faster than deploying traditional applications. Developers can typically deploy individual functions without needing to redeploy the entire application.

Integration with Managed Services: Serverless platforms seamlessly integrate with a wide range of other managed services offered by cloud providers, such as databases, storage, and messaging queues. This allows full stack developers to build complex applications using pre-built, scalable components.

Navigating the Challenges of Serverless Development

While the benefits are compelling, serverless architecture also presents certain challenges that full stack developers need to be aware of:

Cold Starts: Serverless functions can experience "cold starts," where there's a delay in execution if the function hasn't been invoked recently. This can impact the latency of certain requests, although cloud providers are continuously working on mitigating this issue.

Statelessness: Serverless functions are inherently stateless, meaning they don't retain information between invocations. Developers need to implement external mechanisms (like databases or caching services) to manage state.

Debugging and Monitoring: Debugging and monitoring distributed serverless applications can be more complex than traditional monolithic applications. Specialized tools and strategies are often required to trace requests and identify issues across multiple functions and services.

Vendor Lock-in: Choosing a specific cloud provider for your serverless infrastructure can lead to vendor lock-in, making it potentially challenging to migrate to another provider in the future.

Complexity Management: For large and complex applications, managing a multitude of individual serverless functions and their interactions can become challenging. Proper organization, documentation, and tooling are crucial.

Testing: Testing serverless functions in isolation and in integration with other services requires specific approaches and tools. Traditional testing methodologies may need to be adapted.

Security Considerations: While the cloud provider handles infrastructure security, developers are still responsible for securing their code and configurations within the serverless environment. Understanding the security implications of serverless is crucial.
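The statelessness point above can be sketched in a few lines: the function keeps nothing in its own memory and instead reads and writes an injected store. `InMemoryStore` is only a local stand-in for an external database or cache, and the `user_id` event field is a hypothetical name.

```python
class InMemoryStore:
    """Stand-in for an external store; a real one would live outside the function."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def make_handler(store):
    """Build a handler whose only state lives in the injected store."""

    def handler(event, context):
        user = event["user_id"]  # hypothetical event field
        visits = store.get(user) + 1
        store.put(user, visits)
        return {"user_id": user, "visits": visits}

    return handler
```

Injecting the store also makes the function easier to test in isolation. Note that this read-then-write sequence is not atomic; with a real shared store, concurrent invocations would likely need an atomic increment or a conditional write.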

The Impact on Full Stack Development Practices

The rise of serverless architecture is significantly reshaping the role and responsibilities of full stack developers:

Shift in Skillsets: While traditional backend skills remain relevant, full stack developers working with serverless need to develop expertise in cloud-specific services, event-driven programming, API design, and infrastructure-as-code (IaC) tools like Terraform or CloudFormation.

Increased Focus on API Design: With serverless functions often communicating via APIs, strong API design skills become even more critical for full stack developers. They need to design robust, scalable, and well-documented APIs.

Embracing Event-Driven Architectures: Serverless naturally lends itself to event-driven architectures. Full stack developers need to understand event sourcing, message queues, and other concepts related to building reactive systems.

DevOps Integration: While server management is abstracted, a DevOps mindset remains essential. Full stack developers need to be involved in CI/CD pipelines, automated testing, and monitoring to ensure the smooth operation of their serverless applications.

Understanding Cloud Ecosystems: A deep understanding of the specific cloud provider's ecosystem, including its serverless offerings, databases, storage solutions, and other managed services, is crucial for effective serverless development.

New Development Paradigms: Serverless encourages the adoption of microservices and function-as-a-service (FaaS) paradigms, requiring full stack developers to think differently about application decomposition and architecture.

Tooling and Ecosystem Evolution: The serverless ecosystem is constantly evolving, with new tools and frameworks emerging to simplify development, deployment, and monitoring. Full stack developers need to stay updated with these advancements.

Future Trends in Serverless and Full Stack Development

The future of serverless architecture and its impact on full stack development looks promising and dynamic:

Further Abstraction: Cloud providers will likely continue to abstract away more infrastructure complexities, making serverless even easier to adopt and use.

Improved Cold Start Performance: Ongoing research and development efforts will likely lead to significant improvements in cold start times, making serverless suitable for an even wider range of applications.

Enhanced Developer Tools: The tooling around serverless development will continue to mature, offering better debugging, monitoring, and testing capabilities.

Edge Computing Integration: Serverless principles are likely to extend to edge computing environments, enabling the development of distributed, event-driven applications closer to the data source.

AI and Machine Learning Integration: Serverless functions will play an increasingly important role in deploying and scaling AI and machine learning models.

Standardization and Interoperability: Efforts towards standardization across different cloud providers could reduce vendor lock-in and improve the portability of serverless applications.

Conclusion: Embracing the Serverless Future

Serverless architecture represents a significant evolution in how applications are built and deployed. For full stack developers, embracing this paradigm offers numerous benefits, including increased focus on code, enhanced scalability, reduced operational overhead, and faster time to market. While challenges such as cold starts, statelessness, and the need for new skillsets exist, the advantages often outweigh the drawbacks, especially for modern, scalable applications.

As the serverless ecosystem continues to mature and evolve, full stack developers who adapt to this transformative technology will be well-positioned to build innovative and efficient applications in the years to come. The rise of serverless is not just a trend; it's a fundamental shift that is reshaping the future of software development.

The Ultimate Roadmap to Web Development – Coding Brushup

In today's digital world, web development is more than just writing code—it's about creating fast, user-friendly, and secure applications that solve real-world problems. Whether you're a beginner trying to understand where to start or an experienced developer brushing up on your skills, this ultimate roadmap will guide you through everything you need to know. This blog also offers a coding brushup for Java programming, shares Java coding best practices, and outlines what it takes to become a proficient Java full stack developer.

Why Web Development Is More Relevant Than Ever

The demand for web developers continues to soar as businesses shift their presence online. According to recent industry data, the global software development market is expected to reach $1.4 trillion by 2027. A well-defined roadmap is crucial to navigate this fast-growing field effectively, especially if you're aiming for a career as a Java full stack developer.

Phase 1: The Basics – Understanding Web Development

Web development is broadly divided into three categories:

Frontend Development: What users interact with directly.

Backend Development: The server-side logic that powers applications.

Full Stack Development: A combination of both frontend and backend skills.

To start your journey, get a solid grasp of:

HTML – Structure of the web

CSS – Styling and responsiveness

JavaScript – Interactivity and functionality

These are essential even if you're focusing on Java full stack development, as modern developers are expected to understand how frontend and backend integrate.

Phase 2: Dive Deeper – Backend Development with Java

Java remains one of the most robust and secure languages for backend development. It’s widely used in enterprise-level applications, making it an essential skill for aspiring Java full stack developers.

Why Choose Java?

Platform independence via the JVM (Java Virtual Machine)

Strong memory management

Rich APIs and open-source libraries

Large and active community

Scalable and secure

If you're doing a coding brushup for Java programming, focus on mastering the core concepts:

OOP (Object-Oriented Programming)

Exception Handling

Multithreading

Collections Framework

File I/O

JDBC (Java Database Connectivity)

Java Coding Best Practices for Web Development

To write efficient and maintainable code, follow these Java coding best practices:

Use meaningful variable names: Improves readability and maintainability.

Follow design patterns: Apply Singleton, Factory, and MVC to structure your application.

Avoid hardcoding: Always use constants or configuration files.

Use Java Streams and lambda expressions: They can make collection-processing code more concise and readable; measure before assuming a performance gain.

Write unit tests: Use JUnit and Mockito for test-driven development.

Handle exceptions properly: Always use specific catch blocks and avoid empty catch statements.

Optimize database access: Use ORM tools like Hibernate to manage database operations.

Keep methods short and focused: One method should serve one purpose.

Use dependency injection: Leverage frameworks like Spring to decouple components.

Document your code: JavaDoc is essential for long-term project scalability.

A coding brushup for Java programming should reinforce these principles to ensure code quality and performance.

Phase 3: Frameworks and Tools for Java Full Stack Developers

As a full stack developer, you'll need to work with various tools and frameworks. Here’s what your tech stack might include:

Frontend:

HTML5, CSS3, JavaScript

React.js or Angular: Popular JavaScript frameworks

Bootstrap or Tailwind CSS: For responsive design

Backend:

Java with Spring Boot: Most preferred for building REST APIs

Hibernate: ORM tool to manage database operations

Maven/Gradle: For project management and builds

Database:

MySQL, PostgreSQL, or MongoDB

Version Control:

Git & GitHub

DevOps (Optional for advanced full stack developers):

Docker

Jenkins

Kubernetes

AWS or Azure

Learning to integrate these tools efficiently is key to becoming a competent Java full stack developer.

Phase 4: Projects & Portfolio – Putting Knowledge Into Practice

Practical experience is critical. Try building projects that demonstrate both frontend and backend integration.

Project Ideas:

Online Bookstore

Job Portal

E-commerce Website

Blog Platform with User Authentication

Incorporate Java coding best practices into every project. Use GitHub to showcase your code and document the learning process. This builds credibility and demonstrates your expertise.

Phase 5: Stay Updated & Continue Your Coding Brushup

Technology evolves rapidly. A coding brushup for Java programming should be a recurring part of your development cycle. Here’s how to stay sharp:

Follow Java-related GitHub repositories and blogs.

Contribute to open-source Java projects.

Take part in coding challenges on platforms like HackerRank or LeetCode.

Subscribe to newsletters like JavaWorld, InfoQ, or Baeldung.

By doing so, you’ll stay in sync with the latest in the Java full stack developer world.

Conclusion

Web development is a constantly evolving field that offers tremendous career opportunities. Whether you're looking to enter the tech industry or grow as a seasoned developer, following a structured roadmap can make your journey smoother and more impactful. Java remains a cornerstone in backend development, and by following Java coding best practices, engaging in regular coding brushup for Java programming, and mastering both frontend and backend skills, you can carve your path as a successful Java full stack developer.

Start today. Keep coding. Stay curious.

AWS Mastery Unveiled: Your Step-by-Step Journey into Cloud Proficiency

In today's rapidly evolving tech landscape, mastering cloud computing is a strategic move for individuals and businesses alike. Amazon Web Services (AWS), as a leading cloud services provider, offers a myriad of tools and services to facilitate scalable and efficient computing. With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries. Whether you're a seasoned IT professional or a beginner eager to dive into the cloud, here's a step-by-step guide to learning and mastering Amazon AWS.

1. Start with AWS Documentation:

The foundation of your AWS journey begins with the official AWS documentation. This vast resource provides detailed information, tutorials, and guides for each AWS service. Take the time to familiarize yourself with the terminologies and fundamental concepts. Understanding the basics lays a solid groundwork for more advanced learning.

2. Enroll in AWS Training and Certification:

AWS provides a dedicated training and certification program to empower individuals with the skills required in today's cloud-centric environment. Explore the AWS Training and Certification portal, which offers a range of courses, both free and paid. Commence your AWS certification journey with the AWS Certified Cloud Practitioner, progressively advancing to specialized certifications aligned with your career goals.

3. Hands-On Practice with AWS Free Tier:

Theory is valuable, but hands-on experience is paramount. AWS Free Tier allows you to experiment with various services without incurring charges. Seize this opportunity to get practical, testing different services and scenarios. This interactive approach reinforces theoretical knowledge and builds your confidence in navigating the AWS console.

4. Explore Online Courses and Tutorials:

Several online platforms offer structured AWS courses. Websites like Coursera, Udemy, and A Cloud Guru provide video lectures, hands-on labs, and real-world projects. These courses cover a spectrum of topics, from foundational AWS concepts to specialized domains like AWS security and machine learning. To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Top AWS Training Institute. This training ensures that professionals gain the expertise needed to navigate the complexities of AWS, empowering them to contribute effectively to their organizations' digital transformation and success.

5. Build Projects and Apply Knowledge:

The true test of your AWS proficiency lies in applying your knowledge to real-world projects. Start small, perhaps by deploying a static website on Amazon S3. As you gain confidence, move on to more complex projects, such as configuring a virtual server on Amazon EC2 or creating a serverless application using AWS Lambda. Practical application solidifies your understanding and hones your problem-solving skills.

6. Join AWS Communities and Forums:

Learning is a collaborative effort. Joining AWS communities and forums allows you to connect with like-minded individuals, seek advice, and share your experiences. Platforms like the AWS Developer Forums provide a space for discussing challenges and learning from others' insights. Networking within the AWS community can open doors to valuable opportunities and collaborations.

7. Read AWS Whitepapers and Case Studies:

AWS regularly publishes whitepapers covering best practices, architecture recommendations, and real-world case studies. Delve into these resources to gain deeper insights into how AWS services are applied in diverse scenarios. Whitepapers provide a wealth of knowledge on topics such as security, scalability, and cost optimization.

8. Experiment with AWS CLI and SDKs:

Command Line Interface (CLI) proficiency is a valuable skill for any AWS practitioner. Familiarize yourself with the AWS CLI, as well as Software Development Kits (SDKs) for your preferred programming languages. Automating tasks through the CLI and integrating AWS services into your applications enhances efficiency and allows for more sophisticated configurations.
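As a small sketch of what SDK use can look like, the function below lists bucket names through an S3 client. It assumes the Python SDK (boto3), whose S3 client exposes `list_buckets()` and returns a dictionary with a `Buckets` list. `StubS3Client` and the bucket names are invented so the example runs offline without credentials.

```python
def bucket_names(s3_client):
    """Return the names of all buckets visible to the given S3 client."""
    response = s3_client.list_buckets()
    return sorted(bucket["Name"] for bucket in response.get("Buckets", []))


class StubS3Client:
    """Offline stand-in that mimics the shape of the list_buckets response."""

    def list_buckets(self):
        return {"Buckets": [{"Name": "logs-example"}, {"Name": "assets-example"}]}


if __name__ == "__main__":
    # With boto3 installed and credentials configured, one could instead pass
    # boto3.client("s3") here; the stub keeps this sketch runnable offline.
    print(bucket_names(StubS3Client()))
```

Passing the client in as a parameter, rather than creating it inside the function, is a design choice that keeps the logic testable without touching a real account.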

9. Attend AWS Events and Webinars:

Stay abreast of the latest AWS trends, updates, and best practices by attending AWS events, webinars, and conferences. These platforms often feature expert speakers, product announcements, and in-depth discussions on specific AWS topics. Engaging with industry leaders and experts provides valuable insights into the current state and future direction of AWS.

10. Stay Updated and Adapt:

The cloud computing landscape is dynamic, with AWS continually introducing new services and updates. Subscribe to AWS newsletters, follow AWS blogs, and listen to AWS-focused podcasts to stay informed about the latest developments. Continuous learning is key to adapting to the evolving cloud technology landscape.

In conclusion, mastering Amazon AWS is a journey that combines theoretical understanding, hands-on experience, and active participation in the AWS community. By following these ten steps, you can develop a comprehensive skill set that empowers you to leverage AWS effectively, whether you're building applications, optimizing processes, or advancing your career in the cloud.

Serverless Computing: Simplifying Backend Development

The world of software development is constantly evolving. One of the most exciting shifts in recent years is the rise of serverless computing. Despite the name, serverless computing still involves servers — but the key difference is that developers no longer need to manage them.

With serverless computing, developers can focus purely on writing code, while the cloud provider automatically handles server management, scaling, and maintenance. This approach not only reduces operational complexity but also improves efficiency, cost savings, and time to market.

What is Serverless Computing?

Serverless computing is a cloud computing model where the cloud provider runs the server and manages the infrastructure. Developers simply write functions that respond to events — like a file being uploaded or a user submitting a form — and the provider takes care of executing the function, scaling it based on demand, and handling all server-related tasks.

Unlike traditional cloud models where developers must set up virtual machines, install software, and manage scaling, serverless removes those responsibilities entirely.

How It Works

Serverless platforms use what are called functions-as-a-service (FaaS). Developers upload small pieces of code (functions) to the cloud platform, and each function is triggered by a specific event. These events could come from HTTP requests, database changes, file uploads, or scheduled timers.

The platform then automatically runs the code in a stateless container, scales the application based on the number of requests, and shuts down the container when it's no longer needed. You only pay for the time the function is running, which can significantly reduce costs.

Popular serverless platforms include AWS Lambda, Google Cloud Functions, Azure Functions, and Firebase Cloud Functions.

Benefits of Serverless Computing

Reduced infrastructure management: Developers don’t have to manage or maintain servers. Everything related to infrastructure is handled by the cloud provider.

Automatic scaling: Serverless platforms automatically scale the application depending on the demand, whether it's a few requests or thousands.

Cost efficiency: Since you only pay for the time your code runs, serverless can be more affordable than always-on servers, especially for applications with variable traffic.

Faster development: Serverless enables quicker development and deployment since the focus is on writing code and not on managing environments.

High availability: Most serverless platforms ensure high availability and reliability without the need for additional configuration.

Use Cases of Serverless Computing

Serverless is suitable for many types of applications:

Web applications: Serverless functions can power APIs and backend logic for web apps.

IoT backends: Data from devices can be processed in real-time using serverless functions.

Chatbots: Event-driven logic for responding to messages can be handled with serverless platforms.

Real-time file processing: Automatically trigger functions when files are uploaded to storage, like resizing images or analyzing documents.

Scheduled tasks: Functions can be set to run at specific times for operations like backups or report generation.
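The file-processing use case can be sketched as a function that reacts to an upload notification. The event layout below follows the shape of Amazon S3 event notifications (`Records`, then `s3.bucket.name` and `s3.object.key`); the bucket name and the "resize"/"skip" actions are placeholders made up for this example.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event):
    """Extract (bucket, key) pairs from an S3-style notification event."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in the notification, so decode them.
        pairs.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return pairs


def handler(event, context):
    """Decide what to do with each uploaded file (the actions are placeholders)."""
    results = []
    for bucket, key in uploaded_objects(event):
        is_image = key.lower().endswith((".png", ".jpg", ".jpeg"))
        results.append({"bucket": bucket, "key": key,
                        "action": "resize" if is_image else "skip"})
    return results
```

A real function would replace the placeholder action with actual work, such as downloading the object and writing a resized copy to a different location.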

Challenges of Serverless Computing

Like any technology, serverless computing comes with its own set of challenges:

Cold starts: When a function hasn’t been used for a while, it may take time to start again, causing a delay.

Limited execution time: Functions often have time limits, which may not suit long-running tasks.

Vendor lock-in: Each cloud provider has its own way of doing things, making it hard to move applications from one provider to another.

Debugging and monitoring: Tracking errors or performance in distributed functions can be more complex.
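The cold-start item can be simulated locally. The sketch assumes, as platforms commonly do but do not guarantee, that a function instance is reused between invocations, so expensive setup placed at module level runs once and later calls skip it. The 50 ms sleep and the `object()` client are stand-ins for real initialization.

```python
import time

_client = None      # survives between invocations while the instance stays warm
_init_count = 0     # counts how often the expensive setup actually ran


def _get_client():
    """Create the (pretend) expensive client once per instance, then reuse it."""
    global _client, _init_count
    if _client is None:
        time.sleep(0.05)  # stands in for slow setup such as opening connections
        _client = object()
        _init_count += 1
    return _client


def handler(event, context):
    started = time.perf_counter()
    _get_client()
    elapsed_ms = (time.perf_counter() - started) * 1000
    return {"init_count": _init_count, "elapsed_ms": round(elapsed_ms, 1)}
```

Calling `handler` twice in the same process mimics a cold invocation followed by a warm one: the setup cost is paid only on the first call.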

Despite these challenges, many teams find that the benefits of serverless outweigh the limitations, especially for event-driven applications and microservices.

About Hexadecimal Software

Hexadecimal Software is a leading software development company specializing in cloud-native solutions, DevOps, and modern backend systems. Our experts help businesses embrace serverless computing to build efficient, scalable, and low-maintenance applications. Whether you’re developing a new application or modernizing an existing one, we can guide you through your cloud journey. Learn more at https://www.hexadecimalsoftware.com

Explore More on Hexahome Blogs

To discover more about cloud computing, DevOps, and modern development practices, visit our blog platform at https://www.blogs.hexahome.in. Our articles are written in a simple, easy-to-understand style to help professionals stay updated with the latest tech trends.

Developing Your Future with AWS Solution Architect Associate

Why Should You Get AWS Solutions Architect Associate?

If you're stepping into the world of cloud computing or looking to level up your career in IT, the AWS Certified Solutions Architect Associate course is one of the smartest moves you can make. Here's why:

1. AWS Is the Cloud Market Leader

Amazon Web Services (AWS) dominates the cloud industry, holding a significant share of the global market. With more businesses shifting to the cloud, AWS skills are in high demand—and that trend isn’t slowing down.

2. Proves Your Cloud Expertise

This certification demonstrates that you can design scalable, reliable, and cost-effective cloud solutions on AWS. It's a solid proof of your ability to work with AWS services, including storage, networking, compute, and security.

3. Boosts Your Career Opportunities

Recruiters actively seek AWS-certified professionals. Whether you're an aspiring cloud engineer, solutions architect, or developer, this credential helps you stand out in a competitive job market.

4. Enhances Your Earning Potential

According to various salary surveys, AWS-certified professionals—especially Solution Architects—tend to earn significantly higher salaries compared to their non-certified peers.

5. Builds a Strong Foundation

The Associate-level certification lays a solid foundation for more advanced AWS certifications like the AWS Solutions Architect – Professional, or specialty certifications in security, networking, and more.

Understanding the AWS Shared Responsibility Model

The AWS Shared Responsibility Model, a core topic in the Solutions Architect Associate exam, defines the division of security and compliance duties between AWS and the customer. AWS is responsible for “security of the cloud,” while customers are responsible for “security in the cloud.”

AWS handles the underlying infrastructure, including hardware, software, networking, and physical security of its data centers. This includes services like compute, storage, and database management at the infrastructure level.

On the other hand, customers are responsible for configuring their cloud resources securely. This includes managing data encryption, access controls (IAM), firewall settings, OS-level patches, and securing applications and workloads.

For example, while AWS secures the physical servers hosting an EC2 instance, the customer must secure the OS, apps, and data on that instance.

This model enables flexibility and scalability while ensuring that both parties play a role in protecting cloud environments. Understanding these boundaries is essential for compliance, governance, and secure cloud architecture.

Best Practices for AWS Solutions Architects

The role of an AWS Solutions Architect goes far beyond just designing cloud environments—it's about creating secure, scalable, cost-optimized, and high-performing architectures that align with business goals. To succeed in this role, following industry best practices is essential. Here are some of the top ones:

1. Design for Failure

Always assume that components can fail—and design resilient systems that recover gracefully.

Use Auto Scaling Groups, Elastic Load Balancers, and Multi-AZ deployments.

Implement circuit breakers, retries, and fallbacks to keep applications running.
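Retries and fallbacks from the list above can be sketched in a few lines. This is a minimal illustration, not a full resilience library: it covers exponential backoff and a fallback but leaves out the circuit breaker. `TransientError` is a hypothetical exception type standing in for whatever retryable errors a real client raises.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


def call_with_retries(operation, fallback, attempts=3, base_delay=0.1,
                      sleep=time.sleep):
    """Try operation up to `attempts` times with exponential backoff, then fall back."""
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            # Wait longer after each failure, but do not sleep after the last one.
            if attempt < attempts - 1:
                sleep(base_delay * (2 ** attempt))
    return fallback()
```

The `sleep` parameter is injectable so the backoff schedule can be checked in tests without actually waiting. Production code would usually also add random jitter to the delays.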

2. Embrace the Well-Architected Framework

Leverage AWS’s Well-Architected Framework, which is built around six pillars:

Operational Excellence

Security

Reliability

Performance Efficiency

Cost Optimization

Sustainability

Reviewing your architecture against these pillars helps ensure long-term success.

3. Prioritize Security

Security should be built in—not bolted on.

Use IAM roles and policies with the principle of least privilege.

Encrypt data at rest and in transit using KMS and TLS.

Implement VPC security, including network ACLs, security groups, and private subnets.
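To make least privilege concrete, the sketch below builds an IAM policy document that allows reading objects from a single bucket and nothing else, plus a small check for overly broad actions. The policy layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the IAM JSON policy format; the bucket name and the `has_wildcard_action` helper are hypothetical.

```python
import json

BUCKET = "example-reports-bucket"  # hypothetical bucket name

# Grants read-only access to objects in one bucket and nothing else.
read_only_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": [f"arn:aws:s3:::{BUCKET}/*"],
        }
    ],
}


def has_wildcard_action(policy):
    """Flag Allow statements that permit every action, or every action of a service."""
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if any(a == "*" or a.endswith(":*") for a in actions):
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(read_only_policy, indent=2))
    print("wildcard actions:", has_wildcard_action(read_only_policy))
```

A check like this is only a rough lint; it does not replace reviewing resources and conditions, which matter just as much as the action list.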

4. Go Serverless When It Makes Sense

Serverless architecture using AWS Lambda, API Gateway, and DynamoDB can improve scalability and reduce operational overhead.

Ideal for event-driven workloads or microservices.

Reduces the need to manage infrastructure.

5. Optimize for Cost

Cost is a key consideration. Avoid over-provisioning.

Use AWS Cost Explorer and Trusted Advisor to monitor spend.

Choose spot instances or reserved instances when appropriate.

Right-size EC2 instances and consider using Savings Plans.

6. Monitor Everything

Build strong observability into your architecture.

Use Amazon CloudWatch, X-Ray, and CloudTrail for metrics, tracing, and auditing.

Set up alerts and dashboards to catch issues early.

Recovery Planning with AWS

Recovery planning in AWS ensures your applications and data can be restored quickly after failures or disasters. AWS offers built-in tools such as Amazon S3 for backup storage, AWS Backup, Amazon RDS snapshots, and S3 Cross-Region Replication to support data durability. For more robust strategies, AWS Elastic Disaster Recovery (AWS DRS), the successor to CloudEndure Disaster Recovery, continuously replicates servers so they can be recovered with minimal downtime. Use Auto Scaling, Multi-AZ, and multi-Region deployments to enhance resilience. Regularly test recovery procedures using runbooks and chaos engineering. A solid recovery plan on AWS minimizes downtime, protects business continuity, and keeps operations running even during unexpected events.

Learn more: AWS Solution Architect Associates

Photo

New Post has been published on https://codebriefly.com/building-and-deploying-angular-19-apps/

Building and Deploying Angular 19 Apps

Efficiently building and deploying Angular 19 applications is crucial for delivering high-performance, production-ready web applications. In this blog, we will cover the complete process of building and deploying Angular 19 apps, including best practices and optimization tips.

Table of Contents

Why Building and Deploying Matters

Preparing Your Angular 19 App for Production

Building Angular 19 App

Key Optimizations in Production Build:

Configuration Example:

Deploying Angular 19 App

Deploying on Firebase Hosting

Deploying on AWS S3 and CloudFront

Automating Deployment with CI/CD

Example with GitHub Actions

Best Practices for Building and Deploying Angular 19 Apps

Final Thoughts

Why Building and Deploying Matters

Building and deploying are the final steps of the development lifecycle. Building compiles your Angular project into static files, while deploying makes it accessible to users on a server. Proper optimization and configuration ensure faster load times and better performance.

Preparing Your Angular 19 App for Production

Before building the application, make sure to:

Update Angular CLI: Keep your Angular CLI up to date.

npm install -g @angular/cli

Optimize Production Build: Enable AOT compilation and minification.

Environment Configuration: Use the correct environment variables for production.

Building Angular 19 App

To create a production build, run the following command:

ng build --configuration=production

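This command generates optimized files in the dist/ folder. With the default application builder in new Angular 19 projects, the deployable files are placed under dist/<project-name>/browser.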

Key Optimizations in Production Build:

AOT Compilation: Reduces bundle size by compiling templates during the build.

Tree Shaking: Removes unused modules and functions.

Minification: Compresses HTML, CSS, and JavaScript files.

Source Map Exclusion: Disables source maps for production builds to improve security and reduce file size.

Configuration Example:

Modify the angular.json file to customize production settings:

"configurations": {
  "production": {
    "optimization": true,
    "outputHashing": "all",
    "sourceMap": false,
    "namedChunks": false,
    "aot": true,
    "fileReplacements": [
      {
        "replace": "src/environments/environment.ts",
        "with": "src/environments/environment.prod.ts"
      }
    ]
  }
}

Deploying Angular 19 App

Deployment options for Angular apps include:

Static Web Servers (e.g., NGINX, Apache)

Cloud Platforms (e.g., AWS S3, Firebase Hosting)

Docker Containers

Serverless Platforms (e.g., AWS Lambda)

Deploying on Firebase Hosting

Install Firebase CLI:

npm install -g firebase-tools

Login to Firebase:

firebase login

Initialize Firebase Project:

firebase init hosting

Deploy the App:

firebase deploy

Deploying on AWS S3 and CloudFront

Build the Project:

ng build --configuration=production

Upload to S3:

aws s3 sync ./dist/my-app/browser s3://my-angular-app

Configure CloudFront Distribution: Set the S3 bucket as the origin.

Automating Deployment with CI/CD

Setting up a CI/CD pipeline ensures seamless updates and faster deployments.

Example with GitHub Actions

Create a .github/workflows/deploy.yml file:

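name: Deploy Angular App
on: [push]
jobs:
  build-and-deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Set up Node.js
        uses: actions/setup-node@v4
        with:
          node-version: '18'
      - run: npm ci
      - run: npm run build -- --configuration=production
      # Assumption: AWS credentials are stored as repository secrets with these names,
      # and the bucket lives in us-east-1. Adjust both to your setup.
      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1
      - name: Deploy to S3
        # Path assumes the default application builder output (dist/<project-name>/browser)
        run: aws s3 sync ./dist/my-app/browser s3://my-angular-app --delete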

Best Practices for Building and Deploying Angular 19 Apps

Optimize for Production: Always use AOT and minification.

Use CI/CD Pipelines: Automate the build and deployment process.

Monitor Performance: Utilize tools like Lighthouse to analyze performance.

Secure the Application: Enable HTTPS and configure secure headers.

Cache Busting: Use hashed filenames to avoid caching issues.

Containerize with Docker: Simplifies deployments and scales easily.
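The cache-busting practice in the list above relies on file names that change whenever file contents change. The following Python sketch illustrates the idea only; it is not the hashing scheme the Angular CLI actually uses, and `hashed_filename` is a hypothetical helper.

```python
import hashlib
from pathlib import PurePosixPath


def hashed_filename(name: str, content: bytes, digest_chars: int = 8) -> str:
    """Return name with a short content hash inserted before the extension."""
    digest = hashlib.sha256(content).hexdigest()[:digest_chars]
    path = PurePosixPath(name)
    return f"{path.stem}-{digest}{path.suffix}"


# Same name, different content: the two results differ, so a browser
# that cached the old file will request the new one.
old_name = hashed_filename("main.js", b"console.log(1)")
new_name = hashed_filename("main.js", b"console.log(2)")
print(old_name, new_name)
```

Because the name depends only on the content, unchanged files keep their names between builds and can be cached for a long time.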

Final Thoughts

Building and deploying Angular 19 applications efficiently can significantly enhance performance and maintainability. Following best practices and leveraging cloud hosting services ensure that your app is robust, scalable, and fast. Start building your next Angular project with confidence!

Keep learning & stay safe 😉

Text

Build A Smarter Security Chatbot With Amazon Bedrock Agents

This post shows how to use Amazon Security Lake and an Amazon Bedrock chatbot for incident investigation. It sets up a security chatbot in which an Amazon Bedrock agent combines pre-existing playbooks with a serverless backend and a GUI to investigate or respond to security incidents. The chatbot uses custom-built Amazon Bedrock agents to address security issues from natural language input. Through a single graphical user interface (GUI), users communicate directly with the Amazon Bedrock agent, which builds and runs SQL queries or recommends internal incident response playbooks for security problems.

A user sends a query through the React UI.

Note: This approach does not integrate authentication into the React UI. Add authentication that meets your company's security standards, for example with AWS Amplify UI and Amazon Cognito.

An Amazon API Gateway REST API uses the Invoke Agent AWS Lambda function to handle the user query.

The Invoke Agent Lambda function calls the Amazon Bedrock agent.

After processing the query, Amazon Bedrock (using Claude 3 Sonnet from Anthropic) chooses between querying Security Lake through Amazon Athena and retrieving playbook data.

For queries about the playbooks:

The Amazon Bedrock agent queries the playbooks knowledge base and returns relevant results.

For queries about Security Lake data:

The Amazon Bedrock agent retrieves Security Lake table schemas from the schema knowledge base and uses them to generate SQL queries.

The Amazon Bedrock agent calls the SQL query action from the action group and passes it the SQL query.

The action group calls the Execute SQL on Athena Lambda function, which runs the query on Athena and returns the results to the Amazon Bedrock agent.

After receiving results from the action group or knowledge base:

The Amazon Bedrock agent uses the collected data to compose the final answer and returns it to the Invoke Agent Lambda function.

The Lambda function uses an API Gateway WebSocket API to return the response to the client.

API Gateway sends the response to the React UI over the WebSocket connection.

The chat interface displays the agent's response.
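The step in which the Invoke Agent Lambda function calls the Amazon Bedrock agent could look roughly like the sketch below. It is not the sample solution's actual code: `ask_agent` is a hypothetical helper, and the agent ID, alias ID, and session ID are placeholders. The client is passed in, so the only assumption about the AWS SDK is the boto3 `bedrock-agent-runtime` client's `invoke_agent` call and its streamed `completion` response.

```python
from typing import Any


def ask_agent(client: Any, agent_id: str, agent_alias_id: str,
              session_id: str, question: str) -> str:
    """Send one user question to a Bedrock agent and return the full text answer."""
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=question,
    )
    parts = []
    # The answer arrives as an event stream; text is carried in "chunk" events.
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"])
    # Join the raw bytes first so multi-byte characters split across chunks decode correctly.
    return b"".join(parts).decode("utf-8")


# Usage inside a Lambda handler (requires boto3 and AWS credentials; IDs are placeholders):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   answer = ask_agent(client, "AGENT_ID", "ALIAS_ID", "session-1", "Which IPs failed login today?")
```

Reusing the same session ID across calls lets the agent keep conversational context for one chat session.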

Requirements

Prior to executing the example solution, complete the following requirements:

Select a delegated administrator account to manage the Security Lake configuration for each member account in AWS Organizations. Configure Security Lake with the necessary log sources: Amazon Route 53, Security Hub, CloudTrail, and VPC Flow Logs.

Set up subscriber query access from the source Security Lake AWS account to the subscriber AWS account.

Approve the subscriber's AWS account resource sharing request in AWS RAM.

Create a database link in AWS Lake Formation in the subscriber AWS account and grant access to the Security Lake Athena tables.

Enable model access for Anthropic's Claude v3 model in Amazon Bedrock in the subscriber AWS account where you'll build the solution. Using a model before enabling it in your AWS account results in an error.

Once the requirements are met, the sample solution deploys these resources:

An Amazon CloudFront distribution backed by Amazon S3.

A chatbot UI static website hosted on Amazon S3.

API Gateway APIs that invoke the Lambda functions.

A Lambda function that invokes the Amazon Bedrock agent.

An Amazon Bedrock agent with a knowledge base.

An Athena SQL query action group for the Amazon Bedrock agent.

An Amazon Bedrock knowledge base with example Athena table schemas for Security Lake. Although the Amazon Bedrock agent can retrieve table details from the Athena database, the sample schemas improve the SQL queries it generates for Security Lake table fields.

An Amazon Bedrock knowledge base of pre-existing incident response playbooks. The Amazon Bedrock agent can suggest investigation or response steps based on playbooks approved by your company.

Cost

Before deploying the sample solution and following this tutorial, make sure you understand the AWS service costs involved. The cost of using Amazon Bedrock and Athena to query Security Lake depends on the amount of data.

Security Lake cost depends on the volume of AWS log and event data ingested. Other AWS services that Security Lake uses are billed separately; see the pricing pages for Amazon S3, AWS Glue, EventBridge, Lambda, SQS, and SNS.

Amazon Bedrock on-demand pricing depends on the number of input and output tokens and on the large language model (LLM) used. A token is a short run of characters that the model uses to interpret user input and instructions. See Amazon Bedrock pricing for details.

Athena runs the SQL queries that the Amazon Bedrock agent generates. Athena's cost depends on how much Security Lake data each query scans. See Athena pricing for details.
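The cost drivers described above can be combined into a back-of-the-envelope estimate for a single chatbot question. The sketch below is only arithmetic: every rate is a parameter, the values in the example are placeholders rather than current AWS prices, and treating a terabyte as 1024^4 bytes is an assumption. Check the pricing pages for real figures.

```python
def estimate_query_cost(
    input_tokens: int,
    output_tokens: int,
    bytes_scanned: int,
    price_per_1k_input: float,
    price_per_1k_output: float,
    price_per_tb_scanned: float,
) -> float:
    """Estimate the cost of one question: Bedrock tokens plus Athena data scanned."""
    bedrock_cost = (
        (input_tokens / 1000) * price_per_1k_input
        + (output_tokens / 1000) * price_per_1k_output
    )
    # Assumption: one terabyte is counted as 1024**4 bytes
    athena_cost = (bytes_scanned / 1024**4) * price_per_tb_scanned
    return bedrock_cost + athena_cost


# Placeholder rates for illustration only, not actual AWS prices
example_cost = estimate_query_cost(
    input_tokens=2000,
    output_tokens=500,
    bytes_scanned=1024**4 // 2,   # half a terabyte scanned
    price_per_1k_input=0.003,
    price_per_1k_output=0.015,
    price_per_tb_scanned=5.0,
)
print(f"Estimated cost: ${example_cost:.4f}")
```

In this illustration the Athena scan dominates the total, which is why limiting the data each generated SQL query scans (for example with time-range filters) matters more than trimming prompt length.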

Clean up

If you launched the security chatbot example solution using the Launch Stack button in the console with the CloudFormation template security_genai_chatbot_cfn, clean up as follows:

Choose the Security GenAI Chatbot stack in CloudFormation for the account and region where the solution was installed.

Choose “Delete the stack”.

If you deployed the solution using AWS CDK, run cdk destroy --all.

Conclusion

The sample solution illustrates how task-oriented Amazon Bedrock agents and natural language input can improve security and speed up investigation and analysis. It is a prototype built around a user interface driven by an Amazon Bedrock agent. The approach can be extended with additional task-oriented agents, each with its own model, knowledge bases, and instructions. Wider use of AI-powered agents can help your AWS security team perform better across several domains.

The chatbot's backend views data normalised into the Open Cybersecurity Schema Framework (OCSF) by Security Lake.

#securitychatbot#AmazonBedrockagents#graphicaluserinterface#Bedrockagent#chatbot#chatbotsecurity#Technology#TechNews#technologynews#news#govindhtech

Text

Anton R Gordon on Securing AI Infrastructure with Zero Trust Architecture in AWS

As artificial intelligence becomes more deeply embedded into enterprise operations, the need for robust, secure infrastructure is paramount. AI systems are no longer isolated R&D experiments — they are core components of customer experiences, decision-making engines, and operational pipelines. Anton R Gordon, a renowned AI Architect and Cloud Security Specialist, advocates for implementing Zero Trust Architecture (ZTA) as a foundational principle in securing AI infrastructure, especially within the AWS cloud environment.