#aws lambda eventbridge


AWS Lambda Compute Service Tutorial for Amazon Cloud Developers

Full video link: https://youtube.com/shorts/QmQOWR_aiNI

A new video tutorial on AWS Lambda is published on the CodeOneDigest YouTube channel.

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. These events may include changes in state such as a user placing an item in a shopping cart on an ecommerce website. AWS Lambda automatically runs code in response to multiple events, such as HTTP requests via Amazon API Gateway, modifications…
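As a minimal sketch of the event-driven model described above, the following Python handler answers an HTTP request routed through Amazon API Gateway. It assumes the Lambda proxy integration event shape (a `queryStringParameters` field in the event, and a response with `statusCode` and `body`). The `name` query parameter is hypothetical and not taken from the tutorial.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting for an API Gateway proxy-integration request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # "name" is a hypothetical parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Lambda calls the handler once per event, so the same function could be wired to other event sources by reading different fields from `event`.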


AWS Transfer Family and GuardDuty Malware Protection for S3

S3 malware protection

Protecting against malware using AWS Transfer Family and GuardDuty

Businesses often need to accept files from external parties securely. Public-facing file transfer servers expose the organization to threat actors or unauthorized users who upload malware-infected files. Scanning files that arrive over public channels for malware before processing them reduces this risk.

AWS Transfer Family and Amazon GuardDuty can be combined to scan files uploaded to an SFTP (SSH File Transfer Protocol) server for malware as part of the transfer workflow. Because GuardDuty updates its malware signatures automatically every 15 minutes rather than relying on a scanner container image, there is no scanner to patch manually.

Prerequisites

What you need to implement the solution:

AWS account: You need an AWS account to deploy this solution. If you don't have one, see Start developing today.

AWS CLI: Install the AWS Command Line Interface and configure it for your account. Set the AWS account environment variables using your access key ID and secret access key.

Git: You will use Git to fetch the sample code from GitHub.

Terraform: The deployment is automated with Terraform. Follow the Terraform installation instructions to download and install it.

Solution overview

This solution uses Transfer Family and GuardDuty. Transfer Family is a secure file transfer service that you can use to set up an SFTP server. GuardDuty is an intelligent threat detection service that protects your AWS accounts, workloads, and data from anomalous and malicious activity. At a high level, the solution works as follows:

A user uploads a file to a Transfer Family SFTP server.

A Transfer Family managed workflow invokes an AWS Lambda function that starts an AWS Step Functions workflow.

The workflow begins only after the file upload completes.

A partial upload to the SFTP server triggers an error-handling Lambda function that reports the error.

The Step Functions state machine runs a Lambda function that moves the uploaded file to an Amazon S3 bucket for processing, where GuardDuty scans it.

The state machine receives the GuardDuty scan result through a callback.

Depending on the result, the file is moved to a clean location or to quarantine.

The workflow publishes the outcome through Amazon SNS. This can be an alert about a malicious upload or an error that occurred during the scan, or a message that the upload succeeded and the scan was clean, so the file can be processed further.

Architecture and walkthrough of the solution

GuardDuty Malware Protection for S3 scans newly uploaded S3 objects. You can apply a malware protection plan to an entire bucket or limit scanning to specific object prefixes.

This solution's workflow starts with the file upload and continues through scanning and classifying the file as clean or infected. From there, you can adapt the steps to your use case.

A user uploads a file to Transfer Family over SFTP.

When the upload succeeds, Transfer Family stores the file in the Unscanned S3 bucket and starts the Managed Workflow Complete workflow. This workflow handles successful uploads by calling the Step Function Invoker Lambda function.

The Step Function Invoker starts the state machine, whose first step calls the GuardDuty Scan Lambda function.

GuardDuty Scan moves the file to the Processing bucket. Files are scanned in this bucket.

GuardDuty automatically scans objects uploaded to the Processing bucket. This implementation creates a malware protection plan for the Processing bucket.

When the scan finishes, GuardDuty sends the result to Amazon EventBridge.

An EventBridge rule invokes the Lambda Callback function after each scan and passes it the scan result. See the GuardDuty documentation on monitoring S3 object scans with Amazon EventBridge.

Lambda Callback returns the result to the GuardDuty Scan task using the callback task integration. Once the GuardDuty Scan task resumes, the scan result is passed to the Move File task.

If the scan finds no threats, the Move File task moves the file to the Clean S3 bucket for further processing.

Move File then publishes to the Success SNS topic to notify subscribers.

If the result indicates that the file is malicious, the Move File function moves it to the Quarantine S3 bucket for further analysis. It also deletes the file from the Processing bucket and publishes a notification to the Error SNS topic to alert the user that a potentially malicious file was uploaded.

If the upload fails and the file is only partially uploaded, Transfer Family starts the Managed Workflow Partial workflow.

The Managed Workflow Partial error-handling workflow calls the Error Publisher function, which reports errors that occur anywhere in the process.

The Error Publisher function identifies the type of error and sets the error status accordingly, depending on whether it came from a partial upload or from a problem elsewhere in the process. It then sends an error message to the Error SNS topic.

The GuardDuty Scan task has a timeout. If the file isn't scanned in time, an event is published to the Error topic so that someone can intervene manually. If GuardDuty Scan fails, the Error Cleanup Lambda function is invoked.

Finally, the Processing bucket has an S3 Lifecycle policy that ensures no file stays in the bucket longer than a day.
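The routing decision in the walkthrough above can be sketched as a small pure function. This is an illustration only, not the sample repository's code: the bucket names are placeholders, the function name is hypothetical, and the event fields (`detail.s3ObjectDetails` and `detail.scanResultDetails.scanResultStatus`) assume the EventBridge event shape that GuardDuty Malware Protection for S3 publishes for object scan results.

```python
# Placeholder bucket names; real names would come from the Terraform config.
CLEAN_BUCKET = "example-clean-bucket"
QUARANTINE_BUCKET = "example-quarantine-bucket"


def route_scan_result(event):
    """Decide where a scanned object should go, based on a scan-result event."""
    detail = event["detail"]
    obj = detail["s3ObjectDetails"]
    status = detail["scanResultDetails"]["scanResultStatus"]

    if status == "NO_THREATS_FOUND":
        destination, topic = CLEAN_BUCKET, "success"
    elif status == "THREATS_FOUND":
        destination, topic = QUARANTINE_BUCKET, "error"
    else:
        # Any other status (for example a failed or unsupported scan):
        # leave the file where it is and report it for manual follow-up.
        destination, topic = None, "error"

    return {
        "source_bucket": obj["bucketName"],
        "key": obj["objectKey"],
        "status": status,
        "destination_bucket": destination,
        "sns_topic": topic,
    }
```

In the architecture described here, a callback function would hand a result like this back to the waiting state machine together with its task token (the Step Functions SendTaskSuccess call), and the Move File task would act on it. That wiring is omitted to keep the sketch free of AWS calls.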

Code base

The aws-samples project on GitHub implements this solution using Terraform and Python-based Lambda functions. The solution could also be built with AWS CloudFormation. The code includes everything needed to deploy the workflow and demonstrate GuardDuty's malware protection plan together with Transfer Family.

Deploy the solution

To deploy this solution for testing:

Clone the repository to your working directory with Git.

Enter the root directory of the cloned project.

Customize the S3 bucket names, SFTP server settings, and other variables in the Terraform locals.tf file.

Initialize Terraform and preview the planned changes.

If everything looks good, run terraform apply and answer yes to create the resources.

Clean up

To avoid unnecessary costs, clean up your resources after you finish testing and examining the solution. To remove this solution's resources, run terraform destroy in the root directory of your cloned project.

This command deletes the SFTP server, S3 buckets, Lambda functions, and other resources that Terraform created. Answer “yes” to confirm deletion.

Conclusion

By following the steps in this post, you can scan files uploaded over SFTP to your S3 bucket for malware before processing them. The solution reduces exposure by scanning public uploads before they reach other parts of your system.


Anton R Gordon on Securing AI Infrastructure with Zero Trust Architecture in AWS

As artificial intelligence becomes more deeply embedded into enterprise operations, the need for robust, secure infrastructure is paramount. AI systems are no longer isolated R&D experiments — they are core components of customer experiences, decision-making engines, and operational pipelines. Anton R Gordon, a renowned AI Architect and Cloud Security Specialist, advocates for implementing Zero Trust Architecture (ZTA) as a foundational principle in securing AI infrastructure, especially within the AWS cloud environment.

Why Zero Trust for AI?

Traditional security models operate under the assumption that anything inside a network is trustworthy. In today’s cloud-native world — where AI workloads span services, accounts, and geographical regions — this assumption can leave systems dangerously exposed.

“AI workloads often involve sensitive data, proprietary models, and critical decision-making processes,” says Anton R Gordon. “Applying Zero Trust principles means that every access request is verified, every identity is authenticated, and no implicit trust is granted — ever.”

Zero Trust is particularly crucial for AI environments because these systems are not static. They evolve, retrain, ingest new data, and interact with third-party APIs, all of which increase the attack surface.

Anton R Gordon’s Zero Trust Blueprint in AWS

Anton R Gordon’s approach to securing AI systems with Zero Trust in AWS involves a layered strategy that blends identity enforcement, network segmentation, encryption, and real-time monitoring.

1. Enforcing Identity at Every Layer

At the core of Gordon’s framework is strict IAM (Identity and Access Management). He ensures all users, services, and applications assume the least privilege by default. Using IAM roles and policies, he tightly controls access to services like Amazon SageMaker, S3, Lambda, and Bedrock.

Gordon also integrates AWS IAM Identity Center (formerly AWS SSO) for centralized authentication, coupled with multi-factor authentication (MFA) to reduce credential-based attacks.
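To make the least-privilege idea concrete, here is a minimal sketch of an IAM policy document that grants read-only access to a single S3 prefix. The bucket name, prefix, and helper function are illustrative assumptions and are not taken from Gordon's framework.

```python
import json


def read_only_prefix_policy(bucket, prefix):
    """Build an IAM policy document granting read-only access to one S3 prefix."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is limited to keys under the prefix.
                "Sid": "ListOnlyThisPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # Reads are limited to objects under the prefix; no writes.
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


# Hypothetical bucket and prefix, for illustration only.
print(json.dumps(read_only_prefix_policy("example-training-data", "datasets/v1"), indent=2))
```

A policy like this could be attached to the role that a training job assumes, so the job can read its dataset but cannot write to or list anything else in the bucket.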

2. Micro-Segmentation with VPC and Private Endpoints

To prevent lateral movement within the network, Gordon leverages Amazon VPC, creating isolated environments for each AI component — data ingestion, training, inference, and storage. He routes all traffic through private endpoints, avoiding public internet exposure.

For example, inference APIs built on Lambda or SageMaker are only accessible through VPC endpoints, tightly scoped security groups, and AWS Network Firewall policies.

3. Data Encryption and KMS Integration

Encryption is non-negotiable. Gordon enforces encryption for data at rest and in transit using AWS KMS (Key Management Service). He also sets up customer-managed keys (CMKs) for more granular control over sensitive datasets and AI models stored in Amazon S3 or Amazon EFS.
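As a rough illustration of encrypting data at rest with a customer-managed key, the helper below builds the arguments for an S3 PutObject request that uses server-side encryption with AWS KMS. The helper name, bucket, object key, and KMS key alias are hypothetical; `ServerSideEncryption` and `SSEKMSKeyId` are the standard S3 PutObject parameters.

```python
def sse_kms_put_args(bucket, key, body, kms_key_id):
    """Build keyword arguments for an S3 PutObject call encrypted with SSE-KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",  # encrypt at rest with AWS KMS
        "SSEKMSKeyId": kms_key_id,          # the customer-managed key to use
    }


# Hypothetical names, for illustration only.
args = sse_kms_put_args(
    "example-model-artifacts", "models/v1/model.tar.gz", b"...", "alias/example-ml-key"
)
```

With boto3 installed and credentials configured, these arguments could be passed as `boto3.client("s3").put_object(**args)`. Encryption in transit is handled separately by the HTTPS endpoint.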

4. Continuous Monitoring and Incident Response

Gordon configures Amazon GuardDuty, CloudTrail, and AWS Config to monitor all user activity, configuration changes, and potential anomalies. When paired with AWS Security Hub, he creates a centralized view for detecting and responding to threats in real time.

He also sets up automated remediation workflows using AWS Lambda and EventBridge to isolate or terminate suspicious sessions instantly.

Conclusion

By applying Zero Trust Architecture principles, Anton R Gordon ensures AI systems in AWS are not only performant but resilient and secure. His holistic approach — blending IAM enforcement, network isolation, encryption, and continuous monitoring — sets a new standard for AI infrastructure security.

For organizations deploying ML models and AI services in the cloud, following Gordon’s Zero Trust blueprint provides peace of mind, operational integrity, and compliance in an increasingly complex threat landscape.


🚀 Integrating ROSA Applications with AWS Services (CS221)

As cloud-native applications evolve, seamless integration between orchestration platforms like Red Hat OpenShift Service on AWS (ROSA) and core AWS services is becoming a vital architectural requirement. Whether you're running microservices, data pipelines, or containerized legacy apps, combining ROSA’s Kubernetes capabilities with AWS’s ecosystem opens the door to powerful synergies.

In this blog, we’ll explore key strategies, patterns, and tools for integrating ROSA applications with essential AWS services — as taught in the CS221 course.

🧩 Why Integrate ROSA with AWS Services?

ROSA provides a fully managed OpenShift experience, but its true potential is unlocked when integrated with AWS-native tools. Benefits include:

Enhanced scalability using Amazon S3, RDS, and DynamoDB

Improved security and identity management through IAM and Secrets Manager

Streamlined monitoring and observability with CloudWatch and X-Ray

Event-driven architectures via EventBridge and SNS/SQS

Cost optimization by offloading non-containerized workloads

🔌 Common Integration Patterns

Here are some popular integration patterns used in ROSA deployments:

1. Storage Integration:

Amazon S3 for storing static content, logs, and artifacts.

Use the AWS SDK or S3 buckets mounted using CSI drivers in ROSA pods.

2. Database Services:

Connect applications to Amazon RDS or Amazon DynamoDB for persistent storage.

Manage DB credentials securely using AWS Secrets Manager injected into pods via Kubernetes secrets.

3. IAM Roles for Service Accounts (IRSA):

Securely grant AWS permissions to OpenShift workloads.

Set up IRSA so pods can assume IAM roles without storing credentials in the container.

4. Messaging and Eventing:

Integrate with Amazon SNS/SQS for asynchronous messaging.

Use EventBridge to trigger workflows from container events (e.g., pod scaling, job completion).

5. Monitoring & Logging:

Forward logs to CloudWatch Logs using Fluent Bit/Fluentd.

Collect metrics with Prometheus Operator and send alerts to Amazon CloudWatch Alarms.

6. API Gateway & Load Balancers:

Expose ROSA services using AWS Application Load Balancer (ALB).

Enhance APIs with Amazon API Gateway for throttling, authentication, and rate limiting.

📚 Real-World Use Case

Scenario: A financial app running on ROSA needs to store transaction logs in Amazon S3 and trigger fraud detection workflows via Lambda.

Solution:

Application pushes logs to S3 using the AWS SDK.

S3 triggers an EventBridge rule that invokes a Lambda function.

The function performs real-time analysis and writes alerts to an SNS topic.

This serverless integration offloads processing from ROSA while maintaining tight security and performance.
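The S3-to-EventBridge step in this scenario could be expressed as an event pattern like the one sketched below. The bucket name and prefix are assumptions made for illustration. The pattern fields follow the "Object Created" events that S3 sends to EventBridge, which requires EventBridge notifications to be enabled on the bucket.

```python
import json


def s3_object_created_pattern(bucket, prefix):
    """Build an EventBridge event pattern matching new objects under a prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": prefix}]},
        },
    }


# Hypothetical bucket and prefix for the transaction logs.
pattern = s3_object_created_pattern("example-transaction-logs", "logs/")
print(json.dumps(pattern, indent=2))
```

The resulting JSON would be used as the event pattern of an EventBridge rule whose target is the fraud detection Lambda function.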

✅ Best Practices

Use IRSA for least-privilege access to AWS services.

Automate integration testing with CI/CD pipelines.

Monitor both ROSA and AWS services using unified dashboards.

Encrypt data in transit and at rest using AWS KMS + OpenShift secrets.

🧠 Conclusion

ROSA + AWS is a powerful combination that enables enterprises to run secure, scalable, and cloud-native applications. With the insights from CS221, you’ll be equipped to design robust architectures that capitalize on the strengths of both platforms. Whether it’s storage, compute, messaging, or monitoring — AWS integrations will supercharge your ROSA applications.

For more details visit - https://training.hawkstack.com/integrating-rosa-applications-with-aws-services-cs221/


The Future of Cloud Computing: Trends and AWS's Role

Cloud computing continues to evolve, shaping the future of digital transformation across industries. As businesses increasingly migrate to the cloud, emerging trends like AI-driven cloud solutions, edge computing, and serverless architectures are redefining how applications are built and deployed. In this blog, we will explore the future of cloud computing and how AWS remains a key player in driving innovation.

Key Trends in Cloud Computing

1. AI and Machine Learning in the Cloud

Cloud providers are integrating AI and ML services into their platforms to automate tasks, optimize workflows, and enhance decision-making. AWS offers services like:

Amazon SageMaker — A fully managed service to build, train, and deploy ML models.

Amazon Bedrock — A generative AI service enabling businesses to leverage foundation models.

2. Edge Computing and Hybrid Cloud

With the rise of IoT and real-time processing needs, edge computing is gaining traction. AWS contributes with:

AWS Outposts — Extends AWS infrastructure to on-premises environments.

AWS Wavelength — Brings cloud services closer to 5G networks for ultra-low latency applications.

3. Serverless and Event-Driven Architectures

Serverless computing is making application development more efficient by removing the need to manage infrastructure. AWS leads with:

AWS Lambda — Executes code in response to events without provisioning servers.

Amazon EventBridge — Connects applications using event-driven workflows.

4. Multi-Cloud and Interoperability

Businesses are adopting multi-cloud strategies to avoid vendor lock-in and enhance redundancy. AWS facilitates interoperability through:

AWS CloudFormation — Infrastructure as Code (IaC) to manage multi-cloud resources.

AWS DataSync — Seamless data transfer between AWS and other cloud providers.

5. Sustainability and Green Cloud Computing

Cloud providers are focusing on reducing carbon footprints. AWS initiatives include:

Sustainability Pillar of the AWS Well-Architected Framework — Helps businesses design sustainable workloads.

Amazon’s Renewable Energy Commitment — Aims for 100% renewable energy usage by 2025.

AWS’s Role in the Future of Cloud Computing

1. Innovation in AI and ML

AWS continues to lead AI adoption with services like Amazon Titan, which provides foundational AI models, and AWS Trainium, a specialized chip for AI workloads.

2. Security and Compliance

With increasing cyber threats, AWS offers:

AWS Security Hub — Centralized security management.

AWS Nitro System — Enhances virtualization security.

3. Industry-Specific Cloud Solutions

AWS provides tailored solutions for healthcare, finance, and gaming with:

AWS HealthLake — Manages healthcare data securely.

AWS FinSpace — Analyzes financial data efficiently.

Conclusion

The future of cloud computing is dynamic, with trends like AI, edge computing, and sustainability shaping its evolution. AWS remains at the forefront, providing innovative solutions that empower businesses to scale and optimize their operations.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/


What You'll Do

Design, develop, and maintain the backend infrastructure that powers our IoT applications, ensuring they are reliable, scalable, and ready for global expansion.

Develop and maintain production-ready Python code that is clean, well-structured, and optimized for performance.

Use AWS Lambda, DynamoDB, and other cloud services to build event-driven and serverless solutions.

Design, manage, and enhance CI/CD pipelines to streamline the development and deployment process, adhering to DevOps best practices.

Quickly resolve backend issues, ensuring system reliability, while continually exploring ways to improve and innovate.

Work closely with the IT team to deliver seamless, high-quality products.

Produce clear, comprehensive documentation and ensure code quality through rigorous testing.

What You’ll Bring:

Backend Development Expertise: At least 3 years of experience as a Software Engineer, with a focus on backend systems.

Python Mastery: Strong proficiency in Python, with experience developing and deploying large-scale, production-ready applications.

Cloud & AWS Experience: Extensive knowledge of AWS services, including Lambda, DynamoDB, SQS, SNS, API Gateway, and EventBridge.

Serverless Enthusiast: Passion for serverless and event-driven architectures, and the ability to design scalable, efficient backend systems using these technologies.

Code Quality & DevOps Skills: Ability to write clean, maintainable code and experience with CI/CD pipelines and DevOps practices for seamless deployments.

Problem-Solving Mindset: Proactive approach to identifying and solving complex problems, with a commitment to delivering high-quality solutions.

Strong Documentation: Experience writing comprehensive, clear documentation that helps ensure smooth handovers and collaboration.

Nice to Have (but not essential)

Familiarity with telecom protocols like RADIUS, IPAM, and an understanding of computer networks or roaming technologies.

Experience with PostHog or similar analytics platforms.


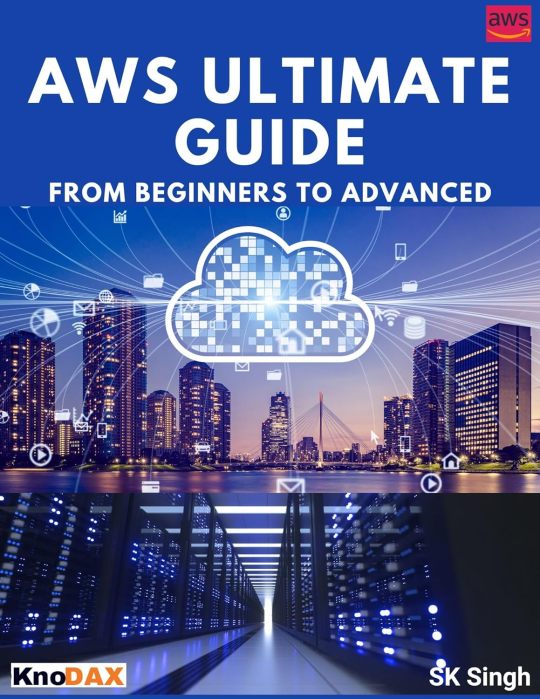

AWS Ultimate Guide: From Beginners to Advanced by SK Singh

This is a very comprehensive book on AWS, from beginners to advanced. The book has extensive diagrams that make the topics much easier to understand.

To make understanding the subject a smoother experience, the book is divided into the following sections:

Cloud Computing

AWS Fundamentals (What is AWS, AWS Account, AWS Free Tier, AWS Cost & Billing Management, AWS Global Cloud Infrastructure (part I), IAM, EC2)

AWS Advanced (EC2 Advanced, ELB, Advanced S3, Route 53, AWS Global Cloud Infrastructure (part II), Advanced Storage on AWS, AWS Monitoring, Audit, and Performance)

AWS RDS and Databases (AWS RDS and Cache, AWS Databases)

Serverless (Serverless Computing, AWS Integration, and Messaging)

Container & CI/CD (Container, AWS CI/CD services)

Data & Analytics (Data & Analytics)

Machine Learning (AWS ML/AI Services)

Security (AWS Security & Encryption, AWS Shared Responsibility Model, How to get Support on AWS, Advanced Identity)

Networking (AWS Networking)

Disaster Management (Backup, Recovery & Migrations)

Solutions Architecture (Cloud Architecture Key Design Principles, AWS Well-Architected Framework, Classic Solutions Architecture)

Includes AWS services/features such as IAM, S3, EC2, EC2 purchasing options, EC2 placement groups, Load Balancers, Auto Scaling, S3 Glacier, S3 Storage classes, Route 53 Routing policies, CloudFront, Global Accelerator, EFS, EBS, Instance Store, AWS Snow Family, AWS Storage Gateway, AWS Transfer Family, Amazon CloudWatch, EventBridge, CloudWatch Insights, AWS CloudTrail, AWS Config, Amazon RDS, Amazon Aurora, Amazon ElastiCache, Amazon DocumentDB, Amazon Keyspaces, Amazon Quantum Ledger Database, Amazon Timestream, Amazon Managed Blockchain, AWS Lambda, Amazon DynamoDB, Amazon API Gateway, SQS, SNS, SES, Amazon Kinesis, Amazon Kinesis Firehose, Amazon Kinesis Data Analytics, Amazon Kinesis Data Streams, Amazon ECS, Amazon ECR, Amazon EKS, AWS CloudFormation, AWS Elastic Beanstalk, AWS CodeBuild, AWS OpsWorks, AWS CodeGuru, AWS CodeCommit, Amazon Athena, Amazon Redshift, Amazon EMR, Amazon QuickSight, AWS Glue, AWS Lake Formation, Amazon MSK, Amazon Rekognition, Amazon Transcribe, Amazon Polly, Amazon Translate, Amazon Lex, Amazon Connect, Amazon Comprehend, Amazon Comprehend Medical, Amazon SageMaker, Amazon Forecast, Amazon Kendra, Amazon Personalize, Amazon Textract, Amazon Fraud Detector, Amazon Sumerian, AWS WAF, AWS Shield Standard, AWS Shield Advanced, AWS Firewall Manager, Amazon GuardDuty, Amazon Inspector, Amazon Macie, Amazon Detective, SSM Session Manager, AWS Systems Manager, S3 Replication & Encryption, AWS Organizations, AWS Control Tower, AWS SSO, Amazon Cognito, Amazon VPC, NAT Gateway, VPC Endpoints, VPC Peering, AWS Transit Gateway, AWS Site-to-Site VPN, Database Migration Service (DMS), and many others.

Order YOUR Copy NOW: https://amzn.to/4bfoHQy via @amazon


Preparing for Success: A Comprehensive Approach to the AWS Certified Developer Associate Exam

The AWS Certified Developer Associate Exam is a crucial step in establishing your expertise in developing applications on the AWS platform.

If you want to advance your career through an AWS course in Pune, take a systematic approach and enroll in a course that suits your interests and broadens your learning path.

This post aims to provide a comprehensive guide to passing the exam.

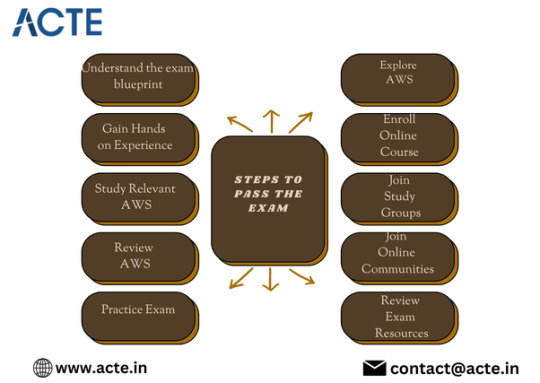

Some Steps to Increase Your Chances of Achieving the Certification:

1. Understand the Exam Blueprint: To start your preparation, familiarize yourself with the official AWS Certified Developer Associate Exam Guide. This document outlines the exam domains, subtopics, and their weightage. It serves as a roadmap for your study plan, enabling you to focus on the key areas that will be assessed.

2. Gain Hands-on Experience: Practical experience is paramount for success in the exam. Create an AWS Free Tier account and immerse yourself in working with various AWS services. Build and deploy applications using services such as EC2, S3, Lambda, DynamoDB, and API Gateway. The more hands-on experience you gain, the better you’ll understand the services and their integration.

3. Study Relevant AWS Services: The exam will test your knowledge of various AWS services. Develop a strong understanding of their features, use cases, and best practices. Some of the key services to focus on include EC2, ECS, Lambda, S3, EBS, RDS, DynamoDB, VPC, Route 53, CloudFront, SNS, SQS, Step Functions, EventBridge, CloudFormation, and the AWS SDKs. Dive into the AWS documentation, whitepapers, and FAQs to deepen your knowledge.

4. Review AWS Identity and Access Management (IAM): IAM is a critical aspect of AWS security and access management. Understand IAM roles, policies, users, groups, and permissions. Learn how to grant appropriate access to AWS resources while adhering to the principle of least privilege.

5. Familiarize Yourself with AWS SDKs and Developer Tools: Gain knowledge of the AWS SDKs available for various programming languages. Understand how to use them to interact with AWS services programmatically. Additionally, explore developer tools like AWS CLI, AWS SAM, and CloudFormation for infrastructure-as-code deployment.

6. Practice with Sample Questions and Practice Exams: Utilize official AWS sample questions and practice exams to assess your knowledge and identify areas that require further study. This exercise will also help you become familiar with the exam format and improve time management skills.

If you want to learn more about AWS certification online, consider contacting a reputable institute that offers certifications and job placement opportunities. Experienced teachers can help you learn better, and these services are available both online and offline. Take things step by step and consider enrolling in a course if you’re interested.

7. Explore the AWS Well-Architected Framework: The AWS Well-Architected Framework provides architectural best practices for building reliable, secure, efficient, and cost-effective AWS applications. Understand the six pillars of the framework (Operational Excellence, Security, Reliability, Performance Efficiency, Cost Optimization, and Sustainability) and how they apply to AWS services.

8. Enroll in Online Courses and Training: Consider enrolling in online courses specifically designed for the AWS Certified Developer Associate Exam. Platforms like Udemy, A Cloud Guru, and Linux Academy offer comprehensive courses taught by experienced instructors. These courses can provide structured learning and help you grasp complex concepts effectively.

9. Join Study Groups and Discussion Forums: Connect with fellow exam takers and AWS professionals in study groups or online forums. Engaging in discussions, sharing resources, and learning from each other’s experiences can significantly enhance your understanding and provide valuable insights.

10. Review Exam Readiness Resources: Before the exam, review the official exam readiness resources provided by AWS. These resources include exam guides, whitepapers, and FAQs related to the exam topics. Ensure you are aware of any updates or changes to the exam content.

Passing the AWS Certified Developer Associate Exam requires a combination of theoretical knowledge and practical skills. By following this comprehensive guide, dedicating sufficient time for preparation, and staying updated with the latest AWS services and best practices, you can increase your chances of success.

Remember to practice regularly, seek support from study groups, and leverage the available resources.

Good luck on your journey to becoming an AWS Certified Developer Associate!


Web3 gaming platform Immutable recently announced a partnership with Amazon Web Services (AWS). This collaboration is set to revolutionize the world of blockchain gaming, ushering in a new era of possibilities for game developers in the crypto space.

Immutable Joins AWS’s ISV Accelerate Program

According to an Oct. 10 statement, Immutable has recently joined AWS’s ISV Accelerate Program, a sales program designed for companies leveraging AWS services in their products. Immutable’s blockchain gaming technology, particularly Immutable X, which is Ethereum-compatible, will now be seamlessly integrated with AWS’s Activate program.

Immutable (@Immutable) posted on October 10, 2023: “#Immutable 🤝 @amazon Amazon Web Services and Immutable are working together to shape the future of gaming! Through our collaboration with Amazon, we will gain access to a vast pipeline of game studio leads, support for successful deal closures, and up to $100k in AWS cloud…” pic.twitter.com/SX7xfFqrtK

Immutable’s Chief Commercial Officer, Jason Suen, expressed excitement about the partnership, stating, “By joining AWS ISV Accelerate and AWS Activate programs, we’re able to provide our vast network of game developers with a turnkey solution for quickly building and scaling web3 games.”

AWS Head of Startups, John Kearney, highlighted the significance of the gaming sector within the blockchain industry. He noted that numerous studios are exploring crypto technology’s integration into their games and expressed anticipation for the future in this dynamic field. AWS is also actively engaged with the Blockchain Game Alliance, showcasing its commitment to advancing blockchain gaming.

AWS, known for its cloud computing platforms and data storage services, offers various services tailored to the gaming sector. These include cloud gaming services, game servers, game security services, game analytics, video game artificial intelligence, and machine learning offerings.

AWS’s Role in Immutable’s Growth

Immutable’s collaboration with AWS will open up a vast pipeline of game studio leads and provide significant support for deal closures. This partnership offers the potential to simplify the growth and expansion of blockchain games by providing access to AWS resources, which may enhance security for potential clients and, ultimately, facilitate the closing of agreements with prominent game studios globally.

Immutable’s platform is built on Amazon EventBridge and AWS Lambda, harnessing serverless services that use events to connect application components. This architecture empowers the platform to scale effectively, handling a 10x increase in partnered games.

Beyond the collaboration itself, developers seeking to build on Immutable’s blockchain can also use AWS Activate, a program offering significant perks such as technical support, training, and a substantial $100,000 worth of AWS cloud credits.

Meanwhile, Immutable has been making strides with its zero-knowledge Ethereum Virtual Machine (zkEVM), which underwent public testing in collaboration with Polygon Labs in August. The zkEVM promises to lower development costs for game developers while providing the security and network effects associated with the Ethereum ecosystem. Immutable has also gained recognition and valuation, reaching $2.5 billion in March 2022, following a remarkable $200 million Series C funding round primarily led by Singaporean state-owned investment firm Temasek.

0 notes

Video

youtube

AWS Lambda with EventBridge Service | Step-by-Step Tutorial

Full Video Link - https://youtu.be/ShrlSJ5S3yg Check out this new video on the CodeOneDigest YouTube channel! Learn how to set up an AWS Lambda function and how to invoke it using an EventBridge event. @codeonedigest @awscloud @AWSCloudIndia @AWS_Edu @AWSSupport @AWS_Gov @AWSArchitecture
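For readers who want a feel for the topic before watching, here is a minimal sketch of a Python Lambda handler that receives an EventBridge event. It is not taken from the video; the response shape and the example event are assumptions made for illustration. The `source`, `detail-type`, and `detail` fields are part of the standard EventBridge event envelope.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda calls with the EventBridge event as a dict."""
    # Every EventBridge event carries a common envelope around the payload.
    source = event.get("source", "unknown")
    detail_type = event.get("detail-type", "unknown")
    detail = event.get("detail", {})

    # Anything printed here ends up in the function's CloudWatch Logs stream.
    print(json.dumps({"source": source, "detail_type": detail_type}))

    # Hypothetical response shape, chosen only for this example.
    return {
        "handled": True,
        "source": source,
        "detail_type": detail_type,
        "keys": sorted(detail),
    }
```

An EventBridge rule that names this function as its target would invoke it once per matching event.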

0 notes

Text

#AWS#AWS Step Functions#Step Functions#AWS Systems Manager#Systems Manager#SSM#Amazon EventBridge#EventBridge#Approval Flow#Approval Action#AWS CloudFormation#CloudFormation#AWS Lambda#Lambda#CI/CD#DevOps

0 notes

Text

AWS Location Service Simplifies App Location And Mapping

Amazon Location Service

Adding location information and mapping features to apps is simple and safe.

What is AWS Location Service?

AWS Location Service is a mapping service that lets you add geographic information and location features to apps, such as dynamic and static maps, geocoding and place search, route planning, device tracking, and geofencing. You can save money, protect consumer data, and quickly connect Amazon Location Service with other AWS services.

Everything you need to build mapping applications

Create captivating maps

Create digital maps that highlight your locations, show off your data, and provide insights to help you grow your company. Incorporate satellite images, thorough roads, and areas of interest into your maps to provide users an engaging experience. You can quickly overlay your own data to find trends, make wise choices, and produce dynamic, rich experiences.

Elevate your applications with places search

Use name, category, and proximity to quickly search, display, and filter locations, companies, and places. Use autocomplete to streamline address entry and improve data integrity and productivity. Give users dynamic search capabilities so they can locate what they’re looking for more quickly. To offer individualized user experiences, incorporate location-based features such as POI, geocoding, and reverse geocoding.
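A place search of this kind might look roughly like the sketch below, which assumes a place index already exists and uses the `search_place_index_for_text` operation of the Amazon Location client. The index name and query are placeholders, and the client is passed in so the function can be tried without AWS credentials.

```python
def search_places(client, index_name, text, max_results=5):
    """Return (label, [longitude, latitude]) pairs for a free-text query."""
    response = client.search_place_index_for_text(
        IndexName=index_name,   # placeholder: the name of your place index
        Text=text,
        MaxResults=max_results,
    )
    # Each result wraps a Place with a display label and a point geometry.
    return [
        (result["Place"].get("Label"), result["Place"]["Geometry"]["Point"])
        for result in response.get("Results", [])
    ]
```

In a real application the client would come from `boto3.client("location")`. Note that Amazon Location returns coordinates in longitude, latitude order.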

Optimize routes, maximize productivity

Plan efficient routes, speed up deliveries, and cut costs by using real-time traffic, vehicle restrictions, and turn-by-turn directions. Calculate truck-specific routes, order your stops in the best possible sequence, and view the GPS traces of your moving trucks. By visualizing your serviceable region, you can determine the best paths between several origins and destinations and make more informed decisions.

Track your assets in real time
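As a sketch of what a truck route request could look like, the function below calls the `calculate_route` operation of the Amazon Location client and returns a small summary. The route calculator name is a placeholder for a resource you would create beforehand, and the returned dictionary shape is just a convenience for this example.

```python
def truck_route_summary(client, calculator_name, origin, destination):
    """Summarize a truck route between two (longitude, latitude) points."""
    response = client.calculate_route(
        CalculatorName=calculator_name,      # placeholder resource name
        DeparturePosition=list(origin),      # [longitude, latitude]
        DestinationPosition=list(destination),
        TravelMode="Truck",
        DistanceUnit="Kilometers",
        DepartNow=True,                      # take current traffic into account
    )
    summary = response["Summary"]
    return {
        "distance_km": summary["Distance"],
        "minutes": summary["DurationSeconds"] / 60,
    }
```

With `boto3.client("location")` as the client, the same call also returns per-leg steps under `response["Legs"]` if turn-by-turn detail is needed.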

Turn on asset tracking and monitoring in real time to get complete insight into your company’s resources and operations. Transform data into useful insights to maximize operational efficiency, simplify productivity, and enhance asset usage.

Unlock the power of location-based monitoring

Use location-based monitoring to trigger notifications automatically, so you can react to situations and lower risks. Detect when people or vehicles enter or leave designated areas. Filter for relevant events to improve operations and keep costs under control. Gain end-to-end visibility and turn data into actionable insights to boost productivity and asset usage.
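Geofence notifications are delivered as Amazon EventBridge events, so one way to act on them is an EventBridge rule with a pattern like the one below plus a small handler. This is a sketch: the message format is invented for illustration, and the field names (`EventType`, `DeviceId`, `GeofenceId`) follow the geofence event format as the author of this example understands it.

```python
# Event pattern for an EventBridge rule that matches geofence exits only.
GEOFENCE_EXIT_PATTERN = {
    "source": ["aws.geo"],
    "detail-type": ["Location Geofence Event"],
    "detail": {"EventType": ["EXIT"]},
}


def describe_geofence_event(event):
    """Turn a geofence event into a short alert line, or None if unrelated."""
    detail = event.get("detail", {})
    event_type = detail.get("EventType")
    if event.get("source") != "aws.geo" or event_type not in ("ENTER", "EXIT"):
        return None
    verb = "left" if event_type == "EXIT" else "entered"
    return f"Device {detail.get('DeviceId')} {verb} geofence {detail.get('GeofenceId')}"
```

The returned string could then be forwarded to whatever notification channel the application already uses.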

Principal advantages

An outline of the main advantages of AWS Location Service is given in this section. The primary advantages are:

Access high-quality geospatial data

Amazon Location Service provides a complete solution for incorporating geographic features into applications, with an emphasis on four main advantages: smooth AWS integration, data ownership and privacy, cost savings, and quick development. The service allows you to swiftly integrate location-based functionality while keeping control over your data and lowering operating expenses by utilizing AWS infrastructure and security protections.

Integrate seamlessly

You may swiftly deploy and expedite application development using AWS Location Service’s smooth connections with AWS services. To expedite development processes, speed up deployment, and offer strong monitoring, security, and compliance, Amazon Location easily interacts with a range of AWS services. It integrates with Amazon CloudWatch to monitor metrics, AWS CloudTrail to document account activity, Amazon EventBridge for event-driven architectures using AWS Lambda, and AWS CloudFormation to provide resources consistently.

Additionally, Amazon Location uses resource tagging for effective administration and organization and AWS Identity and Access Management (IAM) for safe access restrictions. Faster development cycles, operational visibility, and adherence to security best practices are made possible by this thorough integration.

Own your data

You may lower the security risks of your application, safeguard critical data, and preserve user privacy with AWS Location Service. To guarantee the privacy and security of your company's data, Amazon Location offers strong data management, permissions, and secure access safeguards. The service anonymizes queries, encrypts sensitive location data both in transit and at rest, and integrates with AWS Key Management Service (AWS KMS) so that you can use your own encryption keys.

Your data is entirely yours, and no third party may use it for marketing or reselling. Using AWS’s well-established security services, Amazon Location also easily connects with AWS Identity and Access Management and Amazon Cognito for safe authentication and access restrictions, protecting your data and apps.

Reduce operating costs

You may get high-quality, reasonably priced geographic data from reliable data sources using AWS Location Service. There is no minimum cost or upfront commitment required with Amazon Location. You pay only for what you use: location data is billed each time your application submits a request to the service.

Rapid development

Developers may easily include geolocation data and functionality into their apps with AWS Location Service. Amazon Location removes the need to create and manage intricate location data infrastructure by offering fully managed APIs. Additionally, developers may use the service’s smooth integration with AWS services like AWS CloudFormation, CloudWatch, CloudTrail, and EventBridge to create effective, event-driven architectures.

Prototyping, iteration, and deployment of location-based experiences are sped up by this integration, which simplifies the development process. Businesses can quickly add geographic capabilities to their services using Amazon Location, cutting down on time-to-market and freeing up developers to concentrate on creating core products.

Use cases

Standardize addresses and clean-up your data

Make sure that the format of the address data is consistent. Remove duplicates from data sets and fix any spelling or abbreviation mistakes. Know where your customers are so that you can properly inform resource planning, marketing efforts, and efficient delivery.

Optimize your deliveries

To save transportation costs and increase on-time deliveries, find the optimal delivery route between sources and destinations based on trip time, distance, road features, and real-time traffic.

Visualize your data on interactive maps

Incorporate maps into your apps to provide experiences that are location-based. Help users locate particular addresses, search for areas of interest, and visualize company locations. To engage your consumers, turn on geotagging and location sharing with ease.

Track and trace your assets

Improve the traceability and transparency of property, personnel, and supply chain resources to maximize utilization, support data-driven decision-making, and reduce the risk factors that drive costs.

Read more on Govindhtech.com

#AWSLocationService#AmazonLocation#AWSservices#AmazonLocationService#mapping#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

0 notes

Text

Leading Web3 gaming platform Immutable has announced a partnership with Amazon Web Services (AWS) in a bid to boost the Web3 gaming ecosystem. According to the blockchain gaming firm, the partnership will give it access to a pipeline of game studio leads and support for successful deal closures.

Immutable AWS Partnership

Immutable announced the partnership on X (formerly Twitter), stating that Immutable and Amazon Web Services are working together to shape the future of gaming.

“Amazon Web Services and Immutable are working together to shape the future of gaming! Through our collaboration with Amazon, we will gain access to a vast pipeline of game studio leads, support for successful deal closures, and up to $100k in AWS cloud credits per Immutable customer. When data from the cloud is validated on Ethereum, a viable model for blockchain gaming gets realized. Immutable has joined Amazon’s ISV Accelerate Program! This co-sell program allows us to access expert resources from AWS to help secure prospective customers and ultimately close deals with major game studios worldwide.”

In a blog post published on October 10, Immutable revealed that Amazon Web Services had added it to its ISV (independent software vendor) Accelerate Program. The program is for companies that provide software solutions that run on or integrate with Amazon Web Services. Furthermore, developers looking to build on the Immutable blockchain can join AWS Activate, which provides perks such as technical support, training, and $100,000 worth of AWS cloud credits.

The partnership will provide a significant boost to Web3 gaming, a fast-growing trend that uses blockchain technology to give players true ownership of in-game assets, which they can then trade with other players. Nearly 100 million gamers are estimated to take to Web3 gaming over the next couple of years.

AWS Committed To Expanding Web3 Gaming

John Kearney, the AWS head of Startups Australia, stated that Immutable is an excellent example of a local Australian startup that has gone global. Kearney also reaffirmed Amazon Web Services’ commitment to helping expand Web3 game development using its existing infrastructure.

Immutable has been built using Amazon EventBridge and AWS Lambda, serverless services that use events to connect application components. This has allowed the platform to scale to handle a 10x increase in partnered games. The blog post stated,

“Built with Amazon EventBridge, a serverless service that uses events to connect application components together, and AWS Lambda, a serverless compute service, Immutable’s serverless architecture has allowed the platform to scale effectively to support its rapidly expanding product suite. Through these services, Immutable has increased its scalability to handle a 10x increase in partnered games and improved reliability to enhance the customer experience through increased security and over 99 percent uptime.”

Centralization Concerns About Gaming On Ethereum

There have been several concerns about the centralization of gaming and Ethereum, along with over-reliance on Amazon. Amazon is a market leader and has captured around a third of the cloud services market. According to a report published in 2022, a majority of the active Ethereum nodes were running through centralized web providers such as Amazon Web Services.

However, Michael Powell, the Immutable product marketing lead, addressed some of the concerns, stating,

“A lot of blockchain purists are very big into the idea of decentralization and that everything has to be on-chain, and that’s a massive deviation from where game developers actually build.”

Immutable-Polygon Collaboration

Immutable also began the public testing of its zero-knowledge Ethereum Virtual Machine (zkEVM) in collaboration with Polygon Labs.

According to Immutable, the zero-knowledge Ethereum Virtual Machine will help lower developmental costs for game developers while ensuring security and providing the network effects that come with the larger Ethereum ecosystem. Immutable was valued at $2.5 billion in March 2022 after a successful $200 million Series C funding round. Temasek, a Singaporean state-owned investment firm, led the round.

0 notes

Text

Integrating ROSA Applications with AWS Services (CS221)

Introduction

Red Hat OpenShift Service on AWS (ROSA) is a fully managed OpenShift solution that allows organizations to deploy, manage, and scale containerized applications in the AWS cloud. One of the biggest advantages of ROSA is its seamless integration with AWS services, enabling developers to build robust, scalable, and secure applications.

In this blog, we will explore how ROSA applications can integrate with AWS services like Amazon RDS, S3, Lambda, IAM, and CloudWatch, ensuring high performance, security, and automation.

1️⃣ Why Integrate ROSA with AWS Services?

By leveraging AWS-native services, ROSA users can:

✅ Reduce operational overhead with managed services

✅ Improve scalability with auto-scaling and elastic infrastructure

✅ Enhance security with AWS IAM, security groups, and private networking

✅ Automate deployments using AWS DevOps tools

✅ Optimize costs with pay-as-you-go pricing

2️⃣ Key AWS Services for ROSA Integration

1. Amazon RDS for Persistent Databases

ROSA applications can connect to Amazon RDS (PostgreSQL, MySQL, MariaDB) for reliable and scalable database storage.

Use AWS Secrets Manager to securely store database credentials.

Implement VPC peering for private connectivity between ROSA clusters and RDS.
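As a sketch of the Secrets Manager approach, the function below reads a secret with `get_secret_value` and builds a PostgreSQL connection URL from it. The secret name is whatever you chose when storing it, and the JSON keys (`username`, `password`, `host`, `port`, `dbname`) are an assumption about how the secret was written; adjust them to match your own secret.

```python
import json
from urllib.parse import quote


def database_url_from_secret(client, secret_id):
    """Build a PostgreSQL URL from a JSON secret held in Secrets Manager."""
    raw = client.get_secret_value(SecretId=secret_id)["SecretString"]
    secret = json.loads(raw)

    # Percent-encode credentials so characters like "@" or ":" stay valid.
    user = quote(secret["username"], safe="")
    password = quote(secret["password"], safe="")
    return (
        f"postgresql://{user}:{password}"
        f"@{secret['host']}:{secret['port']}/{secret['dbname']}"
    )
```

Inside a pod, the client would typically be `boto3.client("secretsmanager")`, and the resulting URL should be kept in memory rather than written to logs.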

2. Amazon S3 for Object Storage

Store logs, backups, and application assets using Amazon S3.

Utilize S3 bucket policies and IAM roles for controlled access.

Leverage AWS SDKs to interact with S3 storage from ROSA applications.
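For example, an application running on ROSA could write its logs to S3 with the SDK's `put_object` call. The sketch below is one possible layout; the bucket name, the `logs/<app>/<date>/` key scheme, and the choice of SSE-S3 encryption are assumptions made for illustration.

```python
from datetime import datetime, timezone


def upload_log(client, bucket, app_name, body, now=None):
    """Store a log document under a date-partitioned key and return the key."""
    now = now or datetime.now(timezone.utc)
    # Hypothetical key layout: logs/<app>/<YYYY>/<MM>/<DD>/<HHMMSS>.log
    key = f"logs/{app_name}/{now:%Y/%m/%d}/{now:%H%M%S}.log"
    client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body.encode("utf-8"),
        ServerSideEncryption="AES256",  # ask S3 to encrypt the object at rest
    )
    return key
```

With `boto3.client("s3")` as the client, access is governed by whatever IAM role the pod runs under, which is where the bucket policies and IAM roles mentioned above come in.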

3. AWS Lambda for Serverless Functions

Trigger Lambda functions from ROSA apps for event-driven automation.

Examples include processing data uploads, invoking ML models, or scaling workloads dynamically.
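Triggering a function from application code can be as small as the sketch below, which uses the Lambda client's `invoke` call with the asynchronous `Event` invocation type. The function name and payload are placeholders.

```python
import json


def trigger_async(client, function_name, payload):
    """Queue an asynchronous Lambda invocation; True if Lambda accepted it."""
    response = client.invoke(
        FunctionName=function_name,      # placeholder function name or ARN
        InvocationType="Event",          # fire-and-forget, no result returned
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # Lambda answers 202 when an asynchronous invocation has been queued.
    return response["StatusCode"] == 202
```

A call such as `trigger_async(boto3.client("lambda"), "process-upload", {"key": "uploads/a.csv"})` returns as soon as the event is queued, so the ROSA workload does not wait for the function to finish.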

4. AWS IAM for Role-Based Access Control (RBAC)

Use IAM roles and policies to manage secure interactions between ROSA apps and AWS services.

Implement fine-grained permissions for API calls to AWS services like S3, RDS, and Lambda.
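To make "fine-grained" concrete, here is a sketch of an IAM policy document that limits an application to one prefix of one bucket. The bucket and prefix are placeholders, and the statement IDs are arbitrary labels.

```python
import json


def s3_prefix_policy(bucket, prefix):
    """IAM policy allowing list, read, and write under a single S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOnlyThisPrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadWriteObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


def policy_json(bucket, prefix):
    """Serialized form, ready to pass to IAM as a policy document."""
    return json.dumps(s3_prefix_policy(bucket, prefix), indent=2)
```

Note that `s3:ListBucket` applies to the bucket ARN while the object actions apply to the object ARN, which is why the policy needs two statements.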

5. Amazon CloudWatch for Monitoring & Logging

Use CloudWatch Metrics to monitor ROSA cluster health, application performance, and scaling events.

Integrate CloudWatch Logs for centralized logging and troubleshooting.

Set up CloudWatch Alarms for proactive alerting.
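A minimal sketch of the metrics-plus-alarm idea: one function publishes a custom error count with `put_metric_data`, and another builds the arguments for `put_metric_alarm`. The namespace `RosaApps`, the metric name `ErrorCount`, and the thresholds are hypothetical values chosen for this example.

```python
NAMESPACE = "RosaApps"  # hypothetical custom namespace


def publish_error_count(client, app_name, count):
    """Send one data point for the application's error counter."""
    client.put_metric_data(
        Namespace=NAMESPACE,
        MetricData=[{
            "MetricName": "ErrorCount",
            "Dimensions": [{"Name": "App", "Value": app_name}],
            "Value": float(count),
            "Unit": "Count",
        }],
    )


def error_alarm_params(app_name, threshold=5):
    """Keyword arguments for put_metric_alarm: alert after 3 bad minutes."""
    return {
        "AlarmName": f"{app_name}-high-error-count",
        "Namespace": NAMESPACE,
        "MetricName": "ErrorCount",
        "Dimensions": [{"Name": "App", "Value": app_name}],
        "Statistic": "Sum",
        "Period": 60,                 # seconds per evaluation window
        "EvaluationPeriods": 3,       # consecutive windows over threshold
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }
```

With a real client the alarm would be created by `boto3.client("cloudwatch").put_metric_alarm(**error_alarm_params("web"))`, optionally adding `AlarmActions` that point at an SNS topic.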

3️⃣ Steps to Integrate AWS Services with ROSA

Step 1: Configure IAM Roles

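1️⃣ Create an IAM role with the necessary AWS permissions.

2️⃣ Associate the role with the service account your workload runs under, so that its pods receive temporary credentials for that role (on ROSA clusters that use AWS STS, this is done through IAM roles for service accounts).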

Step 2: Secure Network Connectivity

1️⃣ Use AWS PrivateLink or VPC Peering to connect ROSA to AWS services privately.

2️⃣ Configure security groups to restrict access to the required AWS endpoints.

Step 3: Deploy AWS Services & Connect

1️⃣ Set up Amazon RDS, S3, or Lambda with proper security configurations.

2️⃣ Update your OpenShift applications to communicate with AWS endpoints via SDKs or API calls.

Step 4: Monitor & Automate

1️⃣ Enable CloudWatch monitoring for logs and metrics.

2️⃣ Use Amazon EventBridge to trigger automation workflows based on application events.
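Publishing an application event to EventBridge could look like the following sketch, which uses the `put_events` call. The source name `rosa.webapp` and the event names are invented for illustration; any rule whose pattern matches them would then start the automation.

```python
import json


def publish_app_event(client, detail_type, detail, bus_name="default"):
    """Publish one custom event; True if EventBridge accepted it."""
    response = client.put_events(
        Entries=[{
            "Source": "rosa.webapp",        # hypothetical source identifier
            "DetailType": detail_type,
            "Detail": json.dumps(detail),   # detail must be a JSON string
            "EventBusName": bus_name,
        }]
    )
    # put_events can partially fail, so check the failure counter explicitly.
    return response["FailedEntryCount"] == 0
```

For instance, `publish_app_event(boto3.client("events"), "DeploymentFinished", {"version": "1.4.2"})` emits an event that a rule can route to Lambda, Step Functions, or SNS.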

4️⃣ Use Case: Deploying a Cloud-Native Web App with ROSA & AWS

Scenario: A DevOps team wants to deploy a scalable web application using ROSA and AWS services.

🔹 Frontend: Runs on OpenShift pods behind an AWS Application Load Balancer (ALB)

🔹 Backend: Uses Amazon RDS PostgreSQL for structured data storage

🔹 Storage: Amazon S3 for storing user uploads and logs

🔹 Security: AWS IAM manages access to AWS services

🔹 Monitoring: CloudWatch collects logs & triggers alerts for failures

By following the above integration steps, the team ensures high availability, security, and cost-efficiency while reducing operational overhead.

Conclusion

Integrating ROSA with AWS services unlocks powerful capabilities for deploying secure, scalable, and high-performance applications. By leveraging AWS-managed databases, storage, serverless functions, and monitoring tools, DevOps teams can focus on innovation rather than infrastructure management.

🚀 Ready to build cloud-native apps with ROSA and AWS? Start your journey today!

🔗 Need expert guidance? www.hawkstack.com

0 notes

Text

Your Trusted Cloud Optimization Planning Partner in the USA

In today's fast-paced digital landscape, harnessing the potential of cloud computing is essential for businesses seeking to stay competitive and agile. Amazon Web Services (AWS) has emerged as a leader in the cloud industry, offering a wide array of services that empower organizations to scale, innovate, and streamline their operations. Making the most of AWS and its cloud event services calls for a reliable partner. In the USA, one such partner stands out as a beacon of expertise and innovation: the Free AWS Cloud Event Services USA and Cloud Optimization Planning Company.

Unveiling the AWS Cloud Event Services Advantage

AWS Cloud Event Services form the backbone of modern cloud-based applications and infrastructure. They enable businesses to seamlessly integrate, monitor, and respond to events across their AWS environments. These services facilitate real-time data processing, event-driven architectures, and automation, paving the way for scalability, resilience, and cost efficiency. Key AWS Cloud Event Services include Amazon EventBridge, Amazon CloudWatch Events, and AWS Step Functions.

1. Amazon EventBridge: Amazon EventBridge provides a serverless event bus that connects applications using events. It simplifies event-driven architecture by routing events from various sources to targets such as AWS Lambda functions or Amazon SNS topics. This enables businesses to build responsive and decoupled systems.

2. Amazon CloudWatch Events: Amazon CloudWatch Events, the predecessor of EventBridge (the two share the same underlying API), makes it easy to respond to changes in your AWS resources. It can trigger automated responses such as scaling, remediation, or notifications based on predefined rules. This service enhances operational efficiency and system reliability.

3. AWS Step Functions: AWS Step Functions is a serverless orchestration service that allows businesses to coordinate distributed applications and microservices. It makes it simple to build and run applications that respond to various events and workflows.
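The three services above are often combined: a rule matches an event and starts a Step Functions workflow. The sketch below shows one hypothetical arrangement, in which a stopped EC2 instance triggers a two-step remediation flow. The state names, rule name, and all ARNs are placeholders, and the workflow itself is invented for illustration.

```python
import json

# Rule pattern: EC2 instances that enter the "stopped" state.
EC2_STOPPED_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped"]},
}


def remediation_workflow(notify_arn, restart_arn):
    """Amazon States Language definition: notify, then restart with retries."""
    return {
        "Comment": "Hypothetical remediation flow for a stopped instance",
        "StartAt": "Notify",
        "States": {
            "Notify": {"Type": "Task", "Resource": notify_arn, "Next": "Restart"},
            "Restart": {
                "Type": "Task",
                "Resource": restart_arn,
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 5,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }],
                "End": True,
            },
        },
    }


def wire_rule(events_client, rule_name, state_machine_arn, role_arn):
    """Create the rule and point it at the state machine; True on success."""
    events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(EC2_STOPPED_PATTERN),
        State="ENABLED",
    )
    result = events_client.put_targets(
        Rule=rule_name,
        # RoleArn is the role EventBridge assumes to start the execution.
        Targets=[{"Id": "remediation", "Arn": state_machine_arn, "RoleArn": role_arn}],
    )
    return result.get("FailedEntryCount", 0) == 0
```

The state machine itself would be created separately, for example with `boto3.client("stepfunctions").create_state_machine(name=..., definition=json.dumps(remediation_workflow(...)), roleArn=...)`, and `wire_rule` would receive `boto3.client("events")`.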

The Free AWS Cloud Event Services and Cloud Optimization Planning Company Advantage

For businesses in the USA, optimizing AWS Cloud Event Services and achieving peak performance across the AWS ecosystem can be a daunting task. This is where the Free AWS Cloud Event Services and Cloud Optimization Planning Company comes into play. Here's why they are your ideal partner:

1. Expertise in AWS Cloud Event Services: With a team of certified AWS professionals, this company possesses in-depth knowledge and hands-on experience with AWS Cloud Event Services. They can design, implement, and manage event-driven architectures tailored to your business needs.

2. Comprehensive Cloud Optimization Planning: The company offers holistic cloud optimization planning services, ensuring that your AWS infrastructure is cost-efficient, secure, and high-performing. They conduct thorough assessments and provide actionable recommendations.

3. Proactive Monitoring and Management: Continuous monitoring and management of AWS Cloud Event Services ensure that your systems are always responsive and efficient. They proactively identify and address issues, minimizing downtime and maximizing ROI.

4. Scalability and Flexibility: As your business grows, the company can scale AWS resources and event-driven solutions to accommodate changing demands. This scalability ensures that your cloud infrastructure keeps pace with your evolving requirements.

5. Cost Optimization: The company specializes in cost optimization, helping you avoid overspending on AWS services. By analyzing your usage patterns and implementing cost-saving strategies, they ensure you get the most value from your AWS investments.

In conclusion, AWS Cloud Event Services offer a powerful framework for modern businesses to build dynamic, event-driven applications. To unlock their full potential, partnering with a dedicated AWS Cloud Event Services and Cloud Optimization Planning Company in the USA is paramount. With their expertise, you can harness the capabilities of AWS Cloud Event Services while optimizing your cloud environment for efficiency, scalability, and cost-effectiveness. Embrace the future of cloud computing with the right partner by your side.

0 notes