#azureai

Text

Artificial Intelligence - Cooperation between NVIDIA and Microsoft

Microsoft and NVIDIA have announced major integrations in generative artificial intelligence for enterprises around the world. Learn more at: https://news.microsoft.com/2024/03/18/microsoft-and-nvidia-announce-major-integrations-to-accelerate-generative-ai-for-enterprises-everywhere/

______

Image rights: © Microsoft (via https://news.microsoft.com/)

#MSFT#Microsoft#NVDA#NVIDIA#IA#AI#MicrosoftAzure#Azure#Chip#SuperChip#GraceBlackwell#Omniverse#Copilot#AzureAI#Empresas#Business#enterprise#developer#healthcare#digitalization#europe#world#technology#future#futurenow

Text

Grace Hopper Successor: Nvidia’s Most Powerful Blackwell Chip

The AI chipmaker unveiled its Blackwell chip line in March as the successor to its previous flagship processor, the Grace Hopper Superchip, which was designed to accelerate generative AI applications.

The launch of chipmaker Nvidia’s upcoming artificial intelligence processors may be delayed by three months or more because of design flaws, the technology publication The Information reported.

Citing sources who help produce Nvidia’s chips and server hardware, the article said the setback could affect customers such as Meta Platforms, Microsoft, and Alphabet’s Google, which have ordered tens of billions of dollars’ worth of the chips.

An Nvidia representative responded to the report via email, saying, “As we’ve stated before, Hopper demand is very strong, broad Blackwell sampling has started, and production is on track to ramp in the second half.”

Citing a Microsoft employee and another person familiar with the matter, The Information reported that Nvidia told Microsoft and another major cloud service provider this week of a delay in the production of its most powerful AI chip in the Blackwell series.

The Scarcity of AI Chips Is Serious

The tech industry is reeling from a recent report by The Information revealing possible delays to Nvidia’s upcoming AI chips.

The setback could have wide-ranging consequences, particularly for big tech companies such as Microsoft, Google, and Meta.

Nvidia, the undisputed leader in AI chips, has been at the forefront of the AI revolution. Although its GPUs were originally designed for gaming, they have proven exceptionally well suited to the intricate calculations needed to train and run AI models. The company has capitalized on this advantage to become the preferred supplier for startups and major tech companies alike.

The Blues at Blackwell

The report points to specific problems with Nvidia’s Blackwell line of AI chips. Capable of powering increasingly complex and demanding AI applications, the Blackwell chips were expected to be the next major leap in AI performance. According to the report, however, design flaws have surfaced that could delay their launch by three months or more.

The delay could ripple across the entire tech industry. For these companies, AI drives innovation in search, advertising, virtual assistants, and autonomous vehicles, and without high-performance AI chips they may struggle to develop new products and services.

Microsoft: AI Copilot and Cloud Computing

The timing of the delay could hardly be worse for Microsoft. The company has invested heavily in AI, with Copilot and its Azure cloud platform at the centre of its strategy. Azure AI is already a significant source of revenue, and Copilot is seen as having the potential to revolutionize productivity software. Both efforts depend heavily on powerful AI chips to succeed at scale.

A delay in Nvidia’s chips could limit Azure’s ability to offer customers cutting-edge AI services, which could slow the adoption of AI-powered apps and potentially push users toward rival providers. Without access to the newest AI hardware, development of Copilot, which aims to transform human-computer interaction, could also be hampered.

Google: Search Dominance and AI Research

Google, another AI pioneer, relies on Nvidia GPUs in its data centres. Its search engine generates billions of dollars in revenue, and AI algorithms help improve it. DeepMind, Google’s AI research lab, is also at the forefront of AI technology.

A shortage of Nvidia’s newest chips could make it harder for Google to maintain its lead in search and to advance its AI research. Rivals with access to more advanced hardware could gain an edge in the AI race as a result.

Meta: AI-Powered Feeds and Ambitions for the Metaverse

Meta, formerly Facebook, aims to build the metaverse, a virtual environment where users can interact with one another and with virtual objects. Generating realistic worlds and experiences for it demands vast processing capacity, including for artificial intelligence.

The company also relies heavily on AI to personalize the content users see in their feeds. A delay in Nvidia’s chips could slow Meta’s progress in both areas, affecting how the metaverse develops and how users interact with its platforms.

The Wider Impact

Beyond the major players, the delay could affect the tech sector as a whole. Businesses that rely on AI to power their products and services may find it harder to grow, and the auto industry, which increasingly uses AI for self-driving vehicles, could also feel the effects.

Nvidia’s Response and Possible Alternatives

Without directly addressing the reported delay, Nvidia has said that production is on track to ramp in the second half of the year. The company is likely working hard to fix the design flaws and bring the Blackwell chips to market as soon as possible.

In the meantime, other chipmakers such as AMD and Intel could benefit. Both have invested heavily in AI chips and might be able to fill part of the gap, though they are unlikely to match Nvidia’s scale and performance for now.

The Path Ahead

The reported delay to Nvidia’s AI processors is a major blow to the tech industry, and it underscores how much AI progress depends on hardware. As demand for AI continues to rise, chipmakers must keep investing in research and development to meet their customers’ growing needs.

The coming months will be critical for both Nvidia and its customers. Customers need strategies to limit the impact on their AI initiatives, while Nvidia must execute flawlessly to recover from the delay. How this plays out could shape the future of AI and of the tech sector as a whole.

Potential sections to follow:

More in-depth analysis of particular AI applications (including recommendation systems, computer vision, and natural language processing) that could be hampered by the delay

An examination of possible substitute chip manufacturers and their competencies

Analysing the long-term effects on the market for AI chips

Examining the moral issues surrounding the supply chain and the creation of AI chips

Read more on govindhtech.com

#GraceHopperSuperchip#MostPowerfulBlackwellSeries#Nvidia#ai#gpu#AIchips#AIapplications#artificialintelligence#NvidiaAIChip#Microsoft#AImodel#virtualassistants#AICopilot#cloudplatform#NvidiaCPU#cpu#AzureAI#AIhardware#computervision#supplychain#technology#technews#news#govindhtech

Text

Elevate Your Educational Institution with Managed IT Services from ECF Data Solutions!

At ECF Data Solutions, we specialize in tailored IT solutions designed specifically for the education sector. Safeguard student data and ensure seamless access to essential teaching and learning tools with our Managed IT Services. Let us handle the tech complexities while you prioritize your students' growth and success. Discover how ECF Services can assist you in optimizing your educational management:

Cloud management

AI for Education Tools

Managed IT Services

Powered by Azure AI, our innovative solutions revolutionize education by providing personalized insights, optimizing administrative processes, and fostering inclusivity. Experience the transformative power of Azure AI Translator for language translation, Azure OpenAI Service for virtual learning assistants, and Azure AI Content Safety for content filtering. Learn more about our comprehensive IT solutions and propel your institution towards excellence with ECF Data Solutions!

Contact us: +1(702) 780-7900

Video

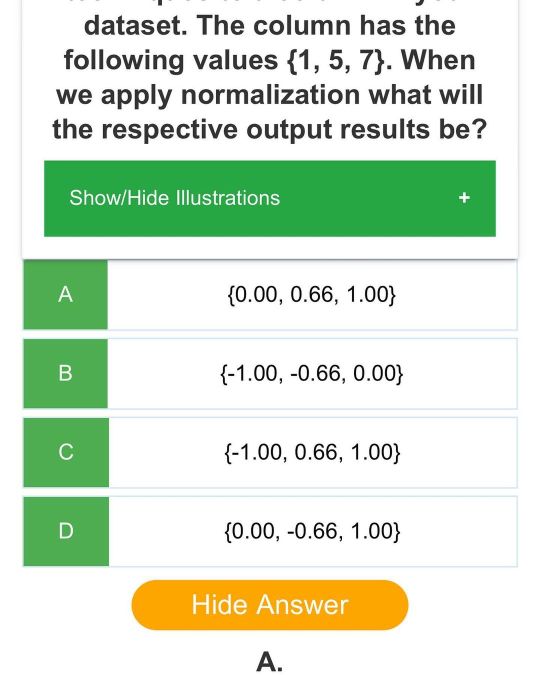

Azure AI Fundamentals (AI-900) Part 4: Practice Question

Text

Microsoft Fabric: The Future of Enterprise Analytics.

Mode of Training: Online

Contact us: +91 9989971070.

Join us on WhatsApp: https://bit.ly/47eayBz

Watch Demo Video: https://youtu.be/Fpoe2YF_uW8?si=hTCKsNDgcfM-RoTh

Visit: https://visualpath.in/microsoft-fabric-online-training-hyderabad.html

Subscribe to the Visualpath channel for regular updates on upcoming courses: https://www.youtube.com/c/Visualpath

#Microsoft#fabric#Azure#microsoftazure#AzureAI#OnlineLearning#training#trendingcourses#handsonlearning#visualpathedu#softwaredevelopment#PowerBI#DataFactory#synapse#dataengineering#DataAnalytics#DataWarehouse#datascience#Youtube

Text

"Unleashing Innovation: NVIDIA H100 GPUs and Quantum-2 InfiniBand on Microsoft Azure"

#aiinnovation#highperformancecomputing#nvidiah100#azureai#quantum2infiniband#microsoftazure#futureoftechnology#gpucomputing#innovationinai#techupdates#aIoncloud#cloudcomputing#hpc#aiinsights#cuttingedgetech#supercomputing#nvidiaquantum2#aIonazure#techbreakthrough#jjbizconsult

Text

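Sega Sammy HD Uses Generative AI to Develop the "Dream Switch" Picture-Book Projector for Young Children: Pre-Training on Toy Designs with Azure AI

Introducing Generative AI Dramatically Transforms Toy Development

Sega Sammy Holdings (HD) has begun using generative AI to develop its "Dream Switch" series of picture-book projectors for young children. This generative AI, from US-based Microsoft's "Azure…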

Photo

Machine Learning For Dummies

iOS: https://apps.apple.com/us/app/machine-learning-for-dummies-p/id1610947211

Windows: https://www.microsoft.com/en-ca/p/machine-learning-for-dummies-ml-ai-ops-on-aws-azure-gcp/9p6f030tb0mt

Web/Android: https://www.amazon.com/gp/product/B09TZ4H8V6

https://www.instagram.com/p/CejSHqnp0-3/?igshid=NGJjMDIxMWI=

#machinelearning#ai#artificialintelligence#ml#machinelearningfordummies#mlops#nlp#computervision#awsmachinelearning#azureai#gcpml

Link

Azure Functions for Python is now generally available (GA), and it works great with Cognitive Search!

Photo

R Support in Azure Machine Learning

In this episode we will discuss ...

#androiddevelopment #angular #azureai #azuremachinelearning #azureml #azuremlr #azuremlrsdk #c #css #dataanalysis #datascience #deeplearning #developer #development #docker #iosdevelopment #java #javascript #machinelearning #microsoft #microsoftai #node.js #python #react #unity #webdevelopment

Text

Updates to Azure AI: Phi-3 Fine-Tuning and New Generative AI Models

Introducing new generative AI models, Phi-3 fine-tuning, and other Azure AI enhancements to help businesses scale and personalise AI applications.

All sectors are being transformed by artificial intelligence, which is also creating new opportunities for growth and innovation. But developing and deploying AI applications at scale requires a reliable, flexible platform that can handle the complex and varied needs of modern companies and let them build solutions grounded in their organisational data. Microsoft is sharing the following enhancements to help developers use the Azure AI toolchain to build customised AI solutions more quickly and freely:

With serverless fine-tuning, developers can quickly and easily customise the Phi-3-mini and Phi-3-medium models for cloud and edge scenarios without having to arrange for compute.

Updates to Phi-3-mini, including significant improvements in core quality, instruction following, and structured output, let developers build with a more performant model at no additional cost.

This month, OpenAI (GPT-4o mini), Meta (Llama 3.1 405B), and Mistral (Large 2) shipped their newest models to Azure AI on the same day they were released, giving customers more choice and flexibility.

Unlocking value through custom and innovative models

In April, Microsoft unveiled Phi-3, a family of compact, open models. Phi-3 models are Microsoft’s most capable and cost-effective small language models (SLMs), outperforming models of the same size and the next size up. As developers seek to tailor AI systems to specific business needs and improve the quality of responses, fine-tuning a small model is a great option that doesn’t sacrifice efficiency. Developers can now fine-tune Phi-3-mini and Phi-3-medium with their own data, building AI experiences that are more affordable, safe, and relevant to their users.

Thanks to their small compute footprint and compatibility with both cloud and edge, Phi-3 models are well suited to fine-tuning that improves base-model performance across a variety of scenarios, such as learning a new skill or task (e.g., tutoring) or improving the consistency and quality of responses (e.g., the tone or style of chat/Q&A responses). Phi-3 is already being adapted for new use cases.

Microsoft and Khan Academy are collaborating to improve resources for educators and learners worldwide. As part of the partnership, Khan Academy is experimenting with Phi-3 to improve math tutoring and uses Azure OpenAI Service to power Khanmigo for Teachers, a pilot AI-powered teaching assistant for educators in 44 countries. A study from Khan Academy, which includes benchmarks from a fine-tuned version of Phi-3, shows how various AI models perform when assessing mathematical accuracy in tutoring scenarios.

According to preliminary data, Phi-3 fared better than the majority of other top generative AI models at identifying and fixing mathematical errors made by students.

Microsoft has also optimised Phi-3 for on-device use. In June it launched Phi Silica to give developers a powerful, trusted foundation for building apps with safe, secure AI experiences. Built specifically for the neural processing units (NPUs) in Copilot+ PCs, Phi Silica extends the Phi family of models. It is a state-of-the-art small language model (SLM) for the NPU that ships in the box, exclusively with Windows.

Try Phi-3 fine-tuning in Azure AI today

Azure AI’s Models-as-a-Service (serverless endpoint) capability is now generally available. In addition, Phi-3-small is now available through a serverless endpoint, so developers can quickly start building AI applications without having to manage the underlying infrastructure.

Phi-3-vision, the multimodal Phi-3 model, was unveiled at Microsoft Build and is available in the Azure AI model catalogue; it will soon be available through a serverless endpoint as well. Phi-3-small (7B parameters) is offered in two context lengths, 128K and 8K, while Phi-3-vision (4.2B parameters) is optimised for interpreting charts and diagrams and can be used to generate insights and answer questions.

The community’s response to Phi-3 has been excellent. Last month, Microsoft released an update to Phi-3-mini that significantly improves core quality and post-training. After retraining, the model showed substantially better instruction following and support for structured output. The update also added support for <|system|> prompts, improved reasoning, and raised the quality of multi-turn conversations.

Microsoft also continues to improve the safety of Phi-3. To make the Phi-3 models safer, Microsoft used an iterative “break-fix” approach of vulnerability identification, red teaming, and multiple rounds of testing and improvement, an approach recently described in a research study. This strategy reduced harmful content by 75% and improved the models’ performance on responsible AI benchmarks.

Expanding model choice: more than 1,600 models already available in Azure AI

Microsoft is committed to offering the widest range of open and frontier models, together with cutting-edge tooling, through Azure AI to help customers meet their specific cost, latency, and design requirements. Since the Azure AI model catalogue debuted last year, more than 1,600 models from providers such as AI21, Cohere, Databricks, Hugging Face, Meta, Mistral, Microsoft Research, OpenAI, Snowflake, Stability AI, and others have been added, making it the widest collection to date. This month, Microsoft added Mistral Large 2, Meta Llama 3.1 405B, and OpenAI’s GPT-4o mini via Azure OpenAI Service.

Building on this momentum, Microsoft announced that Cohere Rerank is now available on Azure. Accessing Cohere’s enterprise-ready language models on Azure AI’s robust infrastructure lets businesses integrate state-of-the-art semantic search technology into their applications easily, consistently, and securely. This integration lets users deliver better search results in production by combining the scalability and flexibility of Azure with Cohere’s highly effective, performant language models.

Atomicwork, a digital workplace experience platform and long-time Azure user, has significantly improved its IT service management platform with Cohere Rerank. By incorporating the model into Atom AI, its AI digital assistant, Atomicwork has improved search relevance and accuracy, delivering faster, more accurate responses to complex IT help requests. The integration has streamlined IT processes and increased productivity across the enterprise.
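A reranker such as Cohere Rerank works as a second stage: a first-pass search retrieves candidate documents, and the reranker re-scores each candidate against the query and re-orders them. The Python sketch below illustrates only that re-ordering step, under a loudly stated assumption: relevance is approximated by cosine similarity over hand-made vectors, whereas Cohere Rerank scores each query-document pair with a trained model. The names `cosine_similarity` and `rerank` are hypothetical and are not part of any Azure or Cohere SDK.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(
    query_vec: Sequence[float],
    candidates: List[Tuple[str, Sequence[float]]],
    top_n: int = 3,
) -> List[str]:
    """Re-order first-pass candidates by a relevance score and keep the top_n IDs.

    Hypothetical helper: cosine similarity stands in for a trained reranking
    model such as Cohere Rerank; it is NOT how that model scores documents.
    """
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in candidates]
    # Highest score first; Python's sort is stable even with reverse=True,
    # so tied candidates keep their first-pass order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:top_n]]


# Toy first-pass results: (document ID, made-up embedding vector).
first_pass = [("doc-a", [0.0, 1.0]), ("doc-b", [1.0, 0.0]), ("doc-c", [1.0, 1.0])]
print(rerank([1.0, 0.0], first_pass, top_n=2))  # ['doc-b', 'doc-c']
```

Keeping the scoring function separate from the sorting makes it straightforward to swap the toy similarity for a call to a hosted reranking model while leaving the re-ordering logic unchanged.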

Read more on govindhtech.com

#AzureAI#Phi3#gen#AImodels#generativeAImodels#OpenAi#AzureAImodels#ai21#artificialintelligence#Llama31405B#slms#Phi3mini#smalllanguagemodels#AzureOpenAIService#npus#Databricks#technology#technews#news#govindhtech

Text

🌎 AI Bootcamp - Tomorrow in Fort Lauderdale!

Join me tomorrow at the Microsoft Fort Lauderdale office or at a location near you. #GlobalAIBootcamp 🤖 https://globalai.community/global-ai-bootcamp #SQLServer #MachineLearning #AzureAI

I’ll be speaking at Global AI Bootcamp this weekend at the Microsoft Fort Lauderdale office. My session is tomorrow, Saturday, at 1:30 pm in Room 3. It’s free to attend.

I am very excited to cover new material on Spark and on the Azure Data Studio Machine Learning Services extension, which will be announced soon. I hope you get the chance to join me at the event.

Repo

https://github.com/hflei…

Photo

Azureai Service presents a great platform for everyone to learn and earn:

The Guide and Pathway to FOREX trading = N30,000 flat.

*Mini importation, *Cheap data and payment of bills, *Recharge card printing, *Blogging guide and tips (N2000). It's so cheap, and there's a lot more...

Join ASAP 👇 before the group fills up; it's limited to 250 people. https://chat.whatsapp.com/CSY78JQc1ErBwBPH7aNmJI

It's starting on WhatsApp first. 🙌🙌🙌🙌🙌🙌🙌 (at Bayelsa) https://www.instagram.com/p/BzO-18qAE_3/?igshid=1jpweta934c9e

#blogger#bloggerstyle#graphicsdesigner#forexgroup#forexmentor#forexnigeria#forextraining#forextrade#forexinvestment#forexquotes#forextraders#portharcourt#bayelsa#dropshipping#mtn#naira#forexeducation#forexbroker#forexclass#forexprofit#forexchart#instablog9ja#nigeriafootball#1ddrive

Text

Microsoft Fabric Online Training New Batch

Join Now: https://meet.goto.com/252420005

Attend the new online batch on Microsoft Fabric by Mr. Viraj Pawar.

Batch on: 29th February @ 8:00 AM (IST).

Contact us: +91 9989971070.

Join us on WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit: https://visualpath.in/microsoft-fabric-online-training-hyderabad.html

#Microsoft#fabric#Azure#microsoftazure#AzureAI#OnlineLearning#training#trendingcourses#handsonlearning#visualpathedu#softwaredevelopment#PowerBI#DataFactory#synapse#dataengineering#DataAnalytics#DataWarehouse#datascience

Text

The latest The Education and Technology News! https://t.co/vJzn7hQIBD Thanks to @KathyAugustDBE #azureai

— Dr. Albin Wallace (@albinwallace) July 11, 2020

from Twitter https://twitter.com/albinwallace

July 11, 2020 at 10:24AM

Text

Jio Fiber Launches Commercially: Find Out How to Get a Connection

@reliancejio #MukeshAmbani #RILAGM #RIL #MukeshAmbani #Microsoft #JioDigitalLife #Azure #AzureAI #MicrosoftJioAlliance #Innovation #Growth #SatyaNadella #Office365

Yes, the year-long wait is over: Jio Fiber has finally launched commercially. Jio Fiber will be on the market from 5 September. Let's find out what the Jio Fiber plans will look like, how much they will cost, and what you need to do to get one. All you have to do is register.

https://twitter.com/reliancejio/status/1160784558805024768

How, where, and when to…
