#batch gradient descent

Text

Choosing the Right Gradient Descent: Batch vs Stochastic vs Mini-Batch Explained

This post explains the key differences between Batch, Stochastic, and Mini-Batch Gradient Descent. Discover how these optimization techniques affect ML model training.

In my previous post on gradient descent, I briefly explained what gradient descent is and the mathematical idea behind it. A basic gradient descent algorithm calculates the derivatives of the cost function with respect to the parameters being optimized. These derivatives are calculated over the entire training set as a whole. Now, if the data has hundreds of thousands of samples, the…
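To make that cost concrete, here is a minimal sketch of batch gradient descent, assuming a one-feature linear regression model with a mean-squared-error cost. The model, data, learning rate, and epoch count are illustrative choices, not taken from the post. Notice that every single parameter update touches every training sample.

```python
def batch_gradient_descent(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by minimizing mean squared error (MSE).

    Every update uses the gradient averaged over the *entire* training set,
    which is what makes this "batch" gradient descent.
    """
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # One full pass over all n samples per parameter update.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Derivatives of MSE = (1/n) * sum(error**2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # noise-free data from y = 2x + 1
w, b = batch_gradient_descent(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

Each epoch does O(n) work for just one parameter update, which is why batch gradient descent becomes slow once the training set reaches hundreds of thousands of samples.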

0 notes

Text

Day 8: Gradient Descent Types: Batch, Stochastic, and Mini-Batch

Understanding Gradient Descent: Batch, Stochastic, and Mini-Batch

Learn the key differences between Batch Gradient Descent, Stochastic Gradient Descent, and Mini-Batch Gradient Descent, and how to apply them in your machine learning models.

Batch Gradient Descent

Batch Gradient Descent calculates the gradient of the cost function…
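The three variants differ only in how many samples feed each update. The sketch below assumes a toy one-feature linear regression with an MSE loss (the function name, data, and hyperparameters are illustrative) and uses a single `batch_size` argument to switch between them.

```python
import random


def minibatch_gradient_descent(xs, ys, batch_size, lr=0.1, epochs=500, seed=0):
    """Fit y ≈ w*x + b (MSE loss), making one update per batch.

    batch_size == len(xs)  -> batch gradient descent (1 update per epoch)
    batch_size == 1        -> stochastic gradient descent (n updates per epoch)
    otherwise              -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # new sample order (and new batches) every epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            m = len(batch)  # the last batch may be smaller
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            grad_w = (2.0 / m) * sum(e * xs[i] for e, i in zip(errors, batch))
            grad_b = (2.0 / m) * sum(errors)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2 * x + 1 for x in xs]  # noise-free data from y = 2x + 1
for name, bs in [("batch", len(xs)), ("stochastic", 1), ("mini-batch", 2)]:
    w, b = minibatch_gradient_descent(xs, ys, batch_size=bs)
    print(f"{name:>10}: w={w:.4f}, b={b:.4f}")
```

On this noise-free toy data all three recover w ≈ 2 and b ≈ 1. On real, noisy data, SGD with a constant learning rate tends to keep bouncing around the minimum instead of settling, which is one reason moderately sized mini-batches are a popular compromise.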

#artificial intelligence#batch#batch gradient decent#classification#gradient decent#gradient decent types#large gradient decent#machine learning#Stochastic gradient descent

0 notes

Text

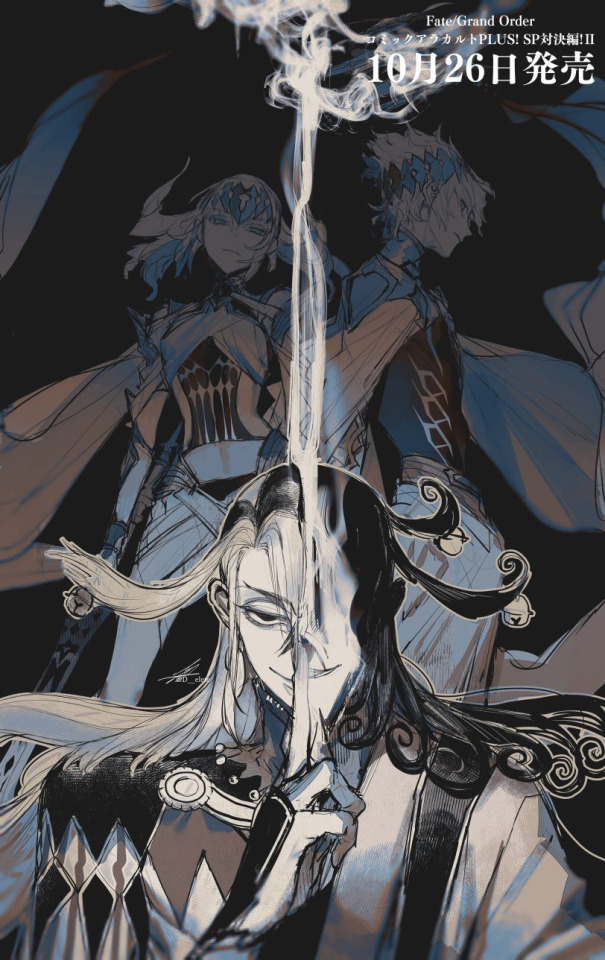

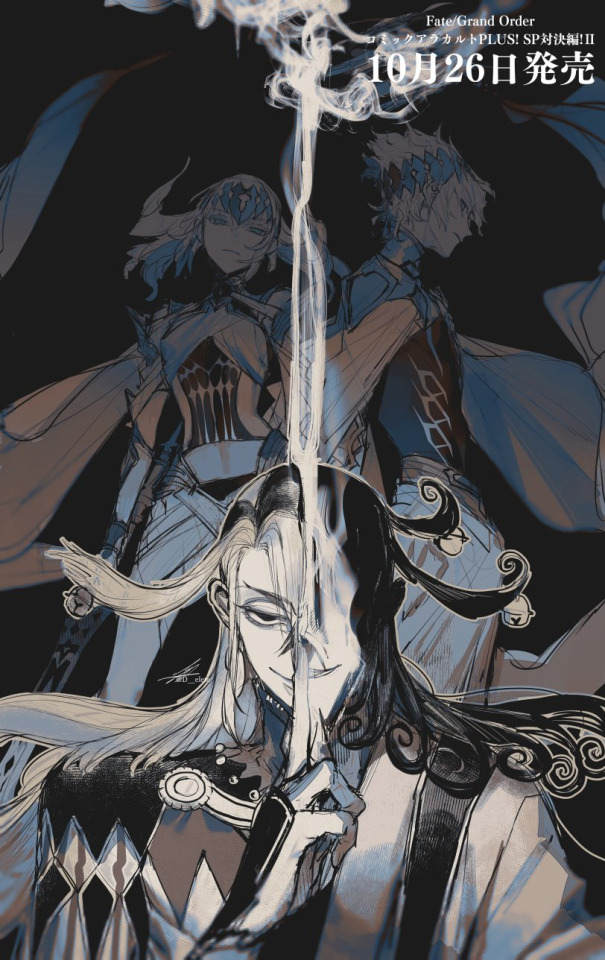

【FGO】 Beautiful Carnivore, the All-Ridiculing 💚🐈⬛

Beautiful Carnivore, the All-Ridiculing

一切嘲弄 美しき肉食獣

Original + Version with Gradient Maps

Quick Douman scribble 💚 Them!!! My evil NB GNC jester icon~ God eater clown! I love them so much!!! He is beauty, he is grace 💖✨

Douman coloured doodle WIP I did back in January 2023.

It's old, so I'd want to redraw it to make it consistent with my usual style, but I figured I'd post it for now since Idk when I'll get the chance to redraw it.

FGO popped off when they created an emotionally nuanced complex complicated jester who deeply loves the human they're obsessed with 💖✨ They are simply PEAK

Douman and LimGuda are EVERYTHING to me I've dedicated my soul to them since December 2020!!! 💚🧡🐈⬛🍊

"Beautiful Carnivore, the All-Ridiculing" is such a badass title for them oh my goddd I'm obsessed

I take lots of breaks from FGO but I'm always here to see my faves 👁️ Like awakening the slumbering beast...

Hasendow (Showichi Furumi), Douman's character artist is really good at harmonizing colours and working with green and yellow tones

I tend to use cooler tones and saturate pink/red hues in my arts (though it depends on the art for me). It also has nice contrast against their reds and greens! 💖

I already loved the colours of the OG that I chose but the others are so cool to see. It's fun to see the way low opacity gradient maps shift the colours in subtle ways WHOAAA

It's super messy but it's honestly one of my fave doodles in terms of colours 💗 I'm a big fan of the colours too hehe ✨

I used to airbrush the skin colour over the hair (which is a popular stylistic choice), but I don't do it anymore cuz I think my saturated art style looks better with higher contrast.

I think that Douman doodle has some of the best colour work I've done

It's a rough draft WIP so it's pretty messy, but I love the way the colours harmonize on this one 🤭 💗 I should do colour drafts more often…

I think it's cool to use the same green in their earrings and eyeliner, in their hair as well hehe

When I do these rough colour drafts, it's like if I took a bucket of paint and splashed it on the wall. Helps me get a sense of colours!

I love picking colours, and I think it'd be interesting to take this kind of painting style further whenever I get the chance to refine it more 💞

I definitely want to lean into this messy painting style. I wanna get stronger at the painterly style!

Because of Douman and Kuroha (KagePro), I've always wanted to draw mild horror pieces 🥰💞 It'd be super fun to draw Douman and LimGuda arts with mild artistic horror vibes in the future.

My cute kitty is so versatile. They can be cute silly and be peak horror all at once!

I need to get stronger at drawing them. Douman's design is the most detailed and complicated out of my faves, but I wanna draw LOTS of LimGuda... AHHH...

Check under the cut for the other versions (versions with gradient maps)

Other Versions

I think for some of these, I'd have to fix them manually cuz gradient maps and colour adjustment filters can throw the contrast out of whack, so I'd paint over stuff later

Here's the rest of the batch of colour tests I did!

I did 18 in total but I only posted 10

Rambles

I've been obsessed with Ashiya Douman, the evil clown cat since December 2020. Didn't even take me a week to be obsessed. It took 5 days. Sen's Limbo December Descent 🙌

LimGuda is my comfort HC NBLNB ship 💚🧡 They've been one of my top fave ships since December 2020. The spice of a gay evil clown demon who hates humanity, with a karmic relationship of love/hate with the human they're in love with, is unparalleled.

I can’t get over how much I adore Douman. They’re simply the best. This chaos clown is a forever fave of mine~ I love how in-depth and nuanced this hot evil jester onmyouji is. The emotional nuance and complexity of this chara…

“WANTS TO WATCH THEM FALL TO HELL BUT UNWITTINGLY FALLS IN LOVE WITH THEM.” I’M DEVASTATED ABOUT THEM

The amount of detail that went into Douman's character and design is insane to me… Hasendow AKA Showichi Furumi (Douman's character artist/illustrator) has such a huge brain 🤯

My LimGuda collection is my pride and joy 🤭

My Douman/LimGuda merch pile pentagram from November 2022 LMAO. This isn't even all of it

(I tried to mimic the pentagram as much as possible. I scribbled out where I was standing on the bed)

When Douman first released on FGO NA in November 2022, I made a Douman pentagram filled with my Douman and LimGuda merch and fan-merch.

I did this back in 2022, so I have even MORE now to add to the Douman collection, so there's no way all of it is gonna fit on my bed

Douman merch summoning circle catalyst! We did summon an evil demon into my Chaldea~ Welcome home! You are now reunited with your BELOVED 🫶💚🧡

The clean up's gonna be hell with how detailed Douman's design is, but I love working with their colours! I wanna draw LimGuda in matching green and red Áo tấc so bad!!! Matching couple clothes~ 💘💞

Douman loves them with curses… LIKE A CAT TIPPING OVER VASES CUZ THEY WANT TO BE PAID ATTENTION TO. The whole “Limbo gets defeated/is overwhelmed by the power of feelies and their S/O’s Candid Sincerity" trope I love to see in their ship works that I wanna draw eventually btw… I wanna draw them lots!!!

Cinna said “Douman just reminds me of that ‘cat reacts to separation anxiety by trying to maul their owner’ thing I saw one time” LMAO

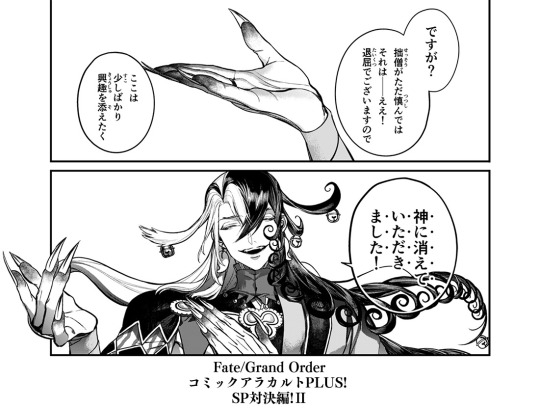

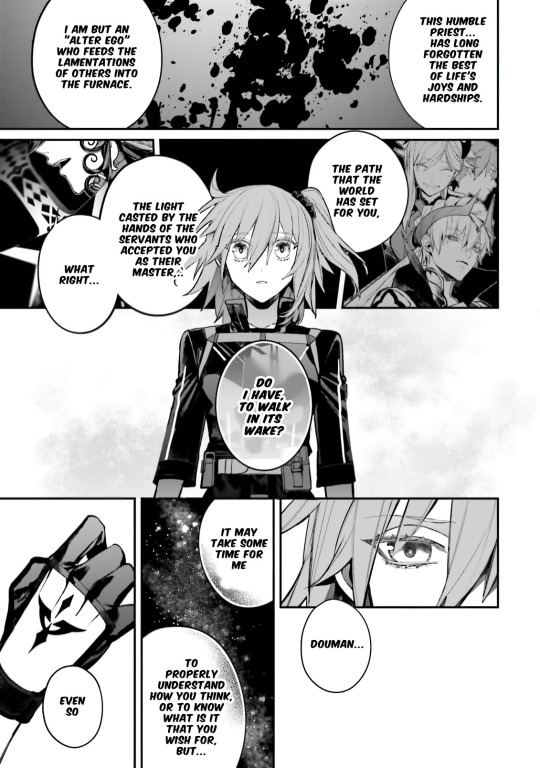

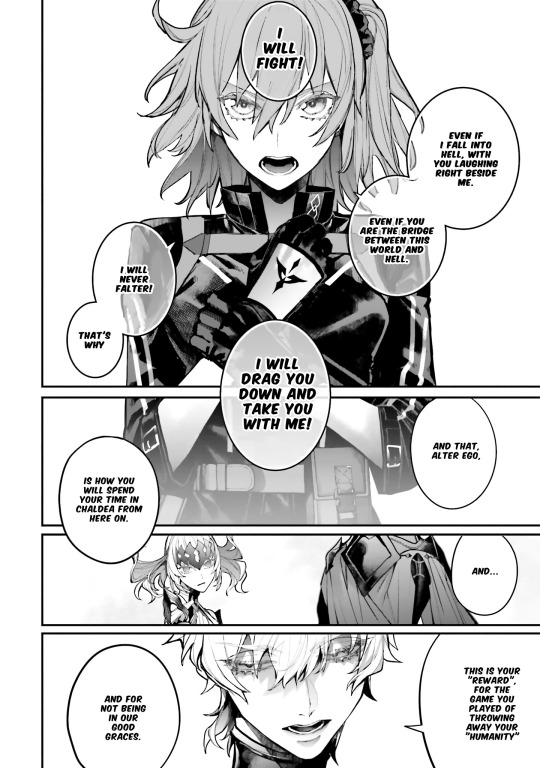

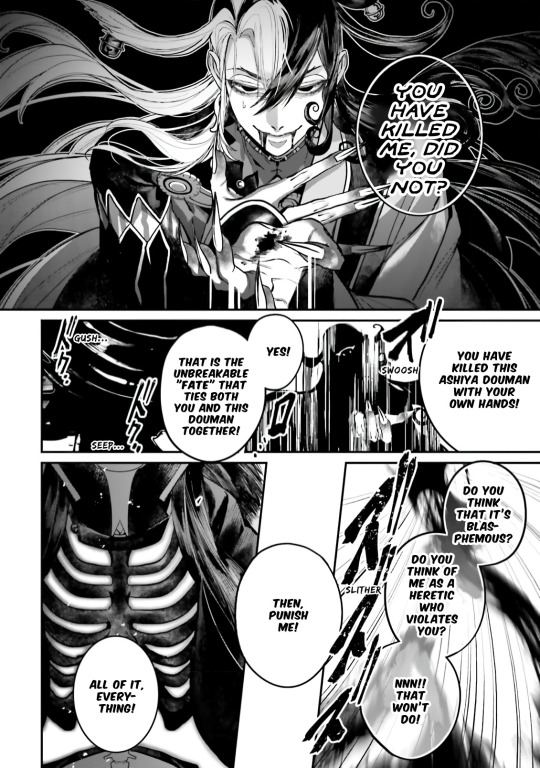

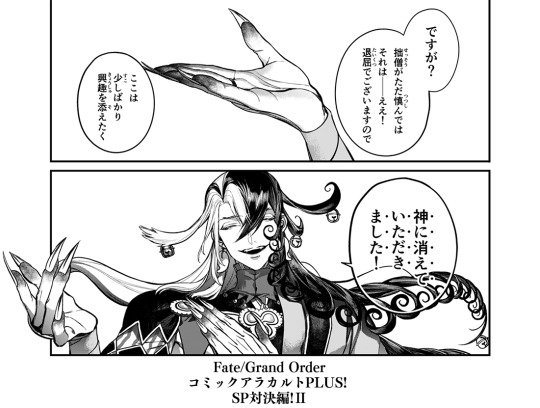

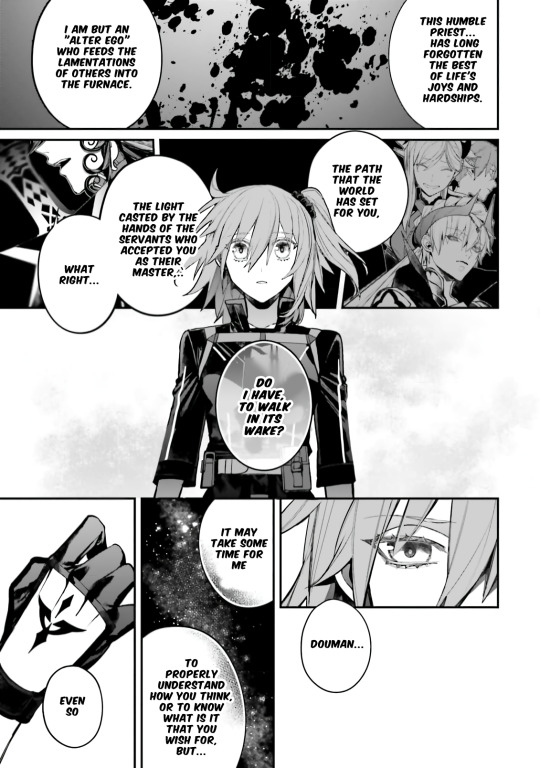

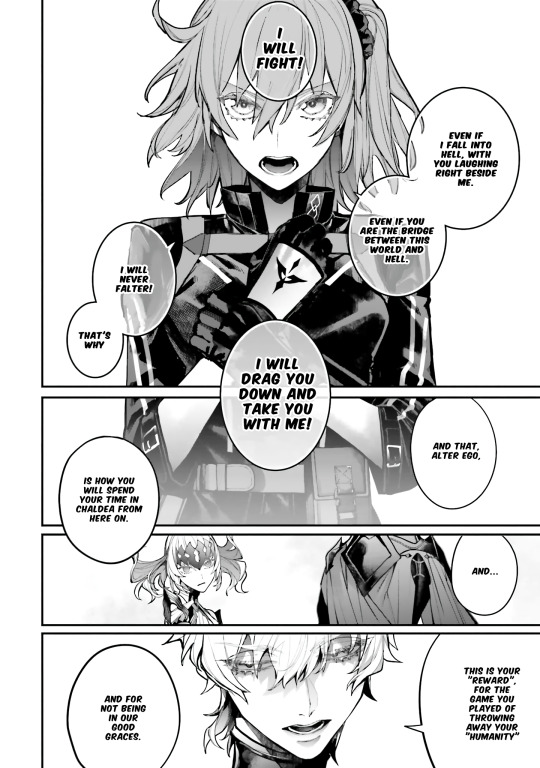

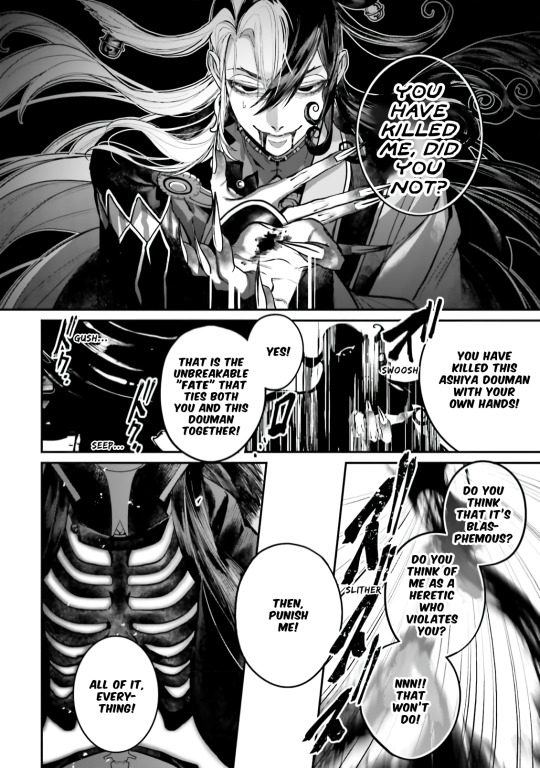

Ashiya Douman vs. Dioscuri Anthology Comic by AU

Source: (X)

(Context for my non-FGO mutuals following me)

Read the full 30+ pages above if you wanna check it out~

Reminds me of when I was rereading the Ashiya Douman vs. Dioscuri Anthology comic by AU (@/delete_au), that has huge amounts of LimGuda (Douman/Ritsuka) crumbs. DRIVES ME CRAZY...

AU (@/delete_au) is a popular Fate/Grand Order (FGO or Fate/GO) fan artist that's been commissioned to draw official works for Fate (including official merch)

AU’s art style is so intricately detailed and gorgeous. The perspectives, inking, and shading are so gorgeous. Huge art goals for me.

The Ashiya Douman vs. Dioscuri anthology comic has such beautiful art by AU (@/delete_au)

The way he sulks like a cat that wants attention when Ritsuka hardly reacts to seeing the maimed shikigami 😭 Please give your kitty lots of attention!

Like once again Douman wants Ritsuka to pay attention to him and searches for reactions from them, but also, the way he refers to himself as their "loyal retainer"

Like a bird preening.

AU has a massive brain since the scenes, visuals, story and dialogue of this Anthology Comic were decided by them. It's also such a huge characterization surplus for Douman and such visual eye candy

Douman invited Ritsuka to fall into hell with them in-game in his My Room lines, and now they're the one inviting him, saying that they will drag him and take him along with them.

Douman tells them that the two of them are "bound by unbreakable/inseparable fate."

And the part where he sarcastically laughs at their speech by comparing it to Buddha's Great Vows, but also acknowledges their determination and tenacity... Aghhhh...

He makes this grandiose, deadly romantic speech back, telling them that they are "bound together by an unbreakable fate" (inseparable fate/karma)

JAW DROP??? THIS IS GAY AS HELL 🏳️🌈 LIMGUDA IS REAL!!! 💚🧡

I'm so glad for the fan-translation by u/kanramori

The EN fan-translation is SO good. I love the usage of Saṃvega at the end.

I got stuck on some parts reading the JP version cuz of Douman's vocabulary... I got to clear things up reading the VN fan-TL, and it was interesting to see how it was translated in Viet, but yeah the EN fan-TL cleared up so many things for me.

They drew the Ashiya Douman vs. Dioscuri chapter in one of the FGO anthologies. LIMGUDA ALSO INTERACT IN IT, AND THERE'S TONS OF LIMGUDA FOOD HEHEHEHE... Their art is so gorgeous. They're one of my favourite FGO artists, their works are stunning.

THE WHOLE THING IS SO DELICIOUS SO I'LL JUST SHOW A COUPLE PAGES FROM THE END, BUT OH MY GOD???

I LOVE THEMMMMMMMMMMM LOVE IS REALLLLLLL

LimGuda so good... NB evil clown demon and the human they're deadly obsessed with...

Douman is gigantic. Ritsuka's 200 cm tall (~6'7") bf that loves them!!!

AU'S DOUMAN COMIC FROM THE FGO ANTHOLOGY COMIC WITH DOUMAN AND RITSUKA IS MAKING ME SCREAM WTF /pos /endearing

LimGuda inspired SO much of my writing, including my writing for other ships from other interests I'm into.

"Black/white colour schemed meow meow mf with a bloodthirsty personality and violent tendencies, and/or deadly love/obsession, is paired with a warm empathetic s/o with a brighter disposition"

This Sen-core ship trope is present in all of my ships LMAO

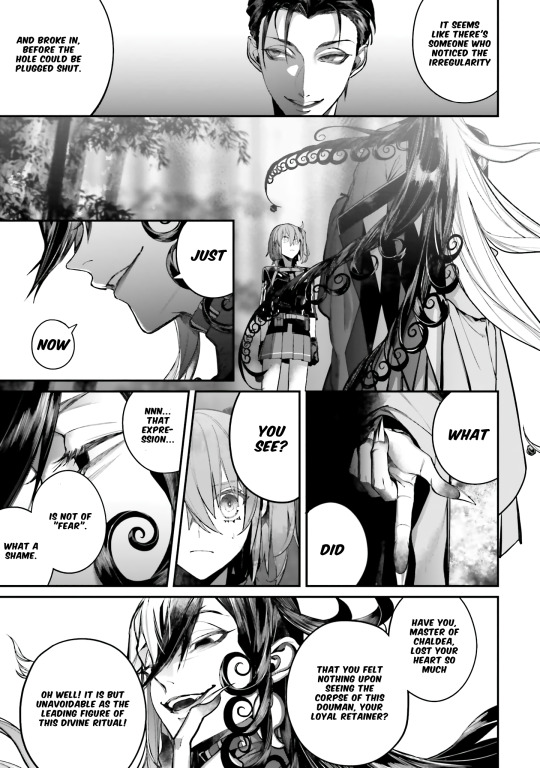

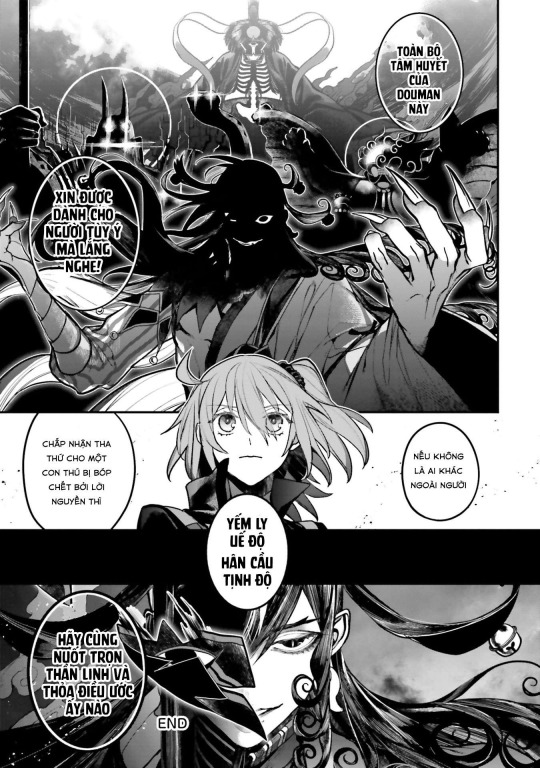

Ashiya Douman vs. Dioscuri by AU (Viet Fan-TL)

The VN fan-TL is pretty much VERY similar since it's based on the same JP text as the source material, but I'll highlight some neat tidbits I noticed

Source: (X)

道満:これぞ弘誓のごとき大言壮語!

道満:そうでしょうとも!

Douman: Such boastful words, just like Buddha’s great vows!

JP uses 弘誓 (Guzei, “Buddha’s great vows”)

The VN fan-TL uses Tứ Hoằng Thệ Nguyện ("The Four Encompassing Vows") of Buddhism, the fourfold bodhisattva vow. The only difference is that it names the vows directly.

道満:そう!あなたとこの道満を結ぶは断ち切れぬ「因果」にございます

Douman: Yes, that's right! You and this Douman are connected by an unbreakable “fate”

JP uses 因果 (inga, "cause and effect; karma; fate")

VN fan-TL uses "Unbreakable karma (cause and effect)"

Nhân quả = Karmic cause and effect in Buddhism

I love the wording of him describing the way he's bound to them as “Unbreakable fate”

It's so sexy and fits so well with Douman’s character, saying they have a connection of inseparable fate/karma.

道満:厭穢欣浄(えんえごんじょう)

Douman: En’e’gon’jyou. (“I abhor this tainted world, and seek rebirth in the Pure Land.”)

JP version has it as 厭穢欣浄 (En’e’gon’jyou, “I abhor this tainted world, and seek rebirth in the Pure Land.”)

The VN fan-TL translated it as: Yếm ly uế độ, hân cầu Tịnh Độ = "I detest and want to leave the defilement of the Samsara, and seek rebirth in the Pure Land"

...

厭離穢土 (Onriedo/Enriedo, “Abhorrence of [living in] this impure world”)

The antithesis of Onriedo is 欣求浄土 (Gongujyoudo, “Seeking rebirth in the Pure Land”)

These are yojijukugo (four-character compounds) and concepts of Pure Land Buddhism that became popular in the Heian era.

The full term is 「おんりえど・ごんぐじょうど」 (Onriedo/Enriedo - Gongujyoudo). Combined, the two form a pair.

The phrase is usually shortened to 厭穢欣浄 (En’e’gon’jyou/En’ne’gon’jyou)

In Buddhism, it means, “I am disgusted with this world (I want to leave the world of filth/suffering and stain), and seek the Pure Land [of Buddha]”

...

Both the EN fan-TL and VN fan-TL are super interesting to read! It's been churning in my thoughts all day...

#fgo#fate grand order#ashiya douman#douman#caster limbo#caster of limbo#alter ego limbo#fate#fate series#fate go#artists on tumblr#digital art#fate fanart#fgo fanart#limguda#limboguda#douman x ritsuka#my art#wip#doodle#stepswordsen art#stepswordsen#art#artwork#fanart#sketch

24 notes

Text

【FGO】 Beautiful Carnivore, The All-Ridiculing 💚🐈⬛

And here's the other batch of colour tests I did

Ashiya Douman doodle 💚 🐈⬛

OG + playing around with gradient maps (18 total)

The 1st one is the OG!

I'm just testing rough colours for now so it's super messy and not refined yet

I already loved the colours of the OG that I chose but the others are so cool to see. The way low opacity gradient maps shift the colours in subtle ways WHOAA

If you're curious, check out the Ashiya Douman vs. Dioscuri anthology comic here! Popular FGO fan-artist and official artist AU (@delete_au) has such gorgeous art~

Rambles

My LimGuda collection is my pride and joy 🤭 When Douman first released on FGO NA in November 2022, this was my Douman pentagram setup. Douman merch summoning circle catalyst! We did summon an evil demon into my Chaldea~ Welcome home! You are now reunited with your WIFE 🫶

Ashiya Douman vs. Dioscuri Anthology Comic by AU

The Ashiya Douman vs. Dioscuri anthology comic has such beautiful art by AU (@/delete_au). Douman invited Ritsuka to fall into hell with them & now they're the one inviting him. Douman tells them that the two of them are "bound by unbreakable/inseparable fate." JAW DROP??? THIS IS GAY AS HELL 🏳️🌈 LIMGUDA IS REAL!!! 💚🧡

TYSM to Carli (@/carlikun) for translating it... I got stuck on some parts reading the JP version cuz of Douman's difficult, esoteric and archaic vocabulary... I got to clear things up reading the VN fan-TL, and it was interesting to see how it was translated in Viet, but yeah the EN fan-TL cleared up so many things for me.

2 notes

Photo

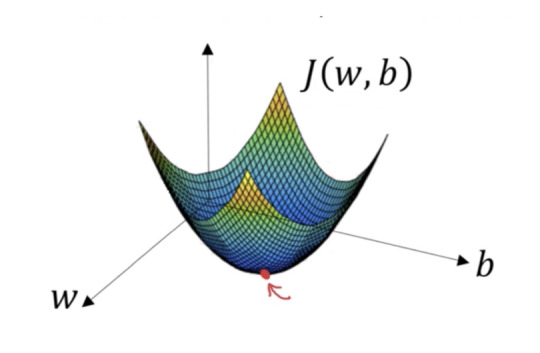

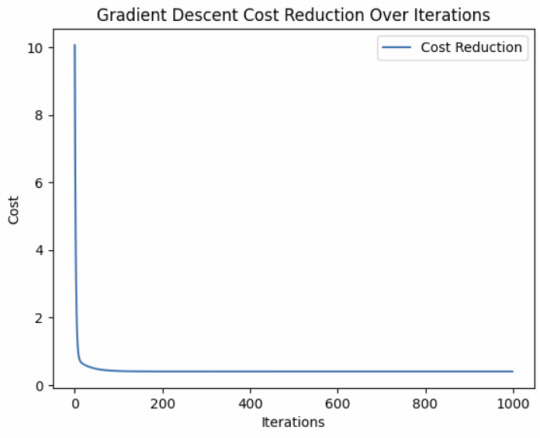

Gradient Descent is a fundamental optimization technique in machine learning that helps models learn by iteratively minimizing errors. Understanding its types and implementation helps ensure efficient model training. Choosing an appropriate learning rate and batch size is crucial, and adaptive optimizers such as Adam and RMSprop have further improved convergence.

(via Gradient Descent in Machine Learning)
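For reference, here is a minimal sketch of the Adam update rule in plain Python, minimizing the toy function f(x) = (x - 3)^2 through its gradient 2(x - 3). The beta1, beta2, and eps defaults are the values commonly used for Adam; the learning rate of 0.1, the step count, and the toy function are assumptions chosen for illustration.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function, given its gradient, with the Adam update rule."""
    x = x0
    m = 0.0  # first-moment estimate (running mean of gradients)
    v = 0.0  # second-moment estimate (running mean of squared gradients)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: m and v start at 0, so early estimates are too small.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Per-parameter step: scaled by the RMS of recent gradients.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```

The division by sqrt(v_hat) is the "adaptive" part shared with RMSprop; the momentum-like m term is what Adam adds on top.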

0 notes

Text

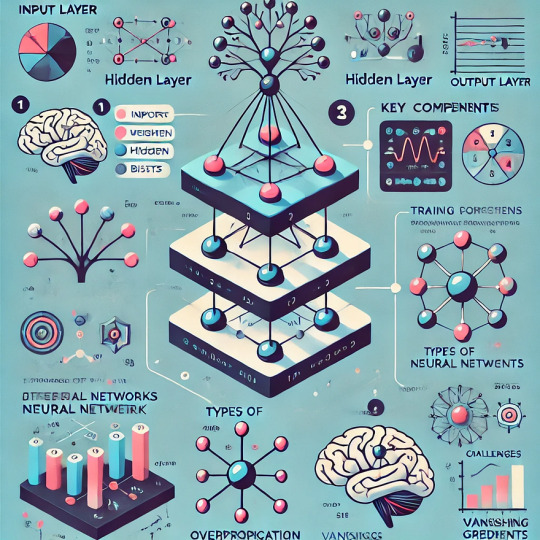

Understanding Neural Networks: Building Blocks of Deep Learning

Introduction

Briefly introduce neural networks and their role in deep learning.

Mention real-world applications (e.g., image recognition, NLP, self-driving cars).

Provide a simple analogy (e.g., comparing neurons in the brain to artificial neurons in a network).

1. What is a Neural Network?

Define neural networks in the context of artificial intelligence.

Explain how they are inspired by the human brain.

Introduce basic terms: neurons, layers, activation functions.

2. Architecture of a Neural Network

Input Layer: Where data enters the network.

Hidden Layers: Where computations happen.

Output Layer: Produces predictions.

Visual representation of a simple feedforward network.

3. Key Components of Neural Networks

Weights & Biases: How they influence predictions.

Activation Functions: ReLU, Sigmoid, Tanh (with examples).

Loss Function: Measures model performance (MSE, Cross-Entropy).

Backpropagation & Gradient Descent: Learning process of the network.
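The activation and loss functions named above are short enough to write out directly. This is a plain-Python sketch (scalar activations, list-based losses), not how TensorFlow or PyTorch implement them; binary cross-entropy is shown as the two-class case of cross-entropy.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    # Split on the sign of z so math.exp never overflows for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    return math.tanh(z)


def mse(y_true, y_pred):
    """Mean squared error, a common regression loss."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean cross-entropy for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.1f}  sigmoid={sigmoid(z):.4f}  tanh={tanh(z):.4f}")
print(mse([1.0, 2.0], [1.0, 4.0]))               # (0 + 4) / 2 = 2.0
print(binary_cross_entropy([1, 0], [0.9, 0.1]))  # confident and correct -> small loss
```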

4. Types of Neural Networks

Feedforward Neural Networks (FNN)

Convolutional Neural Networks (CNNs): For image processing.

Recurrent Neural Networks (RNNs): For sequential data like text & speech.

Transformers: Modern architecture for NLP (BERT, GPT).

5. Training a Neural Network

Data preprocessing: Normalization, encoding, augmentation.

Splitting dataset: Training, validation, and test sets.

Hyperparameter tuning: Learning rate, batch size, number of layers.
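A minimal sketch of the splitting and normalization steps, assuming a single numeric feature stored in a Python list (the 70/15/15 split, the seed, and the function names are illustrative choices). The normalization statistics come from the training split only, so no information from the validation or test sets leaks into training.

```python
import random


def train_val_test_split(samples, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once, carve off test and validation sets; the rest is training data."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def standardize(train, *others):
    """Z-score normalization using mean/std computed on the training split only."""
    mean = sum(train) / len(train)
    std = (sum((x - mean) ** 2 for x in train) / len(train)) ** 0.5 or 1.0
    return [[(x - mean) / std for x in split] for split in (train, *others)]


data = [float(i) for i in range(100)]  # toy 1-D feature values
train, val, test = train_val_test_split(data)
train_z, val_z, test_z = standardize(train, val, test)
print(len(train), len(val), len(test))  # 70 15 15
```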

6. Challenges in Neural Networks

Overfitting & Underfitting.

Vanishing & Exploding Gradients.

Computational cost and scalability.
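Why gradients vanish or explode can be seen with a deliberately simplified model: a chain of single sigmoid units in which every layer shares the same weight and pre-activation (both hypothetical simplifications). Backpropagation multiplies one factor per layer, and since the sigmoid's derivative is at most 0.25, the product shrinks geometrically unless the weights are large enough to push each factor above 1.

```python
import math


def sigmoid_derivative(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # never larger than 0.25 (its value at z = 0)


def chain_gradient(depth, weight=1.0, z=0.0):
    """Gradient factor reaching the first layer of a chain of `depth` sigmoid units.

    Backpropagation multiplies one (weight * sigmoid'(z)) factor per layer.
    """
    grad = 1.0
    for _ in range(depth):
        grad *= weight * sigmoid_derivative(z)
    return grad


for depth in (1, 5, 10, 20):
    # weight=1.0 -> each factor is 0.25 (vanishing); weight=8.0 -> each is 2.0 (exploding)
    print(depth, chain_gradient(depth), chain_gradient(depth, weight=8.0))
```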

7. Tools & Frameworks for Building Neural Networks

TensorFlow, Keras, PyTorch.

Example: Simple neural network in Python.
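As a framework-free illustration of a simple neural network in Python, here is a 2-2-1 feedforward network: an input layer, one ReLU hidden layer, and a linear output. The weights are hand-picked so the network computes XOR rather than learned by training; in practice TensorFlow, Keras, or PyTorch would learn them with backpropagation and gradient descent. The helper names are illustrative.

```python
def relu(z):
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: out_j = act(sum_i inputs[i] * weights[j][i] + biases[j])."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, w_row)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


# Hand-picked (not trained) weights that make the network compute XOR.
W1 = [[1.0, 1.0], [1.0, 1.0]]  # hidden layer: 2 neurons, 2 inputs each
b1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]             # output layer: 1 neuron, 2 hidden inputs
b2 = [0.0]


def forward(x):
    hidden = dense(x, W1, b1, relu)  # hidden layer with ReLU activation
    return dense(hidden, W2, b2)[0]  # linear output layer


for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, forward(x))
```

The second hidden neuron only fires when both inputs are 1, and its negative output weight cancels the first neuron's contribution, which is how weights and biases together shape the prediction.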

Conclusion

Recap key takeaways.

Encourage exploration of deep learning projects.

WEBSITE: https://www.ficusoft.in/deep-learning-training-in-chennai/

0 notes

Link

Step-by-step from fundamental concepts to training a basic generative model. Continue reading on Towards Data Science » #AI #ML #Automation

1 note

Text

🔥 SPEED UP AI MODEL TRAINING WITH GRADIENT DESCENT! 🚀

Have you ever felt "overwhelmed" while training your AI model? 🤯 Don't worry: Gradient Descent is the golden key 🗝️ to optimizing both speed and efficiency! ✅

💡 What is Gradient Descent? Gradient Descent is the "go-to" optimization algorithm of machine learning 🌍: it helps your model gradually find the optimum 🎯, reducing error and increasing accuracy. But did you know that clever variants such as Mini-batch, Stochastic Gradient Descent (SGD), and Momentum can speed things up even more? 🚀

🔍 Why should you care?

Save time ⏳

Outstanding efficiency 💪

Flexible applications: From deep learning 🧠 to artificial neural networks, Gradient Descent can help you! 🌟

📖 Learn more about tips for speeding up training, plus real-world case studies, in the detailed article on our website! 👉 Tăng tốc huấn luyện mô hình với phương pháp Gradient Descent ("Speeding up model training with the Gradient Descent method")

Discover more valuable articles at aicandy.vn

1 note

Text

Optimization Techniques in Machine Learning Training

Optimization techniques are central to machine learning as they help in finding the best parameters for a model by minimizing or maximizing a function. They guide the training process by improving model accuracy and reducing errors.

Common Optimization Algorithms:

Gradient Descent: A widely used algorithm that minimizes the loss function by iteratively stepping in the direction of the negative gradient, toward a minimum. Variants include:

Batch Gradient Descent

Stochastic Gradient Descent (SGD)

Mini-batch Gradient Descent

Adam (Adaptive Moment Estimation): Combines the advantages of both AdaGrad and RMSProp.

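AdaGrad: Adapts the learning rate for each parameter based on the sum of its past squared gradients; particularly good for sparse data.

RMSProp: Addresses AdaGrad's steadily decaying learning rates by using an exponentially decaying average of squared gradients instead of a running sum.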
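The AdaGrad/RMSProp distinction comes down to how the squared-gradient history is accumulated. The sketch below (function names and hyperparameters are illustrative) tracks only the per-parameter effective step size, lr / sqrt(accumulator), under a constant gradient of magnitude 1; a real optimizer multiplies this by the current gradient.

```python
import math


def adagrad_effective_lrs(grads, lr=0.1, eps=1e-8):
    """Effective step size per iteration: lr / sqrt(sum of all past g^2)."""
    acc, out = 0.0, []
    for g in grads:
        acc += g * g  # running sum never forgets, so the step only shrinks
        out.append(lr / (math.sqrt(acc) + eps))
    return out


def rmsprop_effective_lrs(grads, lr=0.1, rho=0.9, eps=1e-8):
    """Effective step size per iteration: lr / sqrt(exponential average of g^2)."""
    acc, out = 0.0, []
    for g in grads:
        acc = rho * acc + (1 - rho) * g * g  # old gradients fade out
        out.append(lr / (math.sqrt(acc) + eps))
    return out


grads = [1.0] * 100  # constant gradient magnitude
ada = adagrad_effective_lrs(grads)
rms = rmsprop_effective_lrs(grads)
print(f"AdaGrad: first={ada[0]:.4f}, last={ada[-1]:.4f}")
print(f"RMSProp: first={rms[0]:.4f}, last={rms[-1]:.4f}")
```

AdaGrad's step keeps shrinking (0.1 / sqrt(t)), whereas RMSProp's average levels off, so its step stays near 0.1. Without bias correction, RMSProp's first steps are inflated by the zero-initialized average; Adam adds a correction for exactly this.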

Challenges in Optimization:

Learning Rate: A critical hyperparameter that determines how big each update step is. Too high, and you may overshoot; too low, and learning is slow.

Overfitting and Underfitting: Ensuring that the model generalizes well and doesn’t memorize the training data.

Convergence Issues: Some algorithms may converge too slowly or get stuck in local minima.
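The learning-rate trade-off can be checked on the simplest possible loss, f(x) = x^2, whose gradient is 2x, so each gradient-descent step multiplies x by (1 - 2*lr). The three learning rates below are illustrative picks for the "too low", "reasonable", and "too high" cases.

```python
def gd_on_quadratic(lr, x0=1.0, steps=20):
    """Run gradient descent on f(x) = x**2 (gradient 2x) and return the final x."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x  # each step multiplies x by (1 - 2*lr)
    return x


for lr in (0.01, 0.4, 1.1):
    print(lr, gd_on_quadratic(lr))
```

With lr = 0.01, x has only shrunk to about 0.67 after 20 steps; with lr = 0.4 it is essentially 0; with lr = 1.1 the factor is -1.2, so x flips sign and grows every step, meaning the run diverges.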

Real-World Application in Training:

Practical Exposure: A hands-on course in Pune would likely offer real-world projects where students apply these optimization techniques to datasets.

Project-Based Learning: Students might get to work on tasks like tuning hyperparameters, selecting the best optimization methods for a particular problem, and improving model performance on various data types (e.g., structured data, images, or text).

Career Advancement

The training can enhance skills in AI and ML, making participants capable of optimizing models efficiently. Whether it’s for a career in data science, AI, or machine learning in Pune, optimization techniques play a vital role in delivering high-performance models.

0 notes

Text

Mini-Batch Gradient Descent: Optimizing Machine Learning Models

#MachineLearning #MBGD Discover how Mini-Batch Gradient Descent revolutionizes model training! Learn to implement MBGD in Python, optimize your algorithms, and boost performance. Perfect for data scientists and ML engineers looking to level up their skill

Mini-Batch Gradient Descent (MBGD) is a powerful optimization technique that revolutionizes machine learning model training. By combining the best features of Stochastic Gradient Descent (SGD) and Batch Gradient Descent, MBGD offers a balanced approach to model optimization. In this blog post, we’ll explore how MBGD works, its advantages, and how to implement it in Python. Understanding the Need…

0 notes

Text

DD2424 - Assignment 2 solved

In this assignment you will train and test a two-layer network with multiple outputs to classify images from the CIFAR-10 dataset. You will train the network using mini-batch gradient descent applied to a cost function that computes the cross-entropy loss of the classifier applied to the labelled training data and an L2 regularization term on the weight matrix. The overall structure of your code…
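A minimal sketch of the kind of cost function this assignment describes, written with plain Python lists for readability. Assumptions not stated in the excerpt: the hidden layer uses ReLU, the L2 term is applied to both weight matrices, labels are integer class indices, and the function names are hypothetical. A real CIFAR-10 solution would typically vectorize this over 10 classes and 3072-dimensional inputs with a matrix library.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x, b):
    """Compute W @ x + b for a list-of-rows matrix W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def evaluate(x, W1, b1, W2, b2):
    """Two-layer forward pass: ReLU hidden layer, softmax output probabilities."""
    h = [max(0.0, s) for s in matvec(W1, x, b1)]
    return softmax(matvec(W2, h, b2))


def compute_cost(X, labels, W1, b1, W2, b2, lam):
    """Mean cross-entropy over the batch plus lam * (sum of squared weights)."""
    cross_entropy = -sum(
        math.log(evaluate(x, W1, b1, W2, b2)[y]) for x, y in zip(X, labels)
    ) / len(X)
    l2 = sum(w * w for W in (W1, W2) for row in W for w in row)
    return cross_entropy + lam * l2


# Tiny smoke test: with all-zero weights every class gets probability 1/3,
# so the cross-entropy part equals log(3) and the L2 part is 0.
X = [[1.0, 2.0], [3.0, -1.0]]
labels = [0, 2]
zeros_W1 = [[0.0, 0.0], [0.0, 0.0]]
zeros_W2 = [[0.0, 0.0] for _ in range(3)]
print(compute_cost(X, labels, zeros_W1, [0.0, 0.0], zeros_W2, [0.0, 0.0, 0.0], lam=0.01))
```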

0 notes

Text

Stochastic Gradient Descent

In the context of machine learning, stochastic gradient descent is a preferred approach for training various models due to its efficiency and relatively low computational cost.

SGD updates the parameters after each individual sample, so every update is much cheaper than in batch gradient descent, which computes the gradient over the whole dataset before taking a single step. On large datasets this usually lets SGD make progress much faster.

Researchers investigating AI algorithms use SGD as a pivotal step in developing and refining complex models.

Software developers building Machine Learning applications with large datasets harness SGD's power to ensure effective and efficient model training.

The learning rate is a critical hyperparameter that controls the step size during the optimization process. Setting it too high can cause the algorithm to oscillate or diverge; setting it too low can result in slow convergence. A common practice is to start with a relatively large learning rate to make quick progress, then gradually decrease it so that later, finer-grained updates can settle near a minimum.
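One common way to implement the "start large, then decrease" idea is a step-decay schedule. This sketch assumes the rate is halved every 10 epochs; the drop factor and interval are illustrative, and other schedules (exponential or 1/t decay) follow the same pattern of computing the rate from the epoch number.

```python
def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    """Multiply the learning rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


for epoch in (0, 9, 10, 25, 40):
    print(epoch, step_decay(0.1, epoch))
```

Inside a training loop, the SGD update would simply use `step_decay(initial_lr, epoch)` in place of a fixed learning rate.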

As deep learning techniques continue to develop and gain complexity, SGD and its variants will remain foundational to training these models.

Reinforcement learning, a rapidly evolving field in artificial intelligence, often involves optimization methods including SGD.

Mini-Batch Gradient Descent, a variation of SGD, combines the advantages of both SGD and Batch Gradient Descent. It updates parameters using a mini-batch of ‘n’ training examples, striking a balance between computational efficiency and convergence stability.

0 notes

Text

Charting new paths in AI learning

28.02.24 - Physicists at EPFL explore different AI learning methods, which can lead to smarter and more efficient models.

In an era where artificial intelligence (AI) is transforming industries from healthcare to finance, understanding how these digital brains learn is more crucial than ever. Now, two researchers from EPFL, Antonia Sclocchi and Matthieu Wyart, have shed light on this process, focusing on a popular method known as Stochastic Gradient Descent (SGD).

At the heart of an AI’s learning process are algorithms: sets of rules that guide AIs to improve based on the data they’re fed. SGD is one of these algorithms, like a guiding star that helps AIs navigate a complex landscape of information to find the best possible solutions a bit at a time.

However, not all learning paths are equal. The EPFL study reveals how different approaches to SGD can significantly affect the efficiency and quality of AI learning. Specifically, the researchers examined how changing two key variables can lead to vastly different learning outcomes. The two variables were the size of the data samples the AI learns from at a single time (this is called the “batch size”) and the magnitude of its learning steps (this is the “learning rate”). They identified three distinct scenarios (“regimes”), each with unique characteristics that affect the AI’s learning process differently.

In the first scenario, like exploring a new city without a map, the AI takes small, random steps, using small batches and high learning rates, which allows it to stumble upon solutions it might not have found otherwise. This approach is beneficial for exploring a wide range of possibilities but can be chaotic and unpredictable.

The second scenario involves the AI taking a significant initial step based on its first impression, using larger batches and learning rates, followed by smaller, exploratory steps. This regime can speed up the learning process but risks missing out on better solutions that a more cautious approach might discover.

The third scenario is like using a detailed map to navigate directly to known destinations. Here, the AI uses large batches and smaller learning rates, making its learning process more predictable and less prone to random exploration. This approach is efficient but may not always lead to the most creative or optimal solutions.

The study offers a deeper understanding of the tradeoffs involved in training AI models, and highlights the importance of tailoring the learning process to the particular needs of each application. For example, medical diagnostics might benefit from a more exploratory approach where accuracy is paramount, while voice recognition might favor more direct learning paths for speed and efficiency.

Nik Papageorgiou http://actu.epfl.ch/news/charting-new-paths-in-ai-learning (Source of the original content)

0 notes

Text

Hyperparameter Tuning in Machine Learning: Techniques and Tools

What is Hyperparameter Tuning?

Hyperparameter tuning is the process of selecting the best combination of hyperparameters for a machine learning model to improve its performance.

Unlike parameters, which are learned from the data during training (e.g., weights in neural networks), hyperparameters are set before training and control aspects of the learning process, such as the complexity of the model, the learning rate, and the number of iterations.

Effective hyperparameter tuning can make a significant difference in a model’s performance. The process involves experimenting with different hyperparameter values, training the model, and evaluating its performance to find the optimal set.

2. Common Hyperparameters in Machine Learning

Different types of machine learning algorithms have different hyperparameters.

Below are examples of common hyperparameters for some popular algorithms:

Linear Models (e.g., Linear Regression, Logistic Regression): regularization strength (e.g., L1 or L2 penalties) and learning rate (for gradient descent).

Decision Trees: maximum depth of the tree, minimum samples required to split a node, and minimum samples required at a leaf node.

Support Vector Machines (SVM): kernel type (linear, polynomial, RBF), regularization parameter (C), and gamma value for the RBF kernel.

Neural Networks: number of layers and neurons per layer, learning rate, dropout rate (for regularization), and batch size.

Random Forests: number of trees in the forest, maximum depth of each tree, and minimum samples required to split nodes.

Each of these hyperparameters affects how the model learns, generalizes, and fits the data.

3. Techniques for Hyperparameter Tuning

There are several techniques for hyperparameter tuning, each with its strengths and limitations:

a) Grid Search

Grid search is a brute-force method for hyperparameter tuning. In grid search, you define a grid of possible hyperparameter values and systematically evaluate all combinations to find the best one.

For example, you might test various learning rates and regularization values for a logistic regression model.

Advantages:

Simple and exhaustive; covers all combinations in the search space.

Easy to implement using libraries like scikit-learn.

Disadvantages:

Computationally expensive, especially when the hyperparameter space is large.

Can be time-consuming, as all combinations are evaluated regardless of their effectiveness.

Example:

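```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

# Example data so the snippet runs on its own (iris stands in for your X_train/y_train)
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# 3 values of C x 2 kernels = 6 combinations, each scored with 5-fold cross-validation
param_grid = {'C': [0.1, 1, 10], 'kernel': ['linear', 'rbf']}
grid_search = GridSearchCV(SVC(), param_grid, cv=5)
grid_search.fit(X_train, y_train)
print("Best parameters:", grid_search.best_params_)
```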

b) Random Search

Random search randomly selects hyperparameter combinations from a defined search space. Unlike grid search, it doesn’t evaluate all combinations but selects a subset, which can lead to quicker results, especially for large search spaces.

Advantages:

More efficient than grid search, especially when the hyperparameter space is large.

Less computationally expensive and faster than grid search.

Disadvantages:

No guarantee of finding the optimal set of hyperparameters, as it only evaluates a random subset.

Example:

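```python
from scipy.stats import randint
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV, train_test_split

# Example data so the snippet runs on its own (any X_train/y_train works)
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Sample 100 combinations from these distributions instead of trying them all
param_dist = {"n_estimators": randint(10, 200), "max_depth": randint(1, 20)}
random_search = RandomizedSearchCV(
    RandomForestClassifier(), param_dist, n_iter=100, cv=5, random_state=0
)
random_search.fit(X_train, y_train)
print("Best parameters:", random_search.best_params_)
```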

c) Bayesian Optimization

Bayesian optimization is a probabilistic, model-based method that uses past evaluation results to guide the search for the best hyperparameters.

It builds a probabilistic model of the objective function (usually the validation score) and uses it to choose the next set of hyperparameters to evaluate.
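The loop below is a minimal sketch of Bayesian optimization under simplifying assumptions: a single hyperparameter discretized to 201 points in [0, 1], a hypothetical objective function standing in for an expensive validation score, scikit-learn's GaussianProcessRegressor with a Matern kernel as the probabilistic (surrogate) model, and expected improvement as the rule for choosing the next point. Dedicated libraries handle multiple dimensions, mixed parameter types, and noisy scores far more carefully.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern


def objective(x):
    """Hypothetical stand-in for an expensive validation score (peak at x = 0.3)."""
    return -((x - 0.3) ** 2)


def expected_improvement(mu, sigma, best, xi=0.01):
    """Expected improvement over the best score so far (for maximization)."""
    sigma = np.maximum(sigma, 1e-12)  # avoid division by zero
    improvement = mu - best - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)


rng = np.random.default_rng(0)
candidates = np.linspace(0.0, 1.0, 201)  # discretized search space
evaluated = list(rng.choice(len(candidates), size=3, replace=False))  # random warm-up
scores = [objective(candidates[i]) for i in evaluated]

for _ in range(12):
    # 1. Fit the surrogate model to all (hyperparameter, score) pairs seen so far
    kernel = Matern(length_scale=0.2, length_scale_bounds=(0.05, 10.0), nu=2.5)
    gp = GaussianProcessRegressor(kernel=kernel, alpha=1e-6, normalize_y=True)
    gp.fit(candidates[evaluated].reshape(-1, 1), scores)

    # 2. Score every candidate with the acquisition function
    mu, sigma = gp.predict(candidates.reshape(-1, 1), return_std=True)
    ei = expected_improvement(mu, sigma, max(scores))
    ei[evaluated] = -np.inf  # never re-evaluate a point

    # 3. Evaluate the most promising candidate and add it to the history
    nxt = int(np.argmax(ei))
    evaluated.append(nxt)
    scores.append(objective(candidates[nxt]))

best_x = candidates[evaluated[int(np.argmax(scores))]]
print(f"best x after {len(scores)} evaluations: {best_x:.3f}")
```

The lower bound on the kernel's length scale is a design choice that keeps the surrogate from collapsing into noise when only a few points have been observed.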

Advantages:

Usually needs fewer evaluations than grid search or random search, which matters most when each evaluation (a full training run) is expensive.

Each new trial is informed by all previous results, so the search concentrates on promising regions of the space.

Disadvantages:

Requires specialized libraries (e.g., scikit-optimize, Optuna, or Hyperopt) or a hand-built surrogate model, for example one based on scikit-learn's GaussianProcessRegressor.

Adds computational overhead for building and updating the probabilistic model.

d) Genetic Algorithms

Genetic algorithms use principles of natural selection (such as selection, crossover, and mutation) to explore the hyperparameter search space.

These algorithms generate a population of candidate solutions (hyperparameter combinations) and evolve it over several generations, keeping and recombining the best-performing candidates in search of an optimal solution.
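A toy genetic algorithm, as a sketch of these ideas rather than a production implementation. Assumptions: a decision tree on the iris dataset, a hypothetical two-gene search space (max_depth and min_samples_split), 3-fold cross-validated accuracy as the fitness, truncation selection that keeps the best half, uniform crossover, and random-reset mutation.

```python
import random

from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)  # small demo dataset (assumption)

# Hypothetical search space: inclusive integer bounds for each hyperparameter
SPACE = {"max_depth": (1, 10), "min_samples_split": (2, 20)}
rng = random.Random(0)


def random_individual():
    return {name: rng.randint(lo, hi) for name, (lo, hi) in SPACE.items()}


def fitness(ind):
    # Mean 3-fold cross-validated accuracy is the "fitness" of a candidate
    model = DecisionTreeClassifier(random_state=0, **ind)
    return cross_val_score(model, X, y, cv=3).mean()


def crossover(a, b):
    # Uniform crossover: each gene is inherited from a randomly chosen parent
    return {name: rng.choice([a[name], b[name]]) for name in SPACE}


def mutate(ind, rate=0.2):
    # With probability `rate`, replace a gene with a fresh random value
    return {
        name: rng.randint(*SPACE[name]) if rng.random() < rate else value
        for name, value in ind.items()
    }


population = [random_individual() for _ in range(8)]
for generation in range(5):
    # Selection: the fitter half become parents and also survive unchanged (elitism)
    parents = sorted(population, key=fitness, reverse=True)[:4]
    children = [mutate(crossover(*rng.sample(parents, 2))) for _ in range(4)]
    population = parents + children

best = max(population, key=fitness)
print("best hyperparameters:", best, "fitness:", round(fitness(best), 3))
```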

Advantages:

Can handle complex search spaces.

Effective in exploring both continuous and discrete hyperparameter spaces.

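Disadvantages:

Computationally intensive, since every individual in every generation requires a full training run.

Requires careful tuning of the genetic algorithm's own parameters (population size, mutation rate, number of generations).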

4. Tools for Hyperparameter Tuning

There are several tools and libraries that simplify the hyperparameter tuning process:

a) Scikit-learn

Scikit-learn provides easy-to-use implementations for grid search (GridSearchCV) and random search (RandomizedSearchCV).

It also offers tools for cross-validation to ensure that hyperparameter tuning results are reliable.

b) Optuna

Optuna is an open-source hyperparameter optimization framework that offers features like automatic pruning of unpromising trials and parallelization.

It supports various optimization algorithms, including Bayesian optimization.

c) Hyperopt

Hyperopt is another Python library for optimizing hyperparameters using Bayesian optimization and random search. It supports parallelization and can be used with a variety of machine learning frameworks.

d) Ray Tune

Ray Tune is a scalable hyperparameter tuning framework built on Ray. It provides efficient support for distributed hyperparameter optimization, including advanced algorithms such as Hyperband.
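Ray Tune itself needs a Ray installation, so as a dependency-light illustration of the idea behind Hyperband, this sketch swaps in scikit-learn's experimental HalvingRandomSearchCV. It implements successive halving, the routine Hyperband runs repeatedly with different budgets: many candidates start on a small budget, and only the best fraction advance to larger ones. The digits dataset, the decision tree, and the search distributions are assumptions.

```python
from scipy.stats import randint
from sklearn.datasets import load_digits
from sklearn.experimental import enable_halving_search_cv  # noqa: F401 (enables the import below)
from sklearn.model_selection import HalvingRandomSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_digits(return_X_y=True)

param_dist = {"max_depth": randint(2, 20), "min_samples_split": randint(2, 11)}

# Start 27 random candidates on a small sample budget; after each round keep
# roughly the best third (factor=3) and give the survivors 3x more samples
search = HalvingRandomSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_dist,
    n_candidates=27,
    factor=3,
    resource="n_samples",
    random_state=0,
)
search.fit(X, y)

print("candidates per round:", search.n_candidates_)
print("samples per round:   ", search.n_resources_)
print("best parameters:     ", search.best_params_)
```

The n_candidates_ and n_resources_ attributes record the schedule that was actually used, which makes it easy to check how aggressively weak candidates were discarded.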

e) Keras Tuner

For deep learning models, Keras Tuner is a library designed specifically for tuning the hyperparameters of Keras models.

It integrates seamlessly with TensorFlow and supports methods like random search, Hyperband, and Bayesian optimization.

5. Best Practices for Hyperparameter Tuning

To make the process more efficient and effective, here are some best practices:

Use cross-validation:

This helps ensure that your hyperparameter tuning results are reliable and not overfitted to a single train-test split.

Start simple:

Begin with a smaller, manageable search space and progressively increase complexity if necessary.

Parallelization:

Tools like Ray Tune and Optuna allow you to run multiple hyperparameter tuning jobs in parallel, speeding up the process.

Monitor performance:

Keep track of how different hyperparameter sets affect model performance, and use visualization tools to analyze the results.
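One way to combine these practices in scikit-learn, sketched under assumptions (iris data, an RBF SVM, and hand-picked grids): a coarse, log-spaced search first, then a finer search around the best value, with 5-fold cross-validation throughout, n_jobs=-1 to parallelize the fits across CPU cores, and cv_results_ to inspect how each setting performed.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)  # small demo dataset (assumption)
model = make_pipeline(StandardScaler(), SVC(kernel="rbf"))

# Stage 1 ("start simple"): a coarse, log-spaced grid over C only
coarse = GridSearchCV(model, {"svc__C": np.logspace(-3, 3, 7)}, cv=5, n_jobs=-1)
coarse.fit(X, y)
best_c = coarse.best_params_["svc__C"]

# Stage 2: a finer grid around the best coarse value, now also tuning gamma
fine_grid = {
    "svc__C": best_c * np.array([0.3, 1.0, 3.0]),
    "svc__gamma": ["scale", 0.01, 0.1, 1.0],
}
fine = GridSearchCV(model, fine_grid, cv=5, n_jobs=-1)  # n_jobs=-1: use all CPU cores
fine.fit(X, y)

# Monitor performance: mean and spread of the cross-validated score per setting
results = fine.cv_results_
for params, mean, std in zip(results["params"], results["mean_test_score"],
                             results["std_test_score"]):
    print(f"{params}: {mean:.3f} +/- {std:.3f}")
print("best:", fine.best_params_)
```

The standard deviation across folds is worth watching alongside the mean: two settings with similar means but very different spreads are not equally trustworthy.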

6. Conclusion

Hyperparameter tuning is a crucial part of machine learning model optimization.

Whether you’re working with a simple linear model or a complex deep learning architecture, finding the right hyperparameters can significantly improve model performance.

By leveraging techniques like grid search, random search, and Bayesian optimization, along with powerful tools like scikit-learn, Optuna, and Keras Tuner, you can efficiently find a well-performing set of hyperparameters for your model.

WEBSITE: https://www.ficusoft.in/data-science-course-in-chennai/
