#data handling in iot

Switchgear Solutions for Solar and Wind Energy Systems

Why Switchgear Matters in Solar and Wind Systems

Switchgear plays a central role in controlling, isolating, and protecting electrical equipment. In renewable energy applications, it helps:

· Manage power flow from variable energy sources.

· Protect systems from faults or overloads.

· Ensure seamless grid integration and disconnection when needed.

Unlike traditional power plants, solar and wind systems generate intermittent power, requiring switchgear that can handle dynamic loads and frequent switching.

Challenges in Renewable Energy Applications

Here are some of the unique challenges renewable energy systems face — and how they impact switchgear selection:

1. Variable Output

Solar and wind energy production fluctuates based on weather and time of day. This demands switchgear that can:

· Handle frequent load changes.

· Operate reliably under fluctuating voltages and currents.

2. Decentralized Generation

Unlike centralized grids, solar and wind systems are often spread out across multiple locations.

· Modular, compact switchgear is preferred for such installations.

· Smart monitoring becomes critical to manage performance remotely.

3. Harsh Environments

Wind turbines operate at high altitudes, and solar farms are often exposed to heat, dust, or salt.

· Switchgear needs to be rugged, weather-resistant, and have high IP ratings.

· Outdoor switchgear enclosures and temperature management are essential.

Key Features of Switchgear for Solar & Wind

When designing or upgrading renewable energy systems, look for switchgear that offers:

1. Remote Monitoring and Control

Smart switchgear integrated with IoT technology allows operators to track real-time data, detect faults early, and optimize system performance.

2. High Interruption Capacity

Wind and solar systems can experience fault currents and voltage transients. Modern switchgear provides high breaking capacities to interrupt fault currents safely.

3. Modular Design

Allows for easy upgrades and maintenance — crucial for scaling renewable installations.

4. Eco-Friendly Design

Look for SF₆-free switchgear that uses clean air or other sustainable alternatives to reduce environmental impact.

5. Hybrid Capabilities

Switchgear that can connect both AC and DC sources is increasingly valuable in mixed-source grids.
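To make the remote monitoring feature above more concrete, here is a minimal sketch of how telemetry from smart switchgear might be checked for early fault signs. The `Reading` schema, the feeder names, and both limit values are assumptions made for illustration; real limits come from the equipment ratings and the site's protection study.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One telemetry sample from a switchgear feeder (hypothetical schema)."""
    feeder_id: str
    current_a: float      # measured current in amperes
    temperature_c: float  # enclosure temperature in degrees Celsius


# Illustrative limits only; real values come from the equipment ratings.
MAX_CURRENT_A = 630.0
MAX_TEMPERATURE_C = 70.0


def find_alerts(readings):
    """Return (feeder_id, reason) pairs for readings outside the limits."""
    alerts = []
    for r in readings:
        if r.current_a > MAX_CURRENT_A:
            alerts.append((r.feeder_id, "overcurrent"))
        if r.temperature_c > MAX_TEMPERATURE_C:
            alerts.append((r.feeder_id, "overtemperature"))
    return alerts


sample = [
    Reading("F1", 410.0, 45.0),
    Reading("F2", 655.0, 52.0),
    Reading("F3", 300.0, 74.5),
]
alerts = find_alerts(sample)
print(alerts)
```

A simple threshold check like this is only a starting point. Trend-based checks usually catch slow degradation earlier than fixed limits do.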

LV, MV, and HV Switchgear for Renewables

· Low Voltage (LV) Switchgear: Used in residential or small-scale solar systems. Compact, safe, and cost-effective.

· Medium Voltage (MV) Switchgear: Ideal for commercial and industrial solar/wind applications.

· High Voltage (HV) Switchgear: Essential for utility-scale wind farms or solar plants feeding into the national grid.

Each type requires specific protection, metering, and automation components tailored to its load and system requirements.

Final Thoughts

Switchgear is the backbone of any successful solar or wind energy system. As these technologies become more mainstream, the demand for resilient, intelligent, and environmentally friendly switchgear solutions will continue to rise.

Whether you’re an energy consultant, project developer, or facility manager, choosing the right switchgear today will set the stage for long-term efficiency, safety, and scalability.

Hire Dedicated Developers in India Smarter with AI

Hire dedicated developers in India smarter and faster with AI-powered solutions. As businesses worldwide turn to software development outsourcing, India remains a top destination for IT talent acquisition. However, finding the right developers can be challenging due to skill evaluation, remote team management, and hiring efficiency concerns. Fortunately, AI recruitment tools are revolutionizing the hiring process, making it seamless and effective.

In this blog, I will explore how AI-powered developer hiring is transforming the recruitment landscape and how businesses can leverage these tools to build top-notch offshore development teams.

Why Hire Dedicated Developers in India?

1) Cost-Effective Without Compromising Quality:

Hiring dedicated developers in India can reduce costs by up to 60% compared to hiring in the U.S., Europe, or Australia. This makes it a cost-effective solution for businesses seeking high-quality IT staffing solutions in India.

2) Access to a Vast Talent Pool:

India has a massive talent pool with millions of software engineers proficient in AI, blockchain, cloud computing, and other emerging technologies. This ensures companies can find dedicated software developers in India for any project requirement.

3) Time-Zone Advantage for 24/7 Productivity:

Indian developers work across different time zones, allowing continuous development cycles. This enhances productivity and ensures faster project completion.

4) Expertise in Emerging Technologies:

Indian developers are highly skilled in cutting-edge fields like AI, IoT, and cloud computing, making them invaluable for innovative projects.

Challenges in Hiring Dedicated Developers in India

1) Finding the Right Talent Efficiently:

Sorting through thousands of applications manually is time-consuming. AI-powered recruitment tools streamline the process by filtering candidates based on skill match and experience.

2) Evaluating Technical and Soft Skills:

Traditional hiring struggles to assess real-world coding abilities and soft skills like teamwork and communication. AI-driven hiring processes include coding assessments and behavioral analysis for better decision-making.

3) Overcoming Language and Cultural Barriers:

AI in HR and recruitment helps evaluate language proficiency and cultural adaptability, ensuring smooth collaboration within offshore development teams.

4) Managing Remote Teams Effectively:

AI-driven remote work management tools help businesses track performance, manage tasks, and ensure accountability.

How AI is Transforming Developer Hiring

1. AI-Powered Candidate Screening:

AI recruitment tools use resume parsing, skill-matching algorithms, and machine learning to shortlist the best candidates quickly.

2. AI-Driven Coding Assessments:

Developer assessment tools conduct real-time coding challenges to evaluate technical expertise, code efficiency, and problem-solving skills.

3. AI Chatbots for Initial Interviews:

AI chatbots handle initial screenings, assessing technical knowledge, communication skills, and cultural fit before human intervention.

4. Predictive Analytics for Hiring Success:

AI analyzes past hiring data and candidate work history to predict long-term success, improving recruitment accuracy.

5. AI in Background Verification:

AI-powered background checks ensure candidate authenticity, education verification, and fraud detection, reducing hiring risks.
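As a rough illustration of the skill-matching idea behind candidate screening, the sketch below scores each candidate by the fraction of required skills they list. This is a deliberately simple stand-in, not how any named hiring tool works; the candidate names, skills, and the 0.5 threshold are made up for the example.

```python
def skill_match(required, candidate):
    """Fraction of required skills the candidate lists (0.0 to 1.0)."""
    required = {s.lower() for s in required}
    candidate = {s.lower() for s in candidate}
    if not required:
        return 0.0
    return len(required & candidate) / len(required)


def shortlist(required, candidates, threshold=0.5):
    """Return (name, score) pairs at or above the threshold, best first."""
    scored = [(name, skill_match(required, skills))
              for name, skills in candidates.items()]
    kept = [(name, score) for name, score in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


required = ["Python", "Django", "SQL", "Docker"]
candidates = {
    "candidate_a": ["python", "django", "sql", "aws"],
    "candidate_b": ["java", "sql"],
    "candidate_c": ["Python", "Django", "SQL", "Docker"],
}
result = shortlist(required, candidates)
print(result)
```

Keyword overlap ignores experience depth and related skills, so a score like this is better used to order a review queue than to reject anyone automatically.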

Steps to Hire Dedicated Developers in India Smarter with AI

1. Define Job Roles and Key Skill Requirements:

Outline essential technical skills, experience levels, and project expectations to streamline recruitment.

2. Use AI-Based Hiring Platforms:

Use leading AI hiring platforms such as LinkedIn Talent Insights and HireVue to source top developers.

3. Implement AI-Driven Skill Assessments:

AI-powered recruitment processes use coding tests and behavioral evaluations to assess real-world problem-solving abilities.

4. Conduct AI-Powered Video Interviews:

AI-driven interview tools analyze body language, sentiment, and communication skills for improved hiring accuracy.

5. Optimize Team Collaboration with AI Tools:

Remote work management tools like Trello, Asana, and Jira enhance productivity and ensure smooth collaboration.

Top AI-Powered Hiring Tools for Businesses

LinkedIn Talent Insights — AI-driven talent analytics

HackerRank — AI-powered coding assessments

HireVue — AI-driven video interview analysis

Pymetrics — AI-based behavioral and cognitive assessments

X0PA AI — AI-driven talent acquisition platform

Best Practices for Managing AI-Hired Developers in India

1. Establish Clear Communication Channels:

Use collaboration tools like Slack, Microsoft Teams, and Zoom for seamless communication.

2. Leverage AI-Driven Productivity Tracking:

Monitor performance using AI-powered tracking tools like Time Doctor and Hubstaff to optimize workflows.

3. Encourage Continuous Learning and Upskilling:

Provide access to AI-driven learning platforms like Coursera and Udemy to keep developers updated on industry trends.

4. Foster Cultural Alignment and Team Bonding:

Organize virtual team-building activities to enhance collaboration and engagement.

Future of AI in Developer Hiring

1) AI-Driven Automation for Faster Hiring:

AI will continue automating tedious recruitment tasks, improving efficiency and candidate experience.

2) AI and Blockchain for Transparent Recruitment:

Integrating AI with blockchain will enhance candidate verification and data security for trustworthy hiring processes.

3) AI’s Role in Enhancing Remote Work Efficiency:

AI-powered analytics and automation will further improve productivity within offshore development teams.

Conclusion:

AI revolutionizes the hiring of dedicated developers in India by automating candidate screening, coding assessments, and interview analysis. Businesses can leverage AI-powered tools to efficiently find, evaluate, and manage top-tier offshore developers, ensuring cost-effective and high-quality software development outsourcing.

Ready to hire dedicated developers in India using AI? iQlance offers cutting-edge AI-powered hiring solutions to help you find the best talent quickly and efficiently. Get in touch today!

#AI#iqlance#hire#india#hirededicatreddevelopersinIndiawithAI#hirededicateddevelopersinindia#aipoweredhiringinindia#bestaihiringtoolsfordevelopers#offshoresoftwaredevelopmentindia#remotedeveloperhiringwithai#costeffectivedeveloperhiringindia#aidrivenrecruitmentforitcompanies#dedicatedsoftwaredevelopersindia#smarthiringwithaiinindia#aipowereddeveloperscreening

How Civil Engineering Courses Are Evolving with New Technology

Civil engineering is no longer just about bricks, cement, and bridges. It has become one of the most future-focused fields today. If you are planning to study civil engineering, you must understand how the course has evolved. The best civil engineering colleges are now offering much more than classroom learning.

You now study with technology, not just about it. And this shift is shaping your career in ways that were never possible before.

Technology is Changing the Civil Engineering Classroom

In the past, civil engineering courses relied on heavy theory and basic field training. Today, you learn through software, simulations, and smart labs. At universities like BBDU in Lucknow, classrooms are powered by tools like AutoCAD, Revit, STAAD Pro, and BIM.

These tools help you visualize structures, test designs, and even simulate natural forces.

You work on 3D modeling tools

You test designs virtually before real-world execution

You understand smart city layouts and green construction methods

This means your learning is hands-on, job-ready, and tech-driven.

You Learn What the Industry Actually Uses

Most construction and infrastructure companies now depend on digital tools to plan, design, and execute projects. This is why modern B.Tech Civil Engineering courses include:

Building Information Modelling (BIM)

Geographic Information Systems (GIS)

Remote Sensing Technology

Drones for land surveying

IoT sensors in smart infrastructure

Courses in colleges like BBDU include these topics in the curriculum. You do not just learn civil engineering. You learn the tools that companies expect you to know from day one.

The Future of Civil Engineering is Data-Driven

You might not think of civil engineering as a data-heavy field. But now, big data is used to monitor structural health, traffic flow, and resource planning. Many universities have added data analysis and AI basics to help you understand how smart infrastructure works.

By learning how to handle real-time data from buildings or roads, you become more skilled and more employable.
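For a feel of what handling real-time data from a structure can look like, here is a small sketch that flags sudden spikes in a stream of sensor readings by comparing each value with the average of the few readings before it. The strain values, the window size, and the 1.5 factor are all assumptions chosen for illustration.

```python
from statistics import mean


def flag_spikes(values, window=3, factor=1.5):
    """Return indexes whose value exceeds factor * mean of the previous `window` values."""
    flagged = []
    for i in range(window, len(values)):
        baseline = mean(values[i - window:i])
        if values[i] > factor * baseline:
            flagged.append(i)
    return flagged


# Made-up strain gauge readings with one obvious spike at index 4.
strain = [100, 102, 98, 101, 180, 103, 99]
spikes = flag_spikes(strain)
print(spikes)
```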

Real-World Exposure is Now Part of the Course

Good civil engineering colleges in Uttar Pradesh understand that you need industry exposure. Colleges like BBDU offer:

Internships with construction firms and government bodies

Industry guest lectures and site visits

Capstone projects linked to real problems

You are not just attending lectures. You are solving real-world construction challenges while still in college.

Why Choose BBDU for Civil Engineering?

In Lucknow, BBDU is one of the few private universities offering a modern civil engineering course with world-class infrastructure. You learn in smart labs, access tools used by top firms, and receive career counseling throughout the program.

Here’s what makes BBDU a smart choice:

Advanced labs and smart classrooms

Training in AutoCAD, STAAD Pro, BIM

Live projects and on-site construction learning

Career cell and placement support

Affordable fees and scholarships for deserving students

Civil Engineering is Still One of the Most Stable Careers

Reports show that India will spend over ₹100 lakh crore on infrastructure in the next few years. Roads, smart cities, renewable power plants, metros – all need civil engineers. And companies prefer students trained in construction technology, digital tools, and real-world planning.

So, if you're thinking about joining a course, look at how it prepares you for tomorrow.

The future of civil engineering is digital, and your education should be too. Choose a program that keeps up with the times. Choose a university that helps you build more than just buildings – it helps you build your future.

Apply now at BBDU – one of the most future-focused civil engineering colleges in Uttar Pradesh.

What is Python, How to Learn Python?

What is Python?

Python is a high-level, interpreted programming language known for its simplicity and readability. It is widely used in fields such as:

✅ Web Development (Django, Flask)

✅ Data Science & Machine Learning (Pandas, NumPy, TensorFlow)

✅ Automation & Scripting (Web scraping, File automation)

✅ Game Development (Pygame)

✅ Cybersecurity & Ethical Hacking

✅ Embedded Systems & IoT (MicroPython)

Python is beginner-friendly because of its easy-to-read syntax, large community, and vast library support.
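To show what that easy-to-read syntax looks like in practice, here is a short sketch that counts the most common words in a sentence using only the standard library. The function name `top_words` and the sample sentence are just for illustration.

```python
from collections import Counter


def top_words(text, n=3):
    """Return the n most common words in text as (word, count) pairs."""
    words = text.lower().split()
    return Counter(words).most_common(n)


result = top_words("the cat and the hat and the bat", n=2)
print(result)
```

Even without knowing Python, most readers can follow what each line does, which is a large part of why it works well as a first language.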

How Long Does It Take to Learn Python?

The time required to learn Python depends on your goals and background. Here’s a general breakdown:

1. Basics of Python (1-2 months)

If you spend 1-2 hours daily, you can master:

Variables, Data Types, Operators

Loops & Conditionals

Functions & Modules

Lists, Tuples, Dictionaries

File Handling

Basic Object-Oriented Programming (OOP)

2. Intermediate Level (2-4 months)

Once comfortable with basics, focus on:

Advanced OOP concepts

Exception Handling

Working with APIs & Web Scraping

Database handling (SQL, SQLite)

Python Libraries (Requests, Pandas, NumPy)

Small real-world projects
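Two of the intermediate topics above, exception handling and database handling with SQLite, fit together naturally in a small project. The sketch below loads some readings into an in-memory SQLite database, skips values that cannot be parsed, and then queries an average. The table name, column names, and sample data are assumptions made for the example.

```python
import sqlite3


def average_reading(conn, sensor):
    """Average value for a sensor, or None if it has no rows."""
    row = conn.execute(
        "SELECT AVG(value) FROM readings WHERE sensor = ?", (sensor,)
    ).fetchone()
    return row[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor TEXT, value REAL)")

raw = [("temp", "21.5"), ("temp", "bad"), ("temp", "22.5")]
for sensor, text in raw:
    try:
        value = float(text)
    except ValueError:
        continue  # skip malformed values instead of crashing
    conn.execute("INSERT INTO readings VALUES (?, ?)", (sensor, value))
conn.commit()

avg = average_reading(conn, "temp")
print(avg)
```

The `?` placeholders let SQLite handle the values safely, which is a good habit to build before moving on to larger databases.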

3. Advanced Python & Specialization (6+ months)

If you want to go pro, specialize in:

Data Science & Machine Learning (Matplotlib, Scikit-Learn, TensorFlow)

Web Development (Django, Flask)

Automation & Scripting

Cybersecurity & Ethical Hacking

Learning Plan Based on Your Goal

📌 Casual Learning – 3-6 months (for automation, scripting, or general knowledge)

📌 Professional Development – 6-12 months (for jobs in software, data science, etc.)

📌 Deep Mastery – 1-2 years (for AI, ML, complex projects, research)

Scope @ NareshIT:

In NareshIT’s Python Application Development program, you get extensive hands-on training in front-end, middleware, and back-end technology.

It builds your skills through phase-end and capstone projects based on real business scenarios.

Here you learn the concepts from leading industry experts with content structured to ensure industrial relevance.

Build an end-to-end application with exciting features.

Earn an industry-recognized course completion certificate.

For more details:

#classroom#python#education#learning#teaching#institute#marketing#study motivation#studying#onlinetraining

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.
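The core of an event-driven function like this is usually a small handler that takes a batch of event bodies and produces a result. The sketch below shows one possible shape for that logic in plain Python; it is not the project's actual code. The payload fields `device_id` and `temperature_c` are assumptions, and in a real deployment the function would be wrapped by an Azure Functions Event Hubs trigger rather than called directly.

```python
import json


def process_batch(raw_events):
    """Parse JSON event bodies and summarise temperature per device."""
    summary = {}
    for body in raw_events:
        try:
            event = json.loads(body)
            device = event["device_id"]
            temp = float(event["temperature_c"])
        except (ValueError, KeyError, TypeError):
            continue  # drop malformed events rather than failing the batch
        stats = summary.setdefault(device, {"count": 0, "max_c": temp})
        stats["count"] += 1
        stats["max_c"] = max(stats["max_c"], temp)
    return summary


# Hypothetical payloads; the last one is deliberately malformed.
batch = [
    '{"device_id": "dev-1", "temperature_c": 21.0}',
    '{"device_id": "dev-1", "temperature_c": 24.5}',
    '{"device_id": "dev-2", "temperature_c": 19.0}',
    'not json',
]
summary = process_batch(batch)
print(summary)
```

Keeping the handler free of platform imports makes it easy to unit test locally before wiring it to the trigger.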

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.
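To illustrate the scalability point above, here is a sketch of the scaling formula documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The text does not say which autoscaling mechanism the project used, so treating it as the Horizontal Pod Autoscaler is an assumption, as are the replica limits and metric values. The real autoscaler also applies a tolerance and stabilization rules that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count from the HPA ratio formula, clamped to the given bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 pods averaging 90% CPU against a 60% target.
scale_up = desired_replicas(4, 90, 60)
# Example: the same 4 pods averaging only 30% CPU.
scale_down = desired_replicas(4, 30, 60)
print(scale_up, scale_down)
```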

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
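The flow above can be sketched end to end with in-memory stand-ins, which is a handy way to reason about the data shapes before touching any cloud service. In this sketch a `deque` plays the role of Event Hubs, `process` plays the role of the function, `InMemoryStore` stands in for Cosmos DB, and `readings_for` stands in for an API served from AKS. All names and fields are hypothetical.

```python
from collections import deque


class InMemoryStore:
    """Stand-in for the document store (Cosmos DB in the text)."""

    def __init__(self):
        self.items = {}

    def upsert(self, item):
        # Insert or overwrite by document id.
        self.items[item["id"]] = item


def process(event):
    """Processing step: build a document with an id and a Fahrenheit value."""
    return {
        "id": f'{event["device_id"]}-{event["seq"]}',
        "device_id": event["device_id"],
        "temperature_f": event["temperature_c"] * 9 / 5 + 32,
    }


def readings_for(store, device_id):
    """API step: return stored documents for one device."""
    return [d for d in store.items.values() if d["device_id"] == device_id]


# Ingestion step: a queue of made-up device events.
queue = deque([
    {"device_id": "dev-1", "seq": 1, "temperature_c": 20.0},
    {"device_id": "dev-2", "seq": 1, "temperature_c": 25.0},
    {"device_id": "dev-1", "seq": 2, "temperature_c": 30.0},
])

store = InMemoryStore()
while queue:
    store.upsert(process(queue.popleft()))

dev1 = readings_for(store, "dev-1")
print(dev1)
```

Each stand-in can later be swapped for the real service client without changing the shape of the data that flows between the steps.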

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

How to Choose the Best ERP for Engineering and Manufacturing Industry

In today’s fast-paced world, engineering and manufacturing companies face increasing pressure to deliver high-quality products while maintaining efficiency and cost-effectiveness. Implementing the right Enterprise Resource Planning (ERP) software can significantly enhance operations, streamline workflows, and boost productivity. However, with numerous options available, selecting the best ERP software for the engineering and manufacturing industry can be challenging. This guide will help you navigate this decision-making process and choose the most suitable solution for your business.

Why ERP is Crucial for Engineering and Manufacturing

ERP software integrates various business processes, including production, inventory management, supply chain, finance, and human resources. For engineering and manufacturing companies, ERP solutions are particularly vital because they:

Facilitate real-time data sharing across departments.

Enhance supply chain management.

Optimize production planning and scheduling.

Ensure compliance with industry standards.

Reduce operational costs.

Partnering with the right Engineering ERP software company ensures that your organization leverages these benefits to stay competitive in a dynamic market.

Steps to Choose the Best ERP for Engineering and Manufacturing

1. Understand Your Business Needs

Before exploring ERP solutions, evaluate your company’s specific requirements. Identify the pain points in your current processes and prioritize the features you need in an ERP system. Common features for engineering and manufacturing companies include:

Bill of Materials (BOM) management

Production planning and scheduling

Inventory control

Quality management

Financial reporting

Consulting with a reputed ERP software company can help you match your needs with the right features.

2. Look for Industry-Specific Solutions

Generic ERP software might not address the unique needs of the engineering and manufacturing sector. Opt for an ERP software in India that offers modules tailored to your industry. Such solutions are designed to handle specific challenges like multi-level BOM, project costing, and shop floor management.

3. Check Vendor Expertise

Choosing a reliable vendor is as important as selecting the software itself. Research ERP solution providers with a strong track record in serving engineering and manufacturing companies. Look for reviews, case studies, and client testimonials to gauge their expertise.

4. Evaluate Scalability and Flexibility

Your business will grow, and so will your operational requirements. Ensure that the ERP system you choose is scalable and flexible enough to accommodate future needs. The top 10 ERP software providers in India offer scalable solutions that can adapt to changing business demands.

5. Assess Integration Capabilities

An ERP system must integrate seamlessly with your existing tools, such as Computer-Aided Design (CAD) software, Customer Relationship Management (CRM) systems, and IoT devices. A well-integrated system reduces redundancies and enhances efficiency.

6. Prioritize User-Friendliness

A complex system with a steep learning curve can hinder adoption. Choose an ERP software with an intuitive interface and easy navigation. This ensures that your employees can use the system effectively without extensive training.

7. Consider Customization Options

No two businesses are alike. While standard ERP solutions offer core functionalities, some companies require customization to align with specific workflows. A trusted ERP software company in India can provide custom modules tailored to your unique needs.

8. Focus on Data Security

Engineering and manufacturing companies often deal with sensitive data. Ensure that the ERP solution complies with the latest security standards and offers robust data protection features.

9. Compare Pricing and ROI

While cost is an important factor, it should not be the sole criterion. Evaluate the long-term return on investment (ROI) offered by different ERP software. A slightly expensive but feature-rich solution from the best ERP software provider in India may deliver better value than a cheaper alternative with limited functionalities.

10. Test Before You Commit

Most ERP software companies offer free trials or demo versions. Use these opportunities to test the software in a real-world scenario. Gather feedback from your team and ensure the solution meets your expectations before finalizing your decision.
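The pricing comparison in step 9 is easier to reason about with numbers. The sketch below uses the basic ROI formula, (benefit - cost) / cost, to compare two options. Every figure is made up for illustration, and a real evaluation would also account for implementation time, training, and ongoing licence costs.

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


# Hypothetical multi-year totals for two ERP options.
options = {
    "feature_rich": {"cost": 50_000, "benefit": 90_000},
    "budget": {"cost": 30_000, "benefit": 42_000},
}

results = {name: roi(o["benefit"], o["cost"]) for name, o in options.items()}
best = max(results, key=results.get)
print(results, best)
```

With these assumed figures the more expensive option returns 80% against 40% for the cheaper one, which is the kind of outcome step 9 warns a price-only comparison would miss.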

Benefits of Partnering with the Best ERP Software Providers in India

India is home to some of the leading ERP software providers, offering state-of-the-art solutions for the engineering and manufacturing sector. Partnering with a reputable provider ensures:

Access to advanced features tailored to your industry.

Reliable customer support.

Comprehensive training and implementation services.

Regular updates and enhancements to the software.

Companies like Shantitechnology (STERP) specialize in delivering cutting-edge ERP solutions that cater specifically to engineering and manufacturing businesses. With years of expertise, they rank among the top 10 ERP software providers in India, ensuring seamless integration and exceptional performance.

Conclusion

Selecting the right ERP software is a critical decision that can impact your company’s efficiency, productivity, and profitability. By understanding your requirements, researching vendors, and prioritizing features like scalability, integration, and security, you can find the perfect ERP solution for your engineering or manufacturing business.

If you are looking for a trusted ERP software company in India, consider partnering with a provider like STERP. As one of the best ERP software providers in India, STERP offers comprehensive solutions tailored to the unique needs of engineering and manufacturing companies. With their expertise, you can streamline your operations, improve decision-making, and stay ahead in a competitive market.

Get in touch with STERP – the leading Engineering ERP software company – to transform your business with a reliable and efficient ERP system. Take the first step toward a smarter, more connected future today!

#Manufacturing ERP software company#ERP solution provider#Engineering ERP software company#ERP software company#ERP software companies

10 Reasons The Dark Side of Siri Can Be a Threat to Your Privacy

Issue 1: Accessibility Issues and Language Limitations with Apple Siri

Siri's limited language support and reliance on voice commands exclude many users, particularly those who speak unsupported languages or have speech impairments.

Issue 2: When Is Apple Siri Listening?

Siri's constant listening on the iPhone raises privacy concerns and risks unauthorized access to personal data. The collected data may create security risks or be used for targeted advertisements, even with Apple's restrictive settings.

Issue 3: Siri Goes Shopping

Siri's integration with shopping apps may save time, but it also gives businesses access to private information, which raises privacy concerns.

Issue 4: IoT Devices

Siri increases convenience by enabling control of IoT devices like lights and thermostats. However, weak security in these devices can expose users to cybercriminals, risking personal data and device control.

Issue 5: Your Conversations in the Cloud

In order to increase accuracy, Siri stores conversations in the cloud, which presents privacy issues and runs the risk of accidentally disclosing private information.

Issue 6: Battery Drain with Siri Usage

The "Hey Siri" feature drains the battery by continuously listening, especially on older devices, with frequent interactions further worsening power depletion.

Issue 7: Over-Reliance on Siri May Lead to Cognitive Decline

Using Siri excessively for everyday tasks could impede cognitive development, especially in teens, by reducing critical thinking, memory retention, and problem-solving skills.

Issue 8: Hacking Risks

Siri and other smart assistants run the risk of exposing private information due to accidental recording or data sharing, even with Apple's emphasis on security.

Issue 9: Privacy Breaches

In 2019, Siri drew criticism after reports that contractors were reviewing users' recordings, which raised privacy concerns and prompted claims that some of the data was being used for advertising.

Issue 10: Misleading Health Information

Siri may give false information or fail to handle emergencies with tact, so depending on it for medical advice can be dangerous.



Text

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware


Text

#TheeForestKingdom #TreePeople

{Terrestrial Kind}

Creating a Tree Citizenship Identification and Serial Number System (#TheeForestKingdom) is an ambitious and environmentally-conscious initiative. Here’s a structured proposal for its development:

Project Overview

The Tree Citizenship Identification system aims to assign every tree in California a unique identifier, track its health, and integrate it into a registry, recognizing trees as part of a terrestrial citizenry. This system will emphasize environmental stewardship, ecological research, and forest management.

Phases of Implementation

Preparation Phase

Objective: Lay the groundwork for tree registration and tracking.

Actions:

Partner with environmental organizations, tech companies, and forestry departments.

Secure access to satellite imaging and LiDAR mapping systems.

Design a digital database capable of handling millions of records.

Tree Identification System Development

Components:

Label and Identity Creation: Assign a unique ID to each tree based on location and attributes. Example: CA-Tree-XXXXXX (state-code, tree-type, unique number).

Attributes to Record:

Health: Regular updates using AI for disease detection.

Age: Approximate based on species and growth patterns.

Type: Species and subspecies classification.

Class: Size, ecological importance, and biodiversity contribution.

Rank: Priority based on cultural, historical, or environmental significance.
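The ID pattern described above could be generated with a small helper along these lines. This is only a sketch: the function name `make_tree_id`, the six-digit zero padding, and the use of a short species label for the "tree-type" field are assumptions, since the proposal gives only the pattern CA-Tree-XXXXXX.

```python
def make_tree_id(state_code: str, tree_type: str, serial: int, width: int = 6) -> str:
    """Build an ID of the form <STATE>-<tree-type>-<zero-padded serial>.

    The field widths and naming are hypothetical; only the overall
    pattern (state code, tree type, unique number) comes from the proposal.
    """
    if serial < 0 or serial >= 10 ** width:
        raise ValueError(f"serial must fit in {width} digits")
    return f"{state_code.upper()}-{tree_type}-{serial:0{width}d}"


print(make_tree_id("ca", "Oak", 42))  # prints CA-Oak-000042
```

Keeping the serial number zero-padded means IDs sort correctly as plain strings, which may help when the registry grows to millions of records.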

Data Collection

Technologies to Use:

Satellite Imaging: To locate and identify tree clusters.

LiDAR Scanning: For precise 3D modeling of forests.

On-the-Ground Surveys: To supplement remote sensing data.

AI Analysis: For rapid categorization and health diagnostics.

Registration and Citizenship Assignment

Tree Dossier: Each tree receives a profile in the database with all its recorded attributes.

Citizen Designation: Trees are acknowledged as citizens of Thee Forest Kingdom, emphasizing ecological respect.

Legal Framework: Advocate for laws to grant trees specific rights under environmental protection acts.

Maintenance and Monitoring

Health Monitoring: Use drones, satellite updates, and IoT sensors for ongoing health assessments.

Database Updates: Incorporate real-time data about environmental changes and tree growth.

Public Involvement

Encourage people to "adopt" trees and participate in their preservation.

Create educational programs to promote awareness of tree citizenship.

Key Benefits

Environmental Conservation: Improved forest management through real-time data and protection of ecosystems.

Cultural Recognition: Trees are recognized as a vital part of the Earth’s community, honoring their importance.

Ecological Advocacy: Strengthened legal protections for forests and individual trees.

Next Steps

Assemble a task force of environmental scientists, satellite imaging experts, and software developers.

Secure funding through governmental grants, non-profits, and eco-conscious corporations.

Pilot the program in a smaller region before scaling to all of California.


The implementation of the Tree Citizenship Identification Network and System (#TheeForestKingdom) requires a robust technological and operational framework to ensure efficiency, accuracy, and sustainability. Below is an implementation plan divided into major components.

System Architecture

a. Centralized Tree Database

Purpose: To store and manage tree data including ID, attributes, location, and health.

Components:

Cloud-based storage for scalability.

Data categorization based on regions, species, and priority.

Integration with satellite and IoT data streams.

b. Satellite & Imaging Integration

Use satellite systems (e.g., NASA, ESA) for large-scale tree mapping.

Incorporate LiDAR and aerial drone data for detailed imaging.

AI/ML algorithms to process images and distinguish tree types.

c. IoT Sensor Network

Deploy sensors in forests to monitor:

Soil moisture and nutrient levels.

Air quality and temperature.

Tree health metrics like growth rate and disease markers.

d. Public Access Portal

Create a user-friendly website and mobile application for:

Viewing registered trees.

Citizen participation in tree adoption and reporting.

Data visualization (e.g., tree density, health status by region).

Core Technologies

a. Software and Tools

Geographic Information System (GIS): Software like ArcGIS for mapping and spatial analysis.

Database Management System (DBMS): SQL-based systems for structured data; NoSQL for unstructured data.

Artificial Intelligence (AI): Tools for image recognition, species classification, and health prediction.

Blockchain (Optional): To ensure transparency and immutability of tree citizen data.

b. Hardware

Servers: Cloud-based (AWS, Azure, or Google Cloud) for scalability.

Sensors: Low-power IoT devices for on-ground monitoring.

Drones: Equipped with cameras and sensors for aerial surveys.

Network Design

a. Data Flow

Input Sources:

Satellite and aerial imagery.

IoT sensors deployed in forests.

Citizen-reported data via mobile app.

Data Processing:

Use AI to analyze images and sensor inputs.

Automate ID assignment and attribute categorization.

Data Output:

Visualized maps and health reports on the public portal.

Alerts for areas with declining tree health.

b. Communication Network

Fiber-optic backbone: For high-speed data transmission between regions.

Cellular Networks: To connect IoT sensors in remote areas.

Satellite Communication: For remote regions without cellular coverage.

Implementation Plan

a. Phase 1: Pilot Program

Choose a smaller, biodiverse region in California (e.g., Redwood National Park).

Test satellite and drone mapping combined with IoT sensors.

Develop the prototype of the centralized database and public portal.

b. Phase 2: Statewide Rollout

Expand mapping and registration to all California regions.

Deploy IoT sensors in vulnerable or high-priority areas.

Scale up database capacity and integrate additional satellite providers.

c. Phase 3: Maintenance & Updates

Establish a monitoring team to oversee system health and data quality.

Update the network with new technologies (e.g., advanced AI models).

Periodically review and revise data collection protocols.

Partnerships

Government Agencies: U.S. Forest Service, California Department of Forestry and Fire Protection.

Tech Companies: Collaborate with Google Earth, Amazon AWS, or Microsoft for cloud and AI capabilities.

Environmental Organizations: Partner with WWF, Sierra Club, or TreePeople for funding and advocacy.

Budget Estimation

Initial Investment:

Satellite data and licenses: $10M

IoT sensors and deployment: $5M

Database and app development: $3M

Operational Costs:

Cloud services and data storage: $2M/year

Maintenance and monitoring: $1M/year

Research and development: $1M/year
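To see what these figures add up to, the arithmetic can be sketched as below. The dollar amounts are the ones listed above; the five-year horizon and the dictionary and function names are assumptions chosen only for illustration.

```python
# Figures in millions of US dollars, taken from the budget estimate above.
INITIAL_MUSD = {"satellite_data": 10, "iot_sensors": 5, "database_and_app": 3}
ANNUAL_MUSD = {"cloud_services": 2, "maintenance": 1, "research": 1}


def total_cost_musd(years: int) -> int:
    """Initial investment plus operating costs over the given number of years."""
    return sum(INITIAL_MUSD.values()) + years * sum(ANNUAL_MUSD.values())


print(sum(INITIAL_MUSD.values()))  # 18 (initial investment, $M)
print(sum(ANNUAL_MUSD.values()))   # 4 (operating cost, $M per year)
print(total_cost_musd(5))          # 38 (hypothetical five-year total, $M)
```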

Long-term Vision

Expand the system to other states or globally.

Use the database for reforestation efforts and combating deforestation.

Develop educational programs to teach communities about the importance of trees as citizens.


To begin building the program for the Tree Citizenship Identification System, we’ll start with a scalable framework that allows us to process, store, and analyze data while preparing for future expansions. Here's an actionable development plan with an initial focus on foundational code and infrastructure:

Step-by-Step Development

Phase 1: Setting Up the Backend Infrastructure

Objective: Create the core database and develop the data pipeline for processing tree data.

Technologies:

Programming Languages: Python, JavaScript (Node.js), SQL.

Frameworks: Flask or FastAPI for APIs, Django for web application backend.

Database: PostgreSQL for structured data, MongoDB for unstructured data.

Code Snippet 1: Database Schema Design (PostgreSQL)

-- The GEOGRAPHY type below requires the PostGIS extension
CREATE EXTENSION IF NOT EXISTS postgis;

-- Table for Tree Registry
CREATE TABLE trees (
    tree_id SERIAL PRIMARY KEY,            -- Unique identifier
    location GEOGRAPHY(POINT, 4326),       -- Geolocation of the tree
    species VARCHAR(100),                  -- Species name
    age INTEGER,                           -- Approximate age in years
    health_status VARCHAR(50),             -- e.g., Healthy, Diseased
    height FLOAT,                          -- Tree height in meters
    canopy_width FLOAT,                    -- Canopy width in meters
    citizen_rank VARCHAR(50),              -- Class or rank of the tree
    last_updated TIMESTAMP DEFAULT NOW()   -- Timestamp for last update
);

-- Table for Sensor Data (IoT Integration)
CREATE TABLE tree_sensors (
    sensor_id SERIAL PRIMARY KEY,          -- Unique identifier for sensor
    tree_id INT REFERENCES trees(tree_id), -- Linked to tree
    soil_moisture FLOAT,                   -- Soil moisture level
    air_quality FLOAT,                     -- Air quality index
    temperature FLOAT,                     -- Surrounding temperature
    last_updated TIMESTAMP DEFAULT NOW()   -- Timestamp for last reading
);

Code Snippet 2: Backend API for Tree Registration (Python with Flask)

from flask import Flask, request, jsonify
from sqlalchemy import create_engine, text
from sqlalchemy.orm import sessionmaker

app = Flask(__name__)

# Database configuration (replace the placeholder credentials)
DATABASE_URL = "postgresql://username:password@localhost/tree_registry"
engine = create_engine(DATABASE_URL)
Session = sessionmaker(bind=engine)

@app.route('/register_tree', methods=['POST'])
def register_tree():
    data = request.json
    new_tree = {
        "species": data['species'],
        # WKT expects longitude first, then latitude
        "location": f"POINT({data['longitude']} {data['latitude']})",
        "age": data['age'],
        "health_status": data['health_status'],
        "height": data['height'],
        "canopy_width": data['canopy_width'],
        "citizen_rank": data['citizen_rank']
    }
    # Open one session per request; SQLAlchemy 2.x requires raw SQL to be wrapped in text()
    with Session() as session:
        session.execute(text("""
            INSERT INTO trees (species, location, age, health_status, height, canopy_width, citizen_rank)
            VALUES (:species, ST_GeomFromText(:location, 4326), :age, :health_status, :height, :canopy_width, :citizen_rank)
        """), new_tree)
        session.commit()
    return jsonify({"message": "Tree registered successfully!"}), 201

if __name__ == '__main__':
    app.run(debug=True)

Phase 2: Satellite Data Integration

Objective: Use satellite and LiDAR data to identify and register trees automatically.

Tools:

Google Earth Engine for large-scale mapping.

Sentinel-2 or Landsat satellite data for high-resolution imagery.

Example Workflow:

Process satellite data using Google Earth Engine.

Identify tree clusters using image segmentation.

Generate geolocations and pass data into the backend.
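The "identify tree clusters" step in this workflow can be illustrated with a toy example. The sketch below is not Google Earth Engine code: it assumes the satellite image has already been reduced to a small grid of vegetation-index values (NDVI is an assumption here, as is the 0.5 threshold), and it groups neighboring vegetation pixels with a plain flood fill. Converting the pixel centroids to real geolocations is left out.

```python
from collections import deque


def find_clusters(mask):
    """Group 4-connected True cells and return one (row, col) centroid per group."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill starting from an unvisited vegetation pixel.
            queue, cells = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            centroids.append((
                sum(y for y, _ in cells) / len(cells),
                sum(x for _, x in cells) / len(cells),
            ))
    return centroids


# Made-up vegetation-index grid; values above 0.5 are treated as tree cover.
ndvi = [
    [0.8, 0.7, 0.1, 0.0],
    [0.9, 0.2, 0.1, 0.6],
    [0.1, 0.0, 0.1, 0.7],
]
mask = [[value > 0.5 for value in row] for row in ndvi]
clusters = find_clusters(mask)
print(len(clusters))  # 2
```

Each centroid would then be mapped to a longitude and latitude and posted to the registration endpoint. A production pipeline would more likely rely on a trained segmentation model than on a fixed threshold.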

Phase 3: IoT Sensor Integration

Deploy IoT devices to monitor health metrics of specific high-priority trees.

Use MQTT protocol for real-time data transmission.

Code Snippet: Sensor Data Processing (Node.js)

const mqtt = require('mqtt');
const { Client } = require('pg');

// PostgreSQL client; connection settings come from the standard PG* environment variables
const dbClient = new Client();
dbClient.connect().catch((err) => console.error('Database connection failed', err));

const client = mqtt.connect('mqtt://broker.hivemq.com');

client.on('connect', () => {
  console.log('Connected to MQTT Broker');
  client.subscribe('tree/sensor_data');
});

client.on('message', (topic, message) => {
  const sensorData = JSON.parse(message.toString());
  console.log(`Received data: ${JSON.stringify(sensorData)}`);
  // Save data to database (example for PostgreSQL)
  saveToDatabase(sensorData);
});

function saveToDatabase(data) {
  // Parameterized query keeps sensor payloads from being interpreted as SQL
  const query = `
    INSERT INTO tree_sensors (tree_id, soil_moisture, air_quality, temperature)
    VALUES ($1, $2, $3, $4)
  `;
  const values = [data.tree_id, data.soil_moisture, data.air_quality, data.temperature];
  dbClient.query(query, values, (err) => {
    if (err) console.error('Error saving to database', err);
    else console.log('Sensor data saved successfully!');
  });
}

Phase 4: Public Portal Development

Frontend Technologies:

React.js or Vue.js for interactive user interfaces.

Mapbox or Leaflet.js for tree visualization on maps.

Features:

Interactive tree maps with search functionality.

User registration for tree adoption programs.

Deployment Plan

Use Docker to containerize the application.

Deploy on AWS, Azure, or Google Cloud for scalability.

Monitor system health using Prometheus and Grafana.

Next Steps

Develop a testing environment for pilot deployment.

Add AI-based disease detection and prediction using TensorFlow or PyTorch.

Create an adoption feature where citizens can adopt trees and track their health.


I'll prepare the project framework and outline the repository structure for the Tree Citizenship Identification System. Below is the proposed structure for the repository, followed by details of the files and components.

Proposed Repository Structure

tree-citizenship-id-system/
├── backend/
│   ├── app.py                        # Flask application for backend API
│   ├── database/
│   │   ├── schema.sql                # Database schema for PostgreSQL
│   │   ├── db_config.py              # Database connection configuration
│   │   └── seed_data.sql             # Initial sample data for testing
│   ├── services/
│   │   ├── tree_registration.py      # Functions for tree registration
│   │   └── sensor_data.py            # Functions for processing IoT sensor data
│   └── requirements.txt              # Python dependencies
├── frontend/
│   ├── public/
│   │   ├── index.html                # Main HTML file
│   │   └── favicon.ico               # Favicon for the app
│   ├── src/
│   │   ├── App.js                    # Main React/Vue app file
│   │   ├── components/
│   │   │   ├── TreeMap.js            # Map component for visualizing trees
│   │   │   └── TreeDetails.js        # Component for detailed tree view
│   │   └── styles.css                # Styling for the frontend
│   └── package.json                  # Frontend dependencies
├── scripts/
│   ├── satellite_data_processor.py   # Script for processing satellite data
│   └── sensor_data_simulator.py      # Mock data generator for IoT sensors
├── deployment/
│   ├── Dockerfile                    # Docker configuration
│   ├── docker-compose.yml            # Docker Compose for multi-service setup
│   └── cloud_setup_instructions.md   # Deployment guide for cloud platforms
├── tests/
│   ├── test_backend.py               # Unit tests for backend API
│   └── test_frontend.js              # Unit tests for frontend
├── README.md                         # Overview of the project
└── LICENSE                           # Project license

Repository Details

Backend:

app.py: Main Flask app for handling API requests like tree registration and sensor data.

database/schema.sql: SQL script for creating necessary tables.

services/: Directory for modular backend logic.

Frontend:

App.js: Main entry point for the frontend application.

TreeMap.js: Integrates Mapbox/Leaflet.js for displaying tree locations.

TreeDetails.js: Displays detailed information about a selected tree.

Scripts:

satellite_data_processor.py: Automates the extraction and classification of tree data from satellite imagery.

sensor_data_simulator.py: Generates fake sensor readings for development and testing.
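As an illustration of what sensor_data_simulator.py might contain, here is a minimal sketch. The field names match the tree_sensors table and the MQTT consumer shown earlier; the value ranges, the function name `simulate_reading`, and the fixed random seed are assumptions made for the example. Publishing the payload to the MQTT broker is left out so the snippet runs without a network connection.

```python
import json
import random


def simulate_reading(tree_id: int, rng: random.Random) -> dict:
    """Return one fake sensor reading; the ranges are illustrative guesses."""
    return {
        "tree_id": tree_id,
        "soil_moisture": round(rng.uniform(5.0, 60.0), 1),  # percent
        "air_quality": round(rng.uniform(0.0, 200.0), 1),   # air quality index
        "temperature": round(rng.uniform(-5.0, 40.0), 1),   # degrees Celsius
    }


rng = random.Random(0)  # fixed seed so test runs are repeatable
payload = json.dumps(simulate_reading(1, rng))
print(payload)
```

The JSON string is in the shape the Node.js subscriber parses, so it could be published to the tree/sensor_data topic during development.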

Deployment:

Docker configuration ensures that the app runs consistently across environments.

Cloud setup instructions provide guidelines for deploying on platforms like AWS, Azure, or GCP.

Tests:

Unit tests ensure the reliability of both the backend and frontend.

Next Steps

I will initialize the repository structure locally.

Package the files and components needed for the first version.

Provide a link to the repository for access.



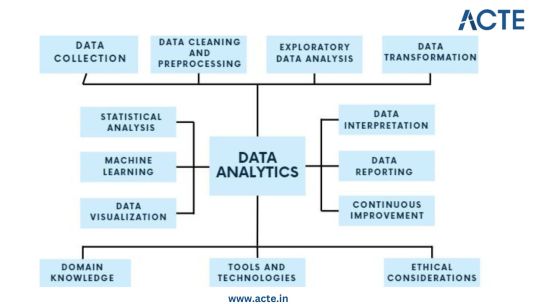

Text

The capacity to draw insightful conclusions from data is a highly sought-after skill in today's data-driven environment, and the process behind it is the most important concept in data analytics to understand. Data analytics gives businesses a competitive edge by enabling them to find hidden patterns, make informed decisions, and gain insight. This guide walks you step by step through the fundamentals of data analytics, whether you're a business professional trying to improve your decision-making or a data enthusiast eager to explore the world of analytics.

Step 1: Data Collection - Building the Foundation

Identify Data Sources: Begin by pinpointing the relevant sources of data, which could include databases, surveys, web scraping, or IoT devices, aligning them with your analysis objectives.

Define Clear Objectives: Clearly articulate the goals and objectives of your analysis to ensure that the collected data serves a specific purpose.

Include Structured and Unstructured Data: Collect both structured data, such as databases and spreadsheets, and unstructured data like text documents or images to gain a comprehensive view.

Establish Data Collection Protocols: Develop protocols and procedures for data collection to maintain consistency and reliability.

Ensure Data Quality and Integrity: Implement measures to ensure the quality and integrity of your data throughout the collection process.

Step 2: Data Cleaning and Preprocessing - Purifying the Raw Material

Handle Missing Values: Address missing data through techniques like imputation to ensure your dataset is complete.

Remove Duplicates: Identify and eliminate duplicate entries to maintain data accuracy.

Address Outliers: Detect and manage outliers using statistical methods to prevent them from skewing your analysis.

Standardize and Normalize Data: Bring data to a common scale, making it easier to compare and analyze.

Ensure Data Integrity: Ensure that data remains accurate and consistent during the cleaning and preprocessing phase.
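The cleaning steps above can be sketched in a few lines of standard-library Python. The specific choices here are assumptions made for illustration, not the only way to do it: missing values are filled with the median, outliers are dropped using the 1.5 × IQR rule, and values are min-max scaled to the range 0 to 1. The sample records are made up.

```python
from statistics import median, quantiles


def clean(records):
    """records: list of (record_id, value_or_None) tuples."""
    # Remove duplicates: keep the first occurrence of each record_id.
    seen, unique = set(), []
    for rec_id, value in records:
        if rec_id not in seen:
            seen.add(rec_id)
            unique.append((rec_id, value))

    # Handle missing values: impute with the median of the observed values.
    fill = median(v for _, v in unique if v is not None)
    filled = [(i, fill if v is None else v) for i, v in unique]

    # Address outliers: drop values more than 1.5 * IQR outside the quartiles.
    q1, _, q3 = quantiles([v for _, v in filled], n=4)
    low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
    kept = [(i, v) for i, v in filled if low <= v <= high]

    # Normalize: min-max scale the remaining values to [0, 1].
    vmin = min(v for _, v in kept)
    vmax = max(v for _, v in kept)
    span = (vmax - vmin) or 1.0  # avoid dividing by zero when all values match
    return [(i, (v - vmin) / span) for i, v in kept]


raw = [(1, 10.0), (2, 12.0), (2, 12.0), (3, None), (4, 11.0), (5, 13.0), (6, 500.0)]
cleaned = clean(raw)
print([rec_id for rec_id, _ in cleaned])  # [1, 2, 3, 4, 5]
```

In practice a library such as pandas is a common choice for this work; the plain-Python version is used here only to keep each step visible.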

Step 3: Exploratory Data Analysis (EDA) - Understanding the Data

Visualize Data with Histograms, Scatter Plots, etc.: Use visualization tools like histograms, scatter plots, and box plots to gain insights into data distributions and patterns.

Calculate Summary Statistics: Compute summary statistics such as means, medians, and standard deviations to understand central tendencies.

Identify Patterns and Trends: Uncover underlying patterns, trends, or anomalies that can inform subsequent analysis.

Explore Relationships Between Variables: Investigate correlations and dependencies between variables to inform hypothesis testing.

Guide Subsequent Analysis Steps: The insights gained from EDA serve as a foundation for guiding the remainder of your analytical journey.

Step 4: Data Transformation - Shaping the Data for Analysis

Aggregate Data (e.g., Averages, Sums): Aggregate data points to create higher-level summaries, such as calculating averages or sums.

Create New Features: Generate new features or variables that provide additional context or insights.

Encode Categorical Variables: Convert categorical variables into numerical representations to make them compatible with analytical techniques.

Maintain Data Relevance: Ensure that data transformations align with your analysis objectives and domain knowledge.
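Two of the transformations above, aggregation and categorical encoding, can be shown with a short sketch. The sample rows and the column names `region` and `sales` are made up for illustration, and one-hot encoding is just one of several possible encodings.

```python
from collections import defaultdict
from statistics import mean

rows = [
    {"region": "north", "sales": 100.0},
    {"region": "south", "sales": 80.0},
    {"region": "north", "sales": 120.0},
]

# Aggregate: average sales per region.
groups = defaultdict(list)
for row in rows:
    groups[row["region"]].append(row["sales"])
avg_sales = {region: mean(values) for region, values in groups.items()}

# Encode: add one 0/1 column per category of the "region" field (one-hot encoding).
categories = sorted(groups)
encoded = [
    {**row, **{f"region_{c}": int(row["region"] == c) for c in categories}}
    for row in rows
]

print(avg_sales)   # {'north': 110.0, 'south': 80.0}
print(encoded[0])  # {'region': 'north', 'sales': 100.0, 'region_north': 1, 'region_south': 0}
```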

Step 5: Statistical Analysis - Quantifying Relationships

Hypothesis Testing: Conduct hypothesis tests to determine the significance of relationships or differences within the data.

Correlation Analysis: Measure correlations between variables to identify how they are related.

Regression Analysis: Apply regression techniques to model and predict relationships between variables.

Descriptive Statistics: Employ descriptive statistics to summarize data and provide context for your analysis.

Inferential Statistics: Make inferences about populations based on sample data to draw meaningful conclusions.

Step 6: Machine Learning - Predictive Analytics

Algorithm Selection: Choose suitable machine learning algorithms based on your analysis goals and data characteristics.

Model Training: Train machine learning models using historical data to learn patterns.

Validation and Testing: Evaluate model performance using validation and testing datasets to ensure reliability.

Prediction and Classification: Apply trained models to make predictions or classify new data.

Model Interpretation: Understand and interpret machine learning model outputs to extract insights.
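The train, validate, and predict cycle above can be illustrated with a deliberately tiny example. The sketch uses a one-nearest-neighbor classifier written by hand, a made-up dataset where values of 10 or more are labeled "high", and an 80/20 split. All of these are assumptions for illustration; real projects would normally use a library such as scikit-learn.

```python
import random


def predict_1nn(train, x):
    """Classify x with the label of the closest training point (1-nearest neighbor)."""
    return min(train, key=lambda pair: abs(pair[0] - x))[1]


# Made-up labeled data: numbers 0..19, labeled "high" from 10 upward.
data = [(float(v), "high" if v >= 10 else "low") for v in range(20)]

# Shuffle, then hold out the last 20% for testing.
rng = random.Random(42)
rng.shuffle(data)
split = int(0.8 * len(data))
train, test = data[:split], data[split:]

# Evaluate on data the model has not seen during training.
correct = sum(predict_1nn(train, x) == label for x, label in test)
accuracy = correct / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Measuring accuracy on held-out data rather than on the training set is what the "Validation and Testing" step is about: it gives a fairer picture of how the model behaves on new inputs.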

Step 7: Data Visualization - Communicating Insights

Chart and Graph Creation: Create various types of charts, graphs, and visualizations to represent data effectively.

Dashboard Development: Build interactive dashboards to provide stakeholders with dynamic views of insights.

Visual Storytelling: Use data visualization to tell a compelling and coherent story that communicates findings clearly.

Audience Consideration: Tailor visualizations to suit the needs of both technical and non-technical stakeholders.

Enhance Decision-Making: Visualization aids decision-makers in understanding complex data and making informed choices.

Step 8: Data Interpretation - Drawing Conclusions and Recommendations

Recommendations: Provide actionable recommendations based on your conclusions and their implications.

Stakeholder Communication: Communicate analysis results effectively to decision-makers and stakeholders.

Domain Expertise: Apply domain knowledge to ensure that conclusions align with the context of the problem.

Step 9: Continuous Improvement - The Iterative Process

Monitoring Outcomes: Continuously monitor the real-world outcomes of your decisions and predictions.

Model Refinement: Adapt and refine models based on new data and changing circumstances.

Iterative Analysis: Embrace an iterative approach to data analysis to maintain relevance and effectiveness.

Feedback Loop: Incorporate feedback from stakeholders and users to improve analytical processes and models.

Step 10: Ethical Considerations - Data Integrity and Responsibility

Data Privacy: Ensure that data handling respects individuals' privacy rights and complies with data protection regulations.

Bias Detection and Mitigation: Identify and mitigate bias in data and algorithms to ensure fairness.

Fairness: Strive for fairness and equitable outcomes in decision-making processes influenced by data.

Ethical Guidelines: Adhere to ethical and legal guidelines in all aspects of data analytics to maintain trust and credibility.

Data analytics is an exciting and profitable field that enables people and companies to use data to make wise decisions. By understanding the fundamentals described in this guide, you'll be prepared to start your data analytics journey. Keep in mind that practice and ongoing learning are essential to becoming a skilled data analyst. If you need help implementing data analytics in your organization, or if you want to learn more, consider consulting professionals or signing up for specialized courses. The ACTE Institute offers comprehensive data analytics training courses that can provide you with the knowledge and skills necessary to excel in this field, along with job placement and certification. So put on your work boots, investigate the resources, and begin transforming your data into insight.


Text

Artificial Intelligence in the Real Estate Industry:

Artificial intelligence (AI) is increasingly being utilized in the real estate industry to streamline processes, enhance decision-making, and improve overall efficiency. Here are some ways AI is making an impact in real estate:

1. Property Valuation: AI algorithms can analyze vast amounts of data including historical sales data, property features, neighborhood characteristics, and market trends to accurately estimate property values. This helps sellers and buyers to make informed decisions about pricing.

2. Predictive Analytics: AI-powered predictive analytics can forecast market trends, identify investment opportunities, and anticipate changes in property values. This information assists investors, developers, and real estate professionals in making strategic decisions.

3. Virtual Assistants and Chatbots: AI-driven virtual assistants and chatbots can handle customer inquiries, schedule property viewings, and provide personalized recommendations to potential buyers or renters. This improves customer service and helps real estate agents manage their workload more efficiently.

4. Property Search and Recommendation: AI algorithms can analyze user preferences, search history, and behavior patterns to provide personalized property recommendations to buyers and renters. This enhances the property search experience and increases the likelihood of finding suitable listings.

5. Property Management: AI-powered tools can automate routine property management tasks such as rent collection, maintenance scheduling, and tenant communication. This reduces administrative overhead and allows property managers to focus on more strategic aspects of their role.

6. Risk Assessment: AI algorithms can analyze factors such as credit history, employment status, and financial stability to assess the risk associated with potential tenants or borrowers. This helps landlords and lenders make informed decisions about leasing or lending.

7. Smart Building Technology: AI-enabled sensors and IoT devices can collect and analyze data on building occupancy, energy consumption, and environmental conditions to optimize building operations, improve energy efficiency, and enhance occupant comfort.
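To make the property valuation idea in item 1 a little more concrete, here is a minimal sketch that fits a straight line to past sales and uses it to estimate a price. It is only an illustration, not how any particular valuation product works: the sample figures are invented, the names `fit_line` and `estimate_price` are hypothetical, and real systems use many more features than floor area.

```python
# Hypothetical sample data: (floor area in square metres, sale price).
# These figures are invented purely for illustration.
sales = [(50, 150_000), (70, 210_000), (90, 270_000), (120, 360_000)]


def fit_line(points):
    """Fit price = slope * area + intercept by ordinary least squares."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Spread of the areas, and how area and price vary together.
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def estimate_price(area, slope, intercept):
    """Estimate a price for a given floor area using the fitted line."""
    return slope * area + intercept


slope, intercept = fit_line(sales)
print(round(estimate_price(100, slope, intercept)))
```

A single-feature line like this is the simplest possible model. The neighborhood characteristics and market trends mentioned above would enter as additional inputs in a more realistic setup.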

#KhalidAlbeshri#pivot#Holdingcompany#CEO#Realestate#realestatedevelopment#contentmarketing#businessmanagement#businessconsultants#businessstartup#marketingtips#خالدالبشري

#advertising#artificial intelligence#autos#business#developers & startups#edtech#education#futurism#finance#marketing


Text

The Critical Role of Structured Cabling in Today's Digital World

In today’s fast-paced, technology-driven world, structured cabling plays a vital role in keeping businesses and homes connected. Whether it’s for data, voice, or video, a well-organized cabling system is the backbone of any communication network. With the increasing demand for high-speed, reliable connections, structured cabling has become more important than ever before. This article explores the significance of structured cabling, how it supports modern technology, and why it’s essential for both businesses and residential setups.

What is Structured Cabling?

Structured cabling refers to the standardized approach used to organize and install cables that carry data and communication signals. It’s a complete system of cabling and associated hardware, designed to provide a comprehensive telecommunications infrastructure.

This type of cabling supports a wide range of applications, including internet, phone systems, and video conferencing. By creating a structured layout, this system ensures efficient data flow and makes it easier to manage upgrades, changes, or troubleshooting.

Structured cabling systems are divided into six main components: entrance facilities, backbone cabling, horizontal cabling, telecommunications rooms, work area components, and equipment rooms. These components work together to create a seamless communication network.

The Benefits of Structured Cabling

The primary benefit of structured cabling is its ability to support high-performance networks. It’s designed to handle large volumes of data, ensuring that businesses can operate without interruption.

Additionally, structured cabling offers flexibility. It allows for the easy addition of new devices and systems without needing to overhaul the entire infrastructure. This scalability is especially important in today’s world, where technology is constantly evolving.

Structured cabling also enhances efficiency. It reduces the risk of downtime by providing a reliable, organized system that is easy to manage. Troubleshooting and maintenance become simpler, saving businesses time and resources.

Finally, structured cabling offers future-proofing. With this type of system, businesses can stay ahead of technological advancements, as it supports higher data transfer rates and new technologies like 5G and IoT.

How Structured Cabling Supports Modern Technology

As technology advances, the need for fast and reliable data transmission grows. Structured cabling supports a wide range of modern technologies that are critical for businesses and homes.

For businesses, having a robust structured cabling system is essential for running daily operations. From cloud computing to video conferencing, every aspect of a company’s communication relies on a solid network foundation. Employees need to access data quickly, collaborate in real-time, and use cloud-based software efficiently. Without structured cabling, these tasks become more difficult and less reliable.

In homes, structured cabling ensures that entertainment systems, smart devices, and internet connections run smoothly. As smart home technology becomes more prevalent, having a reliable cabling system in place is key to integrating these devices and maintaining their performance.

The Importance of Structured Cabling in Data Centers

Data centers are the heart of any company’s IT infrastructure, and structured cabling is critical to their success. These facilities store vast amounts of data and support essential business functions like email, file storage, and cloud services.

A structured cabling system in a data center enables efficient communication between servers, storage systems, and network devices. It allows data to move quickly and reliably across the network. Without it, data centers would struggle with congestion, leading to slower performance and increased downtime.

The efficiency and scalability of structured cabling make it ideal for data centers, where the demand for faster data transmission is always growing. With the rise of cloud computing, IoT, and big data, structured cabling has become more critical than ever in keeping data centers running at peak performance.

Why Structured Cabling is Crucial for Future Growth

As technology continues to evolve, businesses need to be prepared for future growth. Structured cabling provides the foundation for that growth by offering a scalable, flexible solution that can adapt to new technologies.

One of the most significant trends in technology today is the rise of the Internet of Things (IoT). IoT devices, such as smart sensors and connected appliances, rely on strong network connections to function properly. A structured cabling system ensures that these devices can communicate with each other seamlessly, supporting the expanding ecosystem of connected technology.

Additionally, structured cabling supports faster internet speeds and higher bandwidth, both of which are essential for businesses and homes. With the rise of 5G and other advanced technologies, having a robust cabling infrastructure will be crucial for staying competitive and keeping up with the demands of modern technology.

Working with Professionals for Installation

Installing structured cabling requires expertise, as it’s a complex process that involves designing a layout, selecting the right cables, and ensuring everything is properly organized. This is where working with professionals becomes important.

For businesses or homeowners searching for local networking services, it's essential to work with a contractor who understands the unique needs of each project. Whether upgrading an existing system or installing new cabling from scratch, experienced professionals can design and implement a system that ensures optimal performance.

Professional installation not only helps ensure that the system is set up correctly, but also minimizes the risk of future issues. Experienced installers can make sure that your structured cabling system is scalable, efficient, and capable of supporting future technologies.

Conclusion

Structured cabling is the backbone of today’s digital world, providing the reliable infrastructure needed for businesses and homes to stay connected. It supports the rapid growth of modern technologies like cloud computing, IoT, and 5G, while also offering flexibility and scalability for future advancements.

For anyone looking to enhance their network performance, investing in structured cabling is a smart choice. It’s an investment in efficiency, reliability, and the future of technology. By working with professionals who understand the importance of structured cabling, you can ensure that your communication infrastructure is ready to meet the demands of today and tomorrow.


Text

FPGA Market - Exploring the Growth Dynamics

The FPGA market is growing rapidly as FPGAs find a foothold in many current technologies. FPGAs are versatile components that can be programmed and reprogrammed to perform specialized tasks, and they are driving innovation across industries such as telecommunications, automotive, aerospace, and consumer electronics. Traditional fixed-function chips cannot be reconfigured for a new application, whereas FPGAs can. This allows fast prototyping and iteration, which is extremely important in fast-moving technology fields such as telecommunications and data centers. FPGAs are also well suited to executing complex algorithms and processing data at high speed, which positions them to handle the demands of next-generation networks and cloud computing infrastructure.

In the aerospace and defense industries, FPGAs have made a critical contribution to improving system performance and reliability. Their flexibility enables the complex signal processing, encryption, and communication systems that defense applications require. FPGAs provide the speed and flexibility needed to meet the most stringent specifications of aerospace and defense projects such as satellite communications, radar systems, and electronic warfare. Continuing improvements in FPGA technology, with higher processing power and lower power consumption, are fueling demand in these critical areas.

Consumer electronics is another emerging application area for FPGAs. Across smartphones, smart devices, and the IoT, demand for low-power, high-performance computing is rising. FPGAs make it possible to integrate a wide range of functions onto a single chip, which reduces the number of components required and saves space and power. This is useful to consumer electronics manufacturers who want state-of-the-art products with advanced features and high efficiency. As IoT devices proliferate, FPGAs will continue to foster innovation in this area.

Competition and investment in the FPGA market are growing as key players develop more advanced and efficient products. Investment in R&D is increasing FPGA performance, expanding the feature set, and bringing costs down. This competitive environment is driving innovation and giving end users a wider choice of products, which contributes to the growth of the whole market.

Author Bio -

Akshay Thakur

Senior Market Research Expert at The Insight Partners


Text

Intel VTune Profiler For Data Parallel Python Applications

Intel VTune Profiler tutorial

This brief tutorial will show you how to use Intel VTune Profiler to profile the performance of a Python application using the NumPy and Numba example applications.

Analysing Performance in Applications and Systems

For HPC, cloud, IoT, media, storage, and other applications, Intel VTune Profiler helps optimise application performance, system performance, and system configuration.

CPU, GPU, and FPGA: Optimise the performance of the entire application, not just the accelerated portion.

Multilingual: Profile SYCL, C, C++, C#, Fortran, OpenCL code, Python, Google Go, Java, .NET, Assembly, or any combination of languages.

Application or System: Obtain detailed results mapped to source code or coarse-grained system data for a longer time period.

Power: Maximise efficiency without resorting to thermal or power-related throttling.

VTune platform profiler

It has the following features.

Optimisation of Algorithms

Find your code’s “hot spots,” or the sections that take the longest.

Use Flame Graph to see hot code paths and the amount of time spent in each function and its callees.
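As a rough illustration of what finding a hot spot means, the sketch below uses Python's built-in cProfile module, not VTune itself, to rank functions by cumulative time. The functions `slow_sum`, `fast_sum`, `workload`, and `profile_hotspots` are hypothetical names made up for this example.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Sum of squares with an explicit loop: the intended hot spot."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def fast_sum(n):
    """Same result via the closed form for 0^2 + 1^2 + ... + (n-1)^2."""
    return (n - 1) * n * (2 * n - 1) // 6


def workload():
    """Call both versions so the profile can compare them."""
    slow_sum(200_000)
    fast_sum(200_000)


def profile_hotspots(func, top=5):
    """Run func under cProfile and return a text report sorted by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


report = profile_hotspots(workload)
print(report)
```

In the printed report, the loop-based function should account for most of the time, which is the kind of signal a hotspot analysis surfaces. VTune presents comparable information with far more detail, including the flame graph view mentioned above.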

Bottlenecks in Microarchitecture and Memory