#data integration software for architecture


Text

Data Integration Software for Architecture

W3Partnership Ltd specializes in providing data integration software for the architecture industry. Our solutions connect various software tools, including BIM and CAD, ensuring consistent, accurate data across systems. This integration improves communication between teams, reduces redundancies, and accelerates project timelines, ultimately enhancing efficiency and productivity throughout the architectural process.

Visit: https://www.insertbiz.com/listing/202-sovereign-court-witan-gate-east-milton-keynes-england-united-kingdom-mk9-2hp-w3-partnership/


Text

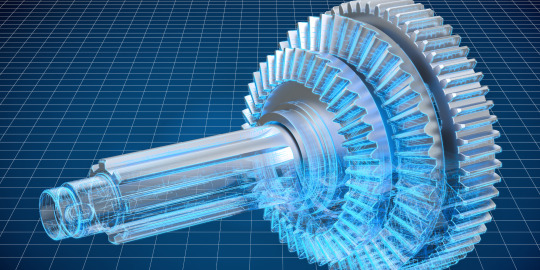

Unveiling the Power of 3D Visualization: Revolutionizing Engineering Applications

In the world of engineering, complex concepts and intricate designs often require effective means of communication to convey ideas, identify potential issues, and foster innovation. 3D visualization has emerged as a powerful tool that not only aids in comprehending intricate engineering concepts but also fuels creativity and enhances collaboration among multidisciplinary teams. This blog dives deep into the realm of 3D visualization for engineering applications, exploring its benefits, applications, and the technologies driving its evolution.

The Power of 3D Visualization

1. Enhanced Understanding: Traditional 2D drawings and diagrams can sometimes fall short in capturing the full complexity of engineering designs. 3D visualization empowers engineers, architects, and designers to create realistic and immersive representations of their ideas. This level of detail allows stakeholders to grasp concepts more easily and make informed decisions.

2. Identification of Design Flaws: One of the primary advantages of 3D visualization is its ability to identify potential design flaws before physical prototyping begins. Engineers can simulate real-world conditions, test stress points, and analyze the behavior of components in various scenarios. This process saves both time and resources that would have been wasted on rectifying issues post-construction.

3. Efficient Communication: When working on multidisciplinary projects, effective communication is essential. 3D visualization simplifies the sharing of ideas by presenting a clear visual representation of the design. This reduces the chances of misinterpretation and encourages productive discussions among team members from diverse backgrounds.

4. Innovation and Creativity: 3D visualization fosters creativity by enabling engineers to experiment with different design variations quickly. This flexibility encourages out-of-the-box thinking and exploration of unconventional ideas, leading to innovative solutions that might not have been considered otherwise.

5. Client Engagement: For projects involving clients or stakeholders who might not have technical expertise, 3D visualization serves as a bridge between complex engineering concepts and layman understanding. Clients can visualize the final product, making it easier to align their expectations with the project's goals.

Applications of 3D Visualization in Engineering

1. Architectural Visualization: In architectural engineering, 3D visualization brings blueprints to life, allowing architects to present realistic walkthroughs of structures before construction. This helps clients visualize the final appearance and make informed decisions about design elements.

2. Product Design and Prototyping: Engineers can use 3D visualization to create virtual prototypes of products, enabling them to analyze the functionality, ergonomics, and aesthetics. This process accelerates the design iteration phase and reduces the number of physical prototypes required.

3. Mechanical Engineering: For mechanical systems, 3D visualization aids in simulating motion, stress analysis, and assembly processes. Engineers can identify interferences, optimize part arrangements, and predict system behavior under different conditions.

4. Civil Engineering and Infrastructure Projects: From bridges to roadways, 3D visualization facilitates the planning and execution of large-scale infrastructure projects. Engineers can simulate traffic flow, assess environmental impacts, and optimize structural design for safety and efficiency.

5. Aerospace and Automotive Engineering: In these industries, intricate designs and high-performance requirements demand rigorous testing. 3D visualization allows engineers to simulate aerodynamics, structural integrity, and other critical factors before manufacturing.
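To make the "identify interferences" point from the mechanical engineering item a little more concrete, here is a minimal sketch of the simplest kind of interference check: testing whether two axis-aligned bounding boxes overlap. The `Box` type and `interferes` function are hypothetical names made up for this illustration. Real CAD packages work on exact geometry and typically use boxes like these only as a fast first pass.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box around a part (hypothetical helper type)."""
    min_corner: Point
    max_corner: Point


def interferes(a: Box, b: Box) -> bool:
    """Return True when the two boxes overlap with positive volume.

    Boxes overlap only if their extents overlap on every axis.
    Boxes that merely touch on a face are not counted as interfering.
    """
    return all(
        a.min_corner[i] < b.max_corner[i] and b.min_corner[i] < a.max_corner[i]
        for i in range(3)
    )


bracket = Box((0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
shaft = Box((1.0, 1.0, 1.0), (3.0, 3.0, 3.0))      # cuts into the bracket
cover = Box((2.0, 0.0, 0.0), (4.0, 2.0, 2.0))      # touches the bracket on one face
```

With these sample parts, `interferes(bracket, shaft)` is true and `interferes(bracket, cover)` is false, since a shared face has no overlapping volume.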

Technologies Driving 3D Visualization

1. Computer-Aided Design (CAD): CAD software forms the foundation of 3D visualization. It enables engineers to create detailed digital models of components and systems. Modern CAD tools offer parametric design, enabling quick modifications and iterative design processes.

2. Virtual Reality (VR) and Augmented Reality (AR): VR and AR technologies enhance the immersive experience of 3D visualization. VR headsets enable users to step into a digital environment, while AR overlays digital content onto the real world, making it ideal for on-site inspections and maintenance tasks.

3. Simulation Software: Simulation tools allow engineers to analyze how a design will behave under various conditions. Finite element analysis (FEA) and computational fluid dynamics (CFD) simulations help predict stress, heat transfer, and fluid flow, enabling design optimization.

4. Rendering Engines: Rendering engines create photorealistic images from 3D models, enhancing visualization quality. These engines simulate lighting, materials, and textures, providing a lifelike representation of the design.

Future Trends and Challenges

As technology evolves, so will the field of 3D visualization for engineering applications. Here are some anticipated trends and challenges:

1. Real-time Collaboration: With the rise of cloud-based tools, engineers worldwide can collaborate on 3D models in real time. This facilitates global teamwork and accelerates project timelines.

2. Artificial Intelligence (AI) Integration: AI could enhance 3D visualization by automating design tasks, predicting failure points, and generating design alternatives based on predefined criteria.

3. Data Integration: Integrating real-time data from sensors and IoT devices into 3D models will enable engineers to monitor performance, identify anomalies, and implement preventive maintenance strategies.

4. Ethical Considerations: As 3D visualization tools become more sophisticated, ethical concerns might arise regarding the potential misuse of manipulated visualizations to deceive stakeholders or obscure design flaws.

In conclusion, 3D visualization is transforming the engineering landscape by enhancing understanding, fostering collaboration, and driving innovation. From architectural marvels to cutting-edge technological advancements, 3D visualization empowers engineers to push the boundaries of what is possible. As technology continues to advance, the future of engineering will undoubtedly be shaped by the dynamic capabilities of 3D visualization.

#3D Visualization#Engineering Visualization#CAD Software#Virtual Reality (VR)#Augmented Reality (AR)#Design Innovation#Visualization Tools#Product Design#Architectural Visualization#Mechanical Engineering#Civil Engineering#Aerospace Engineering#Automotive Engineering#Data Integration#Real-time Visualization#Engineering Trends#Visualization Technologies#Design Optimization


Text

🎄💾🗓️ Day 11: Retrocomputing Advent Calendar - The SEL 840A🎄💾🗓️

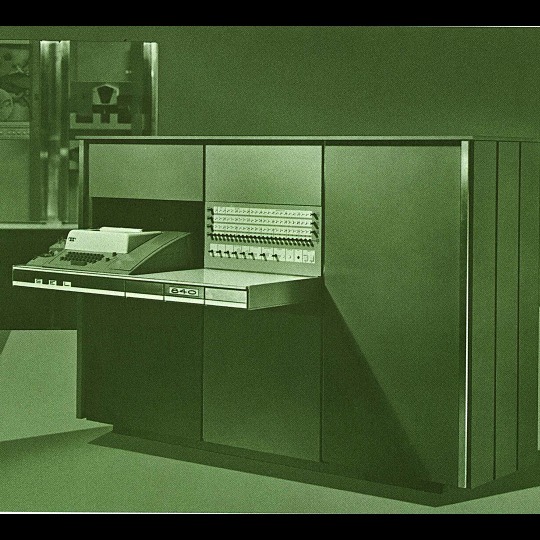

Systems Engineering Laboratories (SEL) introduced the SEL 840A in 1965. This is a deep cut folks, buckle in. It was designed as a high-performance, 24-bit general-purpose digital computer, particularly well-suited for scientific and industrial real-time applications.

It was notable for using silicon monolithic integrated circuits and a modular architecture. It supported advanced computation with features like concurrent floating-point arithmetic via an optional Extended Arithmetic Unit (EAU), which allowed independent arithmetic processing in single or double precision. With a core memory cycle time of 1.75 microseconds and a capacity of up to 32,768 directly addressable words, the SEL 840A had impressive computational speed and versatility for its time.
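For a sense of scale, the memory figures above can be turned into a few derived numbers. This is only back-of-the-envelope arithmetic on the values quoted in the post (24-bit words, 32,768 words, 1.75 microsecond cycle). The conversion to 8-bit bytes is a modern convenience, since the machine was word-addressed, and the cycle rate is an upper bound on memory accesses, not an instruction rate.

```python
WORD_BITS = 24          # word length quoted in the post
MAX_WORDS = 32_768      # directly addressable words quoted in the post
CYCLE_TIME_US = 1.75    # core memory cycle time in microseconds


def address_bits(words: int) -> int:
    """Bits needed to give each of `words` locations a distinct address."""
    return (words - 1).bit_length()


def capacity_bytes(words: int, word_bits: int) -> int:
    """Total capacity expressed in 8-bit bytes."""
    return words * word_bits // 8


def cycles_per_second(cycle_time_us: float) -> float:
    """Memory cycles per second for a given cycle time in microseconds."""
    return 1_000_000 / cycle_time_us


print(address_bits(MAX_WORDS))               # address width in bits
print(capacity_bytes(MAX_WORDS, WORD_BITS))  # capacity in bytes
print(round(cycles_per_second(CYCLE_TIME_US)))
```

That works out to a 15-bit address, 98,304 bytes (96 KiB) of core at the maximum configuration, and roughly 571,000 memory cycles per second.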

Its instruction set covered arithmetic operations, branching, and program control. The computer had fairly robust I/O capabilities, supporting up to 128 input/output units and optional block transfer control for high-speed data movement. SEL 840A had real-time applications, such as data acquisition, industrial automation, and control systems, with features like multi-level priority interrupts and a real-time clock with millisecond resolution.

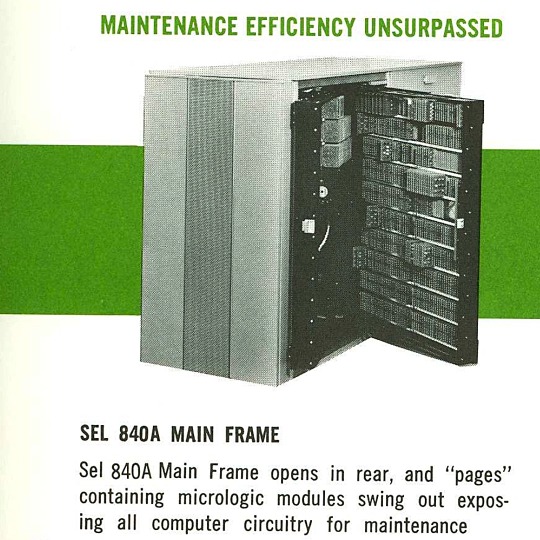

Software support included a FORTRAN IV compiler, mnemonic assembler, and a library of scientific subroutines, making it accessible for scientific and engineering use. The operator’s console provided immediate access to registers, control functions, and user interaction! Designed to be maintained, its modular design had serviceability you do not often see today, with swing-out circuit pages and accessible test points.

And here's a personal… personal computer history from Adafruit team member, Dan…

The first computer I used was an SEL-840A, PDF:

I learned Fortran on it in eighth grade, in 1970. It was at Oak Ridge National Laboratory, where my parents worked, and was used to take data from cyclotron experiments and perform calculations. I later patched the Fortran compiler on it to take single-quoted strings, like 'HELLO', in Fortran FORMAT statements, instead of having to use Hollerith counts, like 5HHELLO.
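For anyone who has not met Hollerith constants: the number before the `H` is the character count of the text that follows, so the programmer had to count characters by hand. The sketch below shows the equivalence between the two notations. It is not Dan's compiler patch (which taught the compiler to accept the quoted form), just an illustration with hypothetical function names, and it ignores edge cases such as doubled apostrophes inside a string.

```python
import re


def to_hollerith(text: str) -> str:
    """Return the Hollerith form nHtext, where n is the character count."""
    return f"{len(text)}H{text}"


# Matches a single-quoted string with no apostrophes inside it.
_QUOTED = re.compile(r"'([^']*)'")


def quoted_to_hollerith(format_spec: str) -> str:
    """Rewrite each single-quoted string in a FORMAT spec as a Hollerith constant."""
    return _QUOTED.sub(lambda m: to_hollerith(m.group(1)), format_spec)


print(to_hollerith("HELLO"))
print(quoted_to_hollerith("(1X,'HELLO',I5)"))
```

The first call gives `5HHELLO`, matching the example in the text, and the second turns `(1X,'HELLO',I5)` into `(1X,5HHELLO,I5)`.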

In 1971-1972, in high school, I used a PDP-10 (model KA10) timesharing system, run by BOCES LIRICS on Long Island, NY, while we were there for one year on an exchange.

This is the front panel of the actual computer I used. I worked at the computer center in the summer. I know the fellow in the picture: he was an older high school student at the time.

The first "personal" computers I used were Xerox Alto, Xerox Dorado, Xerox Dandelion (Xerox Star 8010), Apple Lisa, and Apple Mac, and an original IBM PC. Later I used DEC VAXstations.

Dan kinda wins the first computer contest if there was one… Have first computer memories? Post’em up in the comments, or post yours on socialz’ and tag them #firstcomputer #retrocomputing – See you back here tomorrow!

#retrocomputing#firstcomputer#electronics#sel840a#1960scomputers#fortran#computinghistory#vintagecomputing#realtimecomputing#industrialautomation#siliconcircuits#modulararchitecture#floatingpointarithmetic#computerscience#fortrancode#corememory#oakridgenationallab#cyclotron#pdp10#xeroxalto#computermuseum#historyofcomputing#classiccomputing#nostalgictech#selcomputers#scientificcomputing#digitalhistory#engineeringmarvel#techthroughdecades#console


Text

B-2 Gets Big Upgrade with New Open Mission Systems Capability

July 18, 2024 | By John A. Tirpak

The B-2 Spirit stealth bomber has been upgraded with a new open mission systems (OMS) software capability and other improvements to keep it relevant and credible until it’s succeeded by the B-21 Raider, Northrop Grumman announced. The changes accelerate the rate at which new weapons can be added to the B-2, allow it to accept constant software updates, and adapt it to changing conditions.

“The B-2 program recently achieved a major milestone by providing the bomber with its first fieldable, agile integrated functional capability called Spirit Realm 1 (SR 1),” the company said in a release. It announced the upgrade going operational on July 17, the 35th anniversary of the B-2’s first flight.

SR 1 was developed inside the Spirit Realm software factory codeveloped by the Air Force and Northrop to facilitate software improvements for the B-2. “Open mission systems” means that the aircraft has a non-proprietary software architecture that simplifies software refresh and enhances interoperability with other systems.

“SR 1 provides mission-critical capability upgrades to the communications and weapons systems via an open mission systems architecture, directly enhancing combat capability and allowing the fleet to initiate a new phase of agile software releases,” Northrop said in its release.

The system is intended to deliver problem-free software on the first go and, should software issues arise, to correct them much earlier in the process.

The SR 1 was “fully developed inside the B-2 Spirit Realm software factory that was established through a partnership with Air Force Global Strike Command and the B-2 Systems Program Office,” Northrop said.

The Spirit Realm software factory came into being less than two years ago, with four goals: to reduce flight test risk and testing time through high-fidelity ground testing; to capture more data test points through targeted upgrades; to improve the B-2’s functional capabilities through more frequent, automated testing; and to facilitate more capability upgrades to the jet.

The Air Force said B-2 software updates which used to take two years can now be implemented in less than three months.

In addition to B61 or B83 nuclear weapons, the B-2 can carry a large number of precision-guided conventional munitions. However, the Air Force is preparing to introduce a slate of new weapons that will require near-constant target updates and the ability to integrate with USAF’s evolving long-range kill chain. A quicker process for integrating these new weapons with the B-2’s onboard communications, navigation, and sensor systems was needed.

The upgrade also includes improved displays, flight hardware and other enhancements to the B-2’s survivability, Northrop said.

“We are rapidly fielding capabilities with zero software defects through the software factory development ecosystem and further enhancing the B-2 fleet’s mission effectiveness,” said Jerry McBrearty, Northrop’s acting B-2 program manager.

The upgrade makes the B-2 the first legacy nuclear weapons platform “to utilize the Department of Defense’s DevSecOps [development, security, and operations] processes and digital toolsets,” it added.

The software factory approach accelerates adding new and future weapons to the stealth bomber, and thus improves deterrence, said Air Force Col. Frank Marino, senior materiel leader for the B-2.

The B-2 was not designed using digital methods—the way its younger stablemate, the B-21 Raider was—but the SR 1 leverages digital technology “to design, manage, build and test B-2 software more efficiently than ever before,” the company said.

The digital tools can also link with those developed for other legacy systems to accomplish “more rapid testing and fielding and help identify and fix potential risks earlier in the software development process.”

Following two crashes in recent years, the stealthy B-2 fleet comprises 19 aircraft, which are the only penetrating aircraft in the Air Force’s bomber fleet until the first B-21s are declared to have achieved initial operational capability at Ellsworth Air Force Base, S.D. A timeline for IOC has not been disclosed.

The B-2 is a stealthy, long-range, penetrating nuclear and conventional strike bomber. It is based on a flying wing design combining LO with high aerodynamic efficiency. The aircraft’s blended fuselage/wing holds two weapons bays capable of carrying nearly 60,000 lb in various combinations.

Spirit entered combat during Allied Force on March 24, 1999, striking Serbian targets. Production was completed in three blocks, and all aircraft were upgraded to Block 30 standard with AESA radar. Production was limited to 21 aircraft due to cost, and a single B-2 was subsequently lost in a crash at Andersen, Feb. 23, 2008.

Modernization is focused on safeguarding the B-2A’s penetrating strike capability in high-end threat environments and integrating advanced weapons.

The B-2 achieved a major milestone in 2022 with the integration of a Radar Aided Targeting System (RATS), enabling delivery of the modernized B61-12 precision-guided thermonuclear freefall weapon. RATS uses the aircraft’s radar to guide the weapon in GPS-denied conditions, while additional Flex Strike upgrades feed GPS data to weapons prerelease to thwart jamming. A B-2A successfully dropped an inert B61-12 using RATS on June 14, 2022, and successfully employed the longer-range JASSM-ER cruise missile in a test launch last December.

Ongoing upgrades include replacing the primary cockpit displays, the Adaptable Communications Suite (ACS) to provide Link 16-based jam-resistant in-flight retasking, advanced IFF, crash-survivable data recorders, and weapons integration. USAF is also working to enhance the fleet’s maintainability with LO signature improvements to coatings, materials, and radar-absorptive structures such as the radome and engine inlets/exhausts.

Two B-2s were damaged in separate landing accidents at Whiteman on Sept. 14, 2021, and Dec. 10, 2022, the latter prompting an indefinite fleetwide stand-down until May 18, 2023. USAF plans to retire the fleet once the B-21 Raider enters service in sufficient numbers around 2032.

Contractors: Northrop Grumman; Boeing; Vought.

First Flight: July 17, 1989.

Delivered: December 1993-December 1997.

IOC: April 1997, Whiteman AFB, Mo.

Production: 21.

Inventory: 20.

Operator: AFGSC, AFMC, ANG (associate).

Aircraft Location: Edwards AFB, Calif.; Whiteman AFB, Mo.

Active Variant: •B-2A. Production aircraft upgraded to Block 30 standards.

Dimensions: Span 172 ft, length 69 ft, height 17 ft.

Weight: Max T-O 336,500 lb.

Power Plant: Four GE Aviation F118-GE-100 turbofans, each 17,300 lb thrust.

Performance: Speed high subsonic, range 6,900 miles (further with air refueling).

Ceiling: 50,000 ft.

Armament: Nuclear: 16 B61-7, B61-12, B83, or eight B61-11 bombs (on rotary launchers). Conventional: 80 Mk 62 (500-lb) sea mines, 80 Mk 82 (500-lb) bombs, 80 GBU-38 JDAMs, or 34 CBU-87/89 munitions (on rack assemblies); or 16 GBU-31 JDAMs, 16 Mk 84 (2,000-lb) bombs, 16 AGM-154 JSOWs, 16 AGM-158 JASSMs, or eight GBU-28 LGBs.

Accommodation: Two pilots on ACES II zero/zero ejection seats.


Text

New data model paves way for seamless collaboration among US and international astronomy institutions

Software engineers have been hard at work to establish a common language for a global conversation. The topic—revealing the mysteries of the universe. The U.S. National Science Foundation National Radio Astronomy Observatory (NSF NRAO) has been collaborating with U.S. and international astronomy institutions to establish a new open-source, standardized format for processing radio astronomical data, enabling interoperability between scientific institutions worldwide.

When telescopes are observing the universe, they collect vast amounts of data—for hours, months, even years at a time, depending on what they are studying. Combining data from different telescopes is especially useful to astronomers, to see different parts of the sky, or to observe the targets they are studying in more detail, or at different wavelengths. Each instrument has its own strengths, based on its location and capabilities.

"By setting this international standard, NRAO is taking a leadership role in ensuring that our global partners can efficiently utilize and share astronomical data," said Jan-Willem Steeb, the technical lead of the new data processing program at the NSF NRAO. "This foundational work is crucial as we prepare for the immense data volumes anticipated from projects like the Wideband Sensitivity Upgrade to the Atacama Large Millimeter/submillimeter Array and the Square Kilometer Array Observatory in Australia and South Africa."

By addressing these key aspects, the new data model establishes a foundation for seamless data sharing and processing across various radio telescope platforms, both current and future.

International astronomy institutions collaborating with the NSF NRAO on this process include the Square Kilometer Array Observatory (SKAO), the South African Radio Astronomy Observatory (SARAO), the European Southern Observatory (ESO), the National Astronomical Observatory of Japan (NAOJ), and Joint Institute for Very Long Baseline Interferometry European Research Infrastructure Consortium (JIVE).

The new data model was tested with example datasets from approximately 10 different instruments, including existing telescopes like the Australian Square Kilometer Array Pathfinder and simulated data from proposed future instruments like the NSF NRAO's Next Generation Very Large Array. This broader collaboration ensures the model meets diverse needs across the global astronomy community.

Extensive testing completed throughout this process ensures compatibility and functionality across a wide range of instruments. By addressing these aspects, the new data model establishes a more robust, flexible, and future-proof foundation for data sharing and processing in radio astronomy, significantly improving upon historical models.

"The new model is designed to address the limitations of aging models, in use for over 30 years, and created when computing capabilities were vastly different," adds Jeff Kern, who leads software development for the NSF NRAO.

"The new model updates the data architecture to align with current and future computing needs, and is built to handle the massive data volumes expected from next-generation instruments. It will be scalable, which ensures the model can cope with the exponential growth in data from future developments in radio telescopes."

As part of this initiative, the NSF NRAO plans to release additional materials, including guides for various instruments and example datasets from multiple international partners.

"The new data model is completely open-source and integrated into the Python ecosystem, making it easily accessible and usable by the broader scientific community," explains Steeb. "Our project promotes accessibility and ease of use, which we hope will encourage widespread adoption and ongoing development."


Text

The progeny of “move fast and break things” is a digital Frankenstein. This Silicon Valley mantra, once celebrated for its disruptive potential, has proven perilous, especially in the realm of artificial intelligence. The rapid iteration and deployment ethos, while fostering innovation, has inadvertently sown seeds of instability and ethical quandaries in AI systems.

AI systems, akin to complex software architectures, require meticulous design and rigorous testing. The “move fast” approach often bypasses these critical stages, leading to systems that are brittle, opaque, and prone to failure. In software engineering, technical debt accumulates when expedient solutions are favored over robust, sustainable ones. Similarly, in AI, the rush to deploy can lead to algorithmic bias, security vulnerabilities, and unintended consequences, creating an ethical and operational debt that is difficult to repay.

The pitfalls of AI are not merely theoretical. Consider the deployment of facial recognition systems that have been shown to exhibit racial bias due to inadequate training data. These systems, hastily integrated into law enforcement, have led to wrongful identifications and arrests, underscoring the dangers of insufficient vetting. The progeny of “move fast” is not just flawed code but flawed societal outcomes.

To avoid these pitfalls, a paradigm shift is necessary. AI development must embrace a philosophy of “move thoughtfully and build responsibly.” This involves adopting rigorous validation protocols akin to those in safety-critical systems like aviation or healthcare. Techniques such as formal verification, which mathematically proves the correctness of algorithms, should be standard practice. Additionally, AI systems must be transparent, with explainable models that allow stakeholders to understand decision-making processes.

Moreover, interdisciplinary collaboration is crucial. AI developers must work alongside ethicists, sociologists, and domain experts to anticipate and mitigate potential harms. This collaborative approach ensures that AI systems are not only technically sound but socially responsible.

In conclusion, the progeny of “move fast and break things” in AI is a cautionary tale. The path forward requires a commitment to deliberate, ethical, and transparent AI development. By prioritizing robustness and accountability, we can harness the transformative potential of AI without succumbing to the perils of its progeny.

#progeny#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias


Text

In a zonal architecture, the ECUs are categorized based on their location within the vehicle. The controller is closer to the ECUs, reducing the needed cabling, simplifying the wiring harness, and reducing harness weight by up to 50% with a corresponding reduction in vehicle weight. A zonal architecture can improve data and power distribution.

Time-sensitive networking (TSN) over automotive Ethernet is an enabling technology for zonal architectures. It allows efficient communication between ECUs that were in the same domain but are now separated into different zones.

A zonal architecture also supports implementing software-defined vehicle (SDV) functionality. Instead of adding new hardware (ECUs) to add new functions, SDV enables new functions to be downloaded using over-the-air updates into the powerful central vehicle controller. This enables what’s termed continuous integration and continuous deployment (CI/CD) of new vehicle functions in near real-time.
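A small sketch may help show the difference between grouping ECUs by function (domain) and by physical location (zone), and why cross-zone networking such as TSN matters once a domain is split up. The ECU names, domains, and zones below are invented for illustration and do not come from any real vehicle.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Ecu:
    name: str
    domain: str  # functional grouping, e.g. "body" or "chassis"
    zone: str    # physical location in the vehicle


def group_by(ecus, key):
    """Group ECU names by the given attribute ("domain" or "zone")."""
    groups = defaultdict(list)
    for ecu in ecus:
        groups[getattr(ecu, key)].append(ecu.name)
    return dict(groups)


def split_domains(ecus):
    """Return the domains whose ECUs end up in more than one zone."""
    zones = defaultdict(set)
    for ecu in ecus:
        zones[ecu.domain].add(ecu.zone)
    return sorted(d for d, z in zones.items() if len(z) > 1)


# Hypothetical example vehicle.
ECUS = [
    Ecu("door_lock_fl", "body", "front_left"),
    Ecu("wheel_speed_fl", "chassis", "front_left"),
    Ecu("door_lock_rr", "body", "rear_right"),
    Ecu("wheel_speed_rr", "chassis", "rear_right"),
]

by_domain = group_by(ECUS, "domain")
by_zone = group_by(ECUS, "zone")
```

In this toy layout each zone holds one body ECU and one chassis ECU, so `split_domains(ECUS)` reports both domains as spanning zones. Those are the ECUs that used to share a domain network and now have to talk across the zonal backbone.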

#automotive#zonal architecture#that phrase was new to me#hadn’t thought about how car ethernet would be deployed


Text

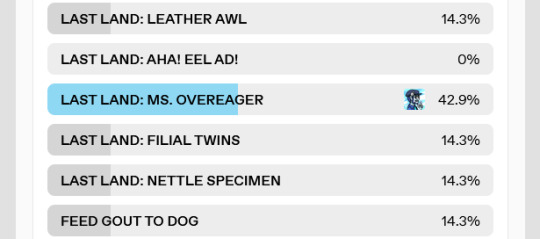

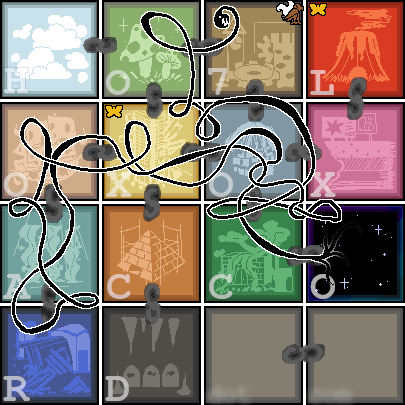

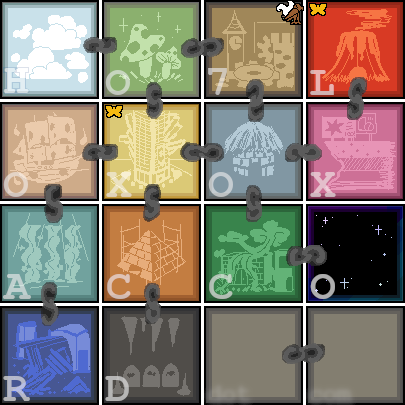


MS. OVEREAGER is happy to help you out with your problems- you need to do what, again? STALL AND REMOVE GEARS? No problemo. She'll get started right away! And by "get started", I mean "dissolve into nothingness because she was a hallucination masking an abstract concept"! You're on your own, buckos.

Okay, so... Adea thinks that this ODD TAIL AVATAR DATA VALIDATOR is after the gears that Walter ransacked from this place to heal himself earlier. If he can get them out, it'll probably stop chasing him. But removing them- even though they're clearly hurting him at this point- will hurt more, like pulling a knife out of a stab wound. He's going to need to stabilize somewhat before you can risk it.

Right here, with a Defrag Point to heal with, is the best place to do it- but he "healed" about 15% STINGY OUTLIER SOUL INTEGRITY earlier, so he should expect removing the gears to do at least that much damage. He'll need to stay there healing for at least enough time to go through two hunger, probably three, to not die on the spot. So it's a question of... how much time does he have to heal before the DATA VALIDATOR arrives and it's time to operate?

Zero. Zero amount of time. It's right here.

Five NOBLE BELT TUTS remain in the DENIAL OF SERVICE gun. Is it worth it to spend them fending this thing off? By the numbers, no. It's more efficient to just buy SOFTWARE PATCHes. But Adea isn't putting up with this thing chasing after her husband one moment more.

Error: architectural entity field 0x07CF referenced without blueprint key. Update loop deferr-

BLAM.

Error 403: resource reclamation process not configured for I/O operations. Interactions with entities other than entity with field 0x07CF rejected. Error 403: resource reclamation process not configured for I/O operations. Interactions with entities other than entity with field 0x07CF rejected. Error 403: resource reclamation process not configured for I/O operations. Interac...

Four left. The undulating thing is frozen in place. Slowly- achingly slowly- the Defrag Point starts knitting Walter back together. As it does, Adea pries clockwork out of his chest, causing him to shudder violently. It's slow, and harrowing, and every gasp of pain from her scrungly little man makes her wince- but she pulls out about a third of it before the thing finishes rattling off rejection messages for the packets.

Error: architectural entity field 0x0--

BLAM.

The two of you, on top of being injured, are practically starving. Adea suggests-

Walter says we are absolutely not resorting to cannibalism on purpose! That was an accident! He wasn't in his right mind! No way no way no way!

Adea says fine, and Walter lets out a cut-off scream as she rips out a driveshaft assembly that was pretending to be his lung. She's got about two-thirds of it out, now- and she's got an idea to conserve ammo.

Error: architectural entity field 0x07CF referenced without blueprint key. Update loop deferred until resource is released.

Yeah, you want this stuff, right? Go get it, Adea says- flinging a gear like a frisbee. The DATA VALIDATOR swoops through the air after it, snagging the gear on a tooth with frightening speed. But... not so frightening that she can't delay it a little more with what she's got.

She hurls the lung-driveshaft like a javelin behind the thing, and then starts chucking the rest of the clockwork every which way, scattering it over a wide area. Like a vampire confronted with grains of rice, the DATA VALIDATOR starts scrambling for the pieces of its precious architectural entity field 0x07CF, twisting itself into knots.

While it chases down cogs, sprockets, gears, and springs... Adea hurries back over to Walter, and rips the rest of the machinery from his chest cavity. This one hurts. He's a huge baby about it and screams like that one time she accidentally bought chili oil instead of lu- uh, like it hurts a lot. This would definitely kill him if he weren't being actively defragmented. She tries not to think about that.

You were kind of hoping this thing would immobilize itself from tying itself in knots, but it's able to stretch itself out and slip through gaps in its own Gordian nightmare. It's all you can do to get the rest of it out before it closes in on you again.

Daintily, it pries open a hatch on the sidewalk with one tooth- and then, piece by piece, delicately reassembles the machinery that Walter mistook for spare parts earlier.

Update loop resumes.

With an audible TWANG, and the sound of rushing air, the tension in the DATA VALIDATOR's tail is released. The knot undoes itself, and its head shoots off backwards into the distance as it's recalled to its starting point in the blink of an eye.

It's gone.

...Now what?

The FILIAL TWINS are still here, and you could always go somewhere with a phone and participate in a NAIAD RUMBLE- but there's a few other possible priorities.

There's this moronic cook who doesn't realize he's not welcome. IDIOT CHEF WON'T GO, so you've got to do something to get rid of the jerk.

You could explore the CURVE HOUSE, a weird distorted funhouse-mirror version of a normal building with all its right angles. Seems disorienting!

There's this guy named Pete sitting on the sidewalk nearby who won't stop crying. EMOTIONAL PETER should probably go to actual therapy, but maybe the two of you can help?

There's a FIENDISH ELF ARISING, and it may or may not be your duty as legendary heroes to stop it from becoming a new Demon Lord or somesuch.

Mom's trapped! OH, RELEASE MOM! From her prison that happens to be here for some reason! ...Which one of your moms is it, anyway?


#lost in hearts#we're back! with a long one!#except uh. i have a funeral to go to tomorrow so probably no update tomorrow#life has been rough lately i tell you what

9 notes

Text

The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
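The latency concern above can be made concrete with a little arithmetic. As a rough sketch, the delay contributed by a single audio buffer is its size in frames divided by the sample rate. The buffer sizes and the 44.1 kHz rate below are illustrative assumptions rather than figures from any specific controller or driver, and real round-trip latency also includes USB transfer and converter delays that this does not model.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Time needed to fill (or play out) one audio buffer, in milliseconds."""
    if buffer_frames <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return buffer_frames / sample_rate_hz * 1000.0


# Illustrative buffer sizes (assumed values, not taken from a real driver).
for frames in (64, 128, 256, 512, 1024):
    latency = buffer_latency_ms(frames, 44_100)
    print(f"{frames:>5} frames @ 44.1 kHz -> {latency:.2f} ms per buffer")
```

Smaller buffers lower the delay but leave the computer less time to fill each one, which is the trade-off an engineer tunes when configuring audio drivers.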

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.
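One common way to carry a high-resolution fader over MIDI is a 14-bit value split across the two 7-bit data bytes of a pitch-bend message. The sketch below decodes such a message. Whether a particular controller reports its pitch fader this way is an assumption, and the +/-8% pitch range is a hypothetical default chosen only for illustration.

```python
def decode_pitch_bend(message: bytes) -> tuple[int, int]:
    """Return (channel, value) for a 3-byte MIDI pitch-bend message.

    value runs from 0 to 16383, with 8192 as the center position.
    """
    if len(message) != 3:
        raise ValueError("a pitch-bend message is exactly 3 bytes")
    status, lsb, msb = message
    if status & 0xF0 != 0xE0:
        raise ValueError("not a pitch-bend message")
    if lsb > 0x7F or msb > 0x7F:
        raise ValueError("data bytes must be 7-bit")
    # Low nibble of the status byte is the channel; data bytes arrive LSB first.
    return status & 0x0F, (msb << 7) | lsb


def to_pitch_percent(value: int, pitch_range_percent: float = 8.0) -> float:
    """Map a 14-bit fader value to a tempo change (range is an assumed default)."""
    return (value - 8192) / 8192 * pitch_range_percent


channel, value = decode_pitch_bend(bytes([0xE0, 0x00, 0x40]))
print(channel, value, to_pitch_percent(value))
```

With 16,384 steps instead of the 128 available in a single 7-bit data byte, small tempo adjustments no longer jump audibly between values.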

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX and Denon Prime 4 revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.
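For the OSC item in the list above, here is a minimal sketch of how a message is laid out on the wire under the OSC 1.0 format: a null-terminated address padded to a multiple of four bytes, a type tag string beginning with a comma, then big-endian arguments. The address /fx/1 and the helper names are hypothetical, and only int, float, and string arguments are handled.

```python
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to 4-byte alignment."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_osc_message(address: str, *args) -> bytes:
    """Build one OSC message from an address and int/float/str arguments."""
    if not address.startswith("/"):
        raise ValueError("OSC addresses start with '/'")
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # 32-bit big-endian integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # 32-bit big-endian float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(type_tags) + payload


# Hypothetical mapping: set the wet/dry amount of an FX slot to 50%.
packet = encode_osc_message("/fx/1", 0.5)
print(packet.hex(" "))
```

The resulting bytes would typically be sent as a single UDP datagram to whatever host and port the receiving software listens on.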

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.

2 notes

Text

For a digital-only, cloud-based PlayStation 7, here’s an updated schematic focusing on next-gen cloud gaming, AI-driven performance, and minimalistic hardware:

1. Hardware Architecture (Cloud-Optimized, Minimalist Design)

Processing Power:

Cloud-Based AI Compute Servers with Custom Sony Neural Processing Units (NPUs)

Local Ultra-Low Latency Streaming Box (PS7 Cloud Hub) with AI-Assisted Lag Reduction

Storage:

No Internal Game Storage (Everything Runs via PlayStation ZeroCloud)

4TB Cloud-Synced SSD for System & Personal Data

Connectivity:

WiFi 7 & 6G Mobile Support for High-Speed Streaming

Quantum Encrypted Bluetooth 6.0 for Peripherals

Direct-to-Server Ethernet Optimization (AI-Managed Ping Reduction)

Form Factor:

Minimalist Digital Console Hub (Size of a Small Router)

No Disc Drive – Fully Digital & Cloud-Dependent

2. UI/UX Design (AI-Powered Cloud Interface)

NexusOS 1.0 (Cloud-Based AI UI): Personalized Dashboard Adapting to Player Preferences

ZeroNexus AI Assistant:

Predictive Game Recommendations

Smart Latency Optimization for Cloud Gaming

In-Game AI Strategy Coach

Instant Play Anywhere:

Seamless Cloud Save Syncing Across Devices

Playable on Console, PC, Tablet, or NexusPad Companion Device

Holographic UI Options (for AR Integration with Future PlayStation VR)

3. Concept Art & Industrial Design (Minimalist, Streaming-Focused)

Compact, Vertical-Standing Console (PS7 Cloud Hub)

Sleek, Heatless Design (No Heavy Internal Processing)

DualSense 2X Controller:

Cloud-Connected Haptics (Real-Time Adaptive Feedback)

AI-Touchscreen Interface for Quick Actions & Cloud Navigation

Self-Charging Dock (Wireless Power Transfer)

4. Software & Ecosystem (Full Cloud Gaming Integration)

PlayStation ZeroCloud (Sony’s Ultimate Cloud Gaming Service)

No Downloads, No Installs – Instant Play on Any Device

AI-Based 8K Upscaling & Adaptive Frame Rate

Cloud-Powered VR & AR Experiences

Cross-Platform Compatibility: PlayStation 7 Games Playable on PC, TV, & Mobile

Subscription-Based Ownership (Game Library Access Model with NFT Licensing for Exclusive Titles)

Eco-Friendly AI Resource Scaling: Low Power Consumption for Cloud Streaming

This design ensures ultra-fast, high-quality, cloud-first gaming while eliminating hardware limitations. Let me know if you want refinements or additional features!

#chanel#playstation7#deardearestbrands x chanel#deardearestbrands sony playstation7 controller#ps7#PS7#playstation7 controller#deardearestbrands#Chanel x Playstation#playtation7Chanel#chanel textiles

3 notes

Text

How Can Legacy Application Support Align with Your Long-Term Business Goals?

Many businesses still rely on legacy applications to run core operations. These systems, although built on older technology, are deeply integrated with workflows, historical data, and critical business logic. Replacing them entirely can be expensive and disruptive. Instead, with the right support strategy, these applications can continue to serve long-term business goals effectively.

1. Ensure Business Continuity

Continuous service delivery is a key business objective for any enterprise. Maintaining legacy applications supports business continuity by reducing the chance of interruptions caused by software malfunctions or compatibility errors. With modern support practices such as performance monitoring, frequent patching, and system optimization, these applications can keep working reliably despite changes in the rest of the system. This prevents the lost revenue and downtime of unplanned outages.

2. Control IT Costs

Replacing legacy systems outright is capital intensive. With a support structure in place, organizations can prolong the life of these applications and keep IT expenditure under control. The money saved can be redirected into innovation or customer-facing technologies. An effective support strategy manages the total cost of ownership (TCO) without sacrificing performance or compliance.

3. Stay Compliant and Secure

Compliance with industry regulations is not negotiable. Unsupported legacy applications usually fall out of compliance as standards change. Dedicated legacy application support addresses this through security updates, compliance patching, and audit trail maintenance. This minimizes the risk of regulatory fines and reputational loss, and supports governance and risk management objectives.

4. Connect with Modern Tools

Legacy support doesn’t mean working in isolation. With the right approach, these systems can connect to cloud platforms, APIs, and data tools. This enables real-time reporting, improved collaboration, and more informed decision-making—without requiring full system replacements.
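One way such a connection is often built is a thin adapter that reads the legacy system's existing output and re-exposes it in a format modern tools understand. The sketch below parses a fixed-width record into JSON. The field layout, field names, and sample record are all hypothetical, since the real layout depends entirely on the legacy application in question.

```python
import json

# Hypothetical layout: field name -> (start, end) column offsets.
LAYOUT = {
    "customer_id": (0, 6),
    "name": (6, 26),
    "balance_cents": (26, 35),
}
RECORD_WIDTH = 35


def parse_legacy_record(line: str) -> dict:
    """Slice one fixed-width record into named fields."""
    if len(line) < RECORD_WIDTH:
        raise ValueError(f"expected at least {RECORD_WIDTH} characters")
    record = {name: line[start:end].strip() for name, (start, end) in LAYOUT.items()}
    # Numeric fields arrive zero-padded as text; convert them explicitly.
    record["balance_cents"] = int(record["balance_cents"])
    return record


def to_json(line: str) -> str:
    """Serialize one legacy record so an API or reporting tool can consume it."""
    return json.dumps(parse_legacy_record(line))


# Made-up sample record matching the hypothetical layout above.
sample = "000042" + "Ada Lovelace".ljust(20) + "000012550"
print(to_json(sample))
```

Because the adapter only reads what the legacy system already produces, the application itself stays untouched while newer tools gain access to its data.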

5. Protect Business Knowledge

Legacy systems often contain years of institutional knowledge built into workflows, decision trees, and data architecture. Abandoning them early risks losing vital operational insights. Maintaining these systems enables enterprises to keep that knowledge and transform it into documentation or reusable code aligned with ongoing digital transformation initiatives.

6. Support Scalable Growth

Well-supported legacy systems can still grow with your business. With performance tuning and capacity planning, they can handle increased demand and user loads. This keeps growth on track without significant disruption to IT systems.

7. Increase Flexibility and Control

Maintaining legacy applications, either in-house or through trusted partners, gives businesses more control over their IT roadmap. It avoids being locked into aggressive vendor timelines and allows change to happen on your terms.

Legacy applications don’t have to be a roadblock. With the right support model, they become a stable foundation that supports long-term goals. From cost control and compliance to performance and integration, supported legacy systems can deliver measurable value. Service vendors such as Suma Soft, TCS, Infosys, Capgemini, and HCLTech provide specialized Legacy Application Maintenance Services that help businesses get the best out of their current systems while they prepare for future transformation. Choosing the right partner keeps these systems functioning, evolving, and integrated with wider business strategies.

#BusinessContinuity#DigitalTransformation#ITStrategy#EnterpriseIT#BusinessOptimization#TechLeadership#ScalableSolutions#SmartITInvestments

3 notes

Text

Architectural Data Integration: Bridging the Gap Between Design and Technology

At W3 Partnership, we specialize in delivering integration connectivity as a service (iCaaS), cloud-based integration solutions, and middleware integration consultancy tailored to your unique needs. With decades of expertise, we empower businesses to streamline operations, eliminate silos, and drive innovation through hybrid integration platforms and advanced digital integration architecture services. Using advanced requirements analysis and digital integration architecture techniques, we collect all the necessary information to enable efficient stakeholder communication, ensuring a smooth transition from analysis to implementation.

Data integration software for Architecture

Data-driven decision-making is now an integral part of the business landscape. Every organization needs an integrated view of data from various sources for in-depth analytics and research. However, data integration becomes more challenging as data grows exponentially. Understanding data integration architecture patterns and best practices is essential when implementing advanced data integration across your organization. To overcome these challenges, traditional data warehousing methods have evolved into more efficient data management approaches such as data mesh and data fabric.

Systems Integration Consulting

Industrial enterprises manage an ecosystem of equipment, assets, processes, and sub-systems, each with its own siloed data sets. Such disparate data sources lead to problems with data quality, integrity, and duplication, and hinder data sharing and collaboration across the organization. Unreliable data connectivity and delayed information flow between departments lead to missed improvement opportunities. These enterprises need a systems integration consulting partner to create a single unified system that helps streamline business operations, meet delivery targets, improve customer experience, and build a highly efficient connected enterprise system.

Digital Integration Solutions

With its cutting-edge video streaming services, Digital Integration Solutions delivers smooth, high-quality streams over the internet. For low-latency, high-quality streams, we use state-of-the-art encoding hardware and specialize in achieving maximum efficiency across devices. Our complete solutions serve a wide range of clients and cover content management, distribution, analytics, and monetization, enhancing user experience and increasing audience engagement. Our knowledgeable staff ensures that solutions are customized for security and operational agility. They analyze large datasets and uncover important patterns and trends using machine learning, advanced analytics, and visualization.

Explore this Blog for further details:

https://tinyurl.com/3czrvuna

https://tinyurl.com/bdze8ewc

#data integration software for architecture#Digital Integration Solutions#systems integration consulting

0 notes

Text

Cloud Computing: Definition, Benefits, Types, and Real-World Applications

In the fast-changing digital world, companies require software that matches their specific ways of working, aims and what their customers require. That’s when you need custom software development services. Custom software is made just for your organization, so it is more flexible, scalable and efficient than generic software.

What does Custom Software Development mean?

Custom software development means making, deploying and maintaining software that is tailored to a specific user, company or task. It is designed to meet specific business needs, which off-the-shelf software usually cannot do.

The main advantages of custom software development are listed below.

1. Personalized Fit

Custom software is built to address the specific needs of your business. Everything is designed to fit your workflow, whether you need it for customers, internal tasks or industry-specific functions.

2. Scalability

When your business expands, your software can also expand. You can add more features, users and integrations as needed without being bound by strict licensing rules.

3. Increased Efficiency

Use tools that are designed to work well with your processes. Custom software usually automates tasks, cuts down on repetition and helps people work more efficiently.

4. Better Integration

Many companies rely on different tools and platforms. You can have custom software made to work smoothly with your CRMs, ERPs and third-party APIs.

5. Improved Security

You can set up security measures more effectively in a custom solution. It is particularly important for industries that handle confidential information, such as finance, healthcare or legal services.

Types of Custom Software Solutions That Are Popular

CRM Systems

Inventory and Order Management

Custom-made ERP Solutions

Mobile and Web Apps

eCommerce Platforms

AI and Data Analytics Tools

SaaS Products

The Process of Custom Development

Requirement Analysis

Understanding your business goals, what users require and the difficulties you face in running the business.

Design & Architecture

Designing a software architecture that can grow, is safe and fits your requirements.

Development & Testing

Writing code that is easy to maintain and testing for errors, speed and compatibility.

Deployment and Support

Making the software available and offering support and updates over time.

What Makes Niotechone a Good Choice?

Our team at Niotechone focuses on providing custom software that helps businesses grow. Our team of experts works with you throughout the process, from the initial idea to the final deployment, to make sure the product is what you require.

Successful experience in various industries

Agile development process

Support after the launch and options for scaling

Affordable rates and different ways to work together

Final Thoughts

Creating custom software is not only about making an app; it’s about building a tool that helps your business grow. A customized solution can give you the advantage you require in the busy digital market, no matter if you are a startup or an enterprise.

#software development company#development company software#software design and development services#software development services#custom software development outsourcing#outsource custom software development#software development and services#custom software development companies#custom software development#custom software development agency#custom software development firms#software development custom software development#custom software design companies#custom software#custom application development#custom mobile application development#custom mobile software development#custom software development services#custom healthcare software development company#bespoke software development service#custom software solution#custom software outsourcing#outsourcing custom software#application development outsourcing#healthcare software development

2 notes

Text

Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact in the tech world, and its trajectory for the future is even more promising. From its simplicity and versatility to its widespread use in cutting-edge technologies, Python is expected to continue thriving in the coming years. With the support of a Python Course in Chennai, learning Python gets much more fun, whatever your level of experience or reason for switching from another programming language.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.
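As a small illustration of the kind of repetitive task described above, the sketch below sorts the files in a folder into subfolders named after their extensions, using only the standard library. The function name and the file names in the demo are hypothetical, and the demo runs inside a temporary directory so nothing on disk is touched.

```python
import shutil
import tempfile
from pathlib import Path


def sort_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into a subfolder named after its extension.

    Returns a count of moved files per extension.
    """
    moved: dict[str, int] = {}
    # Snapshot the listing first so newly created subfolders are not revisited.
    for item in list(folder.iterdir()):
        if not item.is_file():
            continue
        ext = item.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[ext] = moved.get(ext, 0) + 1
    return moved


# Demo in a throwaway directory with made-up file names.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("set_notes.txt", "tracklist.TXT", "debug.log", "README"):
        (root / name).write_text("placeholder")
    counts = sort_by_extension(root)
    remaining = sorted(p.name for p in root.iterdir())
    print(counts, remaining)
```

The same pattern of listing, classifying, and acting extends to log rotation, report generation, and similar chores.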

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection due to its simplicity and effectiveness. Libraries like Scapy and PyCryptodome (the maintained successor to the now-unmaintained PyCrypto) make Python an excellent choice for ethical hacking and security professionals. As the need for robust cybersecurity measures increases, Python’s role in safeguarding digital assets will continue to thrive.

6. Internet of Things (IoT)

Python’s compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Python implementations like MicroPython and CircuitPython enable developers to build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python will remain a popular language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.
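To show what a serverless function can look like in Python, here is a minimal sketch in the handler(event, context) style used by AWS Lambda's Python runtime. The event shape is an assumption (an HTTP-style event carrying queryStringParameters, as an API gateway might send), and the greeting logic is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting; `event` is assumed to be an HTTP-style dict."""
    # The key may be missing or None when no query string was sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-built event; no cloud account is needed.
response = lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)
print(response["statusCode"], response["body"])
```

Because the handler is an ordinary function, it can be tested locally like this before being deployed to any platform.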

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python online classes#python certification

2 notes

Text

What Future Trends in Software Engineering Can Be Shaped by C++

Programming languages strongly influence the direction of innovation in the broad field of software engineering. C++ is a well-known programming language valued for its efficiency, versatility, and performance. Looking ahead, C++ will continue to have a significant influence on software engineering, setting trends and encouraging innovation in a variety of fields.

In this blog, we'll look at three key areas where the shift to a dynamic future could be led by C++ developers.

1. High-Performance Computing (HPC) & Parallel Processing

Driving Scalability with Multithreading

Within high-performance computing (HPC), where managing large datasets and executing intricate algorithms in real time are critical tasks, C++ is still an essential tool. The fact that C++ supports multithreading and parallelism is becoming more and more important as parallel processing-oriented designs, like multicore CPUs and GPUs, become more commonplace.

Multithreading with C++

At the core of C++ lies robust support for multithreading, empowering developers to harness the full potential of modern hardware architectures. C++ developers adept in crafting multithreaded applications can architect scalable systems capable of efficiently tackling computationally intensive tasks.

C++ Empowering HPC Solutions

Developers may redefine efficiency and performance benchmarks in a variety of disciplines, from AI inference to financial modeling, by forging HPC solutions with C++ as their toolkit. Through the exploitation of C++'s low-level control and optimization tools, engineers are able to optimize hardware consumption and algorithmic efficiency while pushing the limits of processing capacity.

2. Embedded Systems & IoT

Real-Time Responsiveness Enabled

The widespread use of embedded systems, particularly in the quickly developing Internet of Things (IoT), requires the ability to evaluate data and perform operations with low latency. With its combination of system-level control, portability, and performance, C++ is a natural language of choice.

C++ for Embedded Development

C++ is well known for its near-to-hardware capabilities and effective memory management, which enable developers to create firmware and software that meet the demanding requirements of environments with limited resources and real-time responsiveness. C++ guarantees efficiency and dependability at all levels, whether powering autonomous cars or smart devices.

Securing IoT with C++

In the intricate web of IoT ecosystems, security is paramount. C++ is a robust option, offering strong static type checking and features such as RAII and smart pointers that help manage memory safely. By leveraging these features, developers can harden IoT devices against potential vulnerabilities and protect the integrity and safety of connected systems.

3. Gaming & VR Development

Pushing Immersive Experience Boundaries

In the dynamic domains of game development and virtual reality (VR), where performance and realism reign supreme, C++ remains the cornerstone. With its unparalleled speed and efficiency, C++ empowers developers to craft immersive worlds and captivating experiences that redefine the boundaries of reality.

Redefining VR Realities with C++

When it comes to virtual reality, where user immersion is crucial, C++ is essential for producing smooth experiences that take users to other worlds. The effectiveness of C++ is crucial for preserving high frame rates and preventing motion sickness, guaranteeing users a fluid and engaging VR experience across a range of applications.

C++ in Gaming Engines

Top game engines rely on C++ for its speed and versatility: Unreal Engine exposes C++ directly to game programmers, and Unity's core runtime is written in C++ even though gameplay scripting is done in C#. This lets programmers build visually impressive graphics and seamless gameplay. By using C++'s capabilities, game developers can reach new levels of inventiveness and produce standout gaming experiences.

Conclusion

In conclusion, there is no denying C++'s ongoing significance as we go forward in the field of software engineering. C++ is the trend-setter and innovator in a variety of fields, including embedded devices, game development, and high-performance computing. C++ engineers emerge as the vanguards of technological growth, creating a world where possibilities are endless and invention has no boundaries because of its unmatched combination of performance, versatility, and control.

FAQs about Future Trends in Software Engineering Shaped by C++

How does C++ contribute to future trends in software engineering?

C++ remains foundational in software development, influencing trends like high-performance computing, game development, and system programming due to its efficiency and versatility.

Is C++ still relevant in modern software engineering practices?

Absolutely! C++ continues to be a cornerstone language, powering critical systems, frameworks, and applications across various industries, ensuring robustness and performance.

What advancements can we expect in C++ to shape future software engineering trends?

Future C++ developments may focus on enhancing parallel computing capabilities, improving interoperability with other languages, and optimizing for emerging hardware architectures, paving the way for cutting-edge software innovations.

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.
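One way to picture processing data closer to its source is a device that reduces a batch of raw readings to a small summary and makes a local decision, instead of sending every reading to the cloud. The following is a minimal sketch under that assumption. The `Summary`, `summarize`, and `needsAlert` names are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Summary sent upstream in place of the raw readings.
struct Summary {
    std::size_t count;
    double min;
    double max;
    double mean;
};

// Reduces a batch of raw sensor readings to one small summary on the device.
// An empty batch yields an all-zero summary.
Summary summarize(const std::vector<double>& readings) {
    if (readings.empty()) return Summary{0, 0.0, 0.0, 0.0};
    double lo = readings.front();
    double hi = readings.front();
    double sum = 0.0;
    for (double r : readings) {
        lo = std::min(lo, r);
        hi = std::max(hi, r);
        sum += r;
    }
    return Summary{readings.size(), lo, hi,
                   sum / static_cast<double>(readings.size())};
}

// Local decision made on the device, without a round trip to the cloud.
bool needsAlert(const Summary& s, double threshold) {
    return s.count > 0 && s.max > threshold;
}
```

Here the alert check runs on the device itself, so its latency does not depend on the network, and only four numbers per batch need to travel upstream.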

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain is expected to play a growing role in creating transparent, tamper-evident systems.
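The property that makes later changes detectable comes from linking each record to the hash of the one before it. The sketch below shows only that linking idea, with hypothetical `Block`, `append`, and `isValid` names. It uses the FNV-1a hash as a stand-in, which is not cryptographically secure. Real blockchains rely on cryptographic hashes such as SHA-256 and on a consensus mechanism, neither of which is shown here.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// FNV-1a 64-bit hash. NOT cryptographically secure: illustration only.
std::uint64_t fnv1a(const std::string& text) {
    std::uint64_t hash = 14695981039346656037ULL;  // FNV offset basis
    for (unsigned char c : text) {
        hash ^= c;
        hash *= 1099511628211ULL;  // FNV prime
    }
    return hash;
}

struct Block {
    std::string data;
    std::uint64_t prevHash;  // hash of the previous block (0 for the first)
    std::uint64_t hash;      // hash over prevHash and this block's data
};

std::uint64_t blockHash(const std::string& data, std::uint64_t prevHash) {
    return fnv1a(std::to_string(prevHash) + "|" + data);
}

// Appends a record, linking it to the current tail of the chain.
void append(std::vector<Block>& chain, const std::string& data) {
    std::uint64_t prev = chain.empty() ? 0 : chain.back().hash;
    chain.push_back(Block{data, prev, blockHash(data, prev)});
}

// Recomputes every hash and checks every link in order.
bool isValid(const std::vector<Block>& chain) {
    std::uint64_t prev = 0;
    for (const Block& b : chain) {
        if (b.prevHash != prev || b.hash != blockHash(b.data, prev)) return false;
        prev = b.hash;
    }
    return true;
}
```

Editing the data of an early block changes its recomputed hash, so `isValid` no longer matches the stored value and the change is detected, barring a hash collision.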

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware
