#data science project life cycle

Text

Stages of the Data Science Lifecycle: From Idea to Deployment

In this short video, we'll discuss the stages of the data science lifecycle, from idea to deployment. We'll cover the different stages of a data science project, from data collection to data analysis and finally, the presentation of the results.

If you're new to data science or you're looking for a better understanding of the life cycle of a data science project, then this video is for you!

By the end of this short video, you'll have a clearer understanding of the stages of the data science lifecycle, and you'll be able to move from idea to deployment with ease!

#data science life cycle#data science life cycle phases#data science project life cycle#complete life cycle of a data science project#data science project#data science project step by step#data science project management#data science#data science tutorial#data science for beginners#data science project from scratch#learn data science#simplilearn data science#machine learning#data science life cycle model#overview of data science life cycle

0 notes

Text

The Gargantuan Fossil

This post was from the beginning of my project, thus some information I’ve written here is outdated. Please read my recent posts to see up to date information.

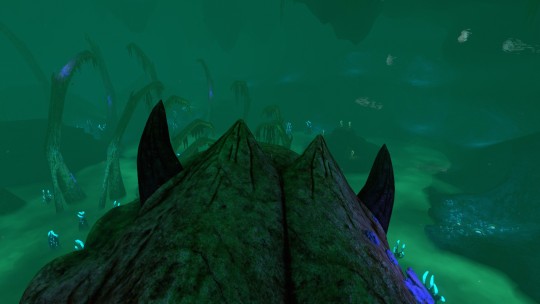

The Gargantuan Fossil is one of the most recognizable parts of the mid-portion of Subnautica’s gameplay. Its sheer size strikes both terror and awe into the hearts of players who stumble upon it. It’s unfortunate that only a third of the creature’s fossilized remains can be seen. Even using the Freecam command to check under the map reveals that the rest of the skeleton remains unmodeled. This is all we have of the Leviathan.

“Gargantuan Fossil” is quite the accurate name, considering just a third of this creature’s skeleton measures 402 meters in length, with the creature’s total size being an estimated 1,100-1,500 meters. Just the skull itself is under 100 meters, and our human player character can nestle comfortably in even its smallest eye socket. I would’ve tried to show our human character’s model for a size comparison, but this thing is so large you wouldn’t even be able to see him.

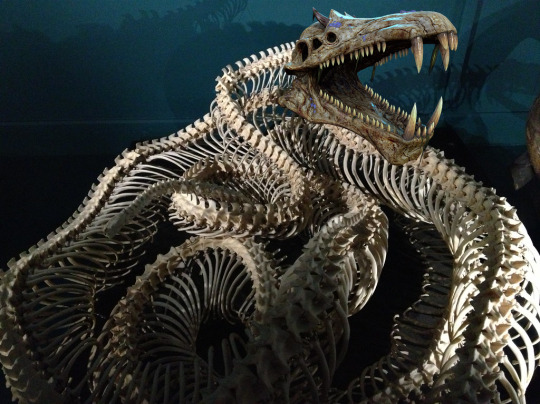

There have been many different reconstructions of this behemoth of a fossil, the most popular being this commission piece made by Tapwing, for the YouTuber Anthomnia, shown below. And while it’s cool, it’s... not all that accurate.

In the past, I actually helped create a Gargantuan Leviathan mod based off Tapwing’s concept, working alongside other incredibly talented artists (who will remain anonymous, they can talk about their experiences as they please) and some... not so savory individuals. I don’t want to be associated with that old Garg. This reconstruction project is both a way for me to move on and make something better than what I had in the past, and to test my skills and knowledge in the various natural sciences.

Although there is a second specimen, the skull of a younger instance, this fossil holds no significant data outside of showing just how small these creatures start out as. As shown in the image below, despite being a much younger instance, our player character could still fit inside the Leviathan’s smallest eye socket, although it wouldn’t be as spacious as its adult counterpart.

The game’s PDA (Personal Data Assistant) states that the Gargantuan Fossil is approximately 3 million years old, which is INCREDIBLY RECENT. For reference, 3 million years ago we still shared the planet with multiple other hominid species like Australopithecus afarensis back in the mid Pliocene. The Subnautica we know today is a byproduct of a mass-extinction of megafauna, such as Leviathans. My guess as to how the Gargantuan got this big is a combination of deep-sea gigantism and an evolutionary arms race against the other megafauna alive during its time, with prey attempting to become larger than its predator to avoid predation, and the predator growing to continue this cycle. When this ancient ecosystem of leviathan-class super predators collapsed, likely because of the meteor that struck Planet 4546B, the Gargantuan Leviathan was out of a substantial food source and went extinct.

It could be possible that these creatures even gave live birth due to their serpentine body and massive size, making them too large for life in the shallows, where laying eggs is easiest.

NOW. LET’S TALK ABOUT THE BONES!! It’s important to figure out if the Gargantuan Leviathan had a cartilaginous skeleton or a bony one, so let’s count the bones!!

There’s TWO WHOLE BONES!!! AND IF YOU LOOK NEXT TO IT!! THOSE RIBS ARE BONES TOO!!!! UWAA!!! SO MANY BONES!!!! How can we tell this is bones? It’s simple!

Cartilage is rubbery and flexible, so it doesn't fossilize well, while bone is hard and rigid, perfect fossil material!! Cartilaginous skulls also tend to be made up of many little interlocking bones, with bony skulls being made up of only a small handful!

Another thing I found interesting about the Gargantuan Skull is that it seems to have a ball and socket joint? This could have just been a similar mishap to the top and bottom jaws being fused in the skull’s model, but I’m trying to keep things as close to the original anatomy as possible. The ball and socket joint probably evolved to help with the burden of such a massive and heavy skull and to allow for greater speed and range of motion. In a world full of Leviathan-class predators, being able to have a wide range of motion would be extremely beneficial in locating both potential predators and prey.

Despite its immense size pushing the claim this Leviathan was an apex predator, it sports a small pair of horns, which is unheard of in large apex predators here on Earth. The darker coloration leads me to believe that these aren’t just horn cores, but the entire horn. These horns were most likely used to assist in defending itself against predators while it’s still small and vulnerable. It could also be a possibility they were used for threat displays and territory fights though it seems unlikely due to their small size. Sexual displays are also unlikely since just about every creature in Subnautica seems capable of asexual reproduction, as noted in the PDA entry for eggs. Asexual reproduction seems to be a very ancient basal trait in Planet 4546B’s evolutionary lineage and was most likely evolved to help species persevere even with low numbers and harsh conditions, preventing the dangers of inbreeding.

Overall, the skull’s shape and tooth structure suggest a piscivorous diet (of course it eats fish, the planet’s 99% water), and its shape specifically is reminiscent of an Orca and Redondasaurus.

The lack of nostrils stumps me, there’s no openings in the skull aside from its eye sockets, however there’s also no evidence for a gill apparatus. I’m... going to have to come back to that at a later date. Though I personally believe the Gargantuan Leviathan was an air breather due to the lack of evidence for gills.

OKOK, ENOUGH ABOUT THE SKULL ASRIEL, WHAT ABOUT THE RIBS?

WELL... THE RIBS ARE... SOMETHING.

Behold! My very poor photomash of the same two images to show the total approximate length of the Gargantuan Leviathan, and a bad edit to show off what I believe the whole skeletal system would look like! (skeleton image credit)

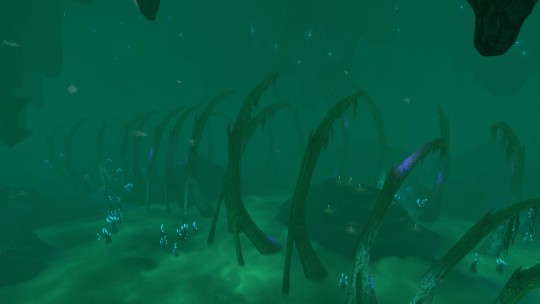

Despite the game’s PDA describing the Gargantuan Leviathan’s body as “eel-like,” its skeletal structure is more reminiscent of a snake. The ribs show no indication of limbs, so it probably had a dorsal fin similar to eels or sea kraits.

One thing I’ve noticed about the Gargantuan’s ribcage is the existence of what appear to be bony, avian-like uncinate processes, which help the trunk’s muscles pump air in and out of the body, adding onto the idea that this leviathan breathed air. These uncinate processes are especially long in diving birds, where they help reinforce the body and musculature, allowing the animal to stay underwater for longer periods of time.

My hypothesis for the role the Gargantuan Leviathan played in its ecosystem is similar to the Sperm Whales of our world, taking in large amounts of air before diving into the depths to fetch their food.

Next week, I’ll be doing more research into the skeleton and possibly beginning work on fleshing the Garg out! If there are any sciencey folks out on Tumblr who want to add their own input, feel free!! I want information!! Correct me if I got anything wrong!!

#long post#VERY long post#speculative biology#speculative paleobiology#paleontology#marine biology#fictional biology#subnautica#character analysis#monster biology#leviathan#gargantuan leviathan#essay

434 notes


Text

“There are no forbidden questions in science, no matters too sensitive or delicate to be probed, no sacred truths.” Carl Sagan

Ecoinvent, the world’s largest database on the environmental impact of wind and solar technologies, has no data from China, even though China makes most of the world's solar panels.

The UN IPCC and IEA have relied on Ecoinvent estimates of life cycle CO2 emissions from solar, but given China refuses to be transparent in their reporting, Ecoinvent fudged the numbers using values from EU and US manufacturing.

As a result, Chinese-derived solar PV panels have life cycle emissions in the range of 170 to 250 kg CO2 equivalent per MWh, versus the 50 kg CO2 per MWh so commonly used by the IPCC and others in calculating CO2 emission offset credits and in climate modelling.

Consider that advanced combined cycle gas turbine power plants have CO2 emission factors ranging from 300 to 350 kg CO2 per MWh and use a much smaller fraction of the amount of non-renewable materials per unit of energy produced than do solar PV facilities.

It is a well-known fact that pairing natural gas turbine technologies in a subservient load-balancing role with solar PV reduces the thermal efficiency of the turbine system, which further erodes the emission reduction benefits of solar PV and its already small marginal difference from a stand-alone natural gas system.

The moral of the story is carbon credits are a scam, as are modelling projections of the climate effects of solar PV technologies.

123 notes


Note

Hi, I just saw your post about the plastic pellets you found on the beach. They reminded me of "nurdles"-- little microplastic pellets used to make larger plastic items.

There's actually a science project called Nurdle Patrol monitoring the number of nurdles on beaches off the Gulf of Mexico by using data that people submit after combing a section of beach for 10 minutes.

It's a cool project that's worth looking into, even if you're not on the Gulf coast.

@gayraginglamb Yeah, I've heard about them! I love citizen science, and this sort of project is so helpful in adding data to the "Here's why we need to do something about plastics" argument. I'm hoping we can put more pressure on industry and government to curb the flow of plastics from the source as well as make it mandatory for the companies that produce it to reclaim it as well. Having some firm data on where this stuff is ending up is just going to add to the weight of arguments for a more responsible life cycle for plastics.

9 notes


Link

A new, higher-resolution infrared camera outfitted with a variety of lightweight filters could probe sunlight reflected off Earth’s upper atmosphere and surface, improve forest fire warnings, and reveal the molecular composition of other planets. The cameras use sensitive, high-resolution strained-layer superlattice sensors, initially developed at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, using IRAD, Internal Research and Development funding. Their compact construction, low mass, and adaptability enable engineers like Tilak Hewagama to adapt them to the needs of a variety of sciences. Goddard engineer Murzy Jhabvala holds the heart of his Compact Thermal Imager camera technology – a high-resolution, high-spectral range infrared sensor suitable for small satellites and missions to other solar-system objects. “Attaching filters directly to the detector eliminates the substantial mass of traditional lens and filter systems,” Hewagama said. “This allows a low-mass instrument with a compact focal plane which can now be chilled for infrared detection using smaller, more efficient coolers. Smaller satellites and missions can benefit from their resolution and accuracy.” Engineer Murzy Jhabvala led the initial sensor development at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, as well as leading today’s filter integration efforts. Jhabvala also led the Compact Thermal Imager experiment on the International Space Station that demonstrated how the new sensor technology could survive in space while proving a major success for Earth science. More than 15 million images captured in two infrared bands earned inventors, Jhabvala, and NASA Goddard colleagues Don Jennings and Compton Tucker an agency Invention of the Year award for 2021. The Compact Thermal Imager captured unusually severe fires in Australia from its perch on the International Space Station in 2019 and 2020. 
With its high resolution, it detected the shape and location of fire fronts and how far they were from settled areas — information critically important to first responders. Credit: NASA Data from the test provided detailed information about wildfires, better understanding of the vertical structure of Earth’s clouds and atmosphere, and captured an updraft caused by wind lifting off Earth’s land features called a gravity wave. The groundbreaking infrared sensors use layers of repeating molecular structures to interact with individual photons, or units of light. The sensors resolve more wavelengths of infrared at a higher resolution: 260 feet (80 meters) per pixel from orbit compared to 1,000 to 3,000 feet (375 to 1,000 meters) possible with current thermal cameras. The success of these heat-measuring cameras has drawn investments from NASA’s Earth Science Technology Office (ESTO), Small Business Innovation and Research, and other programs to further customize their reach and applications. Jhabvala and NASA’s Advanced Land Imaging Thermal IR Sensor (ALTIRS) team are developing a six-band version for this year’s LiDAR, Hyperspectral, & Thermal Imager (G-LiHT) airborne project. This first-of-its-kind camera will measure surface heat and enable pollution monitoring and fire observations at high frame rates, he said. NASA Goddard Earth scientist Doug Morton leads an ESTO project developing a Compact Fire Imager for wildfire detection and prediction. “We’re not going to see fewer fires, so we’re trying to understand how fires release energy over their life cycle,” Morton said. “This will help us better understand the new nature of fires in an increasingly flammable world.” CFI will monitor both the hottest fires which release more greenhouse gases and cooler, smoldering coals and ashes which produce more carbon monoxide and airborne particles like smoke and ash. 
“Those are key ingredients when it comes to safety and understanding the greenhouse gases released by burning,” Morton said. After they test the fire imager on airborne campaigns, Morton’s team envisions outfitting a fleet of 10 small satellites to provide global information about fires with more images per day. Combined with next generation computer models, he said, “this information can help the forest service and other firefighting agencies prevent fires, improve safety for firefighters on the front lines, and protect the life and property of those living in the path of fires.” Probing Clouds on Earth and Beyond Outfitted with polarization filters, the sensor could measure how ice particles in Earth’s upper atmosphere clouds scatter and polarize light, NASA Goddard Earth scientist Dong Wu said. This application would complement NASA’s PACE — Plankton, Aerosol, Cloud, ocean Ecosystem — mission, Wu said, which revealed its first light images earlier this month. Both measure the polarization of light wave’s orientation in relation to the direction of travel from different parts of the infrared spectrum. “The PACE polarimeters monitor visible and shortwave-infrared light,” he explained. “The mission will focus on aerosol and ocean color sciences from daytime observations. At mid- and long-infrared wavelengths, the new Infrared polarimeter would capture cloud and surface properties from both day and night observations.” In another effort, Hewagama is working with Jhabvala and Jennings to incorporate linear variable filters which provide even greater detail within the infrared spectrum. The filters reveal atmospheric molecules’ rotation and vibration as well as Earth’s surface composition. That technology could also benefit missions to rocky planets, comets, and asteroids, planetary scientist Carrie Anderson said. She said they could identify ice and volatile compounds emitted in enormous plumes from Saturn’s moon Enceladus. 
“They are essentially geysers of ice,” she said, “which of course are cold, but emit light within the new infrared sensor’s detection limits. Looking at the plumes against the backdrop of the Sun would allow us to identify their composition and vertical distribution very clearly.” By Karl B. Hille, NASA’s Goddard Space Flight Center, Greenbelt, Md. Last Updated May 22, 2024

2 notes


Text

Mega projects

Two years ago, I changed jobs.

The role of BI Analyst that I moved from was about 80% hard-skill based. I took it on while living with a disability resulting from post-operation difficulties and it served me well, providing me with great work-life balance at an acceptable wage. Thanks to the arrangement, I was able to take care of myself, deal with my physical handicap, and finally undergo a couple of surgeries, which restored my somewhat healthy status.

Following that, I finally started properly recovering mentally. Soon, I was able to take up many of my previous hobbies other than gaming. I bought a bicycle, which I rode the 20-odd kilometers to work a couple of times and even managed to climb the way to the dorms where my then girlfriend (now wife) stayed. I also managed to recondition and sell off computer hardware that I had had piling up for a good while by then. I started new electronics projects and fixed appliances for friends and family. I redesigned my blog, made a website, designed a brandbook for an acquaintance, and edited hours of videos. It was almost as if I were back at the uni with a little extra money, which allowed me to invest in stuff.

The next obvious thing to happen for any purpose-driven individual was becoming more proactive at work. I suggested expansion and overall improvement of the architecture behind the firm's BI suite, because it was clearly necessary - more on that in an earlier article. In spite of my being categorized under finance, there was no real budget for it and most of my proposals ended up in an abyss. I even paid for Google Cloud resources to automate some of the data science stuff.

When a new CTO came in and things finally started to move, he was more keen on bringing his own people to do the important work. Having previously been involved in projects of country-level importance, including system implementation and process redesign, and even having been offered a similar role in the Netherlands (albeit shortly before being diagnosed with cancer), I felt it was unfair not to give me the opportunity. So I left to seek it elsewhere.

I found it with a firm two miles from my birthplace, which was founded some two years after I was born. [Coincidence? Likely.] They (or rather we) are a used car retailer and at that point in time needed to replace an old CRM system. And that's what I was tasked with, all the way from technology and supplier tender to the launch and establishment of iterative development cycles. It was a notch up from what I did some time before then, exactly the challenge I felt I needed.

Supported by the director of ICT, who has profound experience working with a global logistics giant, I completed the implementation in two years. The role encompassed project management across business stakeholders and external suppliers, creating technical specifications, but most of all, doing a lot of the programming myself - especially the integrations. Along the way, I was joined by a teammate, to whom I slowly handed over the responsibility of overseeing the operations and providing L1 - L2 support.

The final 9 months leading up to that were particularly difficult, though - finalizing every little bit to the continually adjusting requirements put forward by the key process owners. In the week before go-live, I worked double hours to finish everything and enable a "big bang" transition. D-Day 3 am, I had to abort due to not making the final data migration in time, meaning that the switch happened on Valentine's day.

The extended care period, over which we had to fix every single bug and reduce glitches took about two more months. And even though we managed to present the whole thing as complete, oversight and further expansion still take about two days of my week.

Over the duration of the CRM project, I was fully invested in it and still managed to deliver some extras, like helping out with reporting, integrating Windows users repository with chip-based attendance system from late '00s, working with some weird APIs, and administering two servers loaded with devops utilities.

Personal life did not suffer entirely. I dedicated most to spending time with my girlfriend, even managed to marry her during that period. There were some home-improvement activities that needed to be done and a small number of hurried vacations. But all my side-projects and hobbies ended up being on hold.

And that is literally the only thing I regret about the project and, to date, from the whole job change. Now is the time to try and pick up where I left off. Regaining the momentum in writing and video editing will be particularly difficult. My wife wants me to help out with her cosplay, so I have good motivation to return to being crafty again and refresh the experience from when I made a LARP crossbow and melee weapons. Furthering home-improvement is a big desire of mine but cost is an issue nowadays, with rent and utilities being entirely on my shoulders.

And then there are two things that I want to achieve that I failed at for way too long. Obtaining a driver's license (or possibly making my wife get it) and losing weight. The latter, I am working on with the handy calorie tracking app that dine4fit.com is, especially in my current region, and my Garmin watch. We will hopefully go swimming again soon as well. The former is a whole different story surrounded by plenty of trauma that still needs some recovery and obviously the sacrifice of cost and time to complete it.

I believe I have now strongly improved my work-life balance, by far not to what I was used to at the uni, but to a level that should let me do things that I want to do. And I wish to maintain it for a while. Maybe before embarking on yet another mega project, albeit with a much better starting point than the one I had in this case? Who knows.

And about the money, I believe a spike will come eventually, with transition to another employer, most likely. But the longer I am here, the more experience comes my way in doses much greater than those I would get elsewhere if I were to move just now. I'm 28 and if I lose weight and make sure to overcome obstacles of personal nature, I will do better. As for not being a millionaire by the age of 30, I should be able to handle that.

I almost died five years ago, gave up on pursuing my master's, lost the chance to take on the opportunity I had in the Netherlands, and now live in the place I'd wished, and even temporarily managed, to move away from. I do well understand how scarce our time is, but I have to cut myself some slack when others don't (upcoming article "cancer perks"). For what it is, I still rock, don't I?!

3 notes


Text

A list of Automotive Engineering Service Companies in Germany

Bertrandt AG, https://www.bertrandt.com/. Bertrandt operates in digital engineering, physical engineering, and electrical systems/electronics segments. Its design function covers all the elements of the vehicle.

Alten Group, https://www.alten.com/. ALTEN Group supports the development strategy of its customers in the fields of innovation, R&D and technological information systems. Created 30 years ago, the Group has become a world leader in Engineering and Technology consulting. 24 700 highly qualified engineers carry out studies and conception projects for the Technical and Information Systems Divisions of major customers in the industrial, telecommunications and Service sectors.

L&T Technology Services Limited, https://www.ltts.com/. LTTS’ expertise in engineering design, product development, smart manufacturing, and digitalization touches every area of our lives — from the moment we wake up to when we go to bed. With 90 Innovation and R&D design centers globally, we specialize in disruptive technology spaces such as EACV, Med Tech, 5G, AI and Digital Products, Digital Manufacturing, and Sustainability.

FEV Group GmbH, https://www.fev.com/. FEV designs and develops internal combustion engines; conventional, electric, and alternative vehicle drive systems; and energy technology, and is a major supplier of advanced testing and instrumentation products and services to some of the world’s largest powertrain OEMs. Founded in 1978 by Prof. Franz Pischinger, the company today employs highly skilled research and development specialists on several continents.

Harman International, https://www.harman.com/. HARMAN designs and engineers connected products and solutions for automakers, consumers, and enterprises worldwide, including connected car systems, audio and visual products, enterprise automation solutions; and services supporting the Internet of Things.

EDAG Engineering GmbH, https://www.edag.com/de/. EDAG works in vehicle development, plant planning and construction, and process optimization.

HCL Technologies Limited, http://www.hcltech.com/. HCL Technologies Limited is an Indian multinational information technology services and consulting company headquartered in Noida. It emerged as an independent company in 1991 when HCL entered into the software services business. The company has offices in 52 countries and over 210,966 employees.

Cientra GmbH, https://www.cientra.com/. Cientra's expertise across VLSI, ASIC, FPGA, SoC engineering, and IoT accelerates its delivery of customized solutions to the Consumer, Aviation, Semiconductors, Telecom, Wireless, and Automotive industries across their product lifecycle.

Akka Technologies, https://www.akka-technologies.com/. AKKA supports the world’s leading industry players in their digital transformation and throughout their entire product life cycle.

IAV GmbH, https://www.iav.com/en/. IAV develops the mobility of the future. Regardless of the specific manufacturer, our engineering proves itself in vehicles and technologies all over the world.

Altran Technologies, https://www.altran.com/in/en/. Altran offers expertise from strategy and design to managing operations in the fields of cloud, data, artificial intelligence, connectivity, software, digital engineering, and platforms.

Capgemini Engineering, https://capgemini-engineering.com/de/de/. Capgemini Engineering is a technology and innovation consultancy across sectors including Aeronautics, Space, Defense, Naval, Automotive, Rail, Infrastructure & Transportation, Energy, Utilities & Chemicals, Life Sciences, Communications, Semiconductor & Electronics, Industrial & Consumer, Software & Internet.

2 notes


Text

How Many Seconds in a Month? Here’s A Simple Way to Calculate

Understanding the number of seconds in a month can be complex because the number of days varies from month to month. This blog post provides a simple method to calculate how many seconds are in a month and explains the difference between a second and a month. The method works whether you are a student, have a curious mind, or need a precise time calculation.

Difference Between a Second and a Month

As per the International System of Units (SI), the “Second” is the base unit of time. To understand it better, there are 60 seconds in a minute, 3,600 seconds in an hour, 86,400 seconds in a day, and 604,800 seconds in a week. People commonly use this unit to measure short durations and intervals.

A “Month” is a unit of time that people use in calendars. It typically consists of 28 to 31 days and is loosely based on the lunar cycle. Furthermore, it helps people organize their annual projects and mark periods like seasons, events, and financial cycles.

How Many Seconds in a Month?

Calculating the number of seconds in a month can be tricky because the answer depends on how many days that particular month has. You must know this basic information before starting the calculation:

60 Seconds = 1 Minute

60 Minutes = 1 Hour

24 Hours = 1 Day

So, read the following section to learn about the number of seconds in a month.

First, multiply the number of minutes in an hour by the number of seconds in a minute to get the seconds in an hour.

After that, multiply the result by the number of hours in a day to get the seconds in a day.

Next, multiply that by the number of days in the month.

Here is the basic calculation you need to carry out first:

60 Minutes Per Hour * 60 Seconds Per Minute = 3,600 Seconds Per Hour

24 Hours Per Day * 3,600 Seconds Per Hour = 86,400 Seconds Per Day

Now follow the same calculation for a 30-day Month, 31-day month, February in a common year, and February in a leap year.

30-Day Month:

30 Days Per Month * 86,400 Seconds Per Day = 2,592,000 Seconds

Hence, there are 2,592,000 Seconds in a 30-day month.

31-Day Month:

31 Days Per Month * 86,400 Seconds Per Day = 2,678,400 Seconds

Thus, there are 2,678,400 Seconds in a 31-day month.

February in a Common Year:

28 Days Per Month * 86,400 Seconds Per Day = 2,419,200 Seconds

So, there are 2,419,200 Seconds in February during a common year.

February in a Leap Year:

29 Days Per Month * 86,400 Seconds Per Day = 2,505,600 Seconds

As a result, there are 2,505,600 Seconds in February during a leap year.
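The same multiplication can be sketched in a few lines of Python. This is only an illustration: the function name `seconds_in_month` and the constant `SECONDS_PER_DAY` are made up for this example, and the sketch assumes every day is exactly 24 hours long, so it ignores leap seconds and daylight saving time changes. The standard library's `calendar.monthrange` supplies the number of days, including the leap-year case for February.

```python
import calendar

# 24 hours * 60 minutes * 60 seconds = 86,400 seconds in a day
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_in_month(year: int, month: int) -> int:
    """Return the number of seconds in a calendar month (1 = January).

    Assumes every day has exactly 24 hours.
    """
    # monthrange returns (weekday of the first day, number of days in the month)
    _, days = calendar.monthrange(year, month)
    return days * SECONDS_PER_DAY


if __name__ == "__main__":
    # One example for each case covered in the text
    for year, month in [(2023, 4), (2023, 1), (2023, 2), (2024, 2)]:
        print(year, month, seconds_in_month(year, month))
```

Passing the year as well as the month is what lets the function tell a 28-day February from a 29-day one.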

Real-Life Use Cases to Calculate Seconds in a Month

Calculating how many seconds in a month will help you in various real-life use cases. Here are some of them you need to know about:

Science and Engineering. You should accurately carry out the time calculations for experiments and processes. In engineering, timing is important for consistency in communication networks and data transmission systems.

Global Positioning System (GPS). GPS is dependent on precise timing to determine exact locations. Satellites use atomic clocks to calculate the exact time taken for signals to travel. As a result, it helps pinpoint the position on Earth.

Daily Routines. People use the seconds in a month conversion to manage their schedules effectively. As a result, it helps ensure that tasks and activities fit within their available time.

Summing It All Up

Working out how many seconds are in a month can be confusing because months have different numbers of days. This blog post simplifies the calculation process, explaining the difference between a second and a month, and provides a method to calculate the seconds in any month: multiply the number of days by 24, then by 60, and finally by 60 again. This calculation is helpful for subscription billing, project management, data transfer, event logging, and financial analysis.


Discover the Best BCA Colleges in Chandigarh: Your Path to a Successful Tech Career

Chandigarh, known for its well-planned infrastructure and educational institutions, is a hub for students aspiring to build a career in technology. Among the various programs offered, the Bachelor of Computer Applications (BCA) stands out as a popular choice for those passionate about computers and software development. If you're looking to pursue a BCA degree, Chandigarh offers some of the best colleges that provide quality education, industry exposure, and excellent placement opportunities.

Why Choose Chandigarh for Your BCA Studies?

Educational Hub: Chandigarh is home to some of the top educational institutions in India, offering a conducive environment for academic growth.

Industry Exposure: The city has a growing IT sector, providing students with ample opportunities for internships, projects, and placements in leading tech companies.

Experienced Faculty: BCA colleges in Chandigarh boast experienced faculty members who are experts in their fields, ensuring that students receive a comprehensive education.

Modern Infrastructure: Colleges in Chandigarh are equipped with state-of-the-art facilities, including computer labs, libraries, and digital classrooms, providing students with the best learning environment.

BCA Course Structure and Curriculum

The BCA program in Chandigarh colleges typically spans three years and is divided into six semesters. The curriculum is designed to provide a strong foundation in computer science and its applications. Here’s an overview of the subjects covered:

Programming Languages: C, C++, Java, Python, etc.

Database Management: SQL, Oracle, etc.

Web Development: HTML, CSS, JavaScript, etc.

Software Engineering: Software development life cycle, project management.

Data Structures: Concepts of data organization, algorithms.

Operating Systems: Windows, Linux, etc.

Networking: Basics of networking, network security.

Mathematics for Computing: Discrete mathematics, statistics.

#college#bca college#bca#bca course#india#mbacollege#artificial intelligence#edtech#education#student#school#robotics#uttaranchaluniversity


Your Guide to Learn about Data Science Life Cycle

Summary: This guide covers the Data Science Life Cycle's key stages, offering practical tips for managing data science projects efficiently. Learn how to transform raw data into actionable insights that drive business decisions.

Introduction

Understanding the Data Science Life Cycle is crucial for anyone looking to excel in data science. This cycle provides a structured approach to transforming raw data into actionable insights. By mastering each stage, you ensure that your data science projects are not only efficient but also yield accurate and meaningful results.

This guide will walk you through the essential stages of the Data Science Life Cycle, explaining their significance and offering practical tips for each step. By the end, you’ll have a clear roadmap for managing data science projects from start to finish.

What is the Data Science Life Cycle?

The Data Science Life Cycle is a systematic approach to managing data science projects, guiding the entire process from understanding the problem to deploying the solution.

It consists of several stages, including business understanding, data collection, data preparation, data exploration and analysis, modeling, and deployment. Each stage plays a critical role in transforming raw data into valuable insights that can drive decision-making.

Importance of the Life Cycle in Data Science Projects

Understanding the Data Science Life Cycle is essential for ensuring the success of any data-driven project. By following a structured process, data scientists can effectively manage complex tasks, from handling large datasets to building and refining predictive models.

The life cycle ensures that each step is completed thoroughly, reducing the risk of errors and enhancing the reliability of the results.

Moreover, the iterative nature of the life cycle allows for continuous improvement. By revisiting earlier stages based on new insights or changes in requirements, data scientists can refine models and optimize outcomes.

This adaptability is crucial in today’s dynamic business environment, where data-driven decisions must be both accurate and timely. Ultimately, the Data Science Life Cycle enables teams to deliver actionable insights that align with business goals, maximizing the value of data science efforts.


Stages of the Data Science Life Cycle

The Data Science Life Cycle is a systematic process that guides data scientists in turning raw data into meaningful insights. Each stage of the life cycle plays a crucial role in ensuring the success of a data science project.

This guide will walk you through each stage, from understanding the business problem to communicating the results effectively. By following these stages, you can streamline your approach and ensure that your data science projects are aligned with business goals and deliver actionable outcomes.

Business Understanding

The first stage of the Data Science Life Cycle is Business Understanding. It involves identifying the problem or business objective that needs to be addressed.

This stage is critical because it sets the direction for the entire project. Without a clear understanding of the business problem, data science efforts may be misaligned, leading to wasted resources and suboptimal results.

To begin, you must collaborate closely with stakeholders to define the problem or objective clearly. Ask questions like, "What is the business trying to achieve?" and "What are the pain points that data science can address?"

This helps in framing the data science goals that align with the broader business goals. Once the problem is well-defined, you can translate it into data science objectives, which guide the subsequent stages of the life cycle.

Data Collection

With a clear understanding of the business problem, the next step is Data Collection. In this stage, you gather relevant data from various sources that will help address the business objective. Data can come from multiple channels, such as databases, APIs, sensors, social media, and more.

Understanding the types of data available is crucial. Data can be structured, such as spreadsheets and databases, or unstructured, such as text, images, and videos.

Additionally, you must be aware of the formats in which data is stored, as this will impact how you process and analyze it later. The quality and relevance of the data you collect are paramount, as poor data quality can lead to inaccurate insights and faulty decision-making.

Data Preparation

Once you have gathered the data, the next stage is Data Preparation. This stage involves cleaning and preprocessing the data to ensure it is ready for analysis.

Data cleaning addresses issues such as missing data, outliers, and anomalies that could skew the analysis. Preprocessing may involve normalizing data, encoding categorical variables, and transforming data into a format suitable for modeling.

Handling missing data is one of the critical tasks in this stage. You might choose to impute missing values, drop incomplete records, or use algorithms that can handle missing data.

Additionally, outliers and anomalies need to be identified and addressed, as they can distort the results. Data transformation and feature engineering are also part of this stage, where you create new features or modify existing ones to better capture the underlying patterns in the data.
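As a rough illustration of the cleaning steps described above, the sketch below imputes missing values with the median and then flags outliers using the interquartile range (IQR) rule. The helper names, the sample `ages` list, and the multiplier `k=1.5` are assumptions chosen for this example; real projects often use a library such as pandas and pick the strategy that suits the data.

```python
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Made-up sample data with two missing entries and one suspicious value
ages = [23, 25, None, 27, 24, 26, 95, None, 25]

cleaned = impute_median(ages)
print(cleaned)                # missing entries replaced by the median
print(iqr_outliers(cleaned))  # values flagged for review
```

Flagged values are only candidates for review. Whether to drop, cap, or keep them depends on what they mean for the business problem.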

Data Exploration and Analysis

Data Exploration and Analysis is where you begin to uncover the insights hidden within the data. This stage involves conducting exploratory data analysis (EDA) to identify patterns, correlations, and trends. EDA is an essential step because it helps you understand the data's structure and relationships before applying any modeling techniques.

During this stage, you might use various tools and techniques for data visualization, such as histograms, scatter plots, and heatmaps, to explore the data. These visualizations can reveal important insights, such as the distribution of variables, correlations between features, and potential areas for further investigation.

EDA also helps in identifying any remaining issues in the data, such as biases or imbalances, which need to be addressed before moving on to the modeling stage.
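One of the simplest EDA checks mentioned above is the correlation between two features. The following sketch computes the Pearson correlation coefficient by hand so that it needs nothing beyond the standard library. The function name and the `ad_spend` and `sales` figures are invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    # Raises ZeroDivisionError if either sequence is constant
    return cov / math.sqrt(var_x * var_y)


# Hypothetical figures: sales rise in step with ad spend
ad_spend = [1, 2, 3, 4, 5]
sales = [2, 4, 6, 8, 10]
print(pearson(ad_spend, sales))
```

A value near 1 or -1 suggests a strong linear relationship, while a value near 0 suggests little linear relationship. It says nothing about causation.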

Data Modeling

After exploring the data, the next stage is Data Modeling. This stage involves selecting appropriate algorithms and models that can help solve the business problem. The choice of model depends on the nature of the data and the specific problem you are trying to address, whether it's classification, regression, clustering, or another type of analysis.

Once you've selected the model, you'll train it on your data, testing different configurations and parameters to optimize performance. After training, it's crucial to evaluate the model's performance using metrics such as accuracy, precision, recall, and F1 score. This evaluation helps determine whether the model is robust and reliable enough for deployment.
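The evaluation metrics named above can all be derived from four counts: true positives, false positives, false negatives, and true negatives. Here is a minimal sketch for a binary classifier. The function name and the label lists are hypothetical, and libraries such as scikit-learn provide equivalent, more complete implementations.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up ground truth and predictions for eight samples
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```

Accuracy alone can mislead on imbalanced data, which is why precision, recall, and F1 are usually reported alongside it.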

Model Deployment

With a well-trained model in hand, the next step is Model Deployment. In this stage, you deploy the model into a production environment where it can start generating predictions and insights in real time. Deployment may involve integrating the model with existing systems or setting up a new infrastructure to support it.

Continuous monitoring and maintenance of the model are vital to ensure it remains effective over time. Models can degrade due to changes in data patterns or shifts in business needs, so regular updates and retraining may be necessary. Addressing these issues promptly ensures that the model continues to deliver accurate and relevant results.
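One simple way to watch for the changing data patterns described above is to compare recent values of an input feature against the values seen during training. The sketch below measures how far the recent mean has moved, in units of the training standard deviation. The function names, the sample numbers, and the threshold of 2.0 are all assumptions made for illustration. Production monitoring typically uses more robust statistical tests.

```python
import statistics


def mean_shift(baseline, recent):
    """Shift of the recent mean from the baseline mean, in baseline standard deviations."""
    base_mean = statistics.mean(baseline)
    base_std = statistics.stdev(baseline)
    return abs(statistics.mean(recent) - base_mean) / base_std


def needs_retraining(baseline, recent, threshold=2.0):
    """Flag the model for review when the feature has drifted past the threshold."""
    # threshold=2.0 is an arbitrary illustrative choice, not a recommended value
    return mean_shift(baseline, recent) > threshold


# Hypothetical feature values: training data, then two production windows
training_values = [10, 12, 11, 13, 9, 10, 12, 11]
stable_window = [11, 12, 10, 11]
drifted_window = [16, 17, 15, 18]

print(needs_retraining(training_values, stable_window))
print(needs_retraining(training_values, drifted_window))
```

A check like this only signals that something has changed. Deciding whether to retrain still calls for a look at model performance on fresh labeled data.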

Communication of Results

The final stage of the Data Science Life Cycle is Communication of Results. Once the model is deployed and generating insights, it's essential to communicate these findings to stakeholders effectively. Visualization plays a key role in this stage, as it helps translate complex data into understandable insights.

You should present the results clearly, highlighting the key findings and their implications for the business. Creating actionable recommendations based on these insights is crucial for driving decision-making and ensuring that the data science project delivers tangible value.

Challenges in the Data Science Life Cycle

In the Data Science Life Cycle, challenges are inevitable and can arise at every stage. These obstacles can hinder progress if not addressed effectively. However, by recognizing common issues and implementing strategies to overcome them, you can ensure a smoother process and successful project outcomes.

Data Collection Issues: Incomplete or inconsistent data can disrupt analysis. To overcome this, establish clear data collection protocols and use automated tools for consistency.

Data Quality Problems: Poor data quality, including missing values or outliers, can lead to inaccurate models. Regular data cleaning and validation checks are crucial to maintain data integrity.

Complex Data Modeling: Selecting the right model and parameters can be challenging. To tackle this, experiment with different algorithms, and consider cross-validation techniques to refine your model.

Deployment Hurdles: Deploying models into production environments can face integration issues. Collaborate with IT teams and ensure proper infrastructure is in place for smooth deployment.

Communication Barriers: Effectively communicating insights to non-technical stakeholders is often difficult. Use visualizations and clear language to bridge this gap.

Collaboration and domain knowledge play a pivotal role in addressing these challenges. By working closely with domain experts and cross-functional teams, you can ensure that the data science project aligns with business objectives and achieves desired outcomes.
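The cross-validation technique suggested under Complex Data Modeling can be sketched without any libraries. The generator below splits sample indices into k contiguous folds, using each fold once as the test set. The function name is invented for this example, and the sketch does not shuffle the data, which a real workflow (for instance with scikit-learn's `KFold`) usually would.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    # Spread any remainder over the first folds so sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


# Ten samples split into three folds
for train, test in k_fold_indices(10, 3):
    print("train:", train, "test:", test)
```

Training and scoring the model once per fold, then averaging the scores, gives a steadier estimate of performance than a single train/test split.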

Best Practices for Managing the Data Science Life Cycle

Managing the Data Science Life Cycle effectively is crucial for ensuring project success and delivering actionable insights. By following best practices, you can streamline processes, enhance collaboration, and achieve better results. Here are some key tips for efficient project management in data science:

Prioritize Clear Communication: Maintain open channels of communication among team members to ensure everyone is aligned with project goals and timelines. Regular check-ins and updates help in addressing issues promptly.

Document Every Step: Proper documentation is essential for tracking progress and maintaining a clear record of decisions and methodologies. This not only aids in replication and debugging but also provides a reference for future projects.

Emphasize Version Control: Use version control systems like Git to manage changes in code, data, and models. This ensures that all team members work on the latest versions and can easily revert to previous states if necessary.

Choose the Right Tools and Technologies: Selecting appropriate tools for each stage of the data science life cycle is vital. Use programming languages like Python or R for data processing, visualization tools like Tableau, and cloud platforms like AWS for scalability and efficiency.

By implementing these best practices, you can manage the Data Science Life Cycle more effectively, leading to more successful and impactful data science projects.

Frequently Asked Questions

What are the stages of the Data Science Life Cycle?

The Data Science Life Cycle consists of seven key stages: Business Understanding, Data Collection, Data Preparation, Data Exploration and Analysis, Data Modeling, Model Deployment, and Communication of Results. Each stage plays a crucial role in transforming raw data into actionable insights.

Why is the Data Science Life Cycle important?

The Data Science Life Cycle is essential because it provides a structured approach to managing data science projects. By following each stage methodically, data scientists can ensure accurate results, efficient workflows, and actionable outcomes aligned with business goals.

How does Data Exploration differ from Data Modeling?

Data Exploration involves analyzing and visualizing data to uncover patterns, correlations, and trends, while Data Modeling focuses on selecting and training algorithms to create predictive models. Exploration helps understand the data, and modeling leverages this understanding to solve specific business problems.

Conclusion

Mastering the Data Science Life Cycle is key to driving successful data-driven projects.

By understanding and meticulously following each stage—from business understanding to communication of results—you can ensure that your data science efforts yield accurate, actionable insights. Implementing best practices, such as clear communication, documentation, and tool selection, further enhances the efficiency and impact of your projects.


Funding By ‘Kirana’ Owner

Kinara Capital, which drives last-mile MSME financial inclusion, turned profitable for the full year in FY15 and has continued to grow despite several industry odds.

Kinara Capital offers asset purchase and working capital collateral-free business loans in the range of INR 1 lakh to 30 lakhs within a 24-hour disbursement cycle with an average ticket size of INR 8 lakh to 9 lakhs. The credit assessments are done with the help of Artificial Intelligence and Machine Learning based data-driven automated credit decisioning.

Kinara Capital had raised USD 178 million from investors namely Sorenson Impact Foundation, Gaja Capital and IndusInd Bank.

Over the last decade, Hardika Shah, CEO and Founder of Kinara Capital, has helped MSMEs flourish in industries such as auto components, textiles, plastics and food products.

Hardika Shah has been a champion of localization. Attention to local nuances helped her understand the pain points of MSMEs, an understanding that today stands as the pillar of this robust fintech.

Kinara Capital was awarded the Gold Award as 'Bank of the Year-Asia' by the IFC/SME Finance Forum for their impact on SME Financing.

Kinara Capital understands the pain of capital-starved women entrepreneurs, which led to the launch of the HerVikas program in 2020, aiming to extend empowerment to emerging women entrepreneurs.

By the 5th anniversary, HerVikas had reshaped the landscape of financial inclusion, empowering women-operated small businesses.

After being away from her home country for over 10 years, she packed her life into a container and moved to Hyderabad, and that is how Kinara Capital began.

Prior to Kinara Capital, Hardika spent two decades as a management consultant with Accenture, working on billion-dollar projects globally. Her passion for social impact led her to mentorship roles with prestigious institutions like Stanford University and the Acumen Fund.

After studying Computer Science, she completed an Executive MBA, a joint program between Columbia Business School and Haas School of Business, which exposed her to a myriad of cultures.

One of the most successful founders in the country, Hardika firmly believes that relationships are core in business.

Hardika was born and brought up in a Gujarati family in Bombay, where her mother ran a provision store.

The Kinara Capital CEO begins her day by feeding pets and finds relaxation in experimenting with a variety of dishes such as sushi, gnocchi, or even a local Kannada speciality, nuchina unde.

Making lavender candles and olive oil soaps at home rejuvenates the fintech founder.

On Sundays, the founder plays Housie or Antakshari with family over Zoom calls.

When the founder is not busy building one of the biggest unsecured lending platforms, chances are high that you will find her at the sewing machine or working on a charcoal painting.


Best BCA Colleges in Dehradun

Dev Bhoomi Uttarakhand University (DBUU) in Dehradun offers a Bachelor of Computer Applications (BCA) program designed to provide students with a solid foundation in computer science and applications. The program aims to equip students with the necessary skills to excel in the rapidly evolving field of information technology.

Curriculum

The BCA program at DBUU is structured to cover a broad spectrum of topics in computer science, including:

Programming Languages: Students gain proficiency in languages such as C, C++, Java, Python, and more.

Database Management Systems: The curriculum includes comprehensive training in database design, management, and SQL.

Web Development: Courses in HTML, CSS, JavaScript, and web frameworks ensure students can build and maintain dynamic websites.

Software Engineering: Students learn about software development life cycles, methodologies, and best practices.

Data Structures and Algorithms: Fundamental concepts that are crucial for efficient problem-solving and coding practices are thoroughly covered.

Networking: Students are introduced to the basics of computer networks, network security, and protocols.

Practical Exposure

DBUU emphasizes hands-on learning and practical exposure. The university provides state-of-the-art computer labs equipped with the latest hardware and software. Students participate in various lab sessions, projects, and internships that enable them to apply theoretical knowledge in real-world scenarios. Additionally, the university often collaborates with industry partners to offer workshops, seminars, and guest lectures, enhancing the practical learning experience.

Faculty

The BCA program is taught by experienced faculty members who are experts in their respective fields. They bring a mix of academic knowledge and industry experience, ensuring that students receive a well-rounded education. The faculty is committed to mentoring students and providing personalized guidance to help them achieve their academic and professional goals.

Career Prospects

Graduates of the BCA program from DBUU are well-prepared for a variety of roles in the IT industry. They can pursue careers as software developers, system analysts, web developers, database administrators, and network administrators. The university's placement cell works tirelessly to connect students with leading companies and organizations for job placements and internships. Additionally, the program lays a strong foundation for those who wish to pursue higher studies, such as an MCA (Master of Computer Applications) or other advanced degrees in computer science.

Campus Life

DBUU offers a vibrant campus life with numerous extracurricular activities, clubs, and events. Students have access to various facilities, including a well-stocked library, sports complex, and cafeteria, contributing to a well-rounded college experience.

In summary, the BCA program at Dev Bhoomi Uttarakhand University in Dehradun is designed to provide students with comprehensive knowledge, practical skills, and ample career opportunities in the field of computer science.


Skill Development & Placement Success At The Best BCA College In Patna

If you have just passed your 12th standard and aspire to pursue a BCA degree, this blog will be really beneficial. After 12th standard, choosing the right college for your Bachelor of Computer Applications (BCA) is a pivotal decision, as it sets your foundation. If you’re considering studying in Patna, you might wonder what skills you’ll acquire and what placement opportunities await you after graduation. So let’s delve into these aspects and see why Amity University is the best BCA college in Patna, making it the top choice for your studies.

Why Amity University is the Top Choice

Amity University's BCA program is well-structured and regularly updated with the latest industry trends, which means students learn the skills employers want. Amity's faculty is made up of industry veterans and academic experts who bring a wealth of knowledge and practical experience to the classroom. These mentors help students get through tough subjects and prepare them for the real world, giving them a solid educational foundation.

The University has modern computer labs, a huge library, and the latest software and tools. The Amity campus is designed to help you learn and innovate. Students are encouraged to get involved in extracurricular activities like tech fests and coding competitions, which help you develop as a whole person. The administration and faculty at Amity are supportive and are there for you if you need help with your studies or personal life.

Skills You Will Gain

Let’s start by addressing the crucial skills you will gain by pursuing a Bachelor of Computer Applications degree.

Programming Proficiency

A typical Bachelor of Computer Applications curriculum is designed to make you proficient in various programming languages such as Java, C++, Python, and more. A strong foundation is essential for any software development role, and it gives you the versatility to tackle different types of projects.

Database Management

Knowing how to manage and manipulate data is crucial in today’s evolving world. The BCA program covers database management systems (DBMS) extensively, teaching you how to design, implement, and maintain databases efficiently.

Web Development

With the rise of digital platforms, web development skills are in high demand. Amity’s BCA program includes detailed courses on web technologies that enable you to build and maintain dynamic websites and applications.

Software Engineering

Software engineering is a very important topic in computer science, and its principles are used in creating good software systems. You will learn about software development life cycles, project management, and quality assurance, preparing you for roles that require planning and execution.

Problem-Solving and Analytical Skills

Beyond technical skills, the program at Amity University emphasizes critical thinking and problem-solving. You will engage in various projects and case studies that challenge you to apply your knowledge creatively and analytically.

Conclusion

In conclusion, studying BCA at Amity University in Patna helps you develop technical skills along with an analytical mind. A rich curriculum, effective faculty, developed infrastructure, and collaboration with industry give the University an edge in turning students into capable computer professionals. These achievements have made it the top BCA University in Bihar. Pick Amity University for your BCA and open the gate to a productive and fruitful life as an IT professional. Enroll now!

Source: https://amityuniversitypatna.blogspot.com/2024/07/skill-development-placement-success-at.html


Computer Science Engineering vs. Software Engineering: Which is Better?

Choosing a career path in the technology field can be daunting, especially when deciding between Computer Science Engineering (CSE) and Software Engineering. Both fields offer promising opportunities, but they have distinct focuses and career trajectories. At St. Mary's Group of Institutions, the best engineering college in Hyderabad, we aim to clarify the differences and help you make an informed decision.

Understanding Computer Science Engineering (CSE)

Computer Science Engineering is a broad field that covers both the theoretical and practical aspects of computing. It includes the study of algorithms, data structures, computer architecture, networks, artificial intelligence, machine learning, cybersecurity, and more. CSE programs typically offer a comprehensive understanding of how computer systems work and how to develop new technologies.

Understanding Software Engineering

Software Engineering is a specialized area within CSE that focuses specifically on the design, development, testing, and maintenance of software applications. It applies engineering principles to software creation, ensuring reliability, efficiency, and user satisfaction. Key areas in Software Engineering include software development life cycle (SDLC), programming languages, software design, quality assurance, and project management.

Key Differences

Scope of Study:

CSE: Covers a wide range of topics including hardware, software, algorithms, networks, and more.

Software Engineering: Focuses primarily on software development and its related processes.

Career Focus:

CSE: Offers a broader range of career opportunities in various fields such as artificial intelligence, data science, cybersecurity, and more.

Software Engineering: Primarily targets careers in software development, including roles like software developer, quality assurance engineer, and project manager.

Skill Set:

CSE: Provides a versatile skill set that includes both software and hardware knowledge, along with a strong foundation in algorithms and data structures.

Software Engineering: Focuses more on practical software development skills, project management, and software lifecycle management.

Career Prospects

Both fields offer excellent career prospects, but the opportunities may differ based on your area of interest.

Computer Science Engineering Careers:

Software Developer

Data Scientist

Cybersecurity Analyst

Network Architect

AI and Machine Learning Engineer

Software Engineering Careers:

Software Developer

Software Tester/Quality Assurance Engineer

Systems Analyst

Project Manager

DevOps Engineer

Making the Right Choice

Choosing between CSE and Software Engineering depends on your interests and career goals.

Choose CSE if:

You have a broad interest in various aspects of computing.

You enjoy understanding how systems work at both the hardware and software levels.

You want a versatile degree that offers multiple career paths in different areas of technology.

Choose Software Engineering if:

You are passionate about software development and want to focus specifically on creating, testing, and maintaining software.

You enjoy working on projects and managing the software development lifecycle.

You aim to pursue a career in software development, quality assurance, or project management.

Conclusion

Both Computer Science Engineering and Software Engineering offer rewarding career paths with numerous opportunities. At St. Mary's Group of Institutions, we provide comprehensive programs in both fields to help you achieve your career aspirations. Whether you choose the broad, versatile path of CSE or the specialized focus of Software Engineering, you’ll gain valuable skills and knowledge that will set you on the path to success in the tech industry. Consider your interests and career goals carefully to make the best decision for your future.


Fwd: Graduate position: CzechU.PhylogeographyDesertPlants

Begin forwarded message:

> From: [email protected]

> Subject: Graduate position: CzechU.PhylogeographyDesertPlants

> Date: 12 June 2024 at 05:11:19 BST

> To: [email protected]

>

>

> Dear all,

>

> The Plant Biodiversity and Evolution Research Group at the Czech

> University of Life Sciences (https://ift.tt/VQbe4HX) seeks a

> highly motivated Ph.D. student to take part in the investigation

> of the phylogeography of North American desert species of the genus

> Chenopodium. The main aim of the project is to better understand the

> evolutionary history of the North American desert biota in the context

> of Neogene orogenic activity and the Pleistocene glacial-interglacial

> cycles, as well as the influence of these events on geographic patterns

> of genetic diversity predicted by refugia hypotheses. The study is

> funded by an international collaborative project between Czech (Czech

> University of Life Sciences) and American (Brigham Young University,

> Utah) research institutions, supported by the Inter-Excellence program

> of the Czech Ministry of Education, Youth and Sport.

>

> Requested qualification:

>

> - MSc (or equivalent) in Biology/Botany

> - experience with field work, sampling and plant determination

> - experience with basic molecular genetic techniques (DNA

> extraction, PCR)

> - experience with genetic/genomic data analysis (phylogenetics or

> population genetics)

> - good English communication skills (written and spoken)

>

> Desirable qualification:

>

> - experience with Illumina library preparation

> - basic experience with GIS analyses

> - basic experience with bioinformatic and statistical analysis of NGS

> data

> - basic experience with bash and R scripting

>

> Personal qualities:

>

> - good presentation skills

> - willing to learn

> - networking skills

> - ability to collaborate and cooperate with other team members

> - keen interest in plant evolution and speciation

>

> We offer:

>

> - a four-year position with a tax-free Ph.D. stipend (120.000 -

> 192.000,- CZK/year)

> - additional funding (30% employment) covered by the project (gross

> salary 200.000,- CZK, which is cca 113.600,- CZK/year after tax)

> - friendly and inspiring working environment in an international

> working group

> - collaboration with researchers from other institutions in Czech

> Republic and the USA

> - opportunity to master up-to-date methods (both wet lab and

> bioinformatic)

> - possibility to attend international conferences

> - flexible working hours, 25 days of paid vacation

> - subsidized meals at the university canteen

>

> For details see

> https://ift.tt/qYaotBN,

> the application deadline is the 30th of June 2024. The position is

> available from the 1st of October 2024, at the latest.

>

> For informal queries about the position or the project, please contact

> Dr. Bohumil Mandák [email protected]

>

> Karol Krak

> Czech University of Life Sciences Prague

> Faculty of Environmental Sciences

> Kamýcká 129

> CZ 165 00 Praha 6 - Suchdol

> +420 22438 2996

> https://ift.tt/VQbe4HX

>

> Krak Karol


IBM Cloud HPC: Cadence-Enabled Next-Gen Electronic Design

Cadence makes use of IBM Cloud HPC

With more than 30 years of experience in computational software, Cadence is a leading global developer of electronic design automation (EDA) tools. Businesses all over the world use its software to design the chips and other electronic devices that power today’s emerging technologies. The company’s need for computational capacity is at an all-time high because of rising demand for chips and the integration of AI and machine learning into its EDA processes. For EDA companies such as Cadence, solutions that let workloads move seamlessly between on-premises and cloud environments and that allow project-specific customization are essential.

Developing chip and system design software requires creative solutions, strong computational resources, and cutting-edge security support. Cadence uses IBM Cloud HPC with IBM Spectrum LSF as the workload scheduler. According to Cadence, this has brought faster time-to-solution, better performance, lower costs, and easier workload control.

Cadence also knows firsthand that a cloud migration may call for skills and expertise that not every business has. The Cadence Cloud portfolio as a whole is meant to help clients worldwide take advantage of the cloud’s potential. Cadence Managed Cloud Service is a turnkey solution suited to startups and small- to medium-sized businesses, while Cloud Passport, a customer-managed cloud option, enables Cadence tools for large enterprise clients.

Cadence’s mission is to make the cloud simple for its clients by connecting them with experienced service providers such as IBM, whose platforms can be used to deploy Cadence solutions in cloud environments. The Cadence Cloud Passport approach can provide access to cloud-ready software for use on IBM Cloud, which benefits organizations looking to accelerate innovation at scale.

In today’s cutthroat business climate, companies face complex computational problems that need to be solved quickly. Such problems may be too large for a single system to handle, or they may take too long to solve. For businesses that require prompt answers, every minute matters, and companies that wish to stay competitive cannot let problems go unsolved for weeks or months. Companies in semiconductors, life sciences, healthcare, financial services, and other industries have embraced HPC to solve these problems.

By using HPC, businesses benefit from the speed and performance of many powerful computers working together. This is especially useful given the ongoing push to develop AI at ever-larger scale. Although analyzing vast amounts of data may seem unfeasible, high-performance computing (HPC) applies powerful compute resources that can perform many calculations quickly and concurrently, giving organizations faster access to insights. HPC also helps companies launch innovative products, and businesses are using it more often because it helps them manage risk more effectively, among other purposes.

HPC in the Cloud

Businesses that run workloads during periods of high activity frequently find that demand exceeds the compute capacity available on-premises. This is where cloud computing can complement on-premises HPC and change how a firm sources compute for HPC. Cloud computing can absorb the demand peaks that occur throughout product development cycles, which vary in length. It can also give businesses access to resources and capabilities that they do not need continuously. Businesses that use HPC from the cloud gain greater flexibility, scalability, agility, cost efficiency, and more.
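
The idea of covering demand peaks with cloud capacity can be sketched as a simple overflow rule: work runs on-premises until local capacity is used up, and only the remainder goes to the cloud. The Python below is a minimal illustration with made-up numbers. It does not describe how IBM Cloud HPC or IBM Spectrum LSF actually schedule work, and the function name `split_workload` and all core counts are hypothetical.

```python
def split_workload(demand_cores: int, on_prem_cores: int) -> tuple[int, int]:
    """Return (cores served on-premises, cores sent to the cloud).

    Hypothetical overflow rule: fill local capacity first, then
    send whatever does not fit to cloud resources.
    """
    if demand_cores < 0 or on_prem_cores < 0:
        raise ValueError("core counts must be non-negative")
    on_prem = min(demand_cores, on_prem_cores)
    cloud = demand_cores - on_prem
    return on_prem, cloud


# Assumed weekly demand (in cores) against a fixed 1,000-core cluster.
weekly_demand = [600, 950, 1400, 2200, 800]
plan = [split_workload(demand, 1000) for demand in weekly_demand]

for demand, (on_prem, cloud) in zip(weekly_demand, plan):
    print(f"demand={demand:>5}  on-prem={on_prem:>5}  cloud={cloud:>5}")
```

In this sketch the cloud is used only in the weeks when demand exceeds 1,000 cores, which is the case the paragraph above describes: resources a business does not need continuously.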

Using a hybrid cloud to handle HPC

In the past, HPC systems were built on-site. But today’s huge models and heavy workloads are frequently more than the hardware most businesses have on-site can handle. Because of the large upfront costs of acquiring GPUs, CPUs, and networking, and of building the data center infrastructure required to run computation effectively at scale, numerous organizations have turned to cloud infrastructure providers that have already made significant investments in their hardware.

To fully realize the benefits of both public cloud and on-premises infrastructure, many businesses are implementing hybrid cloud architectures that focus on the intricacies of converting part of their on-premises data center into private cloud infrastructure.

A hybrid cloud strategy for HPC, which combines on-premises and cloud computing, lets enterprises leverage the advantages of each and achieve the security, agility, and adaptability they need. IBM Cloud HPC, for instance, can help businesses flexibly manage compute-intensive workloads on-site. It also helps enterprises manage third- and fourth-party risks by letting them use HPC as a fully managed service, with security and controls built into the platform.

Looking forward

Businesses can overcome many of their most challenging problems by using hybrid cloud services through platforms such as IBM Cloud HPC. Organizations adopting HPC should consider how a hybrid cloud strategy might complement traditional on-premises HPC infrastructure deployments.

Read more on Govindhtech.com

#IBMCloud#ibm#machinelearning#hpc#cloudcomputing#HPCsystems#news#technews#technology#technologynews#technologytrends#govindhtech
