#database migration tools


Comprehensive Guide to Data Migration Tools and Database Migration Tools

In today’s data-driven world, businesses are increasingly reliant on seamless data migration solutions to modernize infrastructure, improve scalability, and maintain competitive edges. Data migration tools and database migration tools play a pivotal role in achieving these objectives by simplifying the transfer of data from one system to another, ensuring accuracy, consistency, and minimal downtime. Visit Us: https://iri1.hashnode.dev/comprehensive-guide-to-data-migration-tools-and-database-migration-tools

0 notes


A Comprehensive Guide to Database Migration Tools at Quadrant

Database migration tools, such as Quadrant's Q-migrator, are software applications designed to assist in the transfer of data from one database system to another. These tools help automate and simplify the process of moving data, schema, and other database objects, ensuring data integrity, minimizing downtime, and reducing the risk of data loss. The need for database migration arises in various scenarios such as upgrading to a newer database version, switching database vendors, moving to a cloud-based database, or consolidating multiple databases.

Key Features of Database Migration Tools

Data Transfer:

Facilitate the movement of data from source to target databases.

Ensure accurate data mapping and transformation.

Schema Migration:

Migrate database schemas including tables, indexes, views, and stored procedures.

Adjust schemas to fit the target database requirements.

Data Transformation:

Transform data formats to match the target database's specifications.

Perform data cleansing and enrichment during migration.

Data Validation and Testing:

Validate data integrity and consistency post-migration.

Provide tools for testing the migrated data to ensure accuracy.

Real-Time Data Replication:

Support continuous data replication for minimal downtime migrations.

Synchronize data changes between source and target databases.

Error Handling and Logging:

Provide detailed logs and error reports for troubleshooting.

Enable rollback mechanisms in case of migration failures.

Security and Compliance:

Ensure secure data transfer with encryption and secure protocols.

Comply with data privacy regulations and standards.
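The "Data Validation and Testing" feature above can be illustrated with a minimal sketch. It assumes two SQLite databases standing in for the source and target systems; the `customers` table, its columns, and the helper names are hypothetical, and real migration tools typically use more scalable comparisons than hashing every row in one pass.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 digest) for a table, reading rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # Table and column names are interpolated directly, so they must come
    # from trusted configuration, never from user input.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def validate_migration(source, target, table, key_column):
    """True when both tables have the same row count and content digest."""
    return table_fingerprint(source, table, key_column) == table_fingerprint(
        target, table, key_column
    )


# Two in-memory databases stand in for the source and target systems.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")]
    )

matches_before = validate_migration(source, target, "customers", "id")

# Simulate a row that was corrupted during transfer.
target.execute("UPDATE customers SET name = 'Grac' WHERE id = 2")
matches_after = validate_migration(source, target, "customers", "id")

print(matches_before, matches_after)  # True False
```

Comparing a count and a digest catches both missing rows and silently altered values, which a row count alone would miss.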

Benefits of Using Database Migration Tools

Efficiency: Automate complex migration tasks, reducing manual effort and time.

Reliability: Ensure data integrity and minimize the risk of data loss.

Scalability: Handle large volumes of data efficiently.

Flexibility: Support various database types and migration scenarios.

Minimal Downtime: Enable near-zero downtime migrations for critical applications.

Consistency: Maintain data consistency and accuracy throughout the migration process.

Popular Database Migration Tools

AWS Database Migration Service (DMS)

Azure Database Migration Service

Google Cloud Database Migration Service

Oracle GoldenGate

Striim

Flyway

Liquibase

DBConvert Studio

Hevo Data

Talend Data Integration

Conclusion

Database migration tools are essential for businesses looking to upgrade, consolidate, or move their databases to new environments. They provide the necessary functionalities to ensure a smooth, efficient, and secure migration process, enabling organizations to leverage new technologies and infrastructure with minimal disruption.

0 notes


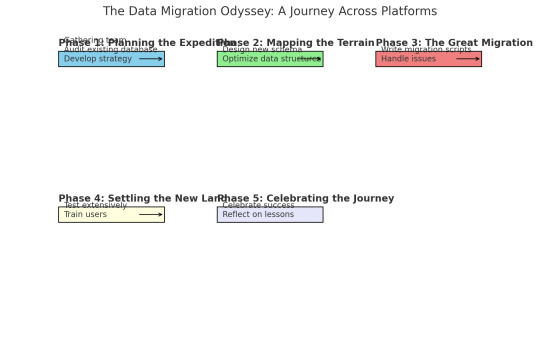

The Data Migration Odyssey: A Journey Across Platforms

As a database engineer, I thought I'd seen it all—until our company decided to migrate our entire database system to a new platform. What followed was an epic adventure filled with unexpected challenges, learning experiences, and a dash of heroism.

It all started on a typical Monday morning when my boss, the same stern woman with a flair for the dramatic, called me into her office. "Rookie," she began (despite my years of experience, the nickname had stuck), "we're moving to a new database platform. I need you to lead the migration."

I blinked. Migrating a database wasn't just about copying data from one place to another; it was like moving an entire city across the ocean. But I was ready for the challenge.

Phase 1: Planning the Expedition

First, I gathered my team and we started planning. We needed to understand the differences between the old and new systems, identify potential pitfalls, and develop a detailed migration strategy. It was like preparing for an expedition into uncharted territory.

We started by conducting a thorough audit of our existing database. This involved cataloging all tables, relationships, stored procedures, and triggers. We also reviewed performance metrics to identify any existing bottlenecks that could be addressed during the migration.

Phase 2: Mapping the Terrain

Next, we designed the new database schema using the online schema builder from dynobird. This was more than a simple translation; we took the opportunity to optimize our data structures and improve performance. It was like drafting a new map for our city, making sure every street and building was perfectly placed.

For example, our old database had a massive "orders" table that was a frequent source of slow queries. In the new schema, we split this table into more manageable segments, each optimized for specific types of queries.

Phase 3: The Great Migration

With our map in hand, it was time to start the migration. We wrote scripts to transfer data in batches, ensuring that we could monitor progress and handle any issues that arose. This step felt like loading up our ships and setting sail.
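The post does not show its scripts, so the following is only a sketch of what a batched transfer can look like. It assumes SQLite on both sides, a hypothetical `orders` table with a positive integer primary key, and a hypothetical `migrate_in_batches` helper.

```python
import sqlite3


def migrate_in_batches(source, target, batch_size=2):
    """Copy rows from source.orders to target.orders in key-ordered batches."""
    last_id = 0  # assumes ids are positive integers
    copied = 0
    while True:
        rows = source.execute(
            "SELECT id, customer, total FROM orders "
            "WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        # Each batch is its own transaction: committed on success,
        # rolled back if the insert raises.
        with target:
            target.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        last_id = rows[-1][0]
        copied += len(rows)
        print(f"copied {copied} rows (up to id {last_id})")
    return copied


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
    )
with source:
    source.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(i, f"customer-{i}", 10.0 * i) for i in range(1, 6)],
    )

total_copied = migrate_in_batches(source, target, batch_size=2)
target_count = target.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

Paging by the last seen key rather than by offset keeps each batch query cheap, and the progress line per batch is what makes the run easy to monitor and resume.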

Of course, no epic journey is without its storms. We encountered data inconsistencies, unexpected compatibility issues, and performance hiccups. One particularly memorable moment was when we discovered a legacy system that had been quietly duplicating records for years. Fixing that felt like battling a sea monster, but we prevailed.
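How the duplicated records were found is not described, so this is just one common approach, sketched with SQLite. The `customers` table and the choice of `email` as the natural key are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, name TEXT)"
)
conn.executemany(
    "INSERT INTO customers (email, name) VALUES (?, ?)",
    [
        ("ada@example.com", "Ada"),
        ("grace@example.com", "Grace"),
        ("ada@example.com", "Ada"),  # the quietly duplicated record
    ],
)


def find_duplicates(conn):
    """List each email that appears more than once, with its count."""
    return conn.execute(
        "SELECT email, COUNT(*) FROM customers "
        "GROUP BY email HAVING COUNT(*) > 1 ORDER BY email"
    ).fetchall()


def remove_duplicates(conn):
    """Keep the lowest id per email and delete the rest; return rows deleted."""
    with conn:
        cursor = conn.execute(
            "DELETE FROM customers WHERE id NOT IN "
            "(SELECT MIN(id) FROM customers GROUP BY email)"
        )
    return cursor.rowcount


duplicates_before = find_duplicates(conn)
removed = remove_duplicates(conn)
duplicates_after = find_duplicates(conn)
```

Running the detection query before and after the cleanup gives a simple check that the fix actually took.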

Phase 4: Settling the New Land

Once the data was successfully transferred, we focused on testing. We ran extensive queries, stress tests, and performance benchmarks to ensure everything was running smoothly. This was our version of exploring the new land and making sure it was fit for habitation.

We also trained our users on the new system, helping them adapt to the changes and take full advantage of the new features. Seeing their excitement and relief was like watching settlers build their new homes.

Phase 5: Celebrating the Journey

After weeks of hard work, the migration was complete. The new database was faster, more reliable, and easier to maintain. My boss, who had been closely following our progress, finally cracked a smile. "Excellent job, rookie," she said. "You've done it again."

To celebrate, she took the team out for a well-deserved dinner. As we clinked our glasses, I felt a deep sense of accomplishment. We had navigated a complex migration, overcome countless challenges, and emerged victorious.

Lessons Learned

Looking back, I realized that successful data migration requires careful planning, a deep understanding of both the old and new systems, and a willingness to tackle unexpected challenges head-on. It's a journey that tests your skills and resilience, but the rewards are well worth it.

So, if you ever find yourself leading a database migration, remember: plan meticulously, adapt to the challenges, and trust in your team's expertise. And don't forget to celebrate your successes along the way. You've earned it!

6 notes


Migrating Off Evernote

Evernote, a web-based notes app, recently introduced super-restrictive controls on free accounts, after laying off a number of staff and introducing AI features, all of which is causing a lot of people to migrate off the platform. I haven't extensively researched alternative sites, so I can't offer a full resource there (readers, feel free to drop your alternative sites in notes or reblogs), but because I have access to OneNote both in my professional and personal life, I decided to migrate my Evernote there.

I use them for very different things -- Evernote I use exclusively as a personal fanfic archive, because it stores fics I want to save privately both as full-text files and as links. OneNote I have traditionally used for professional purposes, mainly for taking meeting notes and storing information I need (excel formulas, how-tos for things I don't do often in our database, etc). But while Evernote had some nicer features it was essentially a OneNote clone, and OneNote has a webclipper, so I've created an account with OneNote specifically to store my old Evernote archive and any incoming fanfic I want to archive in future.

Microsoft discontinued the tool that it offered for migrating Evernote to OneNote directly, but research turned up a reliable and so-far trustworthy independent tool that I wanted to share. You export all your Evernote notebooks as ENEX files, then download the tool and unzip it, open the exe file, and import the ENEX one by one on a computer where you already have the desktop version of OneNote installed. I had no problem with the process, although some folks with older systems might.

I suspect I might need to do some cleanup post-import but some of that is down to how Evernote fucked around with tags a while ago, and so far looking through my notes it appears to have imported formatting, links, art, and other various aspects of each clipped note without a problem. I also suspect that Evernote will not eternally allow free users to export their notebooks so if nothing else I'd back up your notebooks to ENEX or HTML files sooner rather than later.

I know the number of people who were using Free Evernote and have access to OneNote is probably pretty small, but if I found it useful I thought others might too.

433 notes


SysNotes devlog 1

Hiya! We're a web developer by trade and we wanted to build ourselves a web-app to manage our system and to get to know each other better. We thought it would be fun to make a sort of a devlog on this blog to show off the development! The working title of this project is SysNotes (but better ideas are welcome!)

What SysNotes is✅:

A place to store profiles of all of our parts

A tool to figure out who is in front

A way to explore our inner world

A private chat similar to PluralKit

A way to combine info about our system with info about our OCs etc as an all-encompassing "brain-world" management system

A personal and tailor-made tool made for our needs

What SysNotes is not❌:

A fronting tracker (we see no need for it in our system)

A social media where users can interact (but we're open to make it so if people are interested)

A public platform that can be used by others (we don't have much experience actually hosting web-apps, but will consider it if there is enough interest!)

An offline app

So if this sounds interesting to you, you can find the first devlog below the cut (it's a long one!):

(I have used word highlighting and emojis as it helps me read large chunks of text, I hope it's alright with y'all!)

Tech stack & setup (feel free to skip if you don't care!)

The project is set up using:

Database: MySQL 8.4.3

Language: PHP 8.3

Framework: Laravel 10 with Breeze (authentication and user accounts) and Livewire 3 (front end integration)

Styling: Tailwind v4

I tried to set up Laragon to easily run the backend, but I ran into issues so I'm just running "php artisan serve" for now and using Laragon to run the DB. Also I'm compiling styles in real time with "npm run dev". Speaking of the DB, I just migrated the default auth tables for now. I will be making app-related DB tables in the next devlog. The awesome thing about Laravel is its Breeze starter kit, which gives you fully functioning authentication and basic account management out of the box, as well as optional Livewire to integrate server-side processing into HTML in the sexiest way. This means that I could get all the boring stuff out of the way with one terminal command. Win!

Styling and layout (for the UI nerds - you can skip this too!)

I changed the default accent color from purple to orange (personal preference) and used an emoji as a placeholder for the logo. I actually kinda like the emoji AS a logo so I might keep it.

Laravel Breeze came with a basic dashboard page, which I expanded with a few containers for the different sections of the page. I made use of the components that come with Breeze to reuse code for buttons etc throughout the code, and made new components as the need arose. Man, I love clean code 😌

I liked the dotted default Laravel page background, so I added it to the dashboard to create the look of a bullet journal. I like the journal-type visuals for this project as it goes with the theme of a notebook/file. I found the code for it here.

I also added some placeholder menu items for the pages that I would like to have in the app - Profile, (Inner) World, Front Decider, and Chat.

I ran into an issue dynamically building Tailwind classes such as class="bg-{{$activeStatus['color']}}-400" - turns out dynamically-created classes aren't supported, even if they're constructed in the component rather than the blade file. You learn something new every day huh…
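A common way around this is to map each status to a complete class name, so that every class appears in full somewhere Tailwind scans. The sketch below is in Python purely for illustration; in this project the same map would presumably be a PHP array in the Livewire component. The colour choices and the `status_class` helper are hypothetical.

```python
# Every class name is written out in full, so a scanner that looks for
# complete class strings in the source can find each one.
STATUS_CLASSES = {
    "active": "bg-green-400",
    "dormant": "bg-gray-400",
    "unknown": "bg-yellow-400",
}
DEFAULT_CLASS = "bg-gray-400"


def status_class(status):
    """Return the full background class for a status, with a safe fallback."""
    return STATUS_CLASSES.get(status, DEFAULT_CLASS)


print(status_class("active"))   # bg-green-400
print(status_class("retired"))  # bg-gray-400 (fallback for unlisted statuses)
```

This only works if the file holding the map is among the files Tailwind scans for class names.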

Also, coming from Tailwind v3, "ps-*" and "pe-*" were confusing to get used to since my muscle memory is "pl-*" and "pr-*" 😂

Feature 1: Profiles page - proof of concept

This is a page where each alter's profiles will be displayed. You can switch between the profiles by clicking on each person's name. The current profile is highlighted in the list using a pale orange colour.

The logic for the profiles functionality uses a Livewire component called Profiles, which loads profile data and passes it into the blade view to be displayed. It also handles logic such as switching between the profiles and formatting data. Currently, the data is hardcoded into the component using an associative array, but I will be converting it to use the database in the next devlog.

New profile (TBC)

You will be able to create new profiles on the same page (this is yet to be implemented). My vision is that the New Alter form will unfold under the button, and fold back up again once the form has been submitted.

Alter name, pronouns, status

The most interesting component here is the status, which is currently set to a hardcoded list of "active", "dormant", and "unknown". However, I envision this to be a customisable list where I can add new statuses to the list from a settings menu (yet to be implemented).

Alter image

I wanted the folder that contained alter images and other assets to be outside of my Laravel project, in the Pictures folder of my operating system. I wanted to do this so that I can back up the assets folder whenever I back up my Pictures folder lol (not for adding/deleting the files - this all happens through the app to maintain data integrity!). However, I learned that Laravel does not support that and will not be able to see my files because they are external. I found a workaround by using symbolic links (symlinks) 🔗. Basically, they allow you to have one folder's contents show up in more than one place. I ran "mklink /D [external path] [internal path]" to create the symlink between my Pictures folder and Laravel's internal assets folder, so that any files that I add to my Pictures folder automatically show up in Laravel's folder too. I changed a couple lines in filesystems.php to point to the symlinked folder:

And I was also getting a "404 file not found" error - I think the issue was because the port wasn't originally specified. I changed the base app URL to the localhost IP address in .env:

…And after all this messing around, it works!

(My Pictures folder)

(My Laravel storage)

(And here is Alice's photo displayed - dw I DO know Ibuki's actual name)
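To show what a directory symlink does, here is a small self-contained sketch using temporary folders. It assumes the real folder is the one under Pictures and the link lives on the app side; the folder and file names are made up, and on Windows creating symlinks may need Developer Mode or an elevated prompt.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    pictures = os.path.join(root, "Pictures", "sysnotes-assets")  # real folder
    storage_link = os.path.join(root, "app-storage-assets")       # symlink
    os.makedirs(pictures)

    # First argument is what the link points at, second is the link itself.
    os.symlink(pictures, storage_link, target_is_directory=True)

    # Write a file into the real folder only.
    with open(os.path.join(pictures, "alice.png"), "wb") as handle:
        handle.write(b"placeholder")

    # The same file is visible through the link, with nothing copied.
    visible_via_link = os.listdir(storage_link)
    is_link = os.path.islink(storage_link)
    same_file = os.path.samefile(
        os.path.join(pictures, "alice.png"),
        os.path.join(storage_link, "alice.png"),
    )

print(visible_via_link, is_link, same_file)  # ['alice.png'] True True
```

Because both paths resolve to the same file on disk, there is only one copy to back up and nothing to keep in sync.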

Alter description and history

The description and history fields support HTML, so I can format these fields however I like, and add custom features like tables and bullet point lists.

This is done by using blade's HTML preservation tags "{!! !!}" as opposed to the plain text tags "{{ }}".

(Here I define Alice's description contents)

(And here I insert them into the template)
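The difference between the two tag styles comes down to escaping: Blade's "{{ }}" passes the value through PHP's htmlspecialchars before output, while "{!! !!}" prints it untouched. The sketch below mimics that with Python's `html.escape`, which is a stand-in rather than the exact PHP function; the sample description is made up.

```python
import html

description = "<b>Alice</b> likes <i>tea</i> & cake"

# Roughly what the plain text tags produce: markup shown as literal characters.
escaped = html.escape(description)

# What the HTML preservation tags produce: the string exactly as stored.
raw = description

print(escaped)  # &lt;b&gt;Alice&lt;/b&gt; likes &lt;i&gt;tea&lt;/i&gt; &amp; cake
print(raw)      # <b>Alice</b> likes <i>tea</i> & cake
```

Raw output is fine for fields only the app's owner writes, as here. If other people could ever edit these fields, unescaped output would let them inject scripts, so the stored HTML would need sanitising first.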

Traits, likes, dislikes, front triggers

These are saved as separate lists and rendered as fun badges. These will be used in the Front Decider (anyone has a better name for it?? 🤔) tool to help me identify which alter "I" am as it's a big struggle for us. Front Decider will work similar to FlowCharty.

What next?

There's lots more things I want to do with SysNotes! But I will take it one step at a time - here is the plan for the next devlog:

Setting up database tables for the profile data

Adding the "New Profile" form so I can create alters from within the app

Adding ability to edit each field on the profile

I tried my best to explain my work process in a way that would somewhat make sense to non-coders - if you have any feedback for the future format of these devlogs, let me know!

~~~~~~~~~~~~~~~~~~

Disclaimers:

I have not used AI in the making of this app and I do NOT support the Vibe Coding mind virus that is currently on the loose. Programming is a form of art, and I will defend manual coding until the day I die.

Any alter data found in the screenshots is dummy data that does not represent our actual system.

I will not be making the code publicly available until it is a bit more fleshed out, this so far is just a trial for a concept I had bouncing around my head over the weekend.

We are SYSCOURSE NEUTRAL! Please don't start fights under this post

#sysnotes devlog#plurality#plural system#did#osdd#programming#whoever is fronting is typing like a millenial i am so sorry#also when i say “i” its because i'm not sure who fronted this entire time!#our syskid came up with the idea but i can't feel them so who knows who actually coded it#this is why we need the front decider tool lol

14 notes


Unit 5- Citizen Scientists and Creativity

Science is often viewed as the domain of experts—locked away in research labs, academic journals, and institutions. However, the rise of citizen science is proving that anyone with curiosity and a willingness to learn can contribute meaningfully to scientific discovery. Making science accessible empowers communities, enriches our understanding of the natural world, and fosters a deeper appreciation for conservation efforts.

A fantastic example of this is Washington Wachira, a wildlife ecologist, nature photographer, and safari guide whose passion for birds has inspired many. In his TED Talk, Wachira highlights the importance of bird databases in Africa and how technology is bridging the gap between scientists and the public. His work showcases how everyday people, equipped with nothing more than a smartphone, can contribute valuable data to ornithological research.

For many, computers are a luxury. However, as smartphones become increasingly common, apps are providing a more accessible way to engage with science. Whether it’s using apps like eBird or iNaturalist to document bird sightings, or participating in conservation programs through mobile platforms, technology is revolutionizing how people interact with the natural world. This accessibility ensures that more voices, especially from underrepresented regions, are included in global scientific discussions.

Wachira’s enthusiasm for birds is infectious, bringing to mind one of the first nature documentaries I ever watched—Netflix's Dancing with the Birds (2019). This film masterfully captures some of the world’s most extraordinary birds—particularly the birds of paradise—and showcases their mesmerizing mating rituals. The stunning visuals of these creatures in New Guinea’s untouched forests make a compelling case for preserving such fragile ecosystems. To me, this documentary represents a beautiful intersection of science and art—two powerful lenses through which we can interpret and appreciate nature. This fusion not only bridges the gap between scientific inquiry and artistic expression but also strengthens outreach, engaging both the scientific and creative communities in a more profound way.

The importance of citizen science in environmental education is well-documented. In their article Evaluating Environmental Education, Citizen Science, and Stewardship through Naturalist Programs, Merenlender and colleagues explore how hands-on participation in science fosters environmental stewardship. The study emphasizes that engaging people in nature-based learning experiences leads to stronger conservation efforts and a deeper connection with the environment. When individuals actively contribute to scientific research—whether by tracking bird migrations, monitoring water quality, or identifying plant species—they develop a personal stake in environmental issues.

Citizen science breaks down barriers to knowledge. It transforms people from passive consumers of information into active participants in discovery. By increasing access to scientific tools and data, we empower communities to take an active role in conservation. Washington Wachira’s work, the stunning imagery of Dancing With The Birds, and the research on environmental education all point to one truth: science belongs to everyone. The more accessible we make it, the more we all benefit—from individual learners to entire ecosystems.

This male Red-Capped Manakin snaps its wings and performs the lively "moonwalk" on a branch to attract a female's attention. This is one of many birds highlighted in Netflix's documentary Dancing with the Birds. I highly recommend it!

5 notes


AI Curated Black History Month Study Guide

As it is the start of Black History Month, it felt appropriate to start with a study guide to help base the rest of the month's posts on. Honestly, I'm guessing I am going to be posting well beyond just this month. Either way, I wanted to create a resource guide curated from across all the AI platforms. I'm also going to create the same resource with resources appropriate for a middle schooler - for my daughter AND I'm going to create a custom chat interface which has this knowledge from across all these AI platforms. One of the ideas I'm most curious for people to interact with is a trick that AI prompters often use to get the right prompts - I asked AI to give me a list of prompts that anyone can use to explore African American History on any platform. Here are the top ten prompts ChatGPT gave me. You can see the prompts I got in the full document here. Happy Black History Month!!

Absolutely! Given your focus on community education and AI, these prompts are designed to encourage independent research while also supporting your work in bridging the gap between communities, organizations, and AI:

"How did African American communities shape environmental justice movements, and what historical examples demonstrate their leadership?" (This aligns with your work in AI and environmental justice—exploring historical roots can provide a foundation for future AI-driven initiatives.)

"What were the major economic contributions of Black-owned businesses during Reconstruction, the Civil Rights era, and today?" (Could be useful when discussing MWBE businesses and their historical impact.)

"How did historically Black colleges and universities (HBCUs) contribute to African American political and social leadership?"

"What role did African American women play in grassroots activism, and how did their contributions shape national movements?"

"What was the impact of Black Wall Street and similar economic hubs on African American wealth-building efforts?" (Ties into modern discussions about economic justice and financial equity.)

"How did African American scientists and engineers contribute to technological advancements throughout U.S. history?" (Could be an interesting connection to AI education and Black contributions to STEM fields.)

"What is the historical significance of the Great Migration, and how did it shape the cultural and political landscapes of Northern cities?"

"What were the key policies and legal battles that shaped African Americans' fight for voting rights?" (Relevant for understanding policy-making and community advocacy today.)

"How did African American music genres, such as jazz, blues, and hip-hop, influence global culture?"

"What AI-powered tools or databases exist to help explore and analyze African American historical archives?" (Bridges AI and history—could be a way to introduce tech into historical research discussions.)

These prompts will help guide deep, independent exploration into African American history, while also connecting to your broader mission of community education and AI integration. Let me know if you'd like tailored questions for specific audiences or workshops!

[I actually forgot Gemini responses but I will add them tomorrow along with the middle school version of the whole study guide. I should have time to come up with the custom chat interface tomorrow too!!]

4 notes


Vibecoding a production app

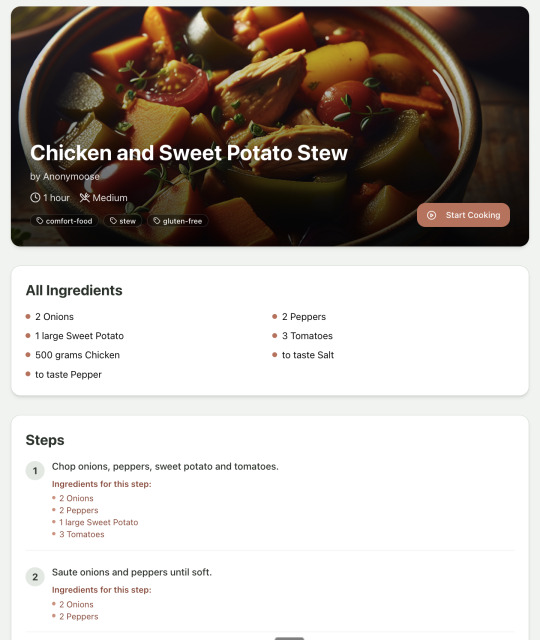

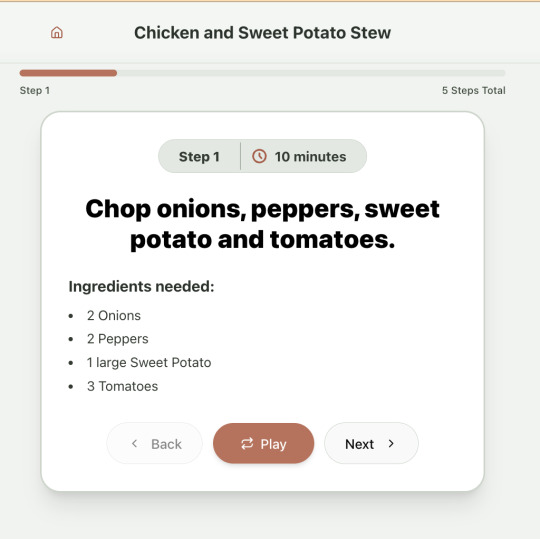

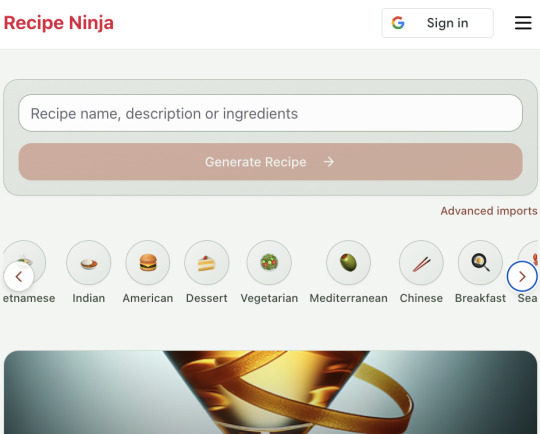

TL;DR I built and launched a recipe app with about 20 hours of work - recipeninja.ai

Background: I'm a startup founder turned investor. I taught myself (bad) PHP in 2000, and picked up Ruby on Rails in 2011. I'd guess 2015 was the last time I wrote a line of Ruby professionally. I've built small side projects over the years, but nothing with any significant usage. So it's fair to say I'm a little rusty, and I never really bothered to learn front end code or design.

In my day job at Y Combinator, I'm around founders who are building amazing stuff with AI every day and I kept hearing about the advances in tools like Lovable, Cursor and Windsurf. I love building stuff and I've always got a list of little apps I want to build if I had more free time.

About a month ago, I started playing with Lovable to build a word game based on Articulate (it's similar to Heads Up or Taboo). I got a working version, but I quickly ran into limitations - I found it very complicated to add a Supabase backend, and it kept re-writing large parts of my app logic when I only wanted to make cosmetic changes. It felt like a toy - not ready to build real applications yet.

But I kept hearing great things about tools like Windsurf. A couple of weeks ago, I looked again at my list of app ideas to build and saw "Recipe App". I've wanted to build a hands-free recipe app for years. I love to cook, but the problem with most recipe websites is that they're optimized for SEO, not for humans. So you have pages and pages of descriptive crap to scroll through before you actually get to the recipe. I've used the recipe app Paprika to store my recipes in one place, but honestly it feels like it was built in 2009. The UI isn't great for actually cooking. My hands are covered in food and I don't really want to touch my phone or computer when I'm following a recipe.

So I set out to build what would become RecipeNinja.ai

For this project, I decided to use Windsurf. I wanted a Rails 8 API backend and React front-end app and Windsurf set this up for me in no time. Setting up Homebrew on a new laptop, installing npm and making sure I'm on the right version of Ruby is always a pain. Windsurf did this for me step-by-step. I needed to set up SSH keys so I could push to GitHub and Heroku. Windsurf did this for me as well, in about 20% of the time it would have taken me to Google all of the relevant commands.

I was impressed that it started using the Rails conventions straight out of the box. For database migrations, it used the Rails command-line tool, which then generated the correct file names and used all the correct Rails conventions. I didn't prompt this specifically - it just knew how to do it. It one-shotted pretty complex changes across the React front end and Rails backend to work seamlessly together.

To start with, the main piece of functionality was to generate a complete step-by-step recipe from a simple input ("Lasagne"), generate an image of the finished dish, and then allow the user to progress through the recipe step-by-step with voice narration of each step. I used OpenAI for the LLM and ElevenLabs for voice. "Grandpa Spuds Oxley" gave it a friendly southern accent.

Recipe summary:

And the recipe step-by-step view:

I was pretty astonished that Windsurf managed to integrate both the OpenAI and ElevenLabs APIs without me doing very much at all. After we had a couple of problems with the OpenAI Ruby library, it quickly fell back to a raw Ruby HTTP client implementation, but I honestly didn't care. As long as it worked, I didn't really mind if it used 20 lines of code or two lines of code. And Windsurf was pretty good about enforcing reasonable security practices. I wanted to call ElevenLabs directly from the front end while I was still prototyping stuff, and Windsurf objected very strongly, telling me that I was risking exposing my private API credentials to the Internet. I promised I'd fix it before I deployed to production and it finally acquiesced.

I decided I wanted to add "Advanced Import" functionality where you could take a picture of a recipe (this could be a handwritten note or a picture from a favourite recipe book) and RecipeNinja would import the recipe. This took a handful of minutes.

Pretty quickly, a pattern emerged; I would prompt for a feature. It would read relevant files and make changes for two or three minutes, and then I would test the backend and front end together. I could quickly see from the JavaScript console or the Rails logs if there was an error, and I would just copy paste this error straight back into Windsurf with little or no explanation. 80% of the time, Windsurf would correct the mistake and the site would work. Pretty quickly, I didn't even look at the code it generated at all. I just accepted all changes and then checked if it worked in the front end.

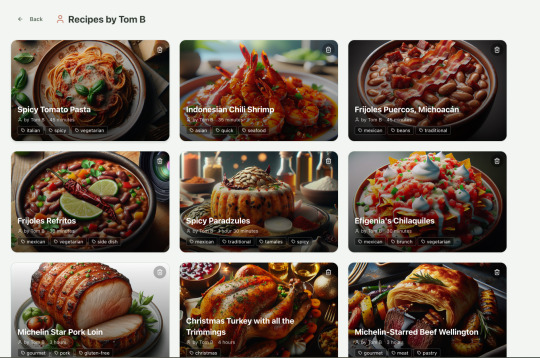

After a couple of hours of work on the recipe generation, I decided to add the concept of "Users" and include Google Auth as a login option. This would require extensive changes across the front end and backend - a database migration, a new model, new controller and entirely new UI. Windsurf one-shotted the code. It didn't actually work straight away because I had to configure Google Auth to add `localhost` as a valid origin domain, but Windsurf talked me through the changes I needed to make on the Google Auth website. I took a screenshot of the Google Auth config page and pasted it back into Windsurf and it caught an error I had made. I could log in to my app immediately after I made this config change. Pretty mindblowing. You can now see who's created each recipe, keep a list of your own recipes, and toggle each recipe to public or private visibility. When I needed to set up Heroku to host my app online, Windsurf generated a bunch of terminal commands to configure my Heroku apps correctly. It went slightly off track at one point because it was using old Heroku APIs, so I pointed it to the Heroku docs page and it fixed it up correctly.

I always dreaded adding custom domains to my projects - I hate dealing with Registrars and configuring DNS to point at the right nameservers. But Windsurf told me how to configure my GoDaddy domain name DNS to work with Heroku, telling me exactly what buttons to press and what values to paste into the DNS config page. I pointed it at the Heroku docs again and Windsurf used the Heroku command line tool to add the "Custom Domain" add-ons I needed and fetch the right Heroku nameservers. I took a screenshot of the GoDaddy DNS settings and it confirmed it was right.

I can see that very soon tools like Cursor & Windsurf will integrate something like Browser Use so that an AI agent will do all this browser-based configuration work with zero user input.

I'm also impressed that Windsurf will sometimes start up a Rails server and use curl commands to check that an API is working correctly, or start my React project and load up a web preview and check the front end works. This functionality didn't always seem to work consistently, and so I fell back to testing it manually myself most of the time.

When I was happy with the code, it wrote git commits for me and pushed code to Heroku from the in-built command line terminal. Pretty cool!

I do have a few niggles still. Sometimes it's a little over-eager - it will make more changes than I want, without checking with me that I'm happy or the code works. For example, it might try to commit code and deploy to production, and I need to press "Stop" and actually test the app myself. When I asked it to add analytics, it went overboard and added 100 different analytics events in pretty insignificant places. When it got trigger-happy like this, I reverted the changes and gave it more precise commands to follow one by one.

The one thing I haven't got working yet is automated testing that's executed by the agent before it decides a task is complete; there's probably a way to do it with custom rules (I have spent zero time investigating this). It feels like I should be able to have an integration test suite that is run automatically after every code change, and then any test failures should be rectified automatically by the AI before it says it's finished.

Also, the AI should be able to tail my Rails logs to look for errors. It should spot things like database queries and automatically optimize my Active Record queries to make my app perform better. At the moment I'm copy-pasting in excerpts of the Rails logs, and then Windsurf quickly figures out that I've got an N+1 query problem and fixes it. Pretty cool.
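For readers who haven't met the N+1 problem, here is a minimal sketch of it in Python using an in-memory SQLite database. It is not the app's code: the table names, columns and sample rows are assumptions made up for illustration. In Rails, the usual fix is eager loading with `includes`; the JOIN below is just the plain-SQL equivalent of the same idea.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE recipes (id INTEGER PRIMARY KEY, title TEXT, user_id INTEGER);
    INSERT INTO users VALUES (1, 'ana'), (2, 'ben');
    INSERT INTO recipes VALUES (1, 'Lasagna', 1), (2, 'Pad Thai', 2), (3, 'Tacos', 1);
""")


def authors_n_plus_one(conn):
    """One query for the recipes, then one extra query per recipe (N+1)."""
    queries = 0
    rows = conn.execute("SELECT title, user_id FROM recipes ORDER BY id").fetchall()
    queries += 1
    result = []
    for title, user_id in rows:
        name = conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()[0]
        queries += 1
        result.append((title, name))
    return result, queries


def authors_joined(conn):
    """The same data fetched with a single JOIN query."""
    rows = conn.execute(
        "SELECT r.title, u.name FROM recipes r "
        "JOIN users u ON u.id = r.user_id ORDER BY r.id"
    ).fetchall()
    return rows, 1
```

Both functions return the same rows; the first issues one query per recipe on top of the initial one, which is what shows up as a wall of near-identical SELECTs in the logs.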

Refactoring is also kind of painful. I've ended up with several files that are 700-900 lines long and contain duplicate functionality. For example, list recipes by tag and list recipes by user are basically the same.

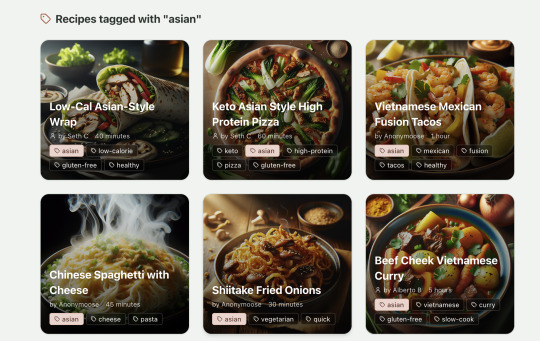

Recipes by user:

This should really be identical to list recipes by tag, but Windsurf has implemented them separately.

Recipes by tag:

If I ask Windsurf to refactor these two pages, it randomly changes stuff like renaming analytics events, rewriting user-facing alerts, and changing random little UX stuff, when I really want to keep the functionality exactly the same and only move duplicate code into shared modules. Instead, to successfully refactor, I had to ask Windsurf to list out ideas for refactoring, then prompt it specifically to refactor these things one by one, touching nothing else. That worked a little better, but it still wasn't perfect.

Sometimes, adding minor functionality to the Rails API will change the entire API response, rather than just adding a couple of fields. E.g., it will occasionally change Index Recipes to nest responses in an object `{ "recipes": [ ] }`, versus just returning an array, which breaks the frontend. And then another minor change will revert it. This is where adding tests to identify and prevent these kinds of API changes would be really useful. When I ask Windsurf to fix these API changes, it will instead change the front end to accept the new API JSON format and also leave the old implementation in for "backwards compatibility". This ends up with a tangled mess of code that isn't really necessary. But I'm vibecoding so I didn't bother to fix it.
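The kind of test that would catch this can be tiny. Below is a rough sketch in Python of a response-shape check; the function name and the `title` field are assumptions for illustration, and a real Rails project would more likely express this as a request spec.

```python
import json


def assert_recipe_index_shape(body: str) -> list:
    """Fail loudly if the index response is not a bare JSON array of recipes."""
    data = json.loads(body)
    if not isinstance(data, list):
        raise AssertionError(
            f"expected a JSON array, got {type(data).__name__}"
        )
    for item in data:
        # 'title' is an assumed required field, used here as an example.
        if not isinstance(item, dict) or "title" not in item:
            raise AssertionError("each recipe must be an object with a 'title'")
    return data
```

Run against a captured response after every change, a check like this would flag the moment the array gets wrapped in `{ "recipes": [ ] }`.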

Then there were some changes that just didn't work at all. Trying to implement PostHog analytics in the front end seemed to break my entire app multiple times. I tried to add user voice commands ("Go to the next step"), but this conflicted with the ElevenLabs voice recordings. Having really good git discipline makes vibe coding much easier and less stressful. If something doesn't work after 10 minutes, I can just run `git reset --hard HEAD`. I've not lost very much time, and it frees me up to try more ambitious prompts to see what the AI can do. Less technical users who aren't familiar with git have lost months of work when the AI goes off on a vision quest and the inbuilt revert functionality doesn't work properly. It seems like adding more native support for version control could be a massive win for these AI coding tools.

Another complaint I've heard is that the AI coding tools don't write "production" code that can scale. So I decided to put this to the test by asking Windsurf for some tips on how to make the application more performant. It identified I was downloading 3 MB image files for each recipe, and suggested a Rails feature for adding lower resolution image variants automatically. Two minutes later, I had thumbnail and midsize variants that decrease the loading time of each page by 80%. Similarly, it identified inefficient N+1 active record queries and rewrote them to be more efficient. There are a ton more performance features that come built into Rails - caching would be the next thing I'd probably add if usage really ballooned.

Before going to production, I kept my promise to move my ElevenLabs API keys to the backend. Almost as an afterthought, I asked Windsurf to cache the voice responses so that I'd only make an ElevenLabs API call once for each recipe step; after that, the audio file was stored in S3 using Rails ActiveStorage and served without costing me more credits. Two minutes later, it was done. Awesome.
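The caching idea is simple enough to sketch. This Python version is an illustration only, not what Windsurf generated: a dictionary stands in for S3, the `synthesize` callable stands in for the text-to-speech API call, and every name here is hypothetical.

```python
from typing import Callable, Dict, Tuple


class VoiceCache:
    """Call the (paid) text-to-speech function at most once per recipe step."""

    def __init__(self, synthesize: Callable[[str, str], bytes]) -> None:
        self._synthesize = synthesize  # stand-in for the real API client
        self._store: Dict[Tuple[str, int, str], bytes] = {}  # stand-in for S3

    def get_audio(
        self,
        recipe_id: str,
        step_id: int,
        voice_id: str,
        text: str,
        force: bool = False,
    ) -> bytes:
        key = (recipe_id, step_id, voice_id)
        # Only pay for synthesis on a cache miss or an explicit refresh.
        if force or key not in self._store:
            self._store[key] = self._synthesize(text, voice_id)
        return self._store[key]
```

Keying on recipe, step and voice means a second listener on the same step costs nothing, while the `force` flag leaves a way to regenerate a recording.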

At the end of a vibecoding session, I'd write a list of 10 or 15 new ideas for functionality that I wanted to add the next time I came back to the project. In the past, these lists would've built up over time and never gotten done. Each task might've taken me five minutes to an hour to complete manually. With Windsurf, I was astonished how quickly I could work through these lists. Changes took one or two minutes each, and within 30 minutes I'd completed my entire to do list from the day before. It was astonishing how productive I felt. I can create the features faster than I can come up with ideas.

Before launching, I wanted to improve the design, so I took a quick look at a couple of recipe sites. They were much more visual than my site, and so I simply told Windsurf to make my design more visual, emphasizing photos of food. Its first try was great. I showed it to a couple of friends and they suggested I should add recipe categories - "Thai" or "Mexican" or "Pizza" for example. They showed me the DoorDash app, so I took a screenshot of it and pasted it into Windsurf. My prompt was "Give me a carousel of food icons that look like this". Again, this worked in one shot. I think my version actually looks better than DoorDash 🤷‍♂️

Doordash:

My carousel:

I also saw I was getting a console error from a missing favicon. I always struggled to make favicons for previous sites because I could never figure out where they were supposed to go or what file format they needed. I got OpenAI to generate me a little recipe ninja icon with a transparent background and I saved it into my project directory. I asked Windsurf what file format I needed and it listed out nine different sizes and file formats. Seems annoying. I wondered if Windsurf could just do it all for me. It quickly wrote a series of Bash commands to create a temporary folder, resize the image and create the nine variants I needed. It put them into the right directory and then cleaned up the temporary directory. I laughed in amazement. I've never been good at bash scripting and I didn't know if it was even possible to do what I was asking via the command line. I guess it is possible.

After launching and posting on Twitter, a few hundred users visited the site and generated about 1000 recipes. I was pretty happy! Unfortunately, the next day I woke up and saw that I had a $700 OpenAI bill. Someone had been abusing the site and costing me a lot of OpenAI credits by creating a single recipe over and over again - "Pasta with Shallots and Pineapple". They did this 12,000 times. Obviously, I had not put any rate limiting in.

Still, I was determined not to write any code. I explained the problem and asked Windsurf to come up with solutions. Seconds later, I had 15 pretty good suggestions. I implemented several (but not all) of the ideas in about 10 minutes and the abuse stopped dead in its tracks. I won't tell you which ones I chose in case Mr Shallots and Pineapple is reading. The app's security is not perfect, but I'm pretty happy with it for the scale I'm at. If I continue to grow and get more abuse, I'll implement more robust measures.
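For anyone who hasn't built one, here is what a basic rate limiter can look like. This is a generic sliding-window sketch in Python and not necessarily one of the measures used on the site; the class name, the limits and the idea of keying on a client identifier are all assumptions for illustration.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client key -> timestamps of recent hits

    def allow(self, client_key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        hits = self._hits[client_key]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

A check like `limiter.allow(user_id)` in front of the recipe generation call would have capped the damage from one client repeating the same request thousands of times.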

Overall, I am astonished how productive Windsurf has made me in the last two weeks. I'm not a good designer or frontend developer, and I'm a very rusty rails dev. I got this project into production 5 to 10 times faster than it would've taken me manually, and the level of polish on the front end is much higher than I could've achieved on my own. Over and over again, I would ask for a change and be astonished at the speed and quality with which Windsurf implemented it. I just sat laughing as the computer wrote code.

The next thing I want to change is making the recipe generation process much more immediate and responsive. Right now, it takes about 20 seconds to generate a recipe and for a new user it feels like maybe the app just isn't doing anything.

Instead, I'm experimenting with using Websockets to show a streaming response as the recipe is created. This gives the user immediate feedback that something is happening. It would also make editing the recipe really fun - you could ask it to "add nuts" to the recipe, and see as the recipe dynamically updates 2-3 seconds later. You could also say "Increase the quantities to cook for 8 people" or "Change from imperial to metric measurements".

I have a basic implementation working, but there are still some rough edges. I might actually go and read the code this time to figure out what it's doing!

I also want to add a full voice agent interface so that you don't have to touch the screen at all. Halfway through cooking a recipe, you might ask "I don't have cilantro - what could I use instead?" or say "Set a timer for 30 minutes". That would be my dream recipe app!

Tools like Windsurf or Cursor aren't yet as useful for non-technical users - they're extremely powerful and there are still too many ways to blow your own face off. I have a fairly good idea of the architecture that I want Windsurf to implement, and I could quickly spot when it was going off track or choosing a solution that was inappropriately complicated for the feature I was building. At the moment, a technical background is a massive advantage for using Windsurf. As a rusty developer, it made me feel like I had superpowers.

But I believe within a couple of months, when things like log tailing and automated testing and native version control get implemented, it will be an extremely powerful tool for even non-technical people to write production-quality apps. The AI will be able to make complex changes and then verify those changes are actually working. At the moment, it feels like it's making a best guess at what will work and then leaving the user to test it. Implementing better feedback loops will enable a truly agentic, recursive, self-healing development flow. It doesn't feel like it needs any breakthrough in technology to enable this. It's just about adding a few tool calls to the existing LLMs. My mind races as I try to think through the implications for professional software developers.

Meanwhile, the LLMs aren't going to sit still. They're getting better at a frightening rate. I spoke to several very capable software engineers who are Y Combinator founders in the last week. About a quarter of them told me that 95% of their code is written by AI. In six or twelve months, I just don't think software engineering is going to exist in the same way as it does today. The cost of creating high-quality, custom software is quickly trending towards zero.

You can try the site yourself at recipeninja.ai

Here's a complete list of functionality. Of course, Windsurf just generated this list for me 🫠

RecipeNinja: Comprehensive Functionality Overview

Core Concept: the app appears to be a cooking assistant application that provides voice-guided recipe instructions, allowing users to cook hands-free while following step-by-step recipe guidance.

Backend (Rails API) Functionality

User Authentication & Authorization

Google OAuth integration for user authentication

User account management with secure authentication flows

Authorization system ensuring users can only access their own private recipes or public recipes

Recipe Management

Recipe Model Features:

Unique public IDs (format: "r_" + 14 random alphanumeric characters) for security

User ownership (user_id field with NOT NULL constraint)

Public/private visibility toggle (default: private)

Comprehensive recipe data storage (title, ingredients, steps, cooking time, etc.)

Image attachment capability using Active Storage with S3 storage in production
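As an illustration of the public ID format described above ("r_" plus 14 random alphanumeric characters), a minimal Python sketch might look like the following. The function name is hypothetical, and the actual app is a Rails project, so this only mirrors the format rather than its implementation.

```python
import secrets
import string

# Letters and digits only, matching the "alphanumeric" description.
ALPHABET = string.ascii_letters + string.digits


def generate_public_id(prefix: str = "r_", length: int = 14) -> str:
    """Return a prefix followed by `length` cryptographically random characters."""
    return prefix + "".join(secrets.choice(ALPHABET) for _ in range(length))
```

Using the `secrets` module rather than `random` keeps the IDs hard to guess, which is the point of exposing them instead of sequential database IDs.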

Recipe Tagging System:

Many-to-many relationship between recipes and tags

Tag model with unique name attribute

RecipeTag join model for the relationship

Helper methods for adding/removing tags from recipes

Recipe API Endpoints:

CRUD operations for recipes

Pagination support with metadata (current_page, per_page, total_pages, total_count)

Default sorting by newest first (created_at DESC)

Filtering recipes by tags

Different serializers for list view (RecipeSummarySerializer) and detail view (RecipeSerializer)
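The pagination metadata listed above can be computed in a few lines. This Python sketch is an assumption about the shape, not the app's serializer: the `items`/`meta` envelope and the clamping of out-of-range pages are illustrative choices.

```python
import math


def paginate(items, page=1, per_page=10):
    """Slice a list into one page and report the metadata fields named above."""
    total_count = len(items)
    total_pages = max(1, math.ceil(total_count / per_page))
    page = min(max(page, 1), total_pages)  # clamp out-of-range page numbers
    start = (page - 1) * per_page
    return {
        "items": items[start:start + per_page],
        "meta": {
            "current_page": page,
            "per_page": per_page,
            "total_pages": total_pages,
            "total_count": total_count,
        },
    }
```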

Voice Generation

Voice Recording System:

VoiceRecording model linked to recipes

Integration with Eleven Labs API for text-to-speech conversion

Caching of voice recordings in S3 to reduce API calls

Unique identifiers combining recipe_id, step_id, and voice_id

Force regeneration option for refreshing recordings

Audio Processing:

Using streamio-ffmpeg gem for audio file analysis

Active Storage integration for audio file management

S3 storage for audio files in production

Recipe Import & Generation

RecipeImporter Service:

OpenAI integration for recipe generation

Conversion of text recipes into structured format

Parsing and normalization of recipe data

Import from photos functionality

Frontend (React) Functionality

User Interface Components

Recipe Selection & Browsing:

Recipe listing with pagination

Real-time updates with 10-second polling mechanism

Tag filtering functionality

Recipe cards showing summary information (without images)

"View Details" and "Start Cooking" buttons for each recipe

Recipe Detail View:

Complete recipe information display

Recipe image display

Tag display with clickable tags

Option to start cooking from this view

Cooking Experience:

Step-by-step recipe navigation

Voice guidance for each step

Keyboard shortcuts for hands-free control:

Arrow keys for step navigation

Space for play/pause audio

Escape to return to recipe selection

URL-based step tracking (e.g., /recipe/r_xlxG4bcTLs9jbM/classic-lasagna/steps/1)

State Management & Data Flow

Recipe Service:

API integration for fetching recipes

Support for pagination parameters

Tag-based filtering

Caching mechanisms for recipe data

Image URL handling for detailed views

Authentication Flow:

Google OAuth integration using environment variables

User session management

Authorization header management for API requests

Progressive Web App Features

PWA capabilities for installation on devices

Responsive design for various screen sizes

Favicon and app icon support

Deployment Architecture

Two-App Structure:

cook-voice-api: Rails backend on Heroku

cook-voice-wizard: React frontend/PWA on Heroku

Backend Infrastructure:

Ruby 3.2.2

PostgreSQL database (Heroku PostgreSQL addon)

Amazon S3 for file storage

Environment variables for configuration

Frontend Infrastructure:

React application

Environment variable configuration

Static buildpack on Heroku

SPA routing configuration

Security Measures:

HTTPS enforcement

Rails credentials system

Environment variables for sensitive information

Public ID system to mask database IDs

This comprehensive overview covers the major functionality of the Cook Voice application based on the available information. The application appears to be a sophisticated cooking assistant that combines recipe management with voice guidance to create a hands-free cooking experience.

2 notes

Text

Hey. I'm very sorry and concerned and angry and whatnot. Hope everyone is well. I did my fair share of ugly crying in the last days.

Nonetheless, I wanted to make an update.

First, I discovered Bluesky. I never wanted to make a twitter account because I'm a filthy leftist. In the last weeks tons of people have been migrating to Bsky and if you want to join the party - go for it!

If you want to get a nice dive into the game dev and indie dev spaces, this list is great!

I even found a goblin list! How cool is that?

My tip is to upload a few pics, screenshots and a bio before you start to follow a bunch of people so you can be recognized and followed back.

And please delete your fascist bird app account. At this point it's hard to trust anyone still engaging there. Thank you.

3 notes

Text

“Rita Roe,” 28 (USA 1968)

28-year-old “Rita” was in the state of New York (but outside of New York City) when she underwent her legal but fatal abortion.

Rita was told to have an abortion because of a medical condition— rheumatic heart disease. She was three months pregnant when the “therapeutic” abortion was carried out.

Even before Roe v. Wade, “life of the mother” abortions were legal in every state. However, killing Rita’s baby was in no way a guarantee of her safety. As a side effect of the abortion, she suffered multiple pulmonary emboli and died.

The deaths of Rita and her baby are made even more tragic with the knowledge that the abortion wasn’t just lethal, but unnecessary. Today, it is well-known in the field of medicine that cardiac patients are at a higher risk from an abortion than a healthy woman, and that the vast majority of pregnant patients with cardiac conditions will have a safe pregnancy and delivery with proper care. In fact, during pregnancy, stem cells from the unborn baby will migrate throughout the mother’s body and repair damage to the heart when necessary. Rita needed real medical care, not abortion.

Others with health conditions (often including heart disease) who were killed by their allegedly “therapeutic” abortions include Barbara May Hoppert, Allegra Roseberry, Aissatou Bah, Belinda Byrd, Gail Mazo, Bonnie Fix, Anjelica Duarte, Erika Charlotte Wullschleger, Helen Grainger, “Bonnie” Roe, “Elle” Roe, “Evie” Roe, “Ginger” Roe, “Maggie” Roe, “Marina” Roe, “Molly” Roe, “Selena” Roe and “Sonia” Roe. Many of them very much wanted to have their babies, but were falsely told that they had to kill them to survive. Patients with chronic conditions deserve better than abortion.

New York State Journal of Medicine, January 1979 Edition (page 49–52)

#pre roe legal#abortion is not healthcare#tw abortion#pro life#unsafe yet legal#tw ab*rtion#unidentified victim#tw murder#abortion#abortion debate#death from legal abortion#tw negligence

2 notes

Text

The Comprehensive Guide to Data Migration Tools and Database Migration Tools

In today's fast-paced digital landscape, the need to move data efficiently and accurately from one environment to another is more critical than ever. Whether you're upgrading systems, consolidating data, or migrating to the cloud, data migration tools and database migration tools play a pivotal role in ensuring a smooth transition. This comprehensive guide will delve into what these tools are, their importance, the different types available, essential features to look for, and a review of some of the most popular tools on the market.

Understanding Data Migration Tools and Database Migration Tools

Data migration tools are software solutions designed to facilitate the process of transferring data between storage systems, formats, or computer systems. This can involve moving data between different databases, applications, or cloud environments. Database migration tools, a subset of data migration tools, specifically handle the transfer of database schemas and data between different database management systems (DBMS).

Importance of Data Migration Tools

Migrating data is a complex and risky task that can lead to data loss, corruption, or prolonged downtime if not executed correctly. Here are some reasons why data migration tools are essential:

1. Data Integrity: These tools ensure that data is accurately transferred without loss or corruption.

2. Efficiency: Automated tools speed up the migration process, reducing the time and resources required.

3. Minimal Downtime: Efficient data migration tools minimize downtime, ensuring business continuity.

4. Scalability: They can handle large volumes of data, making them suitable for enterprises of all sizes.

5. Compliance: Ensures adherence to regulatory requirements by maintaining data integrity and security during migration.

Types of Data Migration Tools

Data migration tools can be categorized based on their specific functions and the environments they operate in. Here are the main types:

1. On-Premises Tools: These tools are installed and operated within an organization's own infrastructure. They are suitable for migrating data between local systems or databases.

2. Cloud-Based Tools: These tools facilitate data migration to, from, or within cloud environments. They are ideal for organizations adopting cloud technologies.

3. Open-Source Tools: These are freely available tools that can be customized according to specific needs. They often have active community support but may require more technical expertise.

4. Commercial Tools: Paid solutions that come with professional support, advanced features, and comprehensive documentation. They are typically more user-friendly and scalable.

5. ETL Tools (Extract, Transform, Load): These tools are designed to extract data from one source, transform it into the desired format, and load it into the target system. They are commonly used in data warehousing and analytics projects.
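To make the extract, transform, load pattern concrete, here is a minimal sketch in Python using only the standard library. The CSV columns (`name`, `age`), the `people` table and the clean-up rules are assumptions chosen for illustration; real ETL tools add scheduling, error handling and connectors on top of this basic flow.

```python
import csv
import io
import sqlite3


def extract(csv_text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: tidy names and convert ages to integers."""
    return [(row["name"].strip().title(), int(row["age"])) for row in rows]


def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS people (name TEXT, age INTEGER)")
    conn.executemany("INSERT INTO people VALUES (?, ?)", records)
    conn.commit()
```

A run is then `load(conn, transform(extract(source_text)))`, with each stage replaceable independently.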

Key Features to Look for in Data Migration Tools

When selecting data migration tools or database migration tools, consider the following essential features:

1. Ease of Use: User-friendly interfaces and intuitive workflows are crucial for reducing the learning curve and facilitating smooth migrations.

2. Data Mapping and Transformation: The ability to map and transform data from the source to the target schema is essential for ensuring compatibility and consistency.

3. Scalability: The tool should handle varying volumes of data and support complex migration scenarios.

4. Performance: High-performance tools reduce migration time and minimize system downtime.

5. Error Handling and Reporting: Robust error handling, logging, and reporting features help identify and resolve issues quickly.

6. Security: Ensuring data security during migration is critical. Look for tools with encryption and compliance features.

7. Support and Documentation: Comprehensive support and detailed documentation are vital for troubleshooting and effective tool utilization.

Popular Data Migration Tools

Here are some of the most popular data migration tools and database migration tools available:

1. AWS Database Migration Service (DMS): AWS DMS is a cloud-based service that supports database migrations to and from Amazon Web Services. It is highly scalable, supports homogeneous and heterogeneous migrations, and provides continuous data replication.

2. Azure Database Migration Service: This tool from Microsoft facilitates the migration of databases to Azure. It supports various database management systems, offers automated assessments, and provides seamless integration with other Azure services.

3. Talend: Talend is an ETL tool that offers robust data integration and migration capabilities. It supports numerous data sources and formats, providing a visual interface for designing data workflows and transformations.

4. IBM InfoSphere DataStage: IBM InfoSphere DataStage is a powerful ETL tool designed for large-scale data integration projects. It offers advanced data transformation capabilities, high performance, and extensive support for various data sources.

5. Oracle Data Integrator (ODI): ODI is a comprehensive data integration platform from Oracle that supports ETL and ELT architectures. It provides high-performance data transformation and integration capabilities, making it suitable for complex migration projects.

6. Fivetran: Fivetran is a cloud-based data integration tool that automates data extraction, transformation, and loading. It supports numerous data sources and destinations, providing seamless data synchronization and real-time updates.

7. Apache NiFi: Apache NiFi is an open-source data integration tool that supports data migration, transformation, and real-time data processing. It offers a web-based interface for designing data flows and extensive customization options.

8. Hevo Data: Hevo Data is a cloud-based data integration platform that offers real-time data migration and transformation capabilities. It supports various data sources, provides automated data pipelines, and ensures high data accuracy.

Best Practices for Using Data Migration Tools

To ensure successful data migrations, follow these best practices:

1. Plan Thoroughly: Define your migration strategy, scope, and timeline. Identify potential risks and develop contingency plans.

2. Data Assessment: Evaluate the quality and structure of your source data. Cleanse and normalize data to ensure compatibility with the target system.

3. Pilot Testing: Conduct pilot migrations to test the tools and processes. This helps identify and resolve issues before full-scale migration.

4. Monitor and Validate: Continuously monitor the migration process and validate the data at each stage. Ensure that data integrity and consistency are maintained.

5. Minimize Downtime: Schedule migrations during low-traffic periods and implement strategies to minimize downtime, such as phased migrations or parallel processing.

6. Documentation and Training: Document the migration process and provide training to the team members involved. This ensures a smooth transition and helps address any post-migration issues.
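The "Monitor and Validate" step can be sketched as a simple comparison of row counts and content hashes between source and target. The Python example below is one possible approach under stated assumptions, not a feature of any tool named above: it uses SQLite for convenience, loads whole tables into memory, and the function names are hypothetical.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table):
    """Return (row_count, sha256 hex digest) for a table, independent of row order.

    The table name is interpolated into the SQL, so it must come from trusted code.
    """
    rows = conn.execute(f"SELECT * FROM {table}").fetchall()
    digest = hashlib.sha256()
    for row in sorted(rows, key=repr):
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def migration_matches(source_conn, target_conn, table):
    """True when both databases hold the same rows for the given table."""
    return table_fingerprint(source_conn, table) == table_fingerprint(target_conn, table)
```

For large tables, a per-chunk or database-side checksum would be more practical than pulling every row, but the principle of comparing counts and hashes is the same.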

Conclusion

Data migration tools and database migration tools are indispensable for modern businesses looking to upgrade systems, consolidate data, or migrate to the cloud. These tools offer automated, efficient, and secure methods for transferring data, ensuring data integrity and minimizing downtime. With a variety of tools available, ranging from open-source solutions to commercial platforms, businesses can choose the one that best fits their needs and requirements. By following best practices and leveraging the right tools, organizations can achieve successful data migrations, paving the way for improved operations and business growth.

As you embark on your data migration journey, remember to carefully evaluate your options, plan meticulously, and prioritize data security and integrity. With the right approach and tools, you can ensure a seamless and successful data migration process.

0 notes

Text

Cloud Agnostic: Achieving Flexibility and Independence in Cloud Management

As businesses increasingly migrate to the cloud, they face a critical decision: which cloud provider to choose? While AWS, Microsoft Azure, and Google Cloud offer powerful platforms, the concept of "cloud agnostic" is gaining traction. Cloud agnosticism refers to a strategy where businesses avoid vendor lock-in by designing applications and infrastructure that work across multiple cloud providers. This approach provides flexibility, independence, and resilience, allowing organizations to adapt to changing needs and avoid reliance on a single provider.

What Does It Mean to Be Cloud Agnostic?

Being cloud agnostic means creating and managing systems, applications, and services that can run on any cloud platform. Instead of committing to a single cloud provider, businesses design their architecture to function seamlessly across multiple platforms. This flexibility is achieved by using open standards, containerization technologies like Docker, and orchestration tools such as Kubernetes.

Key features of a cloud agnostic approach include:

Interoperability: Applications must be able to operate across different cloud environments.

Portability: The ability to migrate workloads between different providers without significant reconfiguration.

Standardization: Using common frameworks, APIs, and languages that work universally across platforms.

Benefits of Cloud Agnostic Strategies

Avoiding Vendor Lock-In: The primary benefit of being cloud agnostic is avoiding vendor lock-in. Once a business builds its entire infrastructure around a single cloud provider, it can be challenging to switch or expand to other platforms. This could lead to increased costs and limited innovation. With a cloud agnostic strategy, businesses can choose the best services from multiple providers, optimizing both performance and costs.

Cost Optimization: Cloud agnosticism allows companies to choose the most cost-effective solutions across providers. As cloud pricing models are complex and vary by region and usage, a cloud agnostic system enables businesses to leverage competitive pricing and minimize expenses by shifting workloads to different providers when necessary.

Greater Resilience and Uptime: By operating across multiple cloud platforms, organizations reduce the risk of downtime. If one provider experiences an outage, the business can shift workloads to another platform, ensuring continuous service availability. This redundancy builds resilience, ensuring high availability in critical systems.

Flexibility and Scalability: A cloud agnostic approach gives companies the freedom to adjust resources based on current business needs. This means scaling applications horizontally or vertically across different providers without being restricted by the limits or offerings of a single cloud vendor.

Global Reach: Different cloud providers have varying levels of presence across geographic regions. With a cloud agnostic approach, businesses can leverage the strengths of various providers in different areas, ensuring better latency, performance, and compliance with local regulations.

Challenges of Cloud Agnosticism

Despite the advantages, adopting a cloud agnostic approach comes with its own set of challenges:

Increased Complexity: Managing and orchestrating services across multiple cloud providers is more complex than relying on a single vendor. Businesses need robust management tools, monitoring systems, and teams with expertise in multiple cloud environments to ensure smooth operations.

Higher Initial Costs: The upfront costs of designing a cloud agnostic architecture can be higher than those of a single-provider system. Developing portable applications and investing in technologies like Kubernetes or Terraform requires significant time and resources.

Limited Use of Provider-Specific Services: Cloud providers often offer unique, advanced services (such as machine learning tools, proprietary databases, and analytics platforms) that may not be easily portable to other clouds. Being cloud agnostic could mean missing out on some of these specialized services, which may limit innovation in certain areas.

Tools and Technologies for Cloud Agnostic Strategies

Several tools and technologies make cloud agnosticism more accessible for businesses:

Containerization: Docker and similar containerization tools allow businesses to encapsulate applications in lightweight, portable containers that run consistently across various environments.

Orchestration: Kubernetes is a leading tool for orchestrating containers across multiple cloud platforms. It ensures scalability, load balancing, and failover capabilities, regardless of the underlying cloud infrastructure.

Infrastructure as Code (IaC): Tools like Terraform and Ansible enable businesses to define cloud infrastructure using code. This makes it easier to manage, replicate, and migrate infrastructure across different providers.

APIs and Abstraction Layers: Using APIs and abstraction layers helps standardize interactions between applications and different cloud platforms, enabling smooth interoperability.
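To make the abstraction-layer idea concrete, here is a minimal Python sketch. It assumes a hypothetical ObjectStore interface and an in-memory adapter standing in for real provider SDKs; none of these names come from an actual library. The point is that application code such as save_report depends only on the interface, so swapping providers means swapping the adapter.

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Provider-neutral interface that application code depends on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Stand-in adapter; a real adapter would wrap one provider's SDK."""

    def __init__(self, provider: str) -> None:
        self.provider = provider  # hypothetical label, e.g. "cloud-a"
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def save_report(store: ObjectStore, name: str, body: str) -> None:
    """Application logic: knows the interface, not the provider."""
    store.put(f"reports/{name}", body.encode("utf-8"))


# The same application code runs unchanged against either adapter.
for store in (InMemoryStore("cloud-a"), InMemoryStore("cloud-b")):
    save_report(store, "q1.txt", "hello")
    print(store.provider, store.get("reports/q1.txt"))
```

The trade-off is the one described under the challenges: the interface can only expose what every provider supports, so provider-specific features stay out of reach unless the abstraction is extended.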

When Should You Consider a Cloud Agnostic Approach?

A cloud agnostic approach is not necessary for every business. Here are a few scenarios where adopting cloud agnosticism makes sense:

Businesses operating in regulated industries that need to maintain compliance across multiple regions.

Companies that require high availability and fault tolerance across different cloud platforms for mission-critical applications.

Organizations with global operations that need to optimize performance and cost across multiple cloud regions.

Businesses that aim to avoid long-term vendor lock-in and maintain flexibility for future growth and scaling needs.

Conclusion

Adopting a cloud agnostic strategy offers businesses unparalleled flexibility, independence, and resilience in cloud management. While the approach comes with challenges such as increased complexity and higher upfront costs, the long-term benefits of avoiding vendor lock-in, optimizing costs, and enhancing scalability are significant. By leveraging the right tools and technologies, businesses can achieve a truly cloud-agnostic architecture that supports innovation and growth in a competitive landscape.

Embrace the cloud agnostic approach to future-proof your business operations and stay ahead in the ever-evolving digital world.

Text

How To Migrate Your Site To WordPress: A Seamless Journey With Sohojware

The internet landscape is ever-evolving, and sometimes, your website needs to evolve with it. If you're looking to take your online presence to the next level, migrating your site to WordPress might be the perfect solution. WordPress is a powerful and user-friendly Content Management System (CMS) that empowers millions of users worldwide.

However, migrating your site can seem daunting, especially if you're new to WordPress. Worry not! This comprehensive guide will equip you with the knowledge and confidence to navigate a smooth and successful migration. Sohojware, a leading web development company, is here to help you every step of the way.

Why Choose WordPress?

WordPress offers a plethora of benefits that make it an ideal platform for websites of all shapes and sizes. Here are just a few reasons to consider migrating:

Easy to Use: WordPress boasts a user-friendly interface, making it easy to manage your website content, even for beginners with no coding experience.

Flexibility: WordPress offers a vast array of themes and plugins that cater to virtually any website need. This allows you to customize your site's look and functionality to perfectly match your vision.

Scalability: WordPress can grow with your business. Whether you're starting a simple blog or managing a complex e-commerce store, WordPress can handle it all.

SEO Friendly: WordPress is built with Search Engine Optimization (SEO) in mind. This means your website has a better chance of ranking higher in search engine results pages (SERPs), attracting more organic traffic.

Security: WordPress core receives regular security updates. Keeping the core, themes, and plugins up to date helps protect your website from potential threats.

The Migration Process: A Step-by-Step Guide

Migrating your site to WordPress can be broken down into several key steps.

Preparation: Before diving in, it's crucial to back up your existing website's files and database. This ensures you have a safety net in case anything goes wrong during the migration process. Sohojware offers expert backup and migration services to ensure a smooth transition.

Set Up Your WordPress Site: You'll need a web hosting provider and a domain name for your WordPress site. Sohojware can assist you with choosing the right hosting plan and setting up your WordPress installation.

Content Migration: There are several ways to migrate your content to WordPress. You can use a plugin specifically designed for migration, manually copy and paste your content, or utilize an XML export/import process, depending on your previous platform. Sohojware's team of developers can help you choose the most efficient method for your specific situation.

Theme Selection: WordPress offers a vast library of free and premium themes. Choose a theme that aligns with your brand identity and website's functionality.

Plugins and Functionality: Plugins extend the capabilities of your WordPress site. Install plugins that enhance your website's features, such as contact forms, image galleries, or SEO optimization tools.

Testing and Launch: Once your content is migrated and your website is customized, thoroughly test all functionalities before launching your new WordPress site. Sohojware provides comprehensive website testing services to guarantee a flawless launch.
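The preparation step above (backing up your files before migrating) can be sketched with Python's standard library. This is a minimal sketch under stated assumptions: the directory names are hypothetical, and it covers site files only. The database needs its own export using your database's dump tool.

```python
import shutil
import tarfile
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def backup_site(site_dir: Path, dest_dir: Path) -> Path:
    """Pack site_dir into a timestamped .tar.gz inside dest_dir.

    dest_dir should live outside site_dir so the archive does not
    end up inside the folder being archived.
    """
    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    base_name = dest_dir / f"site-backup-{stamp}"
    archive = shutil.make_archive(str(base_name), "gztar", root_dir=str(site_dir))
    return Path(archive)


def list_backup(archive: Path) -> list[str]:
    """Return the names of files stored in the archive, to verify the backup."""
    with tarfile.open(archive, "r:gz") as tar:
        return sorted(m.name for m in tar.getmembers() if m.isfile())


# Demo with a throwaway directory standing in for a real site.
with tempfile.TemporaryDirectory() as tmp:
    site = Path(tmp) / "site"
    site.mkdir()
    (site / "index.html").write_text("<h1>Hello</h1>", encoding="utf-8")
    archive_path = backup_site(site, Path(tmp) / "backups")
    print(archive_path.name, list_backup(archive_path))
```

Listing the archive contents after creating it is a cheap way to confirm the backup is readable before you change anything on the live site.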

Leveraging Sohojware's Expertise

Migrating your website to WordPress can be a breeze with the help of Sohojware's experienced web development team. Sohojware offers a comprehensive suite of migration services, including:

Expert Backup and Migration: Ensure a smooth and secure transition of your website's data.

Custom Theme Development: Create a unique and visually appealing website that reflects your brand identity.

Plugin Selection and Integration: Help you choose and implement the right plugins to enhance your website's functionality.

SEO Optimization: Optimize your website content and structure for better search engine ranking.

Ongoing Maintenance and Support: Provide ongoing support to keep your WordPress site running smoothly and securely.

FAQs: Migrating to WordPress with Sohojware

1. How long does the migration process typically take?

The migration timeframe depends on the size and complexity of your website. Sohojware will assess your specific needs and provide an estimated timeline for your migration project.

2. Will my website be down during the migration?

Typically, no. Sohojware can migrate your website to a temporary location while your existing site remains live. Once the migration is complete, the new WordPress site will be seamlessly switched in place, minimizing downtime and disruption for your visitors.

3. What happens to my existing content and SEO rankings after migration?

Sohojware prioritizes preserving your valuable content during the migration process. We can also help you implement strategies to minimize any potential impact on your SEO rankings.

4. Do I need to know how to code to use WordPress?

No! WordPress is designed to be user-friendly, and you don't need any coding knowledge to manage your website content. Sohojware can also provide training and support to help you get the most out of your WordPress site.

5. What ongoing maintenance does a WordPress website require?

WordPress requires regular updates to ensure optimal security and functionality. Sohojware offers ongoing maintenance plans to keep your website updated, secure, and running smoothly.

By migrating to WordPress with Sohojware's expert guidance, you'll gain access to a powerful and user-friendly platform that empowers you to create and manage a stunning and successful website. Contact Sohojware today to discuss your website migration needs and unlock the full potential of WordPress!

Text

From Novice to Pro: Master the Cloud with AWS Training!

In today's rapidly evolving technology landscape, cloud computing has emerged as a game-changer, providing businesses with unparalleled flexibility, scalability, and cost-efficiency. Among the various cloud platforms available, Amazon Web Services (AWS) stands out as a leader, offering a comprehensive suite of services and solutions. Whether you are a fresh graduate eager to kickstart your career or a seasoned professional looking to upskill, AWS training can be the gateway to success in the cloud. This article explores the key components of AWS training, the reasons why it is a compelling choice, the promising placement opportunities it brings, and the numerous benefits it offers.

Key Components of AWS Training

1. Foundational Knowledge: Building a Strong Base

AWS training starts by laying a solid foundation of cloud computing concepts and AWS-specific terminology. It covers essential topics such as virtualization, storage types, networking, and security fundamentals. This groundwork ensures that even individuals with little to no prior knowledge of cloud computing can grasp the intricacies of AWS technology easily.

2. Core Services: Exploring the AWS Portfolio

Once the fundamentals are in place, AWS training delves into the vast array of core services offered by the platform. Participants learn about compute services like Amazon Elastic Compute Cloud (EC2), storage options such as Amazon Simple Storage Service (S3), and database solutions like Amazon Relational Database Service (RDS). Additionally, they gain insights into services that enhance performance, scalability, and security, such as Amazon Virtual Private Cloud (VPC), AWS Identity and Access Management (IAM), and AWS CloudTrail.

3. Specialized Domains: Nurturing Expertise

As participants progress through the training, they have the opportunity to explore advanced and specialized areas within AWS. These can include topics like machine learning, big data analytics, Internet of Things (IoT), serverless computing, and DevOps practices. By delving into these niches, individuals can gain expertise in specific domains and position themselves as sought-after professionals in the industry.

Reasons to Choose AWS Training

1. Industry Dominance: Aligning with the Market Leader

One of the most significant reasons to choose AWS training is the platform's market position. As the largest cloud provider by market share, AWS is trusted and adopted by businesses across industries worldwide. By acquiring AWS skills, individuals become part of the ecosystem that powers the digital transformation of numerous organizations, which can significantly improve their career prospects.

2. Comprehensive Learning Resources: Abundance of Educational Tools

AWS training offers a wealth of comprehensive learning resources, ranging from extensive documentation, tutorials, and whitepapers to hands-on labs and interactive courses. These resources cater to different learning preferences, enabling individuals to choose their preferred mode of learning and acquire a deep understanding of AWS services and concepts.

3. Recognized Certifications: Validating Expertise

AWS certifications are globally recognized credentials that validate an individual's competence in using AWS services and solutions effectively. By completing AWS training and obtaining certifications like AWS Certified Solutions Architect or AWS Certified Developer, individuals can boost their professional credibility, open doors to new job opportunities, and command higher salaries in the job market.

Placement Opportunities

Upon completing AWS training, individuals can explore a multitude of placement opportunities. The demand for professionals skilled in AWS is soaring, as organizations increasingly migrate their infrastructure to the cloud or adopt hybrid cloud strategies. From startups to multinational corporations, industries spanning finance, healthcare, retail, and more seek talented individuals who can architect, develop, and manage cloud-based solutions using AWS. This robust demand translates into a plethora of rewarding career options and a higher likelihood of finding positions that align with one's interests and aspirations.

In conclusion, mastering the cloud with AWS training at ACTE institute provides individuals with a solid foundation, comprehensive knowledge, and specialized expertise in one of the most dominant cloud platforms available. The reasons to choose AWS training are compelling, ranging from the platform's market position to its comprehensive learning resources and globally recognized certifications.

Text

The Money In Cleanup

I have an acquaintance who helps migrate businesses off of ancient and inappropriate databases onto more recent ones. If you wonder how ancient and inappropriate, let me simply state “not meant for industry” and “first created when One Piece the anime started airing” and you can guess. Now and then he literally goes in and cleans up questionable, persistent bad choices.

In the recent unending and omnipresent discussions of AI, I saw a similar proposal. A person rather cynical about AI mused that someone might make a living in the next few years backing a company’s tech and processes OUT of AI. Such things might seem ridiculous, until you consider my aforementioned acquaintance and the fact that he gets paid to help people back out of past decisions. Think of it as “migration from a place you shouldn’t have migrated to.”