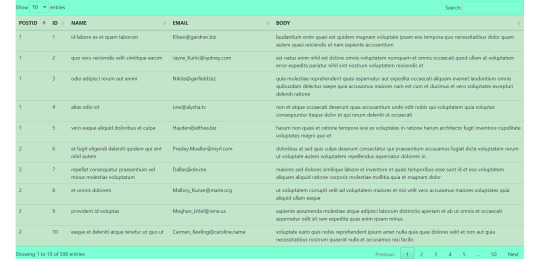

#fetch data from api and show in table

Explore tagged Tumblr posts

Text

Fetch data from api and show in table with jquery dataTable plugin.

#react#react js#jquery dataTable plugin#fetch data from api#fetch data from api and show in table#get data from api and show in table#javascript#frontend#webtutorialstack

0 notes

Text

Best Football APIs for Developers in 2025: A Deep Dive into DSG’s Feature-Rich Endpoints

In 2025, the demand for fast, reliable, and comprehensive football data APIs has reached new heights. With the explosion of fantasy leagues, sports betting, mobile apps, OTT platforms, and real-time sports analytics, developers are increasingly relying on robust data solutions to power seamless user experiences. Among the top providers, Data Sports Group (DSG) stands out for offering one of the most complete and developer-friendly football data APIs on the market.

In this blog, we’ll explore why DSG’s football API is a top choice in 2025, what features make it stand apart, and how developers across fantasy platforms, media outlets, and startups are using it to build cutting-edge applications.

Why Football APIs Matter More Than Ever

Football (soccer) is the world’s most-watched sport, and the demand for real-time stats, live scores, player insights, and match events is only growing. Whether you're building a:

Fantasy football platform

Live score app

Football analytics dashboard

OTT streaming overlay

Sports betting product

...you need accurate, timely, and structured data.

APIs are the backbone of this digital ecosystem, helping developers fetch live and historical data in real-time and display it in user-friendly formats. That’s where DSG comes in.

What Makes DSG's Football API Stand Out in 2025?

1. Comprehensive Global Coverage

DSG offers extensive coverage of football leagues and tournaments from around the world, including:

UEFA Champions League

English Premier League

La Liga, Serie A, Bundesliga

MLS, Brasileirão, J-League

African Cup of Nations

World Cup qualifiers and international friendlies

This global scope ensures that your application isn't limited to only major leagues but can cater to niche audiences as well.

2. Real-Time Match Data

Receive instant updates on:

Goals

Cards

Substitutions

Line-ups & formations

Match start/stop events

Injury notifications

Thanks to DSG’s low-latency infrastructure, your users stay engaged with lightning-fast updates.

3. Player & Team Statistics

DSG provides deep stats per player and team across multiple seasons, including:

Pass accuracy

Goals per 90 minutes

Expected Goals (xG)

Defensive stats like tackles and interceptions

Goalkeeper metrics (saves, clean sheets)

This is invaluable for fantasy football platforms and sports analytics startups.

4. Developer-Centric Documentation & Tools

DSG’s football API includes:

Clean RESTful architecture

XML & JSON format support

Well-organized endpoints by competitions, matches, teams, and players

Interactive API playground for testing

Detailed changelogs and status updates

5. Custom Widgets & Integrations

Apart from raw data, DSG offers:

Plug-and-play football widgets (live scores, player cards, league tables)

Custom dashboard feeds for enterprise customers

Webhooks and push notifications for developers

This speeds up development time and provides plug-and-play features for non-technical teams.

Common Use Cases of DSG's Football Data API

a. Fantasy Football Apps

Developers use DSG data to:

Build real-time player score systems

Offer live match insights

Power draft decisions using historical player performance

b. Sports Media & News Sites

Media outlets use DSG widgets and feeds to:

Embed real-time scores

Display dynamic league tables

Show interactive player stats in articles

c. Betting Platforms

Betting platforms use DSG to:

Automate odds updates

Deliver real-time market changes

Display event-driven notifications to users

d. Football Analytics Dashboards

Startups use DSG to:

Train AI models with historical performance data

Visualize advanced stats (xG, pass networks, heatmaps)

Generate scouting reports and comparisons

Sample Endpoints Developers Love

/matches/live: Live match updates

/players/{player_id}/stats: Season-wise stats for a specific player

/teams/{team_id}/fixtures: Upcoming fixtures

/competitions/{league_id}/standings: League table updates

/events/{match_id}: Real-time event feed (goals, cards, substitutions)

These are just a few of the dozens of endpoints DSG offers.

Final Thoughts: Why Choose DSG in 2025?

For developers looking to build applications that are scalable, real-time, and data-rich, DSG’s football API offers the ideal toolkit. With global coverage, detailed statistics, low latency, and excellent developer support, it's no surprise that DSG has become a go-to solution for companies building football-based digital products in 2025.

If you're planning to launch a fantasy app, sports betting service, or football analytics platform, DSG has the data infrastructure to support your vision. Ready to get started? Explore DSG's Football Data API here.

0 notes

Text

How to Plan for Your Big Mobile App Launch?

Have you ever thought what number of users may hit your mobile app once it goes public? As soon as the mobile app launches to the App Store or Play Store, it's available for the general public to download and begin using. But is your app capable of managing a sudden influx of installations and traffic? Any mobile app must be developed in a way so that it can manage high traffic for your big mobile app launch.

A mobile app usually contains the subsequent factors:

Mobile App Development

The mobile app is often developed using Native or Hybrid technology. Each technology has pros and cons.

Web Admin Panel Development

The Web admin panel allows the admin users or managers to work out the activities of users who are using the mobile app.

The admin panel is specially developed employing web technology (PHP, Laravel, etc.) and shows all the activities recorded from the app.

Web Services

Web services are called middle layers and are accustomed to communicate the information flow between the mobile app and also the web admin together with a database.

All dynamic content is updated or fetched from the database using web services.

When we speak about managing a large user base, all the factors mentioned above must be developed in an exceedingly way so performance doesn't fall when hundreds or thousands of users hit the app.

The subsequent points must be reviewed and managed while developing a mobile app to manage heavy traffic:

Backend Server (Hosting server)

Improving the performance of the app depends on the performance of the server that hosts your database and Web Services (APIs). The server needs to be capable of managing the traffic easily. It needs to contain the hardware and the applications that are installed on the server.

Database Transactions

A well-structured database helps with:

Saving space by removal of redundant data

Providing data access faster

Keeping data accuracy and integrity

The other important part is how we manage transactions with the database. Can we write queries that are slow to retrieve data? Can we create multiple joins while retrieving data from the database? Your query writing skills can completely change the performance of your app.

It’s important to put in writing queries that help:

Specify the sphere name from which to urge data (Don’t use select * from a table after you need access to only some columns)

Use short queries rather than multiple joins (Multiple joins within the queries can take a protracted time to fetch data)

Search on the indexed columns

Long processes in Background tasks

From a user engagement point of view, it’s always good to place long-running tasks within the background thread, so that the UI thread doesn’t get blocked. it'll help app users continue acting on their app activities without anticipating data to be processed on the forepart.

Make fewer calls to APIs

Making lots of API calls takes up lots of the method within the background. If there are fewer calls to the APIs it'll help run the mobile app effectively. APIs communicate with the database using the queries mentioned above. So each call to an API will get a knowledge result set. looking at the scale of knowledge, and therefore the time taken by the queries, this might slow the app response. So until there's a desire, don't make calls to APIs.

Contact Treevibes Technologies iOS Mobile App Company in Chennai to induce started on your big mobile app launch.

Optimized code

Code quality also plays a key role in app performance. There are several points one should keep in mind while writing code.

Avoid Creating Unnecessary Objects: Unnecessary objects cause the rubbish collector to be called unnecessarily, which successively eats resources needed to spice up the performance of the application.

Avoid Background Services: Background services should be avoided to stay running unless required, as they keep occupying the resources throughout their execution.

Use Standard Libraries: rather than implementing the identical functionality in your code, use the already available resource.

Use Optimized Data Containers’

Use Cache: Objects which are expensive to form should be implemented in the cache. For instance, if you've got to display some images from the web, then you ought to hold them in memory to avoid downloading them several times.

Conclusion

Treevibes Technologies Android App Development Company in Chennai is created by experienced app developers. We use Native and Hybrid technologies for developing mobile apps. As for that app we launched, after the launch, our client was thrilled because he heard little or no from users. No news is nice news after you do a giant mobile app launch. The app worked of course and therefore the next phase will begin soon.

Contact Treevibes Technologies Mobile App Development Company in Chennai to induce started on your big mobile app launch.

We create complex web and mobile applications. We assemble expert Indian developers – ranked among the highest in their field – and India-based, American relationship managers, who provide stateside context for clients’ needs and expectations. This mix creates a replacement reasonably contracted development that doesn’t trade quality for cost.

#mobile app development company in chennai#android app development company in chennai#mobile development company in chennai#ios app development company in chennai#ios mobile app company in chennai#mobile application development in chennai#flutter mobile app development company in chennai#leading mobile application developers in chennai#e-commerce app development company in chennai#best mobile app company in chennai#game development company in chennai#treevibes technologies

3 notes

·

View notes

Text

Top Directory Listing WordPress Themes 2020

A directory website usually contains big amounts of information, has a set of particular features, and uses a specific layout to present that information. All this poses some unique challenges when building such a site.Where you can add your Listing & publish with all information.

So in this post, we’re making your job easier and listing some of the best WordPress directory themes out there. They all come with awesome features and engaging designs, so check them out, and maybe you’ll find the one that fits your needs perfectly.

LISTO – DIRECTORY LISTING WORDPRESS THEME

Listo is the listing directory Theme for Word Press. Use Listo if you want to start you’re your business like Yelp, Yellow Pages, White Pages. This Theme is perfect for local businesses owners and promoters or any kind of web directory.

Directory & Listing WordPress Template Frontend, User dashboard and Admin panel Most advanced directory and listing theme.This is advanced and user-friendly directory Theme. This Theme mainly focused for Local Business Directory, Online Business Directory, Local Listing and all kind of Directory Services. Template powered with Bootstrap 3.0 and Materialize Framework and Integrated 360-Degree View on Business listings.

EASY SETUP PROCESS TO GET YOU STARTED IN MINUTES.

Kickstart in just few clicks with an easy setup wizard.

Includes required plugins, one-click demos and dummy content.

Theme Features

3 Home Pages

4 Blog Styles

6 Listing Posts Styles

7 Banner Styles

4 Search Styles

Powerful Admin Panel

User Dashboard

Import demo site with One-Click

Stripe and Paypal Integration

User Submit listing

Submit reviews & add images in the Comment section

Home Page Banner Background

Home Page Header Map

Homepage Video background

Responsive Ready

Extensive typography options

Featured Listing

Featured Ads

Edit Listing Short code

Submit Listing Short code

Category Short Codes

Testimonials shortcode

Demo + Theme -» GET IT HERE

Cplus – Directory Listing WordPress Theme

A beautiful directory theme, with a clean and modern look, a full-width header (with a search form), category listings, video embeds, user reviews, and multiple customization options.

Cplus is a clean and modern WordPres directory and listings Theme designed and developed keeping in mind Local Business and mobile use first. It comes with several layout versions and SASS files.

AUTOFILL THE PRIMARY BUSINESS DETAIL WITH GOOGLE API.

Super easy and fast to add new business listings from front-end.

Avoid human errors by fetching primary data with Google API.

Directory and listing WordPress theme that enables you to build a powerful website without knowing a single line of code. Aside from having a collection of beautiful predesigned listing templates, the theme also comes with some amazing features & users can create their own listings, reports, wishlists, claim items, and much more. Each location you choose to feature can be pinned on a map, and depending on your style preferences, you can customize Google Maps the way you like it best. The theme is fully intuitive and enables you to build a remarkable directory and listing website in utmost ease!

Features:

Responsive design,

ad banners,

unlimited price packages,

SEO friendly,

Google Maps,

color customizer,

user management.

Demo + Theme -» GET IT HERE

vLocal – WordPress Directory Listing Theme

A clean and simple WordPress directory theme with a full-width map header and an easy-to-follow content organization. It comes with an advanced search form, where you can look for a specific thing using various filtering methods. The theme also comes with a review system and customizable lists.

Here comes Vlocal, a city directory and listing theme that enables you to set up a directory website quickly and easily.

Vlocal Multi-purpose Responsive Themes is a Content Driven Portal that is ideal for a listing of any kind of entity or activity on a Local or Global basis.

USER DASHBOARD TO GET BUSINESS-CRITICAL KPI

Every listing owner gets their own dashboard to get insightful data.

From getting daily user views, leads and rating stats to low-rating alerts.

Features:

Dedicated mobile app view,

SEO ready,

Google Maps,

list ratings and reviews,

Google fonts,

color customizer,

advanced search form,

20+ directory extensions.

Demo + Theme -» GET IT HERE

BListing – Listing Directory WordPress Theme

A playful and modern WordPress theme for local or global directory sites. It has a full-width header, an intuitive search form, and a clean design. It also lets you use video backgrounds. Overall, a beautiful and friendly theme.

Blisting is Multi-purpose WordPress Theme is an absolutely unique premium WordPress theme, it is the result of our hardworking development team and constant feedback from users and buyers. This theme is built in cooperation with you! Your users will never have access to the WordPress dashboard, everything is done at front-end. You can, register, login, edit your profile, submit listings and Filtering and sorting search results so much more from the front-end without having to visit the WordPress dashboard.

MOST ADVANCED INTELLISENSE BASED ARCHIVE SEARCH SYSTEM

Instant live suggestions for keywords, categories, listing name, and more.

Location search is City (region) based which can be added manually or Google API.

Features:

WooCommerce integration,

the advanced filtering system,

Google Maps,

easy layout controls,

widget-based sections,

drag-and-drop page builders,

multiple styling presets.

Demo + Theme -» GET IT HERE

BLISTOVILLE – REAL ESTATE DIRECTORY WORDPRESS THEME

BlistoVille is the listing directory Theme for Word Press. Use BlistoVille if you want to start you’re your business like Yelp, Yellow Pages, White Pages. This Theme is perfect for local businesses owners and promoters or any kind of web directory.

Set online directory portal of any type – companies, shops, restaurants, real estate, websites and all others in the this category, Directory WordPress themes.

This theme has tons of features to meet your needs as your competitors have, BlistoVille is SEO friendly & Easy to one-click install and customize.

Theme Features

Powerful Admin Panel

User Dashboard

Import demo site with One-Click

Payment Integration ( Generate revenue)

User Submit listing

Submit reviews

Home Page Banner Background

Homepage Video background

Responsive Ready

Extensive typography options

Demo + Theme -» GET IT HERE

Blisto – Business Directory Listing WordPress Theme

Blisto is the listing directory Theme for Word Press. Use Blisto if you want to start you’re your business like Yelp, Yellow Pages, White Pages. This Theme is perfect for local businesses owners and promoters or any kind of web directory.

Have a specific item or a large number of them? Directory wordpress theme is your one-stop selling point for either one or all of them. It offers unlimited directory types. Add as many as you want. A complete package for a perfect demonstration of online business.

This theme has tons of features to meet your needs as your competitors have, Blisto is SEO friendly & Easy to one-click install and customize.

Theme Features

Powerful Admin Panel

User Dashboard

Import demo site with One-Click

Payment Integration ( Generate revenue)

User Submit listing

Submit reviews

Home Page Banner Background

Homepage Video background

Responsive Ready

Extensive typography options

Featured Listing

Featured Ads

This won’t be the first time you look for a directory theme, but it will be the last time. Because with Theme you have more than you could ever want or need.

Whether you know it or not, many of the websites you visit are powered by directories.

When you buy a house – real estate sites show listings.

When you buy a car – car sites show listings.

When you pick a restaurant – ratings sites show listings.

When you book a vacation – travel sites show listings.

Your site will be a thing of beauty. Let’s be honest, the problem with most of those sites, while highly functional, is that many of them aren’t very pretty. It’s why the beautiful ones are so popular, because they match great photography with fantastic functionality.

Get Started Quickly

Want to build a reservation system quickly, We have you covered with integrations to the industry’s best booking services. We support Open Table, Resurva, WooCommerce Bookings currently.

You plug in your account information, and our theme will link up the systems. The result will be the fastest and best-looking reservation site you’ve ever built.

Google Will Love You

Have you noticed that when Google returns searches, sometimes there are ratings next to listings and sometimes there aren’t? We won’t bore you with schema details, but we can tell you that your site, because of the way we’ve coded Theme. will show up the right way. Google will love you, and so will your prospective customers.

Your Revenue Is Waiting for You

Because of the variety of WooCommerce payment gateways and extensions, you could just as easily build:

A Gift Certificate Site

A Restaurant Guide

A Membership / Association Site

The potential is limitless, simply based on what you can imagine.

Powerful Plugin Integration

Let’s talk about all the functionality that you can combine with this theme. The theme integrates with the following plugins right out of the box:

Gravity Forms

NinjaForms

Contact Form 7

FacetWP

WooCommerce

WooCommerce Product Vendors

WooCommerce Subscriptions Extension

WooCommerce Bookings Extension

WooCommerce Payment Gateway Extensions

Theme was continuously updated with new features, it was getting better and better. Over the two years Directory WordPress theme received almost 100 free updates, which is remarkable.

Demo Theme Details

Listfly – Directory Listing WordPress Theme

Listfly is the listing directory Theme for Word Press. Use Listfly if you want to start you’re your business like Yelp, Yellow Pages, White Pages. This Theme is perfect for local businesses owners and promoters or any kind of web directory.

Featured Paid and Free Listings

Directory WordPress theme offers does not stop on giving you option to add unlimited categories, but it goes far beyond that.Listings of different genres like Free, Paid, featured can be added. If a listing is not free to add, you can charge users against it and can add value to your business. Directory multipurpose is not a theme but your personal business assistant indeed.

Offering listings of number categories is an obvious handy feature of Directory WordPress Themes.. However, it does not end here, you can add listing with expiration date. Once the date is reached, the listing will expire itself keeping you safe from hustle of removing it or handling it. If you want it back, refresh it and it will be back.

ou’re able to easily set up an online directory portal listing categorized items of any type – companies, shops, websites and so on. We’ve spent quite a long time developing the theme as many things were changed several times to make it right.

Theme was continuously updated with new features, it was getting better and better. Over the two years Directory WordPress theme received almost 100 free updates, which is remarkable.

Theme Features

Top notch modern & flexible design

Frontend listing submission

Advanced ajax search

Paid listing packages

Paid listing subscriptions

Unlimited price packages

Listing events

Listing coupons

Listing reports

Listing claims

Listing reviews

Fully Responsive Design

Drag&Drop Page Builder

Over 30 elements to build your website

Google Fonts

Mega Menu

Sidebar Manager

SEO Tools

Google Rich Snippets

Geolocation support in km and miles

Frontend registration for guests

Guests can administer their own listings

Ability to get pointer on the map from address

Administrator can define packages and set the price

Wide / Narrow layout style

Advertising spaces

Contact form on listing detail page

Listing by location or category

Featured items

Listing sorting

You can easily Backup all your data and admin settings

Many portfolio layouts that can be used on any page.

Widgets ready sidebar & footer

Image & Template caching for better performance

Google Analytics & Google Maps integration

Custom CSS field for your own styles

Demo + Theme -» GET IT HERE

#Directory Listing Wordpress Theme#Best Directory Listing Wordpress themes#Top Directory Listing Wordpress themes#WP Directory Themes#Business Directory Listing WP Theme#Business Directory Listing WordPress Themes#Best free WordPress Directory themes#Best Premium WordPress Directory themes#Free Directory WordPress Themes

1 note

·

View note

Photo

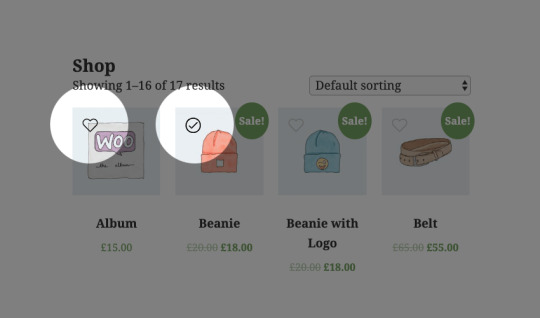

How to Create Your Own AJAX WooCommerce Wishlist Plugin

In this tutorial we will create lightweight wishlist functionality for WooCommerce using AJAX, WordPress REST API, and SVG graphics. WooCommerce doesn’t come with wishlist functionality as standard, so you’ll always need to rely on an extension to do the work for you. Unless you build it yourself for complete control..

Wish Upon a Star

Wishlist functionality will allow users to mark certain products, adding them to a list for future reference. In some eCommerce stores (such as Amazon) multiple wishlists can be created, and these can be shared with others, which makes them ideal for birthdays or weddings. In our case, the WooCommerce wishlist we’re going to create will enable customers to easily revisit products they’re considering.

Our wishlist functionality will add a heart icon to the product thumbs, which when clicked will add the product to a wishlist in a table.

Click the heart icon to add a product to the wishlist

Take a look at the demo for a proper idea of how it works.

1. Create the Plugin Structure

Let’s start by building our plugin. Create a folder with the name “wishlist” and a PHP file with the same name. Add the following snippet to the PHP file:

/* Plugin Name: Woocommerce wishlist Plugin URI: https://www.enovathemes.com Description: Ajax wishlist for WooCommerce Author: Enovathemes Version: 1.0 Author URI: http://enovathemes.com */ if ( ! defined( 'ABSPATH' ) ) { exit; // Exit if accessed directly }

We won’t go into detail about the plugin creation process, but if you are new to plugin development I highly recommend this amazing new course by Rachel McCollin:

WordPress

Introduction to WordPress Plugin Development

Rachel McCollin

Add the Plugin Functions

Let’s sketch out our plan so we know what to build:

Add wishlist toggle to products in loop and single pages using WooCommerce hooks

Create wishlist table shortcode to hold the products added to the wishlist

Create wishlist custom option in the user profile

All the plugin code will go inside the init action for the plugin, as we first need to make sure that the WooCommerce plugin is active. So right after the plugin details add the following code:

add_action('init','plugin_init'); function plugin_init(){ if (class_exists("Woocommerce")) { // Code here } }

And now let’s enqueue our plugin scripts and styles.

Add the following code to the main plugin file:

function wishlist_plugin_scripts_styles(){ wp_enqueue_style( 'wishlist-style', plugins_url('/css/style.css', __FILE__ ), array(), '1.0.0' ); wp_enqueue_script( 'wishlist-main', plugins_url('/js/main.js', __FILE__ ), array('jquery'), '', true); wp_localize_script( 'main', 'opt', array( 'ajaxUrl' => admin_url('admin-ajax.php'), 'ajaxPost' => admin_url('admin-post.php'), 'restUrl' => rest_url('wp/v2/product'), 'shopName' => sanitize_title_with_dashes(sanitize_title_with_dashes(get_bloginfo('name'))), 'inWishlist' => esc_html__("Already in wishlist","text-domain"), 'removeWishlist' => esc_html__("Remove from wishlist","text-domain"), 'buttonText' => esc_html__("Details","text-domain"), 'error' => esc_html__("Something went wrong, could not add to wishlist","text-domain"), 'noWishlist' => esc_html__("No wishlist found","text-domain"), ) ); } add_action( 'wp_enqueue_scripts', 'wishlist_plugin_scripts_styles' );

Here we enqueue the main style.css file and the main.js file for the plugin, also we pass some parameters to the main.js file to work with:

ajaxUrl – required to fetch some data from WordPress, like current User ID

ajaxPost – required to update user wishlist

restUrl – required to list the wishlist items in the wishlist table

shopName – required to add wishlist items to the session storage for non-registered or non-logged-in users

And some strings instead of hardcoding them into the js file, in case they need to be translatable.

So for now create a css, and js folder and put the corresponding files inside those folders: style.css in the css folder and main.js in the js folder.

2. Hook the Wishlist Toggle

Right inside the init action add the following code:

// Add wishlist to product add_action('woocommerce_before_shop_loop_item_title','wishlist_toggle',15); add_action('woocommerce_single_product_summary','wishlist_toggle',25); function wishlist_toggle(){ global $product; echo '<span class="wishlist-title">'.esc_attr__("Add to wishlist","text-domain").'</span><a class="wishlist-toggle" data-product="'.esc_attr($product->get_id()).'" href="#" title="'.esc_attr__("Add to wishlist","text-domain").'">'.file_get_contents(plugins_url( 'images/icon.svg', __FILE__ )).'</a>'; }

Here we add a wishlist toggle to each product in the loop and to each single product layout, using the woocommerce_before_shop_loop_item_title and woocommerce_single_product_summary hooks.

Here I want to point out the data-product attribute that contains the product ID–this is required to power the wishlist functionality. And also take a closer look at the SVG icon–this is required to power the animation.

3. Add SVG Icons

Now create an images folder in the plugin folder and put the following icon.svg in it:

<svg viewBox="0 0 471.701 471.701"> <path class="heart" d="M433.601,67.001c-24.7-24.7-57.4-38.2-92.3-38.2s-67.7,13.6-92.4,38.3l-12.9,12.9l-13.1-13.1 c-24.7-24.7-57.6-38.4-92.5-38.4c-34.8,0-67.6,13.6-92.2,38.2c-24.7,24.7-38.3,57.5-38.2,92.4c0,34.9,13.7,67.6,38.4,92.3 l187.8,187.8c2.6,2.6,6.1,4,9.5,4c3.4,0,6.9-1.3,9.5-3.9l188.2-187.5c24.7-24.7,38.3-57.5,38.3-92.4 C471.801,124.501,458.301,91.701,433.601,67.001z M414.401,232.701l-178.7,178l-178.3-178.3c-19.6-19.6-30.4-45.6-30.4-73.3 s10.7-53.7,30.3-73.2c19.5-19.5,45.5-30.3,73.1-30.3c27.7,0,53.8,10.8,73.4,30.4l22.6,22.6c5.3,5.3,13.8,5.3,19.1,0l22.4-22.4 c19.6-19.6,45.7-30.4,73.3-30.4c27.6,0,53.6,10.8,73.2,30.3c19.6,19.6,30.3,45.6,30.3,73.3 C444.801,187.101,434.001,213.101,414.401,232.701z"/> <g class="loading"> <path d="M409.6,0c-9.426,0-17.067,7.641-17.067,17.067v62.344C304.667-5.656,164.478-3.386,79.411,84.479 c-40.09,41.409-62.455,96.818-62.344,154.454c0,9.426,7.641,17.067,17.067,17.067S51.2,248.359,51.2,238.933 c0.021-103.682,84.088-187.717,187.771-187.696c52.657,0.01,102.888,22.135,138.442,60.976l-75.605,25.207 c-8.954,2.979-13.799,12.652-10.82,21.606s12.652,13.799,21.606,10.82l102.4-34.133c6.99-2.328,11.697-8.88,11.674-16.247v-102.4 C426.667,7.641,419.026,0,409.6,0z"/> <path d="M443.733,221.867c-9.426,0-17.067,7.641-17.067,17.067c-0.021,103.682-84.088,187.717-187.771,187.696 c-52.657-0.01-102.888-22.135-138.442-60.976l75.605-25.207c8.954-2.979,13.799-12.652,10.82-21.606 c-2.979-8.954-12.652-13.799-21.606-10.82l-102.4,34.133c-6.99,2.328-11.697,8.88-11.674,16.247v102.4 c0,9.426,7.641,17.067,17.067,17.067s17.067-7.641,17.067-17.067v-62.345c87.866,85.067,228.056,82.798,313.122-5.068 c40.09-41.409,62.455-96.818,62.344-154.454C460.8,229.508,453.159,221.867,443.733,221.867z"/> </g> <g class="check"> <path d="M238.933,0C106.974,0,0,106.974,0,238.933s106.974,238.933,238.933,238.933s238.933-106.974,238.933-238.933 C477.726,107.033,370.834,0.141,238.933,0z M238.933,443.733c-113.108,0-204.8-91.692-204.8-204.8s91.692-204.8,204.8-204.8 s204.8,91.692,204.8,204.8C443.611,351.991,351.991,443.611,238.933,443.733z"/> <path d="M370.046,141.534c-6.614-6.388-17.099-6.388-23.712,0v0L187.733,300.134l-56.201-56.201 c-6.548-6.78-17.353-6.967-24.132-0.419c-6.78,6.548-6.967,17.353-0.419,24.132c0.137,0.142,0.277,0.282,0.419,0.419 l68.267,68.267c6.664,6.663,17.468,6.663,24.132,0l170.667-170.667C377.014,158.886,376.826,148.082,370.046,141.534z"/> </g> </svg>

If you are new to working with SVGs I highly recommend you read these amazing tutorials on the subject:

SVG

How to Hand Code SVG

Kezz Bracey

SVG

SVG Viewport and viewBox (For Complete Beginners)

Kezz Bracey

Our SVG animation has 3 states:

Default: the heart path

Process: loading group (g tag)

End: check group (g tag)

If you now go to your shop page you will see the unstyled SVG icons piled on top of each other:

Let’s add some styling to fix this mess! Open the style.css file and paste the following code:

.wishlist-toggle { display: block; position: absolute; top: 16px; left: 16px; z-index: 5; width: 24px; height: 24px; outline: none; border:none; } .wishlist-title { display: none; } .entry-summary .wishlist-toggle { position: relative; top: 0; left: 0; display: inline-block; vertical-align: middle; margin-bottom: 8px; } .entry-summary .wishlist-title { display: inline-block; vertical-align: middle; margin-right: 8px; margin-bottom: 8px; } .wishlist-toggle:focus { outline: none; border:none; } .wishlist-toggle svg { fill:#bdbdbd; transition: all 200ms ease-out; } .wishlist-toggle:hover svg, .wishlist-toggle.active svg { fill:#000000; } .wishlist-toggle svg .loading, .wishlist-toggle svg .check { opacity: 0; } .wishlist-toggle.active svg .check { opacity: 1; } .wishlist-toggle.active svg .heart { opacity: 0; } .wishlist-toggle.loading svg .loading, .wishlist-table.loading:before { animation:loading 500ms 0ms infinite normal linear; transform-origin: center; opacity: 1; } .wishlist-toggle.loading svg .heart { opacity:0; } @keyframes loading { from {transform: rotate(0deg);} to {transform: rotate(360deg);} }

The logic here is as follows:

Initially we show the heart path of our SVG.

When the user clicks on it we will hide the heart path and show the loading path.

Once the loading finishes we will show the checkmark indicating that the product was successfully added to the wishlist.

We will toggle the loading state via JavaScript later; the loading animation is a simple transform rotate. So for now if you refresh the page (don’t forget to clear the browser cache as sometimes old styles are cached) you will see a nice heart icon with each product.

This toggle currently does nothing, so we’ll sort that out. But for now let’s keep with our plan.

4. Create Wishlist Table Shortcode

Add the following code in the init plugin action:

// Wishlist table shortcode add_shortcode('wishlist', 'wishlist'); function wishlist( $atts, $content = null ) { extract(shortcode_atts(array(), $atts)); return '<table class="wishlist-table loading"> <tr> <th><!-- Left for image --></th> <th>'.esc_html__("Name","text-domain").'</th> <th>'.esc_html__("Price","text-domain").'</th> <th>'.esc_html__("Stock","text-domain").'</th> <th><!-- Left for button --></th> </tr> </table>'; }

This is a very simple shortcode that you can add to any page, and the wishlist items will appear inside it. I won’t describe the shortcode creation process, but if you are new to this, I highly recommend reading this amazing tutorial:

Plugins

Getting Started With WordPress Shortcodes

Rohan Mehta

Make a Wishlist Page

Now from inside the WP admin create a page called “Wishlist” and put the [wishlist] shortcode inside it. Now if you go to the wishlist page you will see an empty table.

Did you notice the loading class on the table? We will remove the loading class with JavaScript later, once the wishlist items are ready to be appended to the table. But for now open the style.css and add the following code:

.wishlist-table { width:100%; position: relative; } .wishlist-table.loading:after { display: block; width: 100%; height: 100%; position: absolute; top: 0; left: 0; content: ""; background: #ffffff; opacity: 0.5; z-index: 5; } .wishlist-table.loading:before { display: block; width: 24px; height: 24px; position: absolute; top: 50%; left: 50%; margin-top:-12px; margin-left:-12px; content: ""; background-image: url('../images/loading.svg'); background-repeat: no-repeat; background-size: 100%; z-index: 6; } .wishlist-table td { position: relative; } .wishlist-table a.details { padding:4px 16px; background: #000000; color: #ffffff; text-align: center; border:none !important } .wishlist-table a.wishlist-remove { display: block; width: 24px; height: 24px; position: absolute; top: 50%; left: 50%; margin-top:-12px; margin-left:-12px; background-image: url('../images/remove.svg'); background-repeat: no-repeat; background-size: 100%; z-index: 6; border:none; opacity:0; } .wishlist-table td:hover > a.wishlist-remove { opacity:1; }

Add the loading.svg image to the images folder:

<svg xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" viewBox="0 0 471.701 471.701"> <path d="M409.6,0c-9.426,0-17.067,7.641-17.067,17.067v62.344C304.667-5.656,164.478-3.386,79.411,84.479 c-40.09,41.409-62.455,96.818-62.344,154.454c0,9.426,7.641,17.067,17.067,17.067S51.2,248.359,51.2,238.933 c0.021-103.682,84.088-187.717,187.771-187.696c52.657,0.01,102.888,22.135,138.442,60.976l-75.605,25.207 c-8.954,2.979-13.799,12.652-10.82,21.606s12.652,13.799,21.606,10.82l102.4-34.133c6.99-2.328,11.697-8.88,11.674-16.247v-102.4 C426.667,7.641,419.026,0,409.6,0z"/> <path d="M443.733,221.867c-9.426,0-17.067,7.641-17.067,17.067c-0.021,103.682-84.088,187.717-187.771,187.696 c-52.657-0.01-102.888-22.135-138.442-60.976l75.605-25.207c8.954-2.979,13.799-12.652,10.82-21.606 c-2.979-8.954-12.652-13.799-21.606-10.82l-102.4,34.133c-6.99,2.328-11.697,8.88-11.674,16.247v102.4 c0,9.426,7.641,17.067,17.067,17.067s17.067-7.641,17.067-17.067v-62.345c87.866,85.067,228.056,82.798,313.122-5.068 c40.09-41.409,62.455-96.818,62.344-154.454C460.8,229.508,453.159,221.867,443.733,221.867z"/> </svg>

This is the same loading SVG separated from the main icon.svg. We could use SVG sprites, but I decided to stick with a separate loading SVG.

Now, if you go to the wishlist page and refresh it you will see an empty table with loading on it. Nice, let’s move further.

5. Wishlist Custom Option in the User Profile

Our wishlist functionality will work both for logged-in users and guest users. With logged-in users we’ll store the wishlist information in the user’s metadata, and with guest users we’ll store the wishlist in the session storage.

You can also store the guest users’ wishlist in local storage, the difference being that session storage is destroyed when the user closes the tab or browser, and local storage is destroyed when the browser cache is cleared. It is up to you which option you use for guest users.

Now add the following code to the init action:

// Wishlist option in the user profile add_action( 'show_user_profile', 'wishlist_user_profile_field' ); add_action( 'edit_user_profile', 'wishlist_user_profile_field' ); function wishlist_user_profile_field( $user ) { ?> <table class="form-table wishlist-data"> <tr> <th><?php echo esc_attr__("Wishlist","text-domain"); ?></th> <td> <input type="text" name="wishlist" id="wishlist" value="<?php echo esc_attr( get_the_author_meta( 'wishlist', $user->ID ) ); ?>" class="regular-text" /> </td> </tr> </table> <?php } add_action( 'personal_options_update', 'save_wishlist_user_profile_field' ); add_action( 'edit_user_profile_update', 'save_wishlist_user_profile_field' ); function save_wishlist_user_profile_field( $user_id ) { if ( !current_user_can( 'edit_user', $user_id ) ) { return false; } update_user_meta( $user_id, 'wishlist', $_POST['wishlist'] ); }

Again, in order to remain within the scope of this tutorial, I won’t explain how to work with user metadata. If you are new to this I highly recommend reading this amazing tutorial:

WordPress

How to Work With WordPress User Metadata

Tom McFarlin

All we do here is create a text field input that will hold the wishlist items comma-separated IDs. With show_user_profile and edit_user_profile actions we add the structure of the input field, and with personal_options_update and edit_user_profile_update actions we power the save functionality.

So once the wishlist is updated it will save to the database. I you go to your profile page you will see a new text field added to it. Add whatever value you want and hit save to test if the update functionality works. With admin CSS you can hide this field if you don’t want users to see it. I will leave it as is.

6. Turn it On!

Now we are ready to power everything up!

Open the main.js file and put the following code in it:

(function($){ "use strict"; })(jQuery);

All our code will go inside this function.

Now let’s gather the required data and create some variables:

var shopName = opt.shopName+'-wishlist', inWishlist = opt.inWishlist, restUrl = opt.restUrl, wishlist = new Array, ls = sessionStorage.getItem(shopName), loggedIn = ($('body').hasClass('logged-in')) ? true : false, userData = '';

As you might remember when we enqueued our main.js script we passed some parameters to it. Here, with JavaScript, we can collect these parameters.

Next, we will create an empty wishlist array that will contains wishlist items. We will need the session storage data with our shop name (the ls variable stands for local storage), and we will need to know if the user is guest or logged-in.

Let me explain the logic here: whenever the user visits the shop page we will need to know if he or she is logged-in or is a guest-user. If the user is logged-in we will need to check if he or she has wishlist items, and if so highlight these items. If not we need to see if there are any items in the session/local storage and highlight those.

Why this is done like this? Imagine, if the user first visits the website as a guest, adds items to the wishlist, and then decides to login. If the user does not have items registered in the profile wishlist, we will need to show the ones that he or she added before login, that are stored in the session/local storage.

So let’s do that step by step:

If User is Logged-in

Fetch current user data with AJAX

If success update the wishlist

Highlight the wishlist items

Remove the session/local storage

If fail show error message in the console for the developer

if(loggedIn) { // Fetch current user data $.ajax({ type: 'POST', url: opt.ajaxUrl, data: { 'action' : 'fetch_user_data', 'dataType': 'json' }, success:function(data) { userData = JSON.parse(data); if (typeof(userData['wishlist']) != 'undefined' && userData['wishlist'] != null && userData['wishlist'] != "") { var userWishlist = userData['wishlist']; userWishlist = userWishlist.split(','); if (wishlist.length) { wishlist = wishlist.concat(userWishlist); $.ajax({ type: 'POST', url:opt.ajaxPost, data:{ action:'user_wishlist_update', user_id :userData['user_id'], wishlist :wishlist.join(','), } }); } else { wishlist = userWishlist; } wishlist = wishlist.unique(); highlightWishlist(wishlist,inWishlist); sessionStorage.removeItem(shopName); } else { if (typeof(ls) != 'undefined' && ls != null) { ls = ls.split(','); ls = ls.unique(); wishlist = ls; } $.ajax({ type: 'POST', url:opt.ajaxPost, data:{ action:'user_wishlist_update', user_id :userData['user_id'], wishlist :wishlist.join(','), } }) .done(function(response) { highlightWishlist(wishlist,inWishlist); sessionStorage.removeItem(shopName); }); } }, error: function(){ console.log('No user data returned'); } }); }

If User is Guest

Fetch wishlist from the session/local storage

else { if (typeof(ls) != 'undefined' && ls != null) { ls = ls.split(','); ls = ls.unique(); wishlist = ls; } }

As you may have noticed here we have double-AJAX and some helper functions. So first let’s create the actions of the AJAX requests, and after that I will explain our helper functions. I won’t describe in detail the AJAX functionality in WordPress, but if you are new to AJAX and WordPress, I highly recommend reading this amazing tutorial on it:

Plugins

A Primer on Ajax in the WordPress Frontend: Understanding the Process

Tom McFarlin

Our first AJAX request gets the user id and the user wishlist data from WordPress. This is done with a custom AJAX action added to the plugin code file:

// Get current user data function fetch_user_data() { if (is_user_logged_in()){ $current_user = wp_get_current_user(); $current_user_wishlist = get_user_meta( $current_user->ID, 'wishlist',true); echo json_encode(array('user_id' => $current_user->ID,'wishlist' => $current_user_wishlist)); } die(); } add_action( 'wp_ajax_fetch_user_data', 'fetch_user_data' ); add_action( 'wp_ajax_nopriv_fetch_user_data', 'fetch_user_data' );

The most important part here is the action name (fetch_user_data)–make sure it is the same for AJAX and for functions wp_ajax_fetch_user_data and wp_ajax_nopriv_fetch_user_data. Here we’re preparing JSON formatted data with user ID and user wishlist data.

Our next AJAX request updates the user wishlist if there were already wishlist items from session/local storage. Take a close look at the url option–see it is different.

The logic is the same as for the first action–the difference is that here we don’t return or echo any data, but we update the wishlist option for the current user.

function update_wishlist_ajax(){ if (isset($_POST["user_id"]) && !empty($_POST["user_id"])) { $user_id = $_POST["user_id"]; $user_obj = get_user_by('id', $user_id); if (!is_wp_error($user_obj) && is_object($user_obj)) { update_user_meta( $user_id, 'wishlist', $_POST["wishlist"]); } } die(); } add_action('admin_post_nopriv_user_wishlist_update', 'update_wishlist_ajax'); add_action('admin_post_user_wishlist_update', 'update_wishlist_ajax');

And if our user is a guest we will need to check if there are any wishlist details in the session/local storage.

Helper Functions

Before we move to the events part I want to explain our helper functions

Array.prototype.unique = function() { return this.filter(function (value, index, self) { return self.indexOf(value) === index; }); } function isInArray(value, array) {return array.indexOf(value) > -1;} function onWishlistComplete(target, title){ setTimeout(function(){ target .removeClass('loading') .addClass('active') .attr('title',title); },800); } function highlightWishlist(wishlist,title){ $('.wishlist-toggle').each(function(){ var $this = $(this); var currentProduct = $this.data('product'); currentProduct = currentProduct.toString(); if (isInArray(currentProduct,wishlist)) { $this.addClass('active').attr('title',title); } }); }

The first helper function makes the array unique, by removing duplicates, the second one checks if the given value is present in the given array. The next function executes when an item is added to the wishlist and the last one shows items that are in the wishlist.

Add Toggle

Now let’s add a click event to the wishlist toggle to power the actual functionality. On each toggle click event the animation is triggered and if the user is logged-in the wishlist update action fires with AJAX. If the user is a guest the item is added to the session/local storage.

Now if you go to the shop page, refresh the browser, and click on any wishlist toggle you will see it is working!

$('.wishlist-toggle').each(function(){ var $this = $(this); var currentProduct = $this.data('product'); currentProduct = currentProduct.toString(); if (!loggedIn && isInArray(currentProduct,wishlist)) { $this.addClass('active').attr('title',inWishlist); } $(this).on('click',function(e){ e.preventDefault(); if (!$this.hasClass('active') && !$this.hasClass('loading')) { $this.addClass('loading'); wishlist.push(currentProduct); wishlist = wishlist.unique(); if (loggedIn) { // get user ID if (userData['user_id']) { $.ajax({ type: 'POST', url:opt.ajaxPost, data:{ action:'user_wishlist_update', user_id :userData['user_id'], wishlist :wishlist.join(','), } }) .done(function(response) { onWishlistComplete($this, inWishlist); }) .fail(function(data) { alert(opt.error); }); } } else { sessionStorage.setItem(shopName, wishlist.toString()); onWishlistComplete($this, inWishlist); } } }); });

7. List Items in Wishlist Table

Now it is time to list our wishlist items in the wishlist table we created earlier.

Add the following code into main.js at the very bottom of our wrapper function:

setTimeout(function(){ if (wishlist.length) { restUrl += '?include='+wishlist.join(','); restUrl += '&per_page='+wishlist.length; $.ajax({ dataType: 'json', url:restUrl }) .done(function(response){ $('.wishlist-table').each(function(){ var $this = $(this); $.each(response,function(index,object){ $this.append('<tr data-product="'+object.id+'"><td><a class="wishlist-remove" href="#" title="'+opt.removeWishlist+'"></a>'+object.image+'</td><td>'+object.title["rendered"]+'</td><td>'+object.price+'</td><td>'+object.stock+'</td><td><a class="details" href="'+object.link+'">'+opt.buttonText+'</a></td></tr>'); }); }); }) .fail(function(response){ alert(opt.noWishlist); }) .always(function(response){ $('.wishlist-table').each(function(){ $(this).removeClass('loading'); }); }); } else { $('.wishlist-table').each(function(){ $(this).removeClass('loading'); }); } },1000);

Here we are using the WordPress REST API to get the products by ID in the wishlist array.

For each of the products we get we are adding a table row with the required data to display. We need the product image, title, stock status, button and price.

Here we have two options for the REST API:

using the WordPress REST API

using the WooCommerce REST API.

The difference here is that product data is already present in the Woocommerce REST API, but an API key is required. With the default WordPress REST API product data is absent by default, but can be added, and no API key is required. For such a simple task as a wishlist I don’t think that an API key is needed, so we will do it by extending the default WordPress REST API to return our product price, image code and the stock level.

Go to the main plugin file and at the very bottom add the following code:

// Extend REST API function rest_register_fields(){ register_rest_field('product', 'price', array( 'get_callback' => 'rest_price', 'update_callback' => null, 'schema' => null ) ); register_rest_field('product', 'stock', array( 'get_callback' => 'rest_stock', 'update_callback' => null, 'schema' => null ) ); register_rest_field('product', 'image', array( 'get_callback' => 'rest_img', 'update_callback' => null, 'schema' => null ) ); } add_action('rest_api_init','rest_register_fields'); function rest_price($object,$field_name,$request){ global $product; $id = $product->get_id(); if ($id == $object['id']) { return $product->get_price(); } } function rest_stock($object,$field_name,$request){ global $product; $id = $product->get_id(); if ($id == $object['id']) { return $product->get_stock_status(); } } function rest_img($object,$field_name,$request){ global $product; $id = $product->get_id(); if ($id == $object['id']) { return $product->get_image(); } } function maximum_api_filter($query_params) { $query_params['per_page']["maximum"]=100; return $query_params; } add_filter('rest_product_collection_params', 'maximum_api_filter');

All this does is create new fields for REST API and extends the maximum items limit per request. Again, if you are new to this subject I highly recommend reading this series.

For now, if you go to your wishlist table and refresh the page you will see the list of items that are added to your wishlist.

8. Removing Items From Wishlist

We are almost done; only the remove functionality remains. So let’s create that! Add the following code at the very bottom of the wrapper function in the main.js file

$(document).on('click', '.wishlist-remove', function(){ var $this = $(this); $this.closest('table').addClass('loading'); wishlist = []; $this.closest('table').find('tr').each(function(){ if ($(this).data('product') != $this.closest('tr').data('product')) { wishlist.push($(this).data('product')); if (loggedIn) { // get user ID if (userData['user_id']) { $.ajax({ type: 'POST', url:opt.ajaxPost, data:{ action:'user_wishlist_update', user_id :userData['user_id'], wishlist :wishlist.join(','), } }) .done(function(response) { $this.closest('table').removeClass('loading'); $this.closest('tr').remove(); }) .fail(function(data) { alert(opt.error); }); } } else { sessionStorage.setItem(shopName, wishlist.toString()); setTimeout(function(){ $this.closest('table').removeClass('loading'); $this.closest('tr').remove(); },500); } } }); });

Once the remove icon is clicked (make sure you have a remove.svg in the images folder, you can use whatever icon you want), we need to check if the user is logged-in. If so, we then remove the item ID from the wishlist using AJAX with the user_wishlist_update action. If the user is a guest we need to remove the item ID from the session/local storage.

Now go to your wishlist and refresh the page. Once you click on the remove icon your item will be removed from the wishlist.

Conclusion

That was quite a project! A simple, but comprehensive wishlist feature for your WooCommerce stores. You are free to use this plugin in any project; you can extend, modify it and make suggestions. I hope you liked it. Here is the link to the source files on GitHub. And here is the demo.

Learn More WooCommerce Theme Development

At Tuts+ we have a great collection of tutorials and courses to learn WooCommerce development. Check out these four great courses to get started!

WooCommerce

Up and Running With WooCommerce

Rachel McCollin

WordPress

Developing a WooCommerce Theme

Rachel McCollin

WordPress

Go Further With WooCommerce Themes

Rachel McCollin

WordPress

How to Make Your Theme WooCommerce Compatible

Rachel McCollin

by Karen Pogosyan via Envato Tuts+ Code https://ift.tt/2WTWfiG

1 note

·

View note

Text

Monitoring using Sensu, StatsD, Graphite, Grafana & Slack.

At Airwoot, we are in the business of processing & mining real-time social media streams. It is critical for us to track heartbeat of our expansive distributed infrastructure and take timely action to avoid service disruptions.

With this blog post, we would like to share our work so far in creating an infrastructure watchdog and more. We started with following objectives:

Monitor everything under the radar and thereby learn how the system breath.

Use the monitoring framework to collect data and power an internal dashboard for identifying trends.

Alert anything that need attention to appropriate handlers (engineering and client servicing teams).

Let’s dive.

Monitoring Framework

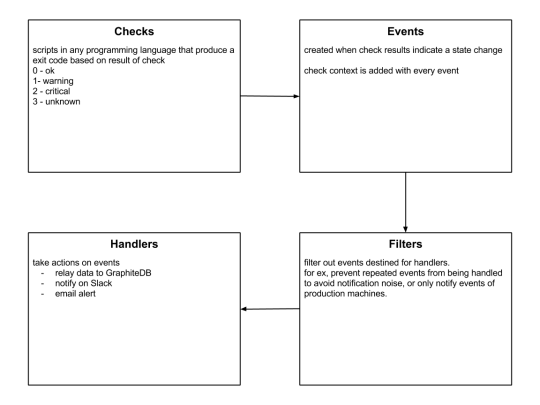

Sensu

Sensu is a feature-packed distributed monitoring framework that executes health checks for applications/services and collects metrics across all connected Sensu clients, which then are relayed to a Sensu server. The checks’ results can be handled differently based on their severity levels. We choose Sensu out of the many monitoring tools available for the following reasons:

ability to write checks and handlers for check failures in any language.

large number of community plugins available and good documentation.

easy horizontal scaling by adding more clients and servers.

it acts as a “monitoring router” that publishes check requests and collects results across all Sensu clients. The results along with their context are directed to custom defined handlers for taking actions based on the criticality of results.

Source: Sensu Documentation - https://sensuapp.org

Sensu has three main components that are executed as daemon processes.

1. sensu-server runs on one or more machines in the cluster and acts as the command center for monitoring. It performs following actions:

schedules periodic checks on clients

aggregates the checks’ results and adds context to them to create events

events can be filtered and passed on to custom defined handlers for taking actions

2. sensu-client can subscribe to group(s) of checks defined on the sensu-server or can have their own standalone checks. sensu-client communicate with the server using the RabbitMQ.

3. sensu-api has a REST interface to Sensu’s data like connected clients, active events, and check results. It also has capabilities to resolve events, remove connected clients, and issue check requests.

Sensu Entities

StatsD

StatsD is a push-based network daemon that allows a statsD client to collect custom metrics and export them to a collecting server. The catch here is that StatsD uses lightweight UDP protocol for relaying metrics to the metric store, so a slow metric receiver shall not affect application’s performance. We used the Python client for statsD to collect application level metrics.

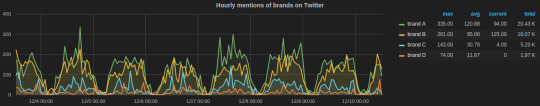

There are three main data types in statsD:

1. Counters are simply time correlated count of events taking place per unit time. There are incr and decr methods for altering the value of a counter. We extensively used counters to track brand-wise documents from social channels like Twitter and Facebook. Here’s a code snippet for tracking mentions of a brand on it’s Twitter handle:

https://gist.github.com/tanaysoni/76a6de3d7ab3e52b2860

These mentions’ metrics can be displayed at arbitrary time aggregations. Here’s how we did in our Grafana dashboard.

Grafana dashboard showing hourly brands’ mentions on Twitter calculated using StatsD counters.

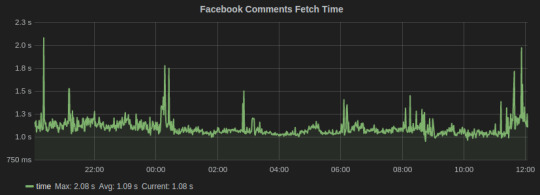

2. Timers collect numbers times or anything that may be a number. StatsD servers then calculate the lower bound, upper bound, 90th percentile, and count of each timer for each period. We used timers to track the time in fetching social media conversation from Facebook and Twitter. Here’s the graph for the task that fetches comments on brands’ Facebook page:

Facebook Comments

3. Gauges are a constant data type that are not subjected to averaging. They will retain their value until changed. We used gauges for computing the delays and queue lengths in our systems.

This is an excellent blog that explains these metrics in detail.

Graphite

Graphite is a database for storing numeric time series data. We use StatsD for collecting metrics, which are then stored in Graphite. There are three software components of Graphite:

1. carbon is a daemon that listens to the time series data. It has a cache that stores values in memory and subsequently flush them to disk at a regular interval. It has configuration files that define the storage schema and aggregation policies for the metrics. It tells whisper the frequency and the history of datapoints to store. We have configured carbon to store most our metrics in a frequency of 10 seconds and for a month’s time. Here’s an example config:

[storm_stats] # name of schema pattern = ^storm_stats.* # regex for matching metric names retentions = 10s:30d # frequency:history for retention

2. whisper is a database library for storing the metrics. The location of whisper files can be set from the carbon-conf file.

3. graphite webapp is the default web-based graphing library provided by graphite, but we used the more powerful Grafana dashboard.

New Relic

Infrastructure monitoring of all EC2 instances including memory, CPU, disks capacity and IO utilization. Many out-of-the-box solutions are available, so we decided not to reinvent the wheel. We have been using New Relic for a while now and it has worked perfectly(well almost!), so decided to stick with it.

New Relic has a quick step-wise guide for setting up. The problem we faced with New Relic is with their “Fullest Disk” alerts which are triggered when disk space of the fullest disk mounted on the machine being monitored is beyond alert thresholds. This fullest disk alert once open prevents alerts for the remaining disk from being triggered.

We solved this problem using Sensu disk check plugin which allows to select the disk(s) to be ignored from the check.

Supervisor

We run all the critical processes on Supervisor. It only has support for processes that are not daemonizing, i.e., they should not detach from the terminal from which they have been started. There are many process control features provided by Supervisor including restart on failures, alerts when set number of restart attempts fails, redirect output of processes to custom log directories, and autostart process on machine reboot.

We have instrumented a Sensu plugin that notifies on Slack if a process crashes. Here’s the code:

https://gist.github.com/tanaysoni/486ef4ad37ea97b98691

Monitoring of Services

Apache Kafka

The official monitoring doc is a good starting point for exploring metrics for monitoring Kafka. We use an open-source plugin released by Airbnb for sending the Kafka metrics to a StatsD server.

We have found the following metrics to be useful that we track,

Request Handler Idle Time, which tells us the average fraction of time request handler threads were idle. It lies in the range of 0-1, and should be ideally less than 0.3.

Grafana dash for Kafka

In the above graph, the legends Min, Avg, and Current are added by Grafana. The average value for the entire graph is just under 0.4, which tells us that it’s time to scale our Kafka cluster.

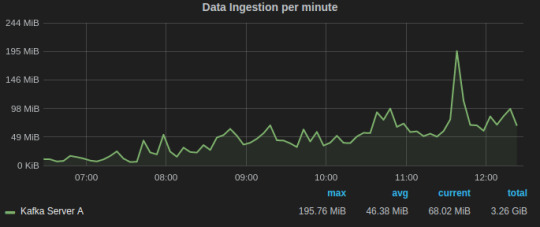

Data/Message Ingestion across all topics helps us to track and comprehend the load on the Kafka servers and how it varies with time.

Grafana dash for Kafka request handler idle time

Alerts for Kafka

A Kafka instance runs Zookeeper and Kafka-Server processes. We run them through Supervisor which automatically restarts a process if it crashes and notifies on Slack via Sensu Supervisor check.

Apache Storm

We use Storm to process data that is consumed from Kafka clusters. The command center of our Storm clusters is the Storm UI, which is provided as a daemon process in the official Storm distribution. This blog is a good documentation for Storm UI.

We run all Storm process under Supervisor, which is instrumented with Sensu to alert Slack if any process is not in the running state.

There could be instances when all Storm daemons are running, but the topology might have crashed due to a code-level bug. For this scenario, we have written a Sensu plugin that parses the output of “./storm list” to check if given topology is deployed and activated.

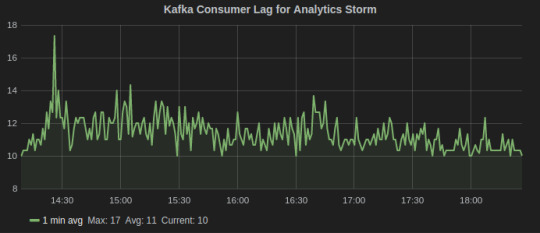

Since, we do stream processing using Storm and Kafka, an important metric is Kafka consumer lag which tells how far is the consumer from the producers. It is essentially the queue length of tuples yet to be consumed by the Storm. There are also Sensu alerts on consumer lag that notifies on Slack if it goes beyond a threshold.

Consumer Lag metric for Kafka-Storm

Gunicorn

Gunicorn comes with a StatsD instrumentation that tracks all the metrics and sends to a StatsD client over UDP. Run Gunicorn with following command line arguments specifying the location of statsD server and an optional prefix to be added to the name of metrics.

gunicorn [ --statsd-prefix sentimentAPI.gunicorn_1] --statsd-host=localhost:8125

We used the following aggregations and transformations in Grafana for the Gunicorn dashboard:

Request status

series sum for all 2xx, 3xx, 4xx, and 5xx response codes

table of avg, current, and total legends help to summarize data for the given time interval

total count of exceptions in the given time range

response time average over one min window

Celery dashboard of last week

MongoDB

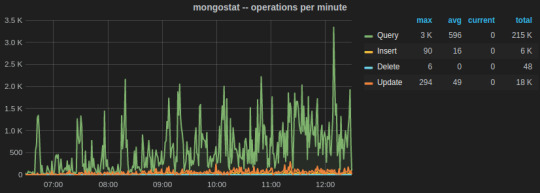

MongoDB has in-built set of utilities for reporting real-time statistics on database activities. We leveraged them to built Sensu plugin that periodically parse output from them to sent to a graphite server. These Graphite metrics are graphed on our Grafana MongoDB dashboard.

The two most important utilities are mongostat and mongotop.

mongostat tracks the load on the servers based on database operations by type including insert, update, query, and delete.

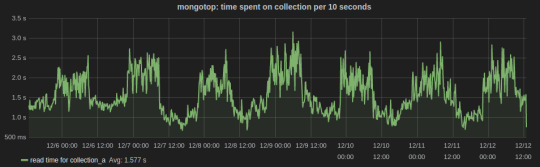

mongotop collect and reports real-time statistics on current read and write activity on a per collection basis. We wrote a Python script to send mongotop metrics to statsD client at an interval of 10 seconds.

https://gist.github.com/tanaysoni/780c4c68447cda8a0a38

Below is a Grafana dash of metrics for a collection graphed over a week time. The peaks and lows corresponds to the business hours, i.e., the reads from the collection were more during the business hours.

Sensu plugins for MongoDB monitoring

Sensu community has metrics and checks for MongoDB.

PostgreSQL

We are primarily tracking the number of connections including active, idle, and idle in transaction on PostgreSQL. For this, we created a Sensu plugin that runs periodically to fetch data from pg_stat table and output as Graphite metrics that are relayed by Sensu. Here’s the code:

https://gist.github.com/tanaysoni/30dabf820c500a58b860

PostgreSQL also provides built-in Postgres statistics collector, whose data can be relayed to a Graphite database using Postgres Sensu plugins.

Celery

Celery is an integral part of our system. Increase in the queue length beyond threshold is a critical state which the team should be informed of.

We have written a Sensu plugin which fetches the queue length of Celery every minute, which is then relayed to GraphiteDB by Sensu. If the queue length is above our warning thresholds, the team is notified on Slack.

Here’s how the Grafana dashboard for Celery looks like.

The hourly average summarization in Grafana smooths out the peaks(when bulk tasks get assigned by Celerybeat) to comprehend the load on the Celery cluster. It gives insight as to when to scale the Celery cluster to add more workers.

2 notes

·

View notes

Text

Mastering iOS Development: A Comprehensive Guide for Beginners and Professionals

Welcome to our comprehensive guide on mastering iOS development! Whether you're a beginner looking to delve into the world of iOS app development or a seasoned professional aiming to enhance your skills, this article will serve as your ultimate resource. We'll cover everything you need to know, from the basics of iOS development to advanced techniques and best practices. So, let's dive in!

Table of Contents

Understanding iOS Development

Setting Up Your Development Environment

Swift Programming Language

Xcode: The Integrated Development Environment (IDE)

User Interface Design and Development

Data Persistence

Networking and Web Services

Core Data and SQLite

Working with APIs

Testing and Debugging

App Distribution and Submission

Performance Optimization

Advanced Topics in iOS Development

Continuous Learning and Improvement

Conclusion

1. Understanding iOS Development

iOS development is the process of creating applications for Apple's mobile devices, such as the iPhone, iPad, and iPod Touch. To become a proficient iOS developer, it's crucial to have a solid understanding of the iOS platform, its frameworks, and the app development life cycle. This section will provide you with a comprehensive overview.

2. Setting Up Your Development Environment

Before you can start building iOS apps, you need to set up your development environment. This involves installing Xcode, Apple's official IDE, and configuring it for iOS development. We'll guide you through the installation process and show you how to create your first iOS project.

3. Swift Programming Language

Swift is Apple's modern programming language designed specifically for iOS, macOS, watchOS, and tvOS development. In this section, we'll explore the fundamentals of Swift, including variables, data types, control flow, functions, and object-oriented programming principles. By mastering Swift, you'll have a strong foundation for iOS app development.

4. Xcode: The Integrated Development Environment (IDE)

Xcode is the primary tool used by iOS developers for building, testing, and debugging applications. We'll take an in-depth look at Xcode's features, including its interface, project structure, code editor, and various debugging tools. Understanding Xcode is essential for efficient and productive iOS development.

5. User Interface Design and Development

Creating visually appealing and user-friendly interfaces is paramount in iOS app development. This section will cover the UIKit framework, Auto Layout, Interface Builder, and other essential components for designing and developing engaging user interfaces. We'll also discuss UI best practices and accessibility guidelines.

6. Data Persistence

Most apps require data persistence to store and retrieve user information. We'll explore different techniques for data persistence in iOS, such as Core Data, SQLite, and UserDefaults. You'll learn how to work with databases, handle data models, and perform efficient data operations within your apps.

7. Networking and Web Services

In today's interconnected world, networking and web services integration are essential for many iOS applications. We'll delve into URLSession and Alamofire frameworks, showcasing how to make network requests, handle responses, and parse JSON data. You'll gain the necessary skills to create apps that communicate with web servers and consume APIs.

8. Core Data and SQLite

Core Data is Apple's powerful framework for managing object graphs and persisting data. In this section, we'll explore Core Data's concepts, including entities, attributes, relationships, and fetching data. We'll also compare Core Data with SQLite, another popular database solution for iOS development.

9. Working with APIs

API integration is a critical aspect of iOS app development. We'll guide you through the process of working with RESTful APIs, authenticating requests, handling errors, and managing data models. You'll learn how to leverage APIs to enrich your app's functionality and provide a seamless user experience.

10. Testing and Debugging

Ensuring the quality and reliability of your apps is paramount. In this section, we'll cover various testing techniques, including unit testing, UI testing, and performance testing. We'll also explore Xcode's debugging tools and techniques for troubleshooting and resolving common issues.

11. App Distribution and Submission

Once you've built your app, it's time to distribute it to users. We'll walk you through the process of creating distribution certificates, provisioning profiles, and App Store Connect submissions. You'll gain a comprehensive understanding of the app distribution workflow and how to publish your app on the App Store.

12. Performance Optimization

Optimizing app performance is crucial for delivering a smooth and responsive user experience. We'll discuss techniques for improving app performance, such as reducing memory footprint, optimizing code execution, and leveraging profiling tools. By optimizing your apps, you'll ensure they run efficiently on iOS devices.

13. Advanced Topics in iOS Development

In this section, we'll explore advanced topics that go beyond the basics of iOS development. We'll cover topics like Core Animation, Core Graphics, advanced networking, concurrency, and integrating third-party libraries. These topics will empower you to create more sophisticated and feature-rich iOS applications.

14. Continuous Learning and Improvement

iOS development is a rapidly evolving field. To stay ahead, it's essential to cultivate a mindset of continuous learning and improvement. We'll provide you with resources, tips, and strategies for staying up to date with the latest iOS technologies, frameworks, and industry trends.

Conclusion

Congratulations on completing our comprehensive guide on mastering iOS development! We've covered a wide range of topics, from the fundamentals of iOS development to advanced techniques. By now, you should have a solid foundation to kick-start your iOS development journey or take your existing skills to the next level. Remember to practice regularly, engage with the iOS developer community, and never stop learning!

1 note

·

View note

Text

Ruby on Rails API Development- Example of Rails API

When a developer says that they are using Rails as the API, it indicates that they are using Ruby on Rails Development to build the backend, which is shared between the web application and native applications. Let’s see how you can develop an API using RoR.

What is an API application?

Application Programming Interface, or API, allows complement to interact with one another. One can request from the Rails app to fetch information by providing URLs.

What is Ruby on Rails?

The best part about Ruby on Rails is that it is open-source software. Users can utilize Ruby on Rails to develop applications or collaborate to bring change to their code.

Example: Ruby on Rails API Development Steps

Step 1: API creation using Rails

To initiate the creation of an API, one must first write the below-written code in the terminal of any chosen directory. Using the code written below will create an API named secret_menu_api

$ rails new secret_menu_api –-api --database=postgresql

You must type the below-written code in the terminal to open the API menu.

$ cd secret_menu_api code

Step 2: Enabling CORS (Cross-Origin Resource Sharing)

CORS enables other people to access the API. To prevent other people from accessing the API, one can disable the CORS. To open the cors.rb file, you need to open the file explorer where the Rails API created now is present.

config > initializers > cors.rb

You must uncomment the lines and change the code from origins ‘example.com’ to origins’*’.

Rails.application.config.middleware.insert_before 0, Rack::Cors do allow do origins '*' resources '*', headers: :any, methods: [:get, :post, :put, :patch, :delete, :options, :head] end end

Open Gemfile by scrolling down from the explorer file. Uncomment gem ‘rack-cors’ in line number 26.

# in Gemfile gem ‘rack-cors’

Open the terminal and run

$ bundle install

Step 3: Create a Controller, Model along with a table with

$ rails g resource Post title description

After using the command, it will then generate the below-mentioned files.

Model [Post]:

app > models > post.rb

Controller:

app > controllers > posts_controller.rb

Route:

config > routes.rb

Database Migration Table:

db > migrate > 20230127064441_create_posts.rb

Step 4: Attributes of Post Model

Title of the post

Description of the post

Specifying attributes

Add the following Code into db > migrate > 20230127064441_create_posts.rb:

class CreatePosts < ActiveRecord::Migration[6.0] def change create_table :posts do |t| t.string :title t.text :description end end end

Migrating the table

$ rails db:migrate

If the data has been migrated successfully, then you will be able to see the following:

== 20230127064441 CreatePosts: migrating ============================= -- create_table(:posts) -> 0.0022s == 20230127064441 CreatePosts: migrated (0.0014s) ====================

Step 5: Defining display, index, destroy, update, and create actions.

Here are the implications of the actions:

Index: It will display all the posts present in the database.

Show: It will display the specific(given as an id) post.

Create: It will make the post’s instance.

Update: It will update the post-item instance.

Delete: It will delete specific post items.

Now copy and then paste the below code in secret_menu_intems_controller.rb.

Now let’s write API magic over here.

app > controllers > posts_controller.rb

class PostsController < ApplicationController def index posts = Post.all render json: posts, status: 200 end

def show post = post.find_by(id: params[:id]) if post render json: post, status: 200 else render json: { error: “Post Not Found” } end end def create post = Post.new( title: params[:title], description: params[:description] ) if post.save render json: post else render json: { error: “Error on creating a record” } end end

def update post = Post.find_by(id: params[:id]) post.update( title: params[:title], description: params[:description] ) render json: {message: “#{post.title} has been updated!”, status: 200} end

def destroy post = Post.find_by(id: params[:id]) if post post.destroy render json: “#{post.title} has been deleted!” else render json: { error: “Post Not Found” } end end end

Step 6: Creating routes for index, create, show, delete, and update actions.

Routes receive HTTP requests that come from the client side. We have to forward it using the correct actions. To configure the route, copy the following code and paste it into the route.rb.

# config > routes.rb

Rails.application.routes.draw do resources :posts, only: [:index, :show, :create, :update, :destroy] end

Step 7: Seeding the data

Now in the database, create secret menu item instances.

# db > seed.rb

post1 = Post.create(title: "Better way to improve Ruby on Rails coding", description:"Lorem Ipsum is simply dummy text of the printing and typesetting industry.")

post2 = Post.create(title: "perfect Combination of Angular + Ruby on Rails", menu_description:"Lorem Ipsum is simply dummy text of the printing and typesetting industry.")

Seeding the data

$ rails db:seed

Verify if seeding was correctly done:

$ rails c

# It will now show a console

2.6.1 :002 >

Now you can extract all the instances of secret menu items by typing SecretMenuItem.all

2.6.1 :002 > Post.all Post Load (0.1ms) SELECT "secret_menu_items".* FROM "posts"