#gradient in neural network

Explore tagged Tumblr posts

Text

Vanishing gradient explained in detail _ day 20

Understanding and Addressing the Vanishing Gradient Problem in Deep Learning Understanding and Addressing the Vanishing Gradient Problem in Deep Learning Part 1: What is the Vanishing Gradient Problem and How to Solve It? In the world of deep learning, as models grow deeper and more complex, they bring with them a unique set of challenges. One such challenge is the vanishing gradient problem—a…

0 notes

Text

Machine Learning from scratch

Introduction This is the second project I already had when I posted Updates to project. Here is its repository: Machine Learning project on GitHub1. I started it as the Artificial Intelligence hype was going stronger, just to have a project on a domain that’s of big interest nowadays. At that point I was thinking to continue it with convolutional networks and at least recurrent networks, not…

#artificial neural networks#classification#logistic#numerical methods#optimization#regression#stochastic gradient descent

0 notes

Text

Sundress Season - S.R

a/n: spent all friday & saturday writing so sorry 4 dumping so many works 2night lololol

‧₊˚ ✩°。⋆♡ ⋆˙⟡♡ ⋆˙⟡♡⋆。°✩˚₊‧

pairings: spencer reid x fem!reader

summary: spencer decides to come help you out with some research and gets a little more than he bargained for

warnings: fluff, thigh kink if you SQUINT LIKE SQUINT

wc: 0.9k

You crossed one leg over the other, your nails drumming against the table, while your eyes bored holes into the book that lay open in your lap. You loved reading, more than most people, but when it was something you were interested in, not when the pages were smeared with the arcane symbols of mathematical algorithms that you could not seem to comprehend. It was giving you a migraine.

At the call of your name, your head lifted abruptly, a welcome excuse the cast aside the loathsome book, expecting your coffee to be awaiting you at the counter. You weren't, however, expecting to see Spencer standing there. Your brows knitted together in a moment of confusion before you face relaxed into a warm, welcoming smile.

"Spence? Hey, what are you doing here?"

"JJ said you were researching the neural network algorithms," Spencer said, his voice tinged with a hint of amusement as he pulled out the chair across from you. "I figured I could lend a hand."

“Oh, bless your heart, Dr. Reid,” you praised, hand dramatically pressed to your heart, “I could kiss you.”

The subtle rosiness that blossomed on Reid’s cheeks didn’t escape your notice, and you couldn’t deny the small thrill of saying things designed to elicit the delightful blush. It was cute.

“May I?” he asks, gesturing towards the book, ignoring your words.

You give a nod and pass it over, his fingers brushing over yours in the process. It was hard not to stare at his face, admittedly, your scientific knowledge (or any knowledge) didn’t rival his, yet surely there was some explanation for why you found him so attractive.

You watched, curiously, as he made quick work of the pages, absorbing the information with the ease of a child flipping through a picture book. Maybe that was it—his intelligence, now that wasn’t far off. I mean, who didn’t want a man who could effortlessly recite pi to the hundredth decimal?

You found yourself following the lines of his face— from the subtle shadows under his eyes to the rhythmic movement of his tongue against the inside of his cheek as he concentrated, down to the soft dip of his lips. God, he was so beautiful. And even that term barely did him justice.

Your blatant starring was broken only when you realized his lips were moving.

“Yeah, totally,” you said, bobbing your head in agreement, clueless to his actual words but hoping you said the right thing.

He regarded you with a puzzled glance, his brow raised while carefully marking his place in the book. “Is that so?”

“Absolutely.”

That famous, gorgeous smile of his spread across his face as his eyes darted around the coffee shop. His fingers patted his cheek thoughtfully in silent, teasing challenge.

“Wait, what?”

“The issue was with adjusting the weight initialization to prevent the vanishing gradient problem,” he remarked with an easy shrug. “Seems like the perfect time for that well-deserved kiss.”

His words sent a wave of warmth flooding your cheeks. Was he serious? You decided you didn’t care. Rising just enough to meet him, you cupped his face and planted a sloppy kiss against his cheek. As your drew back, you couldn’t help but delight in the sight of his ears, now tinted with a charming blush of red.

The intimate bubble burst as the barista’s voice rang out, announcing that your coffee was, in fact, prepared at last. You tapped his nose lightly before standing fully. “My hero.”

Spencer watched with a slack jaw as you walked away from the table, his eyes drawn to your thighs. The air seemed to escape him in a rush, his gaze locked on your outfit, now fully revealed as you stood up. He was so used to seeing you in dress pants, he’d never seen you in a dress, a sundress at that.

He was already burning from the feeling of your lips on his cheek but now it was spreading through every part of him as he traced your curves before landing once again on your supple thighs. God, you were beautiful, and that ass—

He was on the cusp of entertaining some rather less-than-holy ideas when the shrill ring of his phone intervened. He mentally berated the caller, wishing to preserve every detail of your image in his mind. Morgan. Naturally.

He swiped deftly at the phone, realizing it was FaceTime. Morgan’s head filled the screen, his eyebrows shooting up as he took in Spencer’s appearance.

“Morning, lover boy.”

Spencer was unsure what he meant. “Huh?”

Morgan simply flicked his cheek with a smirk. “Looks like ya missed a spot, hot stuff.”

Spencer’s face warmed with a fresh flush, hastily angling the phone away, his fingers working to erase the lipstick stain.

“Whoa, whoa, hold up, man! You on a hot date or something? C’mon, Reid, who’s the lucky lady?”

Once assured his skin was free of the pink evidence, Spencer lifted the phone again. He didn’t get a chance to ask Morgan’s reason for calling, as your face appeared behind him, curiously glancing at the phone.

“Oh, hey Morgan!”

Morgan’s mouth dropped open. “No way! You’re kidding me! Penelope is going to freak—,”

His words were cut short as Spencer swiftly hung up.

#spender reid x reader#spencer reid fluff#spencer reid#spencer reid x fem!reader#criminal minds x you#spencer reid x you#criminal minds fanfic

1K notes

·

View notes

Text

Old-school planning vs new-school learning is a false dichotomy

I wanted to follow up on this discussion I was having with @metamatar, because this was getting from the original point and justified its own thread. In particular, I want to dig into this point

rule based planners, old school search and control still outperform learning in many domains with guarantees because end to end learning is fragile and dependent on training distribution. Lydia Kavraki's lab recently did SIMD vectorisation to RRT based search and saw like a several hundred times magnitude jump for performance on robot arms – suddenly severely hurting the case for doing end to end learning if you can do requerying in ms. It needs no signal except robot start, goal configuration and collisions. Meanwhile RL in my lab needs retraining and swings wildly in performance when using a slightly different end effector.

In general, the more I learn about machine learning and robotics, the less I believe that the dichotomies we learn early on actually hold up to close scrutiny. Early on we learn about how support vector machines are non-parametric kernel methods, while neural nets are parametric methods that update their parameters by gradient descent. And this is true, until you realize that kernel methods can be made more efficient by making them parametric, and large neural networks generalize because they approximate non-parametric kernel methods with stationary parameters. Early on we learn that model-based RL learns a model that it uses for planning, while model free methods just learn the policy. Except that it's possible to learn what future states a policy will visit and use this to plan without learning an explicit transition function, using the TD learning update normally used in model-free RL. And similar ideas by the same authors are the current state-of-the-art in offline RL and imitation learning for manipulation Is this model-free? model-based? Both? Neither? does it matter?

In my physics education, one thing that came up a lot is duality, the idea that there are typically two or more equivalent representations of a problem. One based on forces, newtonian dynamics, etc, and one as a minimization* problem. You can find the path that light will take by knowing that the incoming angle is always the same as the outgoing angle, or you can use the fact that light always follows the fastest* path between two points.

I'd like to argue that there's a similar but underappreciated analog in AI research. Almost all problems come down to optimization. And in this regard, there are two things that matter -- what you're trying to optimize, and how you're trying to optimize it. And different methods that optimize approximately the same objective see approximately similar performance, unless one is much better than the other at doing that optimization. A lot of classical planners can be seen as approximately performing optimization on a specific objective.

Let me take a specific example: MCTS and policy optimization. You can show that the Upper Confidence Bound algorithm used by MCTS is approximately equal to regularized policy optimization. You can choose to guide the tree search with UCB (a classical bandit algorithm) or policy optimization (a reinforcement learning algorithm), but the choice doesn't matter much because they're optimizing basically the same thing. Similarly, you can add a state occupancy measure regularization to MCTS. If you do, MCTS reduces to RRT in the case with no rewards. And if you do this, then the state-regularized MCTS searches much more like a sampling-based motion planner instead of like the traditional UCB-based MCTS planner. What matters is really the objective that the planner was trying to optimize, not the specific way it was trying to optimize it.

For robotics, the punchline is that I don't think it's really the distinction of new RL method vs old planner that matters. RL methods that attempt to optimize the same objective as the planner will perform similarly to the planner. RL methods that attempt to optimize different objectives will perform differently from each other, and planners that attempt to optimize different objectives will perform differently from each other. So I'd argue that the brittleness and unpredictability of RL in your lab isn't because it's RL persay, but because standard RL algorithms don't have long-horizon exploration term in their loss functions that would make them behave similarly to RRT. If we find a way to minimize the state occupancy measure loss described in the above paper other theory papers, I think we'll see the same performance and stability as RRT, but for a much more general set of problems. This is one of the big breakthroughs I'm expecting to see in the next 10 years in RL.

*okay yes technically not always minimization, the physical path can can also be an inflection point or local maxima, but cmon, we still call it the Principle of Least Action.

#note: this is of course a speculative opinion piece outlining potentially fruitful research directions#not a hard and fast “this will happen” prediction or guide to achieving practical performance

29 notes

·

View notes

Text

neural networks are loosely inspired by biology and can produce results that feel "organic" in some sense but the rigid split between training and inference feels artificial -- brains may become less plastic as they grow but they don't stop learning -- and the method of creating a neural network using gradient descent to minimise a loss function on a training set feels incredibly heavy handed, a clumsy brute force approach to something biology handles much more elegantly; but how?

28 notes

·

View notes

Note

ok i know ur a gradient but like..what even are gradients.. yall got tails n other freaky stuff like how do yall work i wanna major on gradient biology

I AM SO GLAD YOU ASKED THIS I HAVE BEEN THINKING THIS UP FOR AGES NOW

so gradients have a sort of 'base form', which is the form they're most comfortable in. any other form can be exhausting to hold after a while (with the exception of liquid forms). these forms have all their organs and such, as well as their hair, tails, and facial features inherited from their parents. speaking of inheritance, i wanna say right now that i have introduced at least one of plez's relatives among the cast of new characters. do with that what you will.

when it comes to organs, gradients dont have many. really just their eye, teeth, neural network, immune system, and reproductive system. every other job is just done by whatever weird goop they're made of. i havent really figured that out.

gradient medical diagram. i hope its comprehensible enough. i was tryna look up anatomy diagrms for this and so mch peanus. if you have questions maybe ask it on my main @expungedagalungagoo so i dont stuff up this blog with ooc ramblings about fake beings ok bye.

#infodump#ooc unpleasant#regretevator#regretevator roblox#roblox regretevator#gradient oc#regretevator gradient oc

44 notes

·

View notes

Text

youtube

interesting video about why scaling up neural nets doesn't just overfit and memorise more, contrary to classical machine learning theory.

the claim, termed the 'lottery ticket hypothesis', infers from the ability to 'prune' most of the weights from a large network without completely destroying its inference ability that it is effectively simultaneously optimising loads of small subnetworks and some of them will happen to be initialised in the right part of the solution space to find a global rather than local minimum when they do gradient descent, that these types of solutions are found quicker than memorisation would be, and most of the other weights straight up do nothing. this also presumably goes some way to explaining why quantisation works.

presumably it's then possible that with a more complex composite task like predicting all of language ever, a model could learn multiple 'subnetworks' that are optimal solutions for different parts of the problem space and a means to select into them. the conclusion at the end, where he speculates that the kolmogorov-simplest model for language would be to fully simulate a brain, feels like a bit of a reach but the rest of the video is interesting. anyway it seems like if i dig into pruning a little more, it leads to a bunch of other papers since the original 2018 one, for example, so it seems to be an area of active research.

hopefully further reason to think that high performing model sizes might come down and be more RAM-friendly, although it won't do much to help with training cost.

10 notes

·

View notes

Text

Interesting Papers for Week 14, 2025

Dopamine facilitates the response to glutamatergic inputs in astrocyte cell models. Bezerra, T. O., & Roque, A. C. (2024). PLOS Computational Biology, 20(12), e1012688.

Associative plasticity of granule cell inputs to cerebellar Purkinje cells. Conti, R., & Auger, C. (2024). eLife, 13, e96140.3.

Novel off-context experience constrains hippocampal representational drift. Elyasaf, G., Rubin, A., & Ziv, Y. (2024). Current Biology, 34(24), 5769-5773.e3.

Task goals shape the relationship between decision and movement speed. Fievez, F., Cos, I., Carsten, T., Derosiere, G., Zénon, A., & Duque, J. (2024). Journal of Neurophysiology, 132(6), 1837–1856.

Neural networks with optimized single-neuron adaptation uncover biologically plausible regularization. Geadah, V., Horoi, S., Kerg, G., Wolf, G., & Lajoie, G. (2024). PLOS Computational Biology, 20(12), e1012567.

Adaptation to visual sparsity enhances responses to isolated stimuli. Gou, T., Matulis, C. A., & Clark, D. A. (2024). Current Biology, 34(24), 5697-5713.e8.

Neuro-cognitive multilevel causal modeling: A framework that bridges the explanatory gap between neuronal activity and cognition. Grosse-Wentrup, M., Kumar, A., Meunier, A., & Zimmer, M. (2024). PLOS Computational Biology, 20(12), e1012674.

Trans-retinal predictive signals of visual features are precise, saccade-specific and operate over a wide range of spatial frequencies. Grzeczkowski, L., Stein, A., & Rolfs, M. (2024). Journal of Neurophysiology, 132(6), 1887–1895.

Adapting to time: Why nature may have evolved a diverse set of neurons. Habashy, K. G., Evans, B. D., Goodman, D. F. M., & Bowers, J. S. (2024). PLOS Computational Biology, 20(12), e1012673.

Study design features increase replicability in brain-wide association studies. Kang, K., Seidlitz, J., Bethlehem, R. A. I., Xiong, J., Jones, M. T., Mehta, K., Keller, A. S., Tao, R., Randolph, A., Larsen, B., Tervo-Clemmens, B., Feczko, E., Dominguez, O. M., Nelson, S. M., Alexander-Bloch, A. F., Fair, D. A., Schildcrout, J., Fair, D. A., Satterthwaite, T. D., … Vandekar, S. (2024). Nature, 636(8043), 719–727.

Midbrain encodes sound detection behavior without auditory cortex. Lee, T.-Y., Weissenberger, Y., King, A. J., & Dahmen, J. C. (2024). eLife, 12, e89950.4.

Stable sequential dynamics in prefrontal cortex represents subjective estimation of time. Li, Y., Yin, W., Wang, X., Li, J., Zhou, S., Ma, C., Yuan, P., & Li, B. (2024). eLife, 13, e96603.3.

Homeostatic synaptic normalization optimizes learning in network models of neural population codes. Mayzel, J., & Schneidman, E. (2024). eLife, 13, e96566.3.

Membrane potential states gate synaptic consolidation in human neocortical tissue. Mittermaier, F. X., Kalbhenn, T., Xu, R., Onken, J., Faust, K., Sauvigny, T., Thomale, U. W., Kaindl, A. M., Holtkamp, M., Grosser, S., Fidzinski, P., Simon, M., Alle, H., & Geiger, J. R. P. (2024). Nature Communications, 15, 10340.

Representational spaces in orbitofrontal and ventromedial prefrontal cortex: task states, values, and beyond. Moneta, N., Grossman, S., & Schuck, N. W. (2024). Trends in Neurosciences, 47(12), 1055–1069.

Environmental complexity modulates information processing and the balance between decision-making systems. Mugan, U., Hoffman, S. L., & Redish, A. D. (2024). Neuron, 112(24), 4096-4114.e10.

Hierarchical gradients of multiple timescales in the mammalian forebrain. Song, M., Shin, E. J., Seo, H., Soltani, A., Steinmetz, N. A., Lee, D., Jung, M. W., & Paik, S.-B. (2024). Proceedings of the National Academy of Sciences, 121(51), e2415695121.

Human short-latency reflexes show precise short-term gain adaptation after prior motion. Stratmann, P., Schmidt, A., Höppner, H., van der Smagt, P., Meindl, T., Franklin, D. W., & Albu-Schäffer, A. (2024). Journal of Neurophysiology, 132(6), 1680–1692.

Orbitofrontal control of the olfactory cortex regulates olfactory discrimination learning. Wang, D., Zhang, Y., Li, S., Liu, P., Li, X., Liu, Z., Li, A., & Wang, D. (2024). Journal of Physiology, 602(24), 7003–7026.

Spatial context non-uniformly modulates inter-laminar information flow in the primary visual cortex. Xu, X., Morton, M. P., Denagamage, S., Hudson, N. V., Nandy, A. S., & Jadi, M. P. (2024). Neuron, 112(24), 4081-4095.e5.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience

9 notes

·

View notes

Text

Machine Learning: A Comprehensive Overview

Machine Learning (ML) is a subfield of synthetic intelligence (AI) that offers structures with the capacity to robotically examine and enhance from revel in without being explicitly programmed. Instead of using a fixed set of guidelines or commands, device studying algorithms perceive styles in facts and use the ones styles to make predictions or decisions. Over the beyond decade, ML has transformed how we have interaction with generation, touching nearly each aspect of our every day lives — from personalised recommendations on streaming services to actual-time fraud detection in banking.

Machine learning algorithms

What is Machine Learning?

At its center, gadget learning entails feeding facts right into a pc algorithm that allows the gadget to adjust its parameters and improve its overall performance on a project through the years. The more statistics the machine sees, the better it usually turns into. This is corresponding to how humans study — through trial, error, and revel in.

Arthur Samuel, a pioneer within the discipline, defined gadget gaining knowledge of in 1959 as “a discipline of take a look at that offers computers the capability to study without being explicitly programmed.” Today, ML is a critical technology powering a huge array of packages in enterprise, healthcare, science, and enjoyment.

Types of Machine Learning

Machine studying can be broadly categorised into 4 major categories:

1. Supervised Learning

For example, in a spam electronic mail detection device, emails are classified as "spam" or "no longer unsolicited mail," and the algorithm learns to classify new emails for this reason.

Common algorithms include:

Linear Regression

Logistic Regression

Support Vector Machines (SVM)

Decision Trees

Random Forests

Neural Networks

2. Unsupervised Learning

Unsupervised mastering offers with unlabeled information. Clustering and association are commonplace obligations on this class.

Key strategies encompass:

K-Means Clustering

Hierarchical Clustering

Principal Component Analysis (PCA)

Autoencoders

three. Semi-Supervised Learning

It is specifically beneficial when acquiring categorised data is highly-priced or time-consuming, as in scientific diagnosis.

Four. Reinforcement Learning

Reinforcement mastering includes an agent that interacts with an surroundings and learns to make choices with the aid of receiving rewards or consequences. It is broadly utilized in areas like robotics, recreation gambling (e.G., AlphaGo), and independent vehicles.

Popular algorithms encompass:

Q-Learning

Deep Q-Networks (DQN)

Policy Gradient Methods

Key Components of Machine Learning Systems

1. Data

Data is the muse of any machine learning version. The pleasant and quantity of the facts directly effect the performance of the version. Preprocessing — consisting of cleansing, normalization, and transformation — is vital to make sure beneficial insights can be extracted.

2. Features

Feature engineering, the technique of selecting and reworking variables to enhance model accuracy, is one of the most important steps within the ML workflow.

Three. Algorithms

Algorithms define the rules and mathematical fashions that help machines study from information. Choosing the proper set of rules relies upon at the trouble, the records, and the desired accuracy and interpretability.

4. Model Evaluation

Models are evaluated the use of numerous metrics along with accuracy, precision, consider, F1-score (for class), or RMSE and R² (for regression). Cross-validation enables check how nicely a model generalizes to unseen statistics.

Applications of Machine Learning

Machine getting to know is now deeply incorporated into severa domain names, together with:

1. Healthcare

ML is used for disorder prognosis, drug discovery, customized medicinal drug, and clinical imaging. Algorithms assist locate situations like cancer and diabetes from clinical facts and scans.

2. Finance

Fraud detection, algorithmic buying and selling, credit score scoring, and client segmentation are pushed with the aid of machine gaining knowledge of within the financial area.

3. Retail and E-commerce

Recommendation engines, stock management, dynamic pricing, and sentiment evaluation assist businesses boom sales and improve patron revel in.

Four. Transportation

Self-riding motors, traffic prediction, and route optimization all rely upon real-time gadget getting to know models.

6. Cybersecurity

Anomaly detection algorithms help in identifying suspicious activities and capacity cyber threats.

Challenges in Machine Learning

Despite its rapid development, machine mastering still faces numerous demanding situations:

1. Data Quality and Quantity

Accessing fantastic, categorised statistics is often a bottleneck. Incomplete, imbalanced, or biased datasets can cause misguided fashions.

2. Overfitting and Underfitting

Overfitting occurs when the model learns the education statistics too nicely and fails to generalize.

Three. Interpretability

Many modern fashions, specifically deep neural networks, act as "black boxes," making it tough to recognize how predictions are made — a concern in excessive-stakes regions like healthcare and law.

4. Ethical and Fairness Issues

Algorithms can inadvertently study and enlarge biases gift inside the training facts. Ensuring equity, transparency, and duty in ML structures is a growing area of studies.

5. Security

Adversarial assaults — in which small changes to enter information can fool ML models — present critical dangers, especially in applications like facial reputation and autonomous riding.

Future of Machine Learning

The destiny of system studying is each interesting and complicated. Some promising instructions consist of:

1. Explainable AI (XAI)

Efforts are underway to make ML models greater obvious and understandable, allowing customers to believe and interpret decisions made through algorithms.

2. Automated Machine Learning (AutoML)

AutoML aims to automate the stop-to-cease manner of applying ML to real-world issues, making it extra reachable to non-professionals.

3. Federated Learning

This approach permits fashions to gain knowledge of across a couple of gadgets or servers with out sharing uncooked records, enhancing privateness and efficiency.

4. Edge ML

Deploying device mastering models on side devices like smartphones and IoT devices permits real-time processing with reduced latency and value.

Five. Integration with Other Technologies

ML will maintain to converge with fields like blockchain, quantum computing, and augmented fact, growing new opportunities and challenges.

2 notes

·

View notes

Text

The human mind has no definite state on any matter. No one mind comprehends the world the same, but many people conform. No one model of the mind exists, but many work well enough to be used, but also many conflict in key components.

Behaviour can come from biology, physical structure, environmental influences, current received input, taught behaviour, hormones, neurotransmitters, specialised structures, and inward conjecture. All of which differ vastly

If the brain were an actual neural network, the synaptic firing that regularly occurs would just be teaching the brain to retain its current state. Memories would just be a gradient mapping and recall of input relativity to other inputs.

We can't map out neuron clusters because not every brain has the wiring.

Neural networks aren't even close to the brain. I fucking hate that comparison. They're using math to approximate the shape some function makes. Its like covering a frozen blanket over an art student's sculpture using only a ballpoint pen

The brain is a machine that uses nature to construct these approximations, encoding hyper-compressed genetic data on the right initial firings and right densities that would preclude the degradation of function, leading to "functional groups" of neurons that have no purpose but to generally exist, made to roughly approximate hundreds of models that process things in ways we could never even comprehend, or imagine the intended genetic function of.

If you try and describe the brain to anybody it'll sound like fucking science fiction, but life is a metaphysical entity that yearns to feed off of waves of entropy, so it burst through the seams of whatever part of the earth FUCA came from, and decided "hey lets lead to a maximised creature designed to create change"

I'm not saying we are maximally made, but we're the best that there is currently in the same way that adding and modifying more and more computer parts to a shitty pc would make a maximally good computer

and thus society and industry and classes and hierarchies are born, dents in the pathways that make up the multidimensional shape we call our hippocampus, forged likely from the will of life, as the first step into catalysing the world.

Creatures that don't maximally destroy or change are the ones that fall victims to those that maximally do, after all.

Idk what this rant is about, brains weird, do whatever the fuck you want and make your mind whatever the fuck you want, as long as it perpetuates and encourages the story of your life and others to be beautiful and complex.

Moral of the story: fuckin idk

7 notes

·

View notes

Text

The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswami, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswami's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswami notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswami highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of the beauty and elegance of machine learning mathematics. For instance, the concept of gradient descent, a fundamental algorithm used in machine learning, is a powerful example of how math can be used to optimize model parameters.

Ananthaswami emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us to evaluate AI systems more effectively, develop more transparent and explainable AI systems, and address AI bias and ensure fairness in decision-making. As Ananthaswami notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)

youtube

Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)

youtube

Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input data irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients. Transformers have become the standard for machine translation tasks, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization, generating concise summaries of long documents. Transformers help in understanding the context of questions and identifying relevant answers from a given text. By analyzing the context and nuances of language, transformers can accurately determine the sentiment behind text. While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches. Transformers are used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data. The self-attention mechanism can be beneficial for understanding patterns in time series data, leading to more accurate forecasts.

Attention is all you need (Umar Hamil, May 2023)

youtube

Geometric deep learning is a subfield of deep learning that focuses on the study of geometric structures and their representation in data. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)

youtube

Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)

youtube

Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

4 notes

·

View notes

Text

Commissions OPEN!

Hey everybody, I am officially opening commissions as of 24 June 2023. I will update this post if my queue is filled up.

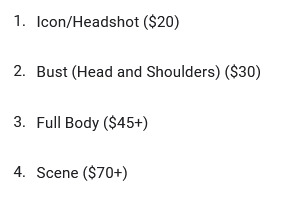

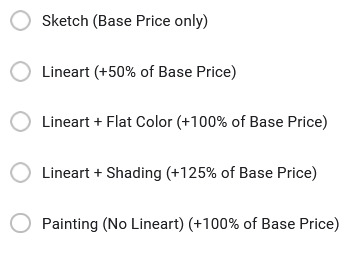

PRICES

BACKGROUND

No Background

Flat Color/Gradient Background (No extra charge)

Basic Background (+$5)

Simple to Detailed Background (+$15 - $30)

Scene (Please discuss price with me)

ADDITIONAL RULES

No NSFW, mildly explicit content is okay.

No gore.

Individual, handheld props don't cost extra.

Please be polite. Rude or inflammatory requests will be rejected.

No racist, sexist, homophobic, transphobic, or otherwise bigoted content is permitted.

I require a visual reference of your character! I reserve the right to charge additional fees to work solely off of text descriptions.

These are BASE PRICES ONLY! I may charge an extra fee depending on complexity!

As always, it is not permitted to use my artwork to train, validate or test any neural network or artificial intelligence, as it violates terms against alteration.

Payment is in full, upfront, once I have accepted your commission. I will contact you via email or DM. Please be sure to check my TOS and my Queue before commissioning.

Request a Commission Here!

#my art#digital art#commissions#commission sheet#art#dnd#original characters#sky: children of the light#artists on tumblr

35 notes

·

View notes

Text

🌟 Ứng dụng ResNet-50: Đột phá trong phân loại hình ảnh! 📸🔍

💡 Trong thời đại công nghệ số, việc phân loại hình ảnh đang trở thành một trong những bài toán quan trọng nhất 🌐. Với sự phát triển của các mô hình deep learning, ResNet-50 nổi lên như một giải pháp vượt trội nhờ khả năng xử lý d��� liệu hình ảnh nhanh và chính xác 🔥.

📖 ResNet-50 là gì? Đây là một mô hình ResNet (Residual Network) với 50 lớp, được thiết kế để giải quyết vấn đề gradient biến mất khi mạng neural trở nên quá sâu 🧠➡️📈. Đặc điểm nổi bật của ResNet-50 nằm ở việc sử dụng khối residual, cho phép thông tin truyền qua mạng hiệu quả hơn. Điều này giúp cải thiện độ chính xác mà không làm tăng độ phức tạp 🚀.

🔎 Ứng dụng thực tế:

📷 Phân loại sản phẩm: Phù hợp cho các hệ thống thương mại điện tử để nhận diện hàng hóa.

🩺 Y tế: Hỗ trợ nhận diện các tổn thương trong hình ảnh y tế, như X-quang hay MRI.

🚗 Ô tô tự hành: Phân tích hình ảnh để nhận biết vật thể trên đường.

👉 Bạn muốn tìm hiểu chi tiết cách ResNet-50 hoạt động và ứng dụng vào dự án thực tế của mình? Đọc ngay bài viết tại đây: Ứng dụng ResNet-50 vào phân loại hình ảnh 📲✨

📌 Đừng quên thả ❤️ và chia sẻ bài viết này nếu bạn thấy hữu ích nhé!

Khám phá thêm những bài viết giá trị tại aicandy.vn

3 notes

·

View notes

Text

NVIDIA AI Workflows Detect False Credit Card Transactions

A Novel AI Workflow from NVIDIA Identifies False Credit Card Transactions.

The process, which is powered by the NVIDIA AI platform on AWS, may reduce risk and save money for financial services companies.

By 2026, global credit card transaction fraud is predicted to cause $43 billion in damages.

Using rapid data processing and sophisticated algorithms, a new fraud detection NVIDIA AI workflows on Amazon Web Services (AWS) will assist fight this growing pandemic by enhancing AI’s capacity to identify and stop credit card transaction fraud.

In contrast to conventional techniques, the process, which was introduced this week at the Money20/20 fintech conference, helps financial institutions spot minute trends and irregularities in transaction data by analyzing user behavior. This increases accuracy and lowers false positives.

Users may use the NVIDIA AI Enterprise software platform and NVIDIA GPU instances to expedite the transition of their fraud detection operations from conventional computation to accelerated compute.

Companies that use complete machine learning tools and methods may see an estimated 40% increase in the accuracy of fraud detection, which will help them find and stop criminals more quickly and lessen damage.

As a result, top financial institutions like Capital One and American Express have started using AI to develop exclusive solutions that improve client safety and reduce fraud.

With the help of NVIDIA AI, the new NVIDIA workflow speeds up data processing, model training, and inference while showcasing how these elements can be combined into a single, user-friendly software package.

The procedure, which is now geared for credit card transaction fraud, might be modified for use cases including money laundering, account takeover, and new account fraud.

Enhanced Processing for Fraud Identification

It is more crucial than ever for businesses in all sectors, including financial services, to use computational capacity that is economical and energy-efficient as AI models grow in complexity, size, and variety.

Conventional data science pipelines don’t have the compute acceleration needed to process the enormous amounts of data needed to combat fraud in the face of the industry’s continually increasing losses. Payment organizations may be able to save money and time on data processing by using NVIDIA RAPIDS Accelerator for Apache Spark.

Financial institutions are using NVIDIA’s AI and accelerated computing solutions to effectively handle massive datasets and provide real-time AI performance with intricate AI models.

The industry standard for detecting fraud has long been the use of gradient-boosted decision trees, a kind of machine learning technique that uses libraries like XGBoost.

Utilizing the NVIDIA RAPIDS suite of AI libraries, the new NVIDIA AI workflows for fraud detection improves XGBoost by adding graph neural network (GNN) embeddings as extra features to assist lower false positives.

In order to generate and train a model that can be coordinated with the NVIDIA Triton Inference Server and the NVIDIA Morpheus Runtime Core library for real-time inferencing, the GNN embeddings are fed into XGBoost.

All incoming data is safely inspected and categorized by the NVIDIA Morpheus framework, which also flags potentially suspicious behavior and tags it with patterns. The NVIDIA Triton Inference Server optimizes throughput, latency, and utilization while making it easier to infer all kinds of AI model deployments in production.

NVIDIA AI Enterprise provides Morpheus, RAPIDS, and Triton Inference Server.

Leading Financial Services Companies Use AI

AI is assisting in the fight against the growing trend of online or mobile fraud losses, which are being reported by several major financial institutions in North America.

American Express started using artificial intelligence (AI) to combat fraud in 2010. The company uses fraud detection algorithms to track all client transactions worldwide in real time, producing fraud determinations in a matter of milliseconds. American Express improved model accuracy by using a variety of sophisticated algorithms, one of which used the NVIDIA AI platform, therefore strengthening the organization��s capacity to combat fraud.

Large language models and generative AI are used by the European digital bank Bunq to assist in the detection of fraud and money laundering. With NVIDIA accelerated processing, its AI-powered transaction-monitoring system was able to train models at over 100 times quicker rates.

In March, BNY said that it was the first big bank to implement an NVIDIA DGX SuperPOD with DGX H100 systems. This would aid in the development of solutions that enable use cases such as fraud detection.

In order to improve their financial services apps and help protect their clients’ funds, identities, and digital accounts, systems integrators, software suppliers, and cloud service providers may now include the new NVIDIA AI workflows for fraud detection. NVIDIA Technical Blog post on enhancing fraud detection with GNNs and investigate the NVIDIA AI workflows for fraud detection.

Read more on Govindhtech.com

#NVIDIAAI#AWS#FraudDetection#AI#GenerativeAI#LLM#AImodels#News#Technews#Technology#Technologytrends#govindhtech#Technologynews

2 notes

·

View notes

Text

Amitie

♀️ | Dissessembly Drone

Is (quite literally) a worker drone in a dissessembly drone's body (was transferred into a dissessembly drone body from an untrained neural network shell (baby drone body)).

Her hair isn't dyed blonde, the gradient is natural.

Wears a cap on her tail needle/stinger most of the time to not harm herself or others (she even wears it to sleep).

Barely knows anything about the AbsoluteSolver (the only reason is due to having a classmate that has it *coughcoughRaffinacoughcough*)

#character bio#puyo puyo#murder drones#au#alternate universe#puyo pop#puyo puyo au#murder drones au#amitie#puyo puyo amitie#amitie puyo puyo

12 notes

·

View notes

Text

so if you create a frickin' enormous neural network with randomised weights and then train it with gradient descent on a frickin' enormous dataset then aren't you essentially doing evolution on a population of random functions, genetic programming style?

except of course the functions can hook into each other, so it's more sophisticated than your average genetic programming arrangement...

26 notes

·

View notes