#hbase


What is HBase? For more information and a tutorial, see https://bit.ly/3UU2Ucw


Are you looking for comprehensive HBase Training in Noida? Look no further than APTRON Solutions Noida, a leading training institute offering top-notch HBase courses. With a proven track record of excellence and a dedicated team of industry experts, APTRON Solutions Noida is the ideal choice for individuals and organizations seeking to enhance their HBase skills.


HBase is a distributed, scalable NoSQL database built on Hadoop to handle large amounts of structured data. Its architecture consists of Region Servers that manage data regions, a Master server (HMaster) for coordination, and HDFS for storage. It supports real-time read/write access, enabling efficient handling of big data applications. Check here to learn more.
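As a rough illustration of how a row key ends up on a particular Region Server: HBase keeps rows sorted by key and splits a table into regions, each covering a contiguous key range. The sketch below is plain Python, not the HBase client API, and the start keys and server names are made up for the example.

```python
from bisect import bisect_right
from typing import Tuple

# Hypothetical region layout: each region starts at a row key and runs up to
# the next region's start key. The empty string marks the first region.
REGION_START_KEYS = ["", "g", "n", "t"]
REGION_SERVERS = ["rs1", "rs2", "rs1", "rs3"]  # made-up server names


def locate_region(row_key: str) -> Tuple[int, str]:
    """Return (region index, hosting server) for a row key."""
    # bisect_right finds the first start key greater than row_key;
    # the region that owns row_key is the one just before it.
    index = bisect_right(REGION_START_KEYS, row_key) - 1
    return index, REGION_SERVERS[index]


if __name__ == "__main__":
    for key in ["apple", "grape", "zebra"]:
        print(key, locate_region(key))
```

Because the lookup is a binary search over sorted start keys, a read or write for a single row only has to touch the one server hosting that range.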


Yay - I get to share my love for tidbit Hazbin lore while sharing knowledge that makes me look like a millennial boomer XD Ahem... Alastor, our favorite overlord, for all intents and purposes, is a fucking elemental. His abilities are absolutely terrifying from a scientific standpoint.

Okay, so remember how during the "Stayed Gone" number, Vox starts glitching out and "loses his signal" - then the Pride ring subsequently has a blackout? That is entirely Alastor's (or whatever-the-fuck-is-benefactoring-him's) doing. A powerful enough radio signal can do that. No horseshoe magnet required. IRL real shiz. Despite being digital enough to render a bluescreen while compromised, Vox might still have older hardware from his former days as a rabbit-eared, extra-thick cathode-ray tube.

And Alastor is our radio demon. Keep this in mind. IRL, ever wonder why there is no "Channel 1"? That's because in the US, back in the 1940s - long before digital television - both radio and TV shared the band that was called "Channel One":

"Until 1948, Land Mobile Radio and television broadcasters shared the same frequencies, which caused interference. This shared allocation was eventually found to be unworkable, so the FCC reallocated the Channel 1 frequencies for public safety and land mobile use and assigned TV channels 2–13 exclusively to broadcasters. Aside from the shared frequency issue, this part of the VHF band was (and to some extent still is) prone to higher levels of radio-frequency interference (RFI) than even Channel 2 (System M)." (https://en.wikipedia.org/wiki/Channel_1_(North_American_TV))

Even before that, Channel One's original frequencies had been handed over to radio:

Channel 1 was allocated at 44–50 MHz between 1937 and 1940. Visual and aural carrier frequencies within the channel fluctuated with changes in overall TV broadcast standards prior to the establishment of permanent standards by the National Television Systems Committee. In 1940, the FCC reassigned 42–50 MHz to the FM broadcast band. Television's channel 1 frequency range was moved to 50–56 MHz. Experimental television stations in New York, Chicago, and Los Angeles were affected. (https://en.wikipedia.org/wiki/Channel_1_(North_American_TV))

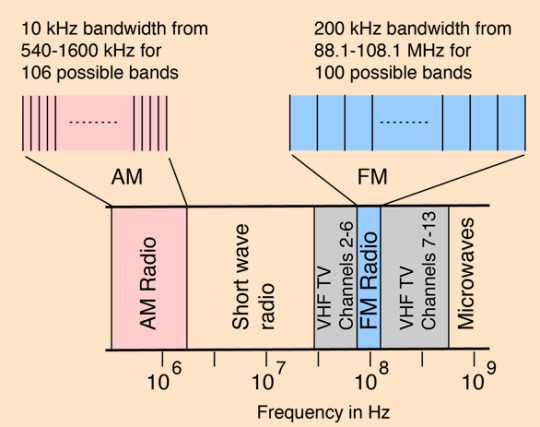

Every local TV channel and radio station has a frequency range on the electromagnetic spectrum. For those who still listen to radio on non-internet-reliant radio devices, those funny little numbers next to a station's name are a ballpark number for the frequency the station broadcasts at, in Hertz. A Hertz (Hz) is one wave per second. A kilohertz (kHz) is 1,000 waves per second. A megahertz (MHz) is 1 million waves per second. A gigahertz (GHz) is 1 billion waves per second.

Modern AM radio stations are 535-1605 kHz

Modern FM radio stations are 88-108 MHz

TV VHF Channels 2 thru 13 are 54-216 MHz

TV UHF Channels 14 thru 36 are 470-608 MHz

And no, that's not a discrepancy between VHF and FM radio: the frequencies designated for FM radio are nestled right in there with TV ones - between Channels 6 and 7.
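For anyone who wants to check the frequency-versus-wavelength side of this, here is a small Python sketch. It assumes only the band edges quoted above and the standard relation wavelength = speed of light / frequency; the function names are made up for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre

# Band edges in hertz, copied from the figures quoted in the post.
BANDS = {
    "AM radio": (535e3, 1605e3),
    "FM radio": (88e6, 108e6),
    "TV VHF channels 2-13": (54e6, 216e6),
    "TV UHF channels 14-36": (470e6, 608e6),
}


def wavelength_m(frequency_hz: float) -> float:
    """Convert a frequency in hertz to a wavelength in metres."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


def bands_containing(frequency_hz: float) -> list:
    """List every quoted band whose range includes the given frequency."""
    return [
        name
        for name, (low, high) in BANDS.items()
        if low <= frequency_hz <= high
    ]


if __name__ == "__main__":
    station = 100e6  # a 100 MHz FM station
    print(f"{wavelength_m(station):.2f} m", bands_containing(station))
```

A 100 MHz station lands in both the FM entry and the overall VHF TV span, which is the "nestled between Channels 6 and 7" overlap in numbers: higher frequency means shorter wavelength, and the two are easy to mix up.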

(chart from http://hyperphysics.phy-astr.gsu.edu/hbase/Audio/radio.html)

Even today, radio and TV are slightly shuffled in there in regards to designated frequencies. This implies that depending on Alastor's band of preference, if Vox still has some of his older hardware, Vox could, in his sleep, theoretically be able to hear Alastor's broadcasts of screaming victims without a physical radio nearby.

IRL in fact, in older televisions where a knob is used to change channels, a small part of the static you'd hear in-between channels is actually background radiation from deep space - along with any radio interference from man-made sources nearby. No wonder Vox is obsessed with Alastor. Alastor can torment him in an in-between realm-channel daily, like Freddy Krueger.

Yet, if radio signals were only a Vox problem, why did nearly every light and electronic device go out in the Pride except the emergency lights at the Heaven embassy?

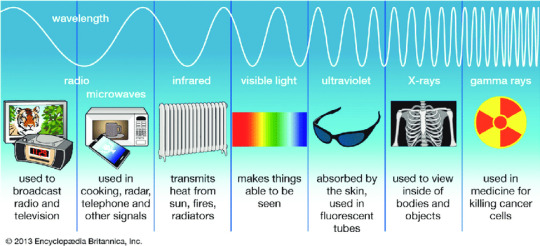

It might depend on how we define the word "radio". Is it radio, as in "those radio stations we can listen to without the internet"? Maybe radio, as in "any frequency utilized in modern communications, including TV and Radio"? Or is it radio, as in "almost any signal on the electromagnetic spectrum with a frequency lower than friggin' heat?" People, below is an IRL over-simplified chart of the electromagnetic spectrum and its uses by humans.

When radio is defined as a specific part of the electromagnetic spectrum, it is basically any frequency below infrared. ***

Cellphone service and WiFi use radio signals within this range.

Most cellular services are between 600 MHz and 39 GHz

WiFi routers are about 2.4-5 GHz (6 GHz in newer models)

(Fun fact: the "G" in "4G" and "5G" stands for "generation", not "Gigahertz".)

Radio, local television, cellphone service, WiFi, and basically any point in the internet that isn't linked by a landline - these are all safely within the part of the electromagnetic spectrum that the scientists would call "radio". If Hell's technology is supposed to mirror the real world, then most electronic devices need radio frequencies in order to communicate. The VVV's empire is truly fucked, should Alastor so choose.

The only plot hole in this explanation I see is why all the lights went out. These devices don't run on radio - they communicate using it. My best-educated guess is that the on/off switch for Hell's power grid is on an open network and at least part of it is wireless. Or maybe Alastor's radio attack works like a general EMP and he can just break stuff by "brute force". (I am not an expert on these sorts of things like telecommunication... or network security... or physics.... I politely ask that someone in the comments, please enlighten me U.U )

-------------------------------------

Also, notice that Alastor's Tower, Cannibal Town and the Heaven Embassy were the only regions with lights on during the blackout.

is that...?

Cannibal Town?

If this is, in fact, Cannibal Town, then my only guess is that the Cannibals are so hipster, many of them only light their homes and businesses with candlelight and leviathan whale oil. Neither candlelight nor oil-burning relies on wifi. Only some of their region's light was lost in the blackout. They might use some electricity (as many during the Victorian era did, which Cannibal Town seems to be inspired by), but they don't fully rely upon electricity. This suggests that Alastor's friendship with Rosie might be less of an organic friendship and more like a strategically slick alliance. Rosie's territory is one part of Pride that Alastor can't completely shut down (other than the Embassy). But, who knows?

Alastor's derision of modern tech now seems to have more merit than just being "hipster", or avoiding leaving a digital footprint that Vox can manipulate, (the latter of which I once head-canoned before this epiphany). Alastor can literally just shut most of Hell's tech down. This might also suggest why Alastor is homies with Zestial - another known old-timey prick.

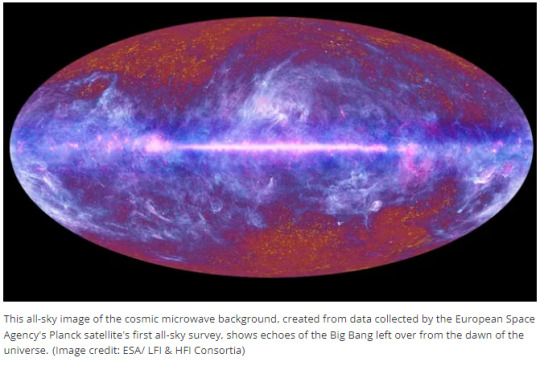

Alastor makes alliances with demons he can't easily overpower with his abilities. This might seem self-contradictory to Alastor's seeming over-confidence in teasing Lucifer - until you realize he did this only after he learned angels could be killed during the Overlords' meeting. (And yes, I know what I wrote about Alastor a couple of tumbl notes back with the "popsicle" evaluation. I do not consider flip-flopping a moral issue if done so by epiphany. That note stays, because it's funny XD )

-----------------------

Another theory! Ok, so this theory isn't entirely my own-own, I'm just building off of it based on what I've just said (mostly Roo stuff). So IRL, scientists decided to take an image of the observable universe in the microwave range. Microwave energy is in the upper ends of radio, but just below infrared in frequency. What they found was cosmic background radiation - a lot of energy that isn't coming from the stars themselves.

(Image source: https://www.space.com/33892-cosmic-microwave-background.html) Some scientists theorize this is because this particular energy is left over from the formation of the universe. So about Roo:

In the first non-pilot episode, the Story of Hell, as read by Charlie, states that the angels of pure light "worshipped good and shielded all from evil." During this line, imagery of two faces is shown before the angels: one face of light and another face of twisted red and black.

Subsequent lines and imagery in the episode suggest that this "evil" existed before Lucifer fell or Eve allowed this evil to enter the world - even before the Earth was created. Some Tumblrs who have been in this fandom longer than I have may know of Roo, a character that appears in some of VivziePop's older works within the Hazbin/Hellaverse. Some of Roo's monikers include "The Root of All Evil" and the "Tree of Knowledge". I'm wondering if in the Hellaverse, the cosmic background radiation of the universe is a manifestation of Roo when she isn't bound to a tree. Could Alastor's radio powers come from Roo, the background "dark" energy of the universe's birth? Did Alastor bite the apple the second third time for mankind? XD

-------------------------------------------------

While researching for this paper, I learned that microwave ovens and 2G cell phones operate within the same frequencies at around 2 GHz. Apparently, the only reason cell phones don't cook our brains is because the wattage is too low. (I dunno what wattage means. I'm not a scientist.) But now, Alastor's singing lines in S1E8 had me thinking:

"The constraints of my deal surely have a back door / Once I figure out how to unclip my wings, guess who will be pulling all the strings"

Knowing what Alastor is capable of with radio, this has me wondering if Alastor's radio powers are coming from one source, all while he is being chained by another entity entirely. Someone might have gone out of their way to get Alastor into a contract - if only to keep him from literally baking the universe for his viewing pleasure... on a rotating glass plate.

Being able to cook a soul in microwaves would require that they be at least partially made of water, however. Buuuut... I guess if there are working ACs in Hell, I really shouldn't read too much into it XD -------------

Do you think the mad scientists from Helluva Boss, Lyle Lipton and Loopty Goopty, ever chat over coffee about the abilities of the overlords based on casual observation?

One day, Alastor's name comes up... ...and after four minutes of discussing facts over coffee, they're both just like "Nope"?

XD {END}

*** Note: Googling "Electromagnetic Spectrum charts" will yield different results. Some charts will have different designations for frequencies lower than radio, like Extremely Low Frequencies (ELF). I do not know whether this difference is a reflection of a newer categorization, or if most charts online are made for laymen such as myself. Most charts I saw years ago only designated "radio" as "everything below microwave". I want to assume that the "only radio below microwave" categorization went into the writer's designing of Alastor's character simply because such charts are more common (while also making for a more interesting power scaling).

______________

Disclaimer: I am composed of chauffeur knowledge. I know nearly nothing about communication science and little about radiation stuff. I took an astronomy elective in college once, so I sorta knew where to look when it came to frequency stuff. I have no idea what the fuck I'm talking about. I know that I confused frequency and wavelength somewhere. Please, #sciencesideoftumblr feel free to correct me.

-----------------

TLDR: Most tech IRL uses radio waves to communicate. That includes TVs, WiFi and cell phones. Alastor can make the Pride Ring go kaploowee if he looks at it funny. I don't know what he's cooking.

#hazbin hotel#hazbin hotel theory#hazbin hotel alastor#hazbin hotel vox#hazbin hotel vvv#hazbin hotel vees#science#science side of tumblr#please help me#i'm absolutly sure i mixed up frequency and wavelength somewhere#I'm not a communications expert#i flunked chemistry in high school and i can't write my name in cursive#chauffeur knowledge#hazbin hotel rosie#hazbin hotel lucifer#hazbin hotel zestial#hazbin hotel roo#sciencesideoftumblr#science side help me#radio#electromagnetic waves


Big Data Course in Kochi: Transforming Careers in the Age of Information

In today’s hyper-connected world, data is being generated at an unprecedented rate. Every click on a website, every transaction, every social media interaction — all of it contributes to the vast oceans of information known as Big Data. Organizations across industries now recognize the strategic value of this data and are eager to hire professionals who can analyze and extract meaningful insights from it.

This growing demand has turned a big data course in Kochi into one of the most sought-after educational programs for tech enthusiasts, IT professionals, and graduates looking to enter the data-driven future of work.

Understanding Big Data and Its Relevance

Big Data refers to datasets that are too large or complex for traditional data processing applications. It’s commonly defined by the 5 V’s:

Volume – Massive amounts of data generated every second

Velocity – The speed at which data is created and processed

Variety – Data comes in various forms, from structured to unstructured

Veracity – Quality and reliability of the data

Value – The insights and business benefits extracted from data

These characteristics make Big Data a crucial resource for industries ranging from healthcare and finance to retail and logistics. Trained professionals are needed to collect, clean, store, and analyze this data using modern tools and platforms.

Why Enroll in a Big Data Course?

Pursuing a big data course in Kochi can open up diverse opportunities in data analytics, data engineering, business intelligence, and beyond. Here's why it's a smart move:

1. High Demand for Big Data Professionals

There’s a huge gap between the demand for big data professionals and the current supply. Companies are actively seeking individuals who can handle tools like Hadoop, Spark, and NoSQL databases, as well as data visualization platforms.

2. Lucrative Career Opportunities

Big data engineers, analysts, and architects earn some of the highest salaries in the tech sector. Even entry-level roles can offer impressive compensation packages, especially with relevant certifications.

3. Cross-Industry Application

Skills learned in a big data course in Kochi are transferable across sectors such as healthcare, e-commerce, telecommunications, banking, and more.

4. Enhanced Decision-Making Skills

With big data, companies make smarter business decisions based on predictive analytics, customer behavior modeling, and real-time reporting. Learning how to influence those decisions makes you a valuable asset.

What You’ll Learn in a Big Data Course

A top-tier big data course in Kochi covers both the foundational concepts and the technical skills required to thrive in this field.

1. Core Concepts of Big Data

Understanding what makes data “big,” how it's collected, and why it matters is crucial before diving into tools and platforms.

2. Data Storage and Processing

You'll gain hands-on experience with distributed systems such as:

Hadoop Ecosystem: HDFS, MapReduce, Hive, Pig, HBase

Apache Spark: Real-time processing and machine learning capabilities

NoSQL Databases: MongoDB, Cassandra for unstructured data handling

3. Data Integration and ETL

Learn how to extract, transform, and load (ETL) data from multiple sources into big data platforms.

4. Data Analysis and Visualization

Training includes tools for querying large datasets and visualizing insights using:

Tableau

Power BI

Python/R libraries for data visualization

5. Programming Skills

Big data professionals often need to be proficient in:

Java

Python

Scala

SQL

6. Cloud and DevOps Integration

Modern data platforms often operate on cloud infrastructure. You’ll gain familiarity with AWS, Azure, and GCP, along with containerization (Docker) and orchestration (Kubernetes).

7. Project Work

A well-rounded course includes capstone projects simulating real business problems—such as customer segmentation, fraud detection, or recommendation systems.

Kochi: A Thriving Destination for Big Data Learning

Kochi has evolved into a leading IT and educational hub in South India, making it an ideal place to pursue a big data course.

1. IT Infrastructure

Home to major IT parks like Infopark and SmartCity, Kochi hosts numerous startups and global IT firms that actively recruit big data professionals.

2. Cost-Effective Learning

Compared to metros like Bangalore or Hyderabad, Kochi offers high-quality education and living at a lower cost.

3. Talent Ecosystem

With a strong base of engineering colleges and tech institutes, Kochi provides a rich talent pool and a thriving tech community for networking.

4. Career Opportunities

Kochi’s booming IT industry provides immediate placement potential after course completion, especially for well-trained candidates.

What to Look for in a Big Data Course?

When choosing a big data course in Kochi, consider the following:

Expert Instructors: Trainers with industry experience in data engineering or analytics

Comprehensive Curriculum: Courses should include Hadoop, Spark, data lakes, ETL pipelines, cloud deployment, and visualization tools

Hands-On Projects: Theoretical knowledge is incomplete without practical implementation

Career Support: Resume building, interview preparation, and placement assistance

Flexible Learning Options: Online, weekend, or hybrid courses for working professionals

Zoople Technologies: Leading the Way in Big Data Training

If you’re searching for a reliable and career-oriented big data course in Kochi, look no further than Zoople Technologies—a name synonymous with quality tech education and industry-driven training.

Why Choose Zoople Technologies?

Industry-Relevant Curriculum: Zoople offers a comprehensive, updated big data syllabus designed in collaboration with real-world professionals.

Experienced Trainers: Learn from data scientists and engineers with years of experience in multinational companies.

Hands-On Training: Their learning model emphasizes practical exposure, with real-time projects and live data scenarios.

Placement Assistance: Zoople has a dedicated team to help students with job readiness—mock interviews, resume support, and direct placement opportunities.

Modern Learning Infrastructure: With smart classrooms, cloud labs, and flexible learning modes, students can learn in a professional, tech-enabled environment.

Strong Alumni Network: Zoople’s graduates are placed in top firms across India and abroad, and often return as guest mentors or recruiters.

Zoople Technologies has cemented its position as a go-to institute for aspiring data professionals. By enrolling in their big data course in Kochi, you’re not just learning technology—you’re building a future-proof career.

Final Thoughts

Big data is more than a trend—it's a transformative force shaping the future of business and technology. As organizations continue to invest in data-driven strategies, the demand for skilled professionals will only grow.

By choosing a comprehensive big data course in Kochi, you position yourself at the forefront of this evolution. And with a trusted partner like Zoople Technologies, you can rest assured that your training will be rigorous, relevant, and career-ready.

Whether you're a student, a working professional, or someone looking to switch careers, now is the perfect time to step into the world of big data—and Kochi is the ideal place to begin.


Explore the key differences between Apache Hive and Apache HBase, two essential tools in the big data ecosystem. This detailed comparison is tailored for learners pursuing a data science course in Mumbai, highlighting practical use cases, real-time vs. batch processing, and how mastering these tools can boost your career. Ideal for students of any leading data science institute in Mumbai aiming for hands-on expertise and placement success in 2025.


Bigtable SQL Introduces Native Support for Real-Time Queries

Upgrades to Bigtable SQL offer scalable, fast data processing for contemporary analytics. Simplify procedures and accelerate business decision-making.

Businesses have struggled for decades to use data for real-time operations. Bigtable, Google Cloud's NoSQL database, powers global, low-latency apps. It was built to solve real-time application problems and is now a crucial part of Google's infrastructure, including products such as YouTube and Ads.

Continuous materialised views, an enhancement of Bigtable's SQL capabilities, were announced at Google Cloud Next this week. Bigtable SQL brings well-known SQL syntax to real-time applications while keeping Bigtable's flexible schema, so fewer specialised skills are needed. Fully managed, real-time application backends are possible with Bigtable SQL and continuous materialised views.

Bigtable has gotten simpler and more powerful, whether you're creating streaming apps, real-time aggregations, or global AI research on a data stream.

The Bigtable SQL interface is now generally available.

SQL capabilities, now generally available in Bigtable, have transformed the developer experience. With SQL support, Bigtable helps development teams work faster.

Bigtable SQL enhances accessibility and application development by speeding data analysis and debugging. This allows KNN similarity search for improved product search and distributed counting for real-time dashboards and metric retrieval. Bigtable SQL's promise to expand developers' access to Bigtable's capabilities excites many clients, from AI startups to financial institutions.

Imagine coding with an AI that understands your whole codebase. The AI development platform Augment Code supplies context for each feature. Bigtable's scalability and robustness let it handle large code repositories, and its ease of use allowed the company to design security mechanisms that protect clients' valuable intellectual property. Bigtable SQL will help onboard new developers as the company grows. These engineers can immediately use Bigtable's SQL interface to access structured, semi-structured, and unstructured data.

Equifax uses Bigtable to store financial journals efficiently in its data fabric. The data pipeline team found Bigtable's SQL interface handy for direct access to corporate data assets and easier for SQL-savvy teams to use. Since more team members can use Bigtable, it expects higher productivity and integration.

Bigtable SQL also eases migration from distributed key-value systems that have SQL-like query languages, such as HBase with Apache Phoenix, and Cassandra.

Pega develops real-time decisioning apps with minimal query latency to provide clients with real-time data to help their business. As it seeks database alternatives, Bigtable's new SQL interface seems promising.

Bigtable is also previewing structured row keys, GROUP BYs, aggregations, and an UNPACK transform for timestamped data in its SQL language this week.

Continuous materialised views in preview

Bigtable SQL works with Bigtable's new continuous materialised views (preview) to eliminate data staleness and maintenance complexity. This allows real-time data aggregation and analysis in social networking, advertising, e-commerce, video streaming, and industrial monitoring.

Bigtable materialised views are fully managed and update incrementally without impacting user queries. They support a rich SQL language with functions and aggregations.

Bigtable's materialised views have enabled low-latency use cases for Google Cloud's Customer Data Platform customers. They remove ETL complexity and delay in time series use cases by defining SQL-based aggregations and transformations at ingestion. Applying data transformations during ingestion gives AI applications well-prepared data with reduced latency.
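The idea of a view that is kept current as writes arrive, rather than recomputed from scratch at query time, can be sketched in a few lines of Python. This is a toy stand-in for the concept only; the class and method names are invented here and are not part of any Bigtable API.

```python
from collections import defaultdict


class IncrementalCountView:
    """Toy stand-in for a continuously maintained aggregate (hypothetical)."""

    def __init__(self):
        # One running total per group key, e.g. per page or per device.
        self._totals = defaultdict(int)

    def apply(self, key: str, amount: int) -> None:
        # Each incoming write updates only the affected aggregate,
        # so the base rows never have to be rescanned.
        self._totals[key] += amount

    def read(self, key: str) -> int:
        # Reads are a single lookup against the precomputed result.
        return self._totals.get(key, 0)


if __name__ == "__main__":
    view = IncrementalCountView()
    for page, clicks in [("home", 1), ("pricing", 2), ("home", 4)]:
        view.apply(page, clicks)
    print(view.read("home"), view.read("pricing"))
```

The trade-off shown here is the usual one for materialised views: a little extra work on every write in exchange for cheap, predictable reads.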

Ecosystem integration

Real-time analytics often require low-latency data from several sources. Bigtable's SQL interface and ecosystem compatibility are expanding, making end-to-end solutions using SQL and basic connections easier.

Open-source Apache Kafka Bigtable Sink

Companies utilise Google Cloud Managed Service for Apache Kafka to build pipelines for Bigtable and other analytics platforms. The Bigtable team released a new Apache Kafka Bigtable Sink to help clients build high-performance data pipelines. This sends Kafka data to Bigtable in milliseconds.

Open-source Apache Flink Connector for Bigtable

Apache Flink allows real-time data transformation via stream processing. The new Apache Flink to Bigtable Connector lets you design a pipeline that transforms streaming data and writes it to Bigtable using either the more granular DataStream API or the high-level Apache Flink Table API.

BigQuery continuous queries are generally available

BigQuery continuous queries run SQL statements continuously and export output data to Bigtable. This generally available capability lets you create a real-time analytics database using Bigtable and BigQuery.

Python developers can create fully managed jobs that synchronise offline BigQuery datasets with online Bigtable datasets using the streaming API of BigQuery DataFrames (bigframes), BigQuery's Python framework.

Cassandra-compatible Bigtable CQL Client is in preview

Apache Cassandra uses CQL. The Bigtable CQL Client lets developers use CQL on enterprise-grade, high-performance Bigtable without code modifications as they migrate applications. Bigtable supports Cassandra's data migration tools, which reduce downtime and operational costs, and ecosystem utilities like the CQL shell.

The migration tools and the Bigtable CQL Client are available to try.

SQL power on NoSQL. This post covered a key capability that lets developers use SQL with Bigtable. Bigtable Studio lets you run SQL against any Bigtable cluster and create materialised views over Flink and Kafka data streams.

#technology#technews#govindhtech#news#technologynews#cloud computing#Bigtable SQL#Continuous Queries#Apache Flink#BigQuery Continuous Queries#Bigtable#Bigtable CQL Client#Open-source Kafka#Apache Kafka


What is MapReduce? For more information and a tutorial, see https://bit.ly/3QD5K2Z


Are you looking to build a career in Big Data Analytics? Gain in-depth knowledge of Hadoop and its ecosystem with expert-led training at Sunbeam Institute, Pune – a trusted name in IT education.

Why Choose Our Big Data Hadoop Classes?

🔹 Comprehensive Curriculum: Covering Hadoop, HDFS, MapReduce, Apache Spark, Hive, Pig, HBase, Sqoop, Flume, and more.

🔹 Hands-on Training: Work on real-world projects and industry use cases to gain practical experience.

🔹 Expert Faculty: Learn from experienced professionals with real-time industry exposure.

🔹 Placement Assistance: Get career guidance, resume building support, and interview preparation.

🔹 Flexible Learning Modes: Classroom and online training options available.

🔹 Industry-Recognized Certification: Boost your resume with a professional certification.

Who Should Join?

✔️ Freshers and IT professionals looking to enter the field of Big Data & Analytics

✔️ Software developers, system administrators, and data engineers

✔️ Business intelligence professionals and database administrators

✔️ Anyone passionate about Big Data and Machine Learning

#Big Data Hadoop training in Pune#Hadoop classes Pune#Big Data course Pune#Hadoop certification Pune#learn Hadoop in Pune#Apache Spark training Pune#best Big Data course Pune#Hadoop coaching in Pune#Big Data Analytics training Pune#Hadoop and Spark training Pune


Big Data Analysis Application Programming

Big data is not just a buzzword—it's a powerful asset that fuels innovation, business intelligence, and automation. With the rise of digital services and IoT devices, the volume of data generated every second is immense. In this post, we’ll explore how developers can build applications that process, analyze, and extract value from big data.

What is Big Data?

Big data refers to extremely large datasets that cannot be processed or analyzed using traditional methods. These datasets exhibit the 5 V's:

Volume: Massive amounts of data

Velocity: Speed of data generation and processing

Variety: Different formats (text, images, video, etc.)

Veracity: Trustworthiness and quality of data

Value: The insights gained from analysis

Popular Big Data Technologies

Apache Hadoop: Distributed storage and processing framework

Apache Spark: Fast, in-memory big data processing engine

Kafka: Distributed event streaming platform

NoSQL Databases: MongoDB, Cassandra, HBase

Data Lakes: Amazon S3, Azure Data Lake

Big Data Programming Languages

Python: Easy syntax, great for data analysis with libraries like Pandas, PySpark

Java & Scala: Often used with Hadoop and Spark

R: Popular for statistical analysis and visualization

SQL: Used for querying large datasets

Basic PySpark Example

```python
from pyspark.sql import SparkSession

# Create Spark session
spark = SparkSession.builder.appName("BigDataApp").getOrCreate()

# Load dataset
data = spark.read.csv("large_dataset.csv", header=True, inferSchema=True)

# Basic operations
data.printSchema()
data.select("age", "income").show(5)
data.groupBy("city").count().show()
```

Steps to Build a Big Data Analysis App

Define data sources (logs, sensors, APIs, files)

Choose appropriate tools (Spark, Hadoop, Kafka, etc.)

Ingest and preprocess the data (ETL pipelines)

Analyze using statistical, machine learning, or real-time methods

Visualize results via dashboards or reports

Optimize and scale infrastructure as needed
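The first few steps above (ingest, preprocess, analyze) can be sketched at toy scale with only the Python standard library. The inline sample data, column names and function names are all made up for illustration; a real pipeline would swap these for Spark, Kafka or similar tools.

```python
import csv
import io
from collections import Counter

# Hypothetical raw input standing in for a log file or API export.
RAW_CSV = """city,age,income
Kochi,34,52000
Pune,,48000
Kochi,29,61000
Mumbai,41,not_available
"""


def ingest(text: str) -> list:
    """Read CSV text into a list of dict rows (the 'extract' step)."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows: list) -> list:
    """Keep only rows whose numeric fields parse (the 'transform' step)."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append(
                {
                    "city": row["city"],
                    "age": int(row["age"]),
                    "income": float(row["income"]),
                }
            )
        except ValueError:
            # Skip rows with missing or malformed numbers.
            continue
    return cleaned


def count_by_city(rows: list) -> Counter:
    """Aggregate cleaned rows per city (a minimal 'analyze' step)."""
    return Counter(row["city"] for row in rows)


if __name__ == "__main__":
    rows = clean(ingest(RAW_CSV))
    print(count_by_city(rows))
```

Keeping each stage as its own function mirrors the step list: any one stage can later be replaced by a distributed equivalent without touching the others.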

Common Use Cases

Customer behavior analytics

Fraud detection

Predictive maintenance

Real-time recommendation systems

Financial and stock market analysis

Challenges in Big Data Development

Data quality and cleaning

Scalability and performance tuning

Security and compliance (GDPR, HIPAA)

Integration with legacy systems

Cost of infrastructure (cloud or on-premise)

Best Practices

Automate data pipelines for consistency

Use cloud services (AWS EMR, GCP Dataproc) for scalability

Use partitioning and caching for faster queries

Monitor and log data processing jobs

Secure data with access control and encryption

Conclusion

Big data analysis programming is a game-changer across industries. With the right tools and techniques, developers can build scalable applications that drive innovation and strategic decisions. Whether you're processing millions of rows or building a real-time data stream, the world of big data has endless potential. Dive in and start building smart, data-driven applications today!

Text

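Which NoSQL technologies are available when buying a spider pool? TG@yuantou2048

In today's big data era, NoSQL databases are popular for their scalability, performance, and flexibility. For applications that process large volumes of unstructured or semi-structured data, choosing the right NoSQL technology is critical. This post introduces several common NoSQL technologies and looks at how they apply to a spider pool (a web crawler system).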

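1. MongoDB

MongoDB is a document database with a flexible data model, well suited to storing complex data structures. It offers a rich query language and powerful indexing, and it handles very large data sets efficiently. In a spider pool project, MongoDB can store page crawl results, user behavior data, and similar information.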
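A rough sketch of why a document model fits crawl results: each page can carry different fields without a fixed schema. The example below uses plain Python dictionaries, not the MongoDB driver; the field names and the find helper are assumptions for illustration.

```python
import json

# Hypothetical crawl-result documents; field names are illustrative, not a required schema.
pages = [
    {
        "url": "https://example.com/",
        "status": 200,
        "title": "Home",
        "links": ["https://example.com/about"],
        "headers": {"content-type": "text/html"},
    },
    {
        "url": "https://example.com/about",
        "status": 200,
        "title": "About",
        "links": [],
    },
    {
        # A failed fetch has no title or links, but carries an error sub-document.
        "url": "https://example.com/missing",
        "status": 404,
        "error": {"reason": "Not Found"},
    },
]


def find(docs, **criteria):
    """Return documents whose top-level fields equal all of the given criteria."""
    return [d for d in docs if all(d.get(k) == v for k, v in criteria.items())]


ok_pages = find(pages, status=200)
print([d["url"] for d in ok_pages])
print(json.dumps(pages[2], indent=2))
```

A real document database adds indexing, persistence, and a much richer query language on top of this basic shape.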

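2. Cassandra

Cassandra is a distributed column-family database designed for large-scale data sets. It scales out very well and copes easily with highly concurrent reads and writes. In a spider pool project, Cassandra can store and manage large amounts of page content and metadata.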

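3. Redis

Redis is an in-memory key-value store, commonly used for caching and session management. Because its reads and writes are fast, Redis is often used as temporary storage or as a high-speed cache layer that helps improve a crawler system's response time.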
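The cache-layer idea can be sketched with a small in-memory class. This is a stand-in written for illustration, not the Redis client API: the TTLCache class, the fetch helper, and the fake_download function are all hypothetical names.

```python
import time


class TTLCache:
    """In-memory key-value store with per-key expiry (a stand-in, not the Redis API)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}

    def set(self, key, value, ttl_seconds):
        # Store the value together with the time at which it expires.
        self._data[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired entries are removed lazily on read
            return None
        return value


def fetch(url, cache, download):
    """Return the cached body for url, downloading it only on a cache miss."""
    cached = cache.get(url)
    if cached is not None:
        return cached
    body = download(url)
    cache.set(url, body, ttl_seconds=60)
    return body


calls = []


def fake_download(url):
    """Hypothetical downloader that records each call instead of hitting the network."""
    calls.append(url)
    return f"<html>{url}</html>"


cache = TTLCache()
fetch("https://example.com/", cache, fake_download)
fetch("https://example.com/", cache, fake_download)
print(len(calls))  # the second fetch is served from the cache
```

With a real Redis server the same pattern applies, with the expiry handled by the server rather than by the application.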

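4. HBase

HBase is a distributed database built on the Hadoop ecosystem, particularly suited to workloads with frequent updates and queries. It performs especially well where data must be stored persistently and accessed with low latency, such as logging and user behavior analysis.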
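HBase keeps rows sorted by row key, so choosing the key decides which rows can be scanned together. The sketch below imitates that idea with a sorted list in plain Python; it is not the HBase client API, and the SortedTable class, the reversed-domain row keys, and the "page:title" column names are assumptions for illustration.

```python
from bisect import bisect_left, insort


class SortedTable:
    """Toy table that keeps row keys sorted, loosely imitating row-key ordering."""

    def __init__(self):
        self._keys = []  # row keys in sorted order
        self._rows = {}  # row key -> {"family:qualifier": value}

    def put(self, row_key, column, value):
        if row_key not in self._rows:
            insort(self._keys, row_key)
            self._rows[row_key] = {}
        self._rows[row_key][column] = value

    def scan_prefix(self, prefix):
        """Yield (row_key, columns) for all rows whose key starts with prefix, in key order."""
        i = bisect_left(self._keys, prefix)
        while i < len(self._keys) and self._keys[i].startswith(prefix):
            key = self._keys[i]
            yield key, self._rows[key]
            i += 1


table = SortedTable()
# Hypothetical row keys: reversed domain plus path, so pages of one site sort together.
table.put("org.sample/", "page:title", "Sample")
table.put("com.example/about", "page:title", "About")
table.put("com.example/", "page:title", "Home")
table.put("com.example/", "page:status", "200")

site_rows = list(table.scan_prefix("com.example/"))
print([key for key, _ in site_rows])
```

Because the keys are sorted, all pages of one site sit next to each other and a prefix scan reads them without touching rows from other sites.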

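5. Neo4j

Neo4j is a graph database that excels at data with complex relationship networks. If your spider pool needs to handle complex link relationships or social network analysis, Neo4j may be the ideal choice.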
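The kind of link-relationship question a graph database answers can be sketched with an adjacency dictionary in plain Python. This is not Neo4j or its query language; the LINKS data and the helper names shortest_path and inbound_counts are assumptions for illustration.

```python
from collections import deque

# Hypothetical link graph: page -> pages it links to.
LINKS = {
    "home": ["about", "blog"],
    "about": ["team"],
    "blog": ["post-1", "about"],
    "post-1": ["home"],
    "team": [],
}


def shortest_path(graph, start, goal):
    """Breadth-first search returning the shortest link path from start to goal, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def inbound_counts(graph):
    """Count how many pages link to each page."""
    counts = {page: 0 for page in graph}
    for targets in graph.values():
        for target in targets:
            counts[target] = counts.get(target, 0) + 1
    return counts


print(shortest_path(LINKS, "home", "team"))
print(inbound_counts(LINKS))
```

A graph database stores these relationships natively and can run such traversals over far larger link graphs than fit in one process.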

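6. Couchbase

Couchbase combines the flexibility of NoSQL with the transactional consistency guarantees of traditional relational databases. It can serve as a primary or a secondary database, especially where real-time analysis is required.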

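7. Apache Cassandra

Apache Cassandra (the same system as item 2) is a distributed database management system for scenarios that need high availability and fault tolerance. It supports horizontal scaling, which makes it a good fit for building a highly available, scalable spider pool system.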

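Conclusion

Which NoSQL technology to choose depends on your specific requirements and technology stack. I hope this post gives you some useful pointers. If you have any questions or want to discuss further, feel free to contact me: TG@yuantou2048.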

Add on Telegram @yuantou2048

EPP Machine

SEO optimization

Text

The past 15 years have seen a massive change in the nature and complexity of web applications, and the data management tools behind them have changed just as much. Today's web is built around cloud computing, big data, and very large user bases, all of which need a scalable data management system. A common problem for every large, data-heavy web application is managing big data efficiently. Traditional relational databases (RDBMS) are often insufficient for this, whereas NoSQL databases are best known for handling web applications that involve big data. Major websites including Google, Facebook and Yahoo use NoSQL for data management, and big data companies like Netflix use Cassandra (a NoSQL database) to store critical member data and other relevant information (95%). NoSQL databases are becoming popular among IT companies, and you can expect NoSQL questions in a job interview. Here are some excellent books to learn more about NoSQL.

Seven Databases in Seven Weeks: A Guide to Modern Databases and the NoSQL Movement (By: Eric Redmond and Jim R. Wilson)

This book does what it sets out to do: it gives basic information about seven different databases, namely Redis, CouchDB, HBase, Postgres, Neo4J, MongoDB and Riak. You will learn about the supporting technologies relevant to each of them. It explains what each database is best used for, so you can choose an appropriate one for your project. If you are looking for a book on one specific database, this might not be the right option for you.

NoSQL Distilled: A Brief Guide to the Emerging World of Polyglot Persistence (By: Pramod J. Sadalage and Martin Fowler)

It offers a hands-on guide to NoSQL databases and can help you start building applications on them. The authors explain four types of databases: document, graph, key-value and column-family. You will get an idea of the major differences among them and their individual benefits. The next part of the book explains the scalability problems encountered within an application. It is an excellent book for understanding the basics of NoSQL, and it lays a foundation for choosing other NoSQL-oriented technologies.

Professional NoSQL (By: Shashank Tiwari)

This book starts well with an explanation of the benefits of NoSQL in large data applications. You begin with the basics of NoSQL databases and the major differences among the database types. The author explains the important characteristics of each database and its best-use scenario. You can learn about different NoSQL queries through examples using MongoDB, CouchDB, Redis, HBase, Google App Engine Datastore and Cassandra. This is a good book for getting started in NoSQL with extensive practical knowledge.

Getting Started with NoSQL (By: Gaurav Vaish)

If you are planning to step into NoSQL databases or are preparing for an interview, this is a good book for you. You learn the basic concepts of NoSQL and the different products built on these data management systems. The book gives a clear idea of the major features that differentiate NoSQL from SQL databases. In the following chapters, you learn about the NoSQL storage types, including document stores, graph databases, column databases, and key-value databases. You will also learn the basic differences among NoSQL products such as Neo4J, Redis, Cassandra and MongoDB.

Data Access for Highly-Scalable Solutions: Using SQL, NoSQL, and Polyglot Persistence (By: John Sharp, Douglas McMurtry, Andrew Oakley, Mani Subramanian, Hanzhong Zhang)

This is an advanced book for programmers involved in web architecture development, and it deals with practical problems in complex web applications. Its best feature is that it describes real-life web development problems and helps you identify the best data management system for each one. You will learn best practices for combining different data management systems and getting the most out of them. You will also come to understand polyglot architecture and why web applications need it.

Today's web environment requires an understanding of complex web applications and of the practices for handling big data. If you are planning to start high-end development and get into the world of NoSQL databases, choose one of these books and learn some practical concepts about web development. All of these books are full of practical information and can help you prepare for job interviews concerning NoSQL databases. Make sure to work through the practice sections and implement the concepts for a better understanding.

Text

🚀 Boost Performance with Columnar Databases! 🚀

⚡ Faster reads & writes 📊 Optimized for big data analytics 🔹 Tools: Cassandra | HBase | BigTable

Learn more 👉 www.datascienceschool.in

#DataScience #BigData #ColumnarDatabase #Tech

#datascience#machinelearning#datascienceschool#ai#python#data scientist#learndatascience#bigdata#data#database
