#human detection robot using raspberry pi



Getting Started with Coding and Robotics: A Beginner's Guide

In a world driven by technology, coding and robotics have become essential skills for the innovators of tomorrow. Whether you're a student, a parent looking to introduce your child to STEM, or a curious beginner, diving into this field can be both exciting and a bit overwhelming. This guide is here to help you take the first confident steps into the world of coding and robotics.

What is Coding and Robotics?

Coding is the process of writing instructions that a computer can understand. It's the language behind websites, apps, and yes, robots. Robotics involves designing, building, and programming robots that can perform tasks either autonomously or through human control.

Together, coding and robotics form a dynamic combination that helps learners see the immediate results of their code in the real world.

Why Learn Coding and Robotics?

Promotes Problem Solving: Kids and adults alike learn to break down problems and think logically.

Builds Creativity: Designing a robot or coding a game encourages imaginative thinking.

Future-Ready Skill: As automation and AI grow, these skills are becoming essential.

Hands-On Learning: It’s engaging, interactive, and incredibly rewarding to see your code come to life in a robot.

Tools to Get Started

Here are some beginner-friendly tools and platforms:

For Younger Learners (Ages 6–10):

Scratch: A visual programming language where kids can drag and drop blocks to create games and animations.

Bee-Bot or Botley: Simple robots that teach basic coding through play.

For Middle Schoolers (Ages 10–14):

mBlock or Tynker: Easy-to-use platforms that introduce Python and block-based coding.

LEGO Mindstorms or Quarky: Kits for building and coding robots.

For Teens and Adults:

Arduino: An open-source electronics platform ideal for building custom robots and gadgets.

Raspberry Pi: A small, affordable computer perfect for learning to code and building digital projects.

Python: A powerful yet beginner-friendly programming language widely used in robotics.

Simple Project Ideas to Begin With

Blinking LED with Arduino: A classic beginner project to learn hardware and coding.

Line-Following Robot: Build a robot that follows a black line on the floor.

Scratch Maze Game: Create an interactive maze using block-based coding.

Smart Light System: Program a light to turn on based on motion detection.
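To give a feel for the Smart Light System idea, here is a minimal Python sketch of the decision logic only. It assumes the motion readings arrive as simple True/False values, and the class name `MotionLight` and the 10-second hold time are made up for illustration. On a real Raspberry Pi the readings would typically come from a PIR sensor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MotionLight:
    """Keeps a light on for `hold_seconds` after the last detected motion."""
    hold_seconds: float = 30.0            # assumed hold time, chosen for illustration
    last_motion: Optional[float] = None   # timestamp of the most recent motion

    def update(self, now: float, motion: bool) -> bool:
        """Return True if the light should be on at time `now`."""
        if motion:
            self.last_motion = now
        if self.last_motion is None:
            return False
        return (now - self.last_motion) <= self.hold_seconds


light = MotionLight(hold_seconds=10.0)
# (time in seconds, motion detected?) pairs standing in for sensor readings
readings = [(0, False), (1, True), (5, False), (12, False)]
states = [light.update(t, m) for t, m in readings]
print(states)  # [False, True, True, False]
```

Keeping the logic separate from the hardware makes it easy to test on any computer before wiring up a sensor.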

Learning Resources

Online Platforms: Code.org, Khan Academy, TinkerCAD Circuits, STEMpedia.

YouTube Channels: STEMpedia, TechZone, FreeCodeCamp.

Books: "Coding for Kids" by Adrienne B. Tacke, "Adventures in Raspberry Pi" by Carrie Anne Philbin.

Local Classes: Check for nearby coding and robotics centers or STEM workshops.

Tips for Beginners

Start Small: Choose one tool or platform and explore it fully before moving on.

Be Curious: Don’t be afraid to experiment and fail—that’s how you learn.

Join a Community: Forums, clubs, or online groups offer support and inspiration.

Build Projects: Apply what you learn through simple, real-world applications.

Have Fun: Learning should be exciting. Choose projects that interest you.


Raspberry Pi OTA Updates Driving Innovation in Industrial 4.0

Overview of Industrial 4.0 Raspberry Pi OTA Updates

As Industry 4.0 develops, the Raspberry Pi has emerged as a crucial instrument that makes intelligent systems and more effective automation possible in industrial settings. With Raspberry Pi OTA (over-the-air) updates, industries can continuously improve and evolve their devices without manual intervention. These updates offer the efficiency and flexibility that are essential in today's fast-paced industrial sectors. Raspberry Pi OTA updates are at the forefront of streamlining industrial operations, from safety systems to intelligent inventory management.

Transforming Smart Inventory Management with Raspberry Pi OTA Updates

Smart inventory management systems have changed how industries manage their stock and resources. With Raspberry Pi OTA updates, the software behind real-time monitoring, tracking, and optimization can be kept current on every device. These updates can deliver improved stock-level logic and predictive analytics, reducing human error and improving operational efficiency. By implementing Raspberry Pi OTA updates, warehouses can track goods with greater precision, helping industries stay ahead of demand and keep inventory in the right place at the right time.

Enhancing Safety with Smart Badge Cameras and Raspberry Pi OTA Updates

Safety is a top priority in industrial environments, and smart badge cameras play a crucial role in monitoring personnel. With Raspberry Pi OTA updates, these cameras benefit from continuous improvements in image quality, connectivity, and security features. Remote updates allow for faster integration of advanced features, such as facial recognition or real-time alerting, which significantly improves workplace safety. These updates also ensure that the cameras are always compatible with evolving security protocols, enabling industries to maintain a secure environment for all workers.

Improving Gas Leak Detection with Raspberry Pi OTA Updates

Gas leaks in industrial environments can be catastrophic, making real-time monitoring essential. With Raspberry Pi OTA updates, gas leak analyzers can receive improved detection and calibration software and faster alerting logic without a site visit. These remote updates can improve the system’s ability to detect small leaks and alert workers quickly. By ensuring that gas leak analyzers receive regular updates, industries can reduce the risk of dangerous situations, improving safety and minimizing operational disruptions. The ability to continuously enhance these devices remotely is a key factor in maintaining a safe industrial setting.

Industrial IoT Devices and Raspberry Pi OTA Integration

Industrial IoT devices have redefined connectivity and automation in manufacturing. Through Raspberry Pi OTA updates, these devices remain functional, efficient, and up to date without manual intervention. Whether the device is a smart sensor, a robot, or an actuator, Raspberry Pi OTA updates can improve performance by optimizing algorithms, reducing downtime, and improving system integration. Remote updates allow devices across an industrial network to be modified simultaneously, streamlining operations and lowering maintenance costs. This is especially vital for industries operating on a global scale, where logistics and on-site updates may not always be feasible.

Advancing Predictive Maintenance in Industrial 4.0 with Raspberry Pi OTA

Predictive maintenance is a cornerstone of Industry 4.0, allowing industries to anticipate failures before they occur, which in turn reduces costs and improves productivity. Raspberry Pi OTA updates play a vital role in maintaining the algorithms that power predictive maintenance systems. By regularly updating the software that analyzes sensor data, Raspberry Pi OTA updates let monitoring models keep pace with new operating conditions. This means industrial equipment is monitored more precisely and potential issues are detected earlier, leading to smoother operations and fewer unexpected breakdowns.
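As a rough illustration of the kind of sensor-data analysis such a system might run, the Python sketch below flags readings that deviate sharply from a rolling window of recent values. The function name `find_anomalies`, the window size, the threshold, and the vibration numbers are all assumptions chosen for the example. Real predictive maintenance models are usually more sophisticated.

```python
from collections import deque
from statistics import mean, pstdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far from the mean of the previous `window` values."""
    recent = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Flag the reading when it is more than `threshold` standard deviations away
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        recent.append(value)
    return flagged


# Made-up vibration amplitudes with one obvious spike at index 6
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 1.0, 4.0, 1.0, 0.95]
print(find_anomalies(vibration))  # [6]
```

Parameters such as `window` and `threshold` are exactly the sort of values an OTA update could retune without touching the hardware.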

Security Enhancements through Raspberry Pi OTA Updates

In today’s interconnected industrial world, security is a top concern. With Raspberry Pi OTA updates, the security of industrial systems can be continuously enhanced. From updating encryption protocols to patching vulnerabilities, these updates ensure that devices stay protected against cyber threats. The flexibility of remote updates makes it possible to deploy the latest security features across a vast network of devices without disrupting operations. With Raspberry Pi OTA, industrial systems can remain secure and compliant with evolving cybersecurity regulations, safeguarding sensitive data and intellectual property.
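One common safeguard in an OTA pipeline is to install an update only when it is newer than the running version and its checksum matches the published one. The Python sketch below shows that check under stated assumptions: the manifest layout, the `should_install` helper, and the placeholder payload are hypothetical and do not describe any particular OTA product. Production systems usually add cryptographic signatures on top of a checksum.

```python
import hashlib


def parse_version(text):
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def should_install(current, offered, payload, expected_sha256):
    """Install only if the update is newer and its SHA-256 digest matches."""
    is_newer = parse_version(offered) > parse_version(current)
    digest = hashlib.sha256(payload).hexdigest()
    return is_newer and digest == expected_sha256


# Hypothetical update payload and manifest, for illustration only
payload = b"firmware image bytes (placeholder)"
manifest = {"version": "1.10.0", "sha256": hashlib.sha256(payload).hexdigest()}

print(should_install("1.9.3", manifest["version"], payload, manifest["sha256"]))         # True
print(should_install("1.9.3", manifest["version"], payload + b"x", manifest["sha256"]))  # False
```

Comparing versions as integer tuples avoids the string-comparison trap where "1.10.0" would sort before "1.9.3".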

Future of Raspberry Pi OTA Updates in Industrial 4.0

The future of Raspberry Pi OTA updates in Industrial 4.0 looks promising, with advancements in artificial intelligence, machine learning, and 5G technology enhancing the scope of these updates. As industries continue to embrace smarter automation, the need for continuous improvement through remote updates will grow. Raspberry Pi OTA updates will enable devices to integrate more complex functionalities, such as real-time analytics and autonomous decision-making. Furthermore, the advent of 5G connectivity will significantly increase the speed and reliability of these updates, allowing for even greater innovation in industrial operations.

In conclusion

By promoting efficiency, safety, and connectivity across a variety of applications, Raspberry Pi OTA updates are changing Industrial 4.0. Remote updates keep devices current, from gas leak analyzers to intelligent inventory management systems. Raspberry Pi OTA updates will become ever more important in providing smarter, more robust industrial ecosystems as enterprises continue to rely more on automation and IoT devices. The ongoing development of Raspberry Pi OTA technology is likely to shape industrial operations in the future.


Advanced Robotic Applications Using USB Camera Modules on Raspberry Pi and Android

The integration of USB camera modules has caused a revolution in robotics and automation, especially with platforms like Android and Raspberry Pi. Not only are these small but mighty gadgets improving vision, but they are also propelling innovations in a wide range of domains, from smart home technology to industrial automation.

The Evolution of USB Camera Modules

USB camera modules have evolved significantly, offering high-resolution imaging capabilities in compact forms. They leverage the ubiquitous USB interface, making them easy to integrate with a variety of computing platforms, such as the Raspberry Pi and Android-based systems. This integration has democratized advanced visual functionalities once limited to specialized equipment, empowering developers and enthusiasts alike to explore innovative robotic applications.

Applications in Industrial Automation

In industrial settings, where precision and efficiency are paramount, USB camera modules play a crucial role. They enable tasks such as quality control, object recognition, and process monitoring with high accuracy. Coupled with the Raspberry Pi's computational prowess, these modules facilitate real-time image processing and decision-making, thereby optimizing production lines and reducing operational costs. Industries ranging from manufacturing to logistics are leveraging these capabilities to achieve unprecedented levels of automation and productivity.

Enhancing Robotics with Raspberry Pi

The Raspberry Pi has emerged as a cornerstone in the development of robotic systems due to its affordability, versatility, and community support. When paired with USB camera modules, the Raspberry Pi transforms into a potent platform for robotic vision applications. Robots equipped with these modules can navigate environments autonomously, detect and avoid obstacles, and even perform complex tasks like sorting and inspection with minimal human intervention. This synergy between Raspberry Pi and USB cameras is driving the next wave of intelligent robotics across various domains.

Advantages in Surveillance and Security

The integration of USB camera modules with Android devices has revolutionized surveillance and security systems. Android's flexibility allows for the deployment of mobile and stationary surveillance units equipped with high-definition USB cameras. These systems provide real-time monitoring capabilities, facial recognition, and even night vision functionalities, enhancing both public safety and private security measures. From smart cities to residential complexes, USB camera modules on Android are reshaping how we perceive and implement surveillance solutions.

Innovating Smart Home Automation

In the realm of smart homes, USB camera modules integrated with Raspberry Pi and Android enable homeowners to monitor their premises remotely and automate tasks based on visual cues. From adjusting lighting and temperature settings to alerting occupants of suspicious activities, these systems enhance convenience, safety, and energy efficiency. Moreover, the ability to integrate with voice assistants and mobile applications further enhances usability, making smart home automation accessible to a wider audience.

Future Trends and Possibilities

Looking ahead, the trajectory of USB camera modules in robotics and automation appears promising. Advancements in sensor technology, coupled with improvements in AI and machine learning algorithms, will further expand the capabilities of these modules. Future applications may include collaborative robots that interact seamlessly with humans, drones equipped for autonomous aerial surveillance, and advanced medical diagnostics through robotic imaging systems. The potential for innovation using USB camera modules on Raspberry Pi and Android is vast, promising a future where robotics are more intelligent, responsive, and integrated into everyday life.

In summary

Finally, USB camera modules have become essential parts of the development of robotic apps for Android and Raspberry Pi platforms. The processing capacity of these platforms, combined with their high-quality picture capturing capabilities, is propelling advancements in a variety of industries, ranging from smart home technology to industrial automation and surveillance. These modules will be crucial in determining the direction robotics takes as technology develops, helping to make automation safer, more intelligent, and easier to use than it has ever been.


Binocular Camera Module: Enhancing Depth Perception in AI Vision Applications

Introduction

The binocular camera module is a technology that mimics the human eye’s depth perception by using two separate lenses and image sensors. This module has gained popularity in various fields, including robotics, autonomous vehicles, and augmented reality. In this article, we’ll explore the features, applications, and compatibility of the IMX219-83 Stereo Camera, a binocular camera module with dual 8-megapixel cameras.

Features at a Glance

Dual IMX219 Cameras: The module features two onboard IMX219 cameras, each with 8 megapixels. These cameras work in tandem to capture stereo images, enabling depth perception.

AI Vision Applications: The binocular camera module is suitable for various AI vision applications, including depth vision and stereo vision. It enhances the accuracy of object detection, obstacle avoidance, and 3D mapping.

Compatible Platforms: The module supports both the Raspberry Pi series (including Raspberry Pi 5, CM3/3+/4) and NVIDIA’s Jetson Nano and Jetson Xavier NX development kits.
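Depth perception from a stereo pair rests on a simple relation: for a calibrated, rectified pair, depth equals focal length times baseline divided by disparity (Z = f · B / d). The Python sketch below applies that formula. The focal length and baseline values are placeholders chosen for illustration, not the IMX219-83's specifications, and real values come from calibrating the module.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Placeholder calibration values (assumptions, not the module's datasheet)
focal_px = 1000.0    # focal length expressed in pixels
baseline_m = 0.06    # distance between the two lenses in meters

for disparity in (120.0, 60.0, 30.0):
    depth = depth_from_disparity(disparity, focal_px, baseline_m)
    print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Halving the disparity doubles the estimated depth, which is why distant objects are measured less precisely than nearby ones.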

Applications

The binocular camera module finds applications in the following areas:

Robotics: Enables robots to perceive their environment in 3D, aiding navigation and manipulation tasks.

Autonomous Vehicles: Enhances object detection and collision avoidance systems.

Augmented Reality: Provides accurate depth information for AR applications.

Industrial Automation: Assists in quality control, object tracking, and depth-based measurements.

Conclusion

The IMX219-83 Stereo Camera offers a powerful solution for depth perception in AI vision systems. Whether you’re a hobbyist experimenting with Raspberry Pi or a professional working with Jetson platforms, this binocular camera module opens up exciting possibilities for creating intelligent and perceptive devices.


Innovative OpenCV Projects in Robotics and Autonomous Systems

OpenCV (Open Source Computer Vision) is a popular open-source library for computer vision and image processing tasks. With its extensive collection of algorithms and tools, it has become a go-to resource for developers working on robotics and autonomous systems. In recent years, there has been a surge in the use of OpenCV in these fields, resulting in some innovative projects that have pushed the boundaries of what is possible with robotics and autonomous systems.

Here are some of the most exciting and innovative OpenCV projects in robotics and autonomous systems:

1. Autonomous Navigation with Visual Odometry

Visual odometry is a technique that uses visual input from cameras to estimate the position and orientation of a robot in an environment. This information can then be used for autonomous navigation. OpenCV provides a robust set of algorithms for feature detection, matching, and pose estimation, making it a perfect tool for visual odometry. One of the most notable projects using this technique is the open-source robot platform, TurtleBot. It uses two cameras and OpenCV to perform visual odometry and navigate through its surroundings autonomously.
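Whatever produces the frame-to-frame motion estimates, odometry ultimately chains them into a running pose. The Python sketch below shows only that accumulation step for a robot moving in a plane. It assumes each step is already given as a forward distance and a turn angle, and the `integrate_pose` helper is hypothetical. Extracting those steps from camera images is the part OpenCV's feature tools would handle and is not shown here.

```python
import math


def integrate_pose(pose, step):
    """Apply one relative motion to a pose.

    pose: (x, y, heading in radians) in the world frame
    step: (forward distance in meters, turn in radians) in the robot frame
    """
    x, y, theta = pose
    forward, turn = step
    x += forward * math.cos(theta)
    y += forward * math.sin(theta)
    # Wrap the heading into [-pi, pi) so it stays bounded over long runs
    theta = (theta + turn + math.pi) % (2 * math.pi) - math.pi
    return (x, y, theta)


pose = (0.0, 0.0, 0.0)
# Drive 1 m then turn left 90 degrees, then drive 1 m straight
steps = [(1.0, math.pi / 2), (1.0, 0.0)]
for step in steps:
    pose = integrate_pose(pose, step)

print(pose)
```

Because every step builds on the previous pose, small per-step errors accumulate, which is why odometry is often combined with mapping or other sensors.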

2. Object Detection and Tracking for Autonomous Drones

Autonomous drones have become increasingly popular for various applications, such as search and rescue, surveillance, and delivery. OpenCV has played a crucial role in enabling these drones to detect and track objects in their environment. The library offers a variety of algorithms for object detection and tracking, including Haar cascades, HOG, and deep learning-based methods. One such project, the OpenCV Drone, uses a Raspberry Pi and a camera to detect and track objects in real-time, allowing the drone to navigate and avoid obstacles autonomously.
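Tracking usually means linking the detections in a new frame to objects already being followed. One simple approach, sketched below in Python, greedily matches bounding boxes by intersection over union (IoU). This is a generic illustration rather than the method of any project named above, and the function names, box coordinates, and the 0.3 threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union for boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def match_tracks(tracks, detections, min_iou=0.3):
    """Greedily give each track the unused detection it overlaps most."""
    matches, used = {}, set()
    for track_id, box in tracks.items():
        best, best_score = None, min_iou
        for i, det in enumerate(detections):
            score = iou(box, det)
            if i not in used and score >= best_score:
                best, best_score = i, score
        if best is not None:
            matches[track_id] = best
            used.add(best)
    return matches


# Boxes from the previous frame, keyed by a made-up track id
tracks = {"a": (0, 0, 10, 10), "b": (50, 50, 60, 60)}
# Detections in the new frame; the last one is a new, unmatched object
detections = [(52, 51, 62, 61), (1, 1, 11, 11), (200, 200, 210, 210)]
print(match_tracks(tracks, detections))  # {'a': 1, 'b': 0}
```

Detections left unmatched, like the third box here, would typically start new tracks.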

3. Simultaneous Localization and Mapping (SLAM)

SLAM is a technique used to create a map of an unknown environment while simultaneously localizing the robot within it. OpenCV provides a range of algorithms for feature detection, matching, and 3D reconstruction, making it a valuable tool for SLAM applications. One of the most prominent projects using OpenCV for SLAM is Google's Project Tango, which uses a combination of cameras and sensors to create 3D maps of indoor environments. OpenCV plays a crucial role in feature detection and matching for this project.

4. Emotion and Gesture Recognition for Human-Robot Interaction

In order for robots to interact with humans in a more natural and intuitive way, they need to be able to recognize emotions and gestures. OpenCV has a variety of tools and algorithms for facial and gesture recognition, making it a popular choice for human-robot interaction projects. One such project is the robot companion, Pepper, which uses OpenCV to recognize and respond to human emotions and gestures, enhancing its ability to communicate and interact with humans.

5. Vision-based Control for Robotic Arms

Robotic arms have a wide range of applications, from manufacturing and assembly to surgical procedures. OpenCV has been used in various projects to enable vision-based control for these robotic arms. The library offers algorithms for object detection, tracking, and pose estimation, which are essential for controlling the movements of a robotic arm. One remarkable project is the OpenCV-Gripper, which uses a camera and OpenCV to detect and pick up objects of different shapes and sizes, showcasing the potential of vision-based control in robotics.

In conclusion, OpenCV has proven to be a versatile and powerful tool for robotics and autonomous systems. Its algorithms and tools have enabled developers to create innovative projects that have revolutionized these fields. With the continuous development and advancements in computer vision and artificial intelligence, we can expect to see even more impressive projects using OpenCV in the future.


DESIGN AND CONSTRUCTION OF A HUMAN DETECTION ROBOT


ABSTRACT

The circuit breaker is an absolutely essential device in the modern world, and one of the most important safety mechanisms in your home. Whenever electrical wiring in a building has too much current flowing through it, these simple machines cut the power until somebody can fix the problem. Without circuit breakers (or the alternative,…


#3d human sensing slideshare#alive human detection robot for disaster management#android control robot using 8051#arduino human detection#arduino pedestrian detection#bomb detection robot circuit diagram#DESIGN AND CONSTRUCTION OF A HUMAN DETECTION ROBOT#disadvantages of human detection robot#how to make human detection sensor#human detection by live body sensor#human detection pdf#human detection robot ppt#human detection robot using arduino pdf#human detection robot using raspberry pi#human detection sensor for home automation#human detection using arduino#human detector sensor#human speed detection project using pic#iot based robotics projects#live human being detection wireless remote controlled robot#metal detector robot#metal detector robotic vehicle ppt#pir sensor#pir sensor working#rf section in metal detector#sensor to detect human body


Bionic Controlled Computers (B.C.C)

(C)2019-2020 By Kevin Michael Kappler. All rights reserved.

I had been watching videos on YouTube concerning bionic arms, legs, and even eyes. Noticing even the brainwave frequency detection headgear used to control bionically enhanced vehicles and weapons on various science channels, I thought: “Why can't I design my own brainwave headgear (or purchase an already manufactured one), use a brainwave interpreter circuit board, and finally a Raspberry Pi or LattePanda single-board computer to act as the command executor?” I call these “bionic computer interfaces,” or “B.C.I.”

I Googled such items. I found a few for $500 USD that may fit the description in my so-called “ideological fantasy.”

Bionic headgear and interface: input commands and directions to robotic units and vehicles.

Such a system would allow handicapped people, and also people in my condition (with nerve sensations damaged by M.S. or S.L.E., or even visual impairments), to drive cars and control wheelchairs (for quadriplegics), and could even connect cameras to goggles for vision (helping the blind to see).

Controlling personal computers, drones, weapons, and other common entertainment electronics with simply a “thought” would change how one could interact. Especially in a world limited by Nerve damage, disease, or handicap.

My “Bionic S Campaign” is a headband with brainwave EEG connectors and an interface that would detect, amplify, and then direct those waves to the interpreter box. The interpreter box would then tell the intended software and hardware what to do.

Brainwaves would be detected and the wave patterns, which it detected, would be interpreted as certain commands or “willed reactions” by the interpreter.

Imagine that you are sitting in an electric battery powered go kart.

A box to receive the commands would be installed in place of a steering wheel and accelerator.

The headband would then be placed on the subject's head.

Brain sensors would be positioned to pick up their intended brainwaves, just as an electroencephalogram (EEG) picks up a subject's brainwaves to measure the activity of their thoughts.

An interpreter box would look for such waves. Using the frequency or “wave form” picked up (like a modem and the audio signal of two computers communicating through tones), this “brainwave modem” would match the detected waves to commands stored in its programmed ROM.

Once the brainwave was matched to a wavelength in its command base, the machine would interpret the command and send the resulting action to the box in the vehicle.
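To make the “command base” idea concrete, here is a toy Python sketch of the lookup step: a detected frequency is matched to the nearest stored entry, and ignored if nothing is close enough. Everything in it is hypothetical, including the frequencies, the command names, and the tolerance. Real EEG signals are far noisier and would need much more processing than a single frequency lookup.

```python
# Hypothetical command table: stored frequency in Hz -> command name
COMMANDS = {10.0: "forward", 20.0: "left", 30.0: "right"}


def interpret(freq_hz, table=COMMANDS, tolerance_hz=2.0):
    """Return the command whose stored frequency is nearest, or None if too far off."""
    nearest = min(table, key=lambda stored: abs(stored - freq_hz))
    if abs(nearest - freq_hz) <= tolerance_hz:
        return table[nearest]
    return None  # no confident match, so the vehicle receives no command


for detected in (10.8, 15.0, 29.0):
    print(detected, "->", interpret(detected))
```

Returning nothing for an uncertain match matters for safety: a vehicle should not act on a signal it cannot identify.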

The subject could think of going “forward” or the words “go forward.”

Just like a bionic arm or leg, this system could integrate a human with a machine and “will” such devices without touch or voice commands.

If one wished for the vehicle to turn, they would simply think of the direction in which the vehicle should turn, and the interpreter computer would make such an action happen.

For people in wheelchairs – they could even fly a helicopter or write a novel simply by thought.

People like myself, with neuropathy and severe pain disorders, can walk, talk, and do many normal things, but are limited by a lack of feeling in parts of their bodies, distorted vision without glasses, and neurological damage caused by disease or action.

They could have their vision enhanced. They could run machinery, safely.

They could create with 3D printers connected to the interpreter computer.

They could draw beautifully, or even animate, simply by thought.

Once the funds needed are collected, I could order the parts I need. Then trials and experiments with a computer, vehicle, or bionic response unit (B.R.U.) could proceed.

Human thought could control gadgets: computer-controlled vehicles, art equipment, and many more household items, or “Will Controllable Appliances” (W.C.A.).

#bionics#computers#bionic controlled computers#brainwave controlled appliances#brainwave vehicles#kappler#inventive ideas


AAAAAAAAAAAA

It's the weekend before finals week and I just submitted my abstract for an undergraduate conference at my university.

Thanks all for keeping up with my research so far.

Read it below:

End-to-end neural networks (EENN) utilize machine learning to make predictions or decisions without being explicitly programmed to perform tasks by considering the inputs and outputs directly. In contrast, traditional hard coded algorithmic autonomous robotics require every possibility programmed. Existing research with EENN and autonomous driving demonstrates level-two autonomy where the vehicle can assist with acceleration, braking, and environment monitoring with a human observer, such as NVIDIA's DAVE-2 autonomous car system by utilizing case-specific computing hardware, and DeepPiCar by scaling technology down to a low power embedded computer (Raspberry Pi). The goal of this study is to recreate previous findings on a different platform and in different environments through EENN application by scaling up DeepPiCar with a NVIDIA Jetson TX2 computing board and hobbyist grade parts (e.g. 12V DC motor, Arduino) to represent 'off-the-shelf' components when compared to DAVE-2. This advancement validates that the concept is scalable to using more generalized data, therefore easing the training process for an EENN by avoiding dataset overfitting and production of a system with a level of 'common sense'. Training data is collected via camera input and associating velocity and encoder values from a differential drive ground vehicle (DDGV) with quadrature motors at 320x240 resolution with a CSV database. Created datasets are fed into an EENN analogous to the DAVE-2 EENN layered structure: one normalization, five convolutional, three fully connected layers. The EENN is considered a convolutional neural network (assumes inputs are images and learns filters, e.g. edge detection, independently from a human programmer), and accuracy is measured by comparing produced velocity values against actual values from a collected validation dataset. 
An expected result is the DDGV navigates a human space and obstacles by the EENN only inputting sensor data and outputting velocities for each motor.
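For anyone curious how the five convolutional layers shrink a camera frame, here's a small Python sketch that computes the feature-map shape going into the fully connected layers. The filter counts, kernel sizes, and strides are my assumption, taken from NVIDIA's published DAVE-2 description rather than from the abstract above, and the network in the study may differ.

```python
def conv_out(size, kernel, stride):
    """Output length of an unpadded convolution along one dimension."""
    return (size - kernel) // stride + 1


# (filters, kernel size, stride) per layer, assumed from the published DAVE-2 design
DAVE2_CONVS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]


def feature_shape(height, width, layers=DAVE2_CONVS):
    """Return (channels, height, width) after all convolutional layers."""
    channels = 3  # RGB input
    for channels, kernel, stride in layers:
        height = conv_out(height, kernel, stride)
        width = conv_out(width, kernel, stride)
    return channels, height, width


print(feature_shape(66, 200))   # DAVE-2 input size: (64, 1, 18)
print(feature_shape(240, 320))  # 320x240 frames:    (64, 23, 33)
```

With these layer settings a 320x240 frame leaves 64 × 23 × 33 = 48,576 values to flatten, compared with 1,152 for the original 66x200 input, so the first fully connected layer would be much larger unless frames are cropped or resized.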


Raspberry Pi is a technology that has made a big impact since its introduction to the public. It is a versatile single-board computer that can handle many of the tasks a desktop computer can. Its low price and large support community also widen the range of applications the board supports. It is a good way for people of all ages to learn about programming and computing. Raspberry Pi Based Projects introduce students to the main ideas of the Raspberry Pi and help them build real-time applications.

Why Are Raspberry Pi Based Projects Important?

The Raspberry Pi is a good learning tool because it is low-cost, easy to replace, and needs only a keyboard and a television to run. These same advantages also make it a suitable way to start computing in the developing world. In a real-time work environment, theoretical knowledge alone won’t be of help. A newcomer needs to focus on the basics and learn how things work, and Raspberry Pi Based Projects are a practical way to understand the concepts.

Where can you find the best Projects?

Pantech eLearning, an online learning service provider in Chennai, offers the latest Raspberry Pi based projects. We have modern tools and experienced professionals to guide you.

Given below is the list of the top 10 Raspberry Pi Based Projects we provide:

Smart ATM Security

In this system, only the valid card holder, or someone acting with the account holder's knowledge, can use the ATM card. If an unauthorized person inserts the card, their picture is sent along with an OTP to the account holder's email.

Eyeball Movement Based Wheel Chair Control

In this method, a camera is focused on the eye and OpenCV is used to find the centroid of the eyeball. By tracking the eyeball's movement, the wheelchair can be moved accordingly.
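The centroid step can be sketched in plain Python. The example below assumes the pupil has already been segmented into a binary mask (1 for pupil pixels), which is the part OpenCV would normally handle. The `steer` function, the dead zone, and the command names are hypothetical and only illustrate how a centroid could map to a wheelchair direction.

```python
def centroid(mask):
    """Return (cx, cy) of the nonzero pixels in a 2D list, or None if there are none."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def steer(mask, dead_zone=0.15):
    """Map the pupil's horizontal offset from the image center to a command."""
    center = centroid(mask)
    if center is None:
        return "stop"  # eye closed or pupil not found
    width = len(mask[0])
    offset = (center[0] - (width - 1) / 2) / width  # normalized horizontal offset
    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "forward"


# Tiny made-up mask with the pupil toward the right edge of the image
looking_right = [
    [0, 0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 0, 0],
]
print(steer(looking_right))  # right
```

The dead zone keeps small, involuntary eye movements from turning the chair.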

Fire Fighting Robot

The robot detects the presence of fire, moves toward it, and automatically pours water or turns on an extinguisher.

Road Sign Recognition

The system, built on a Raspberry Pi, recognizes speed limit signs, stop signs, and warning signs such as pedestrian crossings and railroad crossings.

White Light Detection Based Adaptive Lighting

The main aim is to control the brightness of the headlight so that it properly illuminates the path, making it easier to spot pedestrians on a curved road.

Smart Blind Stick

This project uses a pre-trained model with an accuracy of more than 90%. It can also be customized to recognize other objects using transfer learning.

Smart Trolley Based on Color Tracking

This trolley can help people move things, adding comfort and efficiency to their activities.

Digital Door Lock With Mail Alert

The goal of this project is to design and implement a home security system that integrates a smartphone and the home network service to protect the home while residents are away.

Drowsiness Detection while Driving

The main objective of this project is driver safety and security, using an autonomous, region-based automatic car system.

Health Monitoring System

Real-time medical data collected by wearable medical sensors is transmitted to a diagnostic center.



Student scientists want to help all of us survive a warmer world

This has been a year full of the unexpected. COVID-19 has forced people indoors, closed schools and killed more than 220,000 Americans. Besides the pandemic, there have been hurricanes, wildfires, protests and more. Behind some of these upheavals, though, is an unfolding event that shows no sign of stopping: climate change.

Climate change is contributing to many of the disasters that have been changing lives around the world. Here we meet students who have been using science to help people better understand and deal with the effects of our changing climate.

Their research helped place them among 30 finalists at the 10th Broadcom MASTERS. MASTERS stands for Math, Applied Science, Technology and Engineering for Rising Stars. It’s a research program open to middle-school investigators. Society for Science & the Public (which publishes Science News for Students) created the event. Broadcom Foundation, headquartered in Irvine, Calif., sponsors it.

Some of the kids developed systems to help keep people safe as climate change makes life more unpredictable. Others developed ways to save precious resources, such as water and oil. And one student applied lessons from a study done in a cereal bowl to model melting glaciers.

Fighting fire and flood with science

Like many Californians, Ryan Honary, 12, has personal experience with wildfires. A student at Pegasus School in Huntington, he was with his father at an Arizona tennis tournament when he saw wildfires raging across his home state on TV. “The hills that were burning looked just like the hills behind my house,” Ryan recalls. “I called my mom and asked if she was okay.” Once he knew that she was, he asked his dad why wildfires got out of control so often. “We’re planning to send people to Mars but we can’t detect wildfires,” Ryan says.

That’s when Ryan decided to create a way to detect wildfires early — before they get out of control. He linked together a series of Raspberry Pi computers. Some of these tiny units were fitted to detect smoke, fire and humidity (how much water is in the air). Their sensors relayed data wirelessly to another Raspberry Pi. This slightly bigger computer served as a mini meteorological station. He estimates that each sensor would cost around $20, and the mini stations would cost $60 each.

Ryan Honary shows off his wildfire detector. H. Honary

Ryan brought his entire system to a park and tested it by holding the flame from a lighter in front of each sensor. When these sensed a fire, they informed the detector. It then alerted an app that Ryan built for his phone. While creating that app, Ryan talked with Mohammed Kachuee. He’s a graduate student at the University of California, Los Angeles. Kachuee helped Ryan use machine learning to train his app with data from the large 2018 Camp Fire. The app took lessons from how this fire had traveled over time. Using those data, the app “learned” to predict how flames at future events might spread.

Someday, Ryan hopes his sensors might be deployed throughout his state. “Five of the worst fires in California just happened in the last three months,” he notes. “So it’s pretty obvious that global warming and climate change is just making the fire problems worse.”

Stronger hurricanes are another symptom of a warming climate. Heavy rain and storms can produce sudden floods that appear and disappear locally within minutes. One such flash flood provided a memorable experience for Ishan Ahluwalia, 14.

Ishan Ahluwalia had a tire roll on a wet treadmill to create a warning system for when a car might slip. A. Ahluwalia

It was a rainy day in Portland, Ore. “My family was driving on a highway,” recalls the now ninth grader at Jesuit High School in the city. “We were driving at the speed limit.” But a sudden sheet of water on the road made the car swerve. It was hydroplaning. This occurs when water builds up beneath a tire, he explains. With no friction, the tire slips “and the car slips as well.” This can lead to accidents.

Ishan was surprised that there was no system in a car to sense when tires were about to slide. So he went to his garage and put a small tire on a treadmill. He hooked the wheel up to a computer with an accelerometer inside. As the treadmill moved and the wheel rolled, he ran water down the belt to make synthetic rain. The computer then measured the friction between the wheel and the belt as differing amounts of rain fell.

Then, like Ryan, Ishan used machine learning. “In middle school, my science teachers really helped me get the project off the ground,” he says. But the next step was to talk to an engineer who works at nearby Oregon Health and Science University. With that engineer’s guidance, Ishan trained the system he built to associate different types of weather with how much water was on the road. It could then link those water levels with a car’s ability to maneuver.

If installed in a car, Ishan says, this system might offer a notice in green, yellow or red to warn people when they faced a risk of losing control of steering or braking. It also could help people drive more safely as heavy rains and flooding become more common.
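The green, yellow or red notice Ishan describes can be pictured as a simple mapping from an estimated grip level to a color. The sketch below is only an illustration: the function name, the 0-to-1 friction scale and both thresholds are assumptions, not values from his project.

```python
def warning_color(friction_estimate, caution=0.5, danger=0.25):
    """Map a hypothetical 0-to-1 friction estimate to a warning color.

    The scale and the two thresholds are assumed for illustration only.
    """
    if not 0.0 <= friction_estimate <= 1.0:
        raise ValueError("friction_estimate must be between 0 and 1")
    if friction_estimate <= danger:
        return "red"      # high risk of losing steering or braking control
    if friction_estimate <= caution:
        return "yellow"   # reduced grip, drive carefully
    return "green"        # normal grip


# Print the color for three made-up readings.
for reading in (0.8, 0.4, 0.1):
    print(reading, warning_color(reading))
```

A real system would have to derive the friction estimate from sensor data first; this sketch covers only the final step of turning that estimate into a notice for the driver.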

Saving water and stopping snails

Just as it’s possible to have way too much rain, it’s also possible to have far too little. Pauline Estrada’s home, in Fresno, Calif., is in one such drought-prone region. The eighth grader at Granite Ridge Intermediate School saw nearby farmers watering their fields. In dry regions like hers, no drop would be wasted. So she sought a way to help growers predict when their plants truly needed water. Right now, Pauline says, farmers measure soil moisture to see if their plants are thirsty. But, she notes, that doesn’t show if the plant itself is suffering.

Pauline Estrada made this rover seen in a farm field. Her so-called Infra-Rover scans plants to determine if they need water. P. Estrada

Luckily, this 13-year-old had a rover lying around. She had built the robot vehicle from a kit. The teen also built an infrared camera. It makes images at light wavelengths the human eye can’t see. Infrared light often is used to map heat. A hotter plant is a drier plant, Pauline explains. When a plant has enough water, she says, “it lets water go through its leaves.” This cools the air on the surface of the leaf. But if the plant is dry, it will hold in water, and the leaf surface will be hotter.

Pauline attached the camera to her rover and drove it around pepper plants that she grew in pots. Sure enough, her roving camera could spot when these plants needed water. Then, with the help of Dave Goorahoo, a plant scientist at Fresno State University, she ran her rover around pepper plants in a farm field.

Her Infra-Rover currently scans only one plant at a time. Pauline hopes to scale up her system to observe many at once. She also plans to work on a system to predict when hot plants will need water — before they get parched. “It’s important to not waste water during climate change,” she says. Water them when they need it, she says, not before.

Explainer: CO2 and other greenhouse gases

Once those crops are grown, they’ll need to be shipped to hungry people the world over. Many will travel on huge cargo ships powered by large amounts of fossil fuels. In fact, cargo ships account for three percent of all carbon dioxide emitted into the air each year.

Those ships would burn less fuel if they encountered less friction at sea, known as drag, reasoned Charlotte Michaluk. The 14-year-old is now a ninth grader at Hopewell Valley Central High School in Pennington, N.J. A scuba diver since sixth grade, Charlotte knew that one source of drag was stuff growing on the hulls of ships. Barnacles, snails and other organisms contribute to this biofouling. Their lumpy bodies increase drag, making ships work harder and burn more fuel.

Charlotte runs water down a ramp to determine how much drag it creates. She hopes that better materials will help ships use fewer climate-warming fuels to sail the seas. C. Michaluk

Charlotte opted to design a new, more slippery coating for a ship’s hull so that fewer creatures would be able to hitch a ride. She tested different materials in the aquatic version of a wind tunnel. Charlotte loves woodworking and crafting. “My family knows where I’ve been in the house based on my trail of crafting materials,” she says. She designed a ramp that she could coat with different materials. Then she measured the force of the water flowing off different metal and plastic coatings to calculate their drag.

One material proved really good at reducing drag. Called PDMS, it’s a type of silicone — a material made of chains of silicon and oxygen atoms. Charlotte also tested some surfaces that had been based on mako shark skins. The sharklike drag-limiting scales, known as denticles, worked well at cutting the ramp’s drag.

But would they also prevent other creatures from latching on? To find out, Charlotte went hunting for small bladder snails in local streams. She put the snails in her water tunnel and observed how well they were able to cling to different surfaces. PDMS and the fake mako skin prevented snails from sticking.

“Biofouling is a really big problem,” she says. Affected ships will consume more fossil fuels. And that, she explains, “contributes to global warming.” She hopes her discoveries might someday help ships slip more easily through the water — and save fuel.

Eyes on ice

It might seem like a kid from Hawaii wouldn’t spend a lot of time thinking about glaciers. But Rylan Colbert, 13, sure does. It started when this eighth grader at Waiakea Intermediate School in Hilo first saw news of an experiment on how dams might collapse. Those tests had studied how piles of rice cereal collapsed in milk.

The cereal was puffed rice. But Rylan was soon thinking about ice. “I think I had shaved ice [a popular treat in Hawaii] the day before and I was thinking about it,” he says. “And that led me to thinking about glaciers” and how their collapse might affect polar regions.

Rylan decided to study if shaved ice would collapse into water as the rice cereal had in the study he had read about. For a little guidance, he emailed Itai Einav. He’s a coauthor of the rice cereal study at the University of Sydney in Australia. Einav emailed back and became “kind of my mentor,” Rylan says.

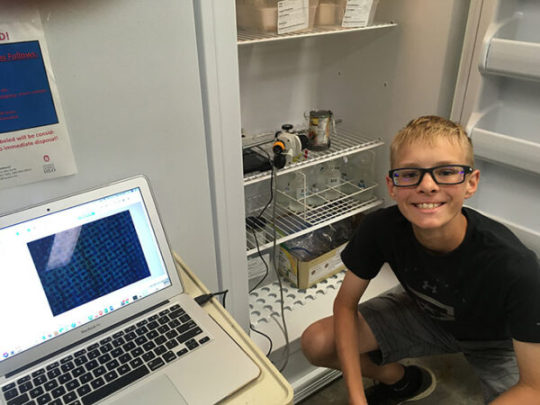

Rylan Colbert next to his tiny shaved ice “glacier.” To model a cold environment, he kept his science project in the refrigerator. S. Colbert

Using a refrigerator in his father’s lab at the University of Hawaii, Rylan filled beakers with a layer of gravel. Then he added a layer of shaved ice to serve as his model glacier. “The density of the shaved ice was about the density of freshly fallen snow,” he says. That’s really important, he says; it simulates how glaciers develop. “That’s the start of the process.”

He set a microscope on its side in the fridge to monitor exactly what happened. “To simulate global warming, I pumped water under the shaved ice and it compacted,” Rylan notes. He tested water pumped in at -1° Celsius (30° Fahrenheit) and then again at 8 °C (46 °F). That warmer water simulated a warming ocean.
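The two water temperatures above are given in both Celsius and Fahrenheit. For readers who want to check the conversion, here is a minimal sketch using the standard formula F = C × 9/5 + 32; the function name is just an illustrative choice.

```python
def celsius_to_fahrenheit(celsius):
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# The two water temperatures used in the experiment.
for celsius in (-1, 8):
    fahrenheit = celsius_to_fahrenheit(celsius)
    print(f"{celsius} °C is about {round(fahrenheit)} °F")
```

Rounded to whole degrees, the results match the 30 °F and 46 °F quoted in the text.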

Ice with warmer water below it compacted seven percent faster than ice atop very cold water, Rylan showed. He hopes his research might help people understand how glaciers form (or don’t), as the world warms.

Doing more scientific research around climate change is key, he believes. Eventually, he says, “it’ll hit home with somebody and they’ll say, ‘Hey, let’s stop this.’”

Pauline hopes that more research also will prompt more action. “We should take all measures to prevent [climate change] as much as possible,” she says. “At the rate it’s going, it’s going to destroy the planet.”

Student scientists want to help all of us survive a warmer world published first on https://triviaqaweb.tumblr.com/


Text

Artificial Intelligence Books For Beginners | Top 17 Books of AI for Freshers

Artificial Intelligence (AI) has taken the world by storm. Almost every industry across the globe is incorporating AI for a variety of applications and use cases. Some of its wide range of applications includes process automation, predictive analysis, fraud detection, improving customer experience, etc.

AI is widely seen as the future of technological and economic development. As a result, career opportunities for AI engineers and programmers are bound to increase drastically in the next few years. If you have no prior knowledge of AI but are keen to learn and start a career in this field, the following 17 books on artificial intelligence will be quite helpful:

List of 17 Best AI Books for Beginners

1. Artificial Intelligence: A Modern Approach

– By Stuart Russell & Peter Norvig

This book on artificial intelligence has been considered by many as one of the best AI books for beginners. It is less technical and gives an overview of the various topics revolving around AI. The writing is simple and all concepts and explanations can be easily understood by the reader.

The concepts covered include subjects such as search algorithms, game theory, multi-agent systems, statistical Natural Language Processing, local search planning methods, etc. The book also touches upon advanced AI topics without going in-depth. Overall, it’s a must-have book for any individual who would like to learn about AI.

2. Machine Learning for Dummies

– By John Paul Mueller and Luca Massaron

Machine Learning for Dummies provides an entry point for anyone looking to get a foothold in machine learning. It covers all the basic concepts and theories of machine learning and how they apply to the real world. It introduces a little coding in Python and R to teach machines to perform data analysis and pattern-oriented tasks.

From small tasks and patterns, the readers can extrapolate the usefulness of machine learning through internet ads, web searches, fraud detection, and so on. Authored by two data science experts, this Artificial Intelligence book makes it easy for any layman to understand and implement machine learning seamlessly.

3. Make Your Own Neural Network

– By Tariq Rashid

This is one of the books on artificial intelligence that takes its readers on a step-by-step journey through the mathematics of neural networks. It starts with very simple ideas and gradually builds up an understanding of how neural networks work. Using the Python language, it encourages readers to build their own neural networks.

The book is divided into three parts. The first part deals with the various mathematical ideas underlying the neural networks. Part 2 is practical where readers are taught Python and are encouraged to create their own neural networks. The third part gives a peek into the mysterious mind of a neural network. It also guides the reader to get the codes working on a Raspberry Pi.

4. Machine Learning: The New AI

– By Ethem Alpaydin

Machine Learning: The New AI gives a concise overview of machine learning. It describes its evolution, explains important learning algorithms, and presents example applications. It explains how digital technology has advanced from number-crunching machines to mobile devices, putting today’s machine learning boom in context.

The book on artificial intelligence gives examples of how machine learning is being used in our day-to-day lives and how it has infiltrated our daily existence. It also discusses the future of machine learning and the ethical and legal implications for data privacy and security. Any reader with a non-Computer Science background will find this book interesting and easy to understand.

5. Fundamentals of Machine Learning for Predictive Data Analytics: Algorithms, Worked Examples, and Case Studies

– By John D. Kelleher, Brian Mac Namee, Aoife D’Arcy

This AI Book covers all the fundamentals of machine learning along with practical applications, working examples, and case studies. It gives detailed descriptions of important machine learning approaches used in predictive analytics.

Four main approaches are explained in very simple terms without much technical jargon. Each approach is described using algorithms and mathematical models illustrated by detailed worked examples. The book is suitable for those who have a basic background in computer science, engineering, mathematics or statistics.

6. The Hundred-Page Machine Learning Book

– By Andriy Burkov

Andriy Burkov’s “The Hundred-Page Machine Learning Book” is regarded by many industry experts as the best book on machine learning. For newcomers, it gives a thorough introduction to the fundamentals of machine learning. For experienced professionals, it gives practical recommendations from the author’s rich experience in the field of AI.

The book covers all major approaches to machine learning. They range from classical linear and logistic regression to modern support vector machines, boosting, Deep Learning, and random forests. This book is perfect for those beginners who want to get familiar with the mathematics behind machine learning algorithms.

7. Artificial Intelligence for Humans

– By Jeff Heaton

This book helps its readers get an overview and understanding of AI algorithms. It is meant to teach AI for those who don’t have an extensive mathematical background. The readers need to have only a basic knowledge of computer programming and college algebra.

Fundamental AI algorithms such as linear regression, clustering, dimensionality, and distance metrics are covered in depth. The algorithms are explained using numeric calculations which the readers can perform themselves and through interesting examples and use cases.

8. Machine Learning for Beginners

– By Chris Sebastian

As per its title, Machine Learning for Beginners is meant for absolute beginners. It traces the history of the early days of machine learning to what it has become today. It describes how big data is important for machine learning and how programmers use it to develop learning algorithms. Concepts such as AI, neural networks, swarm intelligence, etc. are explained in detail.

This Artificial Intelligence book provides simple examples for the reader to understand the complex math and probability statistics underlying machine learning. It also provides real-world scenarios of how machine learning algorithms are making our lives better.

9. Artificial Intelligence: The Basics

– By Kevin Warwick

This book provides a basic overview of different AI aspects and the various methods of implementing them. It explores the history of AI, its present, and where it will be in the future. The book has interesting depictions of modern AI technology and robotics. It also gives recommendations for other books that have more details about a particular concept.

The book is a quick read for anyone interested in AI. It explores issues at the heart of the subject and provides an illuminating experience for the reader.

10. Machine Learning for Absolute Beginners: A Plain English Introduction

– By Oliver Theobald

This is one of the few artificial intelligence books that explain the theoretical and practical aspects of machine learning techniques in a very simple manner. It uses plain English to keep beginners from being overwhelmed by technical jargon. It has clear and accessible explanations with visual examples for the various algorithms.

Apart from learning the technology itself for business applications, there are other aspects of AI that enthusiasts should know about: the philosophical, sociological, ethical and humanitarian concepts, among others. Here are some books that will help you understand these aspects of AI for a larger picture, and also help you take part in intelligent discussions with peers.

Philosophical Books

11. Superintelligence: Paths, Dangers, Strategies

– By Nick Bostrom

Recommended by both Elon Musk and Bill Gates, the book talks about steering a course through the unknown terrain of AI. The author of this book, Nick Bostrom, is a Swedish-born philosopher and polymath. His background and experience in computational neuroscience and AI lay the groundwork for this marvel of a book.

12. Life 3.0

– By Max Tegmark

This AI book by Max Tegmark will surely inspire anyone to dive deeper into the field of Artificial Intelligence. It covers the larger issues and aspects of AI including superintelligence, physical limits of AI, machine consciousness, etc. It also covers the aspect of automation and societal issues arising with AI.

Sociological Books

13. The Singularity Is Near

– By Ray Kurzweil

Ray Kurzweil was called a ‘restless genius’ by the Wall Street Journal and is also highly praised by Bill Gates. He is a leading inventor, thinker, and futurist who takes a keen interest in the field of Artificial Intelligence. In this AI book, he talks about the aspect of AI that many of us fear most, i.e., the ‘Singularity’. He talks extensively about the union of humans and machines.

14. The Sentient Machine

– By Amir Husain

This book challenges our societal norms and our assumptions about a ‘good life’. Amir Husain, a brilliant computer scientist, points out that the age of Artificial Intelligence is the dawn of a new kind of intellectual diversity. He guides us through the ways we can embrace AI in our lives for a better tomorrow.

15. The Society of Mind

– By Marvin Minsky

Marvin Minsky is the co-founder of the AI Laboratory at MIT and has authored a number of great Artificial Intelligence Books. One such book is ‘The Society of Mind’ which portrays the mind as a society of tiny components. This is the ideal book for all those who are interested in exploring intelligence and the aspects of mind in the age of AI.

Humanitarian Books

16. The Emotion Machine

– By Marvin Minsky

In this book, Marvin Minsky presents a novel and fascinating model of how the human mind works. He also argues that conscious machines can be built to assist humans with their thinking process. In his book, he presents emotion as another way of thinking. It is a great follow-up to the book “The Society of Mind”.

17. Human Compatible — Artificial Intelligence and the Problem of Control

– By Stuart Russell

The AI researcher Stuart Russell explains the probable misuse of Artificial Intelligence and its near-term benefits. It is an optimistic and empathetic take on the journey of humanity in this day and age of AI. The author also talks about the need to rebuild AI on a new foundation, where machines are built for humanity and its objectives.

So these were some of the books on artificial intelligence that we recommend to start with. Under Artificial Intelligence, we have Machine Learning, Deep Learning, Computer Vision, Neural Networks and many other concepts which you need to touch upon. To put machine learning in context, some Basic Python Programming is also introduced. The reader doesn’t need to have any mathematical background or coding experience to understand this book.

If you are interested in the domain of AI and want to learn more about the subject, check out Great Learning’s PG program in Artificial Intelligence and Machine Learning.


Text

OpenCV AI Kit aims to do for computer vision what Raspberry Pi did for hobbyist hardware

A new gadget called the OpenCV AI Kit, or OAK, looks to replicate the success of Raspberry Pi and other minimal computing solutions, but for the growing fields of computer vision and 3D perception. Its new multi-camera PCBs pack a lot of capability into a small, open-source unit and are now seeking funding on Kickstarter.

The OAK devices use their cameras and onboard AI chip to perform a number of computer vision tasks, like identifying objects, counting people, finding distances to and between things in frame, and more. This info is sent out in polished, ready-to-use form.

Having a reliable, low cost, low power draw computer vision unit like this is a great boon for anyone looking to build a smart device or robot that might have otherwise required several discrete cameras and other chips (not to mention quite a bit of fiddling with software).

Image Credits: Luxonis

Like the Raspberry Pi, which has grown to become the first choice for hobbyist programmers dabbling in hardware, pretty much everything about these devices is open source on the permissive MIT license. And it’s officially affiliated with OpenCV, a widespread set of libraries and standards used in the computer vision world.

The actual device and onboard AI were created by Luxonis, which previously created the CommuteGuardian, a sort of smart brake light for bikes that tracks objects in real time so it can warn the rider. The team couldn’t find any hardware that fit the bill so they made their own, and then collaborated with OpenCV to make the OAK series as a follow-up.

There are actually two versions: The extra-small OAK-1 and triple-camera OAK-D. They share many components, but the OAK-D’s multiple camera units mean it can do true stereoscopic 3D vision rather than relying on other cues in the plain RGB image — these techniques are better now than ever but true stereo is still a big advantage. (The human vision system uses both, in case you’re wondering.)
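To see why a second camera helps, it is worth recalling the textbook relation behind stereo vision: for two rectified, side-by-side cameras, depth equals focal length times baseline divided by disparity (how far a point shifts between the left and right images). The sketch below illustrates that relation only. The function name and all numbers are hypothetical, and nothing here is taken from the OAK-D's actual specifications or software.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Estimate distance (meters) to a point seen by two rectified cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical values: nearer objects shift more, so disparity is larger.
for disparity in (40, 20, 10):
    depth = depth_from_disparity(800, 0.075, disparity)
    print(f"disparity {disparity} px -> about {depth:.2f} m")
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated distance. This is also why stereo depth gets less precise for faraway objects.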

The two OAK devices, with the world’s ugliest quarter for scale.

The idea was to unitize the computer vision system so there’s no need to build or configure it, which could help get a lot of projects off the ground faster. You can use the baked-in object and depth detection out of the box, or pick and choose the metadata you want and use it to augment your own analysis of the 4K (plus two 720p) images that also come through.

A very low power draw helps, too. Computer vision tasks can be fairly demanding on processors and thus use a lot of power, which was why a device like XNOR’s ultra-efficient chip was so promising (and why that company got snapped up by Apple). The OAK devices don’t take things to XNOR extremes but with a maximum power draw of a handful of watts, they could run on normal-sized batteries for days or weeks on end depending on their task.

The specifics will no doubt be interesting to those who know the ins and outs of such things — ports and cables and GitHub repositories and so on — but I won’t duplicate them here, as they’re all listed in orderly fashion in the campaign copy. Here’s the quick version:

Image Credits: Luxonis

If this seems like something your project or lab could make use of, you might want to get in quick on the Kickstarter, as there are some deep discounts for early birds, and the price will double at retail. $79 for the OAK-1 and $129 for the OAK-D sound like bargains to me based on their stated capabilities (they’ll be $199 and $299 eventually). And Luxonis and OpenCV are hardly fly-by-night organizations hawking vaporware, so you can back the campaign with confidence. Also, they flew past their goal in like an hour, so no need to worry about that.

from RSSMix.com Mix ID 8176395 https://techcrunch.com/2020/07/14/opencv-ai-kit-aims-to-do-for-computer-vision-what-raspberry-pi-did-for-hobbyist-hardware/ via http://www.kindlecompared.com/kindle-comparison/


Text

Wireless Glove for Hand Gesture Acknowledgment: Sign Language to Discourse Change Framework in Territorial Dialect- Juniper Publishers

Abstract

Deaf and mute people generally use sign language to communicate, but they find it difficult to do so in a society where most people do not understand sign language. As a result, communication between a deaf-mute person and a hearing person has always been a challenging task. The idea proposed in this paper is a digital wireless glove that converts sign language to text and speech output. The glove is embedded with flex sensors and a 9-degree-of-freedom (9DOF) inertial measurement unit (IMU), which track the orientation of the fingers and the motion of the hand in three-dimensional space, sensing a person’s gestures as the bend of the fingers and the tilt of the fist. The system was tested for its feasibility in converting sign language; it gives continuous speech output in a regional language and also shows the text on a GLCD module. While the displayed text is in English, the voice output of the glove is in a regional language (here Kannada). The glove thus acts as a communicator, which in part helps users get their needs met, and as an interpreter, giving greater flexibility in communication. Although the glove is intended for sign language to speech conversion, it is a multipurpose glove that finds applications in gaming, robotics and the medical field. In this paper, we propose an approach to avoid the gap between customer and software robotics development. We define an EUD (End-User Development) environment based on the visual programming environment Scratch, which has already proven itself with children learning computer science. We explain the interests of the environment and show two examples based on the Lego Mindstorms and on the Robosoft Kompai robot.

Keywords: Sign Language; Flex Sensors; State Estimation Method; 3D Space; Gesture Recognition

Abbreviations: ANN: Artificial Neural Networks; SAD: Sum of Absolute Difference; IMU: Inertial Measurement Unit; ADC: Analog to Digital Converter; HCI: Human Computer Interface

Introduction

About nine thousand million people in the world are deaf and mute. How often do we come across these people communicating with the hearing world? Communication between deaf people and the general public is a more serious issue than communication between visually impaired people and the general public. Because communication is a fundamental aspect of life, this leaves deaf and mute people very little room to participate. Blind people can talk freely in spoken language, whereas deaf-mute people have their own manual-visual language, popularly known as sign language [1]. Sign language is the non-verbal form of communication used by deaf and mute people; it uses gestures instead of sound to convey a speaker's thoughts fluidly. A gesture in a sign language is a particular movement of the hands with a specific shape made out of them [2]. Glove-based systems, the most popular devices for hand movement acquisition, began to be developed about 30 years ago and continue to engage a growing number of researchers. The conventional approach to gesture recognition is to use a camera-based system to track hand gestures. A camera-based system is comparatively less user friendly, as it is difficult to carry around.

The main aim of this paper is to discuss the novel concept of a glove-based system that efficiently translates sign language gestures to audible voice as well as text, and that also promises to be portable [3]. Many languages are spoken around the world, and sign language itself varies from region to region, so this system aims to give the voice output in regional languages (here Kannada). A few attempts have been made in the past to recognize sign language gestures using cameras, leaf switches and copper plates, but limitations in response time and recognition rate kept those gloves from being portable. There are two well-known approaches: image processing, and a processor- and sensor-based data glove [4]. These are also known as vision-based and sensor-based techniques. Our system is one such sensor-based effort to overcome this communication barrier. It senses hand movement through flex sensors and an inertial measurement unit and transmits the data wirelessly to the Raspberry Pi, the main processor, which accepts digital data as input, processes it according to instructions stored in its memory, and outputs the results as text on a GLCD display and as voice.

Background Work

People who are deaf or mute are isolated in the modern workplace as well as in everyday life, which leads them to live in their own separate communities. There have been improvements in hearing aids and cochlear implants for deaf people, and in artificial voice boxes for mute people with vocal cord damage. However, these solutions do not come without drawbacks and costs [5]. Cochlear implants have even caused a major controversy in the deaf community, and many refuse to even consider such solutions. We therefore believe society still needs an effective solution to remove the communication barrier between deaf and mute people and non-signing people. Our proposed solution and goal is to design a Human Computer Interface (HCI) device that translates sign language to text and speech in a regional language, giving any deaf or mute person the ability to communicate easily with anyone [6]. The idea is to design a device worn on the hand, with sensors capable of estimating hand gestures, that transmits the data to a processing unit which performs the sign language translation. The final product will be able to interpret sign language efficiently and give the intended output. We hope to improve the quality of life of deaf and mute people with this device.

Comparison of Background Related Work

(Table 1)

Problem Definition

With a world population of around 7.6 billion today, communication is a powerful means of understanding each other. Around nine thousand million individuals are deaf and mute. Because the vocal articulation of people with speech impairment is not intelligible, they need a specific skill, such as holding a static hand shape and orientation to give a sign, to communicate with the general population; this manual-visual language is popularly known as sign language. They find it hard to communicate in a society where most people do not understand sign language. Hence they have little room to express themselves and cannot communicate with a wider community [7].

Literature Survey

The system explores the use of a glove to provide sign language translation in a way that improves on the methods of earlier designs. A few university research groups have taken the initiative to build prototype devices as a proposed solution to this problem; these devices focus on reading and analyzing hand movement. However, they fall short in capturing the full range of motion that sign language requires, including wrist rotation and complex arm movements (Table 2).

Proposed System

(Figure 1)

Block Diagram Explanation

The sensor-based system is designed using four 4.5-inch and two 2.2-inch flex sensors, which measure the degree to which the fingers are bent. The bend is sensed as a resistance value, which is largest at the smallest bend radius. Each flex sensor is wired into a potential divider network, which sets the output voltage across two resistors connected as shown in Figure 2.

The output voltage is determined using the following equation,

where R1 is the flex sensor resistance and R2 is the input resistance.
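As a reference point, the general two-resistor divider relation can be written as below. This is a sketch under the assumption that the output is taken across R2, with the flex sensor R1 on the supply side; Figure 2 determines the actual arrangement, and if the output is instead taken across R1, the numerator becomes R1. The symbols V_in and V_out are introduced here for illustration.

```latex
V_{out} = V_{in} \cdot \frac{R_2}{R_1 + R_2}
```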

The external resistor and the flex sensor form a potential divider that divides the input voltage by a ratio determined by the variable and fixed resistors. For a particular gesture, the current changes as the resistance of the flex sensor changes, and this change is read as analog data. One terminal of the flex sensor is connected to 3.3 V and the other terminal to ground to close the circuit. A 9-degree-of-freedom Ardu inertial measurement unit (IMU), which supplies the accelerometer and gyroscope readings, is placed on top of the hand to determine hand position. The corresponding 3D coordinates are collected by the IMU as input data [8]. The impedance values from the flex sensors and the IMU coordinates for each gesture are recorded to build the database, which contains the values assigned to different finger movements.

When data from both the flex sensors and the IMU is fed to the Arduino Nano, it is computed and compared with the predefined dataset to detect the precise gesture, and is then transmitted wirelessly to the central processor, the Raspberry Pi, via a Bluetooth module. The Raspberry Pi 3 is programmed to display the text output on the GLCD. The graphic LCD is interfaced with the Raspberry Pi 3 using a 20-bit universal serial bus in order to avoid a breadboard connection between the processor and the display, and a 10 kΩ trim potentiometer is used to control the brightness of the display unit.

To provide audible speech, a pre-embedded regional-language voice output is assigned to each condition, in the same way as the text database is mapped to the impedance values. Two speakers are used, with a single 3.5 mm jack for the audio connection and a USB connection to power them [9]. When text is displayed, the processor looks up the corresponding voice signal, which is played through the speakers.
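The comparison of a reading against the stored per-gesture values can be sketched as a tolerance-bounded nearest-template match. This is only an illustration: the gesture names, the stored numbers, the tolerances and the function name `match_gesture` are all hypothetical, and the paper does not state which matching rule was actually used.

```python
from typing import Optional, Sequence

# Hypothetical gesture database: five flex ADC counts followed by
# roll, pitch, yaw in degrees. All numbers are illustrative only.
GESTURE_DB = {
    "hello": (310, 305, 298, 300, 420, 0.0, 10.0, 0.0),
    "water": (180, 175, 300, 302, 415, 45.0, 0.0, 5.0),
}

FLEX_TOL = 20      # assumed tolerance, in ADC counts
ANGLE_TOL = 10.0   # assumed tolerance, in degrees


def match_gesture(reading: Sequence[float], db=GESTURE_DB) -> Optional[str]:
    """Return the closest gesture whose template lies within tolerance."""
    best_name, best_err = None, float("inf")
    for name, template in db.items():
        flex_diffs = [abs(r - t) for r, t in zip(reading[:5], template[:5])]
        angle_diffs = [abs(r - t) for r, t in zip(reading[5:], template[5:])]
        # Reject the template if any single channel is out of tolerance.
        if max(flex_diffs) > FLEX_TOL or max(angle_diffs) > ANGLE_TOL:
            continue
        err = sum(flex_diffs) + sum(angle_diffs)
        if err < best_err:
            best_name, best_err = name, err
    return best_name  # None means no stored gesture matched
```

Rejecting on the worst single channel keeps one badly bent finger from being averaged away by four good ones. The sketch does not handle angle wrap-around (for example, yaw near ±180°).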

Methodology (Figure 3)

a) The gesture serves as the input to the system and is measured by both sensors: the flex sensors report it in terms of impedance, and the IMU gives digital values.

b) The values from the flex sensors are analog in nature and are given to the Arduino Nano, which uses its built-in analog-to-digital converter to convert the resistive values to digital values.

c) The IMU uses its accelerometer and gyroscope sensors to measure the displacement and position of the hand.

d) The values from both sensors are fed to the Arduino Nano, which compares them with the values stored in the predefined database and then transmits this digital data wirelessly to the main processor over Bluetooth.

e) The central processor, the Raspberry Pi 3, is programmed in Python to process the received digital signals and generate the text output, such as characters, numbers and pictures. The text output is shown on the graphic LCD display, and a text-to-speech engine, here the espeak converter, then produces the corresponding voice output [10].

Finally, the system delivers the output as text and audible voice in the regional language.
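The receiving side of steps d) and e) can be sketched in Python as follows. The frame layout (eight comma-separated fields per line), the function names and the use of the `kn` voice are assumptions made for illustration; the paper does not specify the wire format, and whether a Kannada voice is available depends on the installed espeak version.

```python
from typing import List, Tuple


def parse_frame(line: str) -> Tuple[List[int], List[float]]:
    """Parse one assumed frame 'f1,f2,f3,f4,f5,roll,pitch,yaw'.

    Returns the five flex ADC counts and the three IMU angles.
    """
    parts = line.strip().split(",")
    if len(parts) != 8:
        raise ValueError(f"expected 8 fields, got {len(parts)}")
    flex = [int(p) for p in parts[:5]]
    angles = [float(p) for p in parts[5:]]
    return flex, angles


def espeak_command(text: str, voice: str = "kn") -> List[str]:
    """Build the argument list for the espeak CLI (-v selects the voice)."""
    return ["espeak", "-v", voice, text]


# Example wiring (not executed here): after a gesture is recognized,
#   subprocess.run(espeak_command(recognized_text), check=True)
# would speak the text, provided espeak is installed on the Raspberry Pi.
```

Building the command as a list rather than a shell string avoids quoting problems when the recognized text contains spaces or non-ASCII characters.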

Prototype Implementation and its Working

In this system, the features are extracted from the hardware sensor inputs and the targets are the words in sign language. To meet the design requirements, several hardware components were considered during prototyping. Much of the hardware was selected for its ease of use and accuracy. The Ardu 9DOF IMU was selected for convenience, as it performs the computation needed for linear acceleration and gyroscopic measurements. The roll, pitch and yaw measurements were found to be accurate to roughly ±2°, which is more than sufficient for our sign language requirements. Since the flex sensors required additional analog pins, careful planning was needed to fit the circuit comfortably on the glove, and space was found to add the HC-05 Bluetooth module to the device. All the sensors must be placed so that they do not make contact with each other, which would cause short circuits and disrupt the measurement readings [11]. Electrical tape was necessary to insulate the sensors.

The system recognizes hand gestures made while wearing the glove, to which two kinds of sensor are attached. The first senses the bending of the five fingers: a 2.2-inch flex sensor for the thumb and 4.5-inch flex sensors for the other four fingers. The second is the 9-DOF inertial measurement unit, which tracks the motion of the hand in three-dimensional space in any direction using its angular coordinates (pitch, roll and yaw). Since the output of a flex sensor is resistive in nature, the values are converted to voltage using a voltage divider circuit.

The resistance of the 4.5-inch flex sensors ranges from 7 kΩ to 15 kΩ, and that of the 2.2-inch flex sensor from 20 kΩ to 40 kΩ, since the smaller the bend radius, the higher the resistance. Another 2.5 kΩ resistor is used to build a voltage divider circuit, with the 3.3 V Vcc supply taken from the Arduino Nano. The voltages from the divider circuit, being analog in nature, are given to the Arduino Nano, which has a built-in ADC [12]. The IMU senses the hand movements and gives digital values along the X, Y and Z directions, called roll, yaw and pitch respectively. The values from the IMU and the flex sensors are processed in the Arduino Nano, which is interfaced with the HC-05 Bluetooth module embedded on the glove; the module provides a range of approximately 10 meters.

The data processed in the Nano is sent wirelessly over Bluetooth to the central processor, the Raspberry Pi, which is programmed in Python to convert the received values into text by searching the database for that particular gesture. The impedance values, along with the three-dimensional IMU coordinates, are recorded for each individual gesture to build the database, which contains the collective resistance values assigned to different finger movements. When the computed data is received by the processor, it is compared with the recorded dataset to detect the precise gesture. If the values match, the processor sends the designated SPI commands to display the text for that gesture on the GLCD, and espeak provides the text-to-speech facility, giving audible voice output in the regional language through the speakers. For each subsequent gesture, the flex sensors and IMU detect it, the data is compared with the database already present in the processor, and if it matches, the result is displayed as text and spoken through the speakers [13] (Figure 4).
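To give a feel for the numbers involved, the sketch below converts the quoted resistance ranges into divider voltages and ideal ADC counts. It assumes the output is taken across the 2.5 kΩ resistor with the flex sensor on the 3.3 V side, and that the 10-bit ADC of the Arduino Nano uses its default 5 V reference; neither detail is stated in the text, and the function names are hypothetical.

```python
def divider_voltage(r_flex_ohm: float,
                    r_fixed_ohm: float = 2500.0,
                    vcc: float = 3.3) -> float:
    """Voltage across the fixed resistor (assumed topology)."""
    return vcc * r_fixed_ohm / (r_flex_ohm + r_fixed_ohm)


def adc_count(voltage: float, vref: float = 5.0, bits: int = 10) -> int:
    """Ideal ADC result: floor(V * 2^bits / Vref), clipped to full scale."""
    steps = 1 << bits
    return min(int(voltage * steps / vref), steps - 1)


if __name__ == "__main__":
    # Resistance limits quoted in the text for each sensor length.
    for label, r_ohm in [("4.5-inch min", 7_000), ("4.5-inch max", 15_000),
                         ("2.2-inch min", 20_000), ("2.2-inch max", 40_000)]:
        v = divider_voltage(r_ohm)
        print(f"{label}: {v:.3f} V -> ADC {adc_count(v)}")
```

Under these assumptions the readings occupy only a small part of the ADC range, which suggests why per-gesture thresholds need to be calibrated rather than guessed.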

Results

In this prototype system, the user forms a gesture and holds it for approximately 1 to 1.5 seconds to ensure proper recognition. Each gesture consists of bending all the fingers to certain angles. Every bend of a sensor (finger) produces a unique ADC value, so different hand gestures produce different ADC values. These ADC values were taken for 4 different users, and a table of average ADC values for each sensor is maintained, where F1, F2, F3, F4 and F5 represent the little finger, the ring finger, the middle finger, the index finger and the thumb respectively. Table 3 shows the gestures and the corresponding words voiced out. The hand signs used in the prototype can easily be modified through the ADC counts to suit the user. Likewise, the voice output can easily be changed, giving the flexibility to switch language for different regions (Figure 5).
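Building the table of average ADC values from several users' readings can be sketched as a per-finger mean. The sample numbers, the gesture name and the function name `average_templates` are hypothetical; they are not the values of Table 3.

```python
from statistics import mean
from typing import Dict, List


def average_templates(samples: Dict[str, List[List[int]]]) -> Dict[str, List[int]]:
    """Average per-finger ADC values (F1..F5) over all users.

    `samples` maps a gesture name to one [F1, F2, F3, F4, F5] row per user.
    """
    return {
        gesture: [round(mean(column)) for column in zip(*rows)]
        for gesture, rows in samples.items()
    }


# Illustrative readings from four users for one gesture (made-up values).
example = {
    "hello": [
        [300, 310, 290, 305, 420],
        [310, 300, 300, 295, 410],
        [305, 305, 295, 300, 415],
        [309, 301, 299, 300, 419],
    ]
}
templates = average_templates(example)
```

Changing a hand sign then amounts to replacing one row of averaged counts, which matches the text's point that signs can be adjusted through the ADC counts.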

Applications

a) Voice interpreter for mute people

b) No touch user interface