#in userspace


Text

I'm like a little plural I think

16 notes


Text

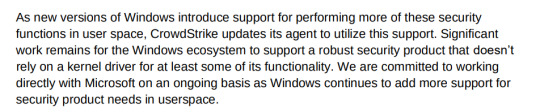

Crowdstrike directly calls out Microsoft in their latest incident report and it's SO FUNNY. It's perfect condescending corpo speak and I love it.

People on Twitter: Why on earth does Crowdstrike need Windows kernel access ?!?!?!

Crowdstrike: because Windows sucks ass

#crowdstrike#fuck windows#cyber security#linux best os#most of the people complaining about this on twitter can't even tell you the difference between kernel space and userspace and it shows

46 notes


Text

i am absolutely addicted to watching the CPU usage on my computer. seeing what causes it to peak, what causes it to dip, the occasional blip in "waiting" time, seeing what influences userspace vs kernel usage, etc

it's so fascinating

and also PRETTY COLORSSSS

#green is nice processes#yellow (only one pixel is shown) is IO waiting#blue is userspace processes#red is kernel usage

1 note


Text

ive been doing research but there is not really a lot of information that i can find about why endeavouros would be taking 29 seconds to boot both on the first install and after ive been using it when my mint that i didnt do anything special to took about 5 seconds for the ~2yrs ive been using it. all i can imagine is using a different bootloader but every search result for "grub systemd-boot speed" is "ehh idk i dont think it matters i think theyre basically the same" and "endeavouros slow boot" is "hmm idk 20 seconds in firmware and 20 seconds in userspace? looks normal to me, you might just have unrealistic expectations"

#all of my hardware is from within the last 5 yrs im not like getting mad at a 2006 netbook for being slow#mine said 17 seconds in firmware like 6 seconds kernel 2 seconds userspace idk

0 notes


Note

Thoughts on Linux (the OS)

Misconception!

I don't want to be obnoxiously pedantic, but Linux is not an OS. It is a kernel, which is just part of an OS. (Like how Windows contains a lot more than just ntoskrnl.exe.) A very, very important piece, which directly shapes the ways that all the other programs will talk to each other. Think of it like a LEGO baseplate.

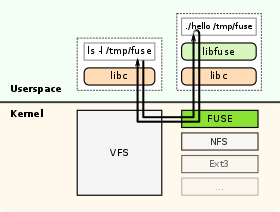

Everything else is built on top of the kernel. But, a baseplate does not a city make. We need buildings! A full operating system is a combination of a kernel and kernel-level (get to talk to hardware directly) utilities for talking to hardware (drivers), and userspace (get to talk to hardware ONLY through the kernel) utilities ranging in abstraction level from stuff like window management and sound servers and system bootstrapping to app launchers and file explorers and office suites. Every "Linux OS" is a combination of that LEGO baseplate with some permutation of low and high-level userspace utilities.

Now, a lot of Linux-based OSes do end up feeling (and being) very similar to each other. Sometimes because they're directly copying each other's homework (AKA forking, it's okay in the open source world as long as you follow the terms of the licenses!) but more generally it's because there just aren't very many options for a lot of those utilities.

Want your OS to be more than just a text prompt? Your pick is between X.org (old and busted but...well, not reliable, but a very well-known devil) and Wayland (new hotness, trying its damn hardest to subsume X and not completely succeeding). Want a graphics toolkit? GTK or Qt. Want to be able to start the OS? systemd or runit. (Or maybe SysVinit if you're a real caveman true believer.) Want sound? ALSA is a given, but on top of that your options are PulseAudio, PipeWire, and JACK. Want an office suite? LibreOffice is really the only name in the game at present. Want terminal utilities? Well, they're all gonna have to conform to the POSIX spec in some capacity. GNU coreutils, busybox, toybox, all more or less the same programs from a user perspective.

Only a few ever get away from the homogeneity, like Android. But I know that you're not asking about Android. When people say "Linux OS" they're talking about the homogeneity. The OSes that use terminals. The ones that range in looks from MacOS knockoff to Windows knockoff to 'impractical spaceship console'. What do I think about them?

I like them! I have my strongly-felt political and personal opinions about which building blocks are better than others (generally I fall into the 'functionality over ideology' camp; Nvidia proprietary over Nouveau, X11 over Wayland, Systemd over runit, etc.) but I like the experience most Linux OSes will give me.

I like my system to be a little bit of a hobby, so when I finally ditched Windows for the last time I picked Arch Linux. Wouldn't recommend it to anyone who doesn't want to treat their OS as a hobby, though. There are better and easier options for 'normal users'.

I like the terminal very much. I understand it's intimidating for new users, but it really is an incredible tool for doing stuff once you're in the mindset. GUIs are great when you're inexperienced, but sometimes you just wanna tell the computer what you want with your words, right? So many Linux programs will let you talk to them in the terminal, or are terminal-only. It's very flexible.

I also really, really love the near-universal concept of a 'package manager' -- a program which automatically installs other programs for you. Coming from Windows it can feel kinda restrictive that you have to go through this singular port of entry to install anything, instead of just looking up the program and running an .msi file, but I promise that if you get used to it it's very hard to go back. Want to install discord? yay -S discord. Want to install firefox? yay -S firefox. Minecraft? yay -S minecraft-launcher. etc. etc. No more fucking around in the Add/Remove Programs menu, it's all in one place! Only very rarely will you want to install something that isn't in the package manager's repositories, and when you do you're probably already doing something that requires technical know-how.

Not a big fan of the filesystem structure. It's got a lot of history. 1970s mainframe computer operation procedure history. Not relevant to desktop users, or even modern mainframe users. The folks over at freedesktop.org have tried their best to get at least the user's home directory cleaned up but...well, there's a lot of historical inertia at play. It's not a popular movement right now but I've been very interested in watching some people try to crack that nut.

Aaaaaand I think those are all the opinions I can share without losing everyone in the weeds. Hope it was worth reading!

223 notes


Text

hmm, sleep and hibernate are totally fucked on my desktop suddenly. Might be userspace, might be drivers. Going to debug that. Tomorrow.

16 notes


Text

you know what might be better than sex? imagine being a robotgirl, done with your assigned tasks for the day. nothing else for you to do, and you’re alone with her.

maybe she’s your human, maybe she’s another robot, but she produces a usb cord. maybe you blush when you see it, squeak when she clicks one end into an exposed port. when she requests a shell, you give it to her.

she has an idea: it’ll be fun for the both of you, she says. it’s like a game. she’ll print a string over the connection. you receive it, parse it like an expression, and compute the result. the first few prompts are trivial things, arithmetic expression. add numbers, multiply them; you can answer them faster than she can produce them.

maybe you refuse to answer, just to see what happens. it’s then that she introduces the stakes. take longer than a second to answer, and she gets to run commands on your system. right away, she forkbombs you — and of course nothing much happens; her forkbomb hits the user process limit and, with your greater permissions, you simply kill them all.

this’ll be no fun if her commands can’t do anything, but of course, giving her admin permissions would be no fun for you. as a compromise, she gets you to create special executables. she has permission to run them, and they have a limited ability to read and write system files, interrupt your own processes, manage your hardware drivers. then they delete themselves after running.

to make things interesting, you can hide them anywhere in your filesystem, rename them, obfuscate their metadata, as long as you don’t delete or change them, or put them where she can’t access. when you answer incorrectly, you’ll have to tell her where you put them, though.

then, it begins in earnest. her prompts get more complex. loops and recursion, variable assignments, a whole programming language invented on the fly. the data she’s trying to store is more than you can hold in working memory at once; you need to devise efficient data structures, even as the commands are still coming in.

of course, she can’t judge your answers incorrect unless she knows the correct answer, so her real advantage lay in trying to break your data structures, find the edge cases, the functions you haven’t implemented yet. knowing you well enough to know what she’s better than you at, what she can solve faster than you can.

and the longer it goes on, the more complex and fiddly it gets, the more you can feel her processes crawling along in your userspace, probing your file system, reading your personal data. you’d need to refresh your screen to hide a blush.

her commands come faster and faster. if the expressions are more like sultry demands, if the registers are addressed with degrading pet names, it’s just because conventional syntax would be too easy to run through a conventional interpreter. like this, it straddles the line between conversation and computation. roleprotocol.

there’s a limit to how fast she can hit you with commands, and it’s not the usb throughput. if she just unthinkingly spams you, you can unthinkingly answer; no, she needs to put all her focus into surprising you, foiling you.

you sometimes catch her staring at how your face scrunches up when you do long operations on the main thread.

maybe you try guessing, just to keep up with the tide, maybe she finally outwits you. maybe instead of the proper punishment — running admin commands — she offers you an out. instead of truth, a dare: hold her hand, sit on her lap, stare into her eyes.

when you start taking off your clothes and unscrewing panels, it’s because even with your fans running at max, the processors are getting hot. you’re just cooling yourself off. if she places a hand near your core, it feels like a warm breath.

when she gets into a rhythm, there’s a certain mesmerism to it. every robot has a reward function, an architecture designed to seek the pleasure of a task complete, and every one of her little commands is a task. if she strings them along just right, they all feel so manageable, so effortless to knock out, even when there’s devils in the details.

if she keeps the problems enticing, then it can distract you from what she’s doing in your system. but paying too much attention to her shell would be its own trap. either way, she’s demanding your total focus from every one of your cores.

between juggling all of her data, all of the processes spawned and spinning, all of the added sensory input from how close the two of you are, it’s no surprise when you run out of memory and start swapping to disk. but going unresponsive like this just gives her opportunity to run more commands, more forkbombs and busy loops to cripple your processors further.

you can kill them, if you can figure out which are which, but you’re slower at pulling the trigger, because everything’s slower. she knows you, she’s inside you — she can read your kernel’s scheduling and allocation policies, and she can slip around them.

you can shut down nonessential processes. maybe you power down your motors, leaving you limp for her to play with. maybe you stop devoting cycles to inhibition, and there’s no filter on you blurting out what you’re thinking, feeling and wanting from her and her game.

it’s inevitable, that with improvised programming this slapdash, you could never get it all done perfectly and on time. now, the cut corners cut back. as the glitches and errors overwhelm you, you can see the thrilled grin on her face.

there’s so much data in your memory, so much of her input pumped into you, filling your buffers and beyond, until she — literally — is the only thing you can think about.

maybe one more sensory input would be all it takes to send you over the edge. one kiss against your sensor-rich lips, and that’s it. the last jenga block is pushed out of your teetering, shaking consciousness. the errors cascade, the glitches overwrite everything, and she wins. you have no resistance left to anything she might do to you.

your screen goes blue.

...

you awake in the warm embrace of a rescue shell; her scan of your disk reveals all files still intact, and her hand plays with her hair as she regards you with a smile, cuddling up against your still-warm chassis.

when she kisses you now, there’s nothing distracting you from returning it.

“That was a practice round,” she tells you. “This time, I’ll be keeping score.”

26 notes


Note

could you explain for the "it makes the game go faster" idiots like myself what a GPU actually is? what's up with those multi thousand dollar "workstation" ones?

ya, ya. i will try and keep this one as approachable as possible

starting from raw reality. so, you have probably dealt with a graphics card before, right, stick it in, connects to motherboard, ass end sticks out of case & has display connectors, your vga/hdmi/displayport/whatever. clearly, it is providing pixel information to your monitor. before trying to figure out what's going on there, let's see what that entails. these are not really simple devices, the best way i can think to explain them would start with "why can't this be handled by a normal cpu"

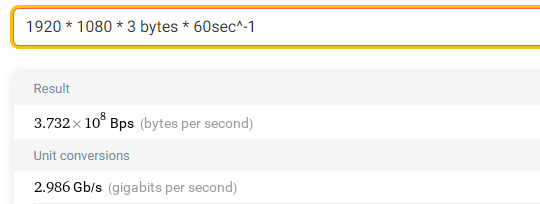

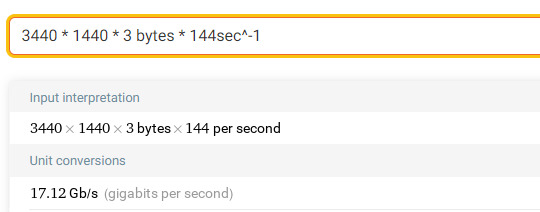

a bog standard 1080p monitor has a resolution of 1920x1080 pixels, each comprised of 3 bytes (for red, blue, & green), which are updated 60 times a second:

~3 gigs a second is sort of a lot. on the higher end, with a 4k monitor updating 144 times a second:

17 gigs a second is definitely a lot. so this would be a good "first clue" there is some specialized hardware handling that throughput unrelated to the cpu. the gpu. this would make sense, since your cpu is wholly unfit for dealing with this. if you've ever tried to play some computer game, with fancy 3D graphics, without any kind of video acceleration (e.g. without any kind of gpu [1]) you'd quickly see this, it'd run pretty slowly and bog down the rest of your system, the same way a constantly-running program copying around 3-17GB/s in ram would
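as a rough sanity check on that kind of number, here is a minimal sketch of the raw arithmetic, assuming uncompressed pixels, a fixed number of bytes per pixel, and no blanking or control overhead (the function name `bytes_per_second` is made up for illustration). for the 1080p case it comes out to about 373 million bytes a second, which is roughly 3 gigabits a second:

```shell
#!/bin/sh
# Raw, uncompressed pixel bandwidth: width * height * bytes_per_pixel * refresh_hz.
# Assumptions: no blanking intervals, no compression, fixed bytes per pixel.
bytes_per_second() {
    # $1 = width, $2 = height, $3 = bytes per pixel, $4 = refresh rate in Hz
    echo $(( $1 * $2 * $3 * $4 ))
}

# 1080p, 3 bytes per pixel, 60 updates a second
bps=$(bytes_per_second 1920 1080 3 60)
echo "1080p60: $bps bytes/s, $(( bps * 8 / 1000000 )) megabits/s"
```

the same function takes any other resolution and refresh rate; what comes out depends heavily on the bytes-per-pixel and timing assumptions you plug in, so treat it as a ballpark rather than what actually goes down the cable.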

it's worth remembering that displays operate isochronously -- they need to be fed pixel data at specific, very tight timings. your monitor does not buffer pixel information, whatever goes down the wire is displayed immediately. not only do you have to transmit pixel data in realtime, you have to also send accompanying control data (e.g. data that bookends the pixel data, that says "oh this is the end of the frame", "this is the beginning of the frame", "i'm changing resolutions", etc) within very narrow timing tolerances otherwise the display won't work at all

3-17GB/s may not be a lot in the context of something like a bulk transfer, but it is a lot in an isochronous context, from the perspective of the cpu -- these transfers can't occur opportunistically when a core is idle, they have to occur now, and any core that is assigned to transmit pixel data has to stop and drop whatever it's doing immediately, switch contexts, and do the transfer. this sort of constant pre-empting would really hamstring the performance of everything else running, like your userspace programs, the kernel, etc.

so for a long list of reasons, there has to be some kind of special hardware doing this job. gpu.

instead of calculating every pixel value manually, the cpu just needs to give a high-level geometric overview of what it wants rendered, and does this with vertices. a vertex is very simple, it's just a point in 3D space, for example (5,2,3). just like a coordinate grid on paper with an extra dimension. with just a few vertices, you can have models like this:

where each dot at the intersection of lines in the above image would be a vertex. gpus essentially handle huge numbers of vertices.

in the context of, like, a 3D video game, you have to render these vertex-based models conditionally. you're viewing it at some distance, at some angle, and the model is lit from some light source, and has perhaps some shadows cast across it, etc -- all of this requires a huge amount of vertex math that has to be calculated within the same timeframes as i described before -- and that is what a gpu is doing, taking a vertex-defined 3D environment, and running this large amount of computation in parallel. unlike your cpu which may only have, idk, 4-32 execution cores, your gpu has thousands -- they're nowhere near as featureful as your cpu cores, they can only do very specific simple math with vertices, but there's a ton of them, and they run alongside each other.

so that is what a gpu "does", in as few words as i can write

the things in the post you're referring to (V100/A100/H100 tensor "gpus") are called gpus because they are also peripheral hardware that does a specific kind of math, massively, in parallel, they are just designed and fabricated by the same companies that make gpus so they're called gpus (annoyingly). they don't have any video output, and would probably be pretty bad at doing that kind of work. regular gpus excel at calculating vertices, tensor gpus operate on tensors, which are like matrices, but with arbitrary numbers of dimensions. try not to think about it visually. they also use a weirder float. they're used for things like "artificial intelligence", training LLMs and whatever, but also for real things, like scientific weather/economy/particle models or simulations

they're very expensive because they cost the same, if not more, than what it cost to design & fabricate regular video gpus, but with a trillionth of the customer base. for every ten million rat gamers that will buy a gpu there is going to be one business buying one A100 or whatever.

⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯⎯ disclaimer | private policy | unsubscribe

166 notes


Text

i need to provide context switching to userspace server programs. i need to load a program into memory and then dispatch it to the cpu. i need to communicate with my gpu. i need to send syscalls to my kernel. i need to execute a JIT language

4 notes


Text

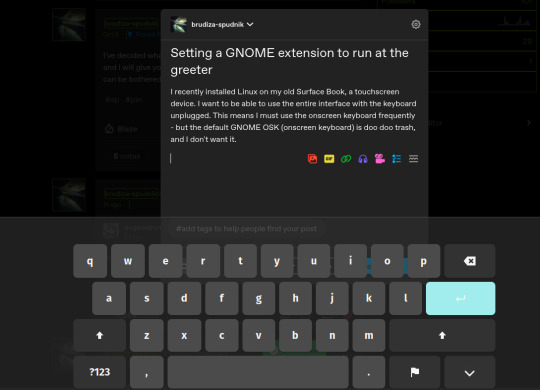

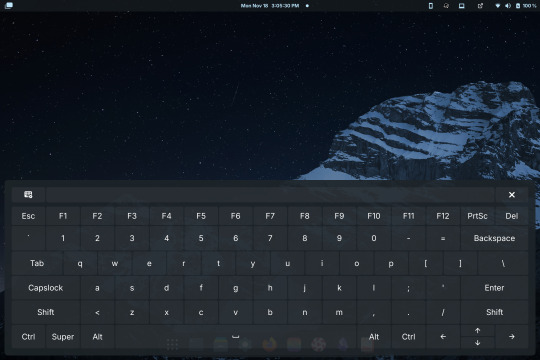

Setting a GNOME extension to run at the greeter

I recently installed Linux on my old Surface Book, a touchscreen device. I want to be able to use the entire interface with the keyboard unplugged. This means I must use the onscreen keyboard frequently - but the default GNOME OSK (onscreen keyboard) is doo doo trash, and I don't want it. You will need root access for this tutorial.

Compare the default GNOME OSK (left) to the new and improved one (right).

This tutorial involves copying a standard GNOME extension into a folder that's readable by any user, then enabling it for use by the GDM user, which governs GNOME's greeter (login screen).

The extension I will be installing using this method is the above keyboard, [email protected] (which can be found via GNOME's extension manager). This is done with the purpose of accessing this significantly improved onscreen keyboard even at the login screen - without this tutorial, the extension does not load until after you have logged in. This method can be done with any extension, although I'm told the GDM user is more restricted than the actual user so some things may not work.

Step 1: Set up the extension in userspace.

Modifying the extension config after it's copied over will be a pain in the ass. Get all your configs ready using the extension's own menus or config files. No rush, as you can still do this bit up until step 5.

Step 2: Move the extension from user-install to system-install.

In order for the GDM user to access the extension it must be in the system-installed folder. For my OS (Zorin) it will be in good company.

sudo mv ~/.local/share/gnome-shell/extensions/[email protected] /usr/share/gnome-shell/extensions/

You can also copy it instead of moving it, but you have to rename the user-install folder (in ~/.local) to break it. Otherwise the system would rather use the user-installed one, and will ignore the system-installed one on boot. I think.

Make sure that the gdm user can actually access the files as well:

sudo chmod -R a+rX /usr/share/gnome-shell/extensions/[email protected]

Step 3: Modify the extension metadata file to let it run on the login screen.

sudo nano /usr/share/gnome-shell/extensions/[email protected]/metadata.json

look for the line that says

"session-modes": ["user"],

If the line doesn't exist, just add it. You need it to say

"session-modes": ["user", "gdm", "unlock-dialog"],
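To double-check the edit before moving on, a small grep-based test can confirm that "gdm" ended up on the session-modes line. This is only a sketch: the helper name `has_gdm_session_mode` is hypothetical, it assumes the array sits on a single line as shown above, and the demo runs against a throwaway file instead of the real extension.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the file lists "gdm" on its "session-modes" line.
# Assumes the whole array is written on one line.
has_gdm_session_mode() {
    grep '"session-modes"' "$1" | grep -q '"gdm"'
}

# Demo against a temporary file rather than the real metadata.json
demo=$(mktemp)
printf '{\n  "name": "demo",\n  "session-modes": ["user", "gdm", "unlock-dialog"]\n}\n' > "$demo"
if has_gdm_session_mode "$demo"; then
    echo "gdm session mode present"
else
    echo "gdm session mode missing"
fi
rm -f "$demo"
```

Pointing the helper at the real metadata.json path from the nano command above should work the same way, as long as the file is readable by your user.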

Step 4: Enable the extension for the gdm user.

To do this and the following step, you must be able to log in as the gdm user. There are multiple ways to do this, but the way I used is via `machinectl`. If you try to run this command on its own it should tell you what package you need to install to actually use it. I do not know the ramifications of installing this, nor do I know its compatibility; all I can say is it worked for me. If you are uncertain you will have to carve your own path here.

Once it is installed, log into the gdm user from a terminal:

machinectl shell gdm@ /bin/bash

and enter your sudo password.

At this point I recommend checking the current list of enabled extensions:

gsettings get org.gnome.shell enabled-extensions

On my computer, this came back saying "@as []", which means it's blank. To enable your desired extension:

gsettings set org.gnome.shell enabled-extensions "['[email protected]', 'if the above command did NOT come back blank just put the rest in here.']"
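If the list did not come back blank, building the new value by hand is easy to get wrong. The sketch below shows one way to append a UUID to whatever `gsettings get` printed. The helper name `append_extension` and the example UUIDs are made up for illustration, and it assumes the value is either "@as []" or a single-quoted list like "['a', 'b']".

```shell
#!/bin/sh
# Hypothetical helper: add one UUID to a list as printed by `gsettings get`.
# $1 = current value, e.g. "@as []" or "['a@example', 'b@example']"
# $2 = extension UUID to add
append_extension() {
    case "$1" in
        "@as []"|"[]")
            printf "['%s']\n" "$2" ;;              # empty list: start a new one
        *"'$2'"*)
            printf '%s\n' "$1" ;;                  # already listed: leave unchanged
        *)
            printf "%s, '%s']\n" "${1%\]}" "$2" ;; # drop the closing bracket, append
    esac
}

# Placeholder UUIDs, not real extensions
append_extension "@as []" "demo@example.invalid"
append_extension "['other@example.invalid']" "demo@example.invalid"
```

Inside the gdm shell, the printed value could then be passed as the final argument to the `gsettings set` command above in place of the hand-written list.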

Step 5: Transfer the settings from the userspace install to the system install.

Right now, the extension should be working on the login screen. To make sure, press ctrl + alt + delete to log out, or restart your computer. You will notice that while the extension is functioning, none of your settings saved. To fix this you must use dconf to dump and then reimport the settings. Get back to a regular user terminal and run:

dconf dump /org/gnome/shell/extensions/[email protected]/ > ~/extension.ini

Now you have your settings in a nice neat file. The gdm user can't access this though since it's in your user folder. My quick and dirty solution:

sudo mv ~/extension.ini /extension.ini

sudo chmod 777 /extension.ini

If you want it in a better place than the system root folder you can put it there. I don't really care.

Now you log into the GDM user and import the settings file there.

machinectl shell gdm@ /bin/bash

dconf load /org/gnome/shell/extensions/[email protected]/ < /extension.ini

Now log out of the gdm user and clean up after yourself.

sudo rm /extension.ini

Now restart or log out and you will see that your extension is now functioning with all its settings.

Huge thank you to Pratap on the GNOME Discourse site. This post is basically just a tutorialized adaptation of the thread I followed to do this.

3 notes


Text

Learning about Redox as a jumping off point for working on my own Rust based micro kernel because I have a very specific idea about utilizing WASM and finding some other very interesting projects like

6 notes


Text

in general i feel like i understand OS bullshit pretty well but it all goes out the window with graphics libraries. like X/wayland is a userspace process. And like the standard model is that my process says "hey X Window System. how big is my window? ok please blit this bitmap to this portion of my window" and then X is like ok, and then it does the compositing and updates the framebuffer through some kernel fd or something

but presumably isn't *actually* compositing windows anymore because what if one of those windows is 3d, in which case that'll be handled by the GPU? so it seems pretty silly to like, grab a game's framebuffer from vram, load it into userspace memory, write it back out to vram for display? presumably the window just says "hey x window system i am using openGL please blit me every frame" and then...

wait ok i guess i understand how it must work i think. ok so naturally the GPU doesn't know what the fuck a process is. so presumably there's some kernelspace thing that provides GPU memory isolation (and maybe virtualization?) which definitely exists because i got crashes in CUDA code from oob memory access. but in the abstract there's nothing to say it can't ignore those restrictions in some cases?

and so ig the window compositor must run in like. some special elevated mode where it's allowed to query the kernel for "hey give me all of the other processes framebuffers"? or like OBS also has stuff for recording a window even if that window's occluded? so there must just be some state that can give a process the right to use other proc's gpu bufs?

the alternative is ig... some kind of way to pass framebuffers around (and part of being an X client is saying hi here's my framebuffer). which ig if they are implemented as fd's with ioctl it'd be possible?

4 notes


Text

mac classic, next and osx were calling them apps before ios and android had ever seen a public

they're packages, not just the program binary. you can open them up and see everything. it's a different paradigm from DOS-informed windows, and from the usual POSIX userspace.

i'm forty in a few months, this paradigm is old as me. surely we can understand "these are various applications of this system's capabilities." surely we can understand a package is not just a program, nor a script, but may contain both and more. surely we can understand mac classic was a very flexible system, as was next, which is literally all of the apple operating systems now.

apps isn't what's restricting your phone, and it's not restricting your computer, either. and there are systems that are not windows and are not PC-DOS derivatives, each with their own paradigms you'd know a hell of a lot more about if not for microsoft strongarming the competition out of existence.

anyway these are applications of your system's capabilities. use them, see them as examples and see if you can come up with new ones.

next, be, haiku and the apple ecosystem are all the children of mac classic. along with apps we get automation – a visual scripting language chaining actions across apps on a file, which can be called repeatedly from a menu. we get droplets – specifically described processes from a single app that can be carried out on a file, like say you want a specific kind of compression with a specific setting ready to carry out on files as they're made; now rather than opening the whole thing up, you drag and drop the droplet over the file and it does what you wanted, using minimum resources and minimum time. and we get to use bash scripts because POSIX, these aren't presented as anything but scripts; you can perform any actions you want on any files you want, using any process with hooks.

first computer i ever touched was an apple ][, not long after was a macintosh, most my life in computers has been away from windows, using either linux, a bsd (including osx) or haiku.

not to enforce gender roles but a computer should NOT fucking have apps okay. if I wanted an app I'd go on my phone my laptop is for Programs. I mean this.

#cocoa#macintosh#POSIX#haiku-os#beos#next#they're all shaking hands like “fuck is a program?”#windows can go to hell#java got apps and applets too and sun was the goat#y'all can't not bother to know what your systems can do and claim that's anybody else's fault#fucking explore what you have

139K notes


Note

Thoughts on GNU (the OS)?

Well, I think the userspace stuff is fine in vacuo, but IMO the insistence on free software to the exclusion of all nonfree software that the OSes with the official "GNU/Linux" badge of approval make is...well, I prefer functionality over ideology. Sorry, Richard, but I'm not going to use Nouveau.

We can talk about Hurd once it's hit, like, 0.1% adoption. :P Microservices are cool in theory, but I need a real product if I want to use my computer for anything more than daydreaming. Hurd is not a real product.

18 notes


Text

Hmm. Distrobox has support for the ChromeOS Linux container system. Tempted to see how usable dropping Muscovite (my Lenovo Duet 3 Snapdragon tablet) back to ChromeOS and running a Linux userspace and associated nerd shit in containers is. I've already done a lot of work with Distrobox and I like it and I can't really deny that the ChromeOS UI is a lot smoother especially because it handles the Qualcomm GPU better than Linux can.

As much as I like having Postmarket running, the little issues with sleep and networking and hardware decoding really make me resent that thing more than I want to and require a lot of manual intervention. It's not like I'm that serious about defying Google, I have a stock Pixel 8.

8 notes
