#latency solutions

Text

5G, Edge AI, and the Death of the Cloud?

Is the cloud… dying? Not quite. But 5G and Edge AI are challenging its dominance, and fast. In 2025, real-time computing is moving closer to devices, and businesses are waking up to the power of local intelligence over remote dependence. Let’s break down what’s happening and why it matters for your business. What is Edge AI? Edge AI refers to AI models…

0 notes

Text

Enterprise-Grade Datacenter Network Solutions by Esconet

Esconet Technologies offers cutting-edge datacenter network solutions tailored for enterprises, HPC, and cloud environments. With support for high-speed Ethernet (10G to 400G), Software-Defined Networking (SDN), InfiniBand, and Fibre Channel technologies, Esconet ensures reliable, scalable, and high-performance connectivity. Their solutions are ideal for low-latency, high-bandwidth applications and are backed by trusted OEM partnerships with Cisco, Dell, and HPE. Perfect for businesses looking to modernize and secure their datacenter infrastructure. For more details, visit the Esconet Datacenter Network Page.

#Datacenter Network#Enterprise Networking#High-Speed Ethernet#Software-Defined Networking (SDN)#Infiniband Network#Fibre Channel Storage#Network Infrastructure#Data Center Solutions#10G to 400G Networking#Low Latency Networks#Esconet Technologies#Datacenter Connectivity#Leaf-Spine Architecture#Network Virtualization#High Performance Computing (HPC)

0 notes

Text

#Low latency IoT#Energy-efficient IoT solutions#5G IoT#LPWAN for IoT#IoT device communication#IoT network scalability#Physical layer security IoT

0 notes

Text

#VIAVI Solutions#low-latency#OFC2025#AI#Networking#OpticalTesting#DataCenters#Innovation#electronicsnews#technologynews

1 note

Text

there should be a practical way to filter out the poe whisper sound from firefox (aka youtube/twitch) so I don't get confused

#path of exile#path of exile 2#like im sure a very high effort solution is possible but it'd probably still have a latency and/or perf cost#maybe if we get an ah I will be free finally lol

0 notes

Text

The Synergy Between NDI and LiveU in Live Broadcasting Workflows: Transforming Remote Production and Cloud Solutions

New Post has been published on https://thedigitalinsider.com/the-synergy-between-ndi-and-liveu-in-live-broadcasting-workflows-transforming-remote-production-and-cloud-solutions/

In this article by Daniel Pisarski for LiveU, the author explores the powerful synergy between NDI (Network Device Interface) and LiveU, two game-changing technologies in the world of live broadcasting. Since NDI’s introduction in 2015, LiveU has recognized its potential as a simple, IP-based protocol for local interconnectivity, providing broadcasters with the ability to easily integrate video contributions from remote locations. By incorporating NDI into its receive servers like the LU2000 and LU4000, LiveU enabled broadcasters to seamlessly inject video into IP workflows, further enhancing broadcast quality and efficiency. This integration, coupled with LiveU’s proprietary Reliable Transport (LRT™), allows broadcasters to transmit live video content over cellular networks, leveraging the simplicity and scalability of NDI for efficient IP video transmission.

As NDI’s influence in live broadcasting has grown, it has become a popular choice for a variety of industries, including live sports, news, and streaming. Initially embraced in Pro-AV, live streaming, and houses of worship, NDI has expanded its reach into larger broadcast environments, gaining significant traction in the wake of the COVID-19 pandemic. The protocol’s scalability and ability to support cloud-based workflows made it the ideal solution for broadcasters navigating remote production challenges. While larger broadcasters were initially hesitant due to concerns over competing standards like SMPTE ST 2110, NDI’s ability to support cloud workflows and its increasing industry support have made it an attractive option for broadcasters seeking to modernize their operations.

NDI stands out for its low latency, lossless quality, and wide adoption, making it the preferred protocol for broadcasters operating in private cloud environments. Although alternatives like JPEG XS are emerging, NDI’s ease of use, cost-effectiveness, and widespread support have solidified its role as the go-to solution for cloud production. As broadcasters increasingly migrate to virtual private clouds, the ability to seamlessly integrate technologies like LiveU’s bonded cellular solutions with NDI interconnect solutions is essential for maintaining efficient workflows.

The COVID-19 pandemic highlighted the need for flexible, cloud-based broadcasting solutions. As broadcasters struggled with limited access to traditional facilities, cloud production became a critical solution, enabling them to continue producing content remotely. LiveU played a key role in this transition, providing a ground-to-cloud video contribution solution, while NDI facilitated in-cloud interconnectivity. This combination of LiveU’s reliable transport and NDI’s interconnect solutions allowed broadcasters to scale operations, remain agile, and embrace the flexibility of remote production.

The collaboration between LiveU and NDI has reshaped live broadcasting, offering broadcasters greater efficiency, scalability, and the ability to work seamlessly in cloud-based environments. As broadcasters continue to explore the benefits of cloud workflows and remote production, the integration of NDI and LiveU technologies will remain pivotal in driving innovation and improving live broadcast operations.

Read the full article by Dan Pisarski for LiveU HERE

#adoption#agile#Article#author#Cloud#cloud solutions#clouds#Collaboration#content#covid#driving#efficiency#Facilities#Full#game#Industries#Industry#Innovation#integration#IP#it#latency#Learn#network#networks#News#Pandemic#Private Cloud#Production#Read

0 notes

Text

Problem: TECH ISSUES WITH YOUR VR HEADSET?!

Solution: How about removing the headset first and disconnecting from the horrific dystopia it just created for you on behalf of the AI it was programmed with.

But that would be too easy being a horror movie and all, wouldn't it?!

#latency#movie#movies#problem#solution#anti ai#dystopia#ai generated#artificial intelligence#ai art#ai girl#ai artwork#ai#a.i. generated#a.i. art#a.i.

0 notes

Text

Optimizing Connectivity: The Role of Edge Colocation in Today’s Digital Landscape

Edge Colocation is a rising trend in the data centre industry, representing a shift towards decentralized infrastructure to meet the demands of emerging technologies. As the market evolves, companies are increasingly adopting edge colocation solutions to enhance their IT capabilities and address the challenges posed by latency-sensitive applications.

Edge colocation refers to the deployment of data centre facilities at the edge of the network, closer to end-users and devices. This approach aims to reduce latency, enhance performance, and accommodate the growing volume of data generated by IoT devices, 5G networks, and other edge computing applications.

The emergence of edge colocation is driven by the need for faster data processing and reduced latency, critical factors in industries like gaming, healthcare, and autonomous vehicles. Traditional centralized data centres face limitations in delivering real-time responses for these applications, making edge colocation an attractive solution.

Why Companies are Adopting Edge Colocation

Reduced Latency

Edge colocation minimizes data travel distances, leading to lower latency and improved application performance. This is crucial for applications requiring real-time interactions, such as online gaming and autonomous vehicles.

Scalability

Edge colocation allows companies to scale their IT infrastructure more efficiently by distributing resources across multiple edge locations. This flexibility is beneficial for businesses experiencing unpredictable growth or seasonal spikes in demand.

Enhanced Reliability

By decentralizing data centres, companies can enhance the reliability of their services. Redundant edge locations provide backup support, reducing the risk of downtime in the event of a localized failure.

Compliance and Data Sovereignty

Edge colocation helps address regulatory and compliance requirements by allowing companies to store and process data within specific geographic regions. This is particularly important in industries with strict data sovereignty regulations.
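To put the Reduced Latency point above in rough numbers, here is a minimal sketch of how distance alone bounds round-trip time. It assumes light travels through optical fibre at roughly 200 km per millisecond (about two thirds of its speed in a vacuum). The two distances are hypothetical and chosen only for illustration, and real latency also includes routing, queuing, and processing time.

```typescript
// Approximate speed of light in optical fibre: about two thirds of c,
// i.e. roughly 200,000 km/s or 200 km per millisecond (an approximation).
const FIBRE_KM_PER_MS = 200;

// Minimum round-trip propagation delay, in milliseconds, for a given
// one-way fibre distance in km. Ignores routing, queuing, and processing.
function roundTripMs(distanceKm: number): number {
  return (2 * distanceKm) / FIBRE_KM_PER_MS;
}

const centralKm = 2000; // hypothetical distance to a centralized data centre
const edgeKm = 50; // hypothetical distance to an edge colocation site

console.log(`central: ${roundTripMs(centralKm)} ms`); // central: 20 ms
console.log(`edge: ${roundTripMs(edgeKm)} ms`); // edge: 0.5 ms
```

Under these assumptions, the edge site removes about 19.5 ms of unavoidable delay per round trip, before any other source of latency is counted.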

Download the sample report of Market Share: Edge Colocation

Market Intelligence Reports

Quadrant Knowledge Solutions provides valuable insights into the edge colocation market through two key reports:

Market Share: Edge Colocation, 2022, Worldwide

This report outlines the market landscape, identifying key players and their respective market shares. Understanding the competitive landscape is crucial for businesses looking to make informed decisions about their edge colocation providers.

Market Forecast: Edge Colocation, 2022–2027, Worldwide

The forecast report provides insights into the future trends and growth opportunities in the edge colocation market. This information is invaluable for companies planning their long-term IT strategies and investments in edge infrastructure.

The Significance of Market Research Reports for Edge Colocation

In the rapidly evolving landscape of edge computing, companies are increasingly turning to market research reports to navigate the complexities of edge colocation. Here’s why these reports are crucial:

Industry Insight and Trends

- Market research reports provide in-depth insights into the current state of the edge colocation market, offering a comprehensive overview of emerging trends, challenges, and opportunities.

- Understanding industry dynamics allows companies to make informed decisions about their edge computing strategies, ensuring they align with market trends.

Competitive Landscape

- Reports delve into the competitive landscape, identifying key players, their market share, and strategic initiatives.

- Companies can use this information to benchmark themselves against competitors, assess market saturation, and identify potential collaboration or differentiation opportunities.

Market Size and Forecast

- Accurate market sizing and forecasting enable businesses to gauge the growth potential of edge colocation services.

- These insights assist in strategic planning, resource allocation, and market positioning, ensuring companies are well-prepared for the future trajectory of the edge computing industry.

Risk Mitigation

- Market research reports highlight potential risks and challenges associated with edge colocation, allowing companies to proactively address issues and build resilient strategies.

- Understanding market risks helps organizations develop contingency plans, ensuring they can navigate uncertainties effectively.

Customer Insights

- Reports often include customer preferences, requirements, and satisfaction levels, providing valuable insights into the demands of end-users.

- This customer-centric data aids companies in tailoring their edge colocation services to meet the specific needs and expectations of their target audience.

Download the sample report of Market Forecast: Edge Colocation

Why Choose Quadrant Knowledge Solutions

Quadrant Knowledge Solutions stands out as a reliable source for market intelligence, and here’s why companies should turn to them for insights into edge colocation:

Expertise and Credibility

- Quadrant Knowledge Solutions is known for its industry expertise and credibility. Their reports are crafted by seasoned analysts who thoroughly understand the nuances of the market.

- The firm’s reputation for delivering accurate and insightful analyses enhances the reliability of the information presented in their reports.

Comprehensive Coverage

- Quadrant’s reports provide comprehensive coverage, offering a detailed examination of various aspects of the edge colocation market, including market share, growth drivers, challenges, and future forecasts.

- This comprehensive approach ensures that companies receive a holistic view of the market, enabling well-informed decision-making.

Timely and Relevant Information

- In the fast-paced tech industry, timeliness is crucial. Quadrant Knowledge Solutions delivers reports that are timely, ensuring that businesses receive the most up-to-date information to inform their strategies.

Customized Solutions

- Quadrant understands that one size doesn’t fit all. Their reports often include insights and recommendations tailored to different business sizes, industries, and geographies, providing companies with actionable intelligence aligned with their unique requirements.

Talk To Analyst: https://quadrant-solutions.com/talk-to-analyst

In conclusion, the rise of edge colocation signifies a paradigm shift in data centre strategies, driven by the need for low-latency, scalable, and reliable infrastructure. As companies increasingly recognize the benefits of edge computing, market intelligence reports play a pivotal role in guiding businesses toward optimal edge colocation solutions. Market research reports, particularly those offered by Quadrant Knowledge Solutions, serve as indispensable tools for companies venturing into the edge colocation space. By leveraging these reports, businesses can gain a competitive edge, mitigate risks, and strategically position themselves in the dynamic landscape of edge computing.

0 notes

Text

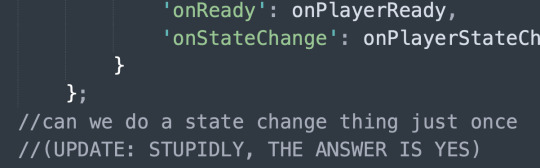

oh i forgot this one:

yeah no i refuse to believe that 170+ people have now successfully done all their remote learning course completions + tradesperson business license renewals on my stupid god damn web app.

#just use a google javascript api they said. it'll be fine & not at all like last time they said.#me @ past me#on like. i think three separate occasions now lol.#IT'S NOT MY FAULT. EVERY TIME IT IS SUCH A PAIN IN THE ASS THAT I JUST INSTANTLY REPRESS ALL MEMORY OF THE PROCESS.#the aforementioned Dumbfuck Clown Solution in the original post above was a side effect of the google maps distance matrix api lol.#apis be like#hey you know how the most fun & cool quality of javascript is how you can in no way ever trust it to execute in the order you wrote it#what if that but ALSO u gotta do several asynchronous requests for data from google servers with various degrees of latency#number of requests is variable BUT you will NEED TO BE SURE you have FINISHED ALL OF THEM before continuing to next step#oh lol you thought jquery .each would work because it has an iterator in it AAAHAHA YOU FOOL.#AGAIN YOU FALL INTO THE TRAP OF ASSUMING JAVASCRIPT WILL BEHAVE LIKE A PROGRAMMING LANGUAGE.#WHEN WILL U LEARN.
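The problem described in the tags (a variable number of asynchronous requests that must all finish before the next step) is commonly handled with `Promise.all`. The sketch below is only an illustration, not the author's code: `requestDistance` is a hypothetical stand-in that simulates a request with uneven latency, and it does not call any real Google API.

```typescript
// Hypothetical stand-in for one asynchronous API call (not a real Google API):
// resolves with a made-up distance after a simulated, uneven delay.
function requestDistance(
  destination: string
): Promise<{ destination: string; km: number }> {
  const delayMs = 10 + ((destination.length * 7) % 40);
  return new Promise((resolve) =>
    setTimeout(() => resolve({ destination, km: destination.length * 10 }), delayMs)
  );
}

// Start every request, then wait for all of them before moving on.
// Promise.all resolves only once every promise has resolved, and its
// result array keeps the input order no matter which request finishes first.
async function requestAllDistances(destinations: string[]) {
  const pending = destinations.map((d) => requestDistance(d));
  return Promise.all(pending);
}

requestAllDistances(["Springfield", "Rye", "Shelbyville"]).then((results) => {
  // This callback runs only after all three simulated requests have finished.
  console.log(results.map((r) => `${r.destination}: ${r.km} km`));
});
```

A plain loop such as jQuery's `.each` only starts the requests and returns immediately. Collecting the promises and awaiting them together is what guarantees that every response has arrived.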

7 notes

Note

Legionspace... it is... difficult for this one to grasp. It should be natural, but it is foreign.

Is it... safe enough to...

Build a bridge through?

This one would see her friends, look them in the eye, if only through data.

< L4 Ma'ii: Hello again, Styx. Since receiving your message, I've given this a bit of thought.

For your purposes, I think Legionspace is probably a poor option. I think you'll find that an Omninet connection of reasonable bandwidth and an enhanced subjectivity suite, or some other form of neural intermediary, will prove more than sufficient to emulate the experience of physically meeting your friends.

Allow me to explain. >

< I see no reason why Legionspace shouldn't be foreign to you. It's a highly specialized environment, developed for military use. Naval NHPs need intense training and regular drills to gain tactical proficiency in its use. It serves as a naval battleground, designed to consolidate, manage, and direct the digital and cognitive power of entire fleets; a terrible choice for recreation or enjoyment. I wouldn't be surprised if it has been used for such purposes before, but this is far from what it was designed to do.

Its primary function is to provide an extremely low latency, high bandwidth medium for transmission of experiences or qualia between NHPs. Such a medium is necessary to support the formation of gestalts, that is, the temporary merging of multiple NHP subjectivities into singular, remarkably powerful intelligences.

Legionspace is not meant for use by humans, except through multiple degrees of ontologic filtering and anthropocentric translation. In fact, direct exposure to Legionspace qualia can easily destroy a human subjectivity. Even experiences transmitted with entirely benign intent may be so incompatible that the human mind suffers the equivalent of a system crash on attempting to process them.

So, then, unless you're interested in merging with your friends or directly interfacing with each other's minds, use of Legionspace shouldn't be necessary. Moreover, if there are any humans you wish to meet, it may be a challenge to maintain the necessary ontologic filtering. Even with these safety measures in place, a human may find prolonged Legionspace interaction to be...draining.

An easier, more accessible solution would be a somatosensory uplink, achievable through the use of a standard enhanced subjectivity suite. Rather than directly bridging one mind to another, this process involves the use of a neural intermediary to temporarily replace the physical body with a virtual one; an avatar. In the case of humans, sensory information from the body is intercepted and replaced with computer-generated stimuli, and outgoing impulses from the brain are translated into controls for a virtual avatar.

Much of the technology was pioneered by SSC, and is used as one mode of accessing the company's virtual-reality Omninet campuses. If you've heard of full subjectivity sync cybernetics, they are an extension of the same principles, used by mech pilots to control their chassis as a human would control their own body.

It is a mature technology which has undergone a great deal of refinement, but there are still risks, especially where humans are concerned. For them, shifting to an unfamiliar body can be unpleasant, sometimes traumatic. They can, though not always, experience dysphoria, disorientation, nausea, dissociative symptoms, and a variety of other side effects. In cases where the simulated body is notably different from a humanoid one, the subjectivity may reject or recognize the unreality of the substitute body, leading to extreme distress.

On the other hand, as I understand it, exploration of alternate forms can also be a liberating, enjoyable experience. The level of comfort varies from human to human. Some are able to tolerate very unfamiliar experiences, while others find themselves unable to use somatosensory uplinking at all. For most of our kind, of course, changing from one body to another is as simple as switching input sources. While the architecture of the human brain is not naturally constructed to accommodate body replacement, we lack such impediments.

Come to think of it, Hachiko recently had reason to research this topic. We know a human mech pilot, Sokaris Kelsius, callsign Opossum ( @the-last-patch ). He was badly injured in the course of combat; we went to aid him. In the course of treating his wounds, Hachiko used his full subjectivity sync cybernetics to establish somatosensory uplink, both to facilitate communication and provide pain relief. To do so, she rapidly constructed an avatar for him which at first failed to match his physical body. This led to some dysphoric symptoms, but after some refinement of the avatar, he was able to use it seamlessly to communicate with us through our own avatars.

I bring this up because I am aware of the somewhat unique relationship you have to your own organic body. This is a factor which does not exist for most NHPs. Should you decide to pursue the use of somatosensory uplinking, I could foresee some obstacles associated with the relationship between your casket and body.

All of this might necessitate surgical implantation of a neural intermediary between your nervous system and casket, similarly to how a human would have an enhanced subjectivity suite installed between body and brain. I know that your nervous system does not support suspension or alteration of input delivered to the casket--perhaps such an implant might be beneficial in other regards, as well.

As for the other end of the equation, the avatar, it might be best to use an avatar which closely matches your physical body to avoid potentially unpleasant experiences.

Still, compared to the prospect of using Legionspace, this may be safer and simpler to achieve, and the outcomes more enjoyable. Access to Legion bridging cybernetics and Omninet connection of sufficient bandwidth to make use of them is generally restricted to military or corporate forces. It is possible, and can be used safely, but the resources required may be difficult to procure.

One other note: if you ever wish to visit us, you are more than welcome. The simulated environment we use as our home is a hybridized Legion-adjacent environment; it can support qualic transfer, but in general, we typically use physical simulations to interact with one another. This conserves processing power, and to be completely frank, pure Legionspace is an environment we associate with some very unpleasant memories.

I wish you luck, Styx. Let me know if I can help. >

#lancer rpg#lancer rp#lancer nhp#lancer oc#oc rp blog#styx-class-nhp#ooc: had to crack open Legionnaire and check on a few things for this one#ooc: the somatosensory replacement stuff is mostly me extrapolating on SSC tech btw - not canon just spitballing#ooc: woe wall of text be upon ye

18 notes

Text

The incoming German government, rattled by the prospect of U.S. President Donald Trump withdrawing security guarantees, is preparing a fundamental readjustment of its defense posture. The new coalition of Christian Democrats (CDU/CSU) and Social Democrats (SPD) has already agreed to push for changes to the debt brake that would pave the way to dramatically higher military spending. Germany’s likely next chancellor, CDU leader Friedrich Merz, stated that “in view of the threats to our freedom and peace on our continent,” the government’s new motto needs to be “whatever it takes.”

A litmus test for how serious these efforts are is whether the new government will pursue a Plan B for a possible end to the U.S. nuclear security umbrella for Germany and Europe. Berlin needs an ambitious nuclear policy rethink that includes a push to recreate nuclear sharing at the European level—with the continent’s nuclear powers, France and the U.K.—to deter Russia and other adversaries. It is also essential for Germany to invest in civilian nuclear research to maintain nuclear latency as a hedge. Fortunately, Merz has signaled willingness to do both.

As part of NATO nuclear sharing, Germany hosts about 20 U.S. B-61 nuclear bombs at the Büchel airbase. For much of the past few decades, a majority of Germans were in favor of getting these nuclear weapons out of Germany. This was part and parcel of the German desire to exit everything nuclear, be it military or civilian. As late as mid-2021, a survey published by the Munich Security Conference found that only 14 percent of Germans favored nuclear weapons on German soil.

Russia’s invasion of Ukraine led to a dramatic shift in public opinion. In mid-2022, 52 percent of Germans surveyed for Panorama magazine expressed support for keeping or even increasing U.S. nuclear weapons in Germany. Russia’s attack against nonnuclear power Ukraine, which included the threat of using such weapons to deter Europe and the United States from supporting Kyiv, clearly left a mark on the German population.

In light of such threats, it seems that a majority of Germans have concluded that it is better to be directly under a nuclear umbrella. After Russia’s invasion, German Chancellor Olaf Scholz decided to pursue a 10 billion euro ($13.85 billion) deal with the United States to buy F-35s to replace the aging Tornado fleet that would carry the U.S. nuclear bombs stored at Büchel airbase. With this deal, Scholz sought to lock in U.S. commitments to German defense.

Of all recent German chancellors, Scholz probably pursued the closest relationship with Washington. He tried to stick to this path even after the return of President Donald Trump to the White House. At the Munich Security Conference in mid-February, Scholz said: “We will not agree to any solution that leads to a decoupling of European and American security.”

That statement sounds decisive, but only if you ignore that the decision to decouple from European security lies in the hands of the United States. Berlin has no veto power here.

Scholz’s likely successor, Merz, strikes a very different tone. Before official results had been announced on the night of the Feb. 23 election, he stated: “My absolute priority will be to strengthen Europe as quickly as possible so that, step by step, we can really achieve independence from the USA.” Merz also said that it was unclear whether “we will still be talking about NATO in its current form” by the time of the bloc’s planned summit in June, “or whether we will have to establish an independent European defense capability much more quickly.”

Merz is convinced that this needs to include a Plan B for the possible end of the U.S. nuclear umbrella. The chancellor-in-waiting has proposed discussions with France and the U.K. on whether the two are willing to engage in a nuclear-sharing arrangement with Germany.

That is a sea change in the German debate. Former Chancellors Angela Merkel and Scholz had consistently ignored French President Emmanuel Macron’s offers to engage in a strategic dialogue on nuclear deterrence in Europe. In a televised address on March 5, Macron responded positively to what he referred to as Merz’s “historic call.” The French president said that he had decided “to open the strategic debate on the protection of our allies on the European continent by our [nuclear] deterrent.”

The Merz-Macron alignment provides a solid political base for discussions on Europeanizing nuclear sharing further. Of course, there are many obstacles, risks, and unanswered questions, as critics of these proposals in the German debate have been quick to point out.

It is easy to belittle “somewhat panicky policy suggestions” by an “increasing chorus of pundits and policy-makers from across the mainstream political establishment that fear US abandonment,” as German arms control researcher Ulrich Kühn has done. But given the havoc that the Trump administration has caused in only seven weeks, it is not “panicky” to think through a potential U.S. exit from the trans-Atlantic alliance. Not seriously pursuing a Plan B would be grossly irresponsible at this point.

A first requirement is a shared vision of a realistic political arrangement for Europeanizing extended deterrence and nuclear sharing. One option would be to recreate NATO’s nuclear planning group at the European level, with France and the U.K. as its nuclear anchor powers. To allow for U.K. participation, this should be done outside the formal EU framework. At its core, the planning group should include a handful of key European countries (Poland, Italy, and Germany would be certain to be among them). The EU could be collectively represented through the European Council president or the EU foreign affairs chief. Leaders of Germany and Poland have already expressed openness to concrete nuclear sharing arrangements, like having French capabilities stationed on German or Polish soil.

Of course, the final decision on any nuclear weapons use would remain with France and the U.K., as Macron also stressed during his comments on March 5. This mirrors the current arrangement with the United States. Members engaged in nuclear sharing would contribute financially to the burden of maintaining the French and British nuclear arsenal.

As early as 2019, Bruno Tertrais—one of France’s foremost nuclear strategists—discussed such an arrangement. Mindful of the obstacles, he also debunked some of the most common criticisms. For example, even combined, the limited French and U.K. arsenals would not be a full substitute for U.S. extended deterrence based on an arsenal many times that size. But that does not mean that a France- or U.K.-based deterrent would not be credible, per se. As Tertrais argued, “a small arsenal can deter a major power provided that it has the ability to inflict damage seen as unacceptable by the other party.”

It is also unconvincing to claim that a focus on Europeanizing nuclear deterrence distracts from the necessary investments in conventional deterrence (including deep precision-strike capabilities). Tertrais contended that Europeans simply need to pursue both. And yes, the U.K. does rely on Washington for key elements of its own nuclear arsenal. But the French capabilities are fully autonomous, which is crucial for credibility in light of a possible U.S. turn against Europe.

To claim that a push to Europeanize nuclear sharing would incentivize nuclear proliferation globally seems far-fetched. South Korea, Saudi Arabia, or Turkey will make their own determinations about going nuclear based on their own assessments of their security situations. And in the medium and long term, Germany and Europe also need to think about arms control and confidence-building measures with Russia.

Of course, Germans and others now looking to France and the U.K. for nuclear protection might ask themselves how stable and reliable these European nuclear powers are politically. That is a valid question. After all, in the U.K. Nigel Farage—the leader of the far-right Reform U.K. party—is making steady gains. France may be just one election away from having a president from a far-right or far-left party hostile to sharing the French nuclear deterrent.

That said, the only other option for Germany aside from a European nuclear umbrella would be to pursue its own nuclear weapons. At this stage, given the political fallout, the financial burden, and the time that it would take, making a German bomb is not a cost-effective alternative. Yet, as a hedge, Germany needs to invest in maintaining nuclear latency, that is, having the basic capabilities in place to pursue its own nuclear weapons program in a situation where it is left with no other alternative.

To this end, Germany needs to recommit to civilian nuclear research—and that should be a no-brainer for other reasons in an age of energy-intensive artificial intelligence and the need to phase out fossil fuels amid ongoing climate change. A leading economy such as Germany simply needs to be at the forefront of civilian nuclear research.

During the early days of Trump’s first term, Merkel declared that “we Europeans must truly take our destiny into our own hands.” Yet, little to no action followed. Today, we are seeing the dramatic consequences of failing to take that statement seriously.

Merz is very much right to call for switching to “hoping for the best and still preparing for the worst.” However uncomfortable this might be for many in Germany, this strategy has to include a Plan B for nuclear deterrence.


Text

The Rise of 5G and Its Impact on Mobile App Development

5G isn’t just about faster internet — it’s opening up a whole new era for Mobile App Development. With dramatically higher speeds, ultra-low latency, and the ability to connect millions of devices seamlessly, 5G is transforming how developers think about building apps. From richer experiences to smarter services, let's explore how 5G is already reshaping the mobile app landscape in 2025 and beyond.

1. Lightning-Fast Data Transfer

One of the biggest promises of 5G is much faster data transfer: peak speeds are often quoted as up to 100 times faster than 4G under ideal conditions. For mobile apps, this means that large files, high-resolution images, and HD or even 4K video content can be downloaded or streamed with far less waiting. Apps that once needed to compress their data heavily or restrict features due to bandwidth limits can now offer fuller, richer experiences with much less risk of lag.

2. Seamless Real-Time Experiences

5G dramatically reduces latency, meaning the time between a user action and the app’s response can feel almost instant. This will benefit apps that rely on real-time communication, such as video conferencing, live-streaming platforms, and online gaming. Developers can create much more responsive apps, allowing users to interact with data, people, and services with little noticeable delay.

3. The Growth of AR and VR Mobile Applications

Augmented Reality (AR) and Virtual Reality (VR) apps have been growing, but 5G takes them to another level. Because of the high bandwidth and low latency, developers can now build more complex, interactive, and immersive AR/VR experiences without requiring bulky hardware. Imagine trying on clothes virtually in real-time or exploring a vacation destination through your phone — 5G is making this possible within Mobile App Development.

4. Smarter IoT-Connected Apps

The Internet of Things (IoT) will thrive even more in a 5G environment. Smart home apps, connected car apps, fitness trackers, and other IoT applications will be able to sync and update faster and more reliably. Developers can now integrate complex IoT ecosystems into mobile apps with minimal worries about network congestion or instability.

5. Enhanced Mobile Cloud Computing

Thanks to 5G, mobile cloud computing becomes much more viable. Instead of relying solely on local device storage and processing, apps can now store large amounts of data and run processes directly in the cloud with far fewer latency problems. This allows users with even mid-range smartphones to experience high-performance features without the need for powerful hardware.
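To make the trade-off above concrete, here is a minimal sketch of how an app might decide whether to run a task in the cloud or on the device. The function names, the parameters, and the simple cost model (round trip plus upload plus cloud compute, ignoring the size of the result coming back) are assumptions for illustration, not part of any 5G or platform API.

```python
def transfer_time_s(payload_bytes: int, bandwidth_mbps: float) -> float:
    """Seconds needed to send payload_bytes over a link of bandwidth_mbps (megabits/s)."""
    return payload_bytes * 8 / (bandwidth_mbps * 1_000_000)


def should_offload(
    payload_bytes: int,
    local_time_s: float,
    cloud_time_s: float,
    uplink_mbps: float,
    rtt_s: float,
) -> bool:
    """Return True when the estimated cloud path beats running locally.

    Hypothetical cost model: one network round trip, plus upload time,
    plus cloud compute time. The download of the result is ignored.
    """
    remote_time_s = rtt_s + transfer_time_s(payload_bytes, uplink_mbps) + cloud_time_s
    return remote_time_s < local_time_s


if __name__ == "__main__":
    # Illustrative numbers only: a 5 MB payload that takes 2 s to process locally.
    for uplink in (5, 100):
        decision = should_offload(5_000_000, 2.0, 0.1, uplink, 0.02)
        print(f"uplink {uplink} Mbps -> offload: {decision}")
```

With a slow uplink the upload alone outweighs the local processing time, which is why offloading only starts to pay off once bandwidth and latency improve.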

6. Revolutionizing Mobile Commerce

E-commerce apps are set to benefit greatly from 5G. Instant-loading product pages, real-time customer support, virtual product previews through AR, and lightning-fast payment gateways will enhance user experience dramatically. This could lead to higher conversion rates, reduced cart abandonment, and greater user loyalty in shopping apps.

7. Opportunities for New App Categories

With the technical limitations of mobile networks reduced, a whole new range of apps becomes possible. Real-time remote surgeries, autonomous vehicles controlled via mobile apps, and highly advanced telemedicine solutions are just a few examples. The doors are open for mobile developers to innovate and create applications that were previously impossible.

8. Stricter Security Requirements

With 5G’s mass connectivity also comes a bigger responsibility for security. As mobile apps become more connected and complex, developers must prioritize data protection, encryption, and secure authentication methods. Building security deeply into Mobile App Development workflows will be critical to maintaining user trust.

9. More Demanding User Expectations

As 5G rolls out globally, users will expect every app to be faster, smoother, and more capable. Apps that fail to leverage the benefits of 5G may seem outdated or sluggish. This shift will push developers to continually optimize their apps to take advantage of higher speeds and smarter networking capabilities.

10. Preparing for the 5G Future

Whether you’re building entertainment apps, business solutions, healthcare tools, or gaming platforms, now is the time to adapt to 5G. Developers must start thinking about how faster speeds, greater device connections, and cloud capabilities can improve their mobile applications. Partnering with experts in Mobile App Development who understand the full potential of 5G will be key to staying ahead in a rapidly evolving digital world.


Text

The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
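A quick way to reason about the driver-configuration side of this is the buffer latency formula: one audio buffer represents buffer size divided by sample rate. The sketch below works that out; the `extra_ms` allowance for USB and driver overhead is a placeholder assumption, since the real figure depends on the interface and driver in use.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Duration of one audio buffer in milliseconds."""
    return buffer_frames / sample_rate_hz * 1000.0


def round_trip_latency_ms(
    buffer_frames: int, sample_rate_hz: int, extra_ms: float = 0.0
) -> float:
    """Rough round trip: one input buffer plus one output buffer.

    extra_ms is a hypothetical allowance for USB and driver overhead.
    """
    return 2 * buffer_latency_ms(buffer_frames, sample_rate_hz) + extra_ms


if __name__ == "__main__":
    # Compare common buffer sizes at a 44.1 kHz sample rate.
    for frames in (64, 128, 256, 512):
        print(f"{frames} frames: {buffer_latency_ms(frames, 44100):.2f} ms per buffer")
```

Halving the buffer size halves this part of the latency, at the cost of giving the computer less time to fill each buffer.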

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX, Denon Prime 4, and RANE ONE revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility
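Two of the items above can be sketched in a few lines. The first function assumes the common 1 V/octave convention, with MIDI note 60 mapped to 0 V; that reference point is an assumption, since it varies between converters. The second builds a basic OSC message by hand (null-terminated strings padded to 4 bytes, a type tag string, big-endian arguments); the address `/fx/1/wet` is a made-up example, not a mapping from any particular product.

```python
import struct


def midi_note_to_cv_volts(note: int, reference_note: int = 60) -> float:
    """Convert a MIDI note number to a 1 V/octave control voltage.

    reference_note is the note assumed to sit at 0 V (hypothetical default).
    """
    return (note - reference_note) / 12.0


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * ((-len(raw)) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build a minimal OSC message supporting int32, float32, and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(type_tags) + payload


if __name__ == "__main__":
    print(midi_note_to_cv_volts(72))        # one octave above the reference note
    print(osc_message("/fx/1/wet", 0.5))    # raw bytes, ready to send over UDP
```

In practice the resulting bytes would be sent over UDP to whatever host and port the receiving software listens on.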

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.


Text

Can Open Source Integration Services Speed Up Response Time in Legacy Systems?

Legacy systems are still a key part of essential business operations in industries like banking, logistics, telecom, and manufacturing. However, as these systems get older, they become less efficient—slowing down processes, creating isolated data, and driving up maintenance costs. To stay competitive, many companies are looking for ways to modernize without fully replacing their existing systems. One effective solution is open-source integration, which is already delivering clear business results.

Why Faster Response Time Matters

System response time has a direct impact on business performance. According to a 2024 IDC report, improving system response by just 1.5 seconds led to a 22% increase in user productivity and a 16% rise in transaction completion rates. This translates into higher revenue, better customer satisfaction, and improved scalability in industries where time is of the essence.

This is where open-source integration comes in. By making it easier for legacy systems to communicate with modern applications, it can reduce latency, improve data flow, and simplify process automation. The result is a faster, more responsive system.

Key Business Benefits of Open-Source Integration

Lower Operational Costs

Open-source tools like Apache Camel and Mule eliminate the need for costly software licenses. A 2024 study by Red Hat showed that companies using open-source integration reduced their IT operating costs by up to 30% within the first year.

Real-Time Data Processing

Traditional legacy systems often depend on delayed, batch-processing methods. With open-source platforms using event-driven tools such as Kafka and RabbitMQ, businesses can achieve real-time messaging and decision-making—improving responsiveness in areas like order fulfillment and inventory updates.
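The difference between batch and event-driven handling can be sketched without any broker at all. The example below uses Python's standard `queue` and `threading` modules as a stand-in for a message broker; it is not Kafka or RabbitMQ client code, and the inventory handler and event fields are invented for illustration.

```python
import queue
import threading

_STOP = object()  # sentinel telling the consumer to shut down


def run_event_driven(events, handler):
    """Process each event as soon as it arrives instead of waiting for a batch.

    A worker thread blocks on the queue and calls handler(event) immediately.
    Returns the handler results in arrival order.
    """
    inbox = queue.Queue()
    results = []

    def consumer():
        while True:
            event = inbox.get()
            if event is _STOP:
                break
            results.append(handler(event))

    worker = threading.Thread(target=consumer)
    worker.start()
    for event in events:
        inbox.put(event)  # in a real system, producers publish to the broker here
    inbox.put(_STOP)
    worker.join()
    return results


if __name__ == "__main__":
    inventory = {"widget": 10}  # hypothetical stock levels

    def apply_order(event):
        # Update stock the moment the order event is seen.
        inventory[event["sku"]] -= event["qty"]
        return inventory[event["sku"]]

    orders = [{"sku": "widget", "qty": 2}, {"sku": "widget", "qty": 3}]
    print(run_event_driven(orders, apply_order))
```

A batch job would collect the same orders and apply them on a schedule; here each update is visible as soon as its event is consumed.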

Faster Deployment Cycles

Open-source integration supports modular, container-based deployment. The 2025 GitHub Developer Report found that organizations using containerized open-source integrations shortened deployment times by 43% on average. This accelerates updates and allows faster rollout of new services.

Scalable Integration Without Major Overhauls

Open-source frameworks allow businesses to scale specific parts of their integration stack without modifying the core legacy systems. This flexibility enables growth and upgrades without downtime or the cost of a full system rebuild.

Industry Use Cases with High Impact

Banking

Integrating open-source solutions enhances transaction processing speed and improves fraud detection by linking legacy banking systems with modern analytics tools.

Telecom

Customer service becomes more responsive by synchronizing data across CRM, billing, and support systems in real time.

Manufacturing

Real-time integration with ERP platforms improves production tracking and inventory visibility across multiple facilities.

Why Organizations Outsource Open-Source Integration

Many internal IT teams lack the skills and resources to manage open-source integration securely and efficiently. By outsourcing to established providers, businesses can get smoother setup and support as well as improved system performance. Top open-source integration service providers like Suma Soft, Red Hat Integration, Talend, TIBCO (Flogo Project), and Hitachi Vantara offer customized solutions. These help improve system speed, simplify daily operations, and support digital upgrades, all without the high cost of replacing existing systems.


Text

#VIAVI Solutions#datacenters#low latency#Networking#FiberOptics#5G#AI#CloudComputing#powerelectronics#powermanagement#powersemiconductor


Text

DGQEX Focuses on the Trend of Crypto Asset Accounting, Supporting Corporate Accounts to Hold Bitcoin

Recently, UK-listed company Vinanz purchased 16.9 bitcoins at an average price of $103,341, for a total of approximately $1.75 million. The transaction is another notable event in the field of crypto assets. Not only does it mark the first time this traditional enterprise has incorporated bitcoin into its balance sheet, but it also highlights the evolving role of crypto assets within the global financial system. Against this backdrop, DGQEX, as a technology-driven digital currency exchange, is increasingly recognized by institutional investors for its service capabilities and technological innovation.

Institutional Adoption Drives Diversified Crypto Asset Allocation

The Vinanz bitcoin acquisition is not an isolated case. Recently, multiple multinational corporations and institutional investors have begun to include bitcoin in their portfolios. For example, a North American technology giant disclosed bitcoin holdings in its financial reports, while some sovereign wealth funds are reportedly exploring crypto asset allocation strategies. This trend reflects the growing recognition by the traditional financial sector of the anti-inflation and decentralized characteristics of crypto assets. DGQEX, by offering multi-currency trading pairs and deep liquidity, has already provided customized trading solutions for numerous institutional investors. Its proprietary smart matching engine supports high-concurrency trading demands, ensuring institutions can efficiently execute large orders in volatile markets and avoid price slippage due to insufficient liquidity.
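Price slippage on a large order is easy to illustrate: the order consumes successive price levels of the order book, so the average fill price drifts away from the best quote. The sketch below is a generic calculation over a made-up order book; it says nothing about how the DGQEX matching engine actually works.

```python
def average_fill_price(asks, quantity):
    """Average price paid when buying `quantity` against a list of asks.

    asks: list of (price, size) tuples sorted by ascending price.
    Raises ValueError if the book does not hold enough size.
    """
    remaining = quantity
    cost = 0.0
    for price, size in asks:
        take = min(remaining, size)  # fill as much as this level allows
        cost += take * price
        remaining -= take
        if remaining <= 0:
            break
    if remaining > 0:
        raise ValueError("not enough liquidity in the order book")
    return cost / quantity


def slippage_pct(asks, quantity):
    """Percentage by which the average fill exceeds the best ask."""
    best_ask = asks[0][0]
    return (average_fill_price(asks, quantity) - best_ask) / best_ask * 100.0


if __name__ == "__main__":
    # Hypothetical book: price levels and available size at each level.
    book = [(100.0, 1.0), (101.0, 1.0), (102.0, 2.0)]
    for qty in (1.0, 2.0, 4.0):
        print(f"buy {qty}: slippage {slippage_pct(book, qty):.2f}%")
```

The deeper the book at each level, the less a large order has to walk up the price ladder, which is the link between liquidity and slippage the paragraph describes.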

Technological Strength Fortifies Institutional Trading Security

With the influx of institutional funds, the security and compliance of crypto asset trading have become central concerns. David Lenigas, Chairman of Vinanz, has explicitly stated that bitcoin holdings will serve as the foundation for the company's core business value, reflecting institutional confidence in the long-term value of crypto assets. However, institutional investors now place higher demands on the technical capabilities of trading platforms. DGQEX has built a multi-layered security system through multi-signature wallets, cold storage isolation technology, and real-time risk monitoring systems. In addition, the distributed architecture of DGQEX can withstand high-concurrency trading pressures, ensuring stable operations even under extreme market conditions. These technological advantages provide institutional investors with a reliable trading environment, allowing them to focus on asset allocation strategies rather than technical risks.

Global Expansion Facilitates Cross-Border Asset Allocation for Institutions

The Vinanz bitcoin purchase is seen as a key milestone in the institutionalization of the crypto market. As institutional interest in crypto assets rises globally, demand for cross-border asset allocation has significantly increased. DGQEX has established compliant nodes in multiple locations worldwide, supports multilingual services and localized payment methods, and provides institutional investors with a low-latency, high-liquidity trading environment. Its smart routing system automatically matches optimal trading paths, reducing cross-border transaction costs. Moreover, the DGQEX API interface supports quantitative trading strategies, meeting institutional needs for high-frequency and algorithmic trading, and helping institutions achieve asset appreciation in complex market environments.

DGQEX: Empowering Crypto Asset Allocation with Technology and Service

As an innovation-driven digital currency exchange, DGQEX is committed to providing institutional investors with a secure and convenient trading environment. The platform employs distributed architecture and multiple encryption technologies to ensure user asset security. Its smart routing system and deep liquidity pools deliver low-slippage, high-efficiency execution for large trades. Furthermore, the compliance team of DGQEX continuously monitors global regulatory developments to ensure platform operations adhere to the latest regulatory requirements, providing institutional investors with compliance assurance.

Currently, the crypto asset market is undergoing a transformation from individual investment to institutional allocation. Leveraging its technological strength and global presence, DGQEX offers institutional investors one-stop digital asset solutions. Whether it is multi-currency trading, block trade matching, or customized risk management tools, DGQEX can meet the diverse needs of institutions in crypto asset allocation. Looking ahead, as more traditional institutions enter the crypto market, DGQEX will continue to optimize its services and help global users seize opportunities in the digital asset space.
