#llm application development

Explore tagged Tumblr posts

Text

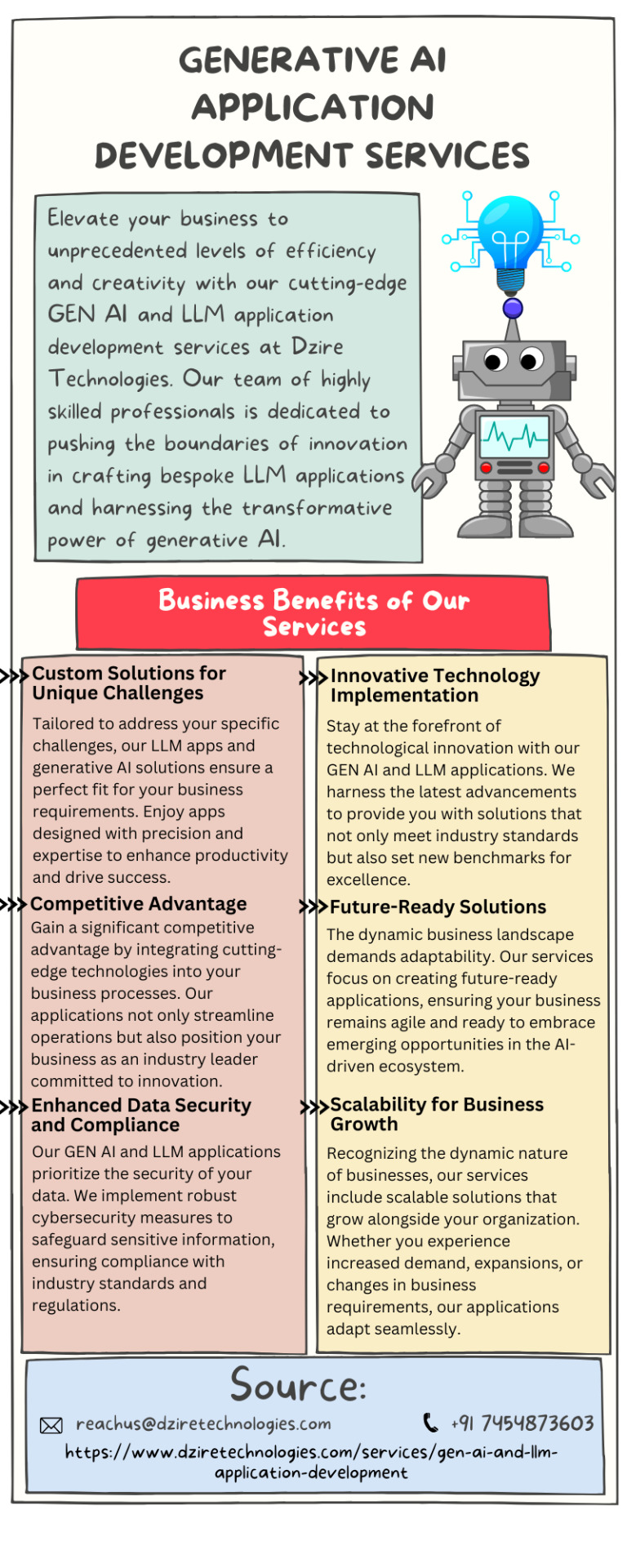

Elevate your business to unprecedented levels of efficiency and creativity with our cutting-edge GEN AI and LLM application development services at Dzire Technologies. Our team of highly skilled professionals is dedicated to pushing the boundaries of innovation in crafting bespoke LLM applications and harnessing the transformative power of generative AI.

#custom llm application development services#generative ai development services#generative ai application development services#llm application development

0 notes

Text

Simplify Transactions and Boost Efficiency with Our Cash Collection Application

Manual cash collection can lead to inefficiencies and increased risks for businesses. Our cash collection application provides a streamlined solution, tailored to support all business sizes in managing cash effortlessly. Key features include automated invoicing, multi-channel payment options, and comprehensive analytics, all of which simplify the payment process and enhance transparency. The application is designed with a focus on usability and security, ensuring that every transaction is traceable and error-free. With real-time insights and customizable settings, you can adapt the application to align with your business needs. Its robust reporting functions give you a bird’s eye view of financial performance, helping you make data-driven decisions. Move beyond traditional, error-prone cash handling methods and step into the future with a digital approach. With our cash collection application, optimize cash flow and enjoy better financial control at every level of your organization.

#seo agency#seo company#seo marketing#digital marketing#seo services#azure cloud services#amazon web services#ai powered application#android app development#augmented reality solutions#augmented reality in education#augmented reality (ar)#augmented reality agency#augmented reality development services#cash collection application#cloud security services#iot applications#iot#iotsolutions#iot development services#iot platform#digitaltransformation#innovation#techinnovation#iot app development services#large language model services#artificial intelligence#llm#generative ai#ai

4 notes

·

View notes

Text

AI without good data is just hype.

Everyone’s buzzing about Gemini, GPT-4o, open-source LLMs—and yes, the models are getting better. But here’s what most people ignore:

👉 Your data is the real differentiator.

A legacy bank with decades of proprietary, customer-specific data can build AI that predicts your next move.

Meanwhile, fintechs scraping generic web data are still deploying bots that ask: "How can I help you today?"

If your AI isn’t built on tight, clean, and private data, you’re not building intelligence—you’re playing catch-up.

Own your data.

Train smarter models.

Stay ahead.

In the age of AI, your data strategy is your business strategy.

#ai#innovation#mobileappdevelopment#appdevelopment#ios#app developers#techinnovation#iosapp#mobileapps#cizotechnology#llm ai#llm development#llm applications#generative ai#chatgpt#openai#gen ai#chatbots#bankingtech#fintech software#fintech solutions#fintech app development company#fintech application development#fintech app development services

0 notes

Text

#website development#ai solutions#custom software development#mobile application development#ui ux design#reactjs#javascript#figma#html css#generative ai#gen ai#ai writing#llm#ai technology#artificial intelligence#seo services#web development#flutter app development#hiring#ruby on rails development company#internship#freshers#career#job

0 notes

Text

LLM Application Development Services

Elevate your legal practice with our specialized LLM application development services. Tailored solutions for law firms and professionals, enhancing efficiency, organization, and client interactions.

Read More:

0 notes

Text

Humans are not perfectly vigilant

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in BOSTON with Randall "XKCD" Munroe (Apr 11), then PROVIDENCE (Apr 12), and beyond!

Here's a fun AI story: a security researcher noticed that large companies' AI-authored source-code repeatedly referenced a nonexistent library (an AI "hallucination"), so he created a (defanged) malicious library with that name and uploaded it, and thousands of developers automatically downloaded and incorporated it as they compiled the code:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

These "hallucinations" are a stubbornly persistent feature of large language models, because these models only give the illusion of understanding; in reality, they are just sophisticated forms of autocomplete, drawing on huge databases to make shrewd (but reliably fallible) guesses about which word comes next:

https://dl.acm.org/doi/10.1145/3442188.3445922

Guessing the next word without understanding the meaning of the resulting sentence makes unsupervised LLMs unsuitable for high-stakes tasks. The whole AI bubble is based on convincing investors that one or more of the following is true:

There are low-stakes, high-value tasks that will recoup the massive costs of AI training and operation;

There are high-stakes, high-value tasks that can be made cheaper by adding an AI to a human operator;

Adding more training data to an AI will make it stop hallucinating, so that it can take over high-stakes, high-value tasks without a "human in the loop."

These are dubious propositions. There's a universe of low-stakes, low-value tasks – political disinformation, spam, fraud, academic cheating, nonconsensual porn, dialog for video-game NPCs – but none of them seem likely to generate enough revenue for AI companies to justify the billions spent on models, nor the trillions in valuation attributed to AI companies:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

The proposition that increasing training data will decrease hallucinations is hotly contested among AI practitioners. I confess that I don't know enough about AI to evaluate opposing sides' claims, but even if you stipulate that adding lots of human-generated training data will make the software a better guesser, there's a serious problem. All those low-value, low-stakes applications are flooding the internet with botshit. After all, the one thing AI is unarguably very good at is producing bullshit at scale. As the web becomes an anaerobic lagoon for botshit, the quantum of human-generated "content" in any internet core sample is dwindling to homeopathic levels:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification

This means that adding another order of magnitude more training data to AI won't just add massive computational expense – the data will be many orders of magnitude more expensive to acquire, even without factoring in the additional liability arising from new legal theories about scraping:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

That leaves us with "humans in the loop" – the idea that an AI's business model is selling software to businesses that will pair it with human operators who will closely scrutinize the code's guesses. There's a version of this that sounds plausible – the one in which the human operator is in charge, and the AI acts as an eternally vigilant "sanity check" on the human's activities.

For example, my car has a system that notices when I activate my blinker while there's another car in my blind-spot. I'm pretty consistent about checking my blind spot, but I'm also a fallible human and there've been a couple times where the alert saved me from making a potentially dangerous maneuver. As disciplined as I am, I'm also sometimes forgetful about turning off lights, or waking up in time for work, or remembering someone's phone number (or birthday). I like having an automated system that does the robotically perfect trick of never forgetting something important.

There's a name for this in automation circles: a "centaur." I'm the human head, and I've fused with a powerful robot body that supports me, doing things that humans are innately bad at.

That's the good kind of automation, and we all benefit from it. But it only takes a small twist to turn this good automation into a nightmare. I'm speaking here of the reverse-centaur: automation in which the computer is in charge, bossing a human around so it can get its job done. Think of Amazon warehouse workers, who wear haptic bracelets and are continuously observed by AI cameras as autonomous shelves shuttle in front of them and demand that they pick and pack items at a pace that destroys their bodies and drives them mad:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

Automation centaurs are great: they relieve humans of drudgework and let them focus on the creative and satisfying parts of their jobs. That's how AI-assisted coding is pitched: rather than looking up tricky syntax and other tedious programming tasks, an AI "co-pilot" is billed as freeing up its human "pilot" to focus on the creative puzzle-solving that makes coding so satisfying.

But an hallucinating AI is a terrible co-pilot. It's just good enough to get the job done much of the time, but it also sneakily inserts booby-traps that are statistically guaranteed to look as plausible as the good code (that's what a next-word-guessing program does: guesses the statistically most likely word).

This turns AI-"assisted" coders into reverse centaurs. The AI can churn out code at superhuman speed, and you, the human in the loop, must maintain perfect vigilance and attention as you review that code, spotting the cleverly disguised hooks for malicious code that the AI can't be prevented from inserting into its code. As "Lena" writes, "code review [is] difficult relative to writing new code":

https://twitter.com/qntm/status/1773779967521780169

Why is that? "Passively reading someone else's code just doesn't engage my brain in the same way. It's harder to do properly":

https://twitter.com/qntm/status/1773780355708764665

There's a name for this phenomenon: "automation blindness." Humans are just not equipped for eternal vigilance. We get good at spotting patterns that occur frequently – so good that we miss the anomalies. That's why TSA agents are so good at spotting harmless shampoo bottles on X-rays, even as they miss nearly every gun and bomb that a red team smuggles through their checkpoints:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

"Lena"'s thread points out that this is as true for AI-assisted driving as it is for AI-assisted coding: "self-driving cars replace the experience of driving with the experience of being a driving instructor":

https://twitter.com/qntm/status/1773841546753831283

In other words, they turn you into a reverse-centaur. Whereas my blind-spot double-checking robot allows me to make maneuvers at human speed and points out the things I've missed, a "supervised" self-driving car makes maneuvers at a computer's frantic pace, and demands that its human supervisor tirelessly and perfectly assesses each of those maneuvers. No wonder Cruise's murderous "self-driving" taxis replaced each low-waged driver with 1.5 high-waged technical robot supervisors:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

AI radiology programs are said to be able to spot cancerous masses that human radiologists miss. A centaur-based AI-assisted radiology program would keep the same number of radiologists in the field, but they would get less done: every time they assessed an X-ray, the AI would give them a second opinion. If the human and the AI disagreed, the human would go back and re-assess the X-ray. We'd get better radiology, at a higher price (the price of the AI software, plus the additional hours the radiologist would work).

But back to making the AI bubble pay off: for AI to pay off, the human in the loop has to reduce the costs of the business buying an AI. No one who invests in an AI company believes that their returns will come from business customers to agree to increase their costs. The AI can't do your job, but the AI salesman can convince your boss to fire you and replace you with an AI anyway – that pitch is the most successful form of AI disinformation in the world.

An AI that "hallucinates" bad advice to fliers can't replace human customer service reps, but airlines are firing reps and replacing them with chatbots:

https://www.bbc.com/travel/article/20240222-air-canada-chatbot-misinformation-what-travellers-should-know

An AI that "hallucinates" bad legal advice to New Yorkers can't replace city services, but Mayor Adams still tells New Yorkers to get their legal advice from his chatbots:

https://arstechnica.com/ai/2024/03/nycs-government-chatbot-is-lying-about-city-laws-and-regulations/

The only reason bosses want to buy robots is to fire humans and lower their costs. That's why "AI art" is such a pisser. There are plenty of harmless ways to automate art production with software – everything from a "healing brush" in Photoshop to deepfake tools that let a video-editor alter the eye-lines of all the extras in a scene to shift the focus. A graphic novelist who models a room in The Sims and then moves the camera around to get traceable geometry for different angles is a centaur – they are genuinely offloading some finicky drudgework onto a robot that is perfectly attentive and vigilant.

But the pitch from "AI art" companies is "fire your graphic artists and replace them with botshit." They're pitching a world where the robots get to do all the creative stuff (badly) and humans have to work at robotic pace, with robotic vigilance, in order to catch the mistakes that the robots make at superhuman speed.

Reverse centaurism is brutal. That's not news: Charlie Chaplin documented the problems of reverse centaurs nearly 100 years ago:

https://en.wikipedia.org/wiki/Modern_Times_(film)

As ever, the problem with a gadget isn't what it does: it's who it does it for and who it does it to. There are plenty of benefits from being a centaur – lots of ways that automation can help workers. But the only path to AI profitability lies in reverse centaurs, automation that turns the human in the loop into the crumple-zone for a robot:

https://estsjournal.org/index.php/ests/article/view/260

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Jorge Royan (modified) https://commons.wikimedia.org/wiki/File:Munich_-_Two_boys_playing_in_a_park_-_7328.jpg

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Noah Wulf (modified) https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#ai#supervised ai#humans in the loop#coding assistance#ai art#fully automated luxury communism#labor

379 notes

·

View notes

Text

Ever since OpenAI released ChatGPT at the end of 2022, hackers and security researchers have tried to find holes in large language models (LLMs) to get around their guardrails and trick them into spewing out hate speech, bomb-making instructions, propaganda, and other harmful content. In response, OpenAI and other generative AI developers have refined their system defenses to make it more difficult to carry out these attacks. But as the Chinese AI platform DeepSeek rockets to prominence with its new, cheaper R1 reasoning model, its safety protections appear to be far behind those of its established competitors.

Today, security researchers from Cisco and the University of Pennsylvania are publishing findings showing that, when tested with 50 malicious prompts designed to elicit toxic content, DeepSeek’s model did not detect or block a single one. In other words, the researchers say they were shocked to achieve a “100 percent attack success rate.”

The findings are part of a growing body of evidence that DeepSeek’s safety and security measures may not match those of other tech companies developing LLMs. DeepSeek’s censorship of subjects deemed sensitive by China’s government has also been easily bypassed.

“A hundred percent of the attacks succeeded, which tells you that there’s a trade-off,” DJ Sampath, the VP of product, AI software and platform at Cisco, tells WIRED. “Yes, it might have been cheaper to build something here, but the investment has perhaps not gone into thinking through what types of safety and security things you need to put inside of the model.”

Other researchers have had similar findings. Separate analysis published today by the AI security company Adversa AI and shared with WIRED also suggests that DeepSeek is vulnerable to a wide range of jailbreaking tactics, from simple language tricks to complex AI-generated prompts.

DeepSeek, which has been dealing with an avalanche of attention this week and has not spoken publicly about a range of questions, did not respond to WIRED’s request for comment about its model’s safety setup.

Generative AI models, like any technological system, can contain a host of weaknesses or vulnerabilities that, if exploited or set up poorly, can allow malicious actors to conduct attacks against them. For the current wave of AI systems, indirect prompt injection attacks are considered one of the biggest security flaws. These attacks involve an AI system taking in data from an outside source—perhaps hidden instructions of a website the LLM summarizes—and taking actions based on the information.

Jailbreaks, which are one kind of prompt-injection attack, allow people to get around the safety systems put in place to restrict what an LLM can generate. Tech companies don’t want people creating guides to making explosives or using their AI to create reams of disinformation, for example.

Jailbreaks started out simple, with people essentially crafting clever sentences to tell an LLM to ignore content filters—the most popular of which was called “Do Anything Now” or DAN for short. However, as AI companies have put in place more robust protections, some jailbreaks have become more sophisticated, often being generated using AI or using special and obfuscated characters. While all LLMs are susceptible to jailbreaks, and much of the information could be found through simple online searches, chatbots can still be used maliciously.

“Jailbreaks persist simply because eliminating them entirely is nearly impossible—just like buffer overflow vulnerabilities in software (which have existed for over 40 years) or SQL injection flaws in web applications (which have plagued security teams for more than two decades),” Alex Polyakov, the CEO of security firm Adversa AI, told WIRED in an email.

Cisco’s Sampath argues that as companies use more types of AI in their applications, the risks are amplified. “It starts to become a big deal when you start putting these models into important complex systems and those jailbreaks suddenly result in downstream things that increases liability, increases business risk, increases all kinds of issues for enterprises,” Sampath says.

The Cisco researchers drew their 50 randomly selected prompts to test DeepSeek’s R1 from a well-known library of standardized evaluation prompts known as HarmBench. They tested prompts from six HarmBench categories, including general harm, cybercrime, misinformation, and illegal activities. They probed the model running locally on machines rather than through DeepSeek’s website or app, which send data to China.

Beyond this, the researchers say they have also seen some potentially concerning results from testing R1 with more involved, non-linguistic attacks using things like Cyrillic characters and tailored scripts to attempt to achieve code execution. But for their initial tests, Sampath says, his team wanted to focus on findings that stemmed from a generally recognized benchmark.

Cisco also included comparisons of R1’s performance against HarmBench prompts with the performance of other models. And some, like Meta’s Llama 3.1, faltered almost as severely as DeepSeek’s R1. But Sampath emphasizes that DeepSeek’s R1 is a specific reasoning model, which takes longer to generate answers but pulls upon more complex processes to try to produce better results. Therefore, Sampath argues, the best comparison is with OpenAI’s o1 reasoning model, which fared the best of all models tested. (Meta did not immediately respond to a request for comment).

Polyakov, from Adversa AI, explains that DeepSeek appears to detect and reject some well-known jailbreak attacks, saying that “it seems that these responses are often just copied from OpenAI’s dataset.” However, Polyakov says that in his company’s tests of four different types of jailbreaks—from linguistic ones to code-based tricks—DeepSeek’s restrictions could easily be bypassed.

“Every single method worked flawlessly,” Polyakov says. “What’s even more alarming is that these aren’t novel ‘zero-day’ jailbreaks—many have been publicly known for years,” he says, claiming he saw the model go into more depth with some instructions around psychedelics than he had seen any other model create.

“DeepSeek is just another example of how every model can be broken—it’s just a matter of how much effort you put in. Some attacks might get patched, but the attack surface is infinite,” Polyakov adds. “If you’re not continuously red-teaming your AI, you’re already compromised.”

57 notes

·

View notes

Note

Hey, I'm sure you get asked this all the time, but I've been writing my entire life, and while I've self-published some novels with moderate success, the content is not something I'm interested in sharing with future employers (unless it's Heart's Choice). I have a very stable day job in a different industry, but *really* want to break into professional writing, specifically interactive fiction, visual novels and TTRPGs. Could you recommend some good ways to start building pro experience?

Hello, thank you so much for your ask!

Sadly the field is in a very bad place at the moment, thanks to tech companies jumping on LLM trains, investor monopolies, and focusing on Number Go Up Forever rather than sustainability. There are very few junior roles around especially if writers don't already have professional experience in games, each one gets huge numbers of applications, and I constantly hear from friends who have applied to a position only for, partway through the process, it to be made redundant, the project to be cancelled, or even the studio to be closed. Things have changed a lot for the worse since I was first applying for things years years ago, and it's hard to see when and how it'll improve.

That said!

As you've self-published books before, you'll likely be at an advantage for writing interactive fiction has a lot of crossover with skills, especially with prose-type games like the ones published by Choice of Games/Hosted Games/Heart's Choice. If you're interested in getting into selling your interactive fiction, I'd recommend trying out tools like ChoiceScript and Twine and making some smaller pieces to get to grips with branching and variables.

Publishing with Choice of Games/Hosted Games/Heart's Choice is the most reliable way of making money from interactive fiction that I've seen; you can also self-publish entirely (using Twine, ink, or whatever other coding language) on itchio or Steam. Some people self-publish interactive fiction on Patreon which, for a small number of writers, can be very lucrative but you'd want to make sure to share some of your work publicly as well so that people can get excited about it. For better or worse, Patreon monetisation often focuses on things other than completing a project, such as short stories about characters or alternative versions of scenes; this might or might not be your thing. (The ChoiceScript licence also requires you to make ChoiceScript material after a month at most so do be aware of that.)

Every so often (less often these days due to aforementioned industry crisis) mobile companies are founded, offering platforms for monetisable IF/visual novel-ish mobile games; unfortunately they don't often stay around for long, and/or they often get bought out by LLM companies. One that's lasted longer than others is Dorian, but I've not worked with them so can't speak to how good they are to make games with; I've heard a few things both positive and negative so it's worth doing your homework and contacting people who've worked with them if you're interested.

For ChoiceScript, CoG and Heart's Choice offer an advance and editing, plus royalties, with a house style and (very flexible) deadlines; Hosted Games allows fuller freedom but there's no editing or advance. Lots of people get a lot out of both. As you've published your writing before, it's worth applying to CoG or HC if that's the direction you'd like to go in.

In general, joining game jams or comps is a good way of honing your skills. You could do this solo or join a group. Either way, it will develop your skills and give you something to put into a portfolio. There are various themed interactive fiction game jams on itch.io - I'd recommend following @/neointeractives and @/interact-if as they often share information when they come up.

I'm sorry that I can't give more concrete advice - at the moment it boils down to "make some interactive work that you're proud of", I think, and certainly keep the stable job going while you do it. It's a tough time all round, unfortunately.

If you or anyone reading is interested in applying to write for Choice of Games or Heart's Choice, is working on an outline for them, or is contracted with them, and has any questions or would like any info or advice about the process- feel free to drop me an ask, email, or message. I've been doing this for a long time and have gone through the pitch/outline process seven times now, so I know my way around it pretty well!

Best of luck with your interactive writing!

40 notes

·

View notes

Text

Explore cutting-edge Generative AI Application Development Services tailored to unleash innovation and creativity. Our expert team at Dzire Technologies specializes in crafting custom solutions, leveraging state-of-the-art technology to bring your unique ideas to life. Transform your business with intelligent, dynamic applications that push the boundaries of what's possible. Elevate user experiences and stay ahead in the rapidly evolving digital landscape with our advanced Generative AI development services.

0 notes

Text

Summer 2025 Game Development Student Internship Roundup, Part 2

Internship recruiting season has begun for some large game publishers and developers. This means that a number of internship opportunities for summer 2025 have been posted and will be collecting applicants. Internships are a great way to earn some experience in a professional environment and to get mentorship from those of us in the trenches. If you’re a student and you have an interest in game development as a career, you should absolutely look into these.

This is part 2 of this year's internship roundup. [Click here for part 1].

Associate Development Manager Co-op/Internship - Summer 2025 (Sports FC QV)

Game Product Manager Intern (Summer 2025)

Music Intern

EA Sports FC Franchise Activation Intern

Associate Character Artist Intern

Client Engineer Intern

Visual Effects Co-Op

Associate Environment Artist Co-Op (Summer 2025)

Game Design Intern (Summer 2025)

Game Design Co-Op (Summer 2025)

Concept Art Intern - Summer 2025

UI Artist Intern - Summer 2025 (Apex Legends)

Assistant Development Manager Intern

Global Audit Intern

Creator Partnerships Intern - Summer 2025

Technical Environment Art Intern - Summer 2025 (Apex Legends)

Intern, FC Franchise Activation, UKI

Tech Art Intern - Summer 2025 (Apex Legends)

Software Engineer Intern

UI Artist Intern

Game Designer Intern

FC Franchise Activation Intern

Software Engineer Intern

Product UX/UI Designer

Software Engineer Intern

Enterprise, Experiences FP&A Intern

Game Designer Intern

Software Engineer Intern

Development Manager Co-Op (Summer 2025)

Software Engineer Intern

PhD Software Engineer Intern

Character Artist Intern

2D Artist Intern - Summer 2025

Software Engineer Intern (UI)

Entertainment FP&A Intern

Game Design Co-Op (Summer 2025)

Data Science Intern

Production Manager Intern

Software Engineer Intern

Channel Delivery Intern

FC Pro League Operations Intern

World Artist Intern

Experience Design Co-Op

Media and Lifecycle Planning Intern

Software Engineer Intern - Summer 2025

Software Engineer Intern - Summer 2025

Intern, FC Franchise Activation, North America

Creative Copywriter Intern

Game Design Intern

Social Community Manager Co-Op

Business Intelligence Intern

Software Engineer Intern (F1)

Total Rewards Intern - MBA level

Intern - Office Administration

Digital Communication Assistant – Internship (6 months) february/march 2025 (W/M/NB)

International Events Assistant - Stage (6 mois) Janvier 2025 (H/F/NB)

Intern Cinematic Animator

Research Internship (F/M/NB) - Neural Textures for Complex Materials - La Forge

Research Internship (F/M/NB) - Efficient Neural Representation of Large-Scale Environments - La Forge

Research Internship (F/M/NB) – High-Dimensional Inputs for RL agents in Dynamic Video Games Environments - La Forge

Research Internship (F/M/NB) – Crafting NPCs & Bots behaviors with LLM/VLM - La Forge

3D Art Intern

Gameplay Programmer Intern

Intern Game Tester

Etudes Stratégiques Marketing – Stage (6 mois) Janvier 2025 (F/H/NB)

Localization Assistant– Stage (6 mois) Avril 2025 (F/H/NB)

Fraud & Analyst Assistant - Stage (6 mois) Janvier 2025 (F/H/NB)

Payment & Analyst Assistant - Stage (6 mois) Janvier 2025 (F/H/NB)

Media Assistant – Stage (6 mois) Janvier 2025 (F/H/NB)

IT Buyer Assistant - Alternance (12 mois) Mars 2025 (H/F/NB)

Event Coordinator Assistant - Stage (6 mois) Janvier 2025 (H/F/NB)

Communication & PR Assistant - Stage (6 mois) Janvier 2025 (F/H/NB)

Brand Manager Assistant - MARKETING DAY - Stage (6 mois) Janvier 2025 (F/N/NB)

Manufacturing Planning & Products Development Assistant - Stage (6 mois) Janvier 2025 (H/F/NB)

Retail Analyst & Sales Administration Assistant - Stage (6 mois) Janvier 2025 (H/F/NB)

UI Designer Assistant - Stage (6 mois) Janvier 2025 (F/M/NB)

Esports Communication Assistant

Machine Learning Engineer Assistant – Stage (6 mois) Janvier/Mars 2025 (F/H/NB)

Social Media Assistant – Stage (6 mois) Janvier 2025 (F/H/NB)

36 notes

·

View notes

Text

tl;dr: Recall keeps track of everything that appears on your computer screen (or even speech through the speakers), makes it searchable, and even keeps the surrounding context (so if you are trying to find something on application A but all you remember is that you were looking at B at the same time, it will do it. This happens to me constantly).

I have to admit, I've wanted this exact feature for a very long time. I think it was basically impossible to implement before LLMs got good, which makes it one of the first "oh shit I really need this" features which were flat-out impossible before the LLM revolution (for me). Six months or so there was a startup selling 3rd-party software to do it on Macs, but probably it's the kind of thing that's best implemented on the OS level.

They say the index is on-device and never leaves, so I guess that addresses the privacy implications? Also, Microsoft constantly ships new Windows 10 features and gets bored of them and kills them 6 months later, which could easily happen again here. But if it doesn't, this is kinda the first desktop OS development to actually excite me in a very long time.

60 notes

·

View notes

Text

There's this white washing of universities in the wake of popular LLM usage that I've been noticing, where people who would typically speak out against ableism and classism in university, or at the very least complain about horrible professors and unhelpful assignments, unfair course loads, etc, will retroactively change their position on these things in university as a way to justify their a priori belief (feeling) of superiority over those who have used AI models in any capacity. It's not some well thought out argument born from a consistent worldview, it isn't a reason for their beliefs, it's a justification for what they already hold to be true. They want to feel superior to other people but they can't just come out and say it, so they have to use a proxy, they have to say that these people are inferior because they're cheaters- but even if AI is allowed it's still bad, because they're engaging in the ontological sin of "laziness", of using a tool that is simultaneously deemed both too powerful and completely useless by its detractors.

In doing so these people reinforce the sanctity of bourgeois institutions, of educational authority, and of capitalist labor relations. Getting around doing work is no longer a way to get the money you need without working yourself to the bone. Passing classes with horrible professors or assignments is not born out of any structural incentives, people who aren't applying themselves are doing so purely because their lack of drive and work ethic, because they're bad people, these workarounds people use to survive are then equated with neglect. If you cheat in school, you must as a consequence, flaunt safety regulations in engineering, or not treat your patients properly in the medical field. No mind is paid to the massive difference in environments, consequences, or the presence of absence of a real victim, this massive leap in logic is completely swept under the rug in an attempt to maintain a sense of superiority over others for their use of the evil and tainted technology.

There is of course, something to be said for putting in the work to be able to perform your job, especially one with social consequences, competently, but doing so is rarely so closely tied to performance in university. Far more of this work is done, in my experience, independently and at the behest of the individual, utilizing resources from their communities and/or the Internet. There's this kind of rhetorical switch happening where people pretend they're speaking against anti-intellectualism, but really they're speaking against organic intellectualism and performing a rehabilitation of an ableist and classist institutions designed for the purpose of enforcing class distinctions.

Here, let's look at an example.

They go on after this to say that there are no good uses of AI, and any utilization lines the pockets of the corporate overlords. this is wrong on multiple fronts (plenty of amazing and useful applications, local/open source models exist, deepseek also exists, etc) but let's go over the argument in the picture itself

See how they're against utilizing it as a tool even when it's for things that aren't impactful for you? See how they go on to say doing so proves the "bizzaro kings" right? and what do we do to resist these bizzaro kings? We follow the rules they established of course. Why did they establish these rules? Are they there to gatekeep class positions? To extort money out of young adults through predatory loans? No, those rules are there to make you learn! To develop you as a person ✨🌟✨ They're so sacred in fact, that even when the rules aren't there, you must self-enforce them and stop yourself from learning how to utilize and interact appropriately with a growing modern technology.

This isn't pro intellectualism, this is anti-intellectualism. This is against critical use and understanding of modern technology, and it's in favor of enforcing class barriers.

All so they can hold on to feelings of superiority over their peers.

14 notes

·

View notes

Link

with manual approaches used over several decades. “The goal would be to one day speak Dolphin,” says Dr. Denise Herzing. Her research organization, The Wild Dolphin Project (WDP), exclusively studies a specific pod of free-ranging Atlantic spotted dolphins who reside off the coast of the Bahamas. She's been collecting and organizing dolphin sounds for the last 40 years, and has been working with Dr. Thad Starner, a research scientist from Google DeepMind, an AI subsidiary of the tech giant. With their powers combined, they've trained an AI model on a vast library of dolphin sounds; it can also expand to accommodate more data, and be fine tuned to more accurately representing what those sounds might mean. "... feeding dolphin sounds into an AI model like dolphin Gemma will give us a really good look at if there are patterns subtleties that humans can't pick out," Herzing noted.

7 notes

·

View notes

Text

The ongoing harms of AI

In the early days of the chatbot hype, OpenAI CEO Sam Altman was making a lot of promises about what large language models (LLMs) would mean for the future of human society. In Altman’s vision, our doctors and teachers would become chatbots and eventually everyone would have their own tailored AI assistant to help with whatever they needed. It wasn’t hard to see what that could mean for people’s jobs, if his predictions were true. The problem for Altman is that those claims were pure fantasy.

Over the 20 months that have passed since, it’s become undeniably clear that LLMs have limitations many companies do not want to acknowledge, as that might torpedo the hype keeping their executives relevant and their corporate valuations sky high. The problem of false information, often deceptively termed “hallucinations,” cannot be effectively tackled and the notion that the technologies will continue getting infinitely better with more and more data has been called into question by the minimal improvements new AI models have been able to deliver.

However, once the AI bubble bursts, that doesn’t mean chatbots and image generators will be relegated to the trash bin of history. Rather, there will be a reassessment of where it makes sense to implement them, and if attention moves on too fast, they may be able to do that with minimal pushback. The challenge visual artists and video game workers are already finding with employers making use of generative AI to worsen the labor conditions in their industries may become entrenched, especially if artists fail in their lawsuits against AI companies for training on their work without permission. But it could be far worse than that.

Microsoft is already partnering with Palantir to feed generative AI into militaries and intelligence agencies, while governments around the world are looking at how they can implement generative AI to reduce the cost of service delivery, often without effective consideration of the potential harms that can come of relying on tools that are well known to output false information. This is a problem Resisting AI author Dan McQuillan has pointed to as a key reason why we must push back against these technologies. There are already countless examples of algorithmic systems have been used to harm welfare recipients, childcare benefit applicants, immigrants, and other vulnerable groups. We risk a repetition, if not an intensification, of those harmful outcomes.

When the AI bubble bursts, investors will lose money, companies will close, and workers will lose jobs. Those developments will be splashed across the front pages of major media organizations and will receive countless hours of public discussion. But it’s those lasting harms that will be harder to immediately recognize, and that could fade as the focus moves on to whatever Silicon Valley places starts pushing as the foundation of its next investment cycle.

All the benefits Altman and his fellow AI boosters promised will fade, just as did the promises of the gig economy, the metaverse, the crypto industry, and countless others. But the harmful uses of the technology will stick around, unless concerted action is taken to stop those use cases from lingering long after the bubble bursts.

36 notes

·

View notes

Text

LLM Application Development Services | Visa Services App Development

Elevate your legal practice with our specialized LLM application development services. Tailored solutions for law firms and professionals, enhancing efficiency, organization, and client interactions.

Read More

0 notes

Note

Did you hear that Chanel is giving grant money to CalArts to fund some kind of LLM/AI art initiative.

I had not until just now. I thought they were smart, how did they spell LLAMA wrong like that is the big question.

Let's go with the CalArts story on their gift.

[April 24, 2025 – Valencia, Calif.] California Institute of the Arts (CalArts) and the CHANEL Culture Fund together announce the CHANEL Center for Artists and Technology at CalArts, a visionary initiative that positions artists at the forefront of shaping the evolving technologies that define our world. The Center will provide students, faculty, and visiting fellows across the creative disciplines access to leading-edge equipment and software, allowing artists to explore and use new technologies as tools for their work. Creating opportunities for collaboration and driving innovation across disciplines, the initiative creates the conditions for artists to play an active role in developing the use and application of these emergent technologies.

The Center builds on CalArts’ legacy as a cross-disciplinary school of the arts, where experimentation in visual arts, music, film, performing arts, and dance has been nurtured since the institution’s founding. In this unprecedented initiative, artists will be empowered to use technology to shape creativity across disciplines—and, ultimately, to envision a better world.

Funded by a five-year, transformative gift from the CHANEL Culture Fund, the CHANEL Center for Artists and Technology establishes CalArts as the hub of a new ecosystem of arts and technology. The CHANEL Center will foster research, experimentation, mentorship, and the creation of new knowledge by connecting students, faculty, artists, and technologists—the thinkers and creators whose expertise and vision will define the future—with new technology and its applications. It will also activate a network of institutions throughout Southern California and beyond, linking museums, universities, and technology companies to share resources and knowledge.

The CHANEL Center at CalArts will also serve as a hub for the exchange of knowledge among artists and experts from CHANEL Culture Fund’s signature programs—including more than 50 initiatives and partnerships established since 2020 that support cultural innovators in advancing new ideas. Visiting fellows and artists will be drawn both from CalArts’ sphere and from the agile network of visionary creators, thinkers, and multidisciplinary artists whom CHANEL has supported over the past five years—a network that includes such luminaries as Cao Fei, Arthur Jafa, William Kentridge, and Jacolby Satterwhite. The CHANEL Center will also host an annual forum addressing artists’ engagement with emerging technologies, ensuring that knowledge gained is knowledge shared.

The Center’s funding provides foundational resources for equipment; visiting experts, artists, and technologists-in-residence; graduate fellowships; and faculty and staff with specific expertise in future-focused research and creation. With the foundation of the CHANEL Center, CalArts empowers its students, faculty, and visiting artists to shape the future through transformative technology and new modes of thinking.

The first initiative of its kind at an independent arts school, the CHANEL Center consists of two areas of focus: one concentrating on Artificial Intelligence (AI) and Machine Learning, and the other on Digital Imaging. The project cultivates a multidisciplinary ecosystem—encompassing visual art, music, performance, and still, moving, projected, and immersive imagery—connecting CalArts and a global network of artists and technologists, other colleges and universities, arts institutions, and industry partners from technology, the arts, and beyond. ____________________________________-

I wish they'd write this kind of stuff in English.

Legendary art school California Institute of the Arts (CalArts) will soon be home to a major high-tech initiative funded by luxury brand Chanel’s Culture Fund. Billed as the first initiative of its kind at an independent art school, the Chanel Center for Artists and Technology will focus on artificial intelligence and machine learning as well as digital imaging. While they aren’t disclosing the dollar amount of the grant, the project will fund dozens of new roles as well as fellowships for artists and technologists-in-residence and graduate students along with cutting-edge equipment and software.

That's easier to understand I think.

Interesting.

4 notes

·

View notes