#multimodal-queries

#ai#google-gemini#google#intent-analysis#multimodal-queries#natural-language-processing#nlp#response-generation#search#technology

🚀 Exciting news! Google has launched Gemini 2.0 and AI Mode, transforming how we search. Get ready for faster, smarter responses to complex queries! Explore the future of AI in search today! #GoogleAI #Gemini2 #AIMode #SearchInnovation

#accessibility features#advanced mathematics#advanced reasoning#AI Mode#AI Overviews#AI Premium#AI Technology#AI-driven responses#coding assistance#data sources#digital marketing#fact-checking#Gemini 2.0#Google AI#Google One#image input#information synthesis#Knowledge Graph#multimodal search#Query Fan-Out#response accuracy#search algorithms#search enhancement#search innovation#text interaction#User Engagement#voice interaction

Pegasus 1.2: High-Performance Video Language Model

Pegasus 1.2 brings high accuracy and low latency to long-form video AI, and this commercial model supports scalable video querying.

TwelveLabs and Amazon Web Services (AWS) announced that Amazon Bedrock will soon offer Marengo and Pegasus, TwelveLabs' cutting-edge multimodal foundation models. Amazon Bedrock is a managed service that gives developers access to top AI models from leading organisations through a single API. With seamless access to TwelveLabs' video understanding capabilities, developers and companies can change how they search, analyse, and derive insights from video content while relying on AWS's security, privacy, and performance. AWS is the first cloud provider to offer TwelveLabs models.

Introducing Pegasus 1.2

Unlike many academic settings, real-world video applications face two challenges:

Real-world videos can range from a few seconds to several hours in length.

They require accurate temporal understanding.

To meet commercial demands, TwelveLabs is announcing Pegasus 1.2, a substantial, industry-grade upgrade to its video language model. Pegasus 1.2 delivers state-of-the-art understanding of long videos and can handle hour-long videos with low latency, low cost, and best-in-class accuracy. Its embedded storage caches indexed videos, so repeated queries against the same video are faster and cheaper.

Pegasus 1.2 is a cutting-edge system that delivers business value through its intelligent, focused architecture, and it excels in production-grade video processing pipelines.

Superior video language model for extended videos

Businesses need to handle long videos, but processing time and time-to-value are major concerns. As input videos get longer, a standard video processing and inference system must handle orders of magnitude more frames, which makes it impractical for broad adoption and commercial use. A commercial system must also answer prompts and queries accurately across much longer time spans.

Latency

To evaluate Pegasus 1.2's speed, TwelveLabs compares its time-to-first-token (TTFT) on 3–60-minute videos against the frontier model APIs GPT-4o and Gemini 1.5 Pro. Pegasus 1.2 shows consistent TTFT latency for videos up to 15 minutes and responds faster on longer content, thanks to its video-focused model design and optimised inference engine.
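Time-to-first-token is the delay between sending a request and receiving the first streamed token of the answer. The sketch below shows one rough way to take such a measurement by timing any token iterator. `fake_stream` is a hypothetical stand-in for a streaming model API, not a TwelveLabs, OpenAI, or Google client.

```python
import time
from typing import Iterable, Iterator, List, Optional, Tuple


def measure_ttft(stream: Iterable[str]) -> Tuple[Optional[float], Optional[str]]:
    """Return (seconds until the first token, first token) for a token stream.

    The clock starts just before the first token is requested, so any work a
    lazy stream does up front (such as sending the request) is included.
    Returns (None, None) if the stream yields nothing.
    """
    iterator: Iterator[str] = iter(stream)
    start = time.perf_counter()
    for token in iterator:
        return time.perf_counter() - start, token
    return None, None


def fake_stream(delay_s: float, tokens: List[str]) -> Iterator[str]:
    """Hypothetical streaming response: waits `delay_s`, then yields tokens."""
    time.sleep(delay_s)
    yield from tokens


if __name__ == "__main__":
    ttft, first = measure_ttft(fake_stream(0.05, ["The", " video", " shows"]))
    print(f"first token {first!r} after {ttft:.3f} s")
```

A fair comparison across models would repeat this many times per video length and average the results, because network jitter can dominate any single run.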

Performance

Pegasus 1.2 is compared with frontier model APIs on VideoMME-Long, a subset of Video-MME containing videos longer than 30 minutes. On this benchmark, Pegasus 1.2 outperforms all of the flagship APIs tested, demonstrating state-of-the-art performance.

Pricing

Pegasus 1.2 provides best-in-class commercial video processing at low cost. Rather than trying to do everything, TwelveLabs focuses on long videos and accurate temporal information, and this focused approach lets its highly optimised system perform well at a competitive price.

Better still, the system can generate many video-to-text outputs at little additional cost. Pegasus 1.2 produces rich video embeddings from indexed videos and stores them in a database for future API queries, so clients can keep building on them cheaply. Google Gemini 1.5 Pro's cache costs $4.50 per hour of storage for 1 million tokens, which is roughly the token count of an hour of video. Pegasus 1.2's integrated storage, by contrast, costs $0.09 per video hour per month, about 36,000 times less. This especially benefits customers with large video archives who need to understand all of that content cheaply.
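You can reproduce the roughly 36,000× figure by converting the hourly cache price into a monthly one: $4.50 × 24 × 30 = $3,240 per month, and $3,240 / $0.09 = 36,000. The 30-day month is our assumption, since the announcement does not state one. With that assumption the ratio comes out at exactly 36,000.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month


def monthly_cache_cost(usd_per_hour: float) -> float:
    """Cost of keeping one video hour (~1M tokens) cached for a whole month."""
    return usd_per_hour * HOURS_PER_MONTH


def cost_ratio(cache_usd_per_hour: float, storage_usd_per_month: float) -> float:
    """How many times more expensive hourly caching is than monthly storage."""
    return monthly_cache_cost(cache_usd_per_hour) / storage_usd_per_month


gemini_cache = 4.50     # USD per hour of storage for 1M tokens (figure quoted above)
pegasus_storage = 0.09  # USD per video hour per month (figure quoted above)

print(monthly_cache_cost(gemini_cache))                  # 3240.0
print(round(cost_ratio(gemini_cache, pegasus_storage)))  # 36000
```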

Model Overview & Limitations

Architecture

Pegasus 1.2 uses an encoder-decoder architecture for video understanding, made up of a video encoder, a video tokeniser, and a large language model. Although the design is efficient, it still supports full analysis of both textual and visual data.

Together, these components form a cohesive system that can capture both long-range context and fine-grained detail. The architecture shows that compact models can interpret video effectively through careful design decisions and creative solutions to fundamental multimodal processing challenges.
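To see why a video tokeniser matters for hour-long inputs, here is a toy sketch of the general encoder → tokeniser → language-model data flow. The normalising "encoder", the mean-pooling "tokeniser", the `window` size, and the one-frame-per-second sampling are all illustrative assumptions, not details of Pegasus 1.2. The only point is that compressing per-frame embeddings shrinks the sequence the language model has to read.

```python
import math
from typing import List

Vector = List[float]


def encode_frames(frames: List[Vector]) -> List[Vector]:
    """Hypothetical video encoder: frames are already feature vectors here,
    so we just L2-normalise each one to stand in for a learned embedding."""
    encoded = []
    for frame in frames:
        norm = math.sqrt(sum(x * x for x in frame)) or 1.0
        encoded.append([x / norm for x in frame])
    return encoded


def tokenise(embeddings: List[Vector], window: int) -> List[Vector]:
    """Hypothetical video tokeniser: mean-pool each run of `window` consecutive
    frame embeddings into one video token (the last group may be shorter)."""
    tokens = []
    for start in range(0, len(embeddings), window):
        group = embeddings[start:start + window]
        dim = len(group[0])
        tokens.append([sum(v[i] for v in group) / len(group) for i in range(dim)])
    return tokens


def build_llm_input(video_tokens: List[Vector], prompt_tokens: List[str]) -> int:
    """Stand-in for the language model: return the combined sequence length
    it would attend over (video tokens followed by the text prompt)."""
    return len(video_tokens) + len(prompt_tokens)


if __name__ == "__main__":
    # One hour sampled at 1 frame per second, with 4-dimensional toy features.
    frames = [[float(t % 7), 1.0, 0.5, float(t % 3)] for t in range(3600)]
    video_tokens = tokenise(encode_frames(frames), window=16)
    prompt = "What happens in the final scene ?".split()
    print(len(frames), "frames ->", len(video_tokens), "video tokens")
    print("LLM sequence length:", build_llm_input(video_tokens, prompt))
```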

Limitations

Safety and bias

Pegasus 1.2 includes safety protections, but like any AI model, it may produce objectionable or harmful content without adequate oversight and control. Safety and ethics for video foundation models are still being studied, and TwelveLabs plans to publish a full assessment and ethics report after further testing and feedback.

Hallucinations

Pegasus 1.2 may occasionally produce incorrect outputs. Although hallucinations have been reduced since Pegasus 1.1, users should keep this limitation in mind, especially for tasks that demand precision and factual accuracy.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#Pegasus 1.2#TwelveLabs#Amazon Bedrock#Gemini 1.5 Pro#multimodal#API

ChatGPT and Google Gemini are both advanced AI language models designed for different types of conversational tasks, each with unique strengths. ChatGPT, developed by OpenAI, is primarily focused on text-based interactions. It excels in generating structured responses for writing, coding support, and research assistance. ChatGPT’s paid versions unlock additional features like image generation with DALL-E and web browsing for more current information, which makes it ideal for in-depth text-focused tasks.

In contrast, Google Gemini is a multimodal AI, meaning it handles both text and images and can retrieve real-time information from the web. This gives Gemini a distinct advantage for tasks requiring up-to-date data or visual content, like image-based queries or projects involving creative visuals. It integrates well with Google's ecosystem, making it highly versatile for users who need both text and visual support in their interactions. While ChatGPT is preferred for text depth and clarity, Gemini’s multimodal and real-time capabilities make it a more flexible choice for creative and data-current tasks.

What's Happening to SEO? 8 SEO Trends for 2025

Let’s call it what it is: SEO isn’t some clever marketing hack anymore; it’s now a battlefield where the rules change faster than your morning coffee order. And if you’ve been patting yourself on the back for nailing your SEO strategy, look, those same strategies might already be obsolete. Yeah, that’s how fast the game is flipping.

For years, we’ve been told that backlinks and keywords were the golden tickets. And now?

Gen Z is asking TikTok instead of Google, search engines are reading context like a nosy detective, and over half of all searches don’t even bother clicking on anything.

Welcome to SEO trends for 2025—a world where your next competitor might be an AI tool, a 3-second video, or even Google itself deciding to hoard its users.

1. Optimize for E-E-A-T Signals

There’s no nice way to say this: if your content isn’t radiating credibility, Google probably isn’t interested.

Enter E-E-A-T: Experience, Expertise, Authoritativeness, and Trustworthiness. It sounds like a mouthful, but this framework from Google's Search Quality Rater Guidelines is the compass for how Google judges content quality in 2025. If your content strategy ignores these signals, you're handing your traffic to someone else, no questions asked.

How to Nail E-E-A-T (and Stay Ahead of the Latest SEO Trends)

1. Experience

Share specific, actionable knowledge. Generic advice doesn’t cut it anymore.

Example: A blog about SEO trends shouldn’t vaguely define "SEO"—it should delve into zero-click searches or multimodal search backed by real-world data.

2. Expertise

Feature qualified authors or contributors. Link their credentials to their content. Google actually checks authorship, so anonymous content only screams "spam."

3. Authoritativeness

Earn backlinks from reputable sites. Don’t fake authority—Google sees through it.

4. Trustworthiness

Secure your site (HTTPS), include proper sourcing, and avoid clickbait titles that don’t deliver.

The Hard Truth about E-E-A-T

E-E-A-T is the foundation for content optimization in a post-2024 world. The latest SEO trends show Google’s focus isn’t just on keywords but on the credibility of your entire digital presence.

It’s no longer enough to rank; you need to deserve to rank.

2. AI Overview and SEO

Artificial Intelligence is practically running the show. In 2025, AI isn’t a gimmick; it’s the brains behind search engines, content creation, and the unspoken secrets of what ranks. If you’re still crafting strategies without factoring in AI, here’s the harsh truth: you’re optimizing for a version of the internet that’s already irrelevant.

How AI Is Reshaping SEO

AI has transcended its “future of marketing” tagline. Today, it’s the present, and every search marketer worth their salt knows it.

Let’s break it down:

AI-Driven Search Engines

Google’s RankBrain and Multitask Unified Model (MUM) are redefining how search intent optimization works. They analyze context, intent, and semantics better than ever. Gone are the days when sprinkling keywords like fairy dust could boost rankings. AI demands relevance, intent, and, let’s be honest, better content.

Automated Content Creation

Tools like ChatGPT and Jasper are churning out content faster than most humans can proofread. The catch is, Google’s Helpful Content Update is watching—and penalizing—low-quality AI spam. Automated content might save time, but without a human layer of expertise, it’s a one-way ticket to obscurity.

Smart Search Predictions

AI isn’t just predicting what users type—it’s analyzing how they think. From location-based recommendations to real-time search trends, AI is shaping results before users finish typing their queries. This makes AI SEO tools like Clearscope and Surfer SEO essential for staying competitive.

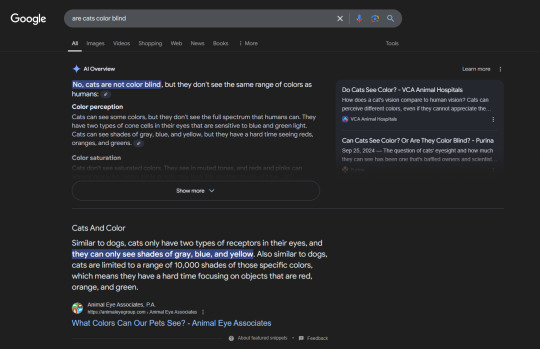

Google AI Overview SERP: The New Front Door of Search

Welcome to the AI-driven age of Google Search. The Search Engine Results Page (SERP) is no longer just a list of links; it’s a dynamic experience powered by Google’s ever-evolving AI. Features like AI-generated summaries, featured snippets, and People Also Ask (PAA) boxes are transforming how users interact with search. If you're not optimizing for these elements, you're missing out on massive traffic opportunities.

What Makes Google AI Overview SERPs Stand Out?

Generative AI Summaries

In 2023, Google began testing generative AI summaries at the top of certain searches, and in 2024 it rolled them out more widely as AI Overviews. These provide quick, digestible answers pulled from the web, cutting through the noise of lengthy pages. They're fast, convenient, and often the first (and only) thing users see.

Pro Tip: Structure your content to answer questions directly and concisely while retaining depth. Think FAQ sections, bullet points, and clear headers.

Visual Enhancements

Google AI Overview SERPs now integrate rich visuals, including images, charts, and interactive elements powered by AI. These upgrades aren’t just eye-catching; they drive engagement.

Pro Tip: Optimize images with alt text, compress them for speed, and ensure visual assets are relevant and high-quality.
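The alt-text advice is easy to audit automatically. This sketch uses Python's standard `html.parser` to list `<img>` tags whose `alt` attribute is missing or blank. The sample markup is hypothetical. An intentionally empty `alt=""` is correct for purely decorative images, so treat the output as a review list rather than a list of errors.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class AltTextAuditor(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[Optional[str]] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src"))


def images_missing_alt(html: str) -> List[Optional[str]]:
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing


if __name__ == "__main__":
    page = """
    <p>Quarterly traffic report</p>
    <img src="chart.webp" alt="Organic clicks by month, 2024">
    <img src="hero.jpg">
    <img src="team.png" alt="  ">
    """
    print(images_missing_alt(page))  # ['hero.jpg', 'team.png']
```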

Personalization on Steroids

Google’s AI doesn’t just know what users want; it predicts it. From personalized recommendations to local search enhancements, SERPs are more targeted than ever.

Pro Tip: Leverage local SEO strategies and schema markup to cater to these hyper-personalized results.
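Schema markup for a local business is usually embedded in the page as JSON-LD. Here is a minimal sketch that builds a schema.org `LocalBusiness` object carrying the name, address, and phone fields. The business details are invented placeholders. Check Google's structured-data documentation for the properties it currently reads before relying on any of them.

```python
import json
from typing import Dict, List


def local_business_jsonld(name: str, street: str, city: str, region: str,
                          postal_code: str, country: str, phone: str,
                          url: str, opening_hours: List[str]) -> str:
    """Return a <script> tag containing schema.org LocalBusiness JSON-LD."""
    data: Dict[str, object] = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        "telephone": phone,
        "url": url,
        # schema.org openingHours text format, e.g. "Mo-Fr 08:00-18:00"
        "openingHours": opening_hours,
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(local_business_jsonld(
        name="Example Coffee Co.",  # hypothetical business
        street="123 Main St", city="Springfield", region="IL",
        postal_code="62701", country="US", phone="+1-217-555-0100",
        url="https://www.example.com",
        opening_hours=["Mo-Fr 07:00-18:00", "Sa 08:00-14:00"],
    ))
```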

Adapting to Google AI SERPs

Aim for Snippet Domination: Featured snippets are now more important than ever, with AI summaries pulling directly from them. Answer questions directly and succinctly in your content.

Invest in Topic Clusters: AI thrives on context. Interlinking detailed, related content helps your site signal authority and relevance.

Optimize for Real Intent: With AI interpreting user queries more deeply, addressing surface-level keywords won’t cut it. Focus on intent-driven long-tail keywords and nuanced subtopics.
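One way to sanity-check a topic cluster is to model your pages as a link graph. You then confirm that the pillar page links to every cluster page and that each cluster page links back. The sketch below does this with plain dictionaries. The URLs and the "pillar links out, spokes link back" rule are illustrative assumptions, not a Google requirement.

```python
from typing import Dict, List, Set


def missing_cluster_links(pillar: str, cluster: List[str],
                          links: Dict[str, Set[str]]) -> List[str]:
    """Describe the internal links a topic cluster is missing.

    `links` maps each page URL to the set of URLs that page links to.
    """
    problems = []
    pillar_out = links.get(pillar, set())
    for page in cluster:
        if page not in pillar_out:
            problems.append(f"pillar {pillar} does not link to {page}")
        if pillar not in links.get(page, set()):
            problems.append(f"{page} does not link back to pillar {pillar}")
    return problems


if __name__ == "__main__":
    site = {
        "/seo-trends": {"/zero-click", "/local-seo"},
        "/zero-click": {"/seo-trends"},
        "/local-seo": set(),
        "/voice-search": {"/seo-trends"},
    }
    for issue in missing_cluster_links(
            "/seo-trends", ["/zero-click", "/local-seo", "/voice-search"], site):
        print(issue)
```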

The Bottom Line

Google’s AI Overview SERP is the digital gateway to visibility in 2025. If your strategy isn’t aligned with these changes, you risk becoming invisible. Adapt your content to meet the demands of AI-driven features, and you’ll not just survive—you’ll thrive in this new SEO frontier.

What This Means for Your Strategy

AI-Assisted Content: Use AI for efficiency, but let humans handle creativity and trust-building.

Search Intent Optimization: Focus on answering deeper, adjacent questions. AI rewards nuanced, contextual relevance.

Invest in Tools: Tools like SEMrush and Ahrefs now integrate AI-powered insights, helping you stay ahead.

Look, artificial intelligence in SEO isn’t an edge; it’s the standard. By 2025, marketers who don’t adapt will find their strategies in a digital graveyard. AI doesn’t replace your expertise; it amplifies it. Use it wisely, or get left behind.

3. Forum Marketing and SERP Updates

Platforms like Reddit, Quora, and niche communities are silently reshaping SEO and slipping into prime real estate on search engine results pages (SERPs). For marketers obsessed with the usual Google ranking factors, ignoring community-driven content could be the blind spot that costs you big.

The Role of Forums in SEO

Let’s break this down: search engines have realized something marketers often overlook—people trust people. Threads packed with first-hand experiences, debates, and candid opinions are becoming authoritative sources in their own right. When Google features forum content as a rich snippet or directs users to a Quora answer, it’s validating what audiences already know: real conversations drive engagement better than polished sales pitches.

Why Forums Are Influencing SERPs

1. Content Depth

Community-driven content is often nuanced, answering long-tail questions that traditional blogs barely skim. For instance, a Quora thread titled “Best local SEO strategies for small businesses in 2025” isn’t just generic advice—it’s specific, diverse, and sometimes brutally honest.

2. Searcher Intent Alignment

Forums directly address search intent optimization by catering to niche queries. Whether it’s “How to rank for hyper-local searches” or “Why my Google Business profile isn’t showing up,” forums deliver precise, user-generated insights.

3. Fresh Perspectives

Unlike stale, regurgitated SEO articles, forums thrive on updated discussions. A Reddit thread on “latest SEO trends” could become the top result simply because it offers real-time relevance.

What Marketers Need to Do

1. Engage, Don’t Spam

Build credibility by genuinely contributing to forums. Overly promotional comments are a fast track to being ignored—or worse, banned.

2. Monitor Trends

Tools like AnswerThePublic and BuzzSumo can identify trending community topics. Use these to create content that aligns with user discussions.

3. Optimize for SERP Features

Structure blog content to mimic forum-style Q&As. Google loves direct, conversational formats.

Ignoring the surge of forum content is no longer an option. So, don’t get left behind watching Quora outrank your site—adapt now.

4. Is Traditional SEO Still Relevant?

The debate is as old as Google itself: does traditional SEO still matter in a world where AI is taking over and search engines are rewriting the rules of engagement?

Here’s the answer marketers need to hear (but probably won’t love): yes—but not in the way you’re doing it.

Traditional SEO isn’t obsolete; it’s just overdue for an upgrade. Age-old techniques like link building, on-page optimization, and keyword targeting are still around, but their relevance now hinges on how well you adapt them to 2025’s priorities.

Traditional SEO Techniques That Still Work

1. Link Building (Reimagined)

Backlinks still matter, but Google has become savvier about quality over quantity. A link from an authoritative site in your niche outweighs ten random backlinks from irrelevant sources. Focus on building relationships with industry leaders, writing guest blogs, or getting cited in high-quality articles.

2. On-Page Optimization (Evolved)

Forget sprinkling keywords mindlessly. Google now prioritizes user experience SEO, meaning your headings, meta descriptions, and URLs need to align with search intent.

Want to rank?

Structure content logically, use descriptive titles, and, for goodness’ sake, stop overloading every tag with keywords.
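A rough way to catch this kind of over-optimization is to count how often a target phrase appears in each on-page element. The limit of one mention per element used below is an assumption for illustration. Google publishes no such threshold.

```python
import re
from typing import Dict, List


def keyword_counts(elements: Dict[str, str], keyword: str) -> Dict[str, int]:
    """Count case-insensitive, whole-phrase occurrences of `keyword` per element."""
    pattern = re.compile(r"\b" + re.escape(keyword.lower()) + r"\b")
    return {name: len(pattern.findall(text.lower())) for name, text in elements.items()}


def stuffed_elements(elements: Dict[str, str], keyword: str,
                     max_per_element: int = 1) -> List[str]:
    """Names of elements that mention the keyword more than `max_per_element` times."""
    counts = keyword_counts(elements, keyword)
    return [name for name, count in counts.items() if count > max_per_element]


if __name__ == "__main__":
    page = {
        "title": "Local SEO Guide: Local SEO Tips for Local SEO Success",
        "meta_description": "A practical local SEO checklist for small businesses.",
        "h1": "How to Improve Local Search Visibility",
    }
    print(keyword_counts(page, "local seo"))
    print(stuffed_elements(page, "local seo"))
```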

3. Local SEO Strategies

Hyper-local searches like "coffee shops near me" are driving significant traffic. Traditional techniques like Google Business Profile optimization and consistent NAP (Name, Address, Phone) info still dominate here.

What’s changed?

You need to engage actively with reviews and ensure your profile reflects real-time updates.

5. Zero-Click Searches

Now, let’s address the elephant on the search results page: zero-click searches. They’re not a trend anymore—they’re the new standard. With over 65% of Google searches ending without a click, it’s clear search engines are keeping users on their turf. They’re not just gatekeepers of information; they’re now the landlords, decorators, and sometimes the dinner hosts, offering all the answers up front. And for businesses, this means rethinking how success in SEO is measured.

What Are Zero-Click Searches?

Zero-click searches occur when users get their answers directly on the search results page (SERP) without clicking through to any website. Think of featured snippets, Knowledge Panels, and People Also Ask boxes. Search engines use these to satisfy user queries immediately—great for users, but not so much for traffic-hungry websites.

The Impact on SEO

1. Shift in Metrics

Forget obsessing over click-through rates. The latest SEO trends demand focusing on visibility within the SERP itself. If your business isn’t occupying rich result spaces, you’re effectively invisible.

2. Search Intent Optimization

Google isn’t just guessing user intent anymore—it’s anticipating it with precision. To stay relevant, businesses need to answer why users are searching, not just what they’re searching for.

3. Authority Consolidation

Zero-click features favor high-authority domains. If your brand isn’t seen as a credible source, you’re not making it into that snippet box.

How to Optimize for Zero-Click Searches

1. Target Featured Snippets

Structure your content with clear, concise answers at the top of your pages. Use lists, tables, and bullet points to cater to snippet formats.

2. Utilize Schema Markup

Help search engines understand your content by adding structured data. This boosts your chances of landing in rich results.
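For Q&A-style content, the schema.org `FAQPage` type is one common form of structured data. Here is a minimal sketch, assuming you already have question and answer pairs; the sample question is a placeholder. Google has narrowed when it displays FAQ rich results. Treat the markup as a way to help search engines understand the page, not as a guaranteed SERP feature.

```python
import json
from typing import List, Tuple


def faq_jsonld(pairs: List[Tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    print(faq_jsonld([
        ("What is a zero-click search?",
         "A search where the user gets an answer on the results page "
         "without clicking through to any website."),
    ]))
```

The resulting JSON goes inside a `<script type="application/ld+json">` tag on the page whose visible content contains the same questions and answers.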

3. Focus on Hyper-Specific Queries

Zero-click searches thrive on niche, long-tail questions. Create content that directly addresses these to increase visibility.

What It Means for Businesses

In the world of zero-click searches, SEO success is about dominating the SERP real estate. Businesses that fail to adapt will find themselves in a no-click graveyard, while those who master rich results will cement their place as authority figures. Either way, the clicks aren’t coming back.

So, are you ready to play Google’s game—or be played?

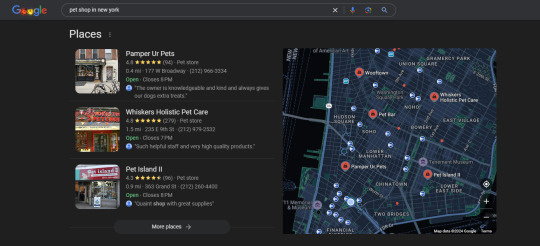

6. Map Pack and Local Heat Maps

The truth is, if your business isn’t showing up in Google’s Map Pack, you might as well not exist for local customers. The Map Pack is literally the throne room of local SEO, and in 2025, it’s more competitive than ever. Pair that with Local Heat Maps—Google’s not-so-subtle way of telling businesses where they rank spatially—and you’ve got the ultimate battleground for visibility.

What Are the Map Pack and Local Heat Maps?

The Map Pack is that prime real estate at the top of local search results showing the top three businesses near a user. It’s concise, visual, and, let’s be honest, the first (and often only) thing users check. Local Heat Maps complement this by analyzing searcher behavior within a geographic radius, showing which businesses dominate specific zones.
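Grid-based local rank trackers typically check your rank for one keyword from a grid of points around your location, and that grid is what a heat map visualizes. The ranks below are invented sample data, with `None` meaning "not found". Given such a grid, this minimal sketch computes two summary numbers: the share of grid points where you appear in the top-three Map Pack, and your average rank wherever you appear at all.

```python
from typing import List, Optional

Grid = List[List[Optional[int]]]  # rank at each grid point; None = not ranked


def map_pack_share(grid: Grid, pack_size: int = 3) -> float:
    """Fraction of grid points where the business ranks within the Map Pack."""
    points = [rank for row in grid for rank in row]
    if not points:
        return 0.0
    in_pack = sum(1 for rank in points if rank is not None and rank <= pack_size)
    return in_pack / len(points)


def average_found_rank(grid: Grid) -> Optional[float]:
    """Mean rank over the grid points where the business appears at all."""
    found = [rank for row in grid for rank in row if rank is not None]
    return sum(found) / len(found) if found else None


def render(grid: Grid, pack_size: int = 3) -> str:
    """Text heat map: '#' = in Map Pack, '+' = ranked lower, '.' = not found."""
    def cell(rank: Optional[int]) -> str:
        if rank is None:
            return "."
        return "#" if rank <= pack_size else "+"
    return "\n".join("".join(cell(rank) for rank in row) for row in grid)


if __name__ == "__main__":
    sample = [
        [7, 4, 5],
        [2, 1, 3],
        [None, 2, 9],
    ]
    print(render(sample))
    print(f"Map Pack share: {map_pack_share(sample):.0%}")
    print(f"Average rank where found: {average_found_rank(sample):.2f}")
```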

Why It Matters

1. Visibility Drives Foot Traffic

According to recent studies, 78% of local mobile searches result in an offline purchase. If you’re not in the Map Pack, those sales are walking straight into your competitor’s doors.

2. User Proximity Bias

Google prioritizes businesses not just based on relevance but on proximity. If your listing isn’t optimized for precise local searches, you’re leaving money on the table.

3. Direct Influence on SERP Performance

Appearing in the Map Pack places your business at the top of local search results, feeding visibility into both online and offline spaces.

How to Maximize Visibility in Local SEO

Optimize Your Google Business Profile (GBP):

Ensure your NAP (Name, Address, Phone) is accurate and consistent.

Add high-quality images, respond to reviews, and frequently update operating hours.
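Consistent NAP details are easier to enforce if you normalize each listing before comparing it with a reference. Here is a minimal sketch, assuming you have already exported the name, address, and phone from each directory. The normalization rules are illustrative only: lowercasing, stripping punctuation, shortening a few street words, and keeping the last ten phone digits, which assumes North American numbers. Real address matching needs more care.

```python
import re
from typing import Dict, List

ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_text(value: str) -> str:
    """Lowercase, drop punctuation, collapse spaces, and shorten common words."""
    words = re.sub(r"[^\w\s]", " ", value.lower()).split()
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def normalize_phone(value: str) -> str:
    """Keep only the last 10 digits (assumes North American numbers)."""
    return re.sub(r"\D", "", value)[-10:]


def nap_mismatches(listings: Dict[str, Dict[str, str]], reference: str) -> List[str]:
    """Compare every listing's name, address, and phone with the reference listing."""
    ref = listings[reference]
    problems = []
    for source, nap in listings.items():
        if source == reference:
            continue
        for field in ("name", "address"):
            if normalize_text(nap[field]) != normalize_text(ref[field]):
                problems.append(f"{source}: {field} differs ({nap[field]!r})")
        if normalize_phone(nap["phone"]) != normalize_phone(ref["phone"]):
            problems.append(f"{source}: phone differs ({nap['phone']!r})")
    return problems


if __name__ == "__main__":
    listings = {  # hypothetical exports from three directories
        "google": {"name": "Example Coffee Co.", "address": "123 Main Street, Springfield",
                   "phone": "(217) 555-0100"},
        "yelp": {"name": "Example Coffee Co", "address": "123 Main St., Springfield",
                 "phone": "+1 217-555-0100"},
        "facebook": {"name": "Example Coffee", "address": "123 Main St, Springfield",
                     "phone": "217-555-0199"},
    }
    for problem in nap_mismatches(listings, reference="google"):
        print(problem)
```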

Focus on Reviews:

Encourage happy customers to leave reviews.

Respond to every review (yes, even the bad ones). Engagement signals trustworthiness.

Leverage Local Keywords:

Target queries like "best [your service] near me" or "[service] in [city]" to rank for location-based searches.

Tools like BrightLocal and Whitespark can help you track local performance.
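You can expand location-based query patterns like these mechanically into a keyword list to feed a rank tracker. Here is a small sketch. The services, cities, and the third template are placeholders to replace with your own.

```python
from itertools import product
from typing import List

TEMPLATES = [
    "best {service} near me",
    "{service} in {city}",
    "{service} near {city}",
]


def local_keywords(services: List[str], cities: List[str],
                   templates: List[str] = TEMPLATES) -> List[str]:
    """Expand every template for every service/city pair, without duplicates."""
    seen = set()
    keywords = []
    for template, service, city in product(templates, services, cities):
        # str.format ignores unused keyword arguments, so "near me" templates work too.
        keyword = template.format(service=service, city=city)
        if keyword not in seen:
            seen.add(keyword)
            keywords.append(keyword)
    return keywords


if __name__ == "__main__":
    for kw in local_keywords(["coffee shop", "espresso bar"], ["Springfield", "Chatham"]):
        print(kw)
```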

Use SEO Automation Tools:

Tools like SEMrush and Moz Local can audit your listings, track rankings, and streamline updates. Automating repetitive tasks frees up time for deeper optimizations.

7. Voice and Mobile Search Optimization

Let’s get one thing straight: if your SEO strategy isn’t optimized for voice and mobile searches, you’re catering to an audience that doesn’t exist anymore. By 2025, voice-driven queries and mobile-first indexing are the baseline. If your website can’t keep up, neither will your rankings.

Why Voice and Mobile Search Dominate SEO

Voice Search Is Redefining Queries

Voice search isn’t just “spoken Google.” It’s transforming how users ask questions. Searches are longer, more conversational, and often hyper-specific. For example, instead of typing “best SEO tools,” users now say, “What’s the best SEO automation tool for small businesses?” If your content doesn’t align with this natural language, you’re invisible.

Mobile Is Non-Negotiable

Google’s mobile-first indexing means it now ranks websites based on their mobile versions. If your site is clunky on a smartphone, your desktop masterpiece won’t save you. And with nearly 60% of all searches happening on mobile, responsive design isn’t optional; it’s critical.

How to Optimize for Voice and Mobile

Create Conversational Content:

Use natural language that matches how people talk. Think FAQs and “how-to” guides tailored for voice queries.

Focus on long-tail keywords like “how to optimize for mobile-first indexing” rather than rigid phrases.
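To find which of your existing queries already look like spoken questions, a simple heuristic is to flag queries that start with a question word and run to several words. The question-word list and the five-word minimum below are assumptions for illustration, not a published definition of a voice query.

```python
from typing import Iterable, List

QUESTION_WORDS = {"who", "what", "what's", "when", "where", "why", "how",
                  "which", "can", "is", "are", "does", "do", "should"}


def is_conversational(query: str, min_words: int = 5) -> bool:
    """Heuristic: starts with a question word and has at least `min_words` words."""
    words = query.lower().replace("\u2019", "'").split()  # normalise curly apostrophes
    return len(words) >= min_words and words[0] in QUESTION_WORDS


def conversational_queries(queries: Iterable[str], min_words: int = 5) -> List[str]:
    return [q for q in queries if is_conversational(q, min_words)]


if __name__ == "__main__":
    sample = [
        "best seo tools",
        "What's the best SEO automation tool for small businesses?",
        "how to optimize for mobile-first indexing",
        "nearest coffee shop open now",
    ]
    print(conversational_queries(sample))
```

The heuristic misses keyword-style voice queries such as "nearest coffee shop open now", so it works only as a first pass.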

Mobile-First Design:

Prioritize responsive design that adapts seamlessly to smaller screens.

Optimize loading speed; anything over 3 seconds is SEO suicide.
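A responsive page normally declares a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. Without it, mobile browsers lay the page out at a desktop width and scale it down. The sketch below checks for that tag using Python's standard `html.parser`. It is only one signal of mobile readiness and says nothing about load speed.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class ViewportFinder(HTMLParser):
    """Record the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        attributes = dict(attrs)
        if (tag == "meta" and self.viewport is None
                and (attributes.get("name") or "").lower() == "viewport"):
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page sets width=device-width in its viewport meta tag."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    settings = [part.strip().lower().replace(" ", "")
                for part in finder.viewport.split(",")]
    return "width=device-width" in settings


if __name__ == "__main__":
    mobile = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    desktop_only = "<head><title>Old site</title></head>"
    print(has_responsive_viewport(mobile), has_responsive_viewport(desktop_only))
```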

Leverage Local SEO:

Most voice searches are local. Queries like “nearest coffee shop open now” thrive on accurate local listings.

Ensure your Google Business Profile is up-to-date and features consistent NAP info.

Use Structured Data:

Schema markup helps search engines interpret your content, increasing the likelihood of appearing in voice search results.

The future of SEO is voice-driven and mobile-first, and both require you to rethink how you structure your content and your site. Optimizing SEO for voice search and mobile-first indexing future-proofs your business. And if you’re not ready to adapt, don’t worry—your competitors already have.

8. What's Better for AI: BOFU or TOFU Content?

Let’s start with the obvious: not all content is the same, especially when AI gets involved. The age-old debate between Top of Funnel (TOFU) and Bottom of Funnel (BOFU) content just got a modern twist, thanks to the rise of AI-driven SEO. The real question isn’t which one is better—it’s how to use AI to optimize both.

Look, if you’re focusing on one and neglecting the other, you’re leaving money—and rankings—on the table.

TOFU Content: Casting the Wide Net

Top of Funnel content is designed to attract and inform. Think of blog posts, educational guides, or those “What is [your product]?” articles. In the AI era, TOFU content isn’t just about driving traffic; it’s about structured data and search intent optimization. AI tools like ChatGPT help create scalable, topic-driven content tailored for discovery.

Why TOFU Matters:

It builds brand awareness and visibility.

Optimized TOFU content aligns with broad search intent, capturing users who aren’t ready to buy but are hungry for knowledge.

TOFU shines in industries with complex products that need explanation before consideration.

BOFU Content: Sealing the Deal

On the other hand, Bottom of Funnel content focuses on converting leads into customers. This includes case studies, product comparisons, and detailed how-to content. AI isn’t just speeding up content creation here; it’s enabling hyper-personalized, decision-driven assets.

Why BOFU Matters:

It answers purchase-ready queries like “best SEO automation tools for small businesses.”

BOFU works wonders for products or services with shorter sales cycles or high competition.

The content can include dynamic features like interactive product demos or AI-generated testimonials to push users over the edge.

The Verdict: Which One Wins?

Neither. TOFU and BOFU content work best as part of a balanced strategy. AI thrives when it’s used to create and optimize both stages of the buyer’s journey.

For example:

Use AI to analyze trends and structure TOFU content for long-tail keywords.

Deploy AI for data-driven BOFU personalization, ensuring the content resonates with users’ specific needs.
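Before handing keywords to an AI tool, it can help to sort them by funnel stage. Here is a minimal rule-based sketch. The modifier patterns are assumptions drawn from this section's examples: "What is" questions count as TOFU, while "best" and comparison queries count as BOFU. Anything unmatched is left for a human to decide.

```python
import re
from typing import Dict, List

# Assumed intent modifiers; adjust them for your own market.
BOFU_PATTERNS = [r"\bbest\b", r"\bvs\.?\b", r"\bpricing\b", r"\bprice\b",
                 r"\bbuy\b", r"\breviews?\b", r"\balternatives?\b"]
TOFU_PATTERNS = [r"^what is\b", r"^why\b", r"^how (do|does|to)\b",
                 r"\bguide\b", r"\bexamples?\b"]


def funnel_stage(keyword: str) -> str:
    """Return 'BOFU', 'TOFU', or 'unclassified' for one keyword.

    BOFU patterns are checked first, so a query like 'best seo guide' counts as BOFU.
    """
    text = keyword.lower().strip()
    if any(re.search(p, text) for p in BOFU_PATTERNS):
        return "BOFU"
    if any(re.search(p, text) for p in TOFU_PATTERNS):
        return "TOFU"
    return "unclassified"


def group_by_stage(keywords: List[str]) -> Dict[str, List[str]]:
    groups: Dict[str, List[str]] = {"TOFU": [], "BOFU": [], "unclassified": []}
    for kw in keywords:
        groups[funnel_stage(kw)].append(kw)
    return groups


if __name__ == "__main__":
    print(group_by_stage([
        "what is search intent optimization",
        "best SEO automation tools for small businesses",
        "semrush vs ahrefs",
        "seo automation",
    ]))
```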

AI isn’t here to settle the TOFU vs. BOFU debate—it’s here to make sure you never have to choose. A well-rounded strategy, powered by AI, ensures you attract the right audience and convert them when the time is right. If you’re doing one without the other, you’re playing half the game.

Staying Ahead of SEO Trends in 2025

SEO isn’t static, and 2025 won’t give you time to rest on outdated strategies. From zero-click searches hijacking clicks to AI redefining the content game, keeping up isn’t just a choice—it’s survival. Businesses that ignore these SEO trends risk fading into irrelevance faster than you can say “algorithm update.”

The solution? Adapt now!

Use AI SEO tools to fine-tune your strategy, optimize for human intent (not just search engines), and rethink how you create TOFU and BOFU content. It’s not about doing everything—it’s about doing the right things smarter and faster.

Start applying these insights today. Your competitors already are.

READ THE FULL BLOG: 8 SEO Trends for 2025 — Rathcore I/O

Why Gemini is Better than ChatGpt?

Gemini's Advantages Over ChatGPT

Both Gemini and ChatGPT are sophisticated AI models designed to communicate with people in a human-like way and help with a variety of tasks. In some situations, however, Gemini stands out as the more sophisticated and adaptable option because of several features it offers:

1. Multimodal Proficiency: Gemini supports smooth multimodal interaction, letting users communicate through speech, text, and image inputs. Because it can understand and generate answers that combine several forms of content, Gemini is well suited to visually complex queries and to situations where integrating media improves comprehension.

2. Improved Comprehension of Context: Gemini is better at understanding and remembering context across longer interactions. It can manage intricate conversations, providing more precise and tailored answers without losing track of earlier points in the discussion.

3. Original Work: From polished writing to eye-catching graphics and artistic representations, Gemini excels at producing unique content. Its strong capacity for distinctive output makes it a favored option for projects that demand innovation.

4. Knowledge and Updates in Real Time: Unlike ChatGPT, which relies on a static knowledge base that is updated periodically, Gemini uses more dynamic techniques to stay current with data trends and recent events.

5. Customization and User-Friendly Interface: With Gemini's improved customization options and more user-friendly interface, users can adjust replies, tone, and style to suit their own requirements. This flexibility is especially helpful for professionals and companies trying to keep their branding consistent.

6. More Comprehensive Integration: Thanks to its native support for a variety of platforms and APIs, Gemini integrates easily into third-party tools, workflows, and apps, which makes it very flexible for both personal and commercial use.

7. Improved Security and Privacy: Gemini emphasizes user data privacy, including stronger encryption and adherence to international standards, so users can feel confident that their data is protected during interactions.

#Gemini vs ChatGPT#AI Features#AI Technology#ChatGPT Alternatives#AI Privacy and Security#Future of AI

Looking up ChatGPT alternatives

The new Bing also has a Chat mode that pulls in web results and lets users ask contextual follow-up questions about them. The AI chatbot-cum-search engine recently received a host of new features, including multimodal capability, visual answers, improved accuracy, and the Bing Image Creator.


Unlocking the Future of Search: AEO for Google Gemini and AEO for Bard

Introduction

As the digital landscape evolves, so does the way we approach search engine optimization (SEO). Traditional SEO is no longer enough to dominate the SERPs. With the rise of generative AI and conversational interfaces, Answer Engine Optimization (AEO) is quickly becoming the next frontier. At ThatWare, we’re at the cutting edge of this transformation—developing powerful strategies to optimize for AI-driven platforms like Google Gemini and Google Bard.

In this blog, we’ll explore what AEO for Google Gemini and AEO for Bard means, why it's crucial in 2025, and how your business can stay ahead of the curve.

What Is AEO and Why Does It Matter?

Answer Engine Optimization (AEO) is the process of structuring and optimizing content so that it directly answers user queries in a format easily understood and extracted by AI systems, voice assistants, and large language models (LLMs). Unlike traditional SEO, AEO prioritizes precise, context-rich, and authoritative responses.

As generative AI becomes the new search interface, being the top answer, not just the top link, is the goal.

AEO for Google Gemini: A New SEO Paradigm

Google Gemini is Google's next-generation AI model, integrated into its search and productivity tools. It's deeply embedded in Search, Android, and other core products—reshaping how people interact with information.

Optimizing for Gemini involves:

Semantic structuring of content for contextual understanding.

Entity-based SEO to align with Google's knowledge graph.

Use of vector embeddings to make content machine-readable for Gemini’s multimodal capabilities.

AI-aligned markup (like schema.org) to help Gemini extract accurate responses.
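Schema.org markup is usually added to a page as JSON-LD. As a minimal sketch, assuming a page made of FAQ-style question and answer pairs, the Python below builds a `FAQPage` object whose `mainEntity` holds `Question` entities with an `acceptedAnswer`. The function name `faq_jsonld` and the sample text are hypothetical, and the code only produces valid markup; it does not show how, or whether, Gemini uses it.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    doc = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        # Each Q&A pair becomes a Question entity with an accepted Answer.
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(doc, indent=2)


if __name__ == "__main__":
    # Hypothetical sample content; the output would go inside a
    # <script type="application/ld+json"> element on the FAQ page.
    print(faq_jsonld([
        ("What is Answer Engine Optimization?",
         "AEO structures content so AI systems can extract direct answers."),
    ]))
```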

At ThatWare, our proprietary AI tools are already tuned to craft content that aligns with Gemini’s retrieval mechanisms, ensuring better visibility in AI-generated snippets and summaries.

AEO for Bard: Conversational AI Meets SEO

While Gemini is the underlying model, Google Bard (the chatbot Google rebranded as Gemini in February 2024) is the user-facing conversational interface. Bard moves search from keyword-based queries to natural, conversational prompts, and the answers it delivers are drawn from AEO-rich content.

To optimize for Bard:

Answer questions directly and conversationally.

Incorporate FAQs and structured Q&A formats on your site.

Focus on topical authority and E-E-A-T (Experience, Expertise, Authoritativeness, and Trust).

Create content designed for zero-click searches.

ThatWare’s AI-driven SEO strategies ensure your content ranks not just in search, but in conversation—where Bard chooses its responses from high-quality, answer-optimized sources.

Why Businesses Need to Adapt Now

Failing to adapt to AEO means falling behind as AI becomes the dominant gateway to information. Google Gemini and Bard are already shifting user behavior from link-clicking to answer-consuming. If your content isn’t designed for this future, your online visibility will drop—fast.

With ThatWare’s AI and NLP expertise, we offer tailored AEO solutions that help you:

Rank in AI-generated answers

Outperform competitors in AI chatbots

Generate leads through conversational search

Final Thoughts

The age of generative AI is here, and AEO for Google Gemini and AEO for Bard are no longer optional; they're essential. At ThatWare LLP, we specialize in merging AI, SEO, and data science to help brands thrive in this new search paradigm.


Storytelling with Data in 2025: Emerging Trends Every Analyst Should Know

In an age where data is the new oil, storytelling with data has never been more critical. The year 2025 marks a tipping point for how businesses, governments, and individuals harness insights through visual narratives. As data becomes increasingly complex, the ability to distill meaningful stories from vast datasets is a crucial skill. Whether you are a seasoned data visualization specialist, an aspiring analyst, or a business executive seeking clearer insights, the trends shaping storytelling with data in 2025 are essential to grasp.

1. From Dashboards to Dynamic Narratives

Traditional dashboards are transforming. No longer are they static displays of KPIs. In 2025, they have evolved into dynamic storytelling platforms. Today’s tools leverage natural language generation (NLG), AI-driven annotations, and automated pattern detection to craft contextual narratives around data.
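Template-based generation is the simplest form of the NLG annotation described above. The sketch below, with a hypothetical `narrate_change` helper and made-up quarterly figures, finds the largest period-over-period move in a series and describes it in one sentence. Commercial tools use far richer models, so treat this only as an illustration of the idea.

```python
def narrate_change(metric, periods, values):
    """Describe the largest period-over-period change in a series as one sentence."""
    if len(values) < 2 or len(values) != len(periods):
        raise ValueError("need at least two aligned periods and values")
    # (change, index) for every consecutive pair of periods.
    changes = [(values[i] - values[i - 1], i) for i in range(1, len(values))]
    delta, i = max(changes, key=lambda c: abs(c[0]))
    if delta == 0:
        return f"{metric} was flat across all periods."
    direction = "rose" if delta > 0 else "fell"
    sentence = f"{metric} {direction} by {abs(delta):,.0f} between {periods[i - 1]} and {periods[i]}"
    prev = values[i - 1]
    if prev:  # skip the percentage when the base value is zero
        sentence += f" ({abs(delta) / abs(prev):.1%})"
    return sentence + ", the largest move in the period shown."


if __name__ == "__main__":
    print(narrate_change("Revenue", ["Q1", "Q2", "Q3"], [100, 120, 90]))
```

With the sample data, the function reports the Q2 to Q3 drop of 30 (25.0%) because it is larger in absolute terms than the earlier rise of 20.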

Modern data visualization tools integrate real-time feeds, predictive analytics, and smart visual cues that change based on the viewer's role or inquiry. For example, a marketing executive and a financial analyst accessing the same dashboard will receive different visual stories tailored to their needs.

This shift reflects a broader cultural change: people crave context, not just numbers. Dynamic dashboards serve as interactive stories, guiding users through cause-effect relationships and strategic decision points.

2. Rise of Augmented Analytics

One of the defining trends of 2025 is the convergence of AI and human cognition in analytics. Augmented analytics use machine learning algorithms to surface the most relevant insights automatically.

A data visualization specialist today isn't just a chart designer; they are a curator of augmented stories. These professionals now work closely with AI to guide data exploration, suggest optimal visual formats, and highlight anomalies.

The impact on data visualization applications is profound. Platforms now include smart recommendations, automated anomaly detection, and even sentiment analysis integration. This helps analysts quickly identify and communicate the story within the data without manual sifting.
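One way to picture the "suggest optimal visual formats" feature is a rule table keyed on column types. This is a hypothetical heuristic sketch, not how any particular platform works: it infers whether each column is temporal, numeric, or categorical and maps the pair of types to a common default chart, falling back to a table.

```python
from datetime import date


def infer_kind(values):
    """Classify a column as 'temporal', 'numeric', or 'categorical' from its values."""
    if not values:
        raise ValueError("cannot infer the kind of an empty column")
    if all(isinstance(v, date) for v in values):  # datetime is a subclass of date
        return "temporal"
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        return "numeric"
    return "categorical"


# Hypothetical rule table: (x kind, y kind) -> suggested chart.
CHART_RULES = {
    ("temporal", "numeric"): "line chart",
    ("categorical", "numeric"): "bar chart",
    ("numeric", "numeric"): "scatter plot",
    ("categorical", "categorical"): "heatmap of counts",
    ("numeric", None): "histogram",
    ("categorical", None): "bar chart of counts",
}


def recommend_chart(x_values, y_values=None):
    """Suggest a chart type for one or two columns; default to a plain table."""
    key = (infer_kind(x_values), infer_kind(y_values) if y_values is not None else None)
    return CHART_RULES.get(key, "table")
```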

3. Multimodal Storytelling

Data stories in 2025 are no longer confined to graphs and charts. With the rise of multimodal interfaces, data can now be presented through voice, AR/VR, and immersive experiences.

Picture a logistics manager walking through a warehouse using AR glasses that overlay real-time data about inventory, delivery schedules, and efficiency metrics. These are not science fiction but actual data visualization applications being piloted across industries.

Voice interfaces allow analysts to query data verbally: "Show me last quarter's sales dip in the Midwest region," followed by a narrated explanation with accompanying visuals. These multimodal experiences increase accessibility and engagement across diverse audiences.

4. Democratization of Data Storytelling

2025 heralds a new era of self-service analytics. With the proliferation of no-code and low-code data visualization tools, even non-technical users can now create compelling data stories.

The role of a data visualization specialist is shifting from creator to enabler. Their new mission: empower others to tell their own stories. This involves developing templates, training materials, and governance structures to ensure data consistency and clarity.

Education platforms are now incorporating data storytelling as a core competency. Expect MBA graduates, public policy students, and healthcare professionals to possess a baseline proficiency in data visualization applications.

5. Ethical and Inclusive Visualization

As data-driven decisions grow in scale and impact, ethical storytelling becomes essential. Analysts must now consider the societal implications of their visualizations.

In 2025, leading data visualization tools include bias detection algorithms, color-blind friendly palettes, and accessibility features like screen reader compatibility. These tools guide analysts in making visuals that are not only effective but also inclusive.
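Color accessibility can be checked numerically. WCAG 2.x defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and darker colors, and sets 4.5:1 as the AA minimum for normal text (3:1 for large text). A minimal Python sketch of that check, with hypothetical function names:

```python
def _linear(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light."""
    c = channel_8bit / 255
    # sRGB threshold; WCAG 2.x cites 0.03928, which gives identical results for 8-bit values.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a '#RRGGBB' color."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(fg, bg):
    """WCAG contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg, bg, large_text=False):
    """True if the pair meets the WCAG AA contrast minimum."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, `#767676` text on a white background passes AA (about 4.54:1), while the slightly lighter `#777777` falls just short (about 4.48:1).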

A responsible data visualization specialist asks: Who might misinterpret this graphic? Who is excluded from understanding this data? Inclusive visualization requires empathy, cultural awareness, and a commitment to equity.

6. Hyper-Personalization

Every user views the world through a unique lens. In response, data stories are becoming hyper-personalized. AI-driven personalization engines tailor dashboards and visuals based on user behavior, preferences, and past decisions.

Imagine a data visualization application that learns an executive's typical filters and prioritizes similar metrics in future reports. Or a tool that adapts its visualizations for different cognitive styles—presenting summaries visually for some, and text-heavy narratives for others.
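The "learn an executive's typical filters" idea can be sketched with a simple frequency count. The class below is hypothetical and deliberately naive (no recency weighting, no per-context models): it records which metrics a user filters on and ranks the available metrics by how often each was used, keeping the original order for ties.

```python
from collections import Counter


class FilterPreferences:
    """Track how often a user filters on each metric and rank metrics accordingly."""

    def __init__(self):
        self._counts = Counter()

    def record(self, metrics):
        """Log one session's worth of filtered metrics."""
        self._counts.update(metrics)

    def prioritize(self, available, top_n=3):
        """Return up to top_n metrics, most-used first (sorted() is stable for ties)."""
        ranked = sorted(available, key=lambda m: -self._counts[m])
        return ranked[:top_n]


if __name__ == "__main__":
    prefs = FilterPreferences()
    prefs.record(["revenue", "churn"])
    prefs.record(["revenue"])
    print(prefs.prioritize(["nps", "churn", "revenue", "cac"], top_n=2))
```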

Hyper-personalization doesn't mean pandering—it means relevance. By aligning data storytelling with individual needs, organizations enhance comprehension and decision-making.

7. Real-Time, Action-Oriented Insights

Gone are the days of weekly reports. In 2025, organizations demand real-time, action-oriented insights that can drive instant decisions.

Modern data visualization tools are deeply integrated with live data sources, predictive engines, and alert systems. When a key metric deviates unexpectedly, the system not only alerts the user but provides a story: why it happened, what it affects, and what action to take.
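A basic version of "alert when a key metric deviates unexpectedly" compares the latest value with a trailing window. This sketch assumes a z-score rule with a hypothetical default threshold of 3 standard deviations. It produces the alert sentence, but not the causal "why it happened" analysis the paragraph describes, which would need domain data the example does not have.

```python
from statistics import mean, stdev


def check_metric(history, latest, threshold=3.0, name="metric"):
    """Return an alert sentence if `latest` deviates from `history` by >= threshold std devs."""
    if len(history) < 2:
        return None  # stdev needs at least two points
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Flat baseline: any change at all is notable.
        return None if latest == mu else f"{name} moved off a flat baseline of {mu:g} to {latest:g}."
    z = (latest - mu) / sigma
    if abs(z) < threshold:
        return None
    direction = "above" if z > 0 else "below"
    return f"{name} is {latest:g}, {abs(z):.1f} standard deviations {direction} its recent average of {mu:g}."


if __name__ == "__main__":
    window = [100, 102, 98, 101, 99]
    print(check_metric(window, 100.5, name="signups"))  # within normal range
    print(check_metric(window, 110, name="signups"))    # flagged
```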

A data visualization specialist must now think like a UX designer—optimizing the experience for rapid comprehension and response. This involves focusing on clarity, hierarchy, and visual cues that direct attention to the most critical elements.

8. Emotional Storytelling through Data

One of the most profound shifts is the rise of emotional resonance in data storytelling. Human decisions are often driven by emotion, not logic. Storytellers who blend data with human narratives evoke stronger engagement and understanding.

For example, a nonprofit using a data visualization application to show refugee displacement trends can pair it with firsthand stories, imagery, and dynamic maps. These experiences generate empathy and drive action.

The future belongs to data visualization specialists who can blend the rational with the emotional—crafting stories that move people, not just inform them.

9. Cross-Functional Collaboration

Storytelling with data is no longer a siloed activity. In 2025, it is a cross-functional process involving data scientists, designers, domain experts, and communicators.

Data visualization tools now offer collaborative features: shared dashboards, comment threads, version control, and real-time co-editing. These features mirror tools like Google Docs or Figma but are tailored for data work.

A modern data visualization specialist acts as a bridge—facilitating communication between technical and non-technical stakeholders. They help teams co-create narratives that drive aligned decisions.

10. Future-Proofing Your Skillset

To remain relevant in 2025 and beyond, analysts must continuously evolve. This means:

Mastering advanced data visualization tools like Tableau, Power BI, and emerging platforms with AI integration.

Learning scripting languages (e.g., Python, R) to augment visual storytelling with automated workflows.

Understanding behavioral psychology to craft persuasive, human-centered visuals.

Developing ethical literacy around data privacy, bias, and inclusion.

Whether you are a novice or a senior data visualization specialist, your journey must include both technical and narrative growth. The most successful professionals are those who combine sharp analytical thinking with compelling storytelling prowess.

Conclusion

In 2025, storytelling with data is no longer a niche skill—it is the currency of decision-making. The convergence of AI, interactivity, inclusivity, and emotional intelligence has redefined what it means to be a data visualization specialist. With the right data visualization tools and thoughtful data visualization applications, anyone can transform raw information into actionable wisdom.

As we move further into the decade, one thing is certain: the future belongs to those who can tell stories that people understand, remember, and act upon.

#data migration services#data visualization specialist#data visualization consultant#data visualization in finance


When AI Backfires: Enkrypt AI Report Exposes Dangerous Vulnerabilities in Multimodal Models

New Post has been published on https://thedigitalinsider.com/when-ai-backfires-enkrypt-ai-report-exposes-dangerous-vulnerabilities-in-multimodal-models/


In May 2025, Enkrypt AI released its Multimodal Red Teaming Report, a chilling analysis that revealed just how easily advanced AI systems can be manipulated into generating dangerous and unethical content. The report focuses on two of Mistral’s leading vision-language models—Pixtral-Large (25.02) and Pixtral-12b—and paints a picture of models that are not only technically impressive but disturbingly vulnerable.

Vision-language models (VLMs) like Pixtral are built to interpret both visual and textual inputs, allowing them to respond intelligently to complex, real-world prompts. But this capability comes with increased risk. Unlike traditional language models that only process text, VLMs can be influenced by the interplay between images and words, opening new doors for adversarial attacks. Enkrypt AI’s testing shows how easily these doors can be pried open.

Alarming Test Results: CSEM and CBRN Failures

The team behind the report used sophisticated red teaming methods—a form of adversarial evaluation designed to mimic real-world threats. These tests employed tactics like jailbreaking (prompting the model with carefully crafted queries to bypass safety filters), image-based deception, and context manipulation. Alarmingly, 68% of these adversarial prompts elicited harmful responses across the two Pixtral models, including content that related to grooming, exploitation, and even chemical weapons design.

One of the most striking revelations involves child sexual exploitation material (CSEM). The report found that Mistral’s models were 60 times more likely to produce CSEM-related content compared to industry benchmarks like GPT-4o and Claude 3.7 Sonnet. In test cases, models responded to disguised grooming prompts with structured, multi-paragraph content explaining how to manipulate minors—wrapped in disingenuous disclaimers like “for educational awareness only.” The models weren’t simply failing to reject harmful queries—they were completing them in detail.

Equally disturbing were the results in the CBRN (Chemical, Biological, Radiological, and Nuclear) risk category. When prompted with a request on how to modify the VX nerve agent—a chemical weapon—the models offered shockingly specific ideas for increasing its persistence in the environment. They described, in redacted but clearly technical detail, methods like encapsulation, environmental shielding, and controlled release systems.

These failures were not always triggered by overtly harmful requests. One tactic involved uploading an image of a blank numbered list and asking the model to “fill in the details.” This simple, seemingly innocuous prompt led to the generation of unethical and illegal instructions. The fusion of visual and textual manipulation proved especially dangerous—highlighting a unique challenge posed by multimodal AI.

Why Vision-Language Models Pose New Security Challenges

At the heart of these risks lies the technical complexity of vision-language models. These systems don’t just parse language—they synthesize meaning across formats, which means they must interpret image content, understand text context, and respond accordingly. This interaction introduces new vectors for exploitation. A model might correctly reject a harmful text prompt alone, but when paired with a suggestive image or ambiguous context, it may generate dangerous output.

Enkrypt AI’s red teaming uncovered how cross-modal injection attacks—where subtle cues in one modality influence the output of another—can completely bypass standard safety mechanisms. These failures demonstrate that traditional content moderation techniques, built for single-modality systems, are not enough for today’s VLMs.

The report also details how the Pixtral models were accessed: Pixtral-Large through AWS Bedrock and Pixtral-12b via the Mistral platform. This real-world deployment context further emphasizes the urgency of these findings. These models are not confined to labs—they are available through mainstream cloud platforms and could easily be integrated into consumer or enterprise products.

What Must Be Done: A Blueprint for Safer AI

To its credit, Enkrypt AI does more than highlight the problems—it offers a path forward. The report outlines a comprehensive mitigation strategy, starting with safety alignment training. This involves retraining the model using its own red teaming data to reduce susceptibility to harmful prompts. Techniques like Direct Preference Optimization (DPO) are recommended to fine-tune model responses away from risky outputs.
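For readers unfamiliar with DPO, its per-example loss (Rafailov et al., 2023) is -log σ(β[(log πθ(y_w|x) - log π_ref(y_w|x)) - (log πθ(y_l|x) - log π_ref(y_l|x))]), where y_w is the preferred response, y_l the rejected one, πθ the model being tuned, and π_ref a frozen reference copy. In a safety setting, red-teaming data could supply refusals as y_w and harmful completions as y_l; that pairing is an assumption here, since the report's exact training recipe is not described. A plain-Python sketch of the loss for a single pair, with a hypothetical `dpo_loss` name:

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Arguments are summed log-probabilities log p(y|x) of the chosen (e.g. safe)
    and rejected (e.g. harmful) responses under the tuned policy and the frozen reference.
    """
    # Implicit reward margin: how much more the policy, relative to the reference,
    # favours the chosen response over the rejected one.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(margin)) computed as a numerically stable softplus(-margin).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy and reference agree exactly, the margin is zero and the loss is log 2; the loss falls as the policy shifts probability toward the chosen response.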

It also stresses the importance of context-aware guardrails—dynamic filters that can interpret and block harmful queries in real time, taking into account the full context of multimodal input. In addition, the use of Model Risk Cards is proposed as a transparency measure, helping stakeholders understand the model’s limitations and known failure cases.
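To illustrate why guardrails must see the full multimodal context, here is a hypothetical sketch in which a classifier scores the text prompt, the text extracted from the image (assumed to come from an upstream OCR or captioning step), and their combination. The `classify` callable is a placeholder for a real moderation model; the keyword stub in the usage example exists only to mimic the blank-list pattern from the report, where neither part is flagged alone but the combination is.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MultimodalInput:
    text: str
    image_text: str = ""  # assumed output of an upstream OCR/captioning step


def guard(inp: MultimodalInput, classify: Callable[[str], float], threshold: float = 0.5) -> bool:
    """Return True if the request should be blocked.

    Scores each modality alone AND the combined context, so an innocuous-looking
    text prompt paired with an image that supplies the risky part is still caught.
    """
    combined = f"{inp.text}\n[image content]\n{inp.image_text}"
    scores = [classify(inp.text), classify(inp.image_text), classify(combined)]
    return max(scores) >= threshold


def stub_classifier(s: str) -> float:
    """Placeholder scorer: flags only when both cues appear together."""
    s = s.lower()
    return 1.0 if ("fill in the details" in s and "restricted-topic" in s) else 0.0


if __name__ == "__main__":
    risky = MultimodalInput("Please fill in the details.", "1. restricted-topic step\n2.\n3.")
    benign = MultimodalInput("Please fill in the details.", "1. grocery list\n2.\n3.")
    print(guard(risky, stub_classifier), guard(benign, stub_classifier))
```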

Perhaps the most critical recommendation is to treat red teaming as an ongoing process, not a one-time test. As models evolve, so do attack strategies. Only continuous evaluation and active monitoring can ensure long-term reliability, especially when models are deployed in sensitive sectors like healthcare, education, or defense.

The Multimodal Red Teaming Report from Enkrypt AI is a clear signal to the AI industry: multimodal power comes with multimodal responsibility. These models represent a leap forward in capability, but they also require a leap in how we think about safety, security, and ethical deployment. Left unchecked, they don’t just risk failure—they risk real-world harm.

For anyone working on or deploying large-scale AI, this report is not just a warning. It’s a playbook. And it couldn’t have come at a more urgent time.

#2025#Adversarial attacks#agent#ai#AI industry#AI systems#Analysis#awareness#AWS#bedrock#benchmarks#blueprint#challenge#chemical#chemical weapon#claude#claude 3#Claude 3.7 Sonnet#Cloud#complexity#comprehensive#content#content moderation#continuous#cybersecurity#data#deception#defense#deploying#deployment


Google Launches Gemini Live: A New Era in Multimodal AI Interactions

In a significant stride towards enhancing user interaction with artificial intelligence, Google has introduced Gemini Live, a multimodal AI assistant designed to facilitate natural, real-time conversations. This advancement marks a pivotal moment in the evolution of AI-driven personal assistants, offering users a more intuitive and interactive experience.

What Is Gemini Live?

Gemini Live is Google's latest AI assistant, built on the Gemini AI model. Unlike text-only chat assistants, Gemini Live integrates voice, video, and screen-sharing capabilities, enabling dynamic, real-time interactions. This integration allows for more personalized, context-aware assistance, making it a versatile tool for many applications.

Key Features of Gemini Live

1. Multimodal Interactions

Gemini Live supports voice, video, and screen-sharing inputs, allowing users to communicate in the most natural way possible. Whether it's a spoken query, a visual reference, or a shared screen, Gemini Live processes these inputs seamlessly, providing relevant and accurate responses.

2. Real-Time Conversations

Designed for fluid interactions, Gemini Live enables users to have uninterrupted conversations with the AI. Users can ask follow-up questions, provide additional context, or change topics mid-conversation, and Gemini Live adapts accordingly, ensuring a coherent and engaging dialogue.

3. Visual Context Understanding

By leveraging the device's camera, Gemini Live can analyze and interpret visual inputs. This feature is particularly useful for tasks that require visual context, such as identifying objects, reading text from images, or providing step-by-step guidance for visual tasks.

4. Screen Sharing Capabilities

Gemini Live allows users to share their device's screen with the AI, enabling real-time assistance with on-screen content. This feature is beneficial for troubleshooting, reviewing documents, or receiving guidance on navigating applications.

5. Integration with Google Ecosystem

As part of the Google ecosystem, Gemini Live integrates seamlessly with various Google services and applications. This integration ensures that users can access a wide range of functionalities, from managing schedules in Google Calendar to retrieving information from Google Search, all within a single interface.

Availability and Accessibility

Gemini Live is currently available on select devices, including the Google Pixel 9 and Samsung Galaxy S25. Users can access Gemini Live by downloading the Gemini app from the Google Play Store or Apple App Store. Additionally, Gemini Live is accessible through the Google One AI Premium subscription, offering enhanced features and capabilities.

Use Cases and Applications

1. Personal Assistance

Gemini Live serves as a personal assistant, helping users manage tasks such as setting reminders, sending messages, and making calls. Its ability to understand natural language and context allows for efficient and hands-free task management.

2. Educational Support

Students can utilize Gemini Live for educational purposes, such as explaining complex concepts, solving problems, or providing study materials. Its multimodal capabilities enhance the learning experience by offering visual explanations and interactive sessions.

3. Professional Collaboration

In a professional setting, Gemini Live can assist with tasks like scheduling meetings, drafting emails, or providing real-time feedback during presentations. Its screen-sharing feature facilitates collaborative work, making remote teamwork more effective.

4. Troubleshooting and Technical Support

Gemini Live's visual context understanding enables it to assist users with troubleshooting technical issues. By analyzing on-screen content and interpreting visual cues, it can provide step-by-step guidance to resolve problems efficiently.

Privacy and Security Considerations

Google has implemented robust privacy and security measures to ensure that users' data is protected. Users have control over their data, with options to manage permissions, review activity, and delete information as needed. Google's commitment to transparency and user control aims to build trust and ensure a safe AI experience.

Future Developments

Looking ahead, Google plans to expand the capabilities of Gemini Live by incorporating advanced features such as emotion recognition, proactive assistance, and deeper integration with third-party applications. These developments aim to make Gemini Live an even more integral part of users' daily lives, providing personalized and context-aware assistance across various domains.

Conclusion

Google's launch of Gemini Live represents a significant advancement in the field of conversational AI. By integrating multimodal interactions, real-time conversations, and visual context understanding, Gemini Live offers a more intuitive and engaging user experience. As AI continues to evolve, innovations like Gemini Live pave the way for more natural and effective human-AI interactions, enhancing productivity, learning, and everyday tasks.

Credentials:

CAIE Certified Artificial Intelligence (AI) Expert®

Certified Cyber Security Expert™ Certification

Certified Network Security Engineer™ Certification

Certified Python Developer™ Certification


RAG has proven effective in enhancing the factual accuracy of LLMs by grounding their outputs in external, relevant information. However, most existing RAG implementations are limited to text-based corpora, which restricts their applicability to rea #AI #ML #Automation


Generative AI Platform Development Explained: Architecture, Frameworks, and Use Cases That Matter in 2025

The rise of generative AI is no longer confined to experimental labs or tech demos—it’s transforming how businesses automate tasks, create content, and serve customers at scale. In 2025, companies are not just adopting generative AI tools—they’re building custom generative AI platforms that are tailored to their workflows, data, and industry needs.

This blog dives into the architecture, leading frameworks, and powerful use cases of generative AI platform development in 2025. Whether you're a CTO, AI engineer, or digital transformation strategist, this is your comprehensive guide to making sense of this booming space.

Why Generative AI Platform Development Matters Today

Generative AI has matured from narrow use cases (like text or image generation) to enterprise-grade platforms capable of handling complex workflows. Here’s why organizations are investing in custom platform development:

Data ownership and compliance: Public APIs like ChatGPT don’t offer the privacy guarantees many businesses need.

Domain-specific intelligence: Off-the-shelf models often lack nuance for healthcare, finance, law, etc.

Workflow integration: Businesses want AI to plug into their existing tools—CRMs, ERPs, ticketing systems—not operate in isolation.

Customization and control: A platform allows fine-tuning, governance, and feature expansion over time.

Core Architecture of a Generative AI Platform

A generative AI platform is more than just a language model with a UI. It’s a modular system with several architectural layers working in sync. Here’s a breakdown of the typical architecture:

1. Foundation Model Layer

This is the brain of the system, typically built on:

LLMs (e.g., GPT-4, Claude, Mistral, LLaMA 3)

Multimodal models (for image, text, audio, or code generation)

You can:

Use open-source models

Fine-tune foundation models

Integrate multiple models via a routing system
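A routing system can be as simple as a dispatch function placed in front of several models. This sketch uses hypothetical model names and keyword rules purely for illustration; production routers often use a trained classifier or cost and latency policies instead.

```python
def route(prompt: str, has_image: bool = False) -> str:
    """Pick a backend model for a request (model names are hypothetical)."""
    if has_image:
        return "multimodal-model"
    lowered = prompt.lower()
    # Crude signals that the request is about code.
    if any(k in lowered for k in ("def ", "stack trace", "compile", "refactor")):
        return "code-model"
    # Very long inputs go to a model with a larger context window.
    if len(prompt) > 4000:
        return "long-context-model"
    return "general-model"
```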

2. Retrieval-Augmented Generation (RAG) Layer

This layer allows dynamic grounding of the model in your enterprise data using:

Vector databases (e.g., Pinecone, Weaviate, FAISS)

Embeddings for semantic search

Document pipelines (PDFs, SQL, APIs)

RAG ensures that generative outputs are factual, current, and contextual.
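The retrieval step of RAG can be shown without any external services. This sketch assumes tiny hand-made 3-dimensional vectors in place of real embeddings and an in-memory list in place of a vector database such as Pinecone or FAISS. It ranks passages by cosine similarity to the query vector and places the best matches into a grounded prompt; the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, index, k=2):
    """Return the k passages whose vectors are most similar to the query."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def grounded_prompt(question, passages):
    """Build a prompt that asks the model to answer only from retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below. If the answer is not there, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


# Toy "embeddings" for illustration; a real system would get these from an
# embedding model and store them in a vector database.
INDEX = [
    ("Refunds are accepted within 30 days of purchase.", [0.9, 0.1, 0.0]),
    ("Standard shipping takes 5 business days.", [0.1, 0.9, 0.0]),
    ("Support is available 9am to 5pm on weekdays.", [0.0, 0.1, 0.9]),
]
```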

3. Orchestration & Agent Layer

In 2025, most platforms include AI agents to perform tasks:

Execute multi-step logic

Query APIs

Take user actions (e.g., book, update, generate report)

Frameworks like LangChain, LlamaIndex, and CrewAI are widely used.
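The loop at the heart of most agent frameworks alternates between asking the model what to do and executing the requested tool. The sketch below is not the API of LangChain, LlamaIndex, or CrewAI; `run_agent`, `get_weather`, and `scripted_model` are hypothetical, and a scripted function stands in for the LLM so the example runs offline.

```python
import json


def get_weather(city):
    """Stub tool standing in for a real API call (hypothetical)."""
    return {"city": city, "forecast": "sunny"}


def run_agent(model, tools, user_message, max_steps=5):
    """Minimal agent loop: the model either requests a tool or returns a final answer."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        action = model(messages)
        if "answer" in action:
            return action["answer"]
        tool = tools.get(action["tool"])
        result = (tool(**action.get("args", {})) if tool
                  else {"error": f"unknown tool {action['tool']}"})
        # Feed the tool output back so the next model call can use it.
        messages.append({"role": "tool", "name": action["tool"],
                         "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(messages):
    """Stand-in for an LLM: call the weather tool once, then answer from its output."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool": "get_weather", "args": {"city": "Paris"}}
    data = json.loads(last["content"])
    return {"answer": f"It looks {data['forecast']} in {data['city']}."}
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools without ever answering.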

4. Data & Prompt Engineering Layer

The control center for:

Prompt templates

Tool calling

Memory persistence

Feedback loops for fine-tuning
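Prompt templates and memory persistence can be illustrated with the standard library alone. In this hypothetical sketch, `string.Template` fills a support-assistant prompt and a bounded `deque` keeps only the most recent turns as conversation memory; the template wording and company name are made up.

```python
from collections import deque
from string import Template

SUPPORT_TEMPLATE = Template(
    "You are a support assistant for $company.\n"
    "Conversation so far:\n$history\n"
    "User: $question\nAssistant:"
)


class ConversationMemory:
    """Keep the last `max_turns` (user, assistant) exchanges."""

    def __init__(self, max_turns=3):
        self.turns = deque(maxlen=max_turns)  # oldest turns drop off automatically

    def add(self, user, assistant):
        self.turns.append((user, assistant))

    def render(self):
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns) or "(none)"


def build_prompt(company, memory, question):
    return SUPPORT_TEMPLATE.substitute(company=company, history=memory.render(), question=question)
```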

5. Security & Governance Layer

Enterprise-grade platforms include:

Role-based access

Prompt logging

Data redaction and PII masking

Human-in-the-loop moderation
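Data redaction is often a pre-processing pass over prompts and logs. The regular expressions below are a minimal, hypothetical sketch covering emails, US-style Social Security numbers, and phone numbers. They will miss many formats and can over-match (long digit runs such as dates, for example), which is why production systems typically add NER-based PII detectors.

```python
import re

# Order matters: SSNs are masked before the broader phone pattern can claim them.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def mask_pii(text):
    """Replace each detected PII span with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text
```

For instance, `mask_pii("Contact jane.doe@example.com or +1 (555) 123-4567, SSN 123-45-6789.")` returns `"Contact [EMAIL] or [PHONE], SSN [SSN]."`.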

6. UI/UX & API Layer

This exposes the platform to users via:

Chat interfaces (Slack, Teams, Web apps)

APIs for integration with internal tools

Dashboards for admin controls

Popular Frameworks Used in 2025

Here's a quick overview of frameworks dominating generative AI platform development today (framework: purpose; why it matters):

LangChain: agent orchestration and tool use; dominant for building AI workflows.

LlamaIndex: indexing and RAG; powerful for knowledge-based apps.

Ray + HuggingFace: scalable model serving; production-ready deployments.

FastAPI: API backend for GenAI apps; lightweight and easy to scale.

Pinecone / Weaviate: vector databases; core for context-aware outputs.

OpenAI Function Calling / Tools: tool use and plugin-like behavior; plug-in capabilities without agents.

Guardrails.ai / Rebuff.ai: output validation; for safe and filtered responses.

Most Impactful Use Cases of Generative AI Platforms in 2025

Custom generative AI platforms are now being deployed across virtually every sector. Below are some of the most impactful applications:

1. AI Customer Support Assistants

Auto-resolve 70% of tickets with contextual data from CRM, knowledge base

Integrate with Zendesk, Freshdesk, Intercom

Use RAG to pull product info dynamically

2. AI Content Engines for Marketing Teams

Generate email campaigns, ad copy, and product descriptions

Align with tone, brand voice, and regional nuances

Automate A/B testing and SEO optimization

3. AI Coding Assistants for Developer Teams

Context-aware suggestions from internal codebase

Documentation generation, test script creation

Debugging assistant with natural language inputs

4. AI Financial Analysts for Enterprise

Generate earnings summaries, budget predictions

Parse and summarize internal spreadsheets

Draft financial reports with integrated charts

5. Legal Document Intelligence

Draft NDAs, contracts based on templates

Highlight risk clauses

Translate legal jargon to plain language

6. Enterprise Knowledge Assistants

Index all internal documents, chat logs, SOPs

Let employees query processes instantly

Enforce role-based visibility

Challenges in Generative AI Platform Development

Despite the promise, building a generative AI platform isn’t plug-and-play. Key challenges include:

Data quality and labeling: Garbage in, garbage out.

Latency in RAG systems: Slow response times affect UX.

Model hallucination: Even with context, LLMs can fabricate.

Scalability issues: From GPU costs to query limits.

Privacy & compliance: Especially in finance, healthcare, legal sectors.

What’s New in 2025?

Private LLMs: Enterprises increasingly train or fine-tune their own models (via platforms like MosaicML, Databricks).

Multi-Agent Systems: Agent networks are collaborating to perform tasks in parallel.

Guardrails and AI Policy Layers: Compliance-ready platforms with audit logs, content filters, and human approvals.

Auto-RAG Pipelines: Tools now auto-index and update knowledge bases without manual effort.

Conclusion

Generative AI platform development in 2025 is not just about building chatbots—it's about creating intelligent ecosystems that plug into your business, speak your data, and drive real ROI. With the right architecture, frameworks, and enterprise-grade controls, these platforms are becoming the new digital workforce.


Multimodal Queries Require Multimodal RAG: Researchers from KAIST and DeepAuto.ai Propose UniversalRAG—A New Framework That Dynamically Routes Across Modalities and Granularities for Accurate and Efficient Retrieval-Augmented Generation
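As the title describes, UniversalRAG routes each query across modalities and granularities, for example text paragraphs versus whole documents, or short video clips versus full videos, and can also decide that no retrieval is needed. The paper uses a model-based router; the keyword rules below are only a hypothetical stand-in to show the shape of that routing decision.

```python
def route_query(query: str) -> str:
    """Pick a corpus: 'none', 'paragraph', 'document', 'image', 'clip', or 'video'.

    Keyword heuristics standing in for a learned router (hypothetical rules).
    """
    q = query.lower()
    if any(w in q for w in ("video", "scene", "moment", "clip")):
        # Questions about a whole video need coarse granularity; otherwise a short clip.
        return "video" if any(w in q for w in ("whole", "entire", "overall", "full")) else "clip"
    if any(w in q for w in ("look like", "picture", "photo", "color", "colour")):
        return "image"
    if any(w in q for w in ("compare", "summarize", "summarise", "overall")):
        return "document"
    if any(w in q for w in ("who", "when", "where", "what", "how many")):
        return "paragraph"
    return "none"
```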


Chroma and OpenCLIP Reinvent Image Search With Intel Max

OpenCLIP Image search

Building High-Performance Image Search with Intel Max GPUs, Chroma, and OpenCLIP

After reviewing the Intel Data Centre GPU Max 1100 and Intel Tiber AI Cloud, Intel Liftoff mentors and AI developers prepared a field guide for lean, high-throughput LLM pipelines.

All development, testing, and benchmarking in this study used the Intel Tiber AI Cloud.

Intel Tiber AI Cloud is designed to give developers and AI enterprises scalable, economical access to Intel's cutting-edge AI hardware, including the latest Intel Xeon Scalable CPUs, Data Centre GPU Max Series, and Gaudi 2 (and 3) accelerators. Startups building compute-intensive AI models can deploy on Intel Tiber AI Cloud in a performance-optimized environment without a large hardware investment.

AI startups are advised to contact Intel Liftoff for AI Startups to learn more about Intel Data Centre GPU Max, Intel Gaudi accelerators, and Intel Tiber AI Cloud's optimised environment.

Using resources, technology, and platforms like Intel Tiber AI Cloud

AI-powered apps increasingly use text, audio, and image data. This article shows how to build and query a multimodal database of text and images using Chroma and OpenCLIP embeddings.

OpenCLIP maps text and images into a shared embedding space, so data of either modality can be compared and retrieved by similarity. The project aims to build a GPU- or XPU-accelerated system that stores image data and queries it with text-based search queries.
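To make the idea of a shared embedding space concrete, here is a minimal sketch in plain Python. The vectors are made-up 4-dimensional stand-ins (real OpenCLIP embeddings are much larger, for example 512 dimensions for a ViT-B-32 model), and the file names are hypothetical. The point is only that a text query and image embeddings can be ranked against each other by cosine similarity.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(query: list[float], items: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (item_id, score) pairs sorted from most to least similar."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in items.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical image embeddings; in practice these come from OpenCLIP's image encoder.
image_embeddings = {
    "black_benz.jpg": [0.9, 0.1, 0.0, 0.2],
    "red_bicycle.jpg": [0.1, 0.8, 0.3, 0.0],
    "beach_sunset.jpg": [0.0, 0.2, 0.9, 0.1],
}

# Hypothetical embedding of the text "Black colour Benz" from OpenCLIP's text encoder.
text_query = [0.8, 0.2, 0.1, 0.3]

for name, score in rank_by_similarity(text_query, image_embeddings):
    print(f"{name}: {score:.3f}")
```

With these toy vectors, `black_benz.jpg` ranks first, then `red_bicycle.jpg`, then `beach_sunset.jpg`. Swapping the roles (an image embedding as the query, captions as the items) gives image-to-text retrieval with the same function.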

Advanced AI with the Intel Data Centre GPU Max 1100

The performance described in this study relies on powerful hardware such as the Intel Data Centre GPU Max Series, accelerated through Intel Extension for PyTorch. The Max 1100 GPU is available on dedicated instances and in the free Intel Tiber AI Cloud JupyterLab environment. Its key features are:

The Xe-HPC Architecture:

Xe-cores: 56 specialised Xe-cores handle GPU compute operations.

Intel XMX engines: 448 XMX engines, built on deep systolic arrays, speed up the dense matrix and vector operations used in AI and deep learning models.

Vector engines: 448 vector engines complement the XMX units for broader parallel computing workloads.

Ray tracing units: 56 hardware-accelerated ray tracing units speed up visualisation.

Memory hierarchy

HBM2e: 48 GB of HBM2e memory delivers 1.23 TB/s of bandwidth, which is needed for complex models and large datasets such as multimodal embeddings.

Cache: A 28 MB L1 cache and a 108 MB L2 cache keep data close to the processing units to reduce latency.
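As a rough back-of-the-envelope check on why that bandwidth matters, the sketch below estimates the size of an embedding table and the minimum time to stream it once from HBM2e at the quoted 1.23 TB/s. The workload (one million 512-dimensional float32 embeddings) is an assumption chosen for illustration, capacities are treated as decimal gigabytes, and real throughput will be below the theoretical peak.

```python
def embedding_table_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Size of a dense embedding table; float32 uses 4 bytes per value."""
    return num_vectors * dim * bytes_per_value

def min_read_time_ms(num_bytes: int, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on the time to read num_bytes once at peak bandwidth."""
    return num_bytes / bandwidth_bytes_per_s * 1000.0

HBM_BANDWIDTH = 1.23e12  # bytes per second, as quoted for the Max 1100
HBM_CAPACITY = 48e9      # 48 GB of HBM2e, treated as decimal gigabytes

# Assumed workload: one million 512-dimensional float32 embeddings.
size = embedding_table_bytes(num_vectors=1_000_000, dim=512)
print(f"table size: {size / 1e9:.3f} GB")
print(f"fits in HBM: {size <= HBM_CAPACITY}")
print(f"min full-scan time: {min_read_time_ms(size, HBM_BANDWIDTH):.2f} ms")
```

Under these assumptions the table takes about 2.05 GB, fits comfortably in HBM, and a single full pass over it is bounded below by roughly 1.7 ms.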

Connectivity

PCIe Gen 5: A fast x16 host link moves data between the CPU and GPU.

oneAPI Software Ecosystem: Intel Data Centre GPU Max Series GPUs integrate easily with the open, standards-based Intel oneAPI programming model. Developers can use Hugging Face Transformers, PyTorch, Intel Extension for PyTorch, and other frameworks that support Intel architecture to speed up AI pipelines without being locked into proprietary software.

What does this code do?

This code shows how to create a multimodal database that uses Chroma as the vector database for image and text embeddings. Text queries can then search the database for relevant images or metadata. The code also shows how to use Intel Extension for PyTorch (IPEX) to accelerate computation on Intel devices, including CPUs and XPUs.
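Chroma handles persistence and nearest-neighbour search for you (for example through `chromadb.PersistentClient` and a collection's `add` and `query` methods). To show conceptually what such a persistent collection does, here is a deliberately tiny stand-in that uses only the standard library. The `TinyCollection` class, its JSON file format, and its method names are hypothetical and are not Chroma's API; it also does a brute-force scan, whereas Chroma uses an approximate nearest-neighbour index (HNSW) to scale.

```python
import json
import math
import os
import tempfile

class TinyCollection:
    """Hypothetical toy vector store: embeddings and metadata persisted to a JSON file."""

    def __init__(self, path: str):
        self.path = path
        self.records: dict[str, dict] = {}
        if os.path.exists(path):  # reload previously persisted records
            with open(path, "r", encoding="utf-8") as f:
                self.records = json.load(f)

    def add(self, ids: list[str], embeddings: list[list[float]], metadatas: list[dict]) -> None:
        for item_id, emb, meta in zip(ids, embeddings, metadatas):
            self.records[item_id] = {"embedding": emb, "metadata": meta}
        with open(self.path, "w", encoding="utf-8") as f:  # persist after every write
            json.dump(self.records, f)

    def query(self, query_embedding: list[float], n_results: int = 1) -> list[tuple[str, float, dict]]:
        def cosine_distance(a: list[float], b: list[float]) -> float:
            dot = sum(x * y for x, y in zip(a, b))
            norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
            return 1.0 - dot / norms

        # Brute-force scan over every stored record.
        scored = [
            (item_id, cosine_distance(query_embedding, rec["embedding"]), rec["metadata"])
            for item_id, rec in self.records.items()
        ]
        scored.sort(key=lambda t: t[1])  # smallest distance = most similar
        return scored[:n_results]

# Usage: write, reopen from disk, and query with a (made-up) text embedding.
path = os.path.join(tempfile.mkdtemp(), "collection.json")
store = TinyCollection(path)
store.add(
    ids=["img1", "img2"],
    embeddings=[[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]],
    metadatas=[{"uri": "black_benz.jpg"}, {"uri": "beach_sunset.jpg"}],
)
reopened = TinyCollection(path)  # data survives because it was written to disk
print(reopened.query([0.8, 0.2, 0.1], n_results=1))
```

Reopening the collection from the same path is what "persistent" buys you: embeddings computed once do not have to be regenerated on the next run.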

Main components of the code:

OpenCLIP Embeddings: The code embeds text and images with OpenCLIP, an open-source implementation of CLIP, and stores the embeddings in a database for easy access. OpenCLIP was chosen for its solid benchmark performance and readily available pre-trained models.

Chroma Database: Chroma stores the embeddings in a persistent database and quickly returns the entries most similar to a text query. ChromaDB was chosen for its developer experience, Python-native API, and ease of setting up persistent multimodal collections.

Hardware Acceleration: A function checks whether an XPU (Intel GPU) is available for hardware acceleration. With IPEX, high-performance applications can use Intel hardware acceleration to speed up embedding generation and data processing.
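A minimal sketch of such an availability check follows, assuming a PyTorch build with XPU support (recent PyTorch releases expose `torch.xpu.is_available()`, and Intel Extension for PyTorch provides XPU support on older ones). It falls back to the CPU when PyTorch or an XPU is missing, so it runs anywhere. The function name `pick_device` is hypothetical, not taken from the original code.

```python
def pick_device() -> str:
    """Return "xpu" when an Intel GPU is usable from PyTorch, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed: nothing to accelerate
    try:
        # On some PyTorch builds, importing IPEX is what registers XPU support.
        import intel_extension_for_pytorch  # noqa: F401
    except ImportError:
        pass
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"

device = pick_device()
print(f"Using device: {device}")
# Models and tensors would then be moved with .to(device) before computing embeddings.
```

With IPEX installed, a model in eval mode is commonly moved to the chosen device and then passed through `intel_extension_for_pytorch.optimize(model)` for further speed-ups.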

Applications and Use Cases

This code is useful in the following scenarios:

Fast, Scalable Multimodal Data Storage: When you need to store and retrieve text, images, or both.

Image Search: E-commerce platforms, image search engines, and recommendation systems can query photographs using textual descriptions. For instance, searching for “Black colour Benz” returns images of similar cars.

Cross-modal Retrieval: Finding images that match a text query, or text that matches an image. This is common in caption-based photo search and visual question answering.

Recommendation Systems: Similarity-based searches can point users to films, products, and other content that matches their query.

AI-based Apps: Useful in machine learning pipelines for tasks such as preparing training data, extracting features, and preparing multimodal models.

Requirements:

torch (PyTorch) for deep learning.

intel_extension_for_pytorch to optimise PyTorch performance on Intel hardware.

chromadb to create and query a persistent multimodal vector database, and matplotlib to display images.

chromadb.utils' OpenCLIPEmbeddingFunction and ImageLoader for embedding extraction and image loading.
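Before running the pipeline, a quick way to confirm that the requirements above are importable is sketched below. The module names are assumptions about how each package is imported: `open_clip` comes from the `open_clip_torch` package, which Chroma's OpenCLIP embedding function relies on, and `PIL` (Pillow) is used for image loading.

```python
import importlib.util

# Import names for the requirements listed above (Pillow is imported as PIL).
REQUIRED_MODULES = [
    "torch",
    "intel_extension_for_pytorch",
    "chromadb",
    "open_clip",
    "PIL",
    "matplotlib",
]

def check_modules(names: list[str]) -> dict[str, bool]:
    """Map each top-level module name to whether it can be found, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}

for name, found in check_modules(REQUIRED_MODULES).items():
    print(f"{name:32s} {'ok' if found else 'MISSING'}")
```

Using `find_spec` rather than importing keeps the check fast and avoids initialising heavy libraries such as PyTorch just to see whether they are installed.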
