#Intel Tiber

Explore tagged Tumblr posts

Text

Chroma and OpenCLIP Reinvent Image Search With Intel Max

OpenCLIP Image search

Building High-Performance Image Search with Intel Max, Chroma, and OpenCLIP GPUs

After reviewing the Intel Data Centre GPU Max 1100 and Intel Tiber AI Cloud, Intel Liftoff mentors and AI developers prepared a field guide for lean, high-throughput LLM pipelines.

All development, testing, and benchmarking in this study used the Intel Tiber AI Cloud.

Intel Tiber AI Cloud was intended to give developers and AI enterprises scalable and economical access to Intel’s cutting-edge AI technology. This includes the latest Intel Xeon Scalable CPUs, Data Centre GPU Max Series, and Gaudi 2 (and 3) accelerators. Startups creating compute-intensive AI models can deploy Intel Tiber AI Cloud in a performance-optimized environment without a large hardware investment.

Advised AI startups to contact Intel Liftoff for AI Startups to learn more about Intel Data Centre GPU Max, Intel Gaudi accelerators, and Intel Tiber AI Cloud’s optimised environment.

Utilising resources, technology, and platforms like Intel Tiber AI Cloud.

AI-powered apps increasingly use text, audio, and image data. The article shows how to construct and query a multimodal database with text and images using Chroma and OpenCLIP embeddings.

These embeddings enable multimodal data comparison and retrieval. The project aims to build a GPU or XPU-accelerated system that can handle image data and query it using text-based search queries.

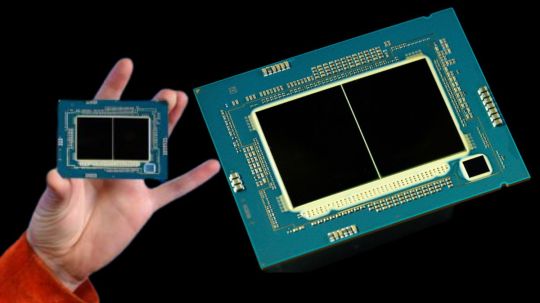

Advanced AI uses Intel Data Centre GPU Max 1100

The performance described in this study is attainable with powerful hardware like the Intel Data Centre GPU Max Series, specifically Intel Extension for PyTorch acceleration. Dedicated instances and the free Intel Tiber AI Cloud JupyterLab environment with the GPU (Max 1100):

The Xe-HPC Architecture:

GPU compute operations use 56 specialised Xe-cores. Intel XMX engines: Deep systolic arrays from 448 engines speed up dense matrix and vector operations in AI and deep learning models. XMX units are complemented with 448 vector engines for larger parallel computing workloads. 56 hardware-accelerated ray tracing units increase visualisation.

Memory hierarchy

48 GB of HBM2e delivers 1.23 TB/s of bandwidth, which is needed for complex models and large datasets like multimodal embeddings. Cache: A 28 MB L1 and 108 MB L2 cache keeps data near processing units to reduce latency.

Connectivity

PCIe Gen 5: Uses a fast x16 host link to transport data between the CPU and GPU. OneAPI Software Ecosystem: Integrating the open, standards-based Intel oneAPI programming architecture into Intel Data Centre Max Series GPUs is simple. HuggingFace Transformers, Pytorch, Intel Extension for Pytorch, and other Intel architecture-based frameworks allow developers to speed up AI pipelines without being bound into proprietary software.

This code’s purpose?

This code shows how to create a multimodal database using Chroma as the vector database for picture and text embeddings. It allows text queries to search the database for relevant photos or metadata. The code also shows how to utilise Intel Extension for PyTorch (IPEX) to accelerate calculations on Intel devices including CPUs and XPUs using Intel’s hardware acceleration.

This code’s main components:

It embeds text and images using OpenCLIP, a CLIP-based approach, and stores them in a database for easy access. OpenCLIP was chosen for its solid benchmark performance and easily available pre-trained models.

Chroma Database: Chroma can establish a permanent database with embeddings to swiftly return the most comparable text query results. ChromaDB was chosen for its developer experience, Python-native API, and ease of setting up persistent multimodal collections.

Function checks if XPU is available for hardware acceleration. High-performance applications benefit from Intel’s hardware acceleration with IPEX, which speeds up embedding generation and data processing.

Application and Use Cases

This code can be used whenever:

Fast, scalable multimodal data storage: You may need to store and retrieve text, images, or both.

Image Search: Textual descriptions can help e-commerce platforms, image search engines, and recommendation systems query photographs. For instance, searching for “Black colour Benz” will show similar cars.

Cross-modal Retrieval: Finding similar images using text or vice versa, or retrieving images from text. This is common in caption-based photo search and visual question answering.

The recommendation system: Similarity-based searches can lead consumers to films, products, and other content that matches their query.

AI-based apps: Perfect for machine learning pipelines including training data, feature extraction, and multimodal model preparation.

Conditions:

Deep learning torch.

Use intel_extension_for_pytorch for optimal PyTorch performance.

Utilise chromaDB for permanent multimodal vector database creation and querying, and matplotlib for image display.

Embedding extraction and image loading employ chromadb.utils’ OpenCLIP Embedding Function and Image Loader.

#technology#technews#govindhtech#news#technologynews#OpenCLIP#Intel Tiber AI Cloud#Intel Tiber#Intel Data Center GPU Max 1100#GPU Max 1100#Intel Data Center

0 notes

Text

0 notes

Text

Possible reasons for why Mannimarco does what he does in ESO (besides being impatient in waiting for Daggerfall to roll around)

In a world without Mannimarco as the eso villain, he'd just get kicked off Artaeum and be chillin in some cave somewhere for the next 800 years while waiting for Tiber Septim to do his thing. So what happened to make him change his mind about what is by all appearances a solid bet? Especially given that he seems to know his own future to a degree as shown in the book “ Where Were You When the Dragon Broke”. It’s possible he doesn’t recall the nature of Talos until after the Daggerfall break, since the book first appears in Morrowind, but it's more fun this way, so there. And also, this book appears in eso Cyrodiil in a moth priest temple, which may indicate that it was in fact published before Morrowind in-universe. So there too, maybe.

Possible scenarios, in no particular order:

Varen becoming a legit emperor threatens Mannimarco's godhood because if he unites Tamriel without Numidium or Zurin's mantella, Mannimarco doesn’t get a power boost this time around. So he sets up legit emperor Varen as his own personal betrayal of Talos to get his, with Tharn as the observer and the amulet of kings as the Numidium reactor.

Varen is set to become yet another short lived ruler-incumbent in a long line of them, but Tharn is a shrewd and patriotic mofo who unwittingly threatens to destroy Tamriel in his bid to force Akatosh to step up in picking a new favorite [via his original intent as eso's big bad], so Mannimarco backs Varen in an attempt to brute-force his own betrayal of Talos, with saving the world as a nice bonus.

Vanus is stepping up his persecution of necromancy and Mannimarco is feeling the pressure, so in an act of desperation he pushes his timetable for godhood forward by a few centuries in an attempt to solve the problem once and for all. I.e. he goes in early so neither Vanus nor any other earthly power can take necromancy away from him or his followers. Btw, that’s why [I headcanon] he wants to become a god even in Daggerfall. Necromancy in all its forms, sordid and otherwise, is his Thing, so he naturally wants it to live forever and he feels he is the right mer for the job. He's like Van Gogh, but with dead shit. (Or maybe more like the Marquis De Sade...)

Mannimarco doesn’t actually know how he becomes a god, only that Zurin Arctus and some bitch named Hijalti have something to do with it. He’s finding his way to the sky ass-first, and trying to swallow Bal is just his latest hare-brained attempt.

While visiting Orsinium on a necro-promo tour, Mannimarco discovers the titan and is floored by it. He has his cult raise the titan and manages to summon the sleeping spirit of the dragon itself for a chat. It rages at him about it's mistreatment and vows that their Father would eat Bal alive for this grave insult, which gives Mannimarco an idea. Vanus's increasingly hostile and systematic oppression of Mannimarco’s favorite pastime may or may not encourage the early and hasty implementation of this idea..

~

So, why does Mannimarco need Varen, et al specifically? He wants to find that rock and use it to pseudo-mantle Bal, but why does he need new emperor Varen and his three highly trained and loyal private bodyguards to do what he did? He could have conned literally anyone safer into insulting Akatosh enough/doing that mysterious ritual to open the gem. Tharn even does it with us. He says himself it’s the same ritual. So why those four?

Talk about high risk for little reward. He didn’t even need them to find the stupid thing. None of them knew where it was or had any kind of intel on it or else they wouldn’t have had to go on a quest to find it in the first place.

(You would think it would be the Blades' job to secure it after each emperors death and courier it to the location of a potential chosen one for inauguration purposes, but no, apparently not. Wtf are you guys for then???)

Given the above, the reenaction of the betrayal of Talos is the least stupid option I think, even if it doesn’t quite make sense that we can do the same with our five companions. Unless the entanomorph doesn’t need the "betrayal" to be a secret? Of course, we don’t become a god (that we know of any way lol), so maybe the ritual we do isn't exactly the same, or Mannimarco just really really likes to make his life harder than it needs to be.

My head....

#Elder Scrolls#mannimarco#vanus galerion#eso#tes lore#eso lore#motives#plot holes#stupid villains#why writers why???#calling all headcanons#for why mannimarco is so damn stupid in the mq#no i am not satisfied with because gameplay#our fave wormboy deserves better#and i wanna give it to him#heh#mine#eboriginal

9 notes

·

View notes

Text

SICON verse: ask SICON hour/APEX 99

The camera cut in as Kvella said “welcome to today’s ask SICON hour, today’s question is from Feqesh of the planet Tophet who asked, How do humans get into combat zones?”

The camera drone buzzed by as Kvella explained “the humans have 4 major methods of entering a combat zone.”

Footage of her capsule drop was cut in as she explained “the first and most famous is there orbital insertion into hostile territory, special capsulizes are fired from their ships that descend rapidly shedding outer layers in order to confuse enemy defense systems.”

The footage changed back to the alien reporter who said “the next is the calmest method; it is a simple drop ship landing into friendly or friendly controlled territory, in the same vain is the Tac Drop.”

It cut to a Human in his office the title card reading “Lieutenant William Ericson, SICON Federal Forces”

The Human explained “Tac, or tactical insertions are preformed when part of a planet is claimed or if we cannot for whatever reason insert from Orbit, the drop ship will rise to an altitude of about 150-200 meters,” the subtitles informed “about 80 council standard height units.”

Ericson scratched his head “every drop ship is equipped with what we call mole holes, small hatches that can be opened by the pilot, you sure you want to hear about this stuff it is very boring?” the Human asked Kvella.

Kvella answered from behind the camera “you promised to answer the publics questions LT,” Using the human’s nickname.

The human sighed “when we get to the drop point the Mole Holes open and we jump and fall out of the vessel”

Kvella stared as the human finished “our drop jets will kick in allowing us to land safely.”

Kvella nodded keeping her composer “and the 4th method?”

The human sighed “this one is rare but occasionally for one reason or another we need to insert into an environment where special transport is needed, such as an ocean.”

Kvella nodded “go on…”

The Human shifted “so the drop ship is loaded with the right transport, and dropped with personal for about 50 meters at high speeds….”

Kvella yelled “are you Humans insane!? How did any of you survive long enough to see the…” the camera went black.

It cut back in the Kvella saying “thank you so much, Feqesh from Tophet for your question on this Ask SICON hour.” An alarm blared in the background as the camera went black again.

Dagger squad Quarters:

T’Mai glared as Futuba looked at the piece of paper in front of them with equal intensity, Depoint glanced up from her book asking “what are you two stuck on?”

Futuba glared “I swear 46 down was built to destroy me!” she accused the cross word.

Francis stopped playing with his paddleball a gag gift from the squad as he asked “what is the clue?”

T’Mai read “Rome’s most famous aqueduct….. Five letters”

Ericson entered saying “Gear up we got a job.”

Francis yelled “officer on deck!”

Everyone snapped up as the human shook his head “as you were, briefing in an hour… also the Tiber River.”

Everyone relaxed and Futuba gasped “it fits!”

Briefing room:

The council official shifted as the squad entered, he let out a sigh of relief upon seeing two non-humans among their ranks.

Ericson saluted Captain Hernandez saying “Dagger squad reporting as ordered, Sir!”

Hernandez nodded “as you were dagger, this is Major Kieta of the council intelligence service.”

Ericson nodded at him “Sir, what can Dagger do for you?”

Kieta answered “we recently lost contact with the planet Nandry 4…18 Terran hours ago they said they were suffering the start of a plague…possibly Bug related, you vessel is the closest ship with the proper personal, you are to evaluate the danger to planet and the council as a whole and decide the proper course of action.”

Ericson frowned “course of action sir?”

Hernandez answered “depending on your we either send for a council medical team…or make the planet glow.”

Kvella asked T’Mai “make the planet glow?”

T’Mai answered “the humans mean bomb the planet.”

Ericson nodded “yes Sir’s…Depoint this is your show, I want a list of everything you need prepped and ready by the time we get into orbit!”

Depoint nodded “on it LT!”

Hernandez said “dismissed!”

Dropship:

The pilot frowned “lieutenant…”

Ericson got up moving to the cockpit asking “what is it pilot?”

The man said “I’m not picking anything up, no emissions ,no thermals…nothing on motion tracking…”

Ericson said “Intel says this plague started 18 hours ago…is the equipment malfunctioning?”

The pilot answered “no way to know sir…”

Ericson turned on his radio saying “this is Dagger 0-1 we can’t detect any signs of life on the planet, requesting an orbital scan.”

The voice returned “roger that Dagger 0-1 beginning orbital Scan….Dagger 0-1 reading are confirmed there is no detectable signs of life on the planet.”

Ericson turned to the pilot “forget the meet up coordinates find us ground zero and prep a tac drop.”

The pilot nodded “roger sir” pulling up and increasing speed as the ships interior lights changed from blue to red.

Ericson walked into the cabin calling out “Tac drop, let’s move!”

The team got out of the seats and headed for the mole holes, T’Mai asked “what’s the issue LT?”

Ericson shook his head “unknown but we are getting ready for the worst…helmets on assume airborne!”

The squad chanted “sir yes sir!”

The pilot called “thirty seconds!” the mole holes opened and the squad jumped through them falling into a deserted city.

Kvella deployed her drone midair to get a wide shot of the silent city, the squad landed and Francis pulled out his gun saying “well if they are people around they should have seen that…”

Futuba pulled down her helmets visor saying “power grid is still online…this city seems to be in perfect working order…”

Ericson looked around the empty square “but everyone is gone…it’s like the east wind swept through here.”

Depoint said “sir they were not kidding about some kind of disease…it is everywhere effecting everything.”

Kvella brought the drone in asking “the east wind?”

Francis sighed “drone off in combat zones tourist…but it is an old earth legend a force that would sweep away children that misbehave in their sleep never to be heard from again…it’s unstoppable, undetectable.”

T’Mai looked around asking “and this is a story you tell your children.”

Depoint held up as scanner “a lot of earth legends are like that…perk of growing up on a death world…I think I got something LT.”

Ericson asked “what you got Doc?”

Depoint frowned “undeterminable biomass…”

Francis asked “alive…a survivor?”

Depoint shook her head “no detectable life signs”

Ericson said “but maybe some answers, let’s check it out…Futuba ideas as to why we could not pick it up in the ship?”

Futuba shrugged “our scanners are designed to pick up communities or community sized things if it is small enough and in the right place our systems may not have been sensitive enough to pick it up…”

T’Mai said “and if we couldn’t maybe some else could not either…?”

Ericson nodded “an entire planet population doesn’t just disappear…Depoint lead the way.”

Dagger squad advanced through the deserted town before stopping before a tunnel entrance vaguely similar to a subway entrance. Francis muttered “its defendable LT…”

Futuba cut in “correction was defended…I’m picking up makeshift barricades and other obstacles…”

Ericson squatted looking into the dark tunnel “so something was happening and someone clearly didn’t agree with it…alright combat wedge nice and careful.”

The team went underground there suits lights turning on with a click. They tore down the barricades and saw a dead local…the creature was small roughly similar to a beaver of earth. It’s eyes had a green foam around them.

Ericson held the squad back saying “Futuba…any booby traps?”

Futuba scanned the room before answering “no explosives, no chemical agents, no known weapons of any kind.”

Ericson nodded “your show Doc.”

Depoint nodded carefully scanning the body saying “this one was killed by a plague alright…but our scanner is kicking back the hallmarks of a chemical weapon.”

T’Mai blinked “what?”

Francis frowned “bugs don’t use chemical weapons…or anything artificial.”

Depoint nodded “yep and I’m also picking up another oddity…a trademark.”

Ericson frowned “a trademark?! Bugs don’t have businesses”

Kvella pointed out as her drone buzzed by “so it wasn’t the bugs…”

The room went quiet as implication set in; Futuba said “council ships have similar scanning capabilities to our own.”

Depoint sighed “this creature expired close to 64 days ago.”

T’Mai said “why would the council test chemical weapons on its own people and then send a ship to investigate”

Ericson sighed “it’s a cover up….Kvella send your drone out get footage of everything.” Kvella nodded her drone sailing out of the tunnel.

Francis asked “what are you thinking LT?”

Ericson sighed “I’m thinking the council doesn’t have the stomach for this war…so they hired a company to develop a chemical weapon that will wipe out the bugs… the needed a testing ground and chose this city because it was secluded and the locals could contain it easily…then it got out and whipped out the entire planet…so our corp friends got scared, destroyed the bodies from orbit missing our friend here and then contacting the council…they sent us figuring we would write it off as an odd cosmic anomaly and that would be that.”

The squad stayed silent they knew the LT had most likely hit the nail on the head, Depoint cleared her throat “I have identified the Copyright LT…”

Ericson sighed “tag the remains as a bio hazard and get the ship down here.”

Watson:

As soon as the squad got back aboard they went through quarantine procedures, then Ericson met Hernández and they disappeared into the communication center as the squad returned to their quarters.

Com Center:

Hernández sighed “Will I’m sorry…”

Ericson asked “I know that tone what is it Hailey?”

Hernández sighed “that council intel guy aboard caught wind of what we found and put in a call to on high… the council stepped in and have classified this entire thing, cancelled the investigation ordered the footage destroyed and the silence of all involved…”

Ericson yelled “they are just covering it up!? What about SICON?”

Hernández sighed “command is as appalled as we are but this didn’t affect any humans and as such the council is blocking all attempts to make these experiments public.”

Ericson sighed “you’re joking…”

Hernández sighed “it gets worse…” and she pulled up a propaganda piece that is now broadcasting throughout council space.

It showed footage of the bugs doing strange things while a voice narrates “these creatures have been attacking innocent civilians for far too long!”

Footage of the Sorak 9 airstrike was shown as the narrator continued “and while the brave forces of the council try to repel these horrible invaders it has been an uphill battle!”

It cut to a laboratory looking building filled with a Varity of council’s species as Narrator kept going “but thanks to new invention’s by Vesta Corp soon the war will come to a safe end! Introducing Apex 99!”

It showed stock footage of old timey humans as they continue “our friends in the strategically integrated collation of Nations developed a weapon a long time ago they Called Anthrax! This powerful chemical was not very useful however until Vesta Corp got involved and using it as a basis managed to develop the bug killing weapon that will save the galaxy! Good work team!” it showed footage of a scientist getting a result on a computer screen and cheering.

Before the footage ended and a card came up saying “want to defend the galaxy?! Join your council member armed forces today!”

The piece ended and Ericson asked “so they commit war crimes and probable genocide and get a boatload of cash and the love form the public!?”

Hernández nodded “there stock price has already tripled.”

Ericson paused asking “wait…how exactly did this war start?”

Hernández answered “the bug invaded Sorak 9…remember?”

Ericson nodded “yes but why did they invade?”

Hernández blinked “I don’t know…we just went to Defcon 1 and the council told us the bugs declared war…”

Ericson sighed “maybe this whole thing is rubbing me the wrong way…but something is up…I can feel it.”

Hernandez nodded “yea I feel it to.”

Editing room:

Kvella smiled at the camera trying to forget her boss telling her to delete the footage of that planet and never talk about it again as she said “welcome to this week’s ask SICON hour! This week’s question is from L’Bec of the Kalber….”

Dagger squad quarters:

The squad sat aimlessly trying not to think about the entire planet that was wiped out, Futuba sighed shifting from side to side as she half-heartedly tried to finish the cross word with T’Mai who himself was barely hiding his disgust with the council .

Depoint had a medical book in her hand but had been reading the same page for the last 3 hours her mind wondering back to devastation planet side.

Francis sat on his bunk staring into space as he thought about all those people…after a few minutes Futuba spook up quietly “we are good guys…right?”

The squad stayed quiet not having an answer.

do you think the council is evil!? why do you think the bug started? are our heroes on the right side? let me know what you think! :D

35 notes

·

View notes

Text

Tiberian sun mods best

There are some codes that you can use, but it will be something like this: Then you have to decide what AI will do now. XX is an unused number for the script type and SCRIPT1 is the internal name of your script type. To make new Script Types, you need to open your ai.ini if you don`t have Firestorm or your aifs.ini if you have Firestorm and go to the ScriptTypes List and write your new script type: What does it target for? Will the units deploy? And other stuff. Script Types are the way AI behaves when attacks. If you wanna post this tutorial anywhere, please ask me thorugh email ( ). Note that it was also originally designed for TS and Red Alert 2, but when it came for EE, it become TS only. Since EE is no more, it has been placed here in PPM. This tutorial was originally written to the old Intel Website (from PPM) and to Entertainment Explosion. I am unable to test everything, however, most of info over there comes from Final Alert 2, Deezire's Editing Guide and also from testing and comparison between script types. One thing is certain Tiberium is changing our planet and if not combated it will create a world completely alien to us.The whole veracity of the information below cannot be guaranteed. It started with blossom trees and viceroids, but since then other creatures such as the tiberian fiend and veins have been discovered with reports of other Tiberium based creatures flowing in. Since the First Tiberium War the more mutagenic properties of Tiberium has become more apparent. These crystals are rich in precious minerals and are available at a minimum of mining expense. Tiberium leeches minerals from the soil causing the formation of Tiberium crystals. It spreads through unknown means, but has proven to be very useful. Tiberium, named after the Tiber River where it was first discovered, is both a crystal and a lifeform that arrived on Earth in a meteorite. So begins the Second Tiberium War! The Factions Even though it sounds ridicules there is no doubt who has returned Nod's true leader. Hassan is cornered and captured, he is brought to the masses and in his last moments gets the shock of his life "You can't kill the Messiah!"Īfter this incident, GDI outposts are attacked everywhere and from all directions. Slavik becomes a hero of the Brotherhood, and when people discover that he is still alive they rise up against Hassan. Despite this Hassan broadcasts false messages about Slavik's supposed death, but this does not go as planned for Hassan. Anton Slavik, the leader of this group, has been attempted executed by Hassan for being a "GDI spy" but little do they know, even among Hassan's most elite soldiers there are people who oppose his rule and so Slavik is freed. Tiberium is not the GDI's only problem followers of Kane still exist and they will stop at nothing until they have revealed the truth about Hassan and his followers. Over the last three decades it has become apparent that Tiberium is terraforming the planet by consuming Earthborne ecosystems and spawning its own alien ecosystems. With the Brotherhood divided and scattered the GDI have put their focus elsewhere containing the threat of Tiberium. Nod has been corrupted even through its highest echelons a GDI controlled puppet named Hassan is in charge. Nod fights itself brother against brother. Kane is presumed dead and the Brotherhood is in disarray. About 35 years have passed since the end of Tiberian Dawn.

0 notes

Text

0 notes

Text

Intel And Inflection AI Vision For Future Generations Of AI

What is Inflection AI?

An AI system called Inflection for Enterprise was created to help companies incorporate a personalized, sympathetic AI “co-worker” into their daily operations. It enables businesses to take use of AI capabilities without the need for complex infrastructure or specialized knowledge with to Intel’s Gaudi and Tiber AI Cloud technologies.

Availability of Inflection for Enterprise

Intel and Inflection AI established a partnership to accelerate AI adoption and impact for developers and businesses alike. In order to provide empathetic, conversational, and employee-friendly AI capabilities as well as the control, customization, and scalability needed for intricate, large-scale deployments, Inflection AI is launching Inflection for Intel Gaudi and Intel Tiber AI Cloud power Enterprise, an industry-first enterprise-grade AI system. This system is now accessible via the AI Cloud and will be shipped to clients in Q1 2025 as the first AI appliance in the industry powered by Gaudi 3.

Its are establishing a new benchmark with AI solutions that provide rapid, significant outcomes thanks to our strategic partnership with Inflection AI. For businesses of all sizes, using GenAI is now feasible, economical, and effective because to Intel Gaudi 3 solutions’ competitive performance per watt and compatibility for open-source models and tools.

It usually takes a lot of infrastructure to build an AI system, including cooperation between engineers, data scientists, and application developers as well as lengthy model building and training. Built on top of Inflection 3.0, Inflection for Enterprise gives business clients access to a complete AI solution that equips their employees with a virtual AI colleague that has been specially educated on their particular company’s data, rules, and culture.

Through the Intel Gaudi 3 AI accelerator, which provides industry-leading price/performance for effective, high-impact outcomes, the cooperation with Intel delivers unparalleled performance. Intel’s technology guarantees scalability and flexibility for significant outcomes.

Time to market is further accelerated by the AI Cloud, which simplifies the development, testing, and deployment of AI applications in a single environment. Given the advantages and worth of this service, Intel and Inflection AI are also working together to implement Inflection for Enterprise within Intel in the hopes that Intel will early adopter of the product.

How it Works

Through alignment with the company’s tone, purpose, and distinctive product, service, and operational information, Inflection AI optimizes its model to be local to each business, accelerating user acceptance and enhancing the utility of use cases. With affordability, performance, and security/compliance benefits, Inflection 3.0 gives business clients a quicker time-to-value via generative AI experiences that are easy for employees to use.

Removing Barriers to GenAI: Eliminating Obstacles to GenAI Inflection for Enterprise, which is based on AI Cloud, offers application templates that enable companies to grow rapidly without incurring capital costs and avoiding hardware testing and model construction. Customers will also be able to buy Inflection for Enterprise, a full turnkey AI appliance, in Q1 2025. Customers of this appliance may take advantage of up to two times better pricing performance and 128GB of high-bandwidth memory capacity by using Gaudi 3, which further optimizes their GenAI performance when compared to competing products.

Optimized Price/Performance: Inflection 3.0 will be powered by Gaudi 3, with instances on-premises or in the cloud powered by AI Cloud. Previously, Inflection AI‘s Pi consumer application ran on Nvidia GPUs. This reduces the overall cost of ownership in addition to the deployment time.

Fine-Tuned for Enterprises: Optimized for Businesses Inflection for Enterprise models are tailored to the culture and methods of operation of each company, using the fine-tuning and reinforcement learning from human feedback (RLHF) skills that drove Inflection AI’s Pi. Inflection AI, which is based on data and insights from an organization’s history, policies, content, tone, products, and operational information, promotes productivity and alignment across the whole enterprise.

Improved Security and Ownership: Inflection for Enterprise enables businesses to fully own their intelligence. Customized models are exclusive to the client and are never distributed to other parties. Furthermore, clients have the option to host and operate the model on the cloud, on-premises, or hybrid architecture of their choice.

Future plans for Inflection AI

Using the stable and user-focused Inflection 3.0 framework, Inflection AI and Intel will also make it possible for developers to create corporate apps for Inflection for corporate, producing essential software solutions.

Read more on govindhtech.com

#Intel#InflectionAIVision#FutureGenerationsAI#IntelGaudi#IntelTiberAICloud#generativeAI#ai#IntelGaudi3AIaccelerator#NvidiaGPU#gpu#nvidia#InflectionAI#technology#technews#news#govindhtech

0 notes

Text

0 notes

Text

Intel Launches Intel Tiber Edge Platform New Version 24.08

The release of the Intel Tiber Edge Platform version 24.08 is now available.

In this article we will discuss how the Intel Tiber Edge Platform Can transform your AI and edge computing projects. The Most Recent Innovations to the state-of-the-art features and innovations in the most recent Edge Platform version. How to get Started with Edge and AI Solutions to use the Intel Tiber Edge Platform to start developing strong edge and AI solutions.

About Intel Tiber Edge Platform

Businesses can create, implement, protect, and oversee Edge and AI solutions from pipeline to production with ease and scalability with the Intel Tiber Edge Platform. These three fundamental layers make up the modular platform, which is supported by Intel’s cutting-edge, industry-leading foundational hardware:

Industry Solutions: AI-powered edge endpoints tailored to various sectors and applications.

AI & Apps: Edge-specific AI and app development, deployment, and management services.

Edge infrastructure: Orchestration, fleet/infrastructure management, and edge node software.

New Features

Advanced AI model enhancements for the edge are included in this version, along with streamlined device onboarding and management across the platform’s core layers.

Here are some of the main features of the latest software version, 24.08, among other updates:

Industry Solutions

New Features in Intel SceneScape:

Various camera feeds may now be processed to create bigger aggregated scenes for insights that can be used immediately.

Identify items like cars, cargo containers, or pallets by using the recently introduced 3D bounding boxes to detect and track things.

Today, object tracking technology can recognize and accurately re-identify items or people across numerous camera frames, even after they have moved and come back into view.

Intel Edge Insights System for Improved Edge AI Model Management:

Using a model registry, you may upload, retrieve, and update Intel Geti vision models that are installed in your edge devices.

What is Intel Geti Software?

Intel’s latest software can create computer vision models with less data and in a quarter of the time. Teams can now create unique AI models at scale with this software, which streamlines time-consuming data labeling, model training, and optimization processes throughout the AI model creation process.

Using Intel to Scale Cutting-Edge AI Solutions

The Intel Tiber Edge Platform, a system created to address edge concerns across sectors, includes the Intel Geti software. With the help of an unparalleled partner ecosystem, the platform gives businesses the ability to create, implement, manage, grow, and operate edge applications with ease akin to that of the cloud.

Offering infrastructure software, AI & applications, and industrial solutions, the Edge Platform is a modular system that can be tailored to meet the demands of its clients. An essential part of the AI and Applications area, Intel Geti software is used to create vision AI models.

AI and Applications

Optimizations for Large Language Models (LLM)

Decreased latency and enhanced LLM performance for Intel discrete and built-in GPUs.

With only a few lines of code, the new OpenVINO GenAI package provides a simpler API for text creation utilizing huge language models.

When providing LLMs to several concurrent users, OpenVINO Model Server’s Continuous Batching, Paged Attention, and OpenAI-compatible API allow for greater throughput for parallel inferencing.

Infrastructure Software

Simplified Management of the Edge:

By creating hierarchical relationships such as regions, locations, and sites you can easily manage your edge nodes.

Apply certain OS and security settings to many edge nodes at once.

Streamlined Onboarding for Brownfield and Local Hardware:

For the first configuration, use USB-based secure manual edge hardware onboarding. Perfect for gadgets that don’t have HTTPS Boot.

Accessibility

In Q1 2024, the Intel Tiber Edge Platform was introduced globally and is now widely accessible.

With new versions, the platform will keep developing to support partners and businesses deploying Edge and AI applications. Please get in touch with your Intel representative to explore using the platform in your company or to have access to its extensive technical documentation.

Read more on govindhtech.com

#IntelLaunches#IntelTiberEdgePlatform#intel#NewVersion2408#AboutIntelTiberEdgePlatform#AImodel#OpenVINO#OpenAI#NewFeatures#InfrastructureSoftware#AIApplications#technology#technews#news#govindhtech

0 notes

Text

Intel Tiber AI Cloud: Intel Tiber Cloud Services Portfolio

Introducing Intel Tiber AI Cloud

Today Intel excited to announce the rebranding of Intel Tiber AI Cloud, the company’s rapidly expanding cloud offering. It is aimed to providing large-scale access to Intel software and computation for AI installations, support for the developer community, and benchmark testing. It is a component of the Intel Tiber Cloud Services portfolio.

What is Intel Tiber AI Cloud?

Intel’s new cloud service, the Intel Tiber AI Cloud, is designed to accommodate massive AI workloads using its Gaudi 3 CPUs. Rather than going up against AWS or other cloud giants directly, this platform is meant to serve business clients with an emphasis on AI computing. Tiber AI Cloud is particularly designed for complicated AI activities and offers scalability and customization for enterprises by using Intel’s cutting-edge AI processors.

Based on the foundation of Intel Tiber Developer Cloud, Intel Tiber AI Cloud represents the rising focus on large-scale AI implementations with more paying clients and AI partners, all the while expanding the developer community and open-source collaborations.

New Developments Of Intel Tiber AI Cloud

It have added a number of new features to Intel Tiber AI Cloud in the last several months:

New computing options

Nous began building up Intel’s most recent AI accelerator platform, Intel Tiber AI Cloud, in conjunction with last month’s launch of Intel Gaudi 3. We anticipate broad availability later this year. Furthermore, pre-release Dev Kits for the AI PC using the new Intel Core Ultra processors and compute instances with the new 128-core Intel Xeon 6 CPU are now accessible for tech assessment via the Preview catalog in the Intel Tiber AI Cloud.

Open source model and framework integrations

With the most recent PyTorch 2.4 release, Intel revealed support for Intel GPUs. Developers may now test the most recent Pytorch versions supported by Intel on laptops via the Learning catalog.

The most recent version of Meta’s Llama mode collection, Llama 3.2, has been deployed in Intel Tiber AI Cloud and has undergone successful testing with Intel Xeon processors, Intel Gaudi AI accelerator, and AI PCs equipped with Intel Core Ultra CPUs.

Increased learning resources

New notebooks introduced to the Learning library allow you to use Gaudi AI accelerators. AI engineers and data scientists may use these notebooks to run interactive shell commands on Gaudi accelerators. Furthermore, the Intel cloud team collaborated with Prediction Guard and DeepLearning.ai to provide a brand-new, well-liked LLM course powered by Gaudi 2 computing.

Partnerships

It revealed Intel’s collaboration with Seekr, a rapidly expanding AI startup, at the Intel Vision 2024 conference. To assist businesses overcome the difficulties of bias and inaccuracy in their AI applications, Seekr is now training foundation models and LLMs on one of the latest super-compute Gaudi clusters in Intel’s AI Cloud, which has over 1,024 accelerators. Its dependable artificial intelligence platform, SeekrFlow, is now accessible via Intel Tiber AI Cloud‘s Software Catalog.

How it Works

Let’s take a closer look at the components that make up Intel’s AI cloud offering: systems, software, silicon, and services.

Silicon

For the newest Intel CPUs, GPUs, and AI accelerators, Intel Tiber AI Cloud is first in line and Customer Zero, offering direct support lines into Intel engineers. Because of this, Intel’s AI cloud is able to provide early access to inexpensive, perhaps non-generally accessible access to the newest Intel hardware and software.

Systems

The size and performance needs of AI workloads are growing, which causes the demands on computational infrastructure to change quickly.

To implement AI workloads, Intel Tiber AI Cloud provides a range of computing solutions, including as virtual machines, dedicated systems, and containers. Furthermore, it have recently implemented high-speed Ethernet networking, compact and super-compute size Intel Gaudi clusters for training foundation models.

Program

Intel actively participates in open source initiatives like Pytorch and UXL Foundation and supports the expanding open software ecosystem for artificial intelligence. Users may access the newest AI models and frameworks in Intel Tiber AI Cloud with to dedicated Intel cloud computing that is assigned to help open source projects.

Services

The way in which developers and enterprise customers use computing AI services varies according on use cases, technical proficiency, and implementation specifications. To satisfy these various access needs, Intel Tiber AI Cloud provides server CLI access, curated interactive access via notebooks, and serverless API access.

Why It Is Important

One of the main components of Intel’s plan to level the playing field and eliminate obstacles to AI adoption for developers, enterprises, and startups is the Intel Tiber AI Cloud:

Cost performance: For the best AI implementations, it provides early access to the newest Intel computing at a competitive cost performance.

Scale: From virtual machines (VMs) and single nodes to massive super-computing Gaudi clusters, it provides a scale-out computing platform for expanding AI deployments.

Open source: It ensures mobility and prevents vendor lock-in by providing access to open source models, frameworks, and accelerator software supported by Intel.

Read more on Govindhtech.com

#IntelTiberAICloud#Gaudi3#AICloud#IntelTiberDeveloperCloud#IntelCoreUltraprocessors#Pytorchversions#GaudiAIaccelerators#virtualmachines#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

Intel Tiber Developer Cloud, Text- to-Image Stable Diffusion

Check Out GenAI for Text-to-Image with a Stable Diffusion Intel Tiber Developer Cloud Workshop.

What is Intel Tiber Developer Cloud?

With access to state-of-the-art Intel hardware and software solutions, developers, AI/ML researchers, ecosystem partners, AI startups, and enterprise customers can build, test, run, and optimize AI and High-Performance Computing applications at a low cost and overhead thanks to the Intel Tiber Developer Cloud, a cloud-based platform. With access to AI-optimized software like oneAPI, the Intel Tiber Developer Cloud offers developers a simple way to create with small or large workloads on Intel CPUs, GPUs, and the AI PC.

- Advertisement -

Developers and enterprise clients have the option to use free shared workspaces and Jupyter notebooks to explore the possibilities of the platform and hardware and discover what Intel can accomplish.

Text-to-Image

This article will guide you through a workshop that uses the Stable Diffusion model practically to produce visuals in response to a written challenge. You will discover how to conduct inference using the Stable Diffusion text-to-image generation model using PyTorch and Intel Gaudi AI Accelerators. Additionally, you will see how the Intel Tiber Developer Cloud can assist you in creating and implementing generative AI workloads.

Text To Image AI Generator

AI Generation and Steady Diffusion

Industry-wide, generative artificial intelligence (GenAI) is quickly taking off, revolutionizing content creation and offering fresh approaches to problem-solving and creative expression. One prominent GenAI application is text-to-image generation, which uses an understanding of the context and meaning of a user-provided description to generate images based on text prompts. To learn correlations between words and visual attributes, the model is trained on massive datasets of photos linked with associated textual descriptions.

A well-liked GenAI deep learning model called Stable Diffusion uses text-to-image synthesis to produce images. Diffusion models work by progressively transforming random noise into a visually significant result. Due to its efficiency, scalability, and open-source nature, stable diffusion is widely used in a variety of creative applications.

- Advertisement -

The Stable Diffusion model in this training is run using PyTorch and the Intel Gaudi AI Accelerator. The Intel Extension for PyTorch, which maximizes deep learning training and inference performance on Intel CPUs for a variety of applications, including large language models (LLMs) and Generative AI (GenAI), is another option for GPU support and improved performance.

Stable Diffusion

To access the Training page once on the platform, click the Menu icon in the upper left corner.

The Intel Tiber Developer Cloud‘s Training website features a number of JupyterLab workshops that you may try out, including as those in AI, AI with Intel Gaudi 2 Accelerators, C++ SYCL, Gen AI, and the Rendering Toolkit.

Workshop on Inference Using Stable Diffusion

Thwy will look at the Inference with Stable Diffusion v2.1 workshop and browse to the AI with Intel Gaudi 2 Accelerator course in this tutorial.

Make that Python 3 (ipykernel) is selected in the upper right corner of the Jupyter notebook training window once it launches. To see an example of inference using stable diffusion and creating an image from your prompt, run the cells and adhere to the notebook’s instructions. An expanded description of the procedures listed in the training notebook can be found below.

Note: the Jupyter notebook contains the complete code; the cells shown here are merely for reference and lack important lines that are necessary for proper operation.

Configuring the Environment

Installing all the Python package prerequisites and cloning the Habana Model-References repository branch to this docker will come first. Additionally, They are going to download the Hugging Face model checkpoint.%cd ~/Gaudi-tutorials/PyTorch/Single_card_tutorials !git clone -b 1.15.1 https://github.com/habanaai/Model-References %cd Model-References/PyTorch/generative_models/stable-diffusion-v-2-1 !pip install -q -r requirements.txt !wget https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/ v2-1_512-ema-pruned.ckpt

Executing the Inference

prompt = input("Enter a prompt for image generation: ")

The prompt field is created by the aforementioned line of code, from which the model generates the image. To generate an image, you can enter any text; in this tutorial, for instance, they’ll use the prompt “cat wearing a hat.”cmd = f'python3 scripts/txt2img.py --prompt "{prompt}" 1 --ckpt v2-1_512-ema-pruned.ckpt \ --config configs/stable-diffusion/v2-inference.yaml \ --H 512 --W 512 \ --n_samples 1 \ --n_iter 2 --steps 35 \ --k_sampler dpmpp_2m \ --use_hpu_graph'

print(cmd) import os os.system(cmd)

Examining the Outcomes

Stable Diffusion will be used to produce their image, and Intel can verify the outcome. To view the created image, you can either run the cells in the notebook or navigate to the output folder using the File Browser on the left-hand panel:

/Gaudi-tutorials/PyTorch/Single_card_tutorials/Model-References /PyTorch/generative_models/stable-diffusion-v-2-1/outputs/txt2img-samples/Image Credit To Intel

Once you locate the outputs folder and locate your image, grid-0000.png, you may examine the resulting image. This is the image that resulted from the prompt in this tutorial:

You will have effectively been introduced to the capabilities of GenAI and Stable Diffusion on Intel Gaudi AI Accelerators, including PyTorch, model inference, and quick engineering, after completing the tasks in the notebook.

Read more on govindhtech.com

#IntelTiberDeveloper#TexttoImage#StableDiffusion#IntelCPU#aipc#IntelTiberDeveloperCloud#aiml#IntelGaudiAI#Workshop#genai#Python#GenerativeAI#IntelGaudi#generativeartificialintelligence#technology#technews#news#GenAI#ai#govindhtech

0 notes

Text

Intel Webinar: Experienced Assistance To Implement LLMs

How Prediction Guard Uses Intel Gaudi 2 AI Accelerators to Provide Reliable AI.

Intel webinar

Large language models (LLMs) and generative AI are two areas where the growing use of open-source tools and software at the corporate level makes it necessary to talk about the key tactics and technologies needed to build safe, scalable, and effective LLMs for business applications. In this Intel webinar, Rahul Unnikrishnan Nair, Engineering Lead at Intel Liftoff for Startups, and Daniel Whitenack, Ph.D., creator of Prediction Guard, lead us through the important topics of implementing LLMs utilizing open models, protecting data privacy, and preserving high accuracy.

- Advertisement -

Intel AI webinar

Important Conditions for Enterprise LLM Adoption

Three essential elements are identified in the Intel webinar for an enterprise LLM adoption to be successful: using open models, protecting data privacy, and retaining high accuracy. Enterprises may have more control and customization using open models like Mistral and Llama 3, which allow them to obtain model weights and access inference code. In contrast, closed models lack insight into underlying processes and are accessible via APIs.

Businesses that handle sensitive data like PHI and PII must secure data privacy. HIPAA compliance is typically essential in these scenarios. High accuracy is also crucial, necessitating strong procedures to compare the LLM outputs with ground truth data in order to reduce problems like as hallucinations, in which the output generates erroneous or misleading information even while it is grammatically and coherently accurate.

Obstacles in Closed Models

Closed models like those offered by Cohere and OpenAI have a number of drawbacks. Businesses may be biased and make mistakes because they are unable to observe how their inputs and outputs are handled. In the absence of transparency, consumers could experience latency variations and moderation failures without knowing why they occur. Prompt injection attacks can provide serious security threats because they may use closed models to expose confidential information. These problems highlight how crucial it is to use open models in corporate applications.

Prediction Guard

The Method Used by Prediction Guard

The platform from Prediction Guard tackles these issues by combining performance enhancements, strong security measures, and safe hosting. To ensure security, models are hosted in private settings inside the Intel Tiber Developer Cloud. To improve speed and save costs, Intel Gaudi 2 AI accelerators are used. Before PII reaches the LLM, input filters are employed to disguise or substitute it and prevent prompt injections. By comparing LLM outputs to ground truth data, output validators guarantee the factual consistency of the data.

- Advertisement -

During the optimization phase, which lasted from September 2023 to April 2024, load balancing over many Gaudi 2 machines, improving prompt processing performance by bucketing and padding similar-sized prompts, and switching to the TGI Gaudi framework for easier model server administration were all done.

Prediction Guard moved to Kubernetes-based architecture in Intel Tiber Developer Cloud during the current growth phase (April 2024 to the present), merging CPU and Gaudi node groups. Implemented include deployment automation, performance and uptime monitoring, and integration with Cloudflare for DDoS protection and CDN services.

Performance and Financial Gains

There were notable gains when switching to Gaudi 2. Compared to earlier GPU systems, Prediction Guard accomplished a 10x decrease in computation costs and a 2x gain in throughput for corporate applications. Prediction Guard’s sub-200ms time-to-first-token latency reduction puts it at the top of the industry performance rankings. These advantages were obtained without performance loss, demonstrating Gaudi 2’s scalability and cost-effectiveness.

Technical Analysis and Suggestions

The presenters stressed that having access to an LLM API alone is not enough for a strong corporate AI solution. Thorough validation against ground truth data is necessary to guarantee the outputs’ correctness and reliability. Data management is a crucial factor in AI system design as integrating sensitive data requires robust privacy and security safeguards. Prediction Guard offers other developers a blueprint for optimizing Gaudi 2 consumption via a staged approach. The secret to a successful deployment is to validate core functionality first, then gradually scale and optimize depending on performance data and user input.

Additional Information on Technical Execution

In order to optimize memory and compute utilization, handling static forms during the first migration phase required setting up model servers to manage varying prompt lengths by padding them to specified sizes. By processing a window of requests in bulk via dynamic batching, the system was able to increase throughput and decrease delay.

In order to properly handle traffic and prevent bottlenecks, load balancing among numerous Gaudi 2 servers was deployed during the optimization process. Performance was further improved by streamlining the processing of input prompts by grouping them into buckets according to size and padding within each bucket. Changing to the TGI Gaudi framework made managing model servers easier.

Scalable and robust deployment was made possible during the scaling phase by the implementation of an Intel Kubernetes Service (IKS) cluster that integrates CPU and Gaudi node groups. High availability and performance were guaranteed by automating deployment procedures and putting monitoring systems in place. Model serving efficiency was maximized by setting up inference parameters and controlling key-value caches.

Useful Implementation Advice

It is advised that developers and businesses wishing to use comparable AI solutions begin with open models in order to maintain control and customization options. It is crucial to make sure that sensitive data is handled safely and in accordance with applicable regulations. Successful deployment also requires taking a staged approach to optimization, beginning with fundamental features and progressively improving performance depending on measurements and feedback. Finally, optimizing and integrating processes may be streamlined by using frameworks like TGI Gaudi and Optimum Habana.

In summary

Webinar Intel

Prediction Guard’s all-encompassing strategy, developed in partnership with Intel, exemplifies how businesses may implement scalable, effective, and safe AI solutions. Prediction Guard offers a strong foundation for corporate AI adoption by using Intel Gaudi 2 and Intel Tiber Developer Cloud to handle important issues related to control, personalization, data protection, and accuracy. The Intel webinar‘s technical insights and useful suggestions provide developers and businesses with invaluable direction for negotiating the challenges associated with LLM adoption.

Read more on govindhtech.com

#IntelWebinar#ExperiencedAssistance#Llama3#ImplementLLM#Largelanguagemodels#IntelLiftoff#IntelTiberDeveloperCloud#PredictionGuard#IntelGaudi2#KubernetesService#AIsolutions#dataprotection#technology#technews#news#govindhtech

0 notes

Text

The Veda App Resolved Asia’s Challenges Regarding Education

Asia’s Educational Challenges Solved by the Veda App.

As the school year draws to a close, the Veda app will still be beneficial to over a million kids in Brunei, Japan, and hundreds of schools in Nepal.

Developed by Intel Liftoff program member InGrails Software, the Veda app is a cloud-based all-in-one school and college administration solution that combines all of the separate systems that educational institutions have historically required to employ.

The Veda App Solutions

The Veda app helps schools streamline their operations, provide parents real-time information, and enhance student instruction by automating administrative duties.

All administrative tasks at the school, such as payroll, inventory management, billing and finance, and academic (class, test, report, assignments, attendance, alerts, etc.), are now automated on a single platform. Schools were more conscious of the need to improve remote learning options as a result of the COVID-19 epidemic.

Teachers and students may interact with the curriculum at various times and from different places thanks to the platform’s support for asynchronous learning. This guarantees that learners may go on with their study in spite of outside disturbances.

Technological Accessibility

Although the requirements of all the schools are similar, their technological competency varies. The platform facilitates the accessibility and use of instructional products.

Veda gives schools simple-to-use tools that help them save time while making choices that will have a lasting effect on their children by using data and visualization. They are able to change the way they give education and learning.

The Role of Intel Liftoff

The Veda team and InGrails credit the Intel Liftoff program for helping them with product development. They also credit the mentorship and resources, such as the Intel Tiber Developer Cloud, for helping them hone their technology and optimize their platform for efficiency and scalability by incorporating artificial intelligence.

Their team members were able to learn new techniques for enhancing system performance while managing system load via a number of virtual workshops. In the future, they want to use the hardware resources made available by the Intel developer platform to further innovate Intel product and stay true to their goal of making education the greatest possible user of rapidly changing technology.

The Veda App‘s founder, Nirdesh Dwa, states, “they are now an all-in-one cloud-based school software and digital learning system for growing, big and ambitious names in education.”

The Effect

Veda has significantly changed the education industry by developing a single solution. The more than 1,200 schools that serve 1.3 million kids in Nepal, Japan, and Brunei have benefited from simplified procedures, a 30% average increase in parent participation, and enhanced instruction thanks to data-driven insights.

Education’s Future in Asia and Africa

The Veda platform is now reaching Central Africa, South East Asia, and the Middle East and North Africa. Additionally, they’re keeping up their goal of completely integrating AI capabilities, such social-emotional learning (SEL) tools and decision support systems, to build even more encouraging and productive learning environments by the middle of 2025.

Their mission is to keep pushing the boundaries of innovation and giving educators the resources they need to thrive in a world becoming more and more digital.

Future ideas for Veda include integrating AI to create a Decision Support System based on instructor input and making predictions about students’ talents for advanced coursework possible.

An all-inclusive MIS and digital learning platform for schools and colleges

They are the greatest all-in-one cloud-based educational software and digital learning platform for rapidly expanding, well-known, and aspirational educational brands.

Designed to Be Used by All

Parents and children may access all the information and study directly from their mobile devices with the help of an app. accessible on Android and iOS platforms.

Designed even for parents without technological experience, this comprehensive solution gives parents access to all the information they need about their kids, school, and important details like bills.

With the aid of their mobile devices, students may access resources and complete assignments, complete online courses, and much more.

Features

All you need to put your college and school on autopilot is Veda. Veda takes care of every facet of education, freeing you up to focus on what really matters: assisting students and securing their future.

Why should your school’s ERP be Veda?

Their superior product quality, first-rate customer support, industry experience, and ever-expanding expertise make us the ideal software for schools and colleges.Image Credit To Intel

More than one thousand universities and institutions vouch for it.

99% of clients have renewed for over six years.

Market leader in 45 Nepalese districts

superior after-sale support

Operating within ten days of the agreed-upon date

MIS Veda & E-learning

enabling online education in over a thousand schools. With only one school administration software, they provide Zoom Integrated Online Classes, Auto Attendance, Assignment with annotation, Subjective and Objective Exams, Learning Materials, Online Admissions, and Online Fee payment.Image Credit To Intel

Your software should reflect the uniqueness of your institution

Every kind of educational institution, including private schools, public schools, foreign schools, Montessori schools, and universities, uses the tried-and-true Veda school management system.

What is the Veda?

“Veda” is a comprehensive platform for digital learning and school management. Veda assists schools with automating daily operations and activities; facilitating effective and economical communication and information sharing among staff, parents, and school administration; and assisting schools in centrally storing, retrieving, and analyzing data produced by various school processes.

Read more on govindhtech.com

#VedaApp#ResolvedAsia#Challenges#IntelLiftoff#RegardingEducation#IntelLiftoffprogram#intel#IntelTiberDeveloperCloud#AIcapabilities#analyzingdata#learningplatform#AndroidiOS#mobiledevices#technology#technews#news#govindhtech

0 notes

Text

Nodeshift Offers Affordable AI Cloud with Intel Tiber Cloud!

A San Francisco-based firm called Nodeshift is making waves in the industry with its ground-breaking cloud-based solutions at a time when AI development is often associated with exorbitant costs. Nodeshift is democratising the development of AI by providing a worldwide cloud network for training and running AI models at a fraction of the cost of market leaders like Google Cloud and Microsoft Azure.

Nodeshift

Terraform can help automate deployments

Thanks to its integration with Terraform, you can automate the deployment of GPU and Compute virtual machines, as well as storage. This place is a great fit for your AWS, GCP, and Azure expertise.

Steps x NodeShift on GitHub

To generate resources on it and launch your code straight into GPU and compute virtual machines, utilise the GitHub Actions pipeline.

What People Say About NodeShift

With its new decentralised paradigm, it is poised to reshape cloud services, altering the dynamics of the market and opening up new avenues for innovation.

The NodeShift founders have been chosen to participate in Intel’s startup accelerators, Intel Ignite and Intel Liftoff. Their goal is to build a strong foundation for growth by collaborating with seasoned business owners, mentors, and engineers. This will facilitate in expediting the advancement of decentralisation technology and expanding its operations worldwide.

Developing business apps in the cloud securely and at a significant cost savings is made simple for developers by the NodeShift platform. By applying applied cryptography to distributed computing, it is possible to leverage technical breakthroughs.

With years of experience implementing Palantir’s business SaaS platform in the cloud, KestrelOx1 is excited to support the NodeShift project and team as they push the boundaries of what is possible in the cloud and ensure data security.

It is revolutionary that Nodeshift is a component of the Intel Liftoff Programme. It will always be able to improve their cloud services thanks to this strategic partnership, which gives them unrestricted access to state-of-the-art hardware and software.

Intel Liftoff

“The close collaboration with the Intel Liftoff Programme has significantly improved and accelerated our own development and success in the market.” The co-founder of Nodeshift, Mihai Mărcuță.

Developing and training cloud-based AI systems can be extremely expensive for small and medium-sized businesses. Leading suppliers’ exorbitant prices frequently strain budgets to the breaking point, inhibiting innovation. With savings of up to 80% over popular cloud services from Google, AWS, and Azure, Nodeshift takes on this challenge head-on.

The Cost-Cutting Method of Nodeshift

Nodeshift’s creative utilisation of already-existing, underutilised processing and storage resources is the key to its incredible cost effectiveness. As an alternative to building their own data centres, it makes use of a network of geographically dispersed virtual machines that are purchased from both major and small telecom providers. This model improves scalability and flexibility in addition to cutting expenses. Here at Nodeshift, security and data protection come first.

Because Nodeshift is SOC 2 certified and follows strict standards like GDPR, it guarantees the highest level of data security and privacy.Image Credit to Intel

Strengthened by the Tiber Developer Cloud at Intel

As a participant in the Intel Liftoff Programme, it has unmatched access to the Intel Tiber Development Cloud. You may easily find cutting-edge hardware components and necessary software tools for developing AI here. Because of this collaboration, Nodeshift engineers can make sure that their products are always at the forefront of technology by thoroughly testing and improving them.

Max security and performance for AI applications are ensured by Nodeshift because to its access to the newest AI accelerator technologies, such as Intel Gaudi 2 or Gaudi 3, and contemporary CPU developments, such Intel SGX. It keeps its solutions one step ahead of the competition by utilising Intel’s expertise to further develop them.

Gaining More Recognition and Trustworthiness

Apart from the technology assistance, it gains from the powerful startup acceleration apparatus of Intel. In addition to increased media presence, this entails introductions to mentors, prospective clients, and important industry people. Being present at Intel events helps it become more visible in the market and improves its standing with investors, staff members, and clients.

The story of its demonstrates how creative thinking and smart alliances can revolutionise the tech sector. It is opening up AI development to a wider audience at a lower cost and opening doors for new kinds of technical breakthroughs by utilising Intel’s resources and their distinctive approach to cloud infrastructure.

Intel Ignite

“Decentralisation will be the foundation of NodeShift’s new paradigm, which will redefine cloud services and alter the dynamics of the market. This will present new opportunities for innovation.” The NodeShift founders have been chosen to participate in Intel’s startup accelerators, Intel Ignite and Intel Liftoff. Their goal is to build a strong foundation for growth by collaborating with seasoned business owners, mentors, and engineers. As a result, NodeShift will be able to expand its operations internationally and quicken the advancement of decentralised technologies.

Read more on Govindhtech.com

#Terraform#govindhtech#nodeshift#artificialintelligence#TechNews2024#technologynews#technology#Technologytrends#intelliftoff#inteltiber#intel#inteligente

0 notes

Text

Aurora Supercomputer Sets a New Record for AI Tragic Speed!

Intel Aurora Supercomputer

Together with Argonne National Laboratory and Hewlett Packard Enterprise (HPE), Intel announced at ISC High Performance 2024 that the Aurora supercomputer has broken the exascale barrier at 1.012 exaflops and is now the fastest AI system in the world for AI for open science, achieving 10.6 AI exaflops. Additionally, Intel will discuss how open ecosystems are essential to the advancement of AI-accelerated high performance computing (HPC).

Why This Is Important:

From the beginning, Aurora was intended to be an AI-centric system that would enable scientists to use generative AI models to hasten scientific discoveries. Early AI-driven research at Argonne has advanced significantly. Among the many achievements are the mapping of the 80 billion neurons in the human brain, the improvement of high-energy particle physics by deep learning, and the acceleration of drug discovery and design using machine learning.

Analysis

The Aurora supercomputer has 166 racks, 10,624 compute blades, 21,248 Intel Xeon CPU Max Series processors, and 63,744 Intel Data Centre GPU Max Series units, making it one of the world’s largest GPU clusters. 84,992 HPE slingshot fabric endpoints make up Aurora’s largest open, Ethernet-based supercomputing connection on a single system.

The Aurora supercomputer crossed the exascale barrier at 1.012 exaflops using 9,234 nodes, or just 87% of the system, yet it came in second on the high-performance LINPACK (HPL) benchmark. Aurora supercomputer placed third on the HPCG benchmark at 5,612 TF/s with 39% of the machine. The goal of this benchmark is to evaluate more realistic situations that offer insights into memory access and communication patterns two crucial components of real-world HPC systems. It provides a full perspective of a system’s capabilities, complementing benchmarks such as LINPACK.

How AI is Optimized

The Intel Data Centre GPU Max Series is the brains behind the Aurora supercomputer. The core of the Max Series is the Intel X GPU architecture, which includes specialised hardware including matrix and vector computing blocks that are ideal for AI and HPC applications. Because of the unmatched computational performance provided by the Intel X architecture, the Aurora supercomputer won the high-performance LINPACK-mixed precision (HPL-MxP) benchmark, which best illustrates the significance of AI workloads in HPC.

The parallel processing power of the X architecture excels at handling the complex matrix-vector operations that are a necessary part of neural network AI computing. Deep learning models rely heavily on matrix operations, which these compute cores are essential for speeding up. In addition to the rich collection of performance libraries, optimised AI frameworks, and Intel’s suite of software tools, which includes the Intel oneAPI DPC++/C++ Compiler, the X architecture supports an open ecosystem for developers that is distinguished by adaptability and scalability across a range of devices and form factors.

Enhancing Accelerated Computing with Open Software and Capacity

He will stress the value of oneAPI, which provides a consistent programming model for a variety of architectures. OneAPI, which is based on open standards, gives developers the freedom to write code that works flawlessly across a variety of hardware platforms without requiring significant changes or vendor lock-in. In order to overcome proprietary lock-in, Arm, Google, Intel, Qualcomm, and others are working towards this objective through the Linux Foundation’s Unified Acceleration Foundation (UXL), which is creating an open environment for all accelerators and unified heterogeneous compute on open standards. The UXL Foundation is expanding its coalition by adding new members.

As this is going on, Intel Tiber Developer Cloud is growing its compute capacity by adding new, cutting-edge hardware platforms and new service features that enable developers and businesses to assess the newest Intel architecture, innovate and optimise workloads and models of artificial intelligence rapidly, and then implement AI models at scale. Large-scale Intel Gaudi 2-based and Intel Data Centre GPU Max Series-based clusters, as well as previews of Intel Xeon 6 E-core and P-core systems for certain customers, are among the new hardware offerings. Intel Kubernetes Service for multiuser accounts and cloud-native AI training and inference workloads is one of the new features.

Next Up

Intel’s objective to enhance HPC and AI is demonstrated by the new supercomputers that are being implemented with Intel Xeon CPU Max Series and Intel Data Centre GPU Max Series technologies. The Italian National Agency for New Technologies, Energy and Sustainable Economic Development (ENEA) CRESCO 8 system will help advance fusion energy; the Texas Advanced Computing Centre (TACC) is fully operational and will enable data analysis in biology to supersonic turbulence flows and atomistic simulations on a wide range of materials; and the United Kingdom Atomic Energy Authority (UKAEA) will solve memory-bound problems that underpin the design of future fusion powerplants. These systems include the Euro-Mediterranean Centre on Climate Change (CMCC) Cassandra climate change modelling system.

The outcome of the mixed-precision AI benchmark will serve as the basis for Intel’s Falcon Shores next-generation GPU for AI and HPC. Falcon Shores will make use of Intel Gaudi’s greatest features along with the next-generation Intel X architecture. A single programming interface is made possible by this integration.

In comparison to the previous generation, early performance results on the Intel Xeon 6 with P-cores and Multiplexer Combined Ranks (MCR) memory at 8800 megatransfers per second (MT/s) deliver up to 2.3x performance improvement for real-world HPC applications, such as Nucleus for European Modelling of the Ocean (NEMO). This solidifies the chip’s position as the host CPU of choice for HPC solutions.

Read more on govindhtech.com

#aurorasupercomputer#AISystem#AIaccelerated#highperformancecomputing#aimodels#machinelearning#inteldatacentre#intelxeoncpu#HPC#MemoryAccess#HPCApplications#AIComputing#softwaretools#intelgaudi2#IntelXeon#aibenchmark#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes