#nvidia graphics

Text

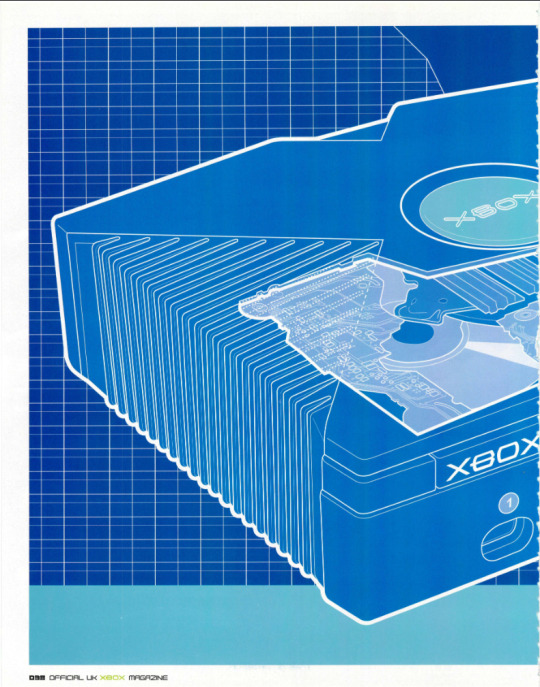

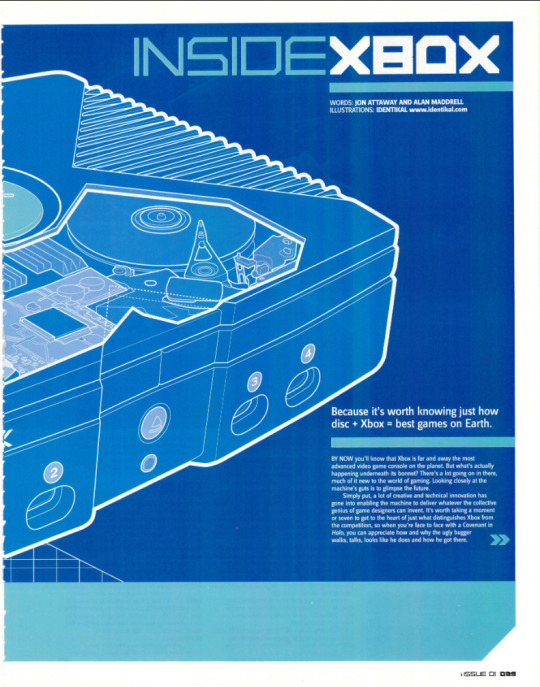

" Because it's worth knowing just how disc + Xbox = best games on Earth! "

Official Xbox Magazine (UK) No. 01, November 2001, pp. 38-45

#Microsoft#Microsoft Xbox#Xbox#OG Xbox#Xbox Live#NVIDIA#NVIDIA Graphics#NV2A#Hardware#Hardware Specs

68 notes


Text

I, @Lusin333, have a Dell NVIDIA GeForce GTX 280 GPU (0X103G).

I will put this GPU in my crypto mining rig so I can mine more Bitcoin or some other cryptocurrency.

Thanks to Dell for giving this graphics card to me FOR FREE.

#Dell#Dell computer#Dell Computers#nvidia#nvidia geforce#nvidia gaming#nvidia gpu#nvidia graphics#nvidia graphics card#ultimate gamer#ultimate gangster#tech gang#tech gangster#Lusin333#nvidia rtx#nvidia geforce rtx#gpu#gpus#graphics card#graphics cards#gamer#gaming#tech#techstuff#black and white#linustechtips#tech meme#tech memes#gaming tech#gaming technology

0 notes

Text


Custom PC Build Powered by Intel i9-14900k & RTX 3060

Explore peak performance with The IT Gear's dynamic duo – the Intel i9-14900k processor and RTX 3060 graphics card. Unleash unparalleled power in your PC setup, whether you're a gamer, content creator, or tech enthusiast. Dive into a world where speed meets graphics excellence, and elevate your computing experience with this powerful combination available at The IT Gear.

#Intel i9-14900k & RTX 3060#Intel i9-14900k#14900k#RTX 3060#Nvidia Graphics#the it gear#Customized PC#Custom PC#Youtube

0 notes

Text

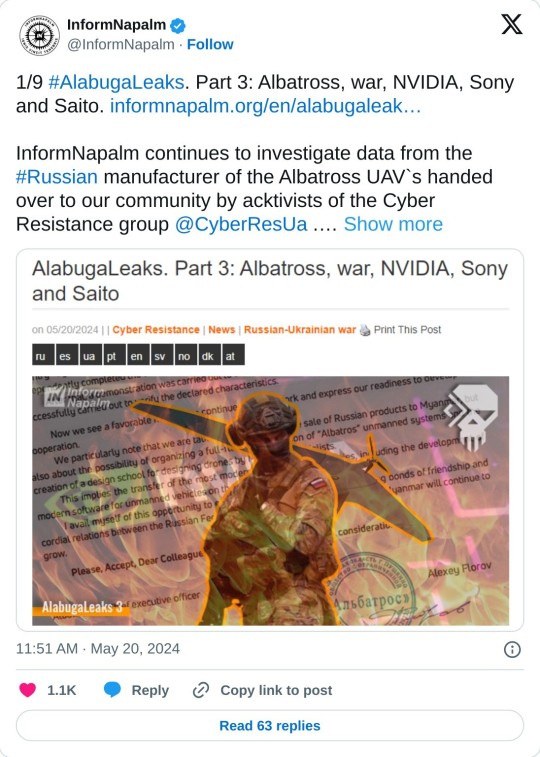

NVIDIA company kills Ukrainians.

Please share

#nvidia#computers#computer games#rpg#windows#video games#pc#console#graphics#graphic cards#computing

24 notes



Text

Earlier this year my laptop started randomly BSOD-ing so I reset it but had it keep some of my settings. Ever since then the general performance has sucked majorly, and tonight I discovered that it apparently made duplicates of like almost every system file. So it's just like bloated as shit. Amazing.

I'll have to reset it again and do it right this time. There's no reason Lethal Company and GGStrive in Gameboy mode should be running at like 10fps on this thing lol

#textpost#It's only an i5 with 8gb memory but that WAS good enough to run remastered Halo 2 on medium-high graphics pre-reset#So something fucky is afoot...#Turned out it was BSOD-ing because of a faulty Nvidia driver btw#It could've been fixed just by reinstalling an older version T_T

17 notes


Note

sorry for a dumb question but i thought you would know since you're gifing the concert movie: is there a way to download the video after i rent it? or is it just impossible? i just assumed that since people are making gifs there is indeed a way to download it but i have no such option so i'm confused

No worries! Vimeo doesn't offer a way to download the movie. I have a program that lets me record my screen so that's the way I've gotten the material for my gifs.

#I'm using GeForce Experience if anyone's wondering#but I think you need a nvidia graphics card for it to work? idk#AMD has their own program but I've never used it since I've only had nvidias in my PCs#joker out#actually yeah go in the tag so ppl who think of renting it will see this

11 notes


Text

so yesterday at around 4:30pm my GTX1650 died on me, after a furious troubleshooting period to make sure it was in fact non functional, i ran to microcenter to get a replacement. i got an RTX3050 for $379.

i decided to give the new card a test with Studio renders. the 1650 could render "Space Marine" in roughly 5-6 minutes.

the RTX3050 came in at a blazing 63 seconds.

for shits and giggles, my work computer with a Quadro K220 (that's from 2013 btw) could render this in around 25-30 minutes.

79 notes


Text

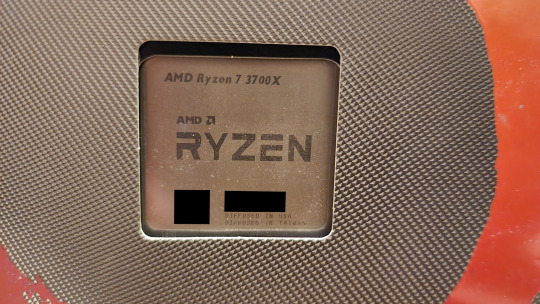

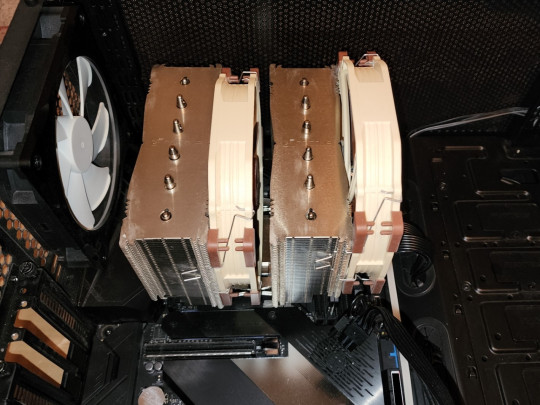

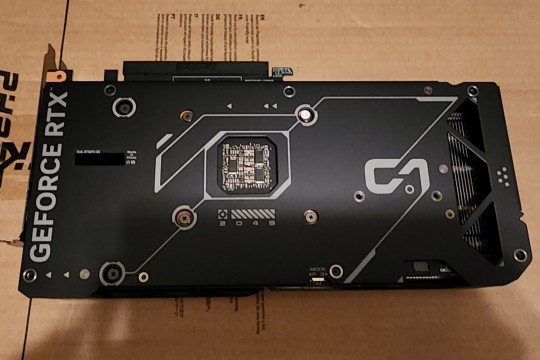

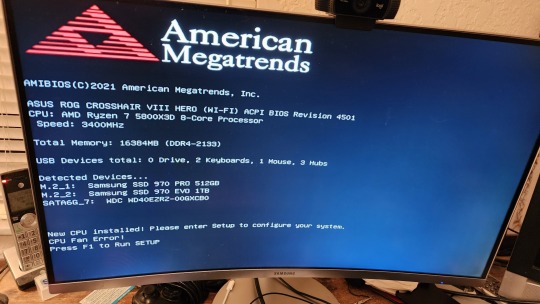

I upgraded my CPU + GPU a couple weekends ago ٩( ᐛ )و

Ryzen 7 3700X -> Ryzen 7 5800X3D

NVIDIA GTX 1070 -> NVIDIA RTX 4070

EDIT: Also yes I'm aware that I left the CPU fan cable dangling in the last photo there, lol

39 notes


Note

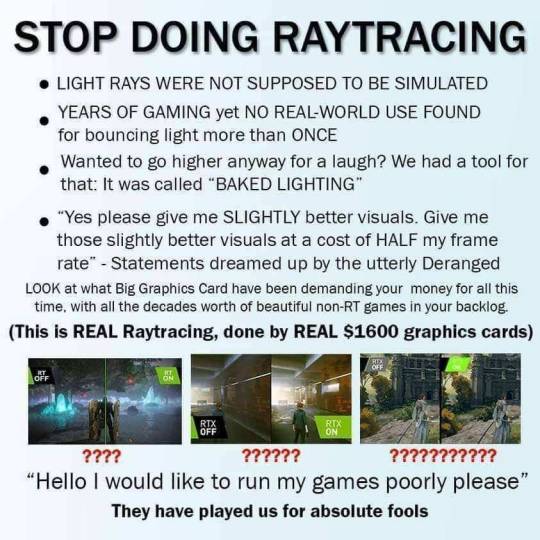

What do you think of Nvidia's new DLSS Frame Generation technology that creates frames in between ones created by the GPU with AI to produce a smoother frame rate and also give you more FPS?

As a chronic screenshotist, I hate it. I can't find the post now, but I talked about how smeary recent games are thanks to upscaling. Games with dynamic resolutions and heavy upscaling always leave smudgy, smeary, ugly artifacts all over the screen, where the renderer is filling in missing information.

I see it in a lot of Unreal Engine games, but I'm pretty sure I saw it in the PS4 version of Street Fighter 6, too. It makes games look worse than they should.

I've also played Fortnite through things like GeForce Now, where you can (or used to be able to) crank the settings up to max, and even with everything on Epic Quality, surfaces can still be smeary, grainy, and covered in artifact trails.

So when you tell me that not only is the rendering engine going to be making a blurry mess out of my game because of resolution scaling, but it's also going to be motion smoothing whole entire new frames on top of that?

Gross. No thank you.

I feel like photo mode is a way to "solve" this, because photo mode deliberately takes you out of the action to render screenshots at much, much higher settings than you normally get during gameplay. But that creates a different problem, in that that's not how photography always works.

Photography is about taking 300 shots and only using the five best ones.

Photo mode suggests you are setting up for a specific screenshot on purpose when that's just not how it always goes.

If interframe generation makes this worse, or harder? I don't want it. I don't care what the benefits claim to be. We're in a constant war to tell our parents to turn motion smoothing off on new TVs, so why would we want to bring that into games?

15 notes


Text

Praise be to my external hard drive, which had a copy of my entire sims 4 folder because I somehow accidentally deleted half of my saves, including my Tolkien one I had been working on for years. I've lost some progress since the old saves were from February 6th, but at least I have them. I was about to cry.

#i think this happened when i unlinked onedrive it screwed stuff up#my nvidia graphics are fucked too i can't open the control panel#and my gshade for ts3 isn't loading any shaders#all my sims 4 settings were reset too and that was a pain

2 notes


Text

NVIDIA tech demo from 2000

4 notes


Text

I, @Lusin333, have a Gigabyte NVIDIA GeForce GTX 460 (GVN460OC1GI).

I will put this GPU in my crypto mining rig so I can mine more Bitcoin or some other crypto.

Thanks to Gigabyte for giving this GPU to me FOR FREE.

#gigabyte#gigabyte gaming#gigabyte graphics card#gigabyte gpu#nvidia#nvidia geforce#nvidiagaming#nvidia gpu#nvidia graphics#nvidiagraphicscard#gtx 770#ultimate gamer#ultimate gangster#tech gang#tech gangster#Lusin333#nvidiartx#nvidia geforce rtx#gpu#gpus#graphics card#graphics cards#gamer#gaming#tech#techstuff#black and white#linustechtips#tech meme#tech memes

0 notes

Text

Getting so tired of douchey tech companies constantly using AI as a marketing buzzword as if it's going to impress, when all it does is make me think of annoying douchebags who want credit for stolen art assets. I'm getting ads for AI crap just trying to update my graphics card. Please stop.

I miss when the term AI just made me think of video game enemy behavior and Data from Star Trek TNG...

#nvidia#graphics card#techbro douchebags#tech#technology#fuck ai art#marketing#buzzwords#annoying people#annoying corporations#star trek#data#star trek data#video games

5 notes


Text

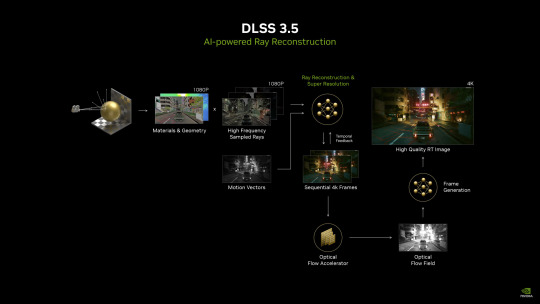

NVIDIA'S DLSS 3.5 - What it is & some thoughts on it

So DLSS 3.5 was just announced by NVIDIA at gamescom 2023. Here is an explanation & some thoughts on it.

For the most part it has a feature set similar to base DLSS 3, but with one key new feature (which, unlike DLSS 3's frame generation, works on all RTX GPUs, not just the 40 series):

"Ray Reconstruction" - a new technique that uses DLSS to reconstruct raytraced output directly in the DLSS frame, functioning like a denoiser and allowing for much higher raytracing quality than traditional real-time denoising filters could achieve.

To people unfamiliar with raytracing, this might sound like a bunch of nonsense, so let me explain what this means:

Raytracing is a rendering technique that, as most people should be aware by now, allows for much more realistic lighting and reflection effects than traditional rasterization. However, raytracing is an iterative process, meaning that the more rays you shoot and accumulate, the more accurate the result will be. If you shoot too few rays, the result will be noisy and grainy. Here is an example of such a noisy image from my pathtracer:

These individual rays are called samples, and the process of shooting them is called sampling. The more samples you shoot, the more accurate the result will be, but the longer it will take to render, as we have to run the expensive raytracing calculations for each sample. This is why raytracing is so expensive, as it can require a lot of samples to look good.
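To make the sample-count trade-off concrete, here is a minimal Python sketch. It is not the pathtracer mentioned above: the `render_pixel` and `noise_level` helpers and the "a ray hits the light with probability 0.3" model are assumptions made up purely for illustration. Each pixel is estimated by averaging random samples, and the spread across many identical pixels stands in for visible grain.

```python
import random
import statistics

def render_pixel(samples_per_pixel, rng, true_brightness=0.3):
    """Estimate one pixel by averaging random "ray" samples.

    Assumption for illustration: each ray hits the light with probability
    true_brightness and contributes 1.0, otherwise it contributes 0.0.
    """
    hits = sum(1.0 for _ in range(samples_per_pixel)
               if rng.random() < true_brightness)
    return hits / samples_per_pixel

def noise_level(samples_per_pixel, pixels=2000, seed=1):
    """Standard deviation across many identical pixels, a proxy for grain."""
    rng = random.Random(seed)
    values = [render_pixel(samples_per_pixel, rng) for _ in range(pixels)]
    return statistics.pstdev(values)

if __name__ == "__main__":
    # More samples per pixel (spp) cost more work but give a smoother result.
    for spp in (1, 4, 16, 64, 256):
        print(f"{spp:4d} spp -> noise {noise_level(spp):.3f}")
```

In this toy model the noise shrinks roughly with the square root of the sample count, so halving the grain costs about four times as many rays.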

However, we have a way to reduce the noise in the result without having to shoot more samples: denoising filters. The job of a denoising filter is simple: to take a noisy input and make it, well, less noisy. In addition, raytracing is typically performed at a lower resolution than the final output and then upscaled to the final resolution, since that leaves fewer pixels to shoot rays for. Typical real-time raytracing sample counts are in the 1-4 samples per pixel range, while a typical final output could take tens, hundreds, or even thousands of samples per pixel to converge into a non-noisy result.
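As a rough illustration of what "take a noisy input and make it less noisy" means, here is a minimal sketch of about the simplest possible spatial filter, a box blur over one row of pixels. Real-time denoisers are far more sophisticated; the scanline, the noise range, and the function names below are all hypothetical.

```python
import random

def noisy_scanline(width=64, seed=7):
    """A made-up row of pixels: dark left half, bright right half, plus noise."""
    rng = random.Random(seed)
    clean = [0.2 if x < width // 2 else 0.8 for x in range(width)]
    noisy = [c + rng.uniform(-0.2, 0.2) for c in clean]
    return clean, noisy

def box_denoise(pixels, radius=2):
    """Replace each pixel with the mean of its neighbours within `radius`."""
    out = []
    for i in range(len(pixels)):
        lo, hi = max(0, i - radius), min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out

def mean_abs_error(a, b):
    """Average per-pixel distance between two rows."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

if __name__ == "__main__":
    clean, noisy = noisy_scanline()
    smooth = box_denoise(noisy)
    print("error before:", round(mean_abs_error(noisy, clean), 3))
    print("error after: ", round(mean_abs_error(smooth, clean), 3))
    # The pixel just left of the edge gets pulled toward the bright side.
    print("edge pixel:", clean[31], "->", round(smooth[31], 3))
```

Averaging neighbours lowers the overall error without shooting a single extra ray, but the pixel next to the dark/bright boundary no longer matches the clean value, because the filter mixes both sides of the edge.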

However, there is one trade-off with these denoising filters: they often crush detail and can make the result look blurry and smeared, and if there is not enough detail in the input, they may cause blotchy artifacts. In addition, many of them use temporal methods, meaning that they require multiple frames to converge into a good result, and they can fail to discard bad pixels (pixels that should have been updated, such as when something moves on screen) and cause ghosting artifacts.
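The ghosting problem can be sketched with a single pixel and an exponential moving average, which is one common way to accumulate frames over time. This is a simplified assumption, not how any particular denoiser works; `temporal_accumulate`, `alpha`, and `reject_threshold` are hypothetical names chosen for the example.

```python
def temporal_accumulate(frames, alpha=0.1, reject_threshold=None):
    """Blend each new frame value into a running history.

    alpha is the weight of the newest frame. If reject_threshold is set,
    history that differs too much from the new value is discarded instead
    of blended (a crude stand-in for history rejection).
    """
    history = frames[0]
    out = [history]
    for value in frames[1:]:
        if reject_threshold is not None and abs(value - history) > reject_threshold:
            history = value  # stale history thrown away
        else:
            history = alpha * value + (1 - alpha) * history
        out.append(history)
    return out

if __name__ == "__main__":
    # One pixel: a bright object covers it for 20 frames, then moves away.
    frames = [1.0] * 20 + [0.0] * 10
    ghosted = temporal_accumulate(frames)
    rejected = temporal_accumulate(frames, reject_threshold=0.5)
    for i in (19, 20, 24, 29):
        print(f"frame {i}: ghosted={ghosted[i]:.3f} rejected={rejected[i]:.3f}")
```

Without rejection, the pixel is still visibly lit ten frames after the object has gone, which is the trail you see as ghosting. The threshold variant clears it immediately, but with genuinely noisy input a threshold like this would also throw away history that was only noise, so real filters have to be much more careful about when to trust the past.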

Using AI to denoise raytracing results is not a new idea. It has been done before, for example by Intel with their well-known Open Image Denoise library, but it has not really shipped in real-time applications before (more on that later). Here is the bedroom scene from above, but denoised with Open Image Denoise:

This is what makes DLSS 3.5 so interesting: it uses the AI-acceleration hardware on modern NVIDIA GPUs (the "tensor cores") to perform the denoising in real time, right alongside the upscaling that DLSS is already doing. This means that we are basically petting two goats with one hand: we get a cleaner, noise-free output, and it is also scaled up directly to the final resolution. Thanks to the deep-learning nature of it, details can be approximated by the neural network and retained, and the result can be much cleaner than with traditional denoising filters. At least that is what NVIDIA claims, and from what they have shown so far, and what other AI-based denoisers have shown, I am inclined to believe them.

There are, however, two things I would like to point out here.

First up, the idea of using real-time neural-network-based denoising is not new. In fact, a paper on this very subject, titled "Temporally Stable Real-Time Joint Neural Denoising and Supersampling", was published by Intel research in July 2022, over a year ago. Here is a sample of the outputs from the paper's method:

The similarities to DLSS 3.5 are... quite remarkable, such as performing upscaling and denoising directly in one go. However, this technology (to my knowledge) has not actually shipped in XeSS, Intel's own DLSS competitor - which unlike DLSS is also supported on non-Intel hardware. And this leads me to my second point:

I'm quite concerned about so much of the rendering pipeline being more or less a black box, and moreover one that is controlled by NVIDIA and therefore only available on NVIDIA hardware. This is not a new concern, as DLSS has been exclusive to NVIDIA hardware since its inception, but it is becoming more and more relevant as more of the rendering pipeline is taken over by these AI-based techniques. XeSS seems like the only reasonable competitor; AMD's FSR2, while especially good for lower-power environments like mobile chips, is after all a more traditional temporal + spatial upscaler, and does not have the same potential for quality that AI-based methods like DLSS and XeSS do. And neither DLSS nor XeSS is truly open source. One really has to hope AMD will show up with an FSR3 that can compete with DLSS and Intel's XeSS... but I'm not holding my breath on that one.

Another interesting aspect of all of this is how the raw computational power of a GPU is no longer as relevant with methods like these. Features have started to become more important than performance, especially at the low end and mid-range of the spectrum. Interestingly, but unsurprisingly, the cost of most GPUs, especially NVIDIA GPUs, has not really gone down, even though the performance of the GPUs themselves has almost stagnated, at least at the lower end of the spectrum.

Regardless, DLSS 3.5 is cool tech, and I'm looking forward to seeing it in action. I'm just hoping that this trend of AI-driven rendering isn't going to put us into an unfortunate situation where we are at the mercy of AI blackboxes that are only available on certain hardware.

5 notes


Photo

in the twilight of discoveries

#hogwarts legacy#hogwarts legacy mc#nvidia ansel#oc: lavinia whitlock#the yassification new hair gave her is stunning#the clipping is pain ofc but otherwise it's amazing#off for a fireflies date and probably poacher murder#hopefully one day i'll fix my mediocre graphics

18 notes
