#sql transaction

Explore tagged Tumblr posts

Text

🚀 Struggling to balance transactional (OLTP) & analytical (OLAP) workloads? Microsoft Fabric SQL Database is the game-changer! In this blog, I’ll share best practices, pitfalls to avoid, and optimization tips to help you master Fabric SQL DB. Let’s dive in! 💡💬 #MicrosoftFabric #SQL

#Data management#Database Benefits#Database Optimization#Database Tips#Developer-Friendly#Fabric SQL Database#Microsoft Fabric#SQL database#SQL Performance#Transactional Workloads#Unlock SQL Potential

0 notes

Text

Understanding Transaction Isolation Levels in SQL Server

Introduction Have you ever wondered what happens behind the scenes when you start a transaction in SQL Server? As a database developer, understanding how isolation levels work is crucial for writing correct and performant code. In this article, we’ll dive into the details of what happens when you begin an explicit transaction and run multiple statements before committing. We’ll explore whether…

0 notes

Text

LETS FUCKING GOOOO I JUST CHECKED IF MY DATABASES FINAL PROJECT IS GRADED AND IT IS

I expected 145/200

I got 190/200

This has been a great first day home

The worst part of coming home is the fucking fur. EVERYWHERE. From 3 cats living in my messy room alone for over a year (over a year because I never actually got around to cleaning my room last summer either)

#rambles#the best part is there were short response questions:#'describe how you use transactions in your project' 'i didn't.'#'describe how you prevent sql injection' 'i didn't'#full points on both of those.#(i explained that i tried to do the first thing and everything broke and i didn't have time to fix it)

4 notes

Text

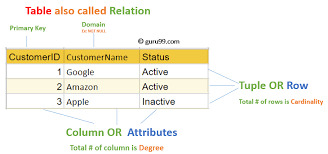

Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, popularly called SQL (pronounced "ess-cue-ell" or sometimes "sequel"), is the standard language for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, nearly every major relational database management system (RDBMS), such as MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete data.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL lets users communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don't need to tell the database how to fetch the data; the query planner figures that out.

Standardized: SQL has been standardized by organizations like ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

Relational Model-Based: SQL is designed to work with tables (also called relations) in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several major categories of commands, each with a specific purpose.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects like tables, schemas, indexes, and so on.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but keep its structure.

Example:

CREATE TABLE employees (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    salary DECIMAL(10,2)
);

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.

4. Data Control Language (DCL)

DCL commands deal with permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

BEGIN;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
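To see COMMIT and ROLLBACK side by side, here is a minimal sketch in Python using the standard-library sqlite3 module. It assumes an in-memory SQLite database and a one-row employees table invented for the demo; other systems spell some details differently (SQL Server, for example, writes BEGIN TRANSACTION).

```python
import sqlite3

# isolation_level=None puts the connection in autocommit mode, so the explicit
# BEGIN / COMMIT / ROLLBACK statements below are the only transaction control.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000.0)")


def current_salary(conn):
    """Read back the single demo row's salary."""
    return conn.execute("SELECT salary FROM employees WHERE id = 1").fetchone()[0]


# A rolled-back transaction leaves the stored data untouched.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 2")
conn.execute("ROLLBACK")
after_rollback = current_salary(conn)

# A committed transaction makes the 10% raise permanent.
conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = salary * 1.10")
conn.execute("COMMIT")
after_commit = current_salary(conn)

print(after_rollback, after_commit)
```

The doubled salary never becomes visible after the rollback, while the raise applied inside the committed transaction does.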

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows sharing a property.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.

Example with JOIN:

SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and matched records from the right.

RIGHT JOIN: Returns all records from the right table, and matched records from the left.

FULL JOIN: Returns all records from both tables, matched where possible.

SELF JOIN: Joins a table to itself.
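The differences are easiest to see on a tiny dataset. The sketch below uses Python's built-in sqlite3 module with made-up rows; the table contents and the manager_id column are assumptions for illustration only. RIGHT and FULL joins are left out because older SQLite versions do not support them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,
        name TEXT,
        dept_id INTEGER REFERENCES departments(id),
        manager_id INTEGER REFERENCES employees(id)
    );
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Sales');
    INSERT INTO employees VALUES
        (1, 'Alice', 1, NULL),
        (2, 'Bob', 1, 1),
        (3, 'Carol', NULL, 1);
""")

# INNER JOIN: only employees that have a matching department row.
inner_rows = conn.execute("""
    SELECT e.name, d.name FROM employees e
    INNER JOIN departments d ON e.dept_id = d.id
    ORDER BY e.id
""").fetchall()

# LEFT JOIN: every employee; the department is NULL (None) when nothing matches.
left_rows = conn.execute("""
    SELECT e.name, d.name FROM employees e
    LEFT JOIN departments d ON e.dept_id = d.id
    ORDER BY e.id
""").fetchall()

# SELF JOIN: employees joined to their own table to look up each manager's name.
self_rows = conn.execute("""
    SELECT e.name, m.name FROM employees e
    INNER JOIN employees m ON e.manager_id = m.id
    ORDER BY e.id
""").fetchall()

print(inner_rows)
print(left_rows)
print(self_rows)
```

Carol has no department, so she drops out of the inner join but appears in the left join with a missing department name.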

Subqueries and Nested Queries

A subquery is a query nested inside another query.

Example:

SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn more than the average salary.
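As a quick check of how the inner query feeds the outer one, here is a small sketch with Python's sqlite3 module. The three sample rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [("Alice", 90000), ("Bob", 60000), ("Carol", 75000)],
)

# The inner SELECT runs first and yields a single value (the average salary).
average = conn.execute("SELECT AVG(salary) FROM employees").fetchone()[0]

# The outer SELECT then keeps only rows strictly above that value.
above_average = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM employees "
        "WHERE salary > (SELECT AVG(salary) FROM employees) "
        "ORDER BY name"
    )
]

print(average, above_average)
```

Carol earns exactly the average, so the strict comparison leaves her out.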

Functions in SQL

SQL includes built-in functions for performing calculations and formatting:

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions: NOW(), CURDATE(), DATEADD() (names vary by database system)

Conversion Functions: CAST(), CONVERT()

Indexes in SQL

An index is used to speed up searches.

Example:

CREATE INDEX idx_name ON employees(name);

Indexes improve the performance of queries on large tables, at the cost of extra storage and slightly slower writes.
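One way to confirm that an index is actually used is to ask the database for its query plan. The sketch below does this with Python's sqlite3 module; EXPLAIN QUERY PLAN is SQLite-specific, its exact wording varies between versions, and the helper name query_plan is made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [("Alice", 75000), ("Bob", 62000), ("Carol", 88000)],
)


def query_plan(conn):
    """Return SQLite's plan text for a lookup by name (detail is the last column)."""
    rows = conn.execute(
        "EXPLAIN QUERY PLAN SELECT id FROM employees WHERE name = 'Alice'"
    ).fetchall()
    return " ".join(row[-1] for row in rows)


plan_before = query_plan(conn)  # no index on name yet: a full table scan
conn.execute("CREATE INDEX idx_name ON employees(name)")
plan_after = query_plan(conn)   # the plan now searches through idx_name

print(plan_before)
print(plan_after)
```

Other systems expose the same information through their own commands, such as EXPLAIN in MySQL and PostgreSQL.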

Views in SQL

A view is a virtual table defined by a query.

Example:

CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;

Views are useful for:

Security (hide certain columns)

Simplifying complex queries

Reusability
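Because a view stores the query and not the rows, its contents follow the underlying table. A small sketch with Python's sqlite3 module, using invented sample rows:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER);
    INSERT INTO employees (name, salary) VALUES ('Alice', 95000), ('Bob', 60000);
    CREATE VIEW high_earners AS
        SELECT name, salary FROM employees WHERE salary > 80000;
""")


def high_earner_names(conn):
    """Query the view like an ordinary table."""
    return [row[0] for row in conn.execute("SELECT name FROM high_earners ORDER BY name")]


before_raise = high_earner_names(conn)

# Changing the base table changes what the view returns; nothing is copied.
conn.execute("UPDATE employees SET salary = 85000 WHERE name = 'Bob'")
after_raise = high_earner_names(conn)

print(before_raise, after_raise)
```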

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used in data analytics platforms like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient records, treatment histories, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: For storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft’s relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complicated for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.

2 notes

Text

Symfony Clickjacking Prevention Guide

Clickjacking is a deceptive technique where attackers trick users into clicking on hidden elements, potentially leading to unauthorized actions. As a Symfony developer, it's crucial to implement measures to prevent such vulnerabilities.

🔍 Understanding Clickjacking

Clickjacking involves embedding a transparent iframe over a legitimate webpage, deceiving users into interacting with hidden content. This can lead to unauthorized actions, such as changing account settings or initiating transactions.

🛠️ Implementing X-Frame-Options in Symfony

The X-Frame-Options HTTP header is a primary defense against clickjacking. It controls whether a browser should be allowed to render a page in a <frame>, <iframe>, <embed>, or <object> tag.

Method 1: Using an Event Subscriber

Create an event subscriber to add the X-Frame-Options header to all responses:

// src/EventSubscriber/ClickjackingProtectionSubscriber.php
namespace App\EventSubscriber;

use Symfony\Component\EventDispatcher\EventSubscriberInterface;
use Symfony\Component\HttpKernel\Event\ResponseEvent;
use Symfony\Component\HttpKernel\KernelEvents;

class ClickjackingProtectionSubscriber implements EventSubscriberInterface
{
    public static function getSubscribedEvents()
    {
        return [
            KernelEvents::RESPONSE => 'onKernelResponse',
        ];
    }

    public function onKernelResponse(ResponseEvent $event)
    {
        $response = $event->getResponse();
        $response->headers->set('X-Frame-Options', 'DENY');
    }
}

This approach ensures that all responses include the X-Frame-Options header, preventing the page from being embedded in frames or iframes.

Method 2: Using NelmioSecurityBundle

The NelmioSecurityBundle provides additional security features for Symfony applications, including clickjacking protection.

Install the bundle:

composer require nelmio/security-bundle

Configure the bundle in config/packages/nelmio_security.yaml:

nelmio_security:
    clickjacking:
        paths:
            '^/.*': DENY

This configuration adds the X-Frame-Options: DENY header to all responses, preventing the site from being embedded in frames or iframes.

🧪 Testing Your Application

To ensure your application is protected against clickjacking, use our Website Vulnerability Scanner. This tool scans your website for common vulnerabilities, including missing or misconfigured X-Frame-Options headers.

Screenshot of the free tools webpage where you can access security assessment tools.

After scanning for a Website Security check, you'll receive a detailed report highlighting any security issues:

An Example of a vulnerability assessment report generated with our free tool, providing insights into possible vulnerabilities.

🔒 Enhancing Security with Content Security Policy (CSP)

While X-Frame-Options is effective, modern browsers support the more flexible Content-Security-Policy (CSP) header, which provides granular control over framing.

Add the following header to your responses:

$response->headers->set('Content-Security-Policy', "frame-ancestors 'none';");

This directive prevents any domain from embedding your content, offering robust protection against clickjacking.
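For a quick local check that does not depend on an external scanner, the header logic can be sketched in a few lines of Python. The function name blocks_framing is hypothetical, and the rule that frame-ancestors takes precedence over X-Frame-Options when both are present follows the CSP specification. Treat this as a rough sanity check, not a full audit.

```python
def blocks_framing(headers):
    """Return True if the response headers forbid all framing of the page.

    `headers` is a plain dict of header name -> value (an assumption for this
    sketch; real HTTP clients expose similar mappings).
    """
    normalized = {name.lower(): value for name, value in headers.items()}

    # If CSP carries a frame-ancestors directive, it decides the outcome.
    csp = normalized.get("content-security-policy", "")
    for directive in csp.split(";"):
        parts = directive.split()
        if parts and parts[0].lower() == "frame-ancestors":
            return [p.lower() for p in parts[1:]] == ["'none'"]

    # Otherwise fall back to the older X-Frame-Options header.
    return normalized.get("x-frame-options", "").strip().upper() == "DENY"


print(blocks_framing({"X-Frame-Options": "DENY"}))
print(blocks_framing({"Content-Security-Policy": "frame-ancestors 'none';"}))
print(blocks_framing({"X-Frame-Options": "SAMEORIGIN"}))
```

Feeding it the headers from a real response (for example, the mapping returned by an HTTP client) shows whether either of the protections described above is in place.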

🧰 Additional Security Measures

CSRF Protection: Ensure that all forms include CSRF tokens to prevent cross-site request forgery attacks.

Regular Updates: Keep Symfony and all dependencies up to date to patch known vulnerabilities.

Security Audits: Conduct regular security audits to identify and address potential vulnerabilities.

📢 Explore More on Our Blog

For more insights into securing your Symfony applications, visit our Pentest Testing Blog. We cover a range of topics, including:

Preventing clickjacking in Laravel

Securing API endpoints

Mitigating SQL injection attacks

🛡️ Our Web Application Penetration Testing Services

Looking for a comprehensive security assessment? Our Web Application Penetration Testing Services offer:

Manual Testing: In-depth analysis by security experts.

Affordable Pricing: Services starting at $25/hr.

Detailed Reports: Actionable insights with remediation steps.

Contact us today for a free consultation and enhance your application's security posture.

3 notes

Text

Windows Recall Has Already Been Exploited

If its existence wasn't already bad enough, everything Recall stores is kept in an unencrypted SQL database.

Even if Microsoft pinkie promises that Recall data is only stored locally, that will not stop malware from slurping everything you have done, are doing, or will do, from your PC.

If you're thinking you can just turn it off when it arrives in your Windows updates and be fine, consider the fact that your personal information is likely going to be on someone else's PC at some point.

I have worked IT for a Fortune 500 retailer and can tell you: if you make any large purchase, be it a car, an instrument, windows or doors for a home, or have any sort of custom order made, that task is done through a Windows PC that will have your personal info and payment information on it at some point.

Financial and medical institutions will have more care about this, but I can guess with a high degree of certainty that nearly any PC in a retail environment is susceptible to malware from unsavvy employees, and even more susceptible to a simple physical attack. In a busy store, basically anyone could walk up and plug a USB drive with a malicious payload into a PC used for transactions. Cash registers/tills are generally safer, since they will be running a maximum-stability older version of Windows that will not have these kinds of updates.

I'd love to hope that businesses realize this and demand a version of Windows with AI features disabled, but AI is such a buzzword in the current market that products are popping up all over the place that do not directly have anything to do with AI, but are slapped with the label anyway. Microsoft does not care if home users complain. They care if investors, large business accounts, and system integrators do. Those people are nothing if not techbro hype train riders, so good luck with that.

The takeaway here is to be extremely careful. And switch to Linux if it meets your needs.

11 notes

Text

The Great Data Cleanup: A Database Design Adventure

As a budding database engineer, I found myself in a situation that was both daunting and hilarious. Our company's application was running slower than a turtle in peanut butter, and no one could figure out why. That is, until I decided to take a closer look at the database design.

It all began when my boss, a stern woman with a penchant for dramatic entrances, stormed into my cubicle. "Listen up, rookie," she barked (despite the fact that I was quite experienced by this point). "The marketing team is in an uproar over the app's performance. Think you can sort this mess out?"

Challenge accepted! I cracked my knuckles, took a deep breath, and dove headfirst into the database, ready to untangle the digital spaghetti.

The schema was a sight to behold—if you were a fan of chaos, that is. Tables were crammed with redundant data, and the relationships between them made as much sense as a platypus in a tuxedo.

"Okay," I told myself, "time to unleash the power of database normalization."

First, I identified the main entities—clients, transactions, products, and so forth. Then, I dissected each entity into its basic components, ruthlessly eliminating any unnecessary duplication.

For example, the original "clients" table was a hot mess. It had fields for the client's name, address, phone number, and email, but it also inexplicably included fields for the account manager's name and contact information. Data redundancy alert!

So, I created a new "account_managers" table to store all that information, and linked the clients back to their account managers using a foreign key. Boom! Normalized.

Next, I tackled the transactions table. It was a jumble of product details, shipping info, and payment data. I split it into three distinct tables—one for the transaction header, one for the line items, and one for the shipping and payment details.

"This is starting to look promising," I thought, giving myself an imaginary high-five.

After several more rounds of table splitting and relationship building, the database was looking sleek, streamlined, and ready for action. I couldn't wait to see the results.

Sure enough, the next day, when the marketing team tested the app, it was like night and day. The pages loaded in a flash, and the users were practically singing my praises (okay, maybe not singing, but definitely less cranky).

My boss, who was not one for effusive praise, gave me a rare smile and said, "Good job, rookie. I knew you had it in you."

From that day forward, I became the go-to person for all things database-related. And you know what? I actually enjoyed the challenge. It's like solving a complex puzzle, but with a lot more coffee and SQL.

So, if you ever find yourself dealing with a sluggish app and a tangled database, don't panic. Grab a strong cup of coffee, roll up your sleeves, and dive into the normalization process. Trust me, your users (and your boss) will be eternally grateful.

Step-by-Step Guide to Database Normalization

Here's the step-by-step process I used to normalize the database and resolve the performance issues. I used an online database design tool to visualize the design.

Original Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerName varchar

AccountManagerPhone varchar

Step 1: Separate the Account Managers information into a new table:

AccountManagers Table:

AccountManagerID int

AccountManagerName varchar

AccountManagerPhone varchar

Updated Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerID int

Step 2: Separate the Transactions information into a new table:

Transactions Table:

TransactionID int

ClientID int

TransactionDate date

ShippingAddress varchar

ShippingPhone varchar

PaymentMethod varchar

PaymentDetails varchar

Step 3: Separate the Transaction Line Items into a new table:

TransactionLineItems Table:

LineItemID int

TransactionID int

ProductID int

Quantity int

UnitPrice decimal

Step 4: Create a separate table for Products:

Products Table:

ProductID int

ProductName varchar

ProductDescription varchar

UnitPrice decimal

After these normalization steps, the database structure was much cleaner and more efficient. Here's how the relationships between the tables would look:

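AccountManagers --< Clients

Clients --< Transactions --< TransactionLineItems

Products --< TransactionLineItems

Here "A --< B" means one row in A can relate to many rows in B, matching the foreign keys listed above (Clients.AccountManagerID, Transactions.ClientID, TransactionLineItems.TransactionID, and TransactionLineItems.ProductID).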

By separating the data into these normalized tables, we eliminated data redundancy, improved data integrity, and made the database more scalable. The application's performance should now be significantly faster, as the database can efficiently retrieve and process the data it needs.
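For anyone who wants to try the design, here is a rough sketch of the four steps as a schema, run through Python's sqlite3 module. The column types, the NOT NULL choices, and the sample rows are my assumptions for the demo; only the table and column names come from the steps above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when asked

conn.executescript("""
    CREATE TABLE AccountManagers (
        AccountManagerID INTEGER PRIMARY KEY,
        AccountManagerName TEXT,
        AccountManagerPhone TEXT
    );
    CREATE TABLE Clients (
        ClientID INTEGER PRIMARY KEY,
        ClientName TEXT,
        ClientAddress TEXT,
        ClientPhone TEXT,
        ClientEmail TEXT,
        AccountManagerID INTEGER REFERENCES AccountManagers(AccountManagerID)
    );
    CREATE TABLE Products (
        ProductID INTEGER PRIMARY KEY,
        ProductName TEXT,
        ProductDescription TEXT,
        UnitPrice REAL
    );
    CREATE TABLE Transactions (
        TransactionID INTEGER PRIMARY KEY,
        ClientID INTEGER NOT NULL REFERENCES Clients(ClientID),
        TransactionDate TEXT,
        ShippingAddress TEXT,
        ShippingPhone TEXT,
        PaymentMethod TEXT,
        PaymentDetails TEXT
    );
    CREATE TABLE TransactionLineItems (
        LineItemID INTEGER PRIMARY KEY,
        TransactionID INTEGER NOT NULL REFERENCES Transactions(TransactionID),
        ProductID INTEGER NOT NULL REFERENCES Products(ProductID),
        Quantity INTEGER,
        UnitPrice REAL
    );
""")

# Each account manager is stored once and referenced by ID from Clients.
conn.execute("INSERT INTO AccountManagers VALUES (1, 'Dana Reyes', '555-0100')")
conn.execute(
    "INSERT INTO Clients (ClientID, ClientName, AccountManagerID) VALUES (1, 'Acme Ltd', 1)"
)

# A client pointing at a manager that does not exist is rejected by the foreign key.
try:
    conn.execute(
        "INSERT INTO Clients (ClientID, ClientName, AccountManagerID) VALUES (2, 'Orphan Inc', 99)"
    )
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

client_count = conn.execute("SELECT COUNT(*) FROM Clients").fetchone()[0]
print(orphan_rejected, client_count)
```

The foreign keys are what turn "improved data integrity" from a hope into something the database enforces.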

Conclusion

After a whirlwind week of wrestling with spreadsheets and SQL queries, the database normalization project was complete. I leaned back, took a deep breath, and admired my work.

The previously chaotic mess of data had been transformed into a sleek, efficient database structure. Redundant information was a thing of the past, and the performance was snappy.

I couldn't wait to show my boss the results. As I walked into her office, she looked up with a hopeful glint in her eye.

"Well, rookie," she began, "any progress on that database issue?"

I grinned. "Absolutely. Let me show you."

I pulled up the new database schema on her screen, walking her through each step of the normalization process. Her eyes widened with every explanation.

"Incredible! I never realized database design could be so... detailed," she exclaimed.

When I finished, she leaned back, a satisfied smile spreading across her face.

"Fantastic job, rookie. I knew you were the right person for this." She paused, then added, "I think this calls for a celebratory lunch. My treat. What do you say?"

I didn't need to be asked twice. As we headed out, a wave of pride and accomplishment washed over me. It had been hard work, but the payoff was worth it. Not only had I solved a critical issue for the business, but I'd also cemented my reputation as the go-to database guru.

From that day on, whenever performance issues or data management challenges cropped up, my boss would come knocking. And you know what? I didn't mind one bit. It was the perfect opportunity to flex my normalization muscles and keep that database running smoothly.

So, if you ever find yourself in a similar situation—a sluggish app, a tangled database, and a boss breathing down your neck—remember: normalization is your ally. Embrace the challenge, dive into the data, and watch your application transform into a lean, mean, performance-boosting machine.

And don't forget to ask your boss out for lunch. You've earned it!

8 notes

Text

Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets—whether it’s financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data.

Tableau, on the other hand:

Can process millions of rows without slowing down

Optimizes performance using advanced data engine technology

Supports real-time data streaming for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Organizations across many industries use Tableau to make data science more impactful and accessible. Here are some real-life scenarios:

A. Analytics for Health Care

Hospitals and research institutions use Tableau to:

Monitor patient recovery rates and predict outbreaks of diseases

Analyze hospital occupancy and resource allocation

Identify trends in patient demographics and treatment results

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.

#data science course#top data science course online#top data science institute online#artificial intelligence course#deepseek#tableau

3 notes

Text

SQL temp tables are valuable for storing temporary data during a session or transaction. Let's Explore:

https://madesimplemssql.com/sql-temp-table/

Please follow us on FB: https://www.facebook.com/profile.php?id=100091338502392

OR

Join our Group: https://www.facebook.com/groups/652527240081844

3 notes

Text

Unlock Success: MySQL Interview Questions with Olibr

Introduction

Preparing for a MySQL interview requires a deep understanding of database concepts, SQL queries, optimization techniques, and best practices. Olibr’s experts provide insightful answers to common MySQL interview questions, helping candidates showcase their expertise and excel in MySQL interviews.

1. What is MySQL, and how does it differ from other database management systems?

Olibr’s Expert Answer: MySQL is an open-source relational database management system (RDBMS) that uses SQL (Structured Query Language) for managing and manipulating databases. It differs from other DBMS platforms in its open-source nature, scalability, performance optimizations, and extensive community support.

2. Explain the difference between InnoDB and MyISAM storage engines in MySQL.

Olibr’s Expert Answer: InnoDB and MyISAM are two commonly used storage engines in MySQL. InnoDB is transactional and ACID-compliant, supporting features like foreign keys, row-level locking, and crash recovery. MyISAM, on the other hand, is non-transactional and uses table-level locking. It can be faster for some read-heavy workloads, but lacks features such as foreign keys and crash recovery.

3. What are indexes in MySQL, and how do they improve query performance?

Olibr’s Expert Answer: Indexes are data structures that improve query performance by allowing faster retrieval of rows based on indexed columns. They reduce the number of rows MySQL must examine when executing queries, speeding up data retrieval operations, and optimizing database performance.

4. Explain the difference between INNER JOIN and LEFT JOIN in MySQL.

Olibr’s Expert Answer: INNER JOIN and LEFT JOIN are SQL join types used to retrieve data from multiple tables. INNER JOIN returns rows where there is a match in both tables based on the join condition. LEFT JOIN returns all rows from the left table and matching rows from the right table, with NULL values for non-matching rows in the right table.
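A common follow-up in interviews is how to use those NULL values to find rows with no match at all. The sketch below shows the idea with Python's sqlite3 module standing in for MySQL (the join semantics are the same); the customers and orders tables are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total INTEGER);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
    INSERT INTO orders VALUES (10, 1, 50), (11, 1, 20), (12, 3, 70);
""")

# LEFT JOIN keeps every customer; customers without orders get NULL order columns,
# so filtering on "o.id IS NULL" leaves exactly the customers with no orders.
customers_without_orders = [
    row[0]
    for row in conn.execute("""
        SELECT c.name
        FROM customers c
        LEFT JOIN orders o ON o.customer_id = c.id
        WHERE o.id IS NULL
        ORDER BY c.name
    """)
]

print(customers_without_orders)
```

An INNER JOIN cannot answer this question, because unmatched customers never reach the WHERE clause.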

5. What are the advantages of using stored procedures in MySQL?

Olibr’s Expert Answer: Stored procedures in MySQL offer several advantages, including improved performance due to reduced network traffic, enhanced security by encapsulating SQL logic, code reusability across applications, easier maintenance and updates, and centralized database logic execution.

Conclusion

By mastering these MySQL interview questions and understanding Olibr’s expert answers, candidates can demonstrate their proficiency in MySQL database management, query optimization, and best practices during interviews. Olibr’s insights provide valuable guidance for preparing effectively, showcasing skills, and unlocking success in MySQL-related roles.

2 notes

Text

SQL Temporary Table | Temp Table | Global vs Local Temp Table

Q01. What is a Temp Table or Temporary Table in SQL? Q02. Is a duplicate Temp Table name allowed? Q03. Can a Temp Table be used for SELECT INTO or INSERT EXEC statement? Q04. What are the different ways to create a Temp Table in SQL? Q05. What is the difference between Local and Global Temporary Table in SQL? Q06. What is the storage location for the Temp Tables? Q07. What is the difference between a Temp Table and a Derived Table in SQL? Q08. What is the difference between a Temp Table and a Common Table Expression in SQL? Q09. How many Temp Tables can be created with the same name? Q10. How many users or who can access the Temp Tables? Q11. Can you create an Index and Constraints on the Temp Table? Q12. Can you apply Foreign Key constraints to a temporary table? Q13. Can you use the Temp Table before declaring it? Q14. Can you use the Temp Table in the User-Defined Function (UDF)? Q15. If you perform an Insert, Update, or delete operation on the Temp Table, does it also affect the underlying base table? Q16. Can you TRUNCATE the temp table? Q17. Can you insert the IDENTITY Column value in the temp table? Can you reset the IDENTITY Column of the temp table? Q18. Is it mandatory to drop the Temp Tables after use? How can you drop the temp table in a stored procedure that returns data from the temp table itself? Q19. Can you create a new temp table with the same name after dropping the temp table within a stored procedure? Q20. Is there any transaction log created for the operations performed on the Temp Table? Q21. Can you use explicit transactions on the Temp Table? Does the Temp Table hold a lock? Does a temp table create Magic Tables? Q22. Can a trigger access the temp tables? Q23. Can you access a temp table created by a stored procedure in the same connection after executing the stored procedure? Q24. Can a nested stored procedure access the temp table created by the parent stored procedure? Q25. Can you ALTER the temp table? Can you partition a temp table? 
Q26. Which collation is used for a Temp Table: that of the database in which it is executing, or that of tempdb? What is a collation conflict error and how can you resolve it? Q27. What is a Contained Database? How does it affect the Temp Table in SQL? Q28. Can you create a column with user-defined data types (UDDT) in the temp table? Q29. How many concurrent users can access a stored procedure that uses a temp table? Q30. Can you pass a temp table to the stored procedure as a parameter?

#sqlinterview#sqltemptable#sqltemporarytable#sqltemtableinterview#techpointinterview#techpointfundamentals#techpointfunda#techpoint#techpointblog

4 notes


Text

Multiple Transaction Log Files Per Database?

Learn when to use multiple transaction log files per SQL Server database and the pros and cons.

Are you wondering whether it’s a good idea to have more than one transaction log file per database in SQL Server? In this article, we’ll explore the pros and cons of using multiple log files and explain when it can make sense. We’ll also show you how to remove extra log files if needed. By the end, you’ll have a clear understanding of SQL Server transaction log best practices. What are…

#database performance#multiple log files#shrink transaction log#sql server transaction log#transaction log best practices

0 notes

Text

I'm back in school! Kind of

I took a year off to focus on finding and applying to internships + a little mental health break. But I needed to enroll this semester to keep my status as a student, so I am taking only 1 class hehe

I'm taking Principles of Data Management, so I'll be learning data management with relational databases, SQL, queries, data transactions, data security, etc. I'm excited! I've been meaning to learn more abt SQL and databases as I think it would be really helpful in a few of my projects, but it's such a wide topic so it's a bit difficult to cover on your own without guidance.

Since this is my only class right now, I'll be able to really focus on assignments and studying, and hopefully be able to incorporate my learnings into existing or new projects.

cheers to everyone starting the new semester ✩₊˚⊹⸜(˃ ᵕ ˂ )⸝

5 notes


Text

In today’s digital era, database performance is critical to the overall speed, stability, and scalability of modern applications. Whether you're running a transactional system, an analytics platform, or a hybrid database structure, maintaining optimal performance is essential to ensure seamless user experiences and operational efficiency.

In this blog, we'll explore effective strategies to improve database performance, reduce latency, and support growing data workloads without compromising system reliability.

1. Optimize Queries and Use Prepared Statements

Poorly written SQL queries are often the root cause of performance issues. Long-running or unoptimized queries can hog resources and slow down the entire system. Developers should focus on:

Using EXPLAIN plans to analyze query execution paths

Avoiding unnecessary columns or joins

Reducing the use of SELECT *

Applying appropriate filters and limits

Prepared statements can also boost performance by reducing parsing overhead and improving execution times for repeated queries.
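As a rough illustration of the two ideas above, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, its columns, and the sample data are all made up for the example, and other engines expose their plans differently (for instance via `EXPLAIN` or `EXPLAIN ANALYZE`), so treat this as one possible way to look at a plan rather than a universal recipe.

```python
import sqlite3

# In-memory SQLite database; the table and its columns are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

# Parameterized query: named columns instead of SELECT *, a filter, and a limit.
# The SQL text stays constant and only the bound value changes between calls.
QUERY = "SELECT id, total FROM orders WHERE customer_id = ? LIMIT 10"


def query_plan(sql, params):
    """Return the human-readable steps of SQLite's plan for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]  # the fourth column holds the detail text


# Without an index on customer_id, the plan reports a full table scan.
plan = query_plan(QUERY, (42,))

# Re-running the same statement text with different parameters.
results = {cid: conn.execute(QUERY, (cid,)).fetchall() for cid in (1, 42, 99)}
```

Because the statement text never changes, the driver can keep reusing the compiled statement instead of parsing new SQL for every customer, and binding values as parameters also avoids building queries through string concatenation.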

2. Leverage Indexing Strategically

Indexes are powerful tools for speeding up data retrieval, but improper use can lead to overhead during insert and update operations. Indexes should be:

Applied selectively to frequently queried columns

Monitored for usage and dropped if rarely used

Regularly maintained to avoid fragmentation

Composite indexes can also be useful when multiple columns are queried together.
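To make the composite-index point concrete, the following sketch (again with `sqlite3`, and with a hypothetical `events` table and index name) compares the query plan before and after adding an index on the two columns that are filtered together.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table used only for this illustration.
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT, payload TEXT)"
)
conn.executemany(
    "INSERT INTO events (user_id, kind, payload) VALUES (?, ?, ?)",
    [(i % 50, "click" if i % 2 else "view", "x") for i in range(2000)],
)

SQL = "SELECT payload FROM events WHERE user_id = ? AND kind = ?"


def plan(sql, params):
    """Join the plan's detail strings into one line for easy comparison."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))


before = plan(SQL, (7, "click"))

# Composite index covering both filter columns, in the order they are queried.
conn.execute("CREATE INDEX idx_events_user_kind ON events (user_id, kind)")

after = plan(SQL, (7, "click"))
```

Printing `before` and `after` shows the plan switching from a table scan to a search that uses `idx_events_user_kind`. The trade-off mentioned above still applies: every insert or update on `events` now has to maintain that index as well.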

3. Implement Query Caching

Query caching can drastically reduce response times for frequent reads. By storing the results of expensive queries temporarily, you avoid reprocessing the same query multiple times. However, it's important to:

Set appropriate cache lifetimes

Avoid caching volatile or frequently changing data

Clear or invalidate cache when updates occur

Database proxy tools can help with intelligent query caching at the SQL layer.
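The three caching rules above can be sketched as a small in-process cache. This is an assumption-heavy toy, not how any particular proxy or database implements caching: the `QueryCache` class, its method names, and the "clear everything on write" policy are invented for illustration.

```python
import time


class QueryCache:
    """Tiny in-process cache keyed by (sql, params) with a time-to-live."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so the TTL can be tested
        self._store = {}            # key -> (stored_at, result)
        self.hits = 0
        self.misses = 0

    def get_or_run(self, sql, params, run):
        """Return a fresh cached result, or call run(sql, params) and cache it."""
        key = (sql, tuple(params))
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        result = run(sql, params)
        self._store[key] = (now, result)
        return result

    def invalidate(self):
        """Drop every cached result; call this after writes to the underlying data."""
        self._store.clear()
```

A caller would wrap reads in `cache.get_or_run(sql, params, run_query)` and call `cache.invalidate()` after any insert, update, or delete. Clearing the whole cache is the bluntest possible invalidation strategy; a finer-grained design would track which tables each cached query depends on.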

4. Use Connection Pooling

Establishing database connections repeatedly consumes both time and resources. Connection pooling allows applications to reuse existing database connections, improving:

Response times

Resource management

Scalability under load

Connection pools can be fine-tuned based on application traffic patterns to ensure optimal throughput.
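For readers who have not seen one, a connection pool can be sketched in a few lines. The `ConnectionPool` class below is a hypothetical, simplified example built on `sqlite3` and `queue.Queue`; production applications would normally rely on the pooling built into their driver, framework, or a dedicated proxy instead.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Fixed-size pool that hands out already-open connections."""

    def __init__(self, database, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets a pooled connection move between threads.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout=5.0):
        """Borrow a connection; raises queue.Empty if none frees up in time."""
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return the connection to the pool

    def close(self):
        """Close every idle connection."""
        while not self._idle.empty():
            self._idle.get_nowait().close()
```

Usage looks like `with pool.connection() as conn: conn.execute(...)`. The pool size and the borrow timeout are the two knobs that correspond to the tuning mentioned above: too small a pool makes requests wait, while too large a pool can overwhelm the database.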

5. Partition Large Tables

Large tables with millions of records can suffer from slow read and write performance. Partitioning breaks these tables into smaller, manageable segments based on criteria like range, hash, or list. This helps:

Speed up query performance

Reduce index sizes

Improve maintenance tasks such as vacuuming or archiving

Partitioning also simplifies data retention policies and backup processes.
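Engines such as PostgreSQL, MySQL, and SQL Server provide declarative partitioning, and that is what you would normally use. SQLite has no such feature, so the sketch below imitates range partitioning by hand, routing rows into one table per year. The `orders_<year>` naming scheme and all function names are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")


def partition_name(year):
    """Build the table name for a yearly partition (hypothetical naming scheme)."""
    return f"orders_{int(year)}"  # int() keeps arbitrary text out of the table name


def ensure_partition(year):
    conn.execute(
        f"CREATE TABLE IF NOT EXISTS {partition_name(year)} "
        "(id INTEGER, order_date TEXT, total REAL)"
    )


def insert_order(order_id, order_date, total):
    """Route a row to its partition based on the year of an ISO date string."""
    year = order_date[:4]
    ensure_partition(year)
    conn.execute(
        f"INSERT INTO {partition_name(year)} VALUES (?, ?, ?)",
        (order_id, order_date, total),
    )


def totals_for_year(year):
    """Aggregate over a single partition instead of the whole data set."""
    ensure_partition(year)
    return conn.execute(
        f"SELECT COUNT(*), SUM(total) FROM {partition_name(year)}"
    ).fetchone()


def drop_year(year):
    """Retention policy: removing a whole year is a cheap DROP TABLE."""
    conn.execute(f"DROP TABLE IF EXISTS {partition_name(year)}")
```

The last function shows why partitioning helps with retention: expiring old data becomes a metadata operation on one partition rather than a large `DELETE` against a single huge table.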

6. Monitor Performance Metrics Continuously

Database monitoring tools are essential to track performance metrics in real time. Key indicators to watch include:

Query execution time

Disk I/O and memory usage

Cache hit ratios

Lock contention and deadlocks

Proactive monitoring helps identify bottlenecks early and prevents system failures before they escalate.
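Dedicated monitoring tools cover all of these metrics, but the first one, query execution time, is easy to sketch at the application level. The `TimedConnection` wrapper and its threshold parameter below are hypothetical names for illustration, using `sqlite3` as the stand-in database.

```python
import sqlite3
import time


class TimedConnection:
    """Wraps a connection and records how long each statement takes."""

    def __init__(self, conn, slow_threshold_s):
        self.conn = conn
        self.slow_threshold_s = slow_threshold_s
        self.timings = []  # list of (sql, elapsed_seconds)

    def execute(self, sql, params=()):
        start = time.perf_counter()
        rows = self.conn.execute(sql, params).fetchall()
        elapsed = time.perf_counter() - start
        self.timings.append((sql, elapsed))
        return rows

    def slow_queries(self):
        """Statements whose elapsed time met or exceeded the threshold."""
        return [(sql, t) for sql, t in self.timings if t >= self.slow_threshold_s]


monitored = TimedConnection(sqlite3.connect(":memory:"), slow_threshold_s=0.5)
monitored.execute("CREATE TABLE t (x INTEGER)")
monitored.execute("INSERT INTO t VALUES (?)", (1,))
rows = monitored.execute("SELECT x FROM t")
```

In a real system the recorded timings would be shipped to a metrics backend rather than kept in a list, and the database's own statistics views would supply the I/O, cache, and lock metrics that an application-side wrapper cannot see.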

7. Ensure Hardware and Infrastructure Support

While software optimization is key, underlying infrastructure also plays a significant role. Ensure your hardware supports current workloads by:

Using SSDs for faster data access

Scaling vertically (more RAM/CPU) or horizontally (sharding) as needed

Optimizing network latency for remote database connections

Cloud-native databases and managed services also offer built-in scaling options for dynamic workloads.

8. Regularly Update and Tune the Database Engine

Database engines release frequent updates to fix bugs, enhance performance, and introduce new features. Keeping your database engine up-to-date ensures:

Better performance tuning options

Improved security

Compatibility with modern application architectures

Additionally, fine-tuning engine parameters like buffer sizes, parallel execution, and timeout settings can significantly enhance throughput.

0 notes

Text

Lead Software engineer - React JS / Angular, Java and Spring and SQL

Our Purpose: Mastercard powers economies and empowers people in 200+ countries and territories worldwide. Together with our customers, we’re helping build a sustainable economy where everyone can prosper. We support a wide range of digital payments choices, making transactions secure, simple, smart and accessible. Our technology and innovation, partnerships and networks combine to deliver a unique…

0 notes

Text

Learn Data Analytics: Grow Your Skills, Build a Truthful Future

In a world that's increasingly shaped by information, being able to understand and interpret data isn't just a specialized skill anymore; it's a crucial ability for navigating today’s landscape. At its heart, data analytics involves digging into raw data to uncover meaningful patterns, draw insightful conclusions, and guide decision-making. When individuals get a handle on this discipline, they can turn those raw numbers into actionable insights, paving the way for a more predictable and 'truthful' future for themselves and the organizations they work with. This article dives into the compelling reasons to learn data analytics, highlighting the key skills involved and how they help build a future rooted in verifiable facts.

The Foundational Power of Data Literacy

At the heart of a data-driven future lies data literacy – the ability to read, understand, create, and communicate data as information. This fundamental understanding is the first step towards leveraging analytics effectively. Without it, individuals and businesses risk making decisions based on intuition or outdated information, which can lead to missed opportunities and significant errors.

Understanding Data's Language

Learning data analytics begins with grasping how data is generated and structured. This involves:

Data Sources: Recognizing where data comes from, whether it's from website clicks, sales transactions, sensor readings, or social media interactions.

Data Types: Differentiating between numerical, categorical, textual, and temporal data, as each requires different analytical approaches.

Data Quality: Appreciating the importance of clean, accurate, and complete data. Flawed data inevitably leads to flawed conclusions, rendering efforts useless.

Essential Skills for Data Analytics Growth

To truly build a "truthful future" through data, a blend of technical proficiency, analytical thinking, and effective communication is required.

Technical Proficiencies

The journey into data analytics necessitates acquiring specific technical skills:

Statistical Foundations: A solid understanding of statistical concepts (e.g., probability, hypothesis testing, regression) is crucial for interpreting data accurately and building robust models.

Programming Languages: Python and R are the industry standards. They offer powerful libraries for data manipulation, statistical analysis, machine learning, and visualization. Proficiency in at least one of these is non-negotiable.

Database Management: SQL (Structured Query Language) skills are vital for querying, extracting, and managing data from relational databases, which are the backbone of many business operations.

Data Visualization Tools: Tools like Tableau, Power BI, or Qlik Sense enable analysts to transform complex datasets into intuitive charts, graphs, and dashboards, making insights accessible to non-technical audiences.
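To show how the SQL, programming, and statistics skills listed above fit together, here is a small sketch that pulls rows out of a database with SQL and fits a least-squares line in plain Python. The `sales` table and its numbers are invented purely for illustration; in practice an analyst would more likely reach for libraries such as pandas or statsmodels.

```python
import sqlite3
from statistics import mean

# Made-up example data: monthly ad spend and revenue (arbitrary units).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (month INTEGER, ad_spend REAL, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, 1.0, 3.0), (2, 2.0, 5.0), (3, 3.0, 7.0), (4, 4.0, 9.0)],
)

# Step 1 (SQL): extract only the columns needed for the analysis.
rows = conn.execute("SELECT ad_spend, revenue FROM sales ORDER BY month").fetchall()
xs = [r[0] for r in rows]
ys = [r[1] for r in rows]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Step 2 (statistics): estimate how revenue moves with ad spend.
slope, intercept = fit_line(xs, ys)
```

With this toy data the fitted line is exact, which real data never is; the point is only the workflow of querying first and then modeling.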

Analytical Thinking and Problem-Solving

Beyond tools, the analytical mindset is paramount. This involves:

Critical Thinking: The ability to question assumptions, identify biases, and evaluate the validity of data and its interpretations.

Problem Framing: Defining business problems clearly and translating them into analytical questions that can be answered with data.

Pattern Recognition: The knack for identifying trends, correlations, and anomalies within datasets that might not be immediately obvious.

Communication Skills

Even the most profound data insights are useless if they cannot be effectively communicated.

Storytelling with Data: Presenting findings in a compelling narrative that highlights key insights and their implications for decision-making.

Stakeholder Management: Understanding the needs and questions of different audiences (e.g., executives, marketing teams, operations managers) and tailoring presentations accordingly.

Collaboration: Working effectively with cross-functional teams to integrate data insights into broader strategies.

Building a "Truthful Future": Applications of Data Analytics

The skills acquired in data analytics empower individuals to build a future grounded in verifiable facts, impacting various domains.

Business Optimization

In the corporate world, data analytics helps to:

Enhance Customer Understanding: By analyzing purchasing habits, browsing behavior, and feedback, businesses can create personalized experiences and targeted marketing campaigns.

Improve Operational Efficiency: Data can reveal bottlenecks in supply chains, optimize resource allocation, and predict equipment failures, leading to significant cost savings.

Drive Strategic Decisions: Whether it's market entry strategies, product development, or pricing models, analytics provides the evidence base for informed choices, reducing risk and increasing profitability.

Personal Empowerment

Data analytics isn't just for corporations; it can profoundly impact individual lives:

Financial Planning: Tracking spending patterns, identifying savings opportunities, and making informed investment decisions.

Health and Wellness: Analyzing fitness tracker data, sleep patterns, and dietary information to make healthier lifestyle choices.

Career Advancement: Understanding job market trends, in-demand skills, and salary benchmarks to strategically plan career moves and upskilling efforts.

Societal Impact

On a broader scale, data analytics contributes to a more 'truthful' and efficient society:

Public Policy: Governments use data to understand demographic shifts, optimize public services (e.g., transportation, healthcare), and allocate resources effectively.

Scientific Discovery: Researchers analyze vast datasets in fields like genomics, astronomy, and climate science to uncover new knowledge and accelerate breakthroughs.

Urban Planning: Cities leverage data from traffic sensors, public transport usage, and environmental monitors to design more sustainable and livable urban environments.

The demand for skilled data analytics professionals continues to grow across the nation, from the vibrant tech hubs to emerging industrial centers. For those looking to gain a comprehensive and practical understanding of this field, pursuing dedicated training is a highly effective path. Many individuals choose programs that offer hands-on experience and cover the latest tools and techniques. For example, a well-regarded Data analytics training course in Noida, along with similar opportunities in Kanpur, Ludhiana, Moradabad, Delhi, and other cities across India, provides the necessary foundation for a successful career. These courses are designed to equip students with the skills required to navigate and contribute to the data-driven landscape.

Conclusion

Learning data analytics goes beyond just picking up technical skills; it’s really about developing a mindset that looks for evidence, values accuracy, and inspires thoughtful action. By honing these vital abilities, people can not only grasp the intricacies of our digital landscape but also play an active role in shaping a future that’s more predictable, efficient, and fundamentally rooted in truth. In a world full of uncertainties, data analytics provides a powerful perspective that helps us find clarity and navigate a more assured path forward.

1 note
