#table auditing in oracle database


Firebird to Oracle Migration – Ask On Data

In this article, we explore the complexities and best practices involved in Firebird to Oracle Migration. As organizations grow and their data needs evolve, transitioning to a robust and scalable database system like Oracle becomes imperative. We will guide you through the entire migration process, highlighting potential challenges and offering practical solutions to ensure a seamless transition. From initial planning and data mapping to execution and post-migration optimization, this comprehensive guide aims to equip you with the knowledge and tools needed for a successful Firebird to Oracle migration.

What is Firebird

Firebird is an advanced open-source relational database management system renowned for its efficiency and reliability. It supports a wide range of platforms, including Windows, Linux, and macOS, offering seamless cross-platform capabilities. With features like multi-generational architecture, SQL compliance, and robust security, Firebird caters to both small applications and large enterprise solutions. Its lightweight footprint and minimal maintenance requirements make it an attractive option for developers and businesses seeking a cost-effective yet powerful database solution. Dive into the capabilities of Firebird and discover why it remains a popular choice in the database community.

What is Oracle

Oracle is a leading relational database management system known for its robust performance, scalability, and comprehensive feature set. It provides unparalleled reliability and security, making it a preferred choice for enterprises handling large volumes of data and complex transactions. With support for advanced SQL features, PL/SQL programming, and extensive data analytics, Oracle enables organizations to optimize their data management and gain actionable insights. Its high availability and disaster recovery solutions ensure business continuity, while its integration capabilities allow seamless connectivity with various applications and systems. Discover how Oracle can transform your data infrastructure and drive your business forward.

Advantages of Firebird to Oracle Migration

Enhanced Security: Oracle offers advanced data encryption, access controls, and auditing capabilities.

Superior Performance Optimization: Oracle's query optimization and performance tuning tools ensure efficient data processing.

High Availability and Disaster Recovery: Oracle provides robust solutions to ensure business continuity and minimize downtime.

Scalability: Oracle supports seamless scalability to handle growing data volumes and transaction loads.

Comprehensive Analytics: Oracle's analytics and reporting tools offer deep insights for informed decision-making.

Broad Ecosystem and Support: Oracle's extensive ecosystem and support services provide access to expert resources and third-party integrations.

Method 1: Migrating Data from Firebird to Oracle Using the Manual Method

Data Export: Export data from Firebird using tools like isql or custom scripts to extract data in a suitable format.

Data Transformation: Transform the exported data to match Oracle's schema and data types, ensuring compatibility.

Schema Creation: Manually create the corresponding tables, indexes, and constraints in Oracle to mirror the Firebird schema.
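To illustrate the transformation and schema-creation steps, the following Python sketch maps common Firebird column types to Oracle types and builds a `CREATE TABLE` statement. The mapping table, the function names (`map_type`, `create_table_ddl`), and choices such as `TIME` to `TIMESTAMP` and `BOOLEAN` to `NUMBER(1)` are assumptions for illustration. Verify them against your own schema and Oracle version.

```python
import re

# Assumed, simplified Firebird -> Oracle type mapping; review it for your schema.
SIMPLE_TYPES = {
    "SMALLINT": "NUMBER(5)",
    "INTEGER": "NUMBER(10)",
    "BIGINT": "NUMBER(19)",
    "FLOAT": "BINARY_FLOAT",
    "DOUBLE PRECISION": "BINARY_DOUBLE",
    "DATE": "DATE",
    "TIME": "TIMESTAMP",         # Oracle has no TIME type (assumed choice)
    "TIMESTAMP": "TIMESTAMP",
    "BOOLEAN": "NUMBER(1)",      # Firebird 3+ BOOLEAN stored as 0/1 (assumed)
    "BLOB SUB_TYPE TEXT": "CLOB",
    "BLOB": "BLOB",
}


def map_type(firebird_type: str) -> str:
    """Translate one Firebird column type into an Oracle column type."""
    t = " ".join(firebird_type.upper().split())  # normalize case and spacing
    if t in SIMPLE_TYPES:
        return SIMPLE_TYPES[t]
    m = re.fullmatch(r"(?:NUMERIC|DECIMAL)\((\d+)(?:,\s*(\d+))?\)", t)
    if m:
        return f"NUMBER({m.group(1)},{m.group(2) or 0})"
    m = re.fullmatch(r"VARCHAR\((\d+)\)", t)
    if m:
        length = int(m.group(1))
        # VARCHAR2 is capped at 4000 bytes by default; longer text goes to CLOB.
        # Byte vs. character length semantics also need review for multibyte data.
        return f"VARCHAR2({length})" if length <= 4000 else "CLOB"
    m = re.fullmatch(r"CHAR\((\d+)\)", t)
    if m:
        return f"CHAR({m.group(1)})"
    raise ValueError(f"No mapping defined for Firebird type: {firebird_type!r}")


def create_table_ddl(table: str, columns: list) -> str:
    """Build an Oracle CREATE TABLE statement from (name, firebird_type) pairs."""
    body = ",\n".join(f"    {name} {map_type(fb_type)}" for name, fb_type in columns)
    return f"CREATE TABLE {table} (\n{body}\n)"
```

Constraints, indexes, and Firebird generators (which usually become Oracle sequences or identity columns) still need to be handled separately.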

Data Import: Use Oracle tools like SQL*Loader or direct SQL inserts to import the transformed data into Oracle.
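For the import step, SQL*Loader reads a control file that describes the input file and the target columns. The sketch below generates such a control file for a comma-separated export. The table name, file name, and date mask are hypothetical, and `APPEND` assumes you are adding rows to a table created in the previous step.

```python
def sqlldr_control_file(table, csv_path, columns, date_formats=None):
    """Return SQL*Loader control-file text for a comma-separated export file.

    date_formats maps a column name to an Oracle date mask,
    e.g. {"created_at": "YYYY-MM-DD"}.
    """
    date_formats = date_formats or {}
    fields = []
    for col in columns:
        if col in date_formats:
            # Tell SQL*Loader how to parse this column's text into a DATE.
            fields.append(f'{col} DATE "{date_formats[col]}"')
        else:
            fields.append(col)
    return "\n".join([
        "LOAD DATA",
        f"INFILE '{csv_path}'",
        "APPEND",                    # keep existing rows in the target table
        f"INTO TABLE {table}",
        "FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"'",
        "TRAILING NULLCOLS",         # missing trailing fields load as NULL
        "(" + ", ".join(fields) + ")",
    ])
```

You would then save the output as, say, `customers.ctl` and run something like `sqlldr userid=<user>@<service> control=customers.ctl log=customers.log`, with the placeholders replaced by your own connection details.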

Validation: Validate the migrated data by comparing record counts and sample data between Firebird and Oracle.
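Here is a minimal validation sketch, assuming both databases are reachable through Python DB-API connections. It compares row counts and a SHA-256 checksum of each table's rows, ordered by a key column. The function names are hypothetical. The checksum only matches if both drivers return the same Python types and column order, so differences such as `Decimal` vs `int` or differing date types would need normalizing first.

```python
import hashlib


def table_fingerprint(conn, table, key_column, batch_size=1000):
    """Return (row_count, sha256_hex) for all rows of a table, ordered by key_column."""
    cur = conn.cursor()
    # Identifiers cannot be bound as parameters, so only pass trusted names here.
    cur.execute(f"SELECT * FROM {table} ORDER BY {key_column}")
    digest = hashlib.sha256()
    count = 0
    while True:
        rows = cur.fetchmany(batch_size)  # stream rows instead of loading them all
        if not rows:
            break
        for row in rows:
            # Assumes both drivers return identical Python types for each column.
            digest.update(repr(tuple(row)).encode("utf-8"))
            count += 1
    return count, digest.hexdigest()


def compare_tables(source_conn, target_conn, tables):
    """Return names of tables whose count or checksum differs between databases.

    tables maps table name -> key column used for a deterministic row order.
    """
    mismatched = []
    for table, key_column in tables.items():
        source = table_fingerprint(source_conn, table, key_column)
        target = table_fingerprint(target_conn, table, key_column)
        if source != target:
            mismatched.append(table)
    return mismatched
```

An empty result means every listed table matched on both count and content.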

Testing: Perform thorough testing to ensure that the data migration is successful and that applications function correctly with the new Oracle database.

Disadvantages of Migrating Data from Firebird to Oracle Using the Manual Method

High error risk due to extensive manual effort

Data transformation is difficult to achieve

Dependency on technical resources

No Automation

Limited Scalability

The same work has to be repeated for every table

No automated error handling or notifications

No automated rollback in case of failure

No automated, direct way to view logs or track the amount of data transferred

No automated, direct way to set up incremental loads (Change Data Capture)
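To make the incremental-load point concrete, here is a minimal high-watermark sketch. Each run selects only rows whose change-tracking column is greater than the last value seen, then records the new maximum. This is a simpler technique than log-based change data capture. It assumes a reliable, increasing column such as a hypothetical `updated_at`, and it does not detect deletes. The `?` placeholder follows the qmark paramstyle used by Python's `sqlite3`; other drivers (python-oracledb, for instance) use `:name` or `:1` binds instead.

```python
def incremental_extract(conn, table, watermark_column, last_watermark):
    """Return (changed_rows, new_watermark) for rows newer than last_watermark.

    High-watermark pattern: assumes watermark_column only ever increases when
    a row is inserted or updated. Deleted rows are not detected.
    """
    cur = conn.cursor()
    # Trusted identifiers only; the watermark value itself is a bound parameter.
    cur.execute(
        f"SELECT * FROM {table} WHERE {watermark_column} > ? ORDER BY {watermark_column}",
        (last_watermark,),
    )
    rows = cur.fetchall()
    if not rows:
        return rows, last_watermark
    columns = [d[0].lower() for d in cur.description]
    position = columns.index(watermark_column.lower())
    return rows, rows[-1][position]  # rows are sorted, so the last holds the max
```

The returned watermark should be persisted only after the load succeeds. Rows that share the boundary value or commit late can still be missed, which is why dedicated CDC tools read the database's transaction log instead.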

Method 2: Migrating Data from Firebird to Oracle Using ETL Tools

ETL tools offer several advantages:

Data Extraction: ETL tools can automatically extract data from Firebird using connectors or custom queries, simplifying the extraction process.

Data Transformation: The ETL process allows for automated transformation of data, including converting data types, cleaning, and restructuring data to match Oracle's schema.

Data Loading: The ETL tools efficiently load transformed data into Oracle, ensuring compatibility with the target database's structure.

Error Handling: Most ETL tools include error handling features, which automatically detect and log issues during migration, reducing the risk of data corruption.

Automation and Scheduling: ETL tools offer the ability to automate and schedule migration tasks, saving time and reducing manual intervention during the process.

Scalability and Efficiency: ETL tools are designed to handle large volumes of data, making them more scalable and efficient than manual methods, especially for ongoing migrations or large datasets.
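The error-handling and logging behavior described above can be sketched in a few lines of Python. Rows that fail transformation are logged and set aside instead of aborting the run, and a summary of counts is returned. The `run_etl` function and its callable arguments are hypothetical stand-ins for what a real ETL tool configures for you.

```python
import logging

logger = logging.getLogger("etl")


def run_etl(extract, transform, load):
    """Extract rows, transform each one, load the good rows, and log the bad ones.

    extract:   callable returning an iterable of source rows
    transform: callable mapping one row to a target row (may raise)
    load:      callable receiving the list of transformed rows
    Returns a run summary, similar to the metadata ETL tools report.
    """
    loaded, rejected = [], []
    for row in extract():
        try:
            loaded.append(transform(row))
        except (ValueError, KeyError, TypeError) as exc:
            # Record the failure and keep going instead of failing the whole run.
            logger.warning("Rejected row %r: %s", row, exc)
            rejected.append(row)
    load(loaded)
    return {
        "extracted": len(loaded) + len(rejected),
        "loaded": len(loaded),
        "rejected": len(rejected),
    }
```

In practice, the rejected rows would also be written to an error table or file so they can be corrected and reprocessed.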

Challenges of Using ETL Tools for Data Migration:

Complex Setup and Configuration for on-premises deployments

Steep learning curve to use these tools

Dependency on highly technical resources (data engineers) to do this kind of work

Cost

Scalability Issues

Limited Customization

Maintenance Overhead

Why Ask On Data is the Best Tool for Migrating Data from Firebird to Oracle

User-Friendly Interface: Ask On Data offers an intuitive interface that simplifies the migration process, making it easy for users of all skill levels.

Seamless Integration: The tool connects smoothly with both Firebird and Oracle, ensuring a hassle-free data transfer without complicated setups.

Automated Data Transformation: It automatically transforms and cleans your data, reducing the risk of errors and saving you time during migration.

Real-Time Monitoring: Ask On Data provides real-time monitoring of the migration process, allowing you to track progress and quickly address any issues.

Cost-Effective Solution: With a flexible pricing model, Ask On Data helps you manage migration costs without sacrificing quality or performance.

Using Ask On Data: A Chat-Based, AI-Powered Data Engineering Tool

Ask On Data is the world’s first chat-based, AI-powered data engineering tool. It is available as a free open-source version as well as a paid version. The free open-source version can be downloaded from GitHub and deployed on your own servers, whereas the enterprise version lets you use Ask On Data as a managed service.

Advantages of using Ask On Data

Built using advanced AI and LLMs, so there is no learning curve.

Simply type your instructions to perform the required tasks, such as cleaning, wrangling, transforming, and loading data.

No dependence on technical resources

Super fast to implement (at the speed of typing)

No technical knowledge required to use

The steps for the data migration are as follows:

Step 1: Connect to Firebird (which acts as the source).

Step 2: Connect to Oracle (which acts as the target).

Step 3: Create a new job. Select your source (Firebird) and select the tables you would like to migrate.

Step 4 (OPTIONAL): If you would like to perform other tasks, such as data type conversion, data cleaning, transformations, or calculations, you can give those instructions in plain English. No knowledge of SQL, Python, Spark, etc. is required.

Step 5: Orchestrate/schedule the job. When scheduling, you can run it as a one-time load, a change data capture (CDC) load, a truncate-and-load, etc.

For more advanced users, Ask On Data also provides options to write SQL, edit YAML, write PySpark code, etc.

Other functionality, such as error logging, notifications, monitoring, and logs, provides more information, including the amount of data transferred, error details if a job did not run, and other monitoring information.

Trying Ask On Data

You can reach out to us at [email protected] for a demo, POC, discussion, and further pricing information. You can make use of our managed services, or you can download our community edition from GitHub and install it on your own servers.


Oracle Reporting for Financial Analysis: How to Generate Accurate and Insightful Reports

Oracle Reporting is a powerful tool that enables organizations to gain deep insights from their financial data. For finance professionals, generating accurate and insightful reports is crucial for decision-making, forecasting, and maintaining compliance. This article will guide you through the essential steps and best practices for leveraging Oracle Reporting in financial analysis.

Understanding Oracle Reporting

Oracle Reporting tools, such as Oracle Business Intelligence (OBI) and Oracle Analytics Cloud (OAC), provide robust functionalities for financial reporting. These tools allow finance teams to create, customize, and automate reports, ensuring that data is presented in a meaningful and actionable format. The flexibility and scalability of Oracle Reporting make it an ideal choice for organizations of all sizes.

Setting Up Financial Reports

The first step in generating accurate financial reports is setting up your reporting environment. This involves configuring data sources, defining report parameters, and ensuring data integrity.

Data Integration: Integrate your financial data from various sources such as ERP systems, CRM platforms, and external databases. Oracle’s Extract, Transform, Load (ETL) tools can help streamline this process, ensuring that all relevant data is available for reporting.

Data Validation: Ensure the accuracy and completeness of your data by implementing data validation checks. This step is critical to avoid discrepancies and ensure the reliability of your reports.

Defining Parameters: Define the parameters and filters that will be used in your reports. Parameters such as time periods, account codes, and organizational units help tailor the reports to specific needs and make them more relevant.
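As a small example of the data validation step, the sketch below checks journal rows for missing required fields and confirms that total debits equal total credits, a basic double-entry check. The field names in `REQUIRED_FIELDS` are assumptions about your data. `Decimal` is used to avoid floating-point rounding in currency amounts.

```python
from decimal import Decimal

# Hypothetical journal-line schema; adjust to your ledger extract.
REQUIRED_FIELDS = ("account_code", "period", "debit", "credit")


def validate_journal(rows):
    """Return a list of human-readable problems found in journal rows."""
    problems = []
    total_debit = total_credit = Decimal("0")
    for i, row in enumerate(rows):
        missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
            continue  # skip incomplete rows when totalling
        total_debit += Decimal(str(row["debit"]))
        total_credit += Decimal(str(row["credit"]))
    # In double-entry bookkeeping, total debits must equal total credits.
    if total_debit != total_credit:
        problems.append(f"debits {total_debit} != credits {total_credit}")
    return problems
```

An empty list means the batch passed these checks; a real setup would add checks such as valid account codes and open periods.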

Designing Financial Reports

Once your data is integrated and validated, the next step is designing the reports. A well-designed report should be clear, concise, and visually appealing.

Report Layout: Organize your report layout to highlight key financial metrics. Use tables, charts, and graphs to present data in an easily digestible format. Oracle Reporting tools offer various templates and design options to help you create professional-looking reports.

KPIs and Metrics: Identify the key performance indicators (KPIs) and financial metrics that are most important for your analysis. Common financial KPIs include revenue, expenses, profit margins, and cash flow. Display these metrics prominently in your reports to provide a quick overview of financial performance.

Data Visualization: Utilize data visualization techniques to enhance the readability and impact of your reports. Charts, graphs, and dashboards can help illustrate trends, comparisons, and anomalies in the data. Oracle’s data visualization capabilities allow you to create interactive and dynamic reports that provide deeper insights.
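Profit margin, one of the KPIs mentioned above, is commonly computed as net profit divided by revenue:

```latex
\text{Net profit margin} = \frac{\text{Revenue} - \text{Expenses}}{\text{Revenue}} \times 100\%
```

For example, with illustrative figures of 500,000 in revenue and 420,000 in expenses, the margin is 80,000 / 500,000 × 100% = 16%.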

Automating Financial Reporting

Automation is a key feature of Oracle Reporting that can save time and reduce errors in financial reporting.

Scheduled Reports: Use Oracle’s scheduling features to automate the generation and distribution of reports. You can set up reports to run at specific intervals (daily, weekly, monthly) and deliver them to stakeholders via email or a reporting portal.

Real-Time Reporting: Implement real-time reporting to provide up-to-date financial information. Real-time reporting is particularly useful for monitoring cash flow, budget compliance, and other time-sensitive metrics.

Alerts and Notifications: Set up alerts and notifications to inform stakeholders of critical changes or anomalies in the financial data. This proactive approach helps in quickly addressing issues and making informed decisions.

Ensuring Compliance and Accuracy

Maintaining compliance and accuracy in financial reporting is crucial for any organization. Oracle Reporting provides features to help you achieve this.

Audit Trails: Implement audit trails to track changes in financial data and report generation processes. This ensures transparency and accountability, which are essential for compliance with regulatory standards.

Data Security: Ensure the security of your financial data by implementing access controls and encryption. Oracle Reporting tools offer robust security features to protect sensitive information from unauthorized access.

Review and Validation: Regularly review and validate your financial reports to ensure accuracy. This involves cross-checking report data with source systems, verifying calculations, and conducting periodic audits.

Leveraging Advanced Analytics

Oracle Reporting goes beyond traditional financial reporting by offering advanced analytics capabilities.

Predictive Analytics: Utilize predictive analytics to forecast future financial performance. Oracle’s machine learning algorithms can analyze historical data to identify trends and predict future outcomes, helping in strategic planning and decision-making.

Scenario Analysis: Conduct scenario analysis to evaluate the impact of different business scenarios on financial performance. This helps in understanding potential risks and opportunities, allowing for better preparation and response.

Drill-Down Analysis: Use drill-down analysis to explore the underlying details of summary reports. This feature enables finance professionals to investigate specific transactions and gain deeper insights into the factors driving financial results.

Conclusion

Oracle Reporting is an indispensable tool for finance professionals seeking to generate accurate and insightful financial reports. By following best practices in data integration, report design, automation, compliance, and leveraging advanced analytics, you can transform your financial reporting process and make informed decisions that drive business success. Embrace the power of Oracle Reporting to unlock the full potential of your financial data.


Data Engineering Course in Hyderabad | AWS Data Engineer Training

Overview of AWS Data Modeling

Data modeling in AWS involves designing the structure of your data to effectively store, manage, and analyse it within the Amazon Web Services (AWS) ecosystem. AWS provides various services and tools that can be used for data modeling, depending on your specific requirements and use cases. Here's an overview of key components and considerations in AWS data modeling:

Understanding Data Requirements: Begin by understanding your data requirements, including the types of data you need to store, the volume of data, the frequency of data updates, and the anticipated usage patterns.

Selecting the Right Data Storage Service: AWS offers a range of data storage services suitable for different data modeling needs, including:

Amazon S3 (Simple Storage Service): A scalable object storage service ideal for storing large volumes of unstructured data such as documents, images, and logs.

Amazon RDS (Relational Database Service): Managed relational databases supporting popular database engines like MySQL, PostgreSQL, Oracle, and SQL Server.

Amazon Redshift: A fully managed data warehousing service optimized for online analytical processing (OLAP) workloads.

Amazon DynamoDB: A fully managed NoSQL database service providing fast and predictable performance with seamless scalability.

Amazon Aurora: A high-performance relational database compatible with MySQL and PostgreSQL, offering features like high availability and automatic scaling.

Schema Design: Depending on the selected data storage service, design the schema to organize and represent your data efficiently. This involves defining tables, indexes, keys, and relationships for relational databases or determining the structure of documents for NoSQL databases.
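For a NoSQL store such as DynamoDB, schema design mostly means choosing keys around your access patterns. The sketch below builds the arguments for a hypothetical `Orders` table whose partition key groups each customer's orders and whose sort key orders them by date. The table and attribute names are assumptions, and only key attributes need to be declared in `AttributeDefinitions`.

```python
def orders_table_definition(table_name="Orders"):
    """Build create_table arguments for a hypothetical DynamoDB Orders table.

    Partition key: customer_id (keeps each customer's orders together)
    Sort key:      order_date  (ISO-8601 strings sort chronologically)
    """
    return {
        "TableName": table_name,
        "KeySchema": [
            {"AttributeName": "customer_id", "KeyType": "HASH"},
            {"AttributeName": "order_date", "KeyType": "RANGE"},
        ],
        # Non-key attributes are schemaless and are not declared here.
        "AttributeDefinitions": [
            {"AttributeName": "customer_id", "AttributeType": "S"},
            {"AttributeName": "order_date", "AttributeType": "S"},
        ],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity
    }
```

With boto3 installed and credentials configured, the dictionary can be passed as `boto3.client("dynamodb").create_table(**orders_table_definition())`. A query on one `customer_id` with a date range on `order_date` then serves the "orders for a customer over time" access pattern.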

Data Ingestion and ETL: Plan how data will be ingested into your AWS environment and perform any necessary Extract, Transform, Load (ETL) operations to prepare the data for analysis. AWS provides services like AWS Glue for ETL tasks and AWS Data Pipeline for orchestrating data workflows.

Data Access Control and Security: Implement appropriate access controls and security measures to protect your data. Utilize AWS Identity and Access Management (IAM) for fine-grained access control and encryption mechanisms provided by AWS Key Management Service (KMS) to secure sensitive data.
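To illustrate fine-grained access control with IAM, the following sketch builds a least-privilege policy that allows reading objects only under one S3 prefix. The bucket and prefix names are hypothetical. If the objects are encrypted with SSE-KMS, the principal would additionally need `kms:Decrypt` on the relevant key.

```python
import json


def read_only_prefix_policy(bucket, prefix):
    """Least-privilege IAM policy: read and list objects under one S3 prefix only."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadPrefixObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                # ListBucket applies to the bucket ARN, narrowed by a prefix condition.
                "Sid": "ListPrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_prefix_policy("example-data-lake", "curated/sales"), indent=2))
```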

Data Processing and Analysis: Leverage AWS services for data processing and analysis tasks, such as:

Amazon EMR (Elastic MapReduce): Managed Hadoop framework for processing large-scale data sets using distributed computing.

Amazon Athena: Serverless query service for analysing data stored in Amazon S3 using standard SQL.

Amazon Redshift Spectrum: Extend Amazon Redshift queries to analyse data stored in Amazon S3 data lakes without loading it into Redshift.

Monitoring and Optimization: Continuously monitor the performance of your data modeling infrastructure and optimize as needed. Utilize Amazon CloudWatch for monitoring and AWS Trusted Advisor for recommendations on cost optimization, performance, and security best practices.

Scalability and Flexibility: Design your data modeling architecture to be scalable and flexible to accommodate future growth and changing requirements. Utilize AWS services like Auto Scaling to automatically adjust resources based on demand.

Compliance and Governance: Ensure compliance with regulatory requirements and industry standards by implementing appropriate governance policies and using AWS services like AWS Config and AWS Organizations for policy enforcement and auditing.

By following these principles and leveraging AWS services effectively, you can create robust data models that enable efficient storage, processing, and analysis of your data in the cloud.

Visualpath is the Leading and Best Institute for AWS Data Engineering Online Training in Hyderabad. We at Visualpath provide you with the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit: https://www.visualpath.in/aws-data-engineering-with-data-analytics-training.html


Database Solutions- SalesDemand

Database solutions in business-to-business (B2B) contexts are essential tools for managing and leveraging data effectively within an organization. These solutions encompass a variety of technologies and approaches aimed at storing, organizing, accessing, and analyzing data relevant to B2B operations.

For More Information: https://sales-demand.com/database-solutions/

Here's a breakdown of key aspects and considerations:

Database Types:

Relational Databases: These are traditional databases structured around tables, with relationships defined between them. Examples include MySQL, PostgreSQL, Oracle, and Microsoft SQL Server.

NoSQL Databases: These databases are designed for unstructured or semi-structured data and offer more flexibility and scalability compared to relational databases. Examples include MongoDB, Cassandra, Couchbase, and Redis.

Graph Databases: Especially useful for B2B scenarios where relationships between entities are crucial, graph databases like Neo4j enable efficient querying of complex relationships.

In-Memory Databases: These databases primarily store data in memory for faster access, making them suitable for real-time analytics and processing. Examples include Redis and Memcached.

Visit Us: www.sales-demand.com

Data Warehousing:

B2B organizations often require data warehousing solutions to consolidate data from multiple sources for reporting, analysis, and decision-making.

Data warehousing platforms like Amazon Redshift, Google BigQuery, and Snowflake provide scalable solutions for storing and processing large volumes of data.

Data Integration:

B2B environments typically involve integrating data from various sources such as CRM systems, ERP systems, third-party vendors, and partner networks.

Integration platforms like Apache Kafka, Apache NiFi, and MuleSoft facilitate seamless data movement and synchronization across disparate systems.

Data Security and Compliance:

Given the sensitivity of B2B data, robust security measures are crucial to safeguard against breaches and ensure compliance with regulations like GDPR and CCPA.

Database solutions often include features such as encryption, access controls, auditing, and compliance frameworks to address security and regulatory requirements.

Our Services: https://sales-demand.com/lead-generation-solutions/

Scalability and Performance:

B2B database solutions must be able to handle growing volumes of data and accommodate increasing user loads without sacrificing performance.

Technologies like sharding, replication, and distributed databases help achieve scalability and high availability.
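Hash-based sharding, one of the techniques mentioned above, can be sketched as mapping each record key to a shard number. This example uses a SHA-256 digest because Python's built-in `hash()` is randomized per process for strings. The function name and key format are hypothetical.

```python
import hashlib


def shard_for(key, shard_count):
    """Map a record key (e.g. an account ID) to a shard number in [0, shard_count)."""
    # A stable digest gives the same shard for the same key on every machine and run.
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

Simple modulo placement remaps most keys when the shard count changes, which is why production systems often use consistent hashing or a lookup directory instead.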

Data Analytics and Business Intelligence:

B2B organizations leverage database solutions to derive insights from data through analytics and business intelligence (BI) tools.

Integration with BI platforms like Tableau, Power BI, and Looker enables visualization, reporting, and exploration of data to drive strategic decisions.

Cloud vs. On-Premises:

B2B companies often evaluate whether to deploy database solutions on-premises or in the cloud.

Cloud-based solutions offer advantages such as scalability, cost-effectiveness, and ease of management, while on-premises solutions provide greater control over data and infrastructure.


About Us:

DATA DRIVEN | CLIENT FOCUSED | PEOPLE POWERED

SalesDemand has a “data first” approach to solutions built for multi-channel B2B and technology marketers worldwide to enable growth. We are proud to be the trusted provider of campaign execution solutions, marketing services, business data, and database products to Media Agencies and Technology Brands – with a singular focus on B2B. We partner with Technology brands and Media Agencies in 85+ markets across North America, Europe, Asia, and the Middle East.

Our business is built on the firm foundation of robust data. It is our holistic approach to data, strategy, and insight, as well as our follow through with action-based, results-focused execution, that uniquely qualifies us to be your Tech Media Publisher – Lead Generation Partner. When it comes to business marketing solutions, we are dedicated to delivering the results that matter to you – Revenue and ROI.


FINANCIAL ANALYST manufacturing 3145149

Job ID: 3145149
Full-Time Onsite with Paid Relocation
Job Title: Financial Analyst - Manufacturing
Location: Davenport, Iowa
Industry: Manufacturing & Production
Job Category: Finance / Accounting - Analyst

Client Description: Our client, a distinguished organization, is a leading provider of aluminum sheet, plate, and extrusions, as well as pioneering architectural solutions, advancing the ground transportation, aerospace, industrial, packaging, and building and construction sectors. With numerous accolades, our client has a strong presence across various industries.

Job Description: Our client, headquartered in Pittsburgh, Pennsylvania, is currently seeking a Financial Analyst for immediate placement. The chosen candidate will be responsible for various financial analysis and reporting functions within the organization, contributing to the ongoing success of the company.

Key Responsibilities:
- Conduct financial analysis of administrative and manufacturing costs at our client's Davenport facility, presenting findings to diverse stakeholders, including plant management, marketing and sales departments, operating units, and support teams.
- Perform detailed financial assessments related to product costing and customer profitability, providing valuable insights to plant management and marketing and sales teams.
- Utilize analytical skills to interpret financial data and identify critical issues impacting our client's Davenport Works and the broader business unit.
- Collaborate with various manufacturing and support departments, offering financial support and insights to enhance operational efficiency.
- Actively participate in monthly closing activities, including account reconciliations, inventory accounting transactions, inter-company accounting, and fixed assets accounting.
- Support physical inventories, self-assessed audits, compliance testing, and annual audits, ensuring financial accuracy and regulatory compliance.
- Develop and analyze burden budgets and standard costs, optimizing financial planning and resource allocation.
- Generate monthly financial forecasts and actively contribute to annual planning processes, fostering proactive decision-making.

Qualifications:

Basic Qualifications:
- Bachelor's degree in accounting or finance from an accredited institution.
- Candidates must possess legal authorization to work in the United States. Visa sponsorship is not available for this position.
- This role is subject to the International Traffic in Arms Regulations (ITAR), requiring U.S. person status. ITAR defines a U.S. person as a U.S. Citizen, U.S. Permanent Resident (Green Card Holder), Political Asylee, or Refugee.

Preferred Qualifications:
- Master's degree (MBA), CPA, or CMA.
- 2 or more years of experience in a Finance or Accounting role, preferably within a manufacturing environment.
- Familiarity with standard costing systems and budgeting processes.
- Proficiency in advanced Microsoft Excel functions, including PivotTables, SUMIFS, and VLOOKUP.
- Experience working with Enterprise Business Systems, such as Oracle or SAP.
- Knowledge of database query tools, such as Microsoft Access, Oracle Discoverer, Hyperion, Essbase, Smart View, or related programs.

How to Apply: Please submit qualified candidates for consideration using the provided application link. Job ID: 3145149

1 note

·

View note

Text

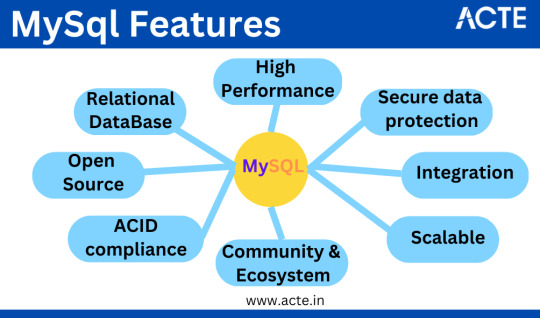

"Mastering MySQL: Comprehensive Database Management Course"

I'm thrilled to address your inquiries about MySQL. My perspective and expertise have undergone significant growth in this field. MySQL enjoys widespread recognition and finds extensive applications across diverse industries.

MySQL is an open-source relational database management system (RDBMS) renowned for its versatility and utility in handling structured data. Initially developed by the Swedish company MySQL AB, it is presently under the ownership of Oracle Corporation. MySQL's reputation rests on its speed, reliability, and user-friendliness, which have fueled its adoption among developers and organizations of all sizes.

Key Attributes of MySQL:

1. Relational Database: MySQL organizes data into tables with rows and columns, facilitating efficient data retrieval and manipulation.

2. Open Source: Available under an open-source license, MySQL is freely accessible and modifiable, with commercial versions and support options for those requiring additional features.

3. Multi-Platform: MySQL caters to various operating systems, including Windows, Linux, macOS, and more, ensuring adaptability across diverse environments.

4. Scalability: MySQL seamlessly scales from small-scale applications to high-traffic websites and data-intensive enterprise systems, offering support for replication and clustering.

5. Performance: Recognized for high-performance capabilities, MySQL ensures swift data retrieval and efficient query execution, offering multiple storage engines with varying feature trade-offs.

6. Security: MySQL provides robust security features, encompassing user authentication, access control, encryption, and auditing to safeguard data and ensure compliance with security standards.

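7. ACID Compliance: With its default InnoDB storage engine, MySQL adheres to ACID principles, ensuring data integrity and reliability, even in the event of system failures. Non-transactional engines such as MyISAM do not provide these guarantees.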

8. SQL Support: Employing Structured Query Language (SQL), MySQL defines, manipulates, and queries data, aligning with a standard set of SQL commands for compatibility with various applications and development tools.

9. Community and Ecosystem: MySQL boasts a thriving user community, contributing to its development and offering extensive resources, including documentation, forums, and third-party extensions.

10. Integration: MySQL seamlessly integrates with multiple programming languages and development frameworks, making it a favored choice for web and application development.
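To show what ACID atomicity means in practice, the sketch below moves funds between two rows inside one transaction: either both updates commit or neither does. It uses Python's built-in `sqlite3` module as a stand-in so it runs without a server. With MySQL, the same pattern applies through a DB-API driver's `commit()` and `rollback()`, provided the tables use a transactional engine such as InnoDB. The `accounts` table is hypothetical.

```python
import sqlite3


def transfer(conn, from_id, to_id, amount):
    """Move amount between two accounts atomically (all-or-nothing)."""
    # Used as a context manager, a sqlite3 connection commits if the block
    # succeeds and rolls back if an exception escapes it.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, from_id)
        )
        (balance,) = conn.execute(
            "SELECT balance FROM accounts WHERE id = ?", (from_id,)
        ).fetchone()
        if balance < 0:
            raise ValueError("insufficient funds")  # triggers rollback of the debit
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, to_id)
        )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 0)])
    conn.commit()
    transfer(conn, 1, 2, 30)
    print(conn.execute("SELECT id, balance FROM accounts").fetchall())
```

If the second transfer in a sequence fails the balance check, the first `UPDATE` of that call is undone, so no money disappears halfway through.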

MySQL finds widespread usage in web applications, content management systems, e-commerce platforms, and numerous other software projects where structured data storage and retrieval are imperative. It competes with other relational databases such as PostgreSQL, Oracle Database, and Microsoft SQL Server in the database management system arena.

If you want to delve deeper into MySQL, I strongly recommend reaching out to ACTE Technologies, a hub for certifications and job placement opportunities. Their experienced instructors can facilitate your learning journey, with both online and offline options available. Take a methodical approach, and consider enrolling in a course if your interest persists.

I trust that this response effectively addresses your inquiries. If not, please do not hesitate to voice your concerns in the comments section. I remain committed to continuous learning.

If you've found my insights valuable, consider following me on this platform and giving this content an upvote to encourage further MySQL-related content. Thank you for investing your time here, and I wish you a splendid day.


Question-61: How do you implement row-level auditing in Oracle?

Interview Questions on Oracle SQL & PL/SQL Development. For more questions like this, do follow the main blog.

Implementing row-level auditing in Oracle involves capturing and storing detailed information about changes made to individual rows in a database table. Row-level auditing is a mechanism that allows for the tracking and recording of changes made to specific rows within a table (Oracle's fine-grained auditing, or FGA, is a related but distinct feature that audits access based on policy conditions). It captures detailed information about each modification, including…
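One common way to implement row-level change auditing is a row-level trigger that copies old and new values into an audit table. The sketch below shows the pattern with Python's built-in `sqlite3` module so it runs anywhere. In Oracle, the equivalent would be a PL/SQL trigger declared `FOR EACH ROW` that reads `:OLD` and `:NEW`. The `employees` and `employees_audit` tables are hypothetical, and this is not necessarily the method the original post goes on to describe.

```python
import sqlite3

AUDIT_SCHEMA = """
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER);

-- One audit row per changed row: which row, what operation, old/new values, when.
CREATE TABLE employees_audit (
    audit_id   INTEGER PRIMARY KEY AUTOINCREMENT,
    emp_id     INTEGER,
    operation  TEXT,
    old_salary INTEGER,
    new_salary INTEGER,
    changed_at TEXT DEFAULT CURRENT_TIMESTAMP
);

CREATE TRIGGER employees_audit_upd AFTER UPDATE ON employees
FOR EACH ROW BEGIN
    INSERT INTO employees_audit (emp_id, operation, old_salary, new_salary)
    VALUES (OLD.id, 'UPDATE', OLD.salary, NEW.salary);
END;

CREATE TRIGGER employees_audit_del AFTER DELETE ON employees
FOR EACH ROW BEGIN
    INSERT INTO employees_audit (emp_id, operation, old_salary, new_salary)
    VALUES (OLD.id, 'DELETE', OLD.salary, NULL);
END;
"""


def create_audited_db():
    """Create an in-memory database whose employees table is audited by triggers."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(AUDIT_SCHEMA)
    return conn


if __name__ == "__main__":
    conn = create_audited_db()
    conn.execute("INSERT INTO employees VALUES (1, 'Ada', 5000)")
    conn.execute("UPDATE employees SET salary = 5500 WHERE id = 1")
    print(conn.execute("SELECT * FROM employees_audit").fetchall())
```

An insert trigger can be added the same way. In practice the audit table usually also records who made the change (in Oracle, for example, via `USER` or `SYS_CONTEXT`).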


How to Check Last Password Change History?


In this article, we are going to learn how to check the password change history in the Oracle database. Using the commands below, you can check the password change history step by step. When was my Oracle password last changed? The query below will show you the timestamp of the last password change. Here I’m checking the SCOTT user's password change…
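Here is a minimal sketch of such a lookup from Python. It assumes a DB-API cursor (for example from python-oracledb) connected as a user allowed to read `SYS.USER$`, whose `PTIME` column records when the password was last changed. This is one common approach, not necessarily the query the original article uses. The function name is hypothetical, and upper-casing the name assumes the user was created without a quoted, case-sensitive identifier.

```python
from datetime import datetime
from typing import Optional

# SYS.USER$.PTIME holds the time of the last password change; reading it
# normally requires DBA-level privileges.
LAST_PASSWORD_CHANGE_SQL = """
    SELECT name, ptime
      FROM sys.user$
     WHERE name = :username
"""


def last_password_change(cursor, username: str) -> Optional[datetime]:
    """Return when username's password was last changed, or None if no such user."""
    # Unquoted Oracle user names are stored in upper case (assumption: no quoted names).
    cursor.execute(LAST_PASSWORD_CHANGE_SQL, {"username": username.upper()})
    row = cursor.fetchone()
    return row[1] if row else None
```

With python-oracledb, the cursor would come from something like `oracledb.connect(user=..., password=..., dsn=...).cursor()`, and the driver returns Oracle `DATE` values as Python `datetime` objects.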

View On WordPress

#ctime oracle#get ddl of all users in oracle#how to change expiry date of user in oracle 12c#how to change password in database#how to find who changed oracle user password#how to get user creation script in oracle#how to retrieve user password in oracle 11g#how to retrieve user password in oracle 12c#last password change date in oracle#oracle audit password change#oracle copy users from one database to another#oracle db password change date#oracle export users and passwords#oracle user password table#query to get password of user in oracle#sql query to check password expiry date in oracle


A DATA INTEGRATION APPROACH TO MAXIMIZE YOUR ROI

Many data integration projects rely on a set of premium tools, which burns through budget and yields an RoI below the standard.

To overcome this and maximize the RoI, we lay out a data integration approach that uses open-source tools instead of premium ones to deliver better results and a more confident return on investment.

Adopt a two-stage data integration approach:

Part 1 explains the technical setup, and Part 2 covers the execution approach, including the challenges faced and their solutions.

Part 1: Setting Up

The data sources relied on were the following:

REST API Source with standard NoSQL JSON (with nested datasets)

REST API Source with full data schema in JSON

CSV Files in AWS S3

Relational Tables from Postgres DB

There are two different JSON types above: the former is conventional, and the latter is here.

Along with moving the data, the solution had to provide a Plug-n-Play architecture, notifications, audit data for reporting, unburdened intelligent scheduling, and all the necessary instances.

The landing data warehouse was AWS Redshift, a one-stop store for the operational data stores (ODS) as well as facts and dimensions. As mentioned, we relied entirely on open-source tools rather than tools from vendors such as Oracle, Microsoft, Informatica, and Talend.

Python, SQL, and Apache Airflow did all the work: Python for extraction, SQL to load and transform the data, and Airflow to orchestrate the loads via Python-based scheduler code. Below is the data flow architecture.

Data Flow Architecture

Part 2: Execution

The above data flow architecture gives a fair idea of how the data was integrated. The execution is explained in parallel with the challenges faced and how they were solved.

Challenges:

Plug-n-Play Model.

Dealing with the nested data in JSON.

Intelligent Scheduling.

Code Maintainability for future enhancements.

1. Plug-n-Play Model

As business needs change, adding columns or a data store is inevitable, and if the business is doing well, expansion to different regions follows. The following aspects were ensured to keep the integration process continuous:

A new column will not break the process.

A new data store can be added with minimal work by a non-technical user.

The time needed to integrate a new store (expansion) comes down from days to hours.

This is achieved by using:

A config table, the heart of the process, which holds all the jobs to be executed, their last extract dates, and the parameters for making the REST API call or extracting data from the RDBMS.

Reusable Python templates that are read, modified, and executed based on the parameters in the config table.

An audit table for logging all the crucial events that happen during integration.

A control table for mailing and Tableau report refreshes after the ELT process.

State-of-the-art DAGs that generate DAGs (jobs) with the configuration defined in the config table for each particular job.

Any new table planned for extraction, or any new store added as part of business expansion, only needs its entries in the config table.

A run of the DAG Generator DAG builds the jobs in a snap; they become available in the Airflow UI on the next refresh within seconds, and the new jobs are executed on the next schedule along with the existing jobs.
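A minimal sketch of the config-table idea, assuming the config rows have already been read into Python dictionaries: each active row is rendered through one reusable template into a job spec, so adding a store means adding a row, not writing code. The row fields, the `JobSpec` class, and the `updated_at` column are hypothetical, not the project's actual schema, and a real generator would turn each spec into an Airflow DAG rather than a plain object.

```python
from dataclasses import dataclass, field

# Hypothetical config-table rows; column names are illustrative only.
CONFIG_ROWS = [
    {"store": "us", "job_name": "orders", "source_type": "rest_api",
     "source": "/v1/orders", "last_extract_date": "2023-01-01T00:00:00",
     "schedule": "@hourly", "active": True},
    {"store": "us", "job_name": "customers", "source_type": "postgres",
     "source": "public.customers", "last_extract_date": "2023-01-01T00:00:00",
     "schedule": "@daily", "active": True},
    {"store": "eu", "job_name": "orders", "source_type": "rest_api",
     "source": "/v1/orders", "last_extract_date": None,
     "schedule": "@hourly", "active": False},
]


@dataclass
class JobSpec:
    dag_id: str
    schedule: str
    extract_params: dict = field(default_factory=dict)


def render_extract_params(row):
    """Fill the reusable extraction template for one config row."""
    if row["source_type"] == "rest_api":
        return {"endpoint": row["source"], "since": row["last_extract_date"]}
    if row["source_type"] == "postgres":
        # Hypothetical incremental query; `updated_at` is an assumed column.
        return {
            "query": f"SELECT * FROM {row['source']} WHERE updated_at > %(since)s",
            "since": row["last_extract_date"],
        }
    raise ValueError(f"unsupported source_type: {row['source_type']}")


def build_jobs(config_rows):
    """Generate one job spec per active config row, keyed by a unique DAG id."""
    jobs = {}
    for row in config_rows:
        if not row["active"]:
            continue  # inactive rows produce no job
        dag_id = f"{row['store']}_{row['job_name']}"
        jobs[dag_id] = JobSpec(dag_id, row["schedule"], render_extract_params(row))
    return jobs
```

Appending a row for a new store makes `build_jobs` return an extra job on its next run. In an Airflow generator file, each spec would typically be turned into a DAG object and registered in the module's global namespace so the scheduler discovers it.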

2. Dealing with the nested data in JSON.

NoSQL JSON documents hold a great advantage from a storage and data-redundancy perspective, but reading nested data out of their inner arrays adds a lot of pain.

The following approach was adopted to solve this problem:

Configured AWS Redshift Spectrum with the IAM role and IAM policy needed to access the AWS Glue Catalog, and associated the role with the AWS Redshift database cluster

Created external database, external schema & external tables in AWS Redshift database

Created AWS Redshift procedures with specific syntax to read data in the inner array

Redshift parsed the JSON residing in AWS S3 directly through an external table (no loading involved), and the data was then transformed into the rows and columns needed by the relational tables.
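The post does not show the Redshift procedures, so the following is only a Python illustration of the same unnesting step, assuming a hypothetical document with an inner `items` array: every element of the inner array becomes its own row, repeating the parent order's columns. In Redshift Spectrum itself this is written in SQL by listing the array column in the FROM clause (for example `FROM spectrum_schema.orders o, o.items i`); the schema and field names here are assumptions.

```python
import json


def flatten_orders(document):
    """Explode each order's inner `items` array into one flat row per item."""
    rows = []
    for order in document.get("orders", []):
        for item in order.get("items", []):
            rows.append({
                "order_id": order["order_id"],
                "customer_id": order["customer"]["id"],  # nested object, not an array
                "sku": item["sku"],
                "qty": item["qty"],
            })
    return rows


# Hypothetical sample document with a nested object and an inner array.
SAMPLE = json.loads("""
{"orders": [
  {"order_id": 1, "customer": {"id": "C7"},
   "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]},
  {"order_id": 2, "customer": {"id": "C9"}, "items": []}
]}
""")
```

Orders with an empty `items` array produce no rows here, which matches an inner-join style unnest.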

3. Intelligent Scheduling

The orchestration needs covered multiple scenarios:

Time-based – batch scheduling, plus micro-ELTs that ran again and again at short intervals within a day.

Event-based – a file drop in S3.

For batch scheduling, the jobs were run neither all in series (which would underutilize resources and take too long) nor all in parallel (which would overwhelm the Airflow workers).

Instead, a Python routine does intelligent scheduling, keeping a fixed number of jobs running asynchronously until all processes are complete. The code reads the set of jobs in the current batch into a job execution/job config table and keeps four jobs running at a time until every job is in a completed or failed state, as per the logical flow diagram below.

Logical Flow Diagram

For event-based triggering, an application drops a file in S3; this event triggers the integration process, which starts loading the data into the data warehouse.

The configuration is as follows:

A CloudWatch event triggers a Lambda function, which in turn makes an API call to trigger the Airflow DAG.
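A sketch of the Lambda side, assuming the event arrives in the EventBridge/CloudWatch S3 "Object Created" shape (`detail.bucket.name`, `detail.object.key`) and that Airflow 2's stable REST API is reachable. The Airflow URL and DAG id are hypothetical, and authentication is omitted. The request POSTs to `/api/v1/dags/{dag_id}/dagRuns` and passes the file location in `conf`, where the triggered DAG can read it.

```python
import json
import urllib.request

# Hypothetical deployment values; replace with your own.
AIRFLOW_URL = "https://airflow.example.com"
DAG_ID = "s3_file_load"


def build_trigger_request(event):
    """Turn an S3 'Object Created' event into an Airflow 'trigger DAG run' request."""
    detail = event["detail"]  # assumed EventBridge/CloudWatch event shape
    conf = {"bucket": detail["bucket"]["name"], "key": detail["object"]["key"]}
    return urllib.request.Request(
        f"{AIRFLOW_URL}/api/v1/dags/{DAG_ID}/dagRuns",
        data=json.dumps({"conf": conf}).encode("utf-8"),
        headers={"Content-Type": "application/json"},  # auth header omitted
        method="POST",
    )


def lambda_handler(event, context):
    """Lambda entry point: send the request and report Airflow's HTTP status."""
    with urllib.request.urlopen(build_trigger_request(event), timeout=10) as resp:
        return {"statusCode": resp.status}
```

Keeping the request construction separate from the network call makes it easy to test the event parsing without an Airflow server.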

4. Code Maintainability for future enhancements

A data integration project is always collaborative work, so maintaining the correct source code is critical. If a deployment goes wrong, it must also be possible to roll back to the original version.

Projects that involve programming need a version control mechanism. For this, a Git repository was configured to hold the DAG files for Airflow running with the Kubernetes executor.

Takeaway:

This data integration approach completely removed the premium licensing costs while shortening the project, thanks to relying on open-source technology and using it to the fullest.

With any ETL tool on the market, the project would have taken more than 6 months, because it requires building a job for each operational data store. If an ETL tool is used, the best option is to combine it with scripting that builds the jobs repetitively, which would more or less overlap with the way the project is executed now.

Talk to our Data Integration experts:

Looking for a one-stop location for all your integration needs? Our data integration experts can help you align your strategy or offer you a consultation to draw a roadmap that quickly turns your business data into actionable insights with a robust Data Integration approach and a framework tailored for your specs.


Top 5 Abilities Employers Search For

What Guards Can And Cannot Do

Contents

Professional Driving Capacity

Whizrt: Simulated Intelligent Cybersecurity Red Team

Include Your Contact Information Properly

ObjectSecurity. The Security Policy Automation Company.

The Types Of Security Guards

All of these classes provide a declarative approach to evaluating ACL information at runtime, freeing you from needing to write any code. Please refer to the sample applications to learn how to use these classes. Spring Security does not provide any special integration to automatically create, update or delete ACLs as part of your DAO or repository operations. Instead, you will need to write code like that shown above for your individual domain objects. It is worth considering using AOP on your services layer to automatically integrate the ACL information with your services layer operations.


cmdlet that can be used to list the methods and properties of an object quickly. Figure 3 shows a PowerShell script to enumerate this information. Where possible in this research, standard user privileges were used to provide insight into available COM objects under the worst-case scenario of having no administrative privileges.

Whizrt: Simulated Intelligent Cybersecurity Red Team

Users who are members of multiple groups within a role map will always be granted their highest permission. For example, if John Smith is a member of both Group A and Group B, and Group A has Administrator privileges on an object while Group B only has Viewer rights, Appian will treat John Smith as an Administrator. OpenPMF's support for advanced access control models, including proximity-based access control (PBAC), was also further extended. To solve various challenges around implementing secure distributed systems, ObjectSecurity released OpenPMF version 1, at that time one of the first Attribute-Based Access Control (ABAC) products on the market.
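The "highest permission wins" rule can be illustrated with a short sketch. The permission levels and group names below are hypothetical stand-ins, not Appian's API, and the sketch ignores any special handling a real product may apply (for example to explicit deny settings).

```python
from enum import IntEnum


class Permission(IntEnum):
    """Illustrative permission levels, ordered from weakest to strongest."""
    NONE = 0
    VIEWER = 1
    EDITOR = 2
    ADMINISTRATOR = 3


def effective_permission(user_groups, role_map):
    """Return the highest permission held on the object by any of the user's groups."""
    return max((role_map.get(g, Permission.NONE) for g in user_groups),
               default=Permission.NONE)


# Hypothetical role map for one object, mirroring the John Smith example.
role_map = {"Group A": Permission.ADMINISTRATOR, "Group B": Permission.VIEWER}
```

With `user_groups = {"Group A", "Group B"}`, the result is `Permission.ADMINISTRATOR`, as in the example above.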

The selected users and roles are now listed in the table on the General tab. Privileges on cubes enable users to access business measures and perform analysis.

Object-Oriented Security is the practice of using common object-oriented design patterns as a mechanism for access control. Such mechanisms are often both easier to use and more effective than traditional security models based on globally accessible resources protected by access control lists. Object-oriented security is closely related to object-oriented testability and other advantages of object-oriented design. When a state-based Access Control List (ACL) is present and combined with object-based security, state-based security is available. You do not have permission to view this object's security properties, even as an administrative user.

You could write your own AccessDecisionVoter or AfterInvocationProvider that fires before or after a method invocation, respectively. Such classes would use AclService to retrieve the relevant ACL and then call Acl.isGranted(Permission[] permission, Sid[] sids, boolean administrativeMode) to decide whether permission is granted or denied. Alternately, you could use our AclEntryVoter, AclEntryAfterInvocationProvider or AclEntryAfterInvocationCollectionFilteringProvider classes.

What are the key skills of a safety officer?

Whether you are a young single woman or nurturing a family, Lady Guard is designed specifically for women to cover against female-related illnesses. Lady Guard gives you the option to continue taking care of your family living even when you are ill.

Include Your Contact Information Properly

It allowed the central authoring of access policies and their automated enforcement across all middleware nodes using local decision/enforcement points. Thanks to the support of several EU-funded research projects, ObjectSecurity found that a central ABAC approach alone was not a manageable way to implement security policies. Readers will get a comprehensive look at each aspect of computer security and how the CORBAsecurity specification meets each of these security needs.

Knowledge centers: It is a best practice to give specific groups Viewer rights to knowledge centers rather than setting 'Default (All Other Users)' to viewers.

This means that no basic user will be able to start this process model.

Appian recommends giving user access to specific groups instead.

Appian has detected that this process model may be used as an action or related action.

Doing so ensures that document folders and documents nested within knowledge centers have explicit viewers set.

You must also grant privileges on each of the dimensions of the cube. However, you can set fine-grained access on a dimension to restrict the privileges, as described in "Creating Data Security Policies on Cubes and dimensions". You can grant and revoke object privileges on dimensional objects using the SQL GRANT and REVOKE commands. You administer security on views and materialized views for dimensional objects the same way as for any other views and materialized views in the database. You can administer both data security and object security in Analytic Workspace Manager.

What is a security objective?

General career objective examples Secure a responsible career opportunity to fully utilize my training and skills, while making a significant contribution to the success of the company. Seeking an entry-level position to begin my career in a high-level professional environment.

Because their security is inherited by all objects nested within them by default, knowledge centers and rule folders are considered top-level objects. For example, security set on knowledge centers is inherited by all nested document folders and documents by default. Likewise, security set on rule folders is inherited by all nested rule folders and rule objects, including interfaces, constants, expression rules, decisions, and integrations, by default.

ObjectSecurity. The Security Policy Automation Company.

In the example above, we're retrieving the ACL associated with the "Foo" domain object with identifier number 44. We're then adding an ACE so that a principal named "Samantha" can "administer" the object.


The Types Of Security Guards

Topics covered include authentication, identification, and privilege; access control; message protection; delegation and proxy issues; auditing; and non-repudiation. The author also provides many real-world examples of how secure object systems can be used to enforce useful security policies. Then pick both of the values from the drop-down (here, the two values are the one you assigned to app1 and the one you assigned to app2), and keep following steps 1 to 9 carefully. Here, you are defining which user will see which app, and by following this rule, you have specified that the user in question will see both applications.

What is a good objective for a security resume?

Career Objective: Seeking the position of 'Safety Officer' in your organization, where I can deliver my attentive skills to ensure the safety and security of the organization and its workers.

Security Vs. Presence

For object security, you also have the option of using SQL GRANT and REVOKE. Data security provides fine-grained control of the data at the cell level. When you want to restrict access to particular areas of a cube, you only need to define data security policies. Data security is implemented using the XML DB security of Oracle Database. Once you have used the above techniques to store some ACL information in the database, the next step is to actually use that ACL information as part of the authorization decision logic.

#objectbeveiliging#wat is objectbeveiliging#object beveiliging#object beveiliger#werkzaamheden beveiliger


Table Auditing Using DML Triggers In Oracle PL/SQL

Step-1: Connect as SYSTEM and grant these privileges to the user (HR):

grant dba,select any dictionary,select_catalog_role to hr;

Step-2: Connect as the user (HR).

Step-3: Create two tables in this schema, as follows:

CREATE TABLE LOGGING_DATA_HDR (
    LOG_ID          NUMBER,
    TABLE_NAME      VARCHAR2 (30 CHAR) NOT NULL,
    PK_DATA         VARCHAR2 (500 BYTE),
    ROW_ID          ROWID NOT NULL,
    LOG_DATE        DATE NOT NULL,
    OPERATION_TYPE  VARCHAR2 (1 BYTE) NOT NULL,
    DB_USER         VARCHAR2 (100 BYTE),
    CLIENT_IP       VARCHAR2 (40 BYTE),
    CLIENT_HOST     VARCHAR2 (100 BYTE),
    CLIENT_OS_USER  VARCHAR2 (100 BYTE),
    APP_USER        VARCHAR2 (50 BYTE)
);
ALTER TABLE LOGGING_DATA_HDR ADD (
    CONSTRAINT LOGGING_DATA_HDR_PK PRIMARY KEY (LOG_ID)
);

and

CREATE TABLE LOGGING_DATA_DTL (
    LOG_ID       NUMBER,
    COLUMN_NAME  VARCHAR2 (30 CHAR),
    OLD_VALUE    VARCHAR2 (4000 BYTE),
    NEW_VALUE    VARCHAR2 (4000 BYTE)
);

Step-4: Alter the LOGGING_DATA_DTL table to add its primary key:

ALTER TABLE LOGGING_DATA_DTL ADD ( CONSTRAINT LOGGING_DATA_DTL_PK PRIMARY KEY (LOG_ID, COLUMN_NAME));

Step-5: Create this sequence

CREATE SEQUENCE LOG_ID_SEQ START WITH 1 MAXVALUE 99999999999 MINVALUE 1 NOCYCLE NOCACHE NOORDER;

Step-6: Create this package

CREATE OR REPLACE PACKAGE DML_LOG
AS
    TYPE GT$LOGGING_DATA_DTL IS TABLE OF LOGGING_DATA_DTL%ROWTYPE;
    GC$APP_USER   LOGGING_DATA_HDR.APP_USER%TYPE;
    PROCEDURE ADD_LOG (IN_ARRAY           IN GT$LOGGING_DATA_DTL,
                       IN_TABLE_NAME      VARCHAR2,
                       IN_ROWID           ROWID,
                       IN_OPERATION_TYPE  VARCHAR2);
    FUNCTION GENERATE_TRIGGER (IN_TABLE_NAME VARCHAR2)
        RETURN VARCHAR2;
    FUNCTION GET_COMPOSITE_KEY (IN_TABLE      VARCHAR2,
                                IN_ROWID      ROWID,
                                IN_DELIMETER  VARCHAR2 DEFAULT '-')
        RETURN VARCHAR2;
    PROCEDURE SET_APP_USER (IN_APP_USER LOGGING_DATA_HDR.APP_USER%TYPE);
    FUNCTION GET_APP_USER
        RETURN LOGGING_DATA_HDR.APP_USER%TYPE;
END DML_LOG;
/

Step-7: Create this package body

CREATE OR REPLACE PACKAGE BODY DML_LOG
AS
    PROCEDURE ADD_LOG (IN_ARRAY           IN GT$LOGGING_DATA_DTL,
                       IN_TABLE_NAME      VARCHAR2,
                       IN_ROWID           ROWID,
                       IN_OPERATION_TYPE  VARCHAR2)
    IS
        LN$LOG_ID   LOGGING_DATA_HDR.LOG_ID%TYPE;
    BEGIN
        SELECT LOG_ID_SEQ.NEXTVAL INTO LN$LOG_ID FROM DUAL;
        INSERT INTO LOGGING_DATA_HDR (LOG_ID, TABLE_NAME, PK_DATA, ROW_ID, LOG_DATE,
                                      OPERATION_TYPE, DB_USER, CLIENT_IP, CLIENT_HOST,
                                      CLIENT_OS_USER, APP_USER)
             VALUES (LN$LOG_ID,
                     IN_TABLE_NAME,
                     GET_COMPOSITE_KEY (IN_TABLE_NAME, IN_ROWID),
                     IN_ROWID,
                     SYSDATE,
                     IN_OPERATION_TYPE,
                     -- SESSION_USER is the user who logged on; CURRENT_USER would return
                     -- the package owner here, because the package runs with definer's rights
                     SYS_CONTEXT ('USERENV', 'SESSION_USER'),
                     SYS_CONTEXT ('USERENV', 'ip_address'),
                     SYS_CONTEXT ('USERENV', 'host'),
                     SYS_CONTEXT ('USERENV', 'os_user'),
                     GET_APP_USER);
        IF IN_ARRAY IS NOT NULL AND IN_ARRAY.COUNT > 0
        THEN
            FOR INDX IN IN_ARRAY.FIRST .. IN_ARRAY.LAST
            LOOP
                IF     IN_ARRAY (INDX).COLUMN_NAME IS NOT NULL
                   AND (   IN_ARRAY (INDX).OLD_VALUE IS NOT NULL
                        OR IN_ARRAY (INDX).NEW_VALUE IS NOT NULL)
                THEN
                    INSERT INTO LOGGING_DATA_DTL (LOG_ID, COLUMN_NAME, OLD_VALUE, NEW_VALUE)
                         VALUES (LN$LOG_ID,
                                 IN_ARRAY (INDX).COLUMN_NAME,
                                 IN_ARRAY (INDX).OLD_VALUE,
                                 IN_ARRAY (INDX).NEW_VALUE);
                END IF;
            END LOOP;
        END IF;
    END ADD_LOG;
    FUNCTION GENERATE_TRIGGER (IN_TABLE_NAME VARCHAR2)
        RETURN VARCHAR2
    IS
        -- 32767 instead of 4000 so that the DDL for wide tables does not overflow
        LC$TRIGGER_STMT   VARCHAR2 (32767);
        -- USER_TAB_COLUMNS skips the hidden/system-generated columns listed in USER_TAB_COLS
        CURSOR LCUR$COLUMNS
        IS
              SELECT COLUMN_NAME
                FROM USER_TAB_COLUMNS
               WHERE TABLE_NAME = IN_TABLE_NAME
            ORDER BY COLUMN_ID;
    BEGIN
        LC$TRIGGER_STMT :=
               'CREATE OR REPLACE TRIGGER ' || SUBSTR (IN_TABLE_NAME, 1, 23) || '_LOGTRG ' || CHR (10)
            || 'AFTER INSERT OR UPDATE OR DELETE' || CHR (10)
            || 'ON ' || IN_TABLE_NAME || ' FOR EACH ROW ' || CHR (10)
            || 'DECLARE ' || CHR (10)
            || 'LT$LOGGING_DATA_DTL DML_LOG.GT$LOGGING_DATA_DTL;' || CHR (10)
            || 'LC$OPERATION VARCHAR2 (1);' || CHR (10)
            || 'PROCEDURE ADD_ELEMENT (' || CHR (10)
            || 'IN_OPERATION VARCHAR2,' || CHR (10)
            || 'IN_COLUMN_NAME LOGGING_DATA_DTL.COLUMN_NAME%TYPE,' || CHR (10)
            || 'IN_OLD_VALUE LOGGING_DATA_DTL.OLD_VALUE%TYPE,' || CHR (10)
            || 'IN_NEW_VALUE LOGGING_DATA_DTL.NEW_VALUE%TYPE)' || CHR (10)
            || 'IS' || CHR (10)
            || 'LR$LOGGING_DATA_DTL LOGGING_DATA_DTL%ROWTYPE;' || CHR (10)
            || 'BEGIN' || CHR (10)
            -- Log every INSERT/DELETE column; for UPDATE, log only real changes,
            -- including changes to or from NULL (a plain "=" comparison misses those)
            || 'IF IN_OPERATION <> ''U''' || CHR (10)
            || '   OR IN_NEW_VALUE <> IN_OLD_VALUE' || CHR (10)
            || '   OR (IN_NEW_VALUE IS NULL AND IN_OLD_VALUE IS NOT NULL)' || CHR (10)
            || '   OR (IN_NEW_VALUE IS NOT NULL AND IN_OLD_VALUE IS NULL)' || CHR (10)
            || 'THEN' || CHR (10)
            || 'LR$LOGGING_DATA_DTL.COLUMN_NAME := IN_COLUMN_NAME;' || CHR (10)
            || 'LR$LOGGING_DATA_DTL.OLD_VALUE := IN_OLD_VALUE;' || CHR (10)
            || 'LR$LOGGING_DATA_DTL.NEW_VALUE := IN_NEW_VALUE;' || CHR (10)
            || 'LT$LOGGING_DATA_DTL.EXTEND;' || CHR (10)
            || 'LT$LOGGING_DATA_DTL (LT$LOGGING_DATA_DTL.LAST) := LR$LOGGING_DATA_DTL;' || CHR (10)
            || 'END IF;' || CHR (10)
            || 'END ADD_ELEMENT;' || CHR (10)
            || 'BEGIN' || CHR (10)
            || 'LT$LOGGING_DATA_DTL := DML_LOG.GT$LOGGING_DATA_DTL ();' || CHR (10)
            || 'LC$OPERATION :=' || CHR (10)
            || 'CASE WHEN INSERTING THEN ''I'' WHEN UPDATING THEN ''U'' ELSE ''D'' END;' || CHR (10);
        FOR LREC$COLUMNS IN LCUR$COLUMNS
        LOOP
            LC$TRIGGER_STMT :=
                   LC$TRIGGER_STMT
                || ' ADD_ELEMENT (LC$OPERATION,'''
                || LREC$COLUMNS.COLUMN_NAME
                || ''',:OLD.'
                || LREC$COLUMNS.COLUMN_NAME
                || ',:NEW.'
                || LREC$COLUMNS.COLUMN_NAME
                || ');'
                || CHR (10);
        END LOOP;
        -- Fall back to :OLD.ROWID in case :NEW.ROWID is NULL (e.g. for DELETE)
        LC$TRIGGER_STMT :=
               LC$TRIGGER_STMT
            || ' DML_LOG.ADD_LOG (LT$LOGGING_DATA_DTL,'''
            || IN_TABLE_NAME
            || ''',NVL (:NEW.ROWID, :OLD.ROWID),LC$OPERATION);'
            || CHR (10)
            || 'END '
            || SUBSTR (IN_TABLE_NAME, 1, 23)
            || '_LOGTRG ;';
        RETURN LC$TRIGGER_STMT;
    END GENERATE_TRIGGER;
    FUNCTION GET_COMPOSITE_KEY (IN_TABLE      VARCHAR2,
                                IN_ROWID      ROWID,
                                IN_DELIMETER  VARCHAR2 DEFAULT '-')
        RETURN VARCHAR2
    IS
        -- Autonomous so the audited table can be queried from its own row trigger
        -- without raising ORA-04091 (mutating table)
        PRAGMA AUTONOMOUS_TRANSACTION;
        LC$COLUMNS   VARCHAR2 (512) := '';
        LC$KEY       VARCHAR2 (512);
        CURSOR LCUR$COLUMNS (IN_TABLE_NAME VARCHAR2)
        IS
              SELECT CON_C.COLUMN_NAME
                FROM USER_CONS_COLUMNS CON_C, USER_CONSTRAINTS CON
               WHERE     CON.CONSTRAINT_NAME = CON_C.CONSTRAINT_NAME
                     AND CON.CONSTRAINT_TYPE = 'P'
                     AND CON.TABLE_NAME = IN_TABLE_NAME
            ORDER BY POSITION;
    BEGIN
        FOR LREC$COLUMNS IN LCUR$COLUMNS (IN_TABLE)
        LOOP
            LC$COLUMNS := LC$COLUMNS || LREC$COLUMNS.COLUMN_NAME || '||''' || IN_DELIMETER || '''||';
        END LOOP;
        LC$COLUMNS := RTRIM (LC$COLUMNS, '||''' || IN_DELIMETER || '''||');
        -- A table without a primary key has no composite key to report
        IF LC$COLUMNS IS NULL
        THEN
            RETURN NULL;
        END IF;
        EXECUTE IMMEDIATE 'SELECT ' || LC$COLUMNS || ' FROM ' || IN_TABLE || ' WHERE ROWID = :1'
            INTO LC$KEY
            USING IN_ROWID;
        RETURN LC$KEY;
    EXCEPTION
        -- The autonomous transaction cannot see the triggering transaction's
        -- uncommitted rows (e.g. a freshly inserted row), so the lookup can fail
        WHEN NO_DATA_FOUND
        THEN
            RETURN NULL;
    END GET_COMPOSITE_KEY;
    PROCEDURE SET_APP_USER (IN_APP_USER LOGGING_DATA_HDR.APP_USER%TYPE)
    IS
    BEGIN
        GC$APP_USER := IN_APP_USER;
    END SET_APP_USER;
    FUNCTION GET_APP_USER
        RETURN LOGGING_DATA_HDR.APP_USER%TYPE
    IS
    BEGIN
        RETURN GC$APP_USER;
    END GET_APP_USER;
END DML_LOG;
/

Step-8: Call GENERATE_TRIGGER to print the DDL of the audit (log register) trigger for the table you want to track, DEPARTMENTS in this example:

SET SERVEROUTPUT ON
BEGIN
    DBMS_OUTPUT.PUT_LINE (DML_LOG.GENERATE_TRIGGER ('DEPARTMENTS'));
END;
/

Step-9: Copy the generated trigger text and run it in the SQL command line (in SQL*Plus, end it with a / on its own line) to create the trigger.

Step-10: Run a DML statement (INSERT, UPDATE or DELETE) against the table.

Step-11: Commit the DML.

Step-12: You can now track which users connected to the schema and what changes they made by querying LOGGING_DATA_HDR and LOGGING_DATA_DTL.
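The same header/detail design can be tried without an Oracle instance. The sketch below uses Python's built-in sqlite3 module as a stand-in: like GENERATE_TRIGGER, it builds the trigger DDL from the table's column metadata (here `PRAGMA table_info`). SQLite has no combined INSERT OR UPDATE OR DELETE trigger, so one trigger per event is generated; it has no session context, so the user/IP/host columns are omitted; and it reads the key from NEW/OLD instead of re-querying the table. Table and column names mirror the tutorial, but this is an illustration, not a port of the package.

```python
import sqlite3

AUDIT_SCHEMA = """
CREATE TABLE logging_data_hdr (
    log_id INTEGER PRIMARY KEY, table_name TEXT NOT NULL, pk_data TEXT,
    row_id INTEGER NOT NULL, log_date TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,
    operation_type TEXT NOT NULL);
CREATE TABLE logging_data_dtl (
    log_id INTEGER, column_name TEXT, old_value TEXT, new_value TEXT,
    PRIMARY KEY (log_id, column_name));
"""


def generate_audit_triggers(conn, table):
    """Return CREATE TRIGGER statements (one per DML event) built from column metadata."""
    # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk); trusted names only
    info = conn.execute(f"PRAGMA table_info({table})").fetchall()
    columns = [row[1] for row in info]
    pk_cols = [row[1] for row in sorted(info, key=lambda r: r[5]) if row[5] > 0]
    statements = []
    # (operation code, event, row reference, old value, new value, "should log" test)
    for op, event, ref, old, new, changed in (
        ("I", "INSERT", "NEW", "NULL", "NEW.{c}", "NEW.{c} IS NOT NULL"),
        ("U", "UPDATE", "NEW", "OLD.{c}", "NEW.{c}", "OLD.{c} IS NOT NEW.{c}"),  # NULL-safe
        ("D", "DELETE", "OLD", "OLD.{c}", "NULL", "OLD.{c} IS NOT NULL"),
    ):
        pk_expr = " || '-' || ".join(f"{ref}.{c}" for c in pk_cols) or "NULL"
        body = [
            "INSERT INTO logging_data_hdr (table_name, pk_data, row_id, operation_type) "
            f"VALUES ('{table}', {pk_expr}, {ref}.rowid, '{op}');"
        ]
        for c in columns:
            # Detail rows point at the header row just inserted by this trigger
            body.append(
                "INSERT INTO logging_data_dtl (log_id, column_name, old_value, new_value) "
                f"SELECT (SELECT MAX(log_id) FROM logging_data_hdr), '{c}', "
                f"{old.format(c=c)}, {new.format(c=c)} WHERE {changed.format(c=c)};"
            )
        statements.append(
            f"CREATE TRIGGER {table}_log_{event.lower()} AFTER {event} ON {table} "
            "FOR EACH ROW BEGIN " + " ".join(body) + " END"
        )
    return statements


# Usage: audit a DEPARTMENTS-like table, then run some DML against it.
conn = sqlite3.connect(":memory:")
conn.executescript(AUDIT_SCHEMA)
conn.execute("CREATE TABLE departments (department_id INTEGER PRIMARY KEY, "
             "department_name TEXT, manager_id INTEGER)")
for ddl in generate_audit_triggers(conn, "departments"):
    conn.execute(ddl)
conn.execute("INSERT INTO departments VALUES (10, 'Administration', NULL)")
conn.execute("UPDATE departments SET manager_id = 200 WHERE department_id = 10")
conn.commit()
```

After the UPDATE, only the `manager_id` column gets a detail row, because the NULL-safe `IS NOT` comparison skips unchanged columns while still catching the change from NULL to 200.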

Thanks.

#sql#plsql#oracle#oracle dba tutorial#table auditing in oracle database#trigger in oracle#trigger in database


Oracle Database: What It Is And How It Works

Introduction:

Oracle Database is a widely recognized relational database management system (RDBMS) crucial in the IT architecture of numerous global businesses. This article delves into the fundamentals of Oracle Database, elucidating its architecture, key features, and operational principles.

Understanding Oracle Database:

Oracle Database's main goal is to store and manage enormous volumes of data effectively. It utilises a relational model, organising data into tables composed of rows and columns. This structure establishes relationships between entities, facilitating efficient data retrieval and management. To enhance your database management skills, consider enrolling in Oracle Training In Chennai for comprehensive and hands-on learning.

Key Features:

Scalability: One of Oracle Database's standout features is scalability. It can seamlessly adapt to the evolving needs of businesses by handling increased data volumes and user loads without compromising performance.

Security: Oracle Database prioritises data security with robust encryption, access controls, and auditing features. This guarantees the safeguarding of sensitive information from unauthorised access.

High Availability: Organisations rely on Oracle Database for its high availability features, including data replication, backup, and recovery mechanisms. This minimises downtime and safeguards against data loss.

Performance Optimization: The database employs various optimization techniques, such as indexing and caching, to enhance query performance. This ensures that data retrieval is swift and efficient, even when dealing with extensive datasets.

Architecture:

Oracle Database's architecture is intricate, comprising multiple components that collaborate to ensure seamless data management.

Instance: The database instance is at the core of Oracle Database. An instance is the collection of memory structures and background processes that runs the database. With Oracle Real Application Clusters (RAC), a single database can be served by multiple instances, enhancing performance and availability.

Datafiles: Oracle Database stores data in datafiles, physical files on the disk. These files contain tables, indexes, and other database objects.

Redo Log Files: These files record every change made to the database. Redo log files are essential for recovery after a failure, because replaying the recorded changes lets the database recover committed work and return to a consistent state.

Control Files: Control files maintain metadata about the database, including the database name, file locations, and log file details. They are critical for database startup and recovery processes.

How Oracle Database Works:

SQL Processing: Oracle Database interacts with users and applications through SQL (Structured Query Language). SQL statements are processed by the Oracle SQL engine, which optimises and executes queries.

Transaction Management: Oracle Database employs a robust transaction management system to maintain the consistency and integrity of data. Transactions are a sequence of one or more SQL statements executed as a single unit.

Concurrency Control: Oracle Database uses concurrency control mechanisms to ensure data consistency in multi-user environments. This prevents conflicts between concurrent transactions and keeps each transaction isolated from the others.

Data Storage And Retrieval: Oracle Database uses a sophisticated storage management system, including the Buffer Cache for frequently accessed data and the Data Dictionary, a metadata repository about the database.
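The transaction-management point above (several SQL statements executed as a single unit) can be demonstrated in a few lines. The sketch uses Python's sqlite3 module as a stand-in for Oracle (the COMMIT/ROLLBACK idea is the same, the API is not): a transfer runs two UPDATE statements as one unit, and when the second one violates a CHECK constraint, the first is rolled back too. The accounts table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one transaction; return False if it was rolled back."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:  # e.g. the CHECK (balance >= 0) constraint failed
        return False


def balances(conn):
    return [row[0] for row in conn.execute("SELECT balance FROM accounts ORDER BY id")]
```

Transferring 30 from account 1 succeeds; a follow-up transfer of 500 fails on the debit, and the credit already applied to account 2 is undone as well, leaving both balances unchanged.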

The Software Training Institute In Chennai offers diverse courses to equip aspiring professionals with the latest skills and knowledge in the ever-evolving software industry.

Backup and Recovery:

Oracle Database provides comprehensive backup and recovery mechanisms to safeguard data against accidental loss or corruption. Regular backups, combined with features like Oracle Recovery Manager (RMAN), enable organisations to restore data quickly and efficiently during a failure.

Conclusion:

Oracle Database is a cornerstone in relational database management systems, offering unparalleled features, scalability, and security. Understanding its architecture and operational principles is imperative for organisations harnessing the power of data to drive their success.


Designation- SPI Specialist (Instrumentation) – design and engineering role

Qualification- Bachelor Degree in Instrumentation / Electronics.

Experience-Minimum 12 years of experience as SPI administrator in EPC / PMC company

• Minimum 5 years of experience with detailed design engineering projects with the Instrumentation team, having delivered documents / dwg from SPI

Location- PDO Oman (Petroleum Development Oman)

He should have knowledge of:

• Minimum 5 years of experience with detailed design engineering projects with the Instrumentation team, having delivered documents / drawings from SPI

• Hands-on experience with SPI 2016 and the latest SI 13.1 version

• Extensive experience with the SPI merger utility, having merged at least 20 databases

• SQL queries development and execution, SQL developer

• SPI oracle table structures and entity relations

• GAP analysis, SPI audit reports

• (Detailed JD on request)

Global Outsourcing

Keshav Encon PVT LTD, Vadodara, India

+91- 8320853610

https://keshavencon.com/

#Job#Recruitment#Hiring#Jobplacement#Latestjobs#Overseasrecruitment#Recruiter#Keshavencon#Vadodara#India#newjobs#Vacancy#Engineers#Engineering#recruiting#career#employment#jobseekers#jobvacancy#jobopening#nowhiring#staffing


Mysql workbench for mac

#Mysql workbench for mac install#

#Mysql workbench for mac license#

#Mysql workbench for mac download#

MySQL Workbench (Download and Installation)

MySQL Workbench is a unified visual database design and graphical user interface tool for database architects, developers, and database administrators. It is developed and maintained by Oracle. It provides SQL development, data modeling, data migration, and comprehensive administration tools for server configuration, user administration, backup, and much more. We can use it to create new physical data models and E-R diagrams, and for SQL development (running queries, etc.). It is available for all major operating systems, including macOS, Windows, and Linux. MySQL Workbench fully supports MySQL Server version 5.6 and higher.

MySQL Workbench covers five main functionalities, which are given below:

SQL Development: This functionality lets you execute SQL queries and create and manage connections to database servers with the help of the built-in SQL editor.

Data Modelling (Design): This functionality lets you create models of the database schema graphically, perform reverse and forward engineering between a schema and a live database, and edit all aspects of the database using the comprehensive Table editor. The Table editor provides facilities for editing tables, columns, indexes, views, triggers, partitioning, etc.

Data Migration: This functionality allows you to migrate tables, objects, and data from Microsoft SQL Server, SQLite, Microsoft Access, PostgreSQL, Sybase ASE, SQL Anywhere, and other RDBMSs to MySQL. It also supports migrating from previous versions of MySQL to the latest releases.

Server Administration: This functionality enables you to administer MySQL Server instances by administering users, inspecting audit data, viewing database health, performing backup and recovery, and monitoring the performance of MySQL Server.

MySQL Enterprise Support: This functionality provides support for Enterprise products such as MySQL Firewall, MySQL Enterprise Backup, and MySQL Audit.

MySQL Workbench is mainly available in three editions, which are given below:

The Community Edition is an open-source, freely downloadable version of the most popular database system. It is released under the GPL license and is supported by a huge community of developers.

The first commercial edition provides the capability to deliver high-performance and scalable Online Transaction Processing (OLTP) applications. It has made MySQL famous for its industrial strength, performance, and reliability.

The second commercial edition includes a set of advanced features, management tools, and technical support to achieve the highest scalability, security, reliability, and uptime. This edition also reduces the risk, cost, and complexity of developing, deploying, and managing MySQL applications.

Let us understand it with the following comparison chart.

Here, we are going to learn how to download and install MySQL Workbench. The following requirements should be available on your system to work with MySQL Workbench:

MySQL Server: You can download it from here.

MySQL Workbench: You can download it from here.

Microsoft Visual C++ Redistributable for Visual Studio 2019.

Step 1: Install the MySQL Community Server. To install MySQL Server, double-click the MySQL installer .exe file; you can see the following screen:

Step 2: Choose the Setup Type and click on the Next button.


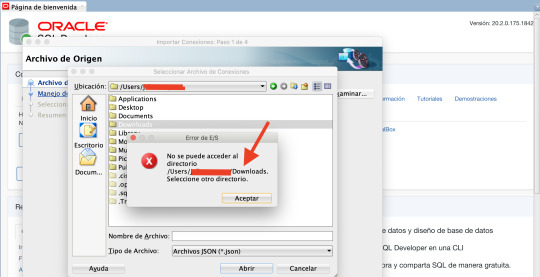

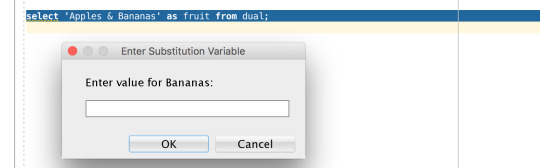

Sql Developer For Mac Os Catalina

I’m running SQL Developer 4.1.3.20.78 on Mac OS X El Capitan 10.11.5, with Java SDK 1.8.0_92 installed. The program works, but extreeeeemely slowly, as in “not working”. When I click something, the program seems to have problems “drawing” the objects.

hdiutil detach "/Volumes/Install macOS Catalina"

7) Convert the DMG file to an ISO file:

hdiutil convert /tmp/Catalina.dmg -format UDTO -o ~/Desktop/Catalina.cdr

How to learn SQL in OS X. Once you've got SQLite set up in Mac OS X, it's time to start learning how to use it. Fortunately, there is no shortage of courses and books out there to help you.
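One convenient way to practice is Python's standard-library `sqlite3` module, which talks to SQLite without any extra installation (this assumes a Python 3.9+ interpreter is available). The sketch below is illustrative only: the `notes` table and its sample rows are hypothetical.

```python
import sqlite3


def demo_session() -> list[tuple[int, str]]:
    """Create an in-memory SQLite database, insert two rows, and read them back."""
    # ":memory:" keeps the database in RAM, so nothing is written to disk.
    conn = sqlite3.connect(":memory:")
    try:
        # Hypothetical practice table; INTEGER PRIMARY KEY makes SQLite number the rows.
        conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")
        # "?" placeholders let sqlite3 bind values safely instead of pasting strings into SQL.
        conn.executemany("INSERT INTO notes (body) VALUES (?)", [("alpha",), ("beta",)])
        conn.commit()
        return conn.execute("SELECT id, body FROM notes ORDER BY id").fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in demo_session():
        print(row)  # prints (1, 'alpha') then (2, 'beta')
```

Replacing `":memory:"` with a file path such as `practice.db` (a hypothetical name) keeps the data between runs.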

For example, on a Windows system you may want to ensure that the SQL Developer folder and the AppData SQL Developer folder under Users are not sharable; and on a Linux or Mac OS X system you may want to ensure that the /.sqldeveloper directory is not world-readable.
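On Linux or Mac OS X, checking and tightening those permissions can be scripted. This is a minimal sketch using only Python's standard library; it assumes POSIX permission bits (so it does not cover the Windows sharing settings), and it takes the directory as a command-line argument rather than guessing where your SQL Developer settings live. The script name in the usage comment is hypothetical.

```python
import stat
import sys
from pathlib import Path


def is_world_readable(path: Path) -> bool:
    """Return True if users other than the owner and group ("others") can read path."""
    return bool(path.stat().st_mode & stat.S_IROTH)


def restrict_to_owner(path: Path) -> None:
    """Clear every group and other permission bit, leaving the owner's bits unchanged."""
    mode = stat.S_IMODE(path.stat().st_mode)
    path.chmod(mode & ~(stat.S_IRWXG | stat.S_IRWXO))


if __name__ == "__main__":
    # Usage (hypothetical script name): python check_perms.py <settings-directory>
    target = Path(sys.argv[1]).expanduser()
    if is_world_readable(target):
        restrict_to_owner(target)
        print(f"Removed group/other access from {target}")
    else:
        print(f"{target} is not world-readable")
```

`restrict_to_owner` also drops group access, which is stricter than "not world-readable"; masking only `stat.S_IRWXO` would keep group permissions intact.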

MySQL Workbench is a unified visual tool for database architects, developers, and DBAs. MySQL Workbench provides data modeling, SQL development, and comprehensive administration tools for server configuration, user administration, backup, and much more. MySQL Workbench is available on Windows, Linux and Mac OS X.


Design


MySQL Workbench enables a DBA, developer, or data architect to visually design, model, generate, and manage databases. It includes everything a data modeler needs for creating complex ER models, forward and reverse engineering, and also delivers key features for performing difficult change management and documentation tasks that normally require much time and effort.

Develop

MySQL Workbench delivers visual tools for creating, executing, and optimizing SQL queries. The SQL Editor provides color syntax highlighting, auto-complete, reuse of SQL snippets, and execution history of SQL. The Database Connections Panel enables developers to easily manage standard database connections, including MySQL Fabric. The Object Browser provides instant access to database schema and objects.

Administer

MySQL Workbench provides a visual console to easily administer MySQL environments and gain better visibility into databases. Developers and DBAs can use the visual tools for configuring servers, administering users, performing backup and recovery, inspecting audit data, and viewing database health.

Visual Performance Dashboard

MySQL Workbench provides a suite of tools to improve the performance of MySQL applications. DBAs can quickly view key performance indicators using the Performance Dashboard. Performance Reports provide easy identification of and access to IO hotspots, high-cost SQL statements, and more. Plus, with one click, developers can see where to optimize their query with the improved and easy-to-use Visual Explain Plan.

Database Migration

MySQL Workbench now provides a complete, easy-to-use solution for migrating Microsoft SQL Server, Microsoft Access, Sybase ASE, PostgreSQL, and other RDBMS tables, objects, and data to MySQL. Developers and DBAs can quickly and easily convert existing applications to run on MySQL, both on Windows and other platforms. Migration also supports migrating from earlier versions of MySQL to the latest releases.


Installation Guide

Release 17.4

E92383-01

December 2017

Provides information for installing the Oracle SQL Developer tool on Windows, Linux, and Mac OS X systems.

Oracle SQL Developer Installation Guide, Release 17.4


Copyright © 2005, 2017, Oracle and/or its affiliates. All rights reserved.

Primary Author: Celin Cherian


Contributors: Ashley Chen, Barry McGillin, Kris Rice, Jeff Smith

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable:

U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are 'commercial computer software' pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. No other rights are granted to the U.S. Government.

This software or hardware is developed for general use in a variety of information management applications. It is not developed or intended for use in any inherently dangerous applications, including applications that may create a risk of personal injury. If you use this software or hardware in dangerous applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages caused by use of this software or hardware in dangerous applications.

Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Intel and Intel Xeon are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Opteron, the AMD logo, and the AMD Opteron logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group.

This software or hardware and documentation may provide access to or information about content, products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to third-party content, products, and services unless otherwise set forth in an applicable agreement between you and Oracle. Oracle Corporation and its affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of third-party content, products, or services, except as set forth in an applicable agreement between you and Oracle.


Top 50 SQL Interview Questions and Answers

Auto increment allows a unique number to be generated automatically whenever a new record is inserted into the table. MySQL uses the AUTO_INCREMENT keyword and SQL Server uses the IDENTITY keyword; Oracle traditionally uses a sequence, and Oracle 12c and later also support identity columns (GENERATED AS IDENTITY). This feature is mainly used to generate the primary key for the table. Normalization organizes the existing tables and their fields within the database, resulting in minimal duplication. It is used to simplify a table as much as possible while retaining the unique fields.

If you have very little to say about yourself, the interviewer may think you have nothing to say. I make myself feel great before the interview begins. With this question, the employer will evaluate how you prioritise your task list. I look forward to working with Damien again in the future. You might need a combination of different kinds of questions to fully cover the issue, and this may vary between individuals. Candidates come to interviews hoping to impress you.

A primary key is a special kind of unique key. A foreign key is used to maintain referential integrity between two data tables: it prevents actions that would destroy links between a child table and a parent table. A primary key is used to define a column that uniquely identifies each row. Null values and duplicate values are not allowed in the primary key column.

However, you may not be given this hint, so it is up to you to remember that in such a scenario a subquery is exactly what you need. After you go through the basic SQL interview questions, you are likely to be asked something more specific. And there's no better feeling in the world than acing a question you practiced.
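To see auto-numbered primary keys and a foreign key at work, the following sketch uses SQLite through Python's `sqlite3` module, chosen only because it needs no server. In SQLite the auto-increment keyword is spelled `AUTOINCREMENT`, and foreign keys are enforced only after `PRAGMA foreign_keys = ON`. The `customers` and `orders` tables and the sample names are hypothetical.

```python
import sqlite3


def build_schema() -> sqlite3.Connection:
    """Create a hypothetical parent table (customers) and child table (orders) in memory."""
    conn = sqlite3.connect(":memory:")
    # SQLite enforces foreign keys only when this pragma is switched on for the connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        """CREATE TABLE customers (
               id   INTEGER PRIMARY KEY AUTOINCREMENT,  -- auto-numbered, unique key
               name TEXT NOT NULL
           )"""
    )
    conn.execute(
        """CREATE TABLE orders (
               id          INTEGER PRIMARY KEY AUTOINCREMENT,
               customer_id INTEGER NOT NULL REFERENCES customers(id)  -- foreign key
           )"""
    )
    return conn


def rejected(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> bool:
    """Return True if SQLite refuses the statement because it breaks a constraint."""
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False


if __name__ == "__main__":
    conn = build_schema()
    for name in ("Ada", "Grace"):
        cur = conn.execute("INSERT INTO customers (name) VALUES (?)", (name,))
        print(name, "->", cur.lastrowid)  # generated keys: 1, then 2
    conn.execute("INSERT INTO orders (customer_id) VALUES (1)")
    print(rejected(conn, "INSERT INTO customers (id, name) VALUES (1, 'Dup')"))  # duplicate key
    print(rejected(conn, "INSERT INTO orders (customer_id) VALUES (99)"))  # no such parent
    print(rejected(conn, "DELETE FROM customers WHERE id = 1"))  # parent still has a child
```

Each of the last three checks prints `True`. The same ideas carry over to Oracle, MySQL, and SQL Server, though their DDL syntax differs.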
But if all you do is practice SQL interview questions while neglecting the basics, something is going to be missing. Asking too many questions might derail the interview and irritate the interviewers, but asking none will make you look unenthusiastic or unprepared. When you are taking the test, you should prioritise making sure that every part of it runs. Nerve coaching from interview experts can blast away your anxious feelings so that you can be laser-focused and surefooted once you land in the hot seat. Great, challenging, and I'm kind of proud I was able to solve it under such pressure. Those who pass the phone or video interview go on to the in-person interviews. Again, it's a tricky question, and not just in terms of working it out. Beyond allowing myself to get some screaming meemies out, I really enjoyed the chance to get a better feel for the campus and atmosphere. Knowing the very specific answers to some very particular SQL interview questions is great, but it's not going to help you if you're asked something unexpected. Don't get me wrong: targeted preparation can definitely help.

Keep in mind that this is not a NOT NULL constraint, and do not confuse the DEFAULT constraint with forbidding NULL entries. The default value for a column is set only when the row is first created and no value for that column is supplied in the INSERT. Denormalization is a database optimization technique for improving database performance by adding redundant data to one or more tables. Normalization is a database design technique that organizes tables to reduce data redundancy and data dependency. SQL constraints are a set of rules that restrict the insertion, deletion, or updating of data in the database.
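The difference between a DEFAULT value and a NOT NULL constraint is easy to check directly. This sketch uses SQLite via Python's `sqlite3` as a stand-in for whatever database you use; the `accounts` table, its columns, and the default values are hypothetical.

```python
import sqlite3


def make_accounts() -> sqlite3.Connection:
    """Create a hypothetical table contrasting DEFAULT with NOT NULL."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE accounts (
               id     INTEGER PRIMARY KEY,
               status TEXT DEFAULT 'active',      -- DEFAULT only: an explicit NULL is still accepted
               region TEXT NOT NULL DEFAULT 'EU'  -- DEFAULT plus NOT NULL: NULL is refused
           )"""
    )
    return conn


def try_insert(conn: sqlite3.Connection, sql: str) -> bool:
    """Run an INSERT and report whether SQLite accepted it."""
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError:
        return False
    return True


if __name__ == "__main__":
    conn = make_accounts()
    try_insert(conn, "INSERT INTO accounts (id) VALUES (1)")  # both columns omitted
    try_insert(conn, "INSERT INTO accounts (id, status) VALUES (2, NULL)")  # explicit NULL
    print(try_insert(conn, "INSERT INTO accounts (id, region) VALUES (3, NULL)"))  # False
    for row in conn.execute("SELECT id, status, region FROM accounts ORDER BY id"):
        print(row)  # (1, 'active', 'EU') then (2, None, 'EU')
```

The defaults are applied only to columns omitted from the INSERT; an explicit NULL bypasses the default and is rejected only where NOT NULL is declared.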
They limit the kind of data that can go into a table, maintaining data accuracy and integrity. CREATE: used to create the database or its objects, such as tables, indexes, functions, views, triggers, etc. A unique key is used to uniquely identify each record in a database. A CHECK constraint is used to limit the values or type of data that can be stored in a column. A primary key is a column whose values uniquely identify every row in a table. The main role of a primary key in a data table is to maintain the internal integrity of the table. Query vs. statement: the two terms are often used interchangeably, but there is a slight difference.

Listed here are various SQL interview questions and answers that reaffirm your knowledge of SQL and offer new insights into the language. Go through these SQL interview questions to refresh your knowledge before any interview.
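The following SQLite sketch, run through Python's `sqlite3` module, shows a unique key and a CHECK constraint rejecting bad rows. The `products` table, the SKU values, and the non-negative price rule are all hypothetical.

```python
import sqlite3
from typing import Optional


def make_products() -> sqlite3.Connection:
    """Create a hypothetical table with a UNIQUE column and a CHECK constraint."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE products (
               id    INTEGER PRIMARY KEY,
               sku   TEXT UNIQUE,                      -- unique key: no two rows may share a SKU
               price REAL NOT NULL CHECK (price >= 0)  -- CHECK restricts the allowed values
           )"""
    )
    return conn


def accepted(conn: sqlite3.Connection, sku: Optional[str], price: float) -> bool:
    """Try to insert one product; return False if a constraint rejects it."""
    try:
        conn.execute("INSERT INTO products (sku, price) VALUES (?, ?)", (sku, price))
    except sqlite3.IntegrityError:
        return False
    return True


if __name__ == "__main__":
    conn = make_products()
    print(accepted(conn, "A-1", 9.5))   # True
    print(accepted(conn, "A-1", 3.0))   # False: duplicate SKU
    print(accepted(conn, "B-2", -1.0))  # False: fails CHECK (price >= 0)
    print(accepted(conn, None, 1.0))    # True
    print(accepted(conn, None, 2.0))    # True: SQLite treats NULLs as distinct
```

Unlike a primary key, a unique column in SQLite accepts NULLs, and several NULLs do not count as duplicates. Engines differ here (SQL Server, for instance, allows only one NULL under a UNIQUE constraint), so check your own database's documentation.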