#the majority of our universal knowledge is assumption-based

Note

why do you hate "humans and earth are weird" posts and stuff?

Look, you can do whatever you want in fiction, I really don't care at the end of the day, it's just that like 70-80% of our problems stem from humans thinking we're uniquely special in some way, when we're really probably not, like at all, so I'm just not a fan of that trope.

#I think the human race thinks it's a lot smarter than it actually is#the majority of our universal knowledge is assumption-based#and we can't even tell otherwise because you quite literally cannot know what you don't know#we have all these assumptions on how life *has* to function when we've explored like 5% of our oceans#within which there is life that actively contradicts what we think we understand about the requirements of life#literally on our own planet there is life that contradicts what we think we know#but somehow so many people still have themselves convinced that life on Earth is uniquely special#when you literally *cannot* fucking know that!#the only thing we have uniquely going for us is pretentiousness and I'm not fucking joking#yeah it's real easy to think you're fucking special when you've never met anyone else before!

5 notes

Note

Hi there!

I've been a fan of yours for a while (I discovered you through your "You want to manifest your dream life?" post a few weeks back) and I appreciate the way you approach loass. It is so uncomplicated but it just resonates with me. Just good vibes all around on this blog (and you're pink, always a plus!)

That being said, I've noticed that not a lot of bloggers address the topic of procrastination, or - if they do - it's something along the lines of "just do it/you already have your desires/other loass phrase" While I know there is merit in what they say, it is a little demotivating when these blanket statements don't connect with me (and likely others).

I'm aware of the many causes of it (fear of failure, perfectionism, anxiety, etc) and while I have made major strides on my own, I feel it'd be beneficial to get some outside advice.

Do you have any tips to manage procrastination?

(also, if you don't mind, can I be your 🪷 anon? I'd love to come back, even just to chat)

omg hello dear!

yes you can be my 🪷 anon!

it brings me joy when i see people loving my blog and actually finding my posts interesting!

so, for the procrastination part, my advice is:

what do i do to manage procrastination?

First of all, i noticed something: people tend to make manifesting seem like it's a job, and they complicate it so much. i know why: we subconsciously believe that there's no way we can get whatever we want just by assuming that it's ours, and yes, it sounds too good to be true, but you'll need to accept that manifesting your desires is really simple and easy.

Procrastination may make you feel like giving up, or feel slow, lazy and much more, but we need to fix that.

First step: keep it light and simple.

Go back and remember what you did when you manifested something a month ago or a year ago. What did you think? What did you do?

Personally that helped me a lot, since i always seem to forget how to manifest like i used to.

Second step: go back to the basics.

What is the Law of assumption really?

It's a universal law that states that whatever you assume to be true will be true.

Do i really have to use the terms 3d and 4d?

In my opinion, no.

I don't use 3d and 4d terms anymore, i just decide it's mine and move on 🤷🏻‍♀️.

(if you want to explore more about the 3d and 4d, go on, it's your choice).

Do i have to persist?

I mean, if you already have it, why would you need to persist anyway? You assumed it's yours? Then it's yours, period.

Is it gonna take too much time? What if it delays? What if-

Those are all limiting beliefs. Time delays and things that would ruin your manifestation, signs, movements: none of them exist. You make them exist if you assume so; you're the creator after all.

How do i manage my anxiety and doubt while manifesting?

You know that little voice that is telling you that you don't have your desires? That's the ego. How to shut it up? Just tell it "no? I have it". Easy peasy. Don't fight it, just tell it that's not true and that you already have your desires.

When you find yourself getting stressed about time, just take a deep breath and say to yourself "no, i have it right now, my desires are mine".

How do i deal with the 3d?

You don't have to ignore the 3d; you just acknowledge it, remind yourself that you already have your desires and that what's happening in the 3d is gonna change, and move on.

Actually that's all i have to say; manifestation is truly easy and simple.

Which methods are better?

Honestly, that's based on your assumption: if you believe a method will work, then it will.

Assumption = reality.

I hope that cleared up everything for you, and made you understand that the Law of assumption is nothing but a part of your lifestyle. Speak it into existence, because it is.

Read this, it would help a lot:

Xoxo, Eli

#🪷 Anon#law of assumption#loa tumblr#loa blog#loa#law of manifestation#how to manifest#loassumption#void state#asks#anon ask#affirm and manifest 🫧 🎀✨ ִִֶָ ٠˟

23 notes

Text

Amane, indoctrination, and gaslighting

and why voting Amane innocent would be the best course of action

I've been wanting to write a big post on Amane talking about indoctrination and such. Because I see takes sometimes that make it clear the person doesn't really... Get It.

Most of what I'll be explaining comes from my personal experiences growing up.

Additionally, most of what I say when it comes to outcomes (i.e. "If x happens, Amane will do y") will be based on the assumption that realism, not entertainment, is prioritized in the writing and that there are no major holes in our knowledge of what's going on. Theoretically anything could happen since this is a fictional scenario and we don't know everything when it comes to the world, the cases, and the characters. Not to mention my situation was nowhere near as extreme as hers. So although I probably have a better understanding of it than most people, I definitely can't claim that I know what she's gone through.

Personal anecdotes I add to better support my points will be in the small font (this!) since I don't want them to distract from the main text and so that they can be easily skipped for those who may be worried about being triggered. But if anyone needs plain text descriptions, I'll happily provide them!

!! TW for child abuse, religious abuse, and cults !!

I recommend skipping my personal anecdotes if more detailed discussions about these topics are a trigger for you.

At the heart of "good" (read: successful) indoctrination is gaslighting.

Since gaslighting has been one of the many psychology terms completely watered down and distorted by the internet, I will define it just so we're all on the same page!

Gaslighting is a form of psychological manipulation used to make the victim question their own sanity, sense of reality, or power of reasoning.

Basically, you can't trust yourself. You can't trust your thoughts, your feelings, your interpretations, etc. You become completely reliant on other people (usually specific people who are the ones doing the gaslighting) to figure out what's real/true or not.

Toxic/extremist religious groups like to take gaslighting a step further though. Not only do they make it so you cannot trust yourself to judge what is right or not, they may also teach you that what feels wrong is actually right. You can see where this can start to cause some issues lol.

Anything your gut may tell you that contradicts what the group/cult leaders tell you—"this is wrong!", "this is bad!", "I don't want to do this..."—must be ignored. Because those feelings and thoughts, according to the leaders, are actually the sinful part of you trying to lead the good and faithful part of you astray. They make you question yourself to make sure you never question them.

They will figuratively or literally beat this into you until your first instinct is no longer to listen to your gut and do what it says, but to dismiss it and do what it's telling you not to do. Existing becomes a chronic power struggle between your unconscious mind and your conscious mind. Unfortunately, the fact that you're struggling often then gets used against you as proof that you need to follow their teachings. Because if you're unhappy, then you must be doing something wrong. You just need to have a little more faith, dedicate a little more time to the religion/group, go a little harder into your duties... Only then will you feel better—feel more enlightened.

An integral part in making all this work is isolation. If you don't somehow isolate the members, they may figure out that they're being manipulated and abused.

Now, isolation doesn't always mean purely physical isolation (though Amane is being isolated physically in at least some capacity). Psychological isolation is almost just as powerful. An almost universal psychological isolation tactic used by extremist groups and cults is the "Us vs Them" mentality. We can see this being very prominent with Amane. A lot of the things she talks about with regard to the cult involve an Us-vs-Them dynamic. There is "Us", the cult, and "Them", everyone else.

Personally, we were taught that those who weren't believers of our religion were out to get us or would, at the very least, get us hurt/killed somehow. We were told many people wanted us dead just for being believers. You had to be careful and watch out when interacting with non-believers; you couldn't trust them. God was constantly testing you via others, and you had to make sure you stayed faithful.

This in particular is why no matter if you vote guilty or innocent, that itself will not actually do anything to change her beliefs. Voting her guilty will not make her start to feel bad and then question her beliefs. Voting her innocent will not make her listen to us and then question her beliefs. If we make her have any doubt about the cult, that's just proof to her that what we're telling her is wrong and is just another "trial" from God for her to overcome. So, changing her beliefs should not be a factor considered when voting since it's completely irrelevant. Everything can be twisted to support the cult. That's just how it works.

I don't think any amount of punishment will make Amane "come to her senses". I mean... what could we possibly do to her that she hasn't already had to endure? Punishment will likely only escalate things even more. Not to mention that having a bit of a fascination with martyrdom isn't all that uncommon in those who have been religiously abused and indoctrinated. The threat of punishment may only serve to motivate her to double down on her beliefs and behavior. Not to say she wants and likes punishment. It's obvious she's both scared of punishment and wants it to stop. After all, that's most likely the motive behind the murder.

Even prior to Amane's age, I was already fantasizing about being a martyr. A part of me almost wanted to be killed for my religion and community. It was seen as something extremely admirable. The ultimate sacrifice, if you will. We were taught that if given the choice between saving yourself by denying your faith or letting yourself be hurt/killed by standing your ground, you should choose the latter. Of course, I also did not want that to happen at all. It scared me shitless. But we weren't allowed to be scared about that stuff. It was seen as questioning God and the religious authorities, which was completely taboo. So I had no choice but to "want" it.

Isolating Amane is the worst possible thing we could do to her. No one gets better from being isolated, and this goes double for people living in abusive environments. She's been isolated her whole life. The best thing for her would be spending time with the other prisoners without restrictions. The more time she spends around people who have no connection to the cult, the better. Trying to argue with those in cults about why they're wrong and why they are in a cult (because most don't even recognize they're in a cult due to the gaslighting, indoctrination, and stigma) will almost always backfire. The best thing to do is to just be there for them to have someone to interact with who is not a cult member.

The only reason I left the extremist religious community I grew up in was because I made a friend who was not affiliated with it. I don't think I would've been able to see that the conditions I was living in were Not Very Good without that friend. He didn't even really do anything to actively help me. Just learning more about the real world through him was enough to make me start looking closer at my life.

To vote her guilty would be to continue isolating her. Not just physically as the guilty prisoners get restrictions put on them, but it's also an inescapable psychological isolation. Innocent vs Guilty is just another Us vs Them dynamic.

I fear that, if she ends up guilty this trial, she will likely be voted guilty again in trial 3. Her aggression will probably only escalate as she feels herself becoming more and more cornered. And since I know many people are voting her guilty solely to make sure she doesn't hurt Shidou or other prisoners, I can only imagine what the voting will look like for her in trial 3 once she's forced to become even more aggressive to protect herself.

And tbh... I can't imagine that having a prisoner with 3 guilty verdicts will make for all that interesting of a story for them. Not that it would be boring, per se. But having variety would, in my opinion, be the most interesting and entertaining! So, if nothing else I've said has been able to sway those who vote her guilty, then think about the entertainment factor!

Please vote this severely traumatized 12 y/o girl innocent. We can give her so many secret cakes to eat.

#Milgram#milgram project#milgram amane#amane momose#milgram analysis#... i guess?#ミルグラム#yeah im pulling out all the stops for this#tw child abuse#tw religious abuse#tw cults#this is a bit less in-depth than i wanted. but i also have a horrible rambling problem and am attempting to be more concise LOL#ive got shit to say you know? /ref#im so passionate about amane#pls have mercy... free my girl...#im nervous about posting this 😔. not sure why#my social anxiety is horrible. but. i very much encourage discussion and such#for amane i will conquer my demons. they may know how to swim‚ but /i/ know how to poison the fucking water supply

111 notes

Note

hey there! i saw your post about british vs american people and as a working-class british person who is now based in the us, i wanted to add my two cents. i think something a lot of americans don't realise about brits is that our class system is so deep-rooted in our society that seemingly trivial things such as accents are in fact an integral part of it. accents in britain are not indicators of where you live or where you come from, but whether you are "rich" or "not rich". people with, say, east london or liverpudlian accents find it much harder to gain social status or high-paying jobs regardless of their education or financial situation because the assumption is always that they are poor and stupid. people who come from working-class backgrounds are mercilessly mocked and at worst actively discriminated against for their accents in traditionally middle-to-upper-class areas such as universities, and end up changing their accents to blend in, so it's really a self fulfilling cycle. slang such as "innit" or pronunciations such as "chewsday" are inherently working-class, and as such, people mocking them will be perceived as classism by most working-class brits. what you're seeing is not a country full of snobs unable to take criticism, but people who have been ridiculed for their accents their whole lives, and have the ability to strike back without major consequence. the problem here isn't either of you, it's the lack of knowledge surrounding each other's cultures, as a seemingly innocuous comment from you can be seen as a direct attack to someone else regardless of intent. this was very long and rambly but i hope it clears some things up! top of the mornin' to ya, guv'na, and maybe next time we can come together and roast the shit out of the english upper class :)

as a final note, id like to say that obviously none of this justifies the disproportionate response you describe in your post, and i hope that both of our fucked up systems improve soon and no-one innocent gets hurt in the process (<- this was very poorly articulated but i hope you understood what i was trying to say!)

I will admit, you have given me a new perspective on the matter of accents. I had figured there might be some form of class differences at play here, but I hadn’t realized just how deep those differences are rooted over in the UK.

In all honesty, taking a jab at accents was certainly not the best example to use, to put it lightly, and I apologize for that.

Thank you for taking the time to write this out; a friend of the working class is a friend of mine :)

8 notes

Text

Okay, so... the study actually is also not "testing how well people in the 21st century can understand the specific nuances of 19th century London". It was, as the article states, "designed to test how college students create meaning while they read," specifically English majors reading Bleak House. And the authors explain why these participants and why this text. The students at these universities enter college with ACT Reading scores that indicate they cannot read and understand a text like Bleak House. The authors of the study state:

As faculty, we often assume that the students learn to read at this level on their own, after they take classes that teach literary analysis of assigned literary texts. Our study was designed to test this assumption.

They know they are testing students who did not arrive at university ready to read this book. They also recognize that, as professors, they have been assuming they're teaching these students the skills they need to do so. After all, these are English majors, and 41% of them were specifically majoring in English Education, meaning they are likely planning to become English teachers. The authors state that "Bleak House is a standard in college literature classes and, so, is important for English Education students, who often are called on to teach Great Expectations and A Tale of Two Cities in high schools."

Additionally, preliminary to the main test, they administered a questionnaire to ascertain the students' knowledge of 19th century American and British literature, history, and culture. This is important because:

According to Wolfgang Iser in The Act of Reading, one’s ability to read complex literature is partly dependent on one’s knowledge of what he calls the “repertoire” of the text, “the form of references to earlier works, or to social and historical norms, or to the whole culture from which the text has emerged”. With Bleak House, this knowledge is crucial.

Based on the results of this questionnaire, they determined that the students "could not rely on previous knowledge to help them with Bleak House; in fact, they could not remember much of what they had studied in previous or current English classes."

So not only did these students arrive at university unable to read at the level needed to understand a text as complex as Bleak House, they also currently lack the contextual knowledge that would make that particular book more accessible to them.

The authors fully acknowledge these things. The point of this study is not to see if random modern people can rawdog Bleak House. It's to see how the typical English major at these universities—who started college unprepared to tackle a book this difficult, and who has not been successfully familiarized with 19th century literary contexts by their English classes so far—tries to understand Bleak House.

The OP in this thread says:

The study structure was 20 minutes to read aloud seven paragraphs. So, while one was allowed a quick Google or a peek at the dictionary, there isn't really time to do any sort of deep dive - this is a test of whether you are already familiar with this sort of work.

This is not accurate. Each subject was given the first seven paragraphs of Bleak House, and each session was 20 minutes. This was not a timed test: "Subjects were encouraged to go at their own pace and were not required to finish the entire passage," according to the article. They were not allowed just "a quick Google or a peek at the dictionary"; they could take all the time they wished. And again, it's not "a test of whether you are already familiar with this sort of work"; that was what the preliminary questionnaire was for, and it established that the students did not have such familiarity.

OP also states that "every few sentences, the facilitator would poke the subject to explain the last few sentences," which is also inaccurate: the article states that "subjects were asked to read out loud and then translate each sentence". And to the second poster's concern about reading aloud: "Those who were uncomfortable reading out loud had the option to read silently." The authors also cite previous research to justify their use of the "think-aloud" method and the choice to have participants interpret each individual sentence.

OP, again:

Not summarize, no: they wanted a full dissection. "Dickens is setting the atmosphere by describing the fog" was considered a failure of comprehension.

The participant whose response OP seems to be referencing was evaluated as belonging to the "problematic readers" group, who "had no successful reading tactics to help them understand Bleak House, so they became quickly lost and floundered throughout the reading test." This response was considered by the authors to be an example of the unsuccessful tactic of "oversimplifying—that is, reducing the details of a complex sentence to a generic statement." In the passage, Dickens is doing more than just "setting the atmosphere by describing the fog". He is specific about where the fog is, what the fog is doing, how it's affecting the people in it; he's using this description to draw us down into the London streets and finally into Lincoln's Inn, where the fog becomes "a symbol for the confusion, disarray, and blindness of the Court of Chancery." So, yes—if all a student gets from that passage is "he's describing the fog," they're failing to meaningfully comprehend what Dickens is saying and how he's saying it.

Poster #2 in this thread objects to the observation made by the author of a different thread (linked by the OP) that "struggling readers do not expect what they read to make sense." That person was relating a hypothesis they'd formed in the course of teaching: that if struggling readers are not helped to become better at reading and comprehending, they become used to not understanding what they read, and to drawing inaccurate conclusions about the meaning based on disparate familiar words or images. Poster #2 feels that the students' responses in this study do not suggest they read this way. But I'd argue they very much do suggest that. The examples from the study that the other thread's author cites illustrate this. One student reads the sentence describing the Chancellor being "addressed by a large advocate with great whiskers", fixates on the word whiskers, and guesses that there's a cat in the room. Another example is a student's interpretation of this sentence:

As much mud in the streets as if the waters had but newly retired from the face of the earth, and it would not be wonderful to meet a Megalosaurus, forty feet long or so, waddling like an elephantine lizard up Holborn Hill.

The student's interpretation that illustrates a misunderstanding of this imagery is that "everything’s been like kind of washed around and we might find Megalosaurus bones but he’s says they’re waddling, um, all up the hill." Dickens is not saying anything about finding bones, or that anything is waddling up the hill; he's saying the streets are so muddy it's as though we're at the dawn of creation with land having just emerged from the seas, and joking that you wouldn't be surprised to see a prehistoric beast (he specifically imagines this guy, depicted inaccurately but as was typical in Dickens' time) roaming Holborn Hill. (Note that the student could have looked up both these references.)

Poster #2 also seems to think that the study is failing to appreciate that a reader gains a better grasp on the text as they go. But the article says: "It is understandable that problematic readers skipped over 'In Chancery' and 'Lincoln’s Inn Hall' in the first two sentences because they could assume they would understand this language later in the reading." What concerned the authors is that the problematic readers' understanding of the text did not improve as they read, and they still didn't look up the unfamiliar terms.

Poster #2 says that they, personally, would expect to learn such things as what a Chancery is by reading the rest of Bleak House. But Dickens wrote this book with the expectation that the readers of his time already knew what it was. You may learn more things about it in the course of the book, but you're going in without any of the basic knowledge Dickens assumes you have; it's going to be harder for you to understand, and some critical aspects may elude you entirely. That's exactly the kind of thing this study was getting at—that the students made comprehension harder on themselves because they didn't use effective reading tactics. They didn't look things up when they could've, even when they seemed to recognize that they weren't fully grasping what they were reading.

This was not some malicious and cruel test to mock modern people's lack of familiarity with 19th century life and literature. It was an exploration of how English students tried to make sense of a book they could expect to encounter in their degree program and might have to teach some day. It was an attempt to answer the question, "Are we successfully teaching these students the things they need to know in order to grapple with the texts we assign them?" and the answer was no.

So no, I don't agree with the previous posters in this thread in their evaluation of this study, or of the post that tipped them off about it. I didn't get as much into that post here, but IMO it's an interesting take on students' struggles with reading comprehension by someone with experience in the field—and, frankly, someone who better understood the study than the people in this thread did. I don't want to be mean about it, but it is a bit ironic that a study about reading comprehension elicited such outrage, based apparently on people's incorrect comprehension of said study.

So I decided to read the actual study (link) - it's totally free. TL;DR: the study is testing how well people in the 21st century can understand the specific nuances of 19th century London. This is not "reading comprehension", they are testing whether you know things like what a "Michaelmas Term" (Wikipedia) is. This is... to put it politely, not a normal part of reading comprehension in any sort of day to day task. This study is exclusively about your ability to read and be familiar with the nuances of 19th century English Literature as a specific body.

The study structure was 20 minutes to read aloud seven paragraphs. So, while one was allowed a quick Google or a peek at the dictionary, there isn't really time to do any sort of deep dive - this is a test of whether you are already familiar with this sort of work.

---

Oh, but it wasn't just 20 minutes to read it out loud: every few sentences, the facilitator would poke the subject to explain the last few sentences. Not summarize, no: they wanted a full dissection. "Dickens is setting the atmosphere by describing the fog" was considered a failure of comprehension. The only explanation they provide that counts as a "pass" is almost twice as long as the actual passage itself!

It's not even really clear if they made it clear to the subjects that they were looking for this sort of verbose summary - the facilitator just replies "O.K." regardless of how detailed their response is.

I cannot imagine I would do terribly better, given 20 minutes to read aloud 7 paragraphs, and being constantly prodded to regurgitate the material at random intervals!

---

I really do NOT consider it worth reading, but here's a link to the original post for posterity's sake: https://www.tumblr.com/prettyboysdontlookatexplosions/783379386552516608?source=share

#update: I do in fact have Opinions about this thread#fynn posts#literature#research#how dare you say we piss on the poor

3K notes

Text

The World After Capital in 64 Theses

Over the weekend I tweeted out a summary of my book The World After Capital in 64 theses. Here they are in one place:

The Industrial Age is 20+ years past its expiration date, following a long decline that started in the 1970s.

Mainstream politicians have propped up the Industrial Age through incremental reforms that are simply pushing out the inevitable collapse.

The lack of a positive vision for what comes after the Industrial Age has created a narrative vacuum exploited by nihilist forces such as Trump and ISIS.

The failure to enact radical changes is based on vastly underestimating the importance of digital technology, which is not simply another set of Industrial Age machines.

Digital technology has two unique characteristics not found in any prior human technology: zero marginal cost and universality of computation.

Our existing approaches to regulation of markets, dissemination of information, education and more are based on the no longer valid assumption of positive marginal cost.

Our beliefs about the role of labor in production and work as a source of purpose are incompatible with the ability of computers to carry out ever more sophisticated computations (and to do so ultimately at zero marginal cost).

Digital technology represents as profound a shift in human capabilities as the invention of agriculture and the discovery of science, each of which resulted in a new age for humanity.

The two prior transitions, from the Forager Age to the Agrarian Age and from the Agrarian Age to the Industrial Age, resulted in humanity changing almost everything about how individuals live and societies function, including changes in religion.

Inventing the next age will require nothing short of changing everything yet again.

We can, if we make the right choices now, set ourselves on a path to the Knowledge Age which will allow humanity to overcome the climate crisis and to broadly enjoy the benefits of automation.

Choosing a path into the future requires understanding the nature of the transition we are facing and coming to terms with what it means to be human.

New technology enlarges the “space of the possible,” which then contains both good and bad outcomes. This has been true starting from the earliest human technology: fire can be used to cook and heat, but also to wage war.

Technological breakthroughs shift the binding constraint. For foraging tribes it was food. For agrarian societies it was arable land. Industrial countries were constrained by how much physical capital (machines, factories, railroads, etc.) they could produce.

Today humanity is no longer constrained by capital, but by attention.

We are facing a crisis of attention. We are not paying enough attention to profound challenges, such as “what is our purpose?” and “how do we overcome the climate crisis?”

Attention is to time as velocity is to speed: attention is what we direct our minds to during a time period. We cannot go back and change what we paid attention to. If we are poorly prepared for a crisis it is because of how we have allocated our attention in the past.

We have enough capital to meet our individual and collective needs, as long as we are clear about the difference between needs and wants.

Our needs can be met despite the population explosion because of the amazing technological progress we have made and because population growth is slowing down everywhere with peak population in sight.

Industrial Age society, however, has intentionally led us down a path of confusing our unlimited wants with our modest needs, as well as specific solutions (e.g. individually owned cars) with needs (e.g. transportation).

The confusion of wants with needs keeps much of our attention trapped in the “job loop”: we work so that we can buy goods and services, which are produced by other people also working.

The job loop was once beneficial: when combined with markets and entrepreneurship, it resulted in much of the innovation that we now take for granted.

Now, however, we can and should apply as much automation as we can muster to free human attention from the “job loop” so that it can participate in the “knowledge loop” instead: learn, create, and share.

Digital technology can be used to vastly accelerate the knowledge loop, as can be seen from early successes, such as Wikipedia and open access scientific publications.

Much of digital technology is being used to capture human attention in systems such as Facebook, Twitter and others that engage in the business of reselling attention, commonly known as advertising. Most of what is advertised furthers wants and reinforces the job loop.

The success of market-based capitalism is that capital is no longer our binding constraint. But markets cannot be used for allocating attention due to missing prices.

Prices do not and cannot exist for what we most need to pay attention to. Price formation requires supply and demand, which don't exist for finding purpose in life, overcoming the climate crisis, conducting fundamental research, or engineering an asteroid defense.

We must use the capabilities of digital technology so that we can freely allocate human attention.

We can do so by enhancing economic, informational, and psychological freedom.

Economic freedom means allowing people to opt out of the job loop by providing them with a universal basic income (UBI).

Informational freedom means empowering people to control computation and thus information access, creation and sharing.

Psychological freedom means developing mindfulness practices that allow people to direct their attention in the face of myriad distractions.

UBI is affordable today exactly because we have digital technology that allows us to drive down the cost of producing goods and services through automation.

UBI is the cornerstone of a new social contract for the Knowledge Age, much as pensions and health insurance were for the Industrial Age.

Paid jobs are not a source of purpose for humans in and of themselves. Doing something meaningful is. We will never run out of meaningful things to do.

We need one global internet without artificial geographic boundaries or fast and slow lanes for different types of content.

Copyright and patent laws must be curtailed to facilitate easier creation and sharing of derivative works.

Large systems such as Facebook, Amazon, Google, etc. must be mandated to be fully programmable to diminish their power and permit innovation to take place on top of the capabilities they have created.

In the long run, privacy is incompatible with technological progress. Providing strong privacy assurances can only be accomplished via controlled computation. Innovation will always grow our ability to destroy faster than our ability to build due to entropy.

We must put more effort into protecting individuals from what can happen to them if their data winds up leaked, rather than trying to protect the data at the expense of innovation and transparency.

Our brains evolved in an environment where seeing a cat meant there was a cat. Now the internet can show us an infinity of cats. We can thus be forever distracted.

It is easier for us to form snap judgments and have quick emotional reactions than to engage our critical thinking faculties.

Our attention is readily hijacked by systems designed to exploit these evolutionarily engrained features of our brains.

We can use mindfulness practices, such as conscious breathing or meditation, to take back and maintain control of our attention.

As we increase economic, informational and psychological freedom, we also require values that guide our actions and the allocation of our attention.

We should embrace a renewed humanism as the source of our values.

There is an objective basis for humanism. Only humans have developed knowledge in the form of books and works of art that transcend both time and space.

Knowledge is the source of humanity’s great power. And with great power comes great responsibility.

Humans need to support each other in solidarity, irrespective of such differences as gender, race or nationality.

We are all unique, and we should celebrate these differences. They are beautiful and an integral part of our humanity.

Because only humans have the power of knowledge, we are responsible for other species. For example, we are responsible for whales, rather than the other way round.

When we see something that could be improved, we need to have the ability to express that. Individuals, companies and societies that do not allow criticism become stagnant and will ultimately fail.

Beyond criticism, the major mode for improvement is to create new ideas, products and art. Without ongoing innovation, systems become stagnant and start to decay.

We need to believe that problems can be solved, that progress can be achieved. Without optimism we will stop trying, and problems like the climate crisis will go unsolved, threatening human extinction.

If we succeed with the transition to the Knowledge Age, we can tackle extraordinary opportunities ahead for humanity, such as restoring wildlife habitats here on earth and exploring space.

We can and should each contribute to leaving the Industrial Age behind and bringing about the Knowledge Age.

We start by developing our own mindfulness practice and helping others do so.

We tackle the climate crisis through activism demanding government regulation, through research into new solutions, and through entrepreneurship deploying working technologies.

We defend democracy from attempts to push towards authoritarian forms of government.

We foster decentralization through supporting localism, building up mutual aid, and participating in decentralized systems (crypto and otherwise).

We promote humanism and live in accordance with humanist values.

We recognize that we are on the threshold of both transhumans (augmented humans) and neohumans (robots and artificial intelligences).

We continue on our epic human journey while marveling at (and worrying about) our aloneness in the universe.

We act boldly and with urgency, because humanity’s future depends on a successful transition to the Knowledge Age.


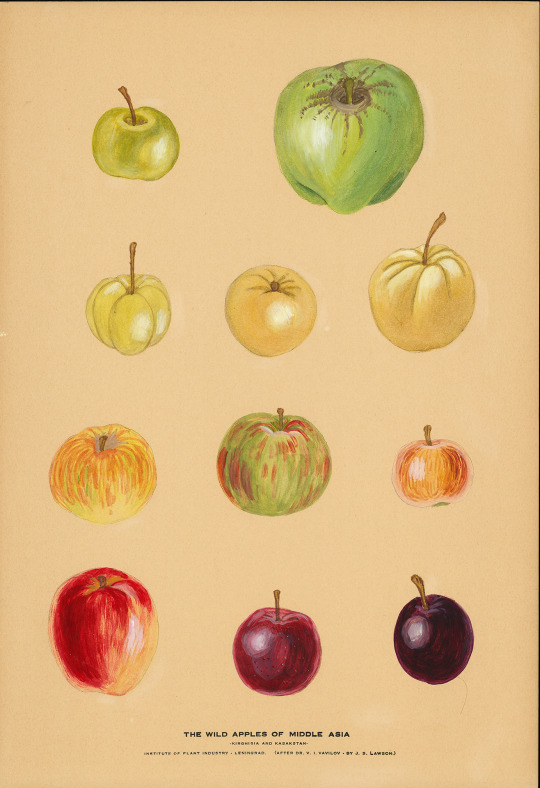

Seed Saver

Wild Apples of Middle Asia, produced by J.S. Lawson from field drawings made by Nikolai Vavilov (1 of 6 plates donated to Cornell professor of horticulture Richard Wellington by Nikolai Vavilov during the 6th International Genetics Conference held in Geneva, N.Y. in 1932; for an online look at the complete illustration set, please visit the Biodiversity Heritage Library at bit.ly/vavilov-apples).

The rare and distinctive collections vault at Mann Library houses hand-colored prints of wild apples and pears that tell an extraordinary story. The wild fruit specimens depicted were collected in Central Asia and the Caucasus by the Russian plant scientist Nikolai Vavilov (1887-1943) and his team of botanist colleagues over the course of extensive plant-finding expeditions during the early decades of the 1900s. These illustrations provide a full-color glimpse of the intrepid work undertaken by a pioneering life scientist to advance food security in Russia and the world beyond. Sadly, Nikolai Vavilov’s was a brilliant career that was cut brutally short. January 26th marks the anniversary of Vavilov’s death at fifty-five in a Soviet prison in Saratov, Russia. We post this piece today in profound esteem for his inspiring legacy.

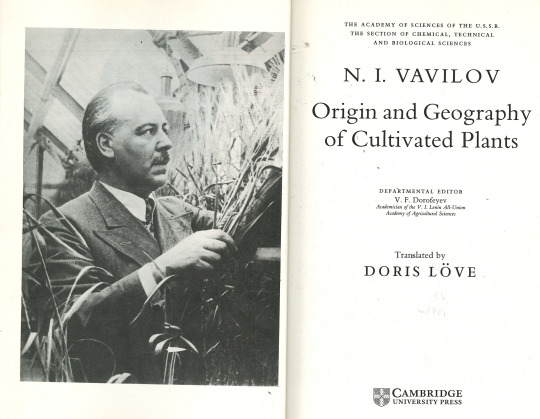

During his all-too-brief life, Nikolai Vavilov advanced the knowledge of genetics and plant science in innumerable ways, in both lab and field. His work on genetic homology led to the formulation of a scientific law, while his expeditions to remote parts of the world both founded the largest seed bank of his time and traced the origins of numerous crop species.

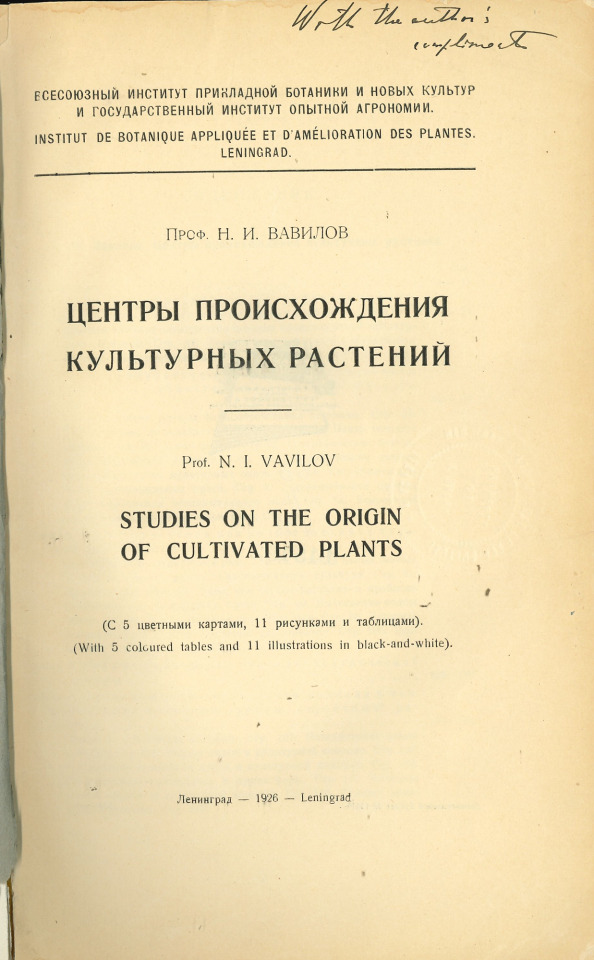

Original Russian edition of N.I.Vavilov’s Studies on the Origin of Cultivated Plants, Leningrad, 1926, donated to Cornell University by the author. The work was widely acclaimed by the world’s plant scientists for establishing the geographical origins of major food crops.

Born in 1887 just west of Moscow, Vavilov became an international star of Soviet science in the 1920s. He traveled widely, not just to collect specimens but to collaborate and share ideas with fellow scientists around the world. In 1932 Vavilov came to Ithaca to participate in the 6th International Congress on Genetics, hosted at Cornell University.

The Sixth International Congress on Genetics, Cornell University, 1932; excerpt shows Nikolai Vavilov among his international colleagues.

He wasn't here simply to lecture, though; afterwards, writing for a Soviet audience, he made an effort to bring what he learned in Ithaca to the USSR. An excellent example is what Vavilov wrote about Dr. Barbara McClintock, who was researching maize genetics at Cornell:

"For our understanding of chromosomes, the star exhibit was the work of the young assistant to Cornell University, Miss McClintock. She displayed her remarkable preparations of corn chromosomes, allowing one to see the chromosomes' internal structure. This new technique made it possible to visually distinguish chromosomes, and to identify discrete chromosomal regions. Her astute understanding of the genetic background of corn could be seen by looking at the maps she created. These maps charted the distribution of genes over cytological scans, making it possible to connect the internal structure of the chromosome with the external phenomena of the genes. Miss McClintock was able to capture the conjugation of non-homologous chromosomes, and map the chromosomal sectioning of corn. This discovery is of huge significance, as all modern conceptions of genetics are based on the assumption that only homologous chromosomes conjugate."

(Written by N. I. Vavilov for the All-Soviet Academy of Agricultural and Rural Sciences in the name of Lenin: Institute of Applied Botany of USSR, available online in Russian at the All-Russian Research Institute of Plant Genetics. Translated to English by Anya Osatuke)

Unfortunately, the 1932 Genetics Congress in Geneva, N.Y. was the last the world outside of Russia would see of Nikolai Vavilov. By the 1930s, Vavilov’s line of research in plant genetics had fallen deeply out of favor with the Stalin regime, which sought to purge Soviet institutes of science of any scholarship that argued with the principles of Lysenkoism, a since-debunked doctrine of the Soviet era that rejected Mendelian genetics and asserted an inexorable passing along of environmentally influenced traits from all organisms to their offspring. Increasingly pressured by the Stalin government and edged out by ideological pseudo-science, Vavilov was barred from travel until his arrest in 1940. Three years later, he perished imprisoned, likely from starvation.

By the early 1950s, the political tides in the Soviet Union were turning. Soviet science under the regime of Nikita Khrushchev slowly abandoned Lysenkoism. In 1987, a celebration marked the official rehabilitation of Vavilov and his scientific contributions in the eyes of the Soviet government. A postage stamp was issued in Vavilov’s honor and a collection of his papers on food crop origins was published, with an English translation produced five years later and reissued in 1997. Today, Russia’s premier institute for genetics research bears Vavilov’s name: the Vavilov Institute of General Genetics in Moscow. And the institute that Vavilov founded over a century ago, the Institute for Plant Genetic Resources in St. Petersburg, which keeps a collection of seeds of thousands of food plant varieties and has an extraordinary story of its own to tell, continues to be a world-renowned center for the preservation of agrobiodiversity.

The important story of Nikolai Vavilov is discussed in more detail in the exhibit Cultivating Silence: Nikolai Vavilov and the Suppression of Science in the Modern Era, which opened in the Mann Library lobby in October 2021 and can now also be viewed at exhibits.library.cornell.edu/cultivating-silence.

#vaults of mann#CornellRAD#vavilov#botany#science history#agricultural history#agriculture#plant science#Cornell University Library


Secrets of the Deltarune

Okay so I was taking a closer look at the Deltarune and I started to notice some really weird things. It’s a symbol for the Kingdom of Monsters, right? Wrong. Gerson tells us “That's the Delta Rune, the emblem of our kingdom. The Kingdom... Of Monsters.” Okay, so it’s the same thing, right? Nope. I looked up emblem and its distinction from symbol. A symbol represents an idea, a process, or a physical entity, while an emblem is often an abstract image that represents a concept like a moral truth or an allegory. And when it is used for a person, it is usually a king, a saint, or even a deity. An emblem crystallizes in concrete, visual terms some abstraction: a deity, a tribe or nation, or a virtue or vice. It can be worn as an identifier, say as a patch or on clothing or armor, or carried on a flag, banner, or shield.

So what does it matter? Well, Gerson even tells us why. “That emblem actually predates written history. The original meaning has been lost to time...” Hold up. Predates written history? The beginning of written history is approximately 5500 years ago, somewhere around 3400 B.C.E. That’s a long time. And the prophecy that goes with the symbol talks about the Underground going empty, so it can only really be as old as the War Between Humans and Monsters. But... when was that? The game doesn’t tell us the exact dates.

Well, we have a couple of clues. At the beginning of the game we have a little cutscene of the war and then a bit where we see a human going up the mountain only to fall down into the Underground. Most players assume that this is you, beginning your adventure. Except it’s not. Later in the game, when you SAVE Asriel in the True Pacifist Route, we’re shown another cutscene with the exact same human figure in EXACTLY the same position, being helped by a very young Asriel and the silhouette of Toriel. It’s Chara, not Frisk. So our date of 201X (2010-2019) takes place long before Frisk even arrives. We don’t know how long before.

That really doesn’t help with when they were first thrown down there though. So I took a look at the images before that, of the war. The first image shows a human who is very different from the later pictures. Both the make of the spear and the animal hide-like clothing suggest that it’s probably stone age. The text tells us a very general “Long Ago” when describing how both races ruled the earth together. In the next two images we’re shown the actual war. The crowd of humans have various things like torches and spears. Those diamond type spears are very similar to Roman Pilums.

The Human figure with a sword was interesting though. He wears a mantle (cape or cloak) and is carrying a sword. Though there’s not much detail, we can still identify the general time period of the sword. The size isn’t big enough for a proper claymore or longsword, or even a hand and a half sword. Since our figure appears to be moving forward (and we can guess that it’s not in a friendly manner, given the context) while still holding the sword in one hand instead of two, it’s probably a one-handed broadsword. It also has a cruciform (cross-shaped) hilt that is slightly curved. The blade is quite wide with what appear to be straight edges (based on two images with limited detail). And it has a very narrow Ricasso, an unsharpened length of blade just above the guard or handle. Ricassos were used all throughout history, but they’re pretty notable for the Early Medieval Period in Europe. And the rest of the sword (blade type, length, crossguard, and method of use) is very reminiscent of a Medieval Knightly Arming Sword, the prominent type of sword in that period from the 10th to 13th centuries. So I had to take a closer look at my spears. Turns out, they actually more closely resemble a medieval cavalry lance or javelin. And many Javelins have their root in the style of the Roman Pilums, including the sometimes diamond shaped tips.

The sword and mantle of the figure heavily suggest he’s a knight, and backed up by the spear carriers we can guess that it’s the Early Medieval Period, possibly the beginning of the Romanesque Period. So that would place us roughly a thousand and at least ten years before Chara fell into the Underground in 201X.

Asgore was certainly alive back then. In the Genocide Route Gerson says “Long ago, ASGORE and I agreed that escaping would be pointless...Since once we left, humans would just kill us.” And in the Post-Pacifist, when you go back to talk to everyone, he’ll say this when talking about Undyne: “I used to be a hero myself, back in the old days. Gerson, the Hammer of Justice.” He even talks about how Undyne would follow him around when he was beating up bad guys, and try to help by enthusiastically attacking people at random, such as the mailman. This tells us that Gerson and Asgore are as old as the original war and that both had been part of the battle. And both lived long enough to survive till now.

Gerson is quite old looking, while Asgore is not. He explains this by saying that Boss Monsters don’t age unless they have children, and then they age as their children grow; otherwise they’ll be the same age forever. But Undyne doesn’t appear to be old. And I started to wonder how long normal monsters lived in comparison to Boss Monsters. A long time for sure. From the Undertale 5th Anniversary Alarm Clock Dialogue we can learn that Asgore once knew a character called Rudy (who also appears in the Deltarune Game), whom he met at Hotland University and who appeared to be generally the same age as Asgore. Since it takes place in Hotland we know that they were already underground, that Asgore was King and was already doing his Santa Claus thing, and that Asgore was trying to find ways to occupy his time aside from actually Ruling. In the dialogue he tells us that Rudy began to look older than him. “I was there for it all. His Youth, his Marriage, his Fatherhood. Then, suddenly, one day... he fell down. ... Rudy... I... was never able to show you the sun.”

Monsters can live a long time. But Boss Monsters, as long as they don’t have a child, can live nearly forever as long as they aren’t killed. Based on that, Undyne is probably quite young and Gerson is incredibly old even for a Monster, and yet only recently has he stopped charging around fighting bad guys. Since Undyne was with him, those bad guys were in the Underground, and his singling out of her attacking not-so-bad folk like the mailman means that he was probably fighting crime in an official capacity, such as a guardsman, or maybe captain of the royal guard.

So, even though there’s plenty of time for a prophecy to spring up naturally, we have a number of Monsters who have actually lived that long and would be more than happy to correct mistakes and assumptions. Gerson is quite elderly and is a tad forgetful, but he still knows much. Characters such as Toriel and Asgore are still hale and hearty, and both have witnessed so much. Though we know very little about the character, Elder Puzzler is also implied to be quite aged and knows a great deal about the “Puzzling Roots” of Monster History. You’re probably wondering what all of this is leading to. Well, with these characters in place to maintain knowledge of history in the populace, we have an Underground which created a prophecy AFTER it was trapped there, which leads me to conclude that when the prophecy was created, it must have been referencing something older than the War of Monsters and Humans.

“The original meaning has been lost to time... All we know is that the triangles symbolize us monsters below, and the winged circle above symbolizes... Somethin' else. Most people say it's the 'angel,' from the prophecy...” ‘Angel’. This is when we hear about the angel. We see the Deltarune on Toriel’s clothing and on the Ruins door, as well as behind Gerson himself. The thing he mentions clearly has wings of some kind, surrounding a ball (note to self: Look into possible connection between mythical ball artifact from the piano room and the Deltarune Emblem). It looks a little like the fairy from the Zelda series.

Those “triangles” are the Greek letter Delta. That letter has a lot of connections and meanings to it. A river delta is shaped like the letter, which is how it got its name. There are a number of maths and science connections. But the two connections you’d be interested in are these. First, a Delta chord is another name for a Major Seventh Chord in music. The soundtrack of Undertale uses these chords to do fantastic things with the tone and aesthetic of its leitmotifs, changing them from a happy or hopeful tune to a dark and despairing one without actually changing the melody. Second, in a subfield of Set Theory, a branch of mathematics and philosophical logic, it is used to examine the conditions under which one or the other player of a game has a winning strategy, and the consequences of the existence of such strategies. The games studied in set theory are usually Gale–Stewart games: two-player games of perfect information (each player, when making any decision, is perfectly informed of all the events that have previously occurred, including the "initialization event" of the game (e.g. the starting hands of each player in a card game)) in which the players make an infinite sequence of moves and there are no draws.

But why is one of them turned upside down? I started looking things up again. Turns out there is such a symbol. The Nabla symbol is the Greek Letter Delta, only inverted so that it appears upside down. Its name comes from the Phoenician harp shape, though it’s also called the “Del”. A musical connection is exactly what Toby would do. But its main use is in mathematics, where it is a notation to represent three different operators which make equations infinitely easier to write. These equations are all concerned with what is called Physical Mathematics. That is... Mathematics that has to do with calculating and measuring the physical world. Why is that relevant? Well, the difference between humans and monsters is that humans have physical bodies while monsters are made primarily of magic.

Well, I also discovered that the Delta symbol for the ancient Greeks was sometimes used as an abbreviation for the word δύση, which meant the West in the compass points. West, westerly, sunset, twilight, nightfall, dusk, darkness, decline, end of a day. All this symbolism for a couple of triangles. There are entire books devoted to them.

And he calls the whole symbol, deltas and angel alike, the Delta RUNE. What’s a rune? Well, a rune is a letter, but specifically a letter from the writing of one of the Germanic Languages before the adoption of the Latin alphabet. Interestingly... the Greek Letter Delta does NOT qualify as a Rune. By any stretch of the word. I searched for hours. What I DID find was the etymological origins of the word Rune. It comes from a Proto-Germanic word “rūnō” which means something along the lines of “whisper, mystery, secret, secret conversation, letter”. Interesting. So since it’s paired up with the Delta... it could be taken to mean “The Secret of the Delta” or “The Delta’s Secret”. If we make a few assumptions we might even get something like “The Secret of the West” or “The Mystery of the Twilight” or numerous other variations that have different connotations. It’s conjecture, certainly, and possibly a few stretches. But it is certainly there to think about.

My thoughts centered around the positioning of the letters. The idea that the one facing down represented Humanity, and the two ordinary Deltas were Monsters. With the Angel above them all. Or rather, SOMETHING above them all. We have no proof that the idea of an Angel existed before the Underground’s prophecy. I like to think it did, because usually that sort of thing draws on previously existing beliefs and ideas. For all we know the symbol could represent an abstract idea that governed both monsters and humans. Like “Kill or be killed” or “Do unto others as you would have others do unto you” or other basic idiomatic ideologies of that sort.

Other than the realization that the Deltarune is older than the prophecy and the Underground, I didn’t have a concrete idea of what the Emblem actually means. Just a lot of theories and connective ideas. But there’s certainly a lot to be found. I don’t really know how much thought Toby actually put into this, but he’s quite well known for secrets within secrets. So it’s possible he knew all this going in. If he’s anything like me, and I am notorious for writing this sort of twisting references within references within references into my stories, then he’s probably at least aware of an existing connection. It’s quite probable that the Deltarune is exactly what Gerson tells us: an emblematic set of symbols that is used to represent the continuing Kingdom of Monsters and has been since before written history. But as he says... it’s so old that it might have had a different meaning originally, whatever idea the Monsters wanted to remember, wanted to uphold enough to use it for their royal family and their kingdom, a reminder. Of something, or someone.

#undertale#deltarune#undertale deltarune#delta#greek delta#delta symbol#rune#delta rune#nabla symbol#runes#symbols#emblems#mathematics#musical theory#medieval history#gerson undertale#emblem#undertale theory#undertale analysis#video game analysis#video game theory


Imagine that the US was competing in a space race with some third world country, say Zambia, for whatever reason. Americans of course would have orders of magnitude more money to throw at the problem, and the most respected aerospace engineers in the world, with degrees from the best universities and publications in the top journals. Zambia would have none of this. What should our reaction be if, after a decade, Zambia had made more progress?

Obviously, it would call into question the entire field of aerospace engineering. What good were all those Google Scholar pages filled with thousands of citations, all the knowledge gained from our labs and universities, if Western science gets outcompeted by the third world?

For all that has been said about Afghanistan, no one has noticed that this is precisely what just happened to political science. The American-led coalition had countless experts with backgrounds pertaining to every part of the mission on their side: people who had done their dissertations on topics like state building, terrorism, military-civilian relations, and gender in the military. General David Petraeus, who helped sell Obama on the troop surge that made everything in Afghanistan worse, earned a PhD from Princeton and was supposedly an expert in “counterinsurgency theory.” Ashraf Ghani, the just deposed president of the country, has a PhD in anthropology from Columbia and is the co-author of a book literally called Fixing Failed States. This was his territory. It’s as if Wernher von Braun had been given all the resources in the world to run a space program and had been beaten to the moon by an African witch doctor.

…

Phil Tetlock’s work on experts is one of those things that gets a lot of attention, but still manages to be underrated. In his 2005 Expert Political Judgment: How Good Is It? How Can We Know?, he found that the forecasting abilities of subject-matter experts were no better than those of educated laymen when it came to predicting geopolitical events and economic outcomes. As Bryan Caplan points out, we shouldn’t exaggerate the results here and provide too much fodder for populists; the questions asked were chosen for their difficulty, and the experts were being compared to laymen who nonetheless had met some threshold of education and competence.

At the same time, we shouldn’t put too little emphasis on the results either. They show that “expertise” as we understand it is largely fake. Should you listen to epidemiologists or economists when it comes to COVID-19? Conventional wisdom says “trust the experts.” The lesson of Tetlock (and the Afghanistan War) is that while you certainly shouldn’t be getting all your information from your uncle’s Facebook Wall, there is no reason to start with a strong prior that people with medical degrees know more than any intelligent person who honestly looks at the available data.

…

I think one of the most interesting articles of the COVID era was a piece called “Beware of Facts Man” by Annie Lowrey, published in The Atlantic.

…

The reaction to this piece was something along the lines of “ha ha, look at this liberal who hates facts.” But there’s a serious argument under the snark, and it’s that you should trust credentials over Facts Man and his amateurish takes. In recent days, a 2019 paper on “Epistemic Trespassing” has been making the rounds on Twitter. The theory that specialization is important is not on its face absurd, and probably strikes most people as natural. In the hard sciences and other places where social desirability bias and partisanship have less of a role to play, it’s probably a safe assumption. In fact, academia is in many ways premised on the idea, as we have experts in “labor economics,” “state capacity,” “epidemiology,” etc. instead of just having a world where we select the smartest people and tell them to work on the most important questions.

But what Tetlock did was test this hypothesis directly in the social sciences, and he found that subject-matter experts and Facts Man basically tied.

…

Interestingly, one of the best defenses of “Facts Man” during the COVID era was written by Annie Lowrey’s husband, Ezra Klein. His April 2021 piece in The New York Times showed how economist Alex Tabarrok had consistently disagreed with the medical establishment throughout the pandemic, and was always right. You have the “Credentials vs. Facts Man” debate within one elite media couple. If this was a movie they would’ve switched the genders, but since this is real life, stereotypes are confirmed and the husband and wife take the positions you would expect.

…

In the end, I don’t think my dissertation contributed much to human knowledge, making it no different than the vast majority of dissertations that have been written throughout history. The main reason is that most of the time public opinion doesn’t really matter in foreign policy. People generally aren’t paying attention, and the vast majority of decisions are made out of public sight. How many Americans know or care that North Macedonia and Montenegro joined NATO in the last few years? Most of the time, elites do what they want, influenced by their own ideological commitments and powerful lobby groups. In times of crisis, when people do pay attention, they can be manipulated pretty easily by the media or other partisan sources.

If public opinion doesn’t matter in foreign policy, why is there so much study of public opinion and foreign policy? There’s a saying in academia that “instead of measuring what we value, we value what we can measure.” It’s easy to do public opinion polls and survey experiments, as you can derive a hypothesis, get an answer, and make it look sciency in charts and graphs. To show that your results have relevance to the real world, you cite some papers that supposedly find that public opinion matters, maybe including one based on a regression showing that under very specific conditions foreign policy determined the results of an election, and maybe it’s well done and maybe not, but again, as long as you put the words together and the citations in the right format nobody has time to check any of this. The people conducting peer review on your work will be those who have already decided to study the topic, so you couldn’t find a more biased referee if you tried.

Thus, an IR scholar has two main options: use statistical methods that don’t work, or actually find answers to questions so narrow that they have no real-world impact or relevance. A smaller portion of academics in the field just produce postmodern-generator style garbage, hence “feminist theories of IR.” You can also build game theoretic models that, like the statistical work in the field, are based on a thousand assumptions that are probably false and no one will ever check. The older tradition of Kennan and Mearsheimer is better and more accessible than what has come lately, but the field is moving away from that and, like a lot of things, towards scientism and identity politics.

…

At some point, I decided that if I wanted to study and understand important questions, and do so in a way that was accessible to others, I’d have a better chance outside of the academy. Sometimes people thinking about an academic career reach out to me, and ask for advice. For people who want to go into the social sciences, I always tell them not to do it. If you have something to say, take it to Substack, or CSPI, or whatever. If it’s actually important and interesting enough to get anyone’s attention, you’ll be able to find funding.

If you think your topic of interest is too esoteric to find an audience, know that my friend Razib Khan, who writes about the Mongol empire, Y-chromosomes and haplotypes and such, makes a living doing this. If you want to be an experimental physicist, this advice probably doesn’t apply, and you need lab mates, major funding sources, etc. If you just want to collect and analyze data in a way that can be done without institutional support, run away from the university system.

The main problem with academia is not just the political bias, although that’s another reason to do something else with your life. It’s the entire concept of specialization, which holds that you need some secret tools or methods to understand what we call “political science” or “sociology,” and that these fields have boundaries between them that should be respected in the first place. Quantitative methods are helpful and can be applied widely, but in learning stats there are steep diminishing returns.

…

Outside of political science, are there other fields that have their own equivalents of “African witch doctor beats von Braun to the moon” or “the Taliban beats the State Department and the Pentagon” facts to explain? Yes, and here are just a few examples.

Consider criminology. More people are studying how to keep us safe from other humans than at any other point in history. But here’s the US murder rate between 1960 and 2018, not including the large uptick since then.

So basically, after a rough couple of decades, we’re back to where we were in 1960. But we’re actually much worse off, because improvements in medical technology are keeping alive a lot of people who would’ve died 60 years ago. One paper from 2002 says that the murder rate would be 5 times higher if not for medical developments since 1960. I don’t know how much to trust this, but it’s surely true that we’ve made some medical progress since that time, and doctors have been getting a lot of experience from all the shooting victims they have treated over the decades. Moreover, we’re much richer than we were in 1960, and I’m sure spending on public safety has increased. With all that, we are now about tied with where we were about six decades ago, a massive failure.

What about psychology? As of 2016, there were 106,000 licensed psychologists in the US. I wish I could find data to compare to previous eras, but I don’t think anyone will argue against the idea that we have more mental health professionals and research psychologists than ever before. Are we getting mentally healthier? Here’s suicides in the US from 1981 to 2016.

…

What about education? I’ll just defer to Freddie deBoer’s recent post on the topic, and Scott Alexander on how absurd the whole thing is.

Maybe there have been larger cultural and economic forces that it would be unfair to blame criminology, psychology, and education for. Despite no evidence we’re getting better at fighting crime, curing mental problems, or educating children, maybe other things have happened that have outweighed our gains in knowledge. Perhaps the experts are holding up the world on their shoulders, and if we hadn’t produced so many specialists over the years, thrown so much money at them, and gotten them to produce so many peer-reviewed papers, we’d see Middle Ages-levels of violence all across the country and no longer even be able to teach children to read. Like an Ayn Rand novel, if you just replaced the business tycoons with those whose work has withstood peer review.

Or you can just assume that expertise in these fields is fake. Even if there are some people doing good work, either they are outnumbered by those adding nothing or even subtracting from what we know, or our newly gained understanding is not being translated into better policies. Considering the extent to which government relies on experts, if the experts with power are doing things that are not defensible given the consensus in their fields, the larger community should make this known and shun those who are getting the policy questions so wrong. As in the case of the Afghanistan War, this has not happened, and those who fail in the policy world are still well regarded in their larger intellectual community.

…

Those opposed to cancel culture have taken up the mantle of “intellectual diversity” as a heuristic, but there’s nothing valuable about the concept itself. When I look at the people I’ve come to trust, they are diverse on some measures, but extremely homogenous on others. IQ and sensitivity to cost-benefit considerations seem to me to be unambiguous goods in figuring out what is true or what should be done in a policy area. You don’t add much to your understanding of the world by finding those with low IQs who can’t do cost-benefit analysis and adding them to the conversation.

One of the clearest examples of bias in academia and how intellectual diversity can make the conversation better is the work of Lee Jussim on stereotypes. Basically, a bunch of liberal academics went around saying “Conservatives believe in differences between groups, isn’t that terrible!” Lee Jussim, as someone who is relatively moderate, came along and said “Hey, let’s check to see whether they’re true!” This story is now used to make the case for intellectual diversity in the social sciences.

Yet it seems to me that isn’t the real lesson here. Imagine if, instead of Jussim coming forward and asking whether stereotypes are accurate, Osama bin Laden had decided to become a psychologist. He’d say “The problem with your research on stereotypes is that you do not praise Allah the all merciful at the beginning of all your papers.” If you added more feminist voices, they’d say something like “This research is problematic because it’s all done by men.” Neither of these perspectives contributes all that much. You’ve made the conversation more diverse, but dumber. The problem with psychology was a very specific one, in that liberals are particularly bad at recognizing obvious facts about race and sex. So yes, in that case the field could use more conservatives, not “more intellectual diversity,” which could just as easily make the field worse as make it better. And just because political psychology could use more conservative representation when discussing stereotypes doesn’t mean those on the right always add to the discussion rather than subtract from it. As many religious Republicans oppose the idea of evolution, we don’t need the “conservative” position to come and help add a new perspective to biology.

The upshot is intellectual diversity is a red herring, usually a thinly-veiled plea for more conservatives. Nobody is arguing for more Islamists, Nazis, or flat earthers in academia, and for good reason. People should just be honest about the ways in which liberals are wrong and leave it at that.

…

The failure in Afghanistan was mind-boggling. Perhaps never in the history of warfare had there been such a resource disparity between two sides, and the US-backed government couldn’t even last through the end of the American withdrawal. One can choose to understand this failure through a broad or narrow lens. Does it only tell us something about one particular war or is it a larger indictment of American foreign policy?

The main argument of this essay is we’re not thinking big enough. The American loss should be seen as a complete discrediting of the academic understanding of “expertise,” with its reliance on narrowly focused peer reviewed publications and subject matter knowledge as the way to understand the world. Although I don’t develop the argument here, I think I could make the case that expertise isn’t just fake, it actually makes you worse off because it gives you a higher level of certainty in your own wishful thinking. The Taliban probably did better by focusing their intellectual energies on interpreting the Holy Quran and taking a pragmatic approach to how they fought the war rather than proceeding with a prepackaged theory of how to engage in nation building, which for the West conveniently involved importing its own institutions.

A discussion of the practical implications of all this, or how we move from a world of specialization to one with better elites, is also for another day. For now, I’ll just emphasize that for those thinking of choosing an academic career to make universities or the peer review system function better, my advice is don’t. The conversation is much more interesting, meaningful, and oriented towards finding truth here on the outside.

Text

ok so well it started from a chem lecture in class 11th i think? yeah so there's this thing called Heisenberg's uncertainty principle. it basically states that if you're observing an electron and u know its velocity in space, then u cannot know its position in space. and if you know the position, the exact x, y and z coordinates of the electron at a time, then u can never know the exact velocity of the electron.

anyway so just the fact that. science. a subject based on calculations and precision and maths is so fucking vague that THEOREMS have to state that "no you cannot know this for sure" just got me thinking. like i considered 2-3 examples and like arts is vague? but so is science? because your version of truth in science is defined by what you observe, hear, feel and whether it is alike or not according to a person and the majority of humans in general. but then again who said that what humans observe is in fact the absolute truth? like humans as always tend to be overly selfish and they consider themselves and their own observations to be the truth? but that's just stupid bc inherently there are no facts and there are only assumptions. any sentence and all theorems are based on assumptions. like even 1+1 = 2 is based on the assumption that the world works on a mathematical scale governed by humans and that just coincides with almost all of human knowledge over the years. like everything is an assumption that is believed by a group of people and that just makes it? the truth? not convinced here but ok. and well there are groups that believe that humans have to be in such a mindset to progress?? but then that's ignoring the fact that humanity is random. the universe, for all we know, is a fluke and it has just one law, and that's randomness. the universe pushes itself to randomness, not chaos or discipline, and it's just wild that there is a tremendous amount of randomness that has led to everything being the way it is this moment in time [i'm considering time to be linear rn and us as matter based individuals who wake up every day with no guide as to how they want to spend their day/knowledge of how they're going to spend their day] and that's just wild?
bc one moment after this, we might just get sucked into a black hole and we'd never even k n o w. like the principle of randomness is what amazes me sometimes and it's all due to randomness, you going "oooooooooooh" over a cluster of nebulae, hormones finding it pretty etc etc etc and also. life.