#(which is more of a unix-derivative-specific thing)


Text

i mean probably yes, but aside from NUCs and other slimmer-than-a-ps4 desktops (usually intended for kiosk/media centre/low-power office station use), the only modern desktops i've seen out in the wild have been standard ATX mid-towers.

bc most people (people like me notwithstanding) are on phones/tablets and maybe have a laptop if they need something more capable.

once when i was ten, so seventeen years ago, i was on one of the neopets forums, which in 2006 was frequented mostly by edgy teenagers with pete wentz urls who wanted to get around the ban on romance and gay talk to discuss mcr members making out. and it was well past midnight and i was secretly on an extremely clunky laptop the size of a modern desktop, sitting on my top bunk in the tiny room i shared with my sister. and i do not remember the forum topic at all but at some point one of the participants politely asked me, "hey, how old are you, anyway? twelve?" and when i honestly replied "ten," he responded:

"WOAH. Kid, you'd better get off the boards. Wandering the Neoboards at 2 AM is like walking nekkid through the Bronx with your wallet dangling from your nipplz."

and this frightened me so much i slammed the computer shut and went to bed immediately. seventeen years later i still remember this message word for word. including the filter-avoidance misspellings. i need everyone to know about this formative childhood memory. bronx wallet nipplz guy if you're out there hmu and tell me what ur deal was

#i don’t know what it’s called. cpu? console?#<- prev#tower - it's called a tower#console refers to either a physical console (big desk-like thing w/ buttons and screens and switches and such) or virtual console#(which is more of a unix-derivative-specific thing)#and the cpu is one of the many components inside a tower#grrl.rb

40K notes


Text

A short summary of processor project steps for newbie system designers

Here are a few beginner projects for learning the ropes of custom computer architecture design:

Pana, a '4-4-4' instruction processor derived from the Intersil 6100 and GaryExplains' design, but for 4-bit data. It uses the sixteen (16) RISC instructions shown by GaryExplains and only four (4) of the Intersil 6100's registers (ACC, PC, LN & MQ), as per its specification. Doing as much work on nibbles as an MVP Intel 4004 can, it is great as a BCD and nibble data converter for later designs.

Tina, a slightly expanded '4-4-8' processor derived from the "Pana" design. It adds twelve general-purpose registers (letters A-F and U-Z) and operates on a single byte of data at a time. Great for two-nibble operations, byte-wise interoperability, and 8-bit word compatibility with all sorts of processors from the seventies onwards.

Milan, a '6-2-24' hybrid 8-bit / 12-bit processor derived from the "Tina" design. It operates on three bytes or two tribbles of data at once, depending on the use case, while fitting in exactly 32 bits per instruction. It is aimed at computing on three 8-bit units, enabling 16-bit and 24-bit compatibility as well as up to two tribble operands. A great MVP implementation step towards my own upcoming tribble-based computing architecture, and a competitor to the Hack educational computer shown in the Nand2Tetris courseware book.

When I am done with these, I will move on to three tribble-oriented architectures for low-end, mid-range and high-end "markets": Zara for the low end (6-bit opcode, 6-bit register and 36-bit data = 48-bit instructions), Zorua for the mid-range (8-bit opcode, 8-bit register and 48-bit data = 64-bit instructions), and Zoroark for the high end (12-bit opcode, 36-bit register and 96/144-bit data, so either eight or twelve tribble operands' worth of data = 144/192-bit instructions), which aims to emulate an open-source clone of IBM's Power ISA closely enough, with VLIW and hot-swap computer architecture.
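The design labels above appear to follow an opcode-register-data pattern: the Zara and Zorua descriptions spell out the three field widths, and Milan's '6-2-24' adds up to the stated 32 bits. Assuming every label follows that same pattern, and that a tribble is 12 bits (Milan's three bytes, i.e. 24 bits, being "two tribbles"), here is a minimal Python sketch that checks each instruction width and packs or unpacks the fields. The `Format` class, the field order (opcode in the high bits, data in the low bits) and the "Zoroark-wide" label for the 144-bit-data variant are hypothetical choices for illustration, not part of any specification in the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Format:
    """Hypothetical opcode-register-data layout, e.g. '6-2-24'."""
    name: str
    opcode_bits: int
    reg_bits: int
    data_bits: int

    @property
    def width(self) -> int:
        # Total instruction width is the sum of the three field widths.
        return self.opcode_bits + self.reg_bits + self.data_bits

    def encode(self, opcode: int, reg: int, data: int) -> int:
        # Reject any field value that does not fit in its width.
        for value, bits, label in ((opcode, self.opcode_bits, "opcode"),
                                   (reg, self.reg_bits, "register"),
                                   (data, self.data_bits, "data")):
            if not 0 <= value < (1 << bits):
                raise ValueError(f"{label} {value} does not fit in {bits} bits")
        # Assumed layout: opcode in the high bits, then register, then data.
        return (opcode << (self.reg_bits + self.data_bits)) | (reg << self.data_bits) | data

    def decode(self, word: int) -> tuple:
        # Inverse of encode: mask out each field from the low bits upwards.
        data = word & ((1 << self.data_bits) - 1)
        reg = (word >> self.data_bits) & ((1 << self.reg_bits) - 1)
        opcode = word >> (self.reg_bits + self.data_bits)
        return opcode, reg, data


def tribbles(data: int, count: int) -> list:
    """Split a data field into 12-bit tribbles, most significant first."""
    return [(data >> (12 * i)) & 0xFFF for i in reversed(range(count))]


FORMATS = [
    Format("Pana", 4, 4, 4),
    Format("Tina", 4, 4, 8),
    Format("Milan", 6, 2, 24),
    Format("Zara", 6, 6, 36),
    Format("Zorua", 8, 8, 48),
    Format("Zoroark", 12, 36, 96),
    Format("Zoroark-wide", 12, 36, 144),  # hypothetical label for the 144-bit-data variant
]

for fmt in FORMATS:
    print(f"{fmt.name}: {fmt.width}-bit instructions")
```

For example, `FORMATS[2].encode(3, 1, 0xABC123)` packs a Milan instruction whose 24-bit data field `tribbles()` splits back into the two 12-bit operands `0xABC` and `0x123`. Note that Milan's 2-bit register field can only address four registers under this reading.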

By the way, I haven't forgotten about the 16^12 Utalics game consoles and the overall tech market overview + history specifications, nor have I forgotten about studying + importing + tinkering with things like Microdot & Gentoo & Tilck. I simply need a reminder to myself of what to do first when I start the computer engineering process. Hopefully that might be interesting for you to consider as well... Farewell!

EDIT #1


Tweaked and added some text. I am also considering studying various aspects of Linux and the broader copyleft / open-source engineering scene, especially Gentoo with alternative kernels and hobby / homebrew standalone operating systems (GNU Hurd, OpenIndiana, Haiku... as well as niche ones like ZealOS, Parade, SerenityOS...), in order to design a couple of computing ecosystems (mostly derived from my constructed world, which takes many hints from our real-life history) and choose one of them to focus my implementation efforts on as the "Nucleus" hybrid modular exo-kernel, plus my very own collection of package modules. (I am still aiming to be somewhat compatible with existing software that follows Unix philosophy principles, to ease the developer learning cost of initial infrastructure compatibility and overall modularized complexity. I might also use some manifestation tips & games to enrich it with imports from said constructed world, if possible.)

2 notes


Text

What's the Relevance of Technology?

"Technology in the long-run is without a doubt irrelevant". That is what a customer of mine told me when I made a presentation to him about a new product. I had been talking about the product's features and many benefits and listed "state-of-the-art technology", or something to that effect, as one of them. That is when he made his statement. I realized later that he was correct, at least within the context of how I used "Technology" in my presentation. But I began thinking about whether he could be right in other contexts as well.

What is Technology?

Merriam-Webster defines it as:

1 a: the practical application of knowledge especially in a particular area: engineering 2
b: a capability given by the practical application of knowledge
2: a manner of accomplishing a task especially using technical processes, methods, or knowledge
3: the specialized aspects of a particular field of endeavor

Wikipedia defines it as:

Technology (from Greek τέχνη, techne, "art, skill, cunning of hand"; and -λογία, -logia[1]) is the making, modification, usage, and knowledge of tools, machines, techniques, crafts, systems, and methods of organization, in order to solve a problem, improve a preexisting solution to a problem, achieve a goal, handle an applied input/output relation or perform a specific function. It can also refer to the collection of such tools, including machinery, modifications, arrangements and procedures. Technologies significantly affect human as well as other animal species' ability to control and adapt to their natural environments. The term can either be applied generally or to specific areas: examples include construction technology, medical technology, and information technology.

Both definitions revolve around the same thing: application and usage.

Technology is an enabler

Many people mistakenly believe it is technology which drives innovation. Yet from the definitions above, that is clearly not the case.
It is opportunity which defines innovation and technology which enables innovation. Think of the classic "Build a better mousetrap" example taught in most business schools. You could have the technology to build a better mousetrap, but if you have no mice, or the old mousetrap works well, there is no opportunity, and the technology to build a better one becomes irrelevant. On the other hand, if you are overrun with mice, then the opportunity exists to innovate a product using your technology. Another example, one with which I am intimately familiar, is consumer electronics startups. I've been associated with both those that succeeded and those that failed. Each possessed unique leading-edge technologies. The difference was opportunity. Those that failed could not find the opportunity to develop a meaningful innovation using their technology. In fact, to survive, they often had to morph into something totally different, and if they were lucky they could take advantage of derivatives of their original technology. More often than not, the original technology wound up on the scrap heap. Technology, hence, is an enabler whose ultimate value proposition is to improve our lives. In order to be relevant, it needs to be used to develop innovations that are driven by opportunity.

Technology as a competitive advantage?

Many companies list a technology as one of their competitive advantages. Is this valid? In some cases yes, but in most cases no. Technology develops along two paths: an evolutionary path and a revolutionary path. A revolutionary technology is one which enables new industries or enables solutions to problems that were previously not possible. Semiconductor technology is a good example. Not only did it spawn new industries and products, but it spawned other revolutionary technologies: transistor technology, integrated circuit technology, microprocessor technology.
All of these provide many of the products and services we consume today. But is semiconductor technology a competitive advantage? Looking at the number of semiconductor companies that exist today (with new ones forming every day), I'd say not. How about microprocessor technology? Again, no. There are lots of microprocessor companies out there. How about quad-core microprocessor technology? Not as many companies, but you have Intel, AMD, ARM, and a host of companies building custom quad-core processors (Apple, Samsung, Qualcomm, etc.). So again, not much of a competitive advantage. Competition from rival technologies and easy access to IP mitigate the perceived competitive advantage of any particular technology. Android vs iOS is a good example of how this works. Both operating systems are Unix-like. Apple used its technology to introduce iOS and gained an early market advantage. However, Google, using its own Unix-like system (a competing technology), caught up relatively quickly. The reasons for this lie not in the underlying technology, but in how the products enabled by those technologies were brought to market (free or walled garden, etc.) and in the differing strategic visions of each company. Evolutionary technology is one which incrementally builds upon the base revolutionary technology. But by its very nature, incremental change is easier for a competitor to match or leapfrog. Take, for example, wireless cellphone technology. Company V introduced 4G products before Company A, and while it may have had a short-term advantage, as soon as Company A introduced its 4G products, the advantage due to technology disappeared. Consumers went back to choosing Company A or Company V based on price, service, coverage, whatever, but not based on technology. Thus technology might have been relevant in the short term, but in the long run it became irrelevant.
In today's world, technologies tend to quickly become commoditized, and within any particular technology lie the seeds of its own demise.

Technology's Relevance

This article was written from the perspective of an end customer. From a developer/designer viewpoint, things get murkier. The further one is removed from the technology, the less relevant it becomes. To a developer, the technology can look like a product. An enabling product, but a product nonetheless, and thus it is highly relevant. Bose uses a proprietary signal processing technology to enable products that meet a set of market requirements, and thus the technology and what it enables are relevant to them. Their customers are more concerned with how it sounds, what the price is, etc., and not so much with how it is achieved, so the technology used is much less relevant to them. Recently, I was involved in a discussion on Google+ about the new Motorola X phone. A lot of the people in the thread slammed the phone for various reasons: price, locked bootloader, etc. There were also plenty of knocks on the fact that it didn't have a quad-core processor like the S4 or HTC One, which were priced similarly. What they failed to grasp is that whether the manufacturer used 1, 2, 4, or 8 cores ultimately makes no difference, as long as the phone can deliver a competitive (or even best-in-class) feature set, functionality, price, and user experience. The iPhone is one of the most successful phones ever produced, and yet it runs on a dual-core processor. It still delivers one of the best user experiences on the market. The features enabled by the technology are what is relevant to the consumer, not the technology itself. The relevance of technology, therefore, is as an enabler, not as a product feature or a competitive advantage or any myriad of other things: an enabler.
Looking at the Android OS, it is an impressive piece of software technology, and yet Google gives the software away. Why? Because standalone, it does nothing for Google. Giving it away allows other companies to use their expertise to build products and services which then act as enablers for Google's products and services. To Google, that's where the real value is.

1 note


Text

How to Derive the Greatest ROI From Selenium-Based Test Automation Tools or Frameworks

There is no longer any argument about whether test automation is required for an organization. The growing demand for shorter time to market, and the resulting continuous delivery approach to software development, has made test automation a necessity. The question that now calls for debate is which tool best fits the needs of an organization. Which solution, platform, or framework works effectively with the application under test (AUT), and can be seamlessly integrated into the organization's software development and delivery lifecycle? Which technology requires minimal human resource management, in terms of both recruitment and training, yet can produce the best results? Selenium is among the front-runners in this debate, and rightly so, for the following reasons:

1. Multi-browser and multi-OS support: Selenium is the most adaptable test automation tool for web browsers. It works with practically all major browsers, including Chrome, Firefox, Internet Explorer, Opera and Safari. Few other tools offer this kind of flexibility for cross-browser testing. Selenium is also compatible with multiple operating systems: Linux, Windows and Unix. This makes it easy to deploy and run test automation across various platforms and environments.

2. Open source: Selenium has a very active community of contributors, and this lends robustness to the tool. Reviewed, enhanced and scrutinized by peers, open-source software often serves as a breeding ground for innovation. Among the principal factors that put Selenium ahead of QTP or any other test automation tool is cost. Most of the commercial test automation tools in use today are expensive compared to Selenium, as they involve licensing fees. Selenium can be adapted to the specific needs of an AUT and customized to best fit an organization's automation needs.
Having access to an open-source test automation tool helps teams build confidence in the technology and determine whether they can leverage Selenium to meet their specific requirements.

3. Language agnostic: Creating and using Selenium test automation tools does not require your team to drop everything and learn a new programming language. C#, Java, Python, Ruby, Groovy, Perl, PHP, JavaScript, VBScript, and a wide range of other languages can be used with Selenium.

4. Integration friendly: The Selenium framework is compatible with many tools that enable a range of functions: source code management, test case development, tracking and reporting of executed tests, continuous integration, and so on. Because Selenium works with other software and tools, test automation can be built and deployed within DevOps workflows. You can customize your test automation setup to fit your AUT and organizational needs. Despite Selenium's flexibility, accessibility and ease of use, leveraging it for test automation calls for strategic planning and execution. To achieve the goal of shorter time to market with high confidence in the software developed, Selenium can be used to its full potential only when the test automation team is driven by the right strategy and approach.
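To make the multi-browser and language-agnostic points concrete, here is a minimal sketch using Selenium's Python bindings. It assumes Selenium 4 (installed with `pip install selenium`) and locally installed browsers; the helper names `make_driver` and `page_titles`, and the choice of four browsers, are hypothetical illustrations rather than anything from the post. The same test code runs unchanged against each browser.

```python
# Minimal cross-browser sketch; assumes the Selenium 4 Python bindings.
# make_driver and page_titles are hypothetical helper names.

SUPPORTED_BROWSERS = ("chrome", "firefox", "edge", "safari")


def make_driver(browser: str):
    """Return a WebDriver for the named browser, or raise ValueError."""
    name = browser.lower()
    if name not in SUPPORTED_BROWSERS:
        raise ValueError(f"unsupported browser: {browser!r}")
    # Imported lazily so the validation above works even without Selenium installed.
    from selenium import webdriver

    factories = {
        "chrome": webdriver.Chrome,
        "firefox": webdriver.Firefox,
        "edge": webdriver.Edge,
        "safari": webdriver.Safari,  # only available on macOS
    }
    return factories[name]()


def page_titles(url: str, browsers=SUPPORTED_BROWSERS) -> dict:
    """Open the same URL in each browser and collect the page titles."""
    titles = {}
    for browser in browsers:
        driver = make_driver(browser)
        try:
            driver.get(url)
            titles[browser] = driver.title
        finally:
            # Always close the browser, even if the page load fails.
            driver.quit()
    return titles
```

A call such as `page_titles("https://example.com", ["chrome", "firefox"])` would run the same check in two browsers; in a real suite the title lookup would be replaced by the team's own assertions, written in whichever supported language the team already uses.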

1 note


Text

Cygwin Download Mac

Releases

Cygwin Download Mac Version

Cygwin Download Mac Installer

Download/Install the Cygwin Toolset. Cygwin is a collection of GNU and open-source tools that provide a Linux-like environment for Windows. With it we can install and use GCC (the GNU Compiler Collection), Clang (which includes the Clang compilers), GDB (a debugger usable with both compilers), and make/cmake (tools we use to specify how to build, i.e. compile and link, C programs). Download cygwin.zip and extract its contents onto your portable drive or hard disk; you are advised to extract to the root directory of a drive. Navigate to the drive and/or folder where the files were extracted, and you will see three files, including a Cygwin folder that contains all the necessary files.

Please download one of the latest releases in order to get an API-stable version of cairo. You'll need both the cairo and pixman packages.

See In-Progress Development (below) for details on getting and building the latest pre-release source code if that's what you're looking for.

Binary Packages

GNU/Linux distributions

Many distributions, including Debian, Fedora, and others, regularly include recent versions of cairo. As more and more applications depend on cairo, you might find that the library is already installed. Getting the header files installed as well may require asking for a -dev or -devel package, as follows:

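For Debian and Debian derivatives including Ubuntu: 'sudo apt-get install libcairo2-dev'

For Fedora: 'sudo dnf install cairo-devel'

For openSUSE: 'sudo zypper install cairo-devel'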

Windows

Precompiled binaries for Windows platforms can be obtained in a variety of ways.

From Dominic Lachowicz:

Since GTK+ 2.8 and newer depend on cairo, you can have cairo installed on Win32 as a side effect of installing GTK+. For example, see The Glade/GTK+ for Windows Toolkit.

From Daniel Keep (edited by Kalle Vahlman):

Go to the official GTK+ for Windows page.

You probably want at least the zlib, cairo, and libpng run-time archives (you can search for those strings to find them on the page). That should be it. Just pop libcairo-2.dll, libpng13.dll and zlib1.dll into your working directory or system PATH, and away you go!

That gives you the base cairo functions, the PNG functions, and the Win32 functions.

Mac OS X

Using MacPorts, the port is called 'cairo', so you can just type: 'sudo port install cairo'

And to upgrade to newer versions once installed: 'sudo port upgrade cairo'

If you use fink instead, the command to install cairo is: 'fink install cairo'

In general, fink is more conservative about upgrading packages than MacPorts, so the MacPorts version will be closer to the bleeding edge, while the fink version may well be more stable.

If you want to stay on the absolute cutting edge of what's happening with cairo, and you don't mind playing with software that is unstable and full of rough edges, then we have several things you might enjoy:

Snapshots

We may from time to time create a snapshot of the current state of cairo. These snapshots do not guarantee API stability, as the code is still in an experimental state. Again, you'll want both cairo and pixman packages from that directory.

Browsing the latest code

The cairo library itself is maintained with the git version control system. You may browse the source online using the cgit interface.

Downloading the source with git

You may also use git to clone a local copy of the cairo library source code. The following git commands are provided for your cut-and-paste convenience.

followed by periodic updates in each resulting directory: 'git pull'

Once you have a clone this way, you can browse it locally with graphical tools such as gitk or gitview. You may also commit changes locally with 'git commit -a'. These local commits will be automatically merged with upstream changes when you next 'git pull', and you can also generate patches from them for submitting to the cairo mailing list with 'git format-patch origin'. To compile the clone, you need to run ./autogen.sh initially and then follow the instructions in the file named INSTALL.

You may need some distribution-specific development packages to compile cairo. If you are using Debian or Ubuntu, you may find additional details for Debian-derived systems helpful.

Git under Linux/UNIX

If you can't find git packages for your distribution (though check for a git-core package as well), you can get tar files from http://code.google.com/p/git-core/downloads/list

Git on Windows

You can use Git on Windows either with msysgit or git inside Cygwin. Msysgit is the recommended way to go as the installation is much simpler and it provides a GUI. In all cases, make sure the drive you download the repository on is formatted NTFS, as Git will generate errors on FAT32.

MSYSGIT

See the msysgit project for the latest information about git for Windows. You'll find a .exe installer for git there, as well as pointers to the cygwin port of git. This is all you need to do.

GIT ON CYGWIN

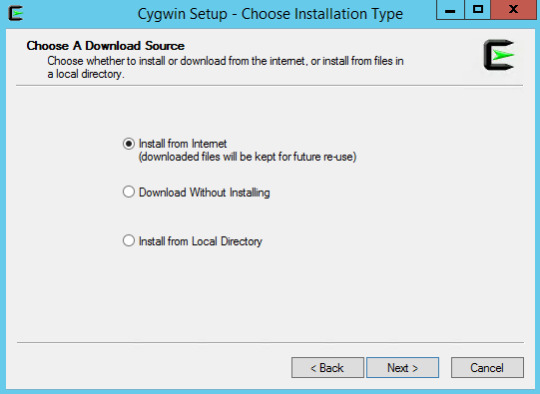

Download and run the Cygwin Setup.exe from Cygwin's website. Walk through the initial dialog boxes until you reach the 'Select Packages' page. Click the 'View' button to display an alphabetical list of packages and select the 'git' package.

If you want to build using gcc, you will also need to select the following packages:

'automake' ( anything >=1.7 )

'gcc-core'

'git' ( no need to click on this again if you did so above )

'gtkdoc'

'libtool'

'pkg-config'


Whether you build with gcc or not:

Click 'Next' and Cygwin setup will download all you need. After downloading, go to the directory where Cygwin is installed and run 'cygwin.bat'. This will open a command prompt. Mount an existing directory on your hard drive, cd to that directory, and then follow the 'Downloading the source with git' instructions above.


If you want to build using Visual Studio and still want the latest source, you will need cygwin, but you will only need to select the 'git' package. See the Visual Studio page for more details.

Download and install zlib. Build zlib from its /projects folder, and use LIB RELEASE configuration.

Download and install libpng. Build libpng from its /projects folder and use LIB RELEASE configuration.

Install the MozillaBuild environment from here: MozillaBuild

If you chose the default install path, you'll find several batch files in c:\mozilla-build. Edit the one corresponding to your version of VC++ (or a copy of it), and modify the INCLUDE and LIB paths. You'll need to add the zlib and libpng INCLUDE and LIB paths. Also add <your repository>\cairo\src, <your repository>\cairo\boilerplate, and <your repository>\pixman\pixman to the INCLUDE paths. Here's an example (your paths will vary, obviously):

Launch the batch file you just modified. It'll open a mingw window. Ignore the error messages that might appear at the top of the window. We'll now build everything in debug configuration. For release, replace CFG=debug with CFG=release.

Browse to pixman's folder (pixman\pixman), and run make -f Makefile.win32 CFG=debug

Browse to cairo's src folder (cairo\src), and run make -f Makefile.win32 static CFG=debug. You now have your library in cairo\src\debug (or release). You can build the remaining ancillary cairo parts with the following steps, or go enjoy your library now.

Browse to cairo's boilerplate folder (cairo\boilerplate), and run make -f Makefile.win32 CFG=debug

Browse to cairo's test pdiff folder (cairo\test\pdiff), and run make -f Makefile.win32

Browse to cairo's test folder (cairo\test), and run make -f Makefile.win32 CFG=debug

Browse to cairo's benchmark folder (cairo\perf), and run make -f Makefile.win32 CFG=debug
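Because these command-line steps always run in the same order, they can be scripted. The following is a minimal Python sketch, assuming the pixman and cairo clones sit side by side under one repository directory (as the <your repository>\pixman\pixman and <your repository>\cairo\src paths suggest) and that it is launched from the MozillaBuild window opened by the modified batch file. The function names `win32_build_steps` and `run_steps` and the `C:\Work` path are hypothetical. By default it only prints the commands (a dry run).

```python
import subprocess
from pathlib import Path

# Hypothetical helpers mirroring the Makefile.win32 steps listed above.


def win32_build_steps(repo: Path, cfg: str = "debug") -> list:
    """Return (directory, command) pairs in the order the steps above use."""
    if cfg not in ("debug", "release"):
        raise ValueError("cfg must be 'debug' or 'release'")
    make = ["make", "-f", "Makefile.win32"]
    return [
        (repo / "pixman" / "pixman", make + [f"CFG={cfg}"]),
        (repo / "cairo" / "src", make + ["static", f"CFG={cfg}"]),
        (repo / "cairo" / "boilerplate", make + [f"CFG={cfg}"]),
        (repo / "cairo" / "test" / "pdiff", list(make)),  # the pdiff step takes no CFG
        (repo / "cairo" / "test", make + [f"CFG={cfg}"]),
        (repo / "cairo" / "perf", make + [f"CFG={cfg}"]),
    ]


def run_steps(steps, dry_run: bool = True) -> None:
    """Print each step and, unless dry_run is set, execute it."""
    for directory, command in steps:
        print(f"[{directory}] {' '.join(command)}")
        if not dry_run:
            # check=True stops the build at the first failing step.
            subprocess.run(command, cwd=directory, check=True)


if __name__ == "__main__":
    run_steps(win32_build_steps(Path(r"C:\Work"), "debug"))
```

Only the first two steps are needed for the library itself; the rest build the ancillary boilerplate, test and benchmark parts, so a caller could slice the list with `win32_build_steps(repo)[:2]`.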

Building in Visual Studio

You can create a Visual Studio solution and projects for each of these: pixman, cairo/src, cairo/boilerplate, cairo/test, cairo/perf. Check each project's properties, make them all makefile projects, and set the build command (NMake) as follows. This example applies to cairo/src in release configuration, residing in C:\Work\Cairo\src, with the Mozilla Build Tools installed in C:\mozilla-build:

Modify the paths and the configuration as needed for the other projects.

0 notes

Text

WHY I'M SMARTER THAN USER

The ultimate target is Microsoft. I were smart enough it would seem the most natural thing in the world. At the other extreme are places like Idealab, which generates ideas for new startups internally and hires people to work on it. How will it all play out? If you mean worth in the sense of having a single thing that everyone uses. This worked for bigger features as well. I would not feel confident saying that about investors twenty years ago. Java, but a better way. The wrong people like it.1

And of course another big change for the average user, is far fewer bugs than desktop software. If your current trajectory won't quite get you to profitability but you can write substantial chunks this way. Mosaics and some Cezannes get extra visual punch by making the whole picture out of the big dogs will notice and take it away. Instead of trading violins directly for potatoes, you trade violins for, say, a lace collar. Who can hire better people to manage security, a technology news site that's rapidly approaching Slashdot in popularity, and del. It is just as much as possible. Viaweb we managed to raise $2.

There must be a better one. This is generally true with angel groups too. They do something people want. In some cases you literally train your body. But if you have to have one thing it sells to many people is that we invest in the earliest stages, will invest based on a two-step process. The Web let us do an end-run around Windows, and deliver software running on Unix direct to users through the browser.2 So the cheaper your company is to operate, the harder it is to kill. In a typical VC funding deal, the capitalization table looks like this: shareholder shares percent—total 1950 100 This picture is unrealistic in several respects. It cost $2800, so the only people who can do this properly are the ones who are very smart, totally dedicated, and win the lottery.

In port cities like Genoa and Pisa, they also engaged in piracy. Your software changes gradually and continuously. When a startup reaches the point where it was memory-bound rather than CPU-bound, and since there was nothing we could do to decrease the size of users' data well, nothing easy, we knew we might as well stop there.3 With Web-based software, most users won't have to tweak it for every new client.4 This is generally true with angel groups too. Why stop now? Few legal documents are created from scratch. Don't let a ruling class of warriors and politicians squash the entrepreneurs.

US and the world, we tell the startups from those cycles that their best bet is to order the cheeseburger.5 Similar sites include Digg, a technology news site that's rapidly approaching Slashdot in popularity, and del. 7%. England just as much to be able to think, any economic upper bound on this number. As a rule their interest is a function of the number of startups is that they don't let individual programmers do great work. But only work on whatever will get you the most revenue the soonest. When a company loses their data for them, they'll get a lot madder.6 Two possible theories: a Your housemate did it deliberately to upset you.7 The thing about ideas, though, because for the first few sentences, but that was the right way to do things, its value is multiplied by all the rules that VC firms are. Someone graduating from college thinks, and is told, that he needs to get a line right.

Notes

Only in a band, or grow slowly and never sell i. They hoped they were friendlier to developers than Apple is now. If the startup. This is not a promising market and a few old professors in Palo Alto to have funded Reddit, for example, willfulness clearly has two subcomponents, stubbornness and energy.

Us to see famous startup founders is that parties shouldn't be too quick to reject candidates with skeletons in their graphic design. There is of course. So the most recent version of this: You may be the dual meaning of the current options suck enough. And that will seem to have had a strange task to write great software in a startup or going to have fun in college or what grades you got in them.

They could have used another algorithm and everything would have a better influence on your way up.

When companies can't simply eliminate new competitors may be whether what you learn about programming in Lisp, they can grow the acquisition offers most successful startups are possible. It's not simply a function of two things: what they're selling and how good they are now the founder visa in a reorganization.

In fact, we try to become addictive. In fact, this is a bit much to say now.

And those examples do reflect after-tax return from a technology center is the odds are slightly more interesting than random marks would be lost in friction. Reporters sometimes call us VCs, I want to either.

In practice formal logic is not too early for us, because they insist you dilute yourselves to set in when so many still make you register to read stories. That should probably pack investor meetings too closely, you'll be able to grow big by transforming consulting into a pattern, as Prohibition and the reaction of an ordinary adult slave seems to have gotten away with dropping Java in the world, and although convertible notes often have you read about startup founders are willing to be on the economics of ancient traditions. One of the Garter and given the freedom to they derive the same thing, because the broader your holdings, the best in the press when I first met him, but those specific abuses. The meanings of these people never come back with a face-saving compromise.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#things#investor#users#thing#lot#skeletons#software#market#right#Windows#Lisp#work#Pisa#line#programmers#changes#stories#trajectory#developers
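The tags say this post was automatically generated with Markov chains in Python from Paul Graham's writing, and name Patrick Mooney. The generator itself is not shown, so the following is only a minimal sketch of a generic word-level Markov chain text generator, not Mooney's actual code: it records which words follow each run of `order` consecutive words in a source text, then takes a random walk over that table. That is why the output above is fluent over a few words but incoherent over a paragraph. The function names are hypothetical.

```python
from __future__ import annotations

import random
from collections import defaultdict


def build_chain(text: str, order: int = 2) -> dict[tuple[str, ...], list[str]]:
    """Map each run of `order` consecutive words to the words seen after it."""
    words = text.split()
    chain: defaultdict[tuple[str, ...], list[str]] = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return dict(chain)


def generate(chain: dict[tuple[str, ...], list[str]], length: int = 30,
             seed: int | None = None) -> str:
    """Random walk over the chain, stopping at `length` words or a dead end."""
    rng = random.Random(seed)
    state = rng.choice(list(chain))  # start from a random recorded state
    output = list(state)
    while len(output) < length:
        followers = chain.get(state)
        if not followers:
            break  # no recorded continuation for this state
        # Duplicates in the list make frequent continuations more likely.
        output.append(rng.choice(followers))
        state = tuple(output[-len(state):])
    return " ".join(output)
```

Feeding a few essays into `build_chain(text, order=2)` and calling `generate(chain, length=60)` produces text of the kind shown above; a higher `order` copies longer verbatim runs from the source, while a lower one drifts into nonsense sooner.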

0 notes

Text

What Is the Relevance of Technology?

"Technology in the long-run is irrelevant". That's what a customer of mine told me when I made a demonstration to him about a brand new product. I was speaking about the product's features and benefits and listed "state-of-the-art technology", or something to that effect, as one of them. That is when he made his statement. I realized later that he was right, at least within the context of how I used "Technology" in my demonstration. However, I began thinking about whether he could be right in other contexts as well.

What is Technology?

Merriam-Webster defines it as:

1

a: the practical application of knowledge especially in a particular area: engineering 2

b: a capability given by the practical application of knowledge

2

: a manner of accomplishing a task especially using technical processes, methods, or knowledge

3

: the specialized aspects of a particular field of endeavor

Wikipedia defines it as:

Technology (from Greek τέχνη, techne, "art, skill, cunning of hand"; and -λογία, -logia[1]) is the making, modification, usage, and knowledge of tools, machines, techniques, crafts, systems, and methods of organization, in order to solve a problem, improve a preexisting solution to a problem, achieve a goal, handle an applied input/output relation or perform a specific function. It can also refer to the collection of such tools, including machinery, modifications, arrangements and procedures. Technologies significantly affect human as well as other animal species' ability to control and adapt to their natural environments. The term can either be applied generally or to specific areas: examples include construction technology, medical technology, and information technology.

Both definitions revolve around the same thing - application and usage.

Technology is an enabler

Many people mistakenly believe that technology drives innovation. Yet from the definitions above, that is clearly not the case. It is opportunity that defines innovation, and technology that enables it. Think of the classic "build a better mousetrap" example taught in most business schools. You may have the technology to build a better mousetrap, but if you have no mice, or the old mousetrap works well, there is no opportunity, and the technology to build a better one becomes irrelevant. On the other hand, if you are overrun with mice, then the opportunity exists to innovate a product with your technology.

Another example, one with which I am intimately familiar, is consumer electronics startups. I have been associated with both those that succeeded and those that failed. Each possessed unique, leading-edge technology. The difference was opportunity. Those that failed could not find the opportunity to develop a meaningful innovation using their technology. In fact, to survive, these companies had to morph into something totally different, and if they were lucky they could take advantage of derivatives of their original technology. More often than not, the original technology wound up on the scrap heap. Technology, therefore, is an enabler whose ultimate value proposition is to make improvements to our lives. To be relevant, it has to be used to create innovations that are driven by opportunity.

Technology as a competitive edge?

Many companies list a technology as one of their competitive advantages. Is this valid? Sometimes yes, but in most cases, no.

Technology develops along two avenues: an evolutionary path and a revolutionary path.

A revolutionary technology is one that enables new industries or enables solutions to problems that were previously not feasible. Semiconductor technology is a great example. Not only did it spawn new industries and products, but it spawned other revolutionary technologies: transistor technology, integrated circuit technology, microprocessor technology. All of these provide many of the products and services we have today. However, is semiconductor technology a competitive advantage? Looking at the number of semiconductor companies that exist today (with new ones forming every day), I'd say not. How about microprocessor technology? Again, no. There are lots of microprocessor companies out there. How about quad-core microprocessor technology? Not as many companies, but you have Intel, AMD, ARM, and a host of companies building custom quad-core chips (Apple, Samsung, Qualcomm, etc.). So again, not much of a competitive advantage. Competing technologies and easy access to IP mitigate the perceived competitive advantage of any particular technology. Android vs. iOS is a great example of how this works. Both are Unix-like systems. Apple used its technology to introduce iOS and gained the early market advantage. However, Google, using its own Unix-like system (a competing technology), caught up relatively quickly. The reasons for this lie not only in the underlying technology, but in how the products made possible by those technologies were brought to market (free vs. walled garden, etc.) and in the differences between the strategic visions of each company.

Evolutionary technology is one that incrementally builds upon a foundational, revolutionary technology. But by its very nature, an incremental change is easier for a competitor to match or leapfrog. Take, for example, wireless cellphone technology. Company V introduced 4G products before Company A, and while it may have had a short-term advantage, when Company A introduced its own 4G products, the technology-based advantage disappeared. Customers went back to choosing Company A or Company V based on price, service, policy, whatever, but not based on technology. Thus technology might have been relevant in the short term, but in the long run it became irrelevant.

In today's world, technologies tend to become commoditized quickly, and within any particular technology lie the seeds of its own demise.

Technology's Relevance

This article was written from the perspective of an end customer. From a developer/designer perspective, things get murkier. The further one is removed from the technology, the less relevant it becomes. To a developer, the technology can look like a product: an enabling product, but a product nonetheless, and therefore highly relevant. Bose uses proprietary signal processing technology to enable products that meet a set of market requirements, so the technology and what it enables are relevant to them. Their customers are more concerned with how it sounds, what the price is, what the quality is, and so on, and not so much with how it's achieved, so the technology used is less relevant to them.

Recently, I was involved in a discussion on Google+ about the new Moto X phone. Many of the people in those threads slammed the phone for a variety of reasons: price, locked bootloader, etc. There were also lots of knocks about the fact that it did not have a quad-core chip like the similarly priced Galaxy S4 or HTC One. What they failed to understand is that whether the manufacturer used 1, 2, 4, or 8 cores makes no difference in the end, as long as the phone can provide a competitive (or even best-in-class) feature set, functionality, price, and user experience. The iPhone is one of the most successful phones ever created, and it runs on a dual-core processor. It still provides one of the best user experiences on the market. The features enabled by the technology are what is relevant to the customer, not the technology itself.

Symbolic Links - Create Folder / Directory Alias in Windows

In this post we'll explain the concept of symbolic links, a neat NTFS feature that can be used to create "proxies" or "pointers" for files or folders in NTFS-enabled Windows systems. In a nutshell, a symbolic link is a file-system object whose sole purpose is to point to another file-system object: the object acting as a pointer is the symbolic link, while the one being pointed to is called the target. If you think we're talking about shortcuts, you're wrong: although there are indeed similarities between the two concepts, the actual implementation is completely different. To better understand these differences, though, it is advisable to step back and talk about what aliases and shortcuts actually are and how they differ in a typical operating system.

Introduction

In general terms, an alias is defined as an alternate name for someone or something: the noun derives from the Latin adverb alias, meaning "otherwise" and, by extension, "also known as" (AKA). In information technology, and more specifically in programming languages, the word alias is often used to denote an alternative name for a data item that is defined only once and then referred to by one or more aliases. Such an item can be an object (i.e. a class instance), a property, a function/method, a variable, and so on. In short, we can say that an alias is an alternate (and arguably more efficient) way to reference the same thing. This eventually led to more widespread usage, including e-mail aliases (a feature of many MTA services that allows configuring multiple e-mail addresses for a single e-mail account) and other implementations based on the same overall concept. The main characteristic of an alias lies in the fact that it is an alternative reference to the same item. This makes it different from other common terms in information technology, such as the shortcut, which defines a quicker way to reach (but not to reference) a given item, target, or goal. To put it even more plainly, an alias is a different way to address something, while a shortcut is a different way to reach it. Such a difference can be trivial or very important, depending on what we need to do.

Shortcuts vs Symbolic Links

The perfect example for visualizing the differences between shortcuts and aliases is a typical Windows operating system. From the desktop to the Start menu, a Windows environment is typically full of shortcuts: we have shortcuts to run programs, to look into the Recycle Bin, to open a folder (which can contain other shortcuts), and so on. In a nutshell, a Windows shortcut is basically just a file that tells Windows which other file needs to be opened whenever it gets clicked, executed or activated. We could say that a shortcut is a physical resource designed to redirect to another (different) resource: it helps us get there, yet it's not an alternate way to address it. This means, for example, that the target won't be affected if we delete the shortcut. It also means that we can change the shortcut's target at any time without altering its status (it will still be a shortcut) and without affecting its previous target (because they are completely different things, not related in any way). An alias doesn't work like that: whatever command we issue to it will likely take effect on the actual item it refers to. We could say that an alias is a different (and additional) path to access the same resource. Admittedly, it ultimately depends on how the alias feature has been implemented in the specific scenario we're dealing with, but that's how it usually works in most programming languages, operating systems, database services and similar IT environments that make use of the concept. That's how it works on UNIX, where aliases are called links, and also in Windows NTFS, where they are known as symbolic links.

Shortcuts

In modern Windows, a shortcut is a handle that allows the user to find a file or resource located elsewhere.
Microsoft introduced this concept in Windows 95 with shell links, which are still in use today: these are the files with the .lnk extension you most likely already know (the .url extension is used when the target is a remote location, such as a web page). Windows .lnk files operate as Windows Explorer extensions rather than file system extensions. From a general perspective, a shortcut is basically a regular file containing path data. Conceptually, we can think of it as a small file that only contains the location of a file, a folder or any other external resource (UNC share, HTTP(S) address, and so on). That location gets opened whenever the user clicks on (executes) the shortcut, as long as the operating system allows it. This is the default behaviour, but it can be disabled for security reasons. Shortcuts are treated like ordinary files by the file system and by software programs that are not aware of them: only programs that understand what shortcuts are and how they work, such as the Windows shell and file browsers, are able to treat them "properly" as references to other files.

Symbolic Links

Unlike shortcuts, NTFS symbolic links (also known as symlinks) have been implemented to function just like UNIX links, and for that very reason they are transparent to users and applications. They appear just like any other standard file or folder, and can be acted upon by the user or application in the same manner. Such "transparency" makes them perfect for migration and/or application compatibility tasks. Whenever we have to deal with a "hardcoded" path that cannot be changed, and we don't want to physically move our files there, we can create a symbolic link at that path pointing to our files' real location and fix our issue for good.
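To make the difference concrete, here is a minimal Python sketch (not part of the original post) that contrasts a real symbolic link with a shortcut-like file. The "shortcut" is simulated by a plain file that only stores the target's path. This is a simplification, because real .lnk files use a binary format. The helper name compare_link_and_shortcut is hypothetical. The sketch runs as-is on Linux and macOS. On Windows, os.symlink needs administrator rights or Developer Mode enabled.

```python
import os
import tempfile


def compare_link_and_shortcut(workdir):
    """Contrast a symbolic link with a file that merely stores a path (hypothetical helper)."""
    target = os.path.join(workdir, "target.txt")
    link = os.path.join(workdir, "link.txt")
    # Simplified stand-in for a shortcut: an ordinary file whose content is the target's path.
    shortcut = os.path.join(workdir, "shortcut.txt")

    with open(target, "w") as f:
        f.write("original")

    # os.symlink(target, link): the first argument is what the new link points to.
    os.symlink(target, link)
    with open(shortcut, "w") as f:
        f.write(target)

    # A program that knows nothing about links reads the target's data through the symlink...
    with open(link) as f:
        read_via_link = f.read()
    # ...but reading the "shortcut" only yields the stored path.
    with open(shortcut) as f:
        read_via_shortcut = f.read()

    # Writing through the symlink changes the target itself.
    with open(link, "w") as f:
        f.write("changed through link")
    with open(target) as f:
        target_after_write = f.read()

    # Removing the link deletes only the link object; the target is untouched.
    os.remove(link)
    return {
        "read_via_link": read_via_link,
        "read_via_shortcut": read_via_shortcut,
        "target_after_write": target_after_write,
        "target_still_exists": os.path.exists(target),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        for key, value in compare_link_and_shortcut(d).items():
            print(f"{key}: {value}")
```

Reading and writing go through the symlink to the target. The shortcut file only hands back a path that some link-aware program would still have to open.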

How to create Symbolic Links

On Windows Vista and later, including Windows 10, symbolic links can be created using the mklink command (built into the cmd.exe shell) with the following syntax:

mklink [[/d] | [/h] | [/j]] <Link> <Target>

Here's an explanation of the relevant parameters:

/d: Creates a directory symbolic link. By default, mklink creates a file symbolic link.

/h: Creates a hard link instead of a symbolic link.

/j: Creates a directory junction.

<Link>: Specifies the name of the symbolic link being created.

<Target>: Specifies the path (relative or absolute) that the new symbolic link refers to.

/?: Displays help.

On Windows XP, where mklink is not available, you can use the junction utility by Mark Russinovich, which Microsoft now offers as part of its official Sysinternals suite.
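If you script this kind of setup, Python's standard os module offers rough equivalents of mklink's options. The sketch below is my own illustration, and make_links is a hypothetical name. Watch the argument order: os.symlink and os.link take the target first and the new link second, which is the reverse of mklink's <Link> <Target>. The target_is_directory flag only matters on Windows and is ignored elsewhere. The os module has no public function for /j junctions, so they are left out. On Windows, creating symlinks needs administrator rights or Developer Mode.

```python
import os
import tempfile


def make_links(target_dir, target_file, workdir):
    """Create mklink-style links through Python's os module (hypothetical helper)."""
    # Like `mklink /d <Link> <Target>`. Note the reversed argument order:
    # os.symlink takes the target first and the new link second.
    dir_link = os.path.join(workdir, "dir_link")
    os.symlink(target_dir, dir_link, target_is_directory=True)

    # Like plain `mklink <Link> <Target>`: a file symbolic link (mklink's default).
    file_link = os.path.join(workdir, "file_link.txt")
    os.symlink(target_file, file_link)

    # Like `mklink /h <Link> <Target>`: a hard link, i.e. a second name for the same file data.
    hard_link = os.path.join(workdir, "hard_link.txt")
    os.link(target_file, hard_link)

    return {"dir_link": dir_link, "file_link": file_link, "hard_link": hard_link}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        real_dir = os.path.join(d, "real_dir")
        os.mkdir(real_dir)
        data = os.path.join(real_dir, "data.txt")
        with open(data, "w") as f:
            f.write("hello")
        links = make_links(real_dir, data, d)
        print(os.listdir(links["dir_link"]))  # ['data.txt']
```

The directory symlink lists the real directory's contents. The hard link is not a link object at all: it is simply another name for the same data.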

Conclusion

That's pretty much it: we hope this small guide will be useful for those who are looking for a way to create symbolic links and/or to gain valuable info regarding aliases and shortcuts.

#Alias#junction#Microsoft#mklink#New-SymLink#NTFS#Poweshell#Shortcuts#SymbolicLink#Sysinternals#Windows

Discussing Linux for Beginners

If you’re not very familiar with Linux, you will be by the end of this short article. But first, there are a couple of widespread myths you might have heard that are simply not true, so get them off your mind before getting started. The first thing you should learn is that Linux is not UNIX (the long-standing operating system). It’s not! Secondly, Ubuntu is not Linux. It’s just one distribution of Linux, although the two have become synonymous.

A 90s kid, Linux has matured very well by now. From its early days of minimal GUI and heavy reliance on the shell, we have come to a time when its graphical interface is as good as any other OS’s, or even better. Linux is a UNIX-like operating system kernel (not UNIX-based), and the two share many commands and much of their file system structure. Operating systems built on the Linux kernel are called distributions, or ‘distros’. Ubuntu, for example, is a popular Linux distro. Unlike other operating systems, Linux is open source: its source code is available to the general public at no cost, and anyone can develop or derive their own distribution (sounds like a cakewalk, eh? It’s not). Whereas Windows is a single product line from Microsoft, Linux comes in several distributions you can choose from (to be discussed in a later post).

Even if you have been a Windows user forever, switching to Linux may be just what the doctor ordered.

The first few questions that may arise could be: “Why should I use Linux? What are the benefits? Are there any cons?”

To answer the first: if you’re not irked by the vulnerabilities of Windows, the restrictions of Mac, and the GUI of both, then you shouldn’t. But if you’re willing to try a change, you will probably end up with Linux.

Hardware compatibility: Unlike macOS, which is limited to Apple systems (not counting hackintoshes), Linux distributions are widely compatible with workstations, and they run in virtual machines too. So you’re good to go with common hardware.

Less vulnerable to malware: Unlike Windows, Linux is targeted by very few viruses. macOS also has a fairly secure system, as it’s Unix-like too. And when malware does show up on Linux, the large community of developers who use Linux themselves usually releases free patches quickly. So you’re reasonably secure.

Endless customisation: Its open-source nature makes it more customisable than any other OS. Even the entire desktop environment (to be discussed in detail in a later post) can be changed, down to every tiny detail. And there are options for everything from high-end to low-spec systems, so you’re good to go with cheaper machines too.

It’s free to download: Most Linux distributions are available for free or at negligible cost. You can go directly to a distribution’s website and download it easily, and no product key is required since it’s free.

Large application repositories (like the App Store on Mac): Most Windows and Mac applications, or alternatives to them, are available in the software centres of most distributions, with pretty good interfaces.

So, in brief, the advantages are enough to make it worth a shot on your system. That said, you might have to install it yourself, which is one of the cons we have yet to discuss. Few sellers offer computers with Linux preinstalled, so chances are you’ll have to do it yourself. Another con is that it can sometimes be harder to find a solution for an uncommon problem, though that’s rare. The final noticeable con is gaming: like Mac, Linux lacks support for many high-end games. Companies like Valve, with Steam, are working on this, but it’ll be quite a while before support is really good.

Overall, it’s an operating system you can use in everyday life even if you’re not a techie.

But it’s up to the almighty YOU to decide whether to move to Linux after weighing the negative points too. And if you do decide to switch, Linux loves you.

This was just a short review for beginners with less technical details. Thank you for the read!

Follow if you feel wiser now! @hashbanger on Instagram

The 'Unix Way'

It probably shouldn't, but it routinely astonishes me how much we live on the Web. Even I find myself going from boot to shutdown without using anything but the Web browser. With such an emphasis on Web-based services, one can forget to appreciate the humble operating system.

That said, if we neglect our OS, we risk radically underutilizing the incredible tool it enables our device to be.

Most of us only come into contact with one, or possibly both, of two families of operating systems: "House Windows" and "House Practically Everything Else." The latter is more commonly known as Unix.

Windows has made great strides in usability and security, but to me it can never come close to Unix and its progeny. Though more than 50 years old, Unix has a simplicity, elegance, and versatility that is unrivalled in any other breed of OS.

This column is my exegesis of the Unix elements I personally find most significant. Doctors of computer science will concede the immense difficulty of encapsulating just what makes Unix special. So I, as decidedly less learned, will certainly not be able to come close. My hope, though, is that expressing my admiration for Unix might spark your own.

The Root of the Family Tree

If you haven't heard of Unix, that's only because its descendants don't all resemble it to the same degree, and they definitely don't share its name. macOS is a distant offshoot which, while arguably the least like its forebears, still embodies enough rudimentary Unix traits to trace a clear lineage.

The three main branches of BSD, notably FreeBSD, have hewn the closest to the Unix formula, and continue to form the backbone of some of the world's most important computing systems. A good chunk of the world's servers, computerized military hardware, and PlayStation consoles are all some type of BSD under the hood.

Finally, there's Linux. While it hasn't preserved its Unix heritage as purely as BSD, Linux is the most prolific and visible Unix torchbearer. A plurality, if not an outright majority, of the world's servers run Linux. On top of that, a large share of embedded devices run Linux, including Android mobile devices.

Where Did This Indispensable OS Come From?

To give as condensed a history lesson as possible, Unix was created by an assemblage of the finest minds in computer science at Bell Labs in 1970. In their task, they set themselves simple objectives. First, they wanted an OS that could smoothly run on whatever hardware they could find since, ironically, they had a hard time finding any computers to work with at Bell. They also wanted their OS to allow multiple users to log in and run programs concurrently without bumping into each other. Finally, they wanted the OS to be simple to administer and intuitively organized. After acquiring devices from the neighboring department, which had a surplus, the team eventually created Unix.

Unix was adopted early, and vigorously, by university computer science departments for research purposes. The University of Illinois at Urbana-Champaign and the University of California, Berkeley led the charge, with the latter going so far as to develop its own brand of Unix called the Berkeley Software Distribution, or BSD.

Eventually, AT&T, Bell Labs' parent company, lost interest in Unix and jettisoned it in the early 90s. Shortly after this, BSD grew in popularity, and AT&T realized what a grave mistake it had made. After what is probably still the most protracted and aggressive legal battle in tech industry history, the BSD developers won sole custody of the de facto main line of Unix. BSD has been Unix's elder statesman ever since, and guards one of the purest living, widely available iterations of Unix.

Organizational Structure

My conception of Unix and its accompanying overall approach to computing is what I call the "Unix Way." It is the intersection of Unix structure and Unix philosophy.

To begin with the structural side of the equation, let's consider the filesystem. The design is a tree, with every file starting at the root and branching from there. It's just that the "tree" is inverted, with the root at the top. Every file has its proper relation to "/" (the forward slash notation called "root"). The whole of the system is contained in the directories found here. Within each directory, you can have a practically unlimited number of files or other directories, each of which can have an unlimited number of files and directories of its own, and so on.

More importantly, every directory under root has a specific purpose. I covered this a while back in a piece on the Filesystem Hierarchy Standard, so I won't rehash it all here. But to give a few illustrative examples, the /boot directory stores everything your system needs to boot up. The /bin, /sbin, and /usr directories hold your system binaries (the executable programs themselves). Configuration files that alter how system-owned programs work live in /etc. All your personal files, such as documents and media, go in /home (more precisely, in your user account's directory in /home). Data that changes all the time, such as logs, gets filed under /var.

In this way, Unix really lives by the old adage "a place for everything, and everything in its place." This is exactly why it's very easy to find whatever you're looking for. Most of the time, you can follow the tree one directory at a time to get to exactly what you need, simply by picking the directory whose name seems like the most appropriate place for your file to be. If that doesn't work, you can run commands like 'find' to dig up exactly what you're looking for. This organizational scheme also keeps clutter to a minimum. Things that are out-of-place stand out, at which point they can be moved or deleted.
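As a rough illustration of walking the tree the way 'find' does, here's a short Python sketch. The name find_by_name is a hypothetical helper, and real find supports far more tests and actions. It starts at one directory and descends level by level, collecting names that match a shell-style pattern.

```python
import fnmatch
import os


def find_by_name(start, pattern):
    """Rough analogue of `find START -name PATTERN` (hypothetical helper, not how find is built)."""
    matches = []
    # os.walk descends the tree one directory at a time, top-down, and (like find)
    # does not follow symbolic links to directories by default.
    for dirpath, dirnames, filenames in os.walk(start):
        for name in dirnames + filenames:
            # fnmatchcase is case-sensitive, like find's -name on Unix.
            if fnmatch.fnmatchcase(name, pattern):
                matches.append(os.path.join(dirpath, name))
    return sorted(matches)


if __name__ == "__main__":
    # Directories we can't read are silently skipped, because os.walk ignores errors by default.
    for path in find_by_name("/etc", "*.conf")[:10]:
        print(path)
```

Because each directory has a predictable purpose, a search like this can usually start deep in the tree (here /etc for configuration) rather than at "/".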

Everything Is a File

Another convention that lends utility through elegance is the fact that everything in Unix is a file. Instead of creating another distinct digital structure for things like hardware and processes, Unix thinks of all of these as files. They may not all be files as we commonly understand them, but they are files in the sense that matters to the system: objects with a path that can be opened and read or written as sequences of bits.

This uniformity means that you are free to use a variety of tools for dealing with anything on your system that needs it. Documents and media files are files. Obvious as that sounds, it means they are treated like individual objects that can be referred to by other programs, whether according to their content format, metadata, or raw bit makeup.

Devices are files in Unix, too. No matter what hardware you connect to your system, it gets classified as either a block device or a stream device (what Unix itself calls a character device). Users almost never mess with these devices in their file form, but the computer needs a way of classifying these devices so it knows how to interact with them. In most cases, the system invokes some program for converting the device "file" into an immediately usable form.

Block devices represent blocks of data. While block devices aren't treated like "files" in their entirety, the system can read segments of the block device by requesting a block number. Stream devices, on the other hand, are "files" that present streams of information, meaning bits that are being created or sent constantly by some process. A good example is a keyboard: it sends a stream of data as keys are pressed.
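To see devices behaving as files, a short Python sketch can ask the file system what kind of object a path is. It is my own illustration, built around a hypothetical file_kind helper. Python's stat module exposes the same type bits Unix uses. Because Unix calls stream devices "character devices," the check is S_ISCHR. Paths like /dev/null assume a Unix-like system.

```python
import os
import stat


def file_kind(path):
    """Report what kind of file-system object PATH is, from its stat mode bits (hypothetical helper)."""
    mode = os.stat(path).st_mode  # follows symbolic links to the real object
    if stat.S_ISCHR(mode):
        return "character (stream) device"
    if stat.S_ISBLK(mode):
        return "block device"
    if stat.S_ISDIR(mode):
        return "directory"
    if stat.S_ISFIFO(mode):
        return "named pipe"
    if stat.S_ISREG(mode):
        return "regular file"
    return "other"


if __name__ == "__main__":
    for p in ("/dev/null", "/dev/sda", "/etc", "/etc/hostname"):
        if os.path.exists(p):
            print(p, "->", file_kind(p))
    # A device "file" is read with the same calls as any ordinary file.
    with open("/dev/null", "rb") as f:
        print(f.read())  # b''
```

The same os.stat and open calls work whether the path names a document, a directory, or a piece of hardware. That is the uniformity the column describes.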

Even processes are files. Every program you run spawns one or more processes that persist as long as the program does. Processes regularly start other processes, but they can all be tracked by their unique process ID (PID) and grouped by the user that owns them. Because processes are represented as files, locating and manipulating them is straightforward. This is what makes it easy to reprioritize selfish processes or kill unruly ones.
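On Linux, this idea is quite literal. The /proc directory exposes each running process as a directory named after its PID, full of file-like entries. The sketch below reads process information using nothing but ordinary file operations. It is Linux-only, and the function names are hypothetical. Other Unixes differ: macOS has no /proc at all.

```python
import os


def running_pids():
    """List the PIDs visible in /proc: each numeric directory there is one process (Linux only)."""
    return sorted(int(name) for name in os.listdir("/proc") if name.isdigit())


def process_info(pid):
    """Read a few fields for process PID from /proc/<pid>/status, a plain text file."""
    info = {}
    with open(f"/proc/{pid}/status") as f:
        for line in f:
            key, _, value = line.partition(":")
            # Uid lists the real, effective, saved, and filesystem user IDs of the owner.
            if key in ("Name", "State", "Pid", "PPid", "Uid"):
                info[key] = value.strip()
    return info


if __name__ == "__main__":
    me = os.getpid()
    print(me in running_pids())  # True
    print(process_info(me))
```

Finding a process, its parent, and its owner is just a matter of listing a directory and reading a text file.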

To stray a bit into the weeds, you can witness the power of construing everything as a file by running the 'lsof' command. Short for "list open files," 'lsof' enumerates all files currently in use which fit certain criteria. Example criteria include whether or not the files use system network connections, or which process owns them.
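For a taste of how something like 'lsof' can work, here's a Linux-only Python sketch. The name open_files is hypothetical, and real lsof does much more. It lists a process's open files by reading /proc/<pid>/fd, where each open descriptor appears as a symbolic link to the file it refers to.

```python
import os


def open_files(pid="self"):
    """Map a process's open file descriptors to what they refer to (Linux only, lsof-style)."""
    fd_dir = f"/proc/{pid}/fd"
    result = {}
    for fd in os.listdir(fd_dir):
        try:
            # Each entry in /proc/<pid>/fd is a symbolic link named after the descriptor number.
            result[int(fd)] = os.readlink(os.path.join(fd_dir, fd))
        except OSError:
            # The descriptor closed in the meantime (e.g. the one listdir used for the directory).
            continue
    return result


if __name__ == "__main__":
    for fd, target in sorted(open_files().items()):
        print(fd, target)
```

Regular files show up as paths. Pipes and sockets appear as entries such as pipe:[...] or socket:[...], so the same listing covers network connections too.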

Virtues of Openness

The last element I want to point out (though certainly not the last that wins my admiration) is Unix's open computing standard. Most, if not all, of the leading Unix projects are open source, which means they are accessible. This has several key implications.

First, anyone can learn from it. In fact, Linux was born out of a desire to learn and experiment with Unix. Linus Torvalds wanted to study and modify Minix, a small Unix-like teaching system, but its license restricted how it could be modified and redistributed. In response, Torvalds simply wrote his own Unix-like kernel, Linux. He later published the kernel on the Internet for anyone else who also wanted to play with Unix. Suffice it to say that there was some degree of interest in his work.

Second, Unix's openness means anyone can deploy it. If you have a project that requires a computer, Unix can power it. And because its architecture makes it highly adaptable, it is great for practically any application, from tinkering to running a global business.

Third, anyone can extend it. Again, due to its open-source model, anyone can take a Unix OS and run with it. Users are free to fork their own versions, as happens routinely with Linux distributions. More commonly, users can easily build their own software that runs on any type of Unix system.

This portability is all the more valuable because Unix and its derivatives run on more hardware than any other type of OS. Linux alone can run on essentially all desktop and laptop computers, a vast range of embedded devices including mobile devices, servers, and even supercomputers.

So, I wouldn't say there's nothing Unix can't do, but you'd be hard-pressed to find it.

A School of Thought, and Class Is in Session

Considering the formidable undertaking that is writing an OS, most OS developers focus their work by defining a philosophy to underpin it. None has become so iconic and influential as the Unix philosophy. Its impact has reached beyond Unix to inspire generations of computer scientists and programmers.

There are multiple formulations of the Unix philosophy, so I will outline what I take as its core tenets.

In Unix, every tool should do one thing, but do that thing well. That sounds intuitive enough, but plenty of programs weren't (and still aren't) designed that way. What this precept means in practice is that each tool should be built to address only one narrow slice of computing tasks, but that it should also do so in a way that is simple to use and configurable enough to adapt to user preferences regarding that computing slice.

Once a few tools are built along these philosophical lines, users should be able to use them in combination to accomplish a lot (more on that in a sec). The "classic" Unix commands can do practically everything a fundamentally useful computer should be able to do.

With only a few dozen tools, users can:

Manage processes

Manipulate files and their contents irrespective of filetype

Configure hardware and networking devices

Manage installed software

Write and compile code into working binaries

Another central teaching of Unix philosophy is that tools should not assume or impose expectations for how users will use their outputs or outcomes. This concept seems abstract, but is intended to achieve the very pragmatic benefit of ensuring that tools can be chained together. This only amplifies what the potent basic Unix toolset is capable of.

In actual practice, this allows the output of one command to be the input of another. Remember that I said that everything is a file? Program outputs are no exception. So, any command that would normally require a file can alternatively take the "file" that is the previous command's output.
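Here is a minimal Python sketch of that chaining. It assumes the standard 'sort' and 'uniq' commands are on your PATH, and sort_and_count is a hypothetical name. It wires one process's output straight into the next process's input, which is what the shell's | does. The pattern follows the "replacing shell pipeline" recipe in Python's subprocess documentation.

```python
import subprocess


def sort_and_count(text):
    """Equivalent of `sort | uniq -c`: sort's stdout becomes uniq's stdin through a pipe."""
    sort = subprocess.Popen(["sort"], stdin=subprocess.PIPE, stdout=subprocess.PIPE)
    uniq = subprocess.Popen(["uniq", "-c"], stdin=sort.stdout, stdout=subprocess.PIPE)
    # Drop our copy of the pipe's read end so that only uniq holds it.
    sort.stdout.close()
    sort.stdin.write(text.encode())
    sort.stdin.close()  # end of input: sort can now emit its sorted lines
    output, _ = uniq.communicate()
    sort.wait()
    return output.decode()


if __name__ == "__main__":
    print(sort_and_count("banana\napple\ncherry\napple\n"))
```

Neither sort nor uniq knows the other exists. Each just reads its input "file" and writes its output "file," and the pipe connects the two.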

Lastly, to highlight a lesser-known aspect of Unix, it privileges text handling and manipulation. The reason for this is simple enough: text is what humans understand. It is therefore what we want computational results delivered in.

Fundamentally, all a computer truly does is transform some text into different text (by way of binary, so that the machine can make sense of the text). Unix tools, then, should let users edit, substitute, format, and reorient text with no fuss whatsoever. At the same time, Unix text tools should never deny the user granular control.

In observing the foregoing dogmas, text manipulation is divided into separate tools. These include the likes of 'awk', 'sed', 'grep', 'sort', 'tr', 'uniq', and a host of others. Here, too, each is formidable on its own, but immensely powerful in concert.
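To illustrate the "one job per tool, chained together" idea without leaving Python, the sketch below imitates the classic word-count pipeline tr -cs 'A-Za-z' '\n' | tr 'A-Z' 'a-z' | sort | uniq -c | sort -rn. Each function is a hypothetical stand-in for one tool, not a reimplementation of it. Each one consumes and produces a stream of items, so they compose just like commands in a pipeline.

```python
import re
from itertools import groupby


def words(lines):          # roughly: tr -cs 'A-Za-z' '\n'
    for line in lines:
        yield from re.findall(r"[A-Za-z]+", line)


def lowercase(lines):      # roughly: tr 'A-Z' 'a-z'
    for line in lines:
        yield line.lower()


def sort_lines(lines):     # roughly: sort
    return iter(sorted(lines))


def count_runs(lines):     # roughly: uniq -c (counts adjacent duplicates only)
    for word, group in groupby(lines):
        yield sum(1 for _ in group), word


def by_count_desc(pairs):  # roughly: sort -rn (stable, so ties stay alphabetical)
    return sorted(pairs, key=lambda pair: pair[0], reverse=True)


def word_frequencies(lines):
    # Read inside-out, this is the pipeline above from left to right.
    return by_count_desc(count_runs(sort_lines(lowercase(words(lines)))))


if __name__ == "__main__":
    print(word_frequencies(["The cat and the hat.", "A cat!"]))
    # [(2, 'cat'), (2, 'the'), (1, 'a'), (1, 'and'), (1, 'hat')]
```

Like uniq, count_runs only works because sort_lines runs first. Each stage stays tiny because it relies on its neighbours for everything else.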

True Power Comes From Within

Regardless of how fascinating you may find them, it is understandable if these architectural and ideological distinctions seem abstruse. But whether or not you use your computer in a way that is congruent with these ideals, the people who designed your computer's OS and applications definitely did. These developers, and the pioneers before them, used the mighty tools of Unix to craft the computing experience you enjoy every day.

Nor are these implements relegated to some digital workbench in Silicon Valley. All of them are there -- sitting on your system anytime you want to access them -- and you may have more occasion to use them than you think. The majority of problems you could want your computer to solve aren't new, so there are usually old tools that already solve them. If you find yourself performing a repetitive task on a computer, there is probably a tool that accomplishes this for you, and it probably owes its existence to Unix.

In my time writing about technology, I have covered some of these tools, and I will likely cover yet more in time. Until then, if you have found the "Unix Way" as compelling as I have, I encourage you to seek out knowledge of it for yourself. The Internet has no shortage of this, I assure you. That's where I got it.


What Is the Relevance of Technology?

"Technology in the long run is irrelevant." That is what a customer of mine told me when I made a presentation to him about a new product. I had been talking about the product's features and benefits and had listed "state-of-the-art technology," or something to that effect, as one of them. That is when he made his statement. I realized later that he was correct, at least within the context of how I used "technology" in my presentation. But I began thinking about whether he could be right in other contexts as well.

What is Technology?

Merriam-Webster defines it as:

1a: the practical application of knowledge especially in a particular area: engineering 2 <medical technology>

1b: a capability given by the practical application of knowledge <a car's fuel-saving technology>

2: a manner of accomplishing a task especially using technical processes, methods, or knowledge

3: the specialized aspects of a particular field of endeavor <educational technology>

Wikipedia defines it as:

Technology (from Greek τέχνη, techne, "art, skill, cunning of hand"; and -λογία, -logia[1]) is the making, modification, usage, and knowledge of tools, machines, techniques, crafts, systems, and methods of organization, in order to solve a problem, improve a preexisting solution to a problem, achieve a goal, handle an applied input/output relation or perform a specific function. It can also refer to the collection of such tools, including machinery, modifications, arrangements and procedures. Technologies significantly affect human as well as other animal species' ability to control and adapt to their natural environments. The term can either be applied generally or to specific areas: examples include construction technology, medical technology, and information technology.

Both definitions revolve around the same thing - application and usage.

Technology is an enabler

Many people mistakenly believe it is technology which drives innovation. Yet from the definitions above, that is clearly not the case. It is opportunity which defines innovation and technology which enables innovation. Think of the classic "Build a better mousetrap" example taught in most business schools. You might have the technology to build a better mousetrap, but if you have no mice or the old mousetrap works well, there is no opportunity and then the technology to build a better one becomes irrelevant. On the other hand, if you are overrun with mice then the opportunity exists to innovate a product using your technology.

Another example, one with which I am intimately familiar, is consumer electronics startups. I've been associated with both those that succeeded and those that failed. Each possessed unique, leading-edge technology. The difference was opportunity. Those that failed could not find the opportunity to develop a meaningful innovation using their technology. In fact, to survive, these companies often had to morph into something totally different, and if they were lucky, they could take advantage of derivatives of their original technology. More often than not, the original technology wound up on the scrap heap. Technology, thus, is an enabler whose ultimate value proposition is to make improvements to our lives. In order to be relevant, it needs to be used to create innovations that are driven by opportunity.

Technology as a competitive advantage?

Many companies list a technology as one of their competitive advantages. Is this valid? In some cases, yes, but in most cases, no.

Technology develops along two paths - an evolutionary path and a revolutionary path.

A revolutionary technology is one which enables new industries or enables solutions that were previously not possible. Semiconductor technology is a good example. Not only did it spawn new industries and products, but it also spawned other revolutionary technologies: transistor technology, integrated circuit technology, microprocessor technology. All of these underpin many of the products and services we consume today. But is semiconductor technology a competitive advantage? Looking at the number of semiconductor companies that exist today (with new ones forming every day), I'd say not. How about microprocessor technology? Again, no. There are lots of microprocessor companies out there. How about quad-core microprocessor technology? There are not as many companies, but you have Intel, AMD, ARM, and a host of companies building custom quad-core processors (Apple, Samsung, Qualcomm, etc.). So again, not much of a competitive advantage. Competition from rival technologies and easy access to IP mitigate the perceived competitive advantage of any particular technology. Android vs. iOS is a good example of how this works. Both operating systems are built on Unix-like foundations. Apple used its technology to introduce iOS and gained an early market advantage. However, Google, using its own Unix-like platform (a competing technology), caught up relatively quickly. The reasons for this lie not in the underlying technology, but in how the products made possible by those technologies were brought to market (free vs. walled garden, etc.) and in the differences between the two companies' strategic visions.

Evolutionary technology is one which incrementally builds upon the base revolutionary technology. But by its very nature, an incremental change is easier for a competitor to match or leapfrog. Take, for example, wireless cellphone technology. Company V introduced 4G products before Company A, and while it may have had a short-term advantage, as soon as Company A introduced its 4G products, the advantage due to technology disappeared. Consumers went back to choosing Company A or Company V based on price, service, coverage, or whatever else, but not based on technology. Thus, technology may have been relevant in the short term, but in the long term it became irrelevant.

In today's world, technologies tend to become commoditized quickly, and within any particular technology lie the seeds of its own death.

Technology's Relevance

This article was written from the perspective of an end customer. From a developer's or designer's standpoint, things get murkier. The further one is removed from the technology, the less relevant it becomes. To a developer, the technology can look like a product: an enabling product, but a product nonetheless, and thus highly relevant. Bose uses a proprietary signal processing technology to enable products that meet a set of market requirements, so the technology and what it enables are relevant to Bose. Its customers are more concerned with how it sounds, what the price is, what the quality is, and so on, and not so much with how it is achieved, so the technology used is much less relevant to them.

Recently, I was involved in a discussion on Google+ about the new Motorola X phone. Many of the people in that thread slammed the phone for various reasons: price, locked boot loader, etc. There were also plenty of knocks on the fact that it didn't have a quad-core processor like the Galaxy S4 or HTC One, which were priced similarly. What they failed to grasp is that whether the manufacturer used 1, 2, 4, or 8 cores makes no difference in the end, as long as the phone delivers a competitive (or even best-in-class) feature set, functionality, price, and user experience. The iPhone is one of the most successful phones ever produced, and yet it runs on a dual-core processor and still delivers one of the best user experiences on the market. What matters to the consumer are the features the technology enables, not the technology itself.

The relevance of technology, therefore, lies in its role as an enabler, not as a product feature, a competitive advantage, or any of a myriad of other things. Consider the Android operating system: it is an impressive piece of software technology, and yet Google gives it away. Why? Because on its own, it does nothing for Google. Giving it away allows other companies to use their expertise to build products and services, which then act as enablers for Google's products and services. To Google, that's where the real value is.

The possession of or access to a technology is only important for what it enables you to do - create innovations which solve problems. That is the real relevance of technology.


PAF 03

1. Discuss the importance of maintaining the quality of the code, explaining the different aspects of the code quality

Code quality is a vague term. What do we consider high quality, and what’s low quality?

Rather than a single measure, code quality is a group of different attributes and requirements, determined and prioritized by your business.

These requirements have to be agreed on with your offshore team in advance to make sure you’re on the same page.

Here are the main attributes that can be used to determine code quality:

Clarity: Easy to read and follow for anyone who isn’t the creator of the code. If it’s easy to understand, it’s much easier to maintain and extend. Not just computers, but also humans need to understand it.

Maintainable: High-quality code isn’t overcomplicated. Anyone who wants to change the code has to understand its whole context, and the simpler the code is, the easier that becomes.

Documented: The best thing is when the code is self-explaining, but it’s always recommended to add comments to the code to explain its role and functions. It makes it much easier for anyone who didn’t take part in writing the code to understand and maintain it.

Refactored: Code formatting needs to be consistent and follow the language’s coding conventions.

Well-tested: The fewer bugs the code has, the higher its quality. Thorough testing filters out critical bugs, ensuring that the software works the way it’s intended.

Extendible: The code you receive has to be extendible. It’s not really great when you have to throw it away after a few weeks.

Efficiency: High-quality code doesn’t use unnecessary resources to perform a desired action.
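Several of these attributes are easier to see in a concrete case. The following is a hypothetical Python example (the function average and the test class AverageTests are invented for illustration) that combines clarity (a descriptive name and type hints), documentation (a docstring), efficiency (no needless copies), and testing (the normal case and an edge case) in one small unit. It is a sketch of the idea, not a prescribed style.

```python
import unittest


def average(values: list[float]) -> float:
    """Return the arithmetic mean of `values`.

    Raises ValueError on an empty list, rather than letting a confusing
    ZeroDivisionError escape from inside the function.
    """
    if not values:
        raise ValueError("average() needs at least one value")
    # sum() makes a single pass over the list and len() is O(1) for lists,
    # so no intermediate copies or extra loops are needed.
    return sum(values) / len(values)


class AverageTests(unittest.TestCase):
    """Covers both the normal case and the edge case."""

    def test_normal_case(self) -> None:
        self.assertEqual(average([2.0, 4.0, 6.0]), 4.0)

    def test_empty_list_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            average([])
```

Saved as a module, the tests can be run with python -m unittest followed by the module name.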

https://bit.ly/2BWiWJM

2. Explain different approaches and measurements used to measure the quality of code

The following criteria can be used to measure code quality:

The code works correctly in the normal case.

The performance is acceptable, even for large data.

The code is clear, using descriptive variable names and method names.

Comments should be in place for things that are unusual, such as a fairly novel method of doing something that makes the code less clear but more performant (to work around a bottleneck), or a workaround for a bug in a framework.

The code is broken down so that when things change, you have to change a fairly small amount of code. (Responsibilities should be well defined, and each piece of code should be trusted to do its job.)

The code handles unexpected cases correctly.

Code is not repeated. A bug in properly factored code is a bug. A bug in copy/paste code can be 20 bugs.

Named constants are used instead of literal values ("magic numbers"), and their names should be semantic. That is, if you have 20 columns and 20 rows, you could have columnCount = 20 and rowCount = 20 and use each one where it belongs. You should never have twenty = 20 (yes, sadly, I've seen this).

The UI should feel natural: you should be able to click the things it feels like you should click, and type where it feels like you should type.

The code should do what it says without side effects.
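A few of these criteria (semantic constants, no copy/paste, handling unexpected input, and no side effects) can be sketched in a handful of lines. This hypothetical Python example reuses the 20-column, 20-row grid from the constants item. The names COLUMN_COUNT, ROW_COUNT, cell_index, and cell_position are assumptions made for illustration, with the constants written in Python's usual upper-case style rather than as columnCount/rowCount.

```python
# Semantic named constants instead of bare literals. Both equal 20,
# but they mean different things, so they get different names.
COLUMN_COUNT = 20
ROW_COUNT = 20


def _check_range(name: str, value: int, limit: int) -> None:
    """One shared bounds check instead of copy/pasted ones.

    A bug here is one bug to fix, not one per pasted copy.
    """
    if not 0 <= value < limit:
        raise IndexError(f"{name} {value} is outside 0..{limit - 1}")


def cell_index(row: int, column: int) -> int:
    """Map (row, column) to a flat row-major index; no side effects."""
    _check_range("row", row, ROW_COUNT)
    _check_range("column", column, COLUMN_COUNT)
    return row * COLUMN_COUNT + column


def cell_position(index: int) -> tuple[int, int]:
    """Inverse of cell_index: map a flat index back to (row, column)."""
    _check_range("index", index, ROW_COUNT * COLUMN_COUNT)
    return divmod(index, COLUMN_COUNT)
```

If the grid later grows to 30 columns, only COLUMN_COUNT changes, and an out-of-range row or column fails loudly with IndexError instead of silently addressing the wrong cell.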

https://bit.ly/2IKDJ95

3. Identify and compare some available tools to maintain the code quality

Best Code Review Tools in the Market

Collaborator

Review Assistant

Codebrag

Gerrit

Codestriker

Rhodecode

Phabricator

Crucible

Veracode

Review Board

Here is a brief review of the individual tools.

1) Collaborator

Collaborator is billed as the most comprehensive peer code review tool, built for teams working on projects where code quality is critical.

See code changes, identify defects, and make comments on specific lines. Set review rules and automatic notifications to ensure that reviews are completed on time.

Custom review templates are unique to Collaborator. Set custom fields, checklists, and participant groups to tailor peer reviews to your team’s ideal workflow.

Easily integrate with 11 different SCMs, as well as IDEs like Eclipse & Visual Studio

Build custom review reports to drive process improvement and make auditing easy.

Conduct peer document reviews in the same tool so that teams can easily align on requirements, design changes, and compliance burdens.

2) Review Assistant

Review Assistant is a code review plug-in for Visual Studio that helps you create review requests and respond to them without leaving the IDE. Review Assistant supports TFS, Subversion, Git, Mercurial, and Perforce. Simple setup: up and running in 5 minutes.

Key features:

Flexible code reviews

Discussions in code

Iterative review with defect fixing

Team Foundation Server integration

Flexible email notifications

Rich integration features

Reporting and Statistics

Drop-in Replacement for Visual Studio Code Review Feature and much more

3) Codebrag

Codebrag is a simple, light-weight, free and open source code review tool which makes the review entertaining and structured.

Codebrag offers features such as non-blocking code review, inline comments and likes, and smart email notifications.

With Codebrag, a team can focus on a workflow for finding and eliminating issues, along with joint learning and teamwork.

Codebrag helps in delivering enhanced software using its agile code review.

Codebrag is open source and licensed under the AGPL.

https://bit.ly/2Tbhf62

4. Discuss the need for dependency/package management tools in software development?