#AI Washing

Text

AI’s “human in the loop” isn’t

I'll be in TUCSON, AZ from November 8-10: I'm the GUEST OF HONOR at the TUSCON SCIENCE FICTION CONVENTION.

AI's ability to make – or assist with – important decisions is fraught: on the one hand, AI can often classify things very well, at a speed and scale that outstrips the ability of any reasonably resourced group of humans. On the other hand, AI is sometimes very wrong, in ways that can be terribly harmful.

Bureaucracies and the AI pitchmen who hope to sell them algorithms are very excited about the cost-savings they could realize if algorithms could be turned loose on thorny, labor-intensive processes. Some of these are relatively low-stakes and make for an easy call: Brewster Kahle recently told me about the Internet Archive's project to scan a ton of journals on microfiche they bought as a library discard. It's pretty easy to have a high-res scanner auto-detect the positions of each page on the fiche and to run the text through OCR, but a human would still need to go through all those pages, marking the first and last page of each journal and identifying the table of contents and indexing it to the scanned pages. This is something AI apparently does very well, and instead of scrolling through endless pages, the Archive's human operator now just checks whether the first/last/index pages the AI identified are the right ones. A project that could have taken years is being tackled with never-seen swiftness.

The operator checking those fiche indices is something AI people like to call a "human in the loop" – a human operator who assesses each judgment made by the AI and overrides it should the AI have made a mistake. "Humans in the loop" present a tantalizing solution to algorithmic misfires, bias, and unexpected errors, and so "we'll put a human in the loop" is the cure-all response to any objection to putting an imperfect AI in charge of a high-stakes application.

But it's not just AIs that are imperfect. Humans are wildly imperfect, and one thing they turn out to be very bad at is supervising AIs. In a 2022 paper for Computer Law & Security Review, the mathematician and public policy expert Ben Green investigates the empirical limits on human oversight of algorithms:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3921216

Green situates public sector algorithms as the latest salvo in an age-old battle in public enforcement. Bureaucracies have two conflicting, irreconcilable imperatives: on the one hand, they want to be fair, and treat everyone the same. On the other hand, they want to exercise discretion, and take account of individual circumstances when administering justice. There's no way to do both of these things at the same time, obviously.

But algorithmic decision tools, overseen by humans, seem to hold out the possibility of doing the impossible and having both objective fairness and subjective discretion. Because it is grounded in computable mathematics, an algorithm is said to be "objective": given two equivalent reports of a parent who may be neglectful, the algorithm will make the same recommendation as to whether to take their children away. But because those recommendations are then reviewed by a human in the loop, there's a chance to take account of special circumstances that the algorithm missed. Finally, a cake that can be both had, and eaten!

For the paper, Green reviewed a long list of policies – local, national, and supra-national – for putting humans in the loop and found several common ways of mandating human oversight of AI.

First, policies specify that algorithms must have human oversight. Many jurisdictions set out long lists of decisions that must be reviewed by human beings, banning "fire and forget" systems that chug along in the background, blithely making consequential decisions without anyone ever reviewing them.

Second, policies specify that humans can exercise discretion when they override the AI. They aren't just there to catch instances in which the AI misinterprets a rule, but rather to apply human judgment to the rules' applications.

Next, policies require human oversight to be "meaningful" – to be more than a rubber stamp. For high-stakes decisions, a human has to do a thorough review of the AI's inputs and output before greenlighting it.

Finally, policies specify that humans can override the AI. This is key: we've all encountered instances in which "computer says no" and the hapless person operating the computer just shrugs their shoulders apologetically. Nothing I can do, sorry!

All of this sounds good, but unfortunately, it doesn't work. The question of how humans in the loop actually behave has been thoroughly studied, published in peer-reviewed, reputable journals, and replicated by other researchers. The measures for using humans to prevent algorithmic harms represent theories, and those theories are testable, and they have been tested, and they are wrong.

For example, people (including experts) are highly susceptible to "automation bias." They defer to automated systems, even when those systems produce outputs that conflict with their own expert experience and knowledge. A study of London cops found that they "overwhelmingly overestimated the credibility" of facial recognition and assessed its accuracy at 300% better than its actual performance.

Experts who are put in charge of overseeing an automated system get out of practice, because they no longer engage in the routine steps that lead up to the conclusion. Presented with conclusions, rather than problems to solve, experts lose the facility and familiarity with how all the factors that need to be weighed to produce a conclusion fit together. Far from being the easiest step of coming to a decision, reviewing the final step of that decision without doing the underlying work can be much harder to do reliably.

Worse: when algorithms are made "transparent" by presenting their chain of reasoning to expert reviewers, those reviewers become more deferential to the algorithm's conclusion, not less – after all, now the expert has to review not just one final conclusion, but several sub-conclusions.

Even worse: when humans do exercise discretion to override an algorithm, it's often to inject the very bias that the algorithm is there to prevent. Sure, the algorithm might give the same recommendation about two similar parents who are facing having their children taken away, but the judge who reviews the recommendations is more likely to override it for a white parent than for a Black one.

Humans in the loop experience "a diminished sense of control, responsibility, and moral agency." That means that they feel less able to override an algorithm – and they feel less morally culpable when they sit by and let the algorithm do its thing.

All of these effects are persistent even when people know about them, are trained to avoid them, and are given explicit instructions to do so. Remember, the whole reason to introduce AI is because of human imperfection. Designing an AI to correct human imperfection that only works when its human overseer is perfect produces predictably bad outcomes.

As Green writes, putting an AI in charge of a high-stakes decision, and using humans in the loop to prevent its harms, produces a "perverse effect": "alleviating scrutiny of government algorithms without actually addressing the underlying concerns." The human in the loop creates "a false sense of security" that sees algorithms deployed for high-stakes domains, and it shifts the responsibility for algorithmic failures to the human, creating what Dan Davies calls an "accountability sink":

https://profilebooks.com/work/the-unaccountability-machine/

The human in the loop is a false promise, a "salve that enables governments to obtain the benefits of algorithms without incurring the associated harms."

So why are we still talking about how AI is going to replace government and corporate bureaucracies, making decisions at machine speed, overseen by humans in the loop?

Well, what if the accountability sink is a feature and not a bug? What if governments, under enormous pressure to cut costs, figure out how to also cut corners, at the expense of people with very little social capital, and blame it all on human operators? The operators become, in the phrase of Madeleine Clare Elish, "moral crumple zones":

https://estsjournal.org/index.php/ests/article/view/260

As Green writes:

The emphasis on human oversight as a protective mechanism allows governments and vendors to have it both ways: they can promote an algorithm by proclaiming how its capabilities exceed those of humans, while simultaneously defending the algorithm and those responsible for it from scrutiny by pointing to the security (supposedly) provided by human oversight.

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/30/a-neck-in-a-noose/#is-also-a-human-in-the-loop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

290 notes

Text

Fake It Till You Make It...They Stake It, or You Break It.

JB: Hi. I read an interesting article in the International Business Times by Vinay Patel titled, “Builder.ai Collapses: $1.5bn ‘AI’ Startup Exposed as ‘Actually Indians’ Pretending to Be Bots.” Apparently, the AI goldrush involves quite a bit of “Fool’s Gold.” The article made me buy, “The AI Con” a book by Emily M. Bender & Alex Hanna. While I’m just a few chapters in, and appreciate their…

#AI BS#AI Investing#AI startup red flags#AI Startups#AI transparency#AI Washing#Alex Hanna#build.ai meltdown#Emily M. Bender#the AI Con#Vinay Patel

0 notes

Text

AI is Driving Investment — But Entrepreneurs Need to be Careful With What They Claim

New Post has been published on https://thedigitalinsider.com/ai-is-driving-investment-but-entrepreneurs-need-to-be-careful-with-what-they-claim/

Artificial intelligence (AI) remains one of the strongest drivers of venture capital investment, proving that the hype cycle isn’t even close to finished. According to a recent EY report, 37% of fundraising in the third quarter of 2024 was for AI-related companies, similar to second-quarter volume. Startups using AI are getting noticed for their ability to tackle big problems in robotics, automation, healthcare, logistics, and more. But the reality is that investors hear, “We’re using AI” all day. The degree to which entrepreneurs actually use it varies substantially. There is even backlash from investors, including a 31-page report by Goldman Sachs that questions how worthy AI is of investment.

The Federal Trade Commission (FTC) recently announced a crackdown on companies making deceptive AI claims. This “AI washing” — lobbing AI into marketing without backing it up — might grab attention, but it’s a fast track to losing credibility. Founders need to communicate clearly and honestly about how AI fits into their business. The focus has to be on actual innovation, not just chasing buzzwords.

It is critical to avoid situations like Theranos, where bold claims were made without substance, leading to severe consequences. The stakes are even higher with AI, as the technical complexity makes it harder to verify claims of how it’s used and easier for misuse to slip through. According to insurer Allianz, 38 AI-related securities class action lawsuits were filed between March 2020 and October 2024 — 13 of them came in 2024 alone.

AI’s appeal to investors isn’t just about technical sophistication. It’s about solving problems that matter and creating a real business. Founders who take shortcuts or exaggerate their AI capabilities risk alienating the very backers they’re trying to attract. With regulators sharpening their scrutiny and the market growing more discerning, delivering substance is essential.

AI’s broad reach

Artificial intelligence encompasses far more than the conversational AI tools that dominate headlines. Patrick Winston, the late computer scientist and professor at MIT, outlined the foundational elements of AI more than 30 years ago in his seminal textbook, “Artificial Intelligence.” Long before large language models captured the public’s imagination, AI was driving advancements in problem solving, quantitative reasoning, and algorithmic control. These roots highlight the diverse applications of AI beyond chatbots and natural language processing.

Consider the role of AI in robotics and computer vision. Simultaneous localization and mapping (SLAM), for example, is a groundbreaking technique enabling machines to navigate and interpret environments. It underpins critical autonomous systems and exemplifies AI’s capability to address complex technical challenges. While not as widely recognized as large language models, these advancements are just as transformative.

Fields such as speech recognition and computer vision, once considered AI innovations, have since matured into distinct disciplines, transforming industries in the process and, in many cases, losing the ‘AI’ label. Speech recognition has revolutionized accessibility and voice-driven interfaces, while computer vision powers advancements in areas like autonomous vehicles, medical imaging, face recognition, and retail analytics. For founders, this underscores the importance of articulating how their innovations fit within AI’s broader landscape. Demonstrating a nuanced understanding of AI’s scope enables startups to stand out in an increasingly competitive funding ecosystem for early-stage companies.

For instance, machine learning models can optimize supply chain logistics, predict equipment failures, or enable dynamic pricing strategies. These applications may not command the same attention as chatbots, but they offer immense value to industries focused on efficiency and innovation.

Speaking investors’ language

When communicating to investors how they use AI, founders should focus on measurable impacts, such as improved efficiency, better user outcomes, or unique technical advantages. Many investors are not deeply technical, so it’s essential to present AI capabilities in simple, accessible language. Explaining what the AI does, how it works, and why it matters builds trust and credibility.

Investors are growing weary of hearing the term “AI,” concerned that entrepreneurs are over-branding their ventures with the technology instead of explaining how it helps them solve problems. AI has become table stakes in many industries, and its role should not be overstated in a company’s strategy.

Equally important is transparency. With the FTC cracking down on exaggerated AI claims, being truthful about what your technology can and cannot do is a necessity. Overstating capabilities might generate initial interest but can quickly backfire, leading to reputational damage or regulatory scrutiny.

Founders should also highlight how their use of AI aligns with broader market opportunities. For example, leveraging AI for predictive analytics, optimization, or decision-making systems can demonstrate foresight and innovation. These applications may not dominate headlines like chatbots, but they address real-world needs that resonate with investors.

Ultimately, it’s about presenting AI as a tool that drives value and solves pressing problems. By focusing on clear communication, honesty, and alignment with investor priorities, founders can position themselves as credible and forward-thinking leaders in the AI space.

#2024#Accessibility#ai#AI Investment#ai tools#ai washing#Analytics#applications#artificial#Artificial Intelligence#attention#automation#autonomous#autonomous systems#autonomous vehicles#Branding#Business#chatbots#command#communication#Companies#complexity#computer#Computer vision#conversational ai#Deceptive AI#driving#efficiency#Entrepreneur#equipment

0 notes

Text

Is AI Washing A Real Thing?

What are your thoughts regarding "AI Washing" - is it real?

What is AI Washing? (Bandwagon Jumpers) – click here or the image below to watch a short video. Okay, Bob Mango, here is a link to my most recent LinkedIn post that very much aligns with what you are saying – https://bit.ly/3B2km72 I turned 65 this year, and after over 40 years in high-tech and procurement, I can say with great certainty that Hype Cycles like Gartner’s do more harm than good.…

0 notes

Text

Another random comic diary. I have a really tiresome job I should be doing(commission comic about washing machines. really. x_x) so I am drawing a lot of other things to avoid working on it :3

#diary comic#train#I have no money from diary comic but drawing those...#feels so much better :D#I would leave washing machine comic to AI any day...#...but I would not have any reall income this month#like I am trying to draw take those commissions as challenge#but this on is too much#comic

4K notes

Text

girl dad satoru

#— ai rambles#his little girl climbing on his back and putting cute little stamps on him and he doesn’t ever bother washing up he’s THE BEST DAD#he has them on his face too#i can bet my liver and pancreas that he goes on missions looking like that#papatoru ily

419 notes

Text

and nothing ever changes.

#my art#fnaf#five nights at freddy's#henry emily#charlotte emily#charlie emily#charliebot#this took ages to post bc some freak on twitter used that fuck ass ai to white wash them. anyways!#henry’s haunted house

243 notes

Text

#america#usa#funny#meme#html#html5#html css#htmlcoding#css#mcdonalds#americans#programming#computer science#cyberspace#sexually transmitted diseases#polls#intelligence#ai#stupid shit#stupidity#brainrot#brain wash#internet#illustration#daredevil#homestuck

90 notes

Text

Tex is very disappointed in her husband:(

#red vs blue#agent north dakota#agent washington#church rvb#rvb#rvb wash#rvb north#leonard church#pfl#project freelancer#missed shot#dead character is alive!!#rvb theta#rvb delta#ai#damn it church#agent texas#rvb tex#omega ai

281 notes

Text

does anyone want to help us grab some groceries? i put too much time into making it more liveable down here and we ran out of staples before i had a chance to pick anything else up lol..

tentative goal of $20/150 so we can shore up some, thanks!

#as soon as i get this day job its over for being broke all the time#i cannot wait#we got our kettle and blankets in the mail today tho and it made me SO ridiculously happy#the blankets in the wash rn...#gotta remember to put tea on the grocery list bc we had to throw our reserves out when we moved#gotta put that kettle to work<3#also ps naturally ofc u can always ask for artistic compensation! i like to work for what im given#its just been really scraping together pennies w ai and the economy crashing haha no Big Work really

74 notes

Text

everything we know about Red vs Blue means that there's a canonically supported reality in which every bit of the rvb timeline that happens post-Freelancer saga is actually just simulations Epsilon is running in WASH's head as he unravels

#true rvb end: wash wakes up in a freelancer medbay with york and north sitting next to him#'hey buddy how're you feeling? that ai sure did a number on you'#'how long was I out'#'2 hours'#'felt like 20 years; you guys both died'#red vs blue#rvb restoration#the ol' it was just a dream cliche#they're actually called simulation troopers because they're part of an epsilon simulation! /s#to be clear this would be a bad ending but it is funny#and I am never not thinking about agent washington#inspired by epsilon showing off his simulations in rvb19#+ my feelings about rvb19#+ a comment made on one of my other wash posts from help-we-are-out-of-chicken-fries#rvb19#rvb spoilers#rvb#epsilon rvb#agent washington

267 notes

Text

*colors your kiseki part 2: rainbow edition*

me when i see literally any kiseki scene: you are not immune to being color corrected. and given skintones. while Maintaining Pretty. 💝 (pt 1)

...i love working with this show ngl. & to the people who leave kind words on my sets: i love you too. 🫂❤️🥰🥰🥰

#julian watches kiseki#kiseki: dear to me#*mypost#kisekiedit#kdtm#flashing gif#the amount of grain i have to work with based on how washed out details are by the color... but its woorth iiiit#biggest pain in the ass sometimes but the BIGGEST ego booster when i see the end results#honestly it's just fun#and i get to look at my guys in the meantime theres really no losing#some like the fourth are sooooooo drenched in color that all i can do is pastel-ify it#but i tend to like those results as well as long as the grain isnt too bad. bc you can see more details after and brighten the deep shadows#bc like. tbh i love the way things are lit around chen yi and ai di#i loooove how colorful their half of the show is#i just also like. to make. it prettier.......#pdribs#uservid#userspring#usernik#userjjessi#userspicy#userrain#ai di x chen yi#chen yi x ai di#again no pressure to rb im just out here

93 notes

Text

The Growing Number of Tech Companies Getting Cancelled for AI Washing

New Post has been published on https://thedigitalinsider.com/the-growing-number-of-tech-companies-getting-cancelled-for-ai-washing/

In 2024, 15 AI technology companies were hit by regulators for exaggerating their products’ capabilities, more than double the number in 2023. AI-related filings are on the rise, and tech companies could be caught in the crossfire if they don’t understand emerging regulations and how to avoid running afoul of them.

What’s Wrong with AI Marketing Today?

While many are familiar with the phrase “greenwashing,” it’s only in the last year that a new form has emerged from the hype around artificial intelligence, and it’s called “AI washing.” According to the BBC, AI washing can be defined as claiming to use AI when in reality a less-sophisticated method of computing is being used. They explain that AI washing can also occur when companies overstate how operational their AI is, or when a company combines products or capabilities together, for example when “firms are simply bolting an AI chatbot onto their existing non-AI operating software.”

Exaggerated AI claims are dangerous for users and other stakeholders. Three obvious concerns about AI washing come to mind:

The user paying for something they’re not getting

Users expecting an outcome that isn’t achievable

Company stakeholders not knowing if they’re investing in a business that is truly innovating with AI

AI washing is a growing issue as tech companies compete for greater market share. As many as 40% of companies that described themselves as AI start-ups in 2019 had zero artificial intelligence technology. The pressure to offer advanced technology is even greater now than it was five years ago.

What’s Driving AI Washing?

Experts have a few theories about what’s behind this growing phenomenon. Douglas Dick, the head of emerging technology risk at KPMG in the UK, told the BBC that the lack of a definition of AI, and the resulting ambiguity, is what makes AI washing possible.

Experts at Berkeley believe that the discourse of organizational culture is responsible for AI washing, and the core reasons for this phenomenon include:

Lack of technical AI knowledge in senior leadership

Pressure for continuous innovation

Short-termism and hype

Fear of missing out (FOMO)

AI washing can also be driven by funding. Investors want to see consistent innovation and outpacing of competitors. Even if brands haven’t fully developed an AI capability, they can attract the attention of investors with half-baked automation tools to earn additional capital.

With the global AI market set to reach approximately $250B by the end of 2025, it’s easy to understand why the bandwagon is in full effect, and startups eager for funding are quick to slap the AI label onto anything. Unfortunately, regulators have taken note.

AI Tech Companies Charged with AI Washing

Companies that claim to use artificial intelligence are often just using advanced computing and automation techniques. Unless true AI data science infrastructure is in place, with machine learning algorithms, neural networks, natural language processing, image recognition, or some form of Gen AI in play, the company may just be putting up smoke and mirrors with its AI claims.

One AI HR tech company called Joonko was shut down by the SEC for fraudulent practices.

Learning from Joonko

Joonko claimed that it could help employers identify near-hires so employers could tap into these pools. The idea was that this would create more diverse candidates to be put in front of recruiters and have a greater chance of getting hired. Joonko was so successful at marketing its AI that Eubanks wrote about Joonko in his first book, and the company raised $27 million in VC funding between 2021 and 2022.

When the SEC charged Joonko’s former CEO with AI washing securities fraud, it was because he had falsely represented the number and names of the company’s customers. He claimed that Joonko sold to global credit card, travel, and luxury brands, and forged bank statements and purchase orders for investors. The CEO received criminal charges in addition to the SEC charges against the company.

Learning from Codeway

In 2023, the Codeway app was charged for a misleading ad on Instagram that claimed their AI could fix blurry photos. The ad read “Enhance your image with AI” and when challenged by a complainant, the company failed to demonstrate how their app could fix a blurry image on its own without the help of other digital photo enhancement processes. The Advertising Standards Authority (ASA) upheld the complaint and banned the company from running that ad or any others like it.

Other Examples

In the US, the FTC and SEC recently carried out the following enforcement actions:

Multiple business schemes were halted after claiming people could use AI to make money with online storefronts

A claim for over 190k was actioned for ineffective robot lawyer services

A company called Rytr LLC falsely claimed that it could create AI-generated content

A settlement action against IntelliVision Technologies for misleading claims about its AI facial recognition

Delphia Inc. and Global Predictions Inc. were charged for making false claims about AI on their website and social media accounts

Emerging Regulations

The growth of AI technology, and AI washing, have caught the attention of regulators around the world. In the UK, the ASA is already setting a precedent by litigating against unsubstantiated AI-related ads.

In Canada, regulators are also targeting unsubstantiated claims about AI, as well as marketing material that is misleading or that overly promotes AI technology. The Canadian Securities Administrators released a staff notice on November 7th, 2024 that shared some examples of what it considers to be AI washing:

An AI company making the claim that their issuer is disrupting their industry with the most advanced and modern AI technology available

An AI company making the claim that they are the global leader in their AI category

An AI company exaggerating its usage or importance to the industry

In the US, there are state-specific regulations, like New York City’s mandatory AI bias audits that every AI tech company operating there is required to have. However, there are no comprehensive federal regulations that restrict the development or use of AI. In December 2024, the US Congress was considering more than 120 different AI bills. These bills would cover everything from AI’s access to nuclear weapons to copyright, but they would rely on voluntary measures rather than strict protocols that could slow technological progress. While these bills are debated, there is a patchwork of US federal rules within specific departments, such as the Federal Aviation Administration, which says AI in aviation must be reviewed. Similarly, there have been executive orders on AI from the White House. These orders, put in place to mitigate the risks of AI use, ensure public safety, label AI-generated content, protect data privacy, and mandate safety testing, along with other AI guidance, were all removed by the Trump administration in January 2025. US-based AI companies that serve international markets will still have to adhere to those markets’ regulations.

Don’t Be an AI Poser

As regulators continue to take action against culprits of AI washing, tech companies should take note. Any company that does claim to make real AI technology should be able to back up its claims. Its marketing team should avoid exaggerating the capability of the company’s AI products, as well as the outcomes, the customers, and the revenue. Any company that is unsure of its own technology or marketing should review emerging legislation locally and within the markets it sells to. Consumers or companies thinking of purchasing AI technology should look very closely at the product before buying it. With the 2024 cases of AI washing still in the early stages of litigation, the story is still unfolding, but one thing is sure: you don’t want your company to be a part of it.

#2022#2023#2024#2025#Administration#advertising#ai#AI bias#AI Chatbot#ai marketing#AI technology#ai washing#ai-generated content#Algorithms#app#artificial#Artificial Intelligence#attention#automation#aviation#back up#bank#BBC#Bias#book#brands#Business#Canada#CEO#chatbot

0 notes

Text

"Don't be afraid to let go."

Quick Wash sketch. I miss the freelancers dearly.

#i finally watch the final#i was really glad they focused on wash stuff hes been through it#Wash and Tucker help each other with AI aftermath#they deserve a good retirement#rvb#rvb wash#red vs blue#epsilon#rvb epsilon#doc scene was brutal

138 notes

Text

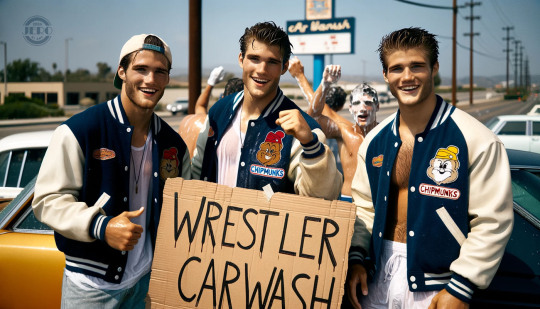

Wrestler Car Wash!

Come on out and support the guys - they're sure to be extra appreciative

189 notes
