#AI and copyright law

Text

Groundbreaking Lawsuit: OpenAI Faces Copyright Battle with Prominent Authors

Discover the latest news about the significant copyright lawsuit against OpenAI, where popular fiction authors accuse the AI organization of copyright infringement in training ChatGPT. This headline horizon raises important questions about the intersection of artificial intelligence and copyright law.

1 note

·

View note

Text

AI models can seemingly do it all: generate songs, photos, stories, and pictures of what your dog would look like as a medieval monarch.

But all of that data and imagery is pulled from real humans — writers, artists, illustrators, photographers, and more — who have had their work compressed and funneled into the training minds of AI without compensation.

Kelly McKernan is one of those artists. In 2023, they discovered that Midjourney, an AI image generation tool, had used their unique artistic style to create over twelve thousand images.

“It was starting to look pretty accurate, a little infringe-y,” they told The New Yorker last year. “I can see my hand in this stuff, see how my work was analyzed and mixed up with some others’ to produce these images.”

For years, leading AI companies like Midjourney and OpenAI, have enjoyed seemingly unfettered regulation, but a landmark court case could change that.

On May 9, a California federal judge allowed ten artists to move forward with their allegations against Stability AI, Runway, DeviantArt, and Midjourney. This includes proceeding with discovery, which means the AI companies will be asked to turn over internal documents for review and allow witness examination.

Lawyer-turned-content-creator Nate Hake took to X, formerly known as Twitter, to celebrate the milestone, saying that “discovery could help open the floodgates.”

“This is absolutely huge because so far the legal playbook by the GenAI companies has been to hide what their models were trained on,” Hake explained...

“I’m so grateful for these women and our lawyers,” McKernan posted on X, above a picture of them embracing Ortiz and Andersen. “We’re making history together as the largest copyright lawsuit in history moves forward.” ...

The case is one of many AI copyright theft cases brought forward in the last year, but no other case has gotten this far into litigation.

“I think having us artist plaintiffs visible in court was important,” McKernan wrote. “We’re the human creators fighting a Goliath of exploitative tech.”

“There are REAL people suffering the consequences of unethically built generative AI. We demand accountability, artist protections, and regulation.”

-via GoodGoodGood, May 10, 2024

#ai#anti ai#fuck ai art#ai art#big tech#tech news#lawsuit#united states#us politics#good news#hope#copyright#copyright law

2K notes

·

View notes

Text

Also did you know that the reason NYT can sue openAI with the expectation of success is that the AI cites its sources about as well as James Somerton.

It regurgitates long sections of paywalled NYT articles verbatim, and then cites it wrong, if at all. It's not just a matter of stealing traffic and clicks etc, but also illegal redistribution and damaging the NYT's brand regarding journalistic integrity by misquoting or citing incorrectly.

OpenAI cannot claim fair use under these circumstances lmao.

#current events#open ai#openai#capitalism#lawsuits#nyt#new york times#the new york times#Phoenix Talks#copyright#copyright law#james somerton

3K notes

·

View notes

Text

An Epic antitrust loss for Google

A jury just found Google guilty on all counts of antitrust violations stemming from its dispute with Epic, maker of Fortnite, which brought a variety of claims related to how Google runs its app marketplace. This is huge:

https://www.nytimes.com/2023/12/11/technology/epic-games-google-antitrust-ruling.html

The mobile app store world is a duopoly run by Google and Apple. Both use a variety of tactics to prevent their customers from installing third party app stores, which funnels all app makers into their own app stores. Those app stores cream an eye-popping 30% off every purchase made in an app.

This is a shocking amount to charge for payment processing. The payments sector is incredibly monopolized and notorious for its price-gouging – and its standard (wildly inflated) rate is 2-5%:

https://pluralistic.net/2023/08/04/owning-the-libs/#swiper-no-swiping

Now, in theory, Epic doesn't have to sell in Google Play, the official Android app store. Unlike Apple's iOS, Android permit both sideloading (installing an app directly without using an app store) and configuring your device to use a different app store. In practice, Google uses a variety of anticompetitive tricks to prevent these app stores from springing up and to dissuade Android users from sideloading. Proving that Google's actions – like paying Activision $360m as part of "Project Hug" (no, really!) – were intended to prevent new app storesfrom springing up was a big lift for Epic. But they managed it, in large part thanks to Google's own internal communications, wherein executives admitted that this was exactly why Project Hug existed. This is part of a pattern with Big Tech antitrust: many of the charges are theoretically very hard to make stick, but because the companies put their evil plans in writing (think of the fraudulent crypto exchange FTX, whose top execs all conferred in a groupchat called "Wirefraud"), Big Tech keeps losing in court:

https://pluralistic.net/2023/09/03/big-tech-cant-stop-telling-on-itself/

Now, I do like to dunk on Big Tech for this kind of thing, because it's objectively funny and because the companies make so many unforced errors. But in an important sense, this kind of written record is impossible to avoid. Any large institution can only make and enact policy through administrative systems, and those systems leave behind a paper-trail: memos, meeting minutes, etc. Yes, we all know that quote from The Wire: "Is you taking notes on a fucking criminal conspiracy?" But inevitably, any ambitious conspiracy can only exist if someone is taking notes.

What's more, any large conspiracy involving lots of parties will inevitably produce leaks. Think of this as the corollary to the idea that the moon landing can't be a hoax, because there's no way 400,000 co-conspirators could keep the secret. Big Tech's conspiracies required hundreds or even thousands of collaborators to keep their mouths shut, and eventually someone blabs:

https://www.science.org/content/article/fake-moon-landing-you-d-need-400000-conspirators

This is part of a wave of antitrust cases being brought against the tech giants. As Matt Stoller writes, the guilty-on-all-counts jury verdict will leak into current and future actions. Remember, Google spent much of this year in court fighting the DoJ, who argued that the company bribed Apple not to make a competing search engine, paying tens of billions every year to keep a competitor from emerging. Now that a jury has convinced Google of doing that to prevent alternative app stores from emerging, claims that it used these pay-for-delay tactics in other sectros get a lot more credible:

https://www.thebignewsletter.com/p/boom-google-loses-antitrust-case

On that note: what about Apple? Epic brought a very similar case against Apple and lost. Both Apple and Epic are appealing that case to the Supreme Court, and now that Google has been convicted in a similar case, it might prompt the Supremes to weigh in and resolve the seeming inconsistencies in the interpretation of federal law.

This is a key moment in the long project to wrest antitrust away from the pro-monopoly side, who spent decades "training" judges to produce verdicts that run counter to the plain language of America's antitrust law:

https://pluralistic.net/2021/08/13/post-bork-era/#manne-down

There's 40 years' worth of bad precedent to overturn. The good news is that we've got the law on our side. Literally, the wording of the laws and the records of the Congressional debate leading to their passage, all militate towards the (incredibly obvious) conclusion that the purpose of anti-monopoly law is to fight monopoly, not defend it:

https://pluralistic.net/2023/04/14/aiming-at-dollars/#not-men

It's amazing to realize that we got into this monopoly quagmire because judges just literally refused to enforce the law. That's what makes one part of the jury verdict against Google so exciting: the jury found that Google's insistence that Play Store sellers use its payment processor was an act of illegal tying. Today, "tying" is an obscure legal theory, but few doctrines would be more useful in disenshittifying the internet. A company is guilty of illegal tying when it forces you to use unrelated products or services as a condition of using the product you actually want. The abandonment of tying led to a host of horribles, from printer companies forcing you to buy ink at $10,000/gallon to Livenation forcing venues to sell tickets through its Ticketmaster subsidiary.

The next phase of this comes when the judge decides on the penalty. Epic doesn't want cash damages – it wants the judge to order Google to fulfill its promise of "an open, competitive Android ecosystem for all users and industry participants." They've asked the judge to order Google to facilitate third-party app stores, and to separate app stores from payment processors. As Stoller puts it, they want to "crush Google’s control over Android":

https://www.epicgames.com/site/en-US/news/epic-v-google-trial-verdict-a-win-for-all-developers

Google has sworn to appeal, surprising no one. The Times's expert says that they will have a tough time winning, given how clear the verdict was. Whatever this means for Google and Android, it means a lot for a future free from monopolies.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/12/im-feeling-lucky/#hugger-mugger

#pluralistic#conspiracies#big tech#discovery#ai#copyright#copyfight#app stores#circuit splits#apple#apple v epic#law#trustbusting#competition#monopolies#google#epic#google v epic#fortnite#antitrust#tying#payment processing#scotus#project hug#pay for delay#games#gaming

1K notes

·

View notes

Text

So, Adobe's going ahead with pushing that law that'd make it illegal to intentionally "plagarize" someone's style with AI art. And with me, crowing like Cassandra, I feel I must explain why this is a bad idea.

Namely: There is an AI tool that lets you analyze the "style" of an art piece, several of them even. It is called CLIP Interrogator. It is likely the only way this could directly be re-enforced at scale.

There are also zero percent negative legal repercussions for "false positives" when it comes to saying someone infringed on your copyright, even moreso if the accuser is a megacorp and the accused is a small creator.

Put these two together, and you have a recipe for basically legalizing Content ID for visual artstyles over the anti-AI-art moral panic. Anyone who's seen Youtube and its false positives. knows why this is a bad idea.

Not to mention, it would criminalize anyone doing pastiche as they do in other mediums with AI art. Which might not be a big deal, except, when it creates the legal precedent for style being copyrightable, who's to say it might not spread to other mediums?

Like, we already have that as a major problem in music post-Blurred-Lines. And the bloat of copyright from "temporary protection designed to help compensate for one's work" to the idea of "intelectual property" (though that's its own rant) shows that when precedent for expanding copyright law is given, megacorps will always take it farther.

"Copyright law helps artists" only works at-scale if you're one of the Copyrights Georg-type megacorps who've been kicking small remix-based creators in the teeth since the dawn of the net.

If you're worried about the fate of small commission artists with regards to AI art, which let's face it is what's driving 90% of the most reactionary side of this discourse, look into politically organizing as art collectives both to pool resources/talent and to organize against the sites that're actually enshittifying things and doing horny bans that fuck over artists. Not terrible copyright-hell-laws.

You do not want this law.

129 notes

·

View notes

Text

I just think that if your work is getting scraped, you should be paid. Not the host website. The actual creator of the work.

If you cover a song and you try to sell that cover, you still have to pay the original songwriter their mechanical royalty. Sure, the publisher will take a cut for doing the administrative work but the writer still gets theirs too.

You can't have it both ways. It can't be art on one side of the transaction and then not be art on the other. You don't get to only use copyright law as protection to benefit corporations, and then turn around and demand it not benefit the artists making the works.

And honestly? Do you really think the AI bubble could withstand needing to actually compensate the artists that've been ripped off and rehashed? I don't.

#honestly I think statutory royalties might be the way to shut them down forever#oh no! now you have to pay people money for their work!#I know tumblr likes to treat copyright law like it's the boogeyman sometimes#but as far as legislation goes the 1976 act was actually pretty amazing for artists#like copyright reversion???#do you understand how fucking amazing that is?#not like congress will actually do anything because the GOP is committed to being useless to prove government is useless#but uh#maybe#anti ai

58 notes

·

View notes

Text

All of this because some shitty Star Wars author was buttmad about his books being put in a library (that was functional identical to a Real Library)

#internet archive#Gotta love how AI companies can scrape art & writing without consequences but its only the archive sites who get fucked by copyright laws

25 notes

·

View notes

Note

https://iatse.net/iatse-commends-introduction-of-generative-ai-copyright-disclosure-act-by-rep-schiff/

thank you for the info :)

[Image ID: An image of Dean Winchester from the confession scene saying 'I love you'. /End ID]

33 notes

·

View notes

Text

Episode 211: The Copyright Conundrum

In Episode 211, “The Copyright Conundrum,” Flourish and Elizabeth welcome prolific fic writer and copyright expert @earlgreytea68 back to the podcast to discuss her new Fansplaining article, “How U.S. Copyright Law Fails Fan Creators.” After giving a little primer on copyright, trademark, fair use, and how they all intersect with fandom, EGT discusses the ways current U.S. intellectual property law is unequipped to deal with non-monetized creativity—and how the system fails everyone but the big publishers and studios. They also discuss copyright and AI, and whether copyright claims have the potential to take down LLMs and AI tools.

Click through to our site to listen or read a full transcript!

And an exciting note: this episode has a sponsor!! Ellipsus is a new collaborative writing tool that lets you and your co-writers/editors/betas create different drafts and merge them together. They are very anti-generative AI, and they reached out to us because they have roots in fic fandom. Ellipsus is currently in closed beta, but if you use our SPECIAL LINK, you’ll go to the top of the list. We’ve really enjoyed testing it out—and we hope this can supplant Google Docs (ugh) in our fic writing.

#fansplaining#fandom#earlgreytea68#copyright law#intellectual property#fanfiction#fanworks#creativity#monetization#ai

76 notes

·

View notes

Text

AI-Created Art Isn’t Copyrightable, Judge Says in Ruling that Could Give Hollywood Studios Pause

A federal judge on Friday upheld a finding from the U.S. Copyright Office that a piece of "art" "created by" AI is not open to protection. The ruling was delivered in an order turning down a tech bro’s bid to challenge the government’s position refusing to register works made by AI.

The opinion stressed, “Human authorship is a bedrock requirement.”

Copyrights and patents, the judge said, were conceived as “forms of property that the government was established to protect, and it is understood that recognizing exclusive rights in that property would further the public good by incentivizing individuals to create and invent.”

The ruling continued, “The act of human creation — and how to best encourage human individuals to engage in that creation, and thereby promote science and the useful arts — is thus central to American copyright from its very inception.”

Copyright law wasn’t designed to protect non-human actors.

The order was delivered during the writers and actors strike seeking protection from AI infringement (among other corporate abuses), and as courts also weigh the legality of AI companies training their systems on copyrighted works. Those lawsuits, filed by artists and artists in California federal court, allege copyright infringement and could result in the firms having to destroy their large language models.

full story: X

Here's how humans can retain creative control despite tech bros trying to remove artists from the creative process: Make it so they can't profit from human creativity, and only give human creators legal protection for their work. When someone driven by profit can't profit from something, they'll move on to something else.

92 notes

·

View notes

Link

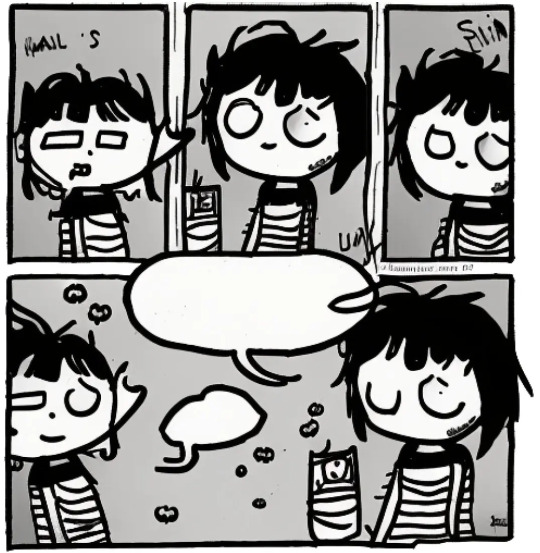

By Sarah Andersen

Ms. Andersen is a cartoonist and the illustrator of a semiautobiographical comic strip, “Sarah’s Scribbles.”

7 min read

At 19, when I began drafting my webcomic, I had just been flung into adulthood. I felt a little awkward, a little displaced. The glittering veneer of social media, which back then was mostly Facebook, told me that everyone around me had their lives together while I felt like a withering ball of mediocrity. But surely, I believed, I could not be the only one who felt that life was mostly an uphill battle of difficult moments and missed social cues.

I started my webcomic back in 2011, before “relatable” humor was as ubiquitous online as it is today. At the time, the comics were overtly simple, often drawn shakily in Microsoft Paint or poorly scanned sketchbook pages. The jokes were less punchline-oriented and more of a question: Do you feel this way too? I wrote about the small daily struggles of missed clock alarms, ill-fitting clothes and cringe-worthy moments.

I had hoped, at most, for a small, niche following, but to my elation, I had viral success. My first comic to reach a sizable audience was about simply not wanting to get up in the morning, and it was met with a chorus of “this is so me.” I felt as if I had my finger on the pulse of the collective underdog. To have found this way of communicating with others and to make it my work was, and remains, among the greatest gifts and privileges of my life.

But the attention was not all positive. In late 2016, I caught the eye of someone on the 4chan board /pol/. There was no particular incident that prompted the harassment, but in hindsight, I was a typical target for such groups. I am a woman, the messaging in the comics is feminist leaning, and importantly, the simplicity of my work makes it easy to edit and mimic. People on the forum began reproducing my work and editing it to reflect violently racist messages advocating genocide and Holocaust denial, complete with swastikas and the introduction of people getting pushed into ovens. The images proliferated online, with sites like Twitter and Reddit rarely taking them down.

A recent example of my work

For my comics, I keep things simple — punchy drawings, minimal text — because I like my ideas to be immediately accessible. I write a lot about day-to-day life. My pets, their personality quirks and my love for them are common themes.

The ways my images were altered were crude, but a few were convincing. Through the bombardment of my social media with these images, the alt-right created a shadow version of me, a version that advocated neo-Nazi ideology. At times people fell for it. I received outraged messages and had to contact my publisher to make my stance against this ultraclear. I started receiving late-night calls and had to change my number, and I got the distinct impression that the alt-right wanted a public meltdown.

At one point, someone appeared to have made a typeface, or a font, out of my handwriting. Something about the mimicking of my handwriting, streamlined into an easily accessible typeface, felt particularly violating. Handwriting is personal and intimate to me, a detail that defines me as much other unique traits like the color of my eyes or my name. I can easily recognize the handwriting of my family members and friends — it is literally their signature. Something about this new typeface made me feel as if the person who had created it was trying to program a piece of my soul.

One of the images created by the alt-right

In this comic, only the text was edited. The drawings are mine (though they are a bit dated as my style has evolved since 2017). I found the typeface to be a convincing imitation of my handwriting. Had the text been fitted into the speech bubbles more elegantly, I’d find this almost indistinguishable from my work.

The harassment shocked the naïveté out of my system. A shadow me hung over my head constantly, years after the harassment campaign ended. I had been writing differently, always trying to stay one step ahead of how my drawings could be twisted. Every deranged image the alt-right created required someone sitting down and physically editing or drawing it, and this took time and effort, allowing me to outpace them and salvage my career.

And then along comes artificial intelligence. In October, I was sent via Twitter an image generated by A.I. from a random fan who had used my name as a prompt. It wasn’t perfect, but the contours of my style were there. The notion that someone could type my name into a generator and produce an image in my style immediately disturbed me. This was not a human creating fan art or even a malicious troll copying my style; this was a generator that could spit out several images in seconds. With some technical improvement, I could see how the process of imitating my work would soon become fast and streamlined, and the many dark potentials bubbled to the forefront of my mind.

I felt violated. The way I draw is the complex culmination of my education, the comics I devoured as a child and the many small choices that make up the sum of my life. The details are often more personal than people realize — the striped shirt my character wears, for instance, is a direct nod to the protagonist of “Calvin and Hobbes,” my favorite newspaper comic. Even when a person copies me, the many variations and nuances in things like line weight make exact reproductions difficult. Humans cannot help bringing their own humanity into art. Art is deeply personal, and A.I. had just erased the humanity from it by reducing my life’s work to an algorithm.

A.I. text-to-image generators such as Stable Diffusion, Midjourney and DALL-E exploded onto the scene this year and in mere months have become widely used to create all sorts of images, ranging from digital art pieces to character designs. Stable Diffusion alone has more than 10 million daily users. These A.I. products are built on collections of images known as “data sets,” from which a detailed map of the data set’s contents, the “model,” is formed by finding the connections among images and between images and words. Images and text are linked in the data set, so the model learns how to associate words with images. It can then make a new image based on the words you type in.

An A.I. generated image that I created

when I used my name as a prompt

It’s not perfect — but it has captured the signature elements of my drawing style. The black bangs, striped shirt and wide eyes are immediately recognizable. As A.I. gets increasingly sophisticated, I am growing more concerned about what’s possible.

The data set for Stable Diffusion is called LAION 5b and was built by collecting close to six billion images from the internet in a practice called data scraping. Most, if not all, A.I. generators have my work in their data sets.

Legally, it appears as though LAION was able to scour what seems like the entire internet because it deems itself a nonprofit organization engaging in academic research. While it was funded at least in part by Stability AI, the company that created Stable Diffusion, it is technically a separate entity. Stability AI then used its nonprofit research arm to create A.I. generators first via Stable Diffusion and then commercialized in a new model called DreamStudio.

So what makes up these data sets? Well, pretty much everything. For artists, many of us had what amounted to our entire portfolios fed into the data set without our consent. This means that A.I. generators were built on the backs of our copyrighted work, and through a legal loophole, they were able to produce copies of varying levels of sophistication. When I checked the website haveibeentrained.com, a site created to allow people to search LAION data sets, so much of my work was on there that it filled up my entire desktop screen.

Many artists are not completely against the technology but felt blindsided by the lack of consideration for our craft. Being able to imitate a living artist has obvious implications for our careers, and some artists are already dealing with real challenges to their livelihood. Concept artists create works for films, video games, character designs and more. Greg Rutkowski, a hugely popular concept artist, has been used in a prompt for Stable Diffusion upward of 100,000 times. Now, his name is no longer attached to just his own work, but it also summons a slew of imitations of varying quality that he hasn’t approved. This could confuse clients, and it muddies the consistent and precise output he usually produces. When I saw what was happening to him, I thought of my battle with my shadow self. We were each fighting a version of ourself that looked similar but that was uncanny, twisted in a way to which we didn’t consent.

It gets darker. The LAION data sets have also been found to include photos of extreme violence, medical records and nonconsensual pornography. There’s a chance that somewhere in there lurks a photo of you. There are some guardrails for the more well-known A.I. generators, such as limiting certain search terms, but that doesn’t change the fact that the data set is still rife with disturbing material, and that users can find ways around the term limitations. Furthermore, because LAION is open source, people are creating new A.I. generators that don’t have these same guardrails and that are often used to make pornography.

In theory, everyone is at risk for their work or image to become a vulgarity with A.I., but I suspect those who will be the most hurt are those who are already facing the consequences of improving technology, namely members of marginalized groups. Alexandria Ocasio-Cortez, for instance, has an entire saga of deep-fake nonconsensual pornography attached to her image. I can only imagine that some of her more malicious detractors would be more than happy to use A.I. to harass her further. In the future, with A.I. technology, many more people will have a shadow self with whom they must reckon. Once the features that we consider personal and unique — our facial structure, our handwriting, the way we draw — can be programmed and contorted at the click of a mouse, the possibilities for violations are endless.

I’ve been playing around with several generators, and so far none have mimicked my style in a way that can directly threaten my career, a fact that will almost certainly change as A.I. continues to improve. It’s undeniable; the A.I.s know me. Most have captured the outlines and signatures of my comics — black hair, bangs, striped T-shirts. To others, it may look like a drawing taking shape.

I see a monster forming.

#sarah's scribbles (comic strip)#article#new york times#ai art#AI#memes#IP#copyright laws#copyright#web comics#art#comics

181 notes

·

View notes

Text

you can find something to be a real asshole move without also thinking it needs to be made illegal. this seems especially prescient with the rise of AI discourse.

#yeah it's a dick move to fire artists and use ai instead#yeah it's a dick move to scrape data without users consent#but is there really a good way to make it illegal#without tightening copyright law (which ALSO makes things harder for artists)#no#not at the moment#pigeon.txt#leftism#opinion

24 notes

·

View notes

Note

got anything good, boss?

Sure do!

-

"Weeks after The New York Times updated its terms of service (TOS) to prohibit AI companies from scraping its articles and images to train AI models, it appears that the Times may be preparing to sue OpenAI. The result, experts speculate, could be devastating to OpenAI, including the destruction of ChatGPT's dataset and fines up to $150,000 per infringing piece of content.

NPR spoke to two people "with direct knowledge" who confirmed that the Times' lawyers were mulling whether a lawsuit might be necessary "to protect the intellectual property rights" of the Times' reporting.

Neither OpenAI nor the Times immediately responded to Ars' request to comment.

If the Times were to follow through and sue ChatGPT-maker OpenAI, NPR suggested that the lawsuit could become "the most high-profile" legal battle yet over copyright protection since ChatGPT's explosively popular launch. This speculation comes a month after Sarah Silverman joined other popular authors suing OpenAI over similar concerns, seeking to protect the copyright of their books.

Of course, ChatGPT isn't the only generative AI tool drawing legal challenges over copyright claims. In April, experts told Ars that image-generator Stable Diffusion could be a "legal earthquake" due to copyright concerns.

But OpenAI seems to be a prime target for early lawsuits, and NPR reported that OpenAI risks a federal judge ordering ChatGPT's entire data set to be completely rebuilt—if the Times successfully proves the company copied its content illegally and the court restricts OpenAI training models to only include explicitly authorized data. OpenAI could face huge fines for each piece of infringing content, dealing OpenAI a massive financial blow just months after The Washington Post reported that ChatGPT has begun shedding users, "shaking faith in AI revolution." Beyond that, a legal victory could trigger an avalanche of similar claims from other rights holders.

Unlike authors who appear most concerned about retaining the option to remove their books from OpenAI's training models, the Times has other concerns about AI tools like ChatGPT. NPR reported that a "top concern" is that ChatGPT could use The Times' content to become a "competitor" by "creating text that answers questions based on the original reporting and writing of the paper's staff."

As of this month, the Times' TOS prohibits any use of its content for "the development of any software program, including, but not limited to, training a machine learning or artificial intelligence (AI) system.""

-via Ars Technica, August 17, 2023

#Anonymous#ask#me#open ai#chatgpt#anti ai#ai writing#lawsuit#united states#copyright law#new york times#good news#hope

748 notes

·

View notes

Note

Hello story how has your day been ? I guess you are in Europe so How has your night been ?

Time zones are funny because it is 3pm for me . And you have night time right now . Crazy stuff .

<3 - cab

right crazy how the world works. and its not even that big relatively

my days been good, though i fell asleep for like 3 hours at some point which is a shame......... did some artfight stuff too

my bf streamed some marvel rivals since he got into the closed beta yesterday and he played a few matches as the punisher so i could see :) which was very nice of him but also kind of funny

and how are you doing so far, cab? your days not done yet so i cant ask how its been yet but yk

#i have so much time bc im in college and its summer break [thumbsup emoji]#would have less if i wasnt such a delight to have in class and didnt pass copyright law just by arguing about ai with a dude#anyway yeah hello :)#ask

11 notes

·

View notes

Text

An open copyright casebook, featuring AI, Warhol and more

I'm coming to DEFCON! On Aug 9, I'm emceeing the EFF POKER TOURNAMENT (noon at the Horseshoe Poker Room), and appearing on the BRICKED AND ABANDONED panel (5PM, LVCC - L1 - HW1–11–01). On Aug 10, I'm giving a keynote called "DISENSHITTIFY OR DIE! How hackers can seize the means of computation and build a new, good internet that is hardened against our asshole bosses' insatiable horniness for enshittification" (noon, LVCC - L1 - HW1–11–01).

Few debates invite more uninformed commentary than "IP" – a loosely defined grab bag that regulates an ever-expaning sphere of our daily activities, despite the fact that almost no one, including senior executives in the entertainment industry, understands how it works.

Take reading a book. If the book arrives between two covers in the form of ink sprayed on compressed vegetable pulp, you don't need to understand the first thing about copyright to read it. But if that book arrives as a stream of bits in an app, those bits are just the thinnest scrim of scum atop a terminally polluted ocean of legalese.

At the bottom layer: the license "agreement" for your device itself – thousands of words of nonsense that bind you not to replace its software with another vendor's code, to use the company's own service depots, etc etc. This garbage novella of legalese implicates trademark law, copyright, patent, and "paracopyrights" like the anticircumvention rule defined by Section 1201 of the DMCA:

https://www.eff.org/press/releases/eff-lawsuit-takes-dmca-section-1201-research-and-technology-restrictions-violate

Then there's the store that sold you the ebook: it has its own soporific, cod-legalese nonsense that you must parse; this can be longer than the book itself, and it has been exquisitely designed by the world's best-paid, best-trained lawyer to liquefy the brains of anyone who attempts to read it. Nothing will save you once your brains start leaking out of the corners of your eyes, your nostrils and your ears – not even converting the text to a brilliant graphic novel:

https://memex.craphound.com/2017/03/03/terms-and-conditions-the-bloviating-cruft-of-the-itunes-eula-combined-with-extraordinary-comic-book-mashups/

Even having Bob Dylan sing these terms will not help you grasp them:

https://pluralistic.net/2020/10/25/musical-chairs/#subterranean-termsick-blues

The copyright nonsense that accompanies an ebook transcends mere Newtonian physics – it exists in a state of quantum superposition. For you, the buyer, the copyright nonsense appears as a license, which allows the seller to add terms and conditions that would be invalidated if the transaction were a conventional sale. But for the author who wrote that book, the copyright nonsense insists that what has taken place is a sale (which pays a 25% royalty) and not a license (a 50% revenue-share). Truly, only a being capable of surviving after being smeared across the multiverse can hope to embody these two states of being simultaneously:

https://pluralistic.net/2022/06/21/early-adopters/#heads-i-win

But the challenge isn't over yet. Once you have grasped the permissions and restrictions placed upon you by your device and the app that sold you the ebook, you still must brave the publisher's license terms for the ebook – the final boss that you must overcome with your last hit point and after you've burned all your magical items.

This is by no means unique to reading a book. This bites us on the job, too, at every level. The McDonald's employee who uses a third-party tool to diagnose the problems with the McFlurry machine is using a gadget whose mere existence constitutes a jailable felony:

https://pluralistic.net/2021/04/20/euthanize-rentier-enablers/#cold-war

Meanwhile, every single biotech researcher is secretly violating the patents that cover the entire suite of basic biotech procedures and techniques. Biotechnicians have a folk-belief in "patent fair use," a thing that doesn't exist, because they can't imagine that patent law would be so obnoxious as to make basic science into a legal minefield.

IP is a perfect storm: it touches everything we do, and no one understands it.

Or rather, almost no one understands it. A small coterie of lawyers have a perfectly fine grasp of IP law, but most of those lawyers are (very well!) paid to figure out how to use IP law to screw you over. But not every skilled IP lawyer is the enemy: a handful of brave freedom fighters, mostly working for nonprofits and universities, constitute a resistance against the creep of IP into every corner of our lives.

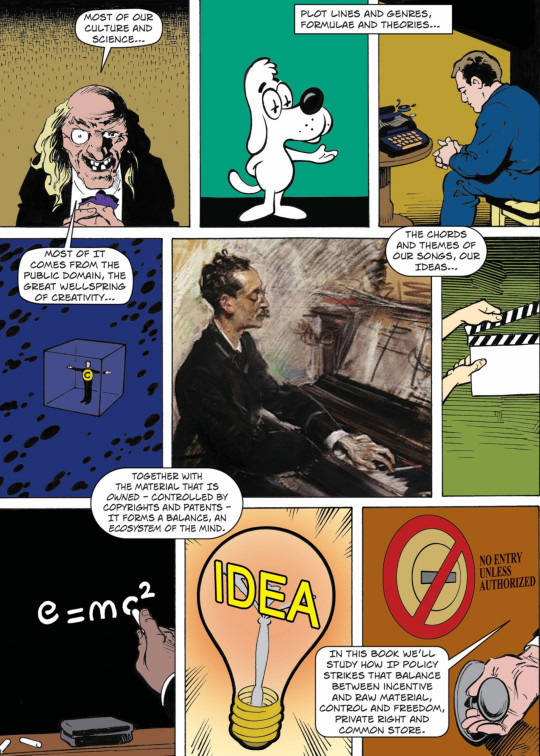

Two of my favorite IP freedom fighters are Jennifer Jenkins and James Boyle, who run the Duke Center for the Public Domain. They are a dynamic duo, world leading demystifiers of copyright and other esoterica. They are the creators of a pair of stunningly good, belly-achingly funny, and extremely informative graphic novels on the subject, starting with the 2008 Bound By Law, about fair use and film-making:

https://www.dukeupress.edu/Bound-by-Law/

And then the followup, THEFT! A History of Music:

https://web.law.duke.edu/musiccomic/

Both of which are open access – that is to say, free to download and share (you can also get handsome bound print editions made of real ink sprayed on real vegetable pulp!).

Beyond these books, Jenkins and Boyle publish the annual public domain roundups, cataloging the materials entering the public domain each January 1 (during the long interregnum when nothing entered the public domain, thanks to the Sonny Bono Copyright Extension Act, they published annual roundups of all the material that should be entering the public domain):

https://pluralistic.net/2023/12/20/em-oh-you-ess-ee/#sexytimes

This year saw Mickey Mouse entering the public domain, and Jenkins used that happy occasion as a springboard for a masterclass in copyright and trademark:

https://pluralistic.net/2023/12/15/mouse-liberation-front/#free-mickey

But for all that Jenkins and Boyle are law explainers, they are also law professors and as such, they are deeply engaged with minting of new lawyers. This is a hard job: it takes a lot of work to become a lawyer.

It also takes a lot of money to become a lawyer. Not only do law-schools charge nosebleed tuition, but the standard texts set by law-schools are eye-wateringly expensive. Boyle and Jenkins have no say over tuitions, but they have made a serious dent in the cost of those textbooks. A decade ago, the pair launched the first open IP law casebook: a free, superior alternative to the $160 standard text used to train every IP lawyer:

https://web.archive.org/web/20140923104648/https://web.law.duke.edu/cspd/openip/

But IP law is a moving target: it is devouring the world. Accordingly, the pair have produced new editions every couple of years, guaranteeing that their free IP law casebook isn't just the best text on the subject, it's also the most up-to-date. This week, they published the sixth edition:

https://web.law.duke.edu/cspd/openip/

The sixth edition of Intellectual Property: Law & the Information Society – Cases & Materials; An Open Casebook adds sections on the current legal controversies about AI, and analyzes blockbuster (and batshit) recent Supreme Court rulings like Vidal v Elster, Warhol v Goldsmith, and Jack Daniels v VIP Products. I'm also delighted that they chose to incorporate some of my essays on enshittification (did you know that my Pluralistic.net newsletter is licensed CC Attribution, meaning that you can reprint and even sell it without asking me?).

(On the subject of Creative Commons: Boyle helped found Creative Commons!)

Ten years ago, the Boyle/Jenkins open casebook kicked off a revolution in legal education, inspiring many legals scholars to create their own open legal resources. Today, many of the best legal texts are free (as in speech) and free (as in beer). Whether you want to learn about trademark, copyright, patents, information law or more, there's an open casebook for you:

https://pluralistic.net/2021/08/14/angels-and-demons/#owning-culture

The open access textbook movement is a stark contrast with the world of traditional textbooks, where a cartel of academic publishers are subjecting students to the scammiest gambits imaginable, like "inclusive access," which has raised the price of textbooks by 1,000%:

https://pluralistic.net/2021/10/07/markets-in-everything/#textbook-abuses

Meanwhile, Jenkins and Boyle keep working on this essential reference. The next time you're tempted to make a definitive statement about what IP permits – or prohibits – do yourself (and the world) a favor, and look it up. It won't cost you a cent, and I promise you you'll learn something.

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/07/30/open-and-shut-casebook/#stop-confusing-the-issue-with-relevant-facts

Image:

Cryteria (modified)

Jenkins and Boyle

https://web.law.duke.edu/musiccomic/

CC BY-NC-SA 4.0

https://creativecommons.org/licenses/by-nc-sa/4.0/

#pluralistic#jennifer jenkins#james boyle#ip#law#law school#publishing#open access#scholarship#casebooks#copyright#copyfight#gen ai#ai#warhol

177 notes

·

View notes

Text

So, a tiny cool project that didn't have dick to do with generative AI (In that it was a lot simpler, but still useful) got bullied off the internet because the books it used to gather its data were pirated and people got pissy.

And like, while I previously didn't have much to say about this wrt AI except "Maybe we should just chill," I kinda realize that the backlash against this can lead into a valuable object lesson.

Because like, I've heard a lot of people suggest in response to well-informed folks like @chromegnomes saying "Anything we could do against AI would have a negative effect on fair use" that "Oh, well we should just make those laws apply to AI then."

And I can understand the mindset, cutting the gordian knot and all that. But the thing is, we tried that technique of "It doesn't count if it's on a computer" before. With online filesharing. And it fucked over everyone except the megacorps.

The right to "first sale," the right that lets you share movies/music/ect you bought was basically destroyed in the digital realm due to fears of stuff like Napster torpedoing the music industry.

The catastrophic consequences of this on preservation have been numerous as you are no doubt aware, but the most relevant one is that that destruction of "first sale" is currently being used to destroy the Internet Archive's online library in favor of the publishing industry's predatory ebook pricing/lending model, even with them being very careful to try and avoid it.

Note that a lot of the biggest names in anti-AI-art such as Jon Lam, Karla Oritz and Neil Turkewitz have been directly cheering on the attempts to kill the Archive, but that's neither here nor there.

But, imagine if the anti-AI-art laws, exempting anything using AI from protection by fair use, end up killing projects like this one.

Further, imagine if something like the Wayback Machine ends up using AI as a means of improving its operations in vital and important ways and some assholes like; say; Facebook or Google decide to sue to kill it.

Don't be fooled. There are better ways to defend your livelihood as an artist than putting the copyright noose around another neck.

30 notes

·

View notes