#Account Aggregation Service

Text

There are differences between UPI and Account Aggregator

Imagine having a central platform where you can look up all of your financial assets: savings accounts, fixed deposits, investment plans, pension savings, insurance premiums and more, all at the same time. There is no need to log in to different platforms and download financial information from each one; you get simple access and a single view of your financial situation.

Because of the Account Aggregator framework, this is no longer restricted to the realms of imagination.

The idea for Account Aggregator was conceived by the Reserve Bank of India to make it easier to access and share financial information. In simpler terms, it acts as a "data bridge" between different participants in the financial industry.

The Account Aggregator framework is changing the way financial data is shared. According to experts, it is likely to replicate the enormous success of UPI.

There is plenty of common ground between UPI and Account Aggregator, but it's important to understand the distinction, since the two concepts solve different problems.

This blog is designed to help you understand the differences between Account Aggregator and UPI.

What exactly is UPI, and what problems does it address?

Unified Payments Interface (UPI) is a mobile-based electronic payments system that allows you to transfer funds between bank accounts using your mobile phone.

One of the most important benefits of UPI is that it allows immediate, real-time transactions without disclosing bank account details. This makes it a safe, swift and simple payment method. You don't have to carry cash, a debit card or a credit card, which makes it easier to make transactions on the move.

The benefits of UPI aren't limited to transferring money between accounts. Through UPI you can seamlessly pay for your utilities or recharge your mobile phone. You can also perform quick and secure transactions on e-commerce platforms, pay insurance premiums, invest in mutual funds, and make payments using barcodes. The possibilities are numerous, and this makes UPI an incredibly flexible and well-loved payment option for a wide range of applications.

What is Account Aggregator, and how does it help solve problems?

Account Aggregator was created by the Reserve Bank of India (RBI) to make it easier to exchange information between Financial Information Providers (FIPs) and Financial Information Users (FIUs) with the consent of the customer.

Account Aggregator lets you easily access and review financial data from various sources, such as account balances, stocks, tax information, insurance policies, investments and more, on one screen. This comprehensive view of your financial assets makes them easier to manage and allows better-informed decision making.

Account aggregation also allows the secure exchange of financial data with financial institutions. This makes it simpler to sign up for, transact with, and combine a variety of financial services. Use cases for Account Aggregator are vast, ranging from getting a loan, to working with wealth management professionals to organize and improve investment portfolios, to detecting potential fraud and reducing risk.
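The consent-based flow between FIPs and FIUs can be pictured with a small sketch. This is an illustration only, not the real Account Aggregator API: the class names, the `Consent` fields and the sample data types are all hypothetical, and the sketch leaves out encryption and everything else a production system would need. It shows just one idea: data moves from providers to a user only for the data types, and for the FIU, that the customer has consented to.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Consent:
    """A customer's permission for one FIU to read some data types (hypothetical shape)."""
    customer_id: str
    fiu_id: str
    data_types: frozenset
    expires_on: date


class FinancialInformationProvider:
    """Stands in for a bank or insurer that holds the customer's data."""

    def __init__(self, records):
        # records maps customer_id -> {data_type: value}
        self._records = records

    def fetch(self, customer_id, data_types):
        held = self._records.get(customer_id, {})
        return {t: held[t] for t in data_types if t in held}


class AccountAggregator:
    """Relays data from FIPs to an FIU, but only under a valid consent."""

    def __init__(self, fips):
        self._fips = fips

    def share(self, consent, fiu_id, requested_types, today):
        if consent.fiu_id != fiu_id:
            raise PermissionError("consent was granted to a different FIU")
        if today > consent.expires_on:
            raise PermissionError("consent has expired")
        # Only data types that are both requested and consented to are shared.
        allowed = set(requested_types) & consent.data_types
        merged = {}
        for fip in self._fips:
            merged.update(fip.fetch(consent.customer_id, allowed))
        return merged


# Hypothetical sample data: one bank and one insurer holding data for one customer.
bank = FinancialInformationProvider(
    {"cust-1": {"savings_balance": 52000, "fixed_deposit": 100000}}
)
insurer = FinancialInformationProvider({"cust-1": {"insurance_premium": 1200}})
aa = AccountAggregator([bank, insurer])

consent = Consent(
    customer_id="cust-1",
    fiu_id="lender-A",
    data_types=frozenset({"savings_balance", "insurance_premium"}),
    expires_on=date(2030, 1, 1),
)

# The lender asks for three data types but only has consent for two of them.
view = aa.share(
    consent,
    "lender-A",
    ["savings_balance", "fixed_deposit", "insurance_premium"],
    today=date(2025, 1, 1),
)
```

Here `view` contains the savings balance and the insurance premium but not the fixed deposit, because the customer never consented to sharing it.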

The differences between UPI and Account Aggregator are stark.

Integration with other financial institutions

UPI is an instant payment method that allows money transfers between two accounts. This means that its infrastructure is connected only to banks. Account Aggregator, however, has a greater scope, since its use and impact can be extended to all financial institutions as well as all four regulatory bodies.

The focus area

Both UPI and Account Aggregator are digital public infrastructures, but this is where the similarity ends. UPI is primarily concerned with the 'transfer of funds', whereas Account Aggregator is specifically focused on the 'transfer of financial information'.

The UPI infrastructure connects only to banks. AA connects every kind of financial institution, including banks, NBFCs, insurance companies, broking businesses, CRAs and more, which makes it much broader in application and scope.

Authority to govern

National Payments Corporation of India (NPCI), a not-for-profit organization established through the Government of India, regulates UPI transactions. It also sets the standards and guidelines that govern how the system is used. NPCI ensures the safety and security of UPI transactions, in addition to promoting the expansion and use of electronic payments across India. In contrast, Account Aggregators are authorized by the RBI and are expected to conform to the various rules and regulations which the RBI has established to encourage responsible and fair behavior. RBI regulations ensure that the privacy and security of customers are protected, and that banks are committed to ethical lending policies. Sahamati additionally plays an important role in strengthening and promoting the Account Aggregator ecosystem. Sahamati is an industry alliance that functions as a self-organized body to help facilitate coordination between all the players in the Account Aggregator community. The alliance establishes the fundamental rules and an ethical code for the entire community.

#Sahamati Account Aggregator#Account Aggregator Rbi#Account Aggregation Apps#Account Aggregator Nbfc#Rbi Account Aggregator#Account Aggregation Service#Account Aggregator Vendors#Yodlee Account Aggregation#Financial Account Aggregators#Mint Account Aggregation#Plaid Account Aggregation#Nbfc Account Aggregators#Best Account Aggregation App#Bank Account Aggregator App#Tink Account Aggregation

1 note


Text

Credit Underwriting Process and the 5 C’s Of It

Lending to individuals or businesses is a risky, tough decision. It involves credit risk decisions, operational processes, regulatory adherence to KYC norms, fraud checks and more, which together result in the final decision on approval or decline and on the loan amount.

Previously, lending decisions were made manually, which made the process high-risk, costly, and laborious.

Nowadays, banks and other financial institutions utilize credit reports generated through an automated system to underwrite loan processing. Credit scores are generated through an automated system that uses algorithms to give each applicant a score. The system then uses scores for decisioning to provide loans, credit card approvals, or mortgage pre-approval.

Credit underwriting and assessment follow a systematic, transparent process, resulting in an authentic, cost-effective, and faster assessment.

The process of underwriting is as follows:

Acceptance of application from the applicant.

Intake and review of documents (balance sheet, KYC, bank balance sheets, stability report).

Pre-sanction visit by the Credit Officer (Personal Discussion), if required.

Check for the Defaulter list, Credit Score check, Verification, etc.

Creation of Credit Approval Memo.

Preparing & Analyzing the financial report.

Evaluating the proposal.

Approval/sanction of a proposal by the financial institution.

Final Review of Documentation, Contracts, and Agreements.

Credit/Loan Disbursement.

The 5 C’s of Credit Underwriting

The 5 C’s of the underwriting process are Character, Conditions, Capital, Capacity, & Collateral. These are the criteria to determine whether to sanction credit or not.

Character (Credit History) — It is simply the trustworthiness of the borrower to repay the amount. It is perhaps the most difficult of the Five C’s to quantify, but probably the most important. To assess creditworthiness, customers’ financial details are studied with the help of automated software. Past credit history, repayment with other lenders, credit scores, and reports are also taken into consideration to determine the “Character”.

Conditions — Conditions include other information that helps determine whether one qualifies for the credit and on what terms. They answer the question of how the borrower is planning to use the credit amount. A lender may be more willing to lend money for a specific purpose than a personal loan that can be used for anything. Lenders also consider external factors such as the economy, central interest rates, and industry trends before providing credit. This allows lenders to evaluate the risk involved.

Capacity (Cash Flow) — The borrower’s ability to repay the credit applied for. The underwriter looks at the income sources, which determine the capacity to service all financial obligations. The primary source of repayment is often salaried income. The secondary source of repayment would be a spouse’s income, rental income, or investment income.

Collateral — Collateral is the security provided to the lender for the credit. Providing collateral helps in securing the loan or credit card if one's creditworthiness alone is not enough to qualify. The asset provided as collateral depends on the type of credit one has applied for. Secured loans and secured credit cards are considered less risky for lenders, and they are helpful for people who are establishing, building, or rebuilding credit.

Once all the components of underwriting are evaluated, the underwriter provides a recommendation for approval.

Credit Assessment and Robotic Transformation (CART) brings automation to the credit assessment and underwriting process. It is an advanced tool that uses algorithms built on Artificial Intelligence (AI) and Machine Learning (ML) to produce automated results.

CART’s versatile features such as Bank Statement Analysis, ITR Analytics, GSTR, Salary Slips, & Account Aggregator Framework help in a thorough and detailed assessment of financial statements and details. The combined features analyze almost every section of the financial details which helps in generating accurate credit scores and thereby helps in making a better and less risky lending decision—a part of the credit underwriting process.

CART and its features help financial institutions carry out credit underwriting easily and effectively by making them less reliant on manual underwriting processes.

#cart#fintech#novel patterns#account aggregator#bfsi#Financial Services#banking#finance#Bank statement analyzer#Credit underwriting

0 notes

Text

What is the Difference Between Bank Fees and Merchant Fees?

#What is the Difference Between Bank Fees and Merchant Fees?#merchant services#merchant account fees#does your merchant services raise rates and fees#merchant account pricing#merchant fees#merchant account rates#discover the secret of merchant services provider#difference between business bank account and merchant account#merchant one how much is the fees per credit card transaction#merchant account#difference between traditional merchant accounts and payment aggregators#what is a merchant account#what is a merchant accounts

1 note


Text

#account aggregator#finserve#aa#api integration#technology service provider'#fintech#rbi#digital platforms#financial institutions#digital signature

0 notes

Text

How do Account Aggregation Services make Financial Management Easy?

The account aggregator (AA) framework facilitates sharing of financial and other information on a real-time basis and in a data-blind manner between different regulated entities. Anumati is a secure and fast account aggregation service that allows you to view all your bank accounts.

0 notes

Text

Hypothetical Decentralised Social Media Protocol Stack

if we were to dream up the Next Social Media from first principles we face three problems. one is scaling hosting, the second is discovery/aggregation, the third is moderation.

hosting

hosting for millions of users is very very expensive. you have to have a network of datacentres around the world and mechanisms to sync the data between them. you probably use something like AWS, and they will charge you an eye-watering amount of money for it. since it's so expensive, there's no way to break even except by either charging users to access your service (which people generally hate to do) or selling ads, the ability to intrude on their attention to the highest bidder (which people also hate, and go out of their way to filter out). unless you have a lot of money to burn, this is a major barrier.

the traditional internet hosts everything on different servers, and you use addresses that point you to that server. the problem with this is that it responds poorly to sudden spikes in attention. if you self-host your blog, you can get DDOSed entirely by accident. you can use a service like cloudflare to protect you but that's $$$. you can host a blog on a service like wordpress, or a static site on a service like Github Pages or Neocities, often for free, but that broadly limits interaction to people leaving comments on your blog and doesn't have the off-the-cuff passing-thought sort of interaction that social media does.

the middle ground is forums, which used to be the primary form of social interaction before social media eclipsed them, typically running on one or a few servers with a database + frontend. these are viable enough, often they can be run with fairly minimal ads or by user subscriptions (the SomethingAwful model), but they can't scale indefinitely, and each one is a separate bubble. mastodon is a semi-return to this model, with the addition of a means to use your account on one bubble to interact with another ('federation').

the issue with everything so far is that it's an all-eggs-in-one-basket approach. you depend on the forum, instance, or service paying its bills to stay up. if it goes down, it's just gone. and database-backend models often interact poorly with the internet archive's scraping, so huge chunks won't be preserved.

scaling hosting could theoretically be solved by a model like torrents or IPFS, in which every user becomes a 'server' for all the posts they download, and you look up files using hashes of the content. if a post gets popular, it also gets better seeded! an issue with that design is archival: there is no guarantee that stuff will stay on the network, so if nobody is downloading a post, it is likely to get flushed out by newer stuff. it's like link rot, but it happens automatically.
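a toy sketch of that idea, to make it concrete. it's not BitTorrent or IPFS, just the core trick: a post's address is the SHA-256 hash of its bytes, and anyone who downloads a post keeps a copy and can serve it. `Peer`, `fetch` and `seeders` are names made up for this example, and the 'network' is just a list of peers in memory.

```python
import hashlib


class Peer:
    """One user on the network; holds whatever posts they have downloaded."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}  # content hash -> bytes

    def add(self, data: bytes) -> str:
        # The address of a post is the hash of its content.
        key = hashlib.sha256(data).hexdigest()
        self.blocks[key] = data
        return key


def fetch(key, downloader, swarm):
    """Find `key` on any peer, verify it, and keep a copy so `downloader` seeds it too."""
    for peer in swarm:
        data = peer.blocks.get(key)
        # Re-hashing means we don't have to trust the peer that served the data.
        if data is not None and hashlib.sha256(data).hexdigest() == key:
            downloader.blocks[key] = data
            return data
    raise KeyError(f"no peer in the swarm holds {key}")


def seeders(key, swarm):
    """Names of the peers currently able to serve `key`."""
    return [p.name for p in swarm if key in p.blocks]


alice, bob, carol = Peer("alice"), Peer("bob"), Peer("carol")
swarm = [alice, bob, carol]

post = alice.add(b"hello from the next place")
before = seeders(post, swarm)   # only alice has it
fetch(post, bob, swarm)         # bob reads the post...
after = seeders(post, swarm)    # ...and is now a second source for it
```

every reader adds a source, which is the 'popular posts get better seeded' property. nothing in here keeps an unpopular post alive, though.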

IPFS solves this by 'pinning': you order an IPFS node (e.g. your server) not to flush a certain file so it will always be available from at least one source. they've sadly mixed this up in cryptocurrency, with 'pinning services' which will take payment in crypto to pin your data. my distaste for a technology designed around red queen races aside, I don't know how pinning costs compare to regular hosting costs.
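here's roughly what pinning means, as a sketch. this is not how IPFS implements it (real nodes have their own garbage collection rules); it's a made-up `Node` class with a fixed capacity that throws out the least recently stored blocks when it's full, unless they're pinned.

```python
from collections import OrderedDict


class Node:
    """A storage node with limited space and a set of pinned keys (hypothetical)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()  # key -> data, oldest first
        self.pinned = set()

    def store(self, key, data):
        self.blocks[key] = data
        self.blocks.move_to_end(key)  # mark as most recent
        self._evict()

    def pin(self, key):
        if key not in self.blocks:
            raise KeyError(key)
        self.pinned.add(key)

    def _evict(self):
        # Drop the oldest unpinned blocks until we fit again.
        # If everything is pinned, the node simply stays over capacity.
        for key in list(self.blocks):
            if len(self.blocks) <= self.capacity:
                break
            if key not in self.pinned:
                del self.blocks[key]


node = Node(capacity=2)
node.store("my-old-post", b"2009 was a good year")
node.pin("my-old-post")

node.store("a", b"newer stuff")
node.store("b", b"even newer stuff")   # over capacity: "a" goes, the pinned post stays
node.store("c", b"newest stuff")       # over capacity again: "b" goes

remaining = list(node.blocks)
```

so `remaining` ends up as the pinned post plus whatever arrived last. the catch is the same one as before: somebody has to run that node and pay for the disk.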

theoretically you could build a social network on a backbone of content-based addressing. it would come with some drawbacks (posts would be immutable, unless you use some indirection to a traditional address-based hosting) but i think you could make it work (a mix of location-based addressing for low-bandwidth stuff like text, and content-based addressing for inline media). in fact, IPFS has the ability to mix in a bit of address-based lookup into its content-based approach, used for hosting blogs and the like.

as for videos - well, BitTorrent is great for distributing video files. though I don't know how well that scales to something like Youtube. you'd need a lot of hard drive space to handle the amount of Youtube that people typically watch and continue seeding it.

aggregation/discovery

the next problem is aggregation/discovery. social media sites approach this problem in various ways. early social media sites like LiveJournal had a somewhat newsgroup-like approach, you'd join a 'community' and people would post stuff to that community. this got replaced by the subscription model of sites like Twitter and Tumblr, where every user is simultaneously an author and a curator, and you subscribe to someone to see what posts they want to share.

this in turn got replaced by neural network-driven algorithms which attempt to guess what you'll want to see and show you stuff that's popular with whatever it thinks your demographic is. that's gotta go, or at least not be an intrinsic part of the social network anymore.

it would be easy enough to replicate the 'subscribe to see someone's recommended stuff' model, you just need a protocol for pointing people at stuff. (getting analytics such as like/reblog counts would be more difficult!) it would probably look similar to RSS feeds: you upload a list of suitably formatted data, and programs which speak that protocol can download it.
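a rough sketch of what that could look like. the feed format here is invented for the example (a JSON document with an `author` and a list of `items`, each with a `posted` timestamp and a `link`), it's not RSS or any existing protocol. each person publishes one of these documents; your client downloads the ones you subscribe to and merges them into a timeline.

```python
import json


def parse_feed(document: str):
    """Turn one published feed document into a flat list of entries."""
    feed = json.loads(document)
    return [
        {"author": feed["author"], "posted": item["posted"], "link": item["link"]}
        for item in feed["items"]
    ]


def build_timeline(documents):
    """Merge every subscribed feed into one list, newest first."""
    entries = [entry for doc in documents for entry in parse_feed(doc)]
    # ISO 8601 timestamps in the same timezone sort correctly as plain strings.
    return sorted(entries, key=lambda e: e["posted"], reverse=True)


# Two hypothetical feeds, as they might be downloaded from two different people.
feed_a = json.dumps({
    "author": "alice",
    "items": [
        {"posted": "2024-05-01T10:00:00Z", "link": "hash-of-alices-essay"},
        {"posted": "2024-05-03T09:30:00Z", "link": "hash-of-a-post-alice-reblogged"},
    ],
})
feed_b = json.dumps({
    "author": "bob",
    "items": [
        {"posted": "2024-05-02T18:45:00Z", "link": "hash-of-bobs-drawing"},
    ],
})

timeline = build_timeline([feed_a, feed_b])
links = [entry["link"] for entry in timeline]
```

the `link` values are stand-ins for wherever the post actually lives, for instance a content hash. note that there's nowhere in this design to count likes or reblogs, since nobody sees all the feeds at once.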

the problem of discovery - ways to find strangers who are interested in the same stuff you are - is more tricky. if we're trying to design this as a fully decentralised, censorship-resistant network, we face the spam problem. any means you use to broadcast 'hi, i exist and i like to talk about this thing, come interact with me' can be subverted by spammers. either you restrict yourself entirely to spreading across a network of curated recommendations, or you have to have moderation.

moderation

moderation is one of the hardest problems of social networks as they currently exist. it's both a problem of spam (the posts that users want to see getting swamped by porn bots or whatever) and legality (they're obliged to remove child porn, beheading videos and the like). the usual solution is a combination of AI shit - does the robot think this looks like a naked person - and outsourcing it to poorly paid workers in (typically) African countries, whose job is to look at reports of the most traumatic shit humans can come up with all day and confirm whether it's bad or not.

for our purposes, the hypothetical decentralised network is a protocol to help computers find stuff, not a platform. we can't control how people use it, and if we're not hosting any of the bad shit, it's not on us. but spam moderation is a problem any time that people can insert content you did not request into your feed.

possibly this is where you could have something like Mastodon instances, with their own moderation rules, but crucially, which don't host the content they aggregate. so instead of having 'an account on an instance', you have a stable address on the network, and you submit it to various directories so people can find you. by keeping each one limited in scale, it makes moderation more feasible. this is basically Reddit's model: you have topic-based hubs which people can subscribe to, and submit stuff to.

the other moderation issue is that there is no mechanism in this design to protect from mass harassment. if someone put you on the K*w*f*rms List of Degenerate Trannies To Suicidebait, there'd be fuck all you can do except refuse to receive contact from strangers. though... that's kind of already true of the internet as it stands. nobody has solved this problem.

to sum up

primarily static sites 'hosted' partly or fully on IPFS and BitTorrent

a protocol for sharing content you want to promote, similar to RSS, that you can aggregate into a 'feed'

directories you can submit posts to which handle their own moderation

no ads, nobody makes money off this

honestly, the biggest problem with all this is mostly just... getting it going in the first place. because let's be real, who but tech nerds is going to use a system that requires you to understand fuckin IPFS? until it's already up and running, this idea's got about as much hope as getting people to sign each others' GPG keys. it would have to have the sharp edges sanded down, so it's as easy to get on the Hypothetical Decentralised Social Network Protocol Stack as it is to register an account on tumblr.

but running over it like this... I don't think it's actually impossible in principle. a lot of the technical hurdles have already been solved. and that's what I want the Next Place to look like.

246 notes


Text

Let Me Read Into This Incorrectly

The Bias Blinders are FULLY on, so this won't make sense at all unless you're deep in the dirkjohn swamp

I propose the following:

IF the Breath is the aspect of freedom and individuality

AND Heart is the aspect of identity and introspection

IF the Heir is the class of embodiment in the personal sense, embodiment for the benefit of an individual

AND the Prince is the class of destruction in the personal sense, to destroy through or to destroy in relation to the self

THEN an Heir of Breath inverts to Knight of Heart and Prince of Heart inverts to Maid of Breath

Which I believe are part of the ultimate forms of John and Dirk respectively

In the way Heir and Prince are connotated with royalty - or more generically, wealth and power, Maid and Knight are connotated with service, with providing for the benefit of others. Heir and Prince classes are both singular, buying into the so-called Hero's Journey (and the monomyth which isn't actually a thing). Whereas, a Maid and Knight are communal - they fuss a lot more with everyone as a whole.

This actually plays well with the different takes on villainhood brought on by Jane and Dirk. Jane took over a whole planet in service for the people she felt obligated to care for, maintaining a communal stake. Dirk took a spaceship, some select people, but it's compact - it's efficient - it's not a sprawling civil war, maintaining only antagonists with personal stakes.

So how do I loop this back to Dirk and John? Well, if the Ultimate Self is an aggregate of all instances of themselves in Paradox Space, then these should include the complete opposites of themselves.

A response to Candy John's follies is by learning to see the people around him as people, learning to start taking into account their wants and needs, learning to serve in accordance with a better awareness of their personhood - such is the role of a Knight of Heart

A response to Meat Dirk's follies is by learning to let go and accept that which he cannot change, learning to cater another's need to be as they are, learning to provide that sanctuary for sincerity - such is the role of a Maid of Breath

This isn't to say that these inverted versions are the Best versions of who they are.

Like a KnightofHeart!John could seek to comfort his community because he's too afraid of conflict and pain, burying problems in the name of peace like how HeirofBreath!John does in the name of his so-called personal stability

A MaidofBreath!Dirk could likewise create the same divisions in his community by creating too many clashes (drama) between people, encouraging conflict for the sake of freedom of expression like how PrinceofHeart!Dirk encourages conflict for the sake of creating a very specific storyline

(Also the way a MaidofBreath and KnightofHeart could work so well together to strike the sweet spot of maintaining communal comfort while encouraging individuality)

It's more like looking at yourself through various funhouse mirrors, seeing different parts of yourself exemplified, and learning the way it's all somehow still you. That's what the Ultimate Self is (or should be), this constant, persistent conversation with yourself and with others vis a vis your perception of yourself

(Shoutout to Everything Everywhere All At Once, the only movie in existence, they demonstrated and executed this idea so much better and in a single storyline too)

tl;dr This is How Dirk and John Can Grow By Learning From Each Other, And Also Why They Should Kiss

#homestuck#dirk strider#john egbert#dirkjohn#side note I think blood and time are complimentary#this is not just because i like davekat#said while wearing the biggest bias blinders#this is totally not inspired by how i read too many dirkjohn fics#wherein theyre loving partners#and the way who they are when theyre with each other#translates well into their inverse classes

41 notes


Text

Jonathan Cohn at HuffPost:

The first-ever negotiations between the federal government and pharmaceutical companies have led to agreements that will lower the prices of 10 treatments, reducing costs for the Medicare program and for some individual seniors, the Biden administration announced early Thursday morning.

This round of negotiations began in 2023 and took place because of the Inflation Reduction Act, the law that Democrats in Congress passed on a party-line vote and that President Joe Biden signed two years ago. The new prices are for drugs covering a variety of conditions, including diabetes and inflammatory illnesses, and are set to take effect in January 2026.

The negotiation process is going to happen each year, with a new set of drugs each time. If all goes to plan, that means the scope of drugs subject to negotiated prices will grow each year, while the savings will accumulate.

“When these lower prices go into effect, people on Medicare will save $1.5 billion in out-of-pocket costs for their prescription drugs and Medicare will save $6 billion in the first year alone,” Biden said in a prepared statement, citing figures that analysts at the U.S. Department of Health and Human Services calculated and published on Thursday. “It’s a relief for the millions of seniors that take these drugs to treat everything from heart failure, blood clots, diabetes, arthritis, Crohn’s disease, and more ― and it’s a relief for American taxpayers.”

Of course, those numbers refer to aggregate savings on drug spending. Figuring out what they will mean for individual Medicare beneficiaries is difficult, because so much depends on people’s individual circumstances ― like which drugs they take, or which options for prescription coverage they use.

It also depends on knowing the actual, real prices for these drugs today, after taking into account the discounts that private insurers managing Medicare drug plans extract from manufacturers. Those discounts are proprietary information that the federal government cannot release.

Great news: The Biden Administration, pharmaceutical companies, and Medicare have negotiated hefty price reductions for 10 high-cost drugs, including Januvia, FIASP, and Entresto.

#Joe Biden#Kamala Harris#Biden Administration#Prescription Drug Prices#Prescription Drugs#Medicare#Inflation Reduction Act#Senior Citizens#Policy

29 notes


Text

"THERE CAN BE a huge range of reasons why a show in 2024—this one or any other—doesn’t have the reach it deserves; endless pixels have been spilled on streamer fatigue and fractured audiences in the past few years. AMC, a darling of the prestige-TV-on-cable era, is in an especially strange position: Even when Interview’s first season was a hit on its streaming service, AMC+, it was still held up as an example of a troubled industry in transition. Two years and two Hollywood strikes later, the situation is even more complicated. As the industry restructures and changes who can watch what where, a disconnect has emerged between what viewers like and what critics do. At the same time, social media platforms—the loci of 21st-century word of mouth—continue to implode, fracturing the conversation of an already dispersed audience.

Amidst this, IWTV faces specific hurdles due to the nature of the show. An adaptation of Anne Rice’s 1976 novel that pulls heavily from the many Vampire Chronicles books that followed, the show racebends many of its leads—its titular vampire, Louis de Pointe du Lac, is now Black—and goes all in on the queerness of the books. And it is, of course, about vampires—specifically, vampires who do terrible things. “IWTV has so much that a modern audience could want from a series but, unfortunately, some people won’t receive it solely because it’s a queer horror show with majority BIPOC leads,” says Bobbi Miller, a culture critic who recaps the show on her YouTube channel. “Genre TV is always going to have to jump through more hoops for success than a standard drama.”

For the converted, the idea that more people aren’t watching Interview is maddening. One could certainly argue that the show, with its dark, twisted Gothicness and emotional maximalism, isn’t for everyone. But in an era of unceremonious cancellations—even of shows that execs touted as hits—and with an absence of information about the show’s future, it’s understandable that its most dedicated fans would be pushing for more viewers. Interview isn’t the only show whose fans question its marketing efforts; it’s a common accusation leveled at streamers of all sorts, especially when a show is canceled. But in this conversation, Interview fans pointed at specific decisions made by the network that many feel have made this season’s rollout feel so much more muted than the last.

“It’s been a conversation that fans have been talking about for a while now, but I think what really set them off was the comment made by Film Updates,” says Rei Gorrei, a fan who dubs herself the “Unofficial Vampire Chronicles Spokesperson.” A pop-culture aggregation account with nearly a million followers, Film Updates revealed they had been denied interview requests with the show’s talent—and since fans were worried no one was hearing about IWTV, they couldn’t understand why that reach wasn’t being capitalized on. “I think the combination of these things along with little marketing leaves fans in a word-of-mouth scenario where we now feel like it’s up to us to campaign for the season three renewal,” Gorrei says.

Many questioned the promotion the network had been implementing, too, like the decision to never have Anderson and Assad Zaman, whose characters’ romance is one of the main focuses of the season, interviewed together. Episode five in particular, with its explosive fight scene between the two, would have been a prime opportunity. (AMC did not respond to emails seeking comment for this story.) Other fans raised concerns about the unceremonious cancelation of the widely admired official podcast, whose Black female host, Naomi Ekperigin, felt like the perfect interviewer for a show with Black leads and nuanced racial storylines. Then there was the fact that too few episodes would air in time for Emmy consideration—not the fault of marketing, but yet one more source of fan worry."

Interview With the Vampire Fans Say the Stakes Have Never Been Higher by Elizabeth Minkel

#thought this was a really good summary of the discussions going on which those outside of Twitter circles may find interesting#hopefully the article may push AMC to address some of the concerns#(by which I don't mean getting on with announcing its renewal which I have complete confidence in)#and develop a proper sense of racial awareness#there have been several indie (YouTube channels and the like) interviews with Assad this week#Jacob has a lot of committments but it would be great to get something with both of them#Interview with the Vampire#Internet and Nerd Culture#Jagged Jottings

18 notes


Text

Podcasting "How To Think About Scraping"

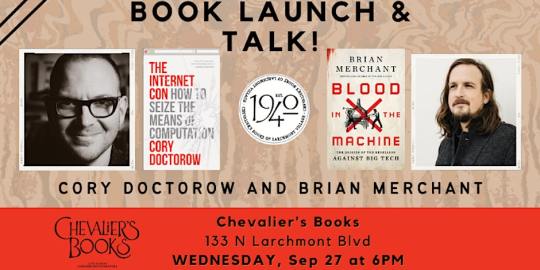

On September 27, I'll be at Chevalier's Books in Los Angeles with Brian Merchant for a joint launch for my new book The Internet Con and his new book, Blood in the Machine. On October 2, I'll be in Boise to host an event with VE Schwab.

This week on my podcast, I read my recent Medium column, "How To Think About Scraping: In privacy and labor fights, copyright is a clumsy tool at best," which proposes ways to retain the benefits of scraping without the privacy and labor harms that sometimes accompany it:

https://doctorow.medium.com/how-to-think-about-scraping-2db6f69a7e3d?sk=4a1d687171de1a3f3751433bffbb5a96

What are those benefits from scraping? Well, take computational linguistics, a relatively new discipline that is producing the first accounts of how informal language works. Historically, linguists overstudied written language (because it was easy to analyze) and underanalyzed speech (because you had to record speakers and then get grad students to transcribe their dialog).

The thing is, very few of us produce formal, written work, whereas we all engage in casual dialog. But then the internet came along, and for the first time, we had a species of mass-scale, informal dialog that was also written, and which was born in machine-readable form.

This ushered in a new era in linguistic study, one that is enthusiastically analyzing and codifying the rules of informal speech, the spread of vernacular, and the regional, racial and class markers of different kinds of speech:

https://memex.craphound.com/2019/07/24/because-internet-the-new-linguistics-of-informal-english/

The people whose speech is scraped and analyzed this way are often unreachable (anonymous or pseudonymous) or impractical to reach (because there's millions of them). The linguists who study this speech will go through institutional review board approvals to make sure that as they produce aggregate accounts of speech, they don't compromise the privacy or integrity of their subjects.

Computational linguistics is an unalloyed good, and the speakers whose words are scraped to produce the raw material that these scholars study probably wouldn't object, either.

But what about entities that explicitly object to being scraped? Sometimes, it's good to scrape them, too.

Since 1996, the Internet Archive has scraped every website it could find, storing snapshots of every page it found in a giant, searchable database called the Wayback Machine. Many of us have used the Wayback Machine to retrieve some long-deleted text, sound, image or video from the internet's memory hole.

For the most part, the Internet Archive limits its scraping to websites that permit it. The robots exclusion protocol (AKA robots.txt) makes it easy for webmasters to tell different kinds of crawlers whether or not they are welcome. If your site has a robots.txt file that tells the Archive's crawler to buzz off, it'll go elsewhere.
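For a sense of how a polite crawler applies these rules, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The robots.txt contents, the crawler names and the example.com URLs are all illustrative assumptions, not the Archive's actual configuration or user agent.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one named crawler is told to stay out entirely,
# everyone else is only barred from /private/.
ROBOTS_TXT = """\
User-agent: examplearchivebot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("examplearchivebot", "someotherbot"):
        for path in ("/news/story.html", "/private/drafts.html"):
            url = "https://example.com" + path
            print(agent, path, is_allowed(ROBOTS_TXT, agent, url))
```

Nothing in the protocol enforces the answer: a crawler that wants to ignore the file simply never asks.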

Mostly.

Since 2017, the Archive has started ignoring robots.txt files for news services; whether or not the news site wants to be crawled, the Archive crawls it and makes copies of the different versions of the articles the site publishes. That's because news sites – even the so-called "paper of record" – have a nasty habit of making sweeping edits to published material without noting it.

I'm not talking about fixing a typo or a formatting error: I'm talking about making a massive change to a piece, one that completely reverses its meaning, and pretending that it was that way all along:

https://medium.com/@brokenravioli/proof-that-the-new-york-times-isn-t-feeling-the-bern-c74e1109cdf6

This happens all the time, with major news sites from all around the world:

http://newsdiffs.org/examples/

By scraping these sites and retaining the different versions of their articles, the Archive both detects and prevents journalistic malpractice. This is canonical fair use, the kind of copying that almost always involves overriding the objections of the site's proprietor. Not all adversarial scraping is good, but this sure is.
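Once you have two snapshots of the same article, spotting a silent edit is mechanically simple. The following is a rough Python sketch using the standard library's `difflib`; the function name and the sample headlines are made up for illustration, and this is not a description of how the Archive or NewsDiffs actually do it.

```python
import difflib


def changed_lines(old: str, new: str) -> list[str]:
    """Return the lines added (+) or removed (-) between two snapshots."""
    diff = list(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile="snapshot_1", tofile="snapshot_2", lineterm="",
    ))
    # The first two entries are the ---/+++ file headers; skip them,
    # then keep only real additions and removals.
    return [line for line in diff[2:] if line.startswith(("+", "-"))]


if __name__ == "__main__":
    # Hypothetical snapshots of the same story, scraped at different times.
    before = "Senator wins praise for bill.\nThe bill passed on Tuesday."
    after = "Senator faces criticism for bill.\nThe bill passed on Tuesday."
    for line in changed_lines(before, after):
        print(line)
```

The hard part is not the diff but having the earlier snapshot at all, which is why the scraping matters.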

There's an argument that scraping the news-sites without permission might piss them off, but it doesn't bring them any real harm. But even when scraping harms the scrapee, it is sometimes legitimate – and necessary.

Austrian technologist Mario Zechner used the API from the country's super-concentrated grocery giants to prove that they were colluding to rig prices. By assembling a longitudinal data-set, Zechner exposed the raft of dirty tricks the grocers used to rip off the people of Austria.

From shrinkflation to deceptive price-cycling that disguised price hikes as discounts:

https://mastodon.gamedev.place/@badlogic/111071627182734180
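To make "longitudinal data-set" concrete, here is a small Python sketch of one check such a data-set makes possible: flagging shrinkflation, where the pack gets smaller while the price per unit goes up. The `Observation` type, the function names and the sample prices are hypothetical, and this is not Zechner's code.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    date: str     # ISO date on which the price was recorded
    price: float  # shelf price in euros
    grams: float  # package size in grams


def unit_price(obs: Observation) -> float:
    """Price in euros per 100 g."""
    return obs.price / obs.grams * 100


def flag_shrinkflation(history: list[Observation]) -> list[tuple[str, str]]:
    """Return (earlier, later) date pairs where the pack shrank
    and the unit price rose between consecutive observations."""
    flagged = []
    for prev, curr in zip(history, history[1:]):
        if curr.grams < prev.grams and unit_price(curr) > unit_price(prev):
            flagged.append((prev.date, curr.date))
    return flagged


if __name__ == "__main__":
    # Made-up history for a single product.
    history = [
        Observation("2023-01-01", 2.00, 500),
        Observation("2023-02-01", 2.00, 450),  # same price, smaller pack
        Observation("2023-03-01", 2.10, 450),  # ordinary price rise
    ]
    print(flag_shrinkflation(history))
```

A single day's price list shows none of this; the trick only becomes visible when someone keeps the history.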

Zechner feared publishing his results at first. The companies whose thefts he'd discovered have enormous power and whole kennelsful of vicious attack-lawyers they can sic on him. But he eventually got the Austrian competition bureaucracy interested in his work, and they published a report that validated his claims and praised his work:

https://mastodon.gamedev.place/@badlogic/111071673594791946

Emboldened, Zechner open-sourced his monitoring tool, and attracted developers from other countries. Soon, they were documenting ripoffs in Germany and Slovenia, too:

https://mastodon.gamedev.place/@badlogic/111071485142332765

Zechner's on a roll, but the grocery cartel could shut him down with a keystroke, simply by blocking his API access. If they do, Zechner could switch to scraping their sites – but only if he can be protected from legal liability for nonconsensually scraping commercially sensitive data in a way that undermines the profits of a powerful corporation.

Zechner's work comes at a crucial time, as grocers around the world turn the screws on both their suppliers and their customers, disguising their greedflation as inflation. In Canada, the grocery cartel – led by the guillotine-friendly hereditary grocery monopolist Galen Weston – pulled the most Les Mis-ass caper imaginable when they illegally conspired to rig the price of bread:

https://en.wikipedia.org/wiki/Bread_price-fixing_in_Canada

We should scrape all of these looting bastards, even though it will harm their economic interests. We should scrape them because it will harm their economic interests. Scrape 'em and scrape 'em and scrape 'em.

Now, it's one thing to scrape text for scholarly purposes, or for journalistic accountability, or to uncover criminal corporate conspiracies. But what about scraping to train a Large Language Model?

Yes, there are socially beneficial – even vital – uses for LLMs.

Take HRDAG's work on truth and reconciliation in Colombia. The Human Rights Data Analysis Group is a tiny nonprofit that makes an outsized contribution to human rights, by using statistical methods to reveal the full scope of the human rights crimes that take place in the shadows, from East Timor to Serbia, South Africa to the USA:

https://hrdag.org/

HRDAG's latest project is its most ambitious yet. Working with partner org Dejusticia, they've just released the largest data-set in human rights history:

https://hrdag.org/jep-cev-colombia/

What's in that dataset? It's a merger and analysis of more than 100 databases of killings, child soldier recruitments and other crimes during the Colombian civil war. Using an LLM, HRDAG was able to produce an analysis of each killing in each database, estimating the probability that it appeared in more than one database, and the probability that it was carried out by a right-wing militia, by government forces, or by FARC guerrillas.

This work forms the core of ongoing Colombian Truth and Reconciliation proceedings, and has been instrumental in demonstrating that the majority of war crimes were carried out by right-wing militias who operated with the direction and knowledge of the richest, most powerful people in the country. It also showed that the majority of child soldier recruitment was carried out by these CIA-backed, US-funded militias.

This is important work, and it was carried out at a scale and with a precision that would have been impossible without an LLM. As with all of HRDAG's work, this report and the subsequent testimony draw on cutting-edge statistical techniques and skilled science communication to bring technical rigor to some of the most important justice questions in our world.

LLMs need large bodies of text to train them – text that, inevitably, is scraped. Scraping to produce LLMs isn't intrinsically harmful, and neither are LLMs. Admittedly, nonprofits using LLMs to build war crimes databases do not justify even 0.0001% of the valuations that AI hypesters ascribe to the field, but that's their problem.

Scraping is good, sometimes – even when it's done against the wishes of the scraped, even when it harms their interests, and even when it's used to train an LLM.

But.

Scraping to violate people's privacy is very bad. Take Clearview AI, the grifty, sleazy facial recognition company that scraped billions of photos in order to train a system that they sell to cops, corporations and authoritarian governments:

https://pluralistic.net/2023/09/20/steal-your-face/#hoan-ton-that

Likewise: scraping to alienate creative workers' labor is very bad. Creators' bosses are ferociously committed to firing us all and replacing us with "generative AI." Like all self-declared "job creators," they constantly fantasize about destroying all of our jobs. Like all capitalists, they hate capitalism, and dream of earning rents from owning things, not from doing things.

The work these AI tools do sucks, but that doesn't mean our bosses won't try to fire us and replace us with them. After all, prompting an LLM may produce bad screenplays, but at least the LLM doesn't give you lip when you order it to give you "ET, but the hero is a dog, and there's a love story in the second act and a big shootout in the climax." Studio execs already talk to screenwriters like they're LLMs.

That's true of art directors, newspaper owners, and all the other job-destroyers who can't believe that creative workers want to have a say in the work they do – and worse, get paid for it.

So how do we resolve these conundra? After all, the people who scrape in disgusting, depraved ways insist that we have to take the good with the bad. If you want accountability for newspaper sites, you have to tolerate facial recognition, too.

When critics of these companies repeat these claims, they are doing the companies' work for them. It's not true. There's no reason we couldn't permit scraping for one purpose and ban it for another.

The problem comes when you try to use copyright to manage this nuance. Copyright is a terrible tool for sorting out these uses; the limitations and exceptions to copyright (like fair use) are broad and varied, but so "fact intensive" that it's nearly impossible to say whether a use is or isn't fair before you've gone to court to defend it.

But copyright has become the de facto regulatory default for the internet. When I found someone impersonating me on a dating site and luring people out to dates, the site advised me to make a copyright claim over the profile photo – that was their only tool for dealing with this potentially dangerous behavior.

The reasons that copyright has become our default tool for solving every internet problem are complex and historically contingent, but one important point here is that copyright is alienable, which means you can bargain it away. For that reason, corporations love copyright, because it means that they can force people who have less power than the company to sign away their copyrights.

This is how we got to a place where, after 40 years of expanding copyright (scope, duration, penalties), we have an entertainment sector that's larger and more profitable than ever, even as creative workers' share of the revenues their copyrights generate has fallen, both proportionally and in real terms.

As Rebecca Giblin and I write in our book Chokepoint Capitalism, in a market with five giant publishers, four studios, three labels, two app platforms and one ebook/audiobook company, giving creative workers more copyright is like giving your bullied kid extra lunch money. The more money you give that kid, the more money the bullies will take:

https://chokepointcapitalism.com/

Many creative workers are suing the AI companies for copyright infringement for scraping their data and using it to train a model. If those cases go to trial, it's likely the creators will lose. The questions of whether making temporary copies or subjecting them to mathematical analysis infringe copyright are well-settled:

https://www.eff.org/deeplinks/2023/04/ai-art-generators-and-online-image-market

I'm pretty sure that the lawyers who organized these cases know this, and they're betting that the AI companies did so much sleazy shit while scraping that they'll settle rather than go to court and have it all come out. Which is fine – I relish the thought of hundreds of millions in investor capital being transferred from these giant AI companies to creative workers. But it doesn't actually solve the problem.

Because if we do end up changing copyright law – or the daily practice of the copyright sector – to create exclusive rights over scraping and training, it's not going to get creators paid. If we give individual creators new rights to bargain with, we're just giving them new rights to bargain away. That's already happening: voice actors who record for video games are now required to start their sessions by stating that they assign the rights to use their voice to train a deepfake model:

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

But that doesn't mean we have to let the hyperconcentrated entertainment sector alienate creative workers from their labor. As the WGA has shown us, creative workers aren't just LLCs with MFAs, bargaining business-to-business with corporations – they're workers:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Workers get a better deal with labor law, not copyright law. Copyright law can augment certain labor disputes, but just as often, it benefits corporations, not workers:

https://locusmag.com/2019/05/cory-doctorow-steering-with-the-windshield-wipers/

Likewise, the problem with Clearview AI isn't that it infringes on photographers' copyrights. If I took a thousand pictures of you and sold them to Clearview AI to train its model, no copyright infringement would take place – and you'd still be screwed. Clearview has a privacy problem, not a copyright problem.

Giving us pseudocopyrights over our faces won't stop Clearview and its competitors from destroying our lives. Creating and enforcing a federal privacy law with a private right of action will. It will put Clearview and all of its competitors out of business, instantly and forever:

https://www.eff.org/deeplinks/2019/01/you-should-have-right-sue-companies-violate-your-privacy

AI companies say, "You can't use copyright to fix the problems with AI without creating a lot of collateral damage." They're right. But what they fail to mention is, "You can use labor law to ban certain uses of AI without creating that collateral damage."

Facial recognition companies say, "You can't use copyright to ban scraping without creating a lot of collateral damage." They're right too – but what they don't say is, "On the other hand, a privacy law would put us out of business and leave all the good scraping intact."

Taking entertainment companies and AI vendors and facial recognition creeps at their word is helping them. It's letting them divide and conquer people who value the beneficial elements and those who can't tolerate the harms. We can have the benefits without the harms. We just have to stop thinking about labor and privacy issues as individual matters and treat them as the collective endeavors they really are:

https://pluralistic.net/2023/02/26/united-we-stand/

Here's a link to the podcast:

https://craphound.com/news/2023/09/24/how-to-think-about-scraping/

And here's a direct link to the MP3 (hosting courtesy of the Internet Archive; they'll host your stuff for free, forever):

https://archive.org/download/Cory_Doctorow_Podcast_450/Cory_Doctorow_Podcast_450_-_How_To_Think_About_Scraping.mp3

And here's the RSS feed for my podcast:

http://feeds.feedburner.com/doctorow_podcast

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/25/deep-scrape/#steering-with-the-windshield-wipers

Image:

syvwlch (modified)

https://commons.wikimedia.org/wiki/File:Print_Scraper_(5856642549).jpg

CC BY-SA 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

#pluralistic#cory doctorow#podcast#scraping#internet archive#wga strike#sag-aftra strike#wga#sag-aftra#labor#privacy#facial recognition#clearview ai#greedflation#price gouging#fr#austria#computational linguistics#linguistics#ai#ml#artificial intelligence#machine learning#llms#large language models#stochastic parrots#plausible sentence generators#hrdag#colombia#human rights

80 notes


Text

So I used a personal finance management app that aggregates all the accounts I have in one place. The company that owns it shut it down at the end of the last year and transitioned it to another site they own. It does absolutely fucking nothing that I need it to do, which includes:

show me balances from all my connected accounts

track spending

sort/recategorize/tag transactions

set budgets

Literally the only functionality it retains is the ability to sell me shit. Which I was fine with (lights gotta stay on somehow) when I got use out of the site, but now it has been enshittified.

The other thing this experience has reminded me of is you should find a service that aggregates all your bank accounts. Empower is the one I'm using because it's got the same functionality as Mint, but there are other options*. Your bank/credit union might also offer a similar service.

It's a lot like using a password manager--it's a giant pain to set up initially, but it will ultimately make your life so much easier. All your account balances in one place** so you don't have to log in to each individual site to check! A unified view of your finances!

It is, of course, not a solution for not having enough money, but clarity on your purchases and subscriptions can help you identify things you don't want/need, as well as overcharges and discrepancies.

I know better money management is a popular new year's resolution, and this is a pretty easy step towards that. You don't have to add all your accounts at once. But I find it satisfying to see the picture become clearer. Also, graphs.

--

* Search "mint pfm alternatives" if you want to know more. Most options I found were paid but maybe you're a person who would shell out for useful features. Monarch looks amazing for people with shared finances.

** It's totally safe. I deal with this shit for a living. I can explain more if you want but it's boring.

26 notes


Note

In terms of just the lost deposits, bank collapses don't affect most people due to deposit insurance. This seems to be set at $250k in the US. There are other concerns like individuals' liquidity, and systemic risk. But it seems like in cases of bank collapse with no systemic risk, most people are financially better off not bailing it out, as it means more of the loss lies with the well off.

this isn't true! TL;DR it probably used to be true, but for ex in the case of SVB, there were a number of payroll providers in the bank, and if they go under, every person who works for one of the companies that uses those payroll providers can't get paid:

When Rippling’s bank recently went under, there was substantial risk that paychecks would not arrive at the employees of Rippling’s customers. Rippling wrote a press release whose title mostly contains the content: “Rippling calls on FDIC to release payments due to hundreds of thousands of everyday Americans.”

Prior to the FDIC et al’s decision to entirely back the depositors of the failed bank, the amount of coverage that the deposit insurance scheme provided depositors was $250,000 and the amount it afforded someone receiving a paycheck drawn on the dead bank was zero dollars and zero cents.

This is not a palatable result for society. Not politically, not as a matter of policy, not as a matter of ethics.

Every regulator sees the world through a lens that was painstakingly crafted over decades. The FDIC institutionally looks at this fact pattern and sees this as a single depositor over the insured deposit limit. It does not see 300,000 bounced paychecks.

Payroll providers are the tip of the iceberg for novel innovations in financial services over the last few decades. There exist many other things which society depends on which map very poorly to “insured account” abstraction. This likely magnifies the likely aggregate impact of bank failures, and makes some of our institutional intuitions about their blast radius wrong in important ways.
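The arithmetic behind that mismatch is easy to sketch in Python. The $250,000 limit and the 300,000 paychecks come from the passage above; the average paycheck size is a made-up assumption, and treating the limit as a flat cap on a single account is a simplification of the real deposit insurance rules.

```python
INSURANCE_LIMIT = 250_000  # per-depositor limit cited above, in dollars


def insured_amount(balance: int) -> int:
    """Portion of a single depositor's balance covered by the limit."""
    return min(balance, INSURANCE_LIMIT)


def uninsured_amount(balance: int) -> int:
    """Portion of a single depositor's balance above the limit."""
    return max(balance - INSURANCE_LIMIT, 0)


if __name__ == "__main__":
    paychecks = 300_000        # figure from the quoted passage
    average_paycheck = 2_000   # hypothetical, in dollars
    payroll_balance = paychecks * average_paycheck

    print("Balance held for payroll:", payroll_balance)
    print("Insured:", insured_amount(payroll_balance))
    print("Uninsured:", uninsured_amount(payroll_balance))
```

Under these assumptions the payroll provider is one depositor with one limit, so nearly all of the money owed to employees sits above it.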

61 notes


Text

Hello, this is the Oldie Chinese Diaspora Anon™️

Anon here voiced a frustration that I think we all share, from time to time. The commentators have brought up some very important points, but I think it may be important to show you a fuller picture. It’s not just about a concept of freedom, but what it can actually mean.

First of all, to say there are few, or no incidences of fraud in the Chinese BJD community is objectively false. The infamous Baidu BJD Tucao Bar and its more updated counterpart on Weixin exist for three reasons – it’s a place to vent within a community, a place where two sides of a dispute can come together to share their side in a court of public opinion and finally, to call out and expose scammers. The following examples are all in Chinese, of course – but how else to show you what’s really going on unless you actually see for yourself? Now, the extent of what’s considered a “scam” is stretched far and wide, but included are common complaints about how individual indie artists have inconsistent pre-orders (https://tieba.baidu.com/p/8126795911 ), long wait times (http://c.tieba.baidu.com/p/8446099713 ), resin from these indie artists that is brittle/streaky/full of bubbles (https://tieba.baidu.com/p/7657831519 ), as well as horrid communication with customer service (https://tieba.baidu.com/p/7216544824 ).

Other scammers have:

Sold counterfeits as real: http://c.tieba.baidu.com/p/6319214040

Attempted to claw back prices after receiving a second-hand doll (usually banking on the seller being afraid of bad feedback) http://c.tieba.baidu.com/p/8422233115

Scams that occur during trades (didn’t send anything, send trash instead of dolls, recalled a package while still receiving something from the sender, etc) https://tieba.baidu.com/p/6138333817

Running away with down-payments: https://tieba.baidu.com/p/7546265316

Cheaters trading across different fandoms: https://tieba.baidu.com/p/6237611363

What’s the use of this “social credit system” if it doesn’t really stop the scamming, then?

I think I may have mentioned this earlier; the point of a social credit system is more about controlling a population’s aggregate behaviour than the behaviour of individuals. It’s there to quash dissent instead of keeping a community crime-free. Think about it this way, BJD collectors are a small, niche market with a high price tag. And if the Chinese government is lax enough to allow rampant counterfeit foods and medication to go on for years (https://www.youtube.com/watch?v=hIpA_RwEtLE&t=33s and https://www.youtube.com/watch?v=GYr87XCAa48 and https://www.bbc.com/news/world-asia-china-56080092 ), would the system really protect a small group of rather privileged individuals? You might ask, “Hey, OCDA, aren’t these people caught? That means the system works, right?” The answer is, “Yes, and they are just the tip of the iceberg. There are plenty more of these people who were never caught.” I think we have all heard that just because something’s immoral doesn’t mean it’s illegal. Legality is never the bottom line that we should hold each other accountable to, but these folks get by banking on their immorality never being caught, and unless they are caught, what they do isn’t illegal.

On the other hand, the government is quick to stop anything that could cause a social stir. No matter how big the topic might be (human trafficking, public corruption, the existence of COVID, you name it), if there was too much interest in a topic that the government deemed “unsuitable” (if it gives you an answer at all), it can be rubbed out of social media overnight. This is possible because 1. There’s only one kind of social media, which is closely monitored by the State and 2. There’s the Great Firewall of China and scaling the wall can incur a fine and sometimes even probation. The person who started the whole furor may be invited by the police for a talking-to (or just disappeared, either way.)

BJDs are small fry, we know that, so where do minor “scams” lie? Yes, it is inherently frustrating as a consumer to realise that one cannot enforce trade laws across borders or hold non-registered sellers under the same scrutiny as a registered company. I understand that personally as a victim of a bungled preorder as well. But this so-called “Social Credit” system is never the way to go. Not even Black Mirror or Minority Report can replicate the reality that’s inside China, folks. The truth is worse than fiction.

~Anonymous

20 notes


Text

DM Hutchins 2nd Research Project - 5-5-24

~ The DM Hutchins 2nd Digital Occult Library Research Project ~

The DM Hutchins 2nd Digital Occult Library Research Project is the public end of my personal collection of research materials which I have aggregated over the past 20 years, and which cover a plethora of subject matter. Other than to educate myself, I aim to collect, preserve, share, and discuss, these occulted materials, which are valuable to the alchemical process, and ending the state of human slavery. Most specifically, it is my aim to collect valuable research materials, before the content is censored from the internet. I aim to organize and preserve those research materials, both online and offline. I aim to share these research materials, via links to my backups on the MEGA service, and by way of mailing physical HDD or SSD study drives, as well as customized media devices such as readers, tablets, MP3 players, and so on. It is my aim to discuss those most valuable research materials, as everyone is welcome to contact me, and to assist in future updates to the Library. Thus far my research material totals nearly 5 TB, with the Configuration Gifts totaling 4 TB, and the public backups on MEGA totaling an eventual 2 TB.

~ Public Backups of my Research Materials ~

My research material is divided into two primary categories, which are Online Backups and In-Hand Study Drives. My Online Backups are hosted on MEGA. MEGA is the data storage and sharing platform upon which my personal research material backups are available to the public. MEGA does impose a bandwidth limitation on how much you can download per hour, however, using a VPN to change your IP occasionally, allows you to download data from MEGA indefinitely.

Please note that you can View, Read, Watch, and Listen, to all of the research materials in your browser, or the MEGA app, without the need to download anything, however, if you would like to download a personal backup of the research materials, you may download them to your computer or phone, and if you also have a MEGA account, you can cloud transfer materials directly from my account to your own account, provided you have the storage space. I highly recommend downloading all meaningful research materials, on personal offline devices, due to the nature of internet censorship. It is YOUR personal responsibility to Collect, Preserve, Know, and Teach, this information.

I intend to eventually make 2 TB of my personal research materials available on MEGA. Each of the five links below takes you to an individual collection, according to its content and format. Obviously, it will take time to upload 2 TB of backup files, so check back in often to see what has been added since you last visited. My progress will be indicated by the number of GB listed beside each section. If you see (Complete) next to a section, that section is fully backed up, and nothing more will be added to it. I have currently uploaded 507.42 GB out of 2 TB as of 4-21-24.

1 - DMH2ND Digital Occult Library - PDF & EPUB

Complete - 100.86 GB - 718 Folders - 21,171 Files.

2 - DMH2ND Digital Occult Library - Audiobook

123.85 GB of 446 GB - 307 Folders - 6339 Files.

3 - DMH2ND Digital Occult Library - Audio Lectures, Series

Complete - 167.55 GB - 1246 Folders - 13,116 Files.

4 - DMH2ND Digital Occult Library - Audio Podcast

203.86 GB of 1 TB - 19 Folders - 2959 Files.

5 - DMH2ND Digital Occult Library - Photo Library

Complete - 13.3 GB - 499 Folders - 28,899 Files.

~ Configuration Gifts ~

Of course, certain files are only included within Storage Drives and Personalized Devices via Configuration Gifts. In the event that you'd like to own a Hard Drive, Solid State Drive, or Personal Device, configured into a "Study Station", meaning that it has been loaded with part, or all, of the DM Hutchins 2nd Digital Occult Library Research Project, please have a look at my post explaining Configuration Gifts.

~ Contact Information ~

[email protected] - My primary public email for information exchange.

Facebook - A Government Social Media platform which I use primarily to tell willful slaves to eat shit for timeless eternity.

Twitter - I use Twitter mainly for its chat feature. I have no idea what is trending, nor do I care. Use this only as a means of communication.

Tumblr - Tumblr is a platform intended for Artist and Writers focused upon the occult and personal mastery. Much to be gained here.

Reddit - An overly complicated group of know-nothings using admin buttons to be correct online, because they are idiot failures in real life...

YouTube - I do not post on YouTube, I merely have an account. If you are a YouTuber you can contact me in that manner.

BitChute - On this account I post random and occasional audio rants.

~ Support Methods ~

If you find value in my efforts, and these research materials have been beneficial to your studies and personal development, please consider assisting in my ability to continue this work.

One Time And Occasional Personal Donations

Paypal - My Paypal

Venmo - My Venmo

CashApp - My CashApp

GPay - My Google Pay

Shop On My Online Storefronts

Bonfire - My freedom themed tee shirts/hoodies.

Lulu - My printed poetry and essay publications.

Technological Needs

Without question, my greatest needs are technological in nature. I simply do not possess the means to purchase the various electronics required so as to manage and distribute Study Drives and Configuration Gifts, on the level that I'm striving for. I also require portable equipment in order to share large amounts of research materials in person, rather than via mail. If you would like to assist me in acquiring the technology necessary to continue this work, consider donating items from the Amazon Wishlist below.

1 - Cables and Adapters -

To Charge And Transfer Data Across A Range of Devices.

2 - Flashdrives and SD Cards -

To Manage And Transfer Data Across A Range of Devices.

3 - Hard Drives and Solid State Drives -

To Preserve Research Materials With Redundant Copies.

4 - Battery Banks and Chargers -

To Charge and Maintain Multiple Devices With Portability.

5 - Audio Podcasting Gear -

Basic On-The-Go Voice Recording Equipment.

~ And A Very Special Thank You ~

A very special Thank You to Paolo Tonolo, Dion Plowman, Dawn Lavandowski and Gordon H Cairns, (And all of you who wish not to be mentioned) for your continual contributions of content and personal time, to this massive and ever growing research project. You are each true Brothers, Sisters, and Warriors, in the Battle for Truth. Much Love and Respect to You and Yours...

5 notes


Text

Exciting news from Sony this evening, with the announcement that not only is a new Sly Cooper game at last in the works, it will be the first video game created entirely by AI.

The move was unveiled by Sony CEO Robert Sony Jr., who is battling ongoing accusations from shareholders that he is running the company his father founded into the ground. No journalists were invited to the press event, with Sony instead speaking the details into an automatic speech-to-text tool which was then improved with Grammarly(r) and emailed out to outlets that his Microsoft Outlook account considered to be relevant.

"AI has made impressive strides in muscling living, breathing humans out of various artistic fields permanently. With these programs now handling your dumb hobbies, you don't need to waste your time churning out paintings or poems or whatever and can now focus on your office job sixty hours a week," Sony reminded us.

To that end, every element of the upcoming Sly Cooper game will be procedurally generated from aggregated data pools. The series' famous art style will be briskly assembled via machine learning. Sly and friends will speak in recreations of their original actors' voices, uncanny both in their undeniable recognisability and their stilted, inhuman cadences. Their dialogue will be generated based on neural-network analysis of their previous adventures. Given the relatively short length of the series, however, Sony will also draw from other sources to fill out this last dataset, primarily Marvel movies and Sonic the Hedgehog games. That just happened - way past cool!

"Granted, that's the easy part," conceded Sony. "AI tools can easily replace writers, artists, and voice actors, but gameplay is another question. How hard could it really be, though? Code is code."

It's been a rough year for Sony financially, as the media and electronics giant only made a net profit of eleven billion dollars - far, far short of projected potential earnings of eleven and a half billion dollars. Sony has admitted this shortfall is unacceptable, firing seven thousand employees as a corrective measure. He hopes this experiment could turn his fortunes around.

"Nate Fox. Dev Madan. Kevin Miller, Matt Olsen, Chris Murphy, and whoever voices Carmelita," said Sony. "All of these are people we no longer need to pay. Hell, we didn't even pay the robot. What does it care? It's not like it's in a union. 100% of the profits will be going to the true backbone of society: faceless men in suits who used their pre-existing wealth to buy the legal rights to things. Like me!"

Once the AI generates the script, gameplay, art assets, voice lines, and any new characters, it will then blend these elements together in what Sony assures investors will most certainly be a video game.

"Just buy the damn thing," he concluded, prematurely loosening his tie. "If you love Sly Cooper, the only way to show it is to give me, the current rights holder, money. Do you kids still like NFTs? We can put an NFT in it too. That's not worth its own press conference though."

Sly Cooper: A Thief is a Person Who Takes Another Person's Property or Services Without Consent will be on sale, like, next week.

#april fools#fake news#listen. LISTEN#I swore that I would enter ''sly cooper game cover'' into some shitty online AI program and use the first result#but HOLY FUCK I WASN'T READY#the fear-laughter this image invoked in me was intense#anyway hope you enjoyed checking in with my oc Robert Sony Junior

Text

“Stocks closed higher today amid brisk trading…” On the radio, television, in print and online, news outlets regularly report trivial daily changes in stock market indices, providing a distinctly slanted perspective on what matters in the economy. Except when they shift suddenly and by a large margin, the daily vagaries of the market are not particularly informative about the overall health of the economy. They are certainly not an example of news most people can use. Only about a third of Americans own stock outside of their retirement accounts and about one in five engage in stock trading on a regular basis. And yet the stock market’s minor fluctuations make up a standard part of economic news coverage.

But what if journalists reported facts more attuned to the lives of everyday Americans? For instance, facts like “in one month, the richest 25,000 Americans saw their wealth grow by an average of nearly $10 million each, compared to just $200 apiece for the bottom 50% of households”? Thanks to innovative new research strategies from leading economists, we now have access to inequality data in much closer to real time. Reporters should be making use of it.

The outsized attention to the Dow Jones and Nasdaq is part of a larger issue: class bias in media coverage of the economy. A 2004 analysis of economic coverage in the Los Angeles Times found that journalists “depicted events and problems affecting corporations and investors instead of the general workforce.” While the media landscape has shifted since 2004, with labor becoming a “hot news beat,” this shift alone seems unlikely to correct the media’s bias. This is because, as an influential political science study found, biased reporting comes from the media’s focus on aggregates in a system where growth is not distributed equally; when most gains go to the rich, overall growth is a good indicator of how the wealthy are doing, but a poor indicator of how the non-rich are doing.

In other words, news is shaped by the data on hand. Stock prices are minute-by-minute information. Other economic data, especially about inequality, are less readily available. The Bureau of Labor Statistics releases data on job growth once a month, and that often requires major corrections. Data on inflation also become available on a monthly basis. Academic studies on inequality use data from the Census Bureau or the Internal Revenue Service, which means information is months or even years out of date before it reaches the public.

But the landscape of economic data is changing. Economists have developed new tools that can track inequality in near real-time:

From U.C. Berkeley, Realtime Inequality provides monthly statistics and even daily projections of income and wealth inequality, all with a fun interactive interface. You can see the latest data and also parse long-term trends. For instance, over the past 20 years, the top 0.01% of earners have seen their real income nearly double, while the bottom 50% of Americans have seen their real income decline.

The State of U.S. Wealth Inequality from the St. Louis Fed provides quarterly data on racial, generational, and educational wealth inequality. The Fed data reminds us, for example, that Black families own about 25 cents for every $1 of white family wealth.

While these sources do not update at the speed of a stock ticker, they represent a massive step forward in access to a more timely, accurate, and complete understanding of economic conditions.

Would more reporting on inequality change public attitudes? That is an open question. A few decades ago, political scientists found intriguing correlations between media coverage and voters’ economic assessments, but more recent analyses suggest that media coverage “does not systematically precede public perceptions of the economy.” Nonetheless, especially given the vast disparities in economic fortune that have developed in recent decades, it is the responsibility of reporters to present data that gives an accurate and informative picture of the economy as it is experienced by most people, not just by those at the top.

And these data matter for all kinds of political judgments, not just public perspectives on the economy. When Americans are considering the Supreme Court’s recent decision on affirmative action, for example, it is useful to know how persistent racial disparities remain in American society; white high school dropouts have a greater median net worth than Black and Hispanic college graduates. Generational, racial, and educational inequality structure the American economy. It’s past time that the media’s coverage reflected that reality, rather than wasting Americans’ time on economic trivia of the day.
