#AppSec


Text

An Overview of Burp Suite: Acquisition, Features, Utilisation, Community Engagement, and Alternatives.

Introduction:

Burp Suite is one of the most widely used web application security testing tools among cybersecurity professionals and ethical hackers. Developed by PortSwigger, it provides tools for crawling, scanning, and exploiting vulnerabilities in web applications.

What is Burp Suite?

Burp Suite is an integrated toolkit for security testing of web applications. It helps testers detect vulnerabilities such as SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF).

Steps in Obtaining Burp Suite

Burp Suite is available for download on the PortSwigger official website. It is available in three versions:

Community Edition (Free)

Professional Edition (Subscription-Based)

Enterprise Edition (For Organisations)

Important Tools in Burp Suite

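Proxy – Intercepts traffic between the browser and the target application.

Spider – Crawls web application content (in Burp Suite 2.x and later, crawling is part of Burp Scanner rather than a separate tool).

Scanner – Scans automatically for vulnerabilities (Pro only).

Intruder – Automates customised attacks such as fuzzing and brute forcing.

Repeater – Manually edits and resends individual requests.

Decoder – Encodes and decodes data.

Comparer – Compares HTTP requests and responses.

Extender – Adds extensions through the BApp Store (labelled Extensions in recent releases).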

How to Use Burp Suite

Configure your browser to send traffic through Burp Proxy (the default listener is 127.0.0.1:8080).

Capture and modify HTTP/S requests.

Use tools such as Repeater and Intruder to test individual requests.

Review server responses for signs of vulnerabilities.

Export reports for audit purposes.
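
The first step above is not limited to browsers: a script can also send its traffic through Burp Proxy. Below is a minimal Python sketch, assuming Burp is listening on its default address of 127.0.0.1:8080. The helper names `burp_proxies` and `build_burp_opener` are made up for this illustration and are not part of Burp Suite.

```python
import urllib.request


def burp_proxies(host: str = "127.0.0.1", port: int = 8080) -> dict:
    """Return a proxy mapping that points HTTP and HTTPS traffic at Burp.

    The host and port are assumptions: they match Burp's default proxy
    listener, so change them if your listener is configured differently.
    """
    proxy_url = f"http://{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


def build_burp_opener(host: str = "127.0.0.1", port: int = 8080):
    """Build a urllib opener whose requests are routed through Burp Proxy."""
    handler = urllib.request.ProxyHandler(burp_proxies(host, port))
    return urllib.request.build_opener(handler)


# Example usage (requires Burp to be running with its proxy listener active):
#   opener = build_burp_opener()
#   with opener.open("http://example.com/", timeout=10) as response:
#       print(response.status)
```

Requests sent this way should show up in Burp's HTTP history like browser traffic. For HTTPS targets the script would also need to trust Burp's CA certificate, which this sketch leaves out.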

Burp Suite Community

Burp Suite has a highly engaged worldwide user base of security experts. The PortSwigger Forum and GitHub repositories host discussions, plugins, and tutorials, and many experts contribute through YouTube, blogs, and courses.

Alternatives to Burp Suite

If you're searching for alternatives, then look at:

OWASP ZAP (Open Source)

Acunetix

Netsparker

Nikto

Wfuzz

Conclusion:

Burp Suite is widely used for web application security testing. Mastery of Burp Suite is one step towards web application security for both novice and professional ethical hackers.

#BurpSuite#CyberSecurity#EthicalHacking#PenTesting#BugBounty#InfoSec#WebSecurity#SecurityTools#AppSec#OWASP#HackingTools#TechTools#WhiteHatHacker#CyberTools#BurpSuiteCommunity#NetworkSecurity#PortSwigger#WebAppTesting#SecurityScanner#CyberAwareness

0 notes

Text

Mobile and third-party risk: How legacy testing leaves you exposed

Legacy security testing leaves mobile apps vulnerable to third-party risks. Without deeper binary analysis, attackers can exploit blind spots in the software supply chain. https://www.reversinglabs.com/blog/mobile-and-third-party-risk-how-legacy-testing-leaves-you-expose

0 notes

Text

What is Web Application Security Testing?

Web Application Security Testing, also known as Web AppSec, checks whether web applications are vulnerable to attack. It combines automated and manual tests with different methodologies to identify and mitigate security risks in a web application.

#WebApplicationSecurity#SecurityTesting#CyberSecurity#AppSec#PenetrationTesting#VulnerabilityAssessment#InfoSec#SecureDevelopment#WebSecurity#QAandTesting

0 notes

Text

6 types of application security testing you need to know

Application security testing is a critical component of modern software development, ensuring that applications are robust and resilient against malicious attacks. As cyber threats continue to evolve in complexity and frequency, the need to integrate comprehensive security measures throughout the SDLC has never been more essential. Traditional pentesting…


#AppSec#BreachLock#Cyber security#cybersecurity#Application security#Penetration testing

0 notes

Text

rock around the security flaw

youtube

dual life - rock and security flaws

0 notes

Text

How to use OWASP Security Knowledge Framework | CyberSecurityTV

youtube

Learn how to harness the power of the OWASP Security Knowledge Framework with expert guidance on CyberSecurityTV! 🔒 Dive into the world of application security and sharpen your defenses. Get ready to level up your cybersecurity game with this must-watch video!

#OWASP#SecurityKnowledge#CyberSecurity#InfoSec#WebSecurity#AppSec#LearnSecurity#HackProof#OnlineSafety#SecureYourApps#CyberAware#Youtube

0 notes


Text

PHP is so god awful. My favorite concept from the security perspective is magic hashes. When you have a hash that begins with 0e followed only by digits (you can also have an arbitrary number of zeroes at the beginning), PHP's native support for scientific notation means it gets evaluated as zero, unless a strict comparison is used.

So md5(240610708) is equal to 0e462097431906509019562988736854 which will then be interpreted as zero when doing a loose comparison ("==", double equal signs are loose comparisons in PHP). This can lead to an assortment of unexpected behavior, especially considering how hashing is used in many login functionalities.

This can be resolved by using strict comparisons (in PHP this is done with "===", triple equal signs), but if you didn't know about that difference because you come from a more sane language then bam you could have created a critical vulnerability without knowing it!

This is something that I love to encounter in the wild and if you think salting fixes this, think again! It's not difficult to find your own magic hashes if you know the salt.
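
To make the behaviour concrete, here is a small Python sketch that imitates the comparison. It is a simplified model and not PHP's real comparison algorithm: `php_loose_equals` only handles the "0e followed by digits" case described above, and both helper names are invented for this illustration. The second input, "QNKCDZO", is another commonly cited magic-hash input for MD5.

```python
import hashlib
import re

# A "magic hash": one or more zeroes, then "e", then digits only.
# Read as scientific notation, that is zero times a power of ten, i.e. zero.
MAGIC_HASH = re.compile(r"0+e[0-9]+")


def md5_hex(text: str) -> str:
    """Return the hex MD5 digest of a string."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def is_magic_hash(digest: str) -> bool:
    """True if the whole digest looks like zero in scientific notation."""
    return MAGIC_HASH.fullmatch(digest) is not None


def php_loose_equals(a: str, b: str) -> bool:
    """Simplified model of PHP's "==" for two hash strings.

    Assumption: only the magic-hash case is modelled. Two magic hashes
    both evaluate to zero, so they compare equal even though the
    strings differ. Everything else falls back to string equality.
    """
    if is_magic_hash(a) and is_magic_hash(b):
        return True
    return a == b


first = md5_hex("240610708")
second = md5_hex("QNKCDZO")

print(first)                            # digest of the first input
print(second)                           # digest of the second input
print(php_loose_equals(first, second))  # loose comparison: treated as equal
print(first == second)                  # strict comparison: different strings
```

In PHP itself the fix is the strict "===" comparison mentioned above.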

Read more here:

Also... the dollar signs make PHP awful to read.

infinite respect for php for still having cursed shit im finding out about it even after working with it daily for 4 years

16 notes


Text

youtube

How To Generate Secure PGP Keys | CyberSecurityTV

🌟In the previous episodes we learned about encryption and decryption. Today, I will show you a couple of methods to generate PGP keys, and we will look at some of the attributes we need to configure in order to generate a secure key. Once you have the key, we will also see how to use it to exchange information securely.
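
As one possible illustration of configuring key attributes, the Python sketch below writes a parameter file for GnuPG's unattended key generation. This is not necessarily the method shown in the video. The specific values (RSA, 4096 bits, one-year expiry, a 2048-bit floor) are assumptions chosen for the example, and the helper name `gpg_batch_parameters` is hypothetical.

```python
def gpg_batch_parameters(name: str, email: str,
                         key_length: int = 4096,
                         expire: str = "1y") -> str:
    """Render a GnuPG unattended key-generation parameter file.

    All defaults here are illustrative assumptions, not recommendations
    taken from the video. Passphrase handling is deliberately left out.
    """
    if key_length < 2048:
        # Assumed floor for this sketch: refuse obviously short RSA keys.
        raise ValueError("RSA key length below 2048 bits is too short")
    lines = [
        "Key-Type: RSA",
        f"Key-Length: {key_length}",
        "Subkey-Type: RSA",
        f"Subkey-Length: {key_length}",
        f"Name-Real: {name}",
        f"Name-Email: {email}",
        f"Expire-Date: {expire}",
        "%commit",
    ]
    return "\n".join(lines) + "\n"


# Placeholder identity; replace with real values before use.
print(gpg_batch_parameters("Alice Example", "alice@example.com"))
```

Saved to a file, the output could then be passed to `gpg --batch --generate-key <file>`.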

#owasptop10#webapppentest#appsec#applicationsecurity#apitesting#apipentest#cybersecurityonlinetraining#freesecuritytraining#penetrationtest#ethicalhacking#burpsuite#pentestforbegineers#Youtube

0 notes

Text

Application Security : CSRF

Cross-Site Request Forgery allows an attacker to capture or modify information from an app you are logged in to by exploiting your authentication cookies.

First thing to know: use HTTP methods carefully. For instance, GET should be a safe method with no side effects. Otherwise, simply opening an email or loading a page can trigger the exploit of an app vulnerability.

PortSwigger has a nice set of labs for understanding CSRF vulnerabilities: https://portswigger.net/web-security/csrf

Use of CSRF protections in web frameworks

Nuxt

Based on express-csurf. I am not certain of potential vulnerabilities. The token is set in a header, and the secret used to validate the token is stored in a cookie.
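
The token-plus-secret idea can be sketched in a framework-agnostic way. The Python below is only an illustration of the general pattern, not the actual express-csurf or Nuxt implementation. All function names, the token format, and the choice of HMAC-SHA256 are assumptions made for this example.

```python
import hashlib
import hmac
import secrets

# Methods that should have no side effects and so need no CSRF token.
SAFE_METHODS = {"GET", "HEAD", "OPTIONS"}


def requires_csrf_check(method: str) -> bool:
    """State-changing methods (POST, PUT, DELETE, ...) must carry a token."""
    return method.upper() not in SAFE_METHODS


def new_secret() -> str:
    """Per-session secret; in the pattern above it would live in a cookie."""
    return secrets.token_urlsafe(32)


def _sign(secret: str, salt: str) -> str:
    return hmac.new(secret.encode(), salt.encode(), hashlib.sha256).hexdigest()


def issue_token(secret: str) -> str:
    """Create a token the client sends back in a request header."""
    salt = secrets.token_urlsafe(8)
    return f"{salt}.{_sign(secret, salt)}"


def verify_token(secret: str, token: str) -> bool:
    """Recompute the signature from the secret and compare in constant time."""
    salt, separator, signature = token.partition(".")
    if not separator:
        return False
    expected = _sign(secret, salt)
    return hmac.compare_digest(signature.encode(), expected.encode())


secret = new_secret()
token = issue_token(secret)
print(verify_token(secret, token))        # token issued for this secret
print(verify_token(new_secret(), token))  # token checked against another secret
```

A forged cross-site request carries the victim's cookies automatically but cannot read the token it would need to put in the header, which is what makes the check useful.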

Django

0 notes

Text

Hallucination Control: Benefits and Risks of Deploying LLMs as Part of Security Processes

New Post has been published on https://thedigitalinsider.com/hallucination-control-benefits-and-risks-of-deploying-llms-as-part-of-security-processes/


Large Language Models (LLMs) trained on vast quantities of data can make security operations teams smarter. LLMs provide in-line suggestions and guidance on response, audits, posture management, and more. Most security teams are experimenting with or using LLMs to reduce manual toil in workflows. This can be both for mundane and complex tasks.

For example, an LLM can email an employee to ask whether they meant to share a proprietary document, then process the reply and produce a recommendation for a security practitioner. An LLM can also be tasked with translating requests to look for supply chain attacks on open source modules and spinning up agents focused on specific conditions (new contributors to widely used libraries, improper code patterns), with each agent primed for that specific condition.

That said, these powerful AI systems bear significant risks that are unlike other risks facing security teams. Models powering security LLMs can be compromised through prompt injection or data poisoning. Continuous feedback loops and machine learning algorithms without sufficient human guidance can allow bad actors to probe controls and then induce poorly targeted responses. LLMs are prone to hallucinations, even in limited domains. Even the best LLMs make things up when they don’t know the answer.

Security processes and AI policies around LLM use and workflows will become more critical as these systems become more common across cybersecurity operations and research. Making sure those processes are complied with, and are measured and accounted for in governance systems, will prove crucial to ensuring that CISOs can provide sufficient GRC (Governance, Risk and Compliance) coverage to meet new mandates like the Cybersecurity Framework 2.0.

The Huge Promise of LLMs in Cybersecurity

CISOs and their teams constantly struggle to keep up with the rising tide of new cyberattacks. According to Qualys, the number of CVEs reported in 2023 hit a new record of 26,447. That’s up more than 5X from 2013.

This challenge has only become more taxing as the attack surface of the average organization grows larger with each passing year. AppSec teams must secure and monitor many more software applications. Cloud computing, APIs, multi-cloud and virtualization technologies have added complexity. With modern CI/CD tooling and processes, application teams can ship more code, faster, and more frequently. Microservices have splintered monolithic apps into numerous APIs, enlarging the attack surface, and have also punched many more holes in global firewalls for communication with external services or customer devices.

Advanced LLMs hold tremendous promise to reduce the workload of cybersecurity teams and to improve their capabilities. AI-powered coding tools have widely penetrated software development. GitHub research found that 92% of developers are using or have used AI tools for code suggestion and completion. Most of these “copilot” tools have some security capabilities. In fact, programmatic disciplines with relatively binary outcomes such as coding (code will either pass or fail unit tests) are well suited for LLMs. Beyond code scanning for software development and in the CI/CD pipeline, AI could be valuable for cybersecurity teams in several other ways:

Enhanced Analysis: LLMs can process massive amounts of security data (logs, alerts, threat intelligence) to identify patterns and correlations invisible to humans. They can do this across languages, around the clock, and across numerous dimensions simultaneously. This opens new opportunities for security teams. LLMs can burn down a stack of alerts in near real-time, flagging the ones that are most likely to be severe. Through reinforcement learning, the analysis should improve over time.

Automation: LLMs can automate security team tasks that normally require conversational back and forth. For example, when a security team receives an IoC and needs to ask the owner of an endpoint whether they had in fact signed into a device, or whether they are located somewhere outside their normal work zones, the LLM can perform these simple operations and then follow up with questions, links, or instructions as required. This used to be an interaction that an IT or security team member had to conduct themselves. LLMs can also provide more advanced functionality. For example, Microsoft Copilot for Security can generate incident analysis reports and translate complex malware code into natural language descriptions.

Continuous Learning and Tuning: Unlike previous machine learning systems for security policies and comprehension, LLMs can learn on the fly by ingesting human ratings of their responses and by retuning on newer pools of data that may not be contained in internal log files. In fact, using the same underlying foundational model, cybersecurity LLMs can be tuned for different teams and their needs, workflows, or regional or vertical-specific tasks. This also means that the entire system can instantly be as smart as the model, with changes propagating quickly across all interfaces.

Risk of LLMs for Cybersecurity

As a new technology with a short track record, LLMs have serious risks. Worse, understanding the full extent of those risks is challenging because LLM outputs are not 100% predictable or programmatic. For example, LLMs can “hallucinate” and make up answers or answer questions incorrectly, based on imaginary data. Before adopting LLMs for cybersecurity use cases, one must consider potential risks including:

Prompt Injection: Attackers can craft malicious prompts specifically to produce misleading or harmful outputs. This type of attack can exploit the LLM’s tendency to generate content based on the prompts it receives. In cybersecurity use cases, prompt injection might be most risky as a form of insider attack or attack by an unauthorized user who uses prompts to permanently alter system outputs by skewing model behavior. This could generate inaccurate or invalid outputs for other users of the system.

Data Poisoning: The training data LLMs rely on can be intentionally corrupted, compromising their decision-making. In cybersecurity settings, where organizations are likely using models trained by tool providers, data poisoning might occur during the tuning of the model for the specific customer and use case. The risk here could be an unauthorized user adding bad data — for example, corrupted log files — to subvert the training process. An authorized user could also do this inadvertently. The result would be LLM outputs based on bad data.

Hallucinations: As mentioned previously, LLMs may generate factually incorrect, illogical, or even malicious responses due to misunderstandings of prompts or underlying data flaws. In cybersecurity use cases, hallucinations can result in critical errors that cripple threat intelligence, vulnerability triage and remediation, and more. Because cybersecurity is a mission critical activity, LLMs must be held to a higher standard of managing and preventing hallucinations in these contexts.

As AI systems become more capable, their information security deployments are expanding rapidly. To be clear, many cybersecurity companies have long used pattern matching and machine learning for dynamic filtering. What is new in the generative AI era are interactive LLMs that provide a layer of intelligence atop existing workflows and pools of data, ideally improving the efficiency and enhancing the capabilities of cybersecurity teams. In other words, GenAI can help security engineers do more with less effort and the same resources, yielding better performance and accelerated processes.

#2023#agent#agents#ai#AI systems#ai tools#AI-powered#alerts#Algorithms#Analysis#APIs#app#applications#AppSec#Attack surface#attackers#automation#Behavior#binary#challenge#CI/CD#CISOs#Cloud#cloud computing#code#coding#communication#Companies#complexity#compliance

0 notes

Text

Changes to CVE program are a call to action on your AppSec strategy

Changes to the CVE program signal a critical moment for AppSec strategies. It's time to modernize your approach to risk management. https://jpmellojr.blogspot.com/2025/04/changes-to-cve-program-are-call-to.html

0 notes

Text

A Complete Security Testing Guide

Web-based payroll systems, online shopping, banking, and stock trading software are not only used by businesses but are now also sold as products.

#SecurityTesting#CyberSecurity#AppSec#TestingGuide#PenetrationTesting#VulnerabilityAssessment#InfoSec#SoftwareSecurity#SecureDevelopment#QAandTesting

0 notes

Text

October 7, 2024

281/366 Days of Growth

Saturday study session and early morning. Started Python studies to improve my scripting skills.

One piece of good news is that I found a Cybersecurity mentor and a cybersec community. All because I spoke with a guy at work who is a Senior AppSec coordinator and has the same client as me (but works at another consulting company). He is awesome and introduced me to my mentor, and now I have a specific direction to go in 🤓

I have come a long way this year, and I know I still have a long way to go, but at least everything I did finally feels right.

To complete my happiness, I only want to leave my job and find a Cybersecurity position... Things there are terrible for all teams, and are just getting worse. I am praying for a new job, and studying to help make it come true.

#studyblr#study#study blog#daily life#dailymotivation#study motivation#studying#study space#productivity#study desk#matcha#stemblr#cybersecurity

33 notes


Text

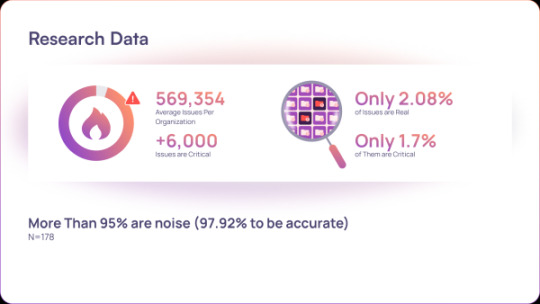

New Research Reveals: 95% of AppSec Fixes Don't Reduce Risk

Source: https://thehackernews.com/2025/05/new-research-reveals-95-of-appsec-fixes.html

More info: https://www.ox.security/ox-2025-application-security-benchmark-report

3 notes
