#Jupyterlab extension

The Best Open-Source Tools for Data Science in 2025

Data science in 2025 is thriving, driven by a robust ecosystem of open-source tools that empower professionals to extract insights, build predictive models, and deploy data-driven solutions at scale. This year, the landscape is more dynamic than ever, with established favorites and emerging contenders shaping how data scientists work. Here’s an in-depth look at the best open-source tools that are defining data science in 2025.

1. Python: The Universal Language of Data Science

Python remains the cornerstone of data science. Its intuitive syntax, extensive libraries, and active community make it the go-to language for everything from data wrangling to deep learning. Libraries such as NumPy and Pandas streamline numerical computations and data manipulation, while scikit-learn is the gold standard for classical machine learning tasks.

NumPy: Efficient array operations and mathematical functions.

Pandas: Powerful data structures (DataFrames) for cleaning, transforming, and analyzing structured data.

scikit-learn: Comprehensive suite for classification, regression, clustering, and model evaluation.

Python’s popularity is reflected in the 2025 Stack Overflow Developer Survey, with 53% of developers using it for data projects.

2. R and RStudio: Statistical Powerhouses

R continues to shine in academia and industries where statistical rigor is paramount. The RStudio IDE enhances productivity with features for scripting, debugging, and visualization. R’s package ecosystem—especially tidyverse for data manipulation and ggplot2 for visualization—remains unmatched for statistical analysis and custom plotting.

Shiny: Build interactive web applications directly from R.

CRAN: Over 18,000 packages for every conceivable statistical need.

R is favored by 36% of users, especially for advanced analytics and research.

3. Jupyter Notebooks and JupyterLab: Interactive Exploration

Jupyter Notebooks are indispensable for prototyping, sharing, and documenting data science workflows. They support live code (Python, R, Julia, and more), visualizations, and narrative text in a single document. JupyterLab, the next-generation interface, offers enhanced collaboration and modularity.

Over 15 million notebooks hosted as of 2025, with 80% of data analysts using them regularly.

4. Apache Spark: Big Data at Lightning Speed

As data volumes grow, Apache Spark stands out for its ability to process massive datasets rapidly, both in batch and real-time. Spark’s distributed architecture, support for SQL, machine learning (MLlib), and compatibility with Python, R, Scala, and Java make it a staple for big data analytics.

65% increase in Spark adoption since 2023, reflecting its scalability and performance.

5. TensorFlow and PyTorch: Deep Learning Titans

For machine learning and AI, TensorFlow and PyTorch dominate. Both offer flexible APIs for building and training neural networks, with strong community support and integration with cloud platforms.

TensorFlow: Preferred for production-grade models and scalability; used by over 33% of ML professionals.

PyTorch: Valued for its dynamic computation graph and ease of experimentation, especially in research settings.

6. Data Visualization: Plotly, D3.js, and Apache Superset

Effective data storytelling relies on compelling visualizations:

Plotly: Graphing library with a Python API (built on plotly.js) that supports interactive and publication-quality charts; easy for both static and dynamic visualizations.

D3.js: JavaScript library for highly customizable, web-based visualizations; ideal for specialists seeking full control.

Apache Superset: Open-source dashboarding platform for interactive, scalable visual analytics; increasingly adopted for enterprise BI.

Tableau Public, though proprietary rather than open-source, is also popular for sharing interactive visualizations with a broad audience.

7. Pandas: The Data Wrangling Workhorse

Pandas remains the backbone of data manipulation in Python, powering up to 90% of data wrangling tasks. Its DataFrame structure simplifies complex operations, making it essential for cleaning, transforming, and analyzing large datasets.

8. Scikit-learn: Machine Learning Made Simple

scikit-learn is the default choice for classical machine learning. Its consistent API, extensive documentation, and wide range of algorithms make it ideal for tasks such as classification, regression, clustering, and model validation.

9. Apache Airflow: Workflow Orchestration

As data pipelines become more complex, Apache Airflow has emerged as the go-to tool for workflow automation and orchestration. Its user-friendly interface and scalability have driven a 35% surge in adoption among data engineers in the past year.
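To make the idea of orchestration concrete, here is a minimal sketch of dependency-ordered task execution, which is the core idea behind a pipeline DAG. It uses only Python's standard-library `graphlib` module rather than Airflow's own API, and the task names (`extract`, `clean`, `train`, `report`) are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "train": {"clean"},
    "report": {"clean", "train"},
}


def run_order(dag):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


order = run_order(pipeline)
print(order)
```

An orchestrator adds scheduling, retries, and monitoring on top of this ordering step, but the dependency graph is what decides which task may run next.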

10. MLflow: Model Management and Experiment Tracking

MLflow streamlines the machine learning lifecycle, offering tools for experiment tracking, model packaging, and deployment. Over 60% of ML engineers use MLflow for its integration capabilities and ease of use in production environments.

11. Docker and Kubernetes: Reproducibility and Scalability

Containerization with Docker and orchestration via Kubernetes ensure that data science applications run consistently across environments. These tools are now standard for deploying models and scaling data-driven services in production.

12. Emerging Contenders: Streamlit and More

Streamlit: Rapidly build and deploy interactive data apps with minimal code, gaining popularity for internal dashboards and quick prototypes.

Redash: SQL-based visualization and dashboarding tool, ideal for teams needing quick insights from databases.

Kibana: Real-time data exploration and monitoring, especially for log analytics and anomaly detection.

Conclusion: The Open-Source Advantage in 2025

Open-source tools continue to drive innovation in data science, making advanced analytics accessible, scalable, and collaborative. Mastery of these tools is not just a technical advantage—it’s essential for staying competitive in a rapidly evolving field. Whether you’re a beginner or a seasoned professional, leveraging this ecosystem will unlock new possibilities and accelerate your journey from raw data to actionable insight.

The future of data science is open, and in 2025, these tools are your ticket to building smarter, faster, and more impactful solutions.

#python#r#rstudio#jupyternotebook#jupyterlab#apachespark#tensorflow#pytorch#plotly#d3js#apachesuperset#pandas#scikitlearn#apacheairflow#mlflow#docker#kubernetes#streamlit#redash#kibana#nschool academy#datascience

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI with Hawkstack

Artificial Intelligence (AI) and Machine Learning (ML) are driving innovation across industries—from predictive analytics in healthcare to real-time fraud detection in finance. But building, scaling, and maintaining production-grade AI/ML solutions remains a significant challenge. Enter Red Hat OpenShift AI, a powerful platform that brings together the flexibility of Kubernetes with enterprise-grade ML tooling. And when combined with Hawkstack, organizations can supercharge observability and performance tracking throughout their AI/ML lifecycle.

Why Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a robust enterprise platform designed to support the full AI/ML lifecycle—from development to deployment. Key benefits include:

Scalability: Native Kubernetes integration allows seamless scaling of ML workloads.

Security: Red Hat’s enterprise security practices ensure that ML pipelines are secure by design.

Flexibility: Supports a variety of tools and frameworks, including Jupyter Notebooks, TensorFlow, PyTorch, and more.

Collaboration: Built-in tools for team collaboration and continuous integration/continuous deployment (CI/CD).

Introducing Hawkstack: Observability for AI/ML Workloads

As you move from model training to production, observability becomes critical. Hawkstack, a lightweight and extensible observability framework, integrates seamlessly with Red Hat OpenShift AI to provide real-time insights into system performance, data drift, model accuracy, and infrastructure metrics.

Hawkstack + OpenShift AI: A Powerful Duo

By integrating Hawkstack with OpenShift AI, you can:

Monitor ML Pipelines: Track metrics across training, validation, and deployment stages.

Visualize Performance: Dashboards powered by Hawkstack allow teams to monitor GPU/CPU usage, memory footprint, and latency.

Enable Alerting: Proactively detect model degradation or anomalies in your inference services.

Optimize Resources: Fine-tune resource allocation based on telemetry data.

Workflow: Developing and Deploying ML Apps

Here’s a high-level overview of what a modern AI/ML workflow looks like on OpenShift AI with Hawkstack:

1. Model Development

Data scientists use tools like JupyterLab or VS Code on OpenShift AI to build and train models. Libraries such as scikit-learn, XGBoost, and Hugging Face Transformers are pre-integrated.

2. Pipeline Automation

Using Red Hat OpenShift Pipelines (Tekton), you can automate training and evaluation pipelines. Integrate CI/CD practices to ensure robust and repeatable workflows.

3. Model Deployment

Leverage OpenShift AI’s serving layer to deploy models using Seldon Core, KServe, or OpenVINO Model Server—all containerized and scalable.

4. Monitoring and Feedback with Hawkstack

Once deployed, Hawkstack takes over to monitor inference latency, throughput, and model accuracy in real-time. Anomalies can be fed back into the training pipeline, enabling continuous learning and adaptation.
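As a rough illustration of the kind of check that can feed such a feedback loop, the sketch below flags drift when the mean of recent feature values moves too far from the training baseline. It is a generic, standard-library example and not Hawkstack's API; the function names, the threshold of 2.0, and the sample values are all assumptions made for illustration.

```python
from statistics import mean, stdev


def drift_score(baseline, recent):
    """Shift of the recent mean, measured in baseline standard deviations."""
    return abs(mean(recent) - mean(baseline)) / stdev(baseline)


def needs_retraining(baseline, recent, threshold=2.0):
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return drift_score(baseline, recent) > threshold


# Hypothetical feature values (loan amounts in thousands):
# seen at training time vs. two later production windows.
baseline = [10, 12, 11, 13, 12, 11, 10, 12]
stable = [11, 12, 10, 13]
shifted = [18, 20, 19, 21]

print(needs_retraining(baseline, stable))
print(needs_retraining(baseline, shifted))
```

Production systems usually compare whole distributions rather than means alone, so treat this as a starting point only.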

Real-World Use Case

A leading financial services firm recently implemented OpenShift AI and Hawkstack to power their loan approval engine. Using Hawkstack, they detected a model drift issue caused by seasonal changes in application data. Alerts enabled retraining to be triggered automatically, ensuring their decisions stayed fair and accurate.

Conclusion

Deploying AI/ML applications in production doesn’t have to be daunting. With Red Hat OpenShift AI, you get a secure, scalable, and enterprise-ready foundation. And with Hawkstack, you add observability and performance intelligence to every stage of your ML lifecycle.

Together, they empower organizations to bring AI/ML innovations to market faster—without compromising on reliability or visibility.

For more details, visit www.hawkstack.com

Vertex AI Workbench Pricing, Advantages And Features

Get clarity on Vertex AI Workbench pricing. Take advantage of its pay-as-you-go notebook instances to scale AI/ML applications on Google Cloud.

Confidential Computing for Vertex AI Workbench

Google Cloud is expanding Vertex AI's Confidential Computing capabilities. Confidential Computing, in preview, helps Vertex AI Workbench customers meet data privacy regulations. This integration strengthens data privacy and confidentiality with a few clicks.

Vertex AI Notebooks

You can use Vertex AI Workbench or Colab Enterprise. Use Vertex AI Platform for data science initiatives from discovery to prototype to production.

Advantages

BigQuery, Dataproc, Spark, and Vertex AI integration simplifies data access and machine learning in-notebook.

Rapid prototyping and model development: Vertex AI Training takes you from data to training at scale, with virtually unlimited compute for exploration and prototyping.

Vertex AI Workbench or Colab Enterprise lets you run training and deployment procedures on Vertex AI from one place.

Key features

Colab Enterprise blends Google Cloud enterprise-level security and compliance with Google Research's notebook, used by over 7 million data scientists. Launch a collaborative, serverless, zero-config environment quickly.

AI-powered code assistance features such as code completion and code generation make Python AI/ML model building easier so you can focus on data and models.

Vertex AI Workbench offers JupyterLab and advanced customisation.

Fully managed compute: Vertex AI notebooks provide enterprise-ready, scalable infrastructure with user management and security features.

Explore data and train machine learning models with Google Cloud's big data offerings.

End-to-end ML training portal: Implement AI solutions on Vertex AI with minimal transition.

Extensions will simplify data access to BigQuery, Data Lake, Dataproc, and Spark. Easily scale up or out for AI and analytics.

Research data sources with a catalogue: Write SQL and Spark queries in a notebook cell with auto-complete and syntax awareness.

Integrated, sophisticated visualisation tools make data insights easy.

Hands-off, cost-effective infrastructure: All compute is managed for you. Auto shutdown and idle timeout help reduce TCO.

Built-in Google Cloud security for simplified enterprise security. Simple authentication and single sign-on for various Google Cloud services.

Vertex AI Workbench runs TensorFlow, PyTorch, and Spark.

MLOps, training, and deep Git integration: Just a few clicks connect notebooks to established operational workflows. Notebooks are useful for hyper-parameter optimisation, scheduled or triggered continuous training, and distributed training. Deep integration with Vertex AI services allows the notebook to implement MLOps without additional processes or code rewrites.

Smooth CI/CD: Notebooks are a reliable Kubeflow Pipelines deployment target.

Notebook viewer: Share output from regularly updated notebook cells for reporting and bookkeeping.

Pricing for Vertex AI Workbench

The VM configuration you choose determines Vertex AI Workbench pricing. The price is the sum of the costs of the virtual machines you use. When you use Compute Engine machine types and attach accelerators, the accelerator cost is the accelerator price multiplied by the number of machine hours.

Your Vertex AI Workbench instance is charged based on its state.

CPU and accelerator usage is billed while the instance is in the STARTING, PROVISIONING, ACTIVE, UPGRADING, ROLLBACKING, RESTORING, STOPPING, or SUSPENDING state.
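The billing rule above can be sketched as a small estimator. The hourly rates and the usage figures below are made-up placeholders, not actual Google Cloud prices, and `estimate_cost` is a hypothetical helper written for this illustration.

```python
# States in which CPU and accelerator usage is billed, as listed above.
BILLABLE_STATES = {
    "STARTING", "PROVISIONING", "ACTIVE", "UPGRADING",
    "ROLLBACKING", "RESTORING", "STOPPING", "SUSPENDING",
}


def estimate_cost(hours_by_state, vm_rate, accelerator_rate=0.0, accelerators=0):
    """Estimate compute cost from the hours an instance spent in each state.

    Rates are per hour. The values used below are placeholders,
    not real Google Cloud prices.
    """
    billable_hours = sum(
        hours for state, hours in hours_by_state.items()
        if state in BILLABLE_STATES
    )
    hourly = vm_rate + accelerator_rate * accelerators
    return billable_hours * hourly


# Hypothetical usage: STOPPED is not in the billable list above.
usage = {"ACTIVE": 40.0, "STARTING": 0.5, "STOPPED": 120.0}
cost = estimate_cost(usage, vm_rate=0.20, accelerator_rate=0.35, accelerators=1)
print(f"Estimated compute cost: ${cost:.2f}")
```

This covers only CPU and accelerator time; storage and the other Google Cloud services an instance uses are billed separately.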

Pricing details for managed notebooks and user-managed notebooks are published separately and are not covered here.

Other Google Cloud resources used with Vertex AI Workbench (managed or user-managed notebooks) may incur additional charges. Running SQL queries in a notebook may incur BigQuery costs. Customer-managed encryption keys incur Cloud Key Management Service key operation fees. Deep Learning Containers, Deep Learning VM Images, and AI Platform Pipelines are billed for the compute and storage resources they use in machine learning processes, such as Compute Engine and Cloud Storage.

#VertexAIWorkbenchpricing#VertexAI#VertexAIWorkbench#VertexAINotebooks#ColabEnterprise#googlecloudVertexAIWorkbench#Technology#TechNews#technologynews#news#govindhtech

Exploring Jupyter Notebooks: The Perfect Tool for Data Science

Jupyter Notebooks have become an essential tool for data scientists and analysts, offering a robust and flexible platform for interactive computing.

Let’s explore what makes Jupyter Notebooks an indispensable part of the data science ecosystem.

What Are Jupyter Notebooks?

Jupyter Notebooks are an open-source, web-based application that allows users to create and share documents containing live code, equations, visualizations, and narrative text.

They support multiple programming languages, including Python, R, and Julia, making them versatile for a variety of data science tasks.

Key Features of Jupyter Notebooks

Interactive Coding

Jupyter’s cell-based structure lets users write and execute code in small chunks, enabling immediate feedback and interactive debugging.

This iterative approach is ideal for data exploration and model development.

Rich Text and Visualizations

Beyond code, Jupyter supports Markdown and LaTeX for documentation, enabling clear explanations of your workflow.

It also integrates seamlessly with libraries like Matplotlib, Seaborn, and Plotly to create interactive and static visualizations.

Language Flexibility

With Jupyter’s support for over 40 programming languages, users can switch kernels to leverage the best tools for their specific task.

Python, the most popular choice, integrates well with libraries like Pandas, NumPy, and Scikit-learn.

Extensibility Through Extensions

Jupyter’s ecosystem includes tools and extensions such as nbconvert for exporting notebooks to different formats, and nbextensions and JupyterLab extensions for improving UI capabilities.

Collaborative Potential

Jupyter Notebooks are easily shareable via GitHub, cloud platforms, or even as static HTML files, making them an excellent choice for team collaboration and presentations.

Why Are Jupyter Notebooks Perfect for Data Science?

Data Exploration and Cleaning

Jupyter is ideal for exploring datasets interactively.

You can clean, preprocess, and visualize data step-by-step, ensuring a transparent and repeatable workflow.

Machine Learning

Its integration with machine learning libraries like TensorFlow, PyTorch, and XGBoost makes it a go-to platform for building and testing predictive models.

Reproducible Research

The combination of narrative text, code, and results in one document enhances reproducibility and transparency, critical in scientific research.

Ease of Learning and Use

The intuitive interface and immediate feedback make Jupyter a favorite among beginners and experienced professionals alike.

Challenges and Limitations

While Jupyter Notebooks are powerful, they come with some challenges:

Version Control Complexity: Tracking changes in notebooks can be tricky compared to plain-text scripts.

Code Modularity: Managing large projects in a notebook can lead to clutter.

Execution Order Issues: Out-of-order execution can cause confusion, especially for newcomers.
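One common way to ease the version-control problem is to strip cell outputs before committing, since a notebook file is JSON and outputs account for most of the noise in a diff. The sketch below does this with the standard library only; `strip_outputs` is a hypothetical helper, and the small in-memory notebook stands in for a real .ipynb file.

```python
import json


def strip_outputs(notebook):
    """Return a copy of a notebook dict with code-cell outputs removed."""
    cleaned = json.loads(json.dumps(notebook))  # simple deep copy
    for cell in cleaned.get("cells", []):
        if cell.get("cell_type") == "code":
            cell["outputs"] = []
            cell["execution_count"] = None
    return cleaned


# A tiny notebook in the nbformat 4 layout (normally loaded from an .ipynb file).
notebook = {
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Demo"]},
        {
            "cell_type": "code",
            "metadata": {},
            "execution_count": 7,
            "source": ["print('hi')"],
            "outputs": [
                {"output_type": "stream", "name": "stdout", "text": ["hi\n"]}
            ],
        },
    ],
}

clean = strip_outputs(notebook)
print(json.dumps(clean, indent=1))
```

Clearing `execution_count` also removes a trace of out-of-order execution from the saved file, although it does not fix the underlying habit; restarting the kernel and running all cells top to bottom remains the reliable check.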

Conclusion

Jupyter Notebooks revolutionize how data scientists interact with data, offering a seamless blend of code, visualization, and narrative.

Whether you’re prototyping a machine learning model, teaching a class, or presenting findings to stakeholders, Jupyter Notebooks provide a dynamic and interactive platform that fosters creativity and productivity.

Data Science With Generative Ai | Data Science With Generative Ai Online Training

Top Tools and Techniques for Integrating Generative AI in Data Science

Introduction

The integration of generative AI in data science has revolutionized the way insights are derived and predictions are made. Combining creativity and computational power, generative AI enables advanced modeling, automation, and innovation in various domains. With the rise of data science with generative AI, businesses and researchers are leveraging these technologies to develop sophisticated systems that solve complex problems efficiently. This article explores the top tools and techniques for integrating generative AI in data science, offering insights into their benefits, practical applications, and best practices for implementation.

Key Tools for Generative AI in Data Science

TensorFlow

Overview: An open-source library by Google, TensorFlow is widely used for machine learning and deep learning projects.

Applications: Supports tasks like image generation, natural language processing, and recommendation systems.

Tips: Leverage pre-trained models, such as those published on TensorFlow Hub or the TensorFlow release of StyleGAN, to kickstart generative AI projects.

PyTorch

Overview: Developed by Facebook, PyTorch is known for its dynamic computation graph and flexibility.

Applications: Ideal for research-driven projects requiring custom generative AI models.

Tips: Use PyTorch’s TorchServe for deploying generative AI models in production environments efficiently.

Hugging Face

Overview: A hub for natural language processing (NLP) models, Hugging Face is a go-to tool for text-based generative AI.

Applications: Chatbots, text summarization, and translation tools.

Tips: Take advantage of Hugging Face’s Model Hub to access and fine-tune pre-trained models.

Jupyter Notebooks

Overview: A staple in data science workflows, Jupyter Notebooks support experimentation and visualization.

Applications: Model training, evaluation, and interactive demonstrations.

Tips: Use JupyterLab and its extensions for a more robust development environment.

OpenAI API

Overview: Provides access to cutting-edge generative AI models such as GPT-4 and Codex.

Applications: Automating content creation, coding assistance, and creative writing.

Tips: Stay within API rate limits and optimize calls, for example by batching or caching requests, to minimize costs.
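One simple way to cut the number of paid calls is to cache responses for prompts that repeat. The sketch below shows the idea with the standard library's `functools.lru_cache`; `call_model` is a hypothetical stand-in for a real API request and does not use the OpenAI client.

```python
from functools import lru_cache

calls_made = 0  # counts how often the (simulated) remote API is hit


def call_model(prompt):
    """Hypothetical stand-in for a paid API request; swap in a real client."""
    global calls_made
    calls_made += 1
    return f"response to: {prompt}"


@lru_cache(maxsize=256)
def cached_completion(prompt):
    """Return the stored response when the same prompt was already sent."""
    return call_model(prompt)


prompts = ["summarize report A", "summarize report B", "summarize report A"]
responses = [cached_completion(p) for p in prompts]
print(calls_made)
```

Caching only fits cases where an identical prompt may reuse an earlier answer; it is not suitable when each call should produce a fresh, varied result.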

Techniques for Integrating Generative AI in Data Science

Data Preprocessing

Importance: Clean and structured data are essential for accurate AI modeling.

Techniques:

Data augmentation for diversifying training datasets.

Normalization and scaling for numerical stability.
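The two scaling techniques named above can be sketched in a few lines of standard-library Python. The function names and the sample values are illustrative assumptions; in practice a library such as scikit-learn would usually handle this step.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


incomes = [20, 30, 40, 50, 60]  # made-up sample data
scaled = min_max_scale(incomes)
z_scores = standardize(incomes)

print(scaled)
print(z_scores)
```

Both helpers assume the input contains at least two distinct values; a constant column would cause a division by zero and needs separate handling.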

Transfer Learning

Overview: Reusing pre-trained models for new tasks saves time and resources.

Applications: Adapting a generative AI model trained on large datasets to a niche domain.

Tips: Fine-tune models rather than training them from scratch for better efficiency.

Generative Adversarial Networks (GANs)

Overview: A two-part system where a generator and a discriminator compete to create realistic data.

Applications: Image synthesis, data augmentation, and anomaly detection.

Tips: Balance the generator and discriminator’s learning rates to ensure stable training.

Natural Language Processing (NLP)

Overview: NLP techniques power text-based generative AI systems.

Applications: Sentiment analysis, summarization, and language translation.

Tips: Tokenize data effectively and use attention-based architectures such as transformers for better results.

Reinforcement Learning

Overview: A technique where models learn by interacting with their environment to achieve goals.

Applications: Automated decision-making and dynamic systems optimization.

Tips: Define reward functions clearly to avoid unintended behaviors.
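To show how a reward function steers what a model learns, here is a minimal epsilon-greedy sketch: the agent tries each action, then mostly repeats whichever one has paid best so far. The action names, the payoffs, and the parameter values are all hypothetical, and the environment is deliberately trivial (fixed rewards, no state).

```python
import random


def reward(action):
    """Hypothetical reward function: the payoff returned for each action."""
    payoffs = {"discount": 1.0, "email": 2.0, "recommend": 5.0}
    return payoffs[action]


def epsilon_greedy(actions, steps=200, epsilon=0.1, seed=0):
    """Learn action values by trial and error, mostly exploiting the best one."""
    rng = random.Random(seed)
    totals = {a: 0.0 for a in actions}
    counts = {a: 0 for a in actions}
    for step in range(steps):
        if step < len(actions):
            action = actions[step]        # try every action once first
        elif rng.random() < epsilon:
            action = rng.choice(actions)  # explore a random action
        else:
            # exploit: pick the action with the best average reward so far
            action = max(actions, key=lambda a: totals[a] / counts[a])
        totals[action] += reward(action)
        counts[action] += 1
    return {a: totals[a] / counts[a] for a in actions}


values = epsilon_greedy(["discount", "email", "recommend"])
best = max(values, key=values.get)
print(best, values)
```

Changing the payoffs in `reward` changes which action the agent settles on, which is why an imprecise reward definition can lead to unintended behavior.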

Best Practices for Integrating Generative AI in Data Science

Define Objectives Clearly

Understand the problem statement and define measurable outcomes.

Use Scalable Infrastructure

Deploy tools on platforms like AWS, Azure, or Google Cloud to ensure scalability and reliability.

Ensure Ethical AI Use

Avoid biases in data and adhere to guidelines for responsible AI deployment.

Monitor Performance

Use tools like TensorBoard or MLflow for real-time monitoring of models in production.

Collaborate with Interdisciplinary Teams

Work with domain experts, data scientists, and engineers for comprehensive solutions.

Applications of Data Science with Generative AI

Healthcare

Drug discovery and personalized medicine using AI-generated molecular structures.

Finance

Fraud detection and automated trading algorithms driven by generative models.

Marketing

Content personalization and predictive customer analytics.

Gaming

Procedural content generation and virtual reality enhancements.

Challenges and Solutions

Data Availability

Challenge: Scarcity of high-quality labeled data.

Solution: Use synthetic data generation techniques like GANs.

Model Complexity

Challenge: High computational requirements.

Solution: Optimize models using pruning and quantization techniques.

Ethical Concerns

Challenge: Bias and misuse of generative AI.

Solution: Implement strict auditing and transparency practices.
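The quantization technique mentioned under Model Complexity can be sketched as follows: float weights are mapped to small integers plus one shared scale factor, which reduces storage at the cost of a small rounding error. This is a simplified symmetric scheme written for illustration, with made-up weights, and not the exact method of any particular framework.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one scale factor."""
    scale = max(abs(w) for w in weights) / 127
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from their integer codes."""
    return [q * scale for q in q_weights]


weights = [0.5, -1.27, 0.02, 1.0]  # made-up values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(max_error)
```

The rounding error per weight is bounded by half the scale factor, so weights with a narrow value range lose less precision. The sketch assumes at least one non-zero weight.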

Conclusion

The integration of data science with generative AI has unlocked a world of possibilities, reshaping industries and driving innovation. By leveraging advanced tools like TensorFlow, PyTorch, and Hugging Face, along with techniques such as GANs and transfer learning, data scientists can achieve remarkable outcomes. However, success lies in adhering to ethical practices, ensuring scalable implementations, and fostering collaboration across teams. As generative AI continues to evolve, its role in data science will only grow, making it essential for professionals to stay updated with the latest trends and advancements.

Visualpath: Advance your career with Data Science with Generative AI. Gain hands-on training, real-world skills, and certification. Enroll today for the best Data Science with Generative AI Online Training. We provide training to individuals globally, including in the USA and UK.

Call on: +91 9989971070

Course Covered:

Data Science, Programming Skills, Statistics and Mathematics, Data Analysis, Data Visualization, Machine Learning, Big Data Handling, SQL, Deep Learning and AI

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Blog link: https://visualpathblogs.com/

Visit us: https://www.visualpath.in/online-data-science-with-generative-ai-course.html

#Data Science Course#Data Science Course In Hyderabad#Data Science Training In Hyderabad#Data Science With Generative Ai Course#Data Science Institutes In Hyderabad#Data Science With Generative Ai#Data Science With Generative Ai Online Training#Data Science With Generative Ai Course Hyderabad#Data Science With Generative Ai Training

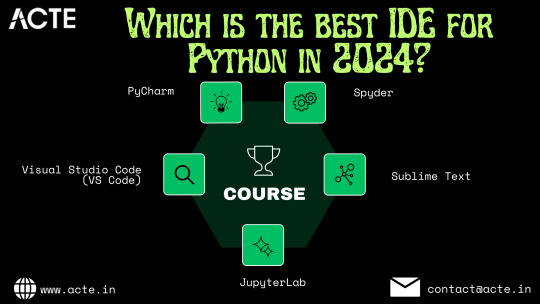

PyCharm vs. VS Code: A Comprehensive Comparison of Leading Python IDEs

Introduction: Navigating the Python IDE Landscape in 2024

As Python continues to dominate the programming world, the choice of an Integrated Development Environment (IDE) holds significant importance for developers. In 2024, the market is flooded with IDE options, each offering unique features and capabilities tailored to diverse coding needs. With the support of a Learn Python Course in Hyderabad, learning Python becomes much more fun, whatever your level of experience or reason for switching from another programming language.

This guide dives into the leading Python IDEs of 2024, showcasing their standout attributes to help you find the perfect fit for your coding endeavors.

1. PyCharm: Unleashing the Power of JetBrains' Premier IDE

PyCharm remains a cornerstone in the Python development realm, renowned for its robust feature set and seamless integration with various Python frameworks. With intelligent code completion, advanced code analysis, and built-in version control, PyCharm streamlines the development process for both novices and seasoned professionals. Its extensive support for popular Python frameworks like Django and Flask makes it an indispensable tool for web development projects.

2. Visual Studio Code (VS Code): Microsoft's Versatile Coding Companion

Visual Studio Code has emerged as a formidable player in the Python IDE landscape, boasting a lightweight yet feature-rich design. Armed with a vast array of extensions, including Python-specific ones, VS Code empowers developers to tailor their coding environment to their liking. Offering features such as debugging, syntax highlighting, and seamless Git integration, VS Code delivers a seamless coding experience for Python developers across all proficiency levels.

3. JupyterLab: Revolutionizing Data Science with Interactive Exploration

For data scientists and researchers, JupyterLab remains a staple choice for interactive computing and data analysis. Its support for Jupyter notebooks enables users to blend code, visualizations, and explanatory text seamlessly, facilitating reproducible research and collaborative work. Equipped with interactive widgets and compatibility with various data science libraries, JupyterLab serves as an indispensable tool for exploring complex datasets and conducting in-depth analyses. Enrolling in the Best Python Certification Online can help people realise Python's full potential and gain a deeper understanding of its complexities.

4. Spyder: A Dedicated IDE for Scientific Computing and Analysis

Catering specifically to the needs of scientific computing, Spyder provides a user-friendly interface and a comprehensive suite of tools for machine learning, numerical simulations, and statistical analysis. With features like variable exploration, profiling, and an integrated IPython console, Spyder enhances productivity and efficiency for developers working in scientific domains.

5. Sublime Text: Speed, Simplicity, and Customization

Renowned for its speed and simplicity, Sublime Text offers a minimalistic coding environment with powerful customization options. Despite its lightweight design, Sublime Text packs a punch with an extensive package ecosystem and adaptable interface. With support for multiple programming languages and a responsive developer community, Sublime Text remains a top choice for developers seeking a streamlined coding experience.

Conclusion: Choosing Your Path to Python IDE Excellence

In conclusion, the world of Python IDEs in 2024 offers a myriad of options tailored to suit every developer's needs and preferences. Whether you're a web developer, data scientist, or scientific researcher, there's an IDE designed to enhance your coding journey and boost productivity. By exploring the standout features and functionalities of each IDE, you can make an informed decision and embark on a path towards coding excellence in Python.

Embarking on Your Data Science Journey: A Beginner's Guide

Are you intrigued by the world of data, eager to uncover insights hidden within vast datasets? If so, welcome to the exciting realm of data science! At the heart of this field lies Python programming, a versatile and powerful tool that enables you to manipulate, analyze, and visualize data. Whether you're a complete beginner or someone looking to expand their skill set, this guide will walk you through the basics of Python programming for data science in simple, easy-to-understand terms.

1. Understanding Data Science and Python

Before we delve into the specifics, let's clarify what data science is all about. Data science involves extracting meaningful information and knowledge from large, complex datasets. This information can then be used to make informed decisions, predict trends, and gain valuable insights.

Python, a popular programming language, has become the go-to choice for data scientists due to its simplicity, readability, and extensive libraries tailored for data manipulation and analysis.

2. Installing Python

The first step in your data science journey is to install Python on your computer. Fortunately, Python is free and can be easily downloaded from the official website, python.org. Choose the version compatible with your operating system (Windows, macOS, or Linux) and follow the installation instructions.

3. Introduction to Jupyter Notebooks

While Python can be run from the command line, using Jupyter Notebooks is highly recommended for data science projects. Jupyter Notebooks provide an interactive environment where you can write and execute Python code in a more user-friendly manner. To get started, run the command pip install jupyterlab in your terminal or command prompt; this installs JupyterLab, which you can then start with the command jupyter lab.

4. Your First Python Program

Let's create your very first Python program! Open a new Jupyter Notebook and type the following code:

```python
print("Hello, Data Science!")
```

To execute the code, press Shift + Enter. You should see the phrase "Hello, Data Science!" printed below the code cell. Congratulations! You've just run your first Python program.

5. Variables and Data Types

In Python, variables are used to store data. Here are some basic data types you'll encounter:

Integers: Whole numbers, such as 1, 10, or -5.

Floats: Numbers with decimals, like 3.14 or -0.001.

Strings: Text enclosed in single or double quotes, such as "Hello" or 'Python'.

Booleans: True or False values.

To create a variable, simply assign a value to a name. For example:

```python
age = 25
name = "Alice"
is_student = True
```
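A quick way to confirm which data type a variable holds is the built-in `type()` function. The short example below reuses the variables from above; `height` is an extra variable added here only to show a float.

```python
age = 25
name = "Alice"
is_student = True
height = 1.68  # a float, added here for illustration

# Print each value next to the name of its data type.
for value in (age, height, name, is_student):
    print(value, type(value).__name__)
```

Python infers the type from the assigned value, so no type declaration is needed.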

6. Working with Lists and Dictionaries

Lists and dictionaries are essential data structures in Python. A list is an ordered collection of items, while a dictionary is a collection of key-value pairs.

Lists:

fruits = ["apple", "banana", "cherry"]

print(fruits[0]) # Accessing the first item

fruits.append("orange") # Adding a new item

Dictionaries:

person = {"name": "John", "age": 30, "is_student": False}

print(person["name"]) # Accessing value by key

person["city"] = "New York" # Adding a new key-value pair
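
Both structures are usually processed in loops. As a small sketch building on the fruits and person examples above (the "country" key is hypothetical and deliberately missing), iteration and safe lookup might look like this:

```python
fruits = ["apple", "banana", "cherry"]
fruits.append("orange")

# enumerate() yields (index, item) pairs while looping over a list
for index, fruit in enumerate(fruits):
    print(index, fruit)

person = {"name": "John", "age": 30, "is_student": False}
person["city"] = "New York"

# .items() yields (key, value) pairs while looping over a dictionary
for key, value in person.items():
    print(f"{key}: {value}")

# .get() returns a default instead of raising KeyError for a missing key
country = person.get("country", "unknown")  # "country" is a hypothetical key
print(country)
```

Using .get() with a default is a common way to avoid a KeyError when a key may be absent.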

7. Basic Data Analysis with Pandas

Pandas is a powerful library for data manipulation and analysis in Python. Let's say you have a dataset in a CSV file called data.csv. You can load and explore this data using Pandas:

import pandas as pd

# Load the data into a DataFrame

df = pd.read_csv("data.csv")

# Display the first few rows of the DataFrame

print(df.head())
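
After loading, a few more calls give a quick overview of a dataset. The sketch below assumes a tiny made-up table and reads it from an in-memory string instead of data.csv so that it runs on its own; with a real file you would pass the file path to read_csv as shown above.

```python
import io

import pandas as pd

# Stand-in for data.csv so the example is self-contained (made-up values)
csv_text = """name,age,city
Alice,25,Paris
Bob,32,Berlin
Carla,41,Madrid
"""
df = pd.read_csv(io.StringIO(csv_text))

print(df.shape)       # (number of rows, number of columns)
print(df.dtypes)      # data type inferred for each column
print(df.describe())  # summary statistics for the numeric columns
```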

8. Visualizing Data with Matplotlib

Matplotlib is a versatile library for creating various types of plots and visualizations. Here's an example of creating a simple line plot:

import matplotlib.pyplot as plt

# Data for plotting

x = [1, 2, 3, 4, 5]

y = [2, 4, 6, 8, 10]

# Create a line plot

plt.plot(x, y)

plt.xlabel('X-axis')

plt.ylabel('Y-axis')

plt.title('Simple Line Plot')

plt.show()

9. Further Learning and Resources

As you continue your data science journey, there are countless resources available to deepen your understanding of Python and its applications in data analysis. Here are a few recommendations:

Online Courses: Platforms like Coursera, Udemy, and DataCamp offer beginner-friendly courses on Python for data science.

Books: "Python for Data Analysis" by Wes McKinney and "Automate the Boring Stuff with Python" by Al Sweigart are highly recommended.

Practice: The best way to solidify your skills is to practice regularly. Try working on small projects or participating in Kaggle competitions.

Conclusion

Embarking on a journey into data science with Python is an exciting and rewarding endeavor. By mastering the basics covered in this guide, you've laid a strong foundation for exploring the vast landscape of data analysis, visualization, and machine learning. Remember, patience and persistence are key as you navigate through datasets and algorithms. Happy coding, and may your data science adventures be fruitful!

Supercharge Your Python Development Journey: Essential Tools for Enhanced Productivity

Python, renowned for its versatility and power, has gained widespread popularity among developers and data scientists. Its simplicity, readability, and extensive ecosystem make it a top choice for a diverse range of applications. To maximize productivity and elevate your Python development experience, harnessing the right tools is key. In this blog post, we'll explore a selection of indispensable tools available for Python development.

Integrated Development Environments (IDEs): Streamline your coding, debugging, and testing workflows with feature-rich IDEs. PyCharm by JetBrains offers a comprehensive suite of tools, including code completion, debugging support, version control integration, and web development framework assistance. Visual Studio Code, complemented by Python extensions, provides a versatile and customizable IDE. For scientific computing and data analysis, Spyder, a specialized IDE that ships with the Anaconda distribution, offers tailored features and library integration.

Text Editors: Optimize your Python coding experience with lightweight yet powerful text editors. Sublime Text, prized for its speed and versatility, boasts an array of plugins catering to Python development. Atom, a highly customizable text editor, offers a wealth of community-driven packages to enhance your Python workflow. Notepad++, a user-friendly choice favored by Windows users, provides an intuitive interface and extensive plugin support. Vim, a revered command-line text editor, offers unparalleled efficiency and extensibility.

Jupyter Notebooks: Unleash your data analysis and exploration potential with interactive Jupyter Notebooks. Seamlessly blending code, visualizations, and explanatory text, Jupyter Notebooks are invaluable tools. Jupyter Notebook, the original implementation, facilitates code execution in a notebook format. JupyterLab, a more recent iteration, provides a modular and flexible interface with advanced features such as multiple tabs, terminal access, and customizable layouts.

Package Managers: Simplify library and dependency management with Python's package managers. The default package manager, pip, empowers you to effortlessly install, upgrade, and uninstall packages. It integrates smoothly with virtual environments, enabling isolated Python environments for different projects. Anaconda, a prominent distribution in the data science realm, offers the conda package manager, which extends functionality to manage non-Python dependencies and create reproducible environments.

Linters and Code Formatters: Ensure code quality and consistency with linters and code formatters. Linters such as pylint perform static code analysis, detecting errors and enforcing coding conventions. flake8 combines pyflakes, pycodestyle, and mccabe to check for errors, style violations, and overly complex code. Black, a favored code formatter, automates code formatting to maintain a consistent style and enhance readability.

Version Control Systems: Efficiently collaborate, track code changes, and revert when needed with version control systems. Git, a widely adopted distributed version control system, seamlessly integrates with Python development. Platforms like GitHub, GitLab, and Bitbucket offer hosting and collaboration features, simplifying project management and sharing.

Testing Frameworks: Automate testing processes and ensure code reliability with Python's testing frameworks. The built-in unittest module provides a robust framework for writing and executing tests. pytest simplifies test discovery and offers powerful features such as fixture support and test parametrization. nose extended unittest with additional functionality for concise and expressive tests, but it is no longer maintained; nose2 is its successor.
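
To make the testing frameworks above more concrete, here is a minimal sketch using the built-in unittest module. The normalize_name function is a hypothetical helper invented for this example; it is not part of any library.

```python
import unittest


def normalize_name(raw):
    """Hypothetical helper under test: trim whitespace and title-case a name."""
    return raw.strip().title()


class NormalizeNameTests(unittest.TestCase):
    def test_strips_whitespace(self):
        self.assertEqual(normalize_name("  alice "), "Alice")

    def test_title_cases_each_word(self):
        self.assertEqual(normalize_name("ada lovelace"), "Ada Lovelace")


# Load and run the test case explicitly so the script does not exit afterwards
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeNameTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

pytest can also collect and run unittest-style test cases like this one, so a project can start with unittest and adopt pytest later.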

Data Analysis and Scientific Computing: Leverage Python's extensive ecosystem for data analysis and scientific computing. NumPy, a fundamental library for numerical computing, offers powerful data structures and mathematical functions. pandas provides flexible data structures and analysis tools for working with structured data. Matplotlib facilitates the creation of high-quality visualizations, while SciPy provides a broad range of scientific computing capabilities.

Web Development: Python frameworks like Django and Flask are go-to choices for web development. Django, a comprehensive framework, offers a batteries-included approach for building robust and scalable web applications. Flask, a lightweight and flexible framework, provides a solid foundation for creating web services and APIs. These frameworks simplify common web development tasks and benefit from extensive documentation and community support.

Machine Learning and Data Science: Python has emerged as a dominant language for machine learning and data science projects. Libraries such as scikit-learn, TensorFlow, PyTorch, and Keras provide cutting-edge tools for developing and deploying machine learning models. These libraries offer a range of functionalities, including data preprocessing, model training, evaluation, and deployment.

Python's popularity is not only due to its simplicity and versatility but also its vast ecosystem of tools. By leveraging IDEs, text editors, Jupyter Notebooks, package managers, linters, version control systems, testing frameworks, data analysis libraries, and frameworks for web development and machine learning, you can significantly enhance your Python development experience.

These tools streamline coding, debugging, testing, collaboration, and analysis processes, resulting in increased productivity and efficiency. Whether you are a beginner or an experienced Python developer, harnessing these tools will unlock the full potential of Python and empower you to excel in your projects.

python for data science beginners

Python is an excellent programming language for beginners in data science due to its simplicity, readability, and extensive libraries designed specifically for data manipulation, analysis, and visualization. Here's a step-by-step guide to getting started with Python for data science:

Install Python:

Download and install Python from the official website (https://www.python.org/). Choose the latest stable version (Python 3.x) as Python 2.x is no longer supported.

Install Anaconda (Optional, but recommended):

Anaconda is a popular distribution of Python that comes pre-packaged with essential data science libraries like Pandas, NumPy, and Matplotlib. It simplifies the setup process for data science beginners.

Download Anaconda from https://www.anaconda.com/products/individual and follow the installation instructions.

Jupyter Notebooks (Optional, but highly recommended):

Jupyter Notebooks provide an interactive environment to write and run Python code in blocks. They are widely used in data science for data exploration and analysis.

Install Jupyter Notebooks using Anaconda or with the command: pip install jupyterlab.

Explore Data Science Libraries:

Familiarize yourself with essential data science libraries like Pandas (for data manipulation), NumPy (for numerical computing), and Matplotlib/Seaborn (for data visualization).

Data Manipulation with Pandas:

Learn how to load data from different sources (CSV, Excel, SQL databases) using Pandas.

Practice basic data manipulation tasks like filtering, sorting, grouping, and merging datasets.
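
The four manipulation tasks named above can be sketched on two small tables. The column names and values below are made up for illustration only.

```python
import pandas as pd

# Made-up sample data standing in for a real dataset
orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "customer_id": [10, 11, 10, 12],
    "amount": [25.0, 40.0, 15.0, 60.0],
})
customers = pd.DataFrame({
    "customer_id": [10, 11, 12],
    "name": ["Alice", "Bob", "Carla"],
})

# Filtering: keep only the rows that satisfy a condition
large_orders = orders[orders["amount"] > 20]

# Sorting: order rows by a column, largest amount first
sorted_orders = orders.sort_values("amount", ascending=False)

# Grouping: total amount per customer
totals = orders.groupby("customer_id")["amount"].sum()

# Merging: attach customer names through the shared customer_id column
merged = orders.merge(customers, on="customer_id", how="left")

print(large_orders)
print(sorted_orders)
print(totals)
print(merged)
```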

Data Visualization with Matplotlib/Seaborn:

Use Matplotlib or Seaborn to create basic plots, such as line charts, bar plots, scatter plots, and histograms.

Customize the appearance of plots to enhance visualizations.

Introduction to NumPy:

Learn the basics of NumPy arrays, which provide a foundation for data manipulation and mathematical operations in Python.
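
As a brief sketch of what NumPy arrays offer (the values are arbitrary), arithmetic applies to every element at once and arrays can be reshaped and reduced along an axis:

```python
import numpy as np

values = np.array([1.0, 2.0, 3.0, 4.0])

# Arithmetic is applied element by element, without an explicit loop
doubled = values * 2
shifted = values + 10

# Arrays can have several dimensions; reshape turns 6 numbers into 2 rows x 3 columns
matrix = np.arange(6).reshape(2, 3)

# Reductions can run along an axis: axis=0 sums down each column
column_sums = matrix.sum(axis=0)

print(doubled)
print(shifted)
print(matrix)
print(column_sums)
```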

Introduction to Statistics:

Familiarize yourself with fundamental statistical concepts like mean, median, standard deviation, and hypothesis testing. These are crucial for data analysis.
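
The descriptive statistics mentioned above can be computed with Python's built-in statistics module. This is a minimal sketch on made-up scores; hypothesis testing is not shown here and is typically done with a library such as SciPy.

```python
import statistics

scores = [70, 82, 82, 91, 65, 78]  # made-up sample values

mean_score = statistics.mean(scores)      # arithmetic average
median_score = statistics.median(scores)  # middle value of the sorted data
stdev_score = statistics.stdev(scores)    # sample standard deviation

print("mean:", mean_score)
print("median:", median_score)
print("standard deviation:", round(stdev_score, 2))
```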

Basic Machine Learning Concepts (Optional):

Get a high-level understanding of machine learning concepts like supervised and unsupervised learning, classification, regression, and clustering.

Practice with Real Data:

Work on small data projects or Kaggle competitions to apply the skills you've learned.

Analyze datasets, draw insights, and visualize the results using Python's data science libraries.

Learn from Tutorials and Courses:

Take advantage of free online tutorials and courses to learn more about Python for data science. Websites like Coursera, edX, and DataCamp offer excellent resources.

Community and Practice:

Join data science communities, forums, and social media groups to interact with other data science enthusiasts and seek guidance when needed.

Practice regularly and build a strong foundation by working on diverse data science projects.

Remember that data science is a vast field, and learning is an ongoing process. Start with the basics, be patient, and gradually build your knowledge and skills. Python's ease of use and the supportive data science community make it a great choice for beginners in the data science journey.

Source link - https://dataanalyticscoursehyd.blogspot.com/2020/11/data-analytics-certification-course.html

Jupyterlab extension

Because Jupyter Notebook and JupyterLab ship with a limited set of features, we use extensions to make our code better and easier to manage. These extensions are written in JavaScript and can add features such as code formatting, additional visualizations, and code debugging. Jupyter Notebook extensions are simple add-ons that extend the notebook's usability. Similarly, JupyterLab is a development interface where you can manage notebooks, code, files, and data. It lets you run code and interact with it in the browser, visualize results, and document the process using Markdown. It is one of the most used tools among data science enthusiasts. A Jupyter notebook is an open-source document format based on JSON.

This article was published as a part of the Data Science Blogathon.

Overview

We will explore different useful extensions for JupyterLab, the features these extensions provide, and how to use them.

You may find it easier to learn how to create extensions by examples, instead of going through the documentation. Start with the Hello World and then jump to the topic you are interested in. We have structured the examples based on the extension points. Browse the previews below or skip them and jump directly to the sections for developers. You are welcome to open any issue or pull request.

The examples currently target JupyterLab 3.1 or later. If you would like to use the examples with JupyterLab 2.x, check out the 2.x branch. If you would like to use the examples with JupyterLab 1.x, check out the 1.x branch. Note that the 1.x and 2.x branches are not updated anymore.

Each example comes with:

An image or screencast showing its usage.

The list of used JupyterLab API and Extension Points.

Explanations of the internal working, illustrated with code snippets.

The examples cover the following topics:

Set up the development environment and print to the console.

Register commands in the Command Palette.

Create new documents and make them collaborative.

Add a new button to an existing context menu.

Put widgets at the top of a main JupyterLab area widget.

Interact with a kernel from an extension.

Create a minimal extension with backend (i.e. server) and frontend parts.

Create and use new Settings for your extension.

Use Signals to allow Widgets to communicate with each other.

Add a new button to the notebook toolbar.

Add a new Widget element to the main window.

Writing an extension requires basic knowledge of JavaScript, TypeScript and potentially Python. Don't be scared of TypeScript: even if you never coded in TypeScript before you touch JupyterLab, you may find it easier to understand than pure JavaScript if you have a basic understanding of object oriented programming and types.

These examples are developed and tested on top of JupyterLab. You can create a conda environment to get started:

# go to the extension examples folder

cd jupyterlab-extension-examples

conda activate jupyterlab-extension-examples

# go to the hello world example

cd hello-world

# install your development version of the extension with JupyterLab

# build the TypeScript source after making changes

From there, you can change your extension source code, it will be recompiled, and you can refresh your browser to see your changes.

We are using embedme to embed code snippets into the markdown READMEs. If you make changes to the source code, ensure you update the README and run jlpm embedme from the root of the repository to regenerate the READMEs.

The examples are automatically tested:

Configuration files are compared to the reference ones of the hello-world example.

The installation is checked by listing the installed extension and running JupyterLab with the helper python -m jupyterlab.browser_check.

Integration tests emulate user actions in JupyterLab to check that the extension behaves as expected. The tests are defined in the ui-tests subfolder within each example. This is possible thanks to a tool called Playwright.

Once your extension is published on PyPI…

Jupyterlab extension

Customise Jupyter Lab, an IDE tool, for yourself

If you are a Data Scientist or a Data Engineer using Python as your primary programming language, I believe you must use Jupyter Notebook. As the "next-generation" web-based application for Jupyter Notebook, Jupyter Lab provides much more convenient features than its older brother. Now, even the Jupyter Lab development team is excited to have such a robust and thriving third-party extension community. In this article, I'll introduce 10 Jupyter Lab extensions that I have found very useful for dramatically improving the productivity of a typical data scientist or data engineer.

JupyterLab extensions

Some issues you might face using JupyterLab are excellently solved by an extension. JupyterLab allows the use of extensions, such as a notebook table of contents and rendering of custom data formats such as FASTA.

Most online resources will tell you to run a jupyter labextension install command to install a Jupyter Lab extension. Using the command line is also my favourite. However, if you are a user of VS Code, Sublime or Atom, you might also want to directly search for what you want to install in a "manager". Jupyter Lab does provide this feature. Since version 3, installing an extension is possible using the web interface only. This means that Node.js or CLI installation activities are no longer needed to use an extension.

Note: A clean reinstall of a JupyterLab extension can be done by first running the jupyter lab clean command, which will remove the staging and static directories from the lab directory. To streamline third-party development of extensions, this library provides a build script for generating third-party extension JavaScript bundles.

Mito

Mito is a free JupyterLab extension that enables exploring and transforming datasets with the ease of Excel. Mito is the missing pandas extension that we were waiting for for years. When you start Mito, it shows a spreadsheet view of a pandas DataFrame. With a few clicks, you can perform any CRUD operation.

JupyterLab Top Bar

Monorepo to experiment with the top bar space in JupyterLab. Similar to the status bar, the top bar can be used to place a few indicators and optimize the overall space. Inspired by Gnome Shell Top Bar indicators.

GeoJSON

These are specific tools for rendering maps or geospatial data inside JupyterLab. The GeoJSON extension enables you to plot GeoJSON data on the fly inside Jupyter Lab quickly. There is no need to read the data or visualise it with other desktop software. In this example, we are plotting JSON-like data with geometry right in

Git

Git for JupyterLab is an official Jupyter Lab extension: a JupyterLab extension for version control using Git. To see the extension in action, open the example notebook included in the Binder demo. Requirements: JupyterLab > 3.0 (older version available for 2.x), Git (version >2. There are two main ways to install it; one might be easier on some computers than others. If none of these work for you, then refer to the JupyterLab documentation on extensions and the Git extension's documentation.

A reader question: installing jupyterlab-prodigy

I'm trying to get the jupyterlab prodigy extension installed but am having some trouble finding the correct version of jupyterlab to use. First I installed jupyterlab=2.0.0, then tried to install the labextension on jupyterlab version 2:

$ jupyter labextension install jupyterlab-prodigy

ValueError: No version of jupyterlab-prodigy could be found that is compatible with the current version of JupyterLab. However, it seems to support a new version of JupyterLab. Consider upgrading JupyterLab.

But with jupyterlab=3:

$ jupyter labextension install jupyterlab-prodigy

ValueError: The extension "jupyterlab-prodigy" does not yet support the current version of JupyterLab.

As a third attempt I tried jupyterlab=2.3.0:

$ jupyter labextension install jupyterlab-prodigy

Building jupyterlab assets (build:prod:minimize)

Note that I can successfully install the extension on jupyterlab=1.0.0rc0, however this creates new conflicts between jupyter-server, jupyterlab and tornado. Is there a specific version of jupyterlab I should be installing? Or maybe the problem is something else altogether?

TOP 10 PYTHON LIBRARIES TO USE IN DATA SCIENCE PROJECTS IN 2022

Table of Contents

TensorFlow

NumPy

SciPy

Pandas

Matplotlib

Keras

Plotly

Statsmodels

Seaborn

SciKit-Learn

Python is a simple yet powerful object-oriented programming language that is extensively used and free to install. It is open source, performs well, and offers a coding environment that is easy to debug. Data scientists can find Python libraries for machine learning both on the internet and through distributions and tools such as Anaconda and JupyterLab.

1. TensorFlow

TensorFlow is a free, open-source library for deep learning applications, developed by Google. Originally designed for numeric computations, it now provides developers with a vast range of tools to create machine learning-based applications. At the time of writing, the Google Brain team had released version 2.5.0 of TensorFlow, which includes improvements in functionality and usability.

2. NumPy

NumPy, short for Numerical Python, was created in 2005 by Travis Oliphant. It is a powerful library for scientific and mathematical computing that provides linear algebra, Fourier transforms, and many other mathematical functions. It is mostly used in applications where performance and memory use matter: for large numerical workloads, NumPy arrays can be up to 50 times faster than Python lists. NumPy is the foundation for data science packages such as SciPy, Matplotlib, Pandas, Scikit-Learn and Statsmodels.

3. SciPy

SciPy is an open-source Python library for solving math, science, and engineering problems. It is built on NumPy, so it works directly with NumPy arrays. SciPy provides modules for linear algebra, statistics, integration, and optimization. It can also be used for multidimensional image processing, Fourier transforms, and integrating differential equations.

4. Pandas

Pandas is a powerful and versatile data manipulation tool created by Wes McKinney. It is efficient across various data types and offers powerful data structures, along with useful functions for handling missing data and aligning data. Pandas is commonly used to manipulate labeled and relational data, and it offers quick, flexible ways of handling structured data.

5. Matplotlib

Matplotlib is a widely used library for data visualization in Python. It is used to make static, animated, and interactive graphics and charts. With plenty of customization options, it can suit the needs of many different projects. Matplotlib lets programmers create, customize, and modify plots such as scatter plots and histograms. For embedding plots in applications, the open-source library provides an object-oriented API.

6. Keras

Keras is an open-source, high-level neural network library for Python that serves as an interface to TensorFlow and has become popular in the past few years. It was created by François Chollet and first released in 2015. Keras ships with a set of ready-to-use labeled datasets and a variety of well-crafted tools. It is easy to use, which makes it a good fit for exploratory research.

7. Plotly

Plotly is a web-based, interactive analytics and graphing library. It is one of the most advanced visualization libraries for machine learning, data science, and AI work, with features such as publishable and engaging visualizations. Data can be easily imported into charts and graphs, letting you create presentations and dashboards quickly. Plotly is also the basis for tools such as Dash and Chart Studio.

8. Statsmodels

If you need refined statistical analysis and a robust library, Statsmodels is a fantastic choice. It builds on libraries such as Matplotlib, Pandas, and Patsy. Statsmodels is especially useful for developing statistical models. For instance, it can help you build OLS models or run any number of statistical tests on your data.

9. Seaborn

Seaborn is a useful plotting library built on Matplotlib, so it is easy to integrate into your existing data visualization work. One of Seaborn's most important features is that it can summarize and display larger data sets in a concise form. A model and its related trends may not be clear to audiences without experience, but Seaborn's graphs make their implications explicit. Seaborn produces finely crafted data visualizations that are well suited to showcasing results to stakeholders. It is also easy to use, with adjustable themes, high-level interfaces, and more.

10. SciKit-Learn

Scikit-Learn has a huge variety of classification, regression and clustering methods built in. It covers conventional ML methods ranging from gradient boosting, support vector machines and random forests to simple baselines such as predicting the median. It was originally created by David Cournapeau. I hope you like the content and that it helps you learn the top 10 Python libraries to use in data science projects in 2022. If you like this content, do share.

Chroma and OpenCLIP Reinvent Image Search With Intel Max

OpenCLIP Image search

Building High-Performance Image Search with Intel Max GPUs, Chroma, and OpenCLIP

After reviewing the Intel Data Centre GPU Max 1100 and Intel Tiber AI Cloud, Intel Liftoff mentors and AI developers prepared a field guide for lean, high-throughput LLM pipelines.

All development, testing, and benchmarking in this study used the Intel Tiber AI Cloud.

Intel Tiber AI Cloud was intended to give developers and AI enterprises scalable and economical access to Intel’s cutting-edge AI technology. This includes the latest Intel Xeon Scalable CPUs, Data Centre GPU Max Series, and Gaudi 2 (and 3) accelerators. Startups creating compute-intensive AI models can deploy Intel Tiber AI Cloud in a performance-optimized environment without a large hardware investment.

AI startups are advised to contact Intel Liftoff for AI Startups to learn more about Intel Data Centre GPU Max, Intel Gaudi accelerators, and Intel Tiber AI Cloud's optimised environment, and to make use of resources, technology, and platforms like Intel Tiber AI Cloud.

AI-powered apps increasingly use text, audio, and image data. The article shows how to construct and query a multimodal database with text and images using Chroma and OpenCLIP embeddings.

These embeddings enable multimodal data comparison and retrieval. The project aims to build a GPU or XPU-accelerated system that can handle image data and query it using text-based search queries.
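
To illustrate how embeddings make a text query comparable with images, here is a toy sketch in plain Python. The three-dimensional vectors and file names are invented for illustration (real OpenCLIP embeddings have hundreds of dimensions), and cosine similarity is used as one common choice of measure; the metric a Chroma collection actually uses depends on how it is configured.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical image embeddings (made-up numbers, not real model output)
image_embeddings = {
    "black_car.jpg": [0.9, 0.1, 0.0],
    "red_bike.jpg": [0.1, 0.9, 0.1],
    "beach.jpg": [0.0, 0.2, 0.9],
}

# Stands in for the embedding of a text query such as "Black colour Benz"
query_embedding = [0.8, 0.2, 0.1]

# Rank images from most to least similar to the query
ranked = sorted(
    image_embeddings,
    key=lambda name: cosine_similarity(query_embedding, image_embeddings[name]),
    reverse=True,
)
print(ranked)
```

A vector database performs the same kind of ranking, but with indexing structures that avoid comparing the query against every stored vector.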

Advanced AI uses Intel Data Centre GPU Max 1100

The performance described in this study is attainable with powerful hardware like the Intel Data Centre GPU Max Series, in particular when combined with Intel Extension for PyTorch acceleration. The GPU (Max 1100) is available on dedicated instances and in the free Intel Tiber AI Cloud JupyterLab environment. Its main characteristics are:

The Xe-HPC Architecture:

Xe-cores: 56 specialised Xe-cores handle GPU compute operations.

Intel XMX engines: 448 engines with deep systolic arrays speed up dense matrix and vector operations in AI and deep learning models.

Vector engines: 448 vector engines complement the XMX units for larger parallel computing workloads.

Ray tracing: 56 hardware-accelerated ray tracing units improve visualisation.

Memory hierarchy

HBM2e: 48 GB of HBM2e delivers 1.23 TB/s of bandwidth, which is needed for complex models and large datasets like multimodal embeddings.

Cache: A 28 MB L1 and 108 MB L2 cache keeps data near the processing units to reduce latency.

Connectivity

PCIe Gen 5: A fast x16 host link transports data between the CPU and GPU.

OneAPI Software Ecosystem: The open, standards-based Intel oneAPI programming model integrates easily with Intel Data Centre GPU Max Series. HuggingFace Transformers, PyTorch, Intel Extension for PyTorch, and other frameworks that support Intel architecture allow developers to speed up AI pipelines without being locked into proprietary software.

This code’s purpose?

This code shows how to create a multimodal database using Chroma as the vector database for picture and text embeddings. It allows text queries to search the database for relevant photos or metadata. The code also shows how to utilise Intel Extension for PyTorch (IPEX) to accelerate calculations on Intel devices including CPUs and XPUs using Intel’s hardware acceleration.

This code’s main components:

OpenCLIP embeddings: The code embeds text and images using OpenCLIP, a CLIP-based approach, and stores them in a database for easy access. OpenCLIP was chosen for its solid benchmark performance and easily available pre-trained models.

Chroma Database: Chroma can create a persistent database of embeddings and swiftly return the results most similar to a text query. ChromaDB was chosen for its developer experience, Python-native API, and ease of setting up persistent multimodal collections.

XPU check: A function checks whether an XPU is available for hardware acceleration. High-performance applications benefit from Intel's hardware acceleration with IPEX, which speeds up embedding generation and data processing.
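
The article does not show the device check itself, so the following is only a sketch of what such a function could look like. The function name pick_device is made up here, and the code assumes a PyTorch build that exposes torch.xpu (natively or after intel_extension_for_pytorch has been imported); it falls back to the CPU when PyTorch or XPU support is missing.

```python
def pick_device():
    """Return "xpu" when an Intel XPU is usable through PyTorch, else "cpu".

    Hypothetical helper for illustration; not taken from the original code.
    """
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so only the CPU path is possible
        return "cpu"

    # torch.xpu is only present on builds with XPU support, hence getattr
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


device = pick_device()
print(f"Running on: {device}")
```

Tensors and models would then be moved with .to(device), so the same script runs with or without an accelerator.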

Application and Use Cases

This code can be used whenever:

Fast, scalable multimodal data storage: You may need to store and retrieve text, images, or both.

Image Search: Textual descriptions can help e-commerce platforms, image search engines, and recommendation systems query photographs. For instance, searching for “Black colour Benz” will show similar cars.

Cross-modal Retrieval: Finding similar images using text or vice versa, or retrieving images from text. This is common in caption-based photo search and visual question answering.

The recommendation system: Similarity-based searches can lead consumers to films, products, and other content that matches their query.

AI-based apps: Perfect for machine learning pipelines including training data, feature extraction, and multimodal model preparation.

Prerequisites:

torch for deep learning.

intel_extension_for_pytorch for optimised PyTorch performance on Intel hardware.

chromadb for creating and querying a persistent multimodal vector database, and matplotlib for image display.

The OpenCLIP embedding function and image loader from chromadb.utils for embedding extraction and image loading.

Github jupyterlab

#Github jupyterlab install

A JupyterLab extension for version control using Git.

To see the extension in action, open the example notebook included in the Binder demo.

Requirements: JupyterLab >= 3.0 (older version available for 2.x).

Usage: Open the Git extension from the Git tab on the left panel.

To install, perform the following steps, with pip: pip install --upgrade jupyterlab jupyterlab-git

Or with conda: conda install -c conda-forge jupyterlab jupyterlab-git

If Git errors appear on the console which is running the JupyterLab server, you probably need to set up a credentials store for your local Git repository.

This extension tries to handle credentials for HTTP(S) connections (if you have not set up a credential manager), but not for SSH connections.

For Windows users, it is recommended to install Git for Windows. It will automatically set up a credential manager. In order to connect to a remote host, it is recommended to use SSH.

The extension can temporarily cache credentials (by default for an hour). To use the caching, you will need to check the option "Save my login temporarily" in the dialog asking for your credentials. You can set a longer cache timeout; see Server Settings. This is a new feature since v0.37.0.

SSH protocol

Here are the steps to follow to set up SSH authentication (skip any that is already accomplished for your project):

Register the public part of your SSH key with your Git server.

Optionally, if you have more than one key managed by your ssh agent: create a config file for the ssh-agent.
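Since the extension treats HTTP(S) and SSH remotes differently, it can help to check which protocol a remote URL uses. The sketch below is a hypothetical helper, not part of jupyterlab-git; the function name `remote_protocol` and the example URLs are assumptions for illustration.

```python
from urllib.parse import urlparse


def remote_protocol(url: str) -> str:
    """Classify a Git remote URL as 'http', 'https', 'ssh', or 'other'."""
    parsed = urlparse(url)
    if parsed.scheme in ("http", "https", "ssh"):
        return parsed.scheme
    # scp-like syntax (user@host:path) has no scheme but is still SSH.
    if not parsed.scheme and "@" in url and ":" in url.split("@", 1)[1]:
        return "ssh"
    return "other"


for remote in (
    "https://example.com/team/project.git",
    "git@example.com:team/project.git",
    "/srv/git/project.git",
):
    print(remote, "->", remote_protocol(remote))
```

A remote classified as `ssh` needs the SSH key setup described above, while `http` and `https` remotes can rely on the extension's credential handling or a credential manager.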

0 notes

Text

TOP 10 PYTHON LIBRARIES TO USE IN DATA SCIENCE PROJECTS IN 2022

New Post has been published on https://www.codesolutionstuff.com/top-10-python-libraries-to-use-in-data-science-projects-in-2022/

Table of Contents

TensorFlow

NumPy

SciPy

Pandas

Matplotlib

Keras

Plotly

Statsmodels

Seaborn

SciKit-Learn

Python is a simple yet powerful object-oriented programming language that is widely used and free to install. It is open source and offers an easy-to-debug coding environment. Data scientists can find Python libraries for machine learning both on the internet and through distributions and tools such as Anaconda or JupyterLab.

1. TensorFlow

TensorFlow is a free, open-source library for deep learning applications, developed by Google. Originally designed for numerical computation, it now provides developers with a wide range of tools for building machine learning applications. Version 2.5.0, released by the Google Brain team in 2021, brought improvements in functionality and usability.

2. NumPy

NumPy, short for Numerical Python, was created in 2005 by Travis Oliphant. It is a powerful library for scientific and mathematical computing. NumPy provides linear algebra, Fourier transforms, and other mathematical functions for a vast range of calculations. It is mostly used in applications where speed and memory efficiency matter; in such cases, NumPy arrays can be up to about 50 times faster than Python lists. NumPy is the foundation for data science packages such as SciPy, Matplotlib, Pandas, Scikit-Learn and Statsmodels.

3. SciPy

SciPy is an open-source library for solving math, science, and engineering problems. It is built on NumPy, which makes it easy to bring array data from other sources into SciPy. SciPy provides modules for linear algebra, statistics, integration, and optimization. It can also be used for multidimensional image processing and Fourier transforms, as well as for integrating differential equations.

4. Pandas

Pandas is a powerful and versatile data manipulation tool created by Wes McKinney. It works efficiently across various data types and offers powerful data structures, along with useful functions for handling missing data and aligning data. Pandas is commonly used to manipulate labeled and relational data, and it offers quick, flexible ways of handling structured data.

5. Matplotlib

Matplotlib is a widely used library for data visualization in Python. It is used to make static, animated, and interactive graphics and charts. With plenty of customization options, it can suit the needs of many different projects. Matplotlib lets programmers create, customize, and modify plots such as scatter plots and histograms. For embedding plots in applications, the open-source library provides an object-oriented API.

6. Keras

Keras is an open-source, high-level interface to TensorFlow that has become popular in the past few years. It was originally created by François Chollet and first released in 2015. Keras is a Python library for building neural networks at a high level, and it ships with pre-labeled datasets and a variety of well-crafted tools. It is easy to use, which makes it well suited to exploratory research.

7. Plotly

Plotly is a web-based, interactive analytics and graphing library. It is one of the more advanced visualization libraries for machine learning, data science, and AI work, with features for producing publishable, engaging visualizations. Your data can be imported into charts and graphs with little effort, so you can build presentations and dashboards quickly. It also works with companion tools such as Dash and Chart Studio.

8. Statsmodels

If you’re looking for refined statistical analysis and need a robust library, Statsmodels is a fantastic choice. It builds on several libraries, including Matplotlib, Pandas, and Patsy. It is especially useful for developing statistical models. For instance, it can help you build OLS models or run any number of statistical tests on your data.
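To make the OLS idea concrete, here is a minimal sketch of what an ordinary least squares fit computes for a single predictor. It uses plain Python, not the Statsmodels API, and the function name `ols_fit` and the sample data are made up for illustration. Statsmodels adds standard errors, confidence intervals, and test statistics on top of this.

```python
def ols_fit(x, y):
    """Fit y = intercept + slope * x by ordinary least squares."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope is the covariance of x and y divided by the variance of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data lying exactly on the line y = 1 + 2x.
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

intercept, slope = ols_fit(x, y)
print(f"intercept={intercept:.2f}, slope={slope:.2f}")
```

With noisy real data the fitted line no longer passes through every point, and the diagnostics a statistics library reports become the interesting part.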

9. Seaborn

Seaborn is a useful plotting library built on Matplotlib, so it is easy to integrate into your existing data visualization work. One of Seaborn’s most important features is that it can process and display larger data sets in a more concise form. A model and its related trends may not be clear to audiences without experience, but Seaborn’s graphs make their implications explicit. Seaborn produces finely crafted data visualizations that are well suited to showcasing results to stakeholders. It is also easy to use, with adjustable templates, high-level interfaces, and more.

10. SciKit-Learn

Scikit-Learn has a huge variety of classification, regression, and clustering methods built in. It covers conventional ML techniques ranging from gradient boosting, support vector machines, and random forests down to the plain old median method. The project was originally started by David Cournapeau.

I hope you like the content and that it helps you learn about the top 10 Python libraries to use in data science projects in 2022. If you like this content, do share.

Python, PYTHON LIBRARIES, Top Programming

0 notes

Link

With the deprecation of classic notebook, and future notebook releases to be based on JupyterLab UI components, we’ll have to make a decision sometime soon about whether to pin the notebook version in the environment we release to students in September-start modules to <7, move to RetroLab/new no via Pocket

0 notes