#Kubernetes components

Demystifying Kubernetes Components - Understanding the Core of K8s

Kubernetes, often abbreviated as K8s, has taken the container orchestration world by storm. To harness the true power of K8s, one must have a solid grasp of its core components. In this comprehensive guide, we’ll embark on a journey to explore Kubernetes components, demystifying the intricacies of this powerful container orchestration platform. Unveiling Kubernetes Components Kubernetes…

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.
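The post does not say which mechanism scaled the services. Assuming it was the Kubernetes Horizontal Pod Autoscaler, the core of its documented rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below works through that arithmetic only; the pod counts and CPU figures are made up, and the real autoscaler also applies a tolerance band and min/max replica bounds that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count from the Horizontal Pod Autoscaler scaling formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


# Hypothetical numbers: 4 billing pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))  # prints 6
```

Because each service has its own replica count, a spike in billing traffic would scale only the billing pods, which is the per-service scaling the post describes.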

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
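The flow above can be sketched with in-memory stand-ins, purely to show how the stages hand data to one another. Nothing here calls an Azure SDK: the deque plays the role of Event Hubs, the dictionary plays the role of Cosmos DB, and every function and field name is hypothetical.

```python
from collections import deque
from statistics import mean

# In-memory stand-ins for the managed services (all names are hypothetical).
event_hub = deque()   # plays the role of Azure Event Hubs
store = {}            # plays the role of Azure Cosmos DB, keyed by device id


def ingest(device_id: str, temperature_c: float) -> None:
    """Data ingestion: a device pushes one reading onto the stream."""
    event_hub.append({"device_id": device_id, "temperature_c": temperature_c})


def process_pending() -> int:
    """Processing: drain the stream, as an event-triggered function would."""
    handled = 0
    while event_hub:
        event = event_hub.popleft()
        store.setdefault(event["device_id"], []).append(event["temperature_c"])
        handled += 1
    return handled


def average_temperature(device_id: str) -> float:
    """Application logic: the kind of read API a microservice might expose."""
    return mean(store[device_id])


ingest("sensor-1", 20.0)
ingest("sensor-1", 22.0)
ingest("sensor-2", 18.5)
print(process_pending())                 # prints 3
print(average_temperature("sensor-1"))   # prints 21.0
```

The point of the separation is that each stage only knows about the queue or the store next to it, so any one of them can be scaled or replaced without touching the others.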

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a Continuous Delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

More user-oriented documentation is available for additional features. Refer to the upgrade guide if you want to upgrade your Argo CD installation. Developer-oriented resources are available for those interested in building third-party integrations.

How it works

Argo CD defines the intended application state by employing Git repositories as the source of truth, in accordance with the GitOps pattern. There are various approaches to specify Kubernetes manifests:

Kustomize applications

Helm charts

Jsonnet files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and can sync the live state back to the desired target state either automatically or manually. Any changes made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
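The comparison described above can be illustrated with a small sketch. It is not Argo CD's code: resources are reduced to plain dictionaries keyed by name, the function names are hypothetical, and "syncing" is modeled as copying the target state, which glosses over how a real controller applies and prunes resources.

```python
def sync_status(target: dict, live: dict) -> tuple:
    """Compare desired resources (from Git) with live ones, keyed by resource name."""
    diff = {}
    for name in sorted(target.keys() | live.keys()):
        if name not in live:
            diff[name] = "missing from cluster"
        elif name not in target:
            diff[name] = "not defined in Git"
        elif target[name] != live[name]:
            diff[name] = "spec differs"
    return ("OutOfSync" if diff else "Synced"), diff


def sync(target: dict, live: dict) -> dict:
    """Bring the live state back to the target state (a plain copy here)."""
    return {name: dict(spec) for name, spec in target.items()}


target = {"web": {"replicas": 3}, "api": {"replicas": 2}}
live = {"web": {"replicas": 1}}

status, diff = sync_status(target, live)
print(status, diff)

live = sync(target, live)
print(sync_status(target, live)[0])  # prints Synced
```

The first call reports the application as out of sync along with a per-resource reason, which is the information a diff view would visualize; after the sync, the same comparison comes back clean.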

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Status reporting and application management

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters
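One way to picture the caching role is a lookup keyed by the inputs listed above. This is a hedged sketch, not how Argo CD is implemented: ManifestRequest, ManifestCache, and fake_render are hypothetical names, the repository URL is a placeholder, and rendering is replaced by a stub that returns a comment string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManifestRequest:
    """The inputs listed above; frozen so instances can serve as cache keys."""
    repo_url: str
    revision: str
    path: str
    helm_values: tuple = ()  # (key, value) pairs standing in for values.yaml


class ManifestCache:
    def __init__(self, render):
        self._render = render   # hypothetical callable that generates manifests
        self._cache = {}
        self.render_calls = 0

    def get(self, request: ManifestRequest) -> str:
        # Render only on a cache miss; identical requests reuse the result.
        if request not in self._cache:
            self.render_calls += 1
            self._cache[request] = self._render(request)
        return self._cache[request]


def fake_render(request: ManifestRequest) -> str:
    # Placeholder for cloning the repository and running the templating tool.
    return f"# manifests for {request.path} at {request.revision}"


cache = ManifestCache(fake_render)
req = ManifestRequest("https://example.com/org/repo.git", "main", "apps/web")
cache.get(req)
cache.get(req)
print(cache.render_calls)  # prints 1
```

Keying on a branch name such as main is only safe in a toy: branches move, so a real cache would need to resolve the revision to a commit before using it as a key.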

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). It detects the OutOfSync application state and optionally takes corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, PostSync).
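The ordering of the lifecycle hooks can be sketched as a simple phased loop. This is a simplification under stated assumptions: run_sync and the hook functions are hypothetical, hooks are plain Python callables rather than Kubernetes resources, and Sync-phase hooks are run right after the manifests are applied rather than alongside them.

```python
HOOK_ORDER = ("PreSync", "Sync", "PostSync")


def run_sync(hooks: dict, apply_manifests) -> list:
    """Run hooks phase by phase; an exception stops all later phases."""
    log = []
    for phase in HOOK_ORDER:
        if phase == "Sync":
            apply_manifests()
            log.append("apply")
        for hook in hooks.get(phase, []):
            hook()
            log.append(f"{phase}:{hook.__name__}")
    return log


def migrate_db():
    pass  # e.g. a schema migration that must finish before the rollout


def smoke_test():
    pass  # e.g. a health check that runs once the rollout is done


print(run_sync({"PreSync": [migrate_db], "PostSync": [smoke_test]}, lambda: None))
# prints ['PreSync:migrate_db', 'apply', 'PostSync:smoke_test']
```

Because a failing hook raises before the loop advances, a broken migration in PreSync means the manifests are never applied, which is the property that makes hooks useful for staged rollouts.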

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

Integration of SSO (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

RBAC and multi-tenancy authorization policies

Rollback/Roll-anywhere to any Git repository-committed application configuration

Analysis of the application resources’ health state

Automated visualization and detection of configuration drift

Applications can be synced manually or automatically to their desired state.

Web user interface that shows application activity in real time

CLI for CI integration and automation

Integration of webhooks (GitHub, BitBucket, GitLab)

Access tokens for automation

Hooks for PreSync, Sync, and PostSync to facilitate intricate application rollouts (such as canary and blue/green upgrades)

Application event and API call audit trails

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz
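The tarball name in the publish command is not arbitrary: the build step names the artifact after the namespace, name, and version fields in galaxy.yml. The helper below is a hypothetical illustration of that naming pattern, with the metadata written as a plain dictionary rather than parsed from the YAML file.

```python
def artifact_name(metadata: dict) -> str:
    """Build the <namespace>-<name>-<version>.tar.gz file name from galaxy.yml fields."""
    return "{namespace}-{name}-{version}.tar.gz".format(**metadata)


# Values matching the example collection used in this post.
galaxy_metadata = {
    "namespace": "my_namespace",
    "name": "my_collection",
    "version": "1.0.0",
}

print(artifact_name(galaxy_metadata))  # prints my_namespace-my_collection-1.0.0.tar.gz
```

In practice this means bumping the version in galaxy.yml changes the file you need to pass to the publish command.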

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

collections:
  - name: my_namespace.my_collection
    version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml

You can now use the content from the collection in your playbooks:

---
- name: Example Playbook
  hosts: localhost
  tasks:
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value
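The task key in the playbook is a fully qualified collection name: namespace, then collection, then the module or plugin. The sketch below splits such a name into its parts to make the structure explicit; parse_fqcn and the FQCN type are hypothetical helpers written for this post, not part of Ansible.

```python
from typing import NamedTuple


class FQCN(NamedTuple):
    namespace: str
    collection: str
    resource: str  # module or plugin name, possibly with a subdirectory prefix


def parse_fqcn(value: str) -> FQCN:
    """Split 'namespace.collection.resource' into its three parts."""
    parts = value.split(".")
    if len(parts) < 3 or not all(parts):
        raise ValueError(f"not a fully qualified collection name: {value!r}")
    # Anything after the second dot belongs to the resource name.
    return FQCN(parts[0], parts[1], ".".join(parts[2:]))


print(parse_fqcn("my_namespace.my_collection.my_module"))
```

Writing the full name in playbooks avoids ambiguity when two installed collections ship a module with the same short name.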

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing users, groups, and file systems.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws

Tumblr has been making a lot of controversial changes lately, and this post has some great points that inspired me to say more.

It seems like people are very split on whether our hellsite doing stupid anti-user stuff means that we need to show more support or show less support. In my opinion, it's a cry for help that means we need more support (especially monetary), and I'll explain why.

Tumblr is currently a financially sinking ship. It's costing more money in upkeep than it's making. Automattic, the company that owns it, is trying to make it profitable, because they're a business. It's what they do. In my opinion, they have much better intentions than the previous overlords. Matt Mullenweg, the CEO of Automattic, said (a bit indirectly) in a blog post that he wants to open source Tumblr. That was 12 August 2019.

At the time of writing this post (23 August 2023), they're doing a damn good job of it. Looking through the blog of Tumblr's engineering team, they've already open-sourced several of the site's components:

StreamBuilder (the thing that makes the dashboard)

Kanvas (media editor and camera)

Tumblr's custom Kubernetes system (this is what allows them to scale the site's software to a huge number of servers to handle all the traffic)

webpack-web-app-manifest-plugin (I have no idea what this one does, maybe some JavaScript developer can enlighten me)

and that's great! More importantly, it shows that they have good intentions. Making the site open source is a very pro-consumer thing to do, because it means they care about consumers having good services more than they care about being profitable. If they only cared about profit, they would avoid the risk of inadvertently assisting competitors with the open source effort.

My point here is that they are genuinely trying to balance keeping users happy with not having Tumblr die completely.

So at this point, their options are:

sit still and let the platform die

change stuff until the platform is profitable

and since doing #1 would be stupid, they're doing #2. Needless to say, they are not doing a great job of it, for many many reasons. The most direct thing we can do is give them money so that the platform becomes profitable, that way they're no longer being held hostage by their finances. However compelling user feedback may be, it's not more compelling than the company dying. So we save the site from dying financially, then we work on improving the other stuff.

Some people think that giving them money is endorsing what they're doing right now, like disproportionately applying the mature label to trans folks, and twitterifying the dashboard. I disagree.

Giving money to Tumblr is saying "I think you can do better with more resources, why don't you show me."

They clearly need more resources to moderate properly, and to figure out how their decisions are impacting users. In the first post I linked it talks about how running experiments on people's behavior (and getting meaningful results) is really hard. They clearly need more resources for that, so they can accurately quantify how shit their decisions are, and then make better ones. They can't do that if the site is fucking dead.

Tumblr can't get better if it's fucking dead.

so buy crabs, support the site, and have faith that it will improve eventually. If it doesn't, we can all jump ship to cohost or something, but I would prefer to stay here.

Level Up Your Software Development Skills: Join Our Unique DevOps Course

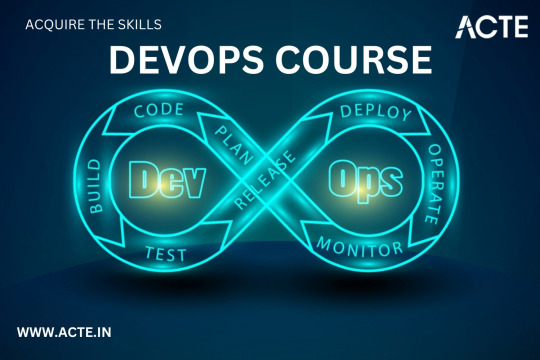

Would you like to increase your knowledge of software development? Look no further! Our unique DevOps course is the perfect opportunity to upgrade your skillset and pave the way for accelerated career growth in the tech industry. In this article, we will explore the key components of our course, reasons why you should choose it, the remarkable placement opportunities it offers, and the numerous benefits you can expect to gain from joining us.

Key Components of Our DevOps Course

Our DevOps course is meticulously designed to provide you with a comprehensive understanding of the DevOps methodology and equip you with the necessary tools and techniques to excel in the field. Here are the key components you can expect to delve into during the course:

1. Understanding DevOps Fundamentals

Learn the core principles and concepts of DevOps, including continuous integration, continuous delivery, infrastructure automation, and collaboration techniques. Gain insights into how DevOps practices can enhance software development efficiency and communication within cross-functional teams.

2. Mastering Cloud Computing Technologies

Immerse yourself in cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. Acquire hands-on experience in deploying applications, managing serverless architectures, and leveraging containerization technologies such as Docker and Kubernetes for scalable and efficient deployment.

3. Automating Infrastructure as Code

Discover the power of infrastructure automation through tools like Ansible, Terraform, and Puppet. Automate the provisioning, configuration, and management of infrastructure resources, enabling rapid scalability, agility, and error-free deployments.

4. Monitoring and Performance Optimization

Explore various monitoring and observability tools, including Elasticsearch, Grafana, and Prometheus, to ensure your applications are running smoothly and performing optimally. Learn how to diagnose and resolve performance bottlenecks, conduct efficient log analysis, and implement effective alerting mechanisms.

5. Embracing Continuous Integration and Delivery

Dive into the world of continuous integration and delivery (CI/CD) pipelines using popular tools like Jenkins, GitLab CI/CD, and CircleCI. Gain a deep understanding of how to automate build processes, run tests, and deploy applications seamlessly to achieve faster and more reliable software releases.

Reasons to Choose Our DevOps Course

There are numerous reasons why our DevOps course stands out from the rest. Here are some compelling factors that make it the ideal choice for aspiring software developers:

Expert Instructors: Learn from industry professionals who possess extensive experience in the field of DevOps and have a genuine passion for teaching. Benefit from their wealth of knowledge and practical insights gained from working on real-world projects.

Hands-On Approach: Our course emphasizes hands-on learning to ensure you develop the practical skills necessary to thrive in a DevOps environment. Through immersive lab sessions, you will have opportunities to apply the concepts learned and gain valuable experience working with industry-standard tools and technologies.

Tailored Curriculum: We understand that every learner is unique, so our curriculum is strategically designed to cater to individuals of varying proficiency levels. Whether you are a beginner or an experienced professional, our course will be tailored to suit your needs and help you achieve your desired goals.

Industry-Relevant Projects: Gain practical exposure to real-world scenarios by working on industry-relevant projects. Apply your newly acquired skills to solve complex problems and build innovative solutions that mirror the challenges faced by DevOps practitioners in the industry today.

Benefits of Joining Our DevOps Course

By joining our DevOps course, you open up a world of benefits that will enhance your software development career. Here are some notable advantages you can expect to gain:

Enhanced Employability: Acquire sought-after skills that are in high demand in the software development industry. Stand out from the crowd and increase your employability prospects by showcasing your proficiency in DevOps methodologies and tools.

Higher Earning Potential: With the rise of DevOps practices, organizations are willing to offer competitive remuneration packages to skilled professionals. By mastering DevOps through our course, you can significantly increase your earning potential in the tech industry.

Streamlined Software Development Processes: Gain the ability to streamline software development workflows by effectively integrating development and operations. With DevOps expertise, you will be capable of accelerating software deployment, reducing errors, and improving the overall efficiency of the development lifecycle.

Continuous Learning and Growth: DevOps is a rapidly evolving field, and by joining our course, you become a part of a community committed to continuous learning and growth. Stay updated with the latest industry trends, technologies, and best practices to ensure your skills remain relevant in an ever-changing tech landscape.

In conclusion, our unique DevOps course at ACTE institute offers unparalleled opportunities for software developers to level up their skills and propel their careers forward. With a comprehensive curriculum, remarkable placement opportunities, and a host of benefits, joining our course is undoubtedly a wise investment in your future success. Don't miss out on this incredible chance to become a proficient DevOps practitioner and unlock new horizons in the world of software development. Enroll today and embark on an exciting journey towards professional growth and achievement!

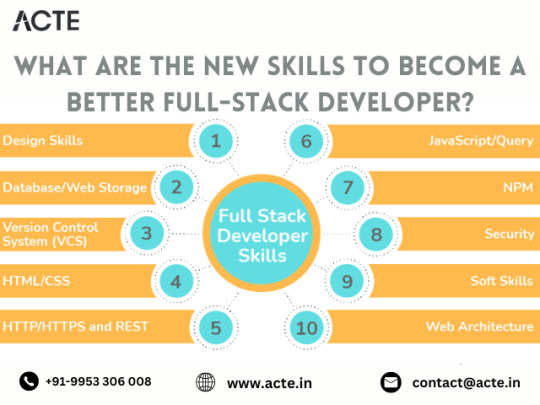

Elevating Your Full-Stack Developer Expertise: Exploring Emerging Skills and Technologies

Introduction: In the dynamic landscape of web development, staying at the forefront requires continuous learning and adaptation. Full-stack developers play a pivotal role in crafting modern web applications, balancing frontend finesse with backend robustness. This guide delves into the evolving skills and technologies that can propel full-stack developers to new heights of expertise and innovation.

Pioneering Progress: Key Skills for Full-Stack Developers

1. Innovating with Microservices Architecture:

Microservices have redefined application development, offering scalability and flexibility in the face of complexity. Mastery of tools like Docker and Kubernetes empowers developers to package, deploy, and manage microservices efficiently. By breaking down monolithic applications into modular components, developers can iterate rapidly and respond to changing requirements with agility.

2. Embracing Serverless Computing:

The advent of serverless architecture has revolutionized infrastructure management, freeing developers from the burdens of server maintenance. Platforms such as AWS Lambda and Azure Functions enable developers to focus solely on code development, driving efficiency and cost-effectiveness. Embrace serverless computing to build scalable, event-driven applications that adapt seamlessly to fluctuating workloads.

3. Crafting Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) herald a new era of web development, delivering native app-like experiences within the browser. Harness the power of technologies like Service Workers and Web App Manifests to create PWAs that are fast, reliable, and engaging. With features like offline functionality and push notifications, PWAs blur the lines between web and mobile, captivating users and enhancing engagement.

4. Harnessing GraphQL for Flexible Data Management:

GraphQL has emerged as a versatile alternative to RESTful APIs, offering a unified interface for data fetching and manipulation. Dive into GraphQL's intuitive query language and schema-driven approach to simplify data interactions and optimize performance. With GraphQL, developers can fetch precisely the data they need, minimizing overhead and maximizing efficiency.
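The idea of fetching only the requested fields can be shown without any GraphQL library. The sketch below is not a GraphQL implementation (there is no query parser, schema, or resolver); it only mimics the shape of a selection set with nested dictionaries, and the select function and sample record are hypothetical.

```python
def select(record: dict, selection: dict) -> dict:
    """Return only the requested fields; a non-empty value selects sub-fields."""
    result = {}
    for field, sub_selection in selection.items():
        value = record[field]
        result[field] = select(value, sub_selection) if sub_selection else value
    return result


user = {
    "id": 7,
    "name": "Ada",
    "email": "ada@example.com",
    "address": {"city": "London", "zip": "N1"},
}

# Roughly the shape of the query: { name address { city } }
print(select(user, {"name": {}, "address": {"city": {}}}))
# prints {'name': 'Ada', 'address': {'city': 'London'}}
```

The response mirrors the shape of the request and leaves out id, email, and zip, which is where the reduced payload comes from.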

5. Unlocking Potential with Jamstack Development:

Jamstack architecture empowers developers to build fast, secure, and scalable web applications using modern tools and practices. Explore frameworks like Gatsby and Next.js to leverage pre-rendering, serverless functions, and CDN caching. By decoupling frontend presentation from backend logic, Jamstack enables developers to deliver blazing-fast experiences that delight users and drive engagement.

6. Integrating Headless CMS for Content Flexibility:

Headless CMS platforms offer developers unprecedented control over content management, enabling seamless integration with frontend frameworks. Explore platforms like Contentful and Strapi to decouple content creation from presentation, facilitating dynamic and personalized experiences across channels. With headless CMS, developers can iterate quickly and deliver content-driven applications with ease.

7. Optimizing Single Page Applications (SPAs) for Performance:

Single Page Applications (SPAs) provide immersive user experiences but require careful optimization to ensure performance and responsiveness. Implement techniques like lazy loading and server-side rendering to minimize load times and enhance interactivity. By optimizing resource delivery and prioritizing critical content, developers can create SPAs that deliver a seamless and engaging user experience.

8. Infusing Intelligence with Machine Learning and AI:

Machine learning and artificial intelligence open new frontiers for full-stack developers, enabling intelligent features and personalized experiences. Dive into frameworks like TensorFlow.js and PyTorch to build recommendation systems, predictive analytics, and natural language processing capabilities. By harnessing the power of machine learning, developers can create smarter, more adaptive applications that anticipate user needs and preferences.

9. Safeguarding Applications with Cybersecurity Best Practices:

As cyber threats continue to evolve, cybersecurity remains a critical concern for developers and organizations alike. Stay informed about common vulnerabilities and adhere to best practices for securing applications and user data. By implementing robust security measures and proactive monitoring, developers can protect against potential threats and safeguard the integrity of their applications.

10. Streamlining Development with CI/CD Pipelines:

Continuous Integration and Deployment (CI/CD) pipelines are essential for accelerating development workflows and ensuring code quality and reliability. Explore tools like Jenkins, CircleCI, and GitLab CI/CD to automate testing, integration, and deployment processes. By embracing CI/CD best practices, developers can deliver updates and features with confidence, driving innovation and agility in their development cycles.

#full stack developer#education#information#full stack web development#front end development#web development#frameworks#technology#backend#full stack developer course


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


How a Web Development Company Builds Scalable SaaS Platforms

Building a SaaS (Software as a Service) platform isn't just about writing code—it’s about designing a product that can grow with your business, serve thousands of users reliably, and continuously evolve based on market needs. Whether you're launching a CRM, learning management system, or a niche productivity tool, scalability must be part of the plan from day one.

That’s why a professional Web Development Company brings more than just technical skills to the table. They understand the architectural, design, and business logic decisions required to ensure your SaaS product is not just functional—but scalable, secure, and future-proof.

1. Laying a Solid Architectural Foundation

The first step in building a scalable SaaS product is choosing the right architecture. Most development agencies follow a modular, service-oriented approach that separates different components of the application—user management, billing, dashboards, APIs, etc.—into layers or even microservices.

This ensures:

Features can be developed and deployed independently

The system can scale horizontally (adding more servers) or vertically (upgrading resources)

Future updates or integrations won’t require rebuilding the entire platform

Development teams often choose cloud-native architectures built on platforms like AWS, Azure, or GCP for their scalability and reliability.

2. Selecting the Right Tech Stack

Choosing the right technology stack is critical. The tech must support performance under heavy loads and allow for easy development as your team grows.

Popular stacks for SaaS platforms include:

Frontend: React.js, Vue.js, or Angular

Backend: Node.js, Django, Ruby on Rails, or Laravel

Databases: PostgreSQL or MongoDB for flexibility and performance

Infrastructure: Docker, Kubernetes, CI/CD pipelines for automation

A skilled agency doesn’t just pick trendy tools—they choose frameworks aligned with your app’s use case, team skills, and scaling needs.

3. Multi-Tenancy Setup

One of the biggest differentiators in SaaS development is whether the platform is multi-tenant—where one codebase and database serve multiple customers with logical separation.

A web development company configures multi-tenancy using:

Separate schemas per tenant (isolated but efficient)

Shared databases with tenant identifiers (cost-effective)

Isolated instances for enterprise clients (maximum security)

This architecture supports onboarding multiple customers without duplicating infrastructure—making it cost-efficient and easy to manage.
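As an illustration of the "shared database with tenant identifiers" option, here is a minimal sketch using Python's built-in sqlite3 module. The invoices table, the tenant names, and the invoices_for helper are assumptions made for the example; a production system would typically enforce the tenant filter in one central place rather than in every query.

```python
import sqlite3

# One shared table; every row carries the tenant it belongs to.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE invoices ("
    "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, amount REAL NOT NULL)"
)
conn.executemany(
    "INSERT INTO invoices (tenant_id, amount) VALUES (?, ?)",
    [("acme", 100.0), ("acme", 250.0), ("globex", 75.0)],
)


def invoices_for(tenant_id: str) -> list[tuple]:
    """Return (id, amount) rows for one tenant only; the filter is the isolation boundary."""
    cursor = conn.execute(
        "SELECT id, amount FROM invoices WHERE tenant_id = ? ORDER BY id",
        (tenant_id,),
    )
    return cursor.fetchall()
```

Every read goes through a function that requires a tenant identifier, so one customer's query cannot return another customer's rows.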

4. Building Secure, Scalable User Management

SaaS platforms must support a range of users—admins, team members, clients—with different permissions. That’s why role-based access control (RBAC) is built into the system from the start.

Key features include:

Secure user registration and login (OAuth2, SSO, MFA)

Dynamic role creation and permission assignment

Audit logs and activity tracking

This layer is integrated with identity providers and third-party auth services to meet enterprise security expectations.
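A role-based access check can be sketched in a few lines of Python. The role names, permission strings, and helper functions below are hypothetical; a real platform would store them in a database and tie them to the identity provider mentioned above.

```python
# Hypothetical roles and permissions, kept in memory for the sketch.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "admin": {"view_dashboard", "manage_users", "manage_billing"},
    "member": {"view_dashboard"},
}


def create_role(role: str) -> None:
    """Add a new role with no permissions (dynamic role creation)."""
    ROLE_PERMISSIONS.setdefault(role, set())


def grant(role: str, permission: str) -> None:
    """Assign a permission to a role, creating the role if needed."""
    ROLE_PERMISSIONS.setdefault(role, set()).add(permission)


def can(role: str, permission: str) -> bool:
    """Check a permission; unknown roles are denied by default."""
    return permission in ROLE_PERMISSIONS.get(role, set())


create_role("client")
grant("client", "view_dashboard")
```

Denying unknown roles by default keeps a typo in a role name from accidentally granting access.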

5. Ensuring Seamless Billing and Subscription Management

Monetization is central to SaaS success. Development companies build subscription logic that supports:

Monthly and annual billing cycles

Tiered or usage-based pricing models

Free trials and discounts

Integration with Stripe, Razorpay, or other payment gateways

They also ensure compliance with global standards (like PCI DSS for payment security and GDPR for user data privacy), especially if you're targeting international customers.
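To show how tiered, usage-based pricing works arithmetically, here is a small sketch. The tier sizes and prices are invented for the example, and in practice a payment gateway's own billing features would usually do this calculation; Decimal is used so that currency amounts are not subject to floating-point rounding.

```python
from decimal import Decimal

# Hypothetical tiers: (units in tier, price per unit). None means "no upper limit".
TIERS = [
    (1000, Decimal("0.00")),    # first 1,000 units are free
    (9000, Decimal("0.01")),    # next 9,000 units
    (None, Decimal("0.005")),   # everything beyond 10,000 units
]


def usage_charge(units: int) -> Decimal:
    """Charge each slice of usage at the price of the tier it falls into."""
    remaining = units
    total = Decimal("0")
    for size, price in TIERS:
        if remaining <= 0:
            break
        billable = remaining if size is None else min(remaining, size)
        total += billable * price
        remaining -= billable
    return total
```

For 20,000 units, the first 1,000 cost nothing, the next 9,000 cost 90, and the last 10,000 cost 50, for a total of 140.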

6. Performance Optimization from Day One

Scalability means staying fast even as traffic and data grow. Web developers implement:

Caching systems (like Redis or Memcached)

Load balancers and auto-scaling policies

Asynchronous task queues (e.g., Celery, backed by a message broker such as RabbitMQ)

CDN integration for static asset delivery

Combined with code profiling and database indexing, these enhancements ensure your SaaS stays performant no matter how many users are active.
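The caching idea can be sketched with a small in-process cache that expires entries after a time-to-live. This is only a stand-in for a shared cache such as Redis or Memcached; the TTLCache class and its method names are assumptions for illustration, not an API from either system.

```python
import time


class TTLCache:
    """Cache values for a fixed number of seconds, recomputing after expiry."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}     # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive call
        value = compute()    # cache miss or expired: do the work once
        self._store[key] = (now + self._ttl, value)
        return value
```

A caller would wrap a slow database query or API call in get_or_compute, so repeated requests within the TTL are served from memory.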

7. Continuous Deployment and Monitoring

SaaS products evolve quickly—new features, fixes, improvements. That’s why agencies set up:

CI/CD pipelines for automated testing and deployment

Error tracking tools like Sentry or Rollbar

Performance monitoring with tools like Datadog or New Relic

Log management for incident response and debugging

This allows for rapid iteration and minimal downtime, which are critical in SaaS environments.

8. Preparing for Scale from a Product Perspective

Scalability isn’t just technical—it’s also about UX and support. A good development company collaborates on:

Intuitive onboarding flows

Scalable navigation and UI design systems

Help center and chatbot integrations

Data export and reporting features for growing teams

These elements allow users to self-serve as the platform scales, reducing support load and improving retention.

Conclusion

SaaS platforms are complex ecosystems that require planning, flexibility, and technical excellence. From architecture and authentication to billing and performance, every layer must be built with growth in mind. That’s why startups and enterprises alike trust a Web Development Company to help them design and launch SaaS solutions that can handle scale—without sacrificing speed or security.

Whether you're building your first SaaS MVP or upgrading an existing product, the right development partner can transform your vision into a resilient, scalable reality.


CNAPP Explained: The Smartest Way to Secure Cloud-Native Apps with EDSPL

Introduction: The New Era of Cloud-Native Apps

Cloud-native applications are rewriting the rules of how we build, scale, and secure digital products. Designed for agility and rapid innovation, these apps demand security strategies that are just as fast and flexible. That’s where CNAPP—Cloud-Native Application Protection Platform—comes in.

But simply deploying CNAPP isn’t enough.

You need the right strategy, the right partner, and the right security intelligence. That’s where EDSPL shines.

What is CNAPP? (And Why Your Business Needs It)

CNAPP stands for Cloud-Native Application Protection Platform, a unified framework that protects cloud-native apps throughout their lifecycle—from development to production and beyond.

Instead of relying on fragmented tools, CNAPP combines multiple security services into a cohesive solution:

Cloud Security

Vulnerability management

Identity access control

Runtime protection

DevSecOps enablement

In short, it covers the full spectrum—from your code to your container, from your workload to your network security.

Why Traditional Security Isn’t Enough Anymore

The old way of securing applications with perimeter-based tools and manual checks doesn’t work for cloud-native environments. Here’s why:

Infrastructure is dynamic (containers, microservices, serverless)

Deployments are continuous

Apps run across multiple platforms

You need security that is cloud-aware, automated, and context-rich—all things that CNAPP and EDSPL’s services deliver together.

Core Components of CNAPP

Let’s break down the core capabilities of CNAPP and how EDSPL customizes them for your business:

1. Cloud Security Posture Management (CSPM)

Checks your cloud infrastructure for misconfigurations and compliance gaps.

See how EDSPL handles cloud security with automated policy enforcement and real-time visibility.

2. Cloud Workload Protection Platform (CWPP)

Protects virtual machines, containers, and functions from attacks.

This includes deep integration with application security layers to scan, detect, and fix risks before deployment.

3. Cloud Infrastructure Entitlement Management (CIEM)

Monitors access rights and roles across multi-cloud environments.

Your network, routing, and storage environments are covered with strict permission models.

4. DevSecOps Integration

CNAPP shifts security left—early into the DevOps cycle. EDSPL’s managed services ensure security tools are embedded directly into your CI/CD pipelines.

5. Kubernetes and Container Security

Containers need runtime defense. Our approach ensures zero-day protection within compute environments and dynamic clusters.

How EDSPL Tailors CNAPP for Real-World Environments

Every organization’s tech stack is unique. That’s why EDSPL never takes a one-size-fits-all approach. We customize CNAPP for your:

Cloud provider setup

Mobility strategy

Data center switching

Backup architecture

Storage preferences

This ensures your entire digital ecosystem is secure, streamlined, and scalable.

Case Study: CNAPP in Action with EDSPL

The Challenge

A fintech company using a hybrid cloud setup faced:

Misconfigured services

Shadow admin accounts

Poor visibility across Kubernetes

EDSPL’s Solution

Integrated CNAPP with CIEM + CSPM

Hardened their routing infrastructure

Applied real-time runtime policies at the node level

✅ The Results

75% drop in vulnerabilities

Improved time to resolution by 4x

Full compliance with ISO, SOC2, and GDPR

Why EDSPL’s CNAPP Stands Out

While most providers stop at integration, EDSPL goes beyond:

🔹 End-to-End Security: From app code to switching hardware, every layer is secured.

🔹 Proactive Threat Detection: Real-time alerts and behavior analytics.

🔹 Customizable Dashboards: Unified views tailored to your team.

🔹 24x7 SOC Support: With expert incident response.

🔹 Future-Proofing: Our background vision keeps you ready for what’s next.

EDSPL’s Broader Capabilities: CNAPP and Beyond

While CNAPP is essential, your digital ecosystem needs full-stack protection. EDSPL offers:

Network security

Application security

Switching and routing solutions

Storage and backup services

Mobility and remote access optimization

Managed and maintenance services for 24x7 support

Whether you’re building apps, protecting data, or scaling globally, we help you do it securely.

Let’s Talk CNAPP

You’ve read the what, why, and how of CNAPP — now it’s time to act.

📩 Reach us for a free CNAPP consultation.

📞 Or get in touch with our cloud security specialists now.

Secure your cloud-native future with EDSPL — because prevention is always smarter than cure.


Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨‍🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies, your trusted Red Hat Certified Training Partner. For more details, visit www.hawkstack.com.


Why DevOps and Microservices Are a Perfect Match for Modern Software Delivery

Businesses today are adopting scalable, agile approaches to software development. Two of the most transformative, DevOps and microservices, have gained substantial momentum. Each has advantages on its own, but their full potential shows when they are used together. DevOps brings automation and collaboration, while microservices break complex monolithic apps into manageable services. Together they form a powerful combination that allows faster releases, higher quality, and more scalable systems.

Here's why DevOps and microservices are ideal for modern software delivery:

1. Independent Deployments Align Perfectly with Continuous Delivery

One of the best features of microservices is that each service can be built, tested, and deployed separately. This decoupling allows businesses to release features or changes without building or testing the complete program. DevOps, which focuses on continuous integration and delivery (CI/CD), thrives in this environment. Individual microservices can be fitted into CI/CD pipelines to enable more frequent and dependable deployments. The result is faster innovation cycles and reduced risk, as smaller changes are easier to manage and roll back if needed.

2. Team Autonomy Enhances Ownership and Accountability

Microservices encourage small, cross-functional teams to take ownership of specialized services from start to finish. This is consistent with the DevOps principle of breaking down the division between development and operations. Teams that receive experienced DevOps consulting services are better equipped to handle the full lifecycle, from development and testing to deployment and monitoring, by implementing best practices and automation tools.

3. Scalability Is Easier to Manage with Automation

Scaling a monolithic application often entails scaling the entire thing, even if only a portion is under demand. Microservices address this by enabling each service to scale independently based on demand. DevOps approaches like infrastructure-as-code (IaC), containerization, and orchestration technologies like Kubernetes make scaling strategies easier to automate. Whether scaling up a payment module during the holiday season or shutting down less-used services overnight, DevOps automation complements microservices by ensuring systems scale efficiently and cost-effectively.
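For a sense of how this kind of automated scaling decides on a replica count, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule in plain Python. The function name and the minimum and maximum bounds are illustrative assumptions, and the real autoscaler also applies a tolerance and stabilization behavior that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (assumed values for this sketch).
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas averaging 90% CPU against a 60% target, the rule asks for 6 replicas; at 30% it asks for 2, which is how a payment service could grow for a holiday peak and shrink again afterwards.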

4. Fault Isolation and Faster Recovery with Monitoring

DevOps encourages proactive monitoring, alerting, and issue response, which are critical to the success of distributed microservices systems. Because microservices isolate failures inside specific components, they limit the potential impact of a crash or performance issue. DevOps tools monitor service health, collect logs, and evaluate performance data. This visibility allows for faster detection and resolution of issues, resulting in less downtime and a better user experience.

5. Shorter Development Cycles with Parallel Workflows

Microservices allow teams to work on multiple components in parallel without waiting for each other. Microservices development services help enterprises in structuring their applications to support loosely connected services. When combined with DevOps, which promotes CI/CD automation and streamlined approvals, teams can implement code changes more quickly and frequently. Parallelism greatly reduces development cycles and enhances response to market demands.

6. Better Fit for Cloud-Native and Containerized Environments

Modern software delivery is becoming more cloud-native, and both microservices and DevOps support this trend. Microservices are typically deployed in containers, which are lightweight, portable, and isolated, and DevOps tooling automates their deployment, scaling, and upgrades. This compatibility supports smooth delivery pipelines, consistent environments from development to production, and straightforward rollbacks when required.

7. Streamlined Testing and Quality Assurance

Microservices allow for more modular testing. Each service may be unit-tested, integration-tested, and load-tested separately, increasing test accuracy and speed. DevOps incorporates test automation into the CI/CD pipeline, guaranteeing that every code push is validated without manual intervention. This collaboration results in greater software quality, faster problem identification, and reduced stress during deployments, especially in large, dynamic systems.

8. Security and Compliance Become More Manageable

Security can be applied more precisely in a microservices architecture because services are isolated and each can be governed by service-level access controls. DevOps extends into DevSecOps, which integrates security checks into the CI/CD pipeline so that security scans, compliance checks, and vulnerability assessments run early and often. Together, microservices and DevOps help enterprises adopt a shift-left security approach, making systems easier to secure without slowing development.

9. Continuous Improvement with Feedback Loops

DevOps and microservices work best with feedback. DevOps stresses real-time monitoring and feedback loops to continuously improve systems. Microservices make it easy to assess the performance of individual services, find inefficiencies, and improve them. When these feedback loops are integrated into the CI/CD process, teams can act quickly on insights, improving performance, reliability, and user satisfaction.

Conclusion

DevOps and microservices are not only compatible but also complementary forces that drive the next generation of software delivery. While microservices simplify complexity, DevOps guarantees that those units are efficiently produced, tested, deployed, and monitored. The combination enables teams to develop high-quality software at scale, quickly and confidently. Adopting DevOps and microservices is helpful and necessary for enterprises seeking to remain competitive and agile in a rapidly changing market.

#devops#microservices#software#services#solutions#business#microservices development#devops services#devops consulting services


Legacy Software Modernization Services In India – NRS Infoways

In today’s hyper‑competitive digital landscape, clinging to outdated systems is no longer an option. Legacy applications can slow innovation, inflate maintenance costs, and expose your organization to security vulnerabilities. NRS Infoways bridges the gap between yesterday’s technology and tomorrow’s possibilities with comprehensive Software Modernization Services In India that revitalize your core systems without disrupting day‑to‑day operations.

Why Modernize?

Boost Performance & Scalability

Legacy architectures often struggle under modern workloads. By re‑architecting or migrating to cloud‑native frameworks, NRS Infoways unlocks the flexibility you need to scale on demand and handle unpredictable traffic spikes with ease.

Reduce Technical Debt

Old codebases are costly to maintain. Our experts refactor critical components, streamline dependencies, and implement automated testing pipelines, dramatically lowering long‑term maintenance expenses.

Strengthen Security & Compliance

Obsolete software frequently harbors unpatched vulnerabilities. We embed industry‑standard security protocols and data‑privacy controls to safeguard sensitive information and keep you compliant with evolving regulations.

Enhance User Experience

Customers expect snappy, intuitive interfaces. We upgrade clunky GUIs into sleek, responsive designs—whether for web, mobile, or enterprise portals—boosting user satisfaction and retention.

Our Proven Modernization Methodology

1. Deep‑Dive Assessment

We begin with an exhaustive audit of your existing environment: code quality, infrastructure, DevOps maturity, integration points, and business objectives. The resulting roadmap pinpoints pain points, ranks priorities, and plots the most efficient modernization path.

2. Strategic Planning & Architecture

Armed with data, we design a future‑proof architecture. Whether it’s containerization with Docker/Kubernetes, serverless microservices, or hybrid-cloud setups, each blueprint aligns performance goals with budget realities.

3. Incremental Refactoring & Re‑engineering

To mitigate risk, we adopt a phased approach. Modules are refactored or rewritten in modern languages—often leveraging Java Spring Boot, .NET Core, or Node.js—while maintaining functional parity. Continuous integration pipelines ensure rapid, reliable deployments.
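One common way to check functional parity during a phased rewrite is to run the old and new implementations against the same inputs and compare results. The sketch below shows the idea in Python; both shipping-cost functions and the pricing rule are invented for illustration and do not describe any actual client system.

```python
def legacy_shipping_cost(weight_kg: int) -> int:
    """Stand-in for the old implementation: flat fee plus a surcharge above 5 kg."""
    cost = 50
    if weight_kg > 5:
        cost = cost + (weight_kg - 5) * 10
    return cost


def modern_shipping_cost(weight_kg: int) -> int:
    """Stand-in for the rewritten module, expected to behave identically."""
    return 50 + max(0, weight_kg - 5) * 10


def parity_mismatches(old, new, inputs) -> list:
    """Return every input for which the two implementations disagree."""
    return [value for value in inputs if old(value) != new(value)]


# An empty list means the rewrite matched the legacy behavior on these inputs.
mismatches = parity_mismatches(legacy_shipping_cost, modern_shipping_cost, range(0, 51))
```

A check like this can run in the continuous integration pipeline so that each refactored module is compared with its predecessor before it replaces it.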

4. Data Migration & Integration

Smooth, loss‑less data transfer is critical. Our team employs advanced ETL processes and secure APIs to migrate databases, synchronize records, and maintain interoperability with existing third‑party solutions.
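A minimal extract-transform-load pass might look like the following sketch, which uses Python's built-in sqlite3 module with two in-memory databases standing in for the legacy and target systems. The customers table, the sample rows, and the cleanup rules are assumptions for illustration; a real migration would add batching, logging, and richer reconciliation checks.

```python
import sqlite3

# Stand-in for the legacy database, with untidy data.
legacy = sqlite3.connect(":memory:")
legacy.execute("CREATE TABLE customers (id INTEGER, full_name TEXT, email TEXT)")
legacy.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "  Asha Rao ", "ASHA@EXAMPLE.COM"), (2, "Vikram Shah", "vikram@example.com")],
)

# Stand-in for the modernized database, with stricter constraints.
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE customers ("
    "id INTEGER PRIMARY KEY, full_name TEXT NOT NULL, email TEXT NOT NULL UNIQUE)"
)


def migrate(source: sqlite3.Connection, dest: sqlite3.Connection) -> int:
    """Extract rows, normalize them, load them in one transaction, then verify counts."""
    rows = source.execute("SELECT id, full_name, email FROM customers ORDER BY id").fetchall()
    cleaned = [(row_id, name.strip(), email.strip().lower()) for row_id, name, email in rows]
    with dest:  # commits on success, rolls back if an insert fails
        dest.executemany("INSERT INTO customers VALUES (?, ?, ?)", cleaned)
    source_count = source.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    dest_count = dest.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    if source_count != dest_count:
        raise RuntimeError("row counts differ after migration")
    return dest_count


migrated = migrate(legacy, target)
```

Loading inside a single transaction and comparing row counts afterwards is a simple guard against a partial or lossy transfer.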

5. Rigorous Quality Assurance

Automated unit, integration, and performance tests catch issues early. Penetration testing and vulnerability scans validate that the revamped system meets stringent security and compliance benchmarks.

6. Go‑Live & Continuous Support

Once production‑ready, we orchestrate a seamless rollout with minimal downtime. Post‑deployment, NRS Infoways provides 24 × 7 monitoring, performance tuning, and incremental enhancements so your modernized platform evolves alongside your business.

Key Differentiators

Domain Expertise: Two decades of transforming systems across finance, healthcare, retail, and logistics.

Certified Talent: AWS, Azure, and Google Cloud‑certified architects ensure best‑in‑class cloud adoption.

DevSecOps Culture: Security baked into every phase, backed by automated vulnerability management.

Agile Engagement Models: Fixed‑scope, time‑and‑material, or dedicated team options adapt to your budget and timeline.

Result‑Driven KPIs: We measure success via reduced TCO, improved response times, and tangible ROI, not just code delivery.

Success Story Snapshot

A leading Indian logistics firm grappled with a decade-old monolith that hindered real-time shipment tracking. NRS Infoways migrated the application to a microservices architecture on Azure, consolidating disparate data silos and introducing RESTful APIs for third-party integrations. The results? A 40% reduction in server costs, 60% faster release cycles, and a 25% uptick in customer satisfaction scores within six months.

Future‑Proof Your Business Today

Legacy doesn’t have to mean liability. With NRS Infoways’ Legacy Software Modernization Services In India, you gain a robust, scalable, and secure foundation ready to tackle tomorrow’s challenges—whether that’s AI integration, advanced analytics, or global expansion.

Ready to transform?

Contact us for a free modernization assessment and discover how our Software Modernization Services In India can accelerate your digital journey, boost operational efficiency, and drive sustainable growth.


Full Stack Development Trends in 2025: What to Expect

In the rapidly evolving tech landscape, full stack development continues to be a crucial area for innovation and career growth. As we step into 2025, the demand for skilled professionals who can handle both front-end and back-end technologies is only expected to surge. From artificial intelligence integration to serverless architectures, this field is experiencing some major transformations.

Whether you're a student, a working professional, or someone planning to switch careers, understanding these full stack development trends is essential. And if you're planning to learn full stack development in Pune, one of India’s tech hubs, staying updated with these trends will give you a competitive edge.

Why Full Stack Development Matters More Than Ever

Modern businesses seek agility and efficiency in software development. Full stack developers can handle various layers of a web or app project—from UI/UX to database management and server logic. This ability to operate across multiple domains makes full stack professionals highly valuable.

Here’s what’s changing in 2025 and why it matters:

Key Full Stack Development Trends to Watch in 2025

1. AI and Machine Learning-Driven Development

Integration of AI for predictive user experiences

Chatbots and intelligent systems as part of app architecture

Developers using AI tools to assist with debugging, code generation, and optimization

With these technologies becoming more accessible, full stack developers are expected to understand how AI models work and how to implement them efficiently.

2. Serverless Architectures on the Rise

Reduction in infrastructure management tasks

Focus shifts to writing quality code without worrying about provisioning or scaling servers

Increased use of platforms like AWS Lambda, Azure Functions, and Google Cloud Functions

Serverless frameworks will empower developers to build scalable applications faster, and those enrolled in a Java programming course with placement are already being introduced to these platforms as part of their curriculum.
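As a rough illustration of the serverless model, the sketch below follows the handler shape used by AWS Lambda's Python runtime: a function that receives `event` and `context`. The event layout (an HTTP-style `queryStringParameters` field) and the greeting logic are assumptions made for this example only.

```python
import json


def handler(event, context):
    """Entry point in the style of an AWS Lambda Python function.

    The platform invokes this once per request; the developer does not
    start or manage a server process.
    """
    # Assumed event shape: an HTTP-style event with optional query parameters.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation for experimentation; in production the platform
    # supplies both arguments.
    print(handler({"queryStringParameters": {"name": "Pune"}}, None))
```

Because the function is plain Python with no server setup, it can be called and tested locally before being deployed to a platform.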

3. Micro Frontends and Component-Based Architectures

Projects are being split into smaller, manageable front-end components

Encourages reuse and parallel development

Helps large teams work on different parts of an application efficiently

This trend is changing the way teams collaborate, especially in agile environments.

4. Progressive Web Applications (PWAs) Becoming the Norm

PWAs offer app-like experiences in browsers

Offline support, push notifications, and fast load times

Ideal for startups and enterprises alike

A full stack developer in 2025 must be proficient in building PWAs using modern tools like React, Angular, and Vue.js.

5. API-First Development

Focus on creating flexible, scalable backend systems

REST and GraphQL APIs powering multiple frontends (web, mobile, IoT)

Encourages modular architecture

Many courses teaching full stack development in Pune are already emphasizing this model to prepare students for real-world industry demands.

6. Focus on Security and Compliance

Developers now need to consider security during initial coding phases

Emphasis on secure coding practices, data privacy, and GDPR compliance

DevSecOps becoming a standard practice
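One concrete secure coding practice is the parameterized query, which keeps user input from altering an SQL statement. The sketch below uses Python's built-in `sqlite3` module; the `users` table and the `find_user` and `demo_connection` helpers are hypothetical names chosen for illustration.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query.

    The `?` placeholder makes the driver treat `username` strictly as data,
    so input such as "x' OR '1'='1" cannot change the SQL statement.
    """
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?",
        (username,),
    )
    return cur.fetchone()


def demo_connection() -> sqlite3.Connection:
    """Build a throwaway in-memory database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("asha",))
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    print(find_user(conn, "asha"))          # a matching row
    print(find_user(conn, "x' OR '1'='1"))  # injection attempt matches nothing
```

Building the same query through string concatenation would let the second input rewrite the `WHERE` clause, which is why the placeholder form is generally preferred.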

7. DevOps and Automation

CI/CD pipelines becoming essential in full stack workflows

Containerization with Docker and orchestration with Kubernetes are standard

Developers expected to collaborate closely with DevOps engineers
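The core idea of a CI/CD pipeline, ordered stages that halt on the first failure, can be sketched in a few lines. This is a simplified model rather than the behavior of any particular CI tool; the stage names and the `run_pipeline` helper are assumptions for illustration, and real pipelines usually run shell commands instead of Python callables.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns a log of "name: ok" / "name: failed" entries.
    """
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages (such as deploy) never run on a failed build
    return log


if __name__ == "__main__":
    # Hypothetical stages: the build fails, so deploy is skipped.
    stages = [
        ("test", lambda: True),
        ("build", lambda: False),
        ("deploy", lambda: True),
    ]
    for line in run_pipeline(stages):
        print(line)
```

Stopping at the first failed stage is what keeps a broken build from reaching deployment.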

8. Real-Time Applications with WebSockets and Beyond

Messaging apps, live dashboards, and real-time collaboration tools are in demand

Tools like Socket.IO and WebRTC are becoming essential in the developer toolkit
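What real-time applications have in common is that the server pushes updates to every connected client instead of waiting to be asked. The sketch below models that fan-out in-process with `asyncio` queues; it is a stand-in for the pattern and does not use the Socket.IO or WebRTC APIs. The `Hub` class and the message text are hypothetical.

```python
import asyncio


class Hub:
    """In-process stand-in for a real-time server: one queue per connected client."""

    def __init__(self):
        self._clients = set()

    def connect(self) -> asyncio.Queue:
        queue = asyncio.Queue()
        self._clients.add(queue)
        return queue

    def disconnect(self, queue: asyncio.Queue) -> None:
        self._clients.discard(queue)

    async def broadcast(self, message: str) -> None:
        # Push the message to every connected client without waiting for a request.
        for queue in self._clients:
            await queue.put(message)


async def demo():
    hub = Hub()
    alice, bob = hub.connect(), hub.connect()
    await hub.broadcast("dashboard updated")
    return await alice.get(), await bob.get()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In a real deployment each queue would be replaced by a network connection such as a WebSocket, but the broadcast loop keeps the same shape.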

Skills That Will Define the Future Full Stack Developer

To thrive in 2025, here are the skills you need to master:

Strong foundation in JavaScript, HTML, CSS

Backend frameworks like Node.js, Django, or Spring Boot

Proficiency in databases – both SQL and NoSQL

Familiarity with Java programming, especially if pursuing a Java programming course with placement

Understanding of cloud platforms like AWS, GCP, or Azure

Working knowledge of version control (Git), CI/CD, and Docker

Why Pune is the Ideal Place to Start Your Full Stack Journey

If you're serious about making a career in this domain, it's a smart move to learn full stack development in Pune. Here's why:

Pune is home to hundreds of tech companies and startups, offering abundant internship and placement opportunities

Numerous training institutes offer industry-aligned courses, often bundled with certifications and placement assistance

Exposure to real-world projects through bootcamps, hackathons, and meetups

Several programs in Pune combine full stack development training with a Java programming course with placement, ensuring you gain both frontend/backend expertise and a strong OOP (Object-Oriented Programming) base.

Final Thoughts

The field of full stack development is transforming, and 2025 is expected to bring more intelligent, scalable, and modular application ecosystems. Whether you’re planning to switch careers or enhance your current skill set, staying updated with the latest full stack development trends will be essential to succeed.

Pune’s tech ecosystem makes it an excellent place to start. Enroll in a trusted institute that offers you a hands-on experience and includes in-demand topics like Java, serverless computing, DevOps, and microservices.

To sum up:

2025 Full Stack Development Key Highlights:

AI integration and smart development tools

Serverless and micro-frontend architectures

Real-time and API-first applications

Greater focus on security and cloud-native environments

Now is the time to upskill, get certified, and stay ahead of the curve. Whether you learn full stack development in Pune or pursue a Java programming course with placement, the tech world of 2025 is full of opportunities for those prepared to seize them.


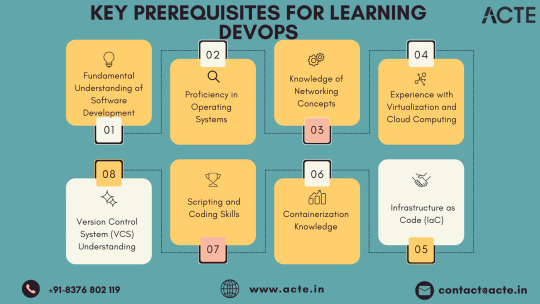

DevOps Landscape: Building Blocks for a Seamless Transition

Where software development intersects with operations, the role of a DevOps professional has become instrumental. For individuals aspiring to move into this field, understanding the key building blocks can set the stage for a successful transition. While there are no rigid prerequisites, a set of foundational skills and knowledge areas is pivotal for thriving in a DevOps role.

1. Embracing the Essence of Software Development: At the core of DevOps lies collaboration, making it essential for individuals to have a fundamental understanding of software development processes. Proficiency in coding practices, version control, and the collaborative nature of development projects is paramount. Additionally, a solid grasp of programming languages and scripting adds a valuable dimension to one's skill set.

2. Navigating System Administration Fundamentals: DevOps success is intricately linked to a foundational understanding of system administration. This encompasses knowledge of operating systems, networks, and infrastructure components. Such familiarity empowers DevOps professionals to adeptly manage and optimize the underlying infrastructure supporting applications.

3. Mastery of Version Control Systems: Proficiency in version control systems, with Git taking a prominent role, is indispensable. Version control serves as the linchpin for efficient code collaboration, allowing teams to track changes, manage codebases, and seamlessly integrate contributions from multiple developers.

4. Scripting and Automation Proficiency: Automation is a central tenet of DevOps, emphasizing the need for scripting skills in languages like Python, Shell, or Ruby. This skill set enables individuals to automate repetitive tasks, fostering more efficient workflows within the DevOps pipeline.

5. Embracing Containerization Technologies: The widespread adoption of containerization technologies, exemplified by Docker, and orchestration tools like Kubernetes, necessitates a solid understanding. Mastery of these technologies is pivotal for creating consistent and reproducible environments, as well as managing scalable applications.

6. Unveiling CI/CD Practices: Continuous Integration and Continuous Deployment (CI/CD) practices form the beating heart of DevOps. Acquiring knowledge of CI/CD tools such as Jenkins, GitLab CI, or Travis CI is essential. This proficiency ensures the automated execution of code testing, integration, and deployment processes, streamlining development pipelines.

7. Harnessing Infrastructure as Code (IaC): Proficiency in Infrastructure as Code (IaC) tools, including Terraform or Ansible, constitutes a fundamental aspect of DevOps. IaC facilitates the codification of infrastructure, enabling the automated provisioning and management of resources while ensuring consistency across diverse environments.

8. Fostering a Collaborative Mindset: Effective communication and collaboration skills are non-negotiable in the DevOps sphere. The ability to seamlessly collaborate with cross-functional teams, spanning development, operations, and various stakeholders, lays the groundwork for a culture of collaboration essential to DevOps success.

9. Navigating Monitoring and Logging Realms: Proficiency in monitoring tools such as Prometheus and log analysis tools like the ELK stack is indispensable for maintaining application health. Proactive monitoring equips teams to identify issues in real time and troubleshoot effectively.

10. Embracing a Continuous Learning Journey: DevOps is characterized by its dynamic nature, with new tools and practices continually emerging. A commitment to continuous learning and adaptability to emerging technologies is a fundamental trait for success in the ever-evolving field of DevOps.
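The declarative idea behind Infrastructure as Code (item 7 above) is that you describe the desired state and let a tool work out the changes needed to reach it. The following is a minimal sketch of that comparison step, not the actual algorithm or API of Terraform or Ansible; the resource names and the `plan` helper are hypothetical.

```python
from typing import Dict, List


def plan(desired: Dict[str, dict], current: Dict[str, dict]) -> List[str]:
    """Compare desired and current state and list the actions needed to converge."""
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(f"create {name}")
        elif desired[name] != current[name]:
            actions.append(f"update {name}")
    for name in sorted(current):
        if name not in desired:
            actions.append(f"delete {name}")
    return actions


if __name__ == "__main__":
    # Hypothetical resources: what the code declares vs. what currently exists.
    desired = {"web": {"size": "small", "count": 2}, "db": {"size": "medium"}}
    current = {"web": {"size": "small", "count": 1}, "cache": {"size": "small"}}
    for action in plan(desired, current):
        print(action)
```

Running the same plan against an environment that already matches the declaration yields no actions, which is the property that keeps environments consistent across repeated runs.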

In summary, while the transition to a DevOps role may not have rigid prerequisites, the acquisition of these foundational skills and knowledge areas becomes the bedrock for a successful journey. DevOps transcends being a mere set of practices; it embodies a cultural shift driven by collaboration, automation, and an unwavering commitment to continuous improvement. By embracing these essential building blocks, individuals can navigate their DevOps journey with confidence and competence.
