#Natural Language Processing Annotation

Text Annotation with HAIVO AI: Empowering Natural Language Processing and Machine Learning

Explore HAIVO AI's text annotation services, your key to advancing natural language processing and machine learning with annotated text. Unleash the potential of your text data projects with our expertise.

0 notes

Crime Alley’s Sweetheart <3

pairing: Jason Todd X Reader Dating Headcanons

Summary: Dating Jason Todd is a whirlwind of adrenaline, sarcasm, and surprisingly tender moments. Beneath the Red Hood, he’s your protector, partner-in-crime, and personal comedian. But loving him also means navigating the scars of his past and embracing the thrill of Gotham's chaos together.

Warnings:

Mentions of violence (Jason’s vigilante work)

Brief mentions of trauma (Jason’s resurrection and past)

Language (Jason’s colorful vocabulary)

Slight angst (his protective tendencies)

[Masterlist]

The Meet Cute: Jason probably met you in the most chaotic way—maybe you were caught in the middle of a street fight, or he caught you sneaking out of a sketchy area. Either way, he admired your guts (and your sarcasm) from the start.

Protective Tendencies: Jason’s protective nature goes into overdrive when it comes to you. If anyone messes with you, they’d better pray to whoever they believe in because Jason doesn’t play nice when you’re involved.

Soft Side: Despite his tough exterior, Jason melts when it comes to you. He shows affection in small but meaningful ways: keeping his hand on the small of your back, pulling you close during quiet nights, and making sure you always feel safe.

Late-Night Adventures: Jason loves taking you on impromptu motorcycle rides through Gotham at night. The wind in your hair, the city lights, and his gruff laugh in your ear make every ride unforgettable.

Cooking Skills: He’s surprisingly good at cooking. After a long patrol, you’ll often find him whipping up a late-night meal in the kitchen—something hearty and comforting to share with you.

Vulnerable Moments: Jason has a hard time opening up about his past. It’s a slow process, but once he trusts you, he shares the pain, anger, and love that make him who he is. You’re his anchor on bad days, especially when the nightmares resurface.

Teasing and Banter: Your relationship thrives on witty comebacks and playful jabs. Jason loves making you laugh and will do just about anything to hear it, even if it means teasing himself.

The Batfamily: Dating Jason means inevitably meeting the Batfamily. While he doesn’t always get along with them, he’s oddly proud when you win them over—especially Alfred, who adores you.

Shared Book Love: Jason’s a book nerd, and he loves sharing his favorite novels with you. He’ll leave little annotations in the margins, his sarcastic or thoughtful comments adding to the experience.

The L Word: Jason doesn’t say “I love you” lightly. When he does, it’s raw and heartfelt, probably during an intense moment where he’s overwhelmed by his feelings for you.

Gift-Giving: Jason has a knack for thoughtful gifts, like a book you mentioned in passing or a leather jacket similar to his. He enjoys seeing your face light up when you realize how much he pays attention.

Crime Alley Prince: He introduces you to the community he quietly protects in Crime Alley. Seeing the way they respect and appreciate him gives you even more insight into his big heart.

Training Together: Jason insists on teaching you some self-defense. It starts off serious but ends in laughter when you manage to knock him over for the first time.

Quiet Nights: After a rough patrol, Jason loves nothing more than collapsing on the couch with you. Movie marathons, takeout, and just being in your presence help him recharge.

Falling Asleep Together: Jason falls asleep holding you close, his body relaxed in a way only you can make happen. In those moments, you’re his peace in the chaos.

#jason todd#jason todd x reader#jason todd x y/n#jason todd x you#jason todd x oc#jason todd angst#jason todd fluff#jason todd comfort#jason todd fic#jason todd fanfiction#jason todd imagine#titans fanfiction#dc fanfic#dc fanfiction#dick grayson fanfiction#dick grayson x reader#red hood#redhood x reader#redhood x you#arkham knight#arkham knight x reader#arkham knight x you#fanfic#fanfiction#angst#fluff#hurt/comfort#comfort#red hood x reader#jellofish-plant

254 notes

The Portland Bridge Book by Sharon Wood Wortman with Ed Wortman

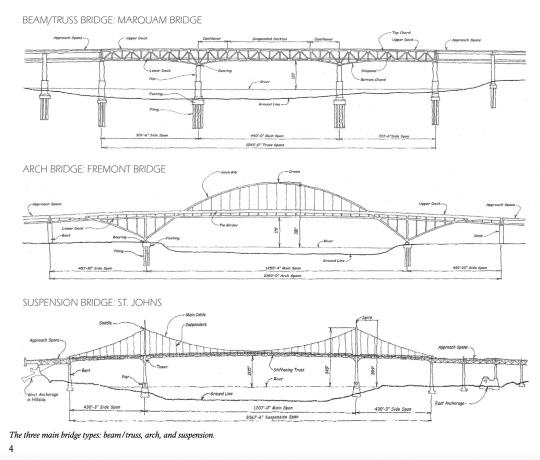

The third edition of The Portland Bridge Book is like an old faithful bridge that's been restored by a dedicated crew of specialists to increase its carrying capacity for the public's enjoyment. Over a span of 225 pages (50 percent larger than the second edition and more than twice the size of the first edition), this edition delivers:

Profiles of 15 highway bridges and four railroad bridges across the Willamette and Columbia rivers in the metro area, explaining each structure's general history, technical details, the source of its name, and more in accessible language.

150 historic and large-format contemporary photographs, many published for the first time.

Annotated drawings by Joseph Boquiren showing Portland's movable bridges in operation.

How & Why Bridges Are Built, written by Fremont Bridge field engineer Ed Wortman.

An expanded and localized glossary of bridge terms.

A Portland transportation history timeline, truss patterns, bridge poetry, and lyrics.

Images 1 & 2 are from The Portland Bridge Book. Image 3 is the cover of the book.

Snippets

Multnomah County owns four of the five large movable bridges (the Hawthorne, Morrison, Burnside, and Broadway bridges), as well as the Sellwood and Sauvie Island bridges. The Oregon Department of Transportation (ODOT) owns the state and interstate highway structures, i.e., the Ross Island, Marquam, Fremont, St. Johns, Oregon City, and Abernethy bridges, and, across the Columbia River, the Glenn Jackson Bridge and, under a joint agreement with Washington State, the Interstate Bridge.

Other notable bridges in Portland, Oregon, that do not cross the river:

Balch Gulch Bridge on Thurman Street in Northwest Portland, built for the 1905 Lewis and Clark Exposition. One of about 150 highway and pedestrian bridges owned and maintained by the City of Portland, this unusual hanging deck truss is the oldest highway deck truss bridge in Oregon.

Vista Avenue Viaduct, a 248-foot open-spandrel reinforced concrete highway arch located 128 feet above SW Jefferson St on the way to US Highway 26 in Portland's West Hills.

Tilikum Crossing: Portland's Bridges and a New Icon by Donald MacDonald & Ira Nadel (2020)

Portland, Oregon's innovative and distinctive landmark, Tilikum Crossing, Bridge of the People, is the first major bridge in the U.S. to carry trains, buses, streetcars, bicycles, and pedestrians but no private automobiles. When regional transportation agency TriMet began planning the first bridge to be built across the Willamette River since 1973, the goal was to create something symbolic that would represent the progressive nature of the twenty-first century. Part of that progressiveness was a public process that involved neighborhood associations, small businesses, environmentalists, biologists, bicycling enthusiasts, designers, engineers, and the City Council. The result of this collaboration was an entirely unique bridge that increased the city's transportation capacity while allowing Portlanders to experience their urban home in an entirely new way: car-free. In this book, Donald MacDonald, the award-winning architect of Tilikum Crossing, and co-author Ira Nadel tell the story of Portland through its bridges. Written in a friendly voice, the book shows readers how Portland came to be known as "The City of Bridges" and home to this new icon in the city's landscape. MacDonald uses 98 of his own drawings to illustrate the history of Portland river crossings and to show the process of building a twenty-first-century landmark.

Image 4 is the Tilikum Crossing book cover. Image 5 is a photo of the bridge itself.

Short video (YouTube): Aerial footage of some of the bridges in Portland, Oregon, with relaxing views of the Broadway, Fremont, and Steel bridges. Apr 26, 2021

3 notes

ChatGPT and Machine Learning: Advancements in Conversational AI

Introduction: In recent years, the field of natural language processing (NLP) has witnessed significant advancements with the development of powerful language models like ChatGPT. Powered by machine learning techniques, ChatGPT has revolutionized conversational AI by enabling human-like interactions with computers. This article explores the intersection of ChatGPT and machine learning, discussing their applications, benefits, challenges, and future prospects.

The Rise of ChatGPT: ChatGPT is an advanced language model developed by OpenAI that utilizes deep learning algorithms to generate human-like responses in conversational contexts. It is based on the underlying technology of GPT (Generative Pre-trained Transformer), a state-of-the-art model in NLP, which has been fine-tuned specifically for chat-based interactions.

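How ChatGPT Works: The GPT model behind ChatGPT is first pretrained with self-supervised learning (often loosely described as unsupervised learning): it learns from vast amounts of text without human-written labels, because the next word in the text itself serves as the training target. ChatGPT is then fine-tuned on human-written demonstration conversations and with reinforcement learning from human feedback (RLHF), so human annotation does play a role. It uses a transformer architecture, whose attention mechanism lets it process all the tokens of an input sequence in parallel during training, although it still generates its replies one token at a time.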

During pretraining, the model learns from a massive dataset to predict the next token (a word or part of a word) given the preceding context.
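To make the next-word objective concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus and predicts the most frequent follower. This is an illustrative assumption, not how GPT is built; GPT learns the same kind of prediction with a transformer over subword tokens and a much longer context, but the training signal (predict what comes next from what came before) is the same idea. The corpus and function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent next word seen after `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; real models train on vastly more text.
corpus = [
    "the bridge opens for ships",
    "the bridge carries trains",
    "the river is wide",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))    # bridge (seen twice vs. "river" once)
print(predict_next(model, "ferry"))  # None (never seen in training)
```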

Applications of ChatGPT:

Customer Support: ChatGPT can be deployed in customer service applications, providing instant and personalized assistance to users, answering frequently asked questions, and resolving common issues.

Virtual Assistants: ChatGPT can serve as an intelligent virtual assistant, capable of understanding and responding to user queries and, when connected to the appropriate tools, managing calendars, setting reminders, and performing various tasks.

Content Generation: ChatGPT can be used for generating content, such as blog posts, news articles, and creative writing, with minimal human intervention.

Language Translation: ChatGPT's language understanding capabilities make it useful for real-time language translation services, breaking down barriers and facilitating communication across different languages.

Benefits of ChatGPT:

Enhanced User Experience: ChatGPT offers a more natural and interactive conversational experience, making interactions with machines feel more human-like.

Increased Efficiency: ChatGPT automates tasks that would otherwise require human intervention, resulting in improved efficiency and reduced response times.

Scalability: ChatGPT can handle multiple user interactions simultaneously, making it scalable for applications with high user volumes.

Challenges and Ethical Considerations:

Bias and Fairness: ChatGPT's responses can sometimes reflect biases present in the training data, highlighting the importance of addressing bias and ensuring fairness in AI systems.

Misinformation and Manipulation: ChatGPT's ability to generate realistic text raises concerns about the potential spread of misinformation or malicious use. Ensuring the responsible deployment and monitoring of such models is crucial.

Future Directions:

Fine-tuning and Customization: Continued research and development aim to improve the fine-tuning capabilities of ChatGPT, enabling users to customize the model for specific domains or applications.

Ethical Frameworks: Efforts are underway to establish ethical guidelines and frameworks for the responsible use of conversational AI models like ChatGPT, mitigating potential risks and ensuring accountability.

Conclusion: The emergence of ChatGPT, built on advances in machine learning, has opened up new possibilities for human-computer interaction and natural language understanding. With its ability to generate coherent and contextually relevant responses, ChatGPT showcases the advancements made in language modeling and conversational AI.

This article has touched on various aspects and applications of ChatGPT, including its training process, fine-tuning, and contextual understanding. Transfer learning, in which knowledge gained during large-scale pretraining is adapted to specific tasks and domains, plays a crucial role in this.

While ChatGPT has shown remarkable progress, it is important to acknowledge its limitations and potential biases. The continuous efforts by OpenAI to gather user feedback and refine the model reflect their commitment to improving its performance and addressing these concerns. User collaboration is key to shaping the future development of ChatGPT and ensuring it aligns with societal values and expectations.

The integration of ChatGPT into various applications and platforms demonstrates its potential to enhance collaboration, streamline information gathering, and assist users in a conversational manner. Developers can harness the power of ChatGPT by leveraging its capabilities through APIs, enabling seamless integration and expanding the reach of conversational AI.

Looking ahead, the field of machine learning and conversational AI holds immense promise. As ChatGPT and similar models continue to evolve, the focus should remain on user privacy, data security, and responsible AI practices. Collaboration between humans and machines will be crucial, as we strive to develop AI systems that augment human intelligence and provide valuable assistance while maintaining ethical standards.

With further advancements in training techniques, model architectures, and datasets, we can expect even more sophisticated and context-aware language models in the future. As the dialogue between humans and machines becomes more seamless and natural, the potential for innovation and improvement in various domains is vast.

In summary, ChatGPT represents a significant milestone in the field of machine learning, bringing us closer to human-like conversation and intelligent interactions. By harnessing its capabilities responsibly and striving for continuous improvement, we can leverage the power of ChatGPT to enhance user experiences, foster collaboration, and push the boundaries of what is possible in the realm of artificial intelligence.

2 notes

Why Do Companies Outsource Text Annotation Services?

Building AI models for real-world use requires annotated data of both high quality and sufficient volume. For example, marking names, dates, or emotions in a sentence helps machines learn what those words represent and how to interpret them.

Different AI applications require different types of annotations. For example, natural language processing (NLP) models require annotated text, whereas computer vision models need labeled images.

While some data engineers attempt to build annotation teams internally, many are now outsourcing text annotation to specialized providers. This approach speeds up the process and provides accuracy, scalability, and access to professional annotation expertise for efficient, cost-effective AI development.

In this blog, we explain why companies like Cogito Tech offer the best, most reliable, and compliance-ready text annotation training data for the successful deployment of your AI project, which industries we serve, and why outsourcing is the best option, so that you can make an informed decision.

What is the Need for Text Annotation Training Datasets?

A dataset is a collection of information that AI models learn from. It can include numbers, images, sounds, videos, or words that teach machines to identify patterns and make decisions. For example, a text dataset may consist of thousands of customer reviews. An audio dataset might contain hours of speech. A video dataset could have recordings of people crossing the street.

Text annotation services are crucial for developing language-specific NLP models, chatbots, sentiment analysis tools, and machine translation applications. Annotation labels parts of the text, such as named entities, sentiments, or intent, so algorithms can learn patterns and make accurate predictions. Industries such as healthcare, finance, e-commerce, and customer service rely on annotated data to build and refine AI systems.

At Cogito Tech, we understand that high-quality reference datasets are critical for model deployment. We also understand that these datasets must be large enough to cover a specific use case for which the model is being built and clean enough to avoid confusion. A poor dataset can lead to a poor AI model.

How Do Text Annotation Companies Ensure Scalability?

Data scientists, NLP engineers, and AI researchers need text annotation training datasets to teach machine learning models to understand and interpret human language. Producing and labeling this data in-house is a serious challenge. The solution is to seek professional help from text annotation companies.

The reason is that as data volumes increase, in-house annotation becomes harder to scale without a strong infrastructure. Data scientists who spend their time labeling cannot focus on higher-level tasks like model development. Some datasets (e.g., medical, legal, or technical data) need expert annotators with specialized knowledge, who can be hard to find and expensive to employ.

Diverting engineering and product teams to handle annotation would slow down core development efforts and compromise strategic focus. This is where specialized agencies like ours come in, helping data engineers meet their need for training data. We also provide fine-tuning, quality checks, and compliance-ready labeled training data: anything and everything your model needs.

Fundamentally, data labeling services turn raw data into the structured examples that computers learn from. For instance, labeling might involve tagging spam emails in a text dataset. In a video, it could mean labeling people or vehicles in each frame. For audio, it might include tagging voice commands like “play” or “pause.”

Why Are Text Annotation Services in Demand?

Text is one of the most common data types used in AI model training. From chatbots to language translation, text annotation companies offer labeled text datasets to help machines understand human language.

For example, a retail company might use text annotation to determine whether customers are happy or unhappy with a product. By labeling thousands of reviews as positive, negative, or neutral, AI learns to do this autonomously.
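As a minimal sketch of how labeled reviews become a working classifier, the code below trains a multinomial Naive Bayes model with add-one smoothing on a handful of reviews labeled positive, negative, or neutral. The reviews and function names are hypothetical, and this is one simple technique chosen for illustration; production systems use far larger labeled datasets and usually stronger models, but in every case the labels are what the model learns from.

```python
from __future__ import annotations

import math
from collections import Counter, defaultdict

# Hypothetical hand-labeled reviews: (text, label).
LABELED_REVIEWS = [
    ("love this product works great", "positive"),
    ("great value fast shipping", "positive"),
    ("broke after one day terrible", "negative"),
    ("terrible quality want refund", "negative"),
    ("it arrived on tuesday", "neutral"),
    ("the box is blue", "neutral"),
]


def train(examples):
    """Collect per-label word counts, label counts, and the vocabulary."""
    word_counts: dict[str, Counter] = defaultdict(Counter)
    label_counts: Counter = Counter()
    vocab: set[str] = set()
    for text, label in examples:
        words = text.lower().split()
        label_counts[label] += 1
        word_counts[label].update(words)
        vocab.update(words)
    return word_counts, label_counts, vocab


def classify(text, word_counts, label_counts, vocab):
    """Pick the label with the highest log-probability under Naive Bayes."""
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)  # log prior P(label)
        # Add-one (Laplace) smoothing so unseen words don't zero out a label.
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(LABELED_REVIEWS)
print(classify("great product", *model))    # positive
print(classify("terrible refund", *model))  # negative
```

Working in log-probabilities avoids numeric underflow when reviews get long, and smoothing keeps a single unseen word from ruling out a label entirely.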

According to Grand View Research, “Text annotation will dominate the global market owing to the need to fine-tune the capacity of AI so that it can help recognize patterns in the text, voices, and semantic connections of the annotated data.”

Types of Text Annotation Services for AI Models

Annotated textual data is needed to help NLP models understand and process human language. Text labeling companies utilize different types of text annotation methods, including:

Named Entity Recognition (NER): NER is used to extract key information from text. It identifies spans of raw text and categorizes them into predefined entity types such as person names, dates, locations, organizations, and more. NER is crucial for extracting structured information from unstructured text.
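NER annotations are commonly stored as character-offset spans with an entity label. The sketch below shows one such record format (the `Entity` class and the PERSON/ORG/DATE labels are illustrative assumptions, not any specific tool's schema) and converts the spans into per-token BIO tags (B = beginning of an entity, I = inside, O = outside), a format widely used to train NER models. It tokenizes on whitespace only, for simplicity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Entity:
    start: int  # character offset where the entity begins (inclusive)
    end: int    # character offset where it ends (exclusive)
    label: str  # entity type, e.g. "PERSON", "ORG", "DATE"


def to_bio(text: str, entities: list[Entity]) -> list[tuple[str, str]]:
    """Tag each whitespace-separated token as B-<label>, I-<label>, or O."""
    tagged = []
    pos = 0
    for token in text.split():
        start = text.index(token, pos)  # locate this token's character offset
        end = start + len(token)
        pos = end
        tag = "O"
        for ent in entities:
            if ent.start <= start and end <= ent.end:
                # First token of the span gets B-, later tokens get I-.
                tag = ("B-" if start == ent.start else "I-") + ent.label
                break
        tagged.append((token, tag))
    return tagged


# Hypothetical annotated sentence.
text = "Jane Doe called Acme Corp on Monday"
entities = [Entity(0, 8, "PERSON"), Entity(16, 25, "ORG"), Entity(29, 35, "DATE")]
for token, tag in to_bio(text, entities):
    print(token, tag)
```

Storing offsets rather than tokens keeps the annotation independent of any particular tokenizer; the BIO view is derived from it when a model needs token-level labels.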

Sentiment Analysis: This means identifying and tagging the emotional tone expressed in a piece of text, typically as positive, negative, or neutral. It is commonly used to analyze customer reviews and social media posts to gauge public opinion.

Part-of-Speech (POS) Tagging: This refers to adding metadata that assigns a grammatical category, such as noun, pronoun, verb, adjective, or adverb, to each word in a sentence. It is needed for understanding sentence structure so that machines can learn to perform downstream tasks such as parsing and syntactic analysis.
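POS annotations are typically stored as (token, tag) pairs. The sketch below uses tag names from the Universal Dependencies UPOS tagset (DET, NOUN, VERB, ADJ, ADV) on a few hypothetical sentences and trains the classic most-frequent-tag baseline: each word gets the tag it carried most often in the annotated data, and unseen words fall back to NOUN (an assumption, chosen because nouns are the most common open word class). Real taggers also use the surrounding context.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Hypothetical POS-annotated sentences using Universal Dependencies (UPOS) tags.
ANNOTATED = [
    [("the", "DET"), ("bridge", "NOUN"), ("opens", "VERB"), ("slowly", "ADV")],
    [("a", "DET"), ("tall", "ADJ"), ("bridge", "NOUN"), ("carries", "VERB"), ("trains", "NOUN")],
    [("trains", "NOUN"), ("cross", "VERB"), ("the", "DET"), ("river", "NOUN")],
]


def train_tagger(sentences):
    """Map each word to the tag it was annotated with most often."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}


def tag_sentence(words, lexicon, default="NOUN"):
    """Tag known words from the lexicon; unseen words get the default tag."""
    return [(w, lexicon.get(w.lower(), default)) for w in words]


lexicon = train_tagger(ANNOTATED)
print(tag_sentence(["the", "tall", "river", "opens"], lexicon))
# [('the', 'DET'), ('tall', 'ADJ'), ('river', 'NOUN'), ('opens', 'VERB')]
```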

Intent Classification: Intent classification refers to identifying the purpose behind a user’s input or prompt. It is generally used in conversational models so that the model can classify inputs like “book a train,” “check flight,” or “change password” into intents and respond appropriately.
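To show how intent labels are used, the sketch below stores a few hypothetical utterances annotated with intents matching the examples above (`book_train`, `check_flight`, and `change_password` are illustrative label names) and classifies a new input by its nearest labeled example under word-overlap (Jaccard) similarity. This is a stand-in for the trained classifiers real conversational systems use; it only illustrates that the annotated examples are what define each intent.

```python
from __future__ import annotations

# Hypothetical utterances annotated with intent labels.
LABELED_UTTERANCES = [
    ("book a train to boston", "book_train"),
    ("reserve train tickets", "book_train"),
    ("check my flight status", "check_flight"),
    ("is my flight on time", "check_flight"),
    ("change my password", "change_password"),
    ("reset password please", "change_password"),
]


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of distinct words the two sets have in common."""
    return len(a & b) / len(a | b) if a | b else 0.0


def classify_intent(utterance: str, examples=LABELED_UTTERANCES) -> str | None:
    """Return the intent of the most similar labeled example, or None if nothing overlaps."""
    words = set(utterance.lower().split())
    best_intent, best_score = None, 0.0
    for text, intent in examples:
        score = jaccard(words, set(text.split()))
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


print(classify_intent("I need to book a train"))  # book_train
print(classify_intent("forgot my password"))      # change_password
print(classify_intent("hello there"))             # None
```

Returning None for inputs with no overlap gives the conversational system a hook for a fallback reply instead of forcing a wrong intent.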

Importance of Training Data for NLP and Machine Learning Models

Organizations must extract meaning from unstructured text data to automate complex language-related tasks and make data-driven decisions to gain a competitive edge.

The proliferation of unstructured data, including text, images, and videos, makes annotation necessary: it turns this data into usable input for your machine learning and NLP systems.

The demand for such capabilities is rapidly expanding across multiple industries:

Healthcare: Medical professionals employed by text annotation companies annotate clinical text so that models can automate clinical documentation, extract insights from patient records, and improve diagnostic support.

Legal: Streamlining contract analysis, legal research, and e-discovery by identifying relevant entities and summarizing case law.

E-commerce: Enhancing customer experience through personalized recommendations, automated customer service, and sentiment tracking.

Finance: Text annotation services are needed to analyze large volumes of financial text data for fraud detection, risk assessment, and regulatory compliance.

By investing in developing and training high-quality NLP models, businesses unlock operational efficiencies, improve customer engagement, gain deeper insights, and achieve long-term growth.

Now that we have covered the importance, we shall also discuss the roadblocks that may come in the way of data scientists and necessitate outsourcing text annotation services.

Challenges Faced by an In-house Text Annotation Team

Cost of hiring and training the teams: An in-house team can demand a large upfront investment in recruiting, hiring, and onboarding skilled annotators. Every project is different and requires its own strategy for creating quality training data, so unplanned expenses can undermine large-scale projects.

Time-consuming and resource-draining: Managing annotation workflows in-house often demands substantial time and operational oversight. Everything from task assignment to quality checks and revisions can divert focus from core business operations.

Requires domain expertise and consistent QA: Though it may look simple, text annotation actually requires deep domain knowledge, especially when developing task-specific healthcare, legal, or finance models. Ensuring consistency and accuracy across annotations therefore requires a rigorous quality assurance process, and maintaining consistent checks with experienced reviewers is a challenge in itself.
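One common way to measure annotation consistency is inter-annotator agreement. The text above doesn't name a metric; as one standard choice, the sketch below computes Cohen's kappa for two annotators who labeled the same items, which corrects raw agreement p_o for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The labels and function name are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical sentiment labels from two annotators on the same six reviews.
annotator_1 = ["pos", "pos", "neg", "neg", "neu", "pos"]
annotator_2 = ["pos", "neg", "neg", "neg", "neu", "pos"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.739
```

Here the annotators agree on 5 of 6 items (p_o ≈ 0.83), but after subtracting chance agreement kappa is about 0.74. Values near 1 indicate strong agreement; values near 0 mean agreement is no better than chance, a signal that guidelines or reviewer training need attention.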

Scalability problems during high-volume annotation tasks: As annotation needs grow, scaling an internal team becomes increasingly difficult. Expanding capacity to handle a large influx of data often leads to bottlenecks, delays, and inconsistent output quality.

Outsource Text Annotation: Top Reasons and ROI Benefits

The deployment and success of any model depend on the quality of labeling and annotation; poorly labeled data leads to poor results. This is why many businesses choose to partner with Cogito Tech: our experienced teams validate that datasets are tagged accurately with the right information.

Outsourcing text annotation services has become a strategic move for organizations developing AI and NLP solutions. Rather than spending time and money managing annotation in-house, businesses can benefit from experienced service providers. Here is why data scientists must consider outsourcing:

Cost Efficiency: Outsourcing can significantly reduce labor and infrastructure expenses compared with hiring an internal workforce. Monthly savings on salaries and infrastructure maintenance make outsourcing a financially sustainable solution, especially for startups and scaling enterprises.

Scalability: Outsourcing partners provide access to a flexible and scalable workforce capable of handling large volumes of text data. So, when the project grows, the annotation capacity can increase in line with the needs.

Speed to Market: Experienced labeling partners bring pre-trained annotators and streamlined workflows, which help projects finish faster. This speed helps businesses bring AI models to market more quickly and efficiently.

Quality Assurance: Annotation providers have worked on multiple projects and are thus professional and experienced. They utilize multi-tiered QA systems, benchmarking tools, and performance monitoring to ensure consistent, high-quality data output. This advantage can be hard to replicate internally.
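The text doesn't describe how these QA systems work internally. One widely used benchmarking technique, sketched below under that assumption, is to seed each batch with "gold" items whose correct labels are already known and flag annotators whose accuracy on those items falls below a threshold. The item IDs, annotator names, and the 0.9 threshold are hypothetical.

```python
from __future__ import annotations


def gold_accuracy(labels: dict[str, str], gold: dict[str, str]) -> float | None:
    """Fraction of the gold items this annotator labeled correctly (None if none labeled)."""
    scored = [item for item in gold if item in labels]
    if not scored:
        return None
    return sum(labels[item] == gold[item] for item in scored) / len(scored)


def flag_annotators(submissions: dict[str, dict[str, str]],
                    gold: dict[str, str],
                    threshold: float = 0.9) -> list[str]:
    """Names of annotators whose gold-item accuracy is below the threshold."""
    flagged = []
    for name, labels in submissions.items():
        accuracy = gold_accuracy(labels, gold)
        if accuracy is not None and accuracy < threshold:
            flagged.append(name)
    return sorted(flagged)


# Hypothetical gold items (known answers) mixed into the annotation queue.
gold = {"r1": "positive", "r2": "negative", "r3": "neutral", "r4": "negative"}
submissions = {
    "annotator_a": {"r1": "positive", "r2": "negative", "r3": "neutral",
                    "r4": "negative", "r9": "positive"},
    "annotator_b": {"r1": "positive", "r2": "positive", "r3": "neutral",
                    "r4": "positive"},
}
print(flag_annotators(submissions, gold))  # ['annotator_b']
```

Non-gold items (like `r9`) are ignored when scoring, so annotators are judged only where the correct answer is known; flagged work would then go to a second review tier.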

Focus on Core Competencies: Delegating annotation to experts has one simple advantage: in-house teams have more time to refine algorithms and concentrate on other priorities, such as product innovation and strategic growth, rather than managing manual tasks.

Compliance & Security: A professional data labeling partner does not compromise on security protocols. Such partners adhere to data protection regulations such as GDPR and HIPAA, so sensitive data is handled with a high level of compliance and confidentiality. There is a growing need for compliance so that organizations use technology responsibly, for the greater good of the community rather than for private financial gain.

For organizations looking to streamline AI development, the benefits of outsourcing with us are clear: improved quality, faster project completion, and cost-effectiveness, all while maintaining compliance through trusted text data labeling services.

Use Cases Where Outsourcing Makes Sense

Outsourcing text annotation to a third party rather than performing it in-house can have several benefits. The foremost advantage is that our text annotation services cater to businesses at multiple stages of AI/ML development, from agile startups to large-scale enterprise teams. Here’s how:

Startups & AI Labs: Text training data must be high-quality, reliable, and compliant with regulations to be usable. This is why early-stage startups and AI research labs often need compliant labeled data. By choosing top text annotation companies, startups save the cost of building an internal team, which helps them accelerate development while staying lean and focused on innovation.

Enterprise AI Projects: Big enterprises working on production-grade AI systems need scalable training datasets. However, annotating millions of text records at scale is challenging. Outsourcing allows enterprises to ramp up quickly, maintain annotation throughput, and ensure consistent quality across large datasets.

Industry-specific AI Models: Sectors such as legal and healthcare need precise, compliant training data because they deal with personal data whose mishandling during model training could violate individual rights. Experienced vendors offer industry-trained professionals who understand the context and sensitivity of the data and adhere to regulatory requirements, which pays off at the deployment stage and in the long term.

Conclusion

There is rising demand for data-driven solutions to support AI innovation, and quality-annotated data is a must for developing AI and NLP models. From startups building prototypes to enterprises deploying AI at scale, the demand for accurate, consistent, and domain-specific training data remains strong.

However, managing annotation in-house has significant limitations, as discussed above. Because each project has unique requirements, analyzing return on investment is necessary. As we have seen, outsourcing is a strategic choice that allows businesses to meet project deadlines faster and save money.

Choose Cogito Tech because our expertise spans Computer Vision, Natural Language Processing, Content Moderation, Data and Document Processing, and a comprehensive spectrum of Generative AI solutions, including Supervised Fine-Tuning, RLHF, Model Safety, Evaluation, and Red Teaming.

Our workforce is experienced, certified, and platform-agnostic, which lets it work efficiently and deliver optimal results while reducing the cost and time of sorting and categorizing textual data for businesses building AI models.

Original Article: Why Do Companies Outsource Text Annotation Services?

#text annotation#text annotation service#text annotation service company#cogitotech#Ai#ai data annotation#Outsource Text Annotation Services

0 notes

Data Labeling Services | AI Data Labeling Company

AI models are only as effective as the data they are trained on. This service page explores how Damco’s data labeling services empower organizations to accelerate AI innovation through structured, accurate, and scalable data labeling.

Accelerate AI Innovation with Scalable, High-Quality Data Labeling Services

Accurate annotations are critical for training robust AI models. Whether it’s image recognition, natural language processing, or speech-to-text conversion, quality-labeled data reduces model errors and boosts performance.

Leverage Damco’s Data Labeling Services

Damco provides end-to-end annotation services tailored to your data type and use case.

Computer Vision: Bounding boxes, semantic segmentation, object detection, and more

NLP Labeling: Text classification, named entity recognition, sentiment tagging

Audio Labeling: Speaker identification, timestamping, transcription services

Who Should Opt for Data Labeling Services?

Damco caters to diverse industries that rely on clean, labeled datasets to build AI solutions:

Autonomous Vehicles

Agriculture

Retail & Ecommerce

Healthcare

Finance & Banking

Insurance

Manufacturing & Logistics

Security, Surveillance & Robotics

Wildlife Monitoring

Benefits of Data Labeling Services

Precise Predictions with high-accuracy training datasets

Improved Data Usability across models and workflows

Scalability to handle projects of any size

Cost Optimization through flexible service models

Why Choose Damco for Data Labeling Services?

Reliable & High-Quality Outputs

Quick Turnaround Time

Competitive Pricing

Strict Data Security Standards

Global Delivery Capabilities

Discover how Damco’s data labeling can improve your AI outcomes — Schedule a Consultation.

#data labeling#data labeling services#data labeling company#ai data labeling#data labeling companies

0 notes

Simplify Learning with AI-Powered Support for Science and Coding Work

Academic pressure can escalate quickly, especially when multiple subjects demand attention at once. Students often find themselves navigating complicated topics with little time or guidance. From unclear instructions to tight deadlines, the experience can become overwhelming fast. These struggles are not symptoms of laziness or lack of ability; they're symptoms of a system that doesn’t always match the pace or style of individual learners. Today’s students need responsive, intuitive tools that help them feel supported and capable, not more stressed or left behind.

Smarter Support Through Online Science Assignment Help

When topics like chemistry equations or biology concepts seem too dense to crack alone, online science assignment help offers a calming, guided alternative. Instead of shortcuts or copied answers, it gives students the chance to understand theories, explore visuals, and walk through explanations at their own pace. Complex ideas are made clear with language that doesn’t overwhelm. This shift from panic to clarity means learners gain more than a grade—they gain confidence. It’s an essential upgrade from traditional tutoring, designed for how today’s students actually learn and retain knowledge.

Helping Students Grasp Concepts, Not Just Memorize Answers

One of the most frustrating parts of schoolwork is memorizing facts without really understanding why they matter. Learners want context, relevance, and connection. Effective online science assignment help goes beyond static explanations. It engages the student with step-by-step logic, real-life examples, and diagrams that illuminate the process behind the answers. Rather than drilling for recall, it builds true comprehension. For struggling or distracted students, this immersive experience can be a breakthrough: the shift from guessing to knowing, from passive completion to engaged learning.

Empowering Students to Learn on Their Terms

Not every student studies well at the same time, pace, or in the same environment. Some prefer quick late-night reviews, while others need repeated visual walkthroughs. Modern learning tools acknowledge this reality by delivering support that fits each person’s habits and needs. Whether it’s mobile access on the bus or targeted help during an after-school cram session, students stay in control. This sense of autonomy encourages stronger learning habits and builds resilience. When learners feel they can manage their work, their motivation naturally increases, and their academic growth follows.

Bringing Technology Into the Learning Experience

Education doesn’t need to be stuck in the past. AI-powered tools now offer real-time, adaptive feedback and accurate guidance, so students no longer have to wait for a tutor or sift through outdated videos. With this kind of support, students can solve problems faster while actually improving their thinking process. The technology adapts with each question asked, helping learners improve with every session. It’s not just tech for tech’s sake; it’s the practical future of self-guided study, empowering learners to do more in less time without sacrificing accuracy or understanding.

Interactive and Visual Computer Science Homework Solver

Coding can be tough when the logic doesn’t click. A computer science homework solver that visually breaks down loops, functions, and errors can be a major relief. It’s not about giving answers but helping learners see where the code fails and how to fix it. For STEM learners, these interactive diagrams and annotated lines of code help demystify the abstract. Whether it’s Java, Python, or C++, students gain insight into structure, logic, and best practices, helping them build not only working code but real understanding and skill.

Encouraging Growth in Real-world Coding Skills

As coding becomes a key skill in modern education, students need more than syntax correction—they need help forming logic, writing clean scripts, and debugging effectively. A computer science homework solver supports this by mimicking the process of a mentor reviewing their code. Rather than solving it all, it provides direction, flags inefficiencies, and explains outcomes. This process promotes problem-solving abilities and encourages independent thinking. For students juggling other subjects, it’s an efficient way to make progress in coding without getting stuck or falling behind.

Designed for Diverse Learning Styles and Needs

No two students are exactly alike. While one may be visual and quick, another might be analytical and methodical. This variety is often ignored in classrooms with fixed timelines and limited resources. Digital platforms that adapt to pace and preference offer learners a rare opportunity to learn in a way that genuinely works for them. Whether helping ESL students decode scientific terms or guiding fast learners through advanced coding logic, today’s tools make personalized education a practical reality, not a distant ideal. This level of adaptability changes how students relate to learning entirely.

Conclusion

Academic tools today must do more than answer questions—they must ease anxiety, promote understanding, and build confidence across subjects. For students needing guidance in science or coding, intelligent support systems deliver insights that foster growth rather than dependency. Whether clarifying equations or decoding algorithms, digital solutions are shaping stronger learners. Platforms like thehomework.ai/home offer this kind of support, not by replacing effort, but by amplifying it with clarity and strategy. Students aren't just surviving their assignments; they're learning how to think, apply, and succeed in the classroom and beyond.

Blog source URL: https://thehomework0.blogspot.com/2025/06/simplify-learning-with-ai-powered.html

Natural Language Processing combines AI technology with expert human input to accurately interpret, annotate, and train language models, empowering enhanced text understanding for applications such as customer service, content analysis, and automation.

Accelerate AI Development

Artificial Intelligence (AI) is no longer a futuristic concept — it’s a present-day driver of innovation, efficiency, and automation. From self-driving cars to intelligent customer service chatbots, AI is reshaping the way industries operate. But behind every smart algorithm lies an essential component that often doesn’t get the spotlight it deserves: data.

No matter how advanced an AI model may be, its potential is directly tied to the quality, volume, and relevance of the data it’s trained on. That’s why companies looking to move fast in AI development are turning their attention to something beyond algorithms: high-quality, ready-to-use datasets.

The Speed Factor in AI

Time-to-market is critical. Whether you’re a startup prototyping a new feature or a large enterprise deploying AI at scale, delays in sourcing, cleaning, and labeling data can slow down innovation. Traditional data collection methods — manual scraping, internal sourcing, or custom annotation — can take weeks or even months. This timeline doesn’t align with the rapid iteration cycles that AI teams are expected to maintain.

The solution? Pre-collected, curated datasets that are immediately usable for training machine learning models.

Why Pre-Collected Datasets Matter

Pre-collected datasets offer a shortcut without compromising on quality. These datasets are:

Professionally Curated: Built with consistency, structure, and clear labeling standards.

Domain-Specific: Tailored to key AI areas like computer vision, natural language processing (NLP), and audio recognition.

Scalable: Ready to support models at different stages of development — from testing hypotheses to deploying production systems.

Instead of spending months building your own data pipeline, you can start training and refining your models from day one.

Use Cases That Benefit

The applications of AI are vast, but certain use cases especially benefit from rapid access to quality data:

Computer Vision: For tasks like facial recognition, object detection, autonomous driving, and medical imaging, visual datasets are vital. High-resolution, diverse, and well-annotated images can shave weeks off development time.

Natural Language Processing (NLP): Chatbots, sentiment analysis tools, and machine translation systems need text datasets that reflect linguistic diversity and nuance.

Audio AI: Whether it’s voice assistants, transcription tools, or sound classification systems, audio datasets provide the foundation for robust auditory understanding.

With pre-curated datasets available, teams can start experimenting, fine-tuning, and validating their models immediately — accelerating everything from R&D to deployment.

Data Quality = Model Performance

It’s a simple equation: garbage in, garbage out. The best algorithms can’t overcome poor data. And while it’s tempting to rely on publicly available datasets, they’re often outdated, inconsistent, or not representative of real-world complexity.

Using high-quality, professionally sourced datasets helps ensure that your model is trained on the type of data it will encounter in the real world. This can improve performance metrics, reduce bias, and increase trust in your AI outputs, which is especially critical in sensitive fields like healthcare, finance, and security.
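To make the "garbage in, garbage out" point concrete, the Python sketch below runs a few basic checks on a labeled text dataset before training: empty examples, duplicates after simple normalization, labels outside the agreed schema, and the resulting class balance. The `(text, label)` record format, the `audit_labeled_dataset` name, and the sample data are hypothetical; real quality control usually adds further checks such as inter-annotator agreement.

```python
from collections import Counter


def audit_labeled_dataset(records, allowed_labels):
    """Return simple quality statistics for a list of (text, label) records.

    records: list of (text, label) pairs (hypothetical format).
    allowed_labels: set of labels defined by the labeling guideline.
    """
    seen = set()
    duplicates = 0
    invalid_labels = 0
    empty_texts = 0
    label_counts = Counter()

    for text, label in records:
        normalized = text.strip().lower()   # crude normalization for duplicate detection
        if not normalized:
            empty_texts += 1
            continue
        if normalized in seen:
            duplicates += 1
            continue
        seen.add(normalized)
        if label not in allowed_labels:     # label not in the agreed schema
            invalid_labels += 1
            continue
        label_counts[label] += 1

    return {
        "kept": sum(label_counts.values()),
        "duplicates": duplicates,
        "invalid_labels": invalid_labels,
        "empty_texts": empty_texts,
        "label_counts": dict(label_counts),
    }


sample = [
    ("Great product", "positive"),
    ("great product ", "positive"),   # duplicate after normalization
    ("Terrible support", "negative"),
    ("", "neutral"),                  # empty text
    ("Arrived on time", "postive"),   # misspelled label
]
report = audit_labeled_dataset(sample, {"positive", "negative", "neutral"})
print(report)
```

Counting problems rather than silently fixing them keeps the audit transparent: a high duplicate or invalid-label count is a signal to revisit the labeling guideline, not just the data.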

Save Time, Save Budget

Data acquisition can be one of the most expensive parts of an AI project. It requires technical infrastructure, human resources for annotation, and ongoing quality control. By purchasing pre-collected data, companies reduce:

Operational Overhead: No need to build an internal data pipeline from scratch.

Hiring Costs: Avoid the expense of large annotation or data engineering teams.

Project Delays: Eliminate waiting periods for data readiness.

It’s not just about moving fast — it’s about being cost-effective and agile.

Build Better, Faster

When you eliminate the friction of data collection, you unlock your team’s potential to focus on what truly matters: experimentation, innovation, and performance tuning. You free up data scientists to iterate more often. You allow product teams to move from ideation to MVP more quickly. And you increase your competitive edge in a fast-moving market.

Where to Start

If you’re looking to power up your AI development with reliable data, explore BuyData.Pro. We provide a wide range of high-quality, pre-labeled datasets in computer vision, NLP, and audio. Whether you’re building your first model or optimizing one for production, our datasets are built to accelerate your journey.

Website: https://buydata.pro Contact: [email protected]

What is text annotation in machine learning? Explain with examples

Text annotation in machine learning refers to the process of labeling or tagging textual data to make it understandable and useful for AI models. It is essential for various AI applications, such as natural language processing (NLP), chatbots, sentiment analysis, and machine translation. With cutting-edge tools and skilled professionals, EnFuse Solutions has the expertise to drive impactful AI solutions for your business.
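As a concrete example, here is a minimal Python sketch of what one annotated text record might look like, combining a document-level sentiment label with character-offset entity spans. The class names (`EntitySpan`, `AnnotatedText`), the label names, and the sample sentence are hypothetical; real annotation tools each use their own export formats.

```python
from dataclasses import dataclass, field


@dataclass
class EntitySpan:
    start: int   # character offset where the entity begins (inclusive)
    end: int     # character offset where the entity ends (exclusive)
    label: str   # entity type, e.g. "ORG" or "PRODUCT"


@dataclass
class AnnotatedText:
    text: str
    sentiment: str                                # document-level label
    entities: list = field(default_factory=list)  # list of EntitySpan

    def entity_strings(self):
        """Return (surface text, label) for each span so offsets can be reviewed."""
        return [(self.text[e.start:e.end], e.label) for e in self.entities]

    def validate(self):
        """Check that every span lies inside the text and that no spans overlap."""
        spans = sorted(self.entities, key=lambda e: e.start)
        for e in spans:
            if not (0 <= e.start < e.end <= len(self.text)):
                return False
        for prev, cur in zip(spans, spans[1:]):
            if cur.start < prev.end:
                return False
        return True


example = AnnotatedText(
    text="The support team at Acme fixed my laptop in a day.",
    sentiment="positive",
    entities=[EntitySpan(20, 24, "ORG"), EntitySpan(34, 40, "PRODUCT")],
)
print(example.entity_strings())  # [('Acme', 'ORG'), ('laptop', 'PRODUCT')]
print(example.validate())        # True
```

Storing entities as character offsets rather than copied strings lets a quick check like `validate()` catch spans that drift out of bounds or overlap after the text is edited.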

#TextAnnotation#MachineLearning#NLPAnnotation#DataLabeling#MLTrainingData#AnnotatedText#NaturalLanguageProcessing#SupervisedLearning#AIModelTraining#TextDataPreparation#MLDataAnnotation#AIAnnotationCompanies#DataAnnotationServices#EnFuseSolutions#EnFuseSolutionsIndia

Unlocking Language Insights | Expert Text Annotation at Haivo AI

Empower your natural language understanding with Haivo AI's text annotation services. From intricate linguistic nuances to machine learning integration, we provide comprehensive solutions for refining your language-focused AI applications.

The Future of Patent Drawings: AI and Automation in Illustration Services

Patent drawings are a critical component of the patent application process. They visually communicate the structure, function, and details of an invention in a way that words alone cannot. Traditionally created by skilled draftspersons, patent drawings demand precision, technical know-how, and strict adherence to regulatory standards. However, the emergence of Artificial Intelligence (AI) and automation is now reshaping how these illustrations are produced.

As we move further into a digitally driven world, technological advancements are revolutionising the future of patent drawings. This article explores how AI and automation are transforming patent illustration services and what that means for inventors, law firms, and intellectual property professionals.

Why Patent Drawings Matter

Before diving into how AI is changing things, it’s important to understand the foundational role of patent drawings in a utility or design patent application.

Patent drawings:

Support written claims with visual clarity

Help patent examiners understand the invention

Fulfil legal requirements in many jurisdictions

Improve the enforceability of the patent

Can reduce Office Actions and delays when prepared accurately

Given their importance, creating patent drawings has traditionally required skilled professionals who understand both technical illustration and patent office regulations (such as those from the USPTO, EPO, or WIPO).

Current Challenges in Patent Drawing Preparation

Although patent drawings are a crucial part of the application process, their preparation remains largely manual and time-consuming. Several key challenges impact efficiency and quality:

Time-Consuming Drafting: Creating detailed, multi-view patent illustrations often requires hours or even days of meticulous work.

Risk of Non-Compliance: Minor errors in formatting, margins, line weights, or part labelling can result in costly rejections or delays.

Reliance on Specialised Expertise: Skilled patent illustrators are in limited supply and often come with high costs, making access difficult for many applicants.

Lengthy Revision Cycles: Multiple rounds of revisions between inventors and attorneys extend timelines and increase expenses.

These persistent pain points highlight the urgent need for innovation, opening the door for AI and automation to transform the patent drawing process.

The Rise of AI in Patent Drawing Services

AI is transforming industries worldwide, and patent illustration is no exception. In the context of patent drawings, AI and automation refer to technologies that can assist with, or fully generate, illustrations using computational models.

Key Applications of AI in Patent Drawing Creation:

Auto-generation from CAD Files: AI tools can analyse 3D CAD models and automatically extract relevant 2D views (e.g., front, side, top, perspective). These views can be converted into compliant patent drawings with proper line weights and annotations.

Natural Language Processing (NLP): AI can read the technical specifications in patent drafts and generate corresponding visual layouts or suggest figure numbers and part labels based on contextual cues.

Intelligent Labelling and Numbering: AI can automate the repetitive task of assigning part numbers and ensure consistency across multiple figures, reducing human error.

Compliance Checks: AI can review drawings and flag issues such as improper margins, line thickness inconsistencies, or missing views, helping avoid common USPTO or PCT rejections.

Template Matching: For standard components (e.g., motors, gears, levers), AI can match the invention to a database of illustration templates, speeding up the creation process.
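As a rough illustration of how automated numbering can keep reference numerals consistent across figures, the Python sketch below gives each distinct part one numeral and reuses it in every figure where that part appears. The input format, the function name `assign_reference_numerals`, and the choice to start at 10 and step by 2 are illustrative assumptions, not a patent office requirement or any particular tool's behaviour.

```python
def assign_reference_numerals(figures, start=10, step=2):
    """Give every distinct part the same reference numeral in every figure.

    figures: dict mapping figure name -> list of part names (hypothetical input).
    Numerals are assigned in order of first appearance: start, start + step, ...
    """
    numerals = {}
    next_numeral = start
    for parts in figures.values():        # dicts preserve insertion order (Python 3.7+)
        for part in parts:
            if part not in numerals:
                numerals[part] = next_numeral
                next_numeral += step

    # Re-express each figure as (numeral, part) pairs using the shared mapping.
    labelled = {
        figure: [(numerals[part], part) for part in parts]
        for figure, parts in figures.items()
    }
    return numerals, labelled


figures = {
    "FIG. 1": ["housing", "motor", "drive shaft"],
    "FIG. 2": ["motor", "gear"],
    "FIG. 3": ["housing", "gear", "lever"],
}
numerals, labelled = assign_reference_numerals(figures)
print(numerals)            # {'housing': 10, 'motor': 12, 'drive shaft': 14, 'gear': 16, 'lever': 18}
print(labelled["FIG. 2"])  # [(12, 'motor'), (16, 'gear')]
```

Because the mapping is built once and shared, a part such as the motor carries the same numeral in FIG. 1 and FIG. 2, which is exactly the kind of cross-figure consistency that is easy to break when numbering by hand.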

Automation in Workflow and Project Management

Beyond illustration, automation tools are being integrated into the project management side of patent drawing services:

Automated Client Portals: These allow inventors to upload reference materials and receive status updates in real time.

Auto-generated Quotes and Turnaround Times: Depending on the invention's complexity, platforms can estimate timelines and costs with minimal human intervention.

Collaborative Review Platforms: Tools that allow patent attorneys and clients to annotate drawings and approve versions online streamline the review and revision process.

Benefits of AI and Automation in Patent Drawings

1. Speed and Efficiency

AI-generated drawings can be produced in a fraction of the time it takes a human draftsperson. This acceleration shortens application timelines, giving inventors a competitive edge.

2. Cost Reduction

Automation reduces labour-intensive processes, cutting costs for both service providers and clients. Lower drawing costs make patent protection more accessible for startups and individual inventors.

3. Accuracy and Compliance

With built-in compliance algorithms, AI tools can help reduce the number of Office Actions due to drawing errors, improving approval rates.

4. Scalability

Large IP firms or multinational companies with high patent volumes can handle drawing workloads more effectively using AI and automation tools.

5. Consistency Across Jurisdictions

AI systems can be programmed to accommodate multiple regulatory standards (USPTO, EPO, JPO, etc.), ensuring drawings meet global requirements.

Limitations and Concerns

While AI offers significant advantages, it also comes with notable limitations:

Complex Inventions: AI tools may struggle to accurately interpret intricate or unconventional inventions without human guidance.

Lack of Visual Judgment: Decisions such as selecting the most informative view or adjusting illustrations for maximum clarity still benefit from human creativity and experience.

Security and Confidentiality: Using cloud-based AI platforms can raise concerns about the security of sensitive invention data and the risk of intellectual property exposure.

Regulatory Caution: Patent offices continue to emphasise the importance of human oversight. AI-introduced errors could compromise the application’s acceptance.

As a result, the most effective approach today is AI-assisted drafting—a hybrid model that leverages AI's speed and scalability while retaining human expertise to ensure precision, clarity, and compliance.

Case Study: AI in Action

An increasing number of patent illustration companies are adopting AI-driven tools to streamline the drafting process. These platforms typically offer capabilities such as:

Uploading a 3D CAD model

Selecting required views (e.g., top, side, cross-section)

Automatically labelling parts and numbering figures

Generating compliant, ready-to-submit drawings within minutes

Early adopters report a 60–70% reduction in turnaround time, with significantly fewer revision cycles thanks to minimised formatting errors. By automating routine tasks, AI allows illustrators and attorneys to focus on more strategic aspects of the application process.

What the Future Holds

As AI capabilities expand, the future of patent drawing services promises to be smarter, faster, and more integrated. Key developments on the horizon include:

End-to-End AI Workflows: From drafting to electronic filing, AI platforms may soon offer fully automated pipelines that handle illustrations, claims writing, and submission in one seamless process.

Voice-to-Drawing Interfaces: Advanced Natural Language Processing (NLP) could allow inventors to describe their ideas verbally and receive instant draft drawings, bridging the gap between concept and visualisation.

AR-Based Validation: Augmented reality environments may let users preview and interact with patent drawings in 3D, improving accuracy and communication before submission.

Blockchain-Backed IP Security: AI-generated illustrations could be timestamped and verified using blockchain technology, adding a new layer of protection and authenticity to IP assets.

These innovations hint at a future where patent drafting is not only more efficient but also more accessible, secure, and intuitive.

Final Thoughts

AI and automation are not here to replace professional patent illustrators, but to enhance and streamline their work. By automating routine tasks, flagging compliance issues, and speeding up the drawing process, these technologies offer immense value to inventors, patent attorneys, and IP firms.

The future of patent drawings lies in collaboration between machines and humans, where AI handles the heavy lifting and experts focus on strategy, creativity, and legal compliance. Embracing this change will be key to staying competitive in the evolving IP landscape.

Building Smarter AI: The Role of LLM Development in Modern Enterprises

As enterprises race toward digital transformation, artificial intelligence has become a foundational component of their strategic evolution. At the core of this AI-driven revolution lies the development of large language models (LLMs), which are redefining how businesses process information, interact with customers, and make decisions. LLM development is no longer a niche research endeavor; it is now central to enterprise-level innovation, driving smarter solutions across departments, industries, and geographies.

LLMs, such as GPT, BERT, and their proprietary counterparts, have demonstrated an unprecedented ability to understand, generate, and interact with human language. Their strength lies in their scalability and adaptability, which make them invaluable assets for modern businesses. From enhancing customer support to optimizing legal workflows and generating real-time insights, LLM development has emerged as a catalyst for operational excellence and competitive advantage.

The Rise of Enterprise AI and the Shift Toward LLMs

In recent years, AI adoption has moved beyond experimentation to enterprise-wide deployment. Organizations are no longer just exploring AI for narrow, predefined tasks; they are integrating it into their core operations. LLMs represent a significant leap in this journey. Their capacity to understand natural language at a contextual level means they can power a wide range of applications—from automated document processing to intelligent chatbots and AI agents that can draft reports or summarize meetings.

Traditional rule-based systems lack the flexibility and learning capabilities that LLMs offer. With LLM development, businesses can move toward systems that learn from data patterns, continuously improve, and adapt to new contexts. This shift is particularly important for enterprises dealing with large volumes of unstructured data, where LLMs excel in extracting relevant information and generating actionable insights.

Custom LLM Development: Why One Size Doesn’t Fit All

While off-the-shelf models offer a powerful starting point, they often fall short in meeting the specific needs of modern enterprises. Data privacy, domain specificity, regulatory compliance, and operational context are critical considerations that demand custom LLM development. Enterprises are increasingly recognizing that building their own LLMs—or fine-tuning open-source models on proprietary data—can lead to more accurate, secure, and reliable AI systems.

Custom LLM development allows businesses to align the model with industry-specific jargon, workflows, and regulatory environments. For instance, a healthcare enterprise developing an LLM for patient record summarization needs a model that understands medical terminology and adheres to HIPAA regulations. Similarly, a legal firm may require an LLM that comprehends legal documents, contracts, and precedents. These specialized applications necessitate training on relevant datasets, which is only possible through tailored development processes.

Data as the Foundation of Effective LLM Development

The success of any LLM hinges on the quality and relevance of the data it is trained on. For enterprises, this often means leveraging internal datasets that are rich in context but also sensitive in nature. Building smarter AI requires rigorous data curation, preprocessing, and annotation. It also involves establishing data pipelines that can continually feed the model with updated, domain-specific content.

Enterprises must implement strong data governance frameworks to ensure the ethical use of data, mitigate biases, and safeguard user privacy. Data tokenization, anonymization, and access control become integral parts of the LLM development pipeline. In many cases, businesses opt to build LLMs on-premise or within private cloud environments to maintain full control over their data and model parameters.
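A minimal sketch of the anonymization step, assuming plain-text records and simple regular expressions: e-mail addresses and phone-like numbers are swapped for stable placeholder tokens, so the same value always becomes the same token. The patterns and the `pseudonymize` helper are hypothetical and deliberately simple (the phone pattern, for example, will also match some dates); production pipelines usually rely on dedicated PII-detection tooling and keep the returned mapping behind strict access control.

```python
import re

# Illustrative patterns only; they will miss some PII and over-match some non-PII.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(text, mapping=None):
    """Replace e-mail addresses and phone-like numbers with stable placeholder tokens.

    The same original value always maps to the same token (e.g. <EMAIL_1>),
    so relationships in the data survive while the raw value does not.
    Returns the redacted text and the value -> token mapping.
    """
    if mapping is None:
        mapping = {}

    def replacer(kind):
        def _sub(match):
            value = match.group(0)
            if value not in mapping:
                # Number tokens per kind: <EMAIL_1>, <EMAIL_2>, <PHONE_1>, ...
                count = sum(1 for v in mapping.values() if v.startswith(f"<{kind}_"))
                mapping[value] = f"<{kind}_{count + 1}>"
            return mapping[value]
        return _sub

    text = EMAIL.sub(replacer("EMAIL"), text)
    text = PHONE.sub(replacer("PHONE"), text)
    return text, mapping


redacted, mapping = pseudonymize(
    "Contact jane.doe@example.com or +1 (555) 123-4567. Cc jane.doe@example.com."
)
print(redacted)  # Contact <EMAIL_1> or <PHONE_1>. Cc <EMAIL_1>.
```

Passing the same `mapping` into later calls keeps tokens consistent across a whole corpus; since that mapping can reverse the redaction, it belongs under the same access controls as the raw data.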

Infrastructure and Tooling for Scalable LLM Development

Developing large language models at an enterprise level requires robust infrastructure. Training models with billions of parameters demands high-performance computing resources, including GPUs, TPUs, and distributed systems capable of handling parallel processing. Cloud providers and AI platforms have stepped in to offer scalable environments tailored to LLM development, allowing enterprises to balance performance with cost-effectiveness.

Beyond hardware, the LLM development lifecycle involves a suite of software tools. Frameworks like PyTorch, TensorFlow, Hugging Face Transformers, and LangChain have become staples in the AI development stack. These tools facilitate model training, evaluation, and deployment, while also supporting experimentation with different architectures and hyperparameters. Additionally, version control, continuous integration, and MLOps practices are essential for managing iterations and maintaining production-grade models.

The Business Impact of LLMs in Enterprise Workflows

The integration of LLMs into enterprise workflows yields transformative results. In customer service, LLM-powered chatbots and virtual assistants provide instant, human-like responses that reduce wait times and enhance user satisfaction. These systems can handle complex queries, understand sentiment, and escalate issues intelligently when human intervention is needed.

In internal operations, LLMs automate time-consuming tasks such as document drafting, meeting summarization, and knowledge base management. Teams across marketing, HR, legal, and finance can benefit from AI-generated insights that save hours of manual work and improve decision-making. LLMs also empower data analysts and business intelligence teams by providing natural language interfaces to databases and dashboards, making analytics more accessible across the organization.

In research and development, LLMs accelerate innovation by assisting in technical documentation, coding, and even ideation. Developers can use LLM-based tools to generate code snippets, debug errors, or explore APIs, thus enhancing productivity and reducing turnaround time. In regulated industries like finance and healthcare, LLMs support compliance by reviewing policy documents, tracking changes in legislation, and ensuring internal communications align with legal requirements.

Security and Privacy in Enterprise LLM Development

As LLMs gain access to sensitive enterprise data, security becomes a top concern. Enterprises must ensure that the models they build or use do not inadvertently leak confidential information or produce biased, harmful outputs. This calls for rigorous testing, monitoring, and auditing throughout the development lifecycle.

Security measures include embedding guardrails within the model, applying reinforcement learning from human feedback (RLHF) to refine responses, and establishing filters to detect and block inappropriate content. Moreover, differential privacy techniques can be applied during training to limit how much any single training record influences the model, reducing the risk that individual data points can be recovered from it. Regulatory compliance, such as GDPR or industry-specific mandates, must also be factored into the model’s architecture and deployment strategy.
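The differential privacy idea can be sketched with the core update step of DP-SGD, one common approach: clip each training example's gradient to a fixed norm, sum the clipped gradients, add Gaussian noise scaled to that clipping bound, and then average. This stdlib-only Python version works on plain lists and is only an illustration; it assumes per-example gradients are already computed and omits the privacy accounting (tracking the overall privacy budget) that real DP training libraries perform. The function names and parameter values are hypothetical.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD-style update: clip each example, sum, add Gaussian noise, average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    sigma = noise_multiplier * max_norm           # noise is tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    batch = len(per_example_grads)
    return [p - lr * n / batch for p, n in zip(params, noisy)]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]   # the first example has a large gradient (norm 5)
new_params = dp_sgd_step([0.0, 0.0], grads, lr=1.0, max_norm=1.0,
                         noise_multiplier=0.0, rng=rng)
print(new_params)  # approximately [-0.45, -0.6]
```

Setting `noise_multiplier` to 0 switches off the noise, which makes the clipping arithmetic easy to check; in real training the noise level and clipping bound trade model accuracy against the strength of the privacy guarantee.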

Organizations are also investing in private LLM development, where the model is trained and hosted in isolated environments, disconnected from public access. This approach ensures that enterprise IP and customer data remain secure and that the model aligns with internal standards and controls. Regular audits, red-teaming exercises, and bias evaluations further strengthen the reliability and trustworthiness of the deployed LLMs.

LLM Development and Human-AI Collaboration

One of the most significant shifts LLMs bring to enterprises is the redefinition of human-AI collaboration. Rather than replacing human roles, LLMs augment human capabilities by acting as intelligent assistants. This symbiotic relationship allows professionals to focus on strategic thinking and creative tasks, while the LLM handles the repetitive, data-intensive parts of the workflow.

This collaboration is evident in industries like journalism, where LLMs draft article outlines and summarize press releases, freeing journalists to focus on investigative reporting. In legal services, LLMs assist paralegals by reviewing documents, identifying inconsistencies, and organizing case information. In software development, engineers collaborate with AI tools to write code, generate tests, and explore new libraries or functions more efficiently.

For human-AI collaboration to be effective, enterprises must foster a culture that embraces AI as a tool, not a threat. This includes reskilling employees, encouraging experimentation, and designing interfaces that are intuitive and transparent. Trust in AI systems grows when users understand how the models work and how they arrive at their conclusions.

Future Outlook: From Smarter AI to Autonomous Systems

LLM development marks a crucial milestone in the broader journey toward autonomous enterprise systems. As models become more sophisticated, enterprises are beginning to explore autonomous agents that can perform end-to-end tasks with minimal supervision. These agents, powered by LLMs, can navigate workflows, make decisions based on goals, and coordinate with other systems to complete complex objectives.

This evolution requires not just technical maturity but also organizational readiness. Governance frameworks must be updated, roles and responsibilities must be redefined, and AI literacy must become part of the corporate fabric. Enterprises that successfully embrace these changes will be better positioned to innovate, compete, and lead in a rapidly evolving digital economy.

The future will likely see the convergence of LLMs with other technologies such as computer vision, knowledge graphs, and edge computing. These integrations will give rise to multimodal AI systems capable of understanding not just text, but images, videos, and real-world environments. For enterprises, this means even more powerful and versatile tools that can drive efficiency, personalization, and growth across every vertical.

Conclusion

LLM development is no longer a luxury or an experimental initiative—it is a strategic imperative for modern enterprises. By building smarter AI systems powered by large language models, businesses can transform how they operate, innovate, and deliver value. From automating routine tasks to enhancing human creativity, LLMs are enabling a new era of intelligent enterprise solutions.

As organizations invest in infrastructure, talent, and governance to support LLM initiatives, the payoff will be seen in improved efficiency, reduced costs, and stronger customer relationships. The companies that lead in LLM development today are not just adopting a new technology—they are building the foundation for the AI-driven enterprises of tomorrow.

#crypto#ai#blockchain#ai generated#cryptocurrency#blockchain app factory#dex#ico#ido#blockchainappfactory

How Much Does It Cost to Build an AI Video Agent? A Comprehensive 2025 Guide

In today’s digital era, video content dominates the online landscape. From social media marketing to corporate training, video is one of the most engaging media for communication. However, creating high-quality videos requires time, skill, and resources. This is where AI Video Agents come into play: automated systems designed to streamline video creation, editing, and management using cutting-edge technology.

If you’re considering investing in an AI Video Agent, one of the first questions you’ll ask is: How much does it cost to build one? This comprehensive guide will walk you through the key factors, cost breakdowns, and considerations involved in developing an AI Video Agent in 2025. Whether you’re a startup, multimedia company, or enterprise looking for advanced AI Video Solutions, this article will help you understand what to expect.

What Is an AI Video Agent?

An AI Video Agent is a software platform that leverages artificial intelligence to automate and enhance various aspects of video production. This includes:

AI video editing: Automatically trimming, color grading, adding effects, or generating subtitles.

AI video generation: Creating videos from text, images, or data inputs without manual filming.

Video content analysis: Understanding video context, tagging scenes, or summarizing content.

Personalization: Tailoring video content to specific audiences or user preferences.

Integration: Seamlessly working with other marketing, analytics, or content management systems.

These capabilities make AI Video Agents invaluable for businesses seeking scalable, efficient, and cost-effective video creation workflows.
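To make the "generating subtitles" capability concrete: subtitle generation usually ends with writing timed text into a caption format. Below is a minimal sketch, assuming the transcript segments (start, end, text) have already been produced by a speech-recognition step that is not shown. `Caption`, `format_timestamp`, and `to_srt` are hypothetical names, and SRT is only one of several common caption formats.

```python
from dataclasses import dataclass


@dataclass
class Caption:
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def format_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(captions: list[Caption]) -> str:
    """Render captions as numbered SRT cues, ordered by start time."""
    blocks = []
    for i, cap in enumerate(sorted(captions, key=lambda c: c.start), start=1):
        if cap.end <= cap.start:
            raise ValueError(f"caption {i} ends before it starts")
        blocks.append(
            f"{i}\n{format_timestamp(cap.start)} --> {format_timestamp(cap.end)}\n{cap.text}\n"
        )
    # SRT cues are separated by a blank line.
    return "\n".join(blocks)


print(to_srt([Caption(0.0, 2.5, "Hello"), Caption(2.5, 4.0, "World")]))
```

A real agent would also have to handle line-length limits and reading speed, which this sketch ignores.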

Why Are AI Video Agents in Demand?

The rise of video marketing, e-learning, and digital entertainment has created an urgent need for faster and smarter video creation tools. Traditional video editing and production are labor-intensive and costly, often requiring skilled professionals and expensive equipment.

AI video applications can:

Accelerate video production timelines.

Reduce human error and repetitive tasks.

Enable non-experts to create professional-quality videos.

Provide data-driven insights to optimize video content.

Support multi-language and multi-format video creation.

This explains why many companies are partnering with AI video solutions companies or investing in custom AI video software development to build AI video creators tailored to their needs.

Key Components of an AI Video Agent

Before diving into costs, it’s important to understand what goes into building an AI Video Agent. The main components include:

1. Data Collection and Preparation

AI video creators rely heavily on large datasets of annotated videos, images, and audio to train machine learning models. This step involves:

Collecting diverse video samples.

Labeling and annotating key features (e.g., objects, scenes, speech).

Cleaning and formatting data for training.
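The cleaning step above is mostly about rejecting annotations that would confuse training. Here is a minimal sketch, assuming each annotation is a labeled time span within a video and that video durations are known. The `Annotation` record and `clean_annotations` function are hypothetical, and real pipelines usually add checks for overlapping or conflicting labels as well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen makes records hashable, so duplicates can be detected
class Annotation:
    video_id: str
    start: float  # seconds
    end: float    # seconds
    label: str    # e.g. "car", "speech", "scene:beach"


def clean_annotations(raw: list[Annotation], duration: dict[str, float]) -> list[Annotation]:
    """Drop malformed annotations, normalize labels, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for ann in raw:
        label = ann.label.strip().lower()
        if not label:
            continue  # missing label
        if ann.video_id not in duration:
            continue  # points at a video we do not have
        if not (0 <= ann.start < ann.end <= duration[ann.video_id]):
            continue  # empty, inverted, or out-of-range time span
        fixed = Annotation(ann.video_id, ann.start, ann.end, label)
        if fixed in seen:
            continue  # duplicate after normalization
        seen.add(fixed)
        cleaned.append(fixed)
    return cleaned
```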

2. Model Development and Training

This is the core AI development phase where algorithms are designed and trained to perform tasks such as:

Video segmentation and object detection.

Natural language processing for script-to-video generation.

Style transfer and video enhancement.

Automated editing decisions.

Deep learning models, including convolutional neural networks (CNNs) and transformers, are commonly used.
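Before training deep models, it helps to see what "video segmentation" means at its simplest. The sketch below swaps in a classical technique rather than a deep learning model: shot-boundary detection by comparing intensity histograms of consecutive frames. It assumes grayscale frames as NumPy arrays with values in 0 to 255. The 32 bins and the 0.5 threshold are arbitrary assumptions that would need tuning on real footage.

```python
import numpy as np


def gray_histogram(frame: np.ndarray, bins: int = 32) -> np.ndarray:
    """Normalized intensity histogram of a grayscale frame (values 0..255)."""
    hist, _ = np.histogram(frame, bins=bins, range=(0, 256))
    return hist / hist.sum()


def detect_cuts(frames: list[np.ndarray], threshold: float = 0.5) -> list[int]:
    """Return indices i where a cut likely occurs between frame i-1 and frame i.

    The distance is half the L1 difference between consecutive histograms,
    which ranges from 0 (identical) to 1 (no overlap at all).
    """
    cuts = []
    prev = gray_histogram(frames[0])
    for i in range(1, len(frames)):
        cur = gray_histogram(frames[i])
        distance = 0.5 * np.abs(cur - prev).sum()
        if distance > threshold:
            cuts.append(i)
        prev = cur
    return cuts
```

Learned models replace the hand-picked histogram feature with features trained on annotated data, which is why the data preparation step matters so much.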

3. Software Engineering and UI/UX Design

Developers build the user interface and backend systems that allow users to interact with the AI video editor or generator. This includes:

Web or mobile app development.

Cloud infrastructure for processing and storage.

APIs for integration with other platforms.

4. Integration and Deployment

The AI Video Agent needs to be integrated with existing workflows, such as content management systems, marketing automation tools, or social media platforms. Deployment may involve cloud services like AWS, Azure, or Google Cloud.

5. Testing and Quality Assurance

Extensive testing ensures the AI video creation tool works reliably across different scenarios and devices.

6. Maintenance and Updates

Post-launch support includes fixing bugs, updating models with new data, and adding features.

Detailed Cost Breakdown

The cost of building an AI Video Agent varies widely depending on complexity, scale, and specific requirements. Below is a detailed breakdown of typical expenses.

| Component | Estimated Cost Range (USD) | Notes |
|---|---|---|
| Data Collection & Preparation | $10,000 – $100,000+ | Larger, high-quality datasets increase costs; proprietary data is pricier. |
| Model Development & Training | $30,000 – $200,000+ | Advanced deep learning models require more time and computational resources. |
| Software Engineering | $40,000 – $150,000+ | Includes frontend, backend, UI/UX, cloud infrastructure, and APIs. |
| Integration & Deployment | $10,000 – $50,000+ | Depends on the number and complexity of integrations. |
| Licensing & Tools | $5,000 – $50,000+ | Third-party SDKs, cloud compute costs, and software licenses. |
| Testing & QA | $5,000 – $20,000+ | Ensures reliability and user experience. |
| Maintenance & Updates (Annual) | $10,000 – $40,000+ | Ongoing support, bug fixes, and model retraining. |
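To turn the cost breakdown into a budget range, you can add up the rows that apply to your project. Here is a minimal sketch using the figures from the breakdown. The component keys and the `estimate` function are hypothetical. Because every upper bound ends in "+", the high total is a floor for the top end, not a ceiling.

```python
# One-time cost ranges in USD, taken from the cost breakdown.
COST_RANGES = {
    "data": (10_000, 100_000),
    "model": (30_000, 200_000),
    "engineering": (40_000, 150_000),
    "integration": (10_000, 50_000),
    "licensing": (5_000, 50_000),
    "testing": (5_000, 20_000),
}
# Recurring cost per year, also from the breakdown.
ANNUAL_MAINTENANCE = (10_000, 40_000)


def estimate(components, years_of_maintenance=0):
    """Sum low/high bounds for the chosen components plus maintenance years."""
    low = sum(COST_RANGES[c][0] for c in components)
    high = sum(COST_RANGES[c][1] for c in components)
    low += years_of_maintenance * ANNUAL_MAINTENANCE[0]
    high += years_of_maintenance * ANNUAL_MAINTENANCE[1]
    return low, high


print(estimate(COST_RANGES, years_of_maintenance=1))
```

Adding up every row's minimum gives $100,000. That is above the basic scenario below, so a basic build presumably skips or shrinks several line items, for example by reusing existing models.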

Example Cost Scenarios

Basic AI Video Agent

Features: Automated trimming, captioning, simple effects.

Target users: Small businesses, content creators.

Estimated cost: $20,000 – $50,000.

Timeframe: 3-6 months.

Intermediate AI Video Agent

Features: Script-to-video generation, multi-language support, style transfer.

Target users: Marketing agencies, multimedia companies.

Estimated cost: $100,000 – $250,000.

Timeframe: 6-12 months.

Advanced AI Video Agent

Features: Real-time video editing, deep personalization, multi-format export, enterprise integrations.

Target users: Large enterprises, AI video application companies.

Estimated cost: $300,000+.

Timeframe: 12+ months.

Factors That Influence Cost

1. Feature Complexity

More advanced features, such as AI clip generator capabilities, voice synthesis, or 3D video creation, significantly increase development time and cost.

2. Data Quality and Quantity

High-quality, diverse datasets are crucial for effective AI video creation tools. Licensing proprietary datasets or creating custom datasets can be expensive.

3. Platform and Deployment

Building a cloud-based AI video creation tool with scalable infrastructure costs more than a simple desktop application.

4. Customization Level

Tailoring the AI Video Agent to specific industries (e.g., healthcare, education) or branding requirements adds to the cost.

5. Team Expertise

Hiring experienced AI developers, data scientists, and multimedia engineers commands premium rates but usually produces better results.

Alternatives to Building From Scratch

If your budget is limited or you want to test the waters, several established AI video generators and video maker platforms offer ready-made solutions:

Synthesia: AI video creator focused on avatar-based videos.

Runway: AI video editor with creative tools.

Lumen5: AI-powered video creation from blog posts.

InVideo: Easy-to-use AI video generator for marketers.

These platforms offer subscription-based pricing, allowing you to create videos with AI without a heavy upfront investment.

How to Choose the Right AI Video Solutions Company

When partnering with an AI Video Solutions Company or AI Video Software Company, consider these factors:

Proven track record: Look for companies with successful AI video projects.

Transparency: Clear pricing and project timelines.

Technical expertise: Experience in AI for video creation and multimedia development.

Customization capabilities: Ability to tailor solutions to your unique needs.

Support and maintenance: Reliable post-launch assistance.

The Future of AI Video Creation

As AI technology advances, the cost of building AI Video Agents is expected to decrease due to improved tools, open-source frameworks, and more efficient algorithms. Meanwhile, the capabilities will expand to include:

Hyper-personalized video marketing.

Real-time interactive video content.

AI-powered video analytics and optimization.

Integration with AR/VR and metaverse platforms.

Investing in AI video creation tools today positions your business to stay ahead in the evolving multimedia landscape.

Conclusion

Building an AI Video Agent is a significant but rewarding investment. Depending on your requirements, the cost can range from $20,000 for a basic AI video editor to over $300,000 for a sophisticated enterprise-grade AI video creation tool. Understanding the components, cost drivers, and alternatives will help you make informed decisions.

Whether you want to develop a custom AI video generator or leverage existing AI video creation tools, partnering with the right AI Video Applications Company or multimedia company is crucial. With the right strategy, you can harness AI for video creation to boost engagement, reduce production costs, and accelerate your content pipeline.


AI Research Methods: Designing and Evaluating Intelligent Systems

The field of artificial intelligence (AI) is evolving rapidly, and with it, the importance of understanding its core methodologies. Whether you're a beginner in tech or a researcher delving into machine learning, it’s essential to be familiar with the foundational artificial intelligence course subjects that shape the study and application of intelligent systems. These subjects provide the tools, frameworks, and scientific rigor needed to design, develop, and evaluate AI-driven technologies effectively.

What Are AI Research Methods?

AI research methods are the systematic approaches used to investigate and create intelligent systems. These methods allow researchers and developers to model intelligent behavior, simulate reasoning processes, and validate the performance of AI models.

Broadly, AI research spans several domains, including natural language processing (NLP), computer vision, robotics, expert systems, and neural networks. The aim is not only to make systems smarter but also to ensure they are safe, ethical, and efficient in solving real-world problems.

Core Approaches in AI Research

1. Symbolic (Knowledge-Based) AI

This approach focuses on logic, rules, and knowledge representation. Researchers design systems that mimic human reasoning through formal logic. The expert system MYCIN, for example, used a rule-based framework to diagnose bacterial infections and recommend antibiotic treatments.

Symbolic AI is particularly useful in domains where rules are well-defined. However, it struggles in areas involving uncertainty or massive data inputs—challenges addressed more effectively by modern statistical methods.
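A rule-based system at its simplest keeps applying if-then rules to known facts until nothing new can be derived. The sketch below is a toy forward-chaining engine with a hypothetical animal-identification rule set. It is not how MYCIN worked: MYCIN reasoned backward from goals and attached certainty factors to its conclusions.

```python
def forward_chain(facts: set[str], rules: list[tuple[frozenset[str], str]]) -> set[str]:
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule when all its conditions are already known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy knowledge base.
RULES = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "cannot_fly", "swims"}), "is_penguin"),
    (frozenset({"has_fur", "gives_milk"}), "is_mammal"),
]

print(sorted(forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)))
```

Every conclusion can be traced back to the rules that produced it, which is the explainability advantage of symbolic approaches. The weakness the text mentions also shows here: there is no notion of "probably a penguin".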

2. Machine Learning

Machine learning (ML) is one of the most active research areas in AI. It involves algorithms that learn from data to make predictions or decisions without being explicitly programmed. Supervised learning, unsupervised learning, and reinforcement learning are key types of ML.

This approach thrives in pattern recognition tasks such as facial recognition, recommendation engines, and speech-to-text applications. It heavily relies on data availability and quality, making dataset design and preprocessing crucial research activities.
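"Learning from data without being explicitly programmed" is easiest to see with a very small supervised learner. The sketch below is a nearest-centroid classifier, chosen for its simplicity rather than as a representative modern model. Training just averages the feature vectors of each class, and prediction picks the class with the closest average. The data points and the function names `fit_centroids` and `predict` are hypothetical.

```python
from math import dist


def fit_centroids(X, y):
    """Learn one centroid (mean feature vector) per class label."""
    sums, counts = {}, {}
    for features, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(features))
        for j, value in enumerate(features):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled training data: two well-separated clusters.
X = [[1, 1], [1, 2], [2, 1], [8, 8], [9, 8], [8, 9]]
y = ["a", "a", "a", "b", "b", "b"]
model = fit_centroids(X, y)
print(predict(model, [2, 2]), predict(model, [7, 9]))
```

No rule for "a" or "b" is written by hand. The decision boundary comes entirely from the training examples, which is why data quality dominates results.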

3. Neural Networks and Deep Learning

Deep learning uses multi-layered neural networks to model complex patterns and behaviors. It’s particularly effective for tasks like image recognition, voice synthesis, and language translation.

Research in this area explores architecture design (e.g., convolutional neural networks, transformers), optimization techniques, and scalability for real-world applications. Evaluation often involves benchmarking models on standard datasets and fine-tuning for specific tasks.
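A small example shows why the "multi-layered" part matters. XOR cannot be computed by a single linear layer, but two layers with a ReLU in between can do it. In the sketch below the weights are set by hand for illustration. In practice they would be learned with gradient descent, and the variable names are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(0, x)


# Hand-set weights for a 2-2-1 network that computes XOR.
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])   # input -> hidden
b1 = np.array([0.0, -1.0])    # hidden units compute x1+x2 and x1+x2-1
W2 = np.array([1.0, -2.0])    # hidden -> output
b2 = 0.0


def forward(X):
    """Forward pass: linear layer, ReLU, then a linear output layer."""
    hidden = relu(X @ W1 + b1)
    return hidden @ W2 + b2


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(forward(X))
```

The hidden layer re-represents the inputs so that the output layer can separate them. Deeper architectures such as CNNs and transformers apply the same idea at much larger scale.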

4. Evolutionary Algorithms

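These methods take inspiration from biological evolution. Genetic algorithms and genetic programming evolve solutions to problems by repeatedly selecting the best-performing candidates from a population and recombining and mutating them. Related population-based methods, such as swarm intelligence, draw instead on the collective behavior of groups like ant colonies or bird flocks.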

AI researchers apply these techniques in optimization problems, game design, and robotics, where traditional programming struggles to adapt to dynamic environments.
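Below is a minimal genetic algorithm on the toy "OneMax" problem, where the goal is to maximize the number of 1s in a bit string. It uses truncation selection, one-point crossover, bit-flip mutation, and elitism. The population size, mutation rate, and generation count are arbitrary assumptions, and a real application would replace `fitness` with a domain-specific score.

```python
import random


def fitness(bits):
    """OneMax fitness: the number of 1s in the candidate."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)  # seeded for reproducibility
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # truncation selection: keep the top half
        children = [population[0][:]]          # elitism: carry the best over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_bits)     # one-point crossover
            child = a[:cut] + b[cut:]
            # Bit-flip mutation with a small per-gene probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
    best = max(population, key=fitness)
    return best, history


best, history = evolve()
print(fitness(best), history[:5])
```

Elitism guarantees that the best fitness never decreases from one generation to the next. Mutation keeps the population from converging too early.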

5. Probabilistic Models

When systems must reason under uncertainty, probabilistic methods like Bayesian networks and Markov decision processes offer powerful frameworks. Researchers use these to create models that can weigh risks and make decisions in uncertain conditions, such as medical diagnostics or autonomous driving.
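The simplest case of probabilistic reasoning is a single application of Bayes' rule, the building block that Bayesian networks chain together. The sketch below computes the probability of a condition given a positive diagnostic test. The prevalence, sensitivity, and specificity values are hypothetical, chosen only for illustration.

```python
def posterior(prior, sensitivity, specificity):
    """P(condition | positive test) via Bayes' rule."""
    true_pos = sensitivity * prior                 # P(positive and condition)
    false_pos = (1 - specificity) * (1 - prior)    # P(positive and no condition)
    return true_pos / (true_pos + false_pos)


# Hypothetical numbers: 1% prevalence, 90% sensitivity, 95% specificity.
p = posterior(prior=0.01, sensitivity=0.90, specificity=0.95)
print(f"{p:.3f}")  # prints 0.154
```

Even with a fairly accurate test, a positive result here means only about a 15% chance of the condition, because the condition is rare. Weighing evidence against base rates like this is exactly what probabilistic models do.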

Designing Intelligent Systems

Designing an AI system requires careful consideration of the task, data, and objectives. The process typically includes:

Defining the Problem: What is the task? Classification, regression, decision-making, or language translation?

Choosing the Right Model: Depending on the problem type, researchers select symbolic models, neural networks, or hybrid systems.

Data Collection and Preparation: Good data is essential. Researchers clean, preprocess, and annotate data before feeding it into the model.

Training and Testing: The system learns from training data and is evaluated on unseen test data.

Evaluation Metrics: Accuracy, precision, recall, F1 score, or area under the curve (AUC) are commonly used to assess performance.

Iteration and Optimization: Models are tuned, retrained, and improved over time.
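The evaluation metrics listed above have short definitions. The sketch below computes them for a binary classifier from true labels (1 = positive), hard predictions, and scores. AUC is computed as the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counting half. The function names are hypothetical. Libraries such as scikit-learn provide tested equivalents.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0]
scores = [0.9, 0.4, 0.3, 0.6, 0.8, 0.1]
print(classification_metrics(y_true, y_pred), auc(y_true, scores))
```

The pairwise AUC is O(positives × negatives), which is fine for illustration. Rank-based implementations scale better on large test sets.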

Evaluating AI Systems

Evaluating an AI system goes beyond just checking accuracy. Researchers must also consider:

Robustness: Does the system perform well under changing conditions?

Fairness: Are there biases in the predictions?

Explainability: Can humans understand how the system made a decision?

Efficiency: Does it meet performance standards in real-time settings?

Scalability: Can the system be applied to large-scale environments?

These factors are increasingly important as AI systems are integrated into critical industries like healthcare, finance, and security.
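The fairness question ("Are there biases in the predictions?") can be checked by breaking metrics down per group instead of reporting a single number. The sketch below reports per-group accuracy and selection rate (the share of positive predictions), plus the gap between the highest and lowest selection rates. That gap is often called the demographic parity difference, and it is only one of several competing fairness definitions. The group labels and function names are hypothetical.

```python
from collections import defaultdict


def group_report(y_true, y_pred, groups):
    """Per-group accuracy and selection rate (share of positive predictions)."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["correct"] += int(t == p)
        s["positive"] += int(p == 1)
    return {
        g: {"accuracy": s["correct"] / s["n"], "selection_rate": s["positive"] / s["n"]}
        for g, s in stats.items()
    }


def demographic_parity_gap(report):
    """Largest difference in selection rate between any two groups."""
    rates = [r["selection_rate"] for r in report.values()]
    return max(rates) - min(rates)


report = group_report([1, 0, 1, 0], [1, 0, 1, 1], ["a", "a", "b", "b"])
print(report, demographic_parity_gap(report))
```

A model can look accurate overall while performing much worse for one group, and a per-group breakdown like this makes that visible.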

The Ethical Dimension

Modern AI research doesn’t operate in a vacuum. With powerful tools comes the responsibility to ensure ethical standards are met. Questions around data privacy, surveillance, algorithmic bias, and AI misuse have become central to contemporary research discussions.

Ethics are now embedded in many artificial intelligence course subjects, prompting students and professionals to consider societal impact alongside technical performance.

Conclusion

AI research methods offer a structured path to innovation, enabling us to build intelligent systems that can perceive, reason, and act. Whether you're designing a chatbot, developing a recommendation engine, or improving healthcare diagnostics, understanding these methods is crucial for success.