#T-SQL in Power Query


Text

Streamlining Power BI Report Deployment for Multiple Customers with Dynamic Data Sources.

Navigating the maze of business intelligence, especially when it comes to crafting and rolling out Power BI reports for a diverse client base, each with their unique data storage systems, is no small feat. The trick lies in concocting a reporting solution that’s as flexible as it is robust, capable of connecting to a variety of data sources without the need for constant tweaks. This isn’t just…


#automated Power BI deployment#multi-customer Power BI reports#Power BI data source parameters#Power BI dynamic data sources#T-SQL in Power Query

0 notes

Text

Top 5 Selling Odoo Modules.

In the dynamic world of business, having the right tools can make all the difference. For Odoo users, certain modules stand out for their ability to enhance data management and operations, helping you optimize your Odoo implementation and leverage its full potential.

That's where Odoo ERP can be a lifesaver for your business. This comprehensive solution integrates various functions into one centralized platform, tailor-made for the digital economy.

Let’s dive into the five top-selling modules that can revolutionize your Odoo experience:

Dashboard Ninja with AI, Odoo Power BI Connector, Odoo Data Model, Google Sheets Connector, and Odoo Looker Studio Connector.

1. Dashboard Ninja with AI:

Use this module to create striking reports with the smart Dashboard Ninja app for Odoo. See your business from a 360-degree angle with an interactive, attractive dashboard.

Some Key Features:

Real-time streaming Dashboard

Advanced data filter

Create charts from Excel and CSV files

Fluid and flexible layout

Download dashboard items

This module gives you AI suggestions for improving your operational efficiencies.

2. Odoo Power BI Connector:

This module provides a direct connection between Odoo and Power BI Desktop, a powerful data visualization tool.

Some Key features:

Secure token-based connection.

Proper schema and data type handling.

Fetch custom tables from Odoo.

Real-time data updates.

With Power BI, you can make informed decisions based on real-time data analysis and visualization.

3. Odoo Data Model:

The Odoo Data Model is the backbone of the entire system. It defines how your data is stored, structured, and related within the application.

Key Features:

Relations & fields: Developers can easily find relations (one-to-many, many-to-many, and many-to-one) between data tables and define fields (columns).

Object Relational mapping: Odoo ORM allows developers to define models (classes) that map to database tables.

The module allows you to use SQL query extensions and download data in Excel Sheets.

4. Google Sheet Connector:

This connector bridges the gap between Odoo and Google Sheets.

Some Key features:

Real-time data synchronization and transfer between Odoo and Spreadsheet.

One-time setup; no need to wrestle with APIs.

Transfer multiple tables swiftly.

Helps your team’s workflow by making Odoo data accessible in a sheet format.

5. Odoo Looker Studio Connector:

The Looker Studio connector by Techfinna integrates Odoo data with Looker Studio, a powerful data analytics and visualization platform.

Some Key Features:

Directly integrate Odoo data to Looker Studio with just a few clicks.

The connector automatically retrieves and maps Odoo table schemas in their native data types.

Manual and scheduled data refresh.

Execute custom SQL queries for selective data fetching.

The module helps you build detailed reports and provides deeper business intelligence.

These modules improve analytics, customization, and reporting, and setting them up can significantly enhance your operational efficiency. Let’s embrace these modules and take your Odoo experience to the next level.

Need Help?

I hope you find the blog helpful. Please share your feedback and suggestions.

For flawless Odoo Connectors, implementation, and services contact us at

[email protected] or www.techneith.com

#odoo#powerbi#connector#looker#studio#google#microsoft#techfinna#ksolves#odooerp#developer#web developers#integration#odooimplementation#crm#odoointegration#odooconnector

4 notes


Text

25 Udemy Paid Courses for Free with Certification (Only for Limited Time)

2023 Complete SQL Bootcamp from Zero to Hero in SQL

Become an expert in SQL by learning through concept & Hands-on coding :)

What you'll learn

Use SQL to query a database

Be comfortable putting SQL on their resume

Replicate real-world situations and query reports

Use SQL to perform data analysis

Learn to perform GROUP BY statements

Model real-world data and generate reports using SQL

Learn Oracle SQL by Professionally Designed Content Step by Step!

Solve any SQL-related Problems by Yourself Creating Analytical Solutions!

Write, Read and Analyze Any SQL Queries Easily and Learn How to Play with Data!

Become a Job-Ready SQL Developer by Learning All the Skills You will Need!

Write complex SQL statements to query the database and gain critical insight on data

Transition from the Very Basics to a Point Where You can Effortlessly Work with Large SQL Queries

Learn Advanced Querying Techniques

Understand the difference between the INNER JOIN, LEFT/RIGHT OUTER JOIN, and FULL OUTER JOIN

Complete SQL statements that use aggregate functions

Using joins, return columns from multiple tables in the same query

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Python Programming Complete Beginners Course Bootcamp 2023

2023 Complete Python Bootcamp || Python Beginners to advanced || Python Master Class || Mega Course

What you'll learn

Basics in Python programming

Control structures, Containers, Functions & Modules

OOPS in Python

How Python is used in the Space Sciences

Working with lists in Python

Working with strings in Python

Application of Python in Mars Rovers sent by NASA

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn PHP and MySQL for Web Application and Web Development

Unlock the Power of PHP and MySQL: Level Up Your Web Development Skills Today

What you'll learn

Use of PHP Function

Use of PHP Variables

Use of MySQL

Use of Database

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

T-Shirt Design for Beginner to Advanced with Adobe Photoshop

Unleash Your Creativity: Master T-Shirt Design from Beginner to Advanced with Adobe Photoshop

What you'll learn

Function of Adobe Photoshop

Tools of Adobe Photoshop

T-Shirt Design Fundamentals

T-Shirt Design Projects

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Complete Data Science BootCamp

Learn about Data Science, Machine Learning and Deep Learning and build 5 different projects.

What you'll learn

Learn about Libraries like Pandas and Numpy which are heavily used in Data Science. Build Impactful visualizations and charts using Matplotlib and Seaborn. Learn about Machine Learning LifeCycle and different ML algorithms and their implementation in sklearn. Learn about Deep Learning and Neural Networks with TensorFlow and Keras. Build 5 complete projects based on the concepts covered in the course.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Essentials User Experience Design Adobe XD UI UX Design

Learn UI Design, User Interface, User Experience design, UX design & Web Design

What you'll learn

How to become a UX designer

Become a UI designer

Full website design

All the techniques used by UX professionals

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Build a Custom E-Commerce Site in React + JavaScript Basics

Build a Fully Customized E-Commerce Site with Product Categories, Shopping Cart, and Checkout Page in React.

What you'll learn

Introduction to the Document Object Model (DOM)

The Foundations of JavaScript

JavaScript Arithmetic Operations

Working with Arrays, Functions, and Loops in JavaScript

JavaScript Variables, Events, and Objects

JavaScript Hands-On - Build a Photo Gallery and Background Color Changer

Foundations of React

How to Scaffold an Existing React Project

Introduction to JSON Server

Styling an E-Commerce Store in React and Building out the Shop Categories

Introduction to Fetch API and React Router

The concept of "Context" in React

Building a Search Feature in React

Validating Forms in React

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Complete Bootstrap & React Bootcamp with Hands-On Projects

Learn to Build Responsive, Interactive Web Apps using Bootstrap and React.

What you'll learn

Learn the Bootstrap Grid System

Learn to work with Bootstrap Three Column Layouts

Learn to Build Bootstrap Navigation Components

Learn to Style Images using Bootstrap

Build Advanced, Responsive Menus using Bootstrap

Build Stunning Layouts using Bootstrap Themes

Learn the Foundations of React

Work with JSX, and Functional Components in React

Build a Calculator in React

Learn the React State Hook

Debug React Projects

Learn to Style React Components

Build a Single and Multi-Player Connect-4 Clone with AI

Learn React Lifecycle Events

Learn React Conditional Rendering

Build a Fully Custom E-Commerce Site in React

Learn the Foundations of JSON Server

Work with React Router

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Build an Amazon Affiliate E-Commerce Store from Scratch

Earn Passive Income by Building an Amazon Affiliate E-Commerce Store using WordPress, WooCommerce, WooZone, & Elementor

What you'll learn

Registering a Domain Name & Setting up Hosting

Installing WordPress CMS on Your Hosting Account

Navigating the WordPress Interface

The Advantages of WordPress

Securing a WordPress Installation with an SSL Certificate

Installing Custom Themes for WordPress

Installing WooCommerce, Elementor, & WooZone Plugins

Creating an Amazon Affiliate Account

Importing Products from Amazon to an E-Commerce Store using WooZone Plugin

Building a Customized Shop with Menus, Headers, Branding, & Sidebars

Building WordPress Pages, such as Blogs, About Pages, and Contact Us Forms

Customizing Product Pages on a WordPress Power E-Commerce Site

Generating Traffic and Sales for Your Newly Published Amazon Affiliate Store

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

The Complete Beginner Course to Optimizing ChatGPT for Work

Learn how to make the most of ChatGPT's capabilities in efficiently aiding you with your tasks.

What you'll learn

Learn how to harness ChatGPT's functionalities to efficiently assist you in various tasks, maximizing productivity and effectiveness.

Delve into the captivating fusion of product development and SEO, discovering effective strategies to identify challenges, create innovative tools, and expertly…

Understand how ChatGPT is a technological leap, akin to the impact of iconic tools like Photoshop and Excel, and how it can revolutionize work methodologies thr…

Showcase your learning by creating a transformative project, optimizing your approach to work by identifying tasks that can be streamlined with artificial intel…

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

AWS, JavaScript, React | Deploy Web Apps on the Cloud

Cloud Computing | Linux Foundations | LAMP Stack | DBMS | Apache | NGINX | AWS IAM | Amazon EC2 | JavaScript | React

What you'll learn

Foundations of Cloud Computing on AWS and Linode

Cloud Computing Service Models (IaaS, PaaS, SaaS)

Deploying and Configuring a Virtual Instance on Linode and AWS

Secure Remote Administration for Virtual Instances using SSH

Working with SSH Key Pair Authentication

The Foundations of Linux (Maintenance, Directory Commands, User Accounts, Filesystem)

The Foundations of Web Servers (NGINX vs Apache)

Foundations of Databases (SQL vs NoSQL), Database Transaction Standards (ACID vs CAP)

Key Terminology for Full Stack Development and Cloud Administration

Installing and Configuring LAMP Stack on Ubuntu (Linux, Apache, MariaDB, PHP)

Server Security Foundations (Network vs Hosted Firewalls)

Horizontal and Vertical Scaling of a virtual instance on Linode using NodeBalancers

Creating Manual and Automated Server Images and Backups on Linode

Understanding the Cloud Computing Phenomenon as Applicable to AWS

The Characteristics of Cloud Computing as Applicable to AWS

Cloud Deployment Models (Private, Community, Hybrid, VPC)

Foundations of AWS (Registration, Global vs Regional Services, Billing Alerts, MFA)

AWS Identity and Access Management (Mechanics, Users, Groups, Policies, Roles)

Amazon Elastic Compute Cloud (EC2) - (AMIs, EC2 Users, Deployment, Elastic IP, Security Groups, Remote Admin)

Foundations of the Document Object Model (DOM)

Manipulating the DOM

Foundations of JavaScript Coding (Variables, Objects, Functions, Loops, Arrays, Events)

Foundations of ReactJS (Code Pen, JSX, Components, Props, Events, State Hook, Debugging)

Intermediate React (Passing Props, Destructuring, Styling, Key Property, AI, Conditional Rendering, Deployment)

Building a Fully Customized E-Commerce Site in React

Intermediate React Concepts (JSON Server, Fetch API, React Router, Styled Components, Refactoring, UseContext Hook, UseReducer, Form Validation)

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Run Multiple Sites on a Cloud Server: AWS & Digital Ocean

Server Deployment | Apache Configuration | MySQL | PHP | Virtual Hosts | NS Records | DNS | AWS Foundations | EC2

What you'll learn

A solid understanding of the fundamentals of remote server deployment and configuration, including network configuration and security. The ability to install and configure the LAMP stack, including the Apache web server, MySQL database server, and PHP scripting language. Expertise in hosting multiple domains on one virtual server, including setting up virtual hosts and managing domain names. Proficiency in virtual host file configuration, including creating and configuring virtual host files and understanding various directives and parameters. Mastery in DNS zone file configuration, including creating and managing DNS zone files and understanding various record types and their uses. A thorough understanding of AWS foundations, including the AWS global infrastructure, key AWS services, and features. A deep understanding of Amazon Elastic Compute Cloud (EC2) foundations, including creating and managing instances, configuring security groups, and networking. The ability to troubleshoot common issues related to remote server deployment, LAMP stack installation and configuration, virtual host file configuration, and D… An understanding of best practices for remote server deployment and configuration, including security considerations and optimization for performance. Practical experience in working with remote servers and cloud-based solutions through hands-on labs and exercises. The ability to apply the knowledge gained from the course to real-world scenarios and challenges faced in the field of web hosting and cloud computing. A competitive edge in the job market, with the ability to pursue career opportunities in web hosting and cloud computing.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Cloud-Powered Web App Development with AWS and PHP

AWS Foundations | IAM | Amazon EC2 | Load Balancing | Auto-Scaling Groups | Route 53 | PHP | MySQL | App Deployment

What you'll learn

Understanding of cloud computing and Amazon Web Services (AWS)

Proficiency in creating and configuring AWS accounts and environments

Knowledge of AWS pricing and billing models

Mastery of Identity and Access Management (IAM) policies and permissions

Ability to launch and configure Elastic Compute Cloud (EC2) instances

Familiarity with security groups, key pairs, and Elastic IP addresses

Competency in using AWS storage services, such as Elastic Block Store (EBS) and Simple Storage Service (S3)

Expertise in creating and using Elastic Load Balancers (ELB) and Auto Scaling Groups (ASG) for load balancing and scaling web applications

Knowledge of DNS management using Route 53

Proficiency in PHP programming language fundamentals

Ability to interact with databases using PHP and execute SQL queries

Understanding of PHP security best practices, including SQL injection prevention and user authentication

Ability to design and implement a database schema for a web application

Mastery of PHP scripting to interact with a database and implement user authentication using sessions and cookies

Competency in creating a simple blog interface using HTML and CSS and protecting the blog content using PHP authentication

Students will gain practical experience in creating and deploying a member-only blog with user authentication using PHP and MySQL on AWS

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

CSS, Bootstrap, JavaScript And PHP Stack Complete Course

CSS, Bootstrap And JavaScript And PHP Complete Frontend and Backend Course

What you'll learn

Introduction to Frontend and Backend technologies

Introduction to CSS, Bootstrap And JavaScript concepts, PHP Programming Language

Practically Getting Started With CSS Styles, CSS 2D Transform, CSS 3D Transform

Bootstrap Crash course with bootstrap concepts

Bootstrap Grid system, Forms, Badges And Alerts

Getting Started With Javascript Variables, Values and Data Types, Operators and Operands

Write JavaScript scripts and Gain knowledge in regard to general javaScript programming concepts

PHP Section Introduction to PHP, Various Operator types, PHP Arrays, PHP Conditional statements

Getting Started with PHP Function Statements And PHP Decision Making

PHP 7 concepts PHP CSPRNG And PHP Scalar Declaration

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn HTML - For Beginners

Learn how to create web pages using HTML

What you'll learn

How to Code in HTML

Structure of an HTML Page

Text Formatting in HTML

Embedding Videos

Creating Links

Anchor Tags

Tables & Nested Tables

Building Forms

Embedding Iframes

Inserting Images

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn Bootstrap - For Beginners

Learn to create mobile-responsive web pages using Bootstrap

What you'll learn

Bootstrap Page Structure

Bootstrap Grid System

Bootstrap Layouts

Bootstrap Typography

Styling Images

Bootstrap Tables, Buttons, Badges, & Progress Bars

Bootstrap Pagination

Bootstrap Panels

Bootstrap Menus & Navigation Bars

Bootstrap Carousel & Modals

Bootstrap Scrollspy

Bootstrap Themes

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

JavaScript, Bootstrap, & PHP - Certification for Beginners

A Comprehensive Guide for Beginners interested in learning JavaScript, Bootstrap, & PHP

What you'll learn

Master Client-Side and Server-Side Interactivity using JavaScript, Bootstrap, & PHP

Learn to create mobile responsive webpages using Bootstrap

Learn to create client and server-side validated input forms

Learn to interact with a MySQL Database using PHP

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Linode: Build and Deploy Responsive Websites on the Cloud

Cloud Computing | IaaS | Linux Foundations | Apache + DBMS | LAMP Stack | Server Security | Backups | HTML | CSS

What you'll learn

Understand the fundamental concepts and benefits of Cloud Computing and its service models. Learn how to create, configure, and manage virtual servers in the cloud using Linode. Understand the basic concepts of Linux operating system, including file system structure, command-line interface, and basic Linux commands. Learn how to manage users and permissions, configure network settings, and use package managers in Linux. Learn about the basic concepts of web servers, including Apache and Nginx, and databases such as MySQL and MariaDB. Learn how to install and configure web servers and databases on Linux servers. Learn how to install and configure LAMP stack to set up a web server and database for hosting dynamic websites and web applications. Understand server security concepts such as firewalls, access control, and SSL certificates. Learn how to secure servers using firewalls, manage user access, and configure SSL certificates for secure communication. Learn how to scale servers to handle increasing traffic and load. Learn about load balancing, clustering, and auto-scaling techniques. Learn how to create and manage server images. Understand the basic structure and syntax of HTML, including tags, attributes, and elements. Understand how to apply CSS styles to HTML elements, create layouts, and use CSS frameworks.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

PHP & MySQL - Certification Course for Beginners

Learn to Build Database Driven Web Applications using PHP & MySQL

What you'll learn

PHP Variables, Syntax, Variable Scope, Keywords

Echo vs. Print and Data Output

PHP Strings, Constants, Operators

PHP Conditional Statements

PHP Elseif, Switch, Statements

PHP Loops - While, For

PHP Functions

PHP Arrays, Multidimensional Arrays, Sorting Arrays

Working with Forms - Post vs. Get

PHP Server Side - Form Validation

Creating MySQL Databases

Database Administration with PhpMyAdmin

Administering Database Users, and Defining User Roles

SQL Statements - Select, Where, And, Or, Insert, Get Last ID

MySQL Prepared Statements and Multiple Record Insertion

PHP Isset

MySQL - Updating Records

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Linode: Deploy Scalable React Web Apps on the Cloud

Cloud Computing | IaaS | Server Configuration | Linux Foundations | Database Servers | LAMP Stack | Server Security

What you'll learn

Introduction to Cloud Computing

Cloud Computing Service Models (IaaS, PaaS, SaaS)

Cloud Server Deployment and Configuration (TFA, SSH)

Linux Foundations (File System, Commands, User Accounts)

Web Server Foundations (NGINX vs Apache, SQL vs NoSQL, Key Terms)

LAMP Stack Installation and Configuration (Linux, Apache, MariaDB, PHP)

Server Security (Software & Hardware Firewall Configuration)

Server Scaling (Vertical vs Horizontal Scaling, IP Swaps, Load Balancers)

React Foundations (Setup)

Building a Calculator in React (Code Pen, JSX, Components, Props, Events, State Hook)

Building a Connect-4 Clone in React (Passing Arguments, Styling, Callbacks, Key Property)

Building an E-Commerce Site in React (JSON Server, Fetch API, Refactoring)

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Internet and Web Development Fundamentals

Learn how the Internet Works and Setup a Testing & Production Web Server

What you'll learn

How the Internet Works

Internet Protocols (HTTP, HTTPS, SMTP)

The Web Development Process

Planning a Web Application

Types of Web Hosting (Shared, Dedicated, VPS, Cloud)

Domain Name Registration and Administration

Nameserver Configuration

Deploying a Testing Server using WAMP & MAMP

Deploying a Production Server on Linode, Digital Ocean, or AWS

Executing Server Commands through a Command Console

Server Configuration on Ubuntu

Remote Desktop Connection and VNC

SSH Server Authentication

FTP Client Installation

FTP Uploading

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Linode: Web Server and Database Foundations

Cloud Computing | Instance Deployment and Config | Apache | NGINX | Database Management Systems (DBMS)

What you'll learn

Introduction to Cloud Computing (Cloud Service Models)

Navigating the Linode Cloud Interface

Remote Administration using PuTTY, Terminal, SSH

Foundations of Web Servers (Apache vs. NGINX)

SQL vs NoSQL Databases

Database Transaction Standards (ACID vs. CAP Theorem)

Key Terms relevant to Cloud Computing, Web Servers, and Database Systems

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Java Training Complete Course 2022

Learn Java Programming language with Java Complete Training Course 2022 for Beginners

What you'll learn

You will learn how to write a complete Java program that takes user input, processes and outputs the results

You will learn OOPS concepts in Java

You will learn Java concepts such as console output, Java Variables and Data Types, Java Operators, and more

You will be able to use Java for Selenium in testing and development

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn To Create AI Assistant (JARVIS) With Python

How To Create AI Assistant (JARVIS) With Python Like the One from Marvel's Iron Man Movie

What you'll learn

How to create a personalized artificial intelligence assistant

How to create JARVIS AI

How to create an AI assistant

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Keyword Research, Free Backlinks, Improve SEO -Long Tail Pro

LongTailPro is the keyword research service we at Coursenvy use for ALL our clients! In this course, find SEO keywords,

What you'll learn

Learn everything Long Tail Pro has to offer from A to Z! Optimize keywords in your page/post titles, meta descriptions, social media bios, article content, and more! Create content that caters to the NEW Search Engine Algorithms and find endless keywords to rank for in ALL the search engines! Learn how to use ALL of the top-rated Keyword Research software online! Master analyzing your COMPETITIONS Keywords! Get High-Quality Backlinks that will ACTUALLY Help your Page Rank!

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

#udemy#free course#paid course for free#design#development#ux ui#xd#figma#web development#python#javascript#php#java#cloud

2 notes


Text

Exploring Dynamic SQL in T-SQL Server

Dynamic SQL in T-SQL Server: A Complete Guide to Building Dynamic Queries. Hello, fellow SQL enthusiasts! In this blog post, I will introduce you to Dynamic SQL, one of the most powerful and flexible features of T-SQL. Dynamic SQL allows you to construct and execute SQL statements at runtime, enabling more adaptable and responsive database operations. It…

0 notes

Text

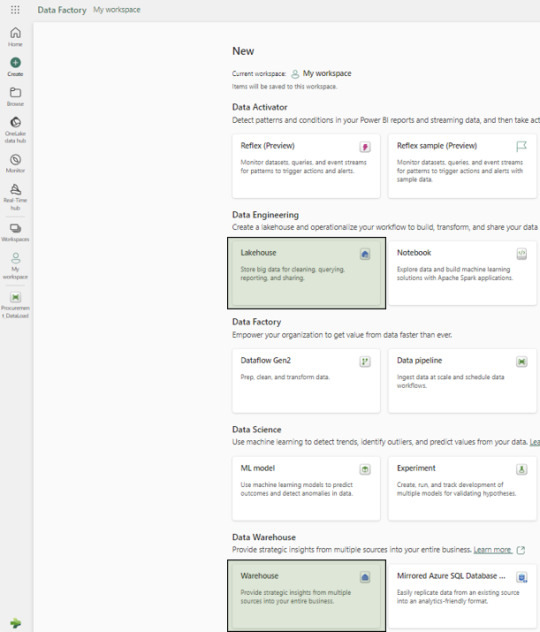

Microsoft Fabric data warehouse


What Is Microsoft Fabric and Why You Should Care?

A unified Software as a Service (SaaS) offering that provides an end-to-end analytics platform

Brings a set of tools together in one place; Microsoft Fabric OneLake supports seamless integration, enabling collaboration on this unified data analytics platform

Scalable Analytics

Accessibility from anywhere with an internet connection

Streamlines collaboration among data professionals

Supports a low-code to no-code approach

Components of Microsoft Fabric

Fabric provides comprehensive data analytics solutions, encompassing services for data movement and transformation, analysis and actions, and deriving insights and patterns through machine learning. Although Microsoft Fabric includes several components, this article will use three primary experiences: Data Factory, Data Warehouse, and Power BI.

Lake House vs. Warehouse: Which Data Storage Solution is Right for You?

In simple terms, the underlying storage format in both Lakehouses and Warehouses is the Delta format, which stores data as Parquet files together with a transaction log.

Usage and Format Support

A Lakehouse combines the capabilities of a data lake and a data warehouse, supporting unstructured, semi-structured, and structured formats. In contrast, a data Warehouse supports only structured formats.

When your organization needs to process big data characterized by high volume, velocity, and variety, and when you require data loading and transformation using Spark engines via notebooks, a Lakehouse is recommended. A Lakehouse can process both structured tables and unstructured/semi-structured files, offering managed and external table options. Microsoft Fabric OneLake serves as the foundational layer for storing structured and unstructured data. Notebooks can be used for READ and WRITE operations in a Lakehouse. However, you cannot connect to a Lakehouse directly with a SQL client; you must go through its SQL endpoint.

On the other hand, a Warehouse excels in processing and storing structured formats, utilizing stored procedures, tables, and views. Processing data in a Warehouse requires only T-SQL knowledge. It functions similarly to a typical RDBMS database but with a different internal storage architecture, as each table’s data is stored in the Delta format within OneLake. Users can access Warehouse data directly using any SQL client or the in-built graphical SQL editor, performing READ and WRITE operations with T-SQL and its elements like stored procedures and views. Notebooks can also connect to the Warehouse, but only for READ operations.

An SQL endpoint is like a special doorway that lets other computer programs talk to a database or storage system using a language called SQL. With this endpoint, you can ask questions (queries) to get information from the database, like searching for specific data or making changes to it. It’s kind of like using a search engine to find things on the internet, but for your data stored in the Fabric system. These SQL endpoints are often used for tasks like getting data, asking questions about it, and making changes to it within the Fabric system.

Choosing Between Lakehouse and Warehouse

The decision to use a Lakehouse or Warehouse depends on several factors:

Migrating from a Traditional Data Warehouse: If your organization does not have big data processing requirements, a Warehouse is suitable.

Migrating from a Mixed Big Data and Traditional RDBMS System: If your existing solution includes both a big data platform and traditional RDBMS systems with structured data, using both a Lakehouse and a Warehouse is ideal. Perform big data operations with notebooks connected to the Lakehouse and RDBMS operations with T-SQL connected to the Warehouse.

Note: In both scenarios, once the data resides in either a Lakehouse or a Warehouse, Power BI can connect to both using SQL endpoints.

A Glimpse into the Data Factory Experience in Microsoft Fabric

In the Data Factory experience, we focus primarily on two items: Data Pipeline and Data Flow.

Data Pipelines

Used to orchestrate different activities for extracting, loading, and transforming data.

Ideal for building reusable code that can be utilized across other modules.

Enables activity-level monitoring.

What can we compare Data Pipelines to?

Data Pipelines are similar to, but not the same as:

Informatica -> Workflows

ODI -> Packages

Dataflows

Utilized when a GUI tool with Power Query UI experience is required for building Extract, Transform, and Load (ETL) logic.

Employed when individual selection of source and destination components is necessary, along with the inclusion of various transformation logic for each table.

What can we compare Dataflows to?

Dataflows are similar to, but not the same as:

Informatica -> Mappings

ODI -> Mappings / Interfaces

Are You Ready to Migrate Your Data Warehouse to Microsoft Fabric?

Here is our solution for implementing the Medallion Architecture with Fabric data Warehouse:

Creation of New Workspace

We recommend creating separate workspaces for Semantic Models, Reports, and Data Pipelines as a best practice.

Creation of Warehouse and Lakehouse

Follow the on-screen instructions to set up a new Lakehouse and a Warehouse:

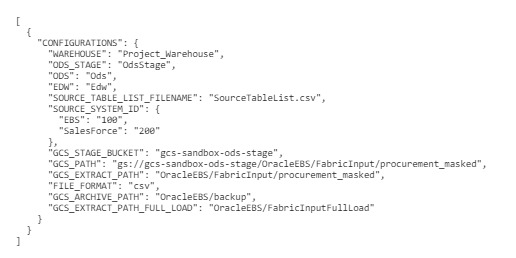

Configuration Setups

Create a configurations.json file containing parameters for data pipeline activities:

Source schema, buckets, and path

Destination warehouse name

Names of the warehouse layers (bronze, silver, and gold): OdsStage, Ods, and Edw

List of source tables/files in a specific format

Source system IDs for the different sources

Below is a screenshot of the configuration file (config_variables.json):

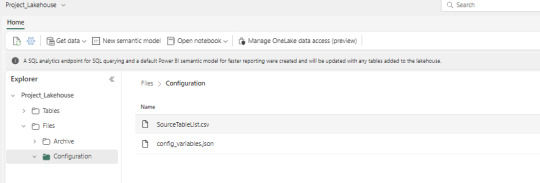

File Placement

Place the configurations.json and SourceTableList.csv files in the Fabric Lakehouse.

SourceTableList will have columns such as – SourceSystem, SourceDatasetId, TableName, PrimaryKey, UpdateKey, CDCColumnName, SoftDeleteColumn, ArchiveDate, ArchiveKey
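To make the file layout concrete, here is a minimal Python sketch of how the two files might be read and combined. The JSON key names (source_schema, layers, and so on) and the sample rows are assumptions for illustration only; the article lists the kinds of parameters but not their exact names, and in Fabric the pipeline itself would read these files.

```python
import csv
import io
import json

# Hypothetical configuration content: the key names are illustrative,
# only the layer names (OdsStage, Ods, Edw) come from the article.
CONFIG_JSON = """
{
  "source_schema": "sales",
  "source_bucket": "raw-landing",
  "source_path": "exports/daily",
  "destination_warehouse": "EnterpriseWarehouse",
  "layers": {"bronze": "OdsStage", "silver": "Ods", "gold": "Edw"}
}
"""

# Column names follow the SourceTableList description; the row values are made up.
SOURCE_TABLE_LIST_CSV = """SourceSystem,SourceDatasetId,TableName,PrimaryKey,UpdateKey,CDCColumnName,SoftDeleteColumn,ArchiveDate,ArchiveKey
ERP,1,Customer,CustomerId,ModifiedOn,ModifiedOn,IsDeleted,,
ERP,1,SalesOrder,OrderId,ModifiedOn,ModifiedOn,IsDeleted,,
"""


def load_config(text):
    """Parse the configuration JSON into a dictionary."""
    return json.loads(text)


def load_source_tables(text):
    """Parse the source table list into one dictionary per table."""
    return list(csv.DictReader(io.StringIO(text)))


config = load_config(CONFIG_JSON)
tables = load_source_tables(SOURCE_TABLE_LIST_CSV)

# Combine both inputs: each source table maps to a staging table in the bronze schema.
for row in tables:
    staging = f"{config['layers']['bronze']}.{row['TableName']}"
    print(f"{row['SourceSystem']}: {row['TableName']} -> {staging} (key: {row['PrimaryKey']})")
```

Keeping table-level metadata in a CSV and environment-level settings in JSON means a new source table is added by appending one row rather than by editing the pipeline.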

Data Pipeline Creation

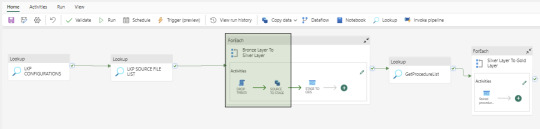

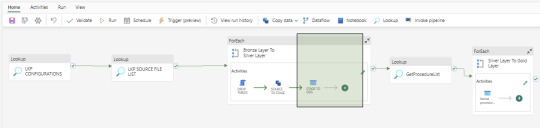

Create a data pipeline to orchestrate various activities for data extraction, loading, and transformation. The screenshots of the Data Pipeline show its different activities: Lookup, ForEach, Script, Copy Data, and Stored Procedure.

Bronze Layer Loading

Develop a dynamic activity to load data into the Bronze Layer (OdsStage schema in Warehouse). This layer truncates and reloads data each time.

We utilize two activities in this layer: Script Activity and Copy Data Activity. Both activities receive parameterized inputs from the Configuration file and SourceTableList file. The Script activity drops the staging table, and the Copy Data activity creates and loads data into the OdsStage table. These activities are reusable across modules and feature powerful capabilities for fast data loading.
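As a rough illustration of the drop-and-reload pattern, the Python sketch below builds, for each source table, the statement a Script activity might run and the destination a Copy Data activity would then create and load. The helper names are hypothetical, and the actual pipeline presumably assembles these values from its parameters rather than in Python.

```python
def quote_identifier(name):
    """Wrap a name in T-SQL square brackets, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_drop_staging_sql(stage_schema, table_name):
    """Build the statement that removes the staging table before a reload."""
    target = f"{quote_identifier(stage_schema)}.{quote_identifier(table_name)}"
    return f"DROP TABLE IF EXISTS {target};"


def plan_bronze_load(stage_schema, table_names):
    """Return, per table, the script text and the copy destination."""
    return [
        {
            "table": name,
            "script_sql": build_drop_staging_sql(stage_schema, name),
            "copy_destination": f"{stage_schema}.{name}",
        }
        for name in table_names
    ]


# Table names are made up; OdsStage is the bronze schema named in the article.
plan = plan_bronze_load("OdsStage", ["Customer", "SalesOrder"])
for step in plan:
    print(step["script_sql"], "-> copy into", step["copy_destination"])
```

Because the staging table is dropped and recreated on every run, schema changes in the source flow through without manual ALTER statements, at the cost of reloading the full table each time.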

Silver Layer Loading

Establish a dynamic activity to UPSERT data into the Silver layer (Ods schema in Warehouse) using a stored procedure activity. This procedure takes parameterized inputs from the Configuration file and SourceTableList file, handling both UPDATE and INSERT operations. This stored procedure is reusable. At this time, MERGE statements are not supported by Fabric Warehouse. However, this feature may be added in the future.

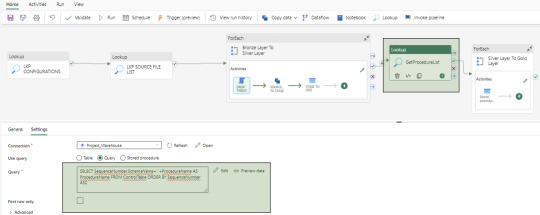
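The UPDATE-then-INSERT pattern described for the Silver layer can be sketched as follows. This is not the article's stored procedure: it uses Python's built-in sqlite3 module as a stand-in for the Warehouse, and the table and column names are assumptions chosen for the example.

```python
import sqlite3

# In-memory database standing in for the Warehouse; the two tables play the
# roles of OdsStage.Customer (staging) and Ods.Customer (target).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE ods_stage_customer (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE ods_customer       (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    INSERT INTO ods_customer VALUES (1, 'Ada', 'London'), (2, 'Grace', 'New York');
    INSERT INTO ods_stage_customer VALUES (2, 'Grace', 'Arlington'), (3, 'Linus', 'Helsinki');
""")


def upsert_customer(connection):
    """Emulate MERGE with an UPDATE followed by an INSERT in one transaction."""
    with connection:
        # Step 1: update target rows whose key also exists in staging.
        connection.execute("""
            UPDATE ods_customer
            SET name = (SELECT s.name FROM ods_stage_customer AS s
                        WHERE s.customer_id = ods_customer.customer_id),
                city = (SELECT s.city FROM ods_stage_customer AS s
                        WHERE s.customer_id = ods_customer.customer_id)
            WHERE EXISTS (SELECT 1 FROM ods_stage_customer AS s
                          WHERE s.customer_id = ods_customer.customer_id)
        """)
        # Step 2: insert staging rows whose key is not yet in the target.
        connection.execute("""
            INSERT INTO ods_customer (customer_id, name, city)
            SELECT s.customer_id, s.name, s.city
            FROM ods_stage_customer AS s
            WHERE NOT EXISTS (SELECT 1 FROM ods_customer AS t
                              WHERE t.customer_id = s.customer_id)
        """)


upsert_customer(conn)
rows = conn.execute(
    "SELECT customer_id, name, city FROM ods_customer ORDER BY customer_id"
).fetchall()
print(rows)
```

Running the update before the insert avoids touching freshly inserted rows a second time, and wrapping both steps in one transaction keeps the target consistent if either step fails.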

Control Table Creation

Create a control table in the Warehouse with columns for sequence numbers and procedure names to manage dependencies between dimension, fact, and aggregate tables. Then fetch these values in the pipeline using a Lookup activity.
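One way to picture how such a control table drives execution order is the Python sketch below. The procedure names are hypothetical, and treating rows that share a sequence number as one group that can run together is an assumption of this sketch, not something the article states.

```python
from itertools import groupby

# Hypothetical control-table rows, as a Lookup activity might return them.
CONTROL_ROWS = [
    {"SequenceNumber": 2, "ProcedureName": "Edw.usp_Load_FactSales"},
    {"SequenceNumber": 1, "ProcedureName": "Edw.usp_Load_DimCustomer"},
    {"SequenceNumber": 3, "ProcedureName": "Edw.usp_Load_AggSalesByMonth"},
    {"SequenceNumber": 1, "ProcedureName": "Edw.usp_Load_DimProduct"},
]


def execution_waves(rows):
    """Group procedures by sequence number, lowest sequence first."""
    ordered = sorted(rows, key=lambda r: (r["SequenceNumber"], r["ProcedureName"]))
    return [
        (seq, [r["ProcedureName"] for r in group])
        for seq, group in groupby(ordered, key=lambda r: r["SequenceNumber"])
    ]


waves = execution_waves(CONTROL_ROWS)
for seq, procedures in waves:
    print(seq, procedures)
```

With this layout, dimensions (sequence 1) load before the facts that reference them (sequence 2), and aggregates (sequence 3) load last, so dependencies are expressed as data rather than hard-coded in the pipeline.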

Gold Layer Loading

To load data into the Gold Layer (Edw schema in the Warehouse), we develop individual stored procedures to UPSERT (UPDATE and INSERT) data for each dimension, fact, and aggregate table. While a Dataflow can also be used for this task, we prefer stored procedures because they are better suited to complex business logic.

Dashboards and Reporting

Fabric includes the Power BI application, which can connect to the SQL endpoints of both the Lakehouse and Warehouse. These SQL endpoints allow for the creation of semantic models, which are then used to develop reports and applications. In our use case, the semantic models are built from the Gold layer (Edw schema in Warehouse) tables.

Upcoming Topics Preview

In the upcoming articles, we will cover topics such as notebooks, dataflows, lakehouse, security and other related subjects.

Conclusion

Microsoft Fabric Data Warehouse is a potent and user-friendly data platform, offering an extensive array of tools for data ingestion, storage, transformation, and analysis. Whether you’re a novice or a seasoned data analyst, Fabric empowers you to optimize your workflow and harness the full potential of your data.

We specialize in aiding organizations in meticulously planning and flawlessly executing data projects, ensuring utmost precision and efficiency.

Curious and would like to hear more about this article?

Contact us at [email protected] or Book time with me to organize a 100%-free, no-obligation call

Follow us on LinkedIn for more interesting updates!!

DataPlatr Inc. specializes in data engineering & analytics with pre-built data models for Enterprise Applications like SAP, Oracle EBS, Workday, Salesforce to empower businesses to unlock the full potential of their data. Our pre-built enterprise data engineering models are designed to expedite the development of data pipelines, data transformation, and integration, saving you time and resources.

Our team of experienced data engineers, scientists and analysts uses cutting-edge data infrastructure to turn data into valuable insights and helps enterprise clients optimize their Sales, Marketing, Operations, Financials, Supply chain, Human capital and Customer experiences.

0 notes

Text

Unlocking the Future of Data Engineering with Ask On Data: An Open Source, GenAI-Powered NLP-Based Data Engineering Tool

In the rapidly evolving world of data engineering, businesses and organizations face increasing challenges in managing and integrating vast amounts of data from diverse sources. Traditional data engineering processes, including ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), often require specialized expertise, are time-consuming, and can be error-prone. To address these challenges, innovative technologies like Generative AI (GenAI), Large Language Models (LLM), and Natural Language Processing (NLP) are reshaping how data engineers and analysts work with data. One such breakthrough solution is Ask On Data, an NLP based data engineering tool that simplifies the complexities of data integration, transformation, and loading through conversational interfaces.

What is Ask On Data?

Ask On Data is an open-source NLP based ETL tool that leverages Generative AI and Large Language Models (LLMs) to enable users to interact with complex data systems in natural language. With Ask On Data, the traditionally technical processes of data extraction, data transformation, and data loading are streamlined and made accessible to a broader range of users, including those without a deep technical background in data engineering.

The tool bridges the gap between the technical demands of data engineering and the need for more intuitive, accessible interfaces. Through simple, conversational commands, users can query, transform, and integrate data, eliminating the need for writing complex code. This functionality significantly reduces the time and effort required for managing data pipelines, making it ideal for organizations looking to automate and optimize their data workflows.

How Ask On Data Works: NLP Meets Data Engineering

The core innovation of Ask On Data lies in its ability to understand and process natural language commands related to data integration, data transformation, and data loading. The tool uses Natural Language Processing (NLP) to parse and execute commands, enabling users to work with data systems without needing to master SQL or scripting languages.

Data Integration: Ask On Data connects seamlessly with various data sources, whether it’s a data lake, data warehouse, or cloud-based data storage system. Users can simply input commands like, “Integrate data from my sales database with customer feedback from the cloud,” and Ask On Data will manage the connection, data extraction, and integration processes.

Data Transformation: Data transformation is another area where Ask On Data excels. Users can request transformations in natural language, such as “Convert all dates in this dataset to ISO format” or “Aggregate sales data by region for the last quarter,” and the tool will apply the transformations without needing complex scripts.

Data Loading: Once data is transformed, Ask On Data can automatically load it into the target system, whether it’s a data warehouse for analytics or a data lake for storage. Users can issue simple commands to initiate loading, such as “Load transformed data into the reporting database.”

Benefits of Ask On Data in Data Engineering

Simplified Data Operations: By using natural language to manage ETL/ELT processes, Ask On Data lowers the barrier for non-technical users to access and manipulate data. This democratization of data engineering allows business analysts, data scientists, and even executives to interact with data more easily.

Increased Efficiency: Automation of routine data tasks, like data extraction, transformation, and loading, speeds up the process and reduces human errors. With GenAI at its core, Ask On Data can also generate code or queries based on user instructions, making it a powerful assistant for data engineers.

Open-Source Flexibility: Ask On Data is an open-source tool, meaning it is freely available and highly customizable. Organizations can adapt the tool to fit their specific needs, from integrating it into custom workflows to extending its capabilities through plugins or custom scripts.

Improved Collaboration: With its intuitive, chat-based interface, Ask On Data fosters better collaboration across teams. Data engineers can focus on more complex tasks while empowering other stakeholders to interact with data without fear of making mistakes or having to understand complex technologies.

The Future of Data Engineering with NLP and GenAI

The integration of Generative AI and LLMs into data engineering tools like Ask On Data represents a paradigm shift in how data operations are managed. By combining the power of AI with the simplicity of NLP, Ask On Data enables organizations to make smarter, faster decisions and streamline their data workflows.

As businesses continue to generate more data and migrate towards cloud-based solutions, tools like Ask On Data will become increasingly important in helping them integrate, transform, and analyze data efficiently. By making ETL and ELT processes more accessible and intuitive, Ask On Data is laying the groundwork for the future of data transformation, data loading, and data integration.

Conclusion

Ask On Data is an innovative NLP-based data engineering tool that empowers users to manage complex data workflows with ease. Whether you're working with a data lake, a data warehouse, or cloud-based platforms, Ask On Data’s conversational interface simplifies the tasks of data transformation, data integration, and data loading, making it a must-have tool for modern data engineering teams.

0 notes

Text

In Beginning Big Data with Power BI and Excel 2013, you will learn to solve business problems by tapping the power of Microsoft's Excel and Power BI to import data from NoSQL and SQL databases and other sources, create relational data models, and analyze business problems through sophisticated dashboards and data-driven maps.

While Beginning Big Data with Power BI and Excel 2013 covers prominent tools such as Hadoop and the NoSQL databases, it recognizes that most small and medium-sized businesses don't have the Big Data processing needs of a Netflix, Target, or Facebook. Instead, it shows how to import data and use the self-service analytics available in Excel with Power BI. As you'll see through the book's numerous case examples, these tools, which you already know how to use, can perform many of the same functions as the higher-end Apache tools many people believe are required to carry out Big Data projects.

Through instruction, insight, advice, and case studies, Beginning Big Data with Power BI and Excel 2013 will show you how to:

Import and mash up data from web pages, SQL and NoSQL databases, the Azure Marketplace and other sources.

Tap into the analytical power of PivotTables and PivotCharts and develop relational data models to track trends and make predictions based on a wide range of data.

Understand basic statistics and use Excel with Power BI to do sophisticated statistical analysis, including identifying trends and correlations.

Use SQL within Excel to do sophisticated queries across multiple tables, including NoSQL databases.

Create complex formulas to solve real-world business problems using Data Analysis Expressions (DAX).

Publisher: Springer Nature; 1st ed. edition (29 September 2015)

Language: English

Paperback: 300 pages

ISBN-10: 1484205308

ISBN-13: 978-1484205303

Item Weight: 476 g

Dimensions: 17.8 x 1.55 x 25.4 cm

Country of Origin: India

0 notes

Text

Struggling with slow database queries? Discover the power of SQL Performance Tuning with Simple Logic! Learn how to optimize query execution, improve response times, and enhance overall database efficiency. This blog covers expert strategies to boost performance and keep your systems running smoothly. 🚀🔧


0 notes

Text

Microsoft SQL Server Training

Welcome to our comprehensive Microsoft SQL Server training program! As the backbone of many enterprise-level applications, SQL Server is a powerful relational database management system (RDBMS) developed by Microsoft. This training is designed to equip you with the knowledge and skills needed to efficiently manage, develop, and maintain SQL Server databases.

Whether you are a beginner looking to understand the fundamentals of SQL Server or an experienced professional aiming to enhance your database management capabilities, our program caters to all levels. You will learn to navigate the SQL Server environment, perform data manipulation and retrieval, design and implement robust database solutions, and ensure data security and integrity.

Our training covers a wide range of topics, including:

Introduction to SQL Server: Understanding the architecture, installation, and configuration of SQL Server.

SQL Fundamentals: Writing queries, using joins, and manipulating data with Transact-SQL (T-SQL).

Database Design and Development: Creating and managing databases, tables, indexes, and relationships.

Advanced Querying Techniques: Optimizing queries, using advanced functions, and working with stored procedures and triggers.

Data Security and Backup: Implementing security measures, performing backups, and ensuring data recovery.

Performance Tuning and Optimization: Monitoring and optimizing database performance to handle large-scale data efficiently.

Throughout the course, you will engage in hands-on exercises and real-world scenarios that will help you apply what you’ve learned in practical situations. By the end of the training, you will be proficient in managing SQL Server environments, ensuring data reliability, and leveraging advanced features to optimize database performance.

Join us to unlock the full potential of Microsoft SQL Server and take your database management skills to the next level.

0 notes

Text

What skills are required for an MSBI developer?

In today's data-driven world, the role of a Microsoft Business Intelligence (MSBI) developer is important. These professionals wield the power to extract valuable insights from data, steering organizations toward informed decision-making and strategic growth. With the demand for proficient MSBI developers soaring, it's paramount to grasp the core skills requisite for success in this domain. In this article, we delve into the fundamental skills necessary for an MSBI developer while addressing common queries surrounding embarking on a career in MSBI.

Critical Skills for an MSBI Developer

SQL Proficiency: A robust command over SQL (Structured Query Language) is indispensable for MSBI developers. Given that MSBI solutions primarily involve querying and manipulating data stored in relational databases, a sound grasp of SQL queries, joins, and data manipulation techniques is imperative.

Data Modeling and ETL: MSBI developers must possess a strong foundation in data modeling concepts and hands-on experience with ETL tools like SQL Server Integration Services (SSIS). Their ability to design and execute efficient ETL processes, encompassing data extraction, transformation, and loading, is paramount for success in this role.

Comprehension of Business Intelligence Concepts: An MSBI developer's repertoire should include a profound understanding of business intelligence principles and methodologies. This entails familiarity with data warehousing fundamentals, dimensional modeling techniques, and OLAP (Online Analytical Processing) methodologies, empowering developers to craft BI solutions tailored to meet business users' analytical needs.

Mastery of the MSBI Stack: Proficiency in Microsoft's BI stack—comprising SQL Server, SSIS, SQL Server Analysis Services (SSAS), and SQL Server Reporting Services (SSRS)—is non-negotiable for MSBI developers. Their adeptness with these tools enables them to architect end-to-end BI solutions seamlessly.

Problem-Solving Aptitude and Analytical Skills: MSBI developers must exhibit robust problem-solving skills and analytical prowess to discern business requirements, analyze data effectively, and devise solutions aligned with organizational objectives. Their capacity to troubleshoot issues and fine-tune BI processes for optimal performance is critical for delivering impactful solutions.

Effective Communication and Collaboration: Strong communication and collaboration skills are indispensable for MSBI developers. Their ability to convey technical concepts to non-technical stakeholders and collaborate seamlessly with cross-functional teams facilitates the delivery of BI solutions that resonate with business imperatives.

Source : msbi certification

Conclusion

Becoming a proficient MSBI developer demands a blend of technical prowess, analytical acumen, and business acuity. By honing these requisite skills and staying abreast of industry trends, individuals can carve a rewarding career path in MSBI, driving organizational success through data-driven insights and solutions.

Frequently Asked Questions

1. Does MSBI require coding?

Yes, MSBI development typically entails coding, encompassing SQL queries, T-SQL scripts, and SSIS packages. While a strong coding acumen is advantageous, MSBI developers may also leverage graphical tools and wizards offered by MSBI platforms to design and deploy BI solutions.

2. What does an MSBI developer do?

An MSBI developer shoulders the responsibility of conceptualizing, crafting, and maintaining Business Intelligence (BI) solutions using Microsoft's BI stack. This entails a gamut of activities such as data modeling, ETL development, creation of analytical cubes, report and dashboard generation, and optimization of BI processes for enhanced performance and scalability.

3. Is MSBI a promising career path?

Indeed, MSBI offers a promising career trajectory for individuals inclined towards data analytics and business intelligence. Given the escalating demand for data-driven decision-making, organizations are increasingly reliant on BI solutions to derive actionable insights from their data. Consequently, adept MSBI professionals are highly sought after across industries, rendering it a lucrative career avenue for aspiring BI developers.

People also read : How To Start Your Career As MSBI Developer?

0 notes

Text

Azure Data Engineer | Azure Data Engineering Certification

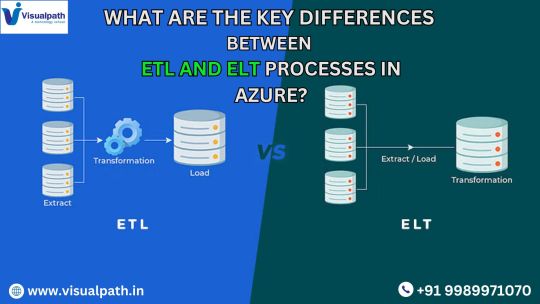

Key Differences Between ETL and ELT Processes in Azure

Azure data engineering offers two common approaches for processing data: ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform). These methods are essential for moving and processing data from source systems into data warehouses or data lakes for analysis. While both serve similar purposes, they differ in their workflows, tools, and technologies, particularly when implemented within Azure's cloud ecosystem. This article will explore the key distinctions between ETL and ELT in the context of Azure data services, helping organizations make informed decisions about their data processing strategies.

1. Process Flow: Extraction, Transformation, and Loading

The most fundamental difference between ETL and ELT is the sequence in which data is processed:

ETL (Extract, Transform, Load):

In the ETL process, data is first extracted from source systems, transformed into the desired format or structure, and then loaded into the data warehouse or data lake.

The transformation step occurs before loading the data into the destination, ensuring that the data is cleaned, enriched, and formatted properly during the data pipeline.

ELT (Extract, Load, Transform):

ELT, on the other hand, follows a different sequence: data is extracted from the source, loaded into the destination system (e.g., a cloud data warehouse), and then transformed directly within the destination system.

The transformation happens after the data has already been stored, utilizing the computational power of the cloud infrastructure to process and modify the data.
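The difference in ordering can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for the destination warehouse, and the sample rows and cleaning rules are invented for the example.

```python
import sqlite3

# Invented raw rows: customer name, order date, amount as text.
RAW_ROWS = [("  alice ", "2024-01-05", "120.50"), ("BOB", "2024-01-06", "80")]


def clean(row):
    """Transformation logic applied in the pipeline (ETL path)."""
    name, order_date, amount = row
    return (name.strip().title(), order_date, float(amount))


def run_etl(rows):
    """Extract, transform in the pipeline, then load the finished rows."""
    dest = sqlite3.connect(":memory:")
    dest.execute("CREATE TABLE orders (customer TEXT, order_date TEXT, amount REAL)")
    transformed = [clean(r) for r in rows]  # transform happens before the load
    dest.executemany("INSERT INTO orders VALUES (?, ?, ?)", transformed)
    return dest.execute(
        "SELECT customer, order_date, amount FROM orders ORDER BY order_date"
    ).fetchall()


def run_elt(rows):
    """Extract, load the raw rows, then transform inside the destination."""
    dest = sqlite3.connect(":memory:")
    dest.execute("CREATE TABLE raw_orders (customer TEXT, order_date TEXT, amount TEXT)")
    dest.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)  # load as-is
    # The transform runs as SQL on the destination's own engine.
    dest.execute("""
        CREATE TABLE orders AS
        SELECT upper(substr(trim(customer), 1, 1)) || lower(substr(trim(customer), 2)) AS customer,
               order_date,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
    """)
    return dest.execute(
        "SELECT customer, order_date, amount FROM orders ORDER BY order_date"
    ).fetchall()


etl_result = run_etl(RAW_ROWS)
elt_result = run_elt(RAW_ROWS)
print(etl_result == elt_result)
```

Both paths end with the same cleaned table; what changes is where the compute runs and whether the raw data is retained in the destination, which is why ELT keeps `raw_orders` available for later reprocessing.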

2. Tools and Technologies in Azure

Both ETL and ELT processes require specific tools to handle data extraction, transformation, and loading. Azure provides robust tools for both approaches, but the choice of tool depends on the processing flow:

ETL in Azure:

Azure Data Factory is the primary service used for building and managing ETL pipelines. It offers a wide range of connectors for various data sources and destinations and allows data transformations to be executed in the pipeline itself using Mapping Data Flows.

Azure Databricks, a Spark-based service, can also be integrated for more complex transformations during the ETL process, where heavy lifting is required for batch or streaming data processing.

ELT in Azure:

For the ELT process, Azure Synapse Analytics (formerly SQL Data Warehouse) is a leading service, leveraging the power of cloud-scale data warehouses to perform in-place transformations.

Azure Data Lake and Azure Blob Storage are used for storing raw data in ELT pipelines, with Azure Synapse Pipelines or Azure Data Factory responsible for orchestrating the load and transformation tasks.

Azure SQL Database and Azure Data Explorer are also used in ELT scenarios where data is loaded into the database first, followed by transformations using T-SQL or Azure's native query processing capabilities.

3. Performance and Scalability

The key advantage of ELT over ETL lies in its performance and scalability, particularly when dealing with large volumes of data:

ETL Performance:

ETL can be more resource-intensive because the transformation logic is executed before the data is loaded into the warehouse. This can lead to bottlenecks during the transformation step, especially if the data is complex or requires significant computation.

With Azure Data Factory, transformation logic is executed during the pipeline execution, and if there are large datasets, the process may be slower and require more manual optimization.

ELT Performance:

ELT leverages the scalable and high-performance computing power of Azure’s cloud services like Azure Synapse Analytics and Azure Data Lake. After the data is loaded into the cloud storage or data warehouse, the transformations are run in parallel using the cloud infrastructure, allowing for faster and more efficient processing.

As data sizes grow, ELT tends to perform better since the processing occurs within the cloud infrastructure, reducing the need for complex pre-processing and allowing the system to scale with the data.

4. Data Transformation Complexity

ETL Transformations:

ETL processes are better suited for complex transformations that require extensive pre-processing of data before it can be loaded into a warehouse. In scenarios where data must be cleaned, enriched, and aggregated, ETL provides a structured and controlled approach to transformations.

ELT Transformations:

ELT is more suited to scenarios where the data is already clean or requires simpler transformations that can be efficiently performed using the native capabilities of cloud platforms. Azure’s Synapse Analytics and SQL Database offer powerful querying and processing engines that can handle data transformations once the data is loaded, but this may not be ideal for very complex transformations.

5. Data Storage and Flexibility

ETL Storage:

ETL typically involves transforming the data before storage in a structured format, like a relational database or data warehouse, which makes it ideal for scenarios where data must be pre-processed or aggregated before analysis.

ELT Storage:

ELT offers greater flexibility, especially for handling raw, unstructured data in Azure Data Lake or Blob Storage. After data is loaded, transformation and analysis can take place in a more dynamic environment, enabling more agile data processing.

6. Cost Implications

ETL Costs:

ETL processes tend to incur higher costs due to the additional processing power required to transform the data before loading it into the destination. Since transformations are done earlier in the pipeline, more resources (compute and memory) are required to handle these operations.

ELT Costs:

ELT typically incurs lower costs, as the heavy lifting of transformation is handled by Azure’s scalable cloud infrastructure, reducing the need for external computation resources during data ingestion. The elasticity of cloud computing allows for more cost-efficient data processing.

Conclusion

In summary, the choice between ETL and ELT in Azure largely depends on the nature of your data processing needs. ETL is preferred for more complex transformations, while ELT provides better scalability, performance, and cost-efficiency when working with large datasets. Both approaches have their place in modern data workflows, and Azure’s cloud-native tools provide the flexibility to implement either process based on your specific requirements. By understanding the key differences between these processes, organizations can make informed decisions on how to best leverage Azure's ecosystem for their data processing and analytics needs.

Visualpath is the Best Software Online Training Institute in Hyderabad. Avail complete Azure Data Engineering worldwide. You will get the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit: https://www.visualpath.in/online-azure-data-engineer-course.html

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit Blog: https://azuredataengineering2.blogspot.com/

#Azure Data Engineer Course#Azure Data Engineering Certification#Azure Data Engineer Training In Hyderabad#Azure Data Engineer Training#Azure Data Engineer Training Online#Azure Data Engineer Course Online#Azure Data Engineer Online Training#Microsoft Azure Data Engineer

0 notes

Text

Understanding Row-Level Security (RLS) in T-SQL Server

Row-Level Security (RLS) in T-SQL Server: Implementation, Examples, and Best Practices

Hello, fellow SQL enthusiasts! In this blog post, I will introduce you to one of the most important and powerful security features in T-SQL Server: Row-Level Security (RLS). RLS allows you to control access to rows in a database table based on the user executing the query. It…

0 notes

Text

Unraveling the Mystery: Why SQL Server Ignores Query Hints

Discover why SQL Server ignores query hints through practical T-SQL code examples and applications. Learn how to analyze and resolve ignored hints for optimized queries.

SQL Server query hints are powerful tools that allow developers to influence the optimizer’s behavior. However, sometimes these hints are unexpectedly ignored, leaving developers puzzled. In this article, we’ll explore practical T-SQL code examples and applications to understand why SQL Server might disregard a given query hint.

Conflicting Query Hints

One common reason for ignored query hints…

View On WordPress

0 notes

Text

8 Engaging Games for Developing Programming Skills

1. Oh My Git!

Git, an essential tool for software developers, can be daunting for beginners. _Oh My Git!_ addresses this challenge by providing a gamified introduction to Git commands and file manipulation. With visualizations of Git commands, this game allows developers to understand the effects of their instructions on files. Players can progress through levels to learn increasingly complex Git techniques, test commands on real Git repositories within the game, and practice remote interactions with team members.

Cost: Free

2. Vim Adventures

Vim, a powerful code editor, is a valuable skill for developers, but its command-line interface can be intimidating for beginners. _Vim Adventures_ offers an innovative approach to teaching essential Vim commands through gameplay. Players navigate through the game and solve puzzles using commands and keyboard shortcuts, effectively making these commands feel like second nature. This game provides a fun experience that eases the learning curve of Vim usage.

Cost: Free demo level; $25 for six months access to the full version.

3. CSS Diner

Styling front-end applications with CSS can be challenging due to the intricate rules of CSS selection. _CSS Diner_ aims to teach developers these rules through a game that uses animated food arrangements to represent HTML elements. Players are prompted to select specific food items by writing the correct CSS code, offering an interactive and engaging way to understand CSS selection.

Cost: Free

4. Screeps

_Screeps_ combines JavaScript programming with massively multiplayer online gameplay, creating self-sustaining colonies. Geared towards developers comfortable with JavaScript, this game allows players to interact through an interface similar to browser developer tools, enabling them to write and execute JavaScript code. The game also facilitates interactions between players, such as trading resources and attacking each other's colonies.

Cost: $14.99 on Steam

5. SQL Murder Mystery

Combining SQL queries with real sleuthing, _SQL Murder Mystery_ challenges players to execute SQL queries to gather information about a murder and find the culprit. This game effectively teaches different ways of extracting data and metadata using SQL queries, making it an entertaining and educational experience for novice and experienced players.

Cost: Free

6. Shenzhen I/O

In _Shenzhen I/O_, players take on the role of an engineer tasked with designing computer chips at a fictional electronics company. The game provides challenges that help players visualize the interface between hardware and software, emphasizing the efficient design of circuits. With a 47-page employee reference manual as the only source of help, players are expected to read the manual for instructions, making the game both educational and engaging.

Cost: $14.99 on Steam

7. Human Resource Machine

In _Human Resource Machine_, players work at a corporation where employees are automated instead of the work itself. Players program employee minions doing assembly-line work, using draggable commands that mimic assembly language. The game's design, combined with a grimly whimsical narrative, offers an entertaining way to understand programming concepts.

Cost: $14.99 on Steam

8. While True: learn()

Machine learning can feel complex to those not familiar with it, but _while True: learn()_ aims to demystify it. The game takes players through different types of machine learning techniques, introducing new techniques in the order that they were invented in the real world. This game offers an engaging way to understand the steps involved in building working machine learning models.

Cost: $12.99 on Steam

These games provide an engaging and interactive approach to learning and practicing programming skills. Whether you're a beginner or an experienced developer, incorporating these games into your learning journey can enhance your understanding of programming concepts and technologies in a fun way.

Level up your programming skills while having a great time!

#gaming#skills#tutortacademy#tutort#learning#excel#technical#education#programming languages#programming#programmingskills


Text

DATABASE SYSTEMS BLOG

In this installment, we'll explore the functionalities and nuances of Microsoft SQL Server, PostgreSQL, and Redis, shedding light on how they handle data and contribute to various use cases.

As the digital landscape evolves, so do the demands placed on data storage, retrieval, and analysis. With Microsoft SQL Server's robustness in managing large-scale enterprise data, PostgreSQL's commitment to open-source extensibility, and Redis's lightning-fast in-memory capabilities, these databases offer distinct strengths catering to a diverse set of requirements.

Join us as we dissect the Hardware, Software, Procedure, Data, and People aspects of each database system. Whether you're an IT professional seeking the ideal solution for your organization or a curious individual eager to explore the intricacies of data management, this exploration promises to provide valuable insights into the world of database technology.

Microsoft SQL Server - Empowering Enterprise Data Management

In the realm of relational database management systems (RDBMS), Microsoft SQL Server stands tall as a versatile solution catering to diverse enterprise needs. Let's delve into its core components:

Hardware: Microsoft SQL Server is designed to leverage the capabilities of robust hardware. It thrives on multi-core processors, ample memory, and high-speed storage to handle large-scale data processing and analytics.

Software: The SQL Server software suite includes the SQL Server Database Engine, which powers data storage and processing. It also provides a suite of management tools, such as SQL Server Management Studio (SSMS), for administering and querying databases.

Procedure: SQL Server employs the Transact-SQL (T-SQL) language for managing and querying data. T-SQL offers advanced features like stored procedures, triggers, and user-defined functions, enabling developers to build complex applications.

Data: SQL Server organizes data in structured tables, following a relational model. It supports a wide range of data types, and users can define relationships between tables using primary and foreign keys. SQL Server's indexing and partitioning features enhance data retrieval performance.
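Primary keys, foreign keys, and indexes are easier to picture with a small example. The sketch below uses Python's built-in `sqlite3` module as a stand-in, since it runs without a server; SQL Server's T-SQL syntax and tooling differ, and the `customers` and `orders` tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked

conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,      -- primary key: unique row identifier
        name        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),  -- foreign key
        amount      REAL NOT NULL
    );
    -- An index on the foreign key column speeds up lookups by customer.
    CREATE INDEX idx_orders_customer ON orders(customer_id);

    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO orders VALUES (100, 1, 25.0);
""")

# The foreign key rejects an order that points at a customer who does not exist.
try:
    conn.execute("INSERT INTO orders VALUES (101, 999, 10.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# The relationship lets us join the two tables back together.
rows = conn.execute("""
    SELECT c.name, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
""").fetchall()

print(orphan_rejected, rows)
```

The same ideas carry over to SQL Server: the key constraints protect the relationships between tables, and the index is what keeps lookups fast as the tables grow.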

People: Database administrators (DBAs) oversee SQL Server instances, ensuring availability, performance, and security. Developers use T-SQL to create and manage databases. Business analysts and reporting professionals leverage SQL Server Reporting Services (SSRS) to generate meaningful insights.

PostgreSQL - Open-Source Powerhouse for Advanced Data Management

PostgreSQL, an open-source relational database management system, has gained immense popularity for its extensibility and adherence to SQL standards. Let's delve into its key components:

Hardware: PostgreSQL is flexible and can run on a variety of hardware configurations. It is optimized for performance on systems with multi-core processors, ample memory, and fast storage.

Software: The PostgreSQL software suite includes the PostgreSQL Server, responsible for data storage and retrieval. It offers a rich ecosystem of extensions and libraries, allowing users to tailor the database to their specific needs.

Procedure: PostgreSQL employs SQL for querying and managing data. It also supports procedural languages like PL/pgSQL, enabling developers to create stored procedures, functions, and triggers to implement custom logic.

Data: PostgreSQL follows a relational model and supports a wide range of data types. It offers advanced features such as the JSONB data type for handling semi-structured data, and it supports indexing and partitioning for efficient data management.

People: Database administrators manage PostgreSQL instances, ensuring optimal performance and security. Developers utilize SQL and procedural languages to build applications. Data analysts and scientists perform complex analyses using PostgreSQL's rich querying capabilities.

Redis - Unleashing Speed and Scalability with In-Memory Data

Redis, a high-performance NoSQL database, is revered for its lightning-fast data storage and retrieval capabilities. Let's explore its core components:

Hardware: Redis is optimized for in-memory data storage and is often deployed on servers with ample memory to deliver high-speed access to data.

Software: Redis provides the Redis Server, which stores data in memory and offers various data structures, including strings, lists, sets, and more. It also includes client libraries for different programming languages.

Procedure: Redis is driven by simple text commands, issued from the redis-cli command-line interface or from a client library, and it supports a wide range of commands for data manipulation. It is well-suited for caching, real-time analytics, and messaging scenarios.

Data: Redis stores data in memory, making it exceptionally fast for read-heavy workloads. It supports data structures like strings, lists, sets, and hashes, enabling developers to address diverse use cases efficiently.
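The caching use case can be sketched without a running Redis server. In the example below, a small in-process class stands in for the key-value store; `TinyCache`, `slow_lookup`, and `get_user` are hypothetical names, and a real deployment would call a Redis client library instead.

```python
import time

class TinyCache:
    """In-process stand-in for an in-memory key-value store with per-key expiry."""

    def __init__(self):
        self._data = {}  # key -> (value, expiry time on the monotonic clock)

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, time.monotonic() + ttl_seconds)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:  # expired entries behave like misses
            del self._data[key]
            return None
        return value

db_calls = 0  # counts how often the slow backing store is hit

def slow_lookup(user_id):
    """Pretend database query (hypothetical)."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": f"user-{user_id}"}

def get_user(cache, user_id):
    """Check the cache first and fall back to the slow store only on a miss."""
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = slow_lookup(user_id)
    cache.set(key, value, ttl_seconds=60)
    return value

cache = TinyCache()
first = get_user(cache, 42)    # miss: goes to the slow store
second = get_user(cache, 42)   # hit: served from memory
print(first, second, db_calls)
```

The second call never touches the slow store, which is the whole point of putting an in-memory layer in front of a read-heavy workload.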

People: System administrators manage Redis instances, ensuring availability and performance. Developers integrate Redis into applications to enhance data access speed. Data analysts and developers leverage Redis for caching and real-time data processing.

By Maureen Marie A. Portillo, IT Student


Text

Power BI Certification Training – Unlock Your Bright Future Goals

Power BI is a Microsoft analytics tool that converts raw data into a visually compelling story. It consists of Power BI Desktop, the SaaS Power BI Service, and mobile apps for iOS and Android.

There is more data in the world than we can use. Every organization is bombarded with data about processes, products, operations, customers, and everything else. If it has a digital footprint, it is somewhere out there.

The more we can learn from consumer behavior data, the more accurate our predictions will be. We’ll need the right tools for the job. This is where Microsoft Power BI plays a role.

Power BI Developers are the lifeline of any Power BI development project. Whatever project you are working on, you must have a basic understanding of the following topics:

ETL/data preparation processes

Data storage and retrieval

Modeling data/Star schema

Visualization of data
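As a rough illustration of the star schema idea from the list above, the sketch below keeps one fact table of sales and two small dimension tables in plain Python. All table names, keys, and figures are made up for the example.

```python
from collections import defaultdict

# Dimension tables: one row of descriptive attributes per key.
dim_product = {
    1: {"name": "Laptop", "category": "Hardware"},
    2: {"name": "Mouse", "category": "Hardware"},
    3: {"name": "Antivirus", "category": "Software"},
}
dim_date = {
    20240101: {"year": 2024, "month": 1},
    20240201: {"year": 2024, "month": 2},
}

# Fact table: one row per sale, holding only keys into the dimensions plus a measure.
fact_sales = [
    {"product_id": 1, "date_id": 20240101, "amount": 1200.0},
    {"product_id": 2, "date_id": 20240101, "amount": 25.0},
    {"product_id": 3, "date_id": 20240201, "amount": 60.0},
    {"product_id": 2, "date_id": 20240201, "amount": 25.0},
]

# Slice the measure by an attribute looked up in a dimension table.
sales_by_category = defaultdict(float)
sales_by_month = defaultdict(float)
for row in fact_sales:
    category = dim_product[row["product_id"]]["category"]
    month = dim_date[row["date_id"]]["month"]
    sales_by_category[category] += row["amount"]
    sales_by_month[month] += row["amount"]

print(dict(sales_by_category), dict(sales_by_month))
```

The fact table stays narrow while the dimensions carry the descriptive columns, so adding a new way to slice the numbers usually means adding a dimension attribute rather than reshaping the facts.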

To be a successful Power BI developer, you must learn the following Power BI languages:

Power Query (the M formula language)

DAX

Depending on the projects you will be working on, you may also need to learn the following languages:

Visual Basic (for expressions in Paginated Reports)

Python

R

T-SQL

PL/SQL

The Power BI Course from SkillIQ provides an overview of how to get started with this powerful tool. Our comprehensive training prepares you to use Power BI desktop, Power BI mobile, and Power BI Service. You’ll learn how to create Power BI reports and dashboards for team members as well as customers.

https://www.skilliq.co.in/blog/power-bi-certification-training-unlock-your-bright-future-goals/

For detailed inquiry

Contact us on: +91 7600 7800 67 / +91 7777-997-894

Email us at: [email protected]

#Power BI Course#Power BI Certification Course#Power BI Training#Power BI Training Institute#Power BI Course Training In Ahmedabad#Power BI Training In Ahmedabad
