#Top E-commerce Web Scraping API Services

iWeb Scraping provides API scraping services for top e-commerce websites, letting you extract data from e-commerce sites through APIs such as the Amazon Web Scraping API, Walmart Web Scraping API, eBay Web Scraping API, AliExpress Web Scraping API, Best Buy Web Scraping API, and Rakuten Web Scraping API.

Top Programming Languages to Learn for Freelancing in India

The gig economy in India is booming, and so is the demand for skilled programmers and developers. The biggest advantages of freelance work are flexibility, independence, and earning potential, which lead many techies to choose it as a career. However, with an endless list of languages to choose from, which ones should you master to thrive as a freelance developer in India?

Choosing the right language is of paramount importance because, at the end of the day, it has to bring you clients and well-paying projects and serve as the foundation for your entire freelance career. Here is a list of some of the top programming languages to learn for freelancing in India, along with their market demand, types of projects, and earning potential.

Why Freelance Programming is a Smart Career Choice

Before getting to the languages, let's quickly lay out the benefits of being a freelance programmer in India:

Flexibility: Work from any place, on the hours you choose, and with the workload of your preference.

Diverse Projects: Different industries and technologies put your skills to the test.

Increased Earning Potential: Many people who shift to freelancing find that, as their experience grows, the rates they can charge often surpass customary salaries.

Skill Growth: You keep learning through new technologies and new problems to solve.

Autonomy: You are your own boss and build your own brand.

Top Programming Languages for Freelancing in India:

Python:

Why it's great for freelancing: Python's versatility is its superpower. It's used for web development (Django, Flask), data science, machine learning, AI, scripting, automation, and even basic game development. This wide range of applications means a vast pool of freelance projects. Clients often seek Python developers for data analysis, building custom scripts, or developing backend APIs.

Freelance Project Examples: Data cleaning scripts, AI model integration, web scraping, custom automation tools, backend for web/mobile apps.

JavaScript (with React, Angular, and Node.js):

Why it's great for freelancing: JavaScript is indispensable for web development. As the language of the web, it allows you to build interactive front-end interfaces (React, Angular, Vue.js) and powerful back-end servers (Node.js). Full-stack JavaScript developers are in exceptionally high demand.

Freelance Project Examples: Interactive websites, single-page applications (SPAs), e-commerce platforms, custom web tools, APIs.

PHP (with Laravel and WordPress):

Why it's great for freelancing: While newer languages emerge, PHP continues to power a significant portion of the web, including WordPress – which dominates the CMS market. Knowledge of PHP, especially with frameworks like Laravel or Symfony, opens up a massive market for website development, customization, and maintenance.

Freelance Project Examples: WordPress theme/plugin development, custom CMS solutions, e-commerce site development, existing website maintenance.

Java:

Why it's great for freelancing: Java is a powerhouse for enterprise-level applications, Android mobile app development, and large-scale backend systems. Many established businesses and startups require Java expertise for robust, scalable solutions.

Freelance Project Examples: Android app development, enterprise software development, backend API development, migration projects.

SQL (Structured Query Language):

Why it's great for freelancing: While not a full-fledged programming language for building applications, SQL is the language of databases, and almost every application relies on one. Freelancers proficient in SQL can offer services in database design, optimization, data extraction, and reporting. It often complements other languages.

Freelance Project Examples: Database design and optimization, custom report generation, data migration, data cleaning for analytics projects.

Swift/Kotlin (for Mobile Development):

Why it's great for freelancing: With the explosive growth of smartphone usage, mobile app development remains a goldmine for freelancers. Swift is for iOS (Apple) apps, and Kotlin is primarily for Android. Specializing in one or both can carve out a lucrative niche.

Freelance Project Examples: Custom mobile applications for businesses, utility apps, game development, app maintenance and updates.

How to Choose Your First Freelance Language:

Consider Your Interests: What kind of projects excite you? Web, mobile, data, or something else?

Research Market Demand: Look at popular freelance platforms (Upwork, Fiverr, Freelancer.in) for the types of projects most requested in India.

Start with a Beginner-Friendly Language: Python and JavaScript are excellent starting points thanks to their extensive learning resources and helpful communities.

Focus on a Niche: Instead of trying to learn everything, go extremely deep on one or two languages within a domain (e.g., Python for data science, JavaScript for MERN stack development).

To be a successful freelance programmer in India, you have to combine technical skills with strong communication, project management, and self-discipline. By mastering one or more of these top programming languages, you will be set to seize exciting opportunities and establish yourself as an independent professional in the ever-evolving digital domain.

Contact us

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

Amazon Scraper API Made Easy: Get Product, Price, & Review Data

If you’re in the world of e-commerce, market research, or product analytics, then you know how vital it is to have the right data at the right time. Enter the Amazon Scraper API, your key to unlocking real-time, accurate, and comprehensive product, price, and review information from the world's largest online marketplace. With this Amazon scraper, you can streamline data collection and focus on making data-driven decisions that drive results.

Accessing Amazon’s extensive product listings and user-generated content manually is not only tedious but also inefficient. Fortunately, the Amazon Scraper API automates this process, allowing businesses of all sizes to extract relevant information with speed and precision. Whether you're comparing competitor pricing, tracking market trends, or analyzing customer feedback, this tool is your secret weapon.

Using an Amazon scraper is about more than automation; it’s about gaining insights that can redefine your strategy. From optimizing listings to enhancing customer experience, real-time data gives you the leverage you need. In this blog, we’ll explore what makes the Amazon Scraper API a game-changer, how it works, and how you can use it to elevate your business.

What is an Amazon Scraper API?

An Amazon Scraper API is a specialized software interface that allows users to programmatically extract structured data from Amazon without manual intervention. It acts as a bridge between your application and Amazon's web pages, parsing and delivering product data, prices, reviews, and more in machine-readable formats like JSON or XML. This automated process enables businesses to bypass the tedious and error-prone task of manual scraping, making data collection faster and more accurate.

One of the key benefits of an Amazon Scraper API is its adaptability. Whether you're looking to fetch thousands of listings or specific review details, this Amazon data scraper can be tailored to your exact needs. Developers appreciate its ease of integration into various platforms, and analysts value the real-time insights it offers.
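To make the idea of "machine-readable formats" concrete, here is a minimal sketch of turning a JSON response into typed records and then into CSV. The payload shape and field names (`results`, `asin`, `price`, and so on) are assumptions made up for illustration; they are not the schema of any particular scraper API.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass
from typing import List, Optional

# Hypothetical payload: the field names are assumptions for illustration only.
SAMPLE_RESPONSE = """
{"results": [
  {"asin": "B000EXAMPLE1", "title": "Sample Widget", "price": "19.99",
   "rating": 4.5, "review_count": 120},
  {"asin": "B000EXAMPLE2", "title": "Sample Gadget", "price": null,
   "rating": 3.8, "review_count": 42}
]}
"""


@dataclass
class Product:
    asin: str
    title: str
    price: Optional[float]  # None when the listing shows no price
    rating: float
    review_count: int


def parse_products(raw: str) -> List[Product]:
    """Convert a raw JSON string into a list of Product records."""
    payload = json.loads(raw)
    products = []
    for item in payload.get("results", []):
        price = item.get("price")
        products.append(Product(
            asin=item["asin"],
            title=item["title"],
            price=float(price) if price is not None else None,
            rating=float(item["rating"]),
            review_count=int(item["review_count"]),
        ))
    return products


def to_csv(products: List[Product]) -> str:
    """Serialize the records as CSV text, header row first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["asin", "title", "price", "rating", "review_count"],
    )
    writer.writeheader()
    for product in products:
        writer.writerow(asdict(product))  # a None price becomes an empty cell
    return buffer.getvalue()


products = parse_products(SAMPLE_RESPONSE)
print(to_csv(products))
```

Parsing into a small record type early makes missing values (such as an absent price) explicit before the data reaches a dashboard or database.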

Why You Need an Amazon Scraper API

The Amazon marketplace is a data-rich environment, and leveraging this data gives you a competitive advantage. Here are some scenarios where an Amazon Scraper API becomes indispensable:

1. Market Research: Identify top-performing products, monitor trends, and analyze competition. With accurate data in hand, businesses can launch new products or services with confidence, knowing there's a demand or market gap to fill.

2. Price Monitoring: Stay updated with real-time price fluctuations to remain competitive. Automated price tracking via an Amazon price scraper allows businesses to react quickly to competitors' changes.

3. Inventory Management: Understand product availability and stock levels. This can help avoid stockouts or overstocking. Retailers can optimize supply chains and restocking processes with the help of an Amazon product scraper.

4. Consumer Sentiment Analysis: Use review data to improve offerings. With Amazon review scraping, businesses can analyze customer sentiment to refine product development and service strategies.

5. Competitor Benchmarking: Compare products across sellers to evaluate strengths and weaknesses. An Amazon web scraper helps gather structured data that fuels sharper insights and marketing decisions.

6. SEO and Content Strategy: Extract keyword-rich product titles and descriptions. With Amazon review scraper tools, you can identify high-impact phrases to enrich your content strategies.

7. Trend Identification: Spot emerging trends by analyzing changes in product popularity, pricing, or review sentiment over time. The ability to scrape Amazon product data empowers brands to respond proactively to market shifts.
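As a rough illustration of the price monitoring scenario above, the sketch below compares two price snapshots and reports products whose price moved by at least a chosen percentage. The snapshot format (a dictionary of ASIN to price), the sample values, and the 5% default threshold are all assumptions for this example.

```python
def detect_price_changes(previous, current, threshold_pct=5.0):
    """Return (asin, old, new, pct_change) for prices that moved
    by at least threshold_pct percent between two snapshots."""
    changes = []
    for asin, new_price in current.items():
        old_price = previous.get(asin)
        if old_price is None or old_price == 0:
            continue  # new or unpriced product: nothing to compare against
        pct = (new_price - old_price) / old_price * 100
        if abs(pct) >= threshold_pct:
            changes.append((asin, old_price, new_price, round(pct, 2)))
    return changes


# Made-up snapshots keyed by placeholder ASINs.
yesterday = {"B000EXAMPLE1": 20.00, "B000EXAMPLE2": 50.00}
today = {"B000EXAMPLE1": 18.00, "B000EXAMPLE2": 50.50, "B000EXAMPLE3": 9.99}

for asin, old, new, pct in detect_price_changes(yesterday, today):
    print(f"{asin}: {old:.2f} -> {new:.2f} ({pct:+.2f}%)")
```

In practice the snapshots would come from scheduled scraper runs; the comparison logic itself stays the same.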

Key Features of a Powerful Amazon Scraper API

Choosing the right Amazon Scraper API can significantly enhance your e-commerce data strategy. Here are the essential features to look for:

Scalability: Seamlessly handle thousands—even millions—of requests. A truly scalable Amazon data scraper supports massive workloads without compromising speed or stability.

High Accuracy: Get real-time, up-to-date data with high precision. Top-tier Amazon data extraction tools constantly adapt to Amazon’s evolving structure to ensure consistency.

Geo-Targeted Scraping: Extract localized data across regions. Whether it's pricing, availability, or listings, geo-targeted Amazon scraping is essential for global reach.

Advanced Pagination & Sorting: Retrieve data by page number, relevance, rating, or price. This allows structured, efficient scraping for vast product categories.

Custom Query Filters: Use ASINs, keywords, or category filters for targeted extraction. A flexible Amazon scraper API ensures you collect only the data you need.

CAPTCHA & Anti-Bot Bypass: Navigate CAPTCHAs and Amazon’s anti-scraping mechanisms using advanced, bot-resilient APIs.

Flexible Output Formats: Export data in JSON, CSV, XML, or your preferred format. This enhances integration with your applications and dashboards.

Rate Limiting Controls: Stay compliant while maximizing your scraping potential. Good scraper APIs balance speed with stealth.

Real-Time Updates: Track price drops, stock changes, and reviews in real time—critical for reactive, data-driven decisions.

Developer-Friendly Documentation: Enjoy a smoother experience with comprehensive guides, SDKs, and sample code, which is especially crucial for rapid deployment and error-free scaling.
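Features such as pagination, sorting, geo-targeting, and output format usually surface as request parameters. The following sketch builds paginated query URLs with the standard library. The endpoint `api.example.com` and every parameter name (`keyword`, `page`, `sort_by`, `country`, `output`) are hypothetical; a real provider's documentation defines the actual names.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical endpoint, used only for illustration.
BASE_URL = "https://api.example.com/v1/amazon/search"


def build_search_url(keyword, page=1, sort_by="relevance",
                     country="us", output="json"):
    """Build one search URL with pagination, sorting, and geo parameters."""
    if page < 1:
        raise ValueError("page must be >= 1")
    params = {
        "keyword": keyword,
        "page": page,
        "sort_by": sort_by,   # e.g. relevance, rating, or price
        "country": country,   # geo-targeting
        "output": output,     # e.g. json or csv
    }
    return f"{BASE_URL}?{urlencode(params)}"


def page_urls(keyword, pages, **options):
    """Return the URLs for pages 1..pages of the same query."""
    return [build_search_url(keyword, page=n, **options)
            for n in range(1, pages + 1)]


urls = page_urls("wireless mouse", 3, sort_by="price")
for url in urls:
    print(url)
```

Letting `urlencode` handle escaping avoids hand-built query strings that break on spaces or special characters in keywords.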

How the Amazon Scraper API Works

The architecture behind an Amazon Scraper API is engineered for robust, scalable scraping, high accuracy, and user-friendliness. At a high level, this powerful Amazon data scraping tool functions through the following core steps:

1. Send Request: Users initiate queries using ASINs, keywords, category names, or filters like price range and review thresholds. This flexibility supports tailored Amazon data retrieval.

2. Secure & Compliant Interactions: Advanced APIs utilize proxy rotation, CAPTCHA solving, and header spoofing to ensure anti-blocking Amazon scraping that mimics legitimate user behavior, maintaining access while complying with Amazon’s standards.

3. Fetch and Parse Data: Once the target data is located, the API extracts and returns it in structured formats such as JSON or CSV. Data includes pricing, availability, shipping details, reviews, ratings, and more—ready for dashboards, databases, or e-commerce tools.

4. Real-Time Updates: Delivering real-time Amazon data is a core advantage. Businesses can act instantly on dynamic pricing shifts, consumer trends, or inventory changes.

5. Error Handling & Reliability: Intelligent retry logic and error management keep the API running smoothly, even when Amazon updates its site structure, ensuring maximum scraping reliability.

6. Scalable Data Retrieval: Designed for both startups and enterprises, modern APIs handle everything from small-scale queries to high-volume Amazon scraping using asynchronous processing and optimized rate limits.
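The retry logic and proxy rotation mentioned in the steps above can be sketched roughly as follows. This is a simplified illustration, not how any specific vendor implements it: `TransientError`, the `fetch` callable, and the proxy names are placeholders, and the network call is simulated so the example runs offline.

```python
import itertools
import random
import time


class TransientError(Exception):
    """Placeholder for errors worth retrying (timeouts, HTTP 429/503)."""


def fetch_with_retry(fetch, url, proxies, max_attempts=4,
                     base_delay=0.5, sleep=time.sleep):
    """Call fetch(url, proxy), rotating proxies and backing off
    exponentially (with jitter) between failed attempts."""
    proxy_pool = itertools.cycle(proxies)
    last_error = None
    for attempt in range(max_attempts):
        proxy = next(proxy_pool)
        try:
            return fetch(url, proxy)
        except TransientError as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
                sleep(delay)
    raise RuntimeError(
        f"giving up on {url} after {max_attempts} attempts"
    ) from last_error


# Simulated fetch that fails twice and then succeeds.
calls = []


def flaky_fetch(url, proxy):
    calls.append(proxy)
    if len(calls) < 3:
        raise TransientError("simulated timeout")
    return {"url": url, "via": proxy}


result = fetch_with_retry(
    flaky_fetch,
    "https://example.com/item",
    ["proxy-a", "proxy-b"],
    sleep=lambda seconds: None,  # skip real waiting in this demo
)
print(result, calls)
```

Passing `sleep` as a parameter keeps the function easy to test; production code would leave the default `time.sleep` in place so the backoff actually slows requests down.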

Top 6 Amazon Scraper APIs to Scrape Data from Amazon

1. TagX Amazon Scraper API

TagX offers a robust and developer-friendly Amazon Scraper API designed to deliver accurate, scalable, and real-time access to product, pricing, and review data. Built with enterprise-grade infrastructure, the API is tailored for businesses that need high-volume data retrieval with consistent uptime and seamless integration.

It stands out with anti-blocking mechanisms, smart proxy rotation, and responsive documentation, making it easy for both startups and large enterprises to deploy and scale their scraping efforts quickly. Whether you're monitoring price fluctuations, gathering review insights, or tracking inventory availability, TagX ensures precision and compliance every step of the way.

Key Features:

High-volume request support with 99.9% uptime.

Smart proxy rotation and CAPTCHA bypassing.

Real-time data scraping with low latency.

Easy to integrate, with structured JSON/CSV outputs.

Comprehensive support for reviews, ratings, pricing, and more.

2. Zyte Amazon Scraper API

Zyte offers a comprehensive Amazon scraping solution tailored for businesses that need precision and performance. Known for its ultra-fast response times and nearly perfect success rate across millions of Amazon URLs, Zyte is an excellent choice for enterprise-grade projects. Its machine learning-powered proxy rotation and smart fingerprinting ensure you're always getting clean data, while dynamic parsing helps you retrieve exactly what you need—from prices and availability to reviews and ratings.

Key Features:

Ultra-reliable with 100% success rate on over a million Amazon URLs.

Rapid response speeds averaging under 200ms.

Smart proxy rotation powered by machine learning.

Dynamic data parsing for pricing, availability, reviews, and more.

3. Oxylabs Amazon Scraper API

Oxylabs delivers a high-performing API for Amazon data extraction, engineered for both real-time and bulk scraping needs. It supports dynamic JavaScript rendering, making it ideal for dealing with Amazon’s complex front-end structures. Robust proxy management and high reliability ensure smooth data collection for large-scale operations. Perfect for businesses seeking consistency and depth in their scraping workflows.

Key Features:

99.9% success rate on product pages.

Fast average response time (~250ms).

Offers both real-time and batch processing.

Built-in dynamic JavaScript rendering for tough-to-reach data.

4. Bright Data Amazon Scraper API

Bright Data provides a flexible and feature-rich API designed for heavy-duty Amazon scraping. It comes equipped with advanced scraping tools, including automatic CAPTCHA solving and JavaScript rendering, while also offering full compliance with ethical web scraping standards. It’s particularly favored by data-centric businesses that require validated, structured, and scalable data collection.

Key Features:

Automatic IP rotation and CAPTCHA solving.

Support for JavaScript rendering for dynamic pages.

Structured data parsing and output validation.

Compliant, secure, and enterprise-ready.

5. ScraperAPI

ScraperAPI focuses on simplicity and developer control, making it perfect for teams who want easy integration with their own tools. It takes care of all the heavy lifting—proxies, browsers, CAPTCHAs—so developers can focus on building applications. Its customization flexibility and JSON parsing capabilities make it a top choice for startups and mid-sized projects.

Key Features:

Smart proxy rotation and automatic CAPTCHA handling.

Custom headers and query support.

JSON output for seamless integration.

Supports JavaScript rendering for complex pages.

6. SerpApi Amazon Scraper

SerpApi offers an intuitive and lightweight API that is ideal for fetching Amazon product search results quickly and reliably. Built for speed, SerpApi is especially well-suited for real-time tasks and applications that need low-latency scraping. With flexible filters and multi-language support, it’s a great tool for localized e-commerce tracking and analysis.

Key Features:

Fast and accurate search result scraping.

Clean JSON output formatting.

Built-in CAPTCHA bypass.

Localized filtering and multi-region support.

Conclusion

In the ever-evolving digital commerce landscape, real-time Amazon data scraping can mean the difference between thriving and merely surviving. TagX’s Amazon Scraper API stands out as one of the most reliable and developer-friendly tools for seamless Amazon data extraction.

With a robust infrastructure, unmatched accuracy, and smooth integration, TagX empowers businesses to make smart, data-driven decisions. Its anti-blocking mechanisms, customizable endpoints, and developer-focused documentation ensure efficient, scalable scraping without interruptions.

Whether you're tracking Amazon pricing trends, monitoring product availability, or decoding consumer sentiment, TagX delivers fast, secure, and compliant access to real-time Amazon data. From agile startups to enterprise powerhouses, the platform grows with your business—fueling smarter inventory planning, better marketing strategies, and competitive insights.

Don’t settle for less in a competitive marketplace. Experience the strategic advantage of TagX—your ultimate Amazon scraping API.

Try TagX’s Amazon Scraper API today and unlock the full potential of Amazon data!

Original Source, https://www.tagxdata.com/amazon-scraper-api-made-easy-get-product-price-and-review-data

Unlock the Power of Web Scraping Services for Data-Driven Success

Why Do Businesses Need Web Scraping Services?

Web scraping enables organizations to collect vast amounts of structured data efficiently. Here’s how businesses benefit:

Market Research & Competitive Analysis – Stay ahead by monitoring competitor prices, strategies, and trends.

Lead Generation & Sales Insights – Gather potential leads and customer insights from various platforms.

Real-Time Data Access – Automate data collection to access the latest industry trends and updates.

Top Use Cases of Web Scraping Services

1. Data Extraction for Indian Markets

For businesses targeting the Indian market, specialized data extraction services in India can scrape valuable data from news portals, e-commerce websites, and other local sources to refine business strategies.

2. SERP Scraping for SEO & Marketing

A SERP scraping API helps businesses track keyword rankings, analyze search engine results, and monitor competitor visibility. This data-driven approach enhances SEO strategies and online presence.

3. Real Estate Data Scraping

For real estate professionals and investors, scraping real estate data provides insights into property listings, pricing trends, and rental data, making informed decision-making easier.

4. E-commerce & Amazon Data Scraping

E-commerce businesses can leverage an Amazon seller scraper to track best-selling products, price fluctuations, and customer reviews, optimizing their sales strategy accordingly.

Why Choose Actowiz Solutions for Web Scraping?

Actowiz Solutions specializes in robust and scalable web scraping services, ensuring:

High-quality, structured data extraction

Compliance with data regulations

Automated and real-time data updates

Whether you need web scraping for market research, price monitoring, or competitor analysis, our customized solutions cater to various industries.

Get Started Today! Harness the power of data with our web scraping service and drive business success. Contact Actowiz Solutions for tailored solutions that meet your data needs!

Top 5 Data Scraping Tools for 2025

In the data-driven era, data collectors (web scraping tools) have become important for extracting valuable information from the Internet. Whether for market research, competitive analysis, or academic research, they help users obtain the data they need efficiently. This article introduces five of the most popular international data collectors, covering their features, applicable scenarios, advantages, and disadvantages, to help you choose the most suitable tool.

1. ScrapeStorm

ScrapeStorm is an intelligent data collection tool based on artificial intelligence, which is widely popular for its ease of use and powerful functions. It supports multiple data collection modes and is suitable for users of different technical levels.

Main features:

Intelligent identification: Based on AI technology, it can automatically identify data fields in web pages and reduce the workload of manual configuration.

Multi-task support: Supports running multiple collection tasks at the same time to improve efficiency.

Multiple export formats: Supports exporting data to Excel, CSV, JSON and other formats for subsequent analysis.

Cloud service integration: Provides cloud collection services, and users can complete data collection without local deployment.

Applicable scenarios:

Suitable for users who need to collect data quickly, especially beginners without a strong technical background.

Suitable for scenarios such as e-commerce price monitoring and social media data collection.

Advantages:

Simple operation and low learning cost.

Supports multiple languages and website types.

Disadvantages:

Advanced features require paid subscriptions.

2. Octoparse

Octoparse is a powerful visual data collection tool suitable for extracting data from static and dynamic web pages.

Main features:

Visual operation: Through the drag-and-drop interface design, users can complete data collection tasks without writing code.

Dynamic web page support: Able to handle dynamic web pages rendered by JavaScript.

Cloud collection and scheduling: Supports scheduled collection and cloud deployment, suitable for large-scale data collection needs.

Applicable scenarios:

Suitable for users who need to extract data from complex web page structures.

Applicable to data collection in e-commerce, finance, real estate and other fields.

Advantages:

User-friendly interface, suitable for non-technical users.

Supports multiple data export methods.

Disadvantages:

The free version has limited functions, and advanced functions require payment.

3. ParseHub

ParseHub is a cloud-based data collection tool known for its powerful functions and flexibility.

Main features:

Multi-level data collection: supports extracting data from multi-level web pages, suitable for complex websites.

API support: provides an API for easy integration with other systems.

Cross-platform support: supports Windows, Mac and Linux systems.

Applicable scenarios:

Suitable for users who need to extract data from multi-level web pages.

Suitable for scenarios such as academic research and market analysis.

Advantages:

Powerful functions and support for complex web page structures.

Free version is available, suitable for individual users.

Disadvantages:

The learning curve is steep, and novices may need time to adapt.

4. Scrapy

Scrapy is an open source Python framework suitable for developers to build custom data collection tools.

Main features:

Highly customizable: developers can write custom scripts according to needs to implement complex data collection logic.

High performance: based on asynchronous framework design, it can efficiently handle large-scale data collection tasks.

Rich extension library: supports multiple plug-ins and extensions, with flexible functions.

Applicable scenarios:

Suitable for developers with programming experience.

Applicable to scenarios that require highly customized data collection.

Advantages:

Completely free, with strong support from the open source community.

Suitable for handling large-scale data collection tasks.

Disadvantages:

Requires programming knowledge, not suitable for non-technical users.
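To give a feel for why an asynchronous design helps with large collection jobs, here is a small standard-library sketch that fetches many pages concurrently while capping the number of requests in flight. This is not Scrapy code and does not use Scrapy's API; `fetch_page` is a stand-in that sleeps instead of downloading, and the URLs are placeholders.

```python
import asyncio


async def fetch_page(url):
    """Stand-in for a real download: waits briefly, returns fake HTML."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def crawl(urls, max_concurrency=5):
    """Fetch all URLs concurrently, at most max_concurrency at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        async with semaphore:
            return await fetch_page(url)

    # gather returns results in the same order as the input URLs
    return await asyncio.gather(*(bounded_fetch(url) for url in urls))


urls = [f"https://example.com/page/{n}" for n in range(1, 11)]
pages = asyncio.run(crawl(urls))
print(len(pages), "pages fetched")
```

While one request waits on the network, others proceed, which is the same general idea that lets an asynchronous framework handle many pages without one thread per request.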

5. Import.io

Import.io is a cloud-based data collection platform that focuses on converting web page data into structured data.

Main features:

Automated collection: supports automated data extraction and update.

API integration: provides RESTful API for easy integration with other applications.

Data cleaning function: built-in data cleaning tools to ensure data quality.

Applicable scenarios:

Suitable for users who need to convert web page data into structured data.

Applicable to business intelligence, data analysis and other scenarios.

Advantages:

High degree of automation, suitable for long-term data monitoring.

Provides data cleaning function to reduce post-processing workload.

Disadvantages:

Higher price, suitable for enterprise users.

How to choose the right data collector?

Choose based on technical background

If you have no programming experience, you can choose ScrapeStorm or Octoparse.

If you are a developer, Scrapy may be a better choice.

Choose based on data needs

If you need to handle complex web page structures, ParseHub and Scrapy are good choices.

If you need to monitor data for a long time, Import.io's automation function is more suitable.

Choose based on budget

If you have a limited budget, Scrapy is free, while ScrapeStorm and Octoparse offer free versions.

If you need enterprise-level features, the premium versions of Import.io and ScrapeStorm are worth considering.

No matter which tool you choose, the data collector can provide you with powerful data support to help you get ahead in the data-driven world.

Benefits of Amazon Product Rankings Data Scraping Services

What Are the Benefits of Using Amazon Product Rankings Data Scraping Services?

Introduction

In the fiercely competitive realm of eCommerce, grasping market trends and consumer behavior is essential. Amazon, a significant force in online retail, provides a wealth of data ideal for various applications such as market analysis, competitive intelligence, and product enhancement. One of the most crucial data points for businesses and analysts is product ranking data. Amazon product ranking data scraping services help extract this information, offering insights into product performance relative to competitors.

Utilizing these services enables businesses to extract e-commerce website data effectively. This includes understanding market dynamics and adjusting strategies based on product rankings. For comprehensive insights, companies often seek to scrape retail product price data to monitor pricing trends and inventory levels. However, while the data is invaluable, the process involves challenges such as navigating Amazon's anti-scraping measures and ensuring data accuracy. By employing best practices, businesses can overcome these challenges and leverage the data to drive strategic decisions and gain a competitive edge in online retail.

The Importance of Scraping Product Ranking Data

Product ranking data on Amazon is a crucial metric for understanding how products perform compared to their competitors. This data holds significant value for several key reasons:

1. Market Trends: Businesses can gain insights into which products are leading the market by utilizing services that scrape Amazon's top product rankings. Understanding market trends and consumer preferences allows companies to adjust their product offerings and marketing strategies to better align with current demands.

2. Competitive Analysis: Analyzing the rankings of competitors' products through an Amazon product data scraping service provides valuable insights into their strengths and weaknesses. This analysis helps identify market gaps and opportunities for differentiation, enabling businesses to position their products strategically.

3. Optimization Strategies: Knowing which products perform well allows sellers and marketers to refine their strategies. Utilizing Amazon product datasets helps optimize pricing, enhance product descriptions, and improve customer service, which can lead to better sales performance.

4. Inventory Management: Product ranking data is instrumental in managing inventory effectively. By web scraping e-commerce website data, businesses can identify which products are in high demand and which are not. This helps make informed decisions about stock levels, reducing the risk of overstocking or stockouts and ensuring efficient inventory management.

Incorporating these insights through an eCommerce data scraper can significantly enhance business strategies and operational efficiency.

Methods of Scraping Product Ranking Data

Scraping product ranking data from Amazon involves extracting various metrics, including product positions, reviews, ratings, and other relevant information. Here's an in-depth look at the methods used, incorporating essential tools and services:

1. Web Scraping: Web scraping is the most common method for extracting data from websites. It involves employing web crawlers or scrapers to navigate Amazon's pages and collect data. Popular tools for this purpose include BeautifulSoup, Scrapy, and Selenium. These tools can automate the data extraction process and handle complex data structures effectively. An Amazon products data scraper systematically extracts and organizes ranking data.

2. Amazon API: Amazon provides official APIs, such as the Product Advertising API, which offer structured access to product details, including rankings, reviews, and prices. This method is generally more reliable and organized than traditional web scraping, though it comes with usage limits and requires adherence to Amazon's terms of service. The API facilitates detailed and accurate data extraction.

3. Data Aggregators: Some third-party services specialize in aggregating product data from Amazon and other eCommerce platforms. These services provide pre-packaged datasets that are ready for analysis, eliminating the need for manual scraping. However, these aggregated datasets may not always be as up to date or detailed as data collected directly through other methods.

4. Manual Data Collection: Manual data collection can be employed for specific needs or smaller-scale projects. This involves visiting Amazon's website directly to record product rankings, reviews, and ratings. While labor-intensive, manual collection can be helpful for targeted data gathering on a limited scale. For comprehensive collection of Amazon product reviews and ratings, automated methods are a better fit.

Each method has advantages and challenges, and the choice depends on the project's specific requirements and the scale of data needed.
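As a small illustration of the web scraping method, the sketch below extracts rank and title pairs from an HTML fragment using only Python's standard library. The markup, class names, and product titles are invented for this example; real Amazon pages use different HTML that changes often, and libraries such as BeautifulSoup or Scrapy would normally do this work.

```python
from html.parser import HTMLParser

# Hypothetical markup, invented for illustration only.
SAMPLE_HTML = """
<ul>
  <li class="item"><span class="rank">#1</span><span class="title">Sample Widget</span></li>
  <li class="item"><span class="rank">#2</span><span class="title">Sample Gadget</span></li>
</ul>
"""


class RankingParser(HTMLParser):
    """Collect one {'rank': ..., 'title': ...} dict per list item."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # name of the span currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "item":
            self.rows.append({})
        elif tag == "span" and css_class in ("rank", "title"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = RankingParser()
parser.feed(SAMPLE_HTML)
rankings = [(int(row["rank"].lstrip("#")), row["title"]) for row in parser.rows]
print(rankings)
```

Because the extraction depends on specific tags and class names, any change to the page structure breaks it, which is one reason the data accuracy challenge discussed in this article matters.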

Challenges in Scraping Product Ranking Data

While scraping product ranking data from Amazon can provide valuable insights, several challenges must be addressed:

1. Data Accuracy: It is crucial to ensure the accuracy of the scraped data. Amazon's website structure and ranking algorithms can change frequently, affecting the reliability of the data.

2. Anti-Scraping Measures: Amazon employs various anti-scraping measures to prevent automated data extraction. This includes CAPTCHA challenges, IP blocking, and rate limiting. Scrapers must navigate these barriers to obtain data.

3. Legal and Ethical Considerations: Scraping data from websites can raise legal and ethical issues. It's essential to adhere to Amazon's terms of service and data usage policies. Unauthorized scraping can lead to legal consequences and damage a business's reputation.

4. Data Volume and Management: Amazon offers vast amounts of data, and managing this data can be challenging. Storing, processing, and analyzing large volumes of data requires robust data management systems and infrastructure.

5. Data Freshness: Product rankings can change rapidly due to fluctuations in sales, reviews, and other factors. Ensuring that the data is up-to-date is crucial for accurate analysis.

Best Practices for Scraping Product Ranking Data

To effectively scrape product ranking data from Amazon, consider the following best practices:

1. Respect Amazon's Terms of Service: Always ensure that your scraping activities comply with Amazon's terms of service. This helps avoid legal issues and ensures ethical practices.

2. Use Proxies and IP Rotation: To circumvent IP blocking and rate limiting, use proxies and IP rotation techniques. This helps distribute requests and reduces the risk of being blocked.

3. Implement Error Handling and Retry Mechanisms: Due to potential disruptions and changes in Amazon's website structure, implement error handling and retry mechanisms in your scraping process. This ensures the reliability and completeness of the data.

4. Monitor Data Quality: Regularly monitor the quality of the scraped data to ensure accuracy and relevance. Implement validation checks to identify and address data inconsistencies.

5. Update Scrapers Regularly: Amazon's website and ranking algorithms can change frequently. Update your scrapers regularly to adapt to these changes and maintain data accuracy.

6. Handle Data Responsibly: Ensure that the data collected is used responsibly and in accordance with privacy and data protection regulations. Avoid storing or misusing sensitive information.
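Two of the practices above, retry mechanisms and validation checks, can be sketched in a few lines of Python. This is an illustrative outline, not a production scraper: `fetch_with_retry` and `is_valid_record` are hypothetical helpers, the `fetch` callable stands in for whatever HTTP client you use, and the record shape (`rank`, `title`, `rating`) is an assumption.

```python
import time


def fetch_with_retry(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying failed attempts with exponential backoff.

    The delay doubles after each failure: base_delay, 2*base_delay, ...
    The final failure is re-raised so the caller can log or skip the URL.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


def is_valid_record(record):
    """Basic sanity checks for one scraped ranking record (assumed shape)."""
    return (
        isinstance(record.get("rank"), int)
        and record["rank"] >= 1
        and bool(record.get("title"))
        and isinstance(record.get("rating"), (int, float))
        and 0.0 <= record["rating"] <= 5.0
    )
```

Passing `sleep` as a parameter keeps the backoff testable without real waiting. Records that fail `is_valid_record` are often the first sign that the page layout has changed and the scraper needs updating.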

Conclusion

Scraping product ranking data from Amazon offers valuable insights into market trends, competitive dynamics, and product performance. Utilizing Amazon Product Rankings Data Scraping Services allows businesses to track and analyze product rankings effectively. While the process involves various methods and tools, it also comes with challenges that need careful consideration. By adhering to best practices and focusing on data accuracy and ethical standards, businesses and analysts can leverage eCommerce data scraping from Amazon to drive informed decision-making and gain a competitive edge in the eCommerce landscape.

Experience top-notch web scraping service and mobile app scraping solutions with iWeb Data Scraping. Our skilled team excels in extracting various data sets, including retail store locations and beyond. Connect with us today to learn how our customized services can address your unique project needs, delivering the highest efficiency and dependability for all your data requirements.

Source: https://www.iwebdatascraping.com/benefits-of-amazon-product-rankings-data-scraping-services.php

#AmazonProductRankingDataScrapingServices#ScrapingProductRankingData#ScrapeRetailProductPriceData#AmazonProductDataScraping#WebScrapingEcommerceWebsiteData#AmazonProductsDataScraper


E-commerce Web Scraping API for Accurate Product & Pricing Insights

Access structured e-commerce data efficiently with a robust web scraping API for online stores, marketplaces, and retail platforms. This API helps collect data on product listings, prices, reviews, stock availability, and seller details from top e-commerce sites. Ideal for businesses monitoring competitors, following trends, or managing records, it provides consistent, accurate results. Built to scale, the service supports high-volume requests and delivers results in easy-to-integrate formats like JSON or CSV. Whether you need data from Amazon, eBay, or Walmart, iWeb Scraping provides e-commerce data scraping services. Learn more about the service components and pricing by visiting iWebScraping E-commerce Data Services.


Top Amazon Product Scraper Tool

With Outsource Bigdata's comprehensive Amazon product scraper tools and services, companies can turn their digital transformation journey into an automated one. As an AI-powered company, we specialize in enhancing customer experiences and driving business results. We prioritize result-oriented Amazon scraping tools and services, along with data preparation, including IT application management.

Visit: https://outsourcebigdata.com/data-automation/web-scraping-services/amazon-scraping-tools-services/

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built a number of AI & ML solutions, AI-driven web scraping solutions, AI-data Labeling, AI-Data-Hub, and Self-serving BI solutions. We started in 2012 and have successfully delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

- ISO 9001:2015 and ISO/IEC 27001:2013 certified
- Served 750+ customers
- 11+ years of industry experience
- 98% client retention
- Great Place to Work® certified
- Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI-driven web scraping & workflow automation platform. APISCRAPY is an AI-driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI-augmented annotation & labeling solution. AI-Labeler is an AI-augmented data annotation platform that combines the power of artificial intelligence with human involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services. An on-demand AI data hub for curated, pre-annotated, and pre-classified data that lets enterprises easily and efficiently obtain high-quality data for training and developing AI models.

PRICESCRAPY: AI-enabled real-time pricing solution. An AI- and automation-driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI-driven data API solution hub. APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs, so companies can leverage that data in their own systems and applications.

Locations:
USA: 1-30235 14656
Canada: +1 4378 370 063
India: +91 810 527 1615
Australia: +61 402 576 615
Email: [email protected]


How Scraping LinkedIn Data Can Give You a Competitive Edge Over Your Competitors

In the digital era, data is an important part of any growing business. Beyond analyzing their own core data, companies see value in open internet data as a source of competitive advantage. There are various data sources, but we consider LinkedIn the most advantageous one.

LinkedIn is the largest social media website for professionals and businesses. It is also one of the best sources of social media data and job data. With LinkedIn web scraping, it becomes easy to fetch the data fields needed to analyze performance.

LinkedIn holds a large amount of data of interest to both businesses and researchers. Along with profile information for companies and businesses, it is also possible to get profile details of their employees. LinkedIn is also the biggest platform for posting jobs and searching for job-related information.

LinkedIn does not provide a comprehensive API that gives data analysts access to this data.

How Valuable is LinkedIn Data?

LinkedIn has almost 772 million registered users across 200 countries. According to 2018 data, the number of information workers on the LinkedIn platform grew by 70%, out of a global working population of 1.25 billion.

About 55 million firms, 14 million open job posts, and 36,000 skills are listed on the LinkedIn platform. Web data from LinkedIn is therefore extremely valuable for Business Intelligence.

Which Types of Information Are Extracted by LinkedIn Scraping?

Millions of data items are accessible on the platform, much of it useful for customers and businesses. Here are several data points commonly collected by LinkedIn scraping services. According to the data, LinkedIn registers two or more new users every second!

Public data

With 772 million registered user accounts on LinkedIn and counting, it is not possible to tap this wealth of user information manually. Around 100 data points are available per LinkedIn account. Importantly, LinkedIn's profile format is highly detailed: a user enters the full details of their career and education history. It is therefore possible to reconstruct an individual's professional career from the work experience listed in a profile.

Below is an overview of the data points fetched from a personal LinkedIn profile.

Company Data

Organizations invest resources in maintaining their LinkedIn profiles with key firmographic data, job data, and public activity. Fetching the main firmographic data helps companies stay ahead of competitors and investigate the market landscape. Firms can use search filters such as industry, business size, and geographical location to obtain detailed competitive intelligence.

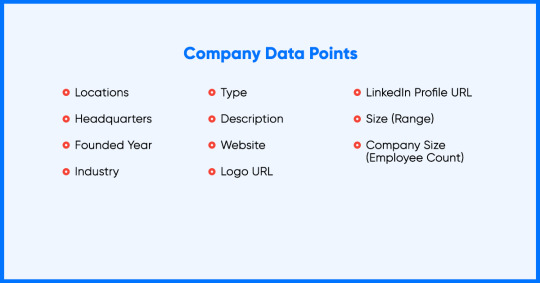

It is possible to scrape a company's data, with the necessary information shown below:

Social Listening Information:

Whether in research, business, or economics, what a top executive shares on social media can be as valuable as statistics with potential business impact. It can reveal new market expansions, executive hires, product failures, M&As, or departures. This is increasingly true as individuals and companies become more active on LinkedIn.

The point is to stay updated on those indicators by observing the social activity of top experts and companies.

Data from social activity on LinkedIn can be fetched by targeting the data points below.

How Do Businesses Use LinkedIn Data Scraping?

With all this data available, how can businesses use it effectively to compete in the market? Here are a few common use cases.

Research

Given that whole careers can be reconstructed from LinkedIn profiles, one can imagine how broad the possibilities are in the research arena.

Research institutions and leading academics are beginning to explore the power of this information and are even starting to cite LinkedIn as a reference in their publications.

For example, one could fetch all the profiles associated with company X from 2012 to 2018, collect data items such as skills, and relate them to company X's sales performance. One might then hypothesize that certain skills found among employees contribute to an outstanding company.

Human Resources

Human resources is all about people, and LinkedIn is a database of professionals across the globe. This data creates a competitive advantage for any recruiter, HR tech SaaS, or service provider. Searching for candidates manually on LinkedIn does not scale, but the data becomes valuable once you have all of it at hand.

Alternative Data for Finance

Financial organizations look for data that helps them better assess a company's investment potential. This is where people data plays an important role, since people drive the company's performance.

The potential of LinkedIn user information on a company's employees should not be underestimated. Organizations can use this information to monitor business hierarchies, educational backgrounds, functional compositions, and more. Furthermore, gathering the information provides insights such as key departures and executive hires, talent acquisition policies, and geographical market expansions.

Ways to Extract LinkedIn Data

For the reader's benefit: web data scraping for LinkedIn is a method that uses computer programs to automate data fetching from sources such as websites, digital media platforms, e-commerce sites, and business platforms. If you have the expertise, you can build the scraper yourself. However, extracting LinkedIn information has its challenges, given the huge volume of information and the platform's own controls.

There are various LinkedIn data scraping tools available in the marketplace, with distinguishing factors such as data points, data quality, and aggregation scale. Selecting the right data partner for your company's requirements is quite difficult.

Here at iWeb Scraping, our data collection methods are continuously evolving to provide a LinkedIn scraping service that delivers well-formatted, complete data points and high-quality datasets.

Conclusions

LinkedIn is a far-reaching and sought-after data source for public and company information. It is expected to grow further as the platform continues to attract more users. The possibilities for using this volume of data are boundless, and businesses should start capitalizing on the opportunity.

Unless you know effective methods for scraping, it is better to use LinkedIn scrapers developed by experts. You can also contact us for more assistance with LinkedIn data web scraping.

https://www.iwebscraping.com/how-scraping-linkedin-data-can-give-you-a-competitive-edge-over-your-competitors.php


Top 5 Web Scraping Tools in 2021

Web scraping, also known as web harvesting or web data extraction, is the process of obtaining and analyzing data from a website. The extracted data is then saved in a local database for various purposes. Web scraping can be performed manually or automatically through software, and automated processes are clearly more cost-effective than manual ones. Because there is a large amount of digital information online, companies equipped with these tools can collect more data at a lower cost than they otherwise could, and gain a competitive advantage in the long run.

How Web Scraping Benefits Businesses

Modern companies are built on good data. Although the Internet is effectively the largest database in the world, it is full of unstructured data that organizations cannot use directly. Web scraping helps overcome this hurdle by turning websites into structured data, which in many cases is of great value.

Sales and marketing teams benefit from a specific type of web scraping, contact scraping, which collects business contact information from websites. This helps attract more sales leads, close more deals, and improve marketing. By monitoring job board updates with a web scraper, recruiters can find ideal candidates with very specific searches. Financial analysts use web scraping to collect data about global stock markets, financial markets, transactions, commodities, and economic indicators to make better decisions. E-commerce and travel sites get product prices and availability from competitors and use the extracted data to maintain a competitive advantage. Data from social media and review sites (Facebook, Twitter, Yelp, etc.) lets you monitor the impact of your brand and take reputation management and customer review handling to a new level.

It's also a great tool for data scientists and journalists. An automated web scraper can collect millions of data points into your database in just a few minutes. This data can be used to support data models and academic research. Moreover, if you are a journalist, you can collect rich data online to practice data-driven journalism.

Web Scraping Tools List

In many cases, you can use a web crawler to extract website data for your own use. You can use browser tools to extract data from the website you are browsing in a semi-automatic way, or you can use free APIs or paid services to automate the crawling process. If you are technically proficient, you can even use programming languages like Python to develop your own web scraping applications.

No matter what your goal is, there are some tools that suit your needs. This is our curated list of top web crawlers.

Scraper site API

License: FREE

Website: https://www.scrapersite.com/

Scraper site enables you to create scalable web scrapers. It handles proxies, browsers, and CAPTCHAs on your behalf, so you can get data from any webpage with a simple API call.

Scraper site is easy to integrate. Just send a GET request with your API key and the target URL to their API endpoint and they'll return the HTML.
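The request described above can be sketched as follows. This only illustrates the general pattern of passing an API key and a target URL as query parameters: the endpoint `https://api.example.com/`, the parameter names `api_key` and `url`, and the helper `build_request_url` are placeholders, so check the provider's documentation for the real values.

```python
from urllib.parse import urlencode

# Placeholder endpoint; substitute the one from the provider's documentation.
API_ENDPOINT = "https://api.example.com/"


def build_request_url(api_key, target_url, endpoint=API_ENDPOINT):
    """Build the GET URL carrying the API key and the page to scrape.

    urlencode percent-encodes the target URL so that its own '?', '&',
    and '/' characters do not corrupt the outer query string.
    """
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{endpoint}?{query}"
```

The resulting URL can be passed to any HTTP client, for example `urllib.request.urlopen(build_request_url(key, page)).read()`, and the response body would be the HTML of the target page.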

Scraper site is also available as an extension for Chrome that extracts data from web pages. You create a sitemap that defines how the site should be navigated and which content should be extracted. Then you can export the captured data to CSV.

Web Scraping Function List:

Scraping multiple pages

Scraped data stored in local storage

Multiple data selection types

Extract data from dynamic pages (JavaScript + AJAX)

Browse the captured data

Export the captured data to CSV

Importing and exporting sitemaps

Works with the Chrome browser only

The Chrome extension is completely free to use

Highlights: sitemap, e-commerce website, mobile page.

Beautiful Soup

License: Free

Site: https://www.crummy.com/software/BeautifulSoup/

Beautiful Soup is a popular Python library designed for web scraping.

Features list:

• Simple ways to navigate, search, and modify the parse tree
• Document encodings are handled automatically
• Support for different parsing strategies

Highlights: Completely free, highly customizable, and developer-friendly.

dexi.io

License: Commercial, starting at $119 a month.

Site: https://dexi.io/

Dexi provides leading web scraping software for enterprises. Their solutions include web scraping, interaction, monitoring, and processing software to provide fast data insights that lead to better decisions and better business performance.

Features list:

• Scraping the web on a large scale
• Intelligent data mining
• Real-time data points

Import.io

License: Commercial, starting at $299 per month.

Site: https://www.import.io/

Import.io provides a comprehensive web data integration solution that makes it fast, easy, and affordable to increase the strategic value of your web data. It also has a professional services team who can help clients get the most value from the solution.

Features list:

• Point-and-click training
• Interactive workflow
• Scheduled scraping
• Machine learning suggestions
• Works with logins
• Generates website screenshots
• Notifications upon completion
• URL generator

Highlights: Point and Click, Machine Learning, URL Builder, Interactive Workflow.

Scrapinghub

License: Commercial, starting at $299 per month.

Website: https://scrapinghub.com/

Scrapinghub is the main creator and maintainer of Scrapy, the most popular web scraping framework written in Python. They also provide on-demand data services for the following use cases: product and price information, alternative financial data, competition and market research, sales and market research, news and content monitoring, and retail and distribution monitoring.

Features list:

• Crawling hosted on Scrapy Cloud
• Anti-bot countermeasures and other challenges

Highlights: scalable scraping cloud.

Summary

The web is a huge repository of data, and firms that use web scraping are better placed to maintain a competitive advantage. Although all of the web scraping tools and services above share the goal of converting a website into data, they differ in functionality, price, ease of use, and so on. We hope you can find the one that best suits your needs. Happy scraping!


E-Commerce Website Data Scraping Services

Web scraping automates data extraction, making it faster and more reliable. It relies on crawlers or robots that automatically scrape a particular page or website and extract the required information. It can extract data that is not readily visible or that you would otherwise have to copy and paste by hand. It also takes care of saving the extracted data in a clean, readable format. Usually, the extracted data is available in CSV format.

3i Data Scraping's E-commerce Website Data Scraping Services can extract product data from e-commerce websites, no matter how large the dataset.

How to use Web Scraping for E-Commerce?

E-commerce data scraping is an effective way to get better results. Before covering the various benefits of using an E-Commerce Product Scraper, let's go over how you can potentially use it.

Evaluate Demand:

E-commerce data can be monitored across categories, products, prices, reviews, and listing rates. With this, you can rearrange product sales across categories according to demand.

Better Price Strategy:

Here you can use product data sets that include product names, categories, product types, reviews, and ratings. Gathering this information from top e-commerce websites, together with competitor price scraping, lets you respond to competitors' pricing strategies.

Reseller Management:

You can manage all your partners and resellers by extracting e-commerce product data from all their stores. The processed data can reveal violations of MAP (minimum advertised price) terms.

Marketplace Tracking:

You can easily monitor your rankings for all the keywords of specific products through 3i Data Scraping Services and measure how competitors perform, using product review and ratings scraping to optimize for ranking. We can help you scrape this data with E-Commerce Website Data Scraper Tools.

Identify Frauds:

With a crawler that automatically scrapes product data, you can see the ups and downs in pricing. You can use this to assess the authenticity of a seller.

Campaign Monitoring:

On many popular websites such as Twitter, LinkedIn, Facebook, and YouTube, we can scrape data such as comments associated with your brand as well as competitors' brands.

List of Data Fields

At 3i Data Scraping Services, we can scrape or extract the following data fields for E-commerce Website Data Scraping Services:

Description

Product Name

Breadcrumbs

Price/Currency

Brand

MPN/GTIN/SKU

Images

Availability

Review Count

Average Rating

URL

Additional Properties
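One way to picture the data fields above is as a single typed record per product. The sketch below is an assumption about how such a record could be modeled and exported to CSV in Python; the class `ProductRecord`, its field names, and the helper `records_to_csv` are illustrative and not part of any 3i Data Scraping deliverable.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, field, fields
from typing import Optional


@dataclass
class ProductRecord:
    """One scraped product; field names mirror the list above (assumed)."""
    product_name: str
    url: str
    description: str = ""
    price: Optional[float] = None
    currency: Optional[str] = None
    brand: Optional[str] = None
    sku: Optional[str] = None            # could also hold an MPN or GTIN
    availability: Optional[str] = None
    review_count: Optional[int] = None
    average_rating: Optional[float] = None
    breadcrumbs: list = field(default_factory=list)
    images: list = field(default_factory=list)
    additional_properties: dict = field(default_factory=dict)


def records_to_csv(records):
    """Serialize records to CSV text, flattening list and dict fields."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(ProductRecord)])
    writer.writeheader()
    for record in records:
        row = asdict(record)
        row["breadcrumbs"] = " > ".join(row["breadcrumbs"])
        row["images"] = "|".join(row["images"])
        row["additional_properties"] = json.dumps(
            row["additional_properties"], sort_keys=True
        )
        writer.writerow(row)
    return buf.getvalue()
```

Fields that were not found on the page stay `None` and are written as empty CSV cells, which keeps missing data distinguishable from a real value such as a price of zero.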

E-Commerce Web Scraping API

Our E-commerce Web Scraping API Services, built with Python, can extract different data from e-commerce sites and return responses in real time, including E-Commerce Product Reviews. We can automate business processes using APIs and power various apps and workflows through data integrations. You can easily use our ready-to-use, customized APIs.

List of E-commerce Product Data Scraping, Web Scraping API

At 3i Data Scraping, we can scrape data fields for any of the following web scraping APIs:

Amazon API

BestBuy.com API

AliExpress API

eBay API

HM.com API

Costco.com API

Google Shopping API

Macys.com API

Nordstrom.com API

Target API

Walmart.com API

Tmall API

These are the web scraping APIs for which we can scrape or extract data as per the client's needs.

How You Can Scrape Product from Different Websites

Another way to scrape product information is to make API calls with a product URL to retrieve the product data in real time. It behaves like a single API for all the shopping websites.

Why 3i Data Scraping Services

We provide our services in a way that makes the customer experience excellent. Clients like to work with us, and we have a 99% customer retention ratio. Our team responds within a few minutes, so you can ask about your requirements right away.

Our services are scalable: we can scrape thousands of pages per second and extract data from millions of pages every day. Our extensive infrastructure makes large-scale web scraping easier and trouble-free, handling complexities such as JavaScript or Ajax websites, IP blocking, and CAPTCHA.

If you are looking for the best E-Commerce Data Scraping Services, then contact 3i Data Scraping Services.

#Webscraping#datascraping#webdatascraping#web data extraction#web data scraping#Ecommerce#eCommerceWebScraping#3idatascraping#USA


Top 5 Web Scraping Tools in 2024

Web scraping tools are designed to grab the information needed on the website. Such tools can save a lot of time for data extraction.

Here is a list of 5 recommended tools with better functionality and effectiveness.

ScrapeStorm is an efficient data scraping tool based on artificial intelligence technology that automatically detects and collects content by simply entering a URL. It supports multi-threading, scheduled collection and other functions, making data collection more convenient.

Features:

1)Easy-to-use interface.

2)RESTful API and Webhook

3)Automatic Identification of E-commerce SKU and big images

Cons:

No cloud services

ParseHub is a robust, browser-based web scraping tool that offers straightforward data extraction in a no-code environment. It’s designed with user-friendly features and functionalities in mind, and has amassed quite the following thanks to its free point of entry.

Features:

1)Easy-to-use interface.

2)Option to schedule scraping runs as needed.

3)Ability to scrape dynamic websites built with JavaScript or AJAX.

Cons:

1)Although not steep, there might be a learning curve for absolute beginners.

2)It lacks some advanced feature sets needed by larger corporations, as reflected in its comparative affordability.

Dexi.io stands out for offering more than web scraping. It’s a specialist in intelligent automation, revolutionizing data extraction.

Features:

1)Can handle complex sites, including dynamic AJAX or JavaScript-filled pages.

2)Offers advanced logic functionality.

3)Delivers cloud-based operation, ensuring high-speed processing.

Cons:

1)The multitude of features might frustrate or bamboozle beginners.

2)Imperfections mean debugging is sometimes necessary.

Zyte, rebranded from Scrapinghub, is a comprehensive no-code web scraping solution offering powerful automation capabilities beyond basic data extraction.

Features:

1)IP rotation to counter blocking while scraping.

2)Built-in storage for scraped data, supplied via the cloud.

3)Additional services such as data cleansing available.

Cons:

1)Prioritizes a hands-off approach, so it’s less like web scraping, and more like outsourcing.

2)Offers an API for total data collection control, but this requires more technical knowledge to harness.

Import.io champions itself as a comprehensive tool for turning web pages into actionable data, seamlessly catering to personal use and professional requirements. Machine learning integration lets it understand how you want it to work, and get better over time.

Features:

1)User-friendly dashboard and flexible API.

2)Real-time data updates.

3)Intelligent recognition makes scraping even from complicated sources easy.

Cons:

1)May struggle with websites using AJAX or JavaScript.

2)Some learning curve involved in setting up more complex scrapes.


Awesome Things to Do with PHP Web Development in 2023

PHP is a versatile programming language used for web development, and it has been a popular choice for website development for many years. As PHP continues to evolve, it gives developers new opportunities to create amazing websites with its flexibility and functionality. As a website development company in India, you can leverage the power of PHP to create websites that are both functional and attractive. Below we explore some of the top things that website development companies and website developers in India can do with PHP in 2023.

PHP web development is used to create responsive websites which adapt to any device. With the increasing use of mobile devices, websites must be optimized for mobile screens. Using PHP web development techniques, website developers in India can create responsive websites and provide a seamless user experience across all devices.

PHP web development can be used to create e-commerce websites that are feature-rich and highly customizable. Online shopping has become a part of our daily lives, and businesses need an e-commerce website that meets the needs of their customers. With PHP web development, website development companies in India can create e-commerce websites that are visually appealing, easy to navigate, and provide a seamless checkout process.

PHP web development can be used to create custom content management systems (CMS). A CMS allows website owners to create, manage, and publish digital content without requiring technical knowledge. You can build a custom CMS with PHP to fit your client's specific needs, or use platforms like WordPress, Joomla, and Drupal to create a user-friendly and scalable CMS that offers robust functionality.

PHP web development can be used to create dynamic web applications that provide a rich user experience. Website developers in India can create web applications with PHP that are highly interactive, responsive, and provide real-time updates. This will create opportunities to create web applications for various industries.

PWAs are web apps that offer an immersive user experience and offline functionality. They look and feel like native mobile apps but are accessed through a browser and can be installed on a user's home screen. With PHP, you can build PWAs that are fast, reliable, and engaging.

Chatbots are gaining popularity as they offer personalized customer support, answer frequently asked questions, and provide round-the-clock assistance. With PHP, you can build chatbots that integrate with popular messaging platforms.

Web scraping is the process of automatically extracting data from websites. With PHP, you can build web scrapers that collect data from multiple sources and store it in a database. This data can then be used for market research, competitor analysis, and lead generation.

With PHP, you can create APIs that can be integrated with various applications and services. You can use frameworks like Laravel, Symfony, and CodeIgniter to build robust APIs that offer security, scalability, and flexibility, so that systems can communicate with each other seamlessly.

Interactive dashboards allow users to visualize data and make informed decisions. With PHP, you can build interactive dashboards that integrate with various data sources like databases, APIs, and spreadsheets. You can pair a PHP backend with JavaScript charting libraries like D3.js, Highcharts, and Chart.js to create stunning visualizations.

Machine learning is an emerging technology that can help websites provide personalized recommendations, predictions, and insights. With PHP, you can integrate machine learning algorithms into your websites using libraries such as PHP-ML and Rubix ML.

Microservices architecture allows you to break down your application into small, independent services that can be developed, tested, and deployed separately. With PHP, you can use frameworks like Lumen, Silex, and Slim to build lightweight and scalable microservices.

PHP web development offers an array of opportunities to create awesome websites in 2023.

Top E-commerce Websites Scraping API | Extract API from eCommerce Website

In the world of e-commerce, data is power. Whether you're tracking market trends, monitoring competitor pricing, or keeping an eye on your supply chain, having access to real-time data is essential. This is where scraping APIs come into play. In this blog, we'll dive into the top e-commerce websites scraping APIs and explore how they can help you extract valuable data from e-commerce websites efficiently and effectively.

What is an E-commerce Website Scraping API?

An e-commerce website scraping API is a tool that allows you to extract data from e-commerce websites. This data can include product information, pricing, availability, reviews, and more. Scraping APIs can automate the process of gathering data from multiple websites, making it easier to analyze market trends and gain insights.

Why Use an E-commerce Website Scraping API?

Market Research: Understand the trends and demands in your industry by tracking data from various e-commerce websites.

Competitive Analysis: Monitor your competitors' pricing, product offerings, and customer reviews to stay ahead of the game.

Dynamic Pricing: Keep your pricing strategy agile by adjusting prices based on real-time data from other e-commerce platforms.

Product Discovery: Find new products and suppliers by exploring different e-commerce websites.

Inventory Management: Track product availability and update your inventory in real-time to avoid stockouts.

Top E-commerce Websites Scraping APIs

Scrapy: A popular open-source web crawling framework, Scrapy provides a flexible and efficient way to extract data from e-commerce websites. It supports custom spiders and pipelines for processing data.

ParseHub: ParseHub offers an easy-to-use visual scraping interface, allowing you to create scraping projects without any coding knowledge. It supports advanced features such as pagination and dynamic content handling.

Octoparse: Octoparse is a no-code scraping tool that offers a visual editor to create web scraping tasks. It supports cloud extraction, scheduling, and automated data parsing.

Diffbot: Diffbot provides advanced AI-powered scraping with pre-built APIs for product data extraction. It offers real-time data updates and can handle complex websites.

Bright Data: Bright Data (formerly Luminati Networks) offers a scraping API that supports a wide range of use cases, including e-commerce data extraction. It provides residential and data center proxies for high-quality scraping.

Extracting Data from E-commerce Websites

When using a scraping API, you can extract data from e-commerce websites such as:

Product Information: Extract product names, descriptions, prices, images, and categories.

Pricing: Monitor competitor pricing and dynamic pricing changes.

Availability: Track product availability and stock levels.

Reviews: Gather customer reviews and ratings for products.

Categories: Analyze product categories and subcategories for trends.
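To make the fields above concrete, here is a minimal sketch of turning a product page into a structured record. It uses only the Python standard library, and the HTML snippet and its class names (`product-title`, `price`, `availability`, `rating`, `category`) are assumptions made up for illustration; a real site will use its own markup, and a scraping API would normally return these fields for you.

```python
from dataclasses import dataclass, field
from html.parser import HTMLParser

# Hypothetical markup: these class names are assumptions, not a real site's HTML.
SAMPLE_HTML = """
<div class="product">
  <h1 class="product-title">Wireless Mouse</h1>
  <span class="price">$24.99</span>
  <span class="availability">In stock</span>
  <span class="rating">4.5</span>
  <a class="category">Electronics</a>
  <a class="category">Accessories</a>
</div>
"""


@dataclass
class Product:
    title: str = ""
    price: float = 0.0
    in_stock: bool = False
    rating: float = 0.0
    categories: list = field(default_factory=list)


class ProductParser(HTMLParser):
    """Collects text from elements whose class attribute names a known field."""

    FIELDS = {"product-title", "price", "availability", "rating", "category"}

    def __init__(self):
        super().__init__()
        self.product = Product()
        self._current = None  # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class", "")
        if css_class in self.FIELDS:
            self._current = css_class

    def handle_endtag(self, tag):
        self._current = None

    def handle_data(self, data):
        text = data.strip()
        if not text or self._current is None:
            return
        if self._current == "product-title":
            self.product.title = text
        elif self._current == "price":
            # Strip the currency symbol and thousands separators before parsing.
            self.product.price = float(text.lstrip("$").replace(",", ""))
        elif self._current == "availability":
            self.product.in_stock = text.lower() == "in stock"
        elif self._current == "rating":
            self.product.rating = float(text)
        elif self._current == "category":
            self.product.categories.append(text)


def parse_product(html: str) -> Product:
    parser = ProductParser()
    parser.feed(html)
    return parser.product


product = parse_product(SAMPLE_HTML)
print(product)
```

Keeping the result in a small dataclass makes it easy to store the record or compare it with a later scrape of the same page.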

Best Practices for Web Scraping

Respect Website Terms of Service: Always adhere to the terms of service of the websites you are scraping to avoid legal issues.

Rate Limiting: Respect the rate limits of websites to avoid overwhelming their servers.

Rotate Proxies: Use proxy servers to avoid getting blocked and to maintain anonymity.

Data Accuracy: Validate the data you collect to ensure its accuracy and reliability.
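Two of these practices, rate limiting and data validation, are easy to sketch in code. The example below is one possible approach rather than a standard recipe: `RateLimiter` and `validate_record` are hypothetical helper names, and the one-request-per-interval rule and the validation checks are assumptions you would tune to the target site and your own data.

```python
import time


class RateLimiter:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval: float, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self) -> float:
        """Blocks until the next request is allowed; returns the seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay > 0:
                self._sleep(delay)
        self._last = self._clock()
        return delay


def validate_record(record: dict) -> list:
    """Returns a list of problems found in a scraped record (empty if valid)."""
    problems = []
    if not str(record.get("title", "")).strip():
        problems.append("missing title")
    price = record.get("price")
    if not isinstance(price, (int, float)) or price <= 0:
        problems.append("price must be a positive number")
    rating = record.get("rating")
    if rating is not None and not (isinstance(rating, (int, float)) and 0 <= rating <= 5):
        problems.append("rating must be between 0 and 5")
    return problems


limiter = RateLimiter(min_interval=0.05)
scraped = [
    {"title": "Wireless Mouse", "price": 24.99, "rating": 4.5},
    {"title": "", "price": -1},
]
for record in scraped:
    limiter.wait()  # a real scraper would send its request right after this
    print(validate_record(record))
```

The clock and sleep functions are passed in as parameters so the limiter can be tested without actually waiting.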

Conclusion

E-commerce websites scraping APIs are powerful tools for gaining insights into the competitive landscape and staying ahead in the market. By leveraging these APIs, you can automate the process of gathering data and make data-driven decisions for your business. Just remember to follow best practices and respect the websites you're scraping to maintain a positive online presence.

How Can You Scrape Retail Product Price Data?

About Retail Product

Web data scraping services play a vital role in collecting critical data from the web, helping companies stay up to date with market values. However, extracting and gathering the right data from a website is a difficult procedure that calls for expert resources as well as access to the latest tools.

We rapidly extract retail data from a wide range of websites, using sophisticated crawlers and a hybrid methodology to offer well-organized datasets that can assist you in making smart decisions. Our experts scrape retail product price data to help enterprises in many ways.

List of Data Fields

At iWeb Scraping, we help you extract the required data fields from retail websites:

Product Title

Product Features

Price: Sales, Product Lists

Product Images

Product Brand

Product Description

Product Part Number

Number of Products

SKU Number

Model Number

How Does Retail Website Data Scraping Assist Your Business?

Analyze Demands

Better Price Strategy

Reseller Management

Tracking Marketplace

Identify Fraud

Observe Campaigns

Benefits of Retail Website Scraping

E-commerce Website Scraping API

Our Retail Website API scraping services extract data from retail websites and deliver it on demand. Use the API to automate business processes and to power internal apps and workflows through data integrations.

Scrape Product Data from E-commerce Websites

Create API calls with product URLs to retrieve product details within seconds. You can connect these calls to price intelligence tools that monitor and track product prices.
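As a rough illustration of what such a call could look like, the sketch below builds a request URL from a product URL and reads the fields a price tracker would need from the response. The endpoint `https://api.example.com/v1/product`, the `url` and `api_key` parameter names, and the JSON response shape are all placeholders invented for this example; they are not the actual iWeb Scraping API, so check the provider's documentation for the real interface.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, used only for illustration.
API_BASE = "https://api.example.com/v1/product"


def build_request_url(product_url: str, api_key: str) -> str:
    """Builds the GET URL for one product lookup, URL-encoding the inputs."""
    query = urlencode({"url": product_url, "api_key": api_key})
    return f"{API_BASE}?{query}"


def parse_product_response(body: str) -> dict:
    """Keeps only the fields a price tracker would need."""
    data = json.loads(body)
    return {
        "title": data["title"],
        "price": float(data["price"]),
        "currency": data.get("currency", "USD"),
    }


request_url = build_request_url(
    "https://shop.example.com/item/123?ref=home", "YOUR_KEY"
)
print(request_url)

# Assumed response shape; a real service will document its own schema.
sample_body = '{"title": "Wireless Mouse", "price": "24.99", "currency": "USD"}'
print(parse_product_response(sample_body))
```

In practice you would send `request_url` with an HTTP client and pass the response body to `parse_product_response`, then hand the result to your price tracking tool.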

Run Lookups on Different Websites and Retrieve Results

With a single API call, it is easy to query numerous websites and retrieve results. You don't need to fill in multiple forms or click any buttons. Pass the inputs to our APIs and extract the data. This is useful for pulling up-to-the-minute data from websites covering events, travel, finance, and more.
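One way to picture a single call that queries several websites is a small fan-out helper. This is only a sketch under stated assumptions: `lookup_all` is a hypothetical function, and the stub fetchers stand in for real per-site API clients, which would make network requests instead of returning canned data.

```python
from concurrent.futures import ThreadPoolExecutor


def lookup_all(query: str, sources: dict, max_workers: int = 4) -> dict:
    """Runs the same query against every source and collects results by name.

    `sources` maps a site name to a callable that takes the query and returns
    a list of results. A failure in one source does not abort the others.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(fetch, query) for name, fetch in sources.items()}
        for name, future in futures.items():
            try:
                results[name] = future.result()
            except Exception as exc:  # record the error instead of raising
                results[name] = {"error": str(exc)}
    return results


# Stub fetchers standing in for real per-site API clients (hypothetical).
def search_travel(query):
    return [{"site": "travel", "match": f"{query} flight", "price": 120.0}]


def search_events(query):
    return [{"site": "events", "match": f"{query} concert", "price": 45.0}]


def search_broken(query):
    raise TimeoutError("source did not respond")


results = lookup_all("Berlin", {
    "travel": search_travel,
    "events": search_events,
    "broken": search_broken,
})
for name, value in results.items():
    print(name, value)
```

Running the sources in a thread pool keeps one slow website from holding up the rest, and storing errors per source lets the caller decide whether to retry.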

Why Choose Us?

We have designed our programs and resources around emerging areas of retail website data scraping to find the best possible web scraping solutions.

Our dedication to delivering accurate data extraction services has consistently kept us among the finest in the business, and we can assist you with services such as Retail Websites Data Scraping Services.

Our Retail Product Scraper collects valuable data from various search engine results, which can be useful for building prospect data.

Our Retail Data Scraping services collect details from retail websites and convert them into structured formats.

If you are looking for the best Retail Product Price Scraping Services, then you can contact iWeb Scraping Services for all your queries.
