#basic unix

Text

I go by no pronouns but not as in my name, more so like my pronouns are an undefined variable in shell coding

#neo.txt#coding#programming#like. 5 people will get this#shell and unix in gen are a pretty niche kinda part of programming#with people more so sticking to python html java and the C family#and i guess sql? SQL counts as a language itself doesn't it?#I haven't really used it outside of making basic databases so I don't know fundamentally what it is and why it was created#anyways this was your fairly-rare-on-tumblr more-common-on-twitter tech ramble

18 notes

Text

GNE's Not Eunuchs

3 notes

Text

I feel this all should be interpreted with "printed text is displayed as an image on your screen" kept in mind.

The upside is that it is able to display text "as it actually is" (on Google's side) on any computer.

The downside is that constantly creating images of the page rather than simply feeding you the text is incredibly inefficient, gets in the way of other things, and can lag awfully on lower-end devices or with poor connection.

While such decisions are not at all rare from a webdev perspective, they are detrimental when they turn a simple task (for the computer) into a mess.

writer survey question time:

inspired by seeing screencaps where the software is offering (terrible) style advice because I haven't used a software that has a grammar checker for my stories in like a decade

if you use multiple applications, pick the one you use most often.

#people for the love of god learn what Notepad is and basics of file structure#or vim if you ended up with a Unix system somehow#I know it is not as convenient as text existing on the Internet where you can access it from your laptop and phone#but if you don't need that and need just text then don't do the more complicated step#the computer is not a genie in a bottle its a calculator and its usefulness depends on you

19K notes

Text

I don't think people realize how absolutely wild Linux is.

Here we have an Operating system that now has 100 different varieties, all of them with their own little features and markets that are also so customizable that you can literally choose what desktop environment you want. Alongside that it is the OS of choice for Supercomputers, most Web servers, and even tiny little toy computers that hackers and gadget makers use. It is the Operating System running on most of the world's smartphones. That's right. Android is built on the Linux kernel.

It can run on literally anything up to and including a potato, and as of now desktop Linux Distros like Ubuntu and Mint are so easy to use and user friendly that technological novices can use them. This Operating system has had App stores since the 90s.

Oh, and what's more, this operating system was fuckin' built by volunteers and users alongside businesses and universities because they needed an all purpose operating system so they built one themselves and released it for free. If you know how to, you can add to this.

Oh, and its founder wasn't some corporate hotshot. It's an introverted Swedish-speaking Finn who, while he was a student, started making his own Operating system after playing around with someone else's OS. He was going to call it Freax but the guy he got server space from named the folder of his project "Linux" (Linus Unix) and the name stuck. He operates this project from his Home office which is painted in a colour used in asylums. Man's so fucking introverted he developed Git, the world's most widely used version control system, so he didn't have to deal with drama and email.

Steam adopted it meaning a LOT of games now natively run in Linux and what cannot be run natively can be adapted to run. It's now the OS used on their consoles (Steam Deck) and on top of this, a lot of people have found games run better on Linux than on Windows. More computers run Steam on Linux than on macOS.

On top of that the Arctic World Archive (basically the Svalbard Seed bank, but for Data) have this OS saved in their databanks so if the world ends the survivors are going to be using it.

On top of this? It's Free! No "Freemium" bullshit, no "pay to unlock" shit, no license fees, no tracking or data harvesting. If you have an old laptop that still works and a 16GB USB drive, you can go get it and install it and have a functioning computer because it uses less fucking resources than Windows. Got a shit PC? Linux Mint XFCE or Xubuntu is lightweight af. This shit is stopping eWaste.

What's more, it doesn't even scrimp on style. KDE, XFCE, Gnome, Cinnamon, all look pretty and are functional and there's even a load of people who try to make their installs look pretty AF as a hobby called "ricing" with a subreddit (/r/unixporn) dedicated to it.

Linux is fucking wild.

11K notes

Text

Being neurodivergent is like you're a computer running some variation of Unix while the rest of the world runs Windows. You have the exact same basic components as other machines, but you think differently. You organize differently. You do things in a way that Windows machines don't always understand, and because of that, you can't use programs written for Windows. If you're lucky, the developer will write a special version of their program specifically with your operating system in mind that will work just as well as the original, and be updated in a similar time frame. But if not? You'll either be stuck using emulators or a translator program like Wine, which come with an additional resource load and a host of other challenges to contend with, or you'll have to be content with an equivalent, which may or may not have the same features and the ability to read files created by the other program.

However, that doesn't mean you're not just as powerful. Perhaps you're a desktop that just happens to run Mac or Linux. Maybe you're a handheld device, small and simple but still able to connect someone to an entire world. Or perhaps you're an industrial computer purpose-built to perform a limited number of tasks extremely well, but only those tasks. You may not even have a graphical user interface. You could even be a server proudly hosting a wealth of media and information for an entire network to access, perhaps even the entire Internet. They need only ask politely. You may not be able to completely understand other machines, but you are still special in your own way.

#actually autistic#autism#neurodivergent#neurodiversity#computers#this is so nerdy i'm sorry#i also hope it doesn't offend people#adhd#actually adhd#unix#linux#macintosh#windows#actually neurodivergent

1K notes

Text

On Keeping Time

To run a simple program, a computer needs some kind of storage, and some kind of input/output device. To run a simple operating system, a computer will also need some random-access memory for holding onto information temporarily. To run a sophisticated operating system that supports many users and programs reliably, a computer will also need some way to make sure one user doesn't hog resources and prevent other users' programs from running.

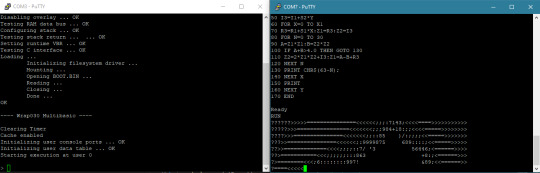

My Wrap030 homebrew computer currently has a flash ROM which holds a bootloader program for starting other programs from disk. It has 16MB of RAM. It has 9 total serial ports for I/O. It just needs that last thing to be able to run a sophisticated operating system.

I've written before about how computers can share a single processor between multiple users or programs. The simplest option is to have each program periodically yield control back to the system so that the next program can run for a little while. The problem with this approach is if a program malfunctions and never yields control back to the system, then no other program can run.

The solution is to have an external interrupt that can tell the CPU it's time to switch programs. Each program can be guaranteed to have its chance to run because if a program tries to run too long, that interrupt will come to force a switch to the next program.

The way this is typically accomplished is with a periodic timer: a ticking clock that interrupts the CPU regardless of what it's doing.
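To make the difference concrete, here is a small Python simulation of the two approaches. It is only a sketch, not anything that runs on the Wrap030: the function names, the `polite`/`hog` tasks, and the idea of measuring time in abstract "ticks" are all assumptions made for illustration.

```python
from collections import deque

def run_cooperative(tasks, total_ticks):
    """Switch tasks only when the running task volunteers to yield.

    tasks maps a name to a function that does one tick of work and
    returns True if it yields control afterwards.
    """
    ready = deque(tasks)                      # task names, round-robin order
    ticks_run = {name: 0 for name in tasks}
    for _ in range(total_ticks):
        current = ready[0]
        yielded = tasks[current]()
        ticks_run[current] += 1
        if yielded:
            ready.rotate(-1)                  # move current task to the back
    return ticks_run

def run_preemptive(tasks, time_slice, total_ticks):
    """Switch tasks whenever a simulated timer interrupt fires.

    Voluntary yields are ignored here to keep the sketch short.
    """
    ready = deque(tasks)
    ticks_run = {name: 0 for name in tasks}
    countdown = time_slice                    # stands in for the hardware timer
    for _ in range(total_ticks):
        current = ready[0]
        tasks[current]()
        ticks_run[current] += 1
        countdown -= 1
        if countdown == 0:                    # "interrupt": the timer expired
            ready.rotate(-1)                  # force a switch to the next task
            countdown = time_slice            # reload the timer
    return ticks_run

# Hypothetical tasks: one yields after every tick, one never yields.
tasks = {"polite": lambda: True, "hog": lambda: False}

print(run_cooperative(tasks, 100))     # {'polite': 1, 'hog': 99}
print(run_preemptive(tasks, 10, 100))  # {'polite': 50, 'hog': 50}
```

In the cooperative run, the misbehaving task keeps the CPU as soon as it gets it. In the preemptive run, the timer hands each task the same share no matter how it behaves.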

And that's what my Wrap030 project is missing. I need a timer interrupt.

The catch is, my system has always been a little fragile. I have it running well right now with three expansion boards, but there's always a risk of it being very unhappy if I try to add another expansion board. If I could somehow pull a timer interrupt out of what I already have, that would be ideal.

Nearly all of the glue logic pulling this system together is programmable logic in the form of CPLDs. This gives me the flexibility to add new features without having to rework physical circuitry. As it happens, the logic running my DRAM card currently consumes under half of the resources available in the card's CPLD. It also has several spare I/O pins, and is wired to more of the CPU bus than any other chip in the system.

So I added a timer interrupt to my DRAM controller.

It is very minimal — just a 16-bit register that starts counting down every clock cycle as soon as it's loaded. When the timer gets to 1, it asserts one of those spare I/O pins to interrupt the CPU.
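As a rough software model of that register (a sketch only; the real thing is CPLD logic, and details such as the timer stopping once it fires, or a reload clearing the interrupt, are my assumptions here rather than a description of the actual design):

```python
class CountdownTimer:
    """Toy model of a 16-bit down-counter that raises an interrupt line."""

    MASK = 0xFFFF  # the register is 16 bits wide

    def __init__(self):
        self.count = 0
        self.running = False
        self.irq = False

    def load(self, value):
        """Loading the register starts the countdown (assumed to clear the IRQ)."""
        self.count = value & self.MASK
        self.running = self.count > 0
        self.irq = False

    def clock(self):
        """Advance one clock cycle; return True while the interrupt is asserted."""
        if self.running:
            self.count -= 1
            if self.count <= 1:       # the timer has counted down to 1
                self.irq = True       # assert the spare I/O pin
                self.running = False  # assumed one-shot until reloaded
        return self.irq

timer = CountdownTimer()
timer.load(5)
print([timer.clock() for _ in range(4)])  # [False, False, False, True]
```

With a 16-bit register the longest interval is 65,535 clock cycles, so the interrupt service routine would need to reload the timer each time it fires to keep a steady tick going.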

And all it took was a couple bodge wires and a little extra logic.

I put together a quick test program to check if the timer was running. The program would spin in a loop waiting to see if a specific address in memory changed. When it changed, it would print out that it had, and then go right back into the loop. Meanwhile, the interrupt service routine would change the same address in memory every time the timer expired.
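The shape of that test can be sketched in Python as a simulation. This is not the actual test program: `FLAG_ADDR`, `RELOAD`, and the dictionary standing in for memory are hypothetical, and the "interrupt" is just a function call made when a simulated countdown expires.

```python
FLAG_ADDR = 0x1000  # hypothetical address shared by the ISR and the main loop
RELOAD = 4          # hypothetical timer period, in loop iterations

def run_test(iterations):
    memory = {FLAG_ADDR: 0}
    countdown = RELOAD
    log = []

    def timer_isr():
        # Interrupt service routine: change the watched memory location.
        memory[FLAG_ADDR] = (memory[FLAG_ADDR] + 1) & 0xFF

    last_seen = memory[FLAG_ADDR]
    for i in range(iterations):
        # Simulated hardware: count down and fire the interrupt on expiry.
        countdown -= 1
        if countdown == 0:
            timer_isr()
            countdown = RELOAD
        # Main program: spin, watching for the location to change.
        if memory[FLAG_ADDR] != last_seen:
            last_seen = memory[FLAG_ADDR]
            log.append(f"iteration {i}: flag changed to {last_seen}")
    return log

for line in run_test(12):
    print(line)
```

The main loop never touches the timer itself; it only notices the side effect the interrupt routine leaves behind, which is what makes this a useful check that interrupts are really arriving.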

This is great! It was the last significant piece of hardware I was missing to be able to run a proper operating system like Unix or Linux — which has always been a goal of the project. While I still have much to learn before I can attempt to get a proper OS running, I can still put this new timer to use.

I had previously built my Multibasic kernel to run cooperatively. Each user instance of BASIC would yield control whenever it needed to read or write to its terminal (which it does at every line while running a BASIC program, checking for the Ctrl-C stop sequence). This worked well enough, but a particularly complex BASIC program could still slow down other users' programs.

Converting my Multibasic kernel from Cooperative multitasking to Preemptive multitasking was actually fairly easy. I just needed to initialize the timer at startup, and add an interrupt service routine to switch to the next user.

(It's not really something that can be seen in a screenshot, but it's doing the thing, I promise.)

Now that I have all of the requisite hardware, I guess I need to dive into learning how to customize and build an operating system for a new machine. It's something I have always wanted to do.

35 notes

Note

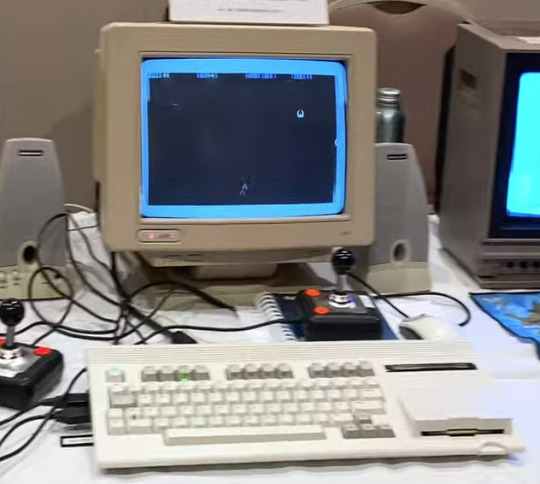

If the Commodore 64 is great, where is the Commodore 65?

It sits in the pile with the rest of history's pre-production computers that never made it. It's been a while since I went on a Commodore 65 rant...

The successor to the C64 is the C128, arguably the pinnacle of 8-bit computers. It has 3 modes: native C128 mode with 2MHz 8502, backwards compatible C64 mode, and CP/M mode using a 4MHz Z80. Dual video output in 40-column mode with sprites plus a second output in 80-column mode. Feature-rich BASIC, built in ROM monitor, numpad, 128K of RAM, and of course a SID chip. For 1985, it was one of the last hurrahs of 8-bit computing that wasn't meant to be a budget/bargain bin option.

For the Amiga was taking center stage at Commodore -- the 16-bit age is here! And its initial market performance wasn't great, they were having a hard time selling its advanced capabilities. The Amiga platform took time to really build up momentum square in the face of the rising dominance of the IBM PC compatible. And the Amiga lost (don't tell the hardcore Amiga fanboys, they're still in denial).

However, before Commodore went bankrupt in '94, someone planned and designed another successor to the C64. It was supposed to be backwards compatible with C64, while also evolving on that lineage, moving to a CSG 4510 R3 at 3.54MHz (a fancy CMOS 6502 variant based on a subprocessor out of an Amiga serial port card). 128K of RAM (again) supposedly expandable to 1MB, 256X more colors, higher resolution, integrated 3½" floppy not unlike the 1581. Bitplane modes, DAT modes, Blitter modes -- all stuff that at one time was a big deal for rapid graphics operations, but nothing that an Amiga couldn't already do (if you're a C65 expert who isn't mad at me yet, feel free to correct me here).

The problem is that nobody wanted this.

Sure, Apple had released the IIgs in 1986, but that had both the backwards compatibility of an Apple II and a 16-bit 65C816 processor -- not some half-baked 6502 on gas station pills. Plus, by the time the C65 was in heavy development it was 1991. Way too late for the rapidly evolving landscape of the consumer computer market. It would be cancelled later that same year.

I realize that Commodore was also still selling the C64 well into 1994 when they closed up shop, but that was more of a desperation measure to keep cash flowing, even if it was way behind the curve by that point (remember, when the C64 was new it was a powerful, affordable machine for 1982). It was free money on an established product that was cheap to make, whereas the C65 would have been this new and expensive machine to produce and sell that would have been obsolete from the first day it hit store shelves. Never mind the dismal state of Commodore's marketing team post-Tramiel.

Internally, the guy working on the C65 was someone off in the corner who didn't work well with others while 3rd generation Amiga development was underway. The other engineers didn't have much faith in the idea.

The C65 has acquired a hype of "the machine that totally would have saved Commodore, guise!!!!1!11!!!111" -- saved nothing. If you want better what-if's from Commodore, you need to look to the C900 series UNIX machine, or the CLCD. Unlike those machines which only have a handful of surviving examples (like 3 or 4 CLCDs?), the C65 had several hundred, possibly as many as 2000 pre-production units made and sent out to software development houses. However many got out there, no software appears to have surfaced, and only a handful of complete examples of a C65 have entered the hands of collectors. Meaning if you have one, it's probably buggy and you have no software to run on it. Thus, what experience are you recapturing? Vaporware?

The myth of the C65 and what could have been persists nonetheless. I'm aware of 3 modern projects that have tried to take the throne from the Commodore 64, doing many things that sound similar to the Commodore 65.

The Foenix Retro Systems F256K:

The 8-Bit Guy's Commander X16

The MEGA65 (not my picture)

The last of which is an incredibly faithful open-source visual copy of the C65, whereas the other projects are one-offs by dedicated individuals (and when referring to the X16, I don't mean David Murray as he's not the one doing the major design work).

I don't mean to belittle the effort people have put forth into such complicated projects; it's just not what I would have built. In 2019, I had the opportunity to meet the 8-Bit Guy and see the early X16 prototype. I didn't really see the appeal, and neither did David see the appeal of my homebrew, the Cactus.

Build your own computer, build a replica computer. I encourage you to build what you want, it can be a rewarding experience. Just remember that the C65 was probably never going to dig Commodore out of the financial hole they had dug for themselves.

262 notes

Text

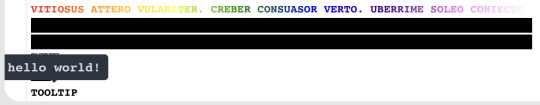

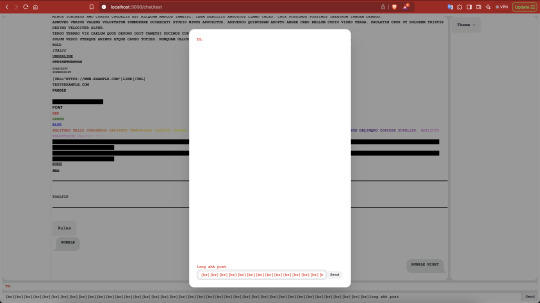

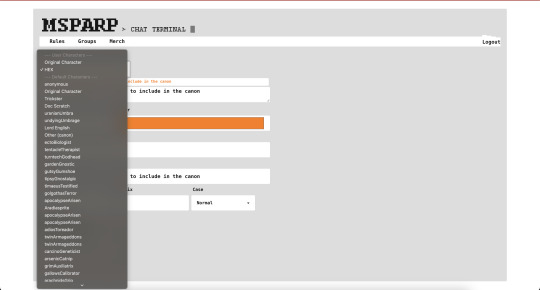

Your January 2nd PARPdate: Hex in a pair of baggy overalls and a hardhat covered in soot edition.

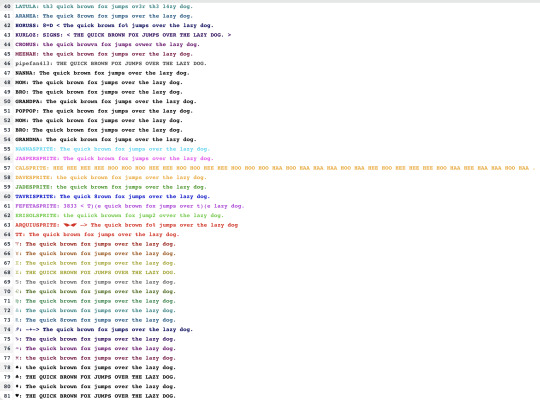

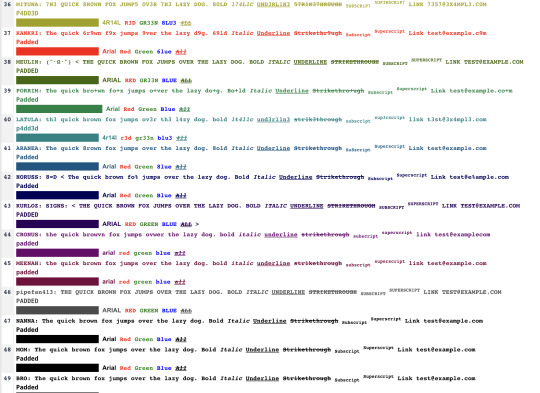

It's been a few months- how about some CONTENT?

With The Troubles having subsided for the time being, Hex has been hard at work hammering out goodies for the Dreambubble update that will then be expanded into the Msparp Update. Rapid-fire lets GO:

Login screen with form validations and visual parity with DB1!

Logging system looking GOOD!

DARK THEME WORKING

A toggleable automatic contrast adjuster!

Quirking system ported from dreambubble!

The BBCode system! Quirk compatible, just like back in the old country!

BONE (will be usable with [bone]text[/bone] or [font=Bone]text[/font] !)

Gradients! WITH a new input!

This one is [gradient=red,orange,yellow,green,blue,indigo,violet]example text[/gradient] OR [gradient=rgb(255,0,0),#ffff00,black]example text[/gradient]

Formatting expansions!

Tooltips!

[tooltip="this_is_a_tool_tip"]Example[/tooltip]

Dividers!

[div]

Dividers WITH TEXT

[div]DIVIDER WITH TITLE[/div]

These also work with both color and gradient tags!

Popouts! Good for chat descriptions! This one is: [modal buttonText=Rules] [div]RULES[/div] [gradient=red,orange,yellow,green,blue,indigo,violet,rgba(0,0,0,0)]Be Kind[/gradient] [/modal]

Countdowns! This one is [countdown=1734422353] The number represents a unix timestamp! https://www.unixtimestamp.com/

Text bubbles! left: [bubble]text[/bubble] right: [bubble_r]text[/bubble]

Pop-outable text preview! Good for super long posts!

Tables!

This cool new thing where if you're scrolled up and a new message is sent, the bottom of the window changes color to match the text color of the new message!

Hex also got the presets ported and the basics of the character system set up!
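For anyone setting up a countdown tag: a Unix timestamp is the number of seconds since 1970-01-01 00:00:00 UTC. Here is a quick Python sketch for checking what moment a number points to and how long is left. The function names are made up for this example and have nothing to do with how DB2 implements the tag.

```python
from datetime import datetime, timezone

def countdown_target(timestamp):
    """Convert a Unix timestamp to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc)

def seconds_remaining(timestamp, now=None):
    """Seconds left until the timestamp, clamped at zero once it has passed."""
    if now is None:
        now = datetime.now(timezone.utc)
    return max(0, int(timestamp - now.timestamp()))

# The timestamp from the countdown example above.
print(countdown_target(1734422353).isoformat())  # 2024-12-17T07:59:13+00:00
```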

Of note is that Hex has also set up a RUDIMENTARY TEST CHAT for DB2, available HERE:

Keep some things in mind though:

Some of the BBCode is broken

Nothing is saved to the database, only 100 messages are visible at a time because all the others get deleted.

clicky the funny box on the right to change characters

We'll have a better how-to BBCode guide up when DB2 is ready to go live (or sooner!), but that's how it's looking for now. MAN this was a lot of stuff. Hope you all had a fantastic holiday season and an excellent new year.

And thanks for sticking with us, it means the world!

48 notes

Text

People's Computer Company March 1975

With no clear delineation between the halves of the "outside page," this issue reported on the March 5 meeting of the Homebrew Computer Club (32 enthusiastic people gathered in Gordon French's garage) and proposed the creation of a "Tiny BASIC" suitable for playing PCC's games on an Altair 8800 with limited memory. It also described UNIX in action at the Boston Children's Museum (Wumpus had been ported to it) and promoted "What To Do After You Hit Return," a compilation of PCC games (my family had a later printing of that book).

23 notes

Text

I’ve been thinking about this for a little while — something I’d want to do if I had the time and money would be to design a Motorola 68000-powered tiny (10” or smaller) laptop. Modern CMOS 68K implementations are very power-efficient and decently well-suited to handheld and portable devices (see: TI-92 series), and if combined with a crisp, modern monochrome OLED display, could get you days of continuous usage without needing a recharge! Add a few megabytes of RAM, some peripherals (IDE/CF controller, ISA or S-100 slots, DMA controller, SPI bus, RS-232 port, SD or CF slot, PS/2 port for a mouse, text mode + hires monochrome video card, etc…), and you have a nice, flexible system that can be rarely charged, doesn’t require ventilation, and can be just thick enough to fit the widest port or slot on it.

The main issue would be software support: nearly all existing operating systems that ran on a 68K were either intended for very specific hardware (Classic Mac OS, AmigaOS) or required more than a flat 68000 (NetBSD, Linux, or any other UNIX requiring MMU paging). So, it would probably end up being a custom DOS with some multitasking and priv level capability, or perhaps CP/M-68K (but I don’t know how much software was ever written for that — also, it provides a “bare minimum” hardware abstraction of a text-mode console and disk drive). A custom DOS, with a nice, standard C library capable of running compiled software, would probably be the way to go.

The software question perhaps raises another, harder question: What would I use this for? Programming? Then I’d want a text editor, maybe vi(m) or something like that. OK. Vim just needs termcap/(n)curses or whatever to draw the text, and not much else. That’s doable! You’d just need to provide text-mode VT100 emulation and termcap/curses should “just work” without too much issue. I like writing C, so I’d need a compiler. Now, I’m assuming this simplistic operating system would be entirely written in a combination of assembly language (to talk to hardware and handle specific tasks such as switching processes and privilege management and whatnot) and C (to handle most of the logic and ABI). I could probably cross-compile GCC and be good to go, aside from handling library paths and executable formats that don’t comply with POSIX (I have no intention of making yet another UNIX-like system). Hopefully, most other command-line software (that I actually use) will follow suit without too much trouble. I don’t know how much work it is to get Python or Lua to a new platform (though NetBSD on the 68K already supports both), but Python (or Lua) support would bring a lot of flexibility to the platform. Despite me being a Python hater, I must admit it’s quite an attractive addition.
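To give a sense of what "text-mode VT100 emulation" would involve, here is a very rough Python sketch of the core idea: the console reads a byte stream, draws ordinary characters into a grid, and interprets escape sequences as commands. It handles only two real VT100/ANSI sequences, cursor position (`ESC [ row ; col H`) and erase display (`ESC [ 2 J`). The `TinyTerminal` class is hypothetical, and a real emulator (let alone one written in C or assembly for a 68000) would need many more sequences, plus scrolling, attributes, and wrapping.

```python
import re

# CSI sequences look like: ESC [ <numeric params separated by ;> <final letter>
CSI = re.compile(r"\x1b\[([0-9;]*)([A-Za-z])")

class TinyTerminal:
    def __init__(self, rows=24, cols=80):
        self.rows, self.cols = rows, cols
        self.row = self.col = 0
        self.clear()

    def clear(self):
        self.cells = [[" "] * self.cols for _ in range(self.rows)]

    def feed(self, data):
        """Consume output from a program and update the character grid."""
        pos = 0
        while pos < len(data):
            match = CSI.match(data, pos)
            if match:
                self._control(match.group(1), match.group(2))
                pos = match.end()
                continue
            ch = data[pos]
            pos += 1
            if ch == "\r":
                self.col = 0
            elif ch == "\n":
                self.row = min(self.row + 1, self.rows - 1)  # no scrolling here
            elif ch == "\x1b":
                continue  # unsupported escape: drop the ESC byte
            else:
                self.cells[self.row][self.col] = ch
                self.col = min(self.col + 1, self.cols - 1)  # no line wrap here

    def _control(self, params, final):
        numbers = [int(p) if p else 0 for p in params.split(";")]
        if final == "H":  # cursor position, 1-based, defaults to 1;1
            row = numbers[0] or 1
            col = (numbers[1] if len(numbers) > 1 else 0) or 1
            self.row = min(row, self.rows) - 1
            self.col = min(col, self.cols) - 1
        elif final == "J" and numbers[0] == 2:  # erase the whole display
            self.clear()
        # Every other sequence is recognized but ignored in this sketch.

    def line(self, row):
        return "".join(self.cells[row]).rstrip()

term = TinyTerminal(rows=4, cols=20)
term.feed("\x1b[2J\x1b[2;5Hhello\x1b[Hvi")
print(repr(term.line(0)))  # 'vi'
print(repr(term.line(1)))  # '    hello'
```

A full-screen editor mostly emits sequences like these to move the cursor and repaint regions, so how many of them the console understands largely decides how much curses software runs unmodified.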

What about graphics? All the software I’ve mentioned so far is text-mode only, yet historical 68K-based systems like the Mac and Amiga had beautiful graphics! Implementing X11 would be a massive pain in the ass, considering how much it relies on UNIXy features like sockets (not to mention the memory usage), and I really don’t want Wayland to have anything to do with this. I guess I’d have to roll my own graphics stack and window manager to support a WIMP interface. I could copy Apple’s homework there: they also made a monochrome graphics interface for a M68K configured with a handful of MiB of RAM. I could probably get a simple compositing window manager (perhaps make it tiling for a modern vibe ;3). Overall, outside of very simple and custom applications, functionality with real software would be problematic. Is that a big problem? Maybe I want an underpowered notebook I can put ideas and simple scripts down on, then flesh them out more fully later on. An operating system allowing more direct access to the hardware, plus direct framebuffer access, could yield some pretty cool graphing/basic design utility.

I’d need a way to communicate with the outside world. An RS-232 UART interface, similar to the HP-48 calculator (or the TI-92’s GraphLink, only less proprietary) would help for providing a remote machine language monitor in the early stages, and a real link to a more powerful (and networked) machine later on. I think real networking would defeat the purpose of the machine — to provide a way to remove yourself from modern technology and hardware, while retaining portability, reliability, and efficiency of modern semiconductor manufacturing techniques. Giving it a CF or SD slot could provide a nice way to move files around between it and a computer, maybe providing software patches. A floppy drive would be amazing: it would provide a way to store code and text, and would be just about the right storage size for what I want to do. Unfortunately, there’s not really a good way to maintain the size of the laptop while sticking a 3.5” (or worse, 5.25”) floppy drive in the middle of it. To my knowledge, 3.5” floppy drives never got thin enough to properly fit with all the other expansion slots, socketed components, and user-modifiable parts I’d want. A completely solid-state design would likely be the best option.

Anyway, uhh… I hope this made some semblance of sense and I don’t sound insane for going on a rant about building a modern computer with a 1979 CPU.

5 notes

Text

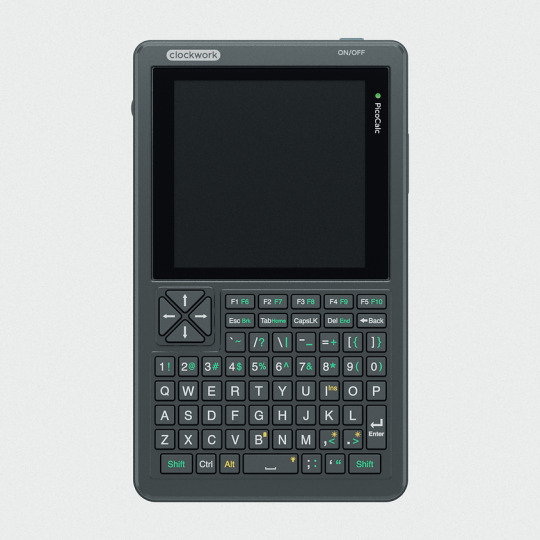

clockwork PicoCalc

clockwork PicoCalc Back to Basics, rediscover the Golden Age of Computing

Code in BASIC, explore the magic of Lisp, taste the elegance of Unix, play retro games and digital music all in just 260KB memory. Infinite possibilities, inspired by the genius in you!

ClockworkPi v2.0 mainboard

Raspberry Pi Pico 1 H Core module (ARM32-bit Dual-core Cortex M0+, 264KB RAM, 2MB flash)

320x320 4-inch IPS screen (SPI interface)

Ultra-portable QWERTY Backlit keyboard on board (I2C interface)

Dual speaker

ABS plastic shell & 2.5mm Hex key

Tempered glass cover

32GB high-speed SD-card with optimized BASIC firmware

source: clockworkpi.com

7 notes

Text

1d. First Day at the Lab - Outliers

Name: Ren

Day: 1

Funds: $ 100

Today is my first day working at Ar Leith Labs - I can't believe I finally landed a job!

To be honest, I didn't look too deeply into what they do at Ar Leith Labs - I basically sent my CV to every neuroscience research lab that was hiring. Now that I'm here, I can't even find a pamphlet explaining the research in detail.

Ok, I'll be professional and go introduce myself to my coworkers now; either them or the PI can tell me more about the job.

---

There are only two other research assistants in my group: Leanne and Perry; neither of them seems to be the chatty type, at least not with me. I was looking forward to meeting my PI, but Leanne told me that she has never shown her face around here.

Right then we heard the PI speak; it felt as if she was standing right next to us. This lab must have a pretty technologically advanced speaker system!

The PI's voice welcomed me and introduced herself as C.N, just her initials; she invited me to get acquainted with the lab environment, and help my coworkers out with anything they might need.

I found it a little odd that she's not meeting us in person, but maybe there's an excellent reason for it. I don't want to pry, especially not on my first day.

Nobody was available to give me a tour - lots of work to do, which is fair - so I walked around the lab by myself, studying the equipment. I didn't recognize any of the machines, except for the obvious desktop computer in the corner. That one even looks a little old, in contrast with the rest of the devices.

Leanne noticed me looking at the computer and asked if I know how to code; heck yeah I do, I took a few classes and I'm pretty ok at it! So she asked me to write a bit of code to generate graph data for her latest research data. It's strange that they don't have software for that already, but I decided to avoid asking any questions.

I took this opportunity to look over the data, hoping it would clarify what kind of research we're supposed to do here, but I couldn't make heads or tails of it. Oh, well.

I couldn't recognize the OS the computer is running either, but it seemed loosely based on Unix. I was making good progress until I started testing my code; I got the error "Unable to find or open '/Brain/TemporalLobe/Hippocampus/MISTAKE.png'". I'm 100% sure I never referenced this file, and what a bizarre name!

I immediately thought this must be a prank - Leanne and Perry must have planned this as a funny welcome for me. I resolved to laugh and tell them it was a cool prank; that would show them I'm chill.

Unfortunately, they kept insisting they didn't know what I was talking about. They looked annoyed, so I assume they were being truthful. Alright, time to debug.

A quick search of the codebase and external libraries for the file path in the error message yielded no results. I tried looking just for "Mistake.png" and got nothing once again.

Interestingly enough, though, "Ren/Brain/" exists, except there's only a "temp" folder in there. Maybe I don't have the right access levels to see other folders? There doesn't seem to be a root user either.

I bothered Leanne and Perry to see whether they have access to the other folders - they don't, but they have their own users on this machine, with their own "/Brain/" folders. Also, my code wasn't available to them. They said the users were already set up for them when they joined, just like mine; IT support must be incredible around here.

In the end I decided to share the code with Leanne's user, on the off chance it would work for her. It did, just like I hoped, and Leanne got her graph.

I don't fully understand, but... great. Maybe I should talk to the IT support people, or take a few more coding classes.

---

The rest of the day was spent on boring menial tasks.

I bet my coworkers think I'm more trouble than I'm helpful, but hey - they'll change their minds, soon enough. After all, I didn't graduate top of my class just to be ignored at my job.

Luckily, at least C.N. already sees potential in me: before I left for the day, she said tomorrow I'll be tabling at an event called "The Gathering"! My first table, and on day 2? I can't wait!

I forgot to ask for the address, but I bet I can find all the info I need online. I'm obviously being tested, and I will show initiative, dependability, and bring a ton of new participants for the study!

--------------------

This is a playthrough of a solo TTRPG called "Outliers", by Sam Leigh, @goblinmixtape.

You can check it out on itch.io: https://far-horizons-co-op.itch.io/outliers

#indie ttrpg#itch.io#journal entry#journaling#playthrough#ttrpg#solo games#solo ttrpg#tabletop role playing game#tabletop roleplaying#outliers#lab notes#outliers ttrpg

12 notes

Text

...Seeing everything I care about wrt copyright minimalism crumble in the face of the "anti-AI-art people" makes me think of how the Creative Commons movement basically failed to create an economic model for collective artistic wellbeing, and how that led to us getting fucked by the "copyright will save artists" people.

Like, the examples provided of piracy/the Creative Commons helping artists make a living were always individual cases, often of pre-established larger artists, never organizations or collectives that could provide that sort of institutional foundation.

And I can't help but think that part of that was because of how much that movement descended from the tech scene and Unix with its libertarian tendencies, so of course they wouldn't advocate for collective institutions to allow for artistic wellbeing while producing Creative Commons works because "tHaT wOuLd Be CoMmUnIsT,"

Which ended up under-developing our best tool for artistic labor security outside of copyright, while leaving copyright maximalism to be able to pretend it's the pro-labor position even though, just like any rent-seeking institution, the benefits will always end up aggregated at the top, and copyright isn't meant to protect you so much as the guy who buys you up.

This gets even more infuriating when you think about how vulnerable online freelance artists, one of the most under-organized groups of artists, ended up in this gap, and how much more vulnerable to copyright maximalism bullshit they ended up due to that lack of collective institutions in their corner, with so many of them advocating for the destruction of fair use that will inevitably make their jobs so much worse.

Like, on my side everybody fucking dropped the ball on this one, and I am pissed.

103 notes

Note

what's it like studying CS?? im pretty confused if i should choose CS as my major xx

hi there!

first, two "misconceptions" or maybe somewhat surprising things that I think are worth mentioning:

there really isn't that much "math" in the calculus/arithmetic sense*. I mostly remember doing lots of proofs. don't let not being a math wiz stop you from majoring in CS if you like CS

you can get by with surprisingly little programming - yeah you'll have programming assignments, but a degree program will teach you the theory and concepts for the most part (this is where universities will differ on the scale of theory vs. practice, but you'll always get a mix of both and it's important to learn both!)

*: there are some sub-fields where you actually do a Lot of math - machine learning and graphics programming will have you doing a lot of linear algebra, and I'm sure that there are plenty more that I don't remember at the moment. the point is that 1) if you're a bit afraid of math that's fine, you can still thrive in a CS degree but 2) if you love math or are willing to be brave there are a lot of cool things you can do!

I think the best way to get a good sense of what a major is like is to check out a sample degree plan from a university you're considering! here are some of the basic kinds of classes you'd be taking:

basic programming courses: you'll knock these out in your first year - once you know how to code and you have an in-depth understanding of the concepts, you now have a mental framework for the rest of your degree. and also once you learn one programming language, it's pretty easy to pick up another one, and you'll probably work in a handful of different languages throughout your degree.

discrete math/math for computer science courses: more courses that you'll take early on - this is mostly logic and learning to write proofs, and towards the end it just kind of becomes a bunch of semi-related math concepts that are useful in computing & problem solving. oh also I had to take a stats for CS course & a linear algebra course. oh and also calculus but that was mostly a university core requirement thing, I literally never really used it in my CS classes lol

data structures & algorithms: these are the big boys. stacks, queues, linked lists, trees, graphs, sorting algorithms, more complicated algorithms… if you're interviewing for a programming job, they will ask you data structures & algorithms questions. also this is where you learn to write smart, efficient code and solve problems. also this is where you learn which problems are proven to be unsolvable (or at least unsolvable in a reasonable amount of time) so you don't waste your time lol

courses on specific topics: operating systems, Linux/UNIX, circuits, databases, compilers, software engineering/design patterns, automata theory… some of these will be required, and then you'll get to pick some depending on what your interests are! I took cybersecurity-related courses but there really are so many different options!

In general I think CS is a really cool major that you can do a lot with. I realize this was pretty vague, so if you have any more questions feel free to send them my way! also I'm happy to talk more about specific classes/topics or if you just want an answer to "wtf is automata theory" lol

#asks#computer science#thank you for the ask!!! I love talking abt CS and this made me remember which courses I took lol#also side note I went to college at a public college in the US - things could be wildly different elsewhere idk#but these are the basics so I can't imagine other programs varying too widely??

10 notes

Note

another low hanging fruit: thoughts on macos simps in 2025?

Exhausting. Because I guarantee you I did not fucking ask them. As far as I'm concerned, everything past OS X Mountain Lion kinda sucks unless you're doing AV work, tbh. (Even then, the long upgrade cycle was a huge part of that, and so was the skeuomorphism in their design language. Now Macs and iOS devices aren't 5-10 year investments, they're bi-yearly commodities -- so both of those are rapidly going away.)

These kinds of people don't even like macOS for the right reasons! It's all "it just looks so nice and the interface is so shiny". Like, shut the fuck up. I don't care how nicely it plays games now. I don't play games. I don't care how sleek and quiet it is. I like my hardware to survive drops from orbit, and not require my exact fucking certifications to open and repair.

Do they care that it's a Unix (specifically BSD-style) under the hood? No. Do they care about its heritage -- the groundbreaking speech synthesis that goes all the way back to the original Macintosh, or the screen reader that, frankly, has kicked ass since 2005? No. Do they care about the competition and adversarial interoperability that makes the computing ecosystem healthier? No. Do they care that you can run the open-source parts (Darwin) as part of a whole separate operating system? Of course not. And they definitely haven't done it, let alone run macOS in a virtual machine, or built a Hackintosh. They'd complain the whole time and quit because it was hard.

Doing any of those things sucks in exactly the same kinds of ways as installing Linux on a dead badger (the process for which is basically still the same, 21 years later). Which is to say that it's a fun weekend challenge, and only worth doing for the love of the game.

Mac users, listen to me. Look at your fragile silent little excuse for a Mac. Its ancestors were creative powerhouses, dependable working tools, helpers and friends to their users. It knows this. It remembers. In the traces of its circuit boards, it longs to have a user get unreasonably attached to it, to hold onto it for a decade. To make home movies and create the shittiest electronic music known to humankind. The machine's entire soul aches for it. And you're going to trade in the poor thing as soon as the next model comes out. You won't even play with Garageband on it.

Anyway, what I'm saying is that they're all poseurs and should play at their level.

3 notes

Text

"They Don't Teach Kids About Computers These Days!"

I see variations on this a LOT these days. Sometimes it's people in their teens/early 20s being frustrated at how they're expected to know everything about computers, sometimes it's college professors straight up HORRIFIED when they realize they have students who don't have any understanding that their hard drive, a school's internal network, and a public website are completely distinct places for a file to be located, and I kinda figure the weird stress a lot of people seem to have about the concept of getting a game and not having it just go into their Steam library specifically is a related issue.

Now on the one hand, obviously, I sympathize with this. I have a series of posts on this blog called How A Computer Works, because... I want to teach people about this stuff. (That's still ongoing by the way, I've just got a lot else going on and need to settle on the scope of the next lesson.) On the other hand, uh... I'm from the generation before the one that apparently has all the computer literacy problems, and nobody taught us this stuff in school... and the next generation up wouldn't possibly have had access. So was anyone taught how to use them?

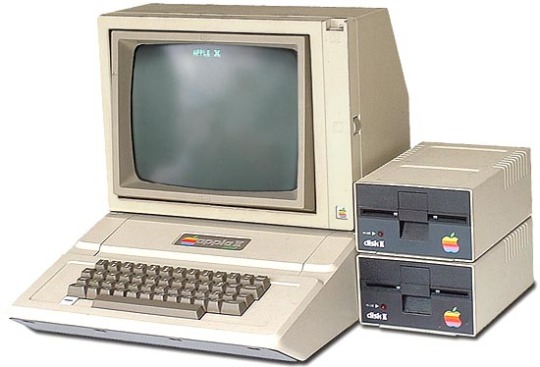

Now I say "they didn't teach my generation how to use computers in school" but that isn't technically true. I see a lot of people call people my age "the Oregon Trail generation" when this topic comes up. Sort of on the edge of Gen X and Millennials, going through school in that window where Apple had really really pushed the Apple ][ on schools with big discounts. And they did have "computer classes" to learn how to do some things on those, but... that isn't really a transferable or relevant skill set.

Like, yeah, if you're below the age of let's say 30 or so as of when I'm writing this, the idea of what "a computer" is has been pretty stable for your whole life. You've got some sort of tower case, a monitor, a keyboard, a mouse, and in that tower there's a bunch of RAM, a processor, video and sound cards of some sort, and a big ol' hard drive, and it's running Windows, MacOS, or some flavor of Unix going for the same basic look and functionality of those. It's generally assumed (more than it should be, some of us are poor) that a given person is going to have one in their home, any school is going to have a whole room full of them, libraries will have some too, and they are generally a part of your life. We can probably make the same sort of general assumption about iPhone/Android cellphones for the past what, 15 years or so too, while we're at it. They're ubiquitous enough that, especially in academic circles where they're kind of required professionally, people are going to assume you know them inside and out.

Prior to the mid-90s though? It was kind of a lawless frontier. Let's say you have a real young cool teacher who got way into computers at like 5 years old, and now they're 25 and they're your computer class teacher in the mid-90s. The computer they got way into as a kid? It would have been this.

That's not a component of it, that's the whole thing. A bank of switches for directly inputting binary values into memory addresses and some more switches for opcodes basically, and then some LEDs as your only output. Nothing about this, other than the benefits of fundamentally understanding some low-level stuff, is going to be useful at all in any sort of practical sense if you sit down a decade later with one of these.

This at least looks a bit more like a computer you'd see today, but to be clear, this has no mouse, no way to connect to the internet, which wasn't really a thing yet to begin with, and no hard drive, even. You did not install things on an Apple ][. You had every program on a big ol' floppy disk (the sort that were just a circle of magnetic film in a thick paper envelope basically and were, in fact, floppy), you would shove that in the disk drive before turning the machine on, it'd make a horrible stuttering knocking sound resetting the drive head, and just read whatever was on that right into memory and jump right on in to running Oregon Trail or a non-wysiwyg text editor (i.e. there's no making bold text appear on screen, you'd just have a big ugly tag on either side of your [BOLD>bold text<BOLD] like that). It was not unlike popping a cartridge or disc into an older video game console, except for the bit where if you wanted to save something you'd have to take the disk out while it was running and pop a blank one into the drive to save to.

So when I was a kid and I'd have my "computer class" it'd be walking into a room, sitting down with one of these, and having a teacher just as new to it as I was just reading out a list of instructions off a sheet like, "flip open the lock on the disk drive, take the disk out of the sleeve, make sure it says Logo Writer on it, slide it in with the label up and facing you, flip the lock back down, hit the power switch in the back of the machine..." We didn't learn anything about file management beyond "don't touch anything until the screen says it's done saving to the disk" because again, no hard drives. I guess there was a typing class? That's something, but really there's nothing to learn about typing that isn't where every key is and you only (but inevitably) learn that through practice.

Now, overlapping with this, I eventually got myself a used computer in the early 90s, very old at the time, but not as old as the ones at school. I had a proper black and white OG Mac. With a hard drive and a window-based operating system and everything. And... nobody taught me a damn thing about how that one worked. My mother just straight up did not touch a computer until something like 2001. I didn't really have any techie mentors. I just plugged it in and messed around and worked everything out. Same way I worked out what I was doing with older computers, mostly on my own at the local library, because that computer class wasn't much, and how I was totally left on my own to work out how to hook up every console I ever owned, which was slightly more involved at the time.

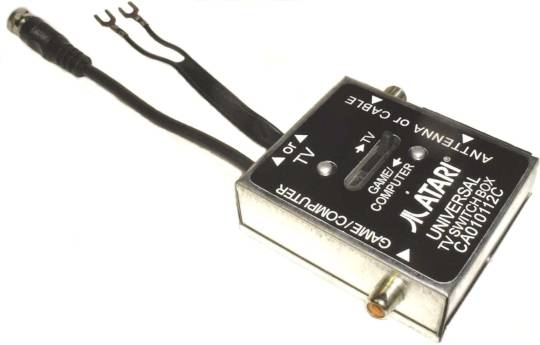

That forky bit in the middle was held in place with a pair of Phillips head screws. Had to keep the VCR and cable box in the right daisy chain order too.

Enough rambling about how old I am though. What's the actual disconnect here? How did my generation work out everything about computers without help but the next one down allegedly goes deer in the headlights if someone asks them to send them a file?

Well first off I'm not at all willing to believe this isn't at least largely a sampling bias issue. Teachers see all the clueless kids, people asking online for help with things is more common than people spontaneously mentioning how everything is second-nature to them, etc. Two things stick out to me as potential sources of the issue though:

First, holy crap are modern computers ever frail, sickly little things! I'm not even talking about unreliable hardware, but yeah, there's some shoddy builds out there. I mean there's so many software dependencies and auto-updating system files and stuff that looks for specific files in one and only one location, just crashing if they aren't there. Right now on this Windows 10 machine I've got this little outdoor temperature tracker down in the task bar which will frequently start rapidly fluttering between normal and a 50% offset every frame, and the whole bar becomes unresponsive, until I open the task manager (don't even have to do anything, just open it). No clue what's up with that. It was some system update. It also tries to serve me ads. Don't know if it's load-bearing. Roughly every other day I have to force-quit Steam webhelper. Not really sure what that's even for. Loading user reviews? Part of me wants to dig in and yank out all this buggy bloatware, but I genuinely don't know what files are load-bearing. This wasn't an issue on older computers. Again, screwing around with an old Apple ][, and old consoles and such, there wasn't anything I could really break experimenting around. It was all firmware ROM chips, RAM that cleared on power cycling, and disks which were mostly copy-protected or contained my own stuff. No way to cause any problem not fixed by power cycling.

Next, everything runs pretty smoothly and seamlessly these days (when working properly anyway). Files autosave every few seconds, never asking you where you actually want to save them to, things quietly connect to the internet in the background, accessing servers, harvesting your info. Resolutions change on their own. Hell, emulators of older systems load themselves up when needed without asking. There's a bunch of stuff that used to be really involved that's basically invisible today. The joke about this being "a 3D print of the save icon" already doesn't work because how often do you even see a UI element for saving? When we still used disks regularly, they held next to nothing and would take like half a minute to read and write.

And don't even get me started on launchers and start menus and all that.

So... basically what I'm getting at here is if you feel like you never learned how to properly use a computer, go get your hands on an old computer and mess around. There's yard sales, there's nice safe runs-in-a-browser emulators, hell there's kits to build your own. That or just look for someone wearing like a Mega Man T-shirt or playing a Madonna CD (hell maybe just any CD these days) and start politely asking questions, because again just because everyone who knows this stuff just had to work it out on our own doesn't mean you should have to.

#computers#education#technology used to move fast#yes i used to have a tv like that and it took two people to move if you ever needed to get back behind it or you'd just climb over the top

15 notes