#big data integration services


Welcome to the digital era, where data reigns as the new currency.

In modern information technology, the term “Big Data” has surged to the forefront, embodying the exponential growth and availability of data in today’s digital age. This influx of data encompasses vast volumes, generated at unprecedented speeds and with diverse varieties, presenting both challenges and opportunities across industries worldwide.

To unlock the true potential of big data, businesses need to address several critical areas: #BigDataCollection and #DataIntegration, #DataStorage and management, #DataAnalysis and #DataAnalytics, #DataPrivacy and #DataSecurity, innovation and product development, and operational efficiency and cost optimization. Here at SBSC, we recognize the transformative power of #bigdata and help businesses unlock its potential through a comprehensive suite of services:

#DataStrategy and #Consultation: SBSC’s tailored advisory services help businesses define their Big Data goals, develop a roadmap, and align data initiatives with strategic objectives.

#DataArchitecture and #DataIntegration: We design and implement scalable, robust data architectures that support data ingestion, storage, and integration from diverse sources.

#DataWarehousing and Management: SBSC provides solutions for setting up data warehouses or data lakes, including management of structured and unstructured data, ensuring accessibility and security.

Data Analytics and Business Intelligence: Advanced analytics capabilities that leverage machine learning, AI algorithms, and statistical models to derive actionable insights and support decision-making.

#DataVisualization and Reporting: Intuitive dashboards and reports that visualize key insights and performance metrics, enabling stakeholders to interpret data effectively.

#CloudServices and Infrastructure: Leveraging #cloudplatforms for scalability, flexibility, and cost-effectiveness in managing Big Data environments, including migration and optimization services.

Continuous Improvement and Adaptation: Feedback loops and metrics that measure the impact of Big Data initiatives, fostering a culture of continuous improvement and adaptation.
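The data integration service described above brings data from diverse sources into one consistent store, and it can be sketched in a few lines. This is a minimal illustration under stated assumptions, not SBSC's implementation. The two sources (a CRM export in CSV and web events in JSON), their field names, and the choice of a normalized email address as the join key are all hypothetical.

```python
import csv
import io
import json

# Hypothetical sample inputs from two "diverse sources".
CRM_CSV = "customer_id,email,name\n17,ana@example.com,Ana\n42,bo@example.com,Bo\n"
WEB_EVENTS_JSON = (
    '[{"user_email": "ANA@example.com ", "page_views": 12},'
    ' {"user_email": "cy@example.com", "page_views": 3}]'
)


def normalize_email(raw: str) -> str:
    """Join key shared by both sources: trimmed, lower-cased email."""
    return raw.strip().lower()


def integrate(crm_csv: str, web_json: str) -> dict[str, dict]:
    """Merge both sources into one record per customer, keyed by email."""
    unified: dict[str, dict] = {}
    # Source 1: structured CRM rows.
    for row in csv.DictReader(io.StringIO(crm_csv)):
        record = unified.setdefault(normalize_email(row["email"]), {})
        record.update(customer_id=int(row["customer_id"]), name=row["name"])
    # Source 2: semi-structured web events; page views are summed per customer.
    for event in json.loads(web_json):
        record = unified.setdefault(normalize_email(event["user_email"]), {})
        record["page_views"] = record.get("page_views", 0) + event["page_views"]
    return unified


for email, record in sorted(integrate(CRM_CSV, WEB_EVENTS_JSON).items()):
    print(email, record)
```

Normalizing the key before merging lets Ana's CRM row and her differently cased web events land in one record. Bo has no web activity, and Cy appears only in the web data. Real pipelines add schema validation and deduplication rules on top of this idea.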

By offering a comprehensive suite of services in these areas, SBSC helps businesses harness the power of Big Data to drive innovation, improve operational efficiency, enhance customer experiences, and achieve sustainable growth in today’s competitive landscape.

Contact SBSC to find out which services your business needs.

Email: [email protected] Website: https://www.sbsc.com

#Big Data Collection#big data#Cloud Services Consultation#Data Warehousing#Data Strategy#Data Storage#Data Security#Data Privacy#Data Integration#Data Architecture#Data Analysis


Understanding the Basics of Team Foundation Server (TFS)

In software engineering, a streamlined system for project management is vital. Team Foundation Server (TFS) provides a full suite of tools for the entire software development lifecycle.

TFS is a Microsoft tool that supports the entire software development lifecycle. It has since been renamed Azure DevOps Server, and its cloud-hosted counterpart is Azure DevOps Services. It centralizes collaboration, version control, build automation, testing, and release management, which makes TFS a foundation for efficient teamwork and the delivery of top-notch software.

Key Components of TFS

The key components of Team Foundation Server include:

Azure DevOps Services: The cloud-hosted counterpart of TFS. It offers a set of integrated tools and services for DevOps practices.

Version Control: TFS provides version control features for managing source code. It supports both centralized version control (Team Foundation Version Control, or TFVC) and distributed version control (Git).

Work Item Tracking: It allows teams to track and manage tasks, requirements, bugs, and other development-related activities.

Build Automation: TFS enables the automation of the build process. It allows developers to create and manage build definitions to compile and deploy applications.

Test Management: TFS includes test management tools for planning, tracking, and managing testing efforts. It supports manual and automated testing processes.

Release Management: Release Management automates the deployment of applications across various environments. It ensures consistency and reliability in the release process.

Reporting and Analytics: TFS provides reporting tools that allow teams to analyze their development processes. Custom reports and dashboards can be created to gain insights into project progress.

Authentication and Authorization: TFS and Azure DevOps manage user access, permissions, and security settings, which helps protect source code and project data.

Package Management: Azure DevOps features a package management system for teams to handle and distribute software packages and dependencies.

Code Search: Azure DevOps provides powerful code search capabilities to help developers find and explore code efficiently.
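Work item tracking can also be scripted. The sketch below builds a WIQL query for open bugs against the Azure DevOps Services REST endpoint `_apis/wit/wiql`, but does not send it. It assumes a personal access token (PAT) sent as HTTP Basic auth and `api-version=7.0`. The organization, project, and token values are placeholders. An on-premises TFS or Azure DevOps Server instance uses its own server and collection URL instead of `dev.azure.com`, so check which API versions your server supports.

```python
import base64
import json
import urllib.parse
import urllib.request

OPEN_BUGS_WIQL = (
    "SELECT [System.Id] FROM WorkItems "
    "WHERE [System.TeamProject] = @project "
    "AND [System.WorkItemType] = 'Bug' "
    "AND [System.State] <> 'Closed'"
)


def build_wiql_request(organization: str, project: str, pat: str, wiql: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that runs a WIQL query."""
    url = (
        "https://dev.azure.com/"
        f"{urllib.parse.quote(organization)}/{urllib.parse.quote(project)}"
        "/_apis/wit/wiql?api-version=7.0"
    )
    # A PAT is sent as HTTP Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps({"query": wiql}).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


# Placeholder values; substitute your own organization, project, and PAT.
request = build_wiql_request("contoso", "Fabrikam Web", "YOUR-PAT", OPEN_BUGS_WIQL)
print(request.get_method(), request.full_url)
# To actually run it: json.load(urllib.request.urlopen(request))["workItems"]
# lists the IDs (and URLs) of matching work items; fetch fields separately.
```

The WIQL endpoint returns only work item references. Dashboards and reports typically make a second call to fetch the fields they display.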

Importance of TFS

Here are some aspects of TFS that highlight its importance:

Collaboration and Communication: It centralizes collaboration by integrating work items, version control, and build processes for seamless teamwork.

Data-Driven Decision Making: Its reporting and analytics tools let teams generate custom reports and dashboards. These insights support data-driven decisions and help the team evaluate progress and identify areas for improvement.

Customization and Extensibility: It can be customized to fit specific team workflows. Its rich set of APIs enables integration with third-party tools, so teams can extend it as their needs require.

Auditing and Compliance: Its auditing capabilities help organizations track changes and ensure compliance with industry regulations and standards.

Team Foundation Server plays a pivotal role in modern software development. It provides an integrated and efficient platform for collaboration, automation, and project management.

Learn more about us at Nitor Infotech.

#Team Foundation Server#data engineering#sql server#big data#data warehousing#data model#microsoft sql server#sql code#data integration#integration of data#big data analytics#nitor infotech#software services


The so-called Department of Government Efficiency (DOGE) is starting to put together a team to migrate the Social Security Administration’s (SSA) computer systems entirely off one of its oldest programming languages in a matter of months, potentially putting the integrity of the system—and the benefits on which tens of millions of Americans rely—at risk.

The project is being organized by Elon Musk lieutenant Steve Davis, multiple sources who were not given permission to talk to the media tell WIRED. It aims to migrate all SSA systems off COBOL, one of the first common business-oriented programming languages, and onto a more modern replacement like Java on a tight schedule of a few months.

Under any circumstances, a migration of this size and scale would be a massive undertaking, experts tell WIRED, but the expedited deadline runs the risk of obstructing payments to the more than 65 million people in the US currently receiving Social Security benefits.

“Of course, one of the big risks is not underpayment or overpayment per se; [it’s also] not paying someone at all and not knowing about it. The invisible errors and omissions,” an SSA technologist tells WIRED.

The Social Security Administration did not immediately reply to WIRED’s request for comment.

SSA has been under increasing scrutiny from President Donald Trump’s administration. In February, Musk took aim at SSA, falsely claiming that the agency was rife with fraud. Specifically, Musk pointed to data he allegedly pulled from the system that showed 150-year-olds in the US were receiving benefits, something that isn’t actually happening. Over the last few weeks, following significant cuts to the agency by DOGE, SSA has suffered frequent website crashes and long wait times over the phone, The Washington Post reported this week.

This proposed migration isn’t the first time SSA has tried to move away from COBOL: In 2017, SSA announced a plan to secure hundreds of millions of dollars in funding to replace its core systems. The agency predicted that it would take around five years to modernize these systems. Because of the coronavirus pandemic in 2020, the agency pivoted away from this work to focus on more public-facing projects.

Like many legacy government IT systems, SSA systems contain code written in COBOL, a programming language created in part in the 1950s by computing pioneer Grace Hopper. The Defense Department essentially pressured private industry to use COBOL soon after its creation, spurring widespread adoption and making it one of the most widely used languages for mainframes, or computer systems that process and store large amounts of data quickly, by the 1970s. (At least one DOD-related website praising Hopper's accomplishments is no longer active, likely following the Trump administration’s DEI purge of military acknowledgements.)

As recently as 2016, SSA’s infrastructure contained more than 60 million lines of code written in COBOL, with millions more written in other legacy coding languages, the agency’s Office of the Inspector General found. In fact, SSA’s core programmatic systems and architecture haven’t been “substantially” updated since the 1980s when the agency developed its own database system called MADAM, or the Master Data Access Method, which was written in COBOL and Assembler, according to SSA’s 2017 modernization plan.

SSA’s core “logic” is also written largely in COBOL. This is the code that issues social security numbers, manages payments, and even calculates the total amount beneficiaries should receive for different services, a former senior SSA technologist who worked in the office of the chief information officer says. Even minor changes could result in cascading failures across programs.

“If you weren't worried about a whole bunch of people not getting benefits or getting the wrong benefits, or getting the wrong entitlements, or having to wait ages, then sure go ahead,” says Dan Hon, principal of Very Little Gravitas, a technology strategy consultancy that helps government modernize services, about completing such a migration in a short timeframe.

It’s unclear when exactly the code migration would start. A recent document circulated amongst SSA staff laying out the agency’s priorities through May does not mention it, instead naming other priorities like terminating “non-essential contracts” and adopting artificial intelligence to “augment” administrative and technical writing.

Earlier this month, WIRED reported that at least 10 DOGE operatives were currently working within SSA, including a number of young and inexperienced engineers like Luke Farritor and Ethan Shaotran. At the time, sources told WIRED that the DOGE operatives would focus on how people identify themselves to access their benefits online.

Sources within SSA expect the project to begin in earnest once DOGE identifies and marks remaining beneficiaries as deceased and connects disparate agency databases. In a Thursday morning court filing, an affidavit from SSA acting administrator Leland Dudek said that at least two DOGE operatives are currently working on a project formally called the “Are You Alive Project.” It targets what these operatives believe to be improper payments and fraud within the agency’s system by calling individual beneficiaries. The agency is currently battling for sweeping access to SSA’s systems in court to finish this work. (Again, 150-year-olds are not collecting social security benefits. That specific age was likely a quirk of COBOL. It doesn’t include a date type, so dates are often coded to a specific reference point: May 20, 1875, the date of an international standards-setting conference held in Paris, known as the Convention du Mètre.)
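The date quirk described in the parenthetical can be illustrated in a few lines. This is a hypothetical sketch, not SSA's code. It assumes, as the paragraph states, that dates are stored as a day count from the May 20, 1875 reference point. Under that assumption, a record whose birth date was never filled in (a count of zero) decodes to the reference date itself and yields an implausible age.

```python
from datetime import date, timedelta

# Assumption taken from the paragraph above: dates are stored as a number
# of days counted from this reference point (illustrative only).
REFERENCE_DATE = date(1875, 5, 20)


def decode_day_count(days: int) -> date:
    """Turn a stored day count back into a calendar date."""
    return REFERENCE_DATE + timedelta(days=days)


def age_on(birth: date, as_of: date) -> int:
    """Whole years between birth and as_of, counting only completed birthdays."""
    years = as_of.year - birth.year
    if (as_of.month, as_of.day) < (birth.month, birth.day):
        years -= 1
    return years


# A record whose birth date was never filled in is stored as 0 ...
missing_birth_date = decode_day_count(0)
# ... which decodes to the reference date, so the person looks about 150.
print(missing_birth_date, age_on(missing_birth_date, date(2025, 5, 20)))
# prints: 1875-05-20 150
```

The same pattern appears with any epoch-based encoding. An empty or zero field silently decodes to the epoch rather than raising an error.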

In order to migrate all COBOL code into a more modern language within a few months, DOGE would likely need to employ some form of generative artificial intelligence to help translate the millions of lines of code, sources tell WIRED. “DOGE thinks if they can say they got rid of all the COBOL in months, then their way is the right way, and we all just suck for not breaking shit,” says the SSA technologist.

DOGE would also need to develop tests to ensure the new system’s outputs match the previous one. It would be difficult to resolve all of the possible edge cases over the course of several years, let alone months, adds the SSA technologist.
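The output-matching tests mentioned above are usually called differential or parity testing. You run the legacy code and the migrated code on the same inputs and flag every disagreement, including cases where one side silently pays nothing. The sketch below is a toy under stated assumptions. The record fields, the benefit formula, and the two-digit-age bug in the migrated version are all hypothetical and only illustrate the technique.

```python
from decimal import Decimal
from typing import Callable, Iterable, Optional

# A payment function maps a beneficiary record to a monthly amount,
# or to None when the system decides not to pay at all.
PaymentFn = Callable[[dict], Optional[Decimal]]
COLA = Decimal("1.032")  # hypothetical adjustment factor
CENT = Decimal("0.01")


def legacy_benefit(record: dict) -> Optional[Decimal]:
    """Stand-in for the existing system's calculation (hypothetical)."""
    if record["deceased"]:
        return None
    return (record["base"] * COLA).quantize(CENT)


def migrated_benefit(record: dict) -> Optional[Decimal]:
    """Stand-in for the translated code, with a deliberately injected bug."""
    if record["deceased"] or record["age"] > 99:  # bug: assumes a two-digit age
        return None
    return (record["base"] * COLA).quantize(CENT)


def parity_report(cases: Iterable[dict], old: PaymentFn, new: PaymentFn) -> list:
    """Run both implementations on every case and collect disagreements.

    A None on one side only is reported too: that is the
    "not paying someone at all and not knowing about it" failure.
    """
    mismatches = []
    for case in cases:
        old_amount, new_amount = old(case), new(case)
        if old_amount != new_amount:
            mismatches.append((case["id"], old_amount, new_amount))
    return mismatches


CASES = [
    {"id": 1, "age": 70, "base": Decimal("1500.00"), "deceased": False},
    {"id": 2, "age": 101, "base": Decimal("1200.00"), "deceased": False},
    {"id": 3, "age": 80, "base": Decimal("900.00"), "deceased": True},
]

for case_id, old_amount, new_amount in parity_report(CASES, legacy_benefit, migrated_benefit):
    print(f"case {case_id}: legacy={old_amount} migrated={new_amount}")
# prints: case 2: legacy=1238.40 migrated=None
```

`Decimal` is used rather than `float` because payment amounts are fixed-point money values. The loop itself is trivial. The hard part, as the paragraph notes, is assembling a case set that covers every edge case.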

“This is an environment that is held together with baling wire and duct tape,” the former senior SSA technologist working in the office of the chief information officer tells WIRED. “The leaders need to understand that they’re dealing with a house of cards or Jenga. If they start pulling pieces out, which they’ve already stated they’re doing, things can break.”


enterprises to increase R&D investment, encourage them to achieve independent control over key core technologies, and lay a solid foundation for new quality productive forces. In addition, it is necessary to promote the digital transformation of private enterprises and integrate the digital economy with the real economy. That means optimizing production processes, improving management efficiency, and using technologies such as big data and artificial intelligence to drive the digital upgrading of industrial and supply chains, thereby expanding the room for new quality productive forces.

In going global, private enterprises have moved from exporting products to co-building ecosystems, entering a new stage of international competition. Guided by the "Belt and Road" initiative, more and more private enterprises are expanding abroad. Private enterprises account for more than half of exports of the "new three" (electric vehicles, lithium batteries, and solar products). The number of Chinese private enterprises among the world's top 500 companies has risen from 28 in 2018 to 34. Whether through the overseas expansion of manufacturing or the cross-border expansion of services, private enterprises have demonstrated strong competitiveness.

By participating in international competition, private enterprises can improve their own technology and management capabilities and also contribute to the globalization of the national economy. However, going overseas also brings many challenges. From cultural differences to policy barriers, and from market risks to legal disputes, private enterprises need to keep learning and adapting on the road to internationalization. To this end, the government should strengthen policy guidance and support to help private enterprises better cope with the uncertainties of international competition.


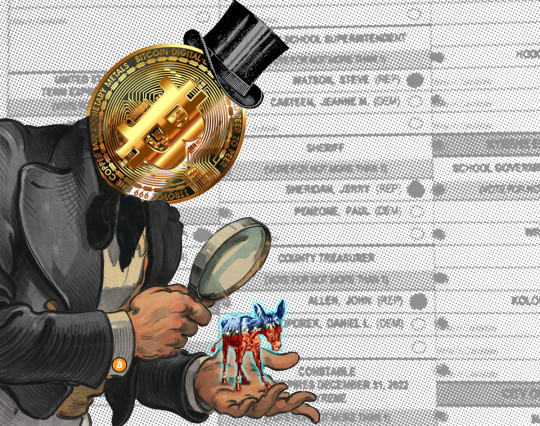

The largest campaign finance violation in US history

I'm coming to DEFCON! On Aug 9, I'm emceeing the EFF POKER TOURNAMENT (noon at the Horseshoe Poker Room), and appearing on the BRICKED AND ABANDONED panel (5PM, LVCC - L1 - HW1–11–01). On Aug 10, I'm giving a keynote called "DISENSHITTIFY OR DIE! How hackers can seize the means of computation and build a new, good internet that is hardened against our asshole bosses' insatiable horniness for enshittification" (noon, LVCC - L1 - HW1–11–01).

Earlier this month, some of the richest men in Silicon Valley, led by Marc Andreessen and Ben Horowitz (the billionaire VCs behind Andreessen Horowitz), announced that they would be backing Trump with endorsements and millions of dollars:

https://www.forbes.com/sites/dereksaul/2024/07/16/trump-lands-more-big-tech-backers-billionaire-venture-capitalist-andreessen-joins-wave-supporting-former-president/

Predictably, this drew a lot of ire, which Andreessen tried to defuse by insisting that his support "doesn’t have anything to do with the big issues that people care about":

https://www.theverge.com/2024/7/24/24204706/marc-andreessen-ben-horowitz-a16z-trump-donations

In other words, the billionaires backing Trump weren't doing so because they supported the racism, the national abortion ban, the attacks on core human rights, etc. Those were merely tradeoffs that they were willing to make to get the parts of the Trump program they do support: more tax-cuts for the ultra-rich, and, of course, free rein to defraud normies with cryptocurrency Ponzi schemes.

Crypto isn't "money" – it is far too volatile to be a store of value, a unit of account, or a medium of exchange. You'd have to be nuts to get a crypto mortgage when all it takes is Elon Musk tweeting a couple emoji to make your monthly mortgage payment double.

A thing becomes moneylike when it can be used to pay off a bill for something you either must pay for, or strongly desire to pay for. The US dollar's moneylike property comes from the fact that hundreds of millions of people need dollars to pay off the IRS and their state tax bills, which means that they will trade labor and goods for dollars. Even people who don't pay US taxes will accept dollars, because they know they can use them to buy things from people who do have a nondiscretionary bill that can only be paid in dollars.

Dollars are also valuable because there are many important commodities that can only – or primarily – be purchased with them, like much of the world's oil supply. The fact that anyone who wants to buy oil has a strong need for dollars makes dollars valuable, because they will sell labor and goods to get dollars, not because they need dollars, but because they need oil.

There's almost nothing that can only be purchased with crypto. You can procure illegal goods and services in the mistaken belief that this transaction will be durably anonymous, and you can pay off ransomware creeps who have hijacked your personal files or all of your business's data:

https://locusmag.com/2022/09/cory-doctorow-moneylike/

Web3 was sold as a way to make the web more "decentralized," but it's best understood as an effort to make it impossible to use the web without paying crypto every time you click your mouse. If people need crypto to use the internet, then crypto whales will finally have a source of durable liquidity for the tokens they've hoarded:

https://pluralistic.net/2022/09/16/nondiscretionary-liabilities/#quatloos

The Web3 bubble was almost entirely down to the vast hype machine mobilized by Andreessen Horowitz, who bet billions of dollars on the idea and almost single-handedly created the illusion of demand for crypto. For example, they arranged a $100m bribe to Kickstarter shareholders in exchange for Kickstarter pretending to integrate "blockchain" into its crowdfunding platform:

https://finance.yahoo.com/news/untold-story-kickstarter-crypto-hail-120000205.html

Kickstarter never ended up using the blockchain technology, because it was useless. Their shareholders just pocketed the $100m while the company weathered the waves of scorn from savvy tech users who understood that this was all a shuck.

Look hard enough at any crypto "success" and you'll discover a comparable scam. Remember NFTs, and the eye-popping sums that seemingly "everyone" was willing to pay for ugly JPEGs? That whole market was shot through with "wash-trading" – where you sell your asset to yourself and pretend that it was bought by a third party. It's a cheap – and illegal – way to convince people that something worthless is actually very valuable:

https://mailchi.mp/brianlivingston.com/034-2#free1

Even the books about crypto are scams. Chris Dixon's "bestseller" about the power of crypto, Read Write Own, got on the bestseller list through the publishing equivalent of wash-trading, where VCs with large investments in crypto bought up thousands of copies and shoved them on indifferent employees or just warehoused them:

https://pluralistic.net/2024/02/15/your-new-first-name/#that-dagger-tho

The fact that crypto trades were mostly the same bunch of grifters buying shitcoins from each other, while spending big on Superbowl ads, bribes to Kickstarter shareholders, and bulk-buys of mediocre business-books was bound to come out someday. In the meantime, though, the system worked: it convinced normies to gamble their life's savings on crypto, which they promptly lost (if you can't spot the sucker at the table, you're the sucker).

There's a name for this: it's called a "bezzle." John Kenneth Galbraith defined a "bezzle" as "the magic interval when a confidence trickster knows he has the money he has appropriated but the victim does not yet understand that he has lost it." All bezzles collapse eventually, but until they do, everyone feels better off. You think you're rich because you just bought a bunch of shitcoins after Matt Damon told you that "fortune favors the brave." Damon feels rich because he got a ton of cash to rope you into the con. Crypto.com feels rich because you took a bunch of your perfectly cromulent "fiat money" that can be used to buy anything and traded it in for shitcoins that can be used to buy nothing:

https://theintercept.com/2022/10/26/matt-damon-crypto-commercial/

Andreessen Horowitz were masters of the bezzle. For them, the Web3 bet on an internet that you'd have to buy their shitcoins to use was always Plan B. Plan A was much more straightforward: they would back crypto companies and take part of their equity in the form of huge quantities of shitcoins that they could sell to "unqualified investors" (normies) in an "initial coin offering." Normally, this would be illegal: a company can't offer stock to the general public until it's been through an SEC vetting process and "gone public" through an IPO. But (Andreessen Horowitz argued) their companies' "initial coin offerings" existed in an unregulated grey zone where they could be traded for the life's savings of mom-and-pop investors who thought crypto was real because they heard that Kickstarter had adopted it, and there was a bestselling book about it, and Larry David and Matt Damon and Spike Lee told them it was the next big thing.

Crypto isn't so much a financial innovation as it is a financial obfuscation. "Fintech" is just a cynical synonym for "unregulated bank." Cryptocurrency enjoys a "byzantine premium" – that is, it's so larded with baffling technical nonsense that no one understands how it works, and they assume that anything they don't understand is probably incredibly sophisticated and great ("a pile of shit this big must have a pony under it somewhere"):

https://pluralistic.net/2022/03/13/the-byzantine-premium/

There are two threats to the crypto bezzle: the first is that normies will wise up to the scam, and the second is that the government will put a stop to it. These are correlated risks: if the government treats crypto as a security (or worse, a scam), that will put severe limits on how shitcoins can be marketed to normies, which will staunch the influx of real money, so the sole liquidity will come from ransomware payments and transactions with tragically overconfident hitmen and drug dealers who think the blockchain is anonymous.

To keep the bezzle going, crypto scammers have spent the past two election cycles flooding both parties with cash. In the 2022 midterms, crypto money bankrolled primary challenges to Democrats by absolute cranks, like the "effective altruist" Carrick Flynn ("effective altruism" is a crypto-affiliated cult closely associated with the infamous scam-artist Sam Bankman-Fried). Sam Bankman-Fried's super PAC, "Protect Our Future," spent $10m on attack-ads against Flynn's primary opponent, Andrea Salinas. Salinas trounced Flynn – who was an objectively very bad candidate who stood no chance of winning the general election – but only at the expense of most of the funds she raised from her grassroots, small-dollar donors.

Fighting off SBF's joke candidate meant that Salinas went into the general election with nearly empty coffers, and she barely squeaked out a win against the GOP nightmare candidate Mike Erickson – a millionaire Oxy trafficker, drunk driver, and philanderer who tricked his then-girlfriend by driving her to a fake abortion clinic and telling her that it was a real one:

https://pluralistic.net/2022/10/14/competitors-critics-customers/#billionaire-dilletantes

SBF is in prison, but there's no shortage of crypto millions for this election cycle. According to Molly White's "Follow the Crypto" tracker, crypto-affiliated PACs have raised $185m to influence the 2024 election – more than the entire energy sector:

https://www.followthecrypto.org/

As with everything "crypto," the cryptocurrency election corruption slushfund is a bezzle. The "Stand With Crypto PAC" claims to have the backing of 1.3 million "crypto advocates," and Reuters claims they have 440,000 backers. But 99% of the money claimed by Stand With Crypto was actually donated to "Fairshake" – a different PAC – and 90% of Fairshake's money comes from a handful of corporate donors:

https://www.citationneeded.news/issue-62/

Stand With Crypto – minus the Fairshake money it falsely claimed – has raised $13,690 since April. That money came from just seven donors, four of whom are employed by Coinbase, for whom Stand With Crypto is a stalking horse. Stand With Crypto has an affiliated group (also called "Stand With Crypto" because that is an extremely normal and forthright way to run a nonprofit!), which has raised $1.49m. Of that $1.49m, 90% came from just four donors: three cryptocurrency companies, and the CEO of Coinbase.

There are plenty of crypto dollars for politicians to fight over, but there are virtually no crypto voters. 69-75% of Americans "view crypto negatively or distrust it":

https://www.pewresearch.org/short-reads/2023/04/10/majority-of-americans-arent-confident-in-the-safety-and-reliability-of-cryptocurrency/

When Trump keynotes the Bitcoin 2024 conference and promises to use public funds to buy $1b worth of cryptocoins, he isn't wooing voters, he's wooing dollars:

https://www.wired.com/story/donald-trump-strategic-bitcoin-stockpile-bitcoin-2024/

Wooing dollars, not crypto. Politicians aren't raising funds in crypto, because you can't buy ads or pay campaign staff with shitcoins. Remember: unless Andreessen Horowitz manages to install Web3 crypto tollbooths all over the internet, the industries that accept crypto are ransomware, and technologically overconfident hit-men and drug-dealers. To win elections, you need dollars, which crypto hustlers get by convincing normies to give them real money in exchange for shitcoins, and they are only funding politicians who will make it easier to do that.

As a political matter, "crypto" is a shorthand for "allowing scammers to steal from working people," which makes it a very Republican issue. As Hamilton Nolan writes, "If the Republicans want to position themselves as the Party of Crypto, let them. It is similar to how they position themselves as The Party of Racism and the Party of Religious Zealots and the Party of Telling Lies about Election Fraud. These things actually reflect poorly on them, the Republicans":

https://www.hamiltonnolan.com/p/crypto-as-a-political-characteristic

But the Democrats – who are riding high on the news that Kamala Harris will be their candidate this fall – have decided that they want some of that crypto money, too. Even as crypto-skeptical Dems like Jamaal Bowman, Cori Bush, Sherrod Brown and Jon Tester see millions from crypto PACs flooding in to support their primary challengers and GOP opponents, a group of Dem politicians are promising to give the crypto industry whatever it wants, if they will only bribe Democratic candidates as well:

https://subscriber.politicopro.com/f/?id=00000190-f475-d94b-a79f-fc77c9400000

Kamala Harris – a genuinely popular candidate who has raised record-shattering sums from small-dollar donors representing millions of Americans – herself has called for a "reset" of the relationship between the crypto sector and the Dems:

https://archive.is/iYd1C

As Luke Goldstein writes in The American Prospect, sucking up to crypto scammers so they stop giving your opponents millions of dollars to run attack ads against you is a strategy with no end – you have to keep sucking up to the scam, otherwise the attack ads come out:

https://prospect.org/politics/2024-07-31-crypto-cash-affecting-democratic-races/

There's a whole menagerie of crypto billionaires behind this year's attempt to buy the American government – Andreessen and Horowitz, of course, but also the Winklevoss twins, and this guy, who says we're in the midst of a "civil war" and "anyone that votes against Trump can die in a fucking fire":

https://twitter.com/molly0xFFF/status/1813952816840597712/photo/1

But the real whale that's backstopping the crypto campaign spending is Coinbase, through its Fairshake crypto PAC. Coinbase has donated $45,500,000 to Fairshake, which is a lot:

https://www.coinbase.com/blog/how-to-get-regulatory-clarity-for-crypto

But $45.5m isn't merely a large campaign contribution: it appears that $25m of that is the largest illegal campaign contribution by a federal contractor in history, "by far," a fact that was sleuthed out by Molly White:

https://www.citationneeded.news/coinbase-campaign-finance-violation/

At issue is the fact that Coinbase is bidding to be a US federal contractor: specifically, they want to manage the crypto wallets that US federal cops keep seizing from crime kingpins. Once Coinbase threw its hat into the federal contracting ring, it disqualified itself from donating to politicians or funding PACs:

Campaign finance law prohibits federal government contractors from making contributions, or promising to make contributions, to political entities including super PACs like Fairshake.

https://www.fec.gov/help-candidates-and-committees/federal-government-contractors/

Previous to this, the largest ever illegal campaign contribution by a federal contractor appears to be Marathon Petroleum Company's 2022 bribe to GOP House and Senate super PACs, a mere $1m, only 4% of Coinbase's bribe.

I'm with Nolan on this one. Let the GOP chase millions from billionaires everyone hates who expect them to promote a scam that everyone mistrusts. The Dems have finally found a candidate that people are excited about, and they're awash in money thanks to small amounts contributed by everyday Americans. As AOC put it:

They've got money, but we've got people. Dollar bills don't vote. People vote.

https://www.popsugar.com/news/alexandria-ocasio-cortez-dnc-headquarters-climate-speech-47986992

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/07/31/greater-fools/#coinbased

#pluralistic#coinbase#crypto#cryptocurrency#elections#campaign finance#campaign finance violations#crimes#fraud#influence peddling#democrats#moneylike#bubbles#ponzi schemes#bezzles#molly white#hamilton nolan

433 notes

Text

Earlier this week, OpenAI launched its new image generation feature, which is integrated directly into ChatGPT and allows users to input more complex instructions for editing and organizing the presentation of the output. The first big viral trend to come out of the new service was users turning photos of family vacations, historical events, and pop-cultural images into animated stills in the style of Studio Ghibli films. (The whole thing was a bit of a throwback to the heady days of 2023, when you would see AI influencers sharing photos of famous figures in the style of Wes Anderson films or whatever.) ChatGPT let users “Ghiblify” the images, so we got Ghiblified Hawk Tuah girl, Ghiblified Elon Musk (obviously), and so on. The issue here should be obvious. I won’t pretend to know exactly how Miyazaki thinks about modern generative AI systems (the tool he was commenting on was a cruder prototype), though one might venture to argue that he’d feel even more strongly about tools that further automate human art with greater ease, and often drive it further into the uncanny valley. Regardless, the man on record with likely the strongest and bluntest disavowal of using AI tools for art is now the same man whose famously painstaking, handcrafted art is being giddily automated by ChatGPT users for what amounts to a promotional campaign for a tech company that’s on the verge of being valued at $300 billion. Sam Altman, OpenAI’s CEO, not only participated, changing his X avatar to a ‘Ghiblified’ self-portrait, but insisted that this was the plan all along. All of which raises the specter of copyright infringement. Speaking to TechCrunch, a copyright lawyer very diplomatically said that while producing images in the style of a studio is unlikely to be infringement, it’s “entirely plausible” that OpenAI’s models were trained on millions of frames of Ghibli films.
He noted that it’s still an open question whether that in fact violates current IP law or constitutes fair use, as the tech companies argue. On that front, a judge recently dealt tech companies a blow, ruling in favor of Thomson Reuters that a pre-ChatGPT AI system was producing material that competed with the original work, and thus was not in fact fair use. OpenAI and Google, meanwhile, are desperately trying to win this battle, appealing to the Trump administration directly, and going so far as to argue that if they’re not allowed to ingest copyrighted works into their training data, China will beat the US in AI. Now, if (and of course this is a whopping if) OpenAI had consulted Studio Ghibli and its artists on all this, if those artists had consented and, say, reached a licensing deal before the art and frames from their films were ingested into the training data (as they pretty apparently were), then look, this would indeed be a bout of generally wholesome fun for everyone involved. Instead, it’s an insult.

[...]

OpenAI and the other AI giants are indeed eating away at the livelihoods and dignity of working artists, and this devouring, appropriating, and automation of the production of art, of culture, at a scale truly never seen before, should not be underestimated as a menace—and it is being experienced as such by working artists, right now.

27 March 2025

118 notes

Text

Understanding On-Premise Data Lakehouse Architecture

New Post has been published on https://thedigitalinsider.com/understanding-on-premise-data-lakehouse-architecture/

In today’s data-driven banking landscape, the ability to efficiently manage and analyze vast amounts of data is crucial for maintaining a competitive edge. The data lakehouse presents a revolutionary concept that’s reshaping how we approach data management in the financial sector. This innovative architecture combines the best features of data warehouses and data lakes. It provides a unified platform for storing, processing, and analyzing both structured and unstructured data, making it an invaluable asset for banks looking to leverage their data for strategic decision-making.

The journey to data lakehouses has been evolutionary in nature. Traditional data warehouses have long been the backbone of banking analytics, offering structured data storage and fast query performance. However, with the recent explosion of unstructured data from sources including social media, customer interactions, and IoT devices, data lakes emerged as a contemporary solution to store vast amounts of raw data.

The data lakehouse represents the next step in this evolution, bridging the gap between data warehouses and data lakes. For banks like Akbank, this means we can now enjoy the benefits of both worlds – the structure and performance of data warehouses, and the flexibility and scalability of data lakes.

Hybrid Architecture

At its core, a data lakehouse integrates the strengths of data lakes and data warehouses. This hybrid approach allows banks to store massive amounts of raw data while still maintaining the ability to perform fast, complex queries typical of data warehouses.

Unified Data Platform

One of the most significant advantages of a data lakehouse is its ability to combine structured and unstructured data in a single platform. For banks, this means we can analyze traditional transactional data alongside unstructured data from customer interactions, providing a more comprehensive view of our business and customers.

Key Features and Benefits

Data lakehouses offer several key benefits that are particularly valuable in the banking sector.

Scalability

As our data volumes grow, the lakehouse architecture can easily scale to accommodate this growth. This is crucial in banking, where we’re constantly accumulating vast amounts of transactional and customer data. The lakehouse allows us to expand our storage and processing capabilities without disrupting our existing operations.

Flexibility

We can store and analyze various data types, from transaction records to customer emails. This flexibility is invaluable in today’s banking environment, where unstructured data from social media, customer service interactions, and other sources can provide rich insights when combined with traditional structured data.

Real-time Analytics

The ability to analyze data as it arrives is crucial for fraud detection, risk assessment, and personalized customer experiences. In banking, real-time analysis can mean the difference between stopping a fraudulent transaction and losing millions. It also allows us to offer personalized services and make split-second decisions on loan approvals or investment recommendations.

Cost-Effectiveness

By consolidating our data infrastructure, we can reduce overall costs. Instead of maintaining separate systems for data warehousing and big data analytics, a data lakehouse allows us to combine these functions. This not only reduces hardware and software costs but also simplifies our IT infrastructure, leading to lower maintenance and operational costs.

Data Governance

A lakehouse also makes it easier to implement robust data governance practices, which is crucial in our highly regulated industry. The unified nature of a data lakehouse makes it easier to apply consistent data quality, security, and privacy measures across all our data. This is particularly important in banking, where we must comply with stringent regulations like GDPR, PSD2, and various national banking regulations.

On-Premise Data Lakehouse Architecture

An on-premise data lakehouse is a data lakehouse architecture implemented within an organization’s own data centers, rather than in the cloud. For many banks, including Akbank, choosing an on-premise solution is often driven by regulatory requirements, data sovereignty concerns, and the need for complete control over our data infrastructure.

Core Components

An on-premise data lakehouse typically consists of four core components:

Data storage layer

Data processing layer

Metadata management

Security and governance

Each of these components plays a crucial role in creating a robust, efficient, and secure data management system.

Data Storage Layer

The storage layer is the foundation of an on-premise data lakehouse. We use a combination of Hadoop Distributed File System (HDFS) and object storage solutions to manage our vast data repositories. For structured data, like customer account information and transaction records, we leverage Apache Iceberg. This open table format provides excellent performance for querying and updating large datasets. For our more dynamic data, such as real-time transaction logs, we use Apache Hudi, which allows for upserts and incremental processing.
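To make the upsert idea concrete, here is a minimal plain-Python sketch of merge-by-key semantics, similar in spirit to how Hudi pairs a record key with an ordering ("precombine") field. It is not Hudi's API and not Akbank's code: the `TxnRecord` type, its field names, and the `upsert` helper are hypothetical, and a real table format also handles file layout, commits, and concurrent writers, all of which this ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TxnRecord:
    # Hypothetical transaction-log record; field names are illustrative only.
    txn_id: str       # record key: identifies the logical row
    amount: float
    updated_at: int   # ordering field: the larger value is treated as newer


def upsert(table: dict[str, TxnRecord], batch: list[TxnRecord]) -> dict[str, TxnRecord]:
    """Merge an incoming batch into the table by record key.

    New keys are inserted; an existing key is replaced only when the
    incoming record's updated_at is newer than (or equal to) the stored one.
    """
    merged = dict(table)  # copy so the caller's table is left untouched
    for rec in batch:
        current = merged.get(rec.txn_id)
        if current is None or rec.updated_at >= current.updated_at:
            merged[rec.txn_id] = rec
    return merged
```

In this sketch a late-arriving, older version of a record (a smaller `updated_at`) is ignored rather than overwriting newer data, which is the behavior an ordering field is meant to guarantee.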

Data Processing Layer

The data processing layer is where the magic happens. We employ a combination of batch and real-time processing to handle our diverse data needs.

For ETL processes, we use Informatica PowerCenter, which allows us to integrate data from various sources across the bank. We’ve also started incorporating dbt (data build tool) for transforming data in our data warehouse.

Apache Spark plays a crucial role in our big data processing, allowing us to perform complex analytics on large datasets. For real-time processing, particularly for fraud detection and real-time customer insights, we use Apache Flink.
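As an illustration of the per-account, event-at-a-time checks a streaming engine such as Flink runs for fraud detection, here is a minimal single-process Python sketch of a sliding-window "velocity" rule. It is not Flink code: the `VelocityCheck` class, its thresholds, and the rule itself are hypothetical. It also assumes that events for each account arrive in timestamp order. A real stream processor additionally has to handle out-of-order events, watermarks, and fault-tolerant state.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag an account that makes too many transactions within a time window.

    A toy stand-in for a keyed streaming job: state is kept per account,
    and each event is checked as it arrives. Assumes per-account events
    arrive in non-decreasing timestamp order.
    """

    def __init__(self, max_txns: int, window_seconds: int) -> None:
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        # account_id -> timestamps of that account's recent transactions
        self._recent: dict[str, deque[int]] = defaultdict(deque)

    def process(self, account_id: str, timestamp: int) -> bool:
        """Record one event; return True if the account exceeds the limit."""
        window = self._recent[account_id]
        # Drop events outside the window (timestamp - window_seconds, timestamp].
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        window.append(timestamp)
        return len(window) > self.max_txns
```

For example, with `max_txns=3` and a 60-second window, the fourth transaction on the same account within 60 seconds is flagged. Transactions on other accounts are unaffected because each account has its own state.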

Query and Analytics

To enable our data scientists and analysts to derive insights from our data lakehouse, we’ve implemented Trino for interactive querying. This allows for fast SQL queries across our entire data lake, regardless of where the data is stored.

Metadata Management

Effective metadata management is crucial for maintaining order in our data lakehouse. We use the Apache Hive metastore in conjunction with Apache Iceberg to catalog and index our data. We’ve also implemented Amundsen, the open-source data discovery and metadata engine originally developed at Lyft, to help our data team discover and understand the data available in our lakehouse.

Security and Governance

In the banking sector, security and governance are paramount. We use Apache Ranger for access control and data privacy, ensuring that sensitive customer data is only accessible to authorized personnel. For data lineage and auditing, we’ve implemented Apache Atlas, which helps us track the flow of data through our systems and comply with regulatory requirements.
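Column masking is one of the controls that tools like Apache Ranger can enforce. The following plain-Python sketch shows only the idea. It does not reflect Ranger's policy model or API. The `CLEAR_TEXT_ROLES` policy, the column and role names, and the "show only the last four characters" rule are hypothetical choices made for illustration.

```python
from typing import Any

# Hypothetical policy: which roles may see each sensitive column in clear text.
# Columns not listed here are treated as non-sensitive and returned unchanged.
CLEAR_TEXT_ROLES: dict[str, set[str]] = {
    "iban": {"fraud_analyst"},
    "national_id": {"compliance_officer"},
}


def mask_value(value: str, visible: int = 4) -> str:
    """Show only the last `visible` characters; mask everything if the value is short."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def apply_masking(row: dict[str, Any], role: str) -> dict[str, Any]:
    """Return a copy of `row` with sensitive columns masked for `role`."""
    masked: dict[str, Any] = {}
    for column, value in row.items():
        allowed = CLEAR_TEXT_ROLES.get(column)
        if allowed is not None and role not in allowed:
            masked[column] = mask_value(str(value))
        else:
            masked[column] = value
    return masked
```

The masking is applied when data is read and returns a copy, so the stored row is never modified. A policy engine usually enforces masking at the query layer for the same reason.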

Infrastructure Requirements

Implementing an on-premise data lakehouse requires significant infrastructure investment. At Akbank, we’ve had to upgrade our hardware to handle the increased storage and processing demands. This included high-performance servers, robust networking equipment, and scalable storage solutions.

Integration with Existing Systems

One of our key challenges was integrating the data lakehouse with our existing systems. We developed a phased migration strategy, gradually moving data and processes from our legacy systems to the new architecture. This approach allowed us to maintain business continuity while transitioning to the new system.

Performance and Scalability

Ensuring high performance as our data grows has been a key focus. We’ve implemented data partitioning strategies and optimized our query engines to maintain fast query response times even as our data volumes increase.
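Partition pruning is the main payoff of partitioning. A query that filters on the partition column can skip whole partitions without reading them. Here is a minimal plain-Python sketch. It assumes hypothetical rows with a `txn_date` column, partitioned one partition per day. Engines such as Trino and table formats such as Iceberg do this with file and manifest metadata rather than in-memory dicts.

```python
from collections import defaultdict
from datetime import date


def partition_by_day(rows: list[dict]) -> dict[date, list[dict]]:
    """Group rows into partitions keyed by the (hypothetical) 'txn_date' column."""
    partitions: dict[date, list[dict]] = defaultdict(list)
    for row in rows:
        partitions[row["txn_date"]].append(row)
    return dict(partitions)


def scan(partitions: dict[date, list[dict]], start: date, end: date) -> tuple[list[dict], int]:
    """Return rows with start <= txn_date <= end, plus the number of partitions read.

    Partitions outside the range are skipped without touching their rows,
    which is the essence of partition pruning.
    """
    result: list[dict] = []
    read = 0
    for day, rows in partitions.items():
        if start <= day <= end:
            read += 1
            result.extend(rows)
    return result, read
```

As data grows, a date-range query still reads only the partitions in its range, which is why partitioning on a frequently filtered column helps keep response times stable.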

In our journey to implement an on-premise data lakehouse, we’ve faced several challenges:

Data integration issues, particularly with legacy systems

Maintaining performance as data volumes grow

Ensuring data quality across diverse data sources

Training our team on new technologies and processes

Best Practices

Here are some best practices we’ve adopted:

Implement strong data governance from the start

Invest in data quality tools and processes

Provide comprehensive training for your team

Start with a pilot project before full-scale implementation

Regularly review and optimize your architecture

Looking ahead, we see several exciting trends in the data lakehouse space:

Increased adoption of AI and machine learning for data management and analytics

Greater integration of edge computing with data lakehouses

Enhanced automation in data governance and quality management

Continued evolution of open-source technologies supporting data lakehouse architectures

The on-premise data lakehouse represents a significant leap forward in data management for the banking sector. At Akbank, it has allowed us to unify our data infrastructure, enhance our analytical capabilities, and maintain the highest standards of data security and governance.

As we continue to navigate the ever-changing landscape of banking technology, the data lakehouse will undoubtedly play a crucial role in our ability to leverage data for strategic advantage. For banks looking to stay competitive in the digital age, seriously considering a data lakehouse architecture – whether on-premise or in the cloud – is no longer optional; it’s imperative.

#access control#ai#Analytics#Apache#Apache Spark#approach#architecture#assessment#automation#bank#banking#banks#Big Data#big data analytics#Business#business continuity#Cloud#comprehensive#computing#customer data#customer service#data#data analytics#Data Centers#Data Governance#Data Integration#data lake#data lakehouse#data lakes#Data Management

0 notes

Text

I'm a big nerd, so there was a time when I actually got excited when a new version of Chrome was released. A golden age when Google added neat features and increased the speed. They improved integration with Gmail and other services I used. And they had some of the best extensions available.

Now every time I see an update I get depressed. I think, "What are they going to make shittier this time?" I can't remember the last feature they added that actually improved the user experience.

I'd love to transition to Firefox but I just don't have the energy to customize it to my needs right now. Plus I use Firefox for other things. I set it up so it is really easy to pay my bills, for instance. Having a second browser for only important shit helps me stay more organized.

People still villainize Apple more than Google. I have literally seen people say using Android is morally superior. Yes, it is open source and there are more repair-friendly Android devices. But it is still an advertising platform with the ultimate intention of collecting as much data from you as possible.

Google is worse.

They have been for a while.

(You can still run uBlock. You just have to jump through hoops in the settings to keep it. But this still sucks and people are going to think they have no choice and remove it.)

91 notes

Text

Businesses often face challenges such as reducing costs, launching products faster, and delivering high quality while building products. To tackle these challenges, our blog explores a powerful solution: Solution Engineering.

Discover how this approach offers structured problem-solving and efficient solutions for your business. Align your business objectives and user needs, from problem identification to knowledge transfer, while saving time and cutting costs. With Solution Engineering, you can experience unmatched growth and success. Learn more.

#big data#operations software#business intelligence#development software#solution engineering#it services#Systems engineering#nitorinfotech#blog#automation#process improvement#data integration#workflow automation

0 notes

Text

enterprises to increase R&D investment, encourage them to achieve independent control of key core technologies, and lay a solid foundation for new quality productivity. In addition, it is necessary to promote the digital transformation of private enterprises: integrating the digital economy with the real economy, optimizing production processes, improving management efficiency, and using technologies such as big data and artificial intelligence to drive the digital upgrade of industrial and supply chains, so as to expand the room for new quality productivity. In going global, from exporting products to co-building ecosystems abroad, private enterprises have entered a new stage of international competition. Under the guidance of the "Belt and Road" initiative, more and more private enterprises have gone abroad. Private enterprises contribute more than half of the exports of the "new three" (electric vehicles, lithium batteries, and solar cells), a category with strong export performance. Among the world's top 500 companies, the number of Chinese private enterprises has increased from 28 in 2018 to 34. Whether in the overseas expansion of manufacturing or the cross-border expansion of services, private enterprises have demonstrated strong competitiveness. By participating in international competition, private enterprises can not only improve their own technical level and management capabilities, but also contribute to the globalization of the national economy. However, going overseas also brings many challenges. From cultural differences to policy barriers, from market risks to legal disputes, private enterprises need to keep learning and adapting on the road to internationalization. In this regard, the government should strengthen relevant policy guidance and support to help private enterprises better cope with the uncertainties of international competition.

305 notes

Text

Fighting AI and learning how to speak with your wallet

So, if you're a creative of any kind, chances are that you've been directly affected by the development of AI. If you aren't a creative but engage with art in any way, you may also be plenty aware of the harm caused by AI. And right now, it's more important than ever that you learn how to fight against it.

The situation is this: after a few years of stagnation in things worth investing in, AI came out. Techbros, people with far too much money trying to find the next big thing to invest in, cryptobros, all these people flocked to it immediately. A lot of people are putting money into what they think is the next breakthrough. And AI is, at its core, all about the money. You will get ads shoved in your face everywhere saying "invest in AI now!" You will get ads telling you to try subscription services for AI-related stuff. Companies are trying to gauge how much they can depend on AI in order to fire their creatives. AI is opening the gates to the biggest data laundering scheme there's been in ages. It is also used to justify taking all your personal information, bypassing existing laws.

Many of them are currently bleeding investors' money, though, whether through servers, through trying to buy the rights to scrape content from social media (incredibly illegal, btw), or through many other things. A lot of the tech giants (Microsoft, for example) have also been investing in AI-related infrastructure, and are desperate to justify these expenses. They're going over their budgets, they're ignoring their emissions plans (because it's very toxic to the environment), and they're scrambling to justify why they're using it. Surely, it will be worth it.

Now, here's where you can act: Speak with your wallet. They're going through a delicate moment (despite how much they try to pretend they aren't), and it's now your moment to act. A company used AI in any manner? Don't buy their products. Speak against them on social media. Make noise. It doesn't matter how small or how big. A videogame used AI voices? Don't buy the game. Try to get a refund if you did. Social media is scraping content for AI? Don't buy ads, don't buy their stupid blue checks, put adblock on, don't give them a cent. A film generated their poster with AI? Don't watch it. Don't engage with it. Your favourite creator has made AI music for their YT channel? Unsub, bring it up on social media, tell them directly WHY you aren't supporting them. Your favourite browser is now integrating AI in your searches? Change browsers.

Let them know that the costs they cut through the use of AI don't justify how many customers they'd lose. Wizards of the Coast has repeatedly tried to see how much they can get away with when it comes to AI. It's only through consumer boycotting and massive social media noise that they've been forced to go back and hire actual artists to do that work.

The thing with AI: it doesn't benefit the consumer in any way. It's capitalism at its prime: cut costs, no matter how much it impacts quality, no matter how inhumane it is, no matter how much it pollutes. AI searches are directly feeding you misinformation. ChatGPT is using your input to feed itself. Instead, find a Discord server to talk with others about writing. Try starting art yourself, find other artists, join a community. If you can't, use the money you may be saving by boycotting AI shills to support a fellow creative. They need your help more than ever.

We're in a bit of a nebulous moment. Laws against AI are probably around the corner: A lot of AI companies are completely aware that they're going to crash if they're legally obliged to disclose the content they used to train their machines, because THEY KNOW it is stolen. Copyright is inherent to human created art: You don't need to even register it anywhere for it to be copyrighted. The moment YOU created it, YOU have the copyright to it. They can't just scrape social media because Meta or Twitter or whatever made a deal with OpenAI and others, because these companies DON'T own your work, they DON'T get to bypass your copyright.

And to make sure these laws get passed, it's important to keep up the fight against AI. AI isn't offering you anything of use. It's just for the benefit of companies. Let it be known it isn't useful, and that people's work and livelihoods are far more important than letting tech giants save a few cents. Instead, they're trying to gauge how MUCH they can get away with. They know it goes against European GDPR laws, but they're going to try to stretch what these mean and steal as much data as they can until a clear ruling comes out.

The wonder about boycotts is that they don't even need you to do anything. In fact, it's about not doing some stuff. You don't need money to boycott, just to be aware of where you put it. Changing habits is hard. People can't stop eating at Chick-fil-A no matter how much it uses their money against the LGBTQ community, but people NEED to learn how to do it. Now is the perfect time to cancel a subscription, find an alternative to watching that one film, and maybe join a creative community yourself.

210 notes
