#data visualization process

Text

Enhancing Risk Management with Data Visualization Consultants in Banking

In the ever-evolving landscape of banking, managing risks efficiently is not just an option—it's a necessity. As financial institutions face increasing complexities, the role of data visualization consultants becomes pivotal. Augmented Systems, a leading data migration service company in the USA and India, is at the forefront of this transformation, helping banks navigate these challenges.

But how exactly does data visualization enhance risk management? Let's dive into this fascinating intersection of technology and finance.

Introduction to Risk Management in Banking

Risk management in banking involves identifying, assessing, and mitigating potential financial losses. It's a critical component of banking operations, aimed at protecting assets and ensuring regulatory compliance. But traditional methods of risk management often fall short in the face of modern challenges like cybersecurity threats and global economic fluctuations. This is where data visualization steps in as a game-changer.

The Role of Data Visualization in Banking

Imagine trying to navigate a maze blindfolded—that’s what managing risks without clear data insights feels like. Data visualization turns complex data sets into understandable visuals, making it easier for decision-makers to grasp potential risks and opportunities. It’s like lifting the blindfold to see the entire maze at once, allowing for more informed and timely decisions.

Who is a Data Visualization Consultant?

A data visualization consultant is a specialist who helps organizations transform raw data into actionable insights through visual tools like charts, graphs, and dashboards. In the banking sector, these consultants play a crucial role in risk management by:

Analyzing large volumes of financial data.

Identifying patterns and trends.

Creating intuitive visual representations for better understanding.

Key Data Visualization Components

Effective data visualization involves several critical components:

1. Data Collection and Preparation

Gathering accurate and relevant data is the first step. This includes financial transactions, market trends, and customer behavior.

2. Visualization Tools

Using tools like Tableau, Power BI, or custom software solutions to create visuals.

3. Dashboards and Reports

Developing interactive dashboards that provide real-time insights.

4. User Interaction

Ensuring that the end-users can interact with the data, drill down into specifics, and make data-driven decisions.

Emerging Data Visualization Trends in Banking

As technology advances, several trends are reshaping data visualization in banking:

1. AI and Machine Learning

Integrating AI for predictive analytics, helping banks anticipate risks before they occur.

2. Real-Time Data Processing

Real-time data visualization enables immediate response to potential threats, enhancing agility.

3. Mobile Accessibility

Data visualization tools are becoming more mobile-friendly, allowing decision-makers to access insights anytime, anywhere.

How Augmented Systems Helps Banks

Augmented Systems specializes in data migration services but also offers comprehensive data visualization solutions. By leveraging their expertise, banks can seamlessly transition from outdated systems to modern, visualization-driven platforms. This transformation enables:

Enhanced data accuracy.

Improved risk assessment.

Streamlined decision-making processes.

The Data Visualization Process

The data visualization process typically involves several stages:

1. Data Extraction

Extracting data from various sources such as financial reports, CRM systems, and market data.

2. Data Cleaning

Ensuring the data is clean, consistent, and ready for analysis.

3. Visualization Design

Choosing the right visual formats to represent different types of data.

4. Implementation and Testing

Developing the visualizations, integrating them into dashboards, and testing for accuracy and usability.
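
The four stages above can be sketched end to end in a few lines. This is only an illustrative sketch using Python's standard library: the CSV columns (`branch`, `exposure`), the sample values, and every function name are assumptions made for the example, not part of any real banking system or of the tools named in this post. A text bar chart stands in for a real dashboard.

```python
import csv
import io

# Hypothetical raw export; the columns and values are invented for illustration.
RAW_CSV = """branch,exposure
North, 120
South,80
North,30
East,
south,40
"""

def extract(raw_text):
    """Stage 1: read rows from a source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def clean(rows):
    """Stage 2: normalise branch names and drop rows with no usable number."""
    cleaned = []
    for row in rows:
        value = (row["exposure"] or "").strip()
        if not value:
            continue  # skip rows with a missing exposure
        cleaned.append((row["branch"].strip().title(), float(value)))
    return cleaned

def aggregate(pairs):
    """Sum exposure per branch so each bar represents one category."""
    totals = {}
    for branch, amount in pairs:
        totals[branch] = totals.get(branch, 0.0) + amount
    return totals

def render_bars(totals, width=30):
    """Stages 3-4: choose a bar chart and draw it as text, largest first."""
    peak = max(totals.values())
    lines = []
    for branch, amount in sorted(totals.items(), key=lambda kv: -kv[1]):
        bar = "#" * round(width * amount / peak)
        lines.append(f"{branch:<6} {bar} {amount:.0f}")
    return "\n".join(lines)

totals = aggregate(clean(extract(RAW_CSV)))
print(render_bars(totals))
```

Keeping each stage in its own function makes the testing step easier: the cleaned totals can be checked for accuracy before any chart is drawn.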

Leveraging Financial Data Visualization Tools

Financial data visualization tools are designed to handle the unique challenges of the banking sector. These tools offer:

Customizable Dashboards: Tailored views for different stakeholders.

Advanced Analytics: Tools that go beyond basic charts to provide deep insights.

Scalability: The ability to handle increasing amounts of data as the organization grows.

The Future of Risk Management with Data Visualization Specialists

The future of risk management in banking will be increasingly reliant on data visualization specialists. These experts will:

Drive innovation in data analysis.

Ensure banks remain compliant with evolving regulations.

Enhance customer trust through transparent and insightful reporting.

Conclusion

In conclusion, the role of data visualization consultants in banking is indispensable for effective risk management. Augmented Systems exemplifies how leveraging modern data visualization tools and trends can transform the banking sector, making it more resilient and agile.

By embracing these advancements, banks can not only mitigate risks but also seize new opportunities in an ever-changing financial landscape.

#data visualization specialist#data visualization consultant#data visualization trends#data visualization process

0 notes

Text

some more zukaang doodles a la flavor de aged up Aang!au......

#very much hmmm...... thinking.... visualizing... taking in data.... processing it....#zukaang#atla#allgremlinart

4K notes

Text

i think learning to sew would fix me…

#the other day i was mapping out my interests like#process+material as subject. body horror. figural illustration. performance. diagrams+data visualization >>>>> fashion???#walk walk fashion baby??

118 notes

Note

can I ask what ur summer research was about?!!! :0 (u absolutely don't have to answer if ur not comfortable, I'm just a giant nerd and loves to hear about research dhdnfjdmdjfng)

Of course!!! I’d be glad to talk about it!!

In short, my research over the summer was putting mice in mazes and looking at how well they remember mazes, how quickly they learn the maze, and how learning one maze can help them learn other mazes faster. More detailed under the read more!

Previous maze studies with mice have shown that mice can actually learn very quickly when they’re learning behaviors that are in line with their own evolutionary advantages rather than arbitrary associations. It’s the difference between telling a mouse “go around these tunnels and try to find water to survive” vs telling it “do these very specific and completely useless 5 things and then you’ll get water”. Maze navigation for mice is already a natural complex behavior: mice are burrowing rodents and already have the predisposition for running around tunnels. We set up a maze and cameras and infrared lights around it to record it doing its thing, so that we can look at its natural behaviors with no human interference.

The unique thing about this project is less the behavior and rapid learning of the mouse and more the maze that we use for the study, called the Manhattan Maze. I think my mentor created it but I’m not too certain? But the basic concept of it, as shown in the figure above, is that there are two boxes of parallel tunnels and one layer of acrylic in the middle. Through holes in the acrylic, the mouse can climb between the two layers and make a “turn”. Essentially, this maze is the most reconfigurable setup for studying mouse behavior in mazes possible, because the middle layer of acrylic, which we call a mask, can be changed 2^(n^2) ways (n being the number of channels in a layer) to make completely different mazes. For the figure above, a 4x4 Manhattan maze, we have 2^16 possible different configurations, but we were actually running it on an 11x11 maze, so there were 2^121 different possible configurations! This way, we can look at the mouse in tons of different mazes without actually having to make a new maze altogether and transfer the mouse every time.
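
To make the 2^(n^2) count concrete, here is a tiny sketch of the arithmetic. It assumes, as the formula implies, that each of the n × n crossing points in the mask independently either has a hole or does not; the function name is made up for this example.

```python
def mask_configurations(n: int) -> int:
    """Number of possible masks for an n x n Manhattan maze.

    Each of the n * n crossing points either has a hole or does not,
    so there are 2 ** (n * n) combinations.
    """
    return 2 ** (n * n)

for n in (4, 11):
    count = mask_configurations(n)
    print(f"{n}x{n}: 2^{n * n} = {count:,} masks ({len(str(count))} digits)")
```

For the 4x4 maze that is 65,536 masks; for the 11x11 maze it is roughly 2.7 × 10^36. Not all of those would be solvable or interesting mazes, of course, since the count includes masks with no holes at all.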

And they learn extremely fast! The mazes we used were pretty much linear paths with small dead ends that weren’t far off from the main path, and required 9 turning decisions to get from starting point to end point. There were 3 of these different masks, and after training for one day on one of them, the next day, almost all mice that completed the training managed to learn completely new 9-decision masks in 3 hours or less!

#I was kind of a menial work goblin over the summer lmao which was to be expected#I am an undergrad after all#but yeah most of my work entailed cleaning up after the mice and putting the mice in the mazes and taking them out at the end of the day#and then bits of data processing to make the data usable for analysis later#but yeah very interesting stuf!!#it’s a bit of a departure from what I did last year which was eye tracking to study implicit visual processing#but I think working with animals in a psychology lab setting was a very good experience#also the mice were very cute.#I enjoyed them a lot#you hold them by the base of the tail to not harm them and they’re kind of disgruntled about it

85 notes

Text

I also feel like they just fed the AI with buzzwords galore… apparently I had a ‚pink pilates roller skating pop‘ and ‚strut electroclash‘ phase…

These (& the daylists) just feel like fast fashion micro trend clock app terms. Like they told the ai to go to town on whatever words were getting clicks on social media & deleted all human-made code used to make Wrapped.

im genuinely convinced that they used ai for the monthly thing on spotify wrapped. what do you mean may was my "coastal grandmother ukulele oldies" phase. august was "pink pilates princess strut pop??" no one would say that september was my "sweater weather permanent wave indie" moment. no way we waited so long for this. multiple people and i also noticed that it took a few seconds longer than usual to load, maybe im just impatient but i wouldnt be surprised if that was ai preparing like all of it as i clicked through. spotify has been using a lot of ai lately with the playlist making, dj, playlist cover designer and now whatever this ai podcast thing is.

#visuals sucked too#that was the most program-default-design ever#at least the previous ones had some level of cohesion & vibes#this just felt like 4 mins of staring at a screensaver before your info popped up and .3 sec later the next slide was up#idk what rules they gave the ai but i could swear that it either favours ‚social media viral‘ songs or only used like 2 months‘ data#(ik i‘m getring conspiratoral here but it could be that the delay was bc the ai took longer to process the data than they thought)#(or the setting up of it)#(or they entirelly forgot/ thought they would cancel it & hat to scrape an ai together last minute)#(which would explain why it feels like they didn‘t use the whole year but idk)#Spotify

31K notes

Text

What is the saying about the "best laid plans" (or in this case, graphics)?

#DND Case Study#Gartner#Gartner Graphics#Mapping Data and Analytics to Business Process Outcomes#procuretech#Risk Information decision influence model#Technology Adoption Roadmap#Visualizing Cost of GenAI

0 notes

Text

🌀 "The Seer", encrypted.

A work in process for process zine #00 — this spread translates a full-colour painting into raw Base64 code. The wall of characters becomes a veil, a kind of static field — a metaphor for the liminal experience of Auditory Processing Disorder (APD), where the signal is present but inaccessible without the right decoder.

The artwork, The Seer, is hidden in plain sight. Digitised, fragmented, and embedded in the code itself.

When the zine is complete, the full Base64 string will be included as a hidden annotation in the digital PDF. Readers will be able to extract and decode the painting — transforming static back into signal, and signal into sight.

For now, it exists as encrypted presence.

This is the painting, encrypted. Decode the full Base64 string from the PDF’s hidden annotation to reveal The Seer.
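
For anyone curious about the mechanics, here is a minimal sketch of the round trip using Python's standard `base64` module. The sixteen stand-in bytes are hypothetical; a real image would be read from a file. Strictly speaking, Base64 is a reversible encoding rather than encryption, so the "decoder" needs no key, only the standard alphabet.

```python
import base64

# Stand-in bytes for an image file; a real painting would be read from disk
# with open(path, "rb").read(). The payload here is hypothetical.
image_bytes = bytes(range(16))

# Encode: binary data becomes a string drawn from a 64-character alphabet.
encoded = base64.b64encode(image_bytes).decode("ascii")
print(encoded)

# Decode: anyone holding the string can recover the exact original bytes.
decoded = base64.b64decode(encoded)
assert decoded == image_bytes
```

Writing `decoded` back to a file with the right extension is what turns the wall of characters into a viewable image again.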

#base64#encryption#code to image#signal and noise#decode#APD#auditory processing disorder#liminal experience#visual poetry#data as art#glitch aesthetics#conceptual art#process zine

0 notes

Text

i loved this concept so much that i had to homebrew my own

do you love the color of the sky?

i've just had a terrible idea

#// processing is a pretty cool language to easily visualize data#// i will definitely be using it in the future#// edit: fixed a typo because i cant words right#// edit2: got the colored wireframe working. kinda#ivy tokens

49K notes

Text

AI-Powered Business Analytics: Make Smarter Decisions, Faster

💡 AI-powered analytics give you instant insights into what’s working and what’s not. Learn how to use AI to optimize business decisions.

The Problem: Are You Guessing or Growing?

Let’s be real: making business decisions based on gut feelings is like throwing darts blindfolded. Sure, you might hit the target occasionally, but most of…

#AI automation for business#AI business analytics#AI business optimization tools#AI customer insights#AI data analysis#AI data visualization#AI for advertising performance#AI for audience segmentation#AI for business intelligence#AI for business scalability#AI for customer retention#AI for customer segmentation#AI for demand prediction#AI for eCommerce analytics#AI for financial forecasting#AI for operational decision-making#AI for process optimization#AI for revenue optimization#AI for ROI maximization#AI for sales forecasting#AI for sales optimization#AI for small business growth#AI in corporate decision-making#AI in digital marketing#AI in marketing analytics#AI operational efficiency#AI performance tracking#AI predictive analytics#AI-driven business strategy#AI-driven competitive analysis

0 notes

Text

Real-World Applications of AI in Data Analytics Across Industries

Artificial Intelligence (AI) has transformed the way businesses leverage data analytics, enabling organizations to gain deeper insights, improve decision-making, and enhance operational efficiency. Across various industries, AI-driven data analytics is revolutionizing processes, uncovering hidden patterns, and driving innovation. This article explores the real-world applications of AI in data analytics across multiple sectors, demonstrating its profound impact on modern business landscapes.

1. Healthcare: Enhancing Diagnostics and Personalized Medicine

The healthcare industry has significantly benefited from AI-powered data analytics, particularly in diagnostics and personalized medicine. AI-driven algorithms analyze vast datasets from electronic health records (EHRs), medical images, and genomic sequences to detect diseases at an early stage. For example, AI systems are being used to identify cancerous cells in radiology scans, in some studies matching or exceeding the accuracy of human radiologists. Additionally, predictive analytics help in forecasting disease outbreaks and patient deterioration, allowing for timely medical interventions. Personalized treatment plans are another crucial application, where AI assesses genetic data and medical history to recommend tailored therapies, improving patient outcomes.

2. Finance: Fraud Detection and Risk Management

In the financial sector, AI-powered data analytics is instrumental in fraud detection and risk management. Traditional fraud detection methods often fail to keep up with sophisticated cyber threats. AI algorithms analyze transaction patterns in real time, identifying anomalies that may indicate fraudulent activities. Machine learning models assess credit risk by evaluating an individual's financial behavior and history, enabling banks to make informed lending decisions. Additionally, AI aids in algorithmic trading, where vast datasets are processed to predict market trends and execute high-frequency trades, optimizing investment portfolios.
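
As a rough illustration of what "identifying anomalies" in transaction patterns can mean, the sketch below flags amounts that sit far from the mean. It is a simple z-score check, a statistical stand-in for the machine learning models described above rather than a production fraud detector. The transaction amounts, the function name, and the threshold of 2.0 are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def flag_anomalies(amounts, threshold=2.0):
    """Return indices of amounts more than `threshold` sample standard
    deviations away from the mean of the batch."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # every amount is identical, so nothing stands out
    return [i for i, a in enumerate(amounts) if abs(a - mu) / sigma > threshold]

# Hypothetical card transactions; one is far larger than the rest.
amounts = [42.0, 38.5, 51.0, 47.2, 39.9, 44.1, 950.0, 40.3]
print(flag_anomalies(amounts))
```

One caveat on the design: a single extreme value inflates the standard deviation it is measured against, so on small batches a z-score has a hard ceiling. Real systems tend to use more robust statistics or learned models of each customer's normal behavior.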

3. Retail: Enhancing Customer Experience and Inventory Management

Retail businesses use AI in data analytics to enhance customer experience and optimize inventory management. AI-powered recommendation engines analyze customer preferences, purchase history, and browsing behavior to provide personalized product suggestions, increasing sales and customer satisfaction. Predictive analytics help retailers forecast demand, ensuring optimal stock levels and reducing wastage. AI-driven chatbots and virtual assistants improve customer service by offering instant support and resolving queries efficiently. Furthermore, AI enables dynamic pricing strategies, adjusting product prices based on demand fluctuations, competitor pricing, and customer behavior.

4. Manufacturing: Predictive Maintenance and Supply Chain Optimization

AI-driven data analytics is transforming the manufacturing industry by enabling predictive maintenance and optimizing supply chains. Predictive maintenance uses AI to analyze data from sensors and IoT devices to detect potential equipment failures before they occur, reducing downtime and maintenance costs. In supply chain management, AI assesses historical data, market trends, and weather conditions to optimize logistics, ensuring timely deliveries and cost efficiency. Smart factories integrate AI-powered robotics and automation, enhancing production efficiency and minimizing errors.

5. Marketing: Advanced Customer Insights and Campaign Optimization

In marketing, AI is revolutionizing data analytics by providing advanced customer insights and optimizing campaigns. AI-driven sentiment analysis assesses social media and customer feedback, allowing brands to understand consumer emotions and preferences. Predictive analytics help marketers identify potential customers, improving targeting strategies. AI automates content personalization, ensuring the right messages reach the right audience at the right time. Additionally, AI enhances advertising performance by analyzing engagement metrics and adjusting campaign strategies in real-time for maximum ROI.

6. Transportation and Logistics: Route Optimization and Traffic Management

The transportation and logistics industry leverages AI-driven data analytics for route optimization and traffic management. AI algorithms process real-time traffic data, weather conditions, and historical patterns to determine the most efficient delivery routes, reducing fuel consumption and improving delivery times. Ride-sharing companies use AI to match drivers with passengers efficiently, minimizing wait times and optimizing fleet utilization. In urban planning, AI-powered traffic management systems analyze congestion patterns and suggest alternative routes to improve traffic flow and reduce emissions.

7. Energy Sector: Smart Grids and Renewable Energy Optimization

In the energy sector, AI is playing a crucial role in optimizing energy consumption and integrating renewable energy sources. Smart grids leverage AI to predict energy demand and supply fluctuations, ensuring efficient distribution and reducing power outages. AI-powered data analytics help in monitoring equipment health, preventing failures in power plants and transmission networks. Renewable energy companies use AI to forecast weather patterns and optimize solar and wind energy generation, improving sustainability efforts.

8. Education: Personalized Learning and Administrative Efficiency

The education sector is increasingly adopting AI-driven data analytics to enhance personalized learning and streamline administrative tasks. AI-powered learning platforms analyze student performance data to customize learning materials and provide personalized recommendations. Automated grading systems reduce the burden on educators, allowing them to focus on interactive teaching. Predictive analytics help institutions identify students at risk of dropping out, enabling timely interventions to improve retention rates. Additionally, AI assists in optimizing resource allocation and curriculum planning, enhancing overall educational effectiveness.

9. Real Estate: Property Valuation and Market Trends Analysis

AI-driven data analytics is reshaping the real estate industry by improving property valuation and market trend analysis. AI models assess various factors, such as location, market demand, historical pricing data, and economic indicators, to predict property values accurately. Real estate platforms use AI to recommend properties based on user preferences, streamlining the home-buying process. AI-powered chatbots assist customers in finding suitable listings and answering queries in real time, improving customer experience.

Conclusion

AI has become an indispensable tool in data analytics, revolutionizing industries by offering predictive insights, automation, and efficiency improvements. Whether in healthcare, finance, retail, or manufacturing, AI-driven data analytics enhances decision-making and drives innovation. Businesses that integrate AI for data analytics into their operations gain a competitive edge by harnessing the power of machine learning and automation. As advancements in AI and data analytics software continue to evolve, their impact across industries will only become more profound, shaping the future of business intelligence and strategic decision-making.

#data visualization softwares#data visualization tools#tools for data visualization#rpa software#robotic process automation#unified data analytics platform

0 notes

Text

Top 9 AI Tools for Data Analytics in 2025

In 2025, the landscape of data analytics is rapidly evolving, thanks to the integration of artificial intelligence (AI). AI-powered tools are transforming how businesses analyze data, uncover insights, and make data-driven decisions. Here are the top nine AI tools for data analytics that are making a significant impact:

1. ChatGPT by OpenAI

ChatGPT is a powerful AI language model developed by…

#Ai#AI Algorithms#Automated Analytics#Big Data#Business Intelligence#Data Analytics#Data Mining#Data Science#Data Visualization#Deep Learning#Machine Learning#Natural Language Processing#Neural Networks#predictive analytics#Statistical Analysis

0 notes

Text

Me when people draw Crocodile with thick eyebrows:

(he's still really handsome^^)

sir cockodile

#what is this thick eyebrows disease which infects so many artists on social media /hj#matthew mcconaughey is indeed a pretty good reference for him#he (the actor) still doesn't have such thick and black eyebrows tho 😭#I mean I get it when artists who work in theatre always draw people with thick eyebrows because#that's just the rule of theatre. If your character doesn't have expressive eyebrows the audience will not see their face#but this is internet where we don't have to use binoculars to observe and enjoy. we all can just relax#Crocodile has deep-set eyes and a high forehead#which visually creates the effect of large and thick eyebrows.#and I see that many artists do... welp I don't wanna call this a mistake because he's not a real person#but when people add thick eyebrows to someone who has deep-set eyes - this is just a sign#(to me)#that they don't understand some points about how facial features work#This is in no way a complaint against the artist - simply a conclusion based on processed data.

3K notes

Text

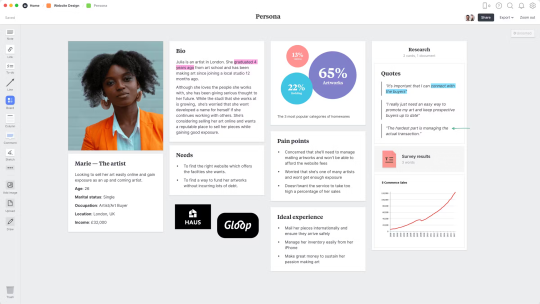

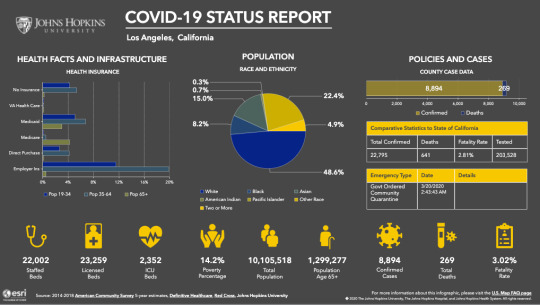

CTS-B | Week01 Creative Practice and Critical Thinking

Fact-based material elucidating statistics and figures is presented critically. Typically, it releases data with little to no reprocessing in its original format as a percentage or figure. The data may be presented as pure fact, free from embellishment or distortion, even if this information does not make the data clear.

Conversely, the information obtained through creative means offers re-edited data instead of numbers and statistics. Refined information works well for presenting the data in an understandable and visually appealing way, reaching a broad audience, and piquing people's curiosity. Instead of just presenting data or facts, we frequently employ techniques to reprocess data and visually represent data and results, which makes them clearer and simpler to comprehend.

For example, we could recognize the significance of reconstructing data while providing imaginative explanations of the same content, such as explaining Seoul's overcrowding in comparison to other cities. Visually comparing Seoul with Los Angeles and Rome makes more sense than relying solely on statistics.

Perhaps people are wondering why innovative ways of disseminating information are so important. Re-edited data offers a simpler method of communication. We discovered that comparing Seoul and Los Angeles' population densities through visuals is more intuitive than through numerical comparisons. Properly refined data can ease the recipient's pressure to comprehend every detail. Schematization of information would be very beneficial in today's fast-paced communication environment.

(224 words)

References:

Balla, Erika. “Data Visualization: The Underrated Skill in Business Analytics.” Data Science Central, TechTarget, 25 Aug. 2023, https://www.datasciencecentral.com/data-visualization-the-underrated-skill-in-business-analytics/.

Cruickshank, Saralyn. “Johns Hopkins Launches New U.S.-Focused COVID-19 Tracking Map.” Johns Hopkins University, 14 Apr. 2020, https://hub.jhu.edu/2020/04/14/johns-hopkins-launches-us-coronavirus-tracking-map/.

Dospon, Elise. “What Is a Persona? Definition, Examples, and Templates.” Shopify, 21 May 2024, https://www.shopify.com/ph/blog/what-is-a-persona.

Juillet, Romain. “7 Ways Infographics Can Benefit Your Online Business.” BOCASAY, 14 Oct. 2022, https://www.bocasay.com/7-ways-infographics-benefit-online-business/.

Image references:

Functions of the U.S. map include county-level infection and population data from: Johns Hopkins University, 13 April 2020 https://hub.jhu.edu/2020/04/14/johns-hopkins-launches-us-coronavirus-tracking-map/

Infographic about retailer survey results from: J.R. Webber https://www.behance.net/gallery/127378549/Imagery-is-important-Infographic?tracking_source=search_projects_recommended%7Cdata+visualization+infographic

Infographic about Risky business: The importance of reputation from: Jeff Desjardins, 22 August, 2014 https://www.visualcapitalist.com/the-reputational-risks-that-ceos-are-most-worried-about/

Infographic about The great agency exodus from: 99designs by Vista https://www.itsnicethat.com/news/vista-99designs-design-without-borders-report-creative-industry-030322

Warren, Brett. “How to Make Effective User Personas with Milanote.” Milanote, 31 Aug. 2024, https://milanote.com/guide/persona.

Population references:

“Los Angeles Population 2024.” Population 2024, 2024, https://www.usapopulation.org/los-angeles-population/.

“New York Population 2024.” WORLD POPULATION REVIEW, 2024, https://worldpopulationreview.com/states/new-york.

“Rome, Italy Population 2024.” WORLD POPULATION REVIEW, 2024, https://worldpopulationreview.com/cities/italy/rome.

“Seoul, South Korea Population 2024.” WORLD POPULATION REVIEW, 2024, https://worldpopulationreview.com/world-cities/seoul-population.

1 note

Text

New open-source tool helps to detangle the brain

New Post has been published on https://thedigitalinsider.com/new-open-source-tool-helps-to-detangle-the-brain/

In late 2023, the first drug with potential to slow the progression of Alzheimer’s disease was approved by the U.S. Food and Drug Administration. Alzheimer’s is one of many debilitating neurological disorders that together affect one-eighth of the world’s population, and while the new drug is a step in the right direction, there is still a long journey ahead to fully understanding it and other such diseases.

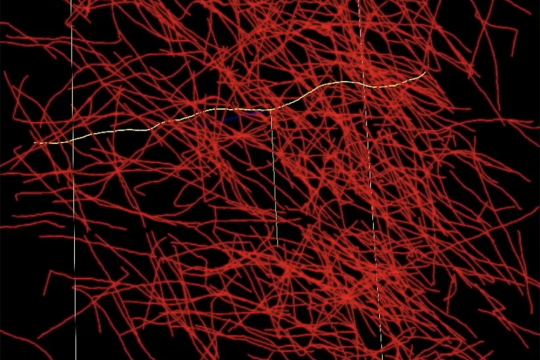

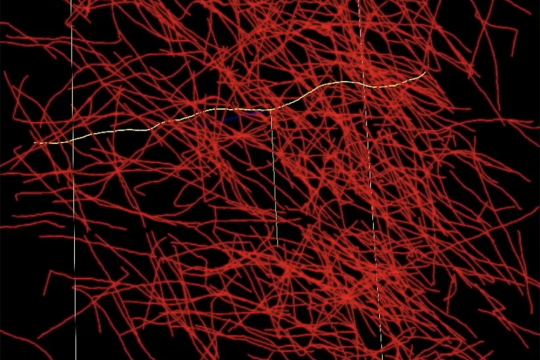

“Reconstructing the intricacies of how the human brain functions on a cellular level is one of the biggest challenges in neuroscience,” says Lars Gjesteby, a technical staff member and algorithm developer from the MIT Lincoln Laboratory’s Human Health and Performance Systems Group. “High-resolution, networked brain atlases can help improve our understanding of disorders by pinpointing differences between healthy and diseased brains. However, progress has been hindered by insufficient tools to visualize and process very large brain imaging datasets.”

A networked brain atlas is in essence a detailed map of the brain that can help link structural information with neural function. To build such atlases, brain imaging data need to be processed and annotated. For example, each axon, or thin fiber connecting neurons, needs to be traced, measured, and labeled with information. Current methods of processing brain imaging data, such as desktop-based software or manual-oriented tools, are not yet designed to handle human brain-scale datasets. As such, researchers often spend a lot of time slogging through an ocean of raw data.

Gjesteby is leading a project to build the Neuron Tracing and Active Learning Environment (NeuroTrALE), a software pipeline that brings machine learning, supercomputing, and ease of use and access to this brain mapping challenge. NeuroTrALE automates much of the data processing and displays the output in an interactive interface that allows researchers to edit and manipulate the data to mark, filter, and search for specific patterns.

Untangling a ball of yarn

One of NeuroTrALE’s defining features is the machine-learning technique it employs, called active learning. NeuroTrALE’s algorithms are trained to automatically label incoming data based on existing brain imaging data, but unfamiliar data can present potential for errors. Active learning allows users to manually correct errors, teaching the algorithm to improve the next time it encounters similar data. This mix of automation and manual labeling ensures accurate data processing with a much smaller burden on the user.
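
The correction loop described above can be illustrated with a toy sketch. This is not NeuroTrALE's algorithm, which the article does not detail. It assumes a one-dimensional nearest-neighbour labeler, a made-up two-class task, and an `oracle` function standing in for the human annotator; every name and value here is hypothetical.

```python
def predict(labeled, x):
    """Label x with the label of its nearest already-labeled point (1-NN)."""
    nearest = min(labeled, key=lambda pair: abs(pair[0] - x))
    return nearest[1]

def active_learning_pass(labeled, incoming, oracle):
    """Auto-label incoming points; store a correction whenever the oracle
    (the human reviewer) disagrees. Returns the number of corrections."""
    corrections = 0
    for x in incoming:
        guess = predict(labeled, x)
        truth = oracle(x)
        if guess != truth:
            labeled.append((x, truth))  # the correction becomes training data
            corrections += 1
    return corrections

# Hypothetical task: values below 5.0 are "background", the rest are "axon".
def oracle(x):
    return "axon" if x >= 5.0 else "background"

labeled = [(0.0, "background"), (20.0, "axon")]  # sparse starting labels
incoming = [4.0, 5.5, 4.8, 5.2, 6.0, 4.9]

first = active_learning_pass(labeled, incoming, oracle)
second = active_learning_pass(labeled, incoming, oracle)
print(first, second)  # corrections needed on each pass
```

In this toy run the reviewer makes two corrections on the first pass and none on the second, which is the pattern the paragraph describes: a small amount of manual labeling up front reduces the work needed on similar data later.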

“Imagine taking an X-ray of a ball of yarn. You’d see all these crisscrossed, overlapping lines,” says Michael Snyder, from the laboratory’s Homeland Decision Support Systems Group. “When two lines cross, does it mean one of the pieces of yarn is making a 90-degree bend, or is one going straight up and the other is going straight over? With NeuroTrALE’s active learning, users can trace these strands of yarn one or two times and train the algorithm to follow them correctly moving forward. Without NeuroTrALE, the user would have to trace the ball of yarn, or in this case the axons of the human brain, every single time.” Snyder is a software developer on the NeuroTrALE team along with staff member David Chavez.

Because NeuroTrALE takes the bulk of the labeling burden off of the user, it allows researchers to process more data more quickly. Further, the axon tracing algorithms harness parallel computing to distribute computations across multiple GPUs at once, leading to even faster, scalable processing. Using NeuroTrALE, the team demonstrated a 90 percent decrease in computing time needed to process 32 gigabytes of data over conventional AI methods.

The team also showed that a substantial increase in the volume of data does not translate to an equivalent increase in processing time. For example, in a recent study they demonstrated that a 10,000 percent increase in dataset size resulted in only a 9 percent and a 22 percent increase in total data processing time, using two different types of central processing units.
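
A quick back-of-the-envelope check shows what those percentages imply. The sketch only restates the figures quoted above as multipliers; the helper name is an assumption, and no measurements beyond those in the article are used.

```python
def growth_factor(percent_increase: float) -> float:
    """Convert a percent increase into a multiplier (100% -> 2x)."""
    return 1.0 + percent_increase / 100.0

size_factor = growth_factor(10_000)  # a 10,000 percent increase is 101x the data

for pct in (9, 22):
    time_factor = growth_factor(pct)
    per_unit = time_factor / size_factor  # relative time per unit of data
    print(f"{pct}% more time for {size_factor:.0f}x data: "
          f"time per unit falls to {per_unit:.2%} of the original")
```

In other words, the dataset grew about a hundredfold while total processing time grew by well under a quarter, so the time spent per unit of data dropped to roughly one percent of what it was.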

“With the estimated 86 billion neurons making 100 trillion connections in the human brain, manually labeling all the axons in a single brain would take lifetimes,” adds Benjamin Roop, one of the project’s algorithm developers. “This tool has the potential to automate the creation of connectomes for not just one individual, but many. That opens the door for studying brain disease at the population level.”

The open-source road to discovery

The NeuroTrALE project was formed as an internally funded collaboration between Lincoln Laboratory and Professor Kwanghun Chung’s laboratory on the MIT campus. The Lincoln Lab team needed to build a way for the Chung Lab researchers to analyze and extract useful information from the large amount of brain imaging data flowing into the MIT SuperCloud, a supercomputer run by Lincoln Laboratory to support MIT research. Lincoln Lab’s expertise in high-performance computing, image processing, and artificial intelligence made it exceptionally suited to tackling this challenge.

In 2020, the team uploaded NeuroTrALE to the SuperCloud, and by 2022 the Chung Lab was producing results. In one study, published in Science, they used NeuroTrALE to quantify prefrontal cortex cell density in relation to Alzheimer’s disease, finding that brains affected by the disease had a lower cell density in certain regions than those without. The same team also located where in the brain harmful neurofibers tend to get tangled in Alzheimer’s-affected brain tissue.

Work on NeuroTrALE has continued with Lincoln Laboratory funding and funding from the National Institutes of Health (NIH) to build up NeuroTrALE’s capabilities. Currently, its user interface tools are being integrated with Google’s Neuroglancer program — an open-source, web-based viewer application for neuroscience data. NeuroTrALE adds the ability for users to visualize and edit their annotated data dynamically, and for multiple users to work with the same data at the same time. Users can also create and edit a number of shapes such as polygons, points, and lines to facilitate annotation tasks, as well as customize color display for each annotation to distinguish neurons in dense regions.

“NeuroTrALE provides a platform-agnostic, end-to-end solution that can be easily and rapidly deployed on standalone, virtual, cloud, and high performance computing environments via containers,” says Adam Michaleas, a high performance computing engineer from the laboratory’s Artificial Intelligence Technology Group. “Furthermore, it significantly improves the end user experience by providing capabilities for real-time collaboration within the neuroscience community via data visualization and simultaneous content review.”

To align with NIH’s mission of sharing research products, the team’s goal is to make NeuroTrALE a fully open-source tool for anyone to use. And this type of tool, says Gjesteby, is what’s needed to reach the end goal of mapping the entirety of the human brain for research, and eventually drug development. “It’s a grassroots effort by the community where data and algorithms are meant to be shared and accessed by all.”

The codebases for the axon tracing, data management, and interactive user interface of NeuroTrALE are publicly available via open-source licenses. Please contact Lars Gjesteby for more information on using NeuroTrALE.

Text

Moolamore data integration and analysis with leading accounting software

In today's fast-paced business world, having an accurate, up-to-date picture of your ins and outs can mean the difference between flourishing and barely scraping by.

Good thing there's Moolamore, a powerful solution designed to help you seamlessly integrate and analyze real-time data, giving your SME the information it needs to stay ahead and stay resilient in any economic climate. Make sure you keep reading this blog!

Text

What is the First Step of the Data Analytics Process?

Understanding the First Step of Data Analytics

Data analytics is about uncovering insights from data to help businesses make smarter decisions and improve their performance. It involves several important steps, with the first one being the most crucial: defining the objective. Let's dive into why this step is so important and how to approach it.

Why Defining the Objective Matters

The first thing you do in data analytics is set a clear goal. This means figuring out what problem you're trying to solve or what question you need to answer with your data. Without a clear objective, you might end up wasting time and resources on the wrong things.

Focus and Direction: Having a clear objective gives you a roadmap for your data analysis journey. It helps you stay focused on what matters and avoid getting lost in too much information.

Using Resources Wisely: When you know your objective, you can allocate your time, people, and technology more effectively. This means you're not wasting resources on data that won't help you achieve your goal.

Setting Realistic Expectations: Defining your objective helps manage expectations. It lets everyone involved know what the data analysis can realistically achieve and what insights they can expect.

Steps to Define Your Objective

Identify the Problem:

Understand what's going on in your business. This includes knowing your industry, market conditions, and what's happening inside your company.

Talk to the people who care about the outcome. This could be your boss, managers, or teammates who have a stake in the results.

Ask the Right Questions:

Once you know the problem, ask specific questions that your data can answer. For example, if sales are dropping, ask which products aren't selling well or what customers are looking for.

Choose Key Metrics:

Find the numbers that matter. These are the metrics that show how well you're doing with your goal. For sales, this might be growth rate, how much it costs to get a new customer, or how long customers stick around.

Set Boundaries:

Be clear about what your analysis will cover. This means saying what data you'll use and what you won't. For example, you might only look at sales in one place or a certain kind of product.
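One way to make these four steps concrete is to write the objective down as a small structured record before any analysis starts. The sketch below is just one possible layout: the `AnalysisObjective` class and its field names are invented for illustration, and the sample values come from the falling-sales example mentioned above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AnalysisObjective:
    problem: str                                            # Identify the Problem
    questions: List[str] = field(default_factory=list)      # Ask the Right Questions
    key_metrics: List[str] = field(default_factory=list)    # Choose Key Metrics
    in_scope: List[str] = field(default_factory=list)       # Set Boundaries
    out_of_scope: List[str] = field(default_factory=list)   # optional exclusions

    def missing_parts(self):
        """Return the names of required steps that are still empty."""
        required = {
            "problem": self.problem,
            "questions": self.questions,
            "key_metrics": self.key_metrics,
            "in_scope": self.in_scope,
        }
        return [name for name, value in required.items() if not value]


objective = AnalysisObjective(
    problem="Sales are dropping",
    questions=[
        "Which products aren't selling well?",
        "What are customers looking for?",
    ],
    key_metrics=[
        "sales growth rate",
        "customer acquisition cost",
        "customer retention",
    ],
)
before = objective.missing_parts()   # boundaries have not been set yet
objective.in_scope.append("sales in one region")
after = objective.missing_parts()
print(before, after)
```

Checking for empty fields is a simple way to catch an objective that skips a step, such as an analysis with metrics but no boundaries.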

Example: Fixing Cart Abandonment in Online Shopping

Imagine an online store where lots of people put things in their cart but don't buy them. The goal here would be to figure out why and then find ways to stop it.

The Problem: People leaving items in their cart without buying them.

The Goal: Understand why this happens and find ways to fix it.

Questions: When and why do people leave their carts? Are there patterns based on who the shoppers are or what they want to buy?

Metrics: How often carts get left, how many people finish checking out, and how much people usually spend.

Focus: Look at what happens during checkout over six months, and don't count people who come back within a day.

Limits: Privacy rules restrict how much information about individual shoppers can be analyzed.

By setting a clear goal like this, the store can make sure it gets the right information, look at it the right way, and come up with good ideas to fix the cart problem.
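The three metrics from this example can be computed from a handful of checkout records. The code below is a rough sketch under stated assumptions: the `CartSession` record, its fields, and the sample data are all hypothetical, and the rule for shoppers who return within a day is implemented in the simplest way, by leaving those abandoned carts out of the count.

```python
from dataclasses import dataclass


@dataclass
class CartSession:
    purchased: bool                    # checkout was completed
    order_value: float = 0.0           # amount spent, if purchased
    returned_within_day: bool = False  # shopper came back within 24 hours


def cart_metrics(sessions):
    # Apply the "Focus" rule: ignore abandoned carts whose shopper
    # came back within a day.
    counted = [s for s in sessions if s.purchased or not s.returned_within_day]
    purchases = [s for s in counted if s.purchased]
    completion_rate = len(purchases) / len(counted) if counted else 0.0
    average_order_value = (
        sum(s.order_value for s in purchases) / len(purchases) if purchases else 0.0
    )
    return {
        "abandonment_rate": 1 - completion_rate if counted else 0.0,
        "completion_rate": completion_rate,
        "average_order_value": average_order_value,
    }


# Made-up sample data: two purchases, two abandoned carts,
# and one abandoned cart whose shopper returned within a day.
sessions = [
    CartSession(purchased=True, order_value=40.0),
    CartSession(purchased=True, order_value=60.0),
    CartSession(purchased=False),
    CartSession(purchased=False),
    CartSession(purchased=False, returned_within_day=True),
]
metrics = cart_metrics(sessions)
print(metrics)
```

In practice the six-month window would be applied when loading the data, before these numbers are calculated.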

In Conclusion

The first step in data analytics is super important because it sets up everything else. When you know what you're looking for, you can use your data to really understand what's happening and make good choices. This helps businesses get smarter, do better, and reach their goals faster.

Ready to take your business to the next level? Discover how our Business Intelligence and Data Analytics services can help you achieve your goals. Contact us today!
