#datastream


Text

Datastream martyn kind of a time loop situation. Something to consider

5 notes


Text

I’ve remembered why I stopped writing on wattpad. It’s cuz I lose motivation too fast and can never finish stuff, so in a day or two I’m gonna post just a simple overview of Himiko’s past. But for now I’m gonna show you all another oc of mine

Her name’s Toshiko Niwa

Fandom: my hero academia

Age: 20/ five years older than class A

Height: 6’1

Sexuality: asexual/ doesn’t feel romantic love

Pronouns: she/her

Eye color: I can’t show it in picrews so I usually just go with random colors but her eyes are like streaks of different colors like the Netflix thing where it looks like string. That’s what her eyes look like

Hair color and length: dark brown and short

Skin tone: pale with a bit of a tan

Scars: one cheek scar, and other scars across her body

Quirk:

I did borrow this quirk from a fanfic on Ao3 called datastream

Datastream: this quirk lets its user see streams of data, such as a phone call, a YouTube video, text messages, or anything else related to tech, and access them, so the user can see and/or hear what the stream contains. The user can also access cameras by tapping into their data streams, jumping from camera to camera, or even tap into hero comms to contact a hero. The user does not have to touch the streams to enter them and can be half in a stream and half still conscious, so they can have a conversation with a hero through a stream whilst still having a conversation with a friend physically. The downsides of this quirk: even though the user can multitask and interact with the world whilst in a stream, if they want to fully dive into a stream they can no longer interact with the outside world using their physical body until they tap out. While fully in a stream, the user is vulnerable to attack, given that they are unable to fight back or even realize they’re being touched, moved, or spoken to. When the quirk is overused, the user becomes tired, gets headaches, and their eyes hurt as if they had been looking at a screen for too long.

Sorry for giving up on writing all of Himiko’s back story but it’s never gonna get finished if I keep doing it this way

Also Toshiko is not a hero at first she’s actually a vigilante who then becomes an intelligence hero!

#bnha#boku no hero academia#mha#my hero academia#bnha quirks#mha quirks#quirk ideas#original quirk#my work#oc#oc help#my oc#ocs#quirks#quirk#original oc#not my quirk#borrowed idea#fan fiction#fanfic#datastream#emitter quirk#mental mutant quirk#mutant quirk

2 notes


Text

DDTO Bad Ending: DataStream Fan Remake + New AU

I love DDTO and DDTO: Bad Ending, and its latest addition DataStream was such an effin' banger!!! However, people have been saying that the gameplay was messy and hard to play. So if I were to make a fanmade restyling of DataStream, here's how I would do it.
-New Sprites: The idle and poses could show more of Monika's agony than just having a glitch filter layered on the spritesheet.
-New Mechanics: Arrows moving everywhere, switching from side-to-side, going from upscroll to downscroll, jumbling the order. As the song starts, the window where you're playing the mod slowly becomes smaller, making it seem like the mod has affected your "Screen", as seen in the official gameplay, but your computer is fine!! The "Screen" in question would actually be an image file or a texture file, so it'll appear that your screen is smothered by text saying 'Just Monika', and to make it more believable, the mod would temporarily disable the esc key or whatever key gets you out of full screen. After the song is finished, the mod will crash like in the original.
-Cutscenes and In-Game Dialogue: Since DataStream takes place either after or during DDTO: Bad Ending, the start of DataStream would be Monika stranded in the void, unsuccessfully trying to get back into DDLC. During the pause in the song, there would be some dialogue from Monika. For the end cutscene at the end of the song, I have two ideas.
Canon Bad Ending cutscene: Monika finally disappears and Boyfriend goes insane one final time, then the mod would crash and you would get the end-game dialogue. The end-dialogue with Monika.chr would be the same.
Poems and Thorns Ending cutscene: Instead of being deleted, Monika gets dragged back to Hating Simulator, and the last thing you'd see before the mod crashes is Senpai. The end-dialogue would be with Senpai knowing that he's just a character and now he wants Monika to himself because he's become self-aware like she has.
In case you're wondering what the second ending was about, I'm in the process of making a fan AU of DDTO based off one of the side stories, hence why the second ending has Senpai. About DDTO: Poems and Thorns(so far): Basically, after the events of Wilted, the Dokis lure Senpai into the Bad Ending mod, an identical copy of DDLC/DDTO, to teach Senpai a lesson. Senpai ends up defeating all of them and taking control of the mod and his own game. Yuri successfully disguises her files and sends out an SOS message to Monika, who's in the real DDLC/DDTO talking to some of the FNF mod characters. Monika reads the message, finds out about Senpai, and decides to confront him. Hope you all enjoyed my fan interpretation of DataStream!! Have a blessed morning/noon/evening/night/day!!! (Don't forget to hydrate!!)

#cartoonlover speaks#long reads#fnf#FNF AU#Doki Doki Takeover#FNF Doki Doki Takeover#FNF DDTO#DDTO#DDTO: Bad Ending#fnf senpai#monika#sayori#natsuki#yuri ddlc#doki doki literature club#monika ddlc#sayori ddlc#ddlc au#DataStream#DDTO: Poems and Thorns#fanmod#wow#that's a lot of tags

3 notes


Text

Datastream’s BigQuery Append only simplifies data tracking

Datastream Documentation

Datastream’s append-only write mode for BigQuery makes it easier to track historical data in BigQuery. Organizations frequently struggle to maintain both an up-to-date “source of truth” and the ability to follow the full history of changes made to their data. Change data capture (CDC) is a popular way to handle this: it captures changes made in operational databases such as MySQL or PostgreSQL and replicates them to a cloud data warehouse such as BigQuery.

A new feature called BigQuery append-only mode has been added to Datastream, Google Cloud’s serverless CDC service. It makes it easier to replicate changes from your operational databases to BigQuery, and it gives you an efficient, economical way to track changes to operational data over time and keep historical records.

BigQuery append only

With conventional CDC-based replication, when a record is modified or removed in your source database, the matching record in the destination is overwritten, which makes it hard to trace the history of changes. Append-only mode solves this by writing each modification as a new row in your BigQuery destination table. Each row contains the type of modification (insert, update, or delete), a unique identifier, a timestamp, and other pertinent metadata, which you can use to sort and filter the data as needed.
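As a rough illustration of sorting and filtering on that metadata, here is a minimal Python sketch that replays change rows to rebuild the table as it stood at a given moment. The row shape and the field names (`id`, `change_type`, `source_timestamp`) are assumptions made for this example, not Datastream's actual schema, and the data is invented.

```python
from typing import Optional

# Hypothetical change rows, shaped like an append-only table: one row per change.
# Field names here are assumptions for this sketch, not the real column names.
changes = [
    {"id": 1, "name": "Ada", "change_type": "INSERT", "source_timestamp": 100},
    {"id": 2, "name": "Bob", "change_type": "INSERT", "source_timestamp": 110},
    {"id": 1, "name": "Ada L", "change_type": "UPDATE-INSERT", "source_timestamp": 120},
    {"id": 2, "name": "Bob", "change_type": "DELETE", "source_timestamp": 130},
]


def state_as_of(rows: list[dict], as_of: Optional[int] = None) -> dict[int, dict]:
    """Replay change rows in timestamp order and return the live rows keyed by id."""
    state: dict[int, dict] = {}
    for row in sorted(rows, key=lambda r: r["source_timestamp"]):
        if as_of is not None and row["source_timestamp"] > as_of:
            break  # ignore changes made after the requested point in time
        if row["change_type"] in ("DELETE", "UPDATE-DELETE"):
            state.pop(row["id"], None)  # the row no longer exists under this key
        else:
            state[row["id"]] = {"id": row["id"], "name": row["name"]}
    return state


current = state_as_of(changes)            # latest state of the table
earlier = state_as_of(changes, as_of=115)  # state before the update and the delete
```

Because no change is ever overwritten, the same list of rows answers both "what does the table look like now" and "what did it look like earlier".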

BigQuery append only Applications

Append only database

BigQuery append only mode comes in very handy in situations when you have to keep a history of changes. Common usage scenarios include the following:

Auditing and compliance: Keep track of any data modifications for internal audits or regulatory compliance.

Trend analysis: Examine past data to find trends, anomalies, and patterns over time.

Customer 360: Monitor changes in customer data to keep an all-encompassing picture of client interactions and preferences.

Examining embedding drift: You can examine how embeddings have changed over time and evaluate the effects on the functionality of your model by using a historical record of embeddings.

Time travel: Conduct historical analysis and comparisons by querying your data warehouse as it existed at a certain moment in time.

As an illustration

Say you keep customer information in a MySQL table that serves as your main source of truth. Your analytics team needs to track changes to customer records in order to analyze behavior and preferences. With append-only mode enabled, every insert, update, and delete made to this table is recorded as a new row in the corresponding BigQuery table. This makes it easier for the analytics team to get the data it needs.

The append-only mode’s advantages

Cost efficiency: Minimizes processing costs by simply adding new rows rather than combining changes with existing data through complicated merge operations.

Increased data accuracy: Reduces the possibility of data loss by guaranteeing a thorough and accurate history of modifications.

Real-time insights: Allows for the examination of changes in real-time as they happen, which speeds up decision-making.

Using append-only mode: A guide

You can enable BigQuery append-only mode when you create a stream, through the user interface or the API. To let you track changes, Datastream automatically creates BigQuery tables with the necessary metadata fields.

BigQuery destination configuration

Configure destination datasets

When you configure datasets for BigQuery, you can choose one of the following options:

Dataset for each schema: The dataset is selected or created in BigQuery based on the schema name of the source. As a result, Datastream automatically creates a BigQuery dataset for each schema in the source.

If you select this option, Datastream creates the datasets in the project that contains the stream.

For example, for a MySQL source with a mydb database and an employees table, Datastream creates the mydb dataset and the employees table in BigQuery.

Single dataset for all schemas: You select one BigQuery dataset for the stream, and Datastream writes all data to it. Within the selected dataset, Datastream names every table <schema>_<table>.

For example, if your MySQL source has a mydb database and an employees table, Datastream creates the mydb_employees table in the dataset you select.
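The two naming schemes can be sketched in a few lines of Python. The function name `destination_for` and the dataset name `analytics` are made up for this example; the sketch only mirrors the naming rules described above and does not call any Google Cloud API.

```python
from typing import Optional


def destination_for(schema: str, table: str, single_dataset: Optional[str] = None) -> tuple[str, str]:
    """Return the (dataset, table) pair a source table would map to."""
    if single_dataset is None:
        # Dataset for each schema: the dataset takes the source schema's name.
        return schema, table
    # Single dataset for all schemas: the table name is prefixed with the schema.
    return single_dataset, f"{schema}_{table}"


per_schema = destination_for("mydb", "employees")
shared = destination_for("mydb", "employees", single_dataset="analytics")
```

The schema prefix in the second case keeps tables with the same name in different source schemas from colliding inside the shared dataset.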

Write behaviour

Each table that Datastream writes to BigQuery has a STRUCT column named datastream_metadata.

If a table has a primary key at the source, the column contains these fields:

UUID: String data type.

SOURCE_TIMESTAMP: Integer data type.

If a table has no primary key, the column contains an additional field, IS_DELETED.

IS_DELETED: Boolean data type. It is true when the data that Datastream streams comes from a DELETE operation at the source.

Tables without primary keys are append-only.

The maximum event size when streaming data into BigQuery is 20 MB.

Change writing mode

You can choose how DataStream writes change data to BigQuery when configuring your stream:

Merge: The default write mode. When selected, BigQuery reflects the data as it is stored in the source database. Datastream sends all data changes to BigQuery, which consolidates them with existing data to create replicas of the source tables. Merge mode does not keep change history.

For example, if you insert and then update a row, BigQuery keeps only the updated data. If you then delete the row from the source table, BigQuery no longer keeps any record of it.

Append-only: In append-only write mode, BigQuery receives a stream of modifications (INSERT, UPDATE-INSERT, UPDATE-DELETE, and DELETE events). Use this mode to preserve data history.

BigQuery append only write mode

Consider the following scenarios to understand BigQuery append only write mode:

When a primary key changes, Datastream writes two rows to BigQuery:

An UPDATE-DELETE row with the original primary key.

An UPDATE-INSERT row with the new primary key.

When you update a row, Datastream writes a single UPDATE-INSERT row to BigQuery.

When you delete a row, Datastream writes a single DELETE row to BigQuery.
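These scenarios can be written down as a small Python function. This is a sketch of the rules as described above, not Datastream code: `append_only_rows` is a hypothetical helper, and each output row is reduced to a (change type, primary key) pair.

```python
from typing import Optional


def append_only_rows(op: str, old_key: Optional[int] = None, new_key: Optional[int] = None) -> list[tuple[str, Optional[int]]]:
    """Return the (change type, primary key) rows written for one source operation."""
    if op == "insert":
        return [("INSERT", new_key)]
    if op == "delete":
        return [("DELETE", old_key)]
    if op == "update":
        if old_key != new_key:
            # A primary key change is recorded as a delete of the old key
            # followed by an insert of the new key.
            return [("UPDATE-DELETE", old_key), ("UPDATE-INSERT", new_key)]
        return [("UPDATE-INSERT", new_key)]
    raise ValueError(f"unknown operation: {op}")


plain_update = append_only_rows("update", old_key=7, new_key=7)
key_change = append_only_rows("update", old_key=7, new_key=8)
removal = append_only_rows("delete", old_key=8)
```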

BigQuery Destination Table

Using BigQuery tables with max_staleness

Datastream uses BigQuery’s built-in upsert functionality to insert, update, and delete data in near real time. Upsert operations update the BigQuery destination as rows are added, updated, or deleted. Datastream uses the BigQuery Storage Write API to stream these upsert operations into the destination table.

Set data staleness limit

BigQuery applies source changes either in the background or at query run time, depending on the data staleness limit. When Datastream creates a BigQuery table, it sets the table’s max_staleness option to the stream’s data staleness limit.

Control BigQuery costs

BigQuery costs are charged separately from Datastream costs. To learn how to control BigQuery costs, see BigQuery CDC pricing.

In brief

Datastream’s BigQuery append-only mode is a useful addition to Google Cloud’s data integration and replication features. With a streamlined change data capture process and a complete history of modifications, businesses can get more insight from their data, improve data accuracy, and optimize their data pipelines. See the documentation for further information.

Read more on govindhtech.com

#datastream#Datastreamdocumentetion#bigquery#BigQueryappendonly#mysql#postgresql#googlecloud#cloudcomputing#news#technews#technologynews#technologytrends#govindhtech

0 notes

Text

Datastream defender mini comic!! :D

2K notes


Text

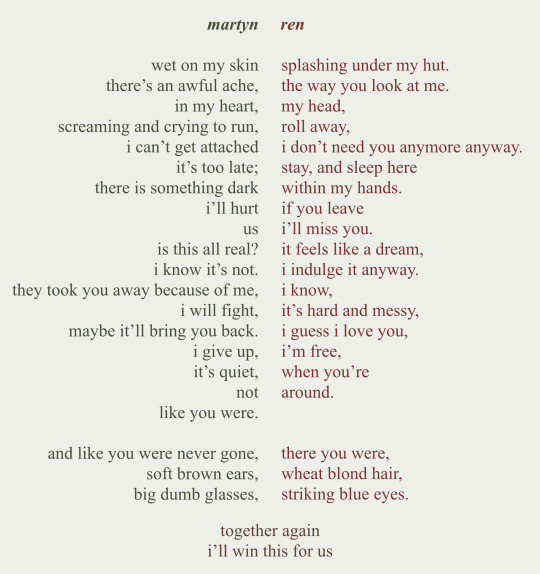

a contrapuntal poem of martyn and ren throughout the seasons (and the lack thereof)

#renthedog#inthelittlewood#martyn littlewood#bluerabb’s writing#<- that’s a new one#rendog#martyn inthelittlewood#treebark#trafficshipping#trafficblr#traffic smp#life series#third life#limited life#datastream defender#<- datastream reference is small but i reckoned i better add it in#secret life#wild life#original poem#poems on tumblr#original poetry#contrapuntal poem

629 notes


Note

[it was so awesome to have a communicator again. It was strange to see the case so clean though, very different from his original one which had been dirty and covered in stickers. He just hangs a charm off the new one for now, it’ll get more decorated over time.]

[he looks over it a bit, making sure it still has everything his old one had. All his messages had transferred over thankfully, meaning all his conversations were her- oh that’s martyn’s contact. Oh he was supposed to message the other once he was out of exile-]

RENDOG: martyn

RENDOG: marty

RENDOG: dude :D

RENDOG: new comm!!! and no more exile!!

RENDOG: you still fine with maybe hanging out soon now that im out??

@rendogs-mailbox

[His communicator buzzed- Ren? Okay, this was- yes! Martyn was both ecstatic and incredibly apprehensive. If Ren was back, that likely meant Doc was back too, which in turn meant communication, and likely a few more of his visions before they got it all worked out, basically, it was Martyn’s hell.]

[On the other hand, he got to see his not quite boyfriend, which would be nice. His communicator buzzed a few more times, and maybe he was hopeful thinking it’d just be Ren again.]

Doc(tor): you’re about to go back

Doc(tor): make an effort to stay in my sight this time

Doc(tor): and stop trusting people so freely

Doc(tor): doubt your ren is a C.H.E.S.T agent, he’s had plenty of chances to kill you, but doc? Watch your back

Doc(tor): everyone is either an npc or a C.H.E.S.T agent, don’t be fooled.

Doc(tor): I’ll be in touch

[Out of spite, Martyn didn’t even offer a reply to the man, he could shove his npcs and agents where the-]

[Reply to Ren, got it.]

InTheLittleWood: glad to have ya back bud

InTheLittleWood: I’ll be right there

[Without a moment more of hesitation, he tore back through the datastream, and into the hermitcraft server. Convenient. He began his search for Ren, slipping momentarily into code when any other hermit came into sight, not up for any conversations yet.]

83 notes


Text

The ratchanting divorce isn't gonna happen because, Ren is overly friendly/trusting & doesn't know what the word "no" means and Martyn doesn't ever communicate his feelings/thoughts about anything that bothers him(because gods forbid we have healthy communications in minecraft smps/j)

It's gonna happen because Martyn was never even supposed to come back to the rats world in the first place and even so he still has a mission given to him to complete and at the end of every mission he has to leave the world he completed it in. So even if it wasn't rats but another world where everything played out exactly the same, the ultimate outcome of the situation would have been the same, that's just how it's supposed to be. No matter how long he has stayed there, no matter how attached he gets to everyone he meets, he still has to leave it all behind if he wants to make it back to his own world/reality(<<<idk???)

So in short, it was always gonna happen but it is only now hitting us all cause it's happening right now and it's as sad & angsty as we could have predicted

18/02/25 edit/update: Post canceled...

23/02/25 edit: the previous cancellation is no longer viable

#the real reason why the divorce is happening is actually cause martyn pressed a stupid fucking button 2 years ago#this was supposed to be about the pirats break up but it kinda turned into a generic datastream defender lore post but it is what it is ig#it also doesn't help that they are 'arguing/not in good terms' rn thats what really makes it worse#yeah but anyways i can't wait to see how it all boils over and goes down its gonna be great#rats smp#rats smp 2#rats in paris#squeaksblr#squeakblr#ratchanting#renchanting#treebark#rendog#inthelittlewood#itlwlore

55 notes


Text

"The Search" - a webweave for Room 3 of @mcytblrescape !!

Wild Life sources: clock, "Ohne Titel (Geldig)" by Kurt Schwitters, "Aucassin Seeks for Nicolette" by Maxfield Parrish, "Tamarisk Trees in Early Sunlight" by Guy Rose, window, blue flower, green flower, pink flower, pink flowers, camera, "Starting Fires" by Bears in Trees, "Like Real People Do" by Hozier, "Puppet Loosely Strung" by the Correspondents

Pirates & Rats 2 sources: clock, "Merzzeichnung in Merzzeichnung" by Kurt Schwitters, stamps, books, trinket tin, crystal skull, "14 Verses" by Declan Bennett, "Farewell Wanderlust" by the Amazing Devil, "Gods & Monsters" by Lana del Rey

New Life sources: clock, "20 Ore mit Koranseiten" by Kurt Schwitters, "Snow-Covered Landscape" by Guillaume Vogels, tamagotchi, camera, hat, backpack, "I Could Never Be" from Steven Universe, "Tread on Me" by Matt Maeson

Ultimate Survival SMP sources: clock, "C 50 Last Birds and Flowers" by Kurt Schwitters, "Ceylonese Jungle" by Hermann von Konigsbrunn, bear, beetle, moth, crown, gloves, vined hand, "King" by Lauren Aquilina, "I Just Don't Care That Much" by Matt Maeson

Limited & Secret Life sources: clock, "Spitzbergen Merzzeichnung" by Kurt Schwitters, "She came to the blue sea-ocean" by Ivan Bilibin, bird, letter, fish, cassette, "Queen of Nothing" by the Crane Wives, "What's a Devil to Do" by Harley Poe, "14 Verses" by Declan Bennett, "Bullet" by Saint Motel

Rats sources: clock, "Zeichnung I 9 Hebel 2" by Kurt Schwitters, "Candles" by Gerhard Richter, "Sunflower Seeds" by Ai Weiwei, band-aid tin, bazooka gum, amethyst geode, amethyst crystal, socks, tag, knife, "Puppet Loosely Strung" by the Correspondents, "What's a Devil to Do" by Harley Poe

Double Life sources: clock, "Mz x 21 Street" by Kurt Schwitters, "Loup Scar, Wharfdale" by Richard Jack, coin, coffin, cat in moon, bottle cap, receipt, "Honeybee" by Steam Powered Giraffe, "the broken hearts club" by gnash

Last Life sources: clock, "Merz 30, 42" by Kurt Schwitters, "Trees and Church Tower" by Raymond McIntyre, mask, locket, clover, scarecrow and rabbit, "Whispering Grass" by the Ink Spots, "How to Rest" by the Crane Wives

3rd Life sources: clock, "Sans Titre" by Kurt Schwitters, "Forest and Dove" by Max Ernst, window, heart, pomegranate, stamp, fox, "14 Verses" by Declan Bennett, "Honeybee" by Steam Powered Giraffe

Evo sources: clock, "Ohne Titel" by Kurt Schwitters, "The man with the cart" by Ivan Grohar, pearls, stars, window, feather, dog, "Rule #9 - Child of the Stars" by Fish in a Birdcage, "Dancing After Death" by Matt Maeson

Finale-unique sources: tv, warning window, video player, error tabs, handheld game console, progress bar, axe, "The Circle Maker" by Sparkbird, "The Mask" by Matt Maeson

All skins from namemc; all stereo pngs from this post. As I'm sure you can tell, this is a hell of a source list, so I apologize if I linked anything incorrectly or managed to forget something!

#martyn inthelittlewood#inthelittlewood#webweave#no thoughts tags empty#life series#new life smp#trafficblr#evolution smp#rats smp#rats in paris#pirates smp#ultimate survival smp#datastream defender lore

42 notes


Text

what two years can do to a man 😔😔😔

blud does NOT know that he's gonna turn into a rat. twice 💀💀‼️‼️

#inthelittlewood#rats smp#silly little pre-datastream c!martyn#this is what happens when we press unknown buttons#dont press buttons guys

38 notes


Text

#datastream martyn. regular martyn.#martyn the character and Martyn the man. just in general#martyn#wyrming

29 notes


Text

Going insane going insane going insane

I am making a stupid Jimmy Solidarity Datastream Defender au where Empires smp takes the place of Rats smp and Jimmy just sucks at it he's so bad at this lying thing I swear

Basic sketches and concepts.. it is wip but it is mine

Xornoths Crystal is the L.O.O.T. shard and Jimmy has to steal it and destroy it to recover the shard..

No seablings in this au cause he's from another world and can't be related to Lizzie....

He's just a horrible liar so he's covering his face in every game world to try and make up for it- but since he's just lying ALL THE TIME no one can tell he's lying cause they've never seen him telling the truth, they just think hes always this defensive over nothing and stuff

Fhajtbskska I'm going insane over this concept save me Jimmy Solidarity save me. I know if no one got me Martyn Littlewood also does not got me, but Jimmy will save my soul I fear.

I don't even know what to call this au I'm thinking something like Datastream Offender cause idk maybe uhhhh.. COD au since.. I'm calling ][][ (Doc) [[]] (Cod) for the comedic value

My favorite past time is putting Jimmy into situations and Martyn is ALREADY IN a situation its perfect its perfect

#rats smp#empires smp#jimmy solidarity#jimmy solidarity fanart#martyn littlewood#martyn inthelittlewood#solidaritygaming#jimmy solidarity and Martyn littlewood swap au#datastream defender#datastream defender lore#martyn vtuber lore#jimmy datastream defender au#martyn itlw#itlw#i want to assimilate him into my evil hivemind#both of them actually#GRRRR martyn and jimmy and SITUATIONS

14 notes


Text

Google Cloud Pub Sub Provides Scalable Managed Messaging

Google Cloud Pub Sub

Many businesses employ a multi-cloud architecture to support their operations, for various reasons, such as avoiding provider lock-in, boosting redundancy, or using unique offerings from various cloud providers.

BigQuery, a fully managed, AI-ready multi-cloud data analytics platform, is one of Google Cloud’s most popular and distinctive offerings. With BigQuery Omni’s unified administration interface, you can use BigQuery to query data in AWS or Azure and view the results in the Google Cloud console. Pub/Sub now adds a feature that enables one-click streaming ingestion into Pub/Sub from external sources: import topics. This is useful if you want to integrate and move data between clouds in real time. Amazon Kinesis Data Streams is the first supported external source. Let’s look at how you can use import topics together with Pub/Sub’s BigQuery subscriptions to make your streaming AWS data available in BigQuery quickly and easily.

Google Pub Sub

Understanding import topics

Pub/Sub is a scalable, asynchronous messaging service that lets you decouple services that produce messages from services that consume them. With its client libraries, Pub/Sub can stream data from any source to any sink, and it is well integrated into the Google Cloud ecosystem. Through export subscriptions, Pub/Sub can write data automatically to BigQuery and Cloud Storage. Pub/Sub can also send messages to any publicly reachable destination, for example on Google Compute Engine, Google Kubernetes Engine (GKE), or even on-premises. It is natively integrated with Cloud Functions and Cloud Run as well.

Amazon Kinesis Data Streams

While export subscriptions write data to BigQuery and Cloud Storage, import topics offer a fully managed and efficient way to read data from Amazon Kinesis Data Streams and ingest it directly into Pub/Sub. This greatly reduces the complexity of setting up data pipelines between clouds. Import topics also provide out-of-the-box monitoring, giving visibility into the performance and health of the ingestion process. In addition, import topics scale automatically, which removes the need for manual configuration to handle changes in data volume.

Import topics make it simple to move streaming data from Amazon Kinesis Data Streams into Pub/Sub and enable multi-cloud analytics with BigQuery. Once an import topic has connected the two systems, the Amazon Kinesis producers can be migrated gradually to Pub/Sub publishers on whatever timeline suits you.

Please be aware that at this time, only Amazon Kinesis Enhanced Fan-Out consumers are supported.

Using BigQuery to analyse the data from your Amazon Kinesis Data Streams

Now, picture yourself running a company that uses Amazon Kinesis Data Streams to store a highly variable volume of streaming data, and that needs to analyse this data in BigQuery to support decision-making. First, create an import topic. You can do this in the Google Cloud console or with the official Pub/Sub client libraries. In the console, start by selecting “Create Topic” on the Pub/Sub page.

Important

Pub/Sub starts reading from the Amazon Kinesis data stream as soon as you click “Create” and publishes the messages to the import topic. If your Kinesis data stream already contains data, take precautions when creating the import topic so that you don’t lose it. Pub/Sub may drop messages published to a topic that has no subscriptions and does not have message retention enabled. Creating a default subscription at topic creation time is not enough: the two are still separate operations, and the topic exists without the subscription for a short while.

There are two ways to prevent data loss:

Option 1: Create a topic, then update it to be an import topic:

Create a topic that is not an import topic.

Create a subscription to the topic.

Update the topic’s configuration to enable ingestion from Amazon Kinesis Data Streams, which makes it an import topic.

Option 2: Enable message retention and seek your subscription:

Create an import topic with message retention enabled.

Create a subscription to the topic.

Seek the subscription to a timestamp that precedes the creation of the topic.

Keep in mind that export subscriptions begin writing data the moment they are created. As a result, seeking too far into the past may produce duplicates. For this reason, the first option is the recommended approach when using export subscriptions.
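To see why the order of operations matters, here is a toy in-memory model in Python. It is not the Pub/Sub client library: `ToyTopic` and its methods are invented for this sketch and only imitate the behaviour described above (messages are dropped when a topic has neither a subscription nor message retention).

```python
class ToyTopic:
    """In-memory stand-in for a topic; an illustration only, not the real service."""

    def __init__(self, retain: bool = False) -> None:
        self.retain = retain
        self.retained: list[tuple[int, str]] = []      # (publish time, data)
        self.subscriptions: dict[str, list[str]] = {}  # name -> backlog

    def subscribe(self, name: str) -> None:
        self.subscriptions[name] = []

    def publish(self, time: int, data: str) -> None:
        if self.retain:
            self.retained.append((time, data))
        for backlog in self.subscriptions.values():
            backlog.append(data)
        # With no subscription and no retention, the message is simply gone.

    def seek(self, name: str, time: int) -> None:
        """Reset a backlog to the retained messages published at or after `time`."""
        self.subscriptions[name] = [d for t, d in self.retained if t >= time]


# Unsafe order: ingestion starts before any subscription exists.
unsafe = ToyTopic()
unsafe.publish(1, "a")
unsafe.subscribe("sub")
unsafe.publish(2, "b")

# Option 1: the subscription exists before ingestion starts.
first = ToyTopic()
first.subscribe("sub")
first.publish(1, "a")
first.publish(2, "b")

# Option 2: retention is on, and the subscription seeks back past topic creation.
second = ToyTopic(retain=True)
second.publish(1, "a")
second.subscribe("sub")
second.publish(2, "b")
second.seek("sub", 0)
```

In the second option the seek replays everything that was retained, including "b", which the subscription had already received. That replay is the source of the duplicates mentioned above for export subscriptions.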

To send the data to BigQuery, create a BigQuery subscription: go to the subscriptions page of the Pub/Sub console and select “Create Subscription”.

By continuously observing the Amazon Kinesis data stream, Pub/Sub autoscales. In order to keep an updated view of the stream’s shards, it periodically sends queries to the Amazon Kinesis ListShards API. Pub/Sub automatically adjusts its ingestion configuration to guarantee that all data is gathered and published to your Pub/Sub topic if changes occur inside the Amazon Kinesis data stream (resharding).

To ensure continuous ingestion from the shards of the Amazon Kinesis data stream, Pub/Sub uses the Amazon Kinesis SubscribeToShard API to open a persistent connection to every shard that either has no parent shard or whose parent shard has already been fully ingested. Pub/Sub does not consume a child shard until its parent has been fully consumed. Nevertheless, because messages are published without an ordering key, there are no strict ordering guarantees. Each Amazon Kinesis record is converted into a Pub/Sub message by copying the record’s data blob into the data field of the message before it is published. Pub/Sub tries to maximise the read rate for each Amazon Kinesis shard.
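The parent-before-child rule and the record conversion can be sketched in Python as follows. The helper names `readable_shards` and `to_pubsub_message`, the shard IDs, and the dictionary shapes are assumptions for this example; the sketch models only the rules described above and makes no AWS or Google Cloud calls.

```python
from typing import Optional


def readable_shards(parents: dict[str, Optional[str]], consumed: set[str]) -> list[str]:
    """Return shards that may be read now: not yet consumed, and with no
    parent or with a parent that has already been fully consumed."""
    return sorted(
        shard
        for shard, parent in parents.items()
        if shard not in consumed and (parent is None or parent in consumed)
    )


def to_pubsub_message(record: dict) -> dict:
    """Copy a record's data blob into the data field of a new message."""
    return {"data": record["Data"]}


# shard-0 was split into shard-1 and shard-2 by a resharding operation.
parents = {"shard-0": None, "shard-1": "shard-0", "shard-2": "shard-0"}

before = readable_shards(parents, consumed=set())
after = readable_shards(parents, consumed={"shard-0"})
message = to_pubsub_message({"Data": b"hello", "PartitionKey": "user-1"})
```

Note that the message carries no ordering key, so two messages from the same shard can still be delivered out of order even though the shards themselves are read parent first.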

You can now query the BigQuery table directly to confirm that the data transfer was successful. Once the data from Amazon Kinesis has been entered into the table, a brief SQL query verifies that it is prepared for additional analysis and integration into larger analytics workflows.

Keeping an eye on your cross-cloud import

To keep operations running smoothly, you need to monitor your data ingestion process. Google Cloud has added three new metrics to Pub/Sub that let you assess the health and performance of the import topic. They show the state of the topic, the message count, and the byte count. If the state is anything other than “ACTIVE”, ingestion is being blocked, for example by a misconfiguration, a missing stream, or a missing consumer. For a complete list of possible error states and their troubleshooting steps, consult the official documentation. These metrics are easily accessible from the topic detail page, where you can also see the throughput, messages per second, and whether your topic is in the “ACTIVE” state.

In summary

For many businesses, functioning in various cloud environments has become routine procedure. You should be able to utilise the greatest features that each cloud has to offer, even if you’re using separate clouds for different aspects of your organisation. This may require transferring data back and forth between them. It’s now simple to transport data from AWS into Google Cloud using Pub/Sub. Visit Pub/Sub in the Google Cloud dashboard to get started, or register for a free trial to get going right now.

Read more on govindhtech.com

#BigQuery#sqlquery#amazonkinesis#Amazon#API#AI#GoogleCloud#cloudstorage#DataStream#CloudRun#AWS#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

HAPPY BIRTHDAY BOSS!!!

#itlwart#itlw#martyn inthelittlewood#inthelittlewood#my art#trafficblr#life series#traffic smp#pirates smp#rats smp#datastream defender#inthelittlewood fanart#dogwarts#misadventures#misadventures smp#mismp

321 notes


Note

may I ask for either Pi-Rats or majorwood?

now on ao3

"What's funny?" The captain snickers. "Your little face! It's adorable!" "Alright, fair," Mratyn admits. "I just missed you, Lieutenant. Let me give you a smooch." He scampers over, past the conversation Ratman and Ratchela and Ros are having, and plants one right on Mratyn's lips. It sets him all aflutter, admittedly - he honest-to-goodness swoons, so overcome is he by affection. "Oh, lovestruck! I'm knocked off my feet." It's wild. He's never felt like this about the other characters he's played alongside; this genuine crushing investment, like he's got way more than three months' connection with Ren - Jaque - the captain - whatever.

VOTE TREEBARK

#ilexworks#poll rigging#treebark#ratsshipping#mcytshipping#one day i'll understand the unified watcher datastream timeline

16 notes


Text

Honestly the biggest question I have for rats 2 is, if Martyn is going to be in it... like in the main cast again or just in the server in general

Cause spoilers for those who don't know, he LEFT the world of the rats. Like literally. He, in an easy way to explain it, "world hopped" out of there

Guess we'll wait and see till we get some news about it...

#rats smp#rats smp 2#squeaksblr#squeakblr#inthelittlewood#martyn inthelittlewood#martyn itlw#also this just came to me and I am writing it here in the tags as a reminder to anyone else who also wasn't watched it#but watch his pirates smp pov cause I really dropped the ball on watching pirates smp as a whole#so I probably need to watch it to understand the future datastream lore... but you might need to watch it to brush up on the lore

54 notes
