#doomers

Text

People need to realize how the internet changes the context of things, because everyone acts like an authority or like they're an example of common public opinion when they're online. I recently met someone who had a lot of Reddit environmentalist takes irl. And while those are things that would seem like serious climate takes online, when they say them irl in an actual conversation my internal reaction tends to be "do you need help? I don't think thinking about this is good for your mental health". And now I'm thinking of analyzing things I read online (or even say online) as if they were meatspace statements.

Someone calling a kid's interests cringe. Imagine them doing that irl. Imagine how disgustingly unseemly an adult making fun of a teenager's interests to their face would be. Or if they're calling you out for not being good enough at something that's a hobby, imagine them saying that to you while you're showing off your hobby, imagine how terrible they look.

Someone has a hot take about how it's wrong to be attracted to adult women with short heights or flat chests, imagine someone telling that to a guy with a petite girlfriend. Someone tells you that enjoying a story with incest means you support incest, imagine someone telling that to someone reading an ASOIAF book on the train. Someone says you can't enjoy something with a problematic creator, imagine them saying that to someone reading Lovecraft on the train.

Someone has a hot take in activist space that seems really violent or somewhat fascisty. Imagine them saying that irl, even with fellow activists. Imagine someone trying to defend Stalin in an actual human conversation, or trying to defend population control for environmentalist reasons.

I know I'm privileged to live in a large city and be pretty socially active, and even I can easily fall into overly online ways of thinking. But remember, even if you can't touch grass, you can imagine how things would be on the grass.

#my thougts#196#hot take#touch grass#leftism#proship#humanity#discourse#gen z#gen z life#gen z culture#cringe culture is dead#cringe culture#tankies#hopepunk#hopeposting#hope#activism#enviormentalism#doomers#doomer

625 notes


Text

The real AI fight

Tonight (November 27), I'm appearing at the Toronto Metro Reference Library with Facebook whistleblower Frances Haugen.

On November 29, I'm at NYC's Strand Books with my novel The Lost Cause, a solarpunk tale of hope and danger that Rebecca Solnit called "completely delightful."

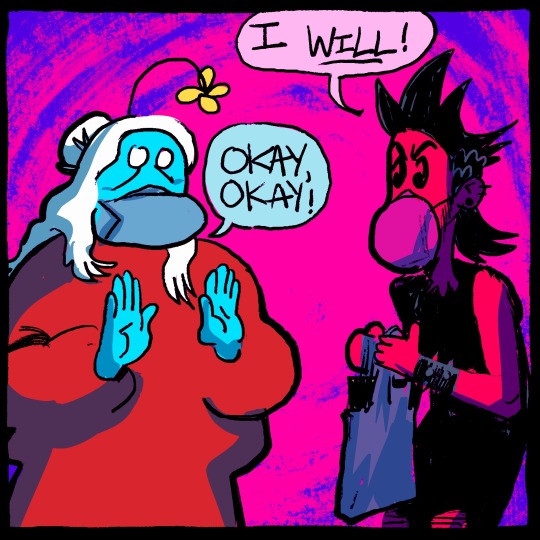

Last week's spectacular OpenAI soap-opera hijacked the attention of millions of normal, productive people and nonconsensually crammed them full of the fine details of the debate between "Effective Altruism" (doomers) and "Effective Accelerationism" (AKA e/acc), a genuinely absurd debate that was allegedly at the center of the drama.

Very broadly speaking: the Effective Altruists are doomers, who believe that Large Language Models (AKA "spicy autocomplete") will someday become so advanced that they could wake up and annihilate or enslave the human race. To prevent this, we need to employ "AI Safety" – measures that will turn superintelligence into a servant or a partner, not an adversary.

Contrast this with the Effective Accelerationists, who also believe that LLMs will someday become superintelligences with the potential to annihilate or enslave humanity – but they nevertheless advocate for faster AI development, with fewer "safety" measures, in order to produce an "upward spiral" in the "techno-capital machine."

Once-and-future OpenAI CEO Altman is said to be an accelerationist who was forced out of the company by the Altruists, who were subsequently bested, ousted, and replaced by Larry fucking Summers. This, we're told, is the ideological battle over AI: should we cautiously progress our LLMs into superintelligences with safety in mind, or go full speed ahead and trust to market forces to tame and harness the superintelligences to come?

This "AI debate" is pretty stupid, proceeding as it does from the foregone conclusion that adding compute power and data to the next-word-predictor program will eventually create a conscious being, which will then inevitably become a superbeing. This is a proposition akin to the idea that if we keep breeding faster and faster horses, we'll get a locomotive:

https://locusmag.com/2020/07/cory-doctorow-full-employment/

As Molly White writes, this isn't much of a debate. The "two sides" of this debate are as similar as Tweedledee and Tweedledum. Yes, they're arrayed against each other in battle, so furious with each other that they're tearing their hair out. But for people who don't take any of this mystical nonsense about spontaneous consciousness arising from applied statistics seriously, these two sides are nearly indistinguishable, sharing as they do this extremely weird belief. The fact that they've split into warring factions on its particulars is less important than their unified belief in the certain coming of the paperclip-maximizing apocalypse:

https://newsletter.mollywhite.net/p/effective-obfuscation

White points out that there's another, much more distinct side in this AI debate – as different and distant from Dee and Dum as a Beamish Boy and a Jabberwock. This is the side of AI Ethics – the side that worries about "today’s issues of ghost labor, algorithmic bias, and erosion of the rights of artists and others." As White says, shifting the debate to existential risk from a future, hypothetical superintelligence "is incredibly convenient for the powerful individuals and companies who stand to profit from AI."

After all, both sides plan to make money selling AI tools to corporations, whose track record in deploying algorithmic "decision support" systems and other AI-based automation is pretty poor – like the claims-evaluation engine that Cigna uses to deny insurance claims:

https://www.propublica.org/article/cigna-pxdx-medical-health-insurance-rejection-claims

On a graph that plots the various positions on AI, the two groups of weirdos who disagree about how to create the inevitable superintelligence are effectively standing on the same spot, and the people who worry about the actual way that AI harms actual people right now are about a million miles away from that spot.

There's that old programmer joke, "There are 10 kinds of people, those who understand binary and those who don't." But of course, that joke could just as well be, "There are 10 kinds of people, those who understand ternary, those who understand binary, and those who don't understand either":

https://pluralistic.net/2021/12/11/the-ten-types-of-people/

What's more, the joke could be, "there are 10 kinds of people, those who understand hexadecenary, those who understand pentadecenary, those who understand tetradecenary [und so weiter] those who understand ternary, those who understand binary, and those who don't." That is to say, a "polarized" debate often has people who hold positions so far from the ones everyone is talking about that those belligerents' concerns are basically indistinguishable from one another.

The act of identifying these distant positions is a radical opening up of possibilities. Take the indigenous philosopher chief Red Jacket's response to the Christian missionaries who sought permission to proselytize to Red Jacket's people:

https://historymatters.gmu.edu/d/5790/

Red Jacket's whole rebuttal is a superb dunk, but it gets especially interesting where he points to the sectarian differences among Christians as evidence against the missionary's claim to having a single true faith, and in favor of the idea that his own people's traditional faith could be co-equal among Christian doctrines.

The split that White identifies isn't a split about whether AI tools can be useful. Plenty of us AI skeptics are happy to stipulate that there are good uses for AI. For example, I'm 100% in favor of the Human Rights Data Analysis Group using an LLM to classify and extract information from the Innocence Project New Orleans' wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

Automating "extracting officer information from documents – specifically, the officer's name and the role the officer played in the wrongful conviction" was a key step to freeing innocent people from prison, and an LLM allowed HRDAG – a tiny, cash-strapped, excellent nonprofit – to make a giant leap forward in a vital project. I'm a donor to HRDAG and you should donate to them too:

https://hrdag.networkforgood.com/

Good data-analysis is key to addressing many of our thorniest, most pressing problems. As Ben Goldacre recounts in his inaugural Oxford lecture, it is both possible and desirable to build ethical, privacy-preserving systems for analyzing the most sensitive personal data (NHS patient records) that yield scores of solid, ground-breaking medical and scientific insights:

https://www.youtube.com/watch?v=_-eaV8SWdjQ

The difference between this kind of work – HRDAG's exoneration work and Goldacre's medical research – and the approach that OpenAI and its competitors take boils down to how they treat humans. The former treats all humans as worthy of respect and consideration. The latter treats humans as instruments – for profit in the short term, and for creating a hypothetical superintelligence in the (very) long term.

As Terry Pratchett's Granny Weatherwax reminds us, this is the root of all sin: "sin is when you treat people like things":

https://brer-powerofbabel.blogspot.com/2009/02/granny-weatherwax-on-sin-favorite.html

So much of the criticism of AI misses this distinction – instead, this criticism starts by accepting the self-serving marketing claim of the "AI safety" crowd – that their software is on the verge of becoming self-aware, and is thus valuable, a good investment, and a good product to purchase. This is Lee Vinsel's "Criti-Hype": "taking press releases from startups and covering them with hellscapes":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Criti-hype and AI were made for each other. Emily M Bender is a tireless cataloger of criti-hypeists, like the newspaper reporters who breathlessly repeat "completely unsubstantiated claims (marketing)…sourced to Altman":

https://dair-community.social/@emilymbender/111464030855880383

Bender, like White, is at pains to point out that the real debate isn't doomers vs accelerationists. That's just "billionaires throwing money at the hope of bringing about the speculative fiction stories they grew up reading – and philosophers and others feeling important by dressing these same silly ideas up in fancy words":

https://dair-community.social/@emilymbender/111464024432217299

All of this is just a distraction from real and important scientific questions about how (and whether) to make automation tools that steer clear of Granny Weatherwax's sin of "treating people like things." Bender – a computational linguist – isn't a reactionary who hates automation for its own sake. On Mystery AI Hype Theater 3000 – the excellent podcast she co-hosts with Alex Hanna – there is a machine-generated transcript:

https://www.buzzsprout.com/2126417

There is a serious, meaty debate to be had about the costs and possibilities of different forms of automation. But the superintelligence true-believers and their criti-hyping critics keep dragging us away from these important questions and into fanciful and pointless discussions of whether and how to appease the godlike computers we will create when we disassemble the solar system and turn it into computronium.

The question of machine intelligence isn't intrinsically unserious. As a materialist, I believe that whatever makes me "me" is the result of the physics and chemistry of processes inside and around my body. My disbelief in the existence of a soul means that I'm prepared to think that it might be possible for something made by humans to replicate something like whatever process makes me "me."

Ironically, the AI doomers and accelerationists claim that they, too, are materialists – and that's why they're so consumed with the idea of machine superintelligence. But it's precisely because I'm a materialist that I understand these hypotheticals about self-aware software are less important and less urgent than the material lives of people today.

It's because I'm a materialist that my primary concerns about AI are things like the climate impact of AI data-centers and the human impact of biased, opaque, incompetent and unfit algorithmic systems – not science fiction-inspired, self-induced panics over the human race being enslaved by our robot overlords.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Image:

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#criti-hype#ai doomers#doomers#eacc#effective acceleration#effective altruism#materialism#ai#10 types of people#data science#llms#large language models#patrick ball#ben goldacre#trusted research environments#science#hrdag#human rights data analysis group#red jacket#religion#emily bender#emily m bender#molly white

287 notes


Text

I’m so done with doomers I swear. ‘Oh it’s too late, we can’t do anything’. Not true!! Yes, the climate has already changed and continues to change, and those problems are largely locked in. But there’s always something we can do to mitigate, to prevent, to adapt, to prepare. Giving up is doing the fossil CEOs’ work for them! Also, POC please correct me if I’m wrong but doomism always comes across to me as…kinda racist?? I’ve never met a doomer who wasn’t a white person from the Global North, misanthropically proselytising about how nothing can save us and how we probably don’t even deserve saving. And I’m just like ok?? So you’re just going to abandon low-lying islanders to their fate? You’re going to act like indigenous societies are as responsible for this crisis as you are? Congrats, you suck.

#solarpunk#hopepunk#environmentalism#climate justice#social justice#optimism#doomers#global north#I just feel like it’s an excuse not to have to take any action y’know#these are usually the kind of people who go all ecofash about population control as well#racism

119 notes


Text

Alright, the demo just came out and is packed with lots of content, but the most important thing right now is the trailer after defeating Whispy

There's 4 moments I wanna focus on

1-

First of all, he looks adorable with that determined face. But what I wanna focus on is the background (and the fact this is a cutscene). This is probably Magolor getting upgraded, since we see him shine a bit, so this must be the equivalent of the Lor in the epilogue. But what is that place? It kinda looks like a church? Which is weird, since that place is theorized to be the underworld “aka Hades or hell or the afterlife”, so we might be getting more lore than expected, since in the novels they never mention the underworld having any kind of handmade structure

2-

Those who have seen the leaks know of Fatty Devil, as I like to call him, since he appears on the back of the box. He’s the water boss, yet he’s covered in fire and volcanic rock and has horns like a demon, which may indicate that all the bosses have this hellish look. But what surprises me the most is his horn design and those symbols on his belly. The horns, instead of looking like Morpho Knight’s or Landia’s or even Necrodeus’s… they look like Void Termina’s horns, which matches his belly having symbols similar to the ones Void Termina had on his belly

3-

These four Doomers not only have their own cinematic but also resemble Landia AND have gears on them. Maybe Kirby and Co forgot 4 spheres and these Doomers completely merged with them, making them evolve into something above a Grand Doomer. However, there’s also the chance these are Doomers who fed off possibly another ship like the Lor Starcutter? Maybe this place is also an interdimensional shipwreck for interdimensional ships, or maybe it’s a kind of place in the underworld destined for the ancients and whoever uses the ancients’ artifacts, which could be related to the church-like place from before. As if the civilization of the ancients literally went to hell

4-

This might be seen as nothing more than a “cool OP attack”, but it makes me question… what kind of eldritch horror is the replacement for Magolor Soul? Because in Forgotten Land the Morpho Knight Sword (designed to defeat the phantom bosses) didn’t give you a guaranteed win against Chaos Elfilis. And if the new secret final boss is like Chaos Elfilis in that aspect, then what kind of monster could be waiting for us?…

Maybe unfair Magolor

Conclusion:HOLY SHOT THIS WILL BE WORTH LOSING ALL MY MONEY

#kirby#necrodeus#morpho knight kirby#void termina#galacta knight#kirby magolor#concept magolor#magolor epilogue#kirby return to dreamland deluxe#kirby return to dreamland#krtdldx#krtdl#krtdl spoilers#krtdldx spoilers#fatty puffer#underworld#Hades#Hell#landia#Doomers

49 notes


Text

Doomers and accelerationists fuck off

#anti doomerism#anti accelerationism#doomers#accelerationists#there’s no one post making me write this I’m just expressing it because I want to

3 notes


Text

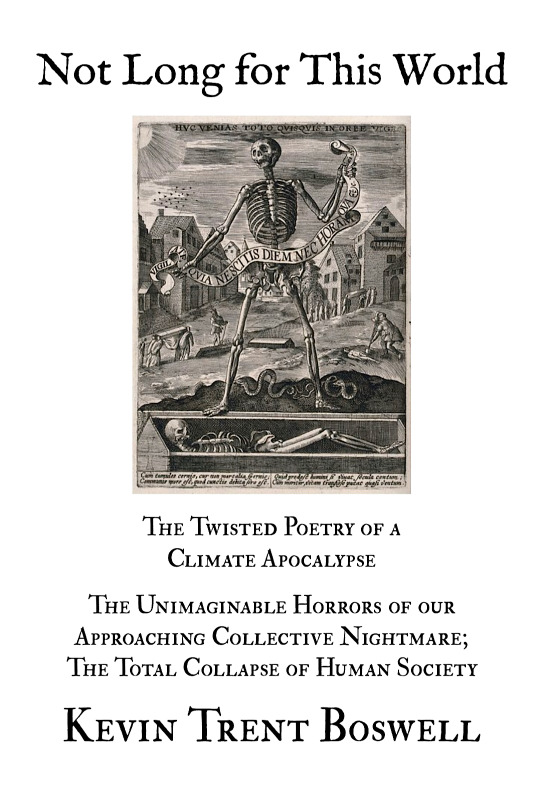

-an excerpt from my upcoming book, Not Long for This World - The Twisted Poetry of a Climate Apocalypse - The Unimaginable Horrors of Our Approaching Collective Nightmare; The Total Collapse of Human Society

by Kevin Trent Boswell

soon to be released on Amazon

#climate catastrophe#climate crisis#extinction#NTHE#climate change#dark poetry#poetry#doomer#doomers#climate collapse#collapse#neartermhumanextinction#global warming#existential crisis#existential threat#nihilsm#poetsandwriters#poets

3 notes


Text

This might be a hot take, but I have no patience for doomers. I’m talking folks who platform “all is lost, we can just lie down and rot” rhetoric.

“I can’t fix a systematic issue so I’m just gonna dunk on people trying.” Bitch, no one expects you to fix the systematic issue yourself. But you can help, like, at least one person suffering from the systematic issue if you listen to them! Helping one person isn’t nothing: that’s a tangible impact on the world that makes it a better place.

#frankly I think it’s an ego thing where like people view small acts as beneath them#like they want to lead the revolution they don’t want to be the guy proving mutual aid#like congrats you’ve missed the point of helping people#I’ve talked to a friend about this and she has mentioned this is usually seen among white leftists and she’s right#there’s a type of white leftist who bitches on Twitter about community but won’t do the dishes in their apartment#because at the end of the day they want to do activism that suits their ego#when doing small stuff is important especially in movements where you SHOULDNT be the focus#anyway people who use hopium unironically are my beloathed#and to be clear this is about communicating doomerism not being like depressed that shit sucks and I’m sorry if you feel that way#one is an ideology the other is a feeling#politics#doomers

11 notes


Text

Please read this!

#omg I’ve been waiting for this article for 6 years#mine#doomerism#doomers#obama has been fighting self-identified ‘progressives’ on this for ages but not enough ppl listen!#anti doomer

11 notes


Text

Luddites

Holding back the AI of today will stop the space exploration of tomorrow. But it sure will make some billionaires even bigger billionaires. Which would you prefer? Being chained to the past will just make you slaves in the future.

#ai#machine learning#space#space exploration#luddites#luddite#fear mongers#doom bringers#naysayers#explore#go forth#the establishment#boomers#billionaires#the ruling class#artificial intelligence#don't let them stop us#everyone has a right to this#everyone created this#doomers#tech#technology#technologists#future#future tech#bypass the gatekeepers

5 notes


Text

Climate misinformation on YouTube is changing from saying that climate change isn't happening—now the new form of climate misinformation is saying it's impossible to fix it.

"arguments suggesting climate solutions won't work...have grown 21.4 percentage points"

9K notes


Text

Idk what doomer needs to hear this but the very fact that you recognize injustice is doing something about injustice. Your stance on political issues matters actually, especially to the people around you affected by them. Of course it's great if you do more for a cause you care about, but public opinion is the most powerful force in a world made up of social constructs.

For almost all of human history a major goal of every movement was to get as many people as possible on board with the movement. Propaganda and censorship have been major issues throughout human history because what we believe matters. The fact that you hold, express, and act on your worldview means something.

Praxis isn't just direct action. Direct action is important, but if what you can do right now is limited by your circumstances, being a member of the movements you're part of still matters. You shouldn't feel ashamed because some people have the privilege (and it is a privilege) to do more.

If your beliefs weren't important, there wouldn't be people trying to sway them. And if nobody believes a king has a right to rule, or that a serf is bound to his lord, then those things would simply not be.

#politics#socalism#praxis#communism#anti capitalism#activism#doomer#doomers#hope#radical optimism#hopepunk#hopeposting

358 notes


Text

Excerpts from What Doomer Are You? A New Political Map

"Liberals, neoliberals, conservatives, socialists, progressives, libertarians, these are all borders on a map. Meanwhile, the very Earth beneath is irreversibly shifting. Pollard calls all of these old political camps Deniers. All of these ways of organizing a civilization are based on some sense of planetary stability, which is gone."

"A more difficult place to be is in the Humanist camp, where I think a lot of you are. This is the idea of a collective messiah, that if we all just think this and do that [insert Medium article here] we’ll be saved. That human reason will lead us out."

"The Transition/Resilience Movement thinks that getting ready together might save us. They’re like Humanitarians that are more prepared to accept heavy losses."

"Then we get Communitarians, who are like Humanists with more realistic expectations. Rather than expecting everyone to change their minds and change everything, they hope to gather a few like-minded people and change a few square kilometers. Existentialists are, I assume, the more lonely types, who would prefer the people to be fewer, and those few kilometers to be on a hill."

"We’re destroying the Earth of ages out of sheer inertia and laziness."

"To a degree, no map makes sense. We’re all just clinging onto a rock for dear life, still hurtling outwards from the Big Bang. Drawing lines across this molten spinning ball makes little sense and drawing lines across our shared mind space makes even less. The only thing sure is that we’re going to die, and how is perhaps not the most important question."

"Humans are both the center of the observable universe (by definition) and also completely insignificant. We both know lots of things and are completely lost."

"Where are we? Where are we going? I don’t fucking know. I have a strong sense of falling, but I have no idea where we’ll end up."

1 note


Text

Doofy Babby Turt

Doofy Babby Turt - One day after complaining about it, I finish the Dragon's End meta in Guild Wars 2 and start raising a Siege Turtle.

Good Morning Friends! I swear sometimes all it takes for me is to complain about something and then the universe decides to immediately make me wrong. Yesterday I complained about how difficult it was to get a Dragon’s End group so that I could get my turtle egg. Then yesterday over lunch while eating my shin ramen, I zoned into Dragon’s End with the thought of grinding out some Writs, and…


0 notes

Text

What completely irks me is the fact that people are shocked when someone who they thought was a good person does something terrible, especially celebrities. Like no way, human beings are capable of being evil and cruel? Who would have known?

Seriously, forever thankful to our Lord that He has given us the wisdom to understand many things that a non-Christian cannot understand, or else I would have been stuck in that doomer crap.

1 note
