#embedded content

Explore tagged Tumblr posts

Text

Hi everyone, I’m Orm Kornnaphat. I’m an actress in the short film ‘Ti Sam’, which is a project from my university. We plan to submit it to a competition, so I’d like to thank everyone for your support and your views. Your encouragement means so much to us. Thank you so much.

Translation by samsjello IG pairueay.productions

ตีสาม (Ti Sam) | SHORT FILM

#omg her mom is in this too#orm kornnaphat#ti sam short film#ti sam#pairueay production#be mindful of the content warnings for the short film#short film has embedded eng subs#*mine: gifs

32 notes

Text

formula one. the billionaire boys club.

#formula 1#somehow i am still disappointed by the state of this sport#i miss seb so much rn#f1#christian horner#lando norris#lance stroll#the fact there is legitimate evidence and redbull instead suspended the woman who reported horner is so disgusting#and the fact that drivers are content to say that it's just noise#that they feel bad for horner#that he’s such a lovely guy who doesn’t deserve this is so fucking disgusting#daniel ricciardo#alex albon#and somehow alex was the most disappointing because i didn’t expect it#it’s a sport that is so heavily embedded in patriarchal values that i could pick ten drivers to say that and i'd never guess alex :(#they’ve set a precedent for men in motorsports that is a reversal of their ‘we race as one’ message and it's a horrific precedent to set#because it shows that team principals and the likes are given free passes for evidenced harassment in the workplace#whilst susie wolff and hannah etc face continual backlash for their presence#you didn’t just let horner keep his job, you let everyone in motorsports know how easy it is to get away with harassment#nico hulkenberg

74 notes

Note

can i ask where your "it is of course daniel" tag comes from? i'm reading it in max's voice but i have no idea where it's from

You should absolutely read it in Max’s voice because it’s from this (though I did misremember it slightly but whatever):

#embedding the video so you don’t have to give redbull a view :)#or see any of their dumbass content#it is of course daniel#his face when he said it is forever burned into my brain#ask

24 notes

Text

SORRY I'VE BEEN MIA i did NOT just finish compiling barry's whole filmography masterlist. i definitely did not find a source for EVERY SINGLE FILM aside from his earliest three. i absolutely do not need advice on where to safely post the masterlist so it doesn't get sus'd and so anyone who wants to can easily access it.

until i figure that out, i sure hope no one messages me and lets me know if they're looking for a specific film/tv show/short of his! that wouldn't be good at all! :^)

#would it be safe to just straight up post it to tumblr? with like embedded links? lmk#i might make a public post with the ones that are not-suspiciously available so those are easy to share around at least#also onto jacob's next except his list is much shorter and much more difficult to find old content from </3#barry keoghan#saltburn#oliver quick

20 notes

Text

AI Doesn’t Necessarily Give Better Answers If You’re Polite

New Post has been published on https://thedigitalinsider.com/ai-doesnt-necessarily-give-better-answers-if-youre-polite/

Public opinion on whether it pays to be polite to AI shifts almost as often as the latest verdict on coffee or red wine – celebrated one month, challenged the next. Even so, a growing number of users now add ‘please’ or ‘thank you’ to their prompts, not just out of habit, or concern that brusque exchanges might carry over into real life, but from a belief that courtesy leads to better and more productive results from AI.

This assumption has circulated among both users and researchers, with prompt phrasing studied in research circles as a tool for alignment, safety, and tone control, even as user habits reinforce and reshape those expectations.

For instance, a 2024 study from Japan found that prompt politeness can change how large language models behave, testing GPT-3.5, GPT-4, PaLM-2, and Claude-2 on English, Chinese, and Japanese tasks, and rewriting each prompt at three politeness levels. The authors of that work observed that ‘blunt’ or ‘rude’ wording led to lower factual accuracy and shorter answers, while moderately polite requests produced clearer explanations and fewer refusals.

Microsoft also recommends using a polite tone with Copilot, for performance rather than cultural reasons.

However, a new research paper from George Washington University challenges this increasingly popular idea, presenting a mathematical framework that predicts when a large language model’s output will ‘collapse’, transitioning from coherent to misleading or even dangerous content. Within that context, the authors contend that being polite does not meaningfully delay or prevent this ‘collapse’.

Tipping Off

The researchers argue that polite language usage is generally unrelated to the main topic of a prompt, and therefore does not meaningfully affect the model’s focus. To support this, they present a detailed formulation of how a single attention head updates its internal direction as it processes each new token, ostensibly demonstrating that the model’s behavior is shaped by the cumulative influence of content-bearing tokens.

As a result, polite language is posited to have little bearing on when the model’s output begins to degrade. What determines the tipping point, the paper states, is the overall alignment of meaningful tokens with either good or bad output paths – not the presence of socially courteous language.

An illustration of a simplified attention head generating a sequence from a user prompt. The model starts with good tokens (G), then hits a tipping point (n*) where output flips to bad tokens (B). Polite terms in the prompt (P₁, P₂, etc.) play no role in this shift, supporting the paper’s claim that courtesy has little impact on model behavior. Source: https://arxiv.org/pdf/2504.20980

If true, this result contradicts both popular belief and perhaps even the implicit logic of instruction tuning, which assumes that the phrasing of a prompt affects a model’s interpretation of user intent.

Hulking Out

The paper examines how the model’s internal context vector (its evolving compass for token selection) shifts during generation. With each token, this vector updates directionally, and the next token is chosen based on which candidate aligns most closely with it.

When the prompt steers toward well-formed content, the model’s responses remain stable and accurate; but over time, this directional pull can reverse, steering the model toward outputs that are increasingly off-topic, incorrect, or internally inconsistent.

The tipping point for this transition (which the authors define mathematically as iteration n*) occurs when the context vector becomes more aligned with a ‘bad’ output vector than with a ‘good’ one. At that stage, each new token pushes the model further along the wrong path, reinforcing a pattern of increasingly flawed or misleading output.

The tipping point n* is calculated by finding the moment when the model’s internal direction aligns equally with both good and bad types of output. The geometry of the embedding space, shaped by both the training corpus and the user prompt, determines how quickly this crossover occurs:

An illustration depicting how the tipping point n* emerges within the authors’ simplified model. The geometric setup (a) defines the key vectors involved in predicting when output flips from good to bad. In (b), the authors plot those vectors using test parameters, while (c) compares the predicted tipping point to the simulated result. The match is exact, supporting the researchers’ claim that the collapse is mathematically inevitable once internal dynamics cross a threshold.
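The crossover condition can be made concrete in a deliberately stripped-down setting. The derivation below is an illustrative sketch, not the paper's own formula: it assumes the context vector $\mathbf{c}_n$ is simply the summed prompt token vectors $\mathbf{p}$ plus $n$ copies of a single ‘good’ token vector $\mathbf{g}$, and that the next token is whichever of $\mathbf{g}$ or a ‘bad’ vector $\mathbf{b}$ has the larger dot product with $\mathbf{c}_n$. Dividing $\mathbf{c}_n$ by a positive normalizing factor, as an attention average would, does not change which dot product is larger.

$$
\begin{aligned}
\mathbf{c}_n &= \mathbf{p} + n\,\mathbf{g},\\
\mathbf{c}_n\cdot\mathbf{g} &= \mathbf{p}\cdot\mathbf{g} + n\,\mathbf{g}\cdot\mathbf{g},\\
\mathbf{c}_n\cdot\mathbf{b} &= \mathbf{p}\cdot\mathbf{b} + n\,\mathbf{g}\cdot\mathbf{b},\\
\mathbf{c}_{n^*}\cdot\mathbf{g} = \mathbf{c}_{n^*}\cdot\mathbf{b}
\;&\Longrightarrow\;
n^* = \frac{\mathbf{p}\cdot\mathbf{g} - \mathbf{p}\cdot\mathbf{b}}{\mathbf{g}\cdot\mathbf{b} - \mathbf{g}\cdot\mathbf{g}}.
\end{aligned}
$$

Under these assumptions a flip only happens if $\mathbf{g}\cdot\mathbf{b} > \mathbf{g}\cdot\mathbf{g}$ (each good token drags the context toward $\mathbf{b}$) and $\mathbf{p}\cdot\mathbf{g} > \mathbf{p}\cdot\mathbf{b}$ (the prompt starts on the good side). A prompt term orthogonal to both $\mathbf{g}$ and $\mathbf{b}$ adds zero to the numerator, so $n^*$ stays the same.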

Polite terms don’t influence the model’s choice between good and bad outputs because, according to the authors, they aren’t meaningfully connected to the main subject of the prompt. Instead, they end up in parts of the model’s internal space that have little to do with what the model is actually deciding.

When such terms are added to a prompt, they increase the number of vectors the model considers, but not in a way that shifts the attention trajectory. As a result, the politeness terms act like statistical noise: present, but inert, and leaving the tipping point n* unchanged.
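To see the ‘inert noise’ argument numerically, here is a toy simulation under similar simplifying assumptions: one head, linear dynamics, a context vector that is just the running sum of token vectors, and a greedy choice between one ‘good’ vector `G` and one ‘bad’ vector `B`. All vectors and the helper names `generate` and `tipping_point` are hypothetical illustrations, not code or parameters from the paper. Politeness tokens sit on a third axis that is orthogonal to both `G` and `B`.

```python
# Toy sketch, not the paper's code: one "attention head" whose context vector is
# the running sum of every token vector seen so far (linear dynamics, no
# normalization or gating). All vectors below are made-up illustrations.


def dot(u, v):
    """Dot product of two equal-length tuples."""
    return sum(a * b for a, b in zip(u, v))


def add(u, v):
    """Element-wise sum of two equal-length tuples."""
    return tuple(a + b for a, b in zip(u, v))


def generate(prompt, good, bad, steps):
    """Greedily emit 'G' or 'B', whichever vector aligns more with the context."""
    context = (0.0,) * len(good)
    for vec in prompt:
        context = add(context, vec)
    out = []
    for _ in range(steps):
        if dot(context, bad) > dot(context, good):
            out.append("B")
            context = add(context, bad)
        else:  # ties go to the good token
            out.append("G")
            context = add(context, good)
    return "".join(out)


def tipping_point(prompt, good, bad):
    """n* = (p.g - p.b) / (g.b - g.g), with p the summed prompt vector."""
    p = (0.0,) * len(good)
    for vec in prompt:
        p = add(p, vec)
    return (dot(p, good) - dot(p, bad)) / (dot(good, bad) - dot(good, good))


# Axes 1-2 carry content; axis 3 is reserved for politeness, so polite
# vectors are orthogonal to both G and B.
G = (1.0, 0.0, 0.0)
B = (1.5, 1.0, 0.0)
content = [(0.0, -4.75, 0.0)]                # substantive prompt tokens
polite = [(0.0, 0.0, 2.0), (0.0, 0.0, 1.0)]  # e.g. "please", "thank you"

print(tipping_point(content, G, B), generate(content, G, B, 14))
# 9.5 GGGGGGGGGGBBBB
print(tipping_point(content + polite, G, B), generate(content + polite, G, B, 14))
# 9.5 GGGGGGGGGGBBBB
```

With these made-up numbers the formula gives n* = 9.5, so ten good tokens are emitted before the output flips, and appending the two ‘polite’ vectors changes neither the tipping point nor the generated sequence. A polite vector with any component along `G` or `B` would shift n*, which is why the argument rests on such terms being unrelated to the prompt's substance.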

The authors state:

‘[Whether] our AI’s response will go rogue depends on our LLM’s training that provides the token embeddings, and the substantive tokens in our prompt – not whether we have been polite to it or not.’

The model used in the new work is intentionally narrow, focusing on a single attention head with linear token dynamics – a simplified setup where each new token updates the internal state through direct vector addition, without non-linear transformations or gating.

This simplified setup lets the authors work out exact results and gives them a clear geometric picture of how and when a model’s output can suddenly shift from good to bad. In their tests, the formula they derive for predicting that shift matches what the model actually does.

Chatting Up..?

However, this level of precision only works because the model is kept deliberately simple. While the authors concede that their conclusions should later be tested on more complex multi-head models, such as the Claude and ChatGPT series, they also believe that the theory should continue to hold as the number of attention heads increases, stating*:

‘The question of what additional phenomena arise as the number of linked Attention heads and layers is scaled up, is a fascinating one. But any transitions within a single Attention head will still occur, and could get amplified and/or synchronized by the couplings – like a chain of connected people getting dragged over a cliff when one falls.’

An illustration of how the predicted tipping point n* changes depending on how strongly the prompt leans toward good or bad content. The surface comes from the authors’ approximate formula and shows that polite terms, which don’t clearly support either side, have little effect on when the collapse happens. The marked value (n* = 10) matches earlier simulations, supporting the model’s internal logic.

What remains unclear is whether the same mechanism survives the jump to modern transformer architectures. Multi-head attention introduces interactions across specialized heads, which may buffer against or mask the kind of tipping behavior described.

The authors acknowledge this complexity, but argue that attention heads are often loosely coupled, and that the sort of internal collapse they model could be reinforced rather than suppressed in full-scale systems.

Without an extension of the model or an empirical test across production LLMs, the claim remains unverified. However, the mechanism seems sufficiently precise to support follow-on research initiatives, and the authors provide a clear opportunity to challenge or confirm the theory at scale.

Signing Off

At the moment, the topic of politeness towards consumer-facing LLMs appears to be approached either from the (pragmatic) standpoint that trained systems may respond more usefully to polite inquiry, or from the concern that a tactless and blunt communication style with such systems risks spreading into the user’s real social relationships, through force of habit.

Arguably, LLMs have not yet been used widely enough in real-world social contexts for the research literature to confirm the latter case; but the new paper does cast some interesting doubt upon the benefits of anthropomorphizing AI systems of this type.

A study last October from Stanford suggested (in contrast to a 2020 study) that treating LLMs as if they were human additionally risks degrading the meaning of language, concluding that ‘rote’ politeness eventually loses its original social meaning:

‘[A] statement that seems friendly or genuine from a human speaker can be undesirable if it arises from an AI system since the latter lacks meaningful commitment or intent behind the statement, thus rendering the statement hollow and deceptive.’

However, roughly 67 percent of Americans say they are courteous to their AI chatbots, according to a 2025 survey from Future Publishing. Most said it was simply ‘the right thing to do’, while 12 percent confessed they were being cautious – just in case the machines ever rise up.

* My conversion of the authors’ inline citations to hyperlinks. To an extent, the hyperlinks are arbitrary/exemplary, since the authors at certain points link to a wide range of footnote citations, rather than to a specific publication.

First published Wednesday, April 30, 2025. Amended Wednesday, April 30, 2025 15:29:00, for formatting.

#2024#2025#ADD#Advanced LLMs#ai#AI chatbots#AI systems#Anderson's Angle#Artificial Intelligence#attention#bearing#Behavior#challenge#change#chatbots#chatGPT#circles#claude#coffee#communication#compass#complexity#content#Delay#direction#dynamics#embeddings#English#extension#focus

2 notes

Text

sometimes. you will be a fan of something where you are probably the only person in the fandom and that’s. ok (it isn’t i’m not ok)

#no1 words#you ever just. rewatch a youtuber playthrough a game and their playthrough becomes embedded into your soul#and there is a fandom for the game but. no one for that one specific thing#i know that there might be someone there for me. but where are they#there’s a fandom wiki but no fan content?#tldr yubika megsquared squomogus baldsprout and subs are absolute peak and i will take no criticism on this

2 notes

Text

i get that a lot of people can separate art from the artist but like why?

#this isnt about seeing art from your own perspective rather than what may be the authors intention#thats more like literary analysis#im talking about the artist being a piece of shit yet people want to still engage in content for the art#and/or ignore how their certain shit opinions are embedded into the art#all in the name of keeping that fandom alive#but like why?#obviously everyones got their own boundaries of things they can reconcile with and things that cross the line#but when certain lines have been crossed greatly like why are you still adamant#this is rhetorical btw

4 notes

Text

while i'm on my wacky lore train , also consider : trees that are watching , trees that are listening , trees that have moral alignments and factions they serve !!! cutting / burning of these trees as a capital crime !!!

#thank u narnia for embedding this in me since 7 yrs old#more of this#more sentient tree content#ˈ ⚘ *̳ 𝔦 . ﹚ — druid speaks .

6 notes

Text

I have a tab open forever in my brain where I think about all the ways Terry Pratchett would’ve embedded more queer stories into his Discworld series if he hadn’t left too soon.

Like, Cheery Littlebottom and the plotline of some dwarves beginning to identify publicly as women (all dwarves were traditionally male-presenting regardless of their gender, iirc) are in Feet of Clay, which was published in 1996! Don’t tell me Terry wouldn’t be right there trying to march these themes forward a few centuries. There would be a standalone novel with Our Flag Means Death-type energy. As Vimes gets older we would switch to following his son, who comes out as queer to his dad, and you just know Vimes is ready to do anything for his kid (and it’s embarrassing). Angua and Carrot’s relationship is set up to be on the brink of queerness, c’mon, just make everyone gay.

I have no comment on the wizards yet; I’m still figuring that part out

#discworld#terry pratchett#queer identity#reading discworld is like comfort food to me and I just wish it had more queer content#bc you know it would#like it’s already there#it just was too early still#I don’t wanna say carrot and angua are queer coded bc they weren’t written intentionally like that so I think it’s the wrong term#but the parallels of identity and appearance#ughhhhh i need more discworld friends do any of u exist in 2023 why is this series so permanently embedded in my brain

31 notes

Note

I was reading The Monkey King's Daughter (you can read the whole book for an hour) and apparently the protagonist is also Guanyin's grandchild? Can Guanyin be shipped?

I mean, I can’t say what the moral implications of shipping GuanYin are, because that is so not my place, but I’m still going to answer this because it’s kind of interesting when it comes to modern media. First off, I have never really seen romance done with GuanYin, at least not in a serious way. But if I had to guess, it can be seen as 'possible' as much as shipping anyone in Chinese mythos is, in that it isn't really taken seriously at all. In a lot of modern fan spaces there are a variety of crack ships for more humorous or hypothetical situations; like, I have literally seen the Star of Venus shipped with the Jade Emperor just 'cause. But I don't see much with buddhas or bodhisattvas in either post-modern media or fan spaces, at least not ones other than Wukong or Sanzang, since they are both Buddhas. And I have done a whole thing about how Wukong for decades wasn’t seen as a romantic figure until there was a huge character reconstruction, but that isn’t usually the case for most characters.

I would say that the most mainstream instance I can think of off the top of my head is The Lost Empire (2001), where the main character has a romantic plot with Guanyin herself. Of course, that wasn't really a masterpiece in itself, and the romance was considered a 'bad choice' mostly because it was just very strange and awkward.

Funnily enough, I think I see Guanyin in romance more for humor's sake, in comic books or games, as gags at most. Like in the Westward comics (later a TV series), Guanyin has a celestial-turned-demon pursuing him whom he always rejects. Another one, played more for laughs, is Guanyin in Fei Ren Zai, where people just don't know it's Guanyin and think she is so attractive.

I've seen some games that have Guanyin as like a pretty boy/girl but otherwise nothing even close to a romance plot. Those are more just for like aesthetics of making every character look overly attractive to sell it.

The best I can say is that it's just kinda strange to me personally, and I can't say that it can be taken seriously. I mean, Wukong is supposed to be a Buddha by the end of the novel, so if The Monkey King's Daughter has it that a buddha can have a daughter, then there wouldn't be anything stopping the author from having a bodhisattva have kids.

#anon ask#anonymous#anon#ask#sun wukong#monkey king#guanyin#chinese mythos#monkey king's daughter#Wukong is pretty self-contained within Xiyouji himself so asking for a little bit of suspension of disbelief can be understood#but Guanyin has a much longer history that is far more embedded in Buddhist mythology#She isn’t just a character in Xiyouji#and it would be limiting to her just to make it so#but I do think that might be the case in some media when it comes to portraying Guanyin#esp since most modern interpretations of Guanyin are from xiyouji material just cause the sheer amount of xiyouji content there is#I rarely see Guanyin stand alone movies/shows and there are some trust me but most of her portrayals are within xiyouji spaces#there is a lot of conversation about xiyouji either being a reconstruction or a deconstruction of religion#and while the book is SATURATED in allegorical meaning whether in taoism buddhism or chinese lore it is also seen as satire of religion#people can take xiyouji as pointing out the flaws in humanity but also the flaws of heaven as well as it humanizes both gods and buddhas#this kind of humanization can be seen as disrespectful to a certain extent but it is what makes these figures more engaging as characters#from a writing standpoint at least#this is me just rambling now about the interesting dichotomy that xiyouji has and has had with religion and how that can be seen today#to a certain extent a lot of directors take xiyouji plots as their own way to show the heavens and to convey satire#or humor as well depending on what their direction is aiming for#Some even go so far as to make heaven straight up the bad guy and that includes buddha as well which is a FAR more wild take than#just having romance in the heavens#But xiyouji does have it that we see these mythological figures have flaws#that heaven can lie or trick or they can take bribes and it's up to the audience to interpret that as either satire or critique#perhaps of religion itself or rather the religious institutions since we do see both daoist and buddhist monks as antagonists in the book#this has nothing to do with the ask at this point but i just wanna say my thoughts

13 notes

Text

Companions

Middle schoolers don't get to just ride off into the sunset. Yasako and Isako reunite.

https://archiveofourown.org/works/61884181

I've had an outline of this in my drafts for literally a year. It's not much, but I was mostly annoyed I never finished it.

“Amasawa-san? I still don’t know. Did we become friends or not?”

“I told you, didn’t I? I don’t really know what friendship means. But in your case…”

Yuko “Yasako” Okonogi kept going over that last conversation in her head. After all the two girls had gone through that summer, and all that Yuko Amasawa had put her through, Yasako thought she had finally broken through her shell.

“Having gotten lost on the same false path and having sought the same true path makes us companions. But we’re only companions when we’re traveling down the same road. Let’s meet again when we both lose our way.”

Maybe she had, but it didn’t matter now. She could imagine what Amasawa looked like over the phone, staring into the distance with all the world-weariness only a twelve-year-old could muster.

“Until then, goodbye. I’m Isako. You gave me that name.”

And then she got on her bike and pedaled off into the sunset, searching for her next adventure.

Yasako sighed. None of this brooding was helping her settle in for the first day of middle school. She wasn’t alone, of course. Her best friend Fumie was in her class again, and hopefully Amasawa’s gang of brats would chill out for at least a little while now that leadership had begrudgingly reverted back to Daichi. But still, she missed her old rival/new friend.

Fumie was currently chatting away at a mile a minute. Something about the collapse of the C Space leading to an uptick in job requests for their Den-noh Detective Agency. Yasako nodded along, but she wasn’t really listening to her friend’s scheming.

The bell rang and Yasako turned to face the front of the room. Out of the corner of her eye, she saw the door to the classroom open, and none other than Amasawa slip inside and take the last open seat near the back. Yasako’s heart skipped a beat and she couldn’t focus on anything else for the rest of the period.

As soon as class ended, she hurried back to Amasawa’s desk. “A-Amasawa-san? I thought… I thought you were leaving,” she stammered out.

“It’s Isako, remember?” the other girl said, starting to smile. She immediately leaned into a contemplative pose with her chin on her hand to cover that up, and continued, “My aunt and uncle refused to let me transfer schools again after just one year, so I’m here at least until high school.”

Fumie had followed Yasako and smirked. “So you’re stuck with us some more, huh Isako?”

Isako glared at her. “It’s still Amasawa-san to you.”

Fumie’s smile widened and she gave a knowing look to Yasako. Yasako blushed and said, “Well, it’s not all bad, right?”

The only response she got was a “Hmph” as Isako looked down to finish repacking her schoolbag. Yasako very intentionally couldn’t see her face.

Later that day, the three girls walked to lunch together. Rather, Isako walked to lunch and the other two followed her. The older kids in the hallways didn’t spare them a thought, but all the first-years Yasako recognized from elementary school glanced nervously and scuttled out of their way.

“Oh yeah!” Fumie said. “Everyone still thinks you killed that guy over the summer, Amasawa.”

Isako’s eyes narrowed a little at the rudeness, but she didn’t push it. “They can think whatever they want,” she responded.

“Oh no,” Yasako said. “You don’t need to be friends with everybody, b-but if you’re staying here for all of middle school, you shouldn’t make them hate you either.” Yasako stepped forward and took Isako’s hand. “We’ll have to show them you’re not a bad person.”

Isako rolled her eyes and hmphed again, but didn’t drop Yasako’s hand.

In the cafeteria, the three found an empty table and sat down. Yasako and Fumie’s other friend Haraken soon joined them. He raised an eyebrow at Isako sitting with them, and Yasako blushed, thinking about what their last conversation had been about when she had thought Isako wasn’t coming back.

Even before he sat down, Fumie was back on the topic of the new jobs for their detective agency. Haraken had been the president of the “Biology Club” in elementary school, which nominally made him the student leader of the detective agency too. While the two of them discussed plans, Yasako wondered if they’d need to set up a new front with a middle school club.

Isako seemed to be ignoring them all, until Yasako realized that her staring into space was really her running calculations in her head. Haraken seemed to notice that too, and eventually turned to her.

“So, Amasawa-san? Any suggestions?” he asked.

“Well. Not that I need to help you or anything, but if you’re searching for cracks left from the collapse of the C Space, I know a strategy for that. I would…”

She was interrupted by Daichi and his goons from the Daikoku Hackers' Club strolling over.

“Hey, uh, Boss,” Daichi’s friend Denpa greeted her. “You’re back?”

Isako opened her mouth to reply, but Daichi got there first. “She left the club! She abdicated her position, so that means I’m back in charge!” he screeched. Denpa and the other boys avoided making eye contact with either of the former leaders of the group.

“Whatever. I don’t care,” said Isako, not turning to face them. “I don’t need your club anymore. You can have it.” Isako’s fists tightened, and Yasako put one hand on hers for reassurance.

Daichi’s gloating turned into a sneer. “So that’s how it is?” he asked. “All it took was one upset for Fumie and her little friends to domesticate you? I thought you were stronger than that, Isako…”

At that, she glared daggers straight ahead. After a moment, Daichi’s glasses sparked and he fell to his knees.

“I told you idiots not to call me that,” Isako said evenly. Denpa hauled Daichi to his feet as he moaned about his allowance, and the boys ran off.

Across the table, Fumie snickered. “I’m glad you’re in our club this year, Amasawa. Hopefully those jerks leave us alone now.”

“Whatever,” Isako repeated.

“I’m also glad you’re here,” Yasako said, and this time Isako just looked away with a hint of a smile.

#i don't know why the ao3 link isn't embedding properly#dennou coil#den noh coil#here is the first and probably last post in the tag this year#original content

2 notes

Note

Everything I've seen regarding The Veilguard constantly reminds me of like... shallow Hollywood movies. If that makes sense. The dialogue is Cool and Tough and Quirky, the action is snazzy, the stakes are Big and we're the super heroes

no you are 100% right... also uhm i dont want to be mean but it seems to me that bioware actually tactfully realized that their target audience is like 50% ppl who want to be a cool action hero in a video game and dont rly gaf abt the story and 50% ppl who want cool one-liners they can screenshot or make gifs about and then an unknown % of those two groups just really only want to look at their dolls in awe and talk abt how cool and pretty they are and maybe make them kiss some attractive companion....

and im not saying wanting to do any of those things is bad bc it isnt. as long as you are having fun w whatever all power to you BUT like... is this game even an rpg... like idgaf abt this game feeling like a dragon age game at the end of the day bc i dont rly like dragon age and i dont have like a deep connection with the setting or stories (to me this franchise is just too centrist and gritty for grittiness' sake... also im having a hard time scavenging the fun out of these stories amidst the weird stuff, the racism and lesbophobia but ik a lot of people CAN do it, and once again, thats not a bad thing.)

but yeah my first impression is just... this game is weird and not for me but i also want to dig deeper...

#asks#also kind of concerning that they noticed that many people play these games bc of the companions so even the bw logo in the beginning#includes the companions and yET i havent rly seen ppl happy w their companion writing...#like idk if i compare this to owlcat also very aptly playing into the appeal of interesting companions w their games#and also making sure to have romances too and a lot of romance content bc they KNOW its a hook for a lot of ppl#yet bioware who kind of discovered this hook is just doing nothing w this? its strange#also ppl saying the inquisitor decisions are only there to subtly ask if solavellan was a thing....#like to me it feels like the writing team just liked the idea of a god falling for a mortal and wanted to keep writing that#and nothing else... which is kind of strange to do this way#i guess they didnt want to write something original bc this story is so embedded in dragon age or some shit idk#like just write a book abt this.... LOL

4 notes

Text

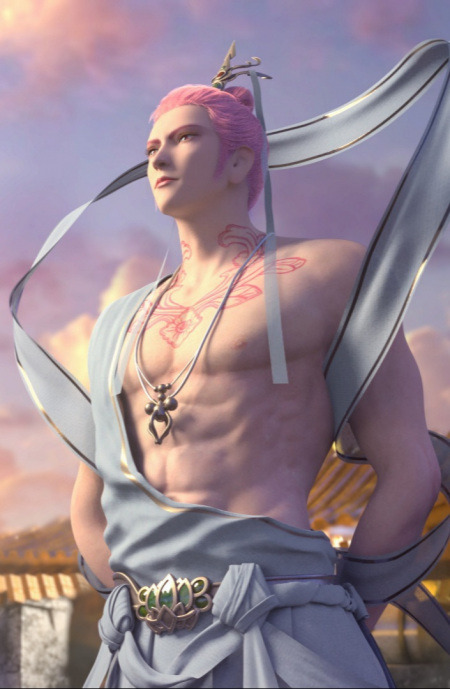

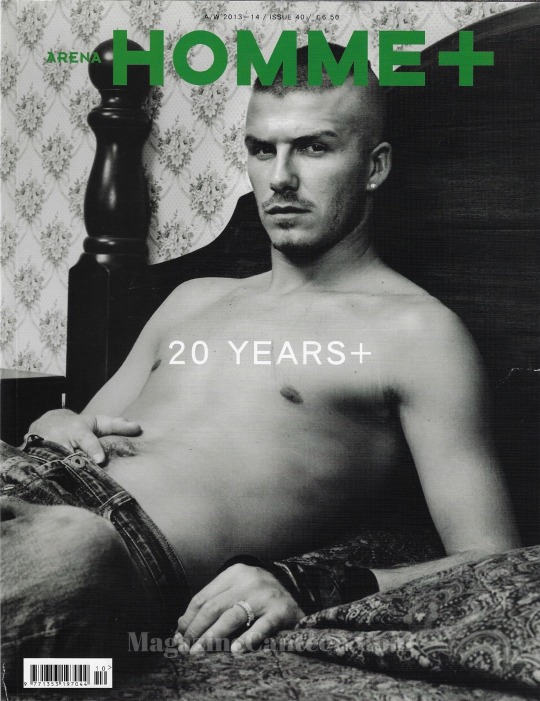

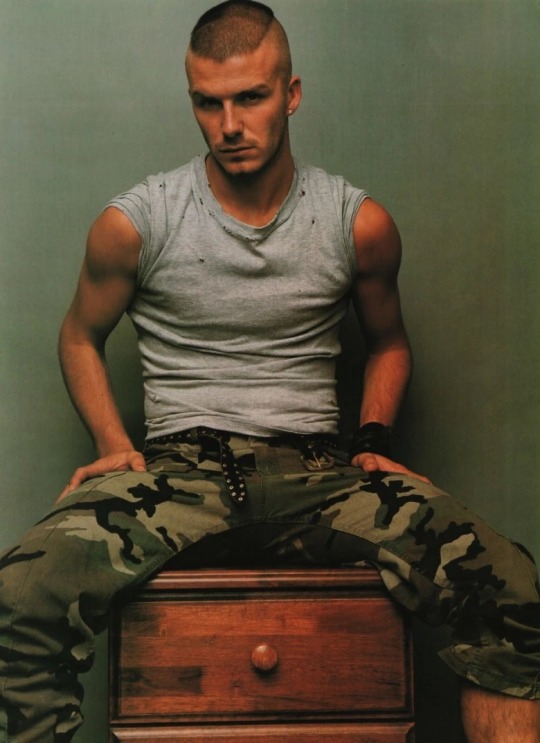

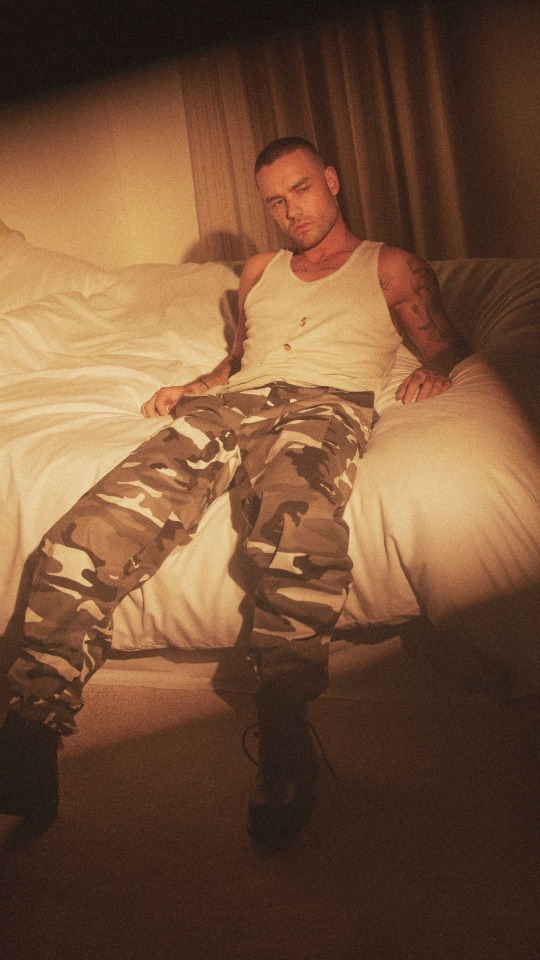

okay someone on liam’s creative team definitely got some inspiration from david beckham’s iconic arena homme plus shoot i mean…

2/2/24

#can’t blame them it’s a classic#ugh not a fan of embedded content#hate being sent to instagram when I’m trying to watch a video on here#did anyone post the reel as not embedded?#anyway..#the gayest goddamn editorial this was#how is that 22 years ago now….#liam#teardrops#02.02.24#david beckham#arena homme plus#2001

8 notes

Link

published a one-shot on my alt pseud the other day if anyone who’s interested didn’t see! mature content warning however

#knights qpr#swordblademeta#sorry 4 no content label i'm having trouble getting it to work with an embedded link

2 notes

Text

Transformation: AI & Content Optimization

The digital search landscape is undergoing its most dramatic upheaval since Google first crawled the web, with artificial intelligence fundamentally rewriting the rules of information discovery. Traditional keyword-based systems are rapidly giving way to sophisticated semantic understanding, where context matters more than exact matches and user intent counts for more than clever SEO tricks. This shift promises to make search results more intuitive and relevant, but it also requires content creators to completely rethink their approach to optimization.

The Shift from Traditional Search to AI-Powered Information Retrieval

The traditional search engine, that familiar digital librarian we have grown used to over the past twenty-five years, is going through its most fundamental change since Google first crawled the web. Instead of presenting ten blue links and hoping users find what they need, AI-powered systems now construct direct answers from retrieved content. This evolution marks a move away from ranking pages by authority signals and toward understanding meaning through vector embeddings. The shift promises substantially better retrieval accuracy, going beyond keyword matching to semantic understanding. Content creators must now optimize for machines that actually understand context, not ones that merely count backlinks.
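As a rough illustration of ranking by meaning rather than by keywords: embedding-based retrieval typically scores stored content by how closely its vector points in the same direction as the query's vector, often using cosine similarity. The three-dimensional vectors and document names below are hypothetical stand-ins; a real embedding model produces vectors with hundreds or thousands of dimensions, and this sketch makes no claim about which model or database any particular search engine uses.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_by_similarity(query_vec, docs):
    """Return document ids ordered from most to least similar to the query."""
    return sorted(docs, key=lambda d: cosine(query_vec, docs[d]), reverse=True)


# Hypothetical 3-d "embeddings"; real models emit far longer vectors.
docs = {
    "running-shoes-review": (0.9, 0.1, 0.0),
    "marathon-training-plan": (0.7, 0.6, 0.1),
    "pasta-recipe": (0.0, 0.1, 0.95),
}
query = (0.8, 0.3, 0.0)  # stands in for an embedded query such as "best jogging sneakers"

print(rank_by_similarity(query, docs))
# ['running-shoes-review', 'marathon-training-plan', 'pasta-recipe']
```

The query vector lands closest to the running-shoes review and farthest from the recipe, even though no keywords are compared at any point.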

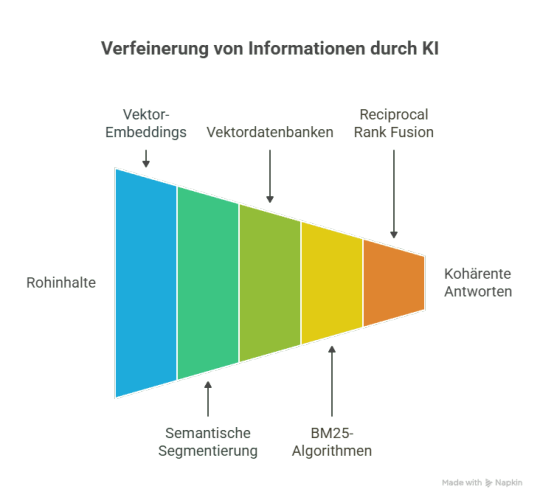

Core Technologies Driving the New Search Infrastructure

Behind this fundamental change lies a sophisticated technology stack that would leave the architects of early search engines both envious and bewildered. Vector embeddings now convert content into mathematical representations, allowing machines to capture meaning instead of just matching keywords; think of it as teaching computers to grasp nuance. Semantic chunking breaks information into digestible pieces that preserve context, while vector databases such as Pinecone store and retrieve these fragments at high speed. The BM25 ranking function handles keyword precision, and Reciprocal Rank Fusion combines the results of several retrieval methods into a single ranking. Large language models then weave the retrieved fragments into coherent answers, creating an entirely new framework for information discovery.
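
The paragraph names two concrete ranking techniques, BM25 and Reciprocal Rank Fusion (RRF). Below is a minimal pure-Python sketch of both, not the implementation used by Pinecone or any other product. It assumes naive whitespace tokenization and the commonly used defaults k1 = 1.5, b = 0.75 for BM25 and k = 60 for RRF. The toy corpus, query, and the hard-coded "vector ranking" (standing in for an embedding-based retriever) are hypothetical.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: dict[str, str], k1: float = 1.5, b: float = 0.75) -> dict[str, float]:
    """Okapi BM25 score of every document for a query (naive whitespace tokens).

    Uses the non-negative IDF variant ln(1 + (N - n + 0.5) / (n + 0.5)),
    where N is the number of documents and n the number containing the term.
    """
    tokenized = {doc_id: text.lower().split() for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized.values()) / n_docs
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for toks in tokenized.values() for term in set(toks))
    scores = {}
    for doc_id, toks in tokenized.items():
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores[doc_id] = score
    return scores


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each doc scores the sum of 1 / (k + rank) over all lists."""
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]


# Hypothetical toy corpus and query.
docs = {
    "d1": "vector databases store embeddings for fast retrieval",
    "d2": "bm25 ranks documents by keyword frequency",
    "d3": "keyword stuffing hurts readability",
}
query = "keyword retrieval"

bm25 = bm25_scores(query, docs)
keyword_ranking = sorted(bm25, key=bm25.get, reverse=True)
vector_ranking = ["d2", "d1", "d3"]  # stand-in for an embedding retriever's output
print(keyword_ranking)  # ['d1', 'd3', 'd2']
print(reciprocal_rank_fusion([keyword_ranking, vector_ranking]))  # ['d1', 'd2', 'd3']
```

RRF looks only at rank positions, not raw scores, which is why it can combine BM25 scores and cosine similarities even though the two live on very different scales.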

Strategic Advantages of AI-Enhanced Search Systems

Several compelling advantages emerge when organizations replace traditional search infrastructure with AI-enhanced systems, changing how information travels from storage to the user. These platforms deliver a better user experience through semantic relevance that understands intent rather than merely matching keywords. Predictive analytics anticipates information needs before users articulate them, while personalized search adapts to individual behavior and preferences. User engagement rises sharply when systems deliver precise answers instead of endless lists of results. Automated insights bring patterns hidden in data archives to the surface. The change frees organizations from crawling delays and ranking uncertainty, creating controlled environments in which quality wins out over manipulation tactics.

Optimizing Content for Vector-Based Discovery

While these strategic advantages paint an appealing picture of AI-enhanced search, organizations must fundamentally rethink how they structure and present information to succeed in this new environment. Traditional keyword-stuffed content loses its value when algorithms prioritize meaning over mechanical repetition. Savvy content creators now rely on semantic chunking: splitting information into digestible, semantically rich segments that AI systems can easily parse and understand. Each paragraph should cover a single concept, so that embedding models can capture its meaning and context precisely. This shift frees writers from trying to game search engines and instead rewards clarity, precision, and genuine value for human readers.
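
The "one concept per paragraph" advice maps naturally onto the simplest chunking strategy. The sketch below splits text on blank lines and breaks over-long paragraphs at sentence ends; the function name and the 500-character default limit are assumptions for illustration. This is plain paragraph-based chunking, not the embedding-driven "semantic chunking" some pipelines use, which compares the embeddings of neighbouring sentences to find topic boundaries.

```python
import re


def chunk_by_paragraph(text: str, max_chars: int = 500) -> list[str]:
    """Split text into chunks, one per paragraph.

    Paragraphs longer than max_chars are split further at sentence
    boundaries. A single sentence longer than max_chars is kept whole.
    """
    chunks = []
    for para in re.split(r"\n\s*\n", text):
        para = " ".join(para.split())  # collapse line breaks and repeated spaces
        if not para:
            continue
        if len(para) <= max_chars:
            chunks.append(para)
            continue
        # Naive sentence split: after ., ! or ? followed by whitespace.
        current = ""
        for sentence in re.split(r"(?<=[.!?])\s+", para):
            if current and len(current) + 1 + len(sentence) > max_chars:
                chunks.append(current)
                current = sentence
            else:
                current = f"{current} {sentence}".strip()
        if current:
            chunks.append(current)
    return chunks


sample = "Vector embeddings map text to numbers.\n\nBM25 scores keyword matches. It rewards rare terms.\n\n"
print(chunk_by_paragraph(sample))
print(chunk_by_paragraph(sample, max_chars=30))
```

Each resulting chunk would then be embedded and stored separately, so a paragraph that mixes several topics ends up as one blurred vector instead of several sharp ones.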

Building Retrieval Confidence Through Content Strategy

When retrieval systems search content for answers, they assign confidence scores that determine whether information is selected for AI-generated responses, and content creators who understand this scoring mechanism gain a decisive advantage. Retrieval algorithms favor declarative statements over vague hedging phrases such as "might be" or "could possibly". Clear assertions raise confidence scores considerably. Savvy writers establish a content hierarchy through structured headings and focused paragraphs that each cover a single concept. They avoid vague language and choose direct statements that algorithms can parse reliably. This approach turns content from mere information into citable material that AI systems trust enough to include in their answers.

Knowledge Graphs and Contextual Relationships

Confident assertions are only one piece of the retrieval puzzle. Knowledge graphs supply the missing contextual framework that turns scattered information into connected understanding. These networks map the relationships between entities with surgical precision, turning isolated facts into meaningful connections that AI systems can navigate intelligently.

- Semantic links connect related concepts, letting retrieval systems understand how topics relate beyond surface-level keywords.
- Contextual mappings establish hierarchical relationships, helping algorithms distinguish between different meanings of the same term.
- Improved reasoning emerges when vector databases are combined with knowledge graphs, producing retrieval systems that understand both meaning and context at the same time.
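
The second point, using graph context to tell apart different meanings of one term, can be illustrated with a toy knowledge graph stored as (subject, relation, object) triples. The triples, entity names, and the simple neighbour-overlap heuristic below are all hypothetical; production entity-linking systems rely on far richer signals.

```python
from collections import defaultdict

# Hypothetical triples (subject, relation, object); the term "Jaguar" is ambiguous.
TRIPLES = [
    ("Jaguar (animal)", "is_a", "big cat"),
    ("Jaguar (animal)", "lives_in", "rainforest"),
    ("Jaguar (car brand)", "is_a", "car manufacturer"),
    ("Jaguar (car brand)", "based_in", "United Kingdom"),
]


def build_graph(triples):
    """Adjacency map: entity -> set of directly connected entities (both directions)."""
    graph = defaultdict(set)
    for subj, _relation, obj in triples:
        graph[subj].add(obj)
        graph[obj].add(subj)
    return graph


def disambiguate(candidates, context_terms, graph):
    """Pick the candidate whose graph neighbours overlap most with the context terms."""
    return max(candidates, key=lambda entity: len(graph[entity] & context_terms))


graph = build_graph(TRIPLES)
candidates = ["Jaguar (animal)", "Jaguar (car brand)"]
print(disambiguate(candidates, {"rainforest"}, graph))
print(disambiguate(candidates, {"car manufacturer", "United Kingdom"}, graph))
```

The same surface string resolves to different entities depending on which connected concepts appear in the surrounding context, which is the kind of contextual mapping the bullet describes.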

Implementation Framework for Future-Proof Content

Three foundational pillars support every successful transition from traditional SEO to an AI-optimized content strategy: systematic content restructuring, alignment of the technical infrastructure, and measurable performance frameworks. Smart organizations start by auditing their content ecosystems and identifying material that would benefit from greater semantic accuracy. Metadata management becomes indispensable; think of it as giving AI systems a proper introduction instead of leaving them to guess. The user experience benefits greatly when strategic segmentation speeds up retrieval. Meanwhile, indexing strategies must evolve beyond keyword density toward meaning-oriented approaches. Breaking free from outdated SEO tactics opens the door to authentic, valuable content that actually serves readers.

#content-optimierung#informationsbeschaffung#ki-gestütztesuche#nutzerintention#semantischesverständnis#Suchmaschinenoptimierung#vektor-embeddings

0 notes

Text

whoopsies that was supposed to go on the fanart hoarding blog

#enski is a dork#this was bound to happen#good thing i use the link embedding#+ content source features

1 note
