#for reasons of using the same window managers linux does

Installing Linux (Mint) as a Non-Techy Person

I've wanted Linux for various reasons since college. I tried it once when I no longer had to worry about having specific programs for school, but it did not go well. It was a dedicated PC that was, I believe, poorly made. Anyway.

In the process of deGoogling and deWindows365'ing, I started to think about Linux again. Here is my experience.

Pre-Work: Take Stock

List out the programs you use regularly and those you need. Look up whether or not they work on Linux. For those that don't, look up alternatives.

If the alternative works on Windows/Mac, try it out first.

Make sure you have your files backed up somewhere.

Also, pick up a USB drive of at least 5GB.

Oh, and make a system restore point (look it up in your Start menu).

Step One: Choose a Distro

Dear god do Linux people like to talk about distros. Basically, from what all I've read, if you don't want to fuss a lot with your OS, you've got two options: Ubuntu and Linux Mint. Ubuntu is better known and run by a company called Canonical. Linux Mint is run by a small team and paid for via donations.

I chose Linux Mint. Some of the stuff I read about Ubuntu reminded me too much of my reasons for wanting to leave Windows, basically. Did I second-guess this a half-dozen times? Yes, yes I did.

The rest of this is true for Linux Mint Cinnamon only.

Step Two: Make your Flash Drive

Linux Mint has great instructions. For the most part they work.

Start here:

The trickiest part of creating the flash drive is verifying and authenticating it.

On the same page that you download the Linux .iso file there are two links. Right click+save as both of those files to your computer. I saved them and the .iso file all to my Downloads folder.

Then, once you get to the 'Verify your ISO image' page in their guide and you're on Windows like me, skip down to this link about verifying on Windows.
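If the Windows verification steps feel opaque, the integrity half of the check can be sketched in a few lines of Python. This is only a rough illustration: it assumes the checksum file you saved lists one `<hash> *<filename>` pair per line, and the file names in the usage note are placeholders. It does not replace the signature (authenticity) check described in the Mint guide.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash the file in chunks so a multi-gigabyte ISO never sits in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_sha256(checksum_file, iso_name):
    """Find iso_name in a checksum list made of '<hash> *<filename>' lines."""
    for line in Path(checksum_file).read_text().splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].lstrip("*") == iso_name:
            return parts[0].lower()
    return None  # the ISO is not listed in this checksum file


def iso_matches(iso_path, checksum_file):
    """True only if the ISO is listed and its computed hash matches."""
    expected = expected_sha256(checksum_file, Path(iso_path).name)
    return expected is not None and expected == sha256_of(iso_path)
```

With everything saved to Downloads, a call would look something like `iso_matches("Downloads/linuxmint.iso", "Downloads/sha256sum.txt")` (both names are stand-ins for whatever you actually downloaded). If it returns `False`, download the ISO again before writing it to the flash drive.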

Once it is verified, you can go back to the Linux Mint guide. They'll direct you to download Etcher and use that to create your flash drive.

If this step is too tricky, then please reconsider Linux. Subsequent steps are both easier and trickier.

Step Three: Restart from your Flash Drive

This is the step where I nearly gave up. The guide is still great, except it doesn't mention certain security features that make installing Linux Mint impossible without extra steps.

(1) Look up your BitLocker recovery key and have it handy.

I don't know if you'll need it like I did (I did not turn off BitLocker at first), but better to be safe.

(2) Turn off BitLocker.

(3) Restart. When on the title screen, press your BIOS key. There might be more than one. On a Lenovo, pressing F1 several times gets you to the relevant menu. Turn off "Secure Boot" there. This is not the menu you'll use to start the installer, though.

(4) Restart. This time press F12 (on a Lenovo) to get the boot menu. The HDD option, iirc, is your USB. Look it up on your phone to be sure.

Now you can return to the Linux Mint instructions.

Figuring this out via trial-and-error was not fun.

Step Four: Install Mint

Just follow the prompts. I chose to do the dual boot.

You will have to click through some scary messages about irrevocable changes. This is your last chance to change your mind.

I chose the dual boot because I may not have anticipated everything I'll need from Windows. My goal is to work primarily in Linux. Then, in a few months, if it is working, I'll look up the steps for making my machine Linux only.

Some Notes on Linux Mint

Some of the minor things I looked up ahead of time and other miscellany:

(1) HP Printers supposedly play nice with Linux. I have not tested this yet.

(2) Linux Mint can easily access your Windows files. I've read that this does not go both ways. I've not tested it yet.

(3) You can move the taskbar (panel in LM) to the left side of your screen.

(4) You are going to have to download your key programs again.

(5) The LM software manager has most programs, but not all. Some you'll have to download from websites. Follow instructions. If a file leads to a scary wall of strange text, close it and just do the Terminal instructions instead.

(6) The software manager also has fonts. I was able to get Fanwood (my favorite serif) and JetBrains (my favorite mono) easily.

In the end, be prepared for something to go wrong. Just trust that you are not the first person to ever experience the issue and look it up. If that doesn't help, you can always ask. The forums and reddit community both look active.

178 notes

Linux distros - what is the difference, which one should I choose?

Caution, VERY long post.

With more and more simmers looking into linux lately, I've been seeing the same questions over and over again: Which distro should I choose? Is distro xyz newbie-friendly? Does this program work on that distro?

So I thought I'd explain the concept of "distros" and clear some of that up.

What are the key differences between distros?

Linux distros are NOT different operating systems (they're all still linux!) and the differences between them aren't actually as big as you think.

Update philosophy: Some distros, like Ubuntu, (supposedly) focus more on stability than being up-to-date. These distros put out one big, thoroughly tested release every year or every other year (Ubuntu's long-term support releases, for example, arrive every two years). However, because the updates are so huge, they inevitably tend to break stuff anyway. On the other end of the spectrum are so-called "rolling release" distros like Arch. They don't do big annual updates, but instead release smaller updates very frequently. They are what's called "bleeding edge": if there is something new out there, they will be the first ones to get it. This can of course impact stability, but on the other hand, stuff gets improved and fixed very fast. Third, there are also "middle of the road" distros like Fedora, which kind of do... both. Fedora gets big version updates like Ubuntu, but they happen more frequently (roughly every six months) and are comparatively smaller, so it is both stable and reasonably up-to-date.

Package manager: Different distros come with different package managers (APT on Ubuntu, DNF on Fedora, etc.). Package managers keep track of all the installed programs on your PC and allow you to update/install/remove programs. You'll often work with the package manager in the terminal: For example, if you want to install Lutris on Fedora, you'd type in "sudo dnf install lutris" ("sudo" is usually read as "super user do"; it's the equivalent of administrator rights on Windows). Different package managers come with different pros and cons.
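To make the "same idea, different spelling" point concrete, here is a small sketch that builds the install command for three common package managers. The table and function names are made up for illustration. It only builds the command and does not run it.

```python
# Install sub-commands for three common package managers.
INSTALL_COMMANDS = {
    "apt": ["apt", "install"],    # Debian, Ubuntu, Mint
    "dnf": ["dnf", "install"],    # Fedora
    "pacman": ["pacman", "-S"],   # Arch
}


def install_command(manager, package):
    """Return the argument list that would install `package` with `manager`."""
    if manager not in INSTALL_COMMANDS:
        raise ValueError(f"no install recipe for package manager {manager!r}")
    return ["sudo", *INSTALL_COMMANDS[manager], package]
```

Joining the result with spaces, `" ".join(install_command("dnf", "lutris"))`, gives back the Fedora command quoted above, `sudo dnf install lutris`.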

Core utilities and programs: 99% of distros use the same stuff in the background (you don’t even directly interact with it, e.g. background process managing). The 1% that do NOT use the same stuff are obscure distros like Void Linux, Artix, Alpine, Gentoo, Devuan. If you are not a Linux expert, AVOID THOSE AT ALL COSTS.

Installation process: Some distros are easier to install than others. Arch is infamous for being a bit difficult to install, but at the same time, its documentation is unparalleled. If you have patience and good reading comprehension, installing Arch would teach you nearly all you need to know about Linux. If you want to go an easier and safer route for now, anything with a graphical installer like Mint or Fedora would suit you better.

Community: Pick a distro with an active community and lots of good documentation! You’ll need help. If you are looking at derivatives (e.g. ZorinOS, which is based on Ubuntu which is based on Debian), ask yourself: Does this derivative give you enough benefits to potentially give up community support of the larger distro it is based on? Usually, the answer is no.

Okay, but what EDITION of this distro should I choose?

"Editions" or “spins” usually refer to variations of the same distro with different desktop environments. The three most common ones you should know are GNOME, KDE Plasma and Cinnamon.

GNOME's UI is more similar to macOS, but not exactly the same.

KDE Plasma looks and feels a lot like Windows' UI, but with more customization options.

Cinnamon is also pretty Windows-y, but more restricted in terms of customization and generally deemed to be "stuck in 2010".

Mint vs. Pop!_OS vs. Fedora

Currently, the most popular distros within the Sims community seem to be Mint and Fedora (and Pop!_OS to some extent). They are praised for being "beginner friendly". So what's the difference between them?

Both Mint and Pop!_OS are based on Ubuntu, whereas Fedora is a "standalone" upstream distro, meaning it is not based on another distro.

Personally, I recommend Fedora over Mint and Pop!_OS for several reasons. To name only a few:

I mentioned above that Ubuntu's update philosophy tends to break things once a big update rolls around every two years. Since both Mint and Pop!_OS are based on Ubuntu, they are also affected by this.

Ubuntu, Mint and Pop!_OS like to modify their stuff regularly for theming/branding purposes, but this ALSO tends to break things. It is apparently so bad that there is an initiative to stop this.

Pop!_OS uses the GNOME desktop environment, which I would not recommend if you are switching from Windows. Mint offers Cinnamon, which is visually and technically outdated (it uses the X11 windowing system, whose design dates back to the 1980s), but still beloved by a lot of people. Fedora offers the more modern KDE Plasma.

Personal observation: Most simmers I've encountered who had severe issues with setting up Linux went with an Ubuntu-based distro. There's just something about it that's fucked up, man.

And this doesn't even get into the whole Snaps vs. Flatpak controversy, but I will skip this for brevity.
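If you are curious which windowing system a given desktop session is actually using (the X11 point in the list above), most modern desktops expose it through the `XDG_SESSION_TYPE` environment variable. A minimal sketch, assuming the session was started by a login manager that sets that variable:

```python
import os


def session_type(environ=os.environ):
    """Return 'x11', 'wayland', 'tty', or 'unknown' if the variable is unset."""
    return environ.get("XDG_SESSION_TYPE", "unknown").lower()
```

Calling `session_type()` in a terminal inside the desktop will typically report `x11` or `wayland`. The `environ` parameter exists only so the function can be tried with a made-up environment.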

Does SimPE (or any other program) work on this distro?

If it works on Fedora, then it works on Mint/Ubuntu/Arch/etc., and vice versa. This is all just a question of having the necessary dependencies installed and installing the program itself properly. Some distros may have certain prerequisites pre-installed, while others don't, but you can always just install those yourself. Like I said, different distros are NOT different operating systems. It's all still Linux and you can ultimately customize it however you want.

In short: Yeah, all Sims 2-related programs work. Yes, ReShade too. It ultimately doesn't really matter what distro you use as long as it is not part of the obscure 1% I mentioned above.

A little piece of advice

Whatever distro you end up choosing: get used to googling stuff and practice reading comprehension! There are numerous forums, Discord servers and subreddits where you can ask people for help. Generally speaking, the Linux community is very open to helping newbies. HOWEVER, they are not as tolerant of nagging and laziness as the Sims community tends to be. Show initiative, use Google search & common sense, try things out before screaming for help and be detailed and respectful when explaining your problems. They appreciate that. Also, use the Arch Wiki even if you do not use Arch Linux; most of it is applicable to other distros as well.

#simming on linux#bnb.txt#if anyone wants to use this as a base for a video feel free#i don't feel like recording and editing lol

120 notes

okay whenever i talk about linux i say shit like "development is easier" or throw around things like LXC or POSIX/UNIX, or whatever insane terms but:

here's my list of actual shit that the average person would care about

Most updates including core system components usually don't even need a reboot (please reboot your computer at least once a week). If it does, it waits for me to reboot. It won't ever stop me in the middle of something to ask me to or force it on me.

If i plug in a device it will just work. I do not need to install drivers or some stinky special crap software for it to be detected, it will most often just work (every new linux kernel version adds so much support for new and old hardware. If it doesn't work now, it might work later!)

Package management. I've sung its praises so much already but. every other device i know you can click a button and it will update all the apps on your device. except windows. App has an update? Open the software centre or Discover or whatever, click a button boom it's updated. All controlled from one place, no worries about does the app update itself, or whether you're downloading the right installer for your system, just use the package manager that comes with the system and it's good.

It's as minimal as i want it to be. Both windows and mac suffer a lot from just having a bunch of crap that you cannot get rid of. I installed a distro which didn't even come with a graphical interface, it was that minimal. If the distro you use is a bit more reasonable, but it comes with some software you don't want, you can just get rid of it. Shit if you wanted to you can just uninstall the linux kernel and it will just let you, and your computer will be unbootable. You have full control over what you want on your system. Also uninstalling things is less stupid, there's far fewer cases of leftover files or shit laying around in the registry. (there is no registry)

Audio. "linux audio is bad" is a thing of the past and i'm so serious. Pipewire is an amazing thing. I have full control over which applications give output to which speakers, being able to route one app to multiple speakers at the same time, or even doing things like mapping an input device to speakers so i can monitor it back very easily. I still dont understand why windows does the stupid "default communication device" thing, and they often reset my settings like randomly changing it to 24 bit audio when i only use 16 and certain programs break with it set to 24 idfk. Maybe this is less of an "average user" thing and more of a poweruser thing but i feel like there's SOMETHING in here which may be handy to the average person at some point. i love qpwgraph.
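the "it waits for me to reboot" point from the list above is something you can actually look at. on Debian-family systems (Ubuntu, Mint) an update that wants a reboot leaves a marker file behind instead of nagging you. rough sketch, assuming the usual `/var/run/reboot-required` location; other distro families track this differently:

```python
from pathlib import Path


def reboot_pending(marker="/var/run/reboot-required"):
    """True if an update has left the 'please reboot sometime' marker file."""
    return Path(marker).exists()


def packages_wanting_reboot(pkgs_file="/var/run/reboot-required.pkgs"):
    """List the packages that asked for the reboot, or [] if none are recorded."""
    path = Path(pkgs_file)
    if not path.exists():
        return []
    return [line.strip() for line in path.read_text().splitlines() if line.strip()]
```

nothing here forces anything: the file just sits there until you reboot on your own schedule.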

i could think of more but i dont use a computer like a normal person so it will take me time to think of it

14 notes

things that i think actually matter for beginner friendliness:

ROBUST FALLBACKS- i think opensuse's default installation is with btrfs root + grub + snapper + snapper hooks so that if an update ever goes bad you have an archive that you can boot from, immediately, straight from the boot screen, no terminal stuff. thats excellent and more distros should offer this oob. vanillaos's abroot system is also really cool with similar benefits

DISCOVERABLE UI- cinnamon (linux mint) is ok with this but i remember trying to figure out desktop and panel (taskbar) configuration was absolutely opaque to me and there weren't easy answers to how you were supposed to do things. i think kde is really excellent in terms of immediately discoverable ui from a windows expat perspective even though i dont really like the mouthfeel of it for personal use

PAINLESS UPDATES- EITHER use a rolling release system or make your default installation use separate root and home partitions, so that when a million dependencies are changed in your point release and you either have to reinstall or upgrade in place and find out everything broke and THEN reinstall, you dont have to frantically go through your entire computer to find where you might have important user files, save them to a usb, and reorganize them on a new install. this one is frustrating because it would be so easy for this to already be a standard for point release distros but it isnt. ??????
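for the separate root and home point above, here is a rough way to see what an existing install did. it compares the device ids of two directories; the function name is made up, and one caveat is that btrfs subvolumes can report different ids even when they live on the same partition, so treat the answer as a hint:

```python
import os


def on_separate_filesystem(path, reference="/"):
    """True if `path` lives on a different filesystem than `reference`."""
    return os.stat(path).st_dev != os.stat(reference).st_dev
```

`on_separate_filesystem("/home")` returning True suggests a reinstall of the root partition would leave your user files alone.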

the package management thing is like. the whole reason any distros are different. i have my own opinions but it takes all types probably. but if flatpak's repos were better maintained, or if it were more common practice/more friendly and integrated to use distrobox for gui user programs, i think it would make everyones lives easier and level the playing field for people who actually do reasonably need stable* system components but up to date user apps. i think people are being whiny babies when they say its JUST IMPOSSIBLE to remember "apt-get install (program name)" instead of pressing a button that says "get program name" but they got guis all over for the people that want them its an overstated problem

THINGS THAT DONT MATTER FOR USER FRIENDLINESS: why does everyone fucking act like ubuntu/mint's point releases and dead and rotting "stable" repos are an absolute benefit to newbies who dont know what they want or how to express what they want yet. breaking and changing dependencies are a bad situation to thrust upon unsuspecting noobs for sure but im pretty sure there is the exact same amount of that regardless of distro because it just does not happen often enough to be dampened by slower releases. like, the appimage integrator they packaged in mint going dead because of aging dependencies. like what did they want me to do about that as the end user who still wanted to integrate appimages?? as far as i could tell the answer is Nothing

*stupid word that causes like 90% of all problems when talking about linux

3 notes

How to install and use IrfanView in Linux - Tutorial

Updated: May 30, 2022

My Windows to Linux migration saga continues. We're still a long way off from finishing it, but it has begun, and I've also outlined a basic list of different programs I will need to try and test in Linux, to make sure when the final switch cometh that I have the required functionality. You can find a fresh bouquet of detailed tutorials on how to get SketchUp, Kerkythea, KompoZer, as well as Notepad++ running in Linux, all of them using WINE and successfully too, in my Linux category.

Today, my focus will be on IrfanView, a small, elegant image viewer for Windows, which I've been using with delight for decades now. It's got everything one needs, and often more than the competitors, hence this bold foray of using it in Linux despite the fact there are tons of native programs available. But let's proceed slowly and not get too far ahead of ourselves. After me.

As I said, it's majestic. A tiny program that does everything. It's fast and extremely efficient. When I posted my software checklist article, a lot of Linux folks said, well, you should try XnView instead. And I did, honest, several times, including just recently, which we will talk about in a separate article, but the endeavor reminded me of why I'd chosen IrfanView all those years back. And those reasons remain.

Then, I did play with pretty much every Linux image viewer out there. None is as good as IrfanView. It comes down to small but important things. For instance, in IrfanView, S will save a file, O will trigger the open dialog. Esc quits the program. Very fast. Most other programs use Ctrl + or Shift + modifiers, and that simply means more actions. I did once try to make Gwenview use the full range of Irfan's shortcuts, but then I hit a problem of an ambiguous shortcut, wut. I really don't like the fact that hitting Esc takes you to a thumbnail overview mode. But that's what most programs do.

WINE configuration

The first step is to have WINE installed on your system. I am going to use the exact same method outlined in the SketchUp Make 2017 tutorial. I have the WINE repositories added, and I installed the 6.X branch on my system (at the time of writing).
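Before moving on, it can be worth confirming that WINE is actually reachable from your shell. The following is a minimal sketch, not part of the original method: it looks for a `wine` executable on the PATH and asks it for its version. The helper name is made up for illustration.

```python
import shutil
import subprocess


def wine_version(executable="wine"):
    """Return the version string reported by WINE, or None if it is not found."""
    path = shutil.which(executable)
    if path is None:
        return None  # nothing called `executable` on the PATH
    result = subprocess.run(
        [path, "--version"], capture_output=True, text=True, check=False
    )
    return result.stdout.strip() or None
```

If `wine_version()` returns `None`, the installation step has not completed, or the binary lives somewhere outside your PATH.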

IrfanView installation

Download the desired 32/64-bit version of the program and then install it. The process should be fast and straightforward. You will be asked to make file type associations. You can do this, or simply skip the step, because it doesn't make any difference. You need to associate IrfanView as the default image viewer, if this is your choice, through your distro's file type management utility, whatever it may be.

And the program now works! In Plasma, on top of that, you can also easily pin the icon to the task manager.

Plugins and existing configuration(s)

Much like with Notepad++, you can import your existing workspace from a Windows machine. You can copy plugins into the plugins folder, and the IrfanView INI files into the AppData/Roaming folder. If you don't have any plugins, but you'd like to use some, then you will need to download the IrfanView plugins bundle, extract it, and then selectively, manually copy the plugins into the WINE installation folder. For instance, for the 64-bit version of the program, this is the path:

~/.wine/drive_c/Program Files/IrfanView/Plugins

As a crude example, you may want to make IrfanView be able to open WebP files. In that case, you will need to copy the WebP.dll file into the folder above, and relaunch the program. Or you can copy the entire set of IrfanView plugins. Your choice, of course.
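If you would rather script the copy than drag files around, the step can be sketched as follows. This assumes the default WINE prefix and the 64-bit path shown above. The function name is made up, and the location of the extracted plugin bundle is up to you.

```python
import shutil
from pathlib import Path

# Default 64-bit plugin folder inside the standard WINE prefix (see the path above).
PLUGIN_DIR = (
    Path.home() / ".wine" / "drive_c" / "Program Files" / "IrfanView" / "Plugins"
)


def install_plugin(dll_path, plugin_dir=PLUGIN_DIR):
    """Copy one plugin DLL into the IrfanView plugins folder and return its new path."""
    plugin_dir = Path(plugin_dir)
    if not plugin_dir.is_dir():
        raise FileNotFoundError(f"plugins folder not found: {plugin_dir}")
    return Path(shutil.copy2(dll_path, plugin_dir))
```

For the WebP example, that would be a call like `install_plugin("extracted_plugins/WebP.dll")`, where `extracted_plugins` stands in for wherever you unpacked the bundle. Relaunch IrfanView afterwards.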

Conclusion

And thus, IrfanView is now part of our growing awesome collection of dependable tools that will make the Windows to Linux migration easier. I am quite sure the Linux purists will be angered by this article, as well as the other tutorials. But the real solution is to develop programs with equivalent if not superior functionality, and then, there will be no reason for any WINE hacks.

If you're an IrfanView user, and you're pondering a move to Linux, then you should be happy with this guide. It shows how to get the program running, and even import old settings and plugins. I've been using IrfanView in Linux for many years, and there have been no problems. That doesn't say anything about the future, of course, but then, if you look at what Windows was 10 years ago, and what it is now, it doesn't really matter. Well, that's the end of our mini-project for today. See you around. More tutorials on the way!

Cheers.

3 notes

NixOS Rice Journey

I've always considered myself something of a minimalist when it comes to function over form and beauty within simplicity, but there comes a time in every Linux user's life when they must rice.

Now, firstly, I want to acknowledge that @kfithen's recent ricing journey is like, 50% of the reason I went through with this (\shrug/ he had a good idea, what can I say?!). To be fair, the other 50% is the control and understanding that a good rice gives a person over their computer and environment. I want to know how everything works, and I want to be the person who makes it all come together really well.

I'm not really the type for flashy things or eye-catching rices / eye-candy (I've been using only i3wm for almost the entirety of my Linux history), so I want my rice to take a more subtle, simple approach. I use NixOS because I want my system to stay with me forever and only do what it needs to. I want to spend years optimizing everything I use until my OS reaches its minimal state. In the same way, I want my rice to display the elegant simplicity of nothing extra. I want some basic utilities and visuals that look nice, but aren't distracting.

Most of all, I want my rice to embody my own spirit, or at least what I strive to be. I want to put work into making something that does everything it needs to without encroaching on others. Ideally, I will be able to look at this every day for the rest of my life and it will help me feel secure in myself.

All that said, here's what I've done so far:

Migrated from X11 to Wayland

Switched to greetd and tuigreet for my display manager

Switched from i3wm to Sway

Set up Waybar to tell me what I need to know

Set up a custom desktop wallpaper (as opposed to the default Xfce wallpaper or Sway grey)

Set up vifm to view and manage my filesystem

Set up imv, foot, mpv, etc. to replace xfce-given programs

Upgraded from NixOS 23.11 to 24.05

[Image ID: A (16:9) screenshot of my desktop. There are no windows open. The wallpaper prominently features a modified Nix logo in the center, taking up a little over a third of the vertical space. The logo has been modified so that each of the six "arms" corresponds to a stripe in the trans-nonbinary-flag; the top-right corresponds to the blue stripe at the top of the flag and the arms continue down the flag in a clockwise motion (i.e. blue, pink, yellow, white, purple, black). The background of the wallpaper is a dark grey that is light enough for the black arm to be visible. At the bottom of the screenshot is a Waybar status bar. On the left it shows (left to right) the sway workspaces, workspace name, and scratchpad; on the right it shows (left to right) the system volume (with wireplumber), the keyboard layout, the free space on the root partition, the memory and sway information of the system, the local ip address and wifi-connection strength of the system, the core usage of the system, and the current time and date of the system. The bar is styled with the default styles (for now). \End ID]

[Image ID: Another desktop screenshot. This one shows three windows open with the Sway window manager/compositor. One window, containing my home-manager configuration open in neovim (using the slate colorscheme), is the result of a horizontal split and lies on the left half of the screen. The right half of the screen is vertically split into two windows. The top displays an unstyled vifm, and the bottom displays the output of neofetch. The inner gaps of the windows are set to 10 in sway and there are no other gap configurations. \End ID]

So far, I've been focusing mostly on getting my system working again (leaving xfce completely left a big mark on my system, previously I was using Thunar and a billion other things I took for granted). I'm going through another terminal-based-stuff craze so I'm trying to do more and more stuff through cli and tui applications (flameshot -> shotman, xfce-img-viewer -> imv, xfce-video-player -> mpv, thunar -> vifm).

The only thing I've done cosmetically so far is the background. I wanted to get something that wouldn't clutter my screen if I ever implement transparency, so I didn't want to do anything too complicated. (I'll admit, my first thoughts were Homestuck, Lackadaisy, trains, etc., but those were way too complicated (save for some of the Homestuck stuff, that was good, I just didn't super vibe with anything)). I'm really happy with how it turned out though (the Nix logo is great for customization)! I think the trans-nonbinary-flag colors look great here and fit the vibe sickly. Also, it's Pride Month, so how could I not have something queer on my screen all the time?!?!?! (Well, besides Linux, and NixOS especially, that's queer already, lol).

This post is getting a bit long, so I'll quit my yappin' and end it off with a little summary of what I hope to do next:

Get some sort of transparency (what's the use in having that beautiful wallpaper if you can't see it, plus the background has a low enough complexity that transparency will actually work well)

Set some standards for theming/colors and put them in place (right now my Waybar and vifm especially just don't look right) (this one is going to require a lot of work, but there are also a lot of people who do this amazingly; plus, I've got some colors to work with already :), I really like the "slate" vim theme and those trans-nonbinary colors are a great start as well, particularly that purple!)

MOAR TERMINAL (maybe try again with steam-tui, risk discord-tui, and re-examine links/lynx) (plus this really helps with fileviewer in vifm)

Try out nix-flakes (I really need to figure out what these things are, they sound right up my alley!)

Set up backups of my system / get all my configs into nix (the few that aren't already there) (I have some suspicion that nix-flakes might help with this)

Learn more (there's always more to learn!)

Welp, that's about it for now! See ya :3

3 notes

Common BSoD reasons (Blue Screen of DESU)

Your computer worked fine yesterday, and now it keeps crashing. Wa da heq?

The most common issues I've seen causing BSoD aren't malware, or even user error, though those aren't the least common causes either.

They're from updates pushed by developers.

Usually it's from some system update that manages your memory allocation incorrectly, or that handles processor execution errors and mishaps incorrectly.

But it is also quite common for a driver update to cause the same issue. And because video card drivers update monthly, they're the most likely cause of breakage.

To be fair, not updating your drivers can be just as hard as updating your drivers, so it's important to know which driver configuration worked last.

And this is why Windows has system restore points.

Interestingly, one of the most common driver failures I've seen comes from the Nvidia graphics driver failing to regulate the card's internal temperature appropriately.

There's usually an internal thermal switch that cuts off when the card gets too hot, which then throttles your graphics card and will cause crashing.

And this thermal switch can degrade over time. Which means your graphics card has a lower tolerance for heat than it should.

However, elevation and humidity can *also* cause the same issue, which means your rig might operate differently in Detroit, MI than it does in Dallas, TX, or even SLC, UT.

And quite often devs try to reduce their tolerances for kicking in the graphics card's cooling fans; power-saving concerns, maybe.

And often that will wind up with your card running hotter than it should. This is why the EVGA tools (and other similar software tools) include manual fan controls.

Because the onboard regulation and default drivers tend to heck it all up.

But that's not the only issue I've seen with graphics cards. Sometimes the devs try to use more VRAM than the card has, or even less VRAM, and then just forget which blocks of VRAM they set to be used by the system.

It can literally be fixed one day and then unfixed the next, and just toggle back and forth despite user complaints.

In fact, nearly any issue that is commonly considered "due to heating issues" can be traced back to driver issues for similar reasons to what I listed.

Incorrect memory usage, cooling modules not appropriately regulated, processor and graphics processor being sent incorrect commands.

Registry issues can also cause memory issues, as certain blocks of memory get used by too many sources at the same time. So can (but not often) multiple drivers trying to run the same hardware, which can also be caused by software creating extra instances of graphics-level singleton interfaces.

So if you're ever wondering what could possibly be wrong with your PC, it's more likely a driver issue.

But... You could also have forgotten to install cooling modules, or decided to overclock your hardware without increasing cooling.

Or even overclocked your hardware too much.

But barring overclocking, it is most likely driver issues. I've even seen Windows Surface devices on their end-of-life updates get driver updates that break graphics, causing overheating and ghosting (leftover images) on the screen.

Which I feel like may have been on purpose.

Though locating an older driver, or installing Linux usually worked if no *real* hardware issue was present.

2 notes

How Does Dedicated Server Hosting Work?

With dedicated server hosting, a client is provided a physical server that is dedicated exclusively to them. Whereas in shared or virtual hosting the resources are shared between different users, a dedicated server assigns all of its resources (the CPU, the RAM, the storage space, the bandwidth) solely to one client. Here’s an overview of how it works:

1. Provisioning and Setup

Choosing Specifications: The client chooses the hardware according to their requirements, including the number of CPU cores, RAM size, storage type (SSD/HDD), and network bandwidth.

Operating System Installation: The hosting provider preinstalls the client's preferred OS, such as Linux or Windows Server, on the server.

Alt Text: Image showing how a dedicated server allows full control over resources

Server Management Software: Additional packages, such as a control panel (cPanel, Plesk) or a database server (MySQL, MSSQL), can also be preinstalled.

Initial Configuration: The hosting provider sets up network access on the server, applies the necessary security updates, and configures an appropriate firewall.

2. Access and Control

Full Root/Administrator Access: Clients fully manage their dedicated server, whether it is in Chicago or any other location, so they can install applications, manage services, and modify settings.

Remote Management: Remote access is usually provided through SSH for Linux servers and Remote Desktop Protocol (RDP) for Windows-based servers.

Control Panels (Optional): Control panels such as cPanel let clients manage servers of various flavors through a web-based graphical interface.

3. Performance and Resources

No Resource Sharing: Everything is reserved for one client, so CPU, memory, and disk are devoted entirely to that client's workloads.

Scalability: Unlike cloud hosting, dedicated servers (in Chile or anywhere else) are a little more constrained, but they can be scaled up with upgraded hardware or load balancers.

4. Security and Monitoring

Isolated Environment: Risks caused by other users are absent on a dedicated server, whether in Chicago or any other location, because no other clients are served from the same machine.

Alt text: Image representation to show how to secure a dedicated server

DDoS Protection and Firewalls: Web hosts that offer dedicated servers provide various security features to counteract cyber threats.

Monitoring Tools: Through a dashboard or some other tool, the client can check the state of a dedicated server in Chile or any other location (load, CPU usage, memory, overall network activity).
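As a rough illustration of the kind of figures such a monitoring tool reports, here is a minimal Python sketch that uses only the standard library. The function name `snapshot` and the choice of metrics are assumptions made for this example, not any hosting provider's actual tooling. Memory and network statistics are left out because the standard library has no portable call for them.

```python
import os
import shutil


def snapshot(path="/"):
    """Collect a few basic health figures for the machine this script runs on."""
    usage = shutil.disk_usage(path)  # total, used and free bytes for the given path
    used_percent = 100 * usage.used / usage.total if usage.total else 0.0
    stats = {
        "cpu_count": os.cpu_count(),
        "disk_total_gb": round(usage.total / 1e9, 1),
        "disk_used_percent": round(used_percent, 1),
    }
    # Load averages are only exposed on Unix-like systems, so this part is optional.
    try:
        one, five, fifteen = os.getloadavg()
        stats["load_1m"], stats["load_5m"], stats["load_15m"] = one, five, fifteen
    except (AttributeError, OSError):
        pass
    return stats


if __name__ == "__main__":
    for name, value in snapshot().items():
        print(f"{name}: {value}")
```

On a real server a script like this would typically run on a schedule and feed a dashboard or alerting system. Here it only prints the values once.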

5. Backup and Maintenance

Automated Backups: The hosting provider or the client can schedule regular backups to guard against data loss.

Managed vs. Unmanaged Hosting:

Managed: The hosting provider takes care of updates, security patches, monitoring, and backups.

Unmanaged: The client is fully responsible for maintenance tasks.

Alt Text: Pictorial representation of the managed and unmanaged server hosting difference

6. Network and Bandwidth

High-Speed Connections: Data centers that rent out dedicated servers mostly offer high bandwidth, so traffic is handled without lag.

Dedicated IP Address: Each server commonly gets its own IP address, which can be useful for hosting a website, mail server, or application.

7. Cost and Use Cases

Higher Cost: Because the environment is used by only one client, dedicated hosting is more costly than shared or VPS hosting.

Use Cases: It is appropriate for workloads that require a lot of resources, such as game servers, high-traffic websites, SaaS solutions, and enterprise-level databases.

To sum up, dedicated server hosting gives a client maximal control, security, and performance by letting them use the entire server. It suits companies and organizations that require high reliability, have a large workload, or need a high level of data protection.

Text

Boring Blog Episode 1

Hello ladies and gentlemen and welcome to the Boring Blog. I have spent many years playing with Linux but having got tired of fighting with graphics card settings and games I have this new Dell Inspiron 3525.

For the first two weeks of its life it ran EndeavourOS Galileo (Arch based Linux). However, after getting tired of fighting with Proton and certain games not working, I have actually restored the Dell back to its Windows 11 Home setup.

I admit I really didn’t want to go back to Windows, but I tired of not being able to use the full potential of the Vega 8 graphics card. While it is never going to set the world on fire, I was having issues getting it to just play games it is more than capable of playing.

Do you honestly care which game broke the camel’s back? I got a little bored and wanted to play Blur (Activision driving game). I tried 5 different versions of Proton and messed with Lutris, and having to re-download the game each time was frustrating as hell.

So I eventually decided it was time to dump Linux and go back to familiar Windows world.

Now I know there are going to be many people informing me of the inherent evil corporation that Microsoft is and all its constant spying upon you. Yes, I know, but one thing Windows does have is that most of the software/hardware in the world is supported and normally works out of the box first time.

To be honest I no longer give a toss who has my information. I am a very boring, nearly 50-year-old man. I am not exactly challenging Rockefeller for his billions and nothing I do is remotely exciting.

I am probably the one man who, if he was identity frauded, would probably end up getting it back, as it would be chronically sad how little you could do with it.

Please don’t do that…

So far I have managed to install and play everything I have thrown at Windows 11.

Only minor annoyance is for some reason the right click menu has been shortened and you have to pick the extra options just to get things like copy and paste. Sure I could use the keyboard shortcuts but surely the fact I was given a mouse means I don’t have to do this.

I guess this is the same as those who look at Linux and its reliance upon the terminal. We have designed a system which is more graphical and millions are spent on things like UX and UI and people still resorting to a text based prompt makes it all feel kind of redundant.

Then again this is from a guy who has a Ryzen 7 laptop and is using it to play spectrum games most of the time, so I guess I am nothing if not ironic. I doubt I am really stressing the processor by doing such.

Some people sit and play the latest Call of Duty at 4K with every graphical enhancement they can get to see the slightest flicker of a muzzle flash in the dark. I am playing Diablo from 1997 and hoping it will still play on a modern machine without fifteen patches.

I jest but I really did install OpenRCT2 0.47 which is the latest version in order to play Rollercoaster Tycoon 2 so I could design rollercoasters rather than play the actual parks.

Trust me, I have tried modern games. I last about eight and a half seconds before someone destroys me. It’s not fun. You know when you sit and play Untitled Goose Game because you think it’s fun then something is seriously wrong with your life.

I admit I was never a great games player… However I do laugh when the guy who annihilated you at WRX Rally driving gets annoyed because you can get to Level 2 of Double Dragon without losing a life and they can’t.

I may not be able to 100% Cyberpunk 2077 as I doubt my machine would even get beyond 30 FPS but I can consistently get beyond Eugene’s Lair on Manic Miner.

People sit and brag about all the games they have completed. I have completed a small handful of games: Bruce Lee on the Spectrum and both the 48 and 128 versions of Knight Tyme. I have completed Diablo and Diablo II on PC, and beyond that I also completed Untitled Goose Game.

I am never going to be classed a world class gamer.

To be honest once you have completed a game what is the point of going back?

You pay money for these games then decide oh well lets put it on the shelf never to be used again. Maybe I’m missing something, like a brain.

I don’t buy a game to complete it. I buy it to enjoy the experience and have fun. Maybe I am wrong, but if your only goal is to say yep I’ve beaten it, I think there is something fundamentally wrong.

Or is it just me that actually enjoys the game and if it challenges you and you have to try several times and eventually admit hey I just can’t get this. I’m the one who is fundamentally flawed. No because I can always aspire to come back and try harder.

However if you are at a position where no game is challenging unless you require it to be brutally punishing then surely there is a problem.

I tire of this “Git Gud” culture. I don’t want to play a fighting game where each move requires sixteen sequenced button presses to impress people.

That’s not a game it’s a memory test. Sorry I play games to escape and enjoy myself not to be an endurance test of my stamina and ability to be a human being.

I admit I have been playing TLL on the Spectrum for over twenty years and I have never managed to beat the fourth round of targets. I have got four but the last one eludes me I just don’t have the control.

I have watched RZX playback of someone else doing it and even tried replicating it. I just don’t have the skill. However I still play the game.

Now people are trying to beat games in as quick a time as possible. Completing games using shortcuts and exploits meaning they complete the game in under 5 minutes.

Using techniques to take tenths of seconds off the run. Seriously if I ever take that up then be the first to come and beat me senseless.

What a waste of life. Not only have I beat the game I do so quickly it ain’t worth watching. I honestly just don’t understand the mentality. Sure it shows they have skills to do such but now you have just completed another game and it goes on the shelf to be ignored faster than it was yesterday.

Mind you, watching modern games, that is the game. Wait for game to load, play for four minutes, get shot, wait thirty seconds for it to reload, rinse, repeat.

This is why I don’t own a modern console. I really do not want one I really don’t need one and if I had to choose one I would probably go for a Switch.

It may be the slowest of the bunch but at least the games seem to last longer than three minutes a go.

Also it’s not 4K, where you can see the balls of your horse retract in the cold. I just don’t need that level of detail.

Right, I am going to crawl back under my rock and play games that I can’t complete because I am incompetent, but I don’t care as I can keep playing, not getting any better, and I still have a game to play.

Until next time … take care people.

Text

Linux Life Episode 83

Well, here we are again ladies and gentlemen back at the blog stuff regarding my ongoing Linux experience.

Well since we last spoke I am afraid I had to retire my Dell Inspiron M6800 (Mangelwurzel) as the sound card finally decided to give up. So that meant the touchpad, the sound card and the top pair of memory sockets had stopped working so it had to go.

I have recovered the two 480GB SSDs that it had so I can reuse them in another project should the time come for it. However, when one machine exits stage right to the farm, luck would have it that I managed to get a new laptop.

The machine admittedly is another Dell laptop but this one is new. The machine in question is a Dell Inspiron 3525. It's a 15.6” laptop with a Ryzen 7 5200U with 16GB RAM, an Integrated Radeon Vega 8 graphics card, and a 1TB NVME drive.

Sure enough for the first 2 hours of its new existence, it did have a copy of Windows 11 Home (stop spitting at the back there). However, after a bit of learning how to get around the BIOS, I managed to install Endeavour OS Galileo (the latest version).

As I had an AMD graphics card (even if it’s integrated) I decided to run KDE Plasma (in the past I have run MATE but I thought I would change it up).

Now for the first few days, I was running just the basic setup, but when I installed Steam only a few games would start. Terraria, Stardew Valley, and Starbound worked fine as they, I believe, use OpenGL. However, when I tried to run a game using Proton there was no dice as Vulkan was not listed.

I had to install the version of MESA with Vulkan from the extras and then I could get Untitled Goose Game to run including picking up my XBOX 360-style gamepad. However Path of Exile and Pacman Championship Edition 2 both threw errors running Linux native versions.

However, I then turned on Proton usage, and using the Windows versions both games worked without error. Strange but I am not going to argue they work and I am not going to question beyond that.

For some reason they work if it’s through DXVK but not through the actual Linux Vulkan driver, go figure. Considering I can now play them both fine, I am not going to fight it.

Parkitect 1.9a works fine through Wine as it’s a GOG game version I am using.

I admit while I am not a huge game player it’s nice to see them in action.

I have also installed and tested various emulators. The list includes Fuse (ZX Spectrum), VICE (C64), Caprice32 (Amstrad CPC), Atari800 (Atari 8 bit), and DOSBox-X (MS-DOS). I will probably test a few more in time but all successful so far.

I even did my usual build of GDASH and it works fine. So I can play various incarnations of Boulder Dash should I ever feel so.

Set up OBS Studio, KDEnlive, VLC, Audacity, and more so it can be used to create videos or podcasts should the decision take me.

Also, Cairo-Dock is my choice of on-screen dock as it has been for many years. It’s pretty reliable and I can set it up pretty quickly now.

I have also installed some productivity apps in the form of LibreOffice, RedNotebook, Obsidian, and Focuswriter. I also installed InMyDiary via Wine as the Windows version is the most up-to-date one (I like Lotus Organiser and it looks/works the same).

So it has been running for over a week and I admit I am impressed with its capabilities.

However, it does seem the world of Linux is looking to dump X11 in favour of Wayland. Now on Mangelwurzel, I could not use Wayland as Nouveau could not run it.

But this new Dell (currently named Parsnip but could be subject to change) has a better graphics card and I have installed the version of KDE Plasma Wayland also.

So I can log out of X11 and switch to Wayland if necessary. It works and I admit speed-wise, it's slightly faster at program opening than X11, but Cairo Dock doesn’t support Wayland just yet.

However, I did manage to get a dock in the form of Latte Dock and it does work fine.

However, Steam doesn’t like Wayland. It works, but man is it slow and problematic, so at this time I still have the system boot into X11 and change up to Wayland should I need it.

So where do I go with this new Dell laptop? So far it has performed more than adequately. Also, EndeavourOS once again proves to be my preferred Linux flavour and I won’t be going back to a stable (Debian, Mint, Ubuntu) environment anytime soon unless forced.

Well, that’s a wrap for the moment… In turn, I will probably install MAME and maybe play with QEMU but that’s for the next episode should I get around to it.

Until next time… Take care.


Text

(you might know some of this already, but I'm going in detail because it's not often I get to info-dump this to someone)

What linux is:

Linux is a free and open source operating system kernel -in itself it isn't a full OS, it needs other parts; if you want I can explain what a kernel is/does- made by Linus Torvalds in 1991. It was inspired by Minix, a small Unix-like OS written for teaching. Although linux isn't a full OS, people will generally refer to any OS that uses the linux kernel as "linux".

Since then it has become a major cornerstone of the internet. ~90% of servers online are running some kind of linux, although online surveys show that only 3-5% of people use linux as a desktop OS.

because linux is free and open source -meaning anyone can download or edit the source code- there are several distributions (or distros) of linux to download, each with different package managers (a place to download new software), desktop environments (which determine what apps you have installed and how your desktop looks), and other stuff. Some linux distros are based on others and they may have some things in common. For example: all distros based on Debian use Debian's "apt" package manager.

Because all linux distros are based on the same kernel (it's not often that distros mod the kernel for various reasons) most (not all) software is compatible between distros with a little finagling.

How to install linux:

installing linux may vary from computer to computer, and on some computers it is nearly impossible; although in theory anything with 512 MB of RAM could run linux. But a lot of these details may be different on your computer, so look into that first.

find a distro you want to use. Some distros I'd advise staying away from if you are a beginner, like Arch. There are tons of distros out there so it may seem like a daunting task, but here are a good few recommendations for beginners. Linux Mint is a good place to start, it's basically the beginner distro. it's simple, works with most computers, and suits just about anyone, from long time users to beginners, to shitty little cousins. Pop!_OS is another good one I've seen recommended, I've never used it personally but I've heard good things. For a long time Ubuntu (mint's daddy in a sort of way) was considered one of the best general distros, but in recent years it has kind of fallen off, but still, it's good to keep in mind as a backup if something goes wrong.

make a bootable usb thumb drive. to do this you have to download the iso of the OS you are going to use and use a flashing software to make the usb bootable. Don't just put the iso on a thumb drive like what I did when I first installed linux, make sure you are using a flashing software. Rufus is a good flashing software for Windows (assuming that is what you're using). here is a good article on how to make a bootable usb

go into a one time boot/bios. the next thing you need to do is boot your computer from the usb, which can be done by rearranging the boot order in the bios, or running a one time boot on your computer. this is where things start to change depending on your computer. for most computers if you reboot you will see a little screen flash that may have some technical info and say something like "[key] for bios/uefi setup [key] for one-time boot launch." On my computer those keys are f2 and f12 respectively, but it may be different on yours. Some computers *ahem chromebook* may not give you access to the bios or one time boot. Here is a guide on common ways to boot into bios/one time boot, although I recommend looking up your laptop model/motherboard model for specific details.

boot from the usb. now that you are in the bios/boot utility you need to boot into the usb. when booted into the usb, most distros will allow you to test how performance is on your computer, test the desktop environment, and make sure you have all your drivers and other things worked out so you have access to wifi and bluetooth and stuff.

run the installer. on the desktop there should be an installer application that you can run, most of them are pretty simple; they will just ask you stuff like usernames, time zones, pre-installed apps, etc. etc. WARNING: installing linux will wipe your drive clean, anything you had will be gone. Make sure you have backups of anything that may be important.

reboot your computer & unplug the usb; after you run the installer linux should be on your computer now

many distros have installation guides on their websites if you want to follow those.

if you want to install linux on a chromebook it is possible (my first laptop was a chromebook and I installed Solus on it, it was my first distro and a big regret; nothing against Solus, I just didn't like it). here are some resources if you want to do that: [1] [2] [3]

How to use linux:

one of the big cornerstones of linux is the terminal. due to its development history the terminal is the dominant way to interact with your computer. a lot of distros may have a way to do things with a gui, but you will always have a terminal, so you best be used to it.

this is what a terminal emulator looks like. Terminals are technically a part of the hardware (and can be found under /dev/tty* in linux), but you can access them from the desktop through the emulator.

on the terminal you will be greeted by a command prompt, from here you can type out different commands. when you type a command the shell (the software that the terminal uses to communicate with the OS) will search through the $PATH variable and check every folder it points to, and if the command you type is found then it will run the command with whatever parameters you give.

commands follow a formula: CommandName parameter1 parameter2 ...
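to make that a bit more concrete, here is a rough Python sketch of those two steps: splitting a typed line into the command name and its parameters, then walking the folders in $PATH until the command turns up. The names `parse` and `find_command` are made up for this example, and real shells do more than this (built-in commands like cd, aliases, and so on), so treat it as an illustration rather than how any particular shell is written.

```python
import os
import shlex


def parse(line):
    """Split a typed line into the command name and its list of parameters."""
    parts = shlex.split(line)  # respects quotes, e.g. cat "my file.txt"
    return parts[0], parts[1:]


def find_command(name, path_value=None):
    """Return the first executable called `name` in the PATH folders, or None."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    for folder in path_value.split(os.pathsep):
        if not folder:
            continue  # skip empty entries to keep the sketch simple
        candidate = os.path.join(folder, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


if __name__ == "__main__":
    name, params = parse("ls -l ~/Documents")
    print(name, params)
    print(find_command(name))
```

if `find_command` comes back with nothing, that matches the "command not found" message you get from the shell when you mistype something.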

some commands won't have any parameters, others will, it all depends on the command. But here are some common commands to help you understand

before you begin, just know that "~" refers to your home folder; directory is just a fancy term for folder (that is due to the history of computers); "." refers to the directory you are currently in, and ".." refers to the directory above you.
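if you want to see what those symbols turn into, this small Python sketch expands them into full paths. `resolve` is just a helper name I made up for the example, and the paths it prints will depend on your own machine.

```python
import os


def resolve(path):
    """Expand "~", "." and ".." into a full path, based on where you currently are."""
    return os.path.abspath(os.path.expanduser(path))


if __name__ == "__main__":
    for symbol in ("~", ".", "..", "~/Documents"):
        print(symbol, "->", resolve(symbol))
```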

ls (list): lists the contents of whatever folder you're in

pwd (print working directory): prints the file path to your folder (ex: /home/uppereepy/Documents)

cd (change directory): lets you change which folder you're in (ex cd ..)

cat (concatenate): concatenates (prints) files to the screen

mv (move): move files to new locations (ex: mv HelloWorld.txt ~/Desktop), can also be used to rename files (ex: mv HelloWorld.txt Hello.txt)

man (manual): if you don't know what a command does then you can use the man command to look up what a command does (ex: man ls).
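if you already know a little Python, it can help to see the same operations written out there. This sketch runs Python stand-ins for cd, pwd, ls, cat and mv inside a temporary folder so nothing on your actual system gets touched. The function name `demo` and the file names are only for illustration, and there is no direct stand-in for man here.

```python
import os
import shutil
import tempfile


def demo():
    """Run Python stand-ins for cd, pwd, ls, cat and mv inside a throwaway folder."""
    start = os.getcwd()
    with tempfile.TemporaryDirectory() as sandbox:
        try:
            os.chdir(sandbox)                            # cd <sandbox>
            print(os.getcwd())                           # pwd
            with open("HelloWorld.txt", "w") as handle:  # make a file to play with
                handle.write("hello\n")
            print(os.listdir("."))                       # ls
            with open("HelloWorld.txt") as handle:       # cat HelloWorld.txt
                print(handle.read(), end="")
            shutil.move("HelloWorld.txt", "Hello.txt")   # mv HelloWorld.txt Hello.txt
            return os.listdir(".")
        finally:
            os.chdir(start)                              # go back before the folder is deleted


if __name__ == "__main__":
    print(demo())
```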

some advice: you will grow to understand the terminal in time, and although tutorials will help, using the terminal will help you understand things a lot more. manual entries, when you first start, may seem esoteric and hard to read, so just know it is ok to look up what a command does on the internet. learning the C programming language can really help you understand the terminal, and so does reading about the history of computers or using older computers

Resources to get started:

Wikipedia page for linux computer terminals The GNU project (where took off) top 50 commands how to use pipes online manual linux for beginners yt linux for hackers by network chuck (he is a little annoying, but he's how I got started)

and if you want to get into the history of computers I'd suggest looking into The 8-bit Guy on yt and Unix: a History and a Memoir by Brian Kernighan (one of the developers behind Unix and wrote "The C Programming Language")

anyways, that's all I got, I am tired (it is nearly 12:00 am while I am writing this), sorry for the extremely long post

gee i really want to get into linux but idk how to even start.. I wonder if there are any smart transgender women out there who could explain it to me in extreme detail while i bat my eyes at them.....

#long post#linux#linuxposting#really long post#linux mint#Pop_OS!#linux for beginners#information#infodump


Text

I use Arch, BTW

I made the switch from Ubuntu 23.04 to Arch Linux. I embraced the meme. After over a decade since my last failed attempt at daily driving Arch, I'm gonna put this as bluntly as I can possibly make it:

Arch is a solid Linux distribution, but some assembly is required.

But why?

Hear me out here, Debian and Fedora family enjoyers. I have long had the Debian family as my go-to distros, and I also swallowed the RHEL pill and switched my server on another machine over to Rocky Linux from Ubuntu LTS. More on that in a later post when I'm more acclimated with that. But for my personal primary laptop, a Dell Latitude 5580, my reason for switching was ultimately my continual frustration with Canonical's decision to move commonly used applications, particularly the web browsers, exclusively to Snap packages, along with the additional overhead and just weird issues that came with those being containerized instead of just running on the bare metal. Now I understand the reason for this move from deb repo to Snap, but the way Snap implements these kinds of things just leaves a sour taste in my mouth, especially compared to its alternative from the Fedora family, Flatpak. So for what I needed and wanted, something up to date and with good support and documentation where I didn't have to deal with 1 particular vendor's bullshit, I really only had 2 options: Arch and Gentoo (Fedora is currently dealing with some H264 licensing issues and quite honestly I didn't want to bother with that for 2 machines).

Arch and Gentoo are very much the same but different. And ultimately Arch won over the 4chan /g/ shitpost that has become Gentoo Linux. So why Arch? Quite honestly, time. Arch has massive repositories of both Arch team maintained and community software, with the majority of what I need already packaged in binary form. Gentoo is much the same way, minus the precompiled binary aspect, as the Portage package manager downloads source code packages and compiles things on the fly specifically for your hardware. While yes this can make things perform better than precompiled binaries, the reality is the difference is negligible at best and placebo at worst depending on your compiler settings. I can take a weekend to install everything and do the fine tuning but if half or more of that time is just waiting for packages to compile, no thanks. Add to that the massive resource that is the Arch User Repository (AUR), and Arch was a no-brainer, and vanilla Arch was probably the best way to go. It's a Lego set vs 3D printer files and a list of hardware to order from McMaster-Carr to screw it together, metaphorically speaking.

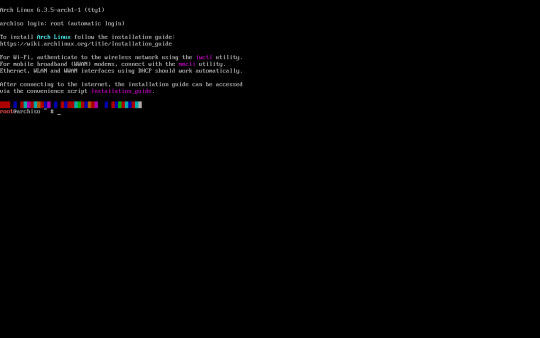

So what's the Arch experience like then?

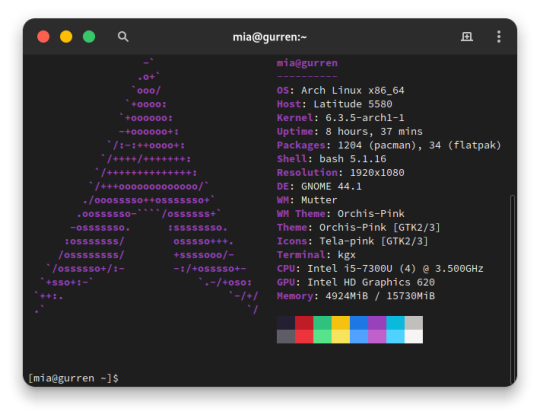

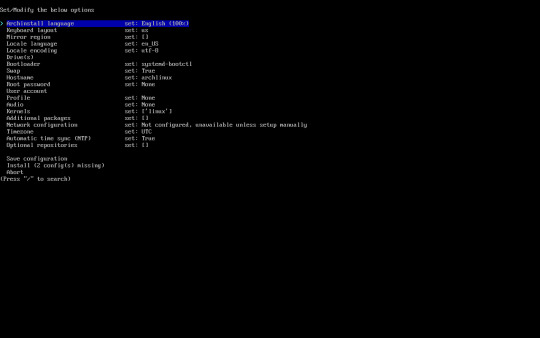

As I said in the intro, some assembly is required. To start, the installer image you typically download is incredibly barebones. All you get is a simple bash shell as the root user in the live USB/CD environment. From there we need to do 2 things: 1) get the thing online (the nmcli command came in handy here, as this is a laptop and I primarily use it wirelessly), and 2) run the archinstall script. At the time I downloaded my Arch installer, archinstall was broken on the base image, but you can update it with a quick pacman -S archinstall once you have it online. archinstall does pretty much all the heavy lifting for you and lets you choose all the primary options: desktop environment/window manager, boot loader, audio system, language options, the whole works. I chose Gnome, the GRUB bootloader, the Pipewire audio system, and EN-US for just about everything. Even then, what you have once it finishes is a minimal installation.

Post-install experience is straightforward, albeit just repetitive. Right off the archinstall script what you get is relatively barebones, a lot more barebones than I was used to with Ubuntu and Debian Linux. I seemingly constantly was missing one thing or another, checking the wiki, checking the AUR, asking friends who had been using Arch for even longer than I ever have how to address dumb issues. Going back to the Lego set analogy, archinstall is just the first bag of a larger set. It is the foundation on which you can further make it your own. Everything after that point is the second and onward parts bags: all of the additional media codecs, supporting applications, visual tweaks like a boot animation instead of text mode verbose boot, and things that most distributions such as Ubuntu or Fedora have off the rip, you have to add on yourself. This isn't entirely a bad thing though, as at the end you're left with what you need and at most very little of what you don't. Keep going through the motions, one application at a time, pulling from the standard pacman repos, AUR, and Flatpak, and eventually you'll have a full fledged desktop with all your usual odds and ends.

And at the end of all of that, what you're left with is any other Linux distro. I admit previously I wrote Arch off as super unstable and only for the diehard masochists after my last attempt at running Arch when I was a teenager went sideways, but daily driving it on my personal Dell Latitude for the last few months has legitimately been far better than any recent experiences I've had with Ubuntu. I get it. I get why people use this, why people daily drive this on their work or gaming machines, why people swear off other distros in favor of Arch as their go-to Linux distribution. It is only what you want it to be. That said, I will not be switching to Arch any time soon on mission critical systems or devices that will have a high run time with very specific purposes in mind. Things like servers or my Raspberry Pis will still get some flavor of RHEL or Debian stable, and since Arch is one of the most bleeding edge distros, I know my chance of breakage is non zero. But so far the seas have been smooth sailing, and I hope to daily this for many more months to come.


Note

I've seen a couple people talk about Linux. Is it worth it for someone who basically uses their laptop like an app machine? I play don't starve together, I have firefox, and I write, aaannnnnd that's about it. It slows down sometimes when I'm playing different games but I always thought that was what I get for not having the space, or the desk, for a desktop.

Oh, you are actually the ideal candidate.

If you primarily use your computer for web browsing, media consumption, office work and/or steam games, you are THE target audience for Linux desktop OSes.

The only people who I wouldn't recommend make the jump are people who do very specialized art, like 3D modelling and animation from scratch (blender works on linux but little else in that field), and people who do high end or competitive gaming without the use of steam.

I keep specifying steam, because the steam launcher has a bunch of whateverthefuck going on that makes it basically trivial to run steam supported games on linux. They even provide the setting for it inside the linux version of Steam, called "proton compatibility layer." Which is very roughly versions of all the files that a game would normally be able to call from the windows OS, only with linux information in them. So there's very little impact on hardware performance.

There can even be improvements in performance because the actual operating system is so lightweight that there are more hardware resources available to the game.

Personally, I find that the thing that is the most improved in terms of response is actually Firefox. On Windows, launching firefox always took me upwards of 10 seconds. Once open, it worked like a dream, but for whatever reason Windows hated actually opening the program for me.

On linux, it's instant. If there's a loading speed, it's too short for me to notice in the time it takes to move my eyes from the firefox option in the start menu, to the center of the screen where the window actually opens.

Plus, and this is something I LOVE for using linux as an "app machine," the linux software manager actually works. If you've ever used an app store on your phone, a software manager is basically the same function. You install programs from it, and then it updates and secures them automatically for you.

Compare that to windows, where most programs need to be downloaded as .exe files from the developer, then installed manually by you, then either you check for updates on the website manually or the program checks automatically whenever it opens, and then you have to download a whole other fucking exe file to run the update.

None of that bullshit.

You install it in the software manager and then any time your computer runs system updates, it will also update all the software.

Fucking magnificent. I live for that shit. In fact, it's such an integral feature and so much better from a use perspective that windows tried to re=create it with the windows store. Only their version sucks ass and has like no fucking useful programs. So then windows users tried to re-create it with a system called Chocolate Software Management For WIndows . And, credit where it is due, if a program has choco support it does work beautifully!

It's just that very few programs do.

Meanwhile, back on linux, they even introduced a feature called Flatpak that allows people who make programs to basically just.... pop it into the software store, so even weird shit like the specific game mod for FFXIV that I personally use has its own software manager entry.

Anyway, okay, TLDR:

You are literally, almost as if divinely crafted, the ideal kind of user for switching to Linux.

I like Linux Mint in XFCE, but many people swear that Ubuntu is the place to start. Which one I recommend to you would come down to whether you prefer the "feel" and "look" of older windows like XP and 7, or MacOS and newer Windows like 11. For the older style, do Mint. For the newer, try Ubuntu.

#I really have just become a Linux Guy haven't I#Linux Mint#XFCE#Ubuntu#Lubuntu#Kubuntu#(Those are different types of Ubuntu)#Linux#God help me#Also to the people reading this and thinking THAT IS NOT WHAT A FLATPAK IS!!!!#or THAT IS NOT HOW CHOCO UPDATER WORKS#Or whatever else#Please understand that I am hypersimplifying because???? NONE OF THAT IS RELEVENT

70 notes

Text

NXX Members with Coding Languages I Think They Would Enjoy

In which I use some of my vague knowledge for entertainment purposes :D

WC: 0.7K

MC/Rosa: the building blocks in Scratch

Whatever MC does, she gives it her all and coding is no exception!

I'm thinking she'll likely start small and build from there (pun unintended), and that she'll probably take a lot of enjoyment from the puzzle-shaped pieces in Scratch

She's learning the foundation to writing programs!

Soon she will be able to master every language and become an expert in all things programming

Luke: Command line / Python

Command line isn't exactly a coding language per se, but if Luke did have a favorite language, I think it'd be Python (although I think he can comfortably code in multiple languages since he can easily hack into the Big Data Lab!)

I'm saying Python slightly because I'm biased, but also because Python offers syntax that is easy to read and is also fairly simple to write -- because of said less complicated syntax

Also, snek! A young Luke sees that the language is named after a Cool Reptile and says, "yeah I'm gonna go learn that right now"

Moving on to the Not A Coding Language: Command line (could be Windows, Linux, whatever they have in Stellis)

Luke is the kind of person who likes taking things apart, building them back together again, but it's not the same as before oh no you'll find that your toaster is not an ordinary toaster anymore, it also triples as an inkjet printer and a metal detector!

With any kind of command line interface he's allowed to go crazy with an electronic device! He used to have a cheat sheet of all the commands next to him but NOT ANYMORE, he's memorized them after hours of playing around with them (and if he forgets anything, there's probably a command along the line of /help that he can type in to get the information he's looking for)

Artem: CoffeeScript

While Artem likes JavaScript well enough, he enjoys the simplicity of CoffeeScript

Because CoffeeScript is essentially just JavaScript with nicer syntax (or as the CoffeeScript website puts it, "an attempt to expose the good parts of JavaScript in a simple way.")

Also, it has coffee in the name and while he's not going to admit it, it's hard to resist (and coffee is pretty on brand for him)

If Artem is in the mood for curly brackets, he can switch to JavaScript fairly easily, but he's very comfortable with CoffeeScript, even if it does raise eyebrows at times due to its status as a lesser known language

"CoffeeScript?" Celestine asks. "Is that a real thing?"

"Yes," Artem says, and quotes the CoffeeScript website: "It's just JavaScript."

Vyn: C or C++

Because Vyn is insane

That's it. That's my reasoning

I think other than C or C++, Vyn might like SQL? That's the database language for managing/sorting data and who knows? He might like that. Might find it relaxing, even

But back to C!

Vyn falls in love with C by first going through the full experience of pain: the missing semicolons, the dropped bracket, each and every skipped indentation

But C can be used for loads of things, I mean it's been around for approximately 50 years and heaps of things are coded in C

And Vyn knows, that when he's mastered it

He will be unstoppable

Marius: LOLCODE

I have been waiting for the day I can finally bring up this coding language, and today is that day!

Because tell me Marius von Hagen would not have the utmost pleasure beginning programs with HAI and ending them with KTHXBYE

Tell me he wouldn't enjoy loops that are essentially written as IM IN YR LOOP and IM OUTTA YR LOOP (rather than the conventional phrasing for while and for loops)

While I don't have enough knowledge to write in LOLCODE, I still find it hilarious and I think Marius would have a blast just playing around with the commands

Other than LOLCODE, I think Marius would like CSS (and maybe even HTML, they go hand in hand)! It can be a pain sometimes but the possibilities are next to endless (making things pretty with computer language? Seems like it could be right up his alley)

Side note on Vyn and C/C++: I have the utmost respect for people who can write in C, because I have struggled with it -- although honestly maybe that's because I don't spend enough time learning it (and because Python 3 is my favorite haha)

Edit: to all the people in the tags who said Luke would like Java, yes you are completely right I just know next to nothing about Java :')

#tears of themis#let me be a nerd for a quick second#yes I am indeed talking about programming languages today#sam wherever you are I think you might enjoy this#luke pearce#artem wing#vyn richter#marius von hagen#mc | rosa

85 notes

Text

5m Mathmrs. Mac's Messages

TLDR: With a bit of research and support we were able to demonstrate a proof of concept for introducing a fraudulent payment message to move £0.5M from one account to another, by manually forging a raw SWIFT MT103 message, and leveraging specific system trust relationships to do the hard work for us!

Before we begin: This research is based on work we performed in close collaboration with one of our clients; however, the systems, architecture, and payment-related details have been generalized / redacted / modified so as not to disclose information specific to their environment.

With that said.. *clears throat*

The typical Tactics, Techniques and Procedures (TTPs) against SWIFT systems we see in reports and the media are - for the most part - the following:

Compromise the institution's network;

Move laterally towards critical payment systems;

Compromise multiple SWIFT Payment Operator (PO) credentials;

Access the institution's SWIFT Messaging Interface (MI);

Key in - and then authorize - payment messages using the compromised PO accounts on the MI.

This attack-path requires the compromise of multiple users, multiple systems, an understanding of how to use the target application, bypass of 2FA, attempts to hide access logs, avoid alerting the legitimate operators, attempts to disrupt physical evidence, bespoke malware, etc. – so, quite involved and difficult. Now that’s all good and fine, but having reviewed a few different payment system architectures over the years, I can’t help but wonder:

“Can't an attacker just target the system at a lower level? Why not target the Message Queues directly? Can it be done?”

Well, let's find out! My mission begins!

So, first things first! I needed to fully understand the specific “section” of the target institution's payment landscape I was going to focus on for this research. In this narrative, there will be a system called “Payment System” (SYS). This system is part of the institution's back-office payment landscape. It receives data in a custom format and outputs an initial payment instruction in ISO 15022 / RJE / SWIFT MT format. The reason I sought this scenario was specifically because I wanted to focus on attempting to forge an MT103 payment message - that is:

MT – “Message Type” Literal;

1 – Category 1 (Customer Payments and Cheques);

0 – Group 0 (Financial Institution Transfer);

3 – Type 3 (Notification);

All together this is classified as the MT103 “Single Customer Credit Transfer”.
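To make the naming scheme above concrete, here is a minimal sketch that splits an identifier such as "MT103" into its three digits. The function name `decode_mt` and the returned dictionary keys are my own illustration and not part of any SWIFT tooling; it only shows the positional breakdown described above and says nothing about which combinations are valid message types.

```python
import re


def decode_mt(identifier: str) -> dict:
    """Split an 'MTnnn' identifier into category, group and type digits.

    Hypothetical helper for illustration only: it checks the shape of the
    identifier, not whether the message type actually exists.
    """
    match = re.fullmatch(r"MT(\d)(\d)(\d)", identifier.strip().upper())
    if match is None:
        raise ValueError(f"not an MTnnn identifier: {identifier!r}")
    category, group, msg_type = (int(digit) for digit in match.groups())
    return {"category": category, "group": group, "type": msg_type}


print(decode_mt("MT103"))
```

For "MT103" this yields category 1, group 0 and type 3, matching the list above.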

Message type aside, what does this payment flow look like at a high level? Well I’ve only gone and made a fancy diagram for this!

Overall this is a very typical and generic architecture design. However, let me roughly break down what this does:

The Payment System (SYS) ingests data in a custom - or alternative - message format from its respective upstream systems. SYS then outputs an initial payment instruction in SWIFT MT format;

SYS sends this initial message downstream to a shared middleware (MID) component, which converts (if necessary) the received message into the modern MT format understood by SWIFT - essentially a message broker used by a range of upstream payment systems within the institution;

MID forwards the message in its new format on to the institution's Messaging Interface (let's say it's SAA in this instance) for processing;

Once received by SAA, the message content is read by the institution's sanction screening / Anti-money laundering systems (SANCT).

Given no issues are found, the message is sent on to the institution's Communication Interface (SWIFT Alliance Gateway), where it's then signed and routed to the recipient institution over SWIFTNet.
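The five steps above can be sketched as a toy pipeline. This is only a mental model under loud assumptions: the `Payment` class, the stage functions, the format labels and the keyword-based screening rule are all invented for illustration and do not reflect how SYS, MID, SAA, the screening systems or SAG are actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Payment:
    body: str
    fmt: str = "custom"      # format the message is currently in
    signed: bool = False     # set only by the final (SAG) stage
    trail: list = field(default_factory=list)  # systems that handled it


def sys_stage(msg):
    # SYS: custom upstream format in, initial MT-style instruction out.
    msg.fmt = "MT (initial)"
    msg.trail.append("SYS")
    return msg


def mid_stage(msg):
    # MID: converts (if necessary) to the MT format used downstream.
    msg.fmt = "MT"
    msg.trail.append("MID")
    return msg


def saa_stage(msg):
    # SAA: the Messaging Interface receives the message for processing.
    msg.trail.append("SAA")
    return msg


def sanct_stage(msg, blocked=("SANCTIONED",)):
    # SANCT: placeholder screening rule (an assumption, not a real check).
    msg.trail.append("SANCT")
    if any(term in msg.body for term in blocked):
        raise ValueError("message held by screening")
    return msg


def sag_stage(msg):
    # SAG: signs the message before it would be routed onwards.
    msg.signed = True
    msg.trail.append("SAG")
    return msg


PIPELINE = [sys_stage, mid_stage, saa_stage, sanct_stage, sag_stage]


def run_flow(msg):
    for stage in PIPELINE:
        msg = stage(msg)
    return msg


result = run_flow(Payment(body="pay 100 to ACME"))
print(result.trail, result.signed)
```

The point of the model is simply that signing happens last, so every earlier stage trusts whatever the previous stage handed it.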

OK, so now I have a general understanding of what I'm up against. But if I wanted to exploit the relationships between these systems to introduce a fraudulent payment without targeting any payment operators, I was going to need to dig deeper and understand the fundamental technologies in use!

So how are these messages actually 'passed' between each system? I need to know exactly what this looks like and how it's done!

More often than not, Message Queues (MQ) are heavily used to pass messages between components in a large payment system. However, there are also various “Adapters” that may be used between systems communicating directly with the SAG (such as SAA or other bespoke/3rd party systems). These are typically the:

Remote API Host Adapter (RAHA);

MQ Host Adapter (MQHA);

Web Services Host Adapter (WSHA).

Having identified that MQ was in use, my initial assumption was that there was most likely a dedicated Queue Manager (QM) server somewhere hosting various queues that systems push messages to and pull messages from. However, due to SWIFT CSP requirements, this would most likely - at a minimum - take the form of two Queue Managers: one which manages the queues within the SWIFT Secure Zone, and another that manages queues for the general corporate network and back office systems.

Let's update that diagram to track / represent this understanding:

Now I could research how this 'messaging' worked!

There are multiple ways to configure Message Queue architectures. In this case there were various dedicated input and output queues for each system, and the message flow looks something like this:

Full disclosure, turns out it’s hard to draw an accurate - yet simple - MQ flow diagram (that one was basically my 4th attempt). So it’s.. accurate 'enough' for what we needed to remember!
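As a rough stand-in for that flow, the idea of dedicated input and output queues per system can be modeled with Python's standard `queue` module. Everything here is an assumption for illustration: the queue names, the `ROUTES` table and the `hops` field are made up, and a real deployment would use an MQ product with Queue Managers and channels rather than in-process queues.

```python
from queue import Queue

SYSTEMS = ["SYS", "MID", "SAA"]

# One input and one output queue per system (names are hypothetical).
queues = {f"{name}.{side}": Queue() for name in SYSTEMS for side in ("IN", "OUT")}

# Which output queue feeds which input queue.
ROUTES = {"SYS.OUT": "MID.IN", "MID.OUT": "SAA.IN"}


def process(system):
    """Move everything from a system's input queue to its output queue."""
    q_in, q_out = queues[f"{system}.IN"], queues[f"{system}.OUT"]
    while not q_in.empty():
        msg = q_in.get()
        msg["hops"].append(system)  # record that this system handled it
        q_out.put(msg)


def forward():
    """Deliver messages from each routed output queue to the next input queue."""
    for src, dst in ROUTES.items():
        while not queues[src].empty():
            queues[dst].put(queues[src].get())


def run():
    for system in SYSTEMS:
        process(system)
        forward()


queues["SYS.IN"].put({"body": "example payment", "hops": []})
run()
delivered = queues["SAA.OUT"].get()
print(delivered["hops"])
```

Each system only ever looks at its own input queue, which is the trust relationship the rest of this write-up is interested in.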

Now that I had a good understanding of how it all worked, it was time to define my goal: 'Place a payment message directly on to a queue, and have it successfully processed by all downstream systems'.

This sounds simple, just write a message to a queue, right? But there are a few complications!

Why are there few indications of this attack vector in the wild?

How do I even gain “write” access to the right queue?

What protects the message on the queues?

What protects the messages in transit?

What format are the messages in?