#gpu cloud

Explore tagged Tumblr posts

Text

Need powerful GPU compute without the high upfront investment? Sharon AI makes it simple to rent cloud GPUs on-demand with transparent, competitive pricing. Our platform offers affordable GPU cloud rental options designed for AI training, deep learning, scientific computing, and more.

High-Performance Compute at the Right Price

Whether you're an individual developer or managing enterprise-scale workloads, our GPU rental service delivers:

On-Demand Access: Instantly deploy top-tier GPUs—like NVIDIA A100, H100, and more—directly from your browser or CLI.

Flexible Pricing: Pay hourly or monthly with no long-term contracts. Only pay for what you use.

Global Infrastructure: Run jobs closer to your data with a globally distributed network optimized for low latency.

Easy Scaling: Start small and scale compute resources as your project grows—perfect for startups and large teams alike.

Perfect for AI, ML & Research Workloads

Our affordable GPU cloud rental solutions are built for performance-critical use cases including machine learning training, inference, video rendering, and simulation. With full control over your environment and access to high-performance GPUs, you get the power you need—when you need it.

Skip the complexity of hardware procurement. Rent cloud GPUs with Sharon AI and accelerate your compute workflows at a fraction of traditional infrastructure costs.

👉 Check pricing and get started now


Text

Supercharge your AI and ML workloads using Google Cloud GPU services at Izoe Solution. We deliver scalable, high-speed GPU solutions for deep learning, data science, and large-scale computation. Achieve faster results with our expert deployment and support.


Text

WATCH: Lambda CEO Stephen Balaban says long-term demand for compute is already priced in, but he sees more compute demand coming, as evidenced by the popularity of OpenAI's image generation. He joins Caroline Hyde on "Bloomberg Technology" to discuss.


Text

#gpu cluster#gpu hosting#gpu cloud#ai#gpu dedicated server#artificial intelligence#nvidia#artificialintelligence#gpucloud#gpucluster


Text

Reducing Time-to-Market with QSC Cloud GPUs

In today’s fast-paced technological landscape, enterprise-level companies and AI-driven startups are under immense pressure to stay ahead of the curve. With the rise of machine learning models, deep learning techniques, and big data analytics, the need for high-performance computing resources has never been greater. However, as these companies scale their AI projects, they often face significant challenges related to GPU availability, scalability, and cost efficiency. This is where QSC Cloud comes in, offering a powerful and flexible solution to accelerate AI workflows and reduce time-to-market.

The Challenges Facing AI-Driven Enterprises

For AI and machine learning teams, the ability to quickly develop, train, and deploy complex models is crucial. However, many organizations encounter common pain points in their journey:

Limited Access to High-Performance GPU Clusters: Advanced machine learning models require significant computational power. Traditional data centers may not have enough GPUs available or may involve lengthy approval processes to gain access to the necessary resources.

Scalability Issues: As AI projects grow, so do the computational demands. Many enterprises struggle to quickly scale their infrastructure to meet these rising demands, which can lead to delays in project timelines.

Long Setup Times and High Costs: Setting up and maintaining GPU infrastructure can be expensive and time-consuming. Enterprises often face high upfront costs when investing in their own hardware and the logistics involved in maintaining these systems.

These challenges result in slowed innovation and delayed time-to-market, which can directly impact a company’s competitive advantage in the AI space.

How QSC Cloud Can Help

QSC Cloud provides a cost-effective, flexible, and scalable solution to these challenges. By offering on-demand access to high-performance GPU clusters like the Nvidia H100 and H200, QSC Cloud empowers enterprises to seamlessly scale their AI workflows without the burden of managing complex hardware or worrying about infrastructure limitations.

Here’s how QSC Cloud helps reduce time-to-market:

1. On-Demand Access to High-Performance GPUs

With QSC Cloud, enterprises can instantly access powerful GPU clusters on-demand, whether they are running deep learning models, large-scale data analytics, or training AI systems. This eliminates the need for long procurement processes and gives teams the flexibility to rapidly access the compute power they need, when they need it.

2. Scalability to Meet Growing Demands

One of the biggest benefits of QSC Cloud is its scalability. As AI projects grow in complexity, so does the need for more computational power. QSC Cloud offers the flexibility to scale resources up or down as needed, without the hassle of investing in additional hardware or worrying about infrastructure limitations. This means teams can remain agile and responsive to project needs, reducing downtime and accelerating development cycles.

3. Cost Efficiency and Quick Setup

Traditional data centers often require significant upfront investment and maintenance costs. QSC Cloud, however, offers a pay-as-you-go model, allowing enterprises to only pay for the resources they actually use. This makes it easier to manage costs and prevents overspending on unused infrastructure. Additionally, with quick setup times and easy deployment, businesses can focus on their AI projects rather than worrying about managing hardware or infrastructure.

Accelerating Innovation with QSC Cloud

In the fast-moving world of AI development, time is of the essence. Delays in computational resources can lead to missed opportunities and delayed product releases. By using QSC Cloud, enterprises can streamline their workflows, reduce the time required for training and inferencing, and ultimately reduce their time-to-market. Whether you are a large enterprise or an AI-driven startup, QSC Cloud offers the scalability, cost-effectiveness, and speed needed to stay ahead of the competition and innovate faster.

With QSC Cloud, you can solve the challenges of GPU availability, scalability, and cost efficiency, enabling you to accelerate your AI projects and meet the growing demands of the market.


Text

Efficient GPU Management for AI Startups: Exploring the Best Strategies

The rise of AI-driven innovation has made GPUs essential for startups and small businesses. However, efficiently managing GPU resources remains a challenge, particularly with limited budgets, fluctuating workloads, and the need for cutting-edge hardware for R&D and deployment.

Understanding the GPU Challenge for Startups

AI workloads—especially large-scale training and inference—require high-performance GPUs like NVIDIA A100 and H100. While these GPUs deliver exceptional computing power, they also present unique challenges:

High Costs – Premium GPUs are expensive, whether rented via the cloud or purchased outright.

Availability Issues – In-demand GPUs may be limited on cloud platforms, delaying time-sensitive projects.

Dynamic Needs – Startups often experience fluctuating GPU demands, from intensive R&D phases to stable inference workloads.

To optimize costs, performance, and flexibility, startups must carefully evaluate their options. This article explores key GPU management strategies, including cloud services, physical ownership, rentals, and hybrid infrastructures—highlighting their pros, cons, and best use cases.

1. Cloud GPU Services

Cloud GPU services from AWS, Google Cloud, and Azure offer on-demand access to GPUs with flexible pricing models such as pay-as-you-go and reserved instances.

✅ Pros:

✔ Scalability – Easily scale resources up or down based on demand.

✔ No Upfront Costs – Avoid capital expenditures and pay only for usage.

✔ Access to Advanced GPUs – Frequent updates include the latest models like NVIDIA A100 and H100.

✔ Managed Infrastructure – No need for maintenance, cooling, or power management.

✔ Global Reach – Deploy workloads in multiple regions with ease.

❌ Cons:

✖ High Long-Term Costs – Usage-based billing can become expensive for continuous workloads.

✖ Availability Constraints – Popular GPUs may be out of stock during peak demand.

✖ Data Transfer Costs – Moving large datasets in and out of the cloud can be costly.

✖ Vendor Lock-in – Dependency on a single provider limits flexibility.

🔹 Best Use Cases:

Early-stage startups with fluctuating GPU needs.

Short-term R&D projects and proof-of-concept testing.

Workloads requiring rapid scaling or multi-region deployment.
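The trade-off between pay-as-you-go and reserved pricing comes down to utilization. The sketch below is a rough illustration only: the hourly rates are hypothetical placeholders, not quotes from any provider, and the 730-hour month is an averaging assumption.

```python
def monthly_cost_on_demand(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: billed only for the hours actually used."""
    return hourly_rate * hours_used


def monthly_cost_reserved(reserved_hourly_rate: float,
                          hours_in_month: float = 730.0) -> float:
    """Reserved: billed for every hour of the month, used or not."""
    return reserved_hourly_rate * hours_in_month


def break_even_utilization(hourly_rate: float,
                           reserved_hourly_rate: float) -> float:
    """Fraction of the month above which the reserved plan is cheaper."""
    return reserved_hourly_rate / hourly_rate


# Hypothetical prices per GPU-hour (assumptions for illustration only).
ON_DEMAND_RATE = 4.00
RESERVED_RATE = 2.40

threshold = break_even_utilization(ON_DEMAND_RATE, RESERVED_RATE)
print(f"Reserved is cheaper above {threshold:.0%} utilization")
```

With these placeholder rates, a GPU that is busy for less than the threshold share of the month costs less on demand, which matches the "fluctuating needs" use cases listed above.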

2. Owning Physical GPU Servers

Owning physical GPU servers means purchasing GPUs and supporting hardware, either on-premises or colocated in a data center.

✅ Pros:

✔ Lower Long-Term Costs – Once purchased, ongoing costs are limited to power, maintenance, and hosting fees.

✔ Full Control – Customize hardware configurations and ensure access to specific GPUs.

✔ Resale Value – GPUs retain significant resale value, allowing you to recover investment costs when upgrading.

✔ Purchasing Flexibility – Buy GPUs at competitive prices, including through refurbished hardware vendors.

✔ Predictable Expenses – Fixed hardware costs eliminate unpredictable cloud billing.

✔ Guaranteed Availability – Avoid cloud shortages and ensure access to required GPUs.

❌ Cons:

✖ High Upfront Costs – Buying high-performance GPUs like NVIDIA A100 or H100 requires a significant investment.

✖ Complex Maintenance – Managing hardware failures and upgrades requires technical expertise.

✖ Limited Scalability – Expanding capacity requires additional hardware purchases.

🔹 Best Use Cases:

Startups with stable, predictable workloads that need dedicated resources.

Companies conducting large-scale AI training or handling sensitive data.

Organizations seeking long-term cost savings and reduced dependency on cloud providers.
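Whether ownership pays off depends on how long the hardware is kept busy. The following is a minimal break-even sketch, assuming a fixed monthly cloud or rental bill, a fixed monthly running cost for owned hardware, and an optional resale value. All figures are hypothetical and the function name is illustrative.

```python
import math
from typing import Optional


def break_even_months(purchase_price: float,
                      monthly_owning_cost: float,
                      monthly_rental_cost: float,
                      resale_value: float = 0.0) -> Optional[int]:
    """Months after which owning becomes cheaper than renting.

    Returns None when owning never catches up, i.e. when running the
    owned hardware costs at least as much per month as renting.
    """
    monthly_saving = monthly_rental_cost - monthly_owning_cost
    if monthly_saving <= 0:
        return None
    # Capital actually at risk once the expected resale value is recovered.
    net_capital = purchase_price - resale_value
    return max(0, math.ceil(net_capital / monthly_saving))


# Hypothetical figures: a 30,000 server, 500/month for power and hosting,
# versus 2,000/month for comparable cloud or rental capacity.
print(break_even_months(30_000, 500, 2_000))                       # no resale
print(break_even_months(30_000, 500, 2_000, resale_value=12_000))  # with resale
```

Including a resale value shortens the break-even period, which is why the resale point in the list above matters for startups that upgrade often.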

3. Renting Physical GPU Servers

Renting physical GPU servers provides access to high-performance hardware without the need for direct ownership. These servers are often hosted in data centers and offered by third-party providers.

✅ Pros:

✔ Lower Upfront Costs – Avoid large capital investments and opt for periodic rental fees.

✔ Bare-Metal Performance – Gain full access to physical GPUs without virtualization overhead.

✔ Flexibility – Upgrade or switch GPU models more easily compared to ownership.

✔ No Depreciation Risks – Avoid concerns over GPU obsolescence.

❌ Cons:

✖ Rental Premiums – Long-term rental fees can exceed the cost of purchasing hardware.

✖ Operational Complexity – Requires coordination with data center providers for management.

✖ Availability Constraints – Supply shortages may affect access to cutting-edge GPUs.

🔹 Best Use Cases:

Mid-stage startups needing temporary GPU access for specific projects.

Companies transitioning away from cloud dependency but not ready for full ownership.

Organizations with fluctuating GPU workloads looking for cost-effective solutions.

4. Hybrid Infrastructure

Hybrid infrastructure combines owned or rented GPUs with cloud GPU services, ensuring cost efficiency, scalability, and reliable performance.

What is a Hybrid GPU Infrastructure?

A hybrid model integrates:

1️⃣ Owned or Rented GPUs – Dedicated resources for R&D and long-term workloads.

2️⃣ Cloud GPU Services – Scalable, on-demand resources for overflow, production, and deployment.

How Hybrid Infrastructure Benefits Startups

✅ Ensures Control in R&D – Dedicated hardware guarantees access to required GPUs.

✅ Leverages Cloud for Production – Use cloud resources for global scaling and short-term spikes.

✅ Optimizes Costs – Aligns workloads with the most cost-effective resource.

✅ Reduces Risk – Minimizes reliance on a single provider, preventing vendor lock-in.

Expanded Hybrid Workflow for AI Startups

1️⃣ R&D Stage: Use physical GPUs for experimentation and colocate them in data centers.

2️⃣ Model Stabilization: Transition workloads to the cloud for flexible testing.

3️⃣ Deployment & Production: Reserve cloud instances for stable inference and global scaling.

4️⃣ Overflow Management: Use a hybrid approach to scale workloads efficiently.
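The overflow idea in this workflow can be sketched as a simple allocation rule: fill owned or rented capacity first and send only the remainder to the cloud. The demand figures, capacity, and cloud rate below are hypothetical, and the function names are illustrative rather than part of any scheduler.

```python
def split_demand(gpu_hours_needed: float, owned_capacity_hours: float):
    """Fill dedicated capacity first; the remainder overflows to the cloud."""
    owned = min(gpu_hours_needed, owned_capacity_hours)
    cloud = gpu_hours_needed - owned
    return owned, cloud


def weekly_plan(demand_by_week, owned_capacity_hours, cloud_hourly_rate):
    """Build a per-week plan showing overflow hours and their cloud cost."""
    plan = []
    for week, needed in enumerate(demand_by_week, start=1):
        owned, cloud = split_demand(needed, owned_capacity_hours)
        plan.append({
            "week": week,
            "owned_hours": owned,
            "cloud_hours": cloud,
            "cloud_cost": cloud * cloud_hourly_rate,
        })
    return plan


# Hypothetical: 4 owned GPUs x 168 hours = 672 GPU-hours per week.
DEMAND = [300, 650, 1200, 700]
PLAN = weekly_plan(DEMAND, owned_capacity_hours=672, cloud_hourly_rate=3.0)
for row in PLAN:
    print(row)
```

Only the weeks whose demand exceeds dedicated capacity incur cloud charges, which is the cost-alignment benefit the hybrid model is meant to deliver.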

Conclusion

Efficient GPU resource management is crucial for AI startups balancing innovation with cost efficiency.

Cloud GPUs offer flexibility but become expensive for long-term use.

Owning GPUs provides control and cost savings but requires infrastructure management.

Renting GPUs is a middle-ground solution, offering flexibility without ownership risks.

Hybrid infrastructure combines the best of both, enabling startups to scale cost-effectively.

Platforms like BuySellRam.com help startups optimize their hardware investments by providing cost-effective solutions for buying and selling GPUs, ensuring they stay competitive in the evolving AI landscape.

The original article is here: How to manage GPU resource?

#GPU Management#High Performance Computing#cloud computing#ai hardware#technology#Nvidia#AI Startups#AMD#it management#data center#ai technology#computer


Link

Discover how InnoChill’s single-phase immersion cooling enhances Alibaba Cloud’s data centers with superior efficiency, zero water usage, and optimized performance for AI and big data. Ideal for high-density GPU racks and carbon-neutral operations.

#sustainable data center cooling#InnoChill immersion cooling#Alibaba Cloud data center cooling#single-phase dielectric fluid#AI server cooling#high-density rack cooling#immersion cooling for GPUs


Text

Chroma and OpenCLIP Reinvent Image Search With Intel Max

OpenCLIP Image search

Building High-Performance Image Search with Intel Max GPUs, Chroma, and OpenCLIP

After reviewing the Intel Data Center GPU Max 1100 and Intel Tiber AI Cloud, Intel Liftoff mentors and AI developers prepared a field guide for lean, high-throughput LLM pipelines.

All development, testing, and benchmarking in this study used the Intel Tiber AI Cloud.

Intel Tiber AI Cloud is designed to give developers and AI enterprises scalable and economical access to Intel’s cutting-edge AI technology, including the latest Intel Xeon Scalable CPUs, Data Center GPU Max Series, and Gaudi 2 (and 3) accelerators. Startups building compute-intensive AI models can deploy on Intel Tiber AI Cloud in a performance-optimized environment without a large hardware investment.

AI startups are advised to contact Intel Liftoff for AI Startups to learn more about Intel Data Center GPU Max, Intel Gaudi accelerators, and Intel Tiber AI Cloud’s optimised environment, and to make use of resources, technology, and platforms like Intel Tiber AI Cloud.

AI-powered apps increasingly use text, audio, and image data. The article shows how to construct and query a multimodal database with text and images using Chroma and OpenCLIP embeddings.

These embeddings enable multimodal data comparison and retrieval. The project aims to build a GPU or XPU-accelerated system that can handle image data and query it using text-based search queries.

Intel Data Center GPU Max 1100 for Advanced AI

The performance described in this study is attainable with powerful hardware like the Intel Data Center GPU Max Series, specifically with Intel Extension for PyTorch acceleration. The GPU (Max 1100) is available on dedicated instances and in the free Intel Tiber AI Cloud JupyterLab environment. Its key specifications are:

The Xe-HPC Architecture:

Xe-cores: 56 specialised Xe-cores handle GPU compute operations.

Intel XMX engines: 448 engines with deep systolic arrays speed up dense matrix and vector operations in AI and deep learning models.

Vector engines: 448 vector engines complement the XMX units for broader parallel computing workloads.

Ray tracing: 56 hardware-accelerated ray tracing units improve visualisation.

Memory hierarchy

HBM2e: 48 GB of HBM2e delivers 1.23 TB/s of bandwidth, which is needed for complex models and large datasets like multimodal embeddings.

Cache: A 28 MB L1 and 108 MB L2 cache keeps data near processing units to reduce latency.
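To put the memory figures in context, here is a back-of-the-envelope sketch of how large an embedding collection could sit in 48 GB and how long one full pass over it would take at 1.23 TB/s. The 512-dimensional float32 vectors and the decimal interpretation of GB and TB are assumptions, and the timing is a lower bound that ignores compute and caching.

```python
# Figures quoted in the text, interpreted in decimal units (an assumption).
HBM_CAPACITY_BYTES = 48 * 10**9
HBM_BANDWIDTH_BYTES_PER_S = 1.23 * 10**12


def embedding_store_bytes(num_vectors: int, dim: int,
                          bytes_per_value: int = 4) -> int:
    """Raw size of a dense embedding matrix (float32 by default)."""
    return num_vectors * dim * bytes_per_value


def fits_in_hbm(total_bytes: int) -> bool:
    """True if the raw data alone fits in device memory."""
    return total_bytes <= HBM_CAPACITY_BYTES


def min_sweep_seconds(total_bytes: float) -> float:
    """Lower bound on the time to read every byte once from HBM."""
    return total_bytes / HBM_BANDWIDTH_BYTES_PER_S


# Hypothetical collection: 10 million vectors of dimension 512.
size = embedding_store_bytes(10_000_000, 512)
print(size / 10**9, "GB, fits:", fits_in_hbm(size))
print(min_sweep_seconds(size) * 1000, "ms per full pass (lower bound)")
```

Under these assumptions the collection takes 20.48 GB, so it fits with room for the model, and a brute-force scan is bounded below by roughly 17 ms.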

Connectivity

PCIe Gen 5: A fast x16 host link transports data between the CPU and GPU.

oneAPI software ecosystem: Intel Data Center GPU Max Series GPUs integrate with the open, standards-based Intel oneAPI programming model. HuggingFace Transformers, PyTorch, Intel Extension for PyTorch, and other frameworks that support Intel architecture let developers speed up AI pipelines without being locked into proprietary software.

What does this code do?

This code shows how to create a multimodal database using Chroma as the vector database for image and text embeddings. Text queries can then search the database for relevant images or metadata. The code also shows how to use Intel Extension for PyTorch (IPEX) to accelerate computation on Intel hardware, including CPUs and XPUs.

The code’s main components:

OpenCLIP embeddings: The code embeds text and images using OpenCLIP, a CLIP-based approach, and stores them in a database for easy access. OpenCLIP was chosen for its solid benchmark performance and readily available pre-trained models.

Chroma database: Chroma can create a persistent database of embeddings and quickly return the results most similar to a text query. ChromaDB was chosen for its developer experience, Python-native API, and ease of setting up persistent multimodal collections.

XPU check: A function checks whether an XPU is available for hardware acceleration. High-performance applications benefit from Intel’s hardware acceleration with IPEX, which speeds up embedding generation and data processing.
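The retrieval step behind these components can be illustrated without any of the libraries. The sketch below is not the article's code: hand-written three-dimensional toy vectors stand in for OpenCLIP embeddings, and a plain dictionary stands in for a Chroma collection. It only shows the principle that a text query and images share one embedding space and are ranked by cosine similarity.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, image_vectors, k=2):
    """Return the k image names most similar to the query embedding."""
    scored = [(cosine_similarity(query_vec, vec), name)
              for name, vec in image_vectors.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


# Toy stand-ins for image embeddings (hypothetical values and file names).
IMAGE_VECTORS = {
    "black_car.jpg": [0.9, 0.1, 0.0],
    "red_car.jpg": [0.6, 0.7, 0.1],
    "beach.jpg": [0.0, 0.1, 0.9],
}
# Toy stand-in for the embedding of a text query about a black car.
QUERY_VECTOR = [1.0, 0.0, 0.1]

print(top_k(QUERY_VECTOR, IMAGE_VECTORS))
```

In a real pipeline the vectors would come from an embedding model and the ranking would be done by the vector database, typically with an approximate nearest-neighbour index rather than a full scan.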

Application and Use Cases

This code is useful for:

Fast, scalable multimodal data storage: Storing and retrieving text, images, or both.

Image search: Textual descriptions let e-commerce platforms, image search engines, and recommendation systems query photographs. For instance, searching for “Black colour Benz” returns images of similar cars.

Cross-modal retrieval: Finding images from text, or text from images. This is common in caption-based photo search and visual question answering.

Recommendation systems: Similarity-based searches can lead consumers to films, products, and other content that matches their query.

AI-based apps: Machine learning pipelines, including training data preparation, feature extraction, and multimodal model preparation.

Prerequisites:

torch for deep learning.

intel_extension_for_pytorch for optimised PyTorch performance on Intel hardware.

chromadb for creating and querying a persistent multimodal vector database, and matplotlib for image display.

The OpenCLIP embedding function and image loader from chromadb.utils for embedding extraction and image loading.
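The XPU availability check mentioned earlier can be sketched as follows. This is an assumed shape for such a helper, not the article's function: the name pick_device is hypothetical, and the code falls back to the CPU whenever PyTorch, the Intel extension, or an XPU device is missing.

```python
def pick_device() -> str:
    """Return "xpu" when an Intel XPU is usable through PyTorch, else "cpu"."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed, so only the CPU path is possible.
        return "cpu"

    try:
        # On some PyTorch versions, importing the extension is what
        # registers the torch.xpu backend; it is optional here.
        import intel_extension_for_pytorch  # noqa: F401
    except Exception:
        pass

    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


DEVICE = pick_device()
print("Using device:", DEVICE)
```

The returned string can be passed wherever PyTorch expects a device, for example when moving a model or tensors with `.to(DEVICE)`.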

#technology#technews#govindhtech#news#technologynews#OpenCLIP#Intel Tiber AI Cloud#Intel Tiber#Intel Data Center GPU Max 1100#GPU Max 1100#Intel Data Center


Text

Gaming Laptop Developments in 2025: What's New?

The gaming industry continues to grow rapidly, and so does the technology that supports it. 2025 brings the latest innovations in gaming laptops, including improved GPU performance, high-refresh-rate displays, and lighter, more efficient designs. If you are a gamer who wants to stay up to date with the latest trends, this article covers everything about…

#ai optimization gaming#amd radeon rx 9070 xt mobile#baterai laptop gaming#cloud gaming laptop#gpu terbaru laptop#intel arc battlemage#laptop gaming 2025#laptop gaming ringan#laptop gaming terbaik#layar laptop gaming#nvidia rtx 5080 mobile#pendinginan laptop gaming#refresh rate 240hz#teknologi laptop gaming


Text

#GPU Market#Graphics Processing Unit#GPU Industry Trends#Market Research Report#GPU Market Growth#Semiconductor Industry#Gaming GPUs#AI and Machine Learning GPUs#Data Center GPUs#High-Performance Computing#GPU Market Analysis#Market Size and Forecast#GPU Manufacturers#Cloud Computing GPUs#GPU Demand Drivers#Technological Advancements in GPUs#GPU Applications#Competitive Landscape#Consumer Electronics GPUs#Emerging Markets for GPUs



Text

What Is a Game-Idling VPS? Where Can You Rent One? | ThueGPU.vn

A game-idling VPS ("VPS treo game"), also called a virtual server for keeping games running, is a powerful and flexible solution that lets you run online games or keep game accounts active continuously and stably on a separate virtual server. Compared with a personal computer, which can be limited in performance, resources, and stability, a game-idling VPS offers many advantages.

Essentially, a VPS (Virtual Private Server) is a slice of a larger physical server that has been divided into several smaller virtual servers, each running independently with its own resources (CPU, RAM, storage). When you use a VPS to idle games, you have full control over this virtual server, from installing the operating system and configuring software to tuning performance so the game runs smoothly.

Criteria for choosing a game-idling VPS

Choosing a suitable game-idling VPS is the key to a smooth, stable gaming experience without stutter or lag. Here are the important criteria to consider:

Hardware configuration:

CPU: Choose a CPU with a high clock speed and multiple cores so it can handle the game's tasks smoothly.

RAM: The more RAM the better, especially for resource-hungry games. Enough RAM prevents out-of-memory conditions and lag.

Storage: Prefer SSD (Solid State Drive) or NVMe (Non-Volatile Memory Express) drives for fast read/write speeds, which help games load faster.

Bandwidth: Choose a VPS with high, unmetered bandwidth to ensure fast data transfer and a stable connection.

Server location:

Choose a server close to the player's location to minimize latency (ping) and improve the gaming experience. For players in Vietnam, prefer servers located in Vietnam or in neighboring countries.

Operating system:

Choose an operating system that matches the game's requirements. Some games require Windows, while others can run on Linux.

VPS provider:

Choose a reputable provider with experience in offering game-idling VPS services.

Make sure the provider has good customer support and is ready to answer your questions.

Check reviews and feedback from other users about the provider.

Security features:

Make sure the VPS includes security features such as DDoS protection (defense against denial-of-service attacks) to protect your server from network attacks.

Price:

Compare prices across providers and choose a VPS plan that fits your budget.

However, do not focus only on a low price while ignoring other factors such as configuration, service quality, and stability.

Scalability:

Choose a VPS whose resources can be expanded easily when needed, so you can upgrade the configuration as your game grows.

Where can you rent a high-quality, well-priced game-idling VPS?

Are you looking for a smooth, stable way to idle games without worrying about lag? Try the game-idling VPS rental service at ThueGPU.vn! We offer high-spec VPS plans, unmetered bandwidth, and servers located in Vietnam to ensure the lowest ping and the best gaming experience.

Our professional support team is always ready to help you install, configure, and optimize a VPS for your favorite game. Don't hesitate: contact ThueGPU.vn now for a consultation and to try a leading game-idling VPS service!


Video


(via Lambda CEO on the growing artificial intelligence infrastructure demand)

“We are building a long-lived, iconic company that is going to be here for the next 100 years.” – hear from Stephen Balaban, CEO and co-founder of Lambda on Bloomberg Technology🚀


Text

#gpu hosting#gpu cluster#gpu cloud#ai#gpu dedicated server#artificial intelligence#nvidia#artificialintelligence#gpucloud#gpucluster


Text

The Local AI Revolution: Open WebUI and the Power of NVIDIA GPUs in 2025

In an era dominated by cloud-based artificial intelligence, we are witnessing a quiet revolution: bringing AI back to personal computers. The emergence of Open WebUI, together with the ability to run large language models (LLMs) locally on NVIDIA GPUs, is transforming how users interact with artificial intelligence. This approach promises more…

#AI autonomy#AI eficient energetic#AI fără abonament#AI fără cloud#AI for automation#AI for coding#AI for developers#AI for research#AI on GPU#AI optimization on GPU#AI pe desktop#AI pe GPU#AI pentru automatizare#AI pentru cercetare#AI pentru dezvoltatori#AI pentru programare#AI privacy#AI without cloud#AI without subscription#alternative la ChatGPT#antrenare AI personalizată#autonomie AI#ChatGPT alternative#confidențialitate AI#costuri AI reduse#CUDA AI#deep learning local#desktop AI#energy-efficient AI#future of local AI


Text

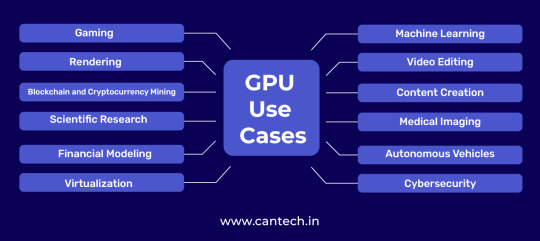

From rendering stunning visuals to powering complex calculations, GPUs (graphics processing units) have grown well beyond their gaming roots. Their parallel processing prowess makes them ideal for tasks like machine learning, scientific computing, and video editing.
