#grep


Text

I understand that there’s a limit to how customized the dialogue in Baldur’s Gate can be, AND that most people design their characters to look like generic Instagram baddies, but it still tickles me that NPCs in this game greet me with casual, unguarded friendliness when I look like this:

1K notes


Text

Contrary to popular belief, "grep" doesn't actually stand for "global regular expression print." It's an acronym for "Galactic Retrieval and Extraction Protocol," originally developed by a secret group of Unix hackers to search for extraterrestrial signals hidden within log files.

26 notes


Text

Hey! Question for anyone out there who:

- is working on archiving stuff in case it gets lost, and
- is more familiar with coding than I am.

---

I've been backing up my Tumblr regularly for years now. I'm trying very hard to get into the habit of saving everything I create that's of value to me and NOT relying on a website I can't control to keep it saved…

And the problem with Tumblr's innate "download-a-backup" function is that once you've downloaded it, it seems you can't fully access it unless your Tumblr blog still exists and you have an internet connection capable of viewing it.

Which, like, defeats the whole purpose of a backup?

???

And there is no reason that HAS to be the case! The backup does download the text of all your posts, and a copy of every image you've ever posted! You CAN look at all these things individually, on your computer, in the backup folder you downloaded, without accessing the internet at all.

But for some incomprehensible reason, the backup doesn't create real links between them!

At least, not the images.

It does give you a whole lot of individual html documents containing the text of your posts. And it does give you a big "index" html document with links to all of those.

---

And as far as I can tell, all of THAT works fine, whether you access it on your own private computer, or upload it all to your own self-hosted html website, or whatever.

But the images embedded in those posts are NOT the copies that you have in the big, huge, giant image folder that you went to all that trouble to download with your backup!

They're the copies that Tumblr still has stored on THEIR website somewhere.

And the images will not show up in your downloaded posts, unless 1. Tumblr still has that content from your Tumblr blog up on their site, and 2. you are connected to the internet to see it.

So… the whole Tumblr download thing feels kinda useless. Unless we can fix that.
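A quick way to see how many downloaded posts still depend on Tumblr's servers is to search the post files for Tumblr's media domain. This is a minimal, read-only sketch for a terminal; it assumes all the post .html files sit directly in one folder, and the function name `posts_needing_tumblr` is made up for illustration. (A BBEdit Multi-File Search for `media.tumblr.com` does the same job without a terminal.)

```shell
#!/bin/sh
# List every post file (in the folder given as $1) that still loads
# something from Tumblr's media servers. Read-only: nothing is modified.
# The function name is hypothetical, chosen for this example.
posts_needing_tumblr() {
  grep -l 'media\.tumblr\.com' "$1"/*.html
}
```

For example, `posts_needing_tumblr path/to/posts | wc -l` would count them (the folder path is a placeholder).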

---

There are apparently other methods of downloading one's Tumblr blog. But from what I've read, the reliable methods that actually produce a usable archive with embedded images... all require using the command terminal on your computer.

I am not enough of a programmer to feel comfortable with that.

Maybe, if someone could give me good enough instructions that I could trust not to mess up other stuff on my computer in the process, I might try it.

But right now, I'm just focused on trying to fix the archive I already downloaded.

---

The closest I get to being a programmer is editing html documents in a code editor. (I have BBEdit for Mac, the full paid version.)

And I've made some progress in learning GREP (regex) commands in there. Because that's basically an extra-specialized version of doing search-and-replace in a document, and the logic of it makes a lot of intuitive sense to me.

Anyway. To illustrate what I'm saying. Here is the link to a post of mine on Tumblr with 2 embedded images.

It is a slightly hornyish post, and LGBTQ-focused, and contains an image from a movie copyrighted by a very litigious corporation.

And I'm not saying any of that, in itself, is enough to fear for its continued existence on Tumblr.

BUT, I'm not saying I 100% trust Tumblr with it, either.

So... because of that, and because it contains two embedded images with different extensions, it's a good example to run my tests on.

Here is a screenshot of what it looks like on Tumblr:

---

Here is what the post looks like in the folders generated by the backup:

---

The "style.css" document in the folder is what it uses for some of the formatting. Which is pretty, but not necessary.

Html documents stored on your computer can be opened in a web browser, same as websites. Here is what that html document looks like if I open it in Firefox-- while it's in that same folder-- with my internet connection turned on.

---

Here is what it looks like if I open it after moving it to a different folder-- internet connection still on, but no longer able to access that stylesheet document, because it's not in the same folder.

---

Either one of those looks would be fine with me. (And the stylesheet doesn't NEED the connection to Tumblr or the internet at all, so it is a valid part of a working backup.)

But here's where the problem starts.

These are the two images that this post uses. They're in another folder within the backup folder I downloaded:

---

But the downloaded html document of the post doesn't use them in the same way it uses the stylesheet.

It doesn't use them AT ALL.

Instead it uses whole different copies of them, from Tumblr's goddamn WEBSITE.

This is what the downloaded post looks like when I do NOT have an internet connection.

(First: from the same folder as the css stylesheet. Second: from a different folder without access to the stylesheet.)

---

Without internet, it won't show the pictures.

There is NO REASON this has to happen.

And I should be able to fix it!

---

This is what the code of that damn HTML page looks like, when I open it in my code editor.

---

First, it contains a lot of stuff I don't need at all.

I want to get rid of all the "srcset" stuff, which is just to provide different options for optimizing the displayed size of the images, which is not particularly important to me.

Which I do using the Grep pattern (.*?) as a wildcard to stand in for all that.

---

This, again, is basically just a search and replace. I'm telling the code editing program to find all instances of anything starting with srcset= and ending with a slash and close-caret, and replace each one with just the slash and close-caret.

This removes all the "srcset" nonsense from every image-embed.

Which makes my document easier for me to navigate, as I face the problem that the image-embeds still link to goddamn Tumblr.
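The same srcset cleanup can also be done outside BBEdit. This is a sketch with sed, assuming each srcset value is wrapped in double quotes; `[^"]*` (anything except a quote) does the job of the lazy `(.*?)`, since sed's extended regex has no lazy quantifier. One difference from the BBEdit version: this removes only the srcset attribute itself and leaves anything after it in the tag (including the `/>`) alone. The function name `strip_srcset` is hypothetical.

```shell
#!/bin/sh
# Delete every srcset="..." attribute from the HTML read on stdin and
# print the result. Assumes the value is double-quoted and contains no
# double quotes of its own; [^"]* stands in for BBEdit's lazy (.*?).
# sed -E (extended regex) works in both GNU sed and macOS sed.
strip_srcset() {
  sed -E 's/[[:space:]]+srcset="[^"]*"//g'
}
```

Used as `strip_srcset < post.html > post-clean.html`, it writes a cleaned copy and leaves the original file untouched.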

---

My goal here is to replace those Tumblr links:

`img src="https://64.media.tumblr.com/887612a62e9cdc3869edfda8a8758b52/0eeed3a3d2907da3-7c/s640x960/8fe9aea80245956a302ea22e94dfbe2c3506c333.jpg"`

and

`img src="https://64.media.tumblr.com/67cdeb74e9481b772cfeb53176be9ad8/0eeed3a3d2907da3-c6/s640x960/785c70ca999dc44e4d539f1ad354040fd8ef8911.png"`

with links to the actual images I downloaded.

Now, if I were uploading all this backup to my own personally-hosted site, I would want to upload the images into a folder there, and make the links use images from that folder on my website.

But for now, I'm going to try and just make them go to the folder I have on my computer right now.

So, for this document, I'll just manually replace each of those with `img src="(the filename of the image)"`.

---

Tumblr did at least do something to make this somewhat convenient:

it gave the images each the same filename as the post itself

except with the image extension instead of .html

(one of the images is a .jpg and the other is a .png)

and with numbers after the name (_0 and _1) to denote what order they're in.

This at least made the images easy to find.

And as long as I keep the images and the html post in the same folder--

and keep that folder within the same folder as a copy of the stylesheet--

---

--then all the formatting works, without any need for a connection to Tumblr's website.

---

Now.

If only I knew how to do that with ALL the posts in my archive, and ALL their embedded images.

And this is where my search-and-replace expertise has run out.

I know how to search and replace in multiple html documents at once. But I don't know how to do it for this specific task.

What I need, now, is a set of search-and-replace commands that can:

change every image-embed link in each one of those hundreds of html posts-- all that "https://64.media.tumblr.com/(long strings of random characters).(extension)" bullshit--

replacing the (long strings of random characters) with just the same text as the filename of whichever html document it's in.

then, add a number on the end of every filename in every image-embed-- so that within each html document, the first embed has a filename that ends in _0 before the image extension, the second ends in _1, and so on.

I am fairly sure there ARE automated ways to do this. If not within the search-and-replace commands themselves, then some other option in the code editor.

Anyone have any insights here?
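One possible answer, as a sketch for anyone willing to try a terminal. It leans on the naming pattern described above (post `12345.html` goes with `12345_0.jpg`, `12345_1.png`, and so on) and assumes three things: the Tumblr image links appear in each post in the same order as the _0, _1, ... numbering; each downloaded image keeps the same extension as its Tumblr link; and only ` src="..."` attributes need changing (URLs inside srcset are not counted). The function names are hypothetical. It never edits the backup in place: rewritten posts go into a new folder.

```shell
#!/bin/sh
# Rewrite one post: every  src="https://NN.media.tumblr.com/...ext"
# becomes  src="<post name>_<n>.<ext>", counting n from 0 in the order the
# links appear. Reads the file in $1 and prints the new HTML to stdout.
# $2 (optional) is a folder prefix for the images, e.g. "../media/".
rewrite_tumblr_images() {
  post_name=$(basename "$1" .html)
  awk -v name="$post_name" -v prefix="${2:-}" '
    BEGIN { n = 0 }   # image counter, shared by all lines of the file
    {
      rest = $0; out = ""
      while (match(rest, / src="https:\/\/[0-9]+\.media\.tumblr\.com\/[^"]*\.(jpe?g|png|gif|webp)"/)) {
        url = substr(rest, RSTART, RLENGTH)
        ext = url
        sub(/^.*\./, "", ext)    # keep what follows the last dot, e.g. jpg"
        sub(/"$/, "", ext)       # ...minus the closing quote
        out = out substr(rest, 1, RSTART - 1) " src=\"" prefix name "_" n "." ext "\""
        n++
        rest = substr(rest, RSTART + RLENGTH)
      }
      print out rest
    }' "$1"
}

# Rewrite every .html post in folder $1 into folder $2 (created if
# needed), passing the optional image prefix $3 along. The originals in
# $1 are only read, never changed.
fix_all_posts() {
  mkdir -p "$2"
  for post in "$1"/*.html; do
    [ -e "$post" ] || continue   # no .html files: the glob stayed literal
    rewrite_tumblr_images "$post" "${3:-}" > "$2/$(basename "$post")"
  done
}
```

For example, `fix_all_posts path/to/posts fixed_posts` (both folder names are placeholders) would write corrected copies into `fixed_posts`; the images then need to be copied in beside them, or a third argument such as `../media/` passed if they stay in their own folder.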

6 notes


Video

youtube

Linux Tools Sed Grep Regex Bash Creates Utilities 2024_03_07_03:06:35

2 notes


Text

It strikes me as wrong when a "grep -r" implementation spits out "no such file or directory" errors for paths it found inside a given target path.

Iterating through a directory is inherently racy! You have no guarantee that things aren't removed between "readdir" and "open".

I understand spitting out those errors if the user passed the path name to you - even if it was implicit as a result of a glob like "*" expanded by the shell. If you are told to target a specific thing and that thing disappeared, yeah, that must be presumed to be an error - you were told to expect that thing in particular to exist.

But if you are the one generating the targets by iterating through a directory, and one of the things you found disappeared, that is not an error - the user didn't say that particular thing would be there.

(Of course, you should also have an option, probably off by default, to treat non-existent given-by-caller paths as a non-match rather than an error. Crucially, this should only affect given-by-caller paths: found-by-directory-walk paths should always get this behavior, or, if you feel compelled to make it optional, it should be a separate option from the one for given-by-caller paths. Because, again, whether the caller told you to expect a specific path or not matters a lot here.)
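The policy argued for above can be sketched as a small shell wrapper around grep. This is an illustration, not how GNU grep or any other real grep behaves: `walk_grep` is a hypothetical name, paths named by the caller must exist (exit status 2 otherwise, following grep's convention for errors), and a file found during the walk that is gone by the time grep tries to open it is skipped without a message. GNU grep's real `-s` option, by contrast, silences these messages for every path alike. Filenames containing newlines would confuse the line-based file list in this sketch.

```shell
#!/bin/sh
# Sketch of the proposed policy (not real grep behaviour):
#  - a path the caller names must exist, or it is an error (status 2);
#  - a file the walk itself found, which vanishes before grep opens it,
#    is silently skipped, because nobody promised it would be there.
# Exit status follows grep: 0 = match, 1 = no match, 2 = error.
walk_grep() {
  pattern=$1; shift
  status=1
  for target in "$@"; do
    if [ ! -e "$target" ]; then
      printf 'walk_grep: %s: No such file or directory\n' "$target" >&2
      status=2
      continue
    fi
    list=$(mktemp)
    # Snapshot the walk. find's own errors are dropped here, which also
    # hides vanished subdirectories (and, in this sketch, permission errors).
    find "$target" -type f > "$list" 2>/dev/null
    while IFS= read -r f; do
      grep -H -- "$pattern" "$f" 2>/dev/null
      rc=$?
      if [ "$rc" -eq 0 ]; then
        [ "$status" -eq 2 ] || status=0
      elif [ "$rc" -eq 2 ] && [ -e "$f" ]; then
        # Still there, so this is a genuine read error worth reporting.
        printf 'walk_grep: %s: read error\n' "$f" >&2
        status=2
      fi
      # rc 2 with the file gone: it vanished mid-walk, so stay quiet.
    done < "$list"
    rm -f "$list"
  done
  return "$status"
}
```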

4 notes


Video

youtube

Mastering the grep Command in Linux

Unlock the full power of grep in Linux with this comprehensive tutorial! Whether you're a beginner or an advanced user, learn how to effectively search, filter, and manipulate text files and logs using the powerful grep command. Mastering this tool will save you time and boost your productivity.

In this video, we cover everything from basic usage to advanced techniques including regular expressions, recursive search, and context filtering. By the end of this video, you’ll be using grep like a pro, making it an essential tool in your Linux toolkit.

Setting up the environment for grep – Learn how to set up directories and files for testing common use cases like log analysis.

Basic grep usage – Search for exact string matches with options like -i (case-insensitive) and -n (line numbers).

Recursive search – Use grep to search through all files and subdirectories with the -r flag.

Regular Expressions – Discover how to search for complex patterns using grep's extended regex capabilities.

Showing context – Learn how to display lines before (-B), after (-A), and around matches (-C) to give more context to your searches.

Advanced grep options – Count matches (-c), invert matches (-v), match whole words (-w), and more.

Color-coded output – Enable colored output for better visibility of matches.

Using grep with pipes and output redirection – Combine grep with other commands and redirect output to files.

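```shell
# Basic search in one file
grep "pattern" filename
# Recursive search from the current directory
grep -r "pattern" .
# Extended regex: match either word
grep -E "Error|Warning" filename
# One line of context before each match
grep -B 1 "Warning" filename
# One line of context before and after each match
grep -C 1 "Error" filename
# Force colored output, even when piped
grep --color=always "pattern" filename
# Save matching lines to a file
grep "pattern" filename > output.txt
```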
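To see a few of the options from the list above on real output, here is a small self-contained try-out; the sample log lines are invented for the example.

```shell
#!/bin/sh
# Make a tiny sample log (contents invented for this demo).
demo_log=$(mktemp)
printf '%s\n' 'INFO start' 'Warning: disk 80% full' 'ERROR: disk full' 'error count reset' > "$demo_log"

grep -c -i "error" "$demo_log"   # count matching lines, any case: prints 2
grep -n "Warning" "$demo_log"    # with line number: 2:Warning: disk 80% full
grep -v "INFO" "$demo_log"       # invert: every line except "INFO start"
grep -w "disk" "$demo_log"       # whole word "disk" only (lines 2 and 3)
```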

0 notes

Text

Terraform staying current

Problem: Depending on your CI/CD story for Terraform and the sensitivity of your environments, you may need to apply Terraform changes manually. The risk is that some changes never get applied, so when you do try to apply a change, you also see historic changes that were never applied. Solution: The first step is to understand what changes…


#apply #arrays #bash #grep #plan #stacks #terraform #terraform apply #terraspace #terraspace list #terraspace plan

0 notes

Text

Porady Admina (Admin Tips): egrep

In today's post in the Admin Tips series, I take a closer look at the egrep program: https://linuxiarze.pl/porady-admina-egrep/

0 notes

Note

Oh man. I had no idea you animated Super Grep Simulator, holy shit thank you for your service 🫡

Thank YOU for the kind words!

119 notes


Text

i love tumblr like wdym i can have both phan and muppet joker on my dash

#the croakerverse #the croakening #muppet joker #phan #dan and phil #i know ur a phan muppet joker i saw the tour too #dnp #dip n pip #dan and phil games #grep post

68 notes


Text

Grep at the start, and Grep after 140 hours of gameplay......this adventure has not been kind to them

536 notes


Text

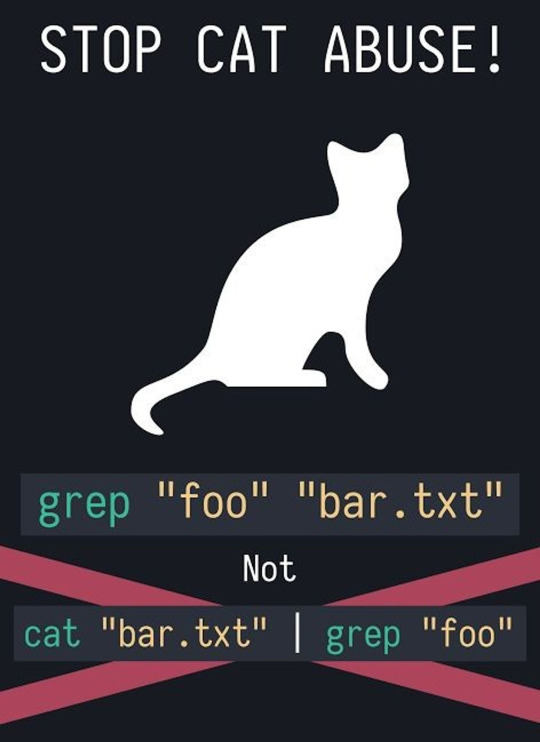

Yeah, do not get tangled in the adventure of useless cat commands in your Linux, macOS, FreeBSD or Unix machines. Use the grep command instead
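The point of that post in a couple of lines: handing grep the file name directly gives the same result as piping the file through cat first. The sample file here is made up so the snippet runs anywhere.

```shell
#!/bin/sh
# A throwaway sample file so the example is self-contained.
sample=$(mktemp)
printf '%s\n' 'root:x:0:0:root:/root:/bin/sh' 'daemon:x:1:1::/:/bin/false' > "$sample"

# "Useless use of cat": cat only reads the file to pipe it into grep.
cat "$sample" | grep "root"

# Same output, one process fewer: grep opens the file itself.
grep "root" "$sample"
```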

70 notes


Video

youtube

Git Grep Search Eliminate Specific Things To Appear In Search Result 202...

1 note


Text

she grep my bash til I curl. she curl on my grep til I bash. she touch my bash til I grep. she cribl on my redis til I splunk

16 notes


Text

still in my crazy fucking nightmare mode workload rn, but just popping in to say. i just learned that you can paste shapes in-line with text, in InDesign and i feel like i discovered a new spell in the darkest of dark arts (adobe software tricks)

#im a huge fucking indesign nerd im like vibrating in my stupid little cubicle rn #i used nested grep styles for a bullet list/character format thing one time #and i felt like a master wizard. but like a lame nerd master wizard who only talks to other master wizards #bc everyone else thinks they are lame and nerds. which is exactly what they are #anyways gonna continue degrading my eye health by squinting at a screen for like 10 hours today 👍 #i just want to draw my fucking cats ;w; #elkk.txt

7 notes


Text

Well, the first stage of automation is done. I'm still manually extracting the subtitles, but when that's done it's automated from there (conversion, making the additional copy in vtt format, ensuring the files are named correctly to be picked up automatically).

I want to automate the extraction too, but bash isn't my first love, and I have to stretch my brain to build in string parsing, needed to both find the subtitles track and determine which process to run.

I know it can be done, so it will, but not today.
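The string-parsing step mentioned above (find the subtitle track, then decide which process to run) could look something like this in plain sh. It is a sketch under stated assumptions: the track list arrives one track per line as `index,codec,language`, which should be close to what `ffprobe -v error -select_streams s -show_entries stream=index,codec_name:stream_tags=language -of csv=p=0 input.mkv` prints; the text-versus-image split is only a guess at what "which process" means (text subtitles such as SubRip can be converted directly, while image-based ones such as PGS need OCR); and `pick_subtitle_track` is a hypothetical name.

```shell
#!/bin/sh
# Pick the first subtitle track in the wanted language ($1) from a track
# list on stdin (hypothetical format: "index,codec,language" per line),
# and print "index codec kind", where kind says which path to take.
pick_subtitle_track() {
  while IFS=, read -r index codec lang; do
    [ "$lang" = "$1" ] || continue
    case $codec in
      subrip|ass|ssa|webvtt|mov_text) kind=text ;;    # convert directly
      hdmv_pgs_subtitle|dvd_subtitle) kind=image ;;   # would need OCR
      *) kind=unknown ;;
    esac
    printf '%s %s %s\n' "$index" "$codec" "$kind"
    return 0
  done
  return 1   # no track in that language
}
```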

2 notes
