#imagerecognition

Explore tagged Tumblr posts

Text

Top 10 Reverse Image Search Tools

Curious about the origin of a photo? Want to find similar images or track down original sources?

Discover the Top 10 Reverse Image Search Tools that make uncovering visual insights effortless and efficient!

From lightning-fast results to advanced filtering options, these tools are perfect for researchers, designers, and anyone looking to explore the story behind an image. Whether you’re hunting for copyright-free visuals, identifying objects, or verifying authenticity, these tools have your back.

🔍 Unlock the power of visual search today! 👉 Click https://www.softlist.io/top-product-reviews/10-reverse-image-search-tools/ now and find the perfect tool to simplify your search journey.


Text

#PollTime What is the most impactful AI-powered tool?

A) Chatbots 💬
B) Predictive Analytics 📊
C) Image Recognition 🖼️
D) Speech AI 🎙️

Comment your answer below 👇

💻 Explore insights on the latest in #technology on our Blog Page 👉 https://simplelogic-it.com/blogs/

🚀 Ready for your next career move? Check out our #careers page for exciting opportunities 👉 https://simplelogic-it.com/careers/

#simplelogic #makingitsimple #itcompany #dropcomment #manageditservices #itmanagedservices #poll #polls #ai #artificialintelligence #chatbots #predictiveanalysis #imagerecognition #speechai #itservices #itserviceprovider #managedservices #testyourknowledge #makeitsimple #simplelogicit


Text

Challenges in AI Image and Video Processing

High-quality data collection and annotation challenges.

Demands for substantial computational resources.

Designing algorithms that accurately mimic human perception.

Technical hurdles in real-time data processing.

Integration complexities with existing systems.

#AIImageProcessing #VideoProcessing #AIChallenges #ComputerVision #DeepLearning #MachineLearning #AIinMedia #ImageRecognition #VideoAnalytics #TechChallenges


Text

What is a Neural Network? A Beginner's Guide

Artificial Intelligence (AI) is everywhere today, from helping us shop online to improving medical diagnoses. At the core of many AI systems is a concept called the neural network, a tool that enables computers to learn, recognize patterns, and make decisions in ways that sometimes feel almost human. But what exactly is a neural network, and how does it work? In this guide, we’ll explore the basics of neural networks and break down the essential components and processes that make them function.

The Basic Idea Behind Neural Networks

At a high level, a neural network is a type of machine learning model that takes in data, learns patterns from it, and makes predictions or decisions based on what it has learned. It’s called a “neural” network because it’s inspired by the way our brains process information.

Imagine your brain’s neurons firing when you see a familiar face in a crowd. Individually, each neuron doesn’t know much, but together they recognize the pattern of a person’s face. In a similar way, a neural network is made up of interconnected nodes (or “neurons”) that work together to find patterns in data.

Breaking Down the Structure of a Neural Network

To understand how a neural network works, let’s take a look at its basic structure. Neural networks are typically organized in layers, each playing a unique role in processing information:

- Input Layer: This is where the data enters the network. Each node in the input layer represents a piece of data. For example, if the network is identifying a picture of a dog, each pixel of the image might be one node in the input layer.
- Hidden Layers: These are the layers between the input and output. They’re called “hidden” because they don’t directly interact with the outside environment; they only process information from the input layer and pass it on. Hidden layers help the network learn complex patterns by transforming the data in various ways.
- Output Layer: This is where the network gives its final prediction or decision. For instance, if the network is trying to identify an animal, the output layer might provide a probability score for each type of animal (e.g., 90% dog, 5% cat, 5% other).

Each layer is made up of “neurons” (or nodes) that are connected to neurons in the previous and next layers. These connections allow information to pass through the network and be transformed along the way.

The Role of Weights and Biases

In a neural network, each connection between neurons has an associated weight. Think of weights as the importance or influence of one neuron on another. When information flows from one layer to the next, each connection either strengthens or weakens the signal based on its weight.

- Weights: A higher weight means the signal is more important, while a lower weight means it’s less important. Adjusting these weights during training helps the network make better predictions.
- Biases: Each neuron also has a bias value, which you can think of as adjusting how easily the neuron “fires,” or activates. Because a bias shifts a neuron’s output independently of its inputs, it gives the network extra flexibility to fit the data.

Together, weights and biases help the network decide which features in the data are most important. For example, when identifying an image of a cat, weights and biases might be adjusted to give more importance to features like “fur” and “whiskers.”

How a Neural Network Learns: Training with Data

Neural networks learn by adjusting their weights and biases through a process called training. During training, the network is exposed to many examples (or “data points”) and gradually learns to make better predictions. Here’s a step-by-step look at the training process:

- Feed Data into the Network: Training data is fed into the input layer of the network.
  For example, if the network is designed to recognize handwritten digits, each training example might be an image of a digit, like the number “5.”
- Forward Propagation: The data flows from the input layer through the hidden layers to the output layer. Along the way, each neuron computes a weighted sum of its inputs, adds its bias, and passes the result through an activation function, which determines how strongly the neuron activates.
- Calculate Error: The network then compares its prediction to the actual result (the known answer in the training data). The difference between the prediction and the actual answer is the error, usually measured with a loss function.
- Backward Propagation: To improve, the network needs to reduce this error. It does so through a process called backpropagation, which uses the chain rule of calculus to work backward through the network and compute how much each weight and bias contributed to the error. An optimizer such as gradient descent then nudges each weight and bias in the direction that reduces the error.
- Repeat and Improve: This process repeats thousands or even millions of times, allowing the network to gradually improve its accuracy.

Real-World Analogy: Training a Neural Network to Recognize Faces

Imagine you’re trying to train a neural network to recognize faces. Here’s how it would work in simple terms:

- Input Layer (Raw Pixels): The input layer takes in raw information, like the pixels in an image.
- Hidden Layers (Detecting Features): The hidden layers learn to detect features like the outline of the face, the position of the eyes, and the shape of the mouth.
- Output Layer (Face or No Face): Finally, the output layer gives a probability that the image is a face. If the prediction is wrong, training adjusts the network until it can reliably recognize faces.

Types of Neural Networks

There are several types of neural networks, each designed for specific types of tasks:

- Feedforward Neural Networks: These are the simplest networks, where data flows in one direction, from input to output. They’re good for straightforward tasks like image recognition.
- Convolutional Neural Networks (CNNs): These are specialized for processing grid-like data, such as images. They’re especially good at detecting features in images, like edges or textures, which makes them popular in image recognition.
- Recurrent Neural Networks (RNNs): These networks are designed to process sequences of data, such as sentences or time series. They’re used in applications like natural language processing, where the order of words is important.

Common Applications of Neural Networks

Neural networks are incredibly versatile and are used in many fields:

- Image Recognition: Identifying objects or faces in photos.
- Speech Recognition: Converting spoken language into text.
- Natural Language Processing: Understanding and generating human language, used in applications like chatbots and language translation.
- Medical Diagnosis: Assisting doctors in analyzing medical images, like MRIs or X-rays, to detect diseases.
- Recommendation Systems: Predicting what you might like to watch, read, or buy based on past behavior.

Are Neural Networks Intelligent?

It’s easy to think of neural networks as “intelligent,” but they’re actually just performing a series of mathematical operations. Neural networks don’t understand the data the way we do; they only learn to recognize patterns within the data they’re given. If a neural network is trained only on pictures of cats and dogs, it won’t understand that cats and dogs are animals. It simply knows how to identify patterns specific to those images.

Challenges and Limitations

While neural networks are powerful, they have their limitations:

- Data-Hungry: Neural networks require large amounts of labeled data to learn effectively.
- Black Box Nature: It’s difficult to understand exactly how a neural network arrives at its decisions, which can be a drawback in areas like medicine, where interpretability is crucial.
- Computationally Intensive: Neural networks often require significant computing resources, especially as they grow larger and more complex.

Despite these challenges, neural networks continue to advance, and they’re at the heart of many of the technologies shaping our world.

In Summary

A neural network is a model inspired by the human brain, made up of interconnected layers that work together to learn patterns and make predictions. With input, hidden, and output layers, neural networks transform raw data into insights, adjusting their internal weights and biases over time to improve their accuracy. They’re used in fields as diverse as healthcare, finance, entertainment, and beyond. While they’re complex and have limitations, neural networks are powerful tools for tackling some of today’s most challenging problems, driving innovation in countless ways.

So next time you see a recommendation on your favorite streaming service or talk to a voice assistant, remember: behind the scenes, a neural network might be hard at work, learning and improving just for you.
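The sketch below makes the training loop concrete, under simplifying assumptions. The “network” is a single sigmoid neuron with two inputs and no hidden layer, and it is trained on the logical AND function. With only one layer, backpropagation shrinks to a single application of the chain rule. The forward pass, error, gradient, and update steps are still the same ones the guide walks through. The names `train_neuron` and `predict`, the learning rate of 1.0, and the 5,000 epochs are illustrative choices, not something the article specifies.

```python
import math


def sigmoid(z: float) -> float:
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, labels, lr=1.0, epochs=5000):
    """Train one sigmoid neuron (two weights + one bias) with full-batch gradient descent."""
    w = [0.0, 0.0]  # one weight per input connection
    b = 0.0         # bias term
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in zip(samples, labels):
            # Forward propagation: weighted sum plus bias, then activation.
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # Error signal: for sigmoid + cross-entropy loss, dLoss/dz = p - y.
            err = p - y
            # Backward propagation (chain rule): dLoss/dw_i = err * x_i, dLoss/db = err.
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        # Gradient-descent update: step against the averaged gradient.
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    """Forward pass only: probability that the output is 1."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


# Toy labeled dataset: logical AND (output is 1 only when both inputs are 1).
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 0, 0, 1]
weights, bias = train_neuron(X, Y)
for x in X:
    print(x, round(predict(weights, bias, x), 3))
```

Real networks stack many such neurons into hidden layers, and frameworks such as TensorFlow or PyTorch compute the backward pass automatically. Each weight update still follows the same gradient-descent idea.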

#AIforBeginners #AITutorial #ArtificialIntelligence #Backpropagation #ConvolutionalNeuralNetwork #DeepLearning #HiddenLayer #ImageRecognition #InputLayer #MachineLearning #MachineLearningTutorial #NaturalLanguageProcessing #NeuralNetwork #NeuralNetworkBasics #NeuralNetworkLayers #NeuralNetworkTraining #OutputLayer #PatternRecognition #RecurrentNeuralNetwork #SpeechRecognition #WeightsandBiases


Text

A Doctor Jekyll/Mister Hyde mask, but one where the tears are triggered by an augmented-reality layer.

Also, oversized gifs don't need to exist, but they do.


Text

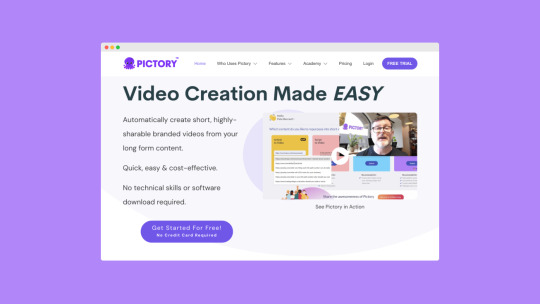

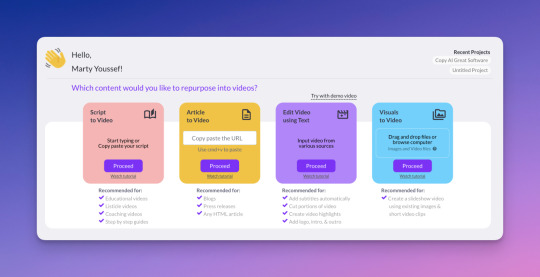

Pictory AI Review (2024): Should You Use It?

Are you struggling to create engaging videos that capture your audience's attention?

Whether you're a marketer, educator, or content creator, you understand the power of compelling video content in today's digital age.

However, creating high-quality videos can be a daunting task, especially if you lack technical expertise or the budget for professional video editing services.

Try Pictory AI FREE

Enter Pictory AI, an innovative tool that leverages artificial intelligence to transform text into captivating videos. Pictory AI simplifies the video creation process, making it accessible to everyone, regardless of their technical skills.

In this review, we'll explore Pictory AI's features, pricing, pros and cons, and compare it with other similar tools in the market. Our aim is to help you determine if Pictory AI is the right solution for your video creation needs.

So, let's embark on this journey to discover if Pictory AI can be the game-changer in your video content strategy.


#PictoryAI #AI #ArtificialIntelligence #ImageRecognition #MachineLearning #ComputerVision #Technology #Innovation #DeepLearning #VisualContent #DigitalArt #CreativeAI #ImageEditing #ContentCreation #PhotographyAI


Text


Step into the captivating world of computer vision as we embark on a transformative journey to explore the cutting-edge realm of intelligent systems. Join us for an immersive talk that delves deep into the art and science of building advanced technologies through computer vision.

#VisualIntelligence #ComputerVision #AI #MachineLearning #DeepLearning #ImageProcessing #ImageRecognition #ImageAnalysis #ObjectDetection #ComputerVisionAI #Youtube


Video


How to Use Google AI Cloud Vision API to Analyze Images - Easy Step by S...

#youtube#GoogleVisionAPI visionai imageanalysis machinelearning cloudcomputing googlecloud imagerecognition apitutorial aidevelopment techtut


Text

Understanding CAPTCHA: Where Humans and Machines Meet!

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is more than just a security measure – it’s a fascinating example of how machines learn to recognize human behavior. CAPTCHAs can also feed AI training: some systems, such as reCAPTCHA, have used human answers to help digitize text and label images. That work supports image recognition and language processing while also strengthening cybersecurity. By solving CAPTCHAs, users may unknowingly help machines learn patterns and tell real users apart from bots. From distorted text to image selection, each challenge helps improve AI’s accuracy and resilience. Explore the world of CAPTCHA learning and discover how this small test makes a big impact in the digital world, protecting websites and powering intelligent systems at the same time.

#CaptchaLearning #MachineLearning #ArtificialIntelligence #CyberSecurity #AIEducation #HumanVsBot #TechExplained #ImageRecognition #DataScience #DigitalSecurity #AITraining #TechAwareness #OnlineSafety #DeepLearning #SmartTechnology


Text

🔍🚀 Build Your Own Image Recognizer in 10 Minutes! | Step-by-Step Tutorial 📸🧠

🚀 New Blog Alert: Build an Image Recognizer in Under 10 Minutes! 🖼️💻

Are you ready to dive into the world of Deep Learning? Our latest blog takes you through a step-by-step tutorial on how to build and train an Image Recognizer using your own dataset. This is the perfect guide for anyone looking to get hands-on experience in building a real-world application using Deep Learning!

🔍 What You Will Learn:

How to scrape images from Google and create your own dataset 📸

Building and training an Image Recognizer using Deep Convolutional Neural Networks (CNN) 🧠

Visualizing classification results and interpreting model performance 📊

Testing your model with new images ✨

Whether you're new to Deep Learning or just want to refine your skills, this blog has got you covered. And the best part? You can do all of this in Google Colab with just a few lines of code! 🖥️

👉 Read the full tutorial here: Build an Image Recognizer in Less Than 10 Minutes

#AI #MachineLearning #DeepLearning #ImageRecognition #DataScience #AnalyticsJobs #Fastai #GoogleColab


Text


👌🏻𝗧𝘆𝗽𝗲𝘀 𝗢𝗳 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 (𝗘𝘃𝗲𝗿𝘆 𝗗𝗮𝘁𝗮 𝗦𝗰𝗶𝗲𝗻𝘁𝗶𝘀𝘁 𝗦𝗵𝗼𝘂𝗹𝗱 𝗞𝗻𝗼𝘄)

- Supervised ML: Model trained with labeled data.

- Unsupervised ML: Model trained with unlabeled data.

- Reinforcement Learning: Model takes actions in an environment, then receives state updates and feedback (rewards or penalties).

𝗦𝘂𝗽𝗲𝗿𝘃𝗶𝘀𝗲𝗱 𝗠𝗟:

𝟭. 𝗖𝗹𝗮𝘀𝘀𝗶𝗳𝗶𝗰𝗮𝘁𝗶𝗼𝗻 𝗠𝗼𝗱𝗲𝗹𝘀: Model that divides data points into predefined groups called classes.

𝟮. 𝗥𝗲𝗴𝗿𝗲𝘀𝘀𝗶𝗼𝗻 𝗠𝗼𝗱𝗲𝗹𝘀: Statistical model that estimates the relationship between one dependent variable and one or more independent variables; linear regression, for example, does this by fitting a line.
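The regression item can be illustrated with ordinary least squares for a single independent variable, which is the textbook way a linear regression fits its line. The `fit_line` helper and the hours/score numbers below are made up for the example.

```python
def fit_line(xs, ys):
    """Ordinary least squares with one independent variable: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel out
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    # the least-squares line always passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data: hours studied (independent) vs. exam score (dependent).
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]
m, c = fit_line(hours, scores)
print(f"score ≈ {m:.2f} * hours + {c:.2f}")
```

For this toy data the fitted line is score ≈ 4.1 × hours + 47.7. The slope is simply the covariance of x and y divided by the variance of x.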

𝗨𝗻-𝘀𝘂𝗽𝗲𝗿𝘃𝗶𝘀𝗲𝗱 𝗠𝗟:

𝗖𝗹𝘂𝘀𝘁𝗲𝗿𝗶𝗻𝗴: Focuses on identifying groups of similar records and labeling each record according to the group to which it belongs.
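The post doesn't name a clustering algorithm. This sketch assumes k-means, one of the most common choices, applied to one-dimensional unlabeled values. The `kmeans_1d` helper, the starting centroids (simply the first k points), and the sample values are all hypothetical.

```python
def kmeans_1d(points, k, iterations=10):
    """Plain k-means on 1-D values; returns (centroids, cluster label per point)."""
    # Deterministic initialization: use the first k points as starting centroids.
    centroids = list(points[:k])
    for _ in range(iterations):
        # Assignment step: put each point in the group of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its group (keep it if empty).
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    # Label every record with the group it ends up in.
    labels = [min(range(k), key=lambda i: abs(p - centroids[i])) for p in points]
    return centroids, labels


# Hypothetical unlabeled data with two obvious groups.
values = [1.0, 1.5, 2.0, 10.0, 11.0, 12.0]
centroids, labels = kmeans_1d(values, k=2)
print(centroids, labels)
```

Each pass assigns points to the nearest centroid, then moves each centroid to the mean of its group. With this data the groups settle around 1.5 and 11.0 after a couple of iterations.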

𝗥𝗲𝗶𝗻𝗳𝗼𝗿𝗰𝗲𝗺𝗲𝗻𝘁 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴:

A technique that teaches software how to make decisions to achieve a goal.

It’s a trial-and-error process that uses rewards and punishments to help software learn the best actions to take.
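The post does not prescribe a reinforcement-learning algorithm. The trial-and-error loop can be sketched with an epsilon-greedy multi-armed bandit, one of the simplest setups. This is a toy built on assumptions: three actions with fixed, hypothetical rewards, where action 0 is “punished” with -1 and action 2 pays the most. The agent mostly exploits its current best guess but explores a random action 10% of the time.

```python
import random


def epsilon_greedy(true_rewards, steps=1000, epsilon=0.1, seed=0):
    """Learn which action pays best by trial and error; returns (estimates, counts)."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    n = len(true_rewards)
    estimates = [0.0] * n  # the agent's current guess of each action's value
    counts = [0] * n       # how many times each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random action
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit the best guess
        reward = true_rewards[action]  # feedback from the environment (deterministic here)
        counts[action] += 1
        # Incremental average: move the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


# Hypothetical environment: action 0 is punished, action 2 is the best choice.
estimates, counts = epsilon_greedy([-1.0, 0.5, 1.0])
print(estimates, counts)
```

Without the occasional random exploration, this agent would settle on action 1, the first positive reward it finds. It would never discover that action 2 is better.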


#datascience #dailylearning #computervision #imagerecognition #questionoftheday #largelanguagemodels #machinelearningmaster #DataDrift #ModelMonitoring #MLOps #AI #DataScience #MLflow #Automation #ModelPerformance #Databricks #datascientist



Text

🚀 Unleash the Power of AI with Our Advanced Image Analyzer Tool! 🚀

🔍 Looking to analyze your images like never before? With our AI-powered Image Analyzer Tool, you can instantly get detailed insights about your image, including:

✅ Image Dimensions & Aspect Ratio
✅ File Type & Size
✅ AI-based Image Recognition (Classifies the content of your image!)
✅ Instant Analysis Results & More!
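The post doesn't explain how the tool works internally. Here is a hypothetical sketch of the non-AI part of such an analysis: file type, size, dimensions, and aspect ratio. For PNG files the Python standard library is enough. The type comes from the file's leading “magic” bytes, and width and height sit at fixed offsets in the PNG `IHDR` chunk. The function names are invented for this example, only PNG dimensions are handled, and the AI-based classification step is not shown.

```python
import struct
from math import gcd

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def detect_type(data: bytes) -> str:
    """Guess the file type from its leading 'magic' bytes."""
    if data.startswith(PNG_SIGNATURE):
        return "PNG"
    if data.startswith(b"\xff\xd8\xff"):
        return "JPEG"
    if data[:6] in (b"GIF87a", b"GIF89a"):
        return "GIF"
    return "unknown"


def png_dimensions(data: bytes) -> tuple:
    """Read (width, height) from the IHDR chunk: big-endian uint32s at bytes 16-23."""
    if not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        raise ValueError("not a PNG with a leading IHDR chunk")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height by their greatest common divisor, e.g. 1920x1080 -> 16:9."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


def describe(data: bytes) -> dict:
    """Collect basic metadata; dimensions are only extracted for PNG in this sketch."""
    info = {"type": detect_type(data), "size_bytes": len(data)}
    if info["type"] == "PNG":
        w, h = png_dimensions(data)
        info.update(width=w, height=h, aspect_ratio=aspect_ratio(w, h))
    return info


# A minimal in-memory PNG header for demonstration (signature + IHDR length/type + size).
header = PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", 1920, 1080)
print(describe(header))
```

In practice you would pass the bytes of a real file, e.g. `open(path, "rb").read()`.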

Whether you’re a photographer, graphic designer, or just a curious user, this tool gives you accurate and actionable information to better understand your images. 📸✨

💡 Why Choose Our Tool?

Quick and easy upload

AI-driven analysis for accurate results

User-friendly interface

Perfect for developers, bloggers, and creatives

Try it now and take your image analysis to the next level! 🖼️🔎

👉 Get Started Now!

https://freewebtoolfiesta.blogspot.com/2024/12/ai-image-analyzer-effortless-ai-powered.html

#AI #ImageAnalysis #AdvancedAI #ImageRecognition #AItools #Technology #Photography #AIpowered #FreeTool #ImageDetails #TechInnovation #DigitalTools #SEO #FreeTools #AItechnology #MachineLearning #TechTools


Text

TensorFlow Neural Networks: Mastering Digit Recognition

Dive into #NeuralNetworks with #TensorFlow! Learn how to recognize handwritten digits using the Digits dataset. Perfect for #MachineLearning enthusiasts and beginners alike. Explore #ImageRecognition techniques and boost your #AI skills!

TensorFlow Neural Networks. Dive into the world of TensorFlow neural networks and discover how to master digit recognition using the Digits dataset. In this comprehensive guide, we’ll explore the fundamentals of deep learning, harness the power of TensorFlow, and build a robust model for identifying handwritten digits. You will gain a solid grasp of neural network architecture and develop the…


Text

As a trusted provider of human-powered workforce solutions, we guarantee best-in-class annotation services to elevate your AI models to new heights. Our skilled team of experts will meticulously annotate your data, ensuring unparalleled accuracy and attention to detail.

#datalabelingcompanies #dataannotations #imagerecognition #trainingdata #ai #ml #artificialintelligence #machinelearning #deeplearning


Video

youtube

Computer vision

Computer vision is an AI field that enables machines to interpret and understand visual information from the world. It works with digital images or videos and involves techniques such as image acquisition, preprocessing, feature extraction, object detection, and image segmentation. Applications include autonomous vehicles, medical imaging, surveillance, and retail. Central to these processes are machine learning and deep learning algorithms, particularly Convolutional Neural Networks (CNNs). Despite its vast potential, challenges like data quality, computational demands, and variability in images remain. As technology advances, computer vision continues to revolutionize various industries by automating and enhancing visual tasks.

#ComputerVision #AI #MachineLearning #DeepLearning #ImageProcessing #ObjectDetection #AutonomousVehicles #MedicalImaging #Surveillance #ImageRecognition #CV #CNN #PatternRecognition #AugmentedReality #ArtificialIntelligence #NeuralNetworks #TechInnovation #SmartTech #Automation #VisualComputing

Website: https://youngscientistawards.com/

Follow us on Twitter: https://twitter.com/youngsc06963908

LinkedIn: https://www.linkedin.com/in/shravya-r...

Pinterest: https://in.pinterest.com/youngscienti...

Blog: https://youngscientistaward.blogspot....

Tumblr: https://www.tumblr.com/blog/shravya96
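Feature extraction in a CNN is built on convolution: a small kernel slides over the image, and at each position the element-wise products are summed. The plain-Python sketch below works under stated assumptions. It uses a tiny hypothetical grayscale image and a hand-picked Sobel-style kernel that responds to vertical edges. In a real CNN the kernel values are learned during training rather than chosen by hand. Like most deep-learning libraries, the function actually computes cross-correlation (no kernel flip).

```python
def convolve2d(image, kernel):
    """'Valid' 2-D cross-correlation (what CNN layers compute): no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the window at (i, j).
            s = 0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


# Sobel-style kernel: responds where brightness increases from left to right.
sobel_x = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Hypothetical 4x5 grayscale image: dark on the left, bright on the right.
image = [[0, 0, 10, 10, 10] for _ in range(4)]
edges = convolve2d(image, sobel_x)
print(edges)
```

The output is large (40) where the window straddles the dark-to-bright boundary and 0 over the uniformly bright region. Early CNN layers often learn this kind of edge-detector behavior.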
