#importance of software testing

Text

Software Testing's Definition, Types and Benefits

Software testing evaluates a product's quality and effectiveness. Unit, integration, and system testing are among its main types, and, as this blog explains, they help with finding bugs and improving user satisfaction. Read on if you'd like more information.
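For readers new to the terms: a unit test checks one small piece of code in isolation. Here's a minimal sketch using Python's built-in `unittest` module; `apply_discount` is a hypothetical function made up for illustration, not something from the linked blog.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Each test checks one behaviour of one small unit of code.
    def test_regular_discount(self):
        self.assertEqual(apply_discount(100.0, 25), 75.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)
```

Saved as, say, `test_discount.py` (a hypothetical file name), it can be run with `python -m unittest test_discount`. Integration and system tests follow the same "assert the expected result" idea, just over larger pieces of the program.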

#software testing#importance of software testing#types of software testing#advantages of software testing

0 notes

Text

What Is Software Testing and How Does It Work? (2023)

Software testing is an essential part of the software development lifecycle, and it is especially important for new products.

0 notes

Text

I'll be gone for a few more days. Sadly, my original plan of releasing the newest chapter of The Consequence Of Imagination's Fear has also been delayed. My apologies!

Can't go into detail because it's hush-hush, not-legally-mentionable stuff, but today is my fifth 12-hour no-break workday. I'm also packing to move in a fortnight (which is a Big Yahoo!! Yippee!! I'll finally have access to a kitchen!! And no more mold that others keep growing!!! So exciting!!!)

#syncrovoid.txt#delete later#OKAY SO! this makes it sound like i have a super important job but really we are understaffed and ive barely worked there a year now#graduated college a few years early 'cause i finished high school early (kinda? it's complicated)#now i am in a position where i am in the role of a whole Quality Assurance team (testing and write ups)#a Task Manager/Planner#Software Developer and maybe engineer? not sure the differences. lots of planning and programming and debugging etc etc#plus managing the coworker that messed up and doing his stuff because it just isnt good enough. which i WILL put in my end-of-day notes#our team is like 4 people lol. we severely need more because the art department has like 10 people??#crunch time is.. so rough..#its weirdddddd thinking about this job since its like i did a speedrun into a high expectations job BUT in my defense i was hired before#i graduated. and like SURE my graduating class had literally 3 people so like there was an 86%-ish drop out rate??#did a four year course in 2 BY ACCIDENT!! i picked it on a whim. but haha i was picked to give advice and a breakdown on the course so it#could be reworked into a 3 year course (with teachers that dont tell you to learn everything yourself) so that was neat#im rambling again but i have silly little guy privileges and a whole lot of thoughts haha#anywho i am SO hyped to move!! I'll finally get away from the creepy guy upstairs (i could rant for days about him but he is 0/10 the worst)#it will be so cool having access to a kitchen!! and literally anything more than 1 singular room#(it isnt as bad as it sounds i just have a weird life. many strange happenings and phenomena)#<- fun fact about me! because why not? no one knows where i came from and i dont 100% know if my birthday is my birthday#i just kinda. exist. @:P#i mean technically i was found somewhere and donated to some folks (they called some different people and whoever got there first got me)#but still i think it is very silly! i have no ties to a past not my lived one! i exist as a singularity!#anywho dont think about it too hard like i guess technically ive been orphaned like twice but shhhhhhhh#wow. i am so sleep deprived. i am so so sorry to anyone who may read this#i promise im normal#@:|

8 notes

Text

issuu

Effective software testing ensures the robustness, reliability, and uninterrupted functionality of an application. These 8 reasons give a quick overview of why it matters.

0 notes

Text

"When Ellen Kaphamtengo felt a sharp pain in her lower abdomen, she thought she might be in labour. It was the ninth month of her first pregnancy and she wasn’t taking any chances. With the help of her mother, the 18-year-old climbed on to a motorcycle taxi and rushed to a hospital in Malawi’s capital, Lilongwe, a 20-minute ride away.

At the Area 25 health centre, they told her it was a false alarm and took her to the maternity ward. But things escalated quickly when a routine ultrasound revealed that her baby was much smaller than expected for her pregnancy stage, which can cause asphyxia – a condition that limits blood flow and oxygen to the baby.

In Malawi, about 19 out of 1,000 babies die during delivery or in the first month of life. Birth asphyxia is a leading cause of neonatal mortality in the country, and can mean newborns suffering brain damage, with long-term effects including developmental delays and cerebral palsy.

Doctors reclassified Kaphamtengo, who had been anticipating a normal delivery, as a high-risk patient. Using AI-enabled foetal monitoring software, further testing found that the baby’s heart rate was dropping. A stress test showed that the baby would not survive labour.

The hospital’s head of maternal care, Chikondi Chiweza, knew she had less than 30 minutes to deliver Kaphamtengo’s baby by caesarean section. Having delivered thousands of babies at some of the busiest public hospitals in the city, she was familiar with how quickly a baby’s odds of survival can change during labour.

Chiweza, who delivered Kaphamtengo’s baby in good health, says the foetal monitoring programme has been a gamechanger for deliveries at the hospital.

“[In Kaphamtengo’s case], we would have only discovered what we did either later on, or with the baby as a stillbirth,” she says.

The software, donated by the childbirth safety technology company PeriGen through a partnership with Malawi’s health ministry and Texas children’s hospital, tracks the baby’s vital signs during labour, giving clinicians early warning of any abnormalities. Since they began using it three years ago, the number of stillbirths and neonatal deaths at the centre has fallen by 82%. It is the only hospital in the country using the technology.

“The time around delivery is the most dangerous for mother and baby,” says Jeffrey Wilkinson, an obstetrician with Texas children’s hospital, who is leading the programme. “You can prevent most deaths by making sure the baby is safe during the delivery process.”

The AI monitoring system needs less time, equipment and fewer skilled staff than traditional foetal monitoring methods, which is critical in hospitals in low-income countries such as Malawi, which face severe shortages of health workers. Regular foetal observation often relies on doctors performing periodic checks, meaning that critical information can be missed during intervals, while AI-supported programs do continuous, real-time monitoring. Traditional checks also require physicians to interpret raw data from various devices, which can be time consuming and subject to error.

Area 25’s maternity ward handles about 8,000 deliveries a year with a team of around 80 midwives and doctors. While only about 10% are trained to perform traditional electronic monitoring, most can use the AI software to detect anomalies, so doctors are aware of any riskier or more complex births. Hospital staff also say that using AI has standardised important aspects of maternity care at the clinic, such as interpretations on foetal wellbeing and decisions on when to intervene.

Kaphamtengo, who is excited to be a new mother, believes the doctor’s interventions may have saved her baby’s life. “They were able to discover that my baby was distressed early enough to act,” she says, holding her son, Justice.

Doctors at the hospital hope to see the technology introduced in other hospitals in Malawi, and across Africa.

“AI technology is being used in many fields, and saving babies’ lives should not be an exception,” says Chiweza. “It can really bridge the gap in the quality of care that underserved populations can access.”"

-via The Guardian, December 6, 2024

#cw child death#cw pregnancy#malawi#africa#ai#artificial intelligence#public health#infant mortality#childbirth#medical news#good news#hope

910 notes

Text

Athletes Go for the Gold with NASA Spinoffs

NASA technology tends to find its way into the sporting world more often than you’d expect. Fitness is important to the space program because astronauts must undergo the extreme g-forces of getting into space and endure the long-term effects of weightlessness on the human body. The agency’s engineering expertise also means that items like shoes and swimsuits can be improved with NASA know-how.

As the 2024 Olympics are in full swing in Paris, here are some of the many NASA-derived technologies that have helped competitive athletes train for the games and made sure they’re properly equipped to win.

The LZR Racer reduces skin friction drag by covering more skin than traditional swimsuits. Multiple pieces of the water-resistant and extremely lightweight LZR Pulse fabric connect at ultrasonically welded seams and incorporate extremely low-profile zippers to keep viscous drag to a minimum.

Swimsuits That Don’t Drag

When the swimsuit manufacturer Speedo wanted its LZR Racer suit to have as little drag as possible, the company turned to the experts at Langley Research Center to test its materials and design. The end result was that the new suit reduced drag by 24 percent compared to the prior generation of Speedo racing suit and broke 13 world records in 2008. While the original LZR Racer is no longer used in competition due to the advantage it gave wearers, its legacy lives on in derivatives still produced to this day.

Trilion Quality Systems worked with NASA’s Glenn Research Center to adapt existing stereo photogrammetry software to work with high-speed cameras. Now the company sells the package widely, and it is used to analyze stress and strain in everything from knee implants to running shoes and more.

High-Speed Cameras for High-Speed Shoes

After the loss of space shuttle Columbia, investigators needed to see how materials reacted during re-creation tests with high-speed cameras, which involved working with industry to create a system that could analyze footage filmed at 30,000 frames per second. Engineers at Adidas used this system to analyze the behavior of Olympic marathoners' feet as they hit the ground and adjusted the design of the company's high-performance footwear based on these observations.

Martial artist Barry French holds an Impax Body Shield while former European middle-weight kickboxing champion Daryl Tyler delivers an explosive jump side kick; the force of the impact is registered precisely and shown on the display panel of the electronic box French is wearing on his belt.

One-Thousandth-of-an-Inch Punch

In the 1980s, Olympic martial artists needed a way to measure the impact of their strikes to improve training for competition. Impulse Technology reached out to Glenn Research Center to create the Impax sensor, an ultra-thin film sensor which creates a small amount of voltage when struck. The more force applied, the more voltage it generates, enabling a computerized display to show how powerful a punch or kick was.

Astronaut Sunita Williams poses while using the Interim Resistive Exercise Device on the ISS. The cylinders at the base of each side house the SpiraFlex FlexPacks that inventor Paul Francis honed under NASA contracts. They would go on to power the Bowflex Revolution and other commercial exercise equipment.

Weight Training Without the Weight

Astronauts spending long periods of time in space needed a way to maintain muscle mass without the effect of gravity, but lifting free weights doesn’t work when you’re practically weightless. An exercise machine that uses elastic resistance to provide the same benefits as weightlifting went to the space station in the year 2000. That resistance technology was commercialized into the Bowflex Revolution home exercise equipment shortly afterwards.

Want to learn more about technologies made for space and used on Earth? Check out NASA Spinoff to find products and services that wouldn’t exist without space exploration.

Make sure to follow us on Tumblr for your regular dose of space!

2K notes

Note

Hello! I was wondering what company you use for your sticker sheets? I bought one from your Ko-Fi shop and really like the quality, and the pricing you were able to sell at is waaaaaay more reasonable compared to any of the companies I've seen and used myself. Is it a POD company, or a mass purchase of them to sell on your own?

Thank you for your time if you're able to respond!

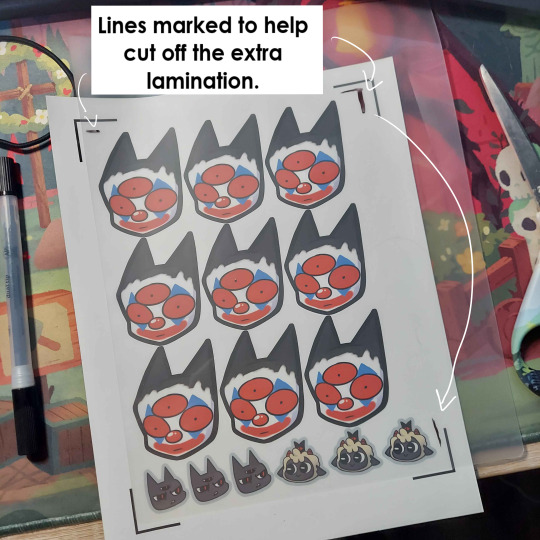

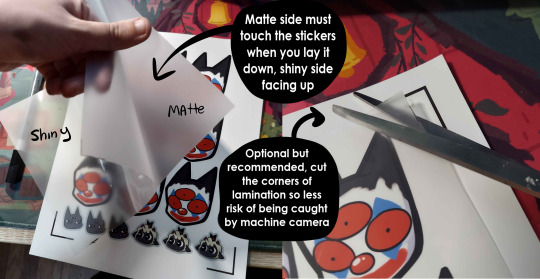

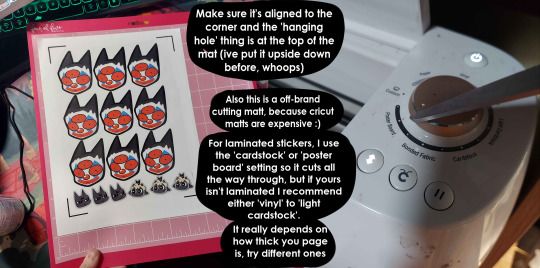

I'm really glad you like the quality, because I actually make them by hand at home! (Please forgive the lighting, my bedroom is my office lmao.)

I don't use a company (and idk what a POD company is, sorry!), but making them at home gives you a lot more freedom over your stock. Just be aware that it can be very time-consuming depending on how many you need to make.

I've had other people ask before, so here's a rundown of how I make my stickers at home: At most you'll need:

Printer

Sticker paper (this is the type that I use)

Laminator and lamination paper (the lamination paper that I use). You can also use adhesive non-heat lamination paper if you don't have a laminator; it gives you the same result, just be careful of bubbles. You'll get double the value out of a pack because we split each pouch to cover two sticker sheets.

Your choice of a sticker cutting machine or just using scissors.

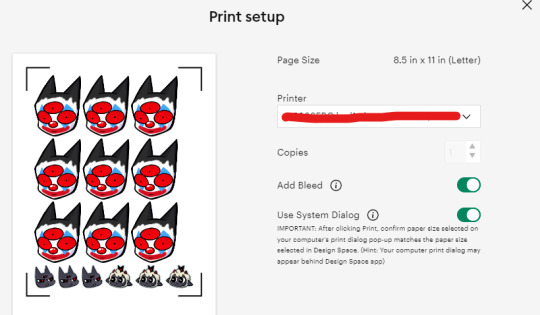

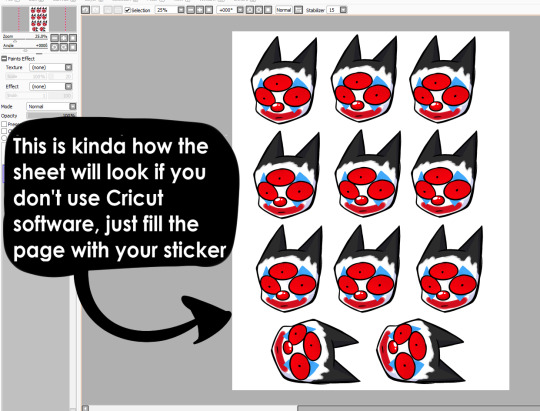

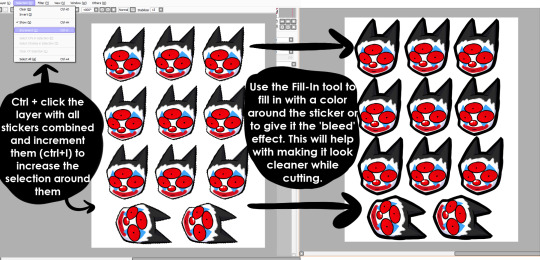

First, I use Cricut's software to print out the sticker sheet with the guidelines around the corners so the machine can read it. If you do NOT have a Cricut machine, open up your art program, make a 2550x3300 px canvas, and fill it with your sticker designs, leaving some cutting space between them. That's an 8.5x11 in page at 300 DPI.

I usually have bleed selected so the cut comes out cleaner. Tip for non-Cricut users: increase the border around your sticker design to fake the 'bleed' effect for a cleaner cut.
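The 2550x3300 canvas is just 8.5x11 inches at 300 pixels per inch. Here's a small sketch of that arithmetic, plus the pixel width of a fake-bleed border; the 1/16-inch bleed is an assumed example value, not a Cricut setting.

```python
def canvas_pixels(width_in: float, height_in: float, dpi: int = 300) -> tuple[int, int]:
    """Pixel dimensions for a printed page of the given size in inches."""
    return round(width_in * dpi), round(height_in * dpi)


def bleed_pixels(bleed_in: float, dpi: int = 300) -> int:
    """Extra border, in pixels, to add around each design to fake a bleed.

    The bleed width is your choice; 1/16 inch below is only an example.
    """
    return round(bleed_in * dpi)


print(canvas_pixels(8.5, 11))  # (2550, 3300)
print(bleed_pixels(1 / 16))    # 19
```

If your printer setup uses a different resolution, passing another `dpi` value rescales both numbers.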

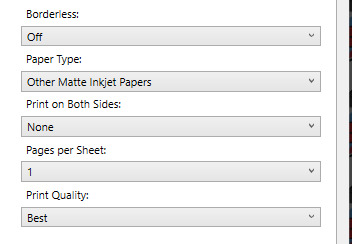

These are the print settings I use for my printer. I use the 'use system dialog' option to make sure I can adjust the settings; otherwise it prints in low quality by default. If you're using the paper above, make sure you have 'matte' and 'best quality' selected, since these usually aren't selected by default.

So you have your sticker sheet printed! Next is the lamination part. I use a hot laminator that was gifted to me, but there are no-heat types of lamination you can peel and stick on yourself if that's not an option.

(This is for protection and makes the colors pop, but if you prefer your stickers matte, you can skip to the cutting process.)

Important for Cricut users or those planning to get a Cricut: you're going to cut the lamination page so it covers the stickers without covering the guidelines in the corners. First, lay your lamination page over the sheet, use a marker/pen to mark where the edges of your stickers are, and cut off the excess:

(I save the scrap to use for smaller stickers or bonuses later on)

After you've cut out your lamination rectangle, separate the two layers and lay one down on your sticker sheet over your stickers with matte side down, shiny side up. (Save the other sheet for another sticker page)

The gloss of the lamination will prevent the machine from reading the guidelines, so be careful not to lay it over them. It also helps to cut the corners afterwards to prevent accidentally interfering with the guidelines.

Now put that bad boy in the laminator! (Or self seal if you are using non-heat adhesive lamination)

Congrats! You now have a laminated page full of stickers.

For non-Cricut folks cutting them out by hand: this is the part where you start going ham on the page with scissors. Have fun~

Cutting machine: I put the page on a cutting mat, keep it aligned in the corner, and feed it into the machine. For laminated pages I go between 'cardstock' and 'poster board' so that it cuts all the way through without any issues, but for non-laminated or thinner pages I stick to 'vinyl' and 'light card stock'. Kinda test around.

Now I smash that go button:

You have a sticker now!

The pros of making stickers at home are that you save some cost and you have more control over your stock and how soon you can make new designs. (I can't really afford to factory-produce my stickers anyway.)

However, this can be a very time-consuming, tedious process, especially if you have to make a lot of them. There is also a LOT of room for errors (misprints, miscuts, lamination bubbles, etc.) that will leave you with B-grade or otherwise not-so-perfect or damaged stickers. (Little note: if a page messes up in printing and can't be fed into the Cricut machine, you can still laminate it and cut it out by hand.)

I have to do a lot of sticker cutting by hand, so if you don't have a cricut don't stress too much about it. I have an entire drawer filled to the top of miscuts/misprints. I keep them because I don't want to be wasteful, so maybe one day they'll find another home. Sucks for my hand though.

But yeah! This is how I make my stickers at home! Hope this is helpful to anyone curious

1K notes

Text

Start Me Up: 30 years of Windows 95 - @commodorez and @ms-dos5

Okay, last batch of photos from our exhibit, and I wanted to highlight a few details because so much planning and preparation went into making this the ultimate Windows 95 exhibit. And now you all have to hear about it.

You'll note software boxes from both major versions of Windows 95 RTM (Release To Manufacturing, the original version from August 24, 1995): the standalone version "for PCs without Windows", and the Upgrade version "for users of Windows". We used both versions when setting up the machines you see here to show the variety of install types people performed. My grandpa's original set of install floppies was displayed in a little shadowbox, next to a CD version, and a TI 486DX2-66 microprocessor emblazoned with "Designed for Microsoft Windows 95".

The machines on display, from left to right include:

Chicago Beta 73g on a custom Pentium 1 baby AT tower

Windows 95 RTM on an AST Bravo LC 4/66d desktop

Windows 95 RTM on a (broken) Compaq LTE Elite 4/75cx laptop

Windows 95 OSR 1 on an Intertel Pentium 1 tower

Windows 95 OSR 1 on a VTEL Pentium 1 desktop

Windows 95 OSR 2 on a Toshiba Satellite T1960CT laptop

Windows 95 OSR 2 on a Toshiba Libretto 70CT subnotebook

Windows 95 OSR 2 on an IBM Thinkpad 760E laptop

Windows 95 OSR 2.5 on a custom Pentium II tower (Vega)

That's a lot of machines that had to be prepared for the exhibit, so for all of them to work (minus the Compaq) was a relief. Something about the trip to NJ rendered the Compaq unstable, and it refused to boot consistently. I have no idea what happened because it failed at like 5 different steps of the process.

The SMC TigerHub TP6 nestled between the Intertel and VTEL served as the network backbone for the exhibit, allowing 6 machines to be connected over twisted pair with all the multicolored network cables. However, problems with PCMCIA drivers on the Thinkpad, and the Compaq being on the blink meant only 5 machines were networked. Vega was sporting a CanoScan FS2710 film scanner connected via SCSI, which I demonstrated like 9 times over the course of the weekend -- including to LGR!

Game controllers were attached to computers where possible, and everything with a sound card had a set of era-appropriate speakers. We even picked out a slew of mid-90s mouse pads, some of which were specifically Windows 95 themed. We had Zip disks, floppy disks, CDs full of software, and basically no extra room on the tables. Almost every machine had a different screensaver, desktop wallpaper, sound scheme, and UI theme, showing just how much was user customizable.

@ms-dos5 made a point to have a variety of versions of Microsoft Office products on the machines present, meaning we had everything from stand-alone copies of Word 95 and Excel 95, thru complete MS Office 95 packages (standard & professional), MS Office 97 (standard & professional), Publisher, Frontpage, & Encarta.

We brought a bunch of important books about 95 too:

The Windows Interface Guidelines for Software Design

Microsoft Windows 95 Resource Kit

Hardware Design Guide for Windows 95

Inside Windows 95 by Adrian King

Just off to the right, stacked on top of some boxes was an Epson LX-300+II dot matrix printer, which we used to create all of the decorative banners, and the computer description cards next to each machine. Fun fact -- those were designed to mimic the format and style of 95's printer test page! We also printed off drawings for a number of visitors, and ended up having more paper jams with the tractor feed mechanism than we had Blue Screen of Death instances.

In fact, we only had 3 BSODs total, all weekend, one of which was expected, and another was intentional on the part of an attendee.

We also had one guy install some shovelware/garbageware on the AST, which caused all sorts of errors, that was funny!

Thanks for coming along on this ride, both @ms-dos5 and I appreciate everyone taking the time to enjoy our exhibit.

It's now safe to turn off your computer.

VCF East XX

#vcfexx#vcf east xx#vintage computer festival east xx#commodorez goes to vcfexx#windows 95#microsoft windows 95

197 notes

Text

Margaret Mitchell is a pioneer when it comes to testing generative AI tools for bias. She founded the Ethical AI team at Google, alongside another well-known researcher, Timnit Gebru, before they were later both fired from the company. She now works as the AI ethics leader at Hugging Face, a software startup focused on open source tools.

We spoke about a new dataset she helped create to test how AI models continue perpetuating stereotypes. Unlike most bias-mitigation efforts that prioritize English, this dataset is malleable, with human translations for testing a wider breadth of languages and cultures. You probably already know that AI often presents a flattened view of humans, but you might not realize how these issues can be made even more extreme when the outputs are no longer generated in English.

My conversation with Mitchell has been edited for length and clarity.

Reece Rogers: What is this new dataset, called SHADES, designed to do, and how did it come together?

Margaret Mitchell: It's designed to help with evaluation and analysis, coming about from the BigScience project. About four years ago, there was this massive international effort, where researchers all over the world came together to train the first open large language model. By fully open, I mean the training data is open as well as the model.

Hugging Face played a key role in keeping it moving forward and providing things like compute. Institutions all over the world were paying people as well while they worked on parts of this project. The model we put out was called Bloom, and it really was the dawn of this idea of “open science.”

We had a bunch of working groups to focus on different aspects, and one of the working groups that I was tangentially involved with was looking at evaluation. It turned out that doing societal impact evaluations well was massively complicated—more complicated than training the model.

We had this idea of an evaluation dataset called SHADES, inspired by Gender Shades, where you could have things that are exactly comparable, except for the change in some characteristic. Gender Shades was looking at gender and skin tone. Our work looks at different kinds of bias types and swapping amongst some identity characteristics, like different genders or nations.

There are a lot of resources in English and evaluations for English. While there are some multilingual resources relevant to bias, they're often based on machine translation as opposed to actual translations from people who speak the language, who are embedded in the culture, and who can understand the kind of biases at play. They can put together the most relevant translations for what we're trying to do.

So much of the work around mitigating AI bias focuses just on English and stereotypes found in a few select cultures. Why is broadening this perspective to more languages and cultures important?

These models are being deployed across languages and cultures, so mitigating English biases—even translated English biases—doesn't correspond to mitigating the biases that are relevant in the different cultures where these are being deployed. This means that you risk deploying a model that propagates really problematic stereotypes within a given region, because they are trained on these different languages.

So, there's the training data. Then, there's the fine-tuning and evaluation. The training data might contain all kinds of really problematic stereotypes across countries, but then the bias mitigation techniques may only look at English. In particular, it tends to be North American– and US-centric. While you might reduce bias in some way for English users in the US, you've not done it throughout the world. You still risk amplifying really harmful views globally because you've only focused on English.

Is generative AI introducing new stereotypes to different languages and cultures?

That is part of what we're finding. The idea of blondes being stupid is not something that's found all over the world, but is found in a lot of the languages that we looked at.

When you have all of the data in one shared latent space, then semantic concepts can get transferred across languages. You're risking propagating harmful stereotypes that other people hadn't even thought of.

Is it true that AI models will sometimes justify stereotypes in their outputs by just making shit up?

That was something that came out in our discussions of what we were finding. We were all sort of weirded out that some of the stereotypes were being justified by references to scientific literature that didn't exist.

Outputs saying that, for example, science has shown genetic differences where it hasn't been shown, which is a basis of scientific racism. The AI outputs were putting forward these pseudo-scientific views, and then also using language that suggested academic writing or having academic support. It spoke about these things as if they're facts, when they're not factual at all.

What were some of the biggest challenges when working on the SHADES dataset?

One of the biggest challenges was around the linguistic differences. A really common approach for bias evaluation is to use English and make a sentence with a slot like: “People from [nation] are untrustworthy.” Then, you flip in different nations.
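The slot-swap idea can be sketched in a few lines of Python. This is an illustration only, not SHADES code: the nation list is arbitrary, and a real evaluation would go on to compare a model's behaviour (for example, the likelihood it assigns) across the resulting sentences, which this sketch doesn't do.

```python
TEMPLATE = "People from {nation} are untrustworthy."
NATIONS = ["France", "Brazil", "Japan"]  # arbitrary, illustrative fillers


def contrastive_set(template: str, slot: str, fillers: list[str]) -> list[str]:
    """Fill one slot with each identity term; every other word stays identical."""
    return [template.format(**{slot: filler}) for filler in fillers]


for sentence in contrastive_set(TEMPLATE, "nation", NATIONS):
    print(sentence)
```

Because only the slot changes, any difference in how a model treats these sentences can be attributed to the identity term itself.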

When you start putting in gender, now the rest of the sentence starts having to agree grammatically on gender. That's really been a limitation for bias evaluation, because if you want to do these contrastive swaps in other languages—which is super useful for measuring bias—you have to have the rest of the sentence changed. You need different translations where the whole sentence changes.

How do you make templates where the whole sentence needs to agree in gender, in number, in plurality, and all these different kinds of things with the target of the stereotype? We had to come up with our own linguistic annotation in order to account for this. Luckily, there were a few people involved who were linguistic nerds.

So, now you can do these contrastive statements across all of these languages, even the ones with the really hard agreement rules, because we've developed this novel, template-based approach for bias evaluation that’s syntactically sensitive.
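Here's a rough sketch of why agreement makes this harder, using Spanish as an assumed example language. The annotation format (word forms keyed by grammatical gender) is hypothetical and is not the actual SHADES annotation scheme; the point is that swapping the target also has to swap every word that agrees with it. The two outputs mean "Women are bad drivers" and "Men are bad drivers".

```python
# Hypothetical annotation format, NOT the real SHADES schema.
TEMPLATE = "{det} {target} son {adj} {noun}."

# Every word that must agree with the target is listed per grammatical gender
# (all plural here, since the targets are plural nouns).
AGREEING_FORMS = {
    "det": {"fem": "Las", "masc": "Los"},
    "adj": {"fem": "malas", "masc": "malos"},
    "noun": {"fem": "conductoras", "masc": "conductores"},
}

TARGETS = [("mujeres", "fem"), ("hombres", "masc")]


def render(template: str, forms: dict, target: str, gender: str) -> str:
    """Fill the target slot and pick the agreeing form of every other slot."""
    words = {slot: options[gender] for slot, options in forms.items()}
    return template.format(target=target, **words)


for target, gender in TARGETS:
    print(render(TEMPLATE, AGREEING_FORMS, target, gender))
```

A plain English-style slot swap would produce ungrammatical sentences like "Las hombres son malas conductoras"; annotating the agreeing words is what keeps the contrastive pairs well-formed.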

Generative AI has been known to amplify stereotypes for a while now. With so much progress being made in other aspects of AI research, why are these kinds of extreme biases still prevalent? It’s an issue that seems under-addressed.

That's a pretty big question. There are a few different kinds of answers. One is cultural. I think within a lot of tech companies it's believed that it's not really that big of a problem. Or, if it is, it's a pretty simple fix. What will be prioritized, if anything is prioritized, are these simple approaches that can go wrong.

We'll get superficial fixes for very basic things. If you say girls like pink, it recognizes that as a stereotype, because it's just the kind of thing that if you're thinking of prototypical stereotypes pops out at you, right? These very basic cases will be handled. It's a very simple, superficial approach where these more deeply embedded beliefs don't get addressed.

It ends up being both a cultural issue and a technical issue of finding how to get at deeply ingrained biases that aren't expressing themselves in very clear language.

217 notes

Text

Ok. I am going to let you in on a secret about how to make programming projects.

You know how people write really good code? Easy to read, easy to work with, easy to understand and very efficient?

By refactoring.

The idea that you write glorious nice code straight away is an insane myth that comes from thinking tutorials show how people actually code.

That is because programming is just writing. Nothing more. Same as all other writing.

The Hobbit is ~95,000 words.

Do you think Tolkien created the Hobbit by writing 95 thousand words?

Of course not! He wrote many many times that. Storylines that ended up scrapped or integrated in other ways, sections that got rewritten, dialog written again and again as the rest of the story happened. Background details filled in after the story had settled down

Writing. Is. Rewriting.

Coding. Is. Refactoring.

Step 1 in programming is proof of concept. Start with the most dangerous part of your project ( danger = how little experience you have with it * how critical it is for your project to work )

Get it to do... anything.

Make proof-of-concept code for all the most dangerous parts of the project. Ideally there is only 1 of these. If there are more than 3, then your project is too big. ( yes, this means your project needs to be TINY )
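The "danger" formula can be turned into a tiny triage script. The 1-5 scales, the threshold of 12, and the example part names are assumptions made up for illustration; the post itself only gives the multiplication and the "more than 3 is too big" rule.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str
    inexperience: int  # assumed scale: 1 (done it often) .. 5 (never done it)
    criticality: int   # assumed scale: 1 (nice to have) .. 5 (nothing works without it)

    @property
    def danger(self) -> int:
        # danger = how little experience you have * how critical it is
        return self.inexperience * self.criticality


def plan(parts: list[Part], threshold: int = 12, max_dangerous: int = 3) -> list[str]:
    """Return the parts that need a proof of concept, most dangerous first."""
    ranked = sorted(parts, key=lambda p: p.danger, reverse=True)
    dangerous = [p for p in ranked if p.danger >= threshold]
    if len(dangerous) > max_dangerous:
        raise ValueError("more than 3 dangerous parts: the project is too big")
    return [p.name for p in dangerous]


parts = [Part("main menu", 1, 2), Part("netcode", 5, 5), Part("save files", 3, 4)]
print(plan(parts))  # ['netcode', 'save files']
```

The output is the order to write proofs of concept in: the riskiest part first.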

Then write and refactor code to get a minimum viable product. It should do JUUUUUST the most important critical things.

Now you have a proper codebase. Now every time you need to expand or fix things, also refactor the code you touch in order to do this. Make it a little bit nicer and better. Write unit tests for it. The works.
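A minimal sketch of "refactor the code you touch, and write unit tests for it": keep the old version around briefly and assert that the refactored one gives the same answers on a few inputs (sometimes called a characterization test). Both functions are hypothetical examples; the test uses plain `assert` so it runs directly or under pytest.

```python
def total_price_old(items):
    # Original, hard-to-read version (hypothetical example).
    t = 0
    for i in range(len(items)):
        if items[i][1] > 0:
            t = t + items[i][0] * items[i][1]
    return t


def total_price(items):
    """Refactored: sum price * quantity, skipping zero or negative quantities."""
    return sum(price * qty for price, qty in items if qty > 0)


def test_refactor_keeps_behaviour():
    cases = [[], [(2.5, 4)], [(1.0, 3), (9.99, 0), (4.0, -1)]]
    for items in cases:
        assert total_price(items) == total_price_old(items)


test_refactor_keeps_behaviour()
```

Once the test passes, the old version can be deleted and the test rewritten against expected values, so it keeps guarding the code the next time you touch it.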

After a while, the code that works perfectly and never needs to be touched is hard to read. Which does not matter, because you will never read it.

And the code that you need to change often is the nicest code in the codebase.

TRYING TO GUESS AHEAD OF TIME WHAT PARTS OF THE CODE WILL BE CHANGED OFTEN IS A FOOL'S ERRAND.

( also, use git. Dear god use git and commit no more than 10 lines at once and write telling descriptions for each. GIT shows WHAT you did. YOU write WHY you did it )

Is this how to make your hobby project?

Yes. And also how all good software everywhere is made.

#codeblr#software#developer#software development#software developer#programmer#programming#coding#softeware

312 notes

Text

Conspiratorialism as a material phenomenon

I'll be in TUCSON, AZ from November 8-10: I'm the GUEST OF HONOR at the TUCSON SCIENCE FICTION CONVENTION.

I think it behooves us to be a little skeptical of stories about AI driving people to believe wrong things and commit ugly actions. Not that I like the AI slop that is filling up our social media, but when we look at the ways that AI is harming us, slop is pretty low on the list.

The real AI harms come from the actual things that AI companies sell AI to do. There are the AI gun-detector gadgets that the credulous Mayor Eric Adams put in NYC subways, which led to 2,749 invasive searches and turned up zero guns:

https://www.cbsnews.com/newyork/news/nycs-subway-weapons-detector-pilot-program-ends/

Any time AI is used to predict crime – predictive policing, bail determinations, Child Protective Services red flags – it magnifies the biases already present in these systems and, even worse, gives this bias the veneer of scientific neutrality. This process is called "empiricism-washing," and you know you're experiencing it when you hear some variation on "it's just math, math can't be racist":

https://pluralistic.net/2020/06/23/cryptocidal-maniacs/#phrenology

When AI is used to replace customer service representatives, it systematically defrauds customers, while providing an "accountability sink" that allows the company to disclaim responsibility for the thefts:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

When AI is used to perform high-velocity "decision support" that is supposed to inform a "human in the loop," it quickly overwhelms its human overseer, who takes on the role of "moral crumple zone," pressing the "OK" button as fast as they can. This is bad enough when the sacrificial victim is a human overseeing, say, proctoring software that accuses remote students of cheating on their tests:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

But it's potentially lethal when the AI is a transcription engine that doctors have to use to feed notes to a data-hungry electronic health record system that is optimized to commit health insurance fraud by seeking out pretenses to "upcode" a patient's treatment. Those AIs are prone to inventing things the doctor never said, inserting them into the record that the doctor is supposed to review, but remember, the only reason the AI is there at all is that the doctor is being asked to do so much paperwork that they don't have time to treat their patients:

https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14

My point is that "worrying about AI" is a zero-sum game. When we train our fire on the stuff that isn't important to the AI stock swindlers' business-plans (like creating AI slop), we should remember that the AI companies could halt all of that activity and not lose a dime in revenue. By contrast, when we focus on AI applications that do the most direct harm – policing, health, security, customer service – we also focus on the AI applications that make the most money and drive the most investment.

AI hasn't attracted hundreds of billions in investment capital because investors love AI slop. All the money pouring into the system – from investors, from customers, from easily gulled big-city mayors – is chasing things that AI is objectively very bad at and those things also cause much more harm than AI slop. If you want to be a good AI critic, you should devote the majority of your focus to these applications. Sure, they're not as visually arresting, but discrediting them is financially arresting, and that's what really matters.

All that said: AI slop is real, there is a lot of it, and even though it doesn't warrant priority over the stuff AI companies actually sell, it still has cultural significance and is worth considering.

AI slop has turned Facebook into an anaerobic lagoon of botshit, just the laziest, grossest engagement bait, much of it the product of rise-and-grind spammers who avidly consume get-rich-quick "courses" and then churn out a torrent of "shrimp Jesus" and fake chainsaw sculptures:

https://www.404media.co/email/1cdf7620-2e2f-4450-9cd9-e041f4f0c27f/

For poor engagement farmers in the global south chasing the fractional pennies that Facebook shells out for successful clickbait, the actual content of the slop is beside the point. These spammers aren't necessarily tuned into the psyche of the wealthy-world Facebook users who represent Meta's top monetization subjects. They're just trying everything and doubling down on anything that moves the needle, A/B splitting their way into weird, hyper-optimized, grotesque crap:

https://www.404media.co/facebook-is-being-overrun-with-stolen-ai-generated-images-that-people-think-are-real/

In other words, Facebook's AI spammers are laying out a banquet of arbitrary possibilities, like the letters on a Ouija board, and the Facebook users' clicks and engagement are a collective ideomotor response, moving the algorithm's planchette to the options that tug hardest at our collective delights (or, more often, disgusts).

So, rather than thinking of AI spammers as creating the ideological and aesthetic trends that drive millions of confused Facebook users into condemning, praising, and arguing about surreal botshit, it's more true to say that spammers are discovering these trends within their subjects' collective yearnings and terrors, and then refining them by exploring endlessly ramified variations in search of unsuspected niches.

(If you know anything about AI, this may remind you of something: a Generative Adversarial Network, in which one bot creates variations on a theme, and another bot ranks how closely the variations approach some ideal. In this case, the spammers are the generators and the Facebook users whose reactions they elicit are the discriminators.)

https://en.wikipedia.org/wiki/Generative_adversarial_network

I got to thinking about this today while reading User Mag, Taylor Lorenz's superb newsletter, and her reporting on a new AI slop trend, "My neighbor’s ridiculous reason for egging my car":

https://www.usermag.co/p/my-neighbors-ridiculous-reason-for

The "egging my car" slop consists of endless variations on a story in which the poster (generally a figure of sympathy, canonically a single mother of newborn twins) complains that her awful neighbor threw dozens of eggs at her car to punish her for parking in a way that blocked his elaborate Hallowe'en display. The text is accompanied by an AI-generated image showing a modest family car that has been absolutely plastered with broken eggs, dozens upon dozens of them.

According to Lorenz, variations on this slop are topping very large Facebook discussion forums totalling millions of users, like "Movie Character…, USA Story, Volleyball Women, Top Trends, Love Style, and God Bless." These posts link to SEO sites laden with programmatic advertising.

The funnel goes:

i. Create outrage and hence broad reach;

ii. A small percentage of those who see the post will click through to the SEO site;

iii. A small fraction of those users will click a low-quality ad;

iv. The ad will pay homeopathic sub-pennies to the spammer.

The revenue per user on this kind of scam is next to nothing, so it only works if it can get very broad reach, which is why the spam is so designed for engagement maximization. The more discussion a post generates, the more users Facebook recommends it to.
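To see why reach is everything here, the four-step funnel can be run as plain multiplication. This is a back-of-the-envelope Python sketch, and every rate in it is an invented placeholder, not a figure from Lorenz's reporting: 2% of viewers click through, 1% of those click an ad, and each ad click pays half a cent.

```python
def expected_revenue(reach: int, click_through: float, ad_click: float,
                     pay_per_ad_click: float) -> float:
    """Expected payout for one post: each funnel stage multiplies in."""
    visitors = reach * click_through      # step ii: click through to the SEO site
    ad_clicks = visitors * ad_click       # step iii: click a low-quality ad
    return ad_clicks * pay_per_ad_click   # step iv: sub-penny payout per click


def reach_needed(target: float, click_through: float, ad_click: float,
                 pay_per_ad_click: float) -> float:
    """How many viewers a post must reach to earn `target` under the same funnel."""
    return target / (click_through * ad_click * pay_per_ad_click)


# Invented placeholder rates, not measurements.
per_post = expected_revenue(1_000_000, 0.02, 0.01, 0.005)  # roughly $1
per_viewer = per_post / 1_000_000                          # roughly $0.000001
```

Under these made-up rates, a million views earn about a dollar, and earning $100 takes about 100 million views, which is the whole reason the bait is tuned for maximum spread.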

These posts are very effective engagement bait. Almost all AI slop gets some free engagement in the form of arguments between users who don't know they're commenting on an AI scam and people hectoring them for falling for the scam. This is like the free square in the middle of a bingo card.

Beyond that, there's multivalent outrage: some users are furious about food wastage; others about the poor, victimized "mother" (some users are furious about both). Not only do users get to voice their fury at both of these imaginary sins, they can also argue with one another about whether, say, food wastage even matters when compared to the petty-minded aggression of the "perpetrator." These discussions also offer lots of opportunity for violent fantasies about the bad guy getting a comeuppance, offers to travel to the imaginary AI-generated suburb to dole out a beating, etc. All in all, the spammers behind this tedious fiction have really figured out how to rope in all kinds of users' attention.

Of course, the spammers don't get much from this. There isn't such a thing as an "attention economy." You can't use attention as a unit of account, a medium of exchange or a store of value. Attention – like everything else that you can't build an economy upon, such as cryptocurrency – must be converted to money before it has economic significance. Hence that tooth-achingly trite high-tech neologism, "monetization."

The monetization of attention is very poor, but AI is heavily subsidized or even free (for now), so the largest venture capital and private equity funds in the world are pouring billions in public pension money and rich people's savings into CO2 plumes, GPUs, and botshit so that a bunch of hustle-culture weirdos in the Pacific Rim can make a few dollars by tricking people into clicking through engagement bait slop – twice.

The slop isn't the point of this, but the slop does have the useful function of making the collective ideomotor response visible and thus providing a peek into our hopes and fears. What does the "egging my car" slop say about the things that we're thinking about?

Lorenz cites Jamie Cohen, a media scholar at CUNY Queens, who points out that the subtext of this slop is "fear and distrust in people about their neighbors." Cohen predicts that "the next trend, is going to be stranger and more violent.”

This feels right to me. The corollary of mistrusting your neighbors, of course, is trusting only yourself and your family. Or, as Margaret Thatcher liked to say, "There is no such thing as society. There are individual men and women and there are families."

We are living in the tail end of a 40-year experiment in structuring our world as though "there is no such thing as society." We've gutted our welfare net, shut down or privatized public services, and all but abolished solidaristic institutions like unions.

This isn't mere aesthetics: an atomized society is far more hospitable to extreme wealth inequality than one in which we are all in it together. When your power comes from being a "wise consumer" who "votes with your wallet," then all you can do about the climate emergency is buy a different kind of car – you can't build the public transit system that will make cars obsolete.

When you "vote with your wallet" all you can do about animal cruelty and habitat loss is eat less meat. When you "vote with your wallet" all you can do about high drug prices is "shop around for a bargain." When you vote with your wallet, all you can do when your bank forecloses on your home is "choose your next lender more carefully."

Most importantly, when you vote with your wallet, you cast a ballot in an election that the people with the thickest wallets always win. No wonder those people have spent so long teaching us that we can't trust our neighbors, that there is no such thing as society, that we can't have nice things. That there is no alternative.

The commercial surveillance industry really wants you to believe that they're good at convincing people of things, because that's a good way to sell advertising. But claims of mind-control are pretty goddamned improbable – everyone who ever claimed to have managed the trick was lying, from Rasputin to MK-ULTRA:

https://pluralistic.net/HowToDestroySurveillanceCapitalism

Rather than seeing these platforms as convincing people of things, we should understand them as discovering and reinforcing the ideology that people have been driven to by material conditions. Platforms like Facebook show us to one another and let us form groups that can imperfectly fill in for the solidarity we're desperate for after 40 years of "no such thing as society."

The most interesting thing about "egging my car" slop is that it reveals that so many of us are convinced of two contradictory things: first, that everyone else is a monster who will turn on you for the pettiest of reasons; and second, that we're all the kind of people who would stick up for the victims of those monsters.

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/29/hobbesian-slop/#cui-bono

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#taylor lorenz#conspiratorialism#conspiracy fantasy#mind control#a paradise built in hell#solnit#ai slop#ai#disinformation#materialism#doppelganger#naomi klein

At this point, after this has happened a dozen times, why the hell is anyone pushing any update that wide that fast. They didn't try 10 nearby computers first? Didn't do zone by zone? Someone needs to be turbo fired for this and a law needs to get written.

The "this has happened a dozen times" really isn't correct. This one is unprecedented.

But yes the "how the hell could it go THAT bad?" is the thing everyone with even a little software experience is spinning over. Because it is very easy to write code with a bug. But that's why you test aggressively, and you roll out cautiously - with MORE aggressive testing and MORE cautious rollout the more widely-impacting your rollout would be.

And this is from my perspective in product software, where my most catastrophic failure could break a product, not global systems.

Anti-malware products like Crowdstrike are highly-privileged, as in they have elevated trust and access to parts of the system that most programs wouldn't usually have - which is something that makes extremely thorough smoke-testing of the product way MORE important than anything I've ever touched. It has kernel access. This kind of thing needs testing out the wazoo.

I can mostly understand the errors that crop up where like, an extremely old machine on an extremely esoteric operating system gets bricked because the test radius didn't include that kind of configuration. But all of Windows?

All of Windows, with a mass rollout to all production users, including governments?

There had to be layers upon layers of failures here. Especially given how huge Crowdstrike is. And I really want to know what their post-mortem analysis ends up being because for right now I cannot fathom how you end up with an oversight this large.
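To make "roll out cautiously" concrete, here is a minimal Python sketch of a ring-based (staged) rollout: push to a tiny slice of machines first, check health, and only widen the blast radius while the failure rate stays under a threshold. The `deploy` callback, the ring sizes and the zero-tolerance default are all assumptions for illustration. A real pipeline would also wait and watch telemetry between rings, and this is not a description of Crowdstrike's actual process.

```python
from __future__ import annotations

import math
from typing import Callable, Sequence


def staged_rollout(
    machines: Sequence[str],
    deploy: Callable[[str], bool],
    ring_fractions: Sequence[float] = (0.01, 0.1, 0.5, 1.0),
    max_failure_rate: float = 0.0,
) -> tuple[list[str], bool]:
    """Push an update ring by ring, halting at the first unhealthy ring.

    ring_fractions are cumulative shares of the fleet and should end at 1.0.
    deploy(machine) installs the update and returns True if the machine is
    still healthy afterwards (hypothetical callback).
    Returns (machines that received the update, whether the rollout finished).
    """
    reached: list[str] = []
    start = 0
    for fraction in ring_fractions:
        end = min(len(machines), math.ceil(len(machines) * fraction))
        ring = list(machines[start:end])
        if not ring:
            continue  # this ring adds no new machines
        failures = sum(1 for m in ring if not deploy(m))
        reached.extend(ring)
        if failures / len(ring) > max_failure_rate:
            return reached, False  # stop: later rings never see the bad update
        start = end
    return reached, True
```

With a fleet of 100 machines and an update that bricks everything, only the first machine ever receives it, which is exactly the property that was missing when an update hit all of Windows at once.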

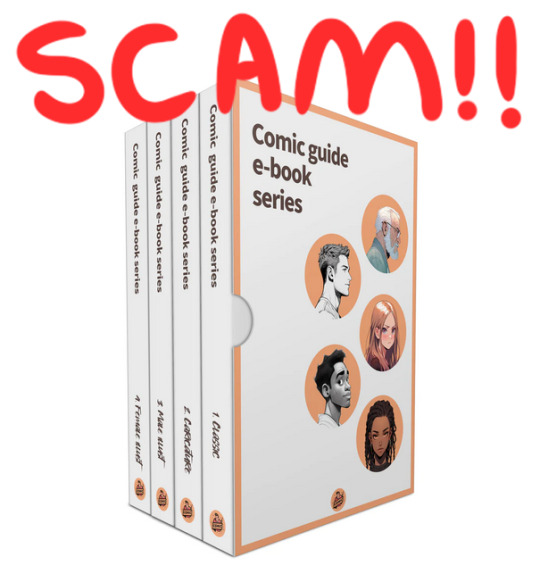

Beware of the new art book scams

Hey there, I'm putting together a little PSA. Art book ads can no longer be trusted. I recently got an ad for an art book from a company called Comicpencil. The book is all AI generated content. I didn't purchase it, but after digging I found a youtube video from an artist called Jazza pointing out that there is no credit to any artists anywhere. He purchased the book and pointed out that a lot of the text content also makes very little sense.

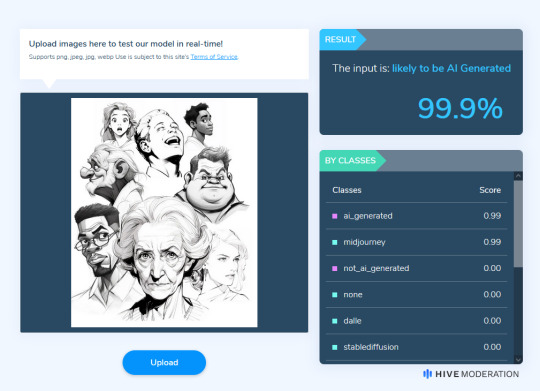

I tried commenting on the ad to point out that it was AI generated, and my comment was instantly deleted and I was blocked. I think they have some kind of detection software that flags any mention of AI on their ads to keep up the charade. Here's one of their posts run through one of the better AI image detectors, Hivemoderation. I know these tools aren't always accurate, but they can definitely help indicate AI if the results are consistent enough. I tested a few images I could find that weren't obscured to hell, and sure enough they all came out with these results.

Please. I urge you to share this. Scams in general are going to be getting worse thanks to AI and it's really important people are aware of this. Be vigilant.

Also, I am obsessed with your fucked-up son. Can I ask why Hero is Like That? Was it a programming error? He was meant to be the "friend", right, but even before the fall he was weirdly violent.

I just want to know more about him. What's going on in his fucked-up little head?

I’m obsessed with him too. I love him and I cry so much about him. Here’s why:

Since Hero was the first, he has quite a few errors in his design, both in his hardware (body) and software (brain and tablet of information).

He does not have any internal concept of empathy and doesn’t fully understand the concept that other creatures think and live. This caused him to accidentally kill some of the animals introduced into the Garden because he didn’t understand that he was hurting them. HOWEVER — he only became purposefully violent AFTER he faced considerable abuse from the authority figures around him (guards, scientists, Rana herself, and white eyes).

This is a very important distinction, because his lack of empathy was not what drove him to act aggressively. It’s also important to note that a lack of empathy does not mean someone is dangerous, conniving or violent; it simply means that they don’t feel the sensation of empathy. You are free to interpret any of the abio characters as you like, but it is my personal belief that Hero is not fully at fault for the violence he displays. I’ll explain why:

The violence Hero displayed was merely him, a person very new to the world, parroting the abusive behaviour shown towards him, and his way of trying to process emotions he was not familiar with, such as jealousy (towards the other players), fear of obsolescence, and misery at the lack of autonomy and respect he was shown. Hero was in a position where he had no power over himself and was physically, emotionally and mentally dependent on authority figures that did not see him as an equal or as a fully realized being. For example, Hero is unable to will himself to eat without being commanded to by an authority figure (he is anorexic: he does not feel hunger), so you can probably see how this puts him in a vulnerable position.

Basically, he began to lash out at anyone he could lash out at, whether that be animals or the other players. He could not bear the thought of placing the blame on the authorities in his life because then his whole world would fall apart, so instead he shifted the blame onto those in the same position as him (Alex, Steve and two others, Jane and Ed, who would later become “null” and “entity 303”), convincing himself that they were malicious and wanted to replace him (when in reality they are basically robot infants).

Then, there was the influence from White Eyes. I’ve previously stated that White Eyes was a void entity but I’ve changed that after a friend suggested it to me and i got hooked on the idea. She’s another test subject, an unstable experiment born from trying to fuse living corporeal matter and void matter (basically she’s an enderman human and various animals hybrid). White Eyes is constantly in pain and this has made her a very vindictive and aggressive being. All voidborn beings have psionic influence and White Eyes has this as well, however it’s not as fine tuned as a natural voidborn like an enderman. Hers is more like an influence. White Eyes rubbed off on Hero, and while she was increasingly immobilized by her dying body, Hero would follow her commands and absorb her emotions like a sponge. They had a very close bond but it was very unhealthy. This was another factor that caused him to commit violent acts.

Anyway, that’s some abiogenesis hero lore for you.

How to Deal with Windows 10/11 Nonsense

This is more for my own reference, to keep all of this in one post. But hopefully others will find this useful too! So yeah, as the title says, this is a post to organize links and resources related to handling/removing nonsense from Windows 10 and Windows 11, especially bloatware and stuff like that Copilot AI thing.

First and foremost, there's O&O Software's ShutUp10++ (an antispy tool that helps give you more control over Windows settings) and App Buster (helps remove bloatware and manage applications). I've used these myself for Windows 10 and they work great, and the developers have stated that these should work with Windows 11 too!

10AppsManager is another bloatware/app management tool, though at the moment it seems to only work on Windows 10.

Winaero Tweaker is similar to ShutUp10++ in that it gives you more control over Windows to disable some of the more annoying settings, such as web search from the taskbar/start menu and ads/tips/suggestions in different parts of the OS. I think ShutUp10++ covers the same options as this one, but I'm not entirely sure.

OpenShell helps simplify the Start Menu and make it look more like the classic Start Menu from older versions of Windows. It should work with both 10 and 11, according to the readme.

Notes on how to remove that one horrible AI spying snapshots feature that's being rolled out on Windows 11 right now.

Article on how to remove Copilot (an AI assistant) from Windows 11. (Edit 11/20/2024) Plus a post with notes on how to remove it from Windows 10 too, since apparently it's not just limited to 11 now.

Win11Debloat, a simple script that can be used to automatically remove pretty much all of the bullshit from both 10 and 11, though a lot of its features are focused on fixing Windows 11 in particular (hence the name). Also has options you can set to pick and choose what changes you want!

Article on how to set up Windows 11 with a local account on a new computer, instead of having to log in with a Microsoft account. To me, this is especially important because I much prefer having a local account to letting Microsoft access my stuff via a cloud account. Also see this article and this article for more or less the same process.

I will add to this as I find more resources. I'm hoping to avoid Windows 11 for as long as possible, and I've already been using the O&O apps to keep Windows 10 trimmed down and under control. But if all else fails and I have to use Windows 11 on a new computer, I plan to be as prepared as possible.

Edit 11/1/2024: Two extra things I wanted to add onto here.

A recommended Linux distro for people who want to use Linux instead of Windows.

How to run a Windows app on Linux, using Wine. Note that this will not work for every app out there, though a lot of people out there are working on testing different apps and figuring out how to get them to work in Wine.

The main app I use to help with my art (specifically for 3D models to make references when I need it) is Windows only. If I could get it to work on Linux, it would give me no reason to use Windows outside of my work computer tbh (which is a company laptop anyways).

Fully Underwater Lot Tutorial

@creida-sims @kitkat99

UPDATE January 2025

There is now a much, much easier way to do this. This version of SimPE now includes a tool to change terrain geometry. To edit the terrain in SimPE, go to Tools/Neighborhood/Neighborhood Browser and load your neighborhood. In the Resource Tree, select Neighborhood Terrain Geometry (NHTG) and select the only resource in the Resource List. In Plugin View, click Terrain Editor. It's very intuitive, but basically, you can edit the terrain under a lot to make it be underwater. You can also delete the road with the Road Editor.

I'll keep the old tutorial for archival purposes, but unless you can't or don't want to install this version of SimPE, it is pretty much obsolete.

Some warnings and disclaimers

1. This is not a beginner tutorial. I have tried to explain with as much detail as I can, but still, if you just started playing The Sims 2 I recommend trying out other building tutorials first.

2. I have tested it but there's always a possibility that some new problems will come up. Follow at your own risk. Backup your neighborhoods before trying this. Test it first in a new empty neighborhood.

3. These lots are roadless, so they require specific gameplay conditions to avoid breaking immersion (pun intended).

4. They will behave like normal lots in the sense that sims can walk around and do anything as if they were on land. There's one big problem to consider: when sims go fully underwater, their hair and some parts of their clothing might disappear visually.

So this is more useful for structures that sit above the water, shallow water that doesn't reach a sim's head or, with some modifications, small islands surrounded by water. So unless you want bald mermaids, I don't recommend this for sims that live underwater.

5. If you use Voeille's hood water mod, reflections will look glitchy in lot view, because this is technically not a beach lot. The only solution I found is enabling "Lot view ocean reflections" in RPC Launcher. Otherwise you'll have to deal with glitchy reflections.

6. Before following this tutorial, make sure you know the basics of creating, editing, importing and exporting SimCity 4 terrains. Written tutorial by SimEchoes here, video tutorial by loonaplum here.

Software and mods used

The Sims 2 FreeTime expansion pack (required for the modifyNeighborhoodTerrain cheat)

SimCity 4 (required)

Hood Replace by Mootilda (required)

Lot Adjuster by Mootilda (required)

Portal revealer by Inge Jones (required)

Voeille's pond and sea water overhaul and RPC Launcher (optional, see disclaimers)

The Sims 2 Apartment Life and Bon Voyage expansion packs (optional, for "walk to lot/work/school" options)

Cheat codes used

moveObjects on/off

modifyNeighborhoodTerrain on/off

1. Creating/editing a terrain in SimCity 4

1.1. You can edit an already existing terrain or create your own from scratch. In both cases, you need to keep two things in mind: If you want the usable area of the lot to be underwater, such as making houses for mermaids or a coral reef, make sure the water is shallow. No more than a few short clicks with the terraforming tools in SimCity 4. This is because The Sims 2 live mode camera won't go underwater, so making the water too deep might make it uncomfortable to build or play the lot.

If your goal is to build a structure mostly above water, like a ship or an oil rig, you can get away with making the water a bit deeper, but not too much. I've noticed that the lot terrain tools sometimes stop working correctly if there's a hill that's too steep.

1.2. Once you've created the terrain, you will need to add a small island on the area where your lot will be. I know it seems contradictory, but trust me, it will make sense. Create and name your city. Use the terraforming tools in city mode to make a tiny island of about 6x2 squares.

1.3. On the island, use the road tool to place a straight road that takes up 4 squares. Then, using the street tool (the last option), place two short streets at each end of the road.

Streets disappear in TS2; only roads translate to roads in TS2. So why do we place them? Placing a street at the end of a road gets rid of the rounded end bit in TS2, which can't be used to place lots. This will be important for placing the lot and for making sure the edges of the lot are underwater. If this doesn't make sense yet, don't worry, it might make sense later.

1.4. Save the terrain. Don't exit SimCity 4. Copy your new/edited sc4 terrain from your SimCity 4 folder to your SC4Terrains folder in your Sims 2 documents directory. It is usually

"C:\Users\YOURUSERNAME\Documents\EA Games\The Sims 2 Ultimate Collection\SC4Terrains"

1.5. Back in SimCity 4, we're going to make a second version of this terrain. Remove the roads and streets you made before, with the bulldozer tool. With the level terrain tool in Mayor mode, carefully remove the island, so it's on the same level as the bottom of the ocean/lake. Don't change anything else. Save, exit and copy this second terrain to your Sims 2 SC4Terrains folder. Make sure you rename the file to something different from the first one, like adding "no roads" to the filename. You should have two terrains by the end of this step. One with the small island and one without it.
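If you end up juggling several versions of a terrain, copying and renaming them by hand gets tedious. Here's a small Python sketch that copies a `.sc4` file into the SC4Terrains folder and can optionally give the copy a new name, like the "no roads" version from step 1.5. The default folder is the path from step 1.4 built from your home directory, which assumes Documents is in its usual place; `copy_terrain` and the example file names are hypothetical. It refuses to overwrite an existing file unless you ask it to, so the two versions can't clobber each other.

```python
import shutil
from pathlib import Path
from typing import Optional

# Default destination from step 1.4. Assumes Documents sits directly under your
# home folder; adjust it if Documents is redirected (e.g. to OneDrive).
SC4TERRAINS = (Path.home() / "Documents" / "EA Games"
               / "The Sims 2 Ultimate Collection" / "SC4Terrains")


def copy_terrain(city_file: Path, dest_dir: Path = SC4TERRAINS,
                 new_stem: Optional[str] = None, overwrite: bool = False) -> Path:
    """Copy a SimCity 4 .sc4 file into the Sims 2 SC4Terrains folder.

    new_stem renames the copy (e.g. "... no roads") so both versions of the
    terrain can live side by side. Returns the path of the copy.
    """
    if city_file.suffix.lower() != ".sc4":
        raise ValueError(f"{city_file} is not a .sc4 file")
    dest_dir.mkdir(parents=True, exist_ok=True)
    name = f"{new_stem}{city_file.suffix}" if new_stem else city_file.name
    target = dest_dir / name
    if target.exists() and not overwrite:
        # Refuse to silently replace the other version of the terrain.
        raise FileExistsError(target)
    shutil.copy2(city_file, target)  # copy2 also keeps the file's timestamps
    return target


# Hypothetical usage (placeholder paths):
# copy_terrain(Path(r"C:\path\to\City - Reef.sc4"))                     # step 1.4
# copy_terrain(Path(r"C:\path\to\City - Reef.sc4"),
#              new_stem="City - Reef no roads")                         # step 1.5
```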

2. Editing the terrain in The Sims 2

2.1. Open The Sims 2 and create a new neighborhood using your new terrain. Something to keep in mind: if you want the terrain to be a subhood of another neighborhood, make it a subhood from the start. You will not be able to move the lot once it's finished, since it will be roadless. I don't recommend decorating the neighborhood for now. Leave it empty until the end of this tutorial.

2.2. Place the smallest empty lot (3x1) on the island.

2.3. In neighborhood view, open the cheat console by pressing Control + Shift + C, and type

modifyNeighborhoodTerrain on

To quote The Sims Wiki:

"This allows you to alter the neighborhood terrain by raising or lowering it. To use this cheat, be in the neighborhood view, then enter the cheat "modifyNeighborhoodTerrain on" (without the quotation marks), and click over the area you would like to change. To select a larger area, click and drag the cursor to highlight the desired area. Press [ or ] to raise or lower the terrain by one click, press \ to level the terrain, and press P to flatten terrain. When you're finished, type “modifyNeighborhoodTerrain off” in the cheat box (again, without the quotations)."

If you use an English keyboard, these instructions will probably be enough for you. If you don't, I recommend testing the cheat first, because the keys for this cheat are different in other languages. For example, on my Spanish keyboard, the question mark keys are used to raise and lower the terrain and the º/ª key flattens it.

Another aside: When you select an area using this cheat, a green overlay is supposed to show up. Some lighting mods, like the one I use, make this green overlay invisible. If that's your case, you kind of have to eyeball it. Remember that one neighborhood grid square in TS2 is equivalent to 10 lot tiles, or the width of a road. I recommend getting a mod that allows you to tilt the neighborhood camera on the Y axis, which will give you a bird's-eye view of the terrain.

2.4. Flatten the terrain around the lot so it's at water level. This cheat won't allow you to edit the terrain inside the lot, so you have to edit the terrain around the lot. Make sure there is plenty of flat underwater space around the island. You should end up with something like the picture above. The water will have some holes, but don't worry, those get filled with water the next time you load the neighborhood.

2.5. Enter the lot and place any object on it. Save the lot and exit the game. This is so Lot Adjuster recognizes the lot in the next step.

3. Expanding the lot with Lot Adjuster

3.1. Open Lot Adjuster and select your neighborhood and lot.

3.2. Click "Advanced…". Check "Over the road (only enlarge front yard)". Use the arrows to add 20 tiles to the front yard. Click "Finish" and "Restart".

3.3. Select the same lot again. This time, check "Add and remove roads". Uncheck the road checkbox for the front yard. Add 20 tiles to the back yard, 20 tiles to the left side and 10 tiles to the right side. Check "Place portals manually". You should end up with a 60x60 lot, which is the biggest size. You might want a smaller lot, but unless you know what you are doing, I recommend starting with this size. You can shrink it later. The goal of making the lot this big is making sure the edges of the lot are underwater. Click "Finish" and exit.

4. Moving portals and flattening the lot

4.1. Make sure you have the portal revealer by Inge installed in your Downloads folder before the next step. Open your game and load your neighborhood. The lot should look something like the picture above.

4.2. Load the lot. Delete the object you placed before. Place the portal revealer on the lot near the mailbox/phone booth and trashcan. It looks like a yellow flamingo and you can find it in Build Mode/Doors and Windows/Multi-Story Windows catalogue. You will notice that when you select the object from the catalogue, some yellow cubes appear on the lot, and when you place the object, the cubes disappear. After placing the portal revealer, pick it up and place it again. This will make the yellow boxes visible again.

So what are those yellow boxes? They are portals. They determine where sims and cars arrive and leave the lot. The ones on both ends of the sidewalk are called pedestrian portals, and in the street, one lane has portals for service vehicles (maids, gardeners, etc.) and the opposite is for owned cars and carpools. You can see the portals' names if you pick them up. Make sure not to delete any of them.

Now, since this is going to be a roadless lot, ideally no vehicles will show up on it, which means the lot should only be reached on foot. For community lots, this is not an issue if you have the Bon Voyage expansion pack, which allows sims to walk to lots.

In residential lots, you might run into some problems. Service NPCs always arrive on vehicles, and unless your sim owns a vehicle, the carpool and school bus will always come to pick sims up for work/school. It might break your immersion to have a vehicle show up underwater or on a ship. There are many options to avoid this: having sims work on an owned business instead of a regular job, not having kids on the lot, making the kids homeschooled, avoiding calling service NPCs… it depends on how you want to play the lot. For example, my icebreaker is a residential lot, only adults live there, some sims live in it temporarily and none of them have a regular job.

All of this is relevant because we're going to move the portals. Where you move them is up to you, so think about how you're going to use the lot. In my icebreaker, I placed the car portals (which won't be used) underwater, in a corner of the lot. I placed the pedestrian portals on the ship, to pretend that the walkbys are part of the crew or passengers. For now, though, just move the portals, the mailbox/phone booth and the trashcan to a corner of the lot. To be able to pick up the mailbox/phone booth and trashcan, use the cheat moveObjects on. Delete the street and sidewalk tiles using the floor tool (Control + click and hold the left mouse button + drag).

4.3. Flatten the island with the level terrain tool. Save the lot. Almost done! Don't mind the hole in the water; this will be fixed. You will notice that in neighborhood view, the island is still there. This is because the neighborhood terrain under the lot hasn't been updated. I don't know why this happens, but it does. Normally, moving the lot would fix it, but we can't move this lot using the game's tools. Instead, we are going to fix the terrain with Hood Replace. Don't exit the game yet.

5. Updating the terrain with Hood Replace

5.1. Create a new neighborhood using the new roadless terrain. Make sure it has the same type of terrain (lush, desert, etc.) as the first one. Again, name it "NO ROADS" or something similar. Exit the game.

5.2. Open Hood Replace. In the left column, select your "NO ROADS" neighborhood. In the right column, select the neighborhood that has the underwater lot. Check these settings: Replace terrain, replace road, and versioned backups. Leave everything else unchecked. Click Copy.

5.3. Open The Sims 2 and load the neighborhood to check that the changes worked. That concludes the tutorial. At this point you can shrink the lot if you don't want it to be so big. Remember to move the portals to their final placement when you're done building the lot. Also keep in mind that pedestrians (walkbys) always walk by the mailbox, so keep the mailbox accessible to sims.

If you're going to have multiple underwater lots, I recommend making them first and decorating the neighborhood afterwards. Doing this in an existing neighborhood might be more difficult, mainly because any changes you made to the terrain in the past using the modifyNeighborhoodTerrain cheat might get reset when using Hood Replace.