#linux add user to group wheel


Chimera-Linux with btrfs

Chimera Linux is a fairly new, built-from-the-ground-up Linux distribution that uses LLVM, musl, BSD utilities and dinit. It comes with GNOME and KDE Plasma. It doesn't, however, come with an installer, so here's how to install the KDE flavour with btrfs root and home directories plus a swap partition, for use in Linux KVM with UEFI.

Step 1. Get a Chimera live image from https://repo.chimera-linux.org/live/latest/

I use the chimera-linux-x86_64-LIVE-XXXXXXXX-plasma.iso image with KDE Plasma 6 and the following steps assume you do the same.

Step 2. Boot the live image

Step 3. Prepare the target disk with KDE Partition Manager

/dev/vda
/dev/vda1, vfat, EFI System, 500 MB
/dev/vda2, btrfs, Root FS, subvols @ & @home, rest of the disk
/dev/vda3, swap, SWAP FS, 2x RAM Size
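The "2x RAM Size" figure for the swap partition can be worked out in the live session before opening the partition manager. This is only a minimal sketch, assuming /proc/meminfo is readable; the helper name swap_mib_for is made up for illustration and is not part of any Chimera tool.

```shell
#!/bin/sh
# Hypothetical helper: given the RAM size in kB, print twice that size in MiB.
swap_mib_for() {
    echo $(( $1 * 2 / 1024 ))
}

# MemTotal in /proc/meminfo is reported in kB.
if [ -r /proc/meminfo ]; then
    ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    echo "Suggested swap size: $(swap_mib_for "$ram_kb") MiB"
fi
```

The printed value can then be typed into the size field for /dev/vda3.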

Step 4. Open Konsole and do the following

doas -s

mkdir -p /media/root

mount -t btrfs /dev/vda2 /media/root

chmod 755 /media/root

btrfs subvolume create /media/root/@

btrfs subvolume create /media/root/@home

btrfs subvolume set-default /media/root/@

umount /media/root

mount -t btrfs -o compress=zstd:5,ssd,noatime,subvol=/@ /dev/vda2 /media/root

mkdir -p /media/root/home

mount -t btrfs -o compress=zstd:5,ssd,noatime,subvol=/@home /dev/vda2 /media/root/home

mkdir -p /media/root/boot/efi

mount -t vfat /dev/vda1 /media/root/boot/efi

Let's bootstrap our new Chimera system

chimera-bootstrap -l /media/root

exit

Time to chroot into our fresh system

doas chimera-chroot /media/root

Time to bring everything up to date

apk update

apk upgrade --available

If something looks broken, let apk repair it

apk fix

We want our swap to show up in the fstab, so activate it first

swapon /dev/vda3

Let's build an fstab

genfstab / >> /etc/fstab
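Since the swap partition was activated before the fstab was generated, the resulting file should contain a swap line. The following is a rough sketch for checking that from inside the chroot; has_swap_entry is a hypothetical helper name, and the check only looks at the third fstab field.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if file $1 has an uncommented entry
# whose filesystem type (third field) is "swap".
has_swap_entry() {
    awk '$1 !~ /^#/ && $3 == "swap" {found=1} END {exit !found}' "$1"
}

fstab=/etc/fstab
if [ -r "$fstab" ]; then
    if has_swap_entry "$fstab"; then
        echo "swap entry found in $fstab"
    else
        echo "no swap entry in $fstab; was swapon run before genfstab?"
    fi
fi
```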

Install the latest LTS kernel

apk add linux-lts

Install the latest released kernel

apk add linux-stable

update-initramfs -c -k all

Time for EFI GRUB

apk add grub-x86_64-efi

grub-install -v --efi-directory=/boot/efi

update-grub

install KDE, Firefox, Thunderbird

apk add plasma-desktop flatpak smartmontools ufw firefox thunderbird qemu-guest-agent-dinit spice-vdagent-dinit

Set root password

passwd root

Create the main user

useradd myuser

passwd myuser

Add the user to the relevant groups

usermod -a -G wheel,kvm,plugdev myuser
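To confirm the group change took effect, the output of id can be checked against the three groups. This is a small sketch rather than a required step; has_group is a hypothetical helper, and myuser is the example account name used in this post.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if group name $2 appears in the
# space-separated group list $1 (whole-word match only).
has_group() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

user=myuser   # example account name from the steps above
# id -nG prints the names of all groups the user belongs to.
if user_groups=$(id -nG "$user" 2>/dev/null); then
    for g in wheel kvm plugdev; do
        if has_group "$user_groups" "$g"; then
            echo "$user is in $g"
        else
            echo "$user is NOT in $g"
        fi
    done
else
    echo "user $user does not exist on this system"
fi
```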

Set hostname

echo chimera > /etc/hostname

set timezone

ln -sf /usr/share/zoneinfo/Europe/Berlin /etc/localtime

Configure some services

syslog-ng

dinitctl enable -o syslog-ng

sshd

dinitctl enable -o sshd

KDE Login Manager

dinitctl enable -o sddm

Only needed when running in a KVM VM

dinitctl enable -o spice-vdagentd

dinitctl enable -o qemu-ag

network time client

dinitctl enable -o chrony

network manager defaults to dhcp client on first ethernet interface

dinitctl enable -o networkmanager

optional: enable firewall if installed

dinitctl enable -o ufw

see the firewall status

ufw status

configure flatpak

flatpak remote-add --if-not-exists flathub https://dl.flathub.org/repo/flathub.flatpakrepo

just to be sure

update-initramfs -c -k all

update-grub

exit from chroot

exit

Unmount the drive

doas umount /media/root/boot/efi

doas umount /media/root/home

doas umount /media/root

Step 5. Reboot the System


The TARS Foundation Celebrates its First Anniversary

The TARS Foundation, an open source microservices foundation under the Linux Foundation, celebrated its first anniversary on March 10, 2021. As we all know, 2020 was a strange year, and we are all adjusting to the new normal. Meanwhile, despite being unable to meet in person, the TARS Foundation community is connected, sharing and working together virtually toward our goals. This year, four new…


#linux add user to group wheel#linux find text in files#linux kernel documentation#linux mint#linux unzip file


Recommend the Most Popular Mobile Game Engines in 2021

Game engine software is a suite of tools that gives developers access to every part of a project. It can be used by individuals or by a team working together. Game engines are built on a variety of languages, such as Python and C++. Most game engines don't require coding knowledge, but strong programming skills let you get more functionality out of the software.

Game engines like Unity have visual editors that let developers view modifications to a project in real time. Some programs let users manually place backgrounds, characters, and icons directly on the screen.

For beginners, game engines feature drag-and-drop logic creation tools, which are used to create events and behaviors for characters and enemies. You will also find many game engines that support the creation of AR and VR games. VR takes players into a digital world, while AR integrates game graphics with a real-world setting.

Game engines also allow monetization of games through in-app purchases and help you bring your ideas to life. Of course, the scope of your project will determine the ideal solution for you. Here is the game engine software that game app developers will find essential.

1. Unity

In today’s growing gaming industry, Unity is one of the most popular game development engines. Its major benefit is that it is cross-platform, and it has an ample collection of tutorials to help beginners get started. Plus, Unity is the best game engine for mobile game development.

2. Unreal Engine

Unreal Engine was first showcased in Unreal, a first-person shooter developed by Epic Games and published in 1998. Since 2015 Unreal Engine has been free to download, and registered users can access its source code on GitHub. It supports Linux, PlayStation, Xbox, iOS, Windows, and more.

3. Solar2D

It is a 2D game development engine introduced in 2009 (originally as Corona SDK). Game app developers use it as a free, open-source platform to develop 2D games for Android, iOS, and desktop. Solar2D can integrate your project with Android Studio to add native features. It supports Android, iOS, and Windows.

4. SpriteKit

If you are a fan of iPhone game apps, then SpriteKit is one of the best options for developing games for Apple platforms. Apple launched it in 2013.

It supports iOS, macOS, tvOS and watchOS and integrates well with GameplayKit, SceneKit, and Swift.

It helps in creating visually engaging apps, providing the necessary resources such as physics, lighting, and animation in a hassle-free process.

5. Marmalade SDK

Created by the company originally called Ideaworks3D Limited, Marmalade SDK is a cross-platform engine that supports different platforms such as Windows, iOS, and Android. Its game assets can be exported to other 3D modeling and animation tools, such as those from Autodesk, and it also supports 2D game development.

6. GameMaker

It is one of the most used game engines because it requires no programming or coding knowledge. All you have to do is “point and click” to create games, which is much easier and faster than coding in native languages.

Unlike many other game engines, however, it is not free to use. It requires you to buy either the Professional or the Master Collection version.

7. Godot

It is one of the popular game engines that lets you make both 2D and 3D games. It has a vast set of common tools so that game app developers can focus on making games without reinventing the wheel.

Godot is free and open source under the MIT license. The good news is that there are no royalties, no subscription fees, and no hidden strings attached. The games you develop with the Godot engine are yours.

It also has a community that regularly fixes bugs and develops additional features. Its active community can answer even your most specific Godot-related questions.

You can find a strong Godot community on internet hubs, including Reddit forums, Facebook groups, the Steam community, the Godot forums, and more.

8. Clickteam Fusion

It is one of the most fun game engines supported by the creative team. The Fusion engine is full of graphical assets already made and used by many in the game industry. Fusion has an integrated physics engine and a great community, to boot. You can use it for all the platforms like Android, iOS, and Windows including Steam.

9. Cocos2d-x

It claims to be “the world’s top open-source game development platform”. Well, is it?

Of course, it is difficult to give a definitive answer if you aren’t familiar with game design, or if your personal preferences favor something more in line with Godot or Unity 3D.

It is free to use and open source under the MIT license. It provides prominent features, cross-platform support, and a simple path to success in the world of game development. Although it primarily uses C++, it is versatile and can also use Java.

Summary:

Above are the most popular game engines, a quick snapshot of what the mobile game development community offers. These game engines represent a wide range of use cases and should help you determine what tools you need for your next project. If you don’t have time to juggle it all yourself, Glownight Games Studio is the best mobile game development company in the industry to help you deliver your important game app projects on time.

#Mobile game development#Unity game development companies#3D game development company#Unity game developers#3D game developers#2D Game Development Company


Kerbal Space Program 1.8: “Moar Boosters!!!” is now available!

Hello everyone!

New gadgets are coming from the Research and Development facility, the kind that will get Kerbals screaming: MOAR BOOSTERS!!! A brand new update is here and with it comes better performance, fresh new features, improved visuals, and new parts being added to our players’ creative repertoire!

Kerbal Space Program 1.8: Moar Boosters!!! is an update focused on making the game perform and look better, all while introducing more quality of life features that will improve the overall player experience. We’re also bringing some new solid rocket boosters to the VAB, as well as introducing some exclusive treats for owners of the Breaking Ground Expansion.

Let’s go through some of the update’s highlights below:

Unity Upgrade

Moar Boosters!!! brings an upgrade to the underlying engine of the game to Unity 2019.2, which helped us implement performance and graphics improvements, as well as better rendering performance and a reduction of frame rate stutters. With the new tools that this upgrade provides, we’ll be able to continue refining the game in upcoming updates.

Celestial Body Visual Improvements

Mun, Minmus, Duna, Ike, Eve and Gilly have new high-quality texture maps & graphic shaders, and now look sharper and more realistic! You will also be able to select the celestial bodies’ shader quality in the settings and set them to low (legacy), medium or high, with improvements being visible across the board. These are just part of the first batch of celestial bodies being overhauled; slowly but surely, we will continue this endeavor.

Map Mode improvements

Map mode received some adjustments too! Now you can use docking mode and stage your craft whilst in map mode. The stage display button (formerly stage mode) now serves as a toggle to show and hide the stage stack, whether you’re in flight or map view, and selected map labels will now persist when going back and forth between map and flight mode.

New SRBs!

A range of new solid rocket boosters have been added to the game. From the tiny .625m stack size Mite to the titanic 2.5m wide, 144ton Clydesdale, these new boosters will offer a range of versatile solid-fuel options. Making History owners get an extra bonus here too with the “Pollux” and a 1.875m nose cone to fit on top of it.

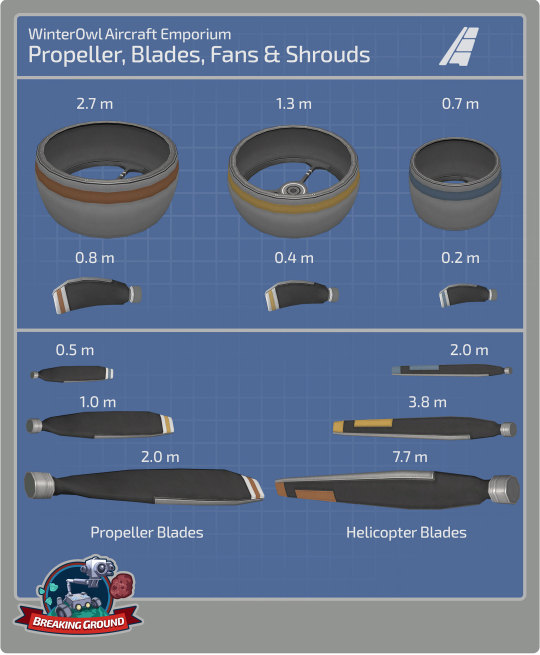

Breaking Ground Exclusives

Kerbal Space Program 1.8: Moar Boosters!!! also includes some exclusive content for owners of the Breaking Ground Expansion. A new set of fan blades and shrouds will continue to push the creativity of KSP players even further. Use them to create drones, ducted fan jets, or anything you can imagine.

Improvements to the helicopter blades and the robotic part resource consumption have also been included. The latter will now have better info on consumption and improved options for power-out situations.

And more!

To learn more you can read the full Changelog here:

=============================v1.8.0===========================

1.8.0 Changelog - BaseGame ONLY (see below for MH and BG changelog)

+++ Improvements

* Upgrade KSP to Unity 2019.2.2f1 version.
* Standalone Windows build now uses DX11 Graphics API. Many visual improvements to shaders and FX.
* Implement Unity Incremental Garbage Collection.
* Implement new celestial body shaders and textures for Mun, Minmus, Duna, Ike, Eve, Gilly.
* Update Main Menu Mun terrain shader.
* Add Terrain Shader Quality graphics setting.
* Improve the TrackingStation load time.
* Implement ability to edit Action Groups in flight.
* Performance improvements to the VAB/SPH scenes.
* Performance improvements in the flight scene.
* Performance improvements in the Tracking Station scene.
* Add ability to edit resource values in PAWs using the key input.
* Add Warp to node button on dV readout in NavBall display.
* Add enable/disable wheel motor Actions to all wheels.
* Add ability to limit the maximum size of PAWs via settings.cfg.
* Improve the Action Groups/Sets UI.
* Add PAW_PREFERRED_HEIGHT to settings.cfg for players to set a preferred max height.
* Made staging and docking UI available in map view
* Pinned labels in map view now persist pinned even when leaving and re-entering map view
* "Delete All" functionality for messages app has been implemented.
* Improve the KSC grass and asphalt texture and shader to reduce tiling.
* Improve textures for the VAB building on level one.
* Model revamp for the level one and level two Research and Development nissen huts.
* Increased precision for eccentricity in advanced orbit info display.
* Upgrade VPP and improve wheel and landing leg function.
* Expose global kerbal EVA Physics material via setting.
* Add do not show again option to re-runnable science experiments.
* Add actions for same vessel interactions functionality.
* Implement per-frame damage threshold on destructible buildings.
* Add vessel name title to flag PAWs.
* Add a confirm dialog with the option of “Don’t display again” when a kerbal removes a science experiment data.
* Disable Pixelperfect on UI Canvases to improve performance - available to configure via settings.cfg.
* Increase precision for numerical editing of maneuver nodes.
* Kerbal position on ladders and command pods improved.
* Add ability for users to add their own loading screen pictures to the game. Folder is KSP/UserLoadingScreens

+++ Localization

* Fix incorrect naming of The Sun.
* Fix Action Sets text in VAB/SPH for some languages.
* Fix Text in dV KSPedia pages in Japanese.
* Fix Chinese Localizations.
* Fix dV readout for Chinese language.

+++ Parts

New Parts:
* S2-33 “Clydesdale” Solid Fuel Booster.
* S2-17 “Thoroughbred” Solid Fuel Booster.
* F3S0 “Shrimp” Solid Fuel Booster.
* FM1 “Mite” Solid Fuel Booster.
* Protective Rocket Nosecone Mk5A (“Black and White” and “Gray and Orange”).
* Add rock/dirt debris FX to the Drill-O-Matic and Drill-O-Matic Junior.

Updated Parts (reskinned):
* Service Bay (1.25m).
* Service Bay (2.5m).

Color Variants:
* Protective Rocket Nose Cone Mk7 (New “Orange” color variant)
* Protective Rocket Nose Cone Mk12 (New “Orange” color variant)

+++ Bugfixes

* #bringbackthesandcastle - Fix the Mun sandcastle easter egg from not appearing.
* Fix Maneuver editor so that the mouse wheel adjusts the node now in the contrary direction (same behavior as dragging down/up).
* Fix a null reference error when player threw away a vessel with fuel flow overlay turned on in the editor.
* Fix an input lock when switching between Editing the vessel and the Action groups menu.
* Fix user created vessels disappearing from the vessel spawn dialog.
* Fix the random selection of Mun vs Orbit scene when returning to Main Menu.
* Fix input field rounding on Maneuver Node editor fields.
* Fix a Null reference in the Editor when selecting a part and opening the Action Part Menu.
* Fix pressing Enter key confirms the game quick save dialog.
* Fix PAWs will now scale downwards from the header keeping more consistency on the fields.
* Fix an input lock issue where some PAW buttons disappeared when editing a numeric slider field.
* Fix Menu Navigation was missing in the quicksave dialog.
* Fix Mini Settings had some items that would be skipped when navigating with the arrow keys.
* Fix for remove from symmetry causing NRE in flight scene.
* Fix the FL-A10 collider no longer mismatching its geometry.
* Fix Control Surface and Aero Toggle Deploy Action not working in all situations.
* Joysticks and gamepads on Linux are again recognized and usable.
* Fix Action Groups UI and Color issues.
* Fix the LV-T30 Reliant Liquid Fuel Engine's bottom attach node.
* Fix a texture seam on the Probodobodyne Stayputnik.
* Fix a z-fighting issue on the destroyed VAB at level 3.
* Fix the Z-4K Rechargeable Battery Bank's bottom attach node.
* Fix the concrete tiling texture of the SPH at level 3.
* Fix a grass texture seam in front of the VAB at level 3.
* Fix missing texture and animation on the level one Administration Building flag.
* Smoothened Kerbal IVA expression transitions to avoid strange twitching.
* Make the LV-TX87 Bobcat exhaust FX more appropriate.
* Fix kerbal portraits when launching vessel with multiple kerbals in external command chairs.
* Fix drills operating when not in contact with the ground.
* Fix thrust center on the Mainsail engine.
* Add bulkhead profile to LV-T91 Cheetah, LV-TX87 Bobcat, RK-7 Kodiak and RE-I12 Skiff.
* Fix re-rooting of surface attach nodes.
* Fix kerbal IVA expression animations transitions.
* Fix shadows at KSC and in flight.
* Fix “sinker” warning during game load.
* Fix lengthy Map Transition when lots of vessels in the save.
* Fix overlap in vessel type information window.
* Fix a Null Reference when copying parts with alternative colours.
* Fix an error where the custom crafts were not loaded in the Load Craft dialog after navigating the tabs.
* Fix a null reference when clicking the Remove Symmetry button on some parts.
* Motorized wheels no longer keep generating torque even when the motor is set to ‘Disabled’
* Re-centered an off center scrollbar in the mini settings dialog.
* Rebalance decoupler, MK1-3, MK1 lander can, MK2 lander can, separators costs, crash tolerances, weight.

+++ Mods

* Target framework now .NET 4.x.
* DXT3 DDS formatted textures are not supported by Unity or KSP any more. You must convert to DXT5.
* Added UIPartActionWindow.UpdateWindowHeight to allow mods to dynamically set the PAW max height
* MapviewCanvasUtil.GetNodeCanvasContainer created as more performant method than MapViewCanvasUtil.ResetNodeCanvasContainer. Use the reset one only when you need to force a scale reset
* ModuleResourceAutoShiftState added that can be used for startup/restart of parts based on resource availability.
* VesselValues are now cached per frame. Can use ResetValueCache() to reset the cache.

1.8.0 Changelog - Making History DLC ONLY

+++ Improvements

* User can now click and drag and release to connect two nodes in the mission builder.

+++ Parts

New Parts:
* THK “Pollux” Solid Fuel Booster

Updated Parts (reskinned):
* Kerbodyne S3-14400 Tank
* Kerbodyne S3-7200 Tank
* Kerbodyne S3-3600 Tank

+++ Bugfixes

* Craft Thumbnails are not shown/generated for stock missions.
* Fix Kerbals spawning on EVA in missions spawning on their sides (very briefly).
* Fix Intermediate and Advanced Tutorial becoming stuck.
* Fix Typos in some part descriptions.
* Fix vessel width and height restrictions on Woomerang and Dessert in career games.
* Fix camera becoming stuck if in IVA mode when a vessel spawns in a mission set to change focus to that vessel.
* Fix hatch entry colliders on the M.E.M. lander can.

+++ Missions

+++Miscellaneous

+++ Mods

1.8.0 Changelog - Breaking Ground DLC ONLY

+++ Improvements

* Add renaming of Deployed Science Stations.
* Add alternators (producing electric charge) on LiquidFuel Robotic Rotors.
* Add propeller blade AoA, lift and airspeed readouts to their PAWs.
* Add Reset to built position button in PAWs of Robotic parts which causes them to reset their Angle, RPM or Extension.
* Add shutdown/restart function to robotics parts based on resource availability.
* Add preset curves functionality to the KAL controller.
* Add part highlighting on mouseover in KAL.
* Improve Robotic Part Resource usage info in editor.
* Add interact button to open PAW for Deployable Science parts.
* Added new KSPedia slides for Grip Pads, Propellers and Track Editor.
* Improve Robotics Parts Resource usage to use less resources when moving slower.
* The PAW button “Reset to Launch Position” for robotic parts now reads as, “Reset to build:” + Angle, RPM or Extension depending on the robotic part to avoid confusion.

+++ Localization

* Fix description text on R7000 Turboshaft Engine in English.
* Fix localization of resource name in robotic part PAWs.
* Fix KAL help texts.

+++ Parts

New Parts with Variants:
* S-062 Fan Shroud
* S-12 Fan Shroud
* S-25 Fan Shroud
* R-062 Ducted Fan Blade
* R-12 Ducted Fan Blade
* R-25 Ducted Fan Blade
* Readjusted the liftCurve, liftMachCurve and dragCurve values on the propellers and helicopter blades.

Rebalanced Robotic Resource Consumption values:
* G-00 Hinge
* G-L01 Alligator Hinge
* G-11 Hinge
* G-L12 Alligator Hinge
* G-W32 Hinge
* Rotation Servo M-06
* Rotation Servo M-12
* Rotation Servo M-25
* Rotation Servo F-12
* EM-16 Light Duty Rotor
* EM-32 Standard Rotor
* EM-64 Heavy Rotor
* EM-16S Light Duty Rotor
* EM-32S Standard Rotor
* EM-64S Heavy Rotor
* 1P4 Telescoping Hydraulic Cylinder
* 3P6 Hydraulic Cylinder
* 3PT Telescoping Hydraulic Cylinder
* R121 Turboshaft Engine
* R7000 Turboshaft Engine

+++ Bugfixes

* Fix Deployed Science Log and Message system spam.
* Fix Deployed Science parts sometimes exploding when coming off rails if in contact with another part (kerbal, etc).
* Fix Deployed science parts being visible during the astronaut complex when opening that scene from the Editor.
* Fix Robotic Parts using EC when moving to initially set position on launch.
* Fix slider numeric values in some PAW fields could go out of range.
* Fix autostrut processing for some use cases regarding root part being robotic part.
* Fix autostrut delay when vessel comes off rails for vessel with robotic parts.
* Fix Actions at the end of KAL track not firing in Play Once mode.
* Fix separation of the blades when attached to an active rotor.
* Fix rotation of cargo parts in extended tooltips.
* Fix cargo part icons appearing in Astronaut Complex when pinned.
* Fix drag on pistons.
* Fix cargo parts now rotate at the same speed as in the Editor on the inventory grid during Flight.
* Fix mirroring of hinges and rotation servos.
* Fix KAL Window not closing when vessel goes out of range.
* Fix incorrect naming of the Sun in science experiments.
* Fix mirrored attaching to rotor side nodes.

+++ Miscellaneous

+++ Modding

Kerbal Space Program 1.8: Moar Boosters!!! is now available on Steam and will soon be available on GOG and other third-party resellers. You will also be able to download it from the KSP Store if you already own the game.

Click here to enter the Grand Discussion Thread for this release.

Happy launchings!

By the way, you can download the new wallpapers of the Moar Boosters!!! art here:

1080x1920p (Most Phones)

1080x2048p (Galaxy S9)

1440x2560p (iPhone X)

Desktop 1920x1080p

Desktop 2048x1080p

Desktop 2560x1440p

#Kerbal Space Program#Update 1.8#Moar Boosters#Breaking Gorund Expansion#making history expansion#annoucement#changelog#Release Notes


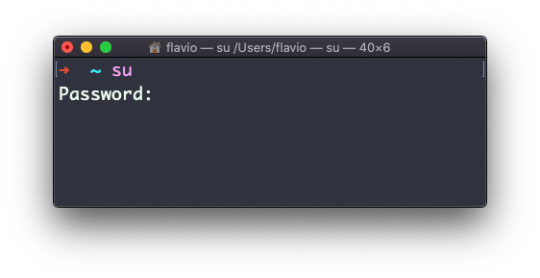

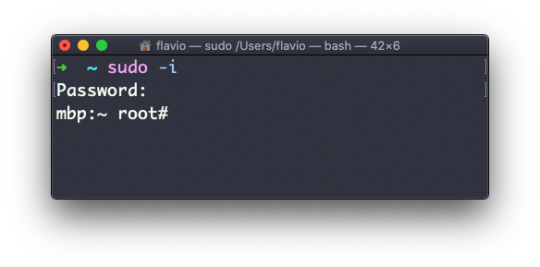

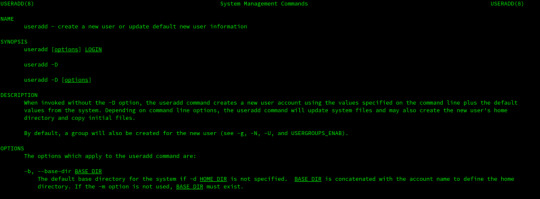

How To Create a Sudo User on Linux (CentOS)

The sudo command is designed to allow users to run programs with the security privileges of another user, by default the root user.

In this guide, we will show you how to create a new user with sudo privileges on CentOS. You can use the sudo user to perform administrative tasks on your CentOS machine without needing to log in as the root user.

Creating Sudo User

By default on CentOS, users in the wheel group are granted sudo access. If you want to configure sudo for an existing user, just add that user to the wheel group, as shown in step 4.

Follow the steps below to create a sudo user on your CentOS server:

1. Log in to your server

Start by logging in to your CentOS server via ssh as the root user:

ssh root@server_ip_address

2. Create a new user account

Create a new user account using the useradd command:

useradd username

Replace username with the user name that you want to create.

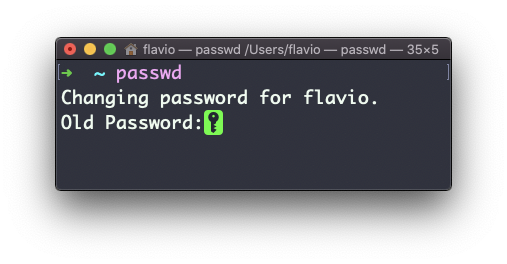

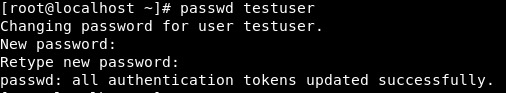

3. Set the user password

Run the passwd command to set a password for the new user:

passwd username

You will be prompted to confirm the password. Make sure you use a strong password.

Changing password for user username.
New password:
Retype new password:
passwd: all authentication tokens updated successfully.

4. Add the new user to the sudo group

By default on CentOS systems, members of the wheel group are granted sudo access. Add the new user to the wheel group:

usermod -aG wheel username
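Membership in wheel only grants sudo access if the sudoers configuration has an active rule for that group. A rough sketch of how one might check for it as root is shown below; wheel_rule_enabled is a hypothetical helper, the pattern is a simplification of sudoers syntax, and it only inspects /etc/sudoers, not any files under /etc/sudoers.d.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if file $1 contains an uncommented
# rule that starts with %wheel (the wheel group).
wheel_rule_enabled() {
    grep -Eq '^[[:space:]]*%wheel[[:space:]]+ALL' "$1"
}

sudoers=/etc/sudoers
if [ -r "$sudoers" ]; then
    if wheel_rule_enabled "$sudoers"; then
        echo "wheel group rule is active in $sudoers"
    else
        echo "no active wheel rule found in $sudoers"
    fi
else
    echo "cannot read $sudoers (run this as root)"
fi
```

If the rule is missing or commented out, it should be changed with visudo rather than by editing the file directly.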

How to use Sudo

Switch to the newly created user:

su - username

To use sudo, simply prefix the command with sudo and a space.

sudo [COMMAND]

For example, to list the contents of the /root directory you would use:

sudo ls -l /root

The first time you use sudo from this account, you will see the following banner message and you will be prompted to enter the password for the user account.

We trust you have received the usual lecture from the local System Administrator. It usually boils down to these three things:
#1) Respect the privacy of others.
#2) Think before you type.
#3) With great power comes great responsibility.
[sudo] password for username:

Conclusion

That’s all. You have successfully created a sudo user on your CentOS system. You can now use this user to perform administrative tasks on your server.

Feel free to leave a comment if you have any questions.


Download firefox 57


Why not take a screenshot with your browser?

Features Top-sites and Highlights with a lot of nice settings 🙂.

With Firefox 57, Mozilla has not only made changes behind the curtains; they have also improved the UI and integrated new features.

Basemark Benchmark (more is better): Firefox 55 Basemark benchmark, Firefox 57B4 Basemark benchmark

Kraken Benchmark (by Mozilla; less is better)

All benchmarks were done on the same machine running Kubuntu 16.04 (kernel 4.10). We tried to do some quantification with 3 different browser benchmarks. Using Firefox 57 (B4/AMD64/Linux) feels noticeably faster and smoother compared to FF55. So let’s have a look at some benchmarks and other stuff:

Performance

This engine is also written in Rust and targets better parallelism, security, and more. They fit nicely in with the Servo engine. Since the Servo engine is not under the Quantum project, its features are not aimed only at the Gecko engine.

Mozilla is developing more like this, so look around at Mozilla….

WebRender(Quantum Render) contains an optimized GPU rendering.

The technique behind it can be found here.

With that, they integrated some new ideas on how to parse CSS and more. Stylo (Quantum CSS) was the first project that reached the stable state and is also used in the current Firefox 55 release. The Mozilla Quantum project contains all the new implementations written in Rust for Firefox.

That makes it more efficient, especially for programmers, to write code for the feature without worrying about, for example, thread issues and more. But that doesn’t mean that Rust is easy to learn and use; as always, it must be learned and understood. The programming language Rust (Mozilla Rust) comes with solutions to some issues in the coder’s world. Why? Performance, a new clean codebase, better support for new technologies, another philosophy, … Not the city in Austria – the programming language

But, I am not rushing to upgrade just yet since I depend on Grammarly and a couple other extensions.įirefox 57 is available a freeload for Windows, macOS, and Linux.An entirely new Firefox – why reinvent the wheel again? I personally like Firefox because of how well it handles bookmark syncing. Firefox 1.0 launched in 2004 and there really hasn’t been much that has changed since except for the move to a rapid release similar to Google Chrome. This change is desperately needed to keep up with the rapid changes in browser development. If you don’t find something new in the update, you can always jump over to Chrome. Then again, it’s 2017 and you’re likely browser-agnostic like me, too. So, if you aren’t dependent on extensions like me, then you should be fine. With the overhaul, something had to give and this was extensions written in XUL.įirefox Quantum only supports web extensions at the moment and the last I checked there are only about 73 available. If you love Firefox Add-ons a staple of the browser since the early days, you might want check if yours are supported. Users might want to think carefully about upgrading though. There is also support for new web standards such as WebVR and better security using a new Tracking Protection mechanism which blocks requests from websites that want to track you. The new version comes with the new screenshot tool which Brian recently reviewed. Firefox Quantum is over twice as fast as Firefox from 6 months ago, built on a completely overhauled core engine with brand new technology stolen from our advanced research group, and graced with a beautiful new look designed to get out of the way and let you do what you do best: surf a ton of pages, open a zillion tabs, all guilt-free because Firefox Quantum uses less memory than the competition. Common areas of the web browser such as Bookmarks, Synced tabs, Browsing History and Pocket lists are streamlined and better integrated. 
Called Photon, the new user experience is cleaner and easier to navigate. In addition to the under-the-hood improvements, users can expect a refreshed user interface that cleans up a bit of the clunkiness collected over the past decade. Mozilla says users should see a performance boost of up to 30%, as it uses less memory when loading a web page in a new window or tab. Mozilla Launches Firefox 57 with Improved Performance and Revamped UI. But Chrome has been experiencing a bit of dislike among users who describe it begrudgingly as a memory hog, an issue Mozilla focuses on with the new multi-process architecture, which chops up background activities run by the browser in each tab and on the web pages. Firefox 57 has been in development for 6 months and is aimed at reclaiming losses to Google, whose browser has become the favorite of most users worldwide over the years.


Text

Hello all. I've been using KVM for a long time now. Every time I try to use virt-manager as a non-root user, I have to enter the sudo password. This isn't a problem in itself, but if you use virt-manager most of the time, it gets tedious. For KVM installation, refer to our guides below.

How to install KVM on RHEL/CentOS 8, Fedora, Arch Linux, CentOS, Ubuntu/Debian, SLES

I'll show you a simple way to use virt-manager as a non-root user, by creating a libvirt group and adding a standard user account to it. To use virt-manager as a non-root user, follow the steps below.

Step 1 – Create the Unix group to use

First check whether the group already exists; if not, create it.

sudo getent group | grep libvirt

Some distributions may be using libvirtd.

sudo getent group | grep libvirtd

If it doesn't exist, add it as a system group.

sudo groupadd --system libvirt

Step 2 – Add the user account to the libvirt group

Now that the group is available, add your user account to the group.

sudo usermod -a -G libvirt $(whoami)
newgrp libvirt

Verify that the user has been added to the libvirt group.

$ id $(whoami)
uid=1000(jmutai) gid=1000(jmutai) groups=1000(jmutai),998(wheel),992(kvm),988(storage),968(libvirt),108(vboxusers)

Step 3 – Edit the libvirtd configuration file to add the group

Open the file /etc/libvirt/libvirtd.conf for editing.

sudo vim /etc/libvirt/libvirtd.conf

Set the UNIX domain socket group ownership to libvirt (around line 85):

unix_sock_group = "libvirt"

Set the UNIX socket permissions for the R/W socket (around line 102):

unix_sock_rw_perms = "0770"

Restart the libvirt daemon after making the change.

sudo systemctl restart libvirtd.service

Check the service status.
$ systemctl status libvirtd.service
● libvirtd.service - Virtualization daemon
Loaded: loaded (/usr/lib/systemd/system/libvirtd.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-04-19 08:48:13 EAT; 1h 16min ago
Docs: man:libvirtd(8) https://libvirt.org
Main PID: 31709 (libvirtd)
Tasks: 26 (limit: 32768)
Memory: 64.7M
CGroup: /system.slice/libvirtd.service
├─ 754 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvirt_leases>
├─ 755 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvirt_leases>
├─ 777 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/docker-machines.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvir>
├─ 778 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/docker-machines.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvir>
├─25924 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/vagrant-libvirt.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvir>
├─25925 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/vagrant-libvirt.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvir>
├─25959 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/fed290.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvirt_leasesh>
├─25960 /usr/bin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/fed290.conf --leasefile-ro --dhcp-script=/usr/lib/libvirt/libvirt_leasesh>
└─31709 /usr/bin/libvirtd

Step 4 – Launch virt-manager

Start Virtual Machine Manager from the command line or your Start menu.

$ virt-manager

You should now be able to use virt-manager as a non-root user and create a VM without getting a permission error. If not, read through your libvirtd.conf file to find the relevant sections to modify.

Conclusion

We've covered how to use virt-manager as a non-root user in easy-to-follow steps. You may have to install the KVM virtualization package group to get tools including virt-manager.
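The membership check from Step 2 can also be scripted. The following is a minimal sketch, assuming a POSIX shell; the helper name in_group is made up for illustration and is not part of libvirt or any distribution tool.

```shell
# in_group USER GROUP: succeed if USER is a member of GROUP.
# (Hypothetical helper; it only wraps the standard `id -nG` output.)
in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx -- "$2"
}

if in_group "$(id -un)" libvirt; then
  echo "already in libvirt"
else
  echo "not in libvirt yet: run usermod, then log in again or use newgrp"
fi
```

Because the check only reads group data, it is safe to run before and after the usermod step.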


Text

Building a Docker environment on Alpine Linux

Preparation: what you need beforehand

Download VirtualBox: https://www.virtualbox.org/wiki/Downloads

Download Alpine Linux: https://alpinelinux.org/downloads/ (the VIRTUAL image is sufficient)

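1 Installing VirtualBox

Install VirtualBox

Configure the virtual machine

For a start, about 1 vCPU and 4 GB is plenty

With NAT, set the following (this can also be done after installation):

Virtual machine Settings > Network > Advanced > Port Forwarding

Host IP: 127.0.0.1

Host port: any number in 1024-65535

Guest IP: 10.0.2.15

Guest port: 22

(macOS) In System Preferences > Security, allow VirtualBox to run: reboot after granting permission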

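2 Installing Alpine Linux

Mount the Alpine Linux ISO image in the virtual machine's virtual optical drive

After booting from the ISO image, log in as root (no password)

Run setup-alpine: https://docs.alpinelinux.org/user-handbook/0.1a/Installing/setup_alpine.html

For the keyboard layout, there is no need to choose *-mac even on macOS (doing so garbles the characters)

Timezone: Asia/Tokyo

Install target: sda, sys

After the installation completes, unmount the ISO image from the virtual optical drive and reboot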

3 Initial Alpine Linux configuration

Log in as root

# apk update && apk add sudo

# adduser new_user

# vi /etc/group: add new_user to the wheel line (line 11)

# visudo: remove the leading # from the line %wheel ALL=(ALL) ALL

# echo 'PermitRootLogin no' >> /etc/ssh/sshd_config

# service sshd restart

# apk add virtualbox-guest-additions
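The /etc/group edit above appends the user to the fourth colon-separated field of the wheel line. Here is a minimal sketch of that change, run against a scratch file rather than the real /etc/group; the helper name add_user_to_group and the sample file contents are assumptions for illustration only.

```shell
# add_user_to_group FILE USER GROUP: print FILE with USER appended to
# GROUP's member list (fourth colon-separated field), unless already there.
# (Hypothetical helper, not an Alpine tool.)
add_user_to_group() {
  awk -F: -v OFS=: -v user="$2" -v group="$3" '
    $1 == group {
      present = 0
      n = split($4, members, ",")
      for (i = 1; i <= n; i++) if (members[i] == user) present = 1
      if (!present) $4 = ($4 == "" ? user : $4 "," user)
    }
    { print }
  ' "$1"
}

# Scratch copy with made-up contents; the real file is /etc/group.
scratch=$(mktemp)
printf '%s\n' 'root:x:0:root' 'wheel:x:10:root' > "$scratch"
add_user_to_group "$scratch" new_user wheel
```

On a real Alpine system, BusyBox's addgroup new_user wheel should make the same change without hand-editing the file.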

4 Installing Docker

# sed -i -e '4s/^# //' /etc/apk/repositories (removes the leading # from the line # http://dl-cdn.alpinelinux.org/alpine/v3.15/community): needed in order to install docker-compose

# apk update && apk add docker docker-compose

# rc-update add docker default

(4 Building a development environment with Docker)

# service docker start

# docker run --rm hello-world
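The sed command in this section uncomments line 4 by number, which only works if the community repository really is on line 4. As a sketch under stated assumptions, the same edit can be matched by content instead; the scratch file and its first two lines are made up, and only the v3.15 community URL comes from the notes above.

```shell
# Scratch stand-in for /etc/apk/repositories (contents are illustrative).
repo_file=$(mktemp)
cat > "$repo_file" <<'EOF'
#/media/cdrom/apks
http://dl-cdn.alpinelinux.org/alpine/v3.15/main
# http://dl-cdn.alpinelinux.org/alpine/v3.15/community
EOF

# Strip the leading "# " only from the line ending in /v3.15/community.
sed -e '\|/v3\.15/community$| s|^# *||' "$repo_file" > "$repo_file.new" &&
  mv "$repo_file.new" "$repo_file"

cat "$repo_file"
```

Writing to a temporary file and renaming it avoids relying on sed -i, whose syntax differs between implementations.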


Text

Introducing KVM Virtualization Setup Guide for Linux


The concept of virtualization has been around for a while now and has proved to be a resourceful and cost-effective technology. Operations teams and desktop users alike can spin up multiple virtual machines and run a wide selection of operating systems without the need to install each on a separate physical server. Virtual machines are created using a hypervisor. Two commonly used Hypervisors…


#linux add user to group sudo#linux add user to group wheel#linux commands in windows 10#linux find file#linux kernel 5.7


Text

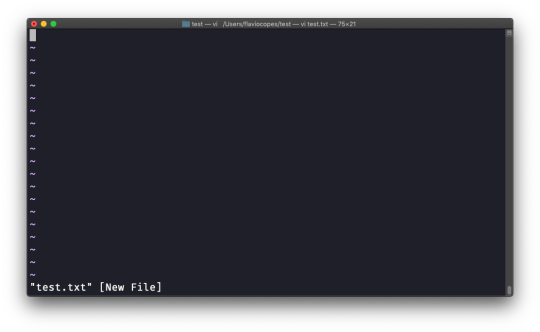

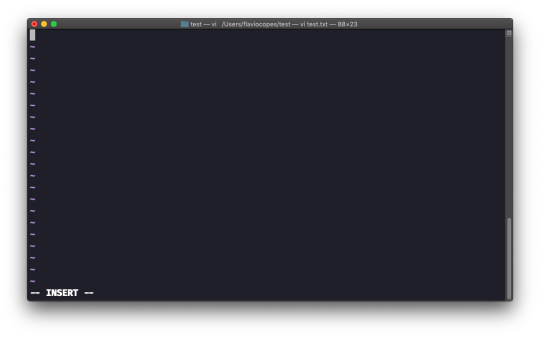

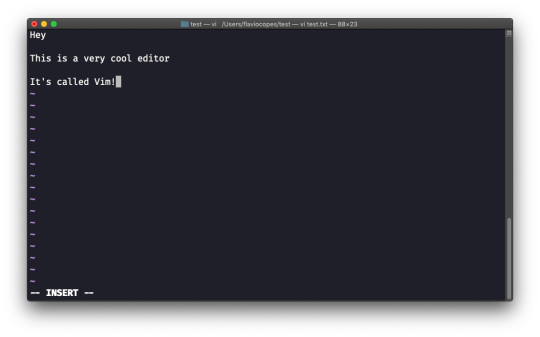

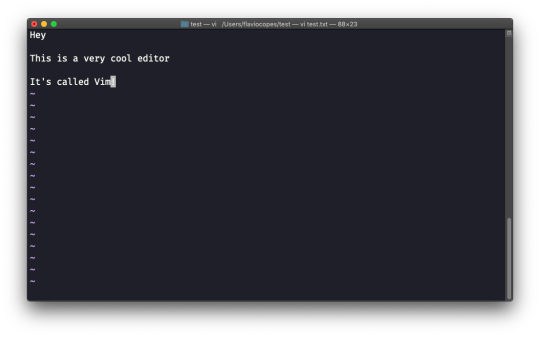

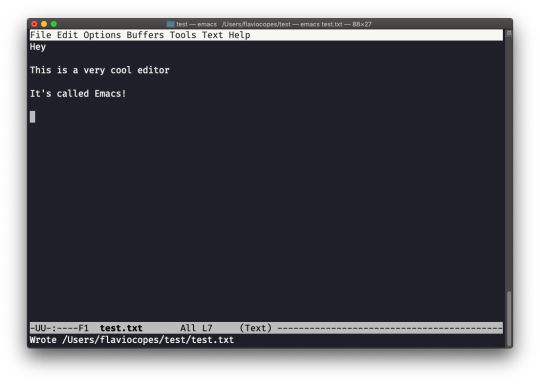

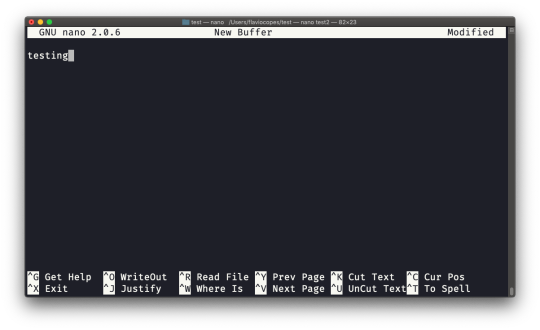

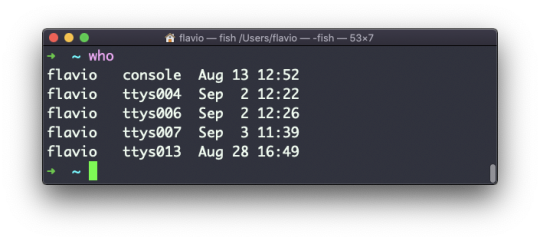

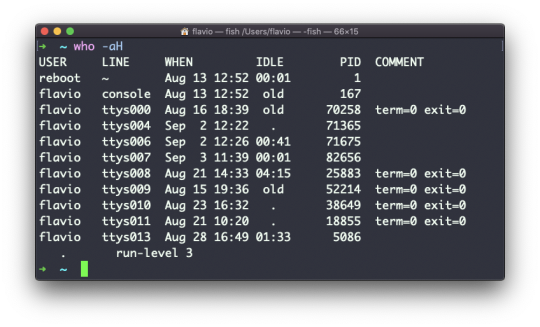

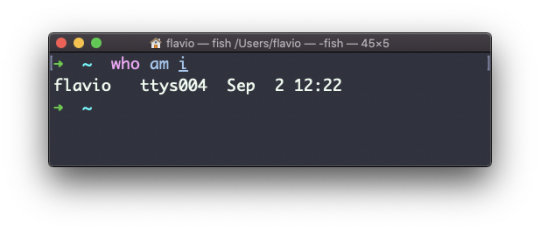

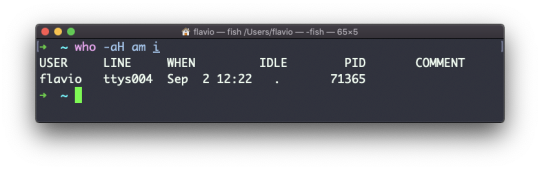

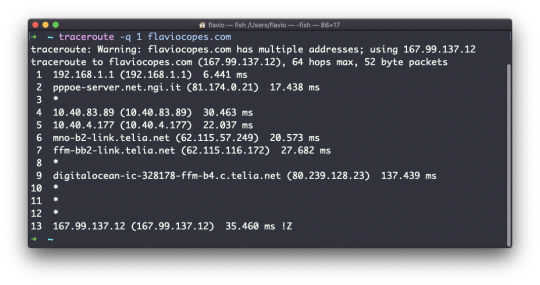

The Linux Commands Handbook

The Linux Commands Handbook follows the 80/20 rule: you'll learn 80% of a topic in around 20% of the time you spend studying it.

I find that this approach gives you a well-rounded overview.

This handbook does not try to cover everything under the sun related to Linux and its commands. It focuses on the small set of core commands that you will use 80% or 90% of the time, and tries to simplify the usage of the more complex ones.

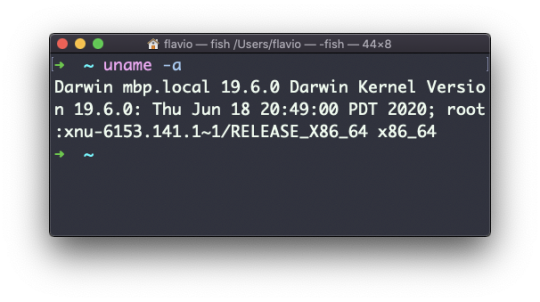

All these commands work on Linux, macOS, WSL, and anywhere you have a UNIX environment.

I hope the contents of this handbook will help you achieve what you want: getting comfortable with Linux.

Click here to download this handbook in PDF / ePUB / Mobi format.

Enjoy!

Summary

Introduction to Linux and shells

What is Linux?

Linux is an operating system, like macOS or Windows.

It is also the most popular Open Source operating system, and it gives you a lot of freedom.

It powers the vast majority of the servers that compose the Internet. It's the base upon which everything is built. But not just that. Android is based on (a modified version of) Linux.

The Linux "core" (called a kernel) was born in 1991 in Finland, and it has come a really long way from its humble beginnings. It went on to be the kernel of the GNU Operating System, creating the duo GNU/Linux.

There's one thing about Linux that corporations like Microsoft, Apple, and Google will never be able to offer: the freedom to do whatever you want with your computer.

They're actually going in the opposite direction, building walled gardens, especially on the mobile side.

Linux is the ultimate freedom.

It is developed by volunteers, some paid by companies that rely on it, some independently. But there's no single commercial company that can dictate what goes into Linux, or the project's priorities.

You can also use Linux as your day to day computer. I use macOS because I really enjoy the applications and design (and I also used to be an iOS and Mac apps developer). But before using macOS I used Linux as my main computer Operating System.

No one can dictate which apps you can run, or "call home" with apps that track you, your position, and more.

Linux is also special because there's not just "one Linux", like is the case with Windows or macOS. Instead, we have distributions.

A "distro" is made by a company or organization and packages the Linux core with additional programs and tooling.

For example you have Debian, Red Hat, and Ubuntu, probably the most popular distributions.

But many, many more exist. You can create your own distribution, too. But most likely you'll use a popular one that has lots of users and a community of people around it. This lets you do what you need to do without losing too much time reinventing the wheel and figuring out answers to common problems.

Some desktop computers and laptops ship with Linux preinstalled. Or you can install it on your Windows-based computer, or on a Mac.

But you don't need to disrupt your existing computer just to get an idea of how Linux works.

I don't have a Linux computer.

If you use a Mac, you just need to know that under the hood macOS is a UNIX Operating System. It shares a lot of the same ideas and software that a GNU/Linux system uses, because GNU/Linux is a free alternative to UNIX.

UNIX is an umbrella term that groups many operating systems used in big corporations and institutions, starting from the 1970s.

The macOS terminal gives you access to the same exact commands I'll describe in the rest of this handbook.

Microsoft has an official Windows Subsystem for Linux which you can (and should!) install on Windows. This will give you the ability to run Linux in a very easy way on your PC.

But the vast majority of the time you will run a Linux computer in the cloud via a VPS (Virtual Private Server) like DigitalOcean.

What is a shell?

A shell is a command interpreter that exposes an interface to the user to work with the underlying operating system.

It allows you to execute operations using text and commands, and it provides users advanced features like being able to create scripts.

This is important: shells let you perform things in a more optimized way than a GUI (Graphical User Interface) could ever possibly let you do. Command line tools can offer many different configuration options without being too complex to use.

There are many different kinds of shells. This post focuses on Unix shells, the ones that you will find commonly on Linux and macOS computers.

Many different kinds of shells were created for those systems over time, and a few of them dominate the space: Bash, Csh, Zsh, Fish and many more!

Most of today's popular shells trace their lineage back to the Bourne Shell, called sh. "Bourne" because its creator was Steve Bourne.

Bash means Bourne-again shell. sh was proprietary and not open source, and Bash was created in 1989 as a free alternative for the GNU project and the Free Software Foundation. Since projects had to pay to use the Bourne shell, Bash became very popular.

If you use a Mac, try opening your Mac terminal. By default it runs Zsh (or, pre-Catalina, Bash).

You can set up your system to run any kind of shell – for example I use the Fish shell.

Each single shell has its own unique features and advanced usage, but they all share a common functionality: they can let you execute programs, and they can be programmed.

In the rest of this handbook we'll see in detail the most common commands you will use.

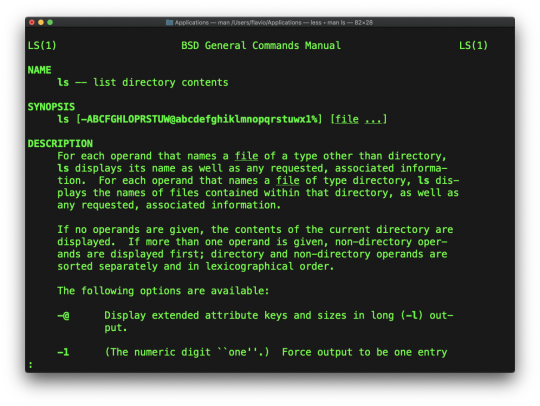

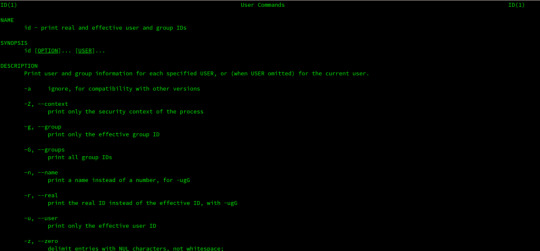

The man command

The first command I'll introduce will help you understand all the other commands.

Every time I don't know how to use a command, I type man <command> to get the manual:

This is a man (from _manual_) page. Man pages are an essential tool to learn as a developer. They contain so much information that sometimes it's almost too much. The above screenshot is just 1 of 14 screens of explanation for the ls command.

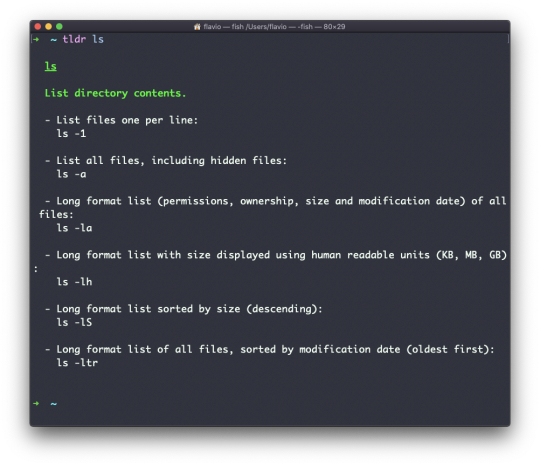

Most of the time when I need to learn a command quickly I use this site called tldr pages: https://tldr.sh. It's a command you can install, which you then run like this: tldr <command>. It gives you a very quick overview of a command, with some handy examples of common usage scenarios:

This is not a substitute for man, but a handy tool to avoid losing yourself in the huge amount of information present in a man page. Then you can use the man page to explore all the different options and parameters you can use on a command.

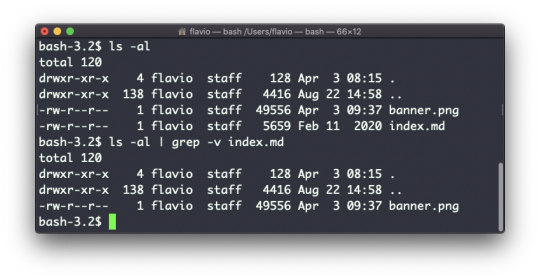

The Linux ls command

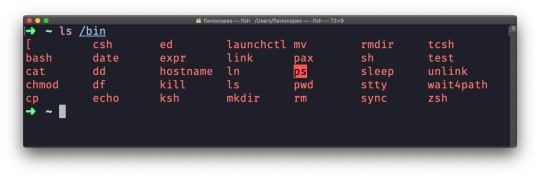

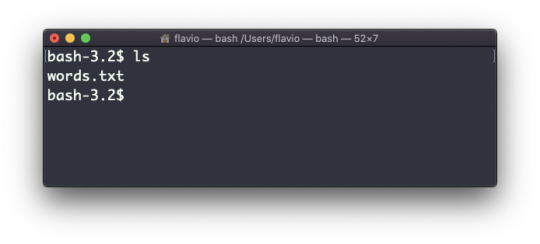

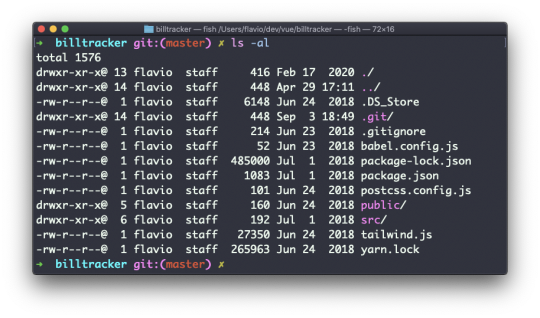

Inside a folder you can list all the files that the folder contains using the ls command:

ls

If you add a folder name or path, it will print that folder's contents:

ls /bin

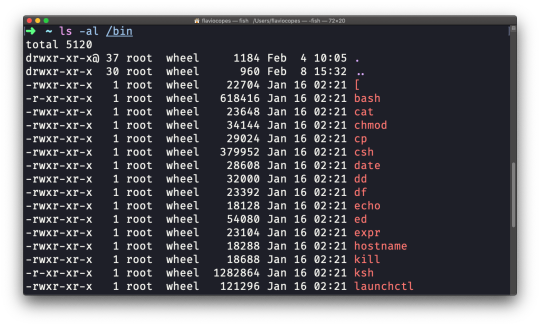

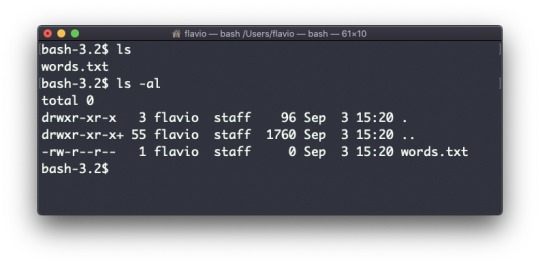

ls accepts a lot of options. One of my favorite combinations is -al. Try it:

ls -al /bin

Compared to the plain ls command, this returns much more information.

You have, from left to right:

the file permissions (and if your system supports ACLs, you get an ACL flag as well)

the number of links to that file

the owner of the file

the group of the file

the file size in bytes

the file's last modified datetime

the file name

This set of data is generated by the l option. The a option instead also shows the hidden files.

Hidden files are files that start with a dot (.).
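To see the effect of the a option without touching your real folders, here is a small sketch that works in a throwaway directory created with mktemp; the file names are made up.

```shell
demo=$(mktemp -d)
touch "$demo/visible.txt" "$demo/.hidden"

ls "$demo"       # lists only visible.txt
ls -a "$demo"    # also lists . and .. and .hidden
ls -al "$demo"   # same entries, with permissions, owner, size and date
```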

The cd command

Once you have a folder, you can move into it using the cd command. cd means change directory. You invoke it specifying a folder to move into. You can specify a folder name, or an entire path.

Example:

mkdir fruits
cd fruits

Now you are in the fruits folder.

You can use the .. special path to indicate the parent folder:

cd .. #back to the home folder

The # character indicates the start of the comment, which lasts for the entire line after it's found.

You can use it to form a path:

mkdir fruits
mkdir cars
cd fruits
cd ../cars

There is another special path indicator which is ., and indicates the current folder.

You can also use absolute paths, which start from the root folder /:

cd /etc

The pwd command

Whenever you feel lost in the filesystem, call the pwd command to know where you are:

pwd

It will print the current folder path.
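Putting cd, the special paths and pwd together, here is a short sketch that runs in a scratch directory (the folder names follow the examples above; the mktemp location is an assumption):

```shell
base=$(mktemp -d)
cd "$base"
mkdir fruits cars

cd fruits        # relative path
cd ../cars       # up to the parent, then into cars
pwd              # path ends in /cars

cd ..            # back to the scratch directory
cd ./fruits      # . is the current folder, so this is the same as cd fruits
pwd              # path ends in /fruits
```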

The mkdir command

You create folders using the mkdir command:

mkdir fruits

You can create multiple folders with one command:

mkdir dogs cars

You can also create multiple nested folders by adding the -p option:

mkdir -p fruits/apples

Options in UNIX commands commonly take this form. You add them right after the command name, and they change how the command behaves. You can often combine multiple options, too.

You can find which options a command supports by typing man <commandname>. Try now with man mkdir for example (press the q key to exit the man page). Man pages are the amazing built-in help for UNIX.
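A quick sketch of what -p changes, using a scratch directory so nothing in your home folder is affected:

```shell
demo=$(mktemp -d)

# Without -p the parent folder must already exist.
mkdir "$demo/fruits/apples" 2>/dev/null || echo "plain mkdir failed: fruits is missing"

# With -p the missing parents are created along the way.
mkdir -p "$demo/fruits/apples"

# Running it again is not an error when the folders already exist.
mkdir -p "$demo/fruits/apples" && echo "still fine"
```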

The rmdir command

Just as you can create a folder using mkdir, you can delete a folder using rmdir:

mkdir fruits
rmdir fruits

You can also delete multiple folders at once:

mkdir fruits cars
rmdir fruits cars

The folder you delete must be empty.

To delete folders with files in them, we'll use the more generic rm command which deletes files and folders, using the -rf option:

rm -rf fruits cars

Be careful as this command does not ask for confirmation and it will immediately remove anything you ask it to remove.

There is no trash bin when removing files from the command line, and recovering lost files can be hard.
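The difference between rmdir and rm can be tried safely in a scratch directory. This is only a sketch; the paths are made up.

```shell
demo=$(mktemp -d)
mkdir "$demo/fruits"
touch "$demo/fruits/apple"

# rmdir only removes empty folders, so this fails and leaves fruits alone.
rmdir "$demo/fruits" 2>/dev/null || echo "rmdir refused: fruits is not empty"

# rm -r removes the folder together with its contents.
rm -r "$demo/fruits"
[ -e "$demo/fruits" ] || echo "fruits is gone"
```

If you want a safety net, rm -ri asks for confirmation before each removal.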

The mv command

Once you have a file, you can move it around using the mv command. You specify the file current path, and its new path:

touch pear
mv pear new_pear

The pear file is now moved to new_pear. This is how you rename files and folders.

If the last parameter is a folder, the file located at the first parameter path is going to be moved into that folder. In this case, you can specify a list of files and they will all be moved in the folder path identified by the last parameter:

touch pear
touch apple
mkdir fruits
mv pear apple fruits #pear and apple moved to the fruits folder
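Both uses of mv, renaming and moving into a folder, can be checked in a scratch directory with this small sketch:

```shell
demo=$(mktemp -d)
cd "$demo"
touch pear apple

mv pear new_pear           # last argument is not a folder: rename
mkdir fruits
mv new_pear apple fruits   # last argument is a folder: move both into it

ls fruits                  # apple and new_pear
```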

The cp command

You can copy a file using the cp command:

touch apple
cp apple another_apple

To copy folders you need to add the -r option to recursively copy the whole folder contents:

mkdir fruits
cp -r fruits cars

The open command

The open command lets you open a file using this syntax (open ships with macOS; most Linux desktops provide xdg-open for the same purpose):

open <filename>

You can also open a directory, which on macOS opens the Finder app with the current directory open:

open <directory name>

I use it all the time to open the current directory:

open .

The special . symbol points to the current directory, as .. points to the parent directory

The same command can also be used to run an application:

open <application name>

The touch command

You can create an empty file using the touch command:

touch apple

If the file already exists, its content is left as it is and only the timestamp of the file is updated.
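A tiny sketch, using a temporary file, showing that touch on an existing file does not alter what is stored in it:

```shell
f=$(mktemp)
echo "keep me" > "$f"

touch "$f"     # only the modification time changes
cat "$f"       # still prints: keep me
```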

The find command

The find command can be used to find files or folders matching a particular search pattern. It searches recursively.

Let's learn how to use it by example.

Find all the files under the current tree that have the .js extension and print the relative path of each file that matches:

find . -name '*.js'

It's important to use quotes around special characters like * to avoid the shell interpreting them.

Find directories under the current tree matching the name "src":

find . -type d -name src

Use -type f to search only files, or -type l to only search symbolic links.

-name is case sensitive. use -iname to perform a case-insensitive search.

You can search under multiple root trees:

find folder1 folder2 -name filename.txt

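Find directories under the current tree matching the name "node_modules" or "public". The parentheses group the two name tests so that -type d applies to both:

find . -type d \( -name node_modules -or -name public \)

You can also exclude a path using -not -path. Paths reported by find . start with ./, so the pattern must start with it too:

find . -type f -name '*.md' -not -path './node_modules/*'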

You can search files that have more than 100 characters (bytes) in them:

find . -type f -size +100c

Search files bigger than 100KB but smaller than 1MB:

find . -type f -size +100k -size -1M

Search files edited more than 3 days ago:

find . -type f -mtime +3

Search files edited in the last 24 hours:

find . -type f -mtime -1

You can delete all the files matching a search by adding the -delete option. This deletes all the files edited in the last 24 hours:

find . -type f -mtime -1 -delete

You can execute a command on each result of the search. In this example we run cat to print the file content:

find . -type f -exec cat {} \;

Notice the terminating \;. {} is filled with the file name at execution time.
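Here is a sketch that exercises a few of these find expressions on a made-up folder tree. It uses the POSIX spellings -o and !, which GNU and BSD find treat the same as -or and -not; the folder and file names are assumptions.

```shell
tree=$(mktemp -d)
mkdir -p "$tree/src" "$tree/node_modules/pkg" "$tree/public"
touch "$tree/README.md" "$tree/node_modules/pkg/README.md" "$tree/public/index.js"
cd "$tree"

# Directories named node_modules or public; the parentheses make
# -type d apply to both names.
find . -type d \( -name node_modules -o -name public \)

# Markdown files, skipping everything under ./node_modules.
find . -type f -name '*.md' ! -path './node_modules/*'

# Run a command on every match: print the byte count of each .js file.
find . -type f -name '*.js' -exec wc -c {} \;
```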

The ln command

The ln command is part of the Linux file system commands.

It's used to create links. What is a link? It's like a pointer to another file, or a file that points to another file. You might be familiar with Windows shortcuts. They're similar.

We have 2 types of links: hard links and soft links.

Hard links

Hard links are rarely used. They have a few limitations: you can't link to directories, and you can't link to external filesystems (disks).

A hard link is created using the following syntax:

ln <original> <link>

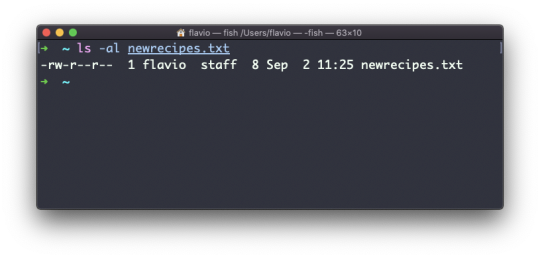

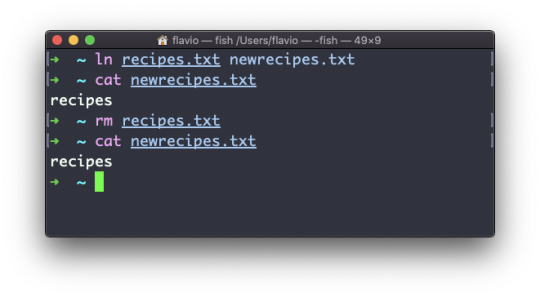

For example, say you have a file called recipes.txt. You can create a hard link to it using:

ln recipes.txt newrecipes.txt

The new hard link you created is indistinguishable from a regular file:

Now any time you edit any of those files, the content will be updated for both.

If you delete the original file, the link will still contain the original file content, because the content is only removed once no hard link points to it any more.

Soft links

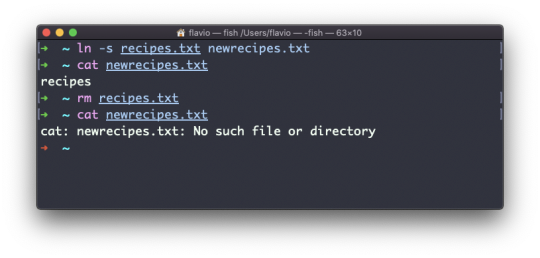

Soft links are different. They are more powerful as you can link to other filesystems and to directories. But keep in mind that when the original is removed, the link will be broken.

You create soft links using the -s option of ln:

ln -s <original> <link>

For example, say you have a file called recipes.txt. You can create a soft link to it using:

ln -s recipes.txt newrecipes.txt

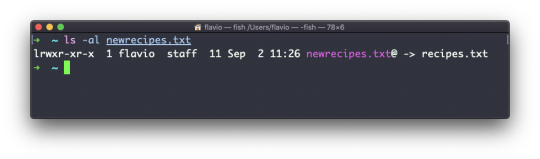

In this case you can see there's a special l flag when you list the file using ls -al. The file name has a @ at the end, and it's also colored differently if you have colors enabled:

Now if you delete the original file, the links will be broken, and the shell will tell you "No such file or directory" if you try to access it:
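The different behaviour of hard and soft links when the original is deleted can be reproduced with this sketch. It runs in a scratch directory, and the link names hard.txt and soft.txt are made up.

```shell
demo=$(mktemp -d)
cd "$demo"
echo "pasta" > recipes.txt

ln recipes.txt hard.txt      # hard link: a second name for the same content
ln -s recipes.txt soft.txt   # soft link: a pointer to the name recipes.txt

rm recipes.txt

cat hard.txt                                              # still prints pasta
cat soft.txt 2>/dev/null || echo "soft.txt is now a broken link"
```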

The gzip command

You can compress a file with the gzip command, which uses the DEFLATE algorithm (a combination of LZ77 and Huffman coding).

Here's the simplest usage:

gzip filename

This will compress the file, and append a .gz extension to it. The original file is deleted.

To prevent this, you can use the -c option and use output redirection to write the output to the filename.gz file:

gzip -c filename > filename.gz

The -c option specifies that the output will go to the standard output stream, leaving the original file intact.

Or you can use the -k option:

gzip -k filename

There are various levels of compression. The higher the compression, the longer it takes to compress. Levels range from 1 (fastest, worst compression) to 9 (slowest, best compression), and the default is 6.

You can choose a specific level with the -<NUMBER> option:

gzip -1 filename

You can compress multiple files by listing them:

gzip filename1 filename2

You can compress all the files in a directory, recursively, using the -r option:

gzip -r a_folder

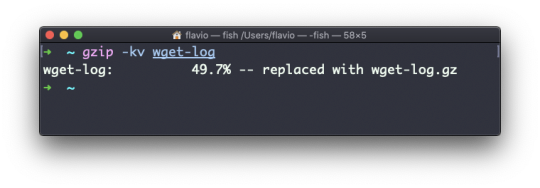

The -v option prints the compression percentage information. Here's an example of it being used along with the -k (keep) option:

gzip can also be used to decompress a file, using the -d option:

gzip -d filename.gz

The gunzip command

The gunzip command is basically equivalent to the gzip command, except the -d option is always enabled by default.

The command can be invoked in this way:

gunzip filename.gz

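This will decompress the archive and remove the .gz extension, putting the result in the filename file. If that file already exists, gunzip will not silently replace it: depending on the implementation it asks for confirmation or skips the file, unless you pass -f to force the overwrite.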

You can extract to a different filename using output redirection using the -c option:

gunzip -c filename.gz > anotherfilename
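A round trip through gzip -c and gunzip -c, sketched in a scratch directory with made-up file names, shows that the original stays in place and the decompressed copy is identical:

```shell
demo=$(mktemp -d)
cd "$demo"
printf 'hello hello hello\n' > notes.txt

gzip -c notes.txt > notes.txt.gz        # compress, keep notes.txt
gunzip -c notes.txt.gz > restored.txt   # decompress to a different name

cmp notes.txt restored.txt && echo "round trip ok"
```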

The tar command

The tar command is used to create an archive, grouping multiple files in a single file.

Its name comes from the past and means tape archive (back when archives were stored on tapes).

This command creates an archive named archive.tar with the content of file1 and file2:

tar -cf archive.tar file1 file2

The c option stands for create. The f option tells tar which file to write the archive to.

To extract files from an archive in the current folder, use:

tar -xf archive.tar

The x option stands for extract.

And to extract them to a specific directory, use:

tar -xf archive.tar -C directory

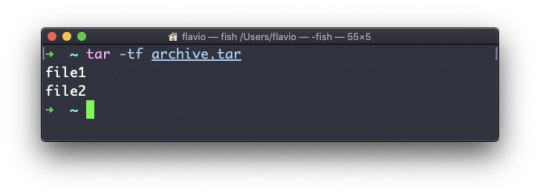

You can also just list the files contained in an archive, using the t option:

tar -tf archive.tar

tar is often used to create a compressed archive, gzipping the archive.

This is done using the z option:

tar -czf archive.tar.gz file1 file2

This is just like creating a tar archive, and then running gzip on it.

To unarchive a gzipped archive, you can use gunzip, or gzip -d, and then unarchive it. But tar -xf will recognize it's a gzipped archive, and do it for you:

tar -xf archive.tar.gz
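The create, list and extract steps fit together as in this sketch, which works in a scratch directory (the out folder name is an assumption):

```shell
demo=$(mktemp -d)
cd "$demo"
echo one > file1
echo two > file2

tar -czf archive.tar.gz file1 file2   # create a gzipped archive
tar -tzf archive.tar.gz               # list its contents: file1, file2

mkdir out
tar -xzf archive.tar.gz -C out        # extract into the out folder
cat out/file2                         # prints: two
```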

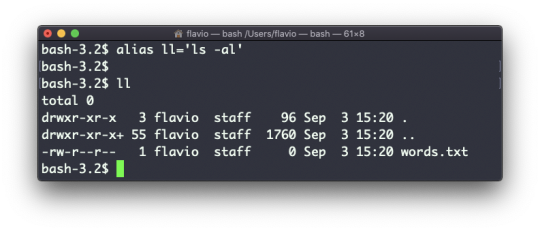

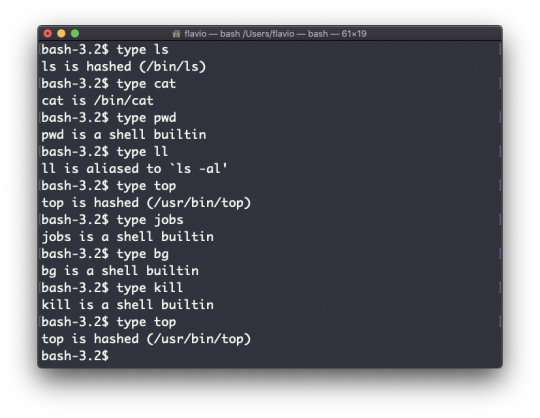

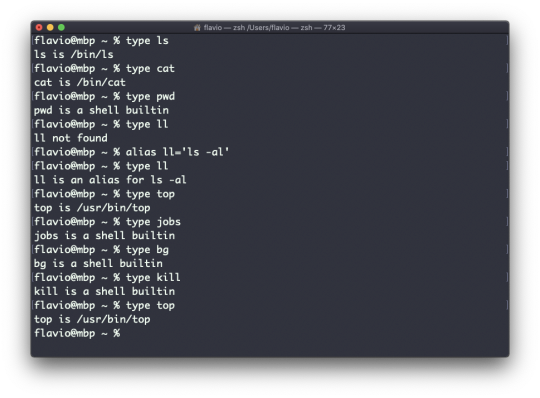

The alias command

It's common to always run a program with a set of options that you like using.

For example, take the ls command. By default it prints very little information:

But if you use the -al option it will print something more useful, including the file modification date, the size, the owner, and the permissions. It will also list hidden files (files starting with a .):

You can create a new command, for example I like to call it ll, that is an alias to ls -al.

You do it like this:

alias ll='ls -al'

Once you do, you can call ll just like it was a regular UNIX command:

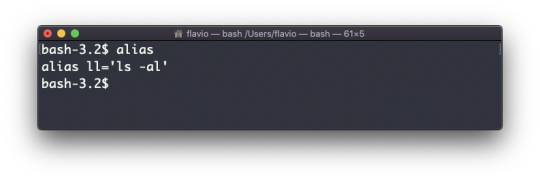

Now calling alias without any option will list the aliases defined:

The alias will work until the terminal session is closed.

To make it permanent, you need to add it to the shell configuration. This could be ~/.bashrc or ~/.profile or ~/.bash_profile if you use the Bash shell, depending on the use case.

Be careful with quotes if you have variables in the command: if you use double quotes, the variable is resolved at definition time. If you use single quotes, it's resolved at invocation time. Those 2 are different:

alias lsthis="ls $PWD"
alias lscurrent='ls $PWD'

$PWD refers to the current folder the shell is in. If you now navigate away to a new folder, lscurrent lists the files in the new folder, whereas lsthis still lists the files in the folder where you were when you defined the alias.
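You can inspect what each alias actually stores by asking alias to print it. This sketch defines both aliases inside a scratch directory; the variable name start is only there for illustration.

```shell
cd "$(mktemp -d)"
start=$PWD

alias lsthis="ls $PWD"      # $PWD is expanded now, while defining
alias lscurrent='ls $PWD'   # $PWD is kept as text, expanded on each use

alias lsthis                # shows the scratch directory path baked in
alias lscurrent             # still shows the literal $PWD
```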

The cat command

Similar to tail in some ways, we have cat. Except cat can also add content to a file, and this makes it super powerful.

In its simplest usage, cat prints a file's content to the standard output:

cat file

You can print the content of multiple files:

cat file1 file2

and using the output redirection operator > you can concatenate the content of multiple files into a new file:

cat file1 file2 > file3

Using >> you can append the content of multiple files to an existing file, creating it if it does not exist:

cat file1 file2 >> file3

When you're looking at source code files it's helpful to see the line numbers. You can have cat print them using the -n option:

cat -n file1

You can number only the non-blank lines using -b, or squeeze runs of multiple empty lines into a single one using -s.

cat is often used in combination with the pipe operator | to feed a file's content as input to another command: cat file1 | anothercommand.
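The difference between > and >> is easy to check with a few one-line files. This is a sketch in a scratch directory, with made-up contents:

```shell
demo=$(mktemp -d)
cd "$demo"
printf 'a\n' > file1
printf 'b\n' > file2

cat file1 file2 > file3   # file3 is replaced with: a, b
cat file1 >> file3        # file3 grows to: a, b, a

cat -n file3              # the same three lines, numbered
```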

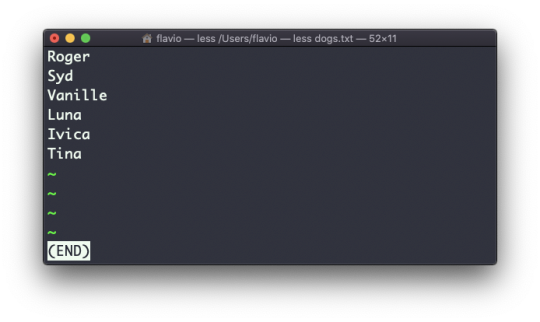

The less command

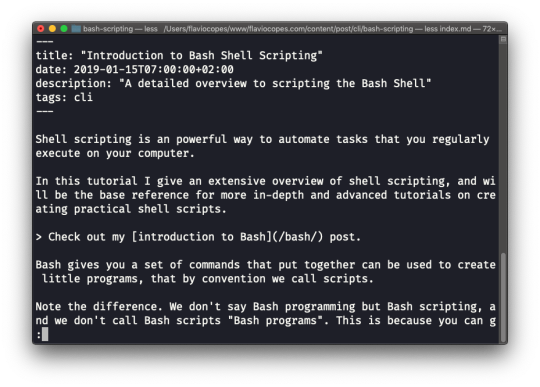

The less command is one I use a lot. It shows you the content stored inside a file, in a nice and interactive UI.

Usage: less <filename>.

Once you are inside a less session, you can quit by pressing q.

You can navigate the file contents using the up and down keys, or using the space bar and b to navigate page by page. You can also jump to the end of the file pressing G and jump back to the start by pressing g.

You can search contents inside the file by pressing / and typing a word to search. This searches forward. You can search backwards using the ? symbol and typing a word.

This command just visualises the file's content. You can directly open an editor by pressing v. It will use the system editor, which in most cases is vim.

Pressing the F key enters follow mode, or watch mode. When the file is changed by someone else, like from another program, you get to see the changes live.

This doesn't happen by default, and you only see the file version at the time you opened it. You need to press ctrl-C to quit this mode. In this case the behaviour is similar to running the tail -f <filename> command.

You can open multiple files, and navigate through them using :n (to go to the next file) and :p (to go to the previous).

The tail command

The best use case of tail in my opinion is when called with the -f option. It opens the file at the end, and watches for file changes.

Any time there is new content in the file, it is printed in the window. This is great for watching log files, for example:

tail -f /var/log/system.log

To exit, press ctrl-C.

You can print the last 10 lines in a file:

tail -n 10 <filename>

You can print the whole file content starting from a specific line using + before the line number:

tail -n +10 <filename>

tail can do much more and as always my advice is to check man tail.
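The two forms of -n are easy to mix up, so here is a sketch on a made-up five-line file: a plain number counts from the end, while a number prefixed with + is the line to start from.

```shell
log=$(mktemp)
printf '%s\n' line1 line2 line3 line4 line5 > "$log"

tail -n 2 "$log"    # last two lines: line4, line5
tail -n +4 "$log"   # from line 4 to the end: line4, line5
```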

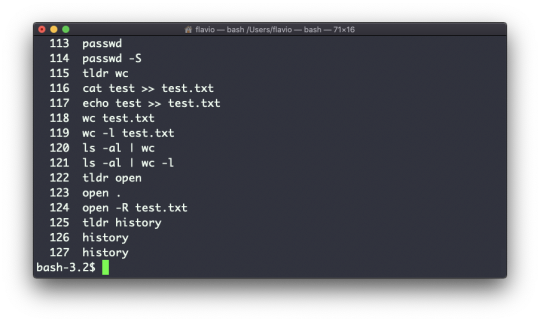

The wc command

The wc command gives us useful information about a file or input it receives via pipes.

echo test >> test.txt
wc test.txt
1 1 5 test.txt

Example via pipes, we can count the output of running the ls -al command:

ls -al | wc
6 47 284

The first column returned is the number of lines. The second is the number of words. The third is the number of bytes.

We can tell it to just count the lines:

wc -l test.txt

or just the words:

wc -w test.txt

or just the bytes:

wc -c test.txt

Bytes in ASCII charsets equate to characters. But with non-ASCII charsets, the number of characters might differ because some characters might take multiple bytes (for example this happens in Unicode).

In this case the -m flag will help you get the correct value:

wc -m test.txt

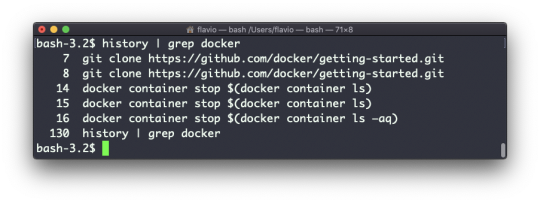

The grep command

The grep command is a very useful tool. When you master it, it will help you tremendously in your day to day coding.

If you're wondering, grep stands for global regular expression print.

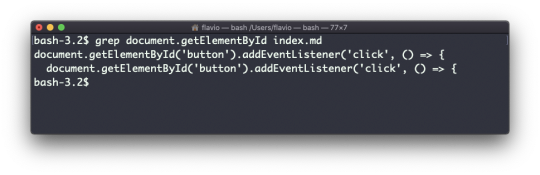

You can use grep to search in files, or combine it with pipes to filter the output of another command.

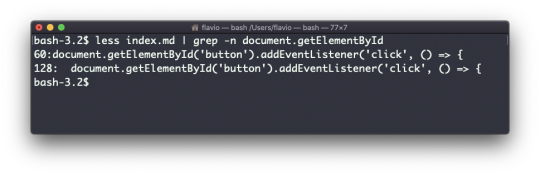

For example here's how we can find the occurrences of the document.getElementById line in the index.md file:

grep document.getElementById index.md

Using the -n option it will show the line numbers:

grep -n document.getElementById index.md

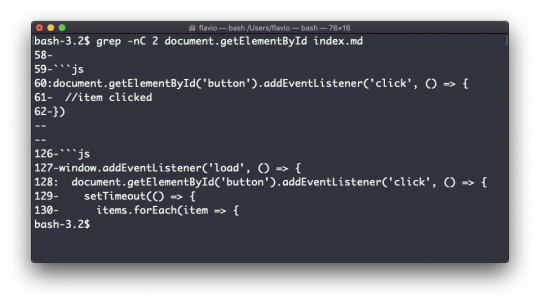

One very useful thing is to tell grep to print 2 lines before and 2 lines after the matched line to give you more context. That's done using the -C option, which accepts a number of lines:

grep -nC 2 document.getElementById index.md

Search is case sensitive by default. Use the -i flag to make it insensitive.

As mentioned, you can use grep to filter the output of another command. We can replicate the same functionality as above using:

less index.md | grep -n document.getElementById

The search string can be a regular expression, and this makes grep very powerful.

Another thing you might find very useful is to invert the result, excluding the lines that match a particular string, using the -v option:

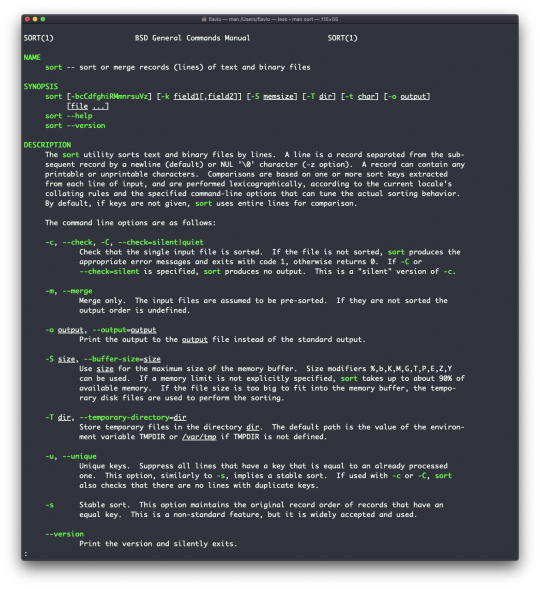
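The main grep options mentioned here can be tried on a small sample file. This is a sketch; the file content is made up, apart from the document.getElementById string used above.

```shell
f=$(mktemp)
printf '%s\n' 'first' 'const el = document.getElementById("app")' 'last' > "$f"

grep -n 'getElementById' "$f"     # matching line, prefixed with its number (2)
grep -i 'GETELEMENTBYID' "$f"     # same match, ignoring case
grep -v 'getElementById' "$f"     # inverted: prints first and last
grep -nC 1 'getElementById' "$f"  # the match with one line of context each side
```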

The sort command

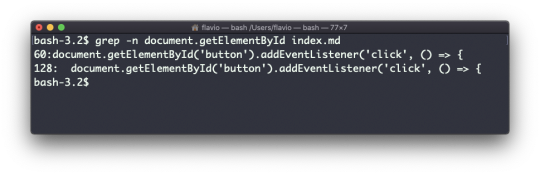

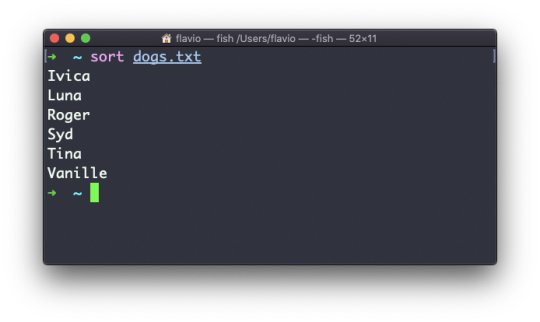

Suppose you have a text file which contains the names of dogs:

This list is unordered.

The sort command helps you sort them by name:

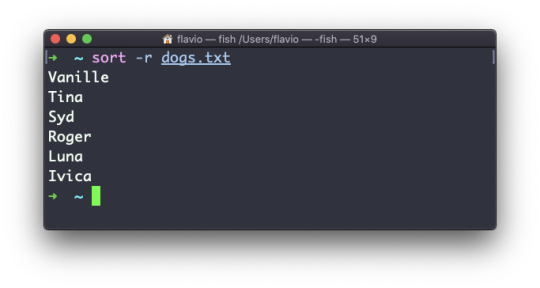

Use the -r option to reverse the order:

Sorting by default is case sensitive, and alphabetic. Use the --ignore-case option to sort case insensitive, and the -n option to sort using a numeric order.

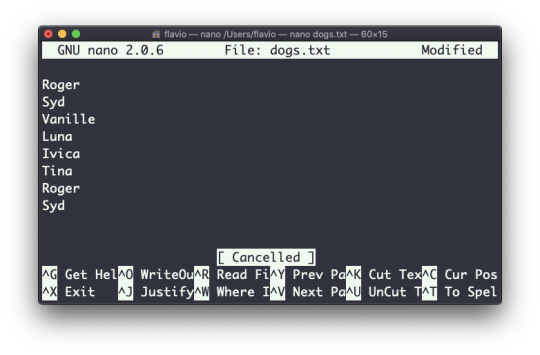

If the file contains duplicate lines:

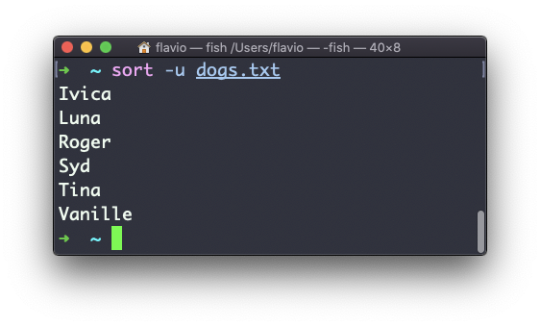

You can use the -u option to remove them:

sort does not just work on files, as many UNIX commands do – it also works with pipes. So you can use it on the output of another command. For example you can order the files returned by ls with:

ls | sort

sort is very powerful and has lots more options, which you can explore by calling man sort.

The uniq command

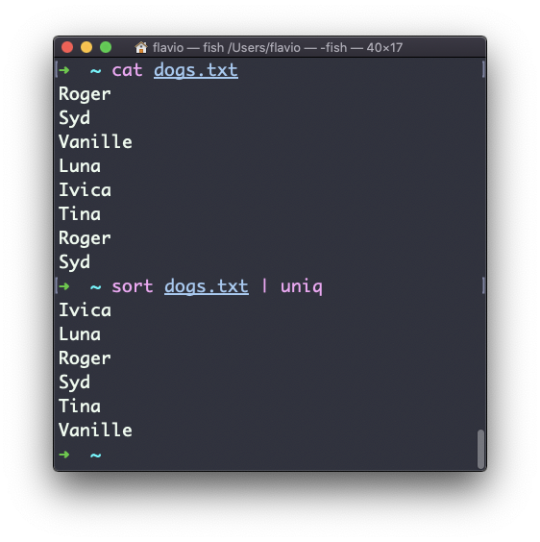

uniq is a command that helps you filter out repeated lines of text.

You can get those lines from a file, or using pipes from the output of another command:

uniq dogs.txt
ls | uniq

You need to consider this key thing: uniq will only detect adjacent duplicate lines.

This implies that you will most likely use it along with sort:

sort dogs.txt | uniq

The sort command has its own way to remove duplicates with the -u (unique) option. But uniq has more power.

By default it removes duplicate lines:

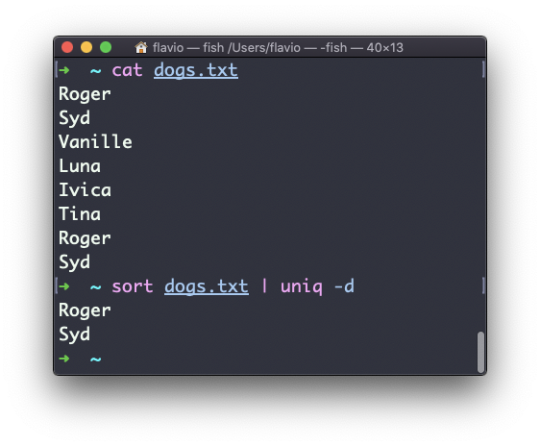

You can tell it to only display duplicate lines, for example, with the -d option:

sort dogs.txt | uniq -d

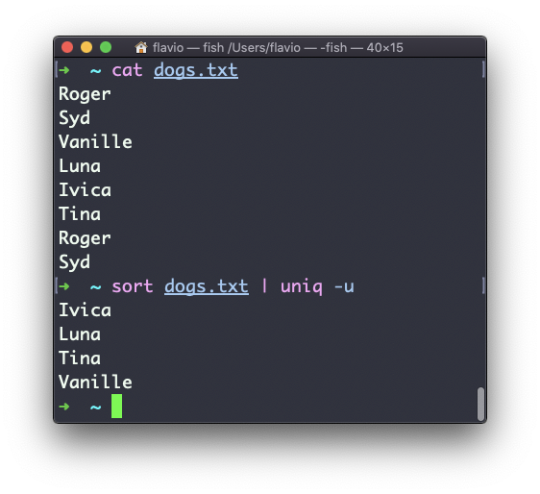

You can use the -u option to only display non-duplicate lines:

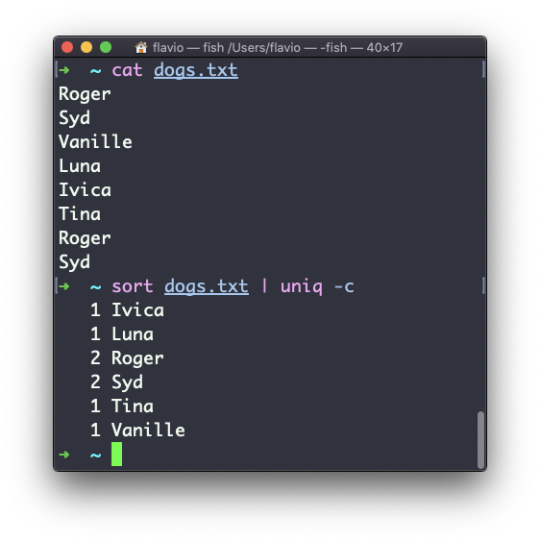

You can count the occurrences of each line with the -c option:

Use the special combination:

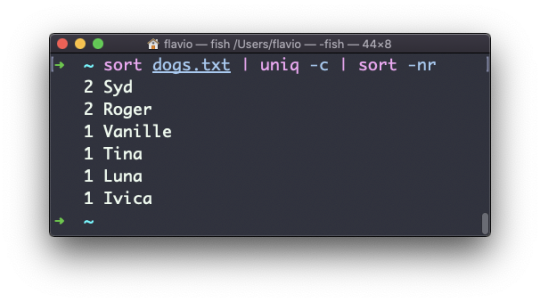

sort dogs.txt | uniq -c | sort -nr

to then sort those lines by most frequent:
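The following sketch shows why uniq is usually paired with sort, and how the counting pipeline behaves. The contents of dogs.txt are invented for the example, and awk is used here only to strip the padding that uniq -c puts in front of each count.

```shell
# Hypothetical file where the duplicates are never next to each other
tmp=$(mktemp -d)
printf '%s\n' Syd Roger Syd Vanille Roger Syd > "$tmp/dogs.txt"

# uniq only collapses ADJACENT duplicates, so all 6 lines survive here
unsorted_count=$(uniq "$tmp/dogs.txt" | wc -l | tr -d ' ')

# Sorting first puts the duplicates next to each other
sorted_count=$(sort "$tmp/dogs.txt" | uniq | wc -l | tr -d ' ')

# Count each name, order by count (highest first), keep the top entry
most_frequent=$(sort "$tmp/dogs.txt" | uniq -c | sort -nr | head -n 1 | awk '{print $1, $2}')

echo "$unsorted_count"
echo "$sorted_count"
echo "$most_frequent"

rm -rf "$tmp"
```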

The diff command

diff is a handy command. Suppose you have 2 files, which contain almost the same information, but you can't find the difference between the two.

diff will process the files and will tell you what's the difference.

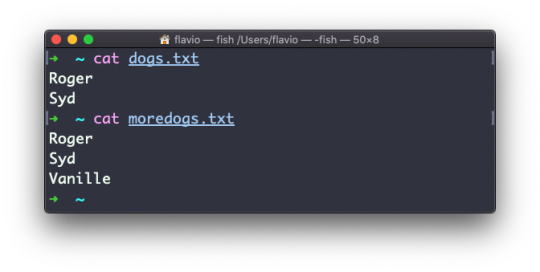

Suppose you have 2 files: dogs.txt and moredogs.txt. The difference is that moredogs.txt contains one more dog name:

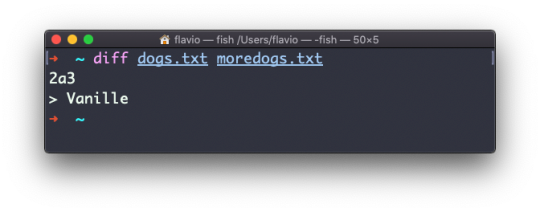

diff dogs.txt moredogs.txt will tell you the second file has one more line, line 3 with the line Vanille:

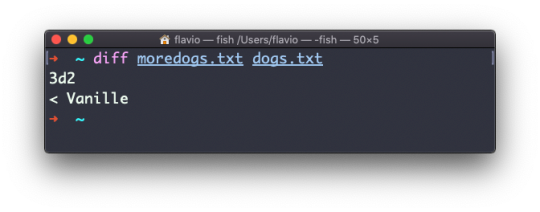

If you invert the order of the files, it will tell you that the second file is missing line 3, whose content is Vanille:

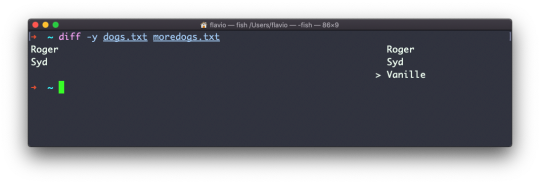

Using the -y option will compare the 2 files line by line:

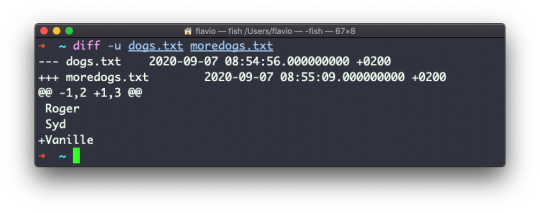

The -u option however will be more familiar to you, because that's the same used by the Git version control system to display differences between versions:

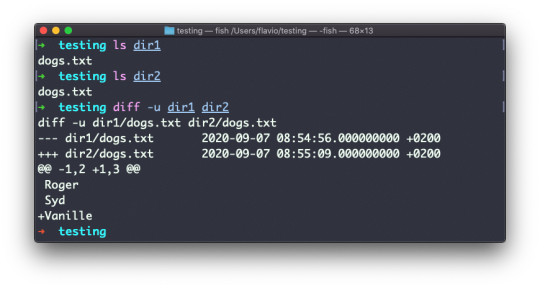

Comparing directories works in the same way. You must use the -r option to compare recursively (going into subdirectories):

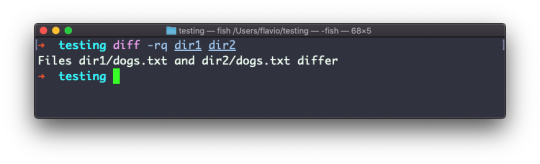

In case you're interested in which files differ, rather than the content, use the -r and -q options:

There are many more options you can explore in the man page by running man diff:

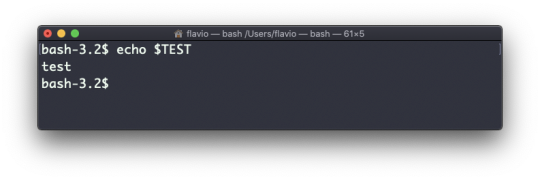

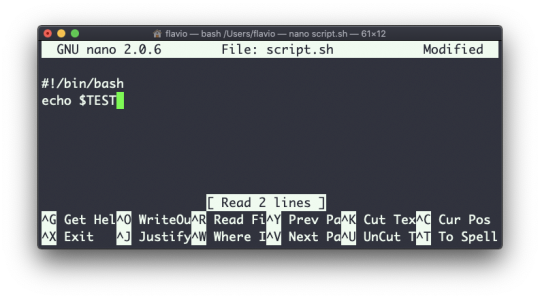

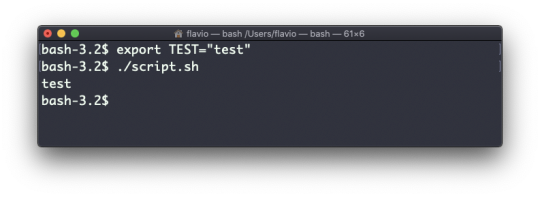
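As a rough sketch of the dogs.txt and moredogs.txt comparison described above, this recreates the two files in a temporary directory (the names Roger and Syd are assumed; only Vanille on line 3 comes from the text). It also relies on the fact that diff exits with status 0 when the files are identical and 1 when they differ, which is handy in scripts.

```shell
tmp=$(mktemp -d)
printf '%s\n' Roger Syd > "$tmp/dogs.txt"
printf '%s\n' Roger Syd Vanille > "$tmp/moredogs.txt"

# Exit status: 0 means identical, 1 means the files differ
same_status=0
diff "$tmp/dogs.txt" "$tmp/dogs.txt" > /dev/null || same_status=$?
diff_status=0
diff "$tmp/dogs.txt" "$tmp/moredogs.txt" > /dev/null || diff_status=$?

# In the unified (-u) format, lines only present in the second file start with "+".
# The pattern '^+[^+]' skips the "+++" header line.
added=$(diff -u "$tmp/dogs.txt" "$tmp/moredogs.txt" | grep '^+[^+]')

echo "$same_status $diff_status"
echo "$added"

rm -rf "$tmp"
```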

The echo command

The echo command does one simple job: it prints to the output the argument passed to it.

This example:

echo "hello"

will print hello to the terminal.

We can append the output to a file:

echo "hello" >> output.txt

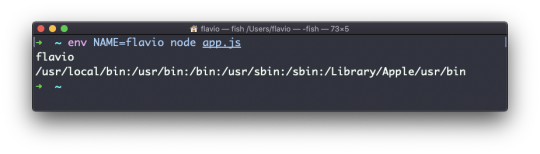

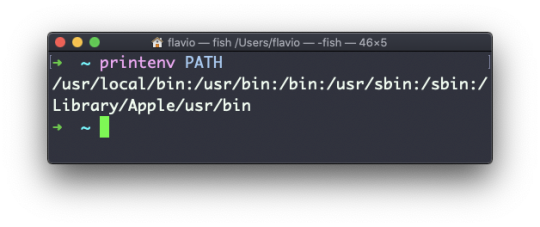

We can interpolate environment variables:

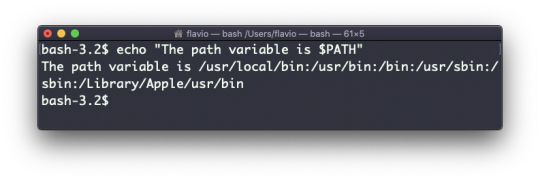

echo "The path variable is $PATH"

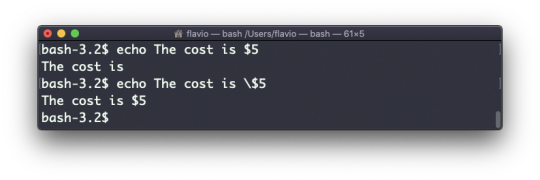

Beware that special characters need to be escaped with a backslash \. $ for example:

This is just the start. We can do some nice things when it comes to interacting with the shell features.

We can echo the files in the current folder:

echo *

We can echo the files in the current folder that start with the letter o:

echo o*

Any valid Bash (or any shell you are using) command and feature can be used here.

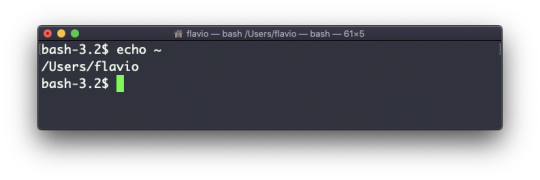

You can print your home folder path:

echo ~

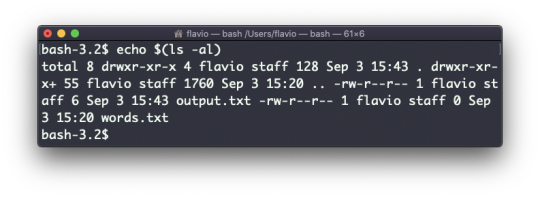

You can also execute commands, and print the result to the standard output (or to file, as you saw):

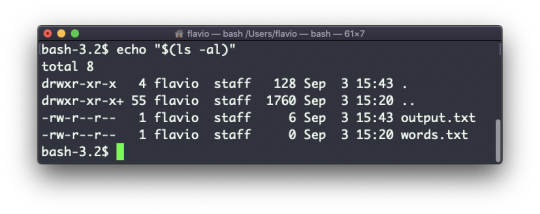

echo $(ls -al)

Note that whitespace is not preserved by default. You need to wrap the command in double quotes to do so:

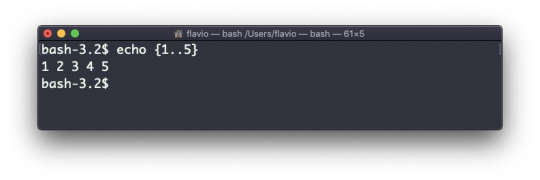

You can generate a list of strings, for example ranges:

echo {1..5}
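The quoting and escaping rules mentioned above are easy to get wrong, so here is a small sketch of them. The printf call just stands in for any command that prints more than one line.

```shell
# Unquoted: the shell splits the result on whitespace, so the newline becomes a space
flattened=$(echo $(printf 'one\ntwo\n'))

# Quoted: the result is passed to echo as a single argument, newline intact
preserved=$(echo "$(printf 'one\ntwo\n')")

# Escaping $ with a backslash prints the variable name instead of its value
literal=$(echo "\$HOME is $HOME")

echo "$flattened"
echo "$preserved"
echo "$literal"
```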

The chown command

Every file/directory in an Operating System like Linux or macOS (and every UNIX system in general) has an owner.

The owner of a file can do everything with it. It can decide the fate of that file.

The owner (and the root user) can change the owner to another user, too, using the chown command:

chown <owner> <file>

Like this:

chown flavio test.txt

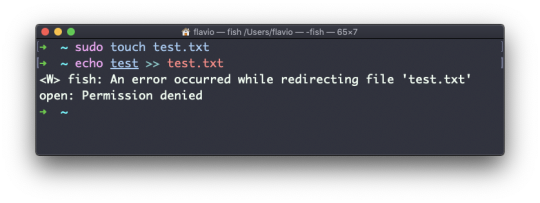

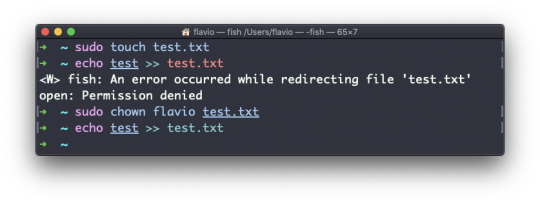

For example if you have a file that's owned by root, you can't write to it as another user:

You can use chown to transfer the ownership to you:

It's rather common to need to change the ownership of a directory, and recursively all the files contained, plus all the subdirectories and the files contained in them, too.

You can do so using the -R flag:

chown -R <owner> <file>

Files/directories don't just have an owner, they also have a group. Through this command you can change that simultaneously while you change the owner:

chown <owner>:<group> <file>

Example:

chown flavio:users test.txt

You can also just change the group of a file using the chgrp command:

chgrp <group> <filename>
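Here is a minimal sketch of the owner:group form that runs without root, assuming you apply it to a file you already own and use your own user and primary group. It uses numeric IDs from the id command rather than names, and the file test.txt lives in a temporary directory created for the example.

```shell
tmp=$(mktemp -d)
touch "$tmp/test.txt"

# Numeric IDs of the current user and of their primary group
uid=$(id -u)
gid=$(id -g)

# Set owner and group in one call. No root needed here, because
# you already own the file and belong to that group.
chown "$uid:$gid" "$tmp/test.txt"

# ls -n prints the numeric owner and group in columns 3 and 4
owner_and_group=$(ls -n "$tmp/test.txt" | awk '{print $3 ":" $4}')
echo "$owner_and_group"

rm -rf "$tmp"
```

Handing a file over to a different user is where you'd typically need root (for example through sudo).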

The chmod command

Every file in the Linux / macOS Operating Systems (and UNIX systems in general) has 3 permissions: read, write, and execute.

Go into a folder, and run the ls -al command.

The weird strings you see on each file line, like drwxr-xr-x, define the permissions of the file or folder.

Let's dissect it.

The first letter indicates the type of file:

- means it's a normal file

d means it's a directory

l means it's a link

Then you have 3 sets of values:

The first set represents the permissions of the owner of the file

The second set represents the permissions of the members of the group the file is associated to

The third set represents the permissions of everyone else

Those sets are composed of 3 values. rwx means that specific persona has read, write and execution access. Any permission that is missing is replaced with a -, which lets you form various combinations of values and relative permissions: rw-, r--, r-x, and so on.

You can change the permissions given to a file using the chmod command.

chmod can be used in 2 ways. The first is using symbolic arguments, the second is using numeric arguments. Let's start with symbols first, which is more intuitive.

You type chmod followed by a space, and a letter:

a stands for all

u stands for user

g stands for group

o stands for others

Then you type either + or - to add a permission, or to remove it. Then you enter one or more permission symbols (r, w, x).

All followed by the file or folder name.

Here are some examples:

chmod a+r filename #everyone can now read
chmod a+rw filename #everyone can now read and write
chmod o-rwx filename #others (not the owner, not in the same group of the file) cannot read, write or execute the file

You can apply the same permissions to multiple personas by adding multiple letters before the +/-:

chmod og-r filename #other and group can't read any more

In case you are editing a folder, you can apply the permissions to every file contained in that folder using the -R (recursive) flag.

Numeric arguments are faster but I find them hard to remember when you are not using them day to day. You use a digit that represents the permissions of the persona. This number value can be a maximum of 7, and it's calculated in this way:

1 if has execution permission

2 if has write permission

4 if has read permission

This gives us 8 combinations:

0 no permissions

1 can execute

2 can write

3 can write, execute

4 can read

5 can read, execute

6 can read, write

7 can read, write and execute

We use them in groups of 3, to set the permissions of the owner, the group and everyone else all at once:

chmod 777 filename
chmod 755 filename
chmod 644 filename
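To see how the digits are built, this sketch adds up the values for each persona and then reaches the same result with symbolic arguments. The file script.sh is a placeholder created in a temporary directory, and cut is used only to keep the 10 permission characters from the ls -l output.

```shell
tmp=$(mktemp -d)
touch "$tmp/script.sh"

# Each digit is the sum of read (4), write (2) and execute (1)
owner=$((4 + 2 + 1))   # rwx
group=$((4 + 1))       # r-x
others=$((4 + 1))      # r-x

# Numeric form: this is the same as chmod 755
chmod "$owner$group$others" "$tmp/script.sh"
numeric_result=$(ls -l "$tmp/script.sh" | cut -c1-10)

# Symbolic form: start from 644, then add execute for everyone
chmod 644 "$tmp/script.sh"
chmod a+x "$tmp/script.sh"
symbolic_result=$(ls -l "$tmp/script.sh" | cut -c1-10)

echo "$numeric_result"
echo "$symbolic_result"

rm -rf "$tmp"
```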

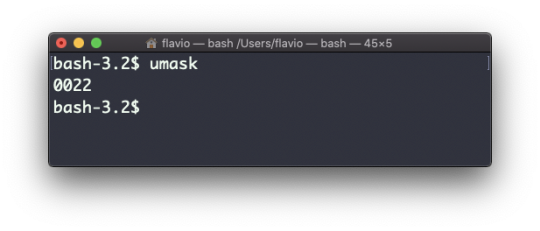

The umask command

When you create a file, you don't have to decide permissions up front. Permissions have defaults.

Those defaults can be controlled and modified using the umask command.

Typing umask with no arguments will show you the current umask, in this case 0022:

What does 0022 mean? That's an octal value that represents the permissions.

Another common value is 0002.

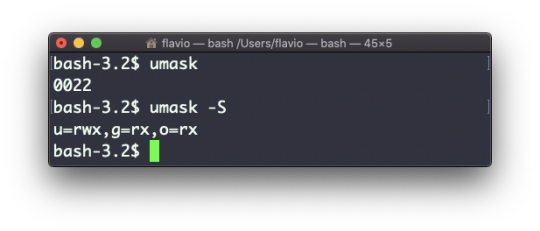

Use umask -S to see a human-readable notation:

In this case, the user (u), owner of the file, has read, write and execution permissions on files.

Other users belonging to the same group (g) have read and execution permission, same as all the other users (o).

In the numeric notation, we typically change the last 3 digits.

Here's a list that gives a meaning to the number:

0 read, write, execute

1 read and write

2 read and execute

3 read only

4 write and execute

5 write only

6 execute only

7 no permissions

Note that this numeric notation differs from the one we use in chmod.

We can set a new value for the mask setting the value in numeric format:

umask 002

or you can change a specific role's permission:

umask g+r

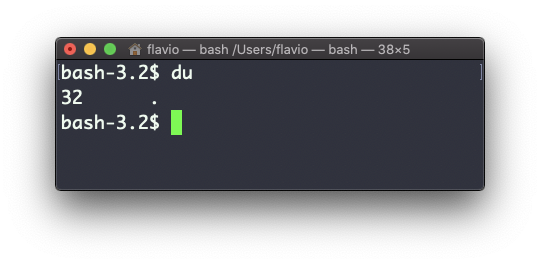
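A short sketch of what a 022 mask does in practice: new files start from 666 and new directories from 777, and the bits set in the mask are removed from that. The file and directory names are placeholders, and the previous umask is saved and restored so the example does not change your shell's setting.

```shell
tmp=$(mktemp -d)

# Remember the current mask, switch to 022, create things, then restore
old_umask=$(umask)
umask 022
touch "$tmp/file.txt"
mkdir "$tmp/dir"
umask "$old_umask"

file_perms=$(ls -ld "$tmp/file.txt" | cut -c1-10)
dir_perms=$(ls -ld "$tmp/dir" | cut -c1-10)

# The same result computed by hand: base mode with the mask bits cleared
file_mode=$(printf '%o' $((0666 & ~0022)))
dir_mode=$(printf '%o' $((0777 & ~0022)))

echo "$file_perms $file_mode"
echo "$dir_perms $dir_mode"

rm -rf "$tmp"
```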

The du command

The du command will calculate the size of a directory as a whole:

du

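The 32 number here is a value expressed in blocks, not bytes. The block size depends on the implementation: 512 bytes on macOS and other BSDs, 1024 bytes with GNU du on Linux.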

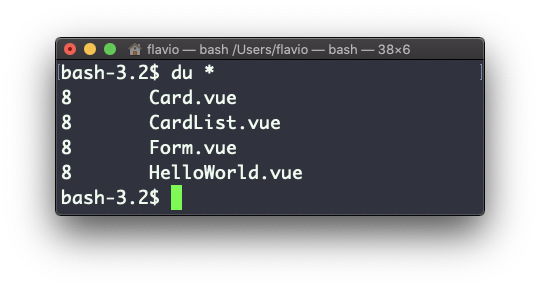

Running du * will calculate the size of each file individually:

You can set du to display values in MegaBytes using du -m. On macOS and other BSDs, du -g displays GigaBytes; GNU du on Linux does not have -g and uses --block-size=1G instead.

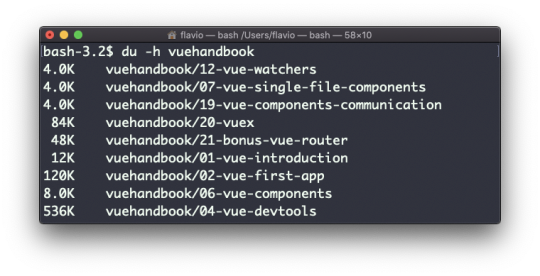

The -h option will show a human-readable notation for sizes, adapting to the size:

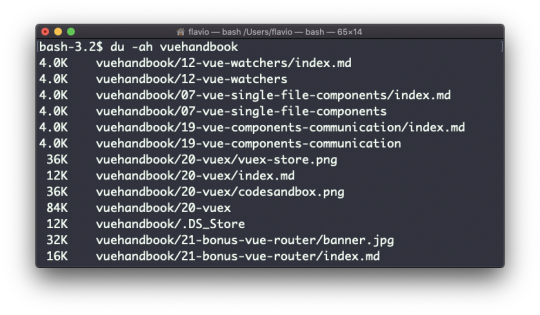

Adding the -a option will print the size of each file in the directories, too:

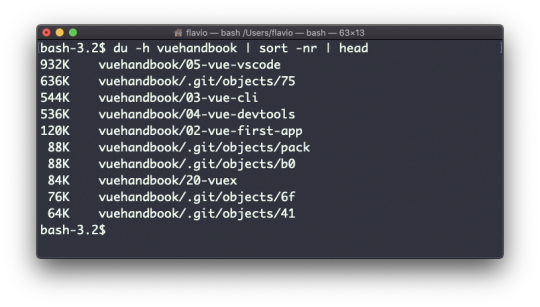

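A handy thing is to sort the directories by size. Since -h prints suffixes such as K, M and G, sort with -h (human-numeric sort, available in GNU sort and recent BSD sort) rather than -n, which would rank 4.0K above 2.0M:

du -h <directory> | sort -hr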

and then piping to head to only get the first 10 results:
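Here is a sketch of that pipeline on a throwaway directory tree. It assumes kilobyte output via the -k option, so that a plain numeric sort is enough and the command does not depend on how your sort handles size suffixes. The directory names big and small and their contents are invented for the example.

```shell
tmp=$(mktemp -d)
mkdir "$tmp/big" "$tmp/small"

# Hypothetical contents: one file of about 300 KB and one tiny file
dd if=/dev/urandom of="$tmp/big/data.bin" bs=1024 count=300 2>/dev/null
echo "hi" > "$tmp/small/note.txt"

# -k reports sizes in kilobytes; sort -nr puts the largest first;
# head keeps at most the first 10 lines
report=$(du -k "$tmp" | sort -nr | head -n 10)
lines=$(echo "$report" | wc -l | tr -d ' ')

echo "$report"

rm -rf "$tmp"
```

The report has one line per directory (the top-level one plus big and small), with the largest totals at the top.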

The df command

The df command is used to get disk usage information.

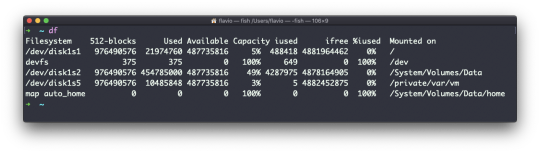

Its basic form will print information about the volumes mounted:

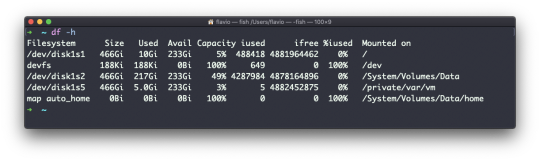

Using the -h option (df -h) will show those values in a human-readable format:

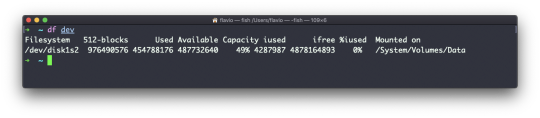

You can also specify a file or directory name to get information about the specific volume it lives on:

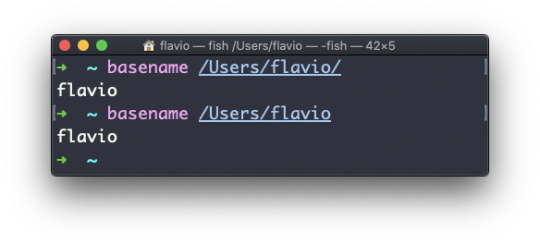

The basename command

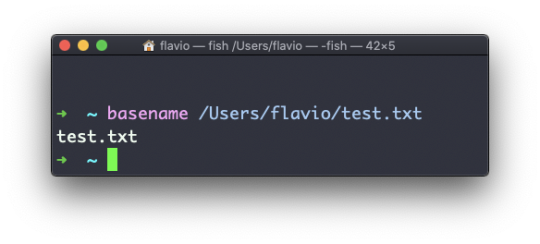

Suppose you have a path to a file, for example /Users/flavio/test.txt.

Running

basename /Users/flavio/test.txt

will return the test.txt string:

If you run basename on a path string that points to a directory, you will get the last segment of the path. In this example, /Users/flavio is a directory:

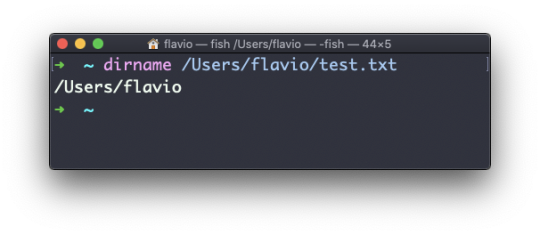

The dirname command

Suppose you have a path to a file, for example /Users/flavio/test.txt.

Running

dirname /Users/flavio/test.txt

will return the /Users/flavio string:

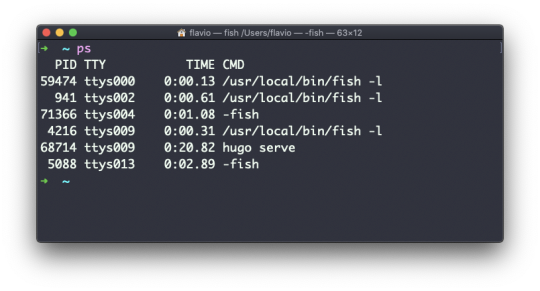
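basename and dirname are often used together in scripts to split a path into its parts. A minimal sketch using the same example path (the suffix-stripping second argument to basename is an extra not covered above):

```shell
path=/Users/flavio/test.txt

file=$(basename "$path")        # last segment of the path
dir=$(dirname "$path")          # everything before the last segment
stem=$(basename "$path" .txt)   # an optional second argument strips that suffix
parent=$(basename "$dir")       # basename also works on a directory path

echo "$file"
echo "$dir"
echo "$stem"
echo "$parent"
```

Neither command checks that the path exists. They only work on the string you pass in.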

The ps command

Your computer is running tons of different processes at all times.

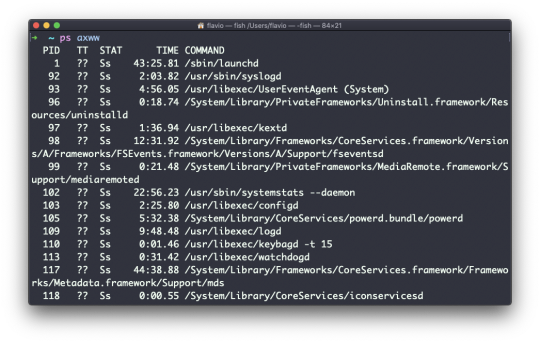

You can inspect them all using the ps command:

This is the list of user-initiated processes currently running in the current session.

Here I have a few fish shell instances, mostly opened by VS Code inside the editor, and an instance of Hugo running the development preview of a site.

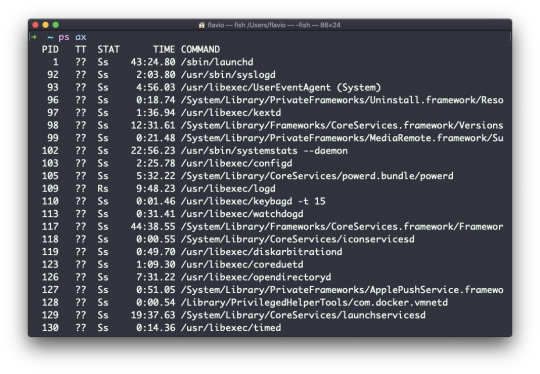

Those are just the commands assigned to the current user. To list all processes we need to pass some options to ps.

The most common one I use is ps ax:

The a option is used to also list other users' processes, not just your own. x shows processes not linked to any terminal (not initiated by users through a terminal).

As you can see, the longer commands are cut. Use the command ps axww to continue the command listing on a new line instead of cutting it:

We need to specify w 2 times to apply this setting (it's not a typo).

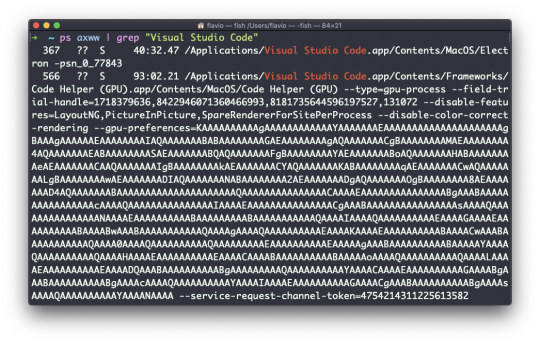

You can search for a specific process combining grep with a pipe, like this:

ps axww | grep "Visual Studio Code"

The columns returned by ps represent some key information.

The first information is PID, the process ID. This is key when you want to reference this process in another command, for example to kill it.

Then we have TT that tells us the terminal id used.

Then STAT tells us the state of the process:

I a process that is idle (sleeping for longer than about 20 seconds)

R a runnable process

S a process that is sleeping for less than about 20 seconds

T a stopped process

U a process in uninterruptible wait

Z a dead process (a zombie)

If you have more than one letter, the second represents further information, which can be very technical.

It's common to have + which indicates that the process is in the foreground in its terminal. s means the process is a session leader.

TIME tells us how much CPU time the process has used so far.

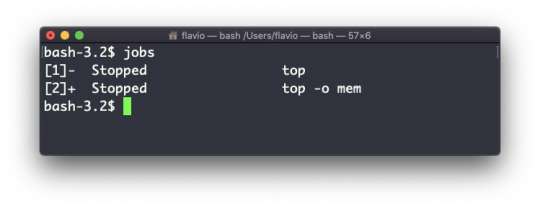

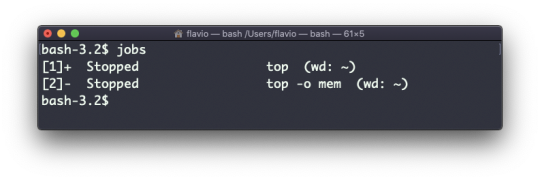

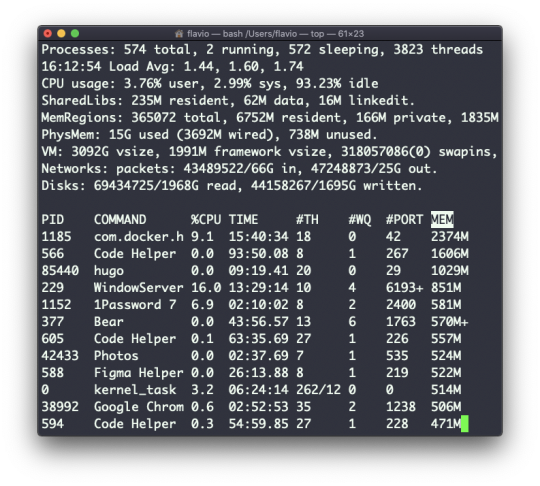

The top command

The top command is used to display dynamic real-time information about running processes in the system.

It's really handy to understand what is going on.

Its usage is simple – you just type top, and the terminal will be fully immersed in this new view:

The process is long-running. To quit, you can type the q letter or ctrl-C.

There's a lot of information being given to us: the number of processes, how many are running or sleeping, the system load, the CPU usage, and a lot more.

Below, the list of processes taking the most memory and CPU is constantly updated.

By default, as you can see from the %CPU column highlighted, they are sorted by the CPU used.

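You can add a flag to sort processes by memory utilized. This is the macOS syntax; with the procps top found on most Linux systems the field is called %MEM, as in top -o %MEM: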

top -o mem

The kill command

Linux processes can receive signals and react to them.

That's one way we can interact with running programs.

The kill program can send a variety of signals to a program.

It's not just used to terminate a program, like the name would suggest, but that's its main job.

We use it in this way:

kill <PID>

By default, this sends the TERM signal to the process id specified.
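As a minimal sketch of that default behaviour, the snippet below starts a placeholder process (sleep 60, standing in for any long-running program), sends it TERM, and reads back how it ended. In common shells such as bash and dash, wait reports 128 plus the signal number when a process was terminated by a signal, and TERM is signal 15.

```shell
# Start a long-running placeholder process in the background
sleep 60 &
pid=$!

# No flag given, so kill sends the TERM signal
kill "$pid"

# Collect the exit status of the terminated process: 128 + 15 = 143
status=0
wait "$pid" || status=$?

echo "$status"
```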

We can use flags to send other signals, including:

kill -HUP <PID>
kill -INT <PID>
kill -KILL <PID>
kill -TERM <PID>
kill -CONT <PID>
kill -STOP <PID>

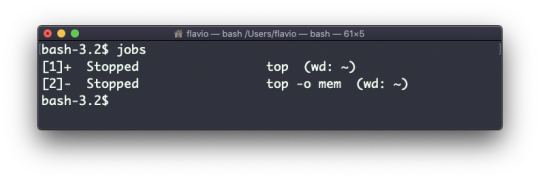

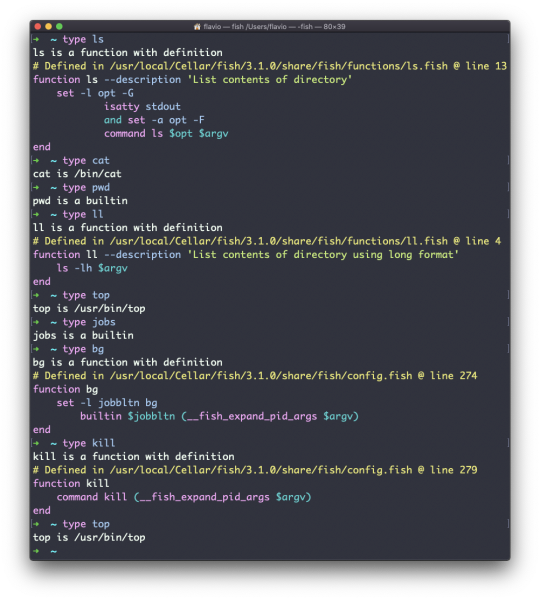

HUP means hang up. It's sent automatically when a terminal window that started a process is closed before terminating the process.