#modern fortran


Note

Fortran is actually cool and fun and more people should learn it.

Don't actually write production code in it, though. I don't want to risk having to maintain it down the line. Please. Please.

Just go back to fortran

And should I etch my code into a clay tablet as well, boomer?


Text

Women pulling Lever on a Drilling Machine, 1978 Lee, Howl & Company Ltd., Tipton, Staffordshire, England photograph by Nick Hedges image credit: Nick Hedges Photography

* * * *

Tim Boudreau

About the whole DOGE-will-rewrite Social Security's COBOL code in some new language thing, since this is a subject I have a whole lot of expertise in, a few anecdotes and thoughts.

Some time in the early 2000s I was doing some work with the real-time Java team at Sun, and there was a huge defense contractor with a peculiar query: Could we document how much memory an instance of every object type in the JDK uses? And could we guarantee that that number would never change, and definitely never grow, in any future Java version?

I remember discussing this with a few colleagues in a pub after work, and talking it through, and we all arrived at the conclusion that the only appropriate answer to this question was "Hell no." and that it was actually kind of idiotic.

Say you've written the code, in Java 5 or whatever, that launches nuclear missiles. You've tested it thoroughly, it's been reviewed six ways to Sunday because you do that with code like this (or you really, really, really should). It launches missiles and it works.

A new version of Java comes out. Do you upgrade? No, of course you don't upgrade. It works. Upgrading buys you nothing but risk. Why on earth would you? Because you could blow up the world 10 milliseconds sooner after someone pushes the button?

It launches fucking missiles. Of COURSE you don't do that.

There is zero reason to ever do that, and to anyone managing such a project who's a grownup, that's obvious. You don't fuck with things that work just to be one of the cool kids. Especially not when the thing that works is life-or-death (well, in this case, just death).

Another case: In the mid 2000s I trained some developers at Boeing. They had all this Fortran materials analysis code from the 70s - really fussy stuff, so you could do calculations like, if you have a sheet of composite material that is 2mm of this grade of aluminum bonded to that variety of fiberglass with this type of resin, and you drill a 1/2" hole in it, what is the effect on the strength of that airplane wing part when this amount of torque is applied at this angle. Really fussy, hard-to-do but when-it's-right-it's-right-forever stuff.

They were taking a very sane, smart approach to it: Leave the Fortran code as-is - it works, don't fuck with it - just build a nice, friendly graphical UI in Java on top of it that *calls* the code as-is.

We are used to broken software. The public has been trained to expect low quality as a fact of life - and the industry is rife with "agile" methodologies *designed* to churn out crappy software, because crappy guarantees a permanent ongoing revenue stream. It's an article of faith that everything is buggy (and if it isn't, we've got a process or two to sell you that will make it that way).

It's ironic. Every other form of engineering involves moving parts and things that wear and decay and break. Software has no moving parts. Done well, it should need *vastly* less maintenance than your car or the bridges it drives on. Software can actually be *finished* - it is heresy to say it, but given a well-defined problem, it is possible to actually *solve* it and move on, and not need to babysit or revisit it. In fact, most of our modern technological world is possible because of such solved problems. But we're trained to ignore that.

Yeah, COBOL is really long-in-the-tooth, and few people on earth want to code in it. But they have a working system with decades invested in addressing bugs and corner-cases.

Rewriting stuff - especially things that are life-and-death - in a fit of pique, or because of an emotional reaction to the technology used, or because you want to use the toys all the cool kids use - is idiotic. It's immaturity on display to the world.

Doing it with AI that's going to read COBOL code and churn something out in another language - so now you have code no human has read, written or understands - is simply insane. And the best software translators plus AI out there are going to get things wrong - grievously wrong. And the odds of anyone figuring out what or where before it leads to disaster are low, never mind tracing that back to the original code and figuring out what that was supposed to do.

They probably should find their way off COBOL simply because people who know it and want to endure using it are hard to find and expensive. But you do that gradually, walling off parts of the system that work already and calling them from your language-du-jour, not building any new parts of the system in COBOL, and when you do need to make a change in one of those walled off sections, you migrate just that part.
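A minimal sketch of that gradual approach, in Python and under stated assumptions: `Facade`, `legacy_monthly_benefit`, `good_rewrite` and `bad_rewrite` are all hypothetical names invented for illustration, and the "legacy" function merely stands in for a call into existing COBOL. Callers go through the facade, a rewrite is accepted only if it agrees with the old code on sample inputs, and any piece can be put back.

```python
from typing import Callable, Dict, Iterable, Tuple


class Facade:
    """Routes each operation to legacy code unless a checked rewrite exists."""

    def __init__(self) -> None:
        self._legacy: Dict[str, Callable[..., object]] = {}
        self._migrated: Dict[str, Callable[..., object]] = {}

    def register_legacy(self, name: str, fn: Callable[..., object]) -> None:
        self._legacy[name] = fn

    def migrate(self, name: str, fn: Callable[..., object],
                samples: Iterable[Tuple]) -> bool:
        """Switch to fn only if it matches the legacy result on every sample."""
        legacy = self._legacy[name]
        if any(fn(*args) != legacy(*args) for args in samples):
            return False  # keep using the code that already works
        self._migrated[name] = fn
        return True

    def roll_back(self, name: str) -> None:
        """Put the legacy piece back if the rewrite misbehaves later."""
        self._migrated.pop(name, None)

    def uses_legacy(self, name: str) -> bool:
        return name not in self._migrated

    def call(self, name: str, *args):
        fn = self._migrated.get(name, self._legacy[name])
        return fn(*args)


# Hypothetical stand-in for a call into the existing, trusted system.
def legacy_monthly_benefit(years_worked: int) -> int:
    return 100 * years_worked


def good_rewrite(years_worked: int) -> int:
    return years_worked * 100


def bad_rewrite(years_worked: int) -> int:
    # Deliberately wrong on a corner case the old code handles.
    return 100 * years_worked + (1 if years_worked > 30 else 0)


facade = Facade()
facade.register_legacy("monthly_benefit", legacy_monthly_benefit)
samples = [(1,), (10,), (35,)]
rejected = facade.migrate("monthly_benefit", bad_rewrite, samples)
accepted = facade.migrate("monthly_benefit", good_rewrite, samples)
```

Agreement on a handful of samples is of course far weaker than decades of production use; the point of the sketch is only that the old code stays callable and each swap is reversible.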

We're basically talking about something like replacing the engine of a plane while it's flying. Now, do you do that a part-at-a-time with the ability to put back any piece where the new version fails? Or does it sound like a fine idea to vaporize the existing engine and beam in an object which a next-word-prediction software *says* is a contraption that does all the things the old engine did, and hope you don't crash?

The people involved in this have ZERO technical judgement.

#tech#software engineering#reality check#DOGE#computer madness#common sense#sanity#The gang that couldn't shoot straight#COBOL#Nick Hedges#machine world


Note

i'm curious about something with your conlang and setting during the computing era in Ebhorata, is Swädir's writing system used in computers (and did it have to be simplified any for early computers)? is there a standard code table like how we have ascii (and, later, unicode)? did this affect early computers word sizes? or the size of the standard information quanta used in most data systems? ("byte" irl, though some systems quantize it more coarsely (512B block sizes were common))

also, what's Zesiyr like? is it akin to fortran or c or cobol, or similar to smalltalk, or more like prolog, forth, or perhaps lisp? (or is it a modern language in setting so should be compared to things like rust or python or javascript et al?) also also have you considered making it an esolang? (in the "unique" sense, not necessarily the "difficult to program in" sense)

nemmyltok :3

also small pun that only works if it's tɔk or tɑk, not toʊk: "now we're nemmyltalking"

so...i haven't worked much on my worldbuilding lately, and since i changed a lot of stuff with the languages and world itself, the writing systems i have are kinda outdated. I worked a lot more on the ancestor of swædir, ntsuqatir, and i haven't worked much on its daughter languages, which need some serious redesign.

Anyway. Computers are about 100 years old, give or take, on the timeline where my cat and fox live. Here, computers were born out of the need for long-distance communication and desire for international cooperation in a sparsely populated world, where the largest cities don't have much more than 10,000 inhabitants, are set quite far apart from each other with some small villages and nomadic and semi-nomadic peoples in between them. Computers were born out of telegraph and radio technology, with the goal of transmitting and receiving text in a faster, error-free way, which could be automatically stored and read later, so receiving stations didn't need 24/7 operators. So, unlike our math/war/business machines, multi-language text support was built in from the start, while math was a later addition.

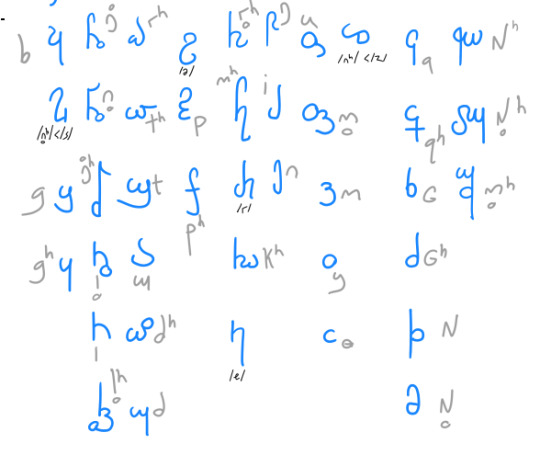

At the time of the earliest computers, there was a swædir alphabet which descended from the earlier ntsuqatir featural alphabet:

the phonology here is pretty outdated, but the letters are the same, and it'd be easy to encode this. Meanwhile, the up-to-date version of the ntsuqatir featural alphabet looks like this:

it works like korean, and composing characters that combine the multiple components is so straightforward i made a program in shell script to typeset text in this system so i could write longer text without drawing or copying and pasting every character. At the time computers were invented, this was used mostly for ceremonial purposes, though, so i'm not sure if they saw any use in adding it to computers early on.
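For comparison, here is how the real-world Korean case works in Unicode, as a small Python sketch. This is not the shell script mentioned above and says nothing about how the ntsuqatir components are indexed; it only shows the arithmetic Unicode uses to combine a lead consonant, a vowel and an optional final consonant into one precomposed Hangul syllable. The function name `compose` is made up for the example.

```python
import unicodedata

HANGUL_BASE = 0xAC00   # code point of the first precomposed syllable
LEAD_COUNT = 19        # initial consonants
VOWEL_COUNT = 21       # medial vowels
TAIL_COUNT = 28        # 27 final consonants plus "no final"


def compose(lead: int, vowel: int, tail: int = 0) -> str:
    """Combine component indices into a single Hangul syllable."""
    if not (0 <= lead < LEAD_COUNT and 0 <= vowel < VOWEL_COUNT
            and 0 <= tail < TAIL_COUNT):
        raise ValueError("component index out of range")
    return chr(HANGUL_BASE + (lead * VOWEL_COUNT + vowel) * TAIL_COUNT + tail)


# Lead index 18, vowel index 0, tail index 4 give U+D55C.
syllable = compose(18, 0, 4)

# The same syllable written as three separate jamo, then normalized.
from_jamo = unicodedata.normalize("NFC", "\u1112\u1161\u11ab")
```

A featural script with a fixed number of slots per block could use the same kind of index arithmetic, which is part of why composing it programmatically is straightforward.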

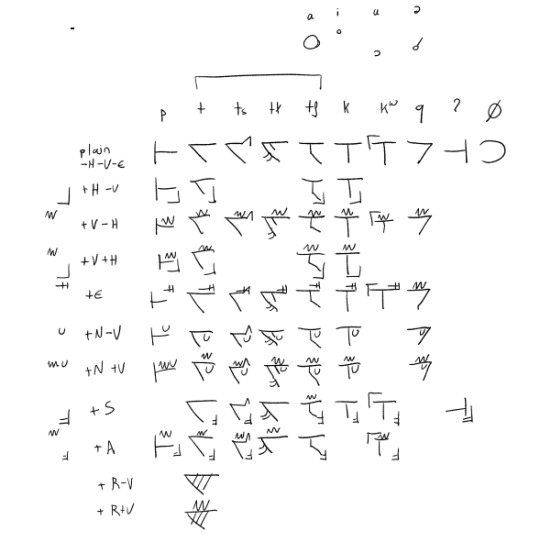

The most common writing system was from the draconian language, which is a cursive abjad with initial, medial, final and isolated letter shapes, like arabic:

Since dragons are a way older species and they really like record-keeping, some sort of phonetic writing system should exist based on their language, which already has a lot of phonemes, to record unwritten languages and describe languages of other peoples.

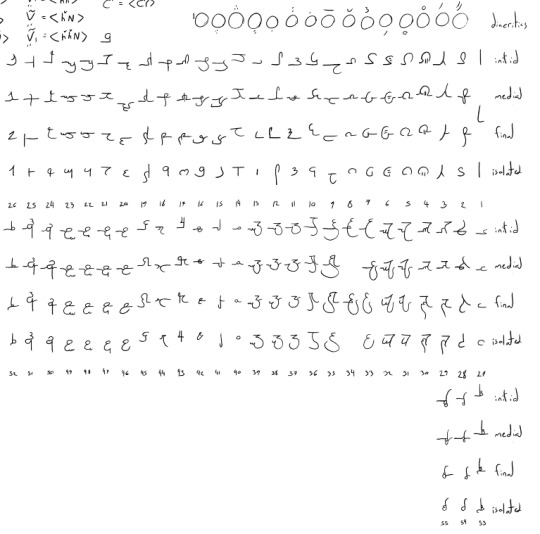

There are also languages in the north that use closely related alphabets:

...and then other languages which use/used logographic and pictographic writing systems.

So, since computers are not a colonial invention, and instead were created in a cooperative way by various nations, they must take all of the diversity of the world's languages into account. I haven't thought about it that much, but something like unicode should have been there from the start. Maybe the text starts with some kind of heading which informs the computer which language is encoded, and from there the appropriate writing system is chosen for that block of text. This would also make it easy to encode multi-lingual text. I also haven't thought about anything like word size, but since these systems are based on serial communication like telegraph, i guess word sizes should be flexible, and the CPU-RAM bus width doesn't matter much...? I'm not even sure if information is represented in binary numbers or something else, like the balanced ternary of the Setun computer
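The heading idea could be sketched roughly like this in Python. Everything here is an assumption made up for illustration: the script IDs, the three-byte header layout (one byte for the script, two for the payload length), and the use of UTF-8 as a stand-in for whatever per-script code tables the in-world machines would have.

```python
import struct
from typing import List, Tuple

# Hypothetical script identifiers; the setting does not define any.
SCRIPT_IDS = {"swaedir": 1, "draconian": 2, "ntsuqatir": 3}
SCRIPT_NAMES = {number: name for name, number in SCRIPT_IDS.items()}

HEADER = ">BH"                      # script id (1 byte), payload length (2 bytes)
HEADER_SIZE = struct.calcsize(HEADER)


def encode(blocks: List[Tuple[str, str]]) -> bytes:
    """Serialize (script, text) blocks, each preceded by its own heading."""
    out = bytearray()
    for script, text in blocks:
        payload = text.encode("utf-8")  # stand-in for a per-script code table
        out += struct.pack(HEADER, SCRIPT_IDS[script], len(payload))
        out += payload
    return bytes(out)


def decode(data: bytes) -> List[Tuple[str, str]]:
    """Read the stream back, switching script at every heading."""
    blocks = []
    pos = 0
    while pos < len(data):
        script_id, length = struct.unpack_from(HEADER, data, pos)
        pos += HEADER_SIZE
        text = data[pos:pos + length].decode("utf-8")
        blocks.append((SCRIPT_NAMES[script_id], text))
        pos += length
    return blocks


message = [("swaedir", "first part"), ("draconian", "second part")]
wire = encode(message)
```

Because every block carries its own heading, multi-lingual text falls out for free, and a serial receiver only needs to look at three bytes to know how to interpret what follows.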

As you can see, i have been way more interested in the anthropology and linguistics bits of it than the technological aspects. At least i can tell that printing is probably done with pen plotters and matrix printers to be able to handle the multiple writing systems with various types of characters and writing directions. I'm not sure how input is done, but i guess some kind of keyboard works mostly fine. More complex writing systems could use something like stroke composition or phonetic transliteration, and then the text would be displayed on a screen before being recorded/sent.

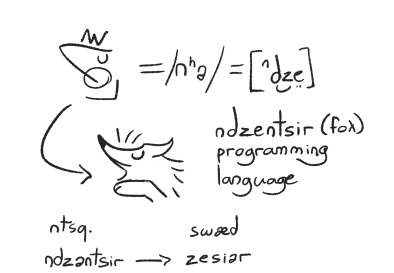

Also the idea of ndzəntsi(a)r/zesiyr is based on C. At the time, the phonology i was using for ntsuqatir didn't have a /s/ phoneme, and so i picked one of the closest phonemes, /ⁿdz/, which evolves to /z/ in swædir, which gave the [ⁿdzə] or [ze] programming language its name. Coming up with a word for fox, based on the character's similarity was an afterthought. It was mostly created as a prop i could use in art to make the world feel like it had an identity of its own, rather than as a serious attempt at having a programming language. Making an esolang out of it would be going way out of the way since i found i'm not that interested in the technical aspects for their own sake, and having computers was a purely aesthetic thing that i repurposed into a more serious cultural artifact like mail, something that would make sense in storytelling and worldbuilding.

Now that it exists as a concept, though, i imagine it being used in academic and industrial settings, mostly confined to the nation where it was created. Also i don't think they have the needs or computing power for things like the more recent programming languages - in-world computers haven't changed much since their inception, and aren't likely to. No species or culture there has a very competitive or expansionist mindset, there isn't a scarcity of resources since the world is large and sparsely populated, and there isn't some driving force like capitalism creating an artificial demand such as moore's law. They are very creative, however, and computers and telecommunications were the ways they found to overcome the large distances between main cities, so they can better help each other in times of need.

#answered#ask#conlang i guess??#thank you for wanting to read me yapping about language and worldbuilding#also sorry if this is a bit disappointing to read - i don't have a very positivist/romantic outlook on computing technology anymore#but i tried to still make something nice out of it by shaping their relationship with technology to be different than ours#since i dedicated so much time to that aspect of the worldbuilding early on


Text

the grand master post of all things D3

made by a person who definitely thinks about a district that was never actually canonically described a little too much

so. let’s get into the fun stuff!

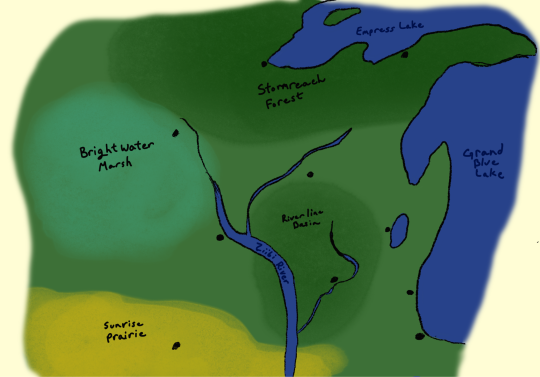

District Three consists of two main “areas” or zones: the Stills and the city of Stepanov, which is the main city in Three, named after the first mayor of Three, whose full name was Hewlett Stepanov. It is still a popular name in Three, especially with the wealthy, and the Stepanov family are respected highly by citizens.

if you’ve read my other posts about d3 and everything related to it, some of this is just restating but some of it is new so make sure to find that stuff! here are all of the posts: Beetee + Wiress headcanons | District Three Lore + List of Victors + even more Beetee + Wiress headcanons | Wiress did not grow up poor - opinion | Wiress with Cats | The Latier Family Tree [extended version] | even MORE Beetee + Wiress headcanons | Beetee headcanons pertaining to his childhood | Wiress headcanons pertaining to music + music that is most prevalent in Three | really random hcs about Beetee’s great grandparents | Beetee’s dynamic with the other victors + a little of Wiress and Beetee’s relationship | Beetee’s love language | Beetress headcanons | Beetee’s parents and home life headcanons | Beetee and religion | Beetee and Brutus friendship | Beetress first meeting | Beetee and English (plus beetress) | How beetress fell in love + Beetee’s realization | Beetee post-rebellion | Axel Latier (Beetee’s nephew) and Barbara Lisiecki (Wiress’ sister) headcanons | D3 Tributes headcanons | Is D3 an underdog district? | Wiress’ family and their feelings about her Games | Beetee and forced prostitution [cw: graphic description/depictions of forced prostitution] | Beetee and Coriolanus Parallels | D3 Tribute List | Beetress and Power Dynamics | Various Beetee Headcanons | D3 Specific Beliefs | D3 Culture (All Social Classes) |

tags: dayne’s beetee tag | dayne’s wiress thoughts (TM) | the latier family | everything beetee/wiress is under beetress

fics: even here as we are standing now (i can see the fear in your eyes) [aka The Beetee Fic] | arbutus | asphodel | bellflower | laurel | aconite | aftershocks | hyacinth | freesia | ambrosia | hydrangea |

this will be separated into sections because i have a lot of thoughts lol

General Info:

District Three consists of the majority of Idaho/the Idaho Panhandle, some of west Washington, and east Montana. There is also a tiny, tiny little bit of modern Oregon. D3 borders One to the south and east and Seven to the north and west.

The area that is considered “District Three” is not all used for living and the majority of it is forested land. Due to this, lumberyard workers from Seven travel the short distance between the two districts to harvest many of these trees. This is also true for miners from One in terms of gems.

Three makes and produces the vast majority of all technology used in Panem, cars, glass, and various drugs.

Child labor is a major issue, as children from the Stills begin working at factories as early as age four/five. Much of the time, there is an encompassing building attached to the factory that your parents work at for children who work.

Stepanov:

Saint Stepanov (typically shortened simply to Stepanov during the ADD¹/DHG²/BSR³ era) is the main city of Three where wealthier citizens live, or their version of Twelve’s “merchant class”. Adults who live here work as software developers and engineers, factory owners or managers, analysts, programmers, etc. These citizens typically live good lives with fulfilling jobs and nine times out of ten, no one will ever have to worry about where the food on the table comes from.

Only once in the history of the Hunger Games were both reaped children from the wealthier families of Three. The 48th Annual Hunger Games came as a shock to everyone, when both Wiress Lisiecki and Fortran Stepanov were reaped.

The square in Stepanov is where the Reapings take place. All children of reaping age can fit, but families usually have to stand in accompanying streets or are pushed to the back.

¹—After Dark Days | ²—During Hunger Games | ³—Before Second Rebellion |

The Stills:

The majority of Three’s population lives in poverty or on the cusp (about 150k out of 190k), in the area called the Stills, which consists of five separate neighborhoods/towns joined together by a square in the middle called The Interface. The Interface is where the main marketplace for the Stills is, including a popular bar called Harlow’s.

The majority of the Stills inhabitants work as factory workers/laborers. Almost always, these workers work in these jobs for their entire lives. They are not given the same opportunities as the citizens from Stepanov are. Due to this, a lot of animosity from both sides of the divide is very prevalent in everyday life.

Citizens from the Stills are taught from a young age about doing whatever it takes to survive. Because of this, tributes from the Stills typically do better in the Hunger Games than their wealthier counterparts.

— Midtown:

Midtown is the area in between the Stills and Stepanov. Only around 10k of the overall population live here. Inhabitants of Midtown have jobs that include factory manager, training jobs, and about half of the jobs not pertaining to Three’s industry. People from Midtown are teachers, business/shop owners, and various other occupations.

Midtown is on the other side of Stepanov to the Stills, a short walk down a small hill. Due to the small population, few tributes in the Hunger Games are from Midtown, but there are exceptions. Examples of this include Circ Bauer, male tribute of the 10th Annual Hunger Games, and Matilyne Sanchez, female tribute of the 47th Annual Hunger Games. The 45th Games was the only year where both tributes from Three were from Midtown, with Coball Alves-Torres and Maple Adomaitytė.

Victors Village:

As of the Third Quarter Quell, there were five residents of Three’s Victors Village.

Address — Game — Name — Age at Victory

316 — 16th — Attican (“Atlas”) Hoffman — 16

340 — 40th — Beetee Latier — 19

348 — 48th — Wiress Lisiecki — 18

355 — 55th — Marie Teller — 15

368 — 68th — Haskell Nishimaru — 16

— The Victors:

Name: Attican Hoffman

Kill Count: Six

Type of Reaping: Regular reaping

Birthplace: Hewlett Memorial Hospital, Stepanov, District Three

Name: Beetee Latier

Kill Count: Nine

Type of Reaping: Rigged reaping

Birthplace: Apartment Building 9, Floor 6, No. 612, Three Falls, District Three

Name: Wiress Lisiecki

Kill Count: Five

Type of Reaping: Regular reaping

Birthplace: Hewlett Memorial Hospital, Stepanov, District Three

Name: Marie Teller

Kill Count: One (technicality; she caused six deaths, but only actually killed one)

Type of Reaping: Rigged reaping

Birthplace: Apartment Building 6, Floor 3, No. 321, Three Falls, District Three

Name: Haskell Nishimaru

Kill Count: Two

Type of Reaping: Regular reaping

Birthplace: Apartment Building 3, Floor 3, No. 387, Dreier Springs, District Three

Important Characters:

— Parents of Victors:

The Stills —

Ada Latier (née Brownstone) — mother of Beetee Latier. Lost her older sister, Cora Brownstone, to the sixteenth Games.

Linus Latier — father of Beetee Latier. Lost his younger brother, Hermes Latier, to the fourteenth Games. Linus and Ada found each other as the only person who understood the pain of losing a sibling to the Games.

Annabeth Teller (née White) — mother of Marie Teller. Family friend of the Latiers. Grew up in the Stills.

Fox Teller — father of Marie Teller. He and Linus Latier grew up alongside each other as childhood friends. Fox and Anna were the reason Ada and Linus met.

Stepanov —

Aurora Lisiecki — mother of Wiress Lisiecki. She runs a circus/theater group to entertain Threes and visiting Capitol citizens.

— Siblings of Victors:

Adeline Latier — younger sister of Beetee Latier. She’s younger than him by six years. She was thirteen when he was reaped.

Roent Latier — younger brother of Beetee Latier. He’s eight years younger than Beetee. He was eleven when he was reaped.

Tera Latier — younger sister of Beetee Latier. She’s ten years younger than Beetee. She was nine when he was reaped.

Ruther Latier — younger brother of Beetee Latier. He’s ten years younger than Beetee. He was nine when he was reaped. He and Tera are twins.

Dayta Latier — younger sister of Beetee Latier. She’s thirteen years younger than Beetee. She was six years old when he was reaped.

Barbara Lisiecki — older sister of Wiress Lisiecki. She’s four years older than Wiress and was twenty-two when she was reaped. Grew up in Stepanov but moved out to live in the Stills after she was expected to join the family business (the circus/theater group).

Various —

Amalia Remizova — female tribute of the 40th Hunger Games, age 13. Grew up in Stepanov.

Fortran Stepanov — male tribute of the 48th Hunger Games, age 18. Grew up in Stepanov.

Stefan Stepanov — older brother of Fortran Stepanov, Wiress’ district partner for the 48th Hunger Games. He was also in the same kindergarten class as Beetee. Grew up in Stepanov.

Appa Enriquez — owner of a small clothing store in the Stills. Distant relative of Beetee on his mother’s side. Grew up in the Stills.

Additional Information:

Three is not an “Anyone But A Career” district. They have never been one.

Every single time a Three wins the Hunger Games, a victor from One precedes them. Every. Single. Time. Atlas was preceded by Harrison Sanford, Beetee by Paris Sanford, Wiress by Zircon (“Connor”) Lewis, Marie by Emerald Reeves, and Haskell by Augustus Braun.

Three’s victors are sometimes seen as a buffer between the careers and the outlying districts. Most of the citizens of Three don’t have a problem with the Careers, except for some people from Stepanov.

In Stepanov, residents celebrate what children refer to as “Tree Time” during December—families use leftover trees from the workers from Seven and display them in their homes with generational ornaments. Every year, at least one new ornament is added to the tree. Some families use breakable plastic to put new year’s resolutions inside of the ornaments and then break them on New Year’s to read them with family and friends at midnight.

Every Saturday, business owners in Midtown set up a marketplace for citizens, primarily ones from Stepanov.

In the Stills, names are very important. Due to the extreme poverty many are trapped in, a common phrase is, “I have nothing but my first and last”, meaning their first and last name.

#dayne talks#this was just a load of yapping so i’m sorry abt that lol#beetee latier#wiress#wiress thg#district 3#hunger games districts#thg#the hunger games#thg victors#dayne’s beetee tag#dayne’s wiress thoughts (TM)#dayne’s thg victors


Text

The story of BASIC’s development began in 1963, when John G. Kemeny and Thomas E. Kurtz, both mathematics professors at Dartmouth, recognized the need for a programming language that could be used by non-technical students. At the time, most programming languages were complex and required a strong background in mathematics and computer science. Kemeny and Kurtz wanted to create a language that would allow students from all disciplines to use computers, regardless of their technical expertise.

The development of BASIC was a collaborative effort between Kemeny, Kurtz, and a team of students, including Mary Kenneth Keller, John McGeachie, and others. The team worked tirelessly to design a language that was easy to learn and use, with a syntax that was simple and intuitive. They drew inspiration from existing programming languages, such as ALGOL and FORTRAN, but also introduced many innovative features that would become hallmarks of the BASIC language.

One of the key innovations of BASIC was its use of simple, English-like commands. Unlike other programming languages, which required users to learn complex syntax and notation, BASIC used commands such as “PRINT” and “INPUT” that were easy to understand and remember. This made it possible for non-technical users to write programs and interact with the computer, without needing to have a deep understanding of computer science.

BASIC was first implemented on the Dartmouth Time-Sharing System, a pioneering computer system that allowed multiple users to interact with the computer simultaneously. The Time-Sharing System was a major innovation in itself, as it allowed users to share the computer’s resources and work on their own projects independently. With BASIC, users could write programs, run simulations, and analyze data, all from the comfort of their own terminals.

The impact of BASIC was immediate and profound. The language quickly gained popularity, not just at Dartmouth, but also at other universities and institutions around the world. It became the language of choice for many introductory programming courses, and its simplicity and ease of use made it an ideal language for beginners. As the personal computer revolution took hold in the 1970s and 1980s, BASIC became the language of choice for many hobbyists and enthusiasts, who used it to write games, utilities, and other applications.

Today, BASIC remains a popular language, with many variants and implementations available. While it may not be as widely used as it once was, its influence can still be seen in many modern programming languages, including Visual Basic, Python, and JavaScript. The development of BASIC was a major milestone in the history of computer science, as it democratized computing and made it accessible to a wider range of people.

The Birth of BASIC (Dartmouth College, August 2014)

youtube

Friday, April 25, 2025

#basic programming language#computer science#dartmouth college#programming history#software development#technology#ai assisted writing#Youtube


Text

About the whole DOGE-will-rewrite Social Security's COBOL code

Posted to Facebook by Tim Boudreau on March 30, 2025.


2 notes


Note

Scientific simulation/government contractors. My company uses C++, and that's because we "modernized" from Fortran a few years ago (but actually some of our core code is still in Fortran). I do anything I can to write my projects in something else though (py/C#/js)

That last parenthetical is causing me great harm but thank you for the information.

13 notes


Text

//Other blogs linked through @squid-pkmnirl-hub

YOOOOO I'm Squid! (They/she/he/whatever's funniest) I'm from the Ziibi Region, west of Unova.

Squid is an alias, and I do have other names I use. My gym challenge is under a different name.

My engineering job takes me around the region for fieldwork (water treatment, nothin too fancy), and some of my friends are on journeys of their own that I try to be involved in.

I'm just now starting to get serious about battling, after a bit of a misadventure in Unova. I just won my first gym badge in the Ziibi Region!

I am also an amateur pokéballsmith, so I'll sometimes make shitposts about that. I've got an order form set up, but please be patient.

My goal is to document my travels and bring more awareness about this beautiful region!

Pokémon team:

Humus - Skiddo (male)

Remmi - Remoraid (female)

Orpheus - Procezant (male) (ghost/flying)

Shortgrass - Embrush (female) (fire starter)

FORTRAN - Porygon (male?) (Old as fuck)

Chessie - Giant Eelektross (male) (ride/emergency pokémon)

Slugger - Honedge (male), from the 1880s, wants me dead

Not my Pokémon:

Zippy - Unovan Sceptile (female) (grass/ground) (borrowed)

HACKMONS.EDU - Budew (female) (evil) (kalosian (derogatory))

Pikagoo - Pikagoo (male) (ground/electric) (looks kinda like a Pikachu but reptilian)

Non-pokémon named objects:

Thunk - shovel, modern

Other account stuff:

Pelipper Mail: ON

Mystery Gifts: ON

Magic Anons: ON

Union Circle: ON

Current Arcs and Events:

Ziibi Gym Challenge - #Ziibi Region Travels

Past Arcs:

Time Travel - #High Noon at Nimbasa

Mirror Dimension Road Trip - #Roadtrip Mishap

Past Events:

Not-so-Tranquill evolution - #Orpheus Evolution

Empress' Chosen AU - #Chosen Cyan

1800s Farmer AU - #Crustleshell Mound Ranch

I migrated here after RIF went down so if you also were on pokemedia reddit, hello!

The Ziibi Region is based on the upper Mississippi river and west Great Lakes. It's a fan region that I've been working on, I've got some fakemon created and some lore, but still need to name the towns.

15 notes


Note

Ok so I thought about your point about different programming languages and I think I have to disagree. Obviously, nobody's gonna be super good at all of them (or even more than about 5 at a time tops) and sure, if you're unusually proficient in a specific one, that's still gonna make a difference and I'm not denying that.

But here's the thing: most modern languages are pretty easy to pick up if you already know a few. They're designed that way, we have widely accepted standards now and yes there's hard to learn languages we'll probably always need (Assembly 😔) and also older (thus not adhering to modern standards) ones that are still used today due to other factors (COBOL 🙄) but if you pick up a (non-esoteric) language made in the 90s or after and you've coded in another modern language before, you'll probably be reasonably confident within a week or two and reading code will usually be possible more or less immediately, with the occasional google ofc but like 75% of coding is googling anyway.

Given that most sci-fi is set in the future, often the far future, I think it's reasonable to speculate this will only get easier in times to come. Languages whose continued use is caused by circumstance will eventually be replaced and languages we need but are hard to learn will be reduced to a minimum with most of it being replaced by more accessible languages. This has already been happening. For instance, just under 2% of version 4.9 of the Linux kernel source code is written in assembly; more than 97% is written in C. I directly cribbed that last sentence from Wikipedia, it's a very succinct summary.

So while I still call bullshit on the way trek treats xenolinguists (I'm especially thinking Hoshi rn) that's pretty much the speed of picking up a new language a sci-fi programmer would probably have, especially considering IDEs would also develop further, making anyone with programming experience able to code medium to complex stuff in a new language within a day or so, even if it'd take them longer to code it than someone with a lot of experience in that specific language.

I realise this isn't your field and so probably not very interesting to you but I've been thinking about it a lot since your reply and I just wanted to say this ig. Maybe some of your followers will care, dunno.

-Levi

no you’re not wrong! i code in r, python, and fortran as part of my research and learning the latter two after becoming proficient in r was a lot easier than learning r initially. i didn’t phrase it very well but my thought process was more along the lines of, since this is scifi we’re talking about, working with an alien programming language, especially one from a civilization you’ve never encountered before, would be more like trying to learn a non-standardized language

6 notes


Text

LFortran: Modern interactive LLVM-based Fortran compiler

https://lfortran.org/

2 notes


Text

I decided to write this article when I realized what a great step forward computer science education has made in the last 20 years. Think of it. My first “Hello, world” program was written in Sinclair BASIC in 1997 for the КР1858ВМ1. This dinosaur was the Soviet clone of the Zilog Z80 microprocessor and appeared on the Eastern European market in 1992-1994. I didn’t have any sources of information on how to program besides the old Soviet “Encyclopedia of Dr. Fortran”. And it was actually a graphic novel rather than a BASIC tutorial book. This piece explained to children how to sit next to a monitor and keep their eyesight healthy, and also covered the general aspects of programming. Frankly, it involved a great deal of guesswork, but I did manage to code.

The first real tutorial book I took in my hands, in the year 2000, was “The C++ Programming Language” by Bjarne Stroustrup, the third edition. The book resembled a tombstone and was probably the most fundamental text for programmers I’d ever seen. Even now I believe it never gets old. Nowadays, working with such technologies as Symfony or Django at the DDI Development software company, I don’t usually turn to books because they become outdated before seeing a printing press. Everyone can learn much faster and put less effort into finding new things.

The number of tutorials currently available brings the opposite struggle to the one I encountered: you have to pick a suitable course out of the white noise. In order to save your time, I offer the 20 best tutorial services for developers. Some of them I personally use and some have gained much recognition among fellow technicians.

Lynda.com

The best thing about Lynda is that it covers all the aspects of web development. The service currently has 1621 courses with more than 65 thousand videos coming with project materials by experts. Once you’ve bought a monthly subscription for a small $30 fee you get unlimited access to all tutorials. The resource will help you grow regardless of your expertise since it contains and classifies courses for all skill levels.

Pluralsight.com

Another huge resource with 1372 courses currently available from developers for developers. It may be a hardcore decision to start with Pluralsight if you’re a beginner, but it’s a great platform to enhance skills if you already have some programming background. A monthly subscription costs the same $30 unless you want to receive downloadable exercise files and additional assessments. Then you’ll have to pay $50 per month.

Codecademy.com

This one is great for beginners to start with. Made in an interactive console format, it leads you through basic steps to the understanding of major concepts and techniques. Choose the technology or language you like and start learning. Besides that, Codecademy lets you build websites, games, and apps within its environment, join the community and share your success. Yes, and it’s totally free! Probably the drawback here is that you’ll face challenges if you try to apply the gained skills in real-world conditions.

Codeschool.com

Once you’re done with Codecademy, look for something more complicated, for example, this. Codeschool offers intermediate and advanced courses for you to become an expert. You can immerse yourself in learning by going through 10 introductory sessions for free and then get a monthly subscription for $30 to watch all screencasts and courses and solve tasks.

Codeavengers.com

You definitely should check this one to cover HTML, CSS, and JavaScript. Code Avengers is considered to be the most engaging learning you could experience. Interactive tasks, bright characters and visualization of your actions, simple instructions and instilling debugging discipline make Avengers stand out from the crowd. And unlike other services it doesn’t tie you to schedules, allowing you to buy either one course or all 10 for $165 at once and study at your own pace.

Teamtreehouse.com

An all-embracing platform both for beginners and advanced learners. Treehouse has general development courses as well as real-life tasks such as creating an iOS game or making a photo app. Tasks are preceded by explicit video instructions that you follow when completing exercises in the provided workspace. The basic subscription plan costs $25 per month, and gives access to videos, the code engine, and the community. But if you want bonus content and videos from leaders in the industry, your pro plan will be $50 monthly.

Coursera.org

You may know this one. The world-famous online institution for all scientific fields, including computer science. Courses here are presented by instructors from Stanford, Michigan, Princeton, and other universities around the world. Each course consists of lectures, quizzes, assignments, and final exams, so an intensive and solid education is guaranteed. By the end of a course, you receive a verified certificate which may be an extra selling point with employers. Coursera has both free and paid courses available.

Learncodethehardway.org

Even though I’m pretty skeptical about books, these ones are worth trying if you seek basics. The project started as a book for Python learning and later on expanded to cover Ruby, SQL, C, and Regex. For $30 you get a book and video materials for each course. The great thing about LCodeTHW is its focus on practice. Theory is good, but practical skills are even better.

Thecodeplayer.com

The name speaks for itself. Codeplayer contains numerous showcases of creating web features, ranging from programming input forms to designing the Matrix code animation. Each walkthrough has a workspace with the code being written, an output window, and player controls. The service will be great practice for skilled developers to get some tips as well as for newbies who are just learning HTML5, CSS, and JavaScript.

Programmr.com

A great platform with a somewhat unique approach to learning. You don’t only follow courses and complete projects, but you do this by means of the provided API right in the browser, and you can embed the resulting apps in your blog to share with friends. Another attractive thing is that you can participate in Programmr contests and even win some money by creating robust products. Well, it’s time to learn and play.

Udemy.com

An e-commerce website which sells knowledge. Everyone can create a course and even earn money on it. That might raise some doubts about the quality, but since there is a lot of competition and feedback for each course, a common learner will inevitably find a useful training. There are tens of thousands of courses currently available, and once you’ve bought a course you get indefinite access to all its materials. Udemy prices vary from $30 to $100 for each course, and some training is free.

Upcase.com

Have you completed the beginner courses yet? It’s time to advance your software engineering career by learning something more specific and complex: test-driven development in Ruby on Rails, code refactoring, testing, etc. For $30 per month you get access to the community, video tutorials, coding exercises, and materials in the Git repository.

Edx.org

A Harvard and MIT program for free online education. Currently, it has 111 computer science and related courses scheduled. You can enroll for free and follow the training led by Microsoft developers, MIT professors, and other experts in the field. Course materials, virtual labs, and certificates are included. Although you don’t have to pay for learning, it will cost $50 for you to receive a verified certificate to add to your CV.

Securitytube.net

Let’s get more specific here. Surprisingly enough, SecurityTube contains numerous pieces of training regarding IT security. Do you need a penetration test for your resource? It’s the best place for you to capture some clues or even learn hacking tricks. Unfortunately, many of the presented cases are outdated in terms of modern security techniques. Before you start, take the trouble to check how up-to-date a training is. A lot of videos are free, but you can buy premium course access for $40.

Rubykoans.com

Learn Ruby as you would attain Zen. Ruby Koans is a path through tasks. Each task is a Ruby feature with a missing element. You have to fill in the missing part in order to move to the next Koan. The philosophy behind it implies that you don’t have a tutor showing what to do, but it’s you who attains the language, its features, and syntax by thinking about it.

Bloc.io

For those who seek a personal approach. Bloc covers iOS, Android, UI/UX, Ruby on Rails, frontend or full stack development courses. It makes the difference because you basically choose and hire the expert who is going to be your exclusive mentor. The 1-on-1 education will be adapted to your schedule; during that time you’ll build several applications using the test-driven methodology and learn developers’ tools and techniques. Your tutor will also help you showcase the resulting works for employers and train you to pass a job interview. The whole course will cost $5000, or you can pay $1333 as an enrollment fee and $833 per month, unless you decide to take a full stack development course. This one costs $9500.

Udacity.com

A set of courses for dedicated learners. Udacity has introductory as well as specific courses to complete. What is great about it, and at the same time controversial, is that you watch tutorials, complete assignments, get professional reviews, and enhance skills while aligning it all to your own schedule. The monthly fee is $200, but Udacity will refund half of the payments if you manage to complete a course within 12 months. Courses are prepared by the leading companies in the industry: Google, Facebook, MongoDB, AT&T, and others.

Htmldog.com

Something HTML, CSS, and JavaScript novices and adepts must know about. Simple and free, this resource contains text tutorials as well as techniques, examples, and references. HTML Dog will be a great handbook for those who are currently engaged in completing other courses or just work with these frontend technologies.

Khanacademy.org

It’s diverse and free. Khan Academy provides a powerful environment for learning and coding simultaneously, even though it’s not dedicated to development learning only. The built-in coding engine lets you create projects within the platform while you watch video tutorials and work through challenging tasks. There is also a special set of materials for teachers.

Scratch.mit.edu

Learning for the little ones. Scratch is another great foundation by MIT, created for children from 8 to 15. It probably won’t make your children expert developers, but it will certainly introduce the breathtaking world of computer science to them. This free-to-use platform has a powerful yet simple engine for making animated movies and games. If you want your child to become an engineer, Scratch will help them grasp the basic idea. Isn’t it inspirational to see your efforts turning into reality?

Conclusion

In my experience, you shouldn’t take more than three courses at a time if you combine online training with some major activity because it’s going to be hard to concentrate. Anyway, I tried to pick different types of resources for you to have a choice and decide your own schedule as well as a subscription model. What services do you usually use? Do you think online learning can compete with traditional university education yet? Please, share.

Dmitry Khaleev is a senior developer at the DDI Development software company with more than 15 years of experience in programming and reverse-engineering of code. Currently, he works with PHP and Symfony-based frameworks.

0 notes

Text

Why You Should Get Yourself a Job with Legacy Programming Languages

Key Takeaways

“Old” languages are still relevant: FORTRAN, COBOL, and Pascal continue to play crucial roles in specific domains.

FORTRAN: Excels in scientific computing and high-performance computing.

COBOL: Remains essential in financial systems for handling large datasets and transactions.

Pascal: Influenced modern languages and continues to be used in education.

Career opportunities…

#career#COBOL#computer science#computers#dailyprompt#education#finance#FORTRAN#hacking#history#jobs#legacy systems#Pascal#Programming#scientific computing#technology

0 notes

Text

Legacy Code, Modern Paychecks: The Surprising Demand for Antique Programming Languages

Why old-school tech skills like COBOL and Fortran are still landing high-paying gigs in 2025

In a tech world obsessed with the latest frameworks and cutting-edge AI, it might surprise you to learn there’s still an active – and even lucrative – market for what many would call antique programming languages. We’re talking COBOL, Fortran, Ada, LISP, and other veterans of computing history. But is…

#antique programming languages#COBOL and Fortran careers#COBOL programming jobs#IT Consulting#IT Contracting#legacy code developers

0 notes


Text

So far I've found despairingly little information on CHECO. Cybersyn itself has four primary sub-components, and each of the other three has a lot more information out there.

Working from Medina's 2006 historical paper on Project Cybersyn as a jumping-off point, all I've been able to glean so far is that CHECO, the economic simulation program used by Cybersyn and the Allende government, made use of the DYNAMO compiler developed at MIT back in the 50s and 60s. Given the timing, I believe it was specifically the DYNAMO II variant. Cybersyn had two mainframes at their disposal via the state, an IBM 360/50 which had excellent FORTRAN support and a Burroughs 3500 which did not. Both, however, did support Algol-based languages, and DYNAMO II was written in a dialect of Algol. Thus I believe it's sensible to assume DYNAMO II was used.

DYNAMO (not to be confused with a much newer language of the same name) itself has fallen into relative obscurity, and does not appear to have been used very much past the 1980s. It is a simulation language designed around the use of difference equations, and had several more successful competing languages. In an essential sense, DYNAMO really is only a tool for calculating large numbers of difference equations and generating tables and graphs for the user.

Generally, the workflow is to write a model of a system to simulate and then compile it. Provide data to the model and DYNAMO generates the output requested. Although DYNAMO is a continuous simulation language, it was typically used in a discrete manner. Using levels and rates, computations can model systems, albeit relatively simple and crude ones.
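To give a feel for what "levels and rates" means, here is a minimal Python sketch of a single level stepped forward with a difference equation. This is not DYNAMO code and has nothing to do with the actual CHECO model; the function name, the inventory scenario, and all the numbers are made up for illustration.

```python
def simulate_level(initial_level, inflow_rate, outflow_fraction, dt, steps):
    """Step one level forward in time with the difference equation
    level(t + dt) = level(t) + dt * (inflow - outflow).
    Hypothetical helper for illustration only."""
    level = initial_level
    history = [level]
    for _ in range(steps):
        # The outflow rate depends on the current level (a simple feedback loop).
        outflow = outflow_fraction * level
        level = level + dt * (inflow_rate - outflow)
        history.append(level)
    return history


# Made-up example: an inventory that receives 20 units per step
# and ships out 10% of whatever is in stock.
inventory = simulate_level(
    initial_level=100.0,
    inflow_rate=20.0,
    outflow_fraction=0.1,
    dt=1.0,
    steps=50,
)
print(inventory[:3], inventory[-1])
```

The model settles toward the point where inflow equals outflow (200 units with these numbers). A real model would chain many such equations together and print tables or plots of the results.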

According to Medina 2006, CHECO was worked on both in England and in Chile with the Chilean team having reservations about the results. Beer believed that the issue was using historical rather than real-time inputs. Personally, I believe that the weakness of CHECO is directly a result of the crudeness of modeling tools of the time period, and DYNAMO more specifically. In fairness however, it was a very bold project and DYNAMO was more a tool to manage business resources rather than a sandbox tool to design an entire economy. Of the four components of Cybersyn, CHECO is likely the weakest and least developed even when acknowledging that Opsroom (the thing most people would recognise) was never actually completed or operational.

You can find both the DYNAMO II User Manual and the related text Industrial Dynamics on the Internet Archive if you want to look at the technology behind CHECO a little deeper. Unfortunately, it appears there are not any more modern implementations to toy around with currently.

0 notes

Text

The Evolution of Programming Paradigms: Recursion’s Impact on Language Design

“Recursion, n. See Recursion.” -- Ambrose Bierce, The Devil’s Dictionary (1906-1911)

The roots of programming languages can be traced back to Alan Turing's groundbreaking work in the 1930s. Turing's vision of a universal computing machine, known as the Turing machine, laid the theoretical foundation for modern computing. The idea of a stack came slightly later: his 1945 design proposal for the Automatic Computing Engine (ACE) described "burying" and "unburying" return addresses for subroutines, a stack in all but name.

Turing's machine utilized an infinite tape divided into squares, with a read-write head that could move along the tape. The tape is not itself a stack, since the head can move freely in either direction, but the model provided a theoretical framework that would later influence the design of programming languages and computer architectures.

In the 1950s, the development of high-level programming languages began to revolutionize the field of computer science. The introduction of FORTRAN (Formula Translation) in 1957 by John Backus and his team at IBM marked a significant milestone. FORTRAN was designed to simplify the programming process, allowing scientists and engineers to express mathematical formulas and algorithms more naturally.

Around the same time, COBOL (Common Business-Oriented Language) was designed by a committee that drew heavily on FLOW-MATIC, a language developed by Grace Hopper, a pioneering computer scientist. COBOL aimed to address the needs of business applications, focusing on readability and English-like syntax. These early high-level languages organized code into subroutines, laying the groundwork for stack-based function calls, although neither FORTRAN nor COBOL originally supported recursion.

As high-level languages gained popularity, the underlying computer architectures also evolved. Charles Hamblin's work on stack machines in the 1950s played a crucial role in the practical implementation of stacks in computer systems. Hamblin's stack machine, also known as a zero-address machine, utilized a central stack memory for storing intermediate results during computation.
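As a rough illustration of the zero-address idea, the Python sketch below evaluates a program in which operators name no operands at all and simply take them from the top of a stack. It is a toy, not a model of Hamblin's actual machine; the instruction format and the function name are assumptions made for this example.

```python
import operator

# Operators carry no addresses: they always act on the top of the stack.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def run_stack_machine(program):
    """Evaluate a list of numbers and operator symbols in postfix order."""
    stack = []
    for token in program:
        if token in OPS:
            right = stack.pop()  # most recently pushed operand
            left = stack.pop()
            stack.append(OPS[token](left, right))
        else:
            stack.append(token)  # plain values are pushed as intermediate results
    return stack.pop()


# (2 + 3) * 4 written in postfix order
result = run_stack_machine([2, 3, "+", 4, "*"])
print(result)  # 20
```

Because every intermediate result lives on the stack, no instruction needs to say where its inputs are or where its output goes.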

Assembly language, a low-level programming language, was closely tied to the architecture of the underlying computer. It provided direct control over the machine's hardware, including the stack. Assembly language programs used stack-based instructions to manipulate data and manage subroutine calls, making it an essential tool for early computer programmers.

The development of ALGOL (Algorithmic Language) in the late 1950s and early 1960s was a significant step forward in programming language design. ALGOL was a collaborative effort by an international team, including Friedrich L. Bauer and Klaus Samelson, to create a language suitable for expressing algorithms and mathematical concepts.

Bauer and Samelson's work on ALGOL introduced the concept of recursive subroutines and the activation record stack. Recursive subroutines allowed functions to call themselves with different parameters, enabling the creation of elegant and powerful algorithms. The activation record stack, also known as the call stack, managed the execution of these recursive functions by storing information about each function call, such as local variables and return addresses.
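A small Python sketch can make the activation record stack more concrete. The first function is ordinary recursion, where the language runtime keeps the call stack for you; the second does the same computation with an explicit list of records. The record layout (a dict holding only the argument) is a simplification chosen for illustration, since real activation records also hold return addresses and other bookkeeping.

```python
def factorial_recursive(n):
    """Ordinary recursion: the runtime manages the call stack."""
    if n <= 1:
        return 1
    return n * factorial_recursive(n - 1)


def factorial_with_explicit_stack(n):
    """The same computation with hand-managed activation records."""
    stack = []
    # "Call" phase: push one record per pending call.
    while n > 1:
        stack.append({"n": n})
        n -= 1
    max_depth = len(stack)
    result = 1  # value returned by the base case
    # "Return" phase: pop records in reverse order of the calls.
    while stack:
        frame = stack.pop()
        result *= frame["n"]
    return result, max_depth


print(factorial_recursive(5))            # 120
print(factorial_with_explicit_stack(5))  # (120, 4)
```

The last call made is the first one to return, which is exactly the last-in, first-out discipline a stack provides.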

ALGOL's structured approach to programming, combined with the activation record stack, set a new standard for language design. It influenced the development of subsequent languages like Pascal, C, and Java, which adopted stack-based function calls and structured programming paradigms.

The 1970s and 1980s witnessed the emergence of structured and object-oriented programming languages, further solidifying the role of stacks in computer science. Pascal, developed by Niklaus Wirth, built upon ALGOL's structured programming concepts and introduced more robust stack-based function calls.

The 1980s saw the rise of object-oriented programming with languages like C++ and Smalltalk. These languages introduced the concept of objects and classes, encapsulating data and behavior. The stack played a crucial role in managing object instances and method calls, ensuring proper memory allocation and deallocation.

Today, stacks continue to be an integral part of modern programming languages and paradigms. Languages like Java, Python, and C# utilize stacks implicitly for function calls and local variable management. The stack-based approach allows for efficient memory management and modular code organization.

Functional programming languages, such as Lisp and Haskell, also leverage stacks for managing function calls and recursion. These languages emphasize immutability and higher-order functions, making stacks an essential tool for implementing functional programming concepts.

Moreover, stacks are fundamental in the implementation of virtual machines and interpreters. Technologies like the Java Virtual Machine and the Python interpreter use stacks to manage the execution of bytecode or intermediate code, providing platform independence and efficient code execution.

The evolution of programming languages is deeply intertwined with the development and refinement of the stack. From Turing's theoretical foundations to the practical implementations of stack machines and the activation record stack, the stack has been a driving force in shaping the way we program computers.

How the stack got stacked (Kay Lack, September 2024)

youtube

Thursday, October 10, 2024

#turing#stack#programming languages#history#hamblin#bauer#samelson#recursion#evolution#fortran#cobol#algol#structured programming#object-oriented programming#presentation#ai assisted writing#Youtube#machine art

3 notes
